Abstract

Pedestrian walking speed (PWS) can serve as a “body speedometer” that reveals pedestrians’ health status and, combined with other locating methods, supports indoor positioning. This paper proposes a pose awareness solution for estimating pedestrian walking speed using the sensors built into smartphones. The smartphone usage pose is identified with a machine learning approach based on data from multiple sensors, and the pose information is then tightly coupled with an adaptive step detection solution to estimate the pedestrian walking speed. Field tests were carried out to verify the advantages of the proposed algorithms over existing solutions. The test results demonstrated that the features extracted from the data of the smartphone’s built-in sensors clearly reveal the characteristics of the pose patterns, with an overall accuracy of 98.85% and a kappa statistic of 98.46%. The proposed walking speed estimation solution, running in real time on a commercial smartphone, performed well, with a mean absolute error of 0.061 m/s, in a challenging walking process that combined various usage poses, including texting, calling, swinging, and in-pocket modes.

1. Introduction

Owing to advances in microelectronics technology, modern smartphones are equipped with a rich set of sensors and have been explored as a ubiquitous computing platform. On this platform, particular attention has been paid to estimating pedestrian walking speed, which, as a “body speedometer,” can reveal pedestrians’ health status, such as joint strength [1] and lifestyle [2], and predict future health [3]. A precise walking speed is also necessary for many location-based services, especially indoors, where most global navigation satellite system (GNSS) signals are blocked.

Current pedestrian walking speed estimation methods can be divided into two categories: radio frequency (RF)-based methods and sensor-based methods. Several interesting studies on RF-based walking speed estimation and activity recognition have appeared in recent years [4,5,6,7,8,9]. For example, Shi [7] used fluctuations in ambient FM radio signals to infer pedestrians’ attention levels by interpreting changes in their walking speed and direction. Sigg [8] detected the activities of non-cooperating individuals using features obtained from a radio frequency channel and used the WiFi received signal strength information (RSSI) on a smartphone to estimate walking speed [9]. Generally, these approaches exploit statistical features in both the time and frequency domains (e.g., mean, variance, kurtosis, and skewness) and use a machine learning method (e.g., k-nearest neighbor or decision tree) to classify the walking speed. That is, the speed estimation problem is transformed into a classification problem, so only a qualitative walking speed can be determined.

The sensor-based algorithms use smartphone inertial sensors (e.g., accelerometer, gyroscope, and magnetometer) to estimate pedestrian walking speed and can be further divided into two subcategories: machine learning (ML)-based methods [10,11,12,13,14] and speed model-based methods [15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30]. ML-based methods can exploit associative information in the data beyond an explicit model chosen by the system designer [10]. Rather than using a physical model to estimate the walking speed, the ML-based approach captures the complex relationship between the inertial sensor measurements and the walking speed by training a black-box model [11]. Several studies have used regression models and ML techniques to improve the precision of walking speed estimation. Vathsangam [12] proposed a nonlinear, nonparametric regression framework to estimate walking speed from an accelerometer fixed on a subject’s hip. Park [10] estimated the speed from the energy of the acceleration magnitude by applying regularized kernel methods to accelerometer data, achieving higher estimation accuracy. Yeoh et al. [13] estimated the speed with a third-order polynomial model fitted to the mean value of the average net acceleration (ANA). However, the automatic selection and extraction of notable features remain a challenge. Consequently, a deep convolutional neural network (DCNN) has been applied to automatically identify and extract the most effective features from smartphone accelerometer and gyroscope data and to train a network model for speed estimation [14]. However, deep learning methods generally require a large amount of labeled training data, and as a neural network grows larger and deeper, it becomes more difficult to train.

The other group of sensor-based methods comprises model-based approaches, in which the smartphone is regarded as a pedometer that uses sensor measurements to detect step events. Cox [15] proposed a simple solution that estimates walking speed by integrating the acceleration. Cho [16] proposed opportunistic calibration of inertial sensor-based speed estimation using the smartphone’s GPS when the user walks outdoors. Other pedestrian navigation systems have also considered speed estimation, typically using inertial and magnetic sensors together with heuristic or rule-based speed estimation [10,17,18]. Masaru [19] generated magnetic signatures and obtained the walking speed from the walking distance and walking time using dynamic time warping (DTW). Note that the accuracy of the step length model strongly affects the final precision of model-based approaches. Several methods have been proposed to estimate step length, including human gait-based [20,21], step frequency-based [22,23], and step counting-based methods [24,25,26]. However, these pedestrian walking speed estimation methods suffer from various limitations, such as unsuitability for smartphone-based applications [27], lack of consideration of different pose contexts [28,29], user dependency [22,23], and reliance on spatial constraints [24,25,30].

In this work, we aim to develop an adaptive pedestrian walking speed estimation solution that is aware of the smartphone pose context and can therefore achieve high accuracy on an ordinary smartphone. The approach tightly couples real-time pose identification with pedestrian walking information through an adaptive step detection strategy. Multi-sensor data were collected from sixty-three male and thirty-eight female subjects and labeled with the pose type, and these data were used to evaluate the extracted features and train the classifier. To assess the performance of the proposed solution, various field tests were carried out in an indoor environment, and its effectiveness was verified against measurements from a Leica total station.

The rest of the paper is organized as follows: Section 2 describes the system architecture and methodology of the proposed system. Section 3 describes the experimental platform in detail and presents numerical results and a performance comparison. Section 4 and Section 5 provide the discussion and conclusions, respectively, and give suggestions for future research.

2. Materials and Methods

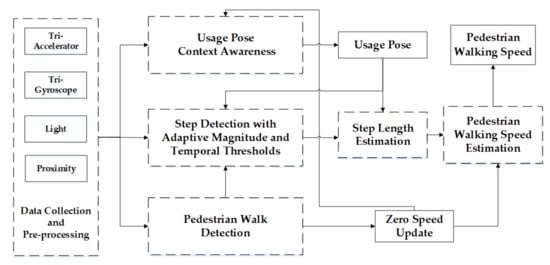

Figure 1 illustrates the architecture of the proposed pedestrian walking speed estimation solution on a smartphone. In addition to the motion sensors (i.e., tri-accelerometer and tri-gyroscope), the estimation process uses information from the ambient light and proximity sensors. All sensor data are collected at a sampling frequency of 100 Hz by a custom-developed Android application. As shown in Figure 1, after the data are received, the usage pose context awareness module, the step detection module, and the pedestrian walk detection module start to operate. If the walk detection module decides that the pedestrian is static, the speed is immediately set to zero. Otherwise, if the pedestrian is walking, the pose mode is identified every 0.6 s from the multi-sensor data with the ML method. Next, step detection and step length estimation are executed with the aid of the context information to calculate the step frequency and the current step length. Finally, the pedestrian walking speed is derived from these estimates. The following sections describe each part of the pedestrian walking speed estimation system, as well as its features and advantages.

Figure 1.

Scheme of speed estimation.
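Figure 1 leaves the final combination step implicit. A common formulation, assumed here rather than stated in the text, multiplies the estimated step length $SL$ by the step frequency $SF$ and forces the speed to zero whenever walk detection reports a static state. With illustrative values of $SL = 0.70$ m and $SF = 1.8$ steps/s:

$$
v = \begin{cases} SL \cdot SF, & \text{walking} \\ 0, & \text{static} \end{cases}
\qquad
v = 0.70\ \text{m} \times 1.8\ \text{steps/s} = 1.26\ \text{m/s}
$$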

2.1. Usage Pose Context Awareness Based on Multi-Sensor Data

2.1.1. Usage Pose Context Definition

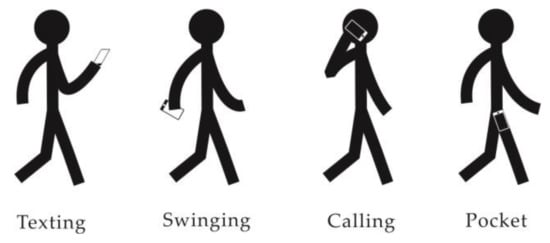

The usage pose context is defined as a series of motion patterns [31] that can be detected with a consumer-grade smartphone. However, smartphones undergo a large variety of unrestrained and personal motions, which generate different sensor patterns. To cope with this complexity, the daily use modes are divided in this section into two major categories with four subcategories. Based on observations of daily smartphone usage habits and on comparisons with previous studies [26,32,33], this study considers the four basic smartphone usage pose contexts shown in Figure 2.

Figure 2.

Four basic pose contexts.

Relatively steady state: This category includes all situations in which the motion state of a mobile device is relatively stable and there is no dramatic relative movement between the mobile device and the user’s body. This includes the following cases:

- Hand texting: The user holds the smartphone in hand and operates it, for example, for text messaging or reading the news. The user’s eyes, hand, and screen remain relatively stationary with respect to one another.

- Hand calling: The user makes a phone call while walking or standing still. Intuitively, the user’s ear, hand, and phone remain relatively stationary.

- In pocket: The user carries the mobile device in a pocket.

Relatively dynamic state: This category refers to the hand-swinging case, in which the user walks while holding the mobile device in his/her swinging hand. The smartphone swings relatively cyclically with respect to the user’s body while walking.

2.1.2. Feature Extraction

Feature selection and extraction play vital roles in pattern recognition and strongly affect performance and final precision. In this section, statistics of multi-sensor time-series data are used to detect the posture context, including the motion context and the usage environment context. The accelerometer and gyroscope readings reflect dynamic changes in the usage mode and are used to calculate the pitch and roll of the smartphone; statistics of the pitch and roll time series can then be used to mine the pose pattern. Statistics of the light and proximity sensor data are used to perceive the smartphone usage environment, such as bright or dark locations and proximity to the body. For example, if both the light and proximity sensor values are quite low, the mobile device is likely near the ear or in a pocket. Therefore, the usage environment should be detected simultaneously.

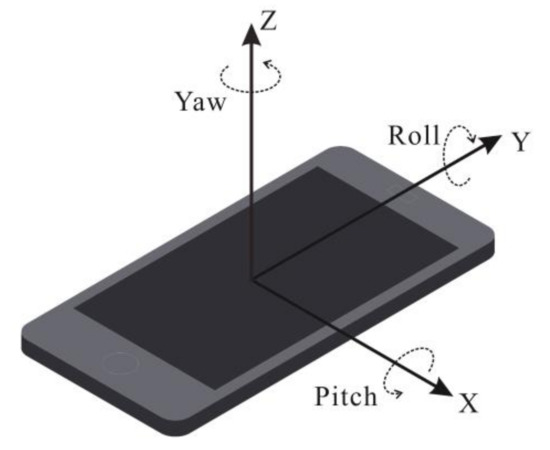

As shown in Figure 3, the coordinate system of the smartphone sensors is defined relative to the phone screen in its default orientation. Pitch and roll are the rotation around the x-axis and the rotation around the z-axis, respectively.

Figure 3.

Smartphone reference frame.

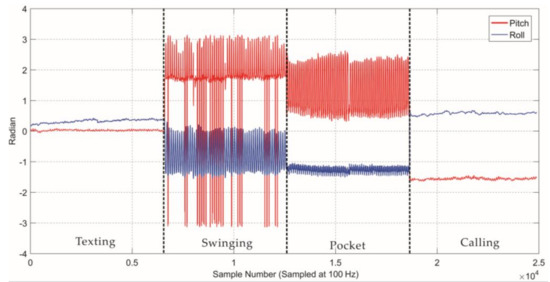

When the pose mode changes, for example, from texting mode to swinging mode, the pitch and roll change synchronously and sensitively and exhibit different patterns. As shown in Figure 4, in a relatively steady state (hand texting, hand calling, or in the pocket), the mobile device remains relatively static with respect to the body, so the pitch and roll angles are relatively stable, whereas in a relatively dynamic state (hand swinging), the pitch and roll angles change periodically.

Figure 4.

Example of the pitch and roll data in different poses.

This research studies the statistics of the pitch, the roll, and the light and proximity sensor readings in a sliding window; the details are listed in Table 1. The sliding window size N is another critical factor affecting performance: the window should be long enough to capture a sudden transition between motion modes but short enough to keep the algorithm efficient. In this paper, N is set to 0.6 s, which corresponds to 60 samples at the 100 Hz sampling frequency.

Table 1.

Descriptions of the features.
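Table 1 itself is not reproduced here. As a minimal sketch, assuming a generic set of per-channel window statistics (mean, standard deviation, minimum, maximum, range) rather than the exact features of Table 1, the sliding-window extraction over channels such as pitch, roll, light, and proximity could look like the following. The function names are hypothetical.

```python
import statistics
from typing import Dict, Sequence

FS_HZ = 100                               # sampling frequency stated in the text
WINDOW_S = 0.6                            # window length stated in the text
WINDOW_N = int(round(FS_HZ * WINDOW_S))   # 60 samples per window


def window_stats(values: Sequence[float]) -> Dict[str, float]:
    """Generic per-channel statistics (an assumed stand-in for Table 1)."""
    return {
        "mean": statistics.fmean(values),
        "std": statistics.pstdev(values),
        "min": min(values),
        "max": max(values),
        "range": max(values) - min(values),
    }


def extract_features(channels: Dict[str, Sequence[float]], start: int) -> Dict[str, float]:
    """Flatten the window statistics of every channel into one feature dict."""
    features: Dict[str, float] = {}
    for name, series in channels.items():
        window = series[start:start + WINDOW_N]
        if len(window) < WINDOW_N:
            raise ValueError(f"channel {name!r} has fewer than {WINDOW_N} samples after {start}")
        for stat, value in window_stats(window).items():
            features[f"{name}_{stat}"] = value
    return features
```

In use, `channels` would map names such as `"pitch"`, `"roll"`, `"light"`, and `"proximity"` to their 100 Hz series, and the resulting dict would be fed to the pose classifier once per window.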

2.1.3. Classification

Various ML algorithms, such as naïve Bayes [34], k-nearest neighbor [35,36], decision tree (DT) [36], neural network [37], support vector machine [38,39], and random forest (RF) [40], have been used for posture context recognition. The merits and drawbacks of these ML methods have been compared and analyzed in many studies [41,42], which demonstrated that RF offers a number of advantages, such as a straightforward learning process, ease of parallelization, shorter training time, and higher prediction accuracy. Consequently, only the RF method is used to solve this real-time classification problem in this paper.

RF is an ensemble of binary decision trees that are grown to their maximum depth, and randomization is used to reduce the correlation between the individual trees. This randomness, which is at the core of the methodology, makes the algorithm robust against noise. According to the strong law of large numbers, as the number of trees in a random forest increases, the generalization error converges to a limit, so adding trees does not lead to overfitting. The generalization error depends on the strength of the individual trees and the correlation between them.
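The ensemble idea can be illustrated with a deliberately simplified forest. This sketch is not the RF configuration used in the paper: each tree here is a depth-1 decision stump (a full RF grows each tree to maximum depth), and the names `StumpForest` and `fit_stump` are hypothetical. It does show the two sources of randomization, bootstrap resampling of the training set and a random feature subset per tree, followed by majority voting.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple

Stump = Tuple[int, float, str, str]  # (feature index, threshold, label if <=, label if >)


def fit_stump(X: Sequence[Sequence[float]], y: Sequence[str], feature_ids: Sequence[int]) -> Stump:
    """Fit a depth-1 tree that minimizes misclassifications over the given features."""
    majority = Counter(y).most_common(1)[0][0]
    # Fallback: a constant stump that always predicts the majority label.
    best: Stump = (feature_ids[0], float("inf"), majority, majority)
    best_err = sum(label != majority for label in y)
    for j in feature_ids:
        values = sorted({row[j] for row in X})
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2.0  # split halfway between adjacent distinct values
            left = [lab for row, lab in zip(X, y) if row[j] <= thr]
            right = [lab for row, lab in zip(X, y) if row[j] > thr]
            left_lab = Counter(left).most_common(1)[0][0]
            right_lab = Counter(right).most_common(1)[0][0]
            err = sum(l != left_lab for l in left) + sum(r != right_lab for r in right)
            if err < best_err:
                best, best_err = (j, thr, left_lab, right_lab), err
    return best


class StumpForest:
    """Bagged decision stumps with random feature subsets (simplified random forest)."""

    def __init__(self, n_trees: int = 25, n_sub_features: int = 1, seed: int = 0) -> None:
        self.n_trees = n_trees
        self.n_sub_features = n_sub_features
        self.seed = seed
        self.stumps: List[Stump] = []

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[str]) -> "StumpForest":
        rng = random.Random(self.seed)
        n, d = len(X), len(X[0])
        self.stumps = []
        for _ in range(self.n_trees):
            idx = [rng.randrange(n) for _ in range(n)]                  # bootstrap sample
            feats = rng.sample(range(d), min(self.n_sub_features, d))  # random feature subset
            self.stumps.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
        return self

    def predict(self, x: Sequence[float]) -> str:
        """Majority vote over all stumps."""
        votes = Counter(left if x[j] <= thr else right for j, thr, left, right in self.stumps)
        return votes.most_common(1)[0][0]
```

For production use, a library implementation such as scikit-learn's `RandomForestClassifier` would be the natural choice; the sketch only makes the bagging and voting mechanics explicit.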

2.2. Pedestrian Walking Speed Estimation

2.2.1. Walk Detection

Pedestrian walk detection (PWD) is a critical step in estimating the walking speed and determining the geospatial location. PWD avoids unnecessary and expensive computation during non-motion periods and improves the accuracy of speed estimation. The user’s geospatial location does not change significantly in static states such as standing still, texting, reading the news, answering a phone call, or turning around to find a location. However, because smartphone use is unconstrained and varies from person to person, there is no absolutely static state or exactly zero speed during speed estimation or pedestrian navigation.

In this paper, two thresholds were set to detect the pedestrian walking state from the accelerometer and gyroscope readings. Because angular rate and acceleration have different units, the norms of the tri-accelerometer and tri-gyroscope output vectors were used to detect pedestrian walking. The dynamic response of the accelerometer is slow, and its measurement range is restricted. The gyroscope offers excellent dynamic performance and sensitivity; however, its output is affected by temperature changes and unstable torques, which produce drift errors.

In the PWD criterion (Equation (1)), N is the size of the PWD sliding window, which was selected as 0.6 s; the remaining terms are the readings of the tri-accelerometer and tri-gyroscope, the mean value of the accelerometer output vector in the sliding window, a scale coefficient applied to the gyroscope output vector, and the minimum and maximum PWD thresholds.
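Equation (1) is not reproduced in this text, so the following is only an illustrative stand-in under stated assumptions: the statistic is assumed to be the window mean of the accelerometer-norm deviation from its window mean plus a scaled gyroscope norm, and the scale coefficient and both thresholds are hypothetical values, not the authors’ calibration. It shows how two thresholds on a combined accelerometer/gyroscope statistic separate static windows from walking windows.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def norm(v: Vec3) -> float:
    """Euclidean norm of a three-axis sample."""
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def pwd_statistic(acc: Sequence[Vec3], gyro: Sequence[Vec3], gyro_scale: float) -> float:
    """ASSUMED form: window mean of |norm(a_k) - mean norm(a)| + gyro_scale * norm(w_k)."""
    if not acc or len(acc) != len(gyro):
        raise ValueError("acc and gyro windows must be non-empty and equally long")
    acc_norms = [norm(a) for a in acc]
    acc_mean = sum(acc_norms) / len(acc_norms)
    total = sum(abs(an - acc_mean) + gyro_scale * norm(g) for an, g in zip(acc_norms, gyro))
    return total / len(acc_norms)


def is_walking(acc: Sequence[Vec3], gyro: Sequence[Vec3],
               gyro_scale: float = 1.0, t_min: float = 0.5, t_max: float = 10.0) -> bool:
    """Walking if the statistic lies strictly between two hypothetical thresholds."""
    return t_min < pwd_statistic(acc, gyro, gyro_scale) < t_max
```

A 0.6 s window at 100 Hz gives 60 samples per call; the upper threshold is meant to reject violent shaking that is not walking.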

2.2.2. Preprocessing

In general, the outputs of the tri-accelerometer and tri-gyroscope may take the form of harmonic oscillation waveforms caused by walking [32]. Exploiting the repetitiveness and periodicity of walking, the number of steps a pedestrian has taken can be counted.

Several algorithms based on accelerometer data processing have recently been developed for step detection. In these approaches, the magnitude of the three-axis acceleration is used to analyze steps and can be expressed as:

$$ |a| = \sqrt{a_x^2 + a_y^2 + a_z^2} $$

where $a_x$, $a_y$, and $a_z$ denote the accelerometer readings on the x-, y-, and z-axes. However, using the magnitude for step detection neglects the pose context information implicit in the smartphone’s attitude.

In this paper, pedestrian steps were detected using knowledge of the current posture mode and the sensor data that best reflect it. When a user walks with the smartphone in texting, calling, or pocket mode, the accelerometer signal clearly presents a cyclic pattern. When the user walks with the smartphone in swing mode, biomechanical studies have shown that arm and foot motion are synchronized. This synchronization between the swinging arm and the reaction moment about the vertical axis of the foot is explained by the dynamics of a multi-body articulated system [43]: as a pedestrian walks, the positive torque produced by the arm swing makes the foot move forward. Therefore, the sinusoidal pattern in the gyroscope readings is used to detect steps in swing mode.

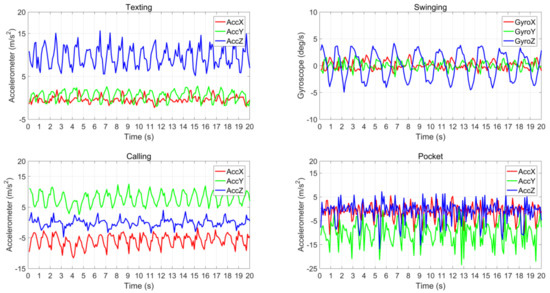

As shown in Figure 5, in texting mode the y-axis and z-axis acceleration signals show obvious periodicity, and the feature signal is formed from $a_y$ and $a_z$, the accelerometer readings on the y- and z-axes.

Figure 5.

Internal sensors data in different poses.

In calling mode, the x-axis and y-axis acceleration signals show obvious periodicity, and the feature signal is formed from $a_x$ and $a_y$, the accelerometer readings on the x- and y-axes.

In pocket mode, the magnitude of the tri-axis accelerometer is used to detect steps, so the feature signal is:

$$ f_{\text{pocket}} = \sqrt{a_x^2 + a_y^2 + a_z^2} $$

where $a_x$, $a_y$, and $a_z$ denote the accelerometer readings on the x-, y-, and z-axes.

In swing mode, the z-axis gyroscope signal shows obvious periodicity, and the feature signal is:

$$ f_{\text{swing}} = \omega_z $$

where $\omega_z$ represents the gyroscope reading on the z-axis.
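A minimal sketch of the pose-dependent feature signal selection follows. The pocket and swing branches follow the text directly; the text does not state how the two axes are combined in texting and calling modes, so the two-axis Euclidean norm used there is an assumption. The pose labels are hypothetical string keys.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in the smartphone frame


def feature_signal(pose: str, acc: Sequence[Vec3], gyro: Sequence[Vec3]) -> List[float]:
    """Select the step-detection feature signal for the recognized pose."""
    if pose == "texting":   # y- and z-axis acceleration (norm is an ASSUMED combination)
        return [math.hypot(a[1], a[2]) for a in acc]
    if pose == "calling":   # x- and y-axis acceleration (norm is an ASSUMED combination)
        return [math.hypot(a[0], a[1]) for a in acc]
    if pose == "pocket":    # tri-axis accelerometer magnitude
        return [math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2) for a in acc]
    if pose == "swinging":  # z-axis gyroscope reading
        return [g[2] for g in gyro]
    raise ValueError(f"unknown pose context: {pose!r}")
```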

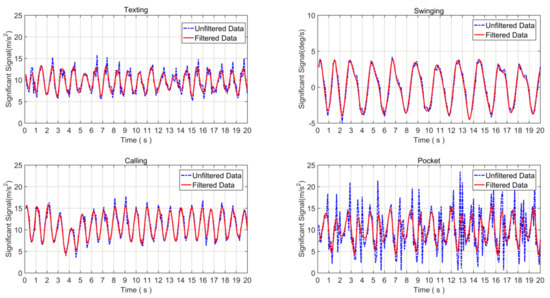

To minimize the impact of device shaking and sensor drift and to improve the robustness of the step detection algorithm, a 10th-order Butterworth low-pass filter [44] with a 3-Hz cut-off frequency was applied to the time-series feature signal. The purpose of this preprocessing is to retain only the fundamental frequency induced by step events, so that steps are detected from an undistorted signal [45]. In this way, the interference from high-frequency noise and unstable sensor output is reduced. Figure 6 compares the raw data with the filtered output.

Figure 6.

Comparison of the raw data and the output after filtering.
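A 10th-order Butterworth low-pass filter can be realized as five cascaded second-order sections. The following dependency-free sketch designs those sections with the bilinear transform (the audio-EQ-cookbook biquad form with the Butterworth pole-pair Q values) and applies them causally, as a real-time pipeline would. The function names are hypothetical; offline, SciPy’s `scipy.signal.butter(10, 3, btype='low', fs=100, output='sos')` together with `scipy.signal.sosfilt` (or `sosfiltfilt` for zero-phase filtering) would serve the same purpose.

```python
import math
from typing import List, Sequence, Tuple

# (b0, b1, b2, a1, a2), normalized so that a0 == 1
Biquad = Tuple[float, float, float, float, float]


def butter_lowpass_sections(order: int, cutoff_hz: float, fs_hz: float) -> List[Biquad]:
    """Design an even-order digital Butterworth low-pass filter as cascaded biquads."""
    if order <= 0 or order % 2:
        raise ValueError("this sketch supports positive even orders only")
    w0 = 2.0 * math.pi * cutoff_hz / fs_hz
    cos_w0, sin_w0 = math.cos(w0), math.sin(w0)
    sections: List[Biquad] = []
    for k in range(1, order // 2 + 1):
        # Each conjugate pole pair of the analog Butterworth prototype sets one section's Q.
        q = 1.0 / (2.0 * math.cos((2 * k - 1) * math.pi / (2 * order)))
        alpha = sin_w0 / (2.0 * q)
        a0 = 1.0 + alpha
        b0 = (1.0 - cos_w0) / (2.0 * a0)
        sections.append((b0, 2.0 * b0, b0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0))
    return sections


def sos_filter(x: Sequence[float], sections: Sequence[Biquad]) -> List[float]:
    """Causal filtering through each section in turn (transposed direct form II)."""
    y = list(x)
    for b0, b1, b2, a1, a2 in sections:
        z1 = z2 = 0.0
        out = []
        for v in y:
            o = b0 * v + z1
            z1 = b1 * v - a1 * o + z2
            z2 = b2 * v - a2 * o
            out.append(o)
        y = out
    return y


# 10th order, 3-Hz cut-off, at the 100-Hz sampling rate stated in the text
SECTIONS = butter_lowpass_sections(order=10, cutoff_hz=3.0, fs_hz=100.0)
```

A causal filter like this introduces a phase delay, so detected step instants lag the true ones slightly; that is usually acceptable for step counting and frequency estimation.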

2.2.3. Step Detection with Adaptive Magnitude and Temporal Thresholds

Pedestrian walking is a continuously changing process with three characteristics: periodicity, similarity, and continuity. Considering these characteristics and the computation time, two adaptive thresholds were established from two different aspects. In the vertical (magnitude) aspect, magnitude thresholds were used to detect step points with a peak detection approach: the magnitude at a step point should be a local maximum and larger than the adaptive threshold. The threshold was adapted dynamically from the magnitudes of previous steps and consists of the mean and standard deviation of the acceleration magnitude in a fixed window. Increasing the window size, however, might degrade step detection accuracy during a transition of step mode or device pose, because a threshold calculated from a larger window may not respond effectively to changes in the recent statistics. In the horizontal (temporal) aspect, temporal thresholds were used to constrain step points in the step frequency dimension. Because walking is an ongoing and relatively stable process, both the time interval between two steps and its change from one step to the next should remain within the normal human range. On the basis of this analysis, the following criteria are defined in this paper:

Criterion 1. The feature signal at the candidate step point should be a local maximum:

Criterion 2. The feature signal at the candidate step point should exceed the adaptive threshold for the current pose context:

Here, the threshold is built from the mean and standard deviation of the magnitude in a fixed window, weighted by two magnitude scale constants that depend on the current pose context.

Criterion 3. The walking step frequency should lie within the normal range, between a minimum and a maximum frequency threshold:

The proposed adaptive step detection is outlined in the pseudocode in Table 2. Its inputs at sample time k are the tri-accelerometer and tri-gyroscope sample vectors, the PWD statistic calculated according to Equation (1), and the current pose context. The algorithm uses the mean and standard deviation of the magnitude in a fixed step window, together with four adaptive constants that depend on the pose context.

Table 2.

Pseudocode of Step Detection Algorithm.
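Because the pseudocode of Table 2 is not reproduced here, the following is a minimal sketch of Criteria 1 to 3 under explicit assumptions: the adaptive threshold is taken as mean + `k_sigma` × standard deviation of the feature signal over a trailing window; `window_s`, `k_sigma`, and `f_max_hz` are hypothetical values rather than the authors’ pose-dependent constants; and Criterion 3 is enforced only as a minimum inter-step interval, with a longer gap treated as the start of a new walking bout.

```python
import statistics
from typing import List, Sequence


def detect_steps(
    signal: Sequence[float],
    fs_hz: float = 100.0,
    window_s: float = 2.0,   # hypothetical trailing window for the adaptive threshold
    k_sigma: float = 0.5,    # hypothetical scale on the standard deviation
    f_max_hz: float = 3.0,   # hypothetical upper bound on step frequency
) -> List[int]:
    """Return sample indices of detected steps in a low-pass-filtered feature signal."""
    win = int(window_s * fs_hz)
    min_gap = fs_hz / f_max_hz  # shortest allowed step interval, in samples
    steps: List[int] = []
    for k in range(1, len(signal) - 1):
        # Criterion 1: the candidate must be a local maximum.
        if not (signal[k] > signal[k - 1] and signal[k] >= signal[k + 1]):
            continue
        # Criterion 2: exceed an adaptive threshold derived from recent statistics.
        recent = signal[max(0, k - win):k + 1]
        threshold = statistics.fmean(recent) + k_sigma * statistics.pstdev(recent)
        if signal[k] <= threshold:
            continue
        # Criterion 3: the step frequency must not exceed f_max_hz. A gap longer than
        # the slowest plausible step period is treated as a new walking bout and accepted.
        if steps and k - steps[-1] < min_gap:
            continue
        steps.append(k)
    return steps
```

The step frequency follows from the detected indices as `fs_hz / (steps[i] - steps[i - 1])`. In the paper’s design the thresholds would additionally switch with the recognized pose.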

2.2.4. Step Length Estimation

Many algorithms have been proposed to estimate the step length, including human gait-based, step frequency-based, and step counting-based methods.

Pratama [46] estimates the step length with a static model, $SL = k \cdot H$, that considers a constant relative to height and sex, where $H$ represents the height and $k$ equals 0.415 for male and 0.413 for female subjects.

The approach of Weinberg [28] assumes that gait impacts the vertical acceleration and uses the difference between the maximum and minimum vertical acceleration in each step to estimate the step length. The model formula is:

$$ SL = k \sqrt[4]{a_{\max} - a_{\min}} $$

where $a_{\max}$ and $a_{\min}$ represent the maximum and minimum vertical acceleration values measured in a single stride, respectively, and $k$ is a constant model parameter.

The model proposed by Tian [47] estimates the step length from the step frequency, height, and sex of the subject as:

$$ SL = K \cdot h \cdot \sqrt{f_{\text{step}}} $$

where $h$ and $f_{\text{step}}$ represent the subject’s height and step frequency, respectively, and $K$ is a model parameter tuned to 0.3139 for male and 0.2975 for female subjects.

Kim [29] developed an empirical method based on the dependence of the step length on the average acceleration during walking. The step length is calculated as:

$$ SL = K \sqrt[3]{\frac{1}{N}\sum_{i=1}^{N} |a_i|} $$

where $a_i$ is the acceleration measured at the i-th sample in a single step, $N$ is the number of samples in that step, and $K$ is a model constant.

In contrast to the above step length models, this paper uses the empirical linear model of Reference [22] to estimate the step length. The model relates the step length $SL$ to the step frequency $SF$ and the pedestrian’s height $H$, which is entered manually into the model, through three model parameters $a$, $b$, and $c$ that are specific to each person and can be calibrated by pre-training.
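The compared step length models and the final speed computation can be sketched as follows. The static, Weinberg, Tian, and Kim functions implement the published forms SL = k·H, SL = k·(a_max − a_min)^(1/4), SL = K·h·√f, and SL = K·∛(mean |a|); the Weinberg and Kim constants are left as inputs. The exact equation of the linear model in [22] is not given in this text, so `linear_step_length` assumes the form SL = H·(a·SF + b) + c purely for illustration, and walking speed is assumed to be step length multiplied by step frequency. All function names are hypothetical.

```python
import math
from typing import Sequence


def static_step_length(height_m: float, male: bool) -> float:
    """Static model: SL = k * H, k = 0.415 (male) or 0.413 (female)."""
    return (0.415 if male else 0.413) * height_m


def weinberg_step_length(a_max: float, a_min: float, k: float) -> float:
    """Weinberg model: SL = k * (a_max - a_min) ** (1/4)."""
    if a_max < a_min:
        raise ValueError("a_max must not be smaller than a_min")
    return k * (a_max - a_min) ** 0.25


def tian_step_length(height_m: float, step_freq_hz: float, male: bool) -> float:
    """Tian model: SL = K * h * sqrt(f), K = 0.3139 (male) or 0.2975 (female)."""
    return (0.3139 if male else 0.2975) * height_m * math.sqrt(step_freq_hz)


def kim_step_length(acc_samples: Sequence[float], k: float) -> float:
    """Kim model: SL = K * cbrt(mean |a_i|) over the samples of one step."""
    mean_abs = sum(abs(a) for a in acc_samples) / len(acc_samples)
    return k * mean_abs ** (1.0 / 3.0)


def linear_step_length(height_m: float, step_freq_hz: float, a: float, b: float, c: float) -> float:
    """ASSUMED linear form SL = H * (a * SF + b) + c with per-person coefficients."""
    return height_m * (a * step_freq_hz + b) + c


def walking_speed(step_length_m: float, step_freq_hz: float) -> float:
    """Speed (m/s) as step length times step frequency (assumed combination)."""
    return step_length_m * step_freq_hz
```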

3. Results

3.1. Experimental Setup

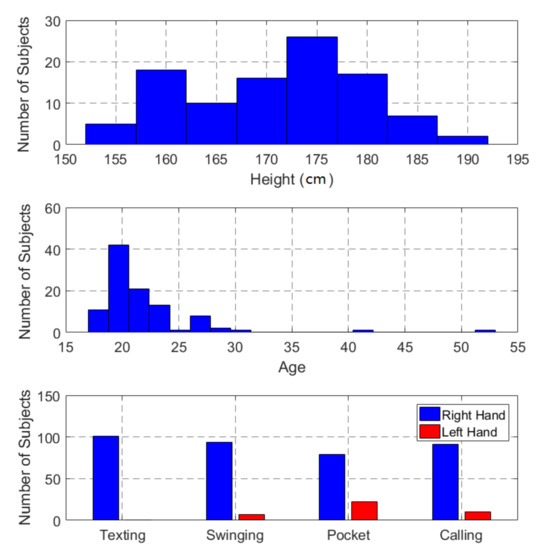

Two experiments were carried out indoors to test the proposed algorithms. In the first experiment, the performance of the usage pose context awareness algorithm was evaluated in field tests carried out in the lobby of the Library of Wuhan University. Sixty-three male and thirty-eight female subjects took part in this experiment. As shown in Figure 7, the subjects’ heights ranged from 155 to 192 cm, and their ages from 17 to 53. Because subjects use smartphones in different ways, we subdivided the calling, swinging, and pocket modes into left-hand and right-hand use. The percentages of left-hand use in the swinging, pocket, and calling modes were 6.93%, 21.78%, and 9.9%, respectively, and the corresponding percentages of right-hand use were 93.07%, 78.22%, and 90.1%. The recorded multi-sensor data of sixty percent of the subjects were used to train the classifier, and the data of the remaining forty percent were used to test its performance. Four smartphones, a Huawei Mate9, Huawei P9, Huawei P9 Plus, and Huawei Honor 8, all running Android, were used in the experiment. The sensor data, including accelerometer, gyroscope, light sensor, and proximity sensor data, were collected and labeled in real time by an Android application at a sampling rate of 100 Hz.

Figure 7.

Statistics on subjects participating in our data collection: The height and age distributions of the subjects and the left-hand use and right-hand use ratio of subjects for different poses.

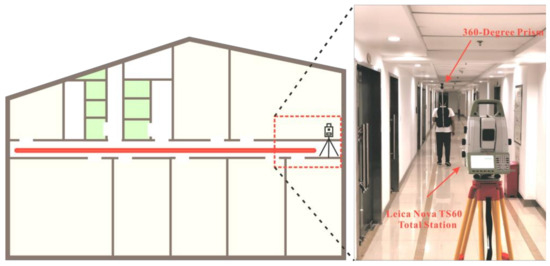

The second experiment evaluated the walking speed estimation algorithm. Four smartphones (the same models as in the first test) were used. Six men and six women participated, with heights from 158 to 183 cm and ages from 22 to 53. The sensor data, including accelerometer, gyroscope, light sensor, and proximity sensor data, were collected in real time using an Android application at a sampling rate of 100 Hz. The ground truth walking speed was measured with a Leica Nova TS60 total station, which automatically tracks a 360-degree prism and supplies one observation every 0.15 s with 3-mm precision. As shown in Figure 8, each participant carried the 360-degree prism on his/her back and walked 80 m with four different postures. The ground truth step count was read from videos recorded during the entire experiment.

Figure 8.

Experimental site. A Leica Nova TS60 total station was placed at the end of the corridor and automatically tracked a 360-degree prism. Participants carried the 360-degree prism on their back and held the smartphone in their hand.

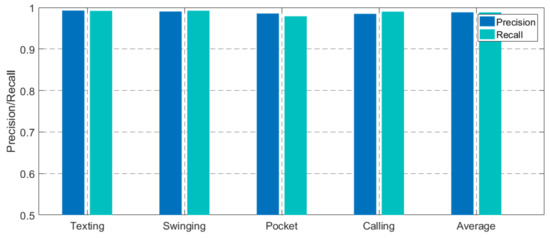

3.2. Performance Evaluation of Usage Pose Awareness

Figure 9 provides the precision and recall rate of the proposed pose context awareness. The average precision rate of the proposed algorithm was 98.833% and the average recall rate was 98.828%. Table 3 shows a confusion matrix of the classification results. For the proposed algorithm, an overall accuracy (OA) of 98.85% and a kappa statistic (KS) of 98.46% were obtained. User’s accuracy (UA) and producer’s accuracy (PA) were above 97.88% in all the classes, and the commission error (CE) and omission error (OE) were within 2.12% in all the classes.

Figure 9.

Average accuracy of the proposed posture context awareness algorithm. The rightmost two bars are the average precision and recall rate of the four different poses.

Table 3.

Confusion matrix of pose recognition with four categories.
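The accuracy measures reported above can be computed from a confusion matrix as in the following sketch. It assumes rows hold the predicted classes and columns the reference classes (conventions vary between tools), and it returns the kappa statistic as a fraction rather than a percentage. Commission error is 1 − UA and omission error is 1 − PA.

```python
from typing import List, Sequence

Matrix = Sequence[Sequence[int]]  # rows = predicted class, columns = reference class (assumed)


def overall_accuracy(cm: Matrix) -> float:
    """Fraction of all samples on the diagonal."""
    total = sum(map(sum, cm))
    return sum(cm[i][i] for i in range(len(cm))) / total


def kappa(cm: Matrix) -> float:
    """Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(cm)
    total = sum(map(sum, cm))
    p_o = overall_accuracy(cm)
    row_totals = [sum(cm[i]) for i in range(n)]
    col_totals = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (p_o - p_e) / (1.0 - p_e)


def users_accuracy(cm: Matrix) -> List[float]:
    """Per class: correct / all samples predicted as that class."""
    return [cm[i][i] / sum(cm[i]) for i in range(len(cm))]


def producers_accuracy(cm: Matrix) -> List[float]:
    """Per class: correct / all reference samples of that class."""
    n = len(cm)
    return [cm[j][j] / sum(cm[i][j] for i in range(n)) for j in range(n)]
```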

We also divided the swinging, pocket, and calling modes into left-handed and right-handed subclasses to further test the performance of the extracted features and the classifier. As shown in Table 4, the overall accuracy (OA) and kappa statistic (KS) for the seven categories were 98.85% and 98.46%, respectively, which were both lower than the previous classification results; nevertheless, the proposed method still delivered a high level of precision. The results also show that the recognition accuracy for left-hand use was lower than that for right-hand use, for two reasons. First, as shown in Figure 7, fewer subjects were tested with their left hand than with their right hand, resulting in insufficient samples and imbalanced training data. Second, the extracted features for left-handed and right-handed use were highly similar, and misclassification within a major category could markedly decrease the precision of posture context awareness. For example, the user’s accuracy for pocket mode as a whole was 98.55%, whereas the user’s accuracies for left-handed and right-handed pocket modes were 94.55% and 96.43%, respectively.

Table 4.

Confusion matrix of pose recognition with seven categories.

3.3. Pedestrian Walking Speed Estimation Results and Analysis

Table 5 shows the performance of the proposed step detection algorithm for every combination of pose information, listing the ground truth and estimated step counts of the twelve subjects who took part in this experiment. The average precision of the texting, swinging, pocket, and calling modes was 99.78%, 99.32%, 99.85%, and 99.78%, respectively. The experimental results demonstrate that the proposed algorithm was not greatly affected by pose and consistently achieved high precision (99.68%) across all combinations of poses. The eighth subject’s result in swing pose was the worst (86.14%) because this subject’s swing step was homolateral: in this subject’s walking pattern, the swinging arm and the leg move on the same side, and the subject deliberately adjusted the swinging posture during the experiment.

Table 5.

Metrics for evaluating the adaptive step detection method with different pose.

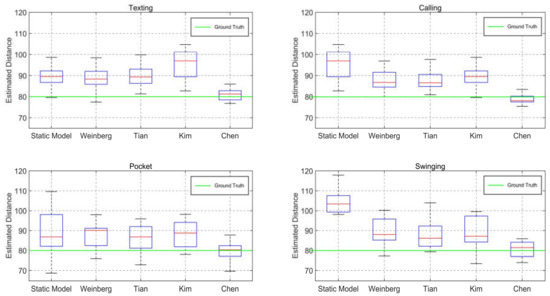

In this test, participants carried the 360-degree prism and walked 80 m with four different poses. We compared the performance of five step length estimation models and analyzed their precision in texting, pocket, swinging, and calling modes. Figure 10 shows the maximum and minimum values, lower and upper quartiles, and median of the walking distance obtained with the five step length models, namely the static [46], Weinberg [28], Tian [47], Kim [29], and Chen [22] models, in the four posture modes. In Figure 10, the red line represents the median estimated distance and the green line represents the actual distance (80 m). The Chen method showed the highest precision and greatest robustness by a significant margin. In texting mode, the distances estimated by the Chen method ranged from 76.75 m to 85.95 m, with lower and upper quartiles of 78.46 m and 82.83 m. In calling mode, they ranged from 75.36 m to 87.37 m, with quartiles of 77.50 m and 82.40 m. In pocket mode, they ranged from 69.62 m to 87.82 m, with quartiles of 77.05 m and 82.39 m. In swinging mode, they ranged from 73.92 m to 109.51 m, with quartiles of 76.97 m and 84.15 m.

Figure 10.

Comparison of the walking distance estimation method in different poses.

The evaluation parameters are defined in Table 6. Table 7 compares the absolute error rates and standard deviations (std) for the four poses; the average error column gives the mean absolute percentage error over the four poses.

Table 6.

Evaluation parameters definition.

Table 7.

Step length error comparison.

The absolute error rates of the Chen [22] method, 3.14%, 3.66%, 4.81%, and 6.99% in the four posture modes, were much lower than those of the other four methods. The static model produced particularly high errors, with an average of 19.01%, and the Kim method had an average error of 14.61%. The Tian and Weinberg approaches performed better, with average error rates of approximately 11%. With the Chen step length model, the average error rate of the distance estimation was reduced to 4.65%.
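Table 6’s definitions are not reproduced here. Assuming the absolute error rate of a walk is |estimated − true| / true × 100, the per-walk rate and the cross-pose average could be computed as in this sketch (function names hypothetical). For instance, averaging the Chen method’s four per-pose rates gives (3.14 + 3.66 + 4.81 + 6.99) / 4 = 4.65%.

```python
from statistics import fmean
from typing import Sequence


def abs_error_rate(estimated_m: float, true_m: float) -> float:
    """ASSUMED definition: |estimated - true| / true * 100 (percent)."""
    return abs(estimated_m - true_m) / true_m * 100.0


def mean_abs_error_rate(estimates_m: Sequence[float], true_m: float) -> float:
    """Mean absolute percentage error over repeated walks of the same course."""
    return fmean(abs_error_rate(e, true_m) for e in estimates_m)
```

For the 80 m course, an estimated distance of 82.5 m corresponds to an absolute error rate of 3.125%.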

Table 8 and Table 9 show the results of the proposed pedestrian walking speed estimation in the second test. Twelve subjects took part in this experiment, and Table 8 lists the average ground truth and estimated speeds for the four poses. The average absolute PWS estimation error was 0.061 m/s. The proposed algorithm produced the best result in texting pose: the errors ranged from 0.006 m/s to 0.09 m/s, and the 50% and 95% errors were 0.031 m/s and 0.089 m/s, respectively. In calling mode, the errors ranged from 0.022 m/s to 0.122 m/s, and the 50% and 95% errors were 0.039 m/s and 0.068 m/s, respectively. In pocket pose, the errors ranged from 0.013 m/s to 0.176 m/s, and the 50% and 95% errors were 0.046 m/s and 0.126 m/s. The mean error in swing pose was 0.094 m/s, higher than in the other three posture modes, and the 50% and 95% errors were 0.058 m/s and 0.11 m/s, respectively. The mean, standard deviation, and variance of the errors are also listed in Table 9.

Table 8.

Metrics for evaluating the pedestrian walking speed estimation method with different poses.

Table 9.

Walking speed estimation errors for the different poses.

4. Discussion

Overall, our studies established an adaptive pedestrian walking speed estimation system on a consumer-grade smartphone. Evaluations of our methods against different criteria showed that an adaptive step detection method tightly coupled with pose context can accurately estimate pedestrian walking speed. The results show that features extracted from multiple sensors, including the accelerometer, gyroscope, light, and proximity sensors, accurately characterize the four basic poses. We also demonstrated that the adaptive step detection method, aided by real-time pose context recognition, was not greatly affected by pose and consistently achieved a high level of precision over any combination of poses.
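The idea of pose-aided step detection can be illustrated with a minimal sketch. This section does not specify the detector, so everything below is an assumption: steps are detected as peaks of the smoothed accelerometer norm, and the peak threshold and minimum step interval are looked up from the recognized pose. `POSE_PARAMS` and its values, the centered moving average (a simple stand-in for a proper low-pass filter), and all function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

GRAVITY = 9.81  # m/s^2

# Hypothetical per-pose parameters: (peak threshold above gravity in m/s^2,
# minimum time between consecutive steps in s). Values are illustrative only.
POSE_PARAMS: Dict[str, Tuple[float, float]] = {
    "texting": (0.8, 0.3),
    "calling": (0.8, 0.3),
    "pocket": (1.5, 0.3),
    "swinging": (2.0, 0.4),
}


def moving_average(x: Sequence[float], window: int = 5) -> List[float]:
    """Centered moving average, used here as a simple low-pass filter."""
    half = window // 2
    out = []
    for i in range(len(x)):
        seg = x[max(0, i - half): i + half + 1]
        out.append(sum(seg) / len(seg))
    return out


def detect_steps(acc_norm: Sequence[float], fs: float, pose: str) -> List[int]:
    """Return sample indices of detected steps, using parameters chosen by the recognized pose."""
    threshold, min_interval = POSE_PARAMS[pose]
    signal = [a - GRAVITY for a in moving_average(acc_norm)]
    steps: List[int] = []
    last_t = -math.inf
    for i in range(1, len(signal) - 1):
        t = i / fs
        is_peak = signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]
        if is_peak and signal[i] > threshold and t - last_t >= min_interval:
            steps.append(i)
            last_t = t
    return steps


def walking_speed(step_lengths: Sequence[float], duration_s: float) -> float:
    """Average walking speed = total step length / elapsed time."""
    return sum(step_lengths) / duration_s


# Synthetic 5 s accelerometer norm at 50 Hz with a 2 Hz step rhythm.
fs = 50.0
acc = [GRAVITY + 1.0 * math.sin(2 * math.pi * 2.0 * n / fs) for n in range(250)]
print(len(detect_steps(acc, fs, "texting")), len(detect_steps(acc, fs, "swinging")))  # 10 0
```

On the same synthetic signal, the lower "texting" threshold finds all ten steps while the higher "swinging" threshold finds none, illustrating why a single fixed threshold may not suit every pose and why the pose context is fed to the detector.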

Numerous recent ML-based works [10,11,12,13,14] predicted the PWS with a pre-trained black-box model; however, automatic feature extraction, generalization, and unbiased datasets remain challenges for this task. Therefore, we tackled the problem of multi-pose pedestrian walking speed estimation with a model-based approach [20,21,22,23,24,25,26,27,28,29]. Compared with previous studies [28,29], our results suggest that knowing the pose context beforehand can improve PWS estimation precision. The results confirm that the constraints on how the smartphone is carried were relaxed in this task, with an average absolute speed error of 0.061 m/s. Multi-pose PWS estimation has great potential for indoor smartphone positioning and tracking systems. Our future study will focus on fusing the PWS with multiple measurements (e.g., absolute position, angle-of-arrival, ranging, and time-of-advent) for smartphone indoor positioning.

Although experiments showed that our pose identification method performed well on the four trained basic poses, its performance, as with any ML-based method, may be limited for untrained poses or activities. In future work, we will focus on improving the generalization of the system and extending our method to additional postures and activities. In addition, we aim to build an unbiased dataset that covers a wider range of subject ages, heights, genders, and handedness.

5. Conclusions

This paper proposed an adaptive pedestrian walking speed estimation solution aided by pose awareness on the smartphone platform. In this solution, the real-time smartphone pose context was tightly coupled with an adaptive step detection method to precisely estimate the pedestrian walking speed using multiple sensors. Field tests were carried out to verify the proposed pose context awareness and adaptive step detection algorithms. The proposed pose awareness solution was reliable, achieving a 98.85% overall accuracy and a 98.46% kappa statistic. The performance of the proposed adaptive step detection algorithm was almost unaffected by pose in the tests, and it consistently achieved a high level of precision (99.68%) over every combination of postures. The proposed solution, running in real time on a commercial smartphone, achieved a mean absolute error of 0.061 m/s across the different posture modes. In future work, we will focus on improving the robustness of the system and extending it to additional postures and activities. Additionally, we plan to fuse the pedestrian walking speed with other measurements for indoor positioning.
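The overall accuracy and kappa statistic quoted for pose recognition are both derived from a confusion matrix. A minimal sketch, assuming the standard Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e the agreement expected by chance from the row and column totals; the 4-pose matrix below is hypothetical and is not the paper's data.

```python
from typing import Sequence, Tuple


def accuracy_and_kappa(confusion: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Overall accuracy and Cohen's kappa from a square confusion matrix.

    Rows are true classes and columns are predicted classes.
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    p_o = sum(confusion[i][i] for i in range(k)) / n  # observed agreement
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)  # chance agreement
    return p_o, (p_o - p_e) / (1 - p_e)


# Hypothetical confusion matrix for four poses (texting, calling, pocket, swinging).
cm = [[98, 1, 0, 1],
      [0, 99, 1, 0],
      [0, 0, 97, 3],
      [1, 0, 2, 97]]
overall_acc, kappa = accuracy_and_kappa(cm)
print(f"accuracy={overall_acc:.2%}, kappa={kappa:.2%}")
```

Because kappa discounts agreement expected by chance, it is always somewhat lower than the raw accuracy when the classifier makes any errors, consistent with the reported 98.46% kappa versus 98.85% accuracy.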

Author Contributions

This paper is a collaborative work by all the authors. G.G. proposed the idea, implemented the system, performed the experiments, analyzed the data, and wrote the manuscript. R.C. and L.C. aided in proposing the idea, gave suggestions, and revised the rough draft. F.Y., M.L., Z.C., and Y.P. assisted with certain experiments.

Funding

This research was funded by the National Key Research and Development Program of China (grant nos. 2016YFB0502200 and 2016YFB0502201) and the NSFC (grant no. 91638203).

Conflicts of Interest

The authors declare that they have no conflict of interest to disclose.

References

- Hu, J.-S.; Sun, K.-C.; Cheng, C.-Y. A model-based human walking speed estimation using body acceleration data. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 1985–1990.

- Andriacchi, T.P.; Ogle, J.A.; Galante, J.O. Walking speed as a basis for normal and abnormal gait measurements. J. Biomech. 1977, 10, 261–268.

- Fritz, S.; Lusardi, M. White paper: “Walking speed: The sixth vital sign”. J. Geriatr. Phys. Ther. 2009, 32, 46–49.

- Adib, F.; Katabi, D. See through walls with WiFi! ACM Sigcomm Comput. Commun. Rev. 2013, 43, 75–86.

- Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home gesture recognition using wireless signals. ACM Sigcomm Comput. Commun. Rev. 2013, 43, 485–486.

- Zhao, M.; Adib, F.; Katabi, D. Emotion recognition using wireless signals. In Proceedings of the 22nd Annual International Conference on Mobile Computing and Networking, New York, NY, USA, 3–7 October 2016; pp. 95–108.

- Shi, S.; Sigg, S.; Zhao, W.; Ji, Y. Monitoring Attention Using Ambient FM Radio Signals. IEEE Pervasive Comput. 2014, 13, 30–36.

- Sigg, S.; Scholz, M.; Shi, S.; Ji, Y.; Beigl, M. RF-Sensing of Activities from Non-Cooperative Subjects in Device-Free Recognition Systems Using Ambient and Local Signals. IEEE Trans. Mob. Comput. 2014, 13, 907–920.

- Sigg, S.; Blanke, U.; Tröster, G. The telepathic phone: Frictionless activity recognition from WiFi-RSSI. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications, Budapest, Hungary, 24–28 March 2014; pp. 148–155.

- Park, J.-G.; Patel, A.; Curtis, D.; Teller, S.; Ledlie, J. Online pose classification and walking speed estimation using handheld devices. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 113–122.

- Yang, S.; Li, Q. Inertial Sensor-Based Methods in Walking Speed Estimation: A Systematic Review. Sensors 2012, 12, 6102–6116.

- Vathsangam, H.; Emken, A.; Spruijt-Metz, D.; Sukhatme, G.S. Toward free-living walking speed estimation using Gaussian process-based regression with on-body accelerometers and gyroscopes. In Proceedings of the 2010 4th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth), Munich, Germany, 22–25 March 2010; pp. 1–8.

- Yeoh, W.S.; Pek, I.; Yong, Y.H.; Chen, X. Ambulatory monitoring of human posture and walking speed using wearable accelerometer sensors. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 5184–5187.

- Shrestha, A.; Won, M. DeepWalking: Enabling Smartphone-based Walking Speed Estimation Using Deep Learning. arXiv, 2018; arXiv:1805.03368.

- Cox, J.; Cao, Y.; Chen, G.; He, J.; Xiao, D. Smartphone-Based Walking Speed Estimation for Stroke Mitigation. In Proceedings of the IEEE International Symposium on Multimedia, Taichung, Taiwan, 10–12 December 2014; pp. 328–332.

- Cho, D.-K.; Mun, M.; Lee, U.; Kaiser, W.J.; Gerla, M. Autogait: A mobile platform that accurately estimates the distance walked. In Proceedings of the 2010 IEEE International Conference on Pervasive Computing and Communications (PerCom), Mannheim, Germany, 29 March–2 April 2010; pp. 116–124.

- Randell, C.; Djiallis, C.; Muller, H. Personal Position Measurement Using Dead Reckoning. In Proceedings of the 7th IEEE International Symposium on Wearable Computers, White Plains, NY, USA, 21–23 October 2003; pp. 166–173.

- Foxlin, E. Pedestrian Tracking with Shoe-Mounted Inertial Sensors. IEEE Comput. Graph. Appl. 2005, 25, 38–46.

- Matsubayashi, M.; Shiraishi, Y. A Method for Estimating Walking Speed by Using Magnetic Signature to Grasp People Flow in Indoor Passages. In Proceedings of the 13th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Hiroshima, Japan, 28 November–1 December 2016; pp. 94–99.

- Tien, I.; Glaser, S.D.; Bajcsy, R.; Goodin, D.S.; Aminoff, M.J. Results of using a wireless inertial measuring system to quantify gait motions in control subjects. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 904–915.

- Jahn, J.; Batzer, U.; Seitz, J.; Patino-Studencka, L.; Boronat, J.G. Comparison and evaluation of acceleration based step length estimators for handheld devices. In Proceedings of the International Conference on Indoor Positioning and Indoor Navigation, Vienna, Austria, 15–17 December 2010; pp. 1–6.

- Chen, R.; Pei, L.; Chen, Y. A smart phone based PDR solution for indoor navigation. In Proceedings of the 24th International Technical Meeting of the Satellite Division of the Institute of Navigation, Portland, OR, USA, 20–23 September 2011; pp. 1404–1408.

- Renaudin, V.; Susi, M.; Lachapelle, G. Step length estimation using handheld inertial sensors. Sensors 2012, 12, 8507–8525.

- Wang, H.; Sen, S.; Elgohary, A.; Farid, M.; Youssef, M.; Choudhury, R.R. No need to war-drive: Unsupervised indoor localization. In Proceedings of the International Conference on Mobile Systems, Applications, and Services, Lake District, UK, 25–29 June 2012; pp. 197–210.

- Shang, J.; Gu, F.; Hu, X.; Kealy, A. APFiLoc: An Infrastructure-Free Indoor Localization Method Fusing Smartphone Inertial Sensors, Landmarks and Map Information. Sensors 2015, 15, 27251–27272.

- Tian, Q.; Salcic, Z.; Wang, K.I.-K.; Pan, Y. A multi-mode dead reckoning system for pedestrian tracking using smartphones. IEEE Sens. J. 2016, 16, 2079–2093.

- Cho, S.Y.; Park, C.G. MEMS Based Pedestrian Navigation System. J. Navig. 2005, 59, 135–153.

- Weinberg, H. Using the ADXL202 in pedometer and personal navigation applications. In Analog Devices AN-602 Application Note 2.2; Analog Devices, Inc.: Norwood, MA, USA, 2002; Volume 2, pp. 1–6.

- Kim, J.W.; Jang, H.J.; Hwang, D.-H.; Park, C. A step, stride and heading determination for the pedestrian navigation system. Positioning 2004, 1.

- Li, F.; Zhao, C.; Ding, G.; Gong, J.; Liu, C.; Zhao, F. A reliable and accurate indoor localization method using phone inertial sensors. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 421–430.

- Chen, R.; Chu, T.; Liu, K.; Liu, J.; Chen, Y. Inferring Human Activity in Mobile Devices by Computing Multiple Contexts. Sensors 2015, 15, 21219–21238.

- Kuang, J.; Niu, X.; Chen, X. Robust Pedestrian Dead Reckoning Based on MEMS-IMU for Smartphones. Sensors 2018, 18.

- Qian, J.; Pei, L.; Ma, J.; Ying, R.; Liu, P. Vector graph assisted pedestrian dead reckoning using an unconstrained smartphone. Sensors 2015, 15, 5032–5057.

- Hand, D.J.; Yu, K. Idiot’s Bayes—Not So Stupid After All? Int. Stat. Rev. 2001, 69, 385–398.

- Witten, I.H.; Frank, E. Data Mining: Practical Machine Learning Tools and Techniques with Java Implementations; Morgan Kaufmann Publishers: Burlington, MA, USA, 2000.

- Borio, D. Accelerometer signal features and classification algorithms for positioning applications. In Proceedings of the 2011 International Technical Meeting of The Institute of Navigation, San Diego, CA, USA, 24–26 January 2011.

- Hagan, M.T.; Demuth, H.B.; Beale, M.H.; De Jesús, O. Neural Network Design; PWS Publishing Company: Boston, MA, USA, 1996; Volume 20.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Yin, J.; Yang, Q.; Pan, J.J. Sensor-Based Abnormal Human-Activity Detection. IEEE Trans. Knowl. Data Eng. 2008, 20, 1082–1090.

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22.

- Abdulazim, T.; Abdelgawad, H.; Habib, K.; Abdulhai, B. Using smartphones and sensor technologies to automate collection of travel data. Transp. Res. Rec. J. Transp. Res. Board 2013, 44–52.

- Shafique, M.A.; Hato, E. Use of acceleration data for transportation mode prediction. Transportation 2015, 42, 163–188.

- Kuo, A.D. A simple model of bipedal walking predicts the preferred speed–step length relationship. J. Biomech. Eng. 2001, 123, 264–269.

- Butterworth, S. On the theory of filter amplifiers. Wirel. Eng. 1930, 7, 536–541.

- Susi, M.; Renaudin, V.; Lachapelle, G. Motion mode recognition and step detection algorithms for mobile phone users. Sensors 2013, 13, 1539–1562.

- Pratama, A.R.; Widyawan; Hidayat, R. Smartphone-based Pedestrian Dead Reckoning as an indoor positioning system. In Proceedings of the International Conference on System Engineering and Technology, Bandung, Indonesia, 11–12 September 2012; pp. 1–6.

- Tian, Q.; Salcic, Z.; Wang, K.I.-K.; Pan, Y. An enhanced pedestrian dead reckoning approach for pedestrian tracking using smartphones. In Proceedings of the 2015 IEEE Tenth International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), Singapore, 7–9 April 2015; pp. 1–6.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).