A Novel Cloud Removal Method Based on IHOT and the Cloud Trajectories for Landsat Imagery

Abstract

1. Introduction

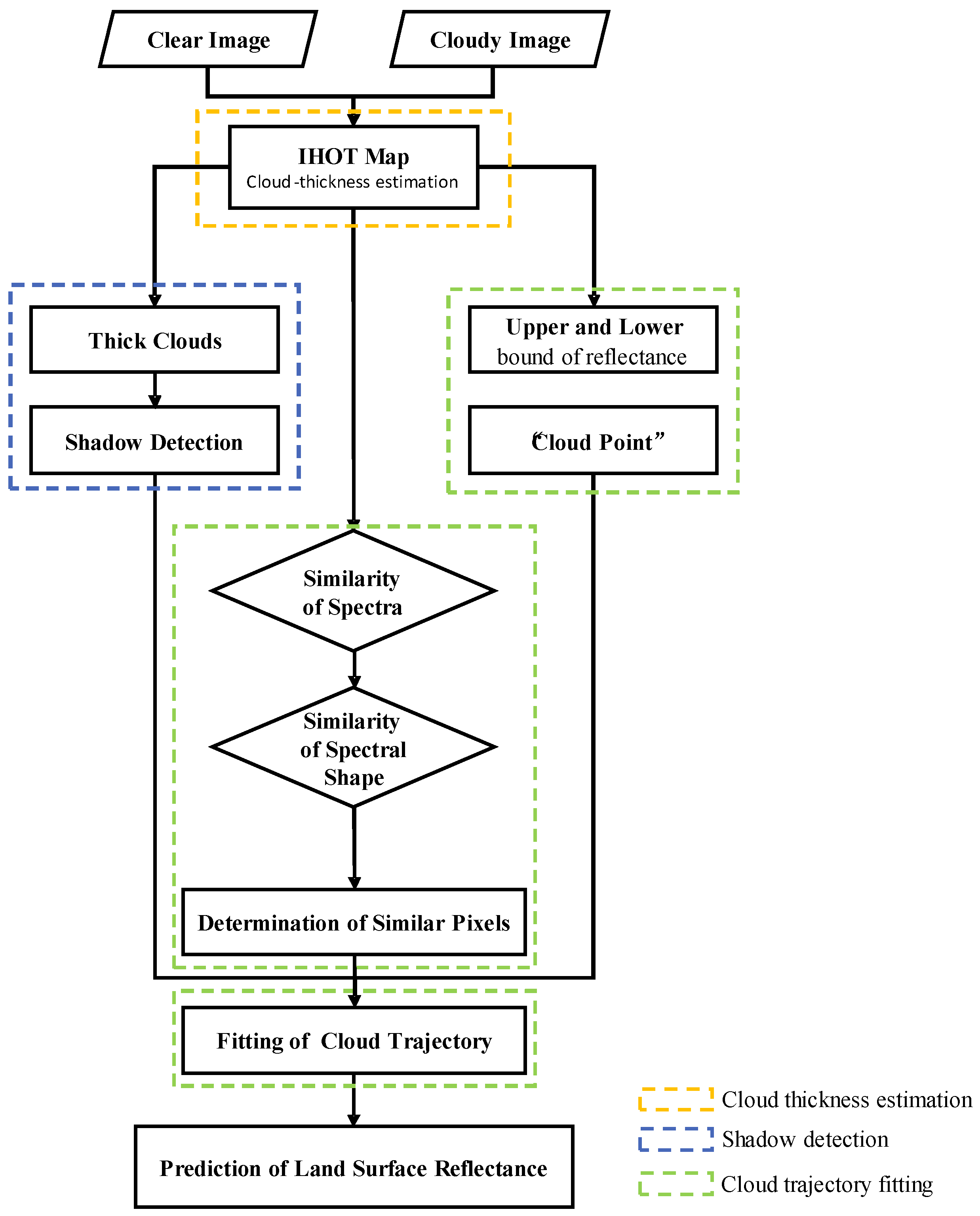

2. Methodology

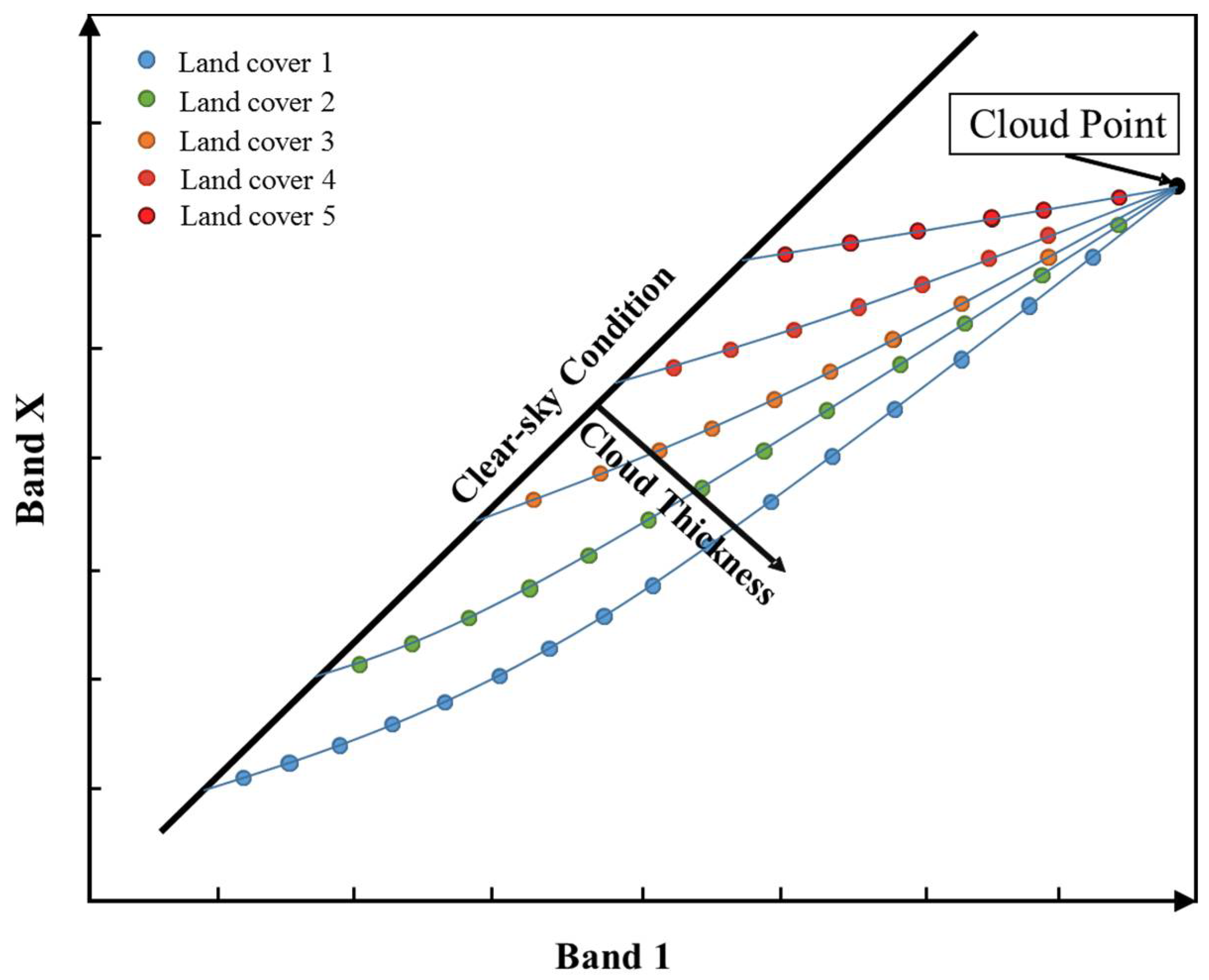

2.1. Cloud Thickness Estimation

2.2. Shadow Detection

2.3. Cloud Trajectory Fitting

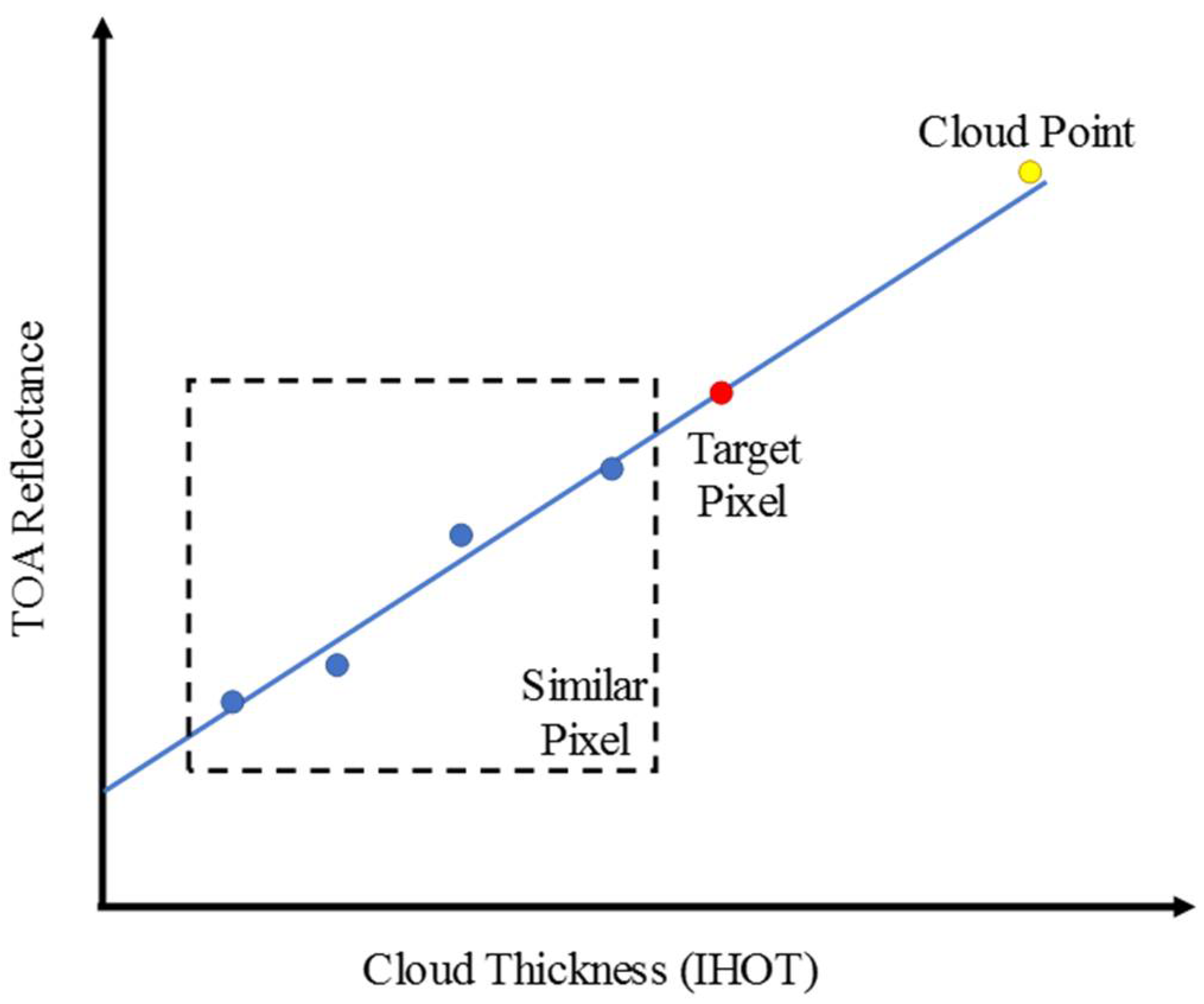

2.3.1. Determining Cloud Point

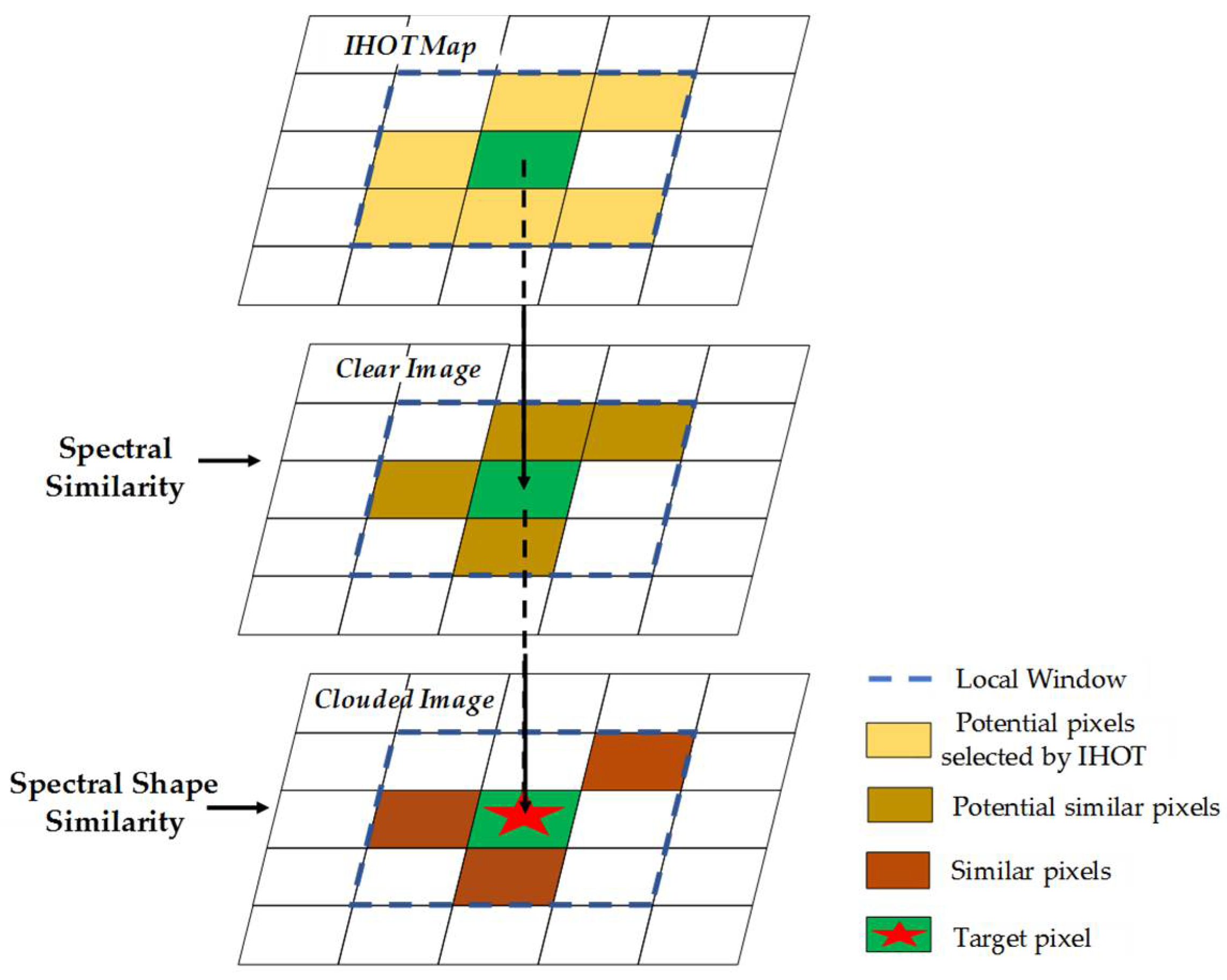

2.3.2. Retrieving Similar Clouded Pixels

2.3.3. Cloud Trajectory Fitting

2.4. Cloud Removal

3. Experiment

3.1. Study Area and Data

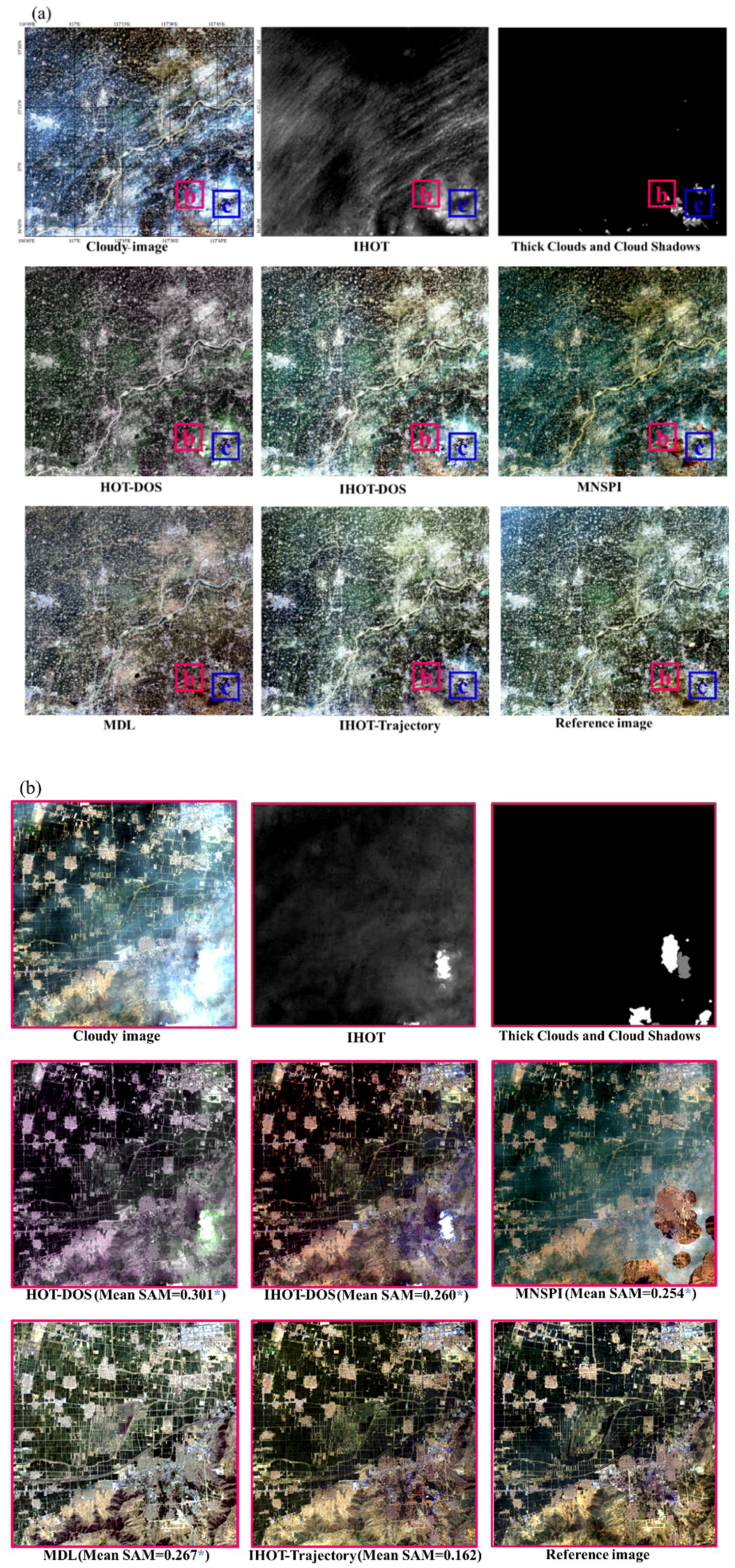

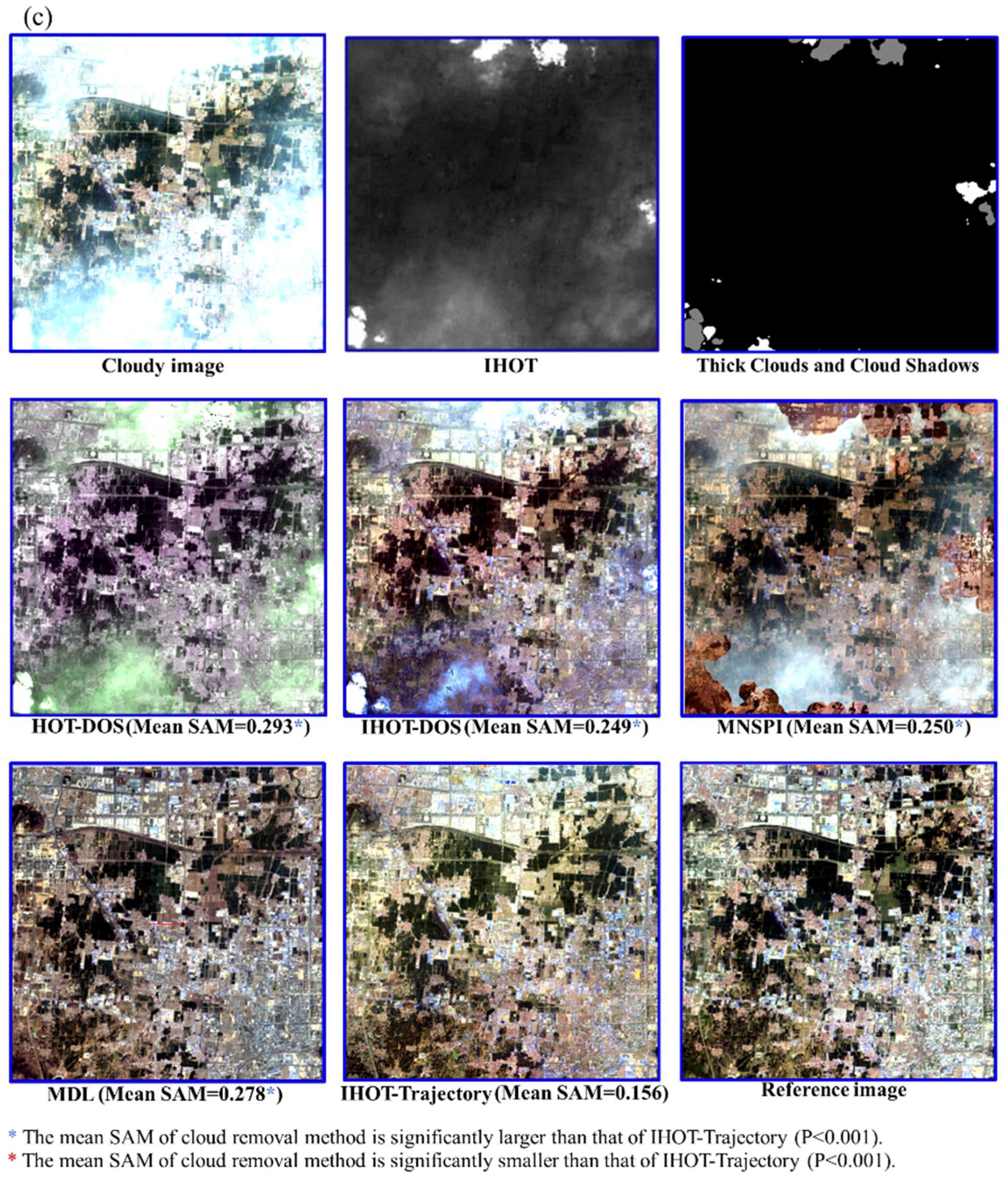

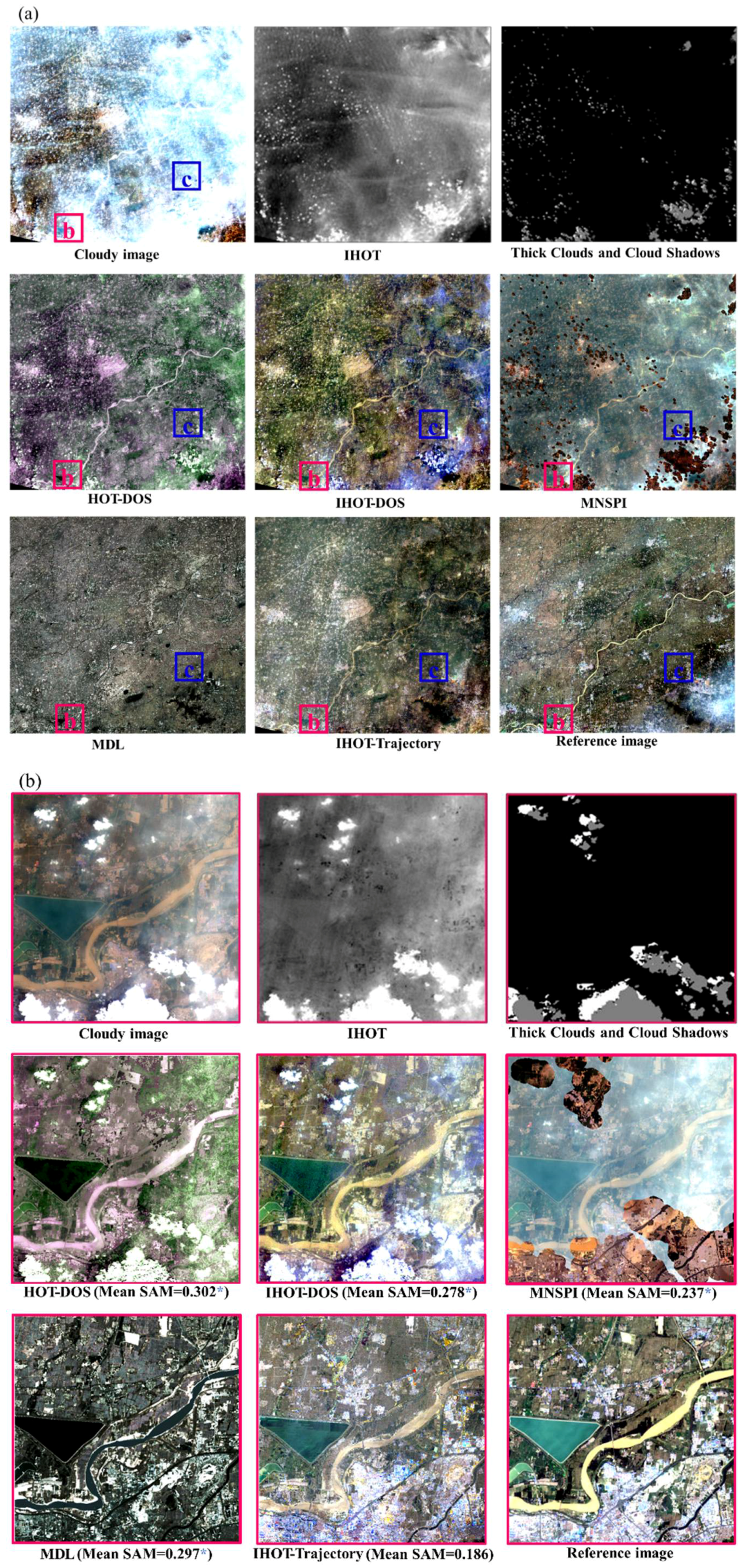

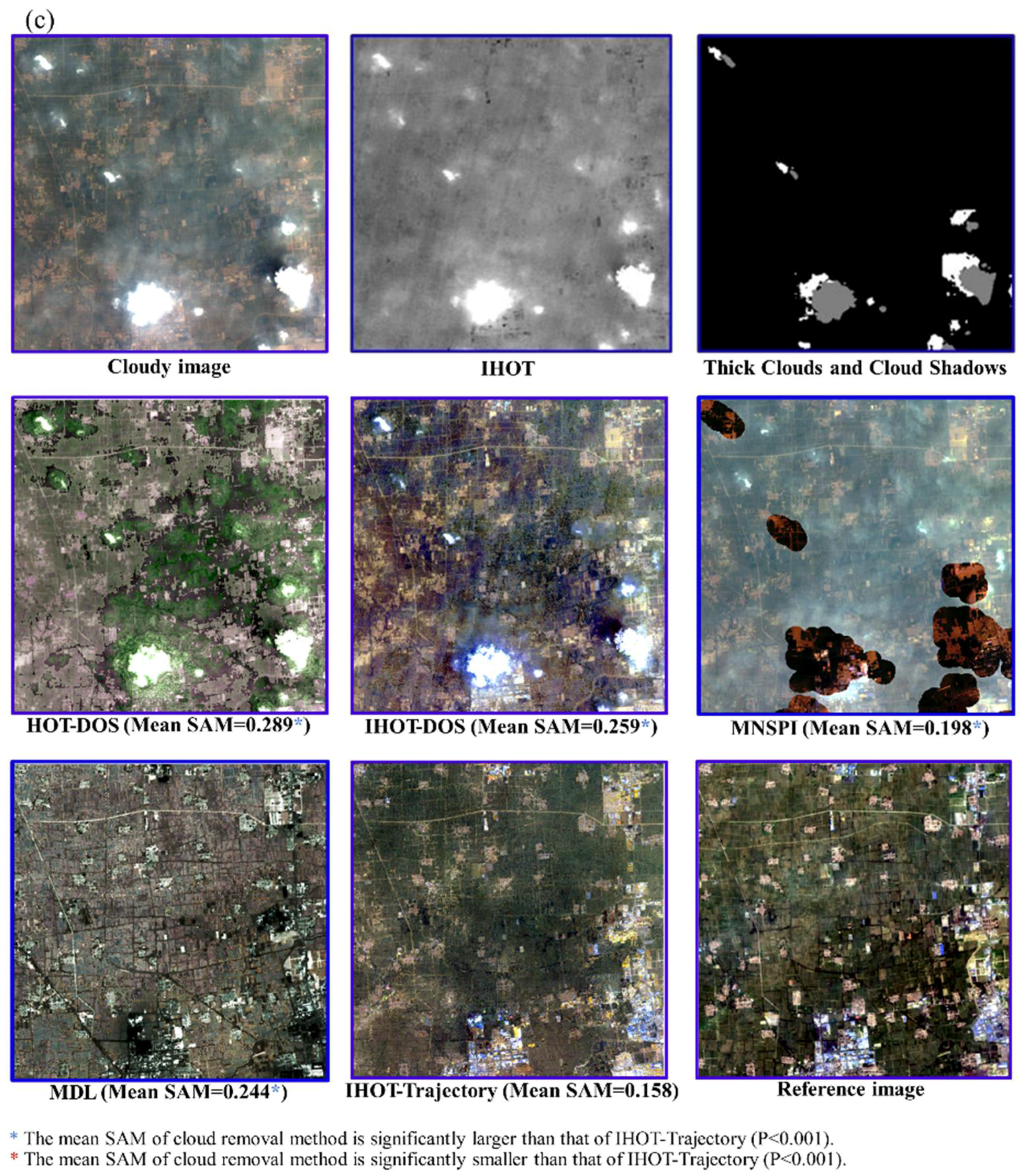

3.2. Cloud Removal Results

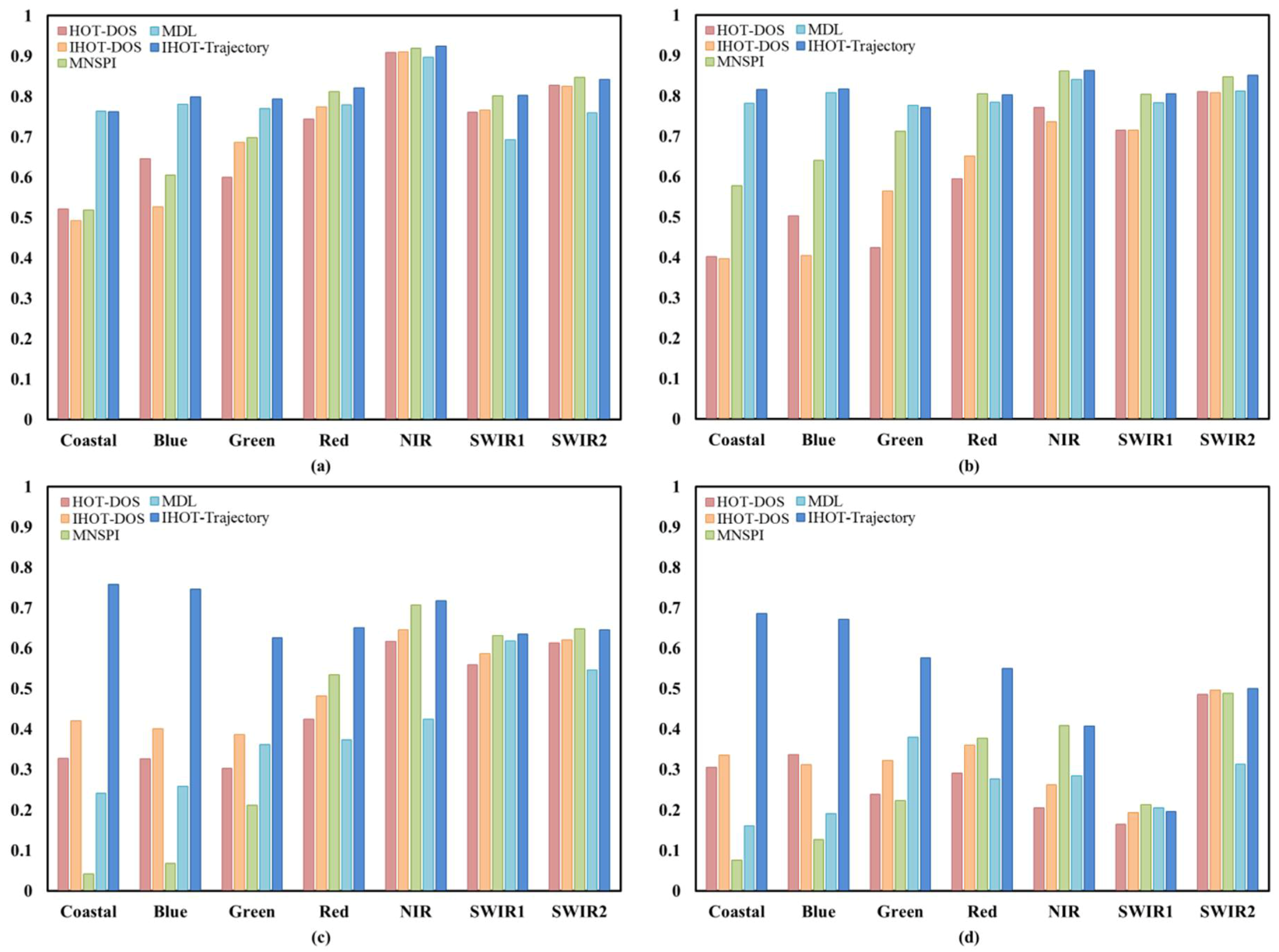

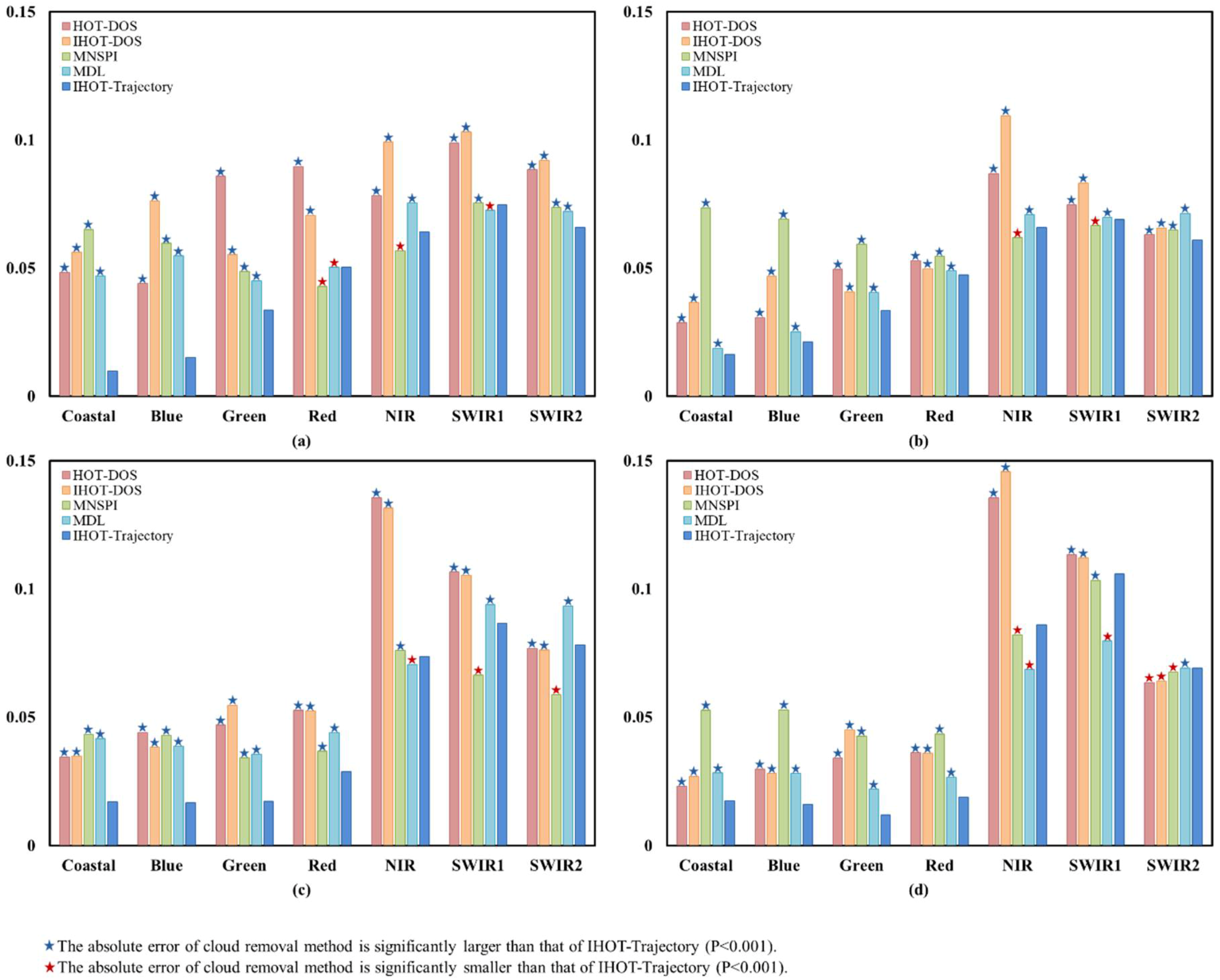

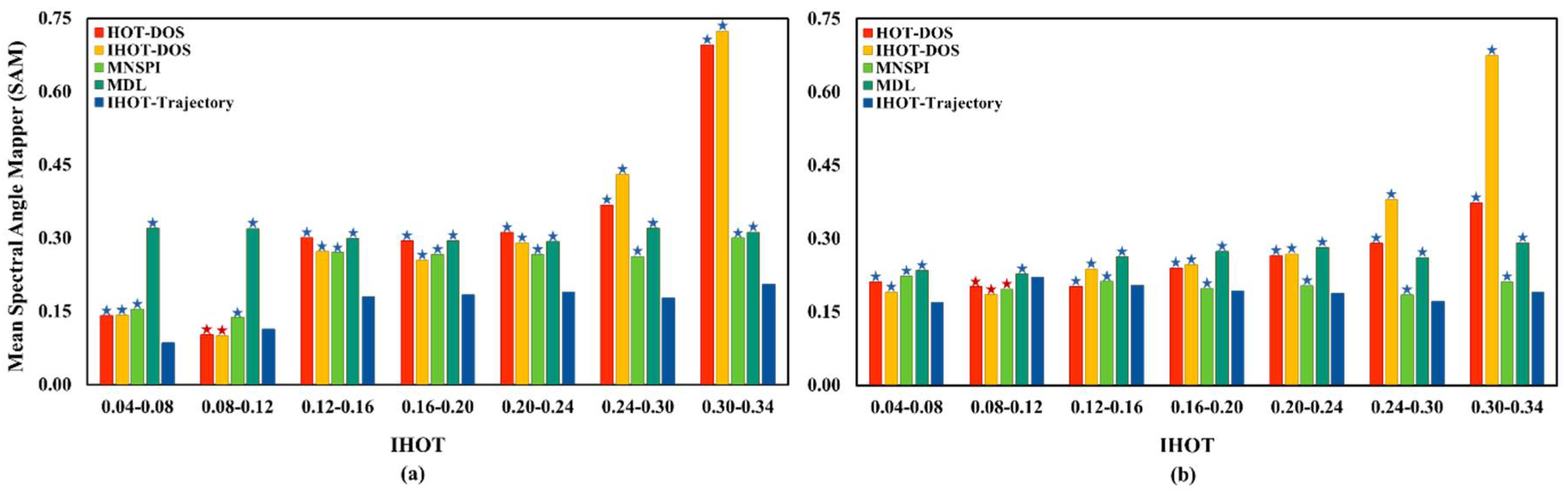

3.3. Quantitative Assessment of the Methods

4. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Acquisition Date | Sensor | Image Type | Figure |
|---|---|---|---|
| 22 April 2014 | Landsat-8 OLI | Clouded | Figure 6 |
| 25 April 2015 | Landsat-8 OLI | Reference | Figure 6 |
| 6 June 2013 | Landsat-8 OLI | Clouded | Figure 7 |
| 11 July 2014 | Landsat-8 OLI | Reference | Figure 7 |
| 24 March 2014 | Landsat-8 OLI | Clear-sky | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, S.; Chen, X.; Chen, X.; Chen, J.; Cao, X.; Shen, M.; Yang, W.; Cui, X. A Novel Cloud Removal Method Based on IHOT and the Cloud Trajectories for Landsat Imagery. Remote Sens. 2018, 10, 1040. https://doi.org/10.3390/rs10071040
