Least Angle Regression-Based Constrained Sparse Unmixing of Hyperspectral Remote Sensing Imagery

Abstract

1. Introduction

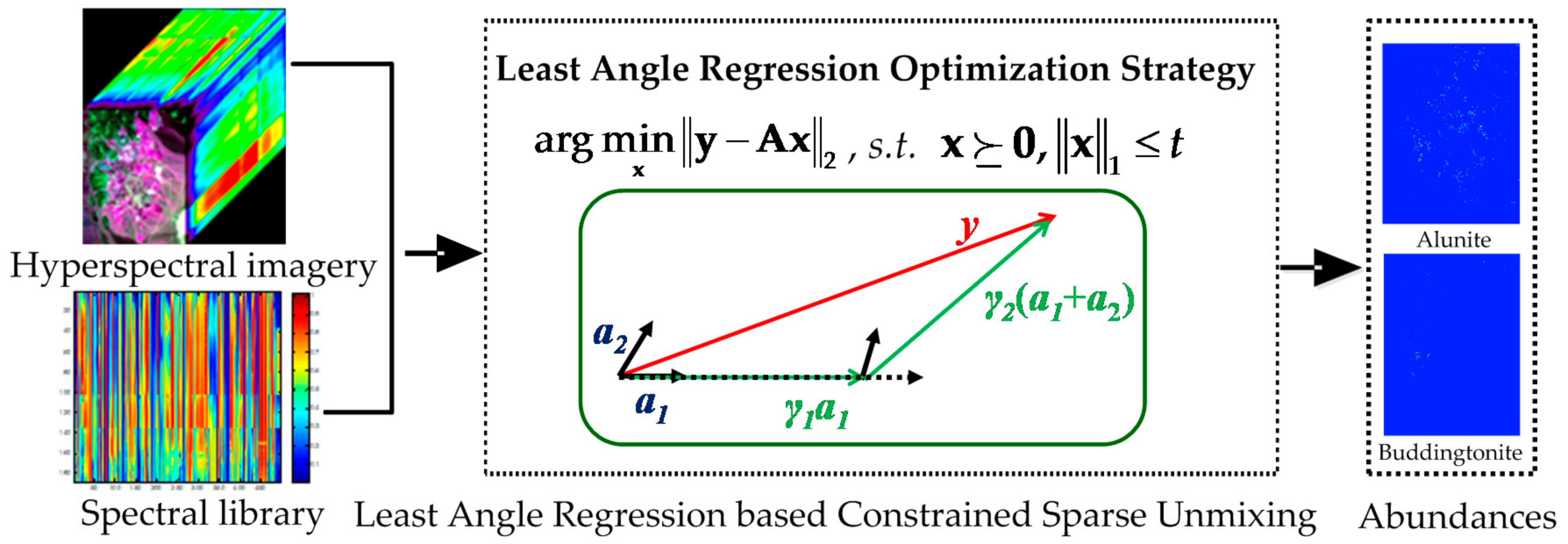

2. Least Angle Regression-Based Constrained Sparse Unmixing

2.1. Least Angle Regression

2.2. Least Angle Regression-Based Constrained Sparse Unmixing

| Algorithm 1 The Least Angle Regression-Based Constrained Sparse Unmixing Algorithm |

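| (1) Initialization: |

| (1.1) Set $\mathbf{x} = \mathbf{0}$ and $\mathbf{y}_\Lambda = \mathbf{0}$, and compute the current correlations $\mathbf{c}$ with $\mathbf{c} = \mathbf{A}^{T}(\mathbf{y} - \mathbf{y}_\Lambda)$; |

| (1.2) Build up the active set $\Lambda$ with $\Lambda = \{ j : \lvert c_j \rvert = \hat{C} \}$, where $\hat{C} = \max_j \lvert c_j \rvert$; |

| (1.3) Let $s_j = \operatorname{sign}(c_j)$ for $j \in \Lambda$ and $\mathbf{A}_\Lambda = (\cdots, s_j \mathbf{a}_j, \cdots)_{j \in \Lambda}$, where $\mathbf{a}_j$ denotes the j-th endmember (the j-th column of the spectral library $\mathbf{A}$). |

| (2) Repeat: |

| (2.1) Update the equiangular vector $\mathbf{u}_\Lambda$: |

| $\mathbf{u}_\Lambda = \mathbf{A}_\Lambda \mathbf{w}_\Lambda$, where $\mathbf{w}_\Lambda = D_\Lambda \mathbf{G}_\Lambda^{-1} \mathbf{1}_\Lambda$; $\mathbf{G}_\Lambda = \mathbf{A}_\Lambda^{T} \mathbf{A}_\Lambda$; $D_\Lambda = (\mathbf{1}_\Lambda^{T} \mathbf{G}_\Lambda^{-1} \mathbf{1}_\Lambda)^{-1/2}$; and $\mathbf{1}_\Lambda$ is a vector whose elements are all 1 and whose length is equal to $\lvert \Lambda \rvert$. |

| (2.2) Compute the correlations of the covariates outside the active set $\Lambda$: |

| $c_j = \mathbf{a}_j^{T}(\mathbf{y} - \mathbf{y}_\Lambda)$ for $j \notin \Lambda$ or, in matrix form, $\mathbf{c} = \mathbf{A}^{T}(\mathbf{y} - \mathbf{y}_\Lambda)$, where $(\mathbf{y} - \mathbf{y}_\Lambda)$ is the current residual. |

| (2.3) Find the most correlated covariate: $j^{*} = \arg\max_{j \notin \Lambda} \lvert c_j \rvert$. |

| (2.4) Compute the optimal step size, i.e., the largest step along $\mathbf{u}_\Lambda$ before an inactive covariate becomes as correlated with the residual as the active ones: $\gamma = \min^{+}_{j \notin \Lambda} \left\{ \frac{\hat{C} - c_j}{D_\Lambda - b_j}, \frac{\hat{C} + c_j}{D_\Lambda + b_j} \right\}$, where $b_j = \mathbf{a}_j^{T} \mathbf{u}_\Lambda$ and $\min^{+}$ means that the minimum is taken over positive values only. |

| Then, add the new covariate into the previous active set as $\Lambda \leftarrow \Lambda \cup \{ j^{*} \}$. |

| (2.5) Update the regression coefficient (we call this the “fractional abundance” in unmixing) as well as the current estimation and the residual, using $\mathbf{w}_\Lambda$ and $\mathbf{u}_\Lambda$ from step (2.1): |

| $x_j \leftarrow x_j + \gamma s_j w_j$ for each endmember $j$ used in step (2.1), where $w_j$ is the corresponding entry of $\mathbf{w}_\Lambda$; $\mathbf{y}_\Lambda \leftarrow \mathbf{y}_\Lambda + \gamma \mathbf{u}_\Lambda$; and $\mathbf{r} = \mathbf{y} - \mathbf{y}_\Lambda$. |

| (3) Continue until the stopping condition is satisfied, i.e., $\lVert \mathbf{y} - \mathbf{y}_\Lambda \rVert_2 \le \varepsilon$, where $\varepsilon$ is a small constant that controls the accuracy of the final regression, and then output the final fractional abundance vector $\mathbf{x}$. |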
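The name "equiangular" in step (2.1) can be checked directly from the standard least angle regression definitions of Efron et al., assumed here to be the ones used in the algorithm box: $\mathbf{u}_\Lambda = \mathbf{A}_\Lambda\mathbf{w}_\Lambda$, $\mathbf{w}_\Lambda = D_\Lambda\mathbf{G}_\Lambda^{-1}\mathbf{1}_\Lambda$, $\mathbf{G}_\Lambda = \mathbf{A}_\Lambda^{T}\mathbf{A}_\Lambda$ and $D_\Lambda = (\mathbf{1}_\Lambda^{T}\mathbf{G}_\Lambda^{-1}\mathbf{1}_\Lambda)^{-1/2}$, where $\mathbf{A}_\Lambda$ holds the sign-adjusted active endmembers:

```latex
\begin{aligned}
\mathbf{A}_\Lambda^{T}\mathbf{u}_\Lambda
  &= D_\Lambda\,\mathbf{A}_\Lambda^{T}\mathbf{A}_\Lambda\mathbf{G}_\Lambda^{-1}\mathbf{1}_\Lambda
   = D_\Lambda\,\mathbf{1}_\Lambda, \\
\lVert\mathbf{u}_\Lambda\rVert_2^{2}
  &= \mathbf{w}_\Lambda^{T}\mathbf{G}_\Lambda\mathbf{w}_\Lambda
   = D_\Lambda^{2}\,\mathbf{1}_\Lambda^{T}\mathbf{G}_\Lambda^{-1}\mathbf{1}_\Lambda
   = 1 .
\end{aligned}
```

So $\mathbf{u}_\Lambda$ is a unit vector with the same inner product $D_\Lambda$ with every signed active endmember (the same angle, if the endmembers have unit norm). Moving the estimate by $\gamma\mathbf{u}_\Lambda$ therefore lowers every active absolute correlation at the same rate, to $\hat{C} - \gamma D_\Lambda$, which is why all active endmembers stay tied as the path advances.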
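As a rough illustration of steps (1) to (3), the following NumPy sketch runs plain least angle regression (Efron et al.) on a single pixel. It is not the authors' implementation: it assumes a library `A` whose columns have unit l2 norm, uses a residual-norm tolerance `eps` as the stopping rule, and the names `lars_unmix`, `eps`, `max_iter` and `b` are introduced here for illustration only. The abundance constraints that distinguish LARCSU are not enforced; this is only the underlying regression path.

```python
import numpy as np


def lars_unmix(A, y, eps=1e-8, max_iter=None):
    """Plain least angle regression for one pixel, y ~ A @ x (sketch only).

    A        : (L, m) spectral library, columns assumed to have unit l2 norm.
    y        : (L,) observed pixel spectrum.
    eps      : assumed tolerance for the residual-norm stopping rule.
    max_iter : number of LARS steps; defaults to min(L, m).
    The abundance constraints that distinguish LARCSU are NOT enforced here.
    """
    L, m = A.shape
    max_iter = min(L, m) if max_iter is None else max_iter
    x = np.zeros(m)                        # fractional abundances
    y_hat = np.zeros(L)                    # current estimate y_Lambda
    c = A.T @ (y - y_hat)                  # current correlations
    active = [int(np.argmax(np.abs(c)))]   # start with the most correlated endmember

    for _ in range(max_iter):
        if np.linalg.norm(y - y_hat) <= eps:        # residual small enough: stop
            break
        s = np.sign(c[active])                      # s_j = sign(c_j)
        C = np.max(np.abs(c[active]))               # shared active correlation
        A_act = A[:, active] * s                    # signed active endmembers
        G = A_act.T @ A_act                         # Gram matrix G_Lambda
        g1 = np.linalg.solve(G, np.ones(len(active)))  # G^{-1} 1
        D = 1.0 / np.sqrt(np.sum(g1))               # D_Lambda
        w = D * g1                                  # w_Lambda
        u = A_act @ w                               # unit equiangular vector u_Lambda
        b = A.T @ u                                 # correlation of each endmember with u

        # Smallest positive step at which an inactive endmember becomes as
        # correlated with the residual as the active ones; C / D is the full
        # least-squares step on the current active set.
        gamma, new_j = C / D, None
        for j in range(m):
            if j in active:
                continue
            for num, den in ((C - c[j], D - b[j]), (C + c[j], D + b[j])):
                if den > 1e-12 and 1e-12 < num / den < gamma:
                    gamma, new_j = num / den, j

        x[active] += gamma * s * w                  # abundance update
        y_hat += gamma * u                          # estimate update
        c = A.T @ (y - y_hat)                       # correlations with the new residual
        if new_j is None:                           # least-squares fit reached
            break
        active.append(new_j)                        # new endmember joins the active set
    return x
```

The entering endmember is taken as the one that attains the minimum step; right after that step it is also the most correlated inactive endmember, which is what step (2.3) selects. For a noiseless pixel `y = A @ x_true` with a full-column-rank `A`, `lars_unmix(A, y)` ends at the least-squares fit and so returns approximately `x_true`; on real data, `eps` and `max_iter` decide how many endmembers enter the solution.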

3. Experiments and Analysis

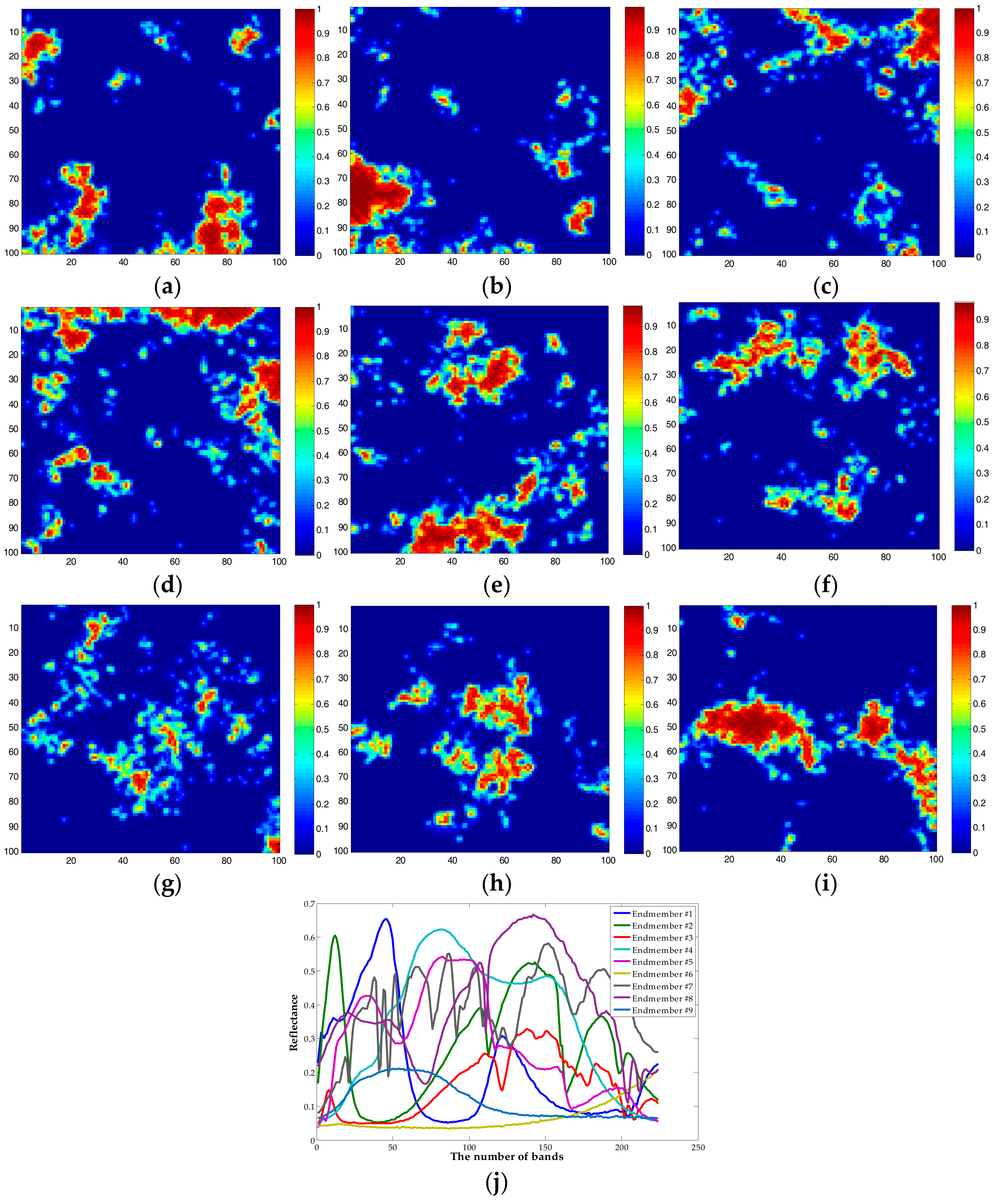

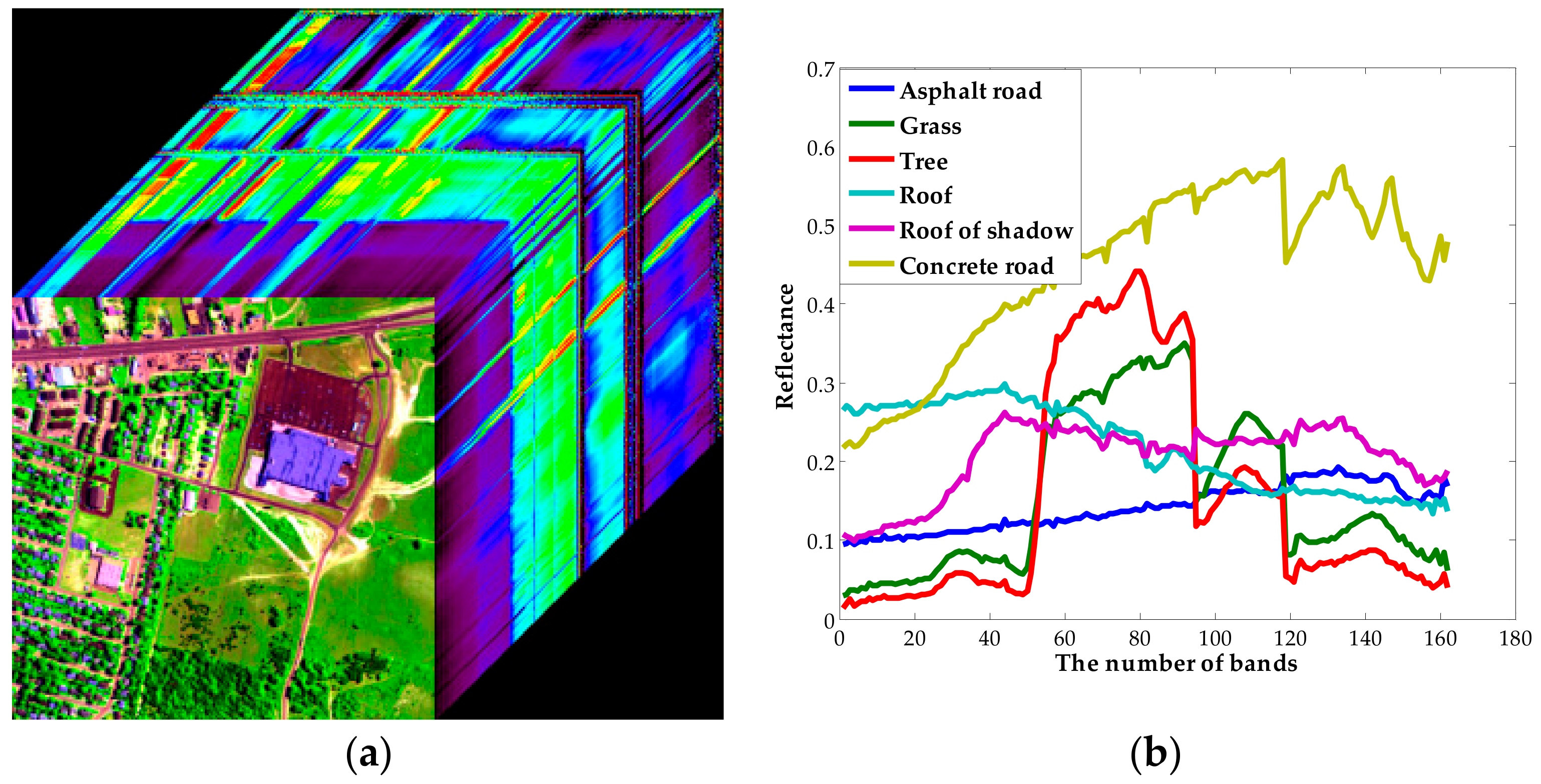

3.1. Experimental Datasets

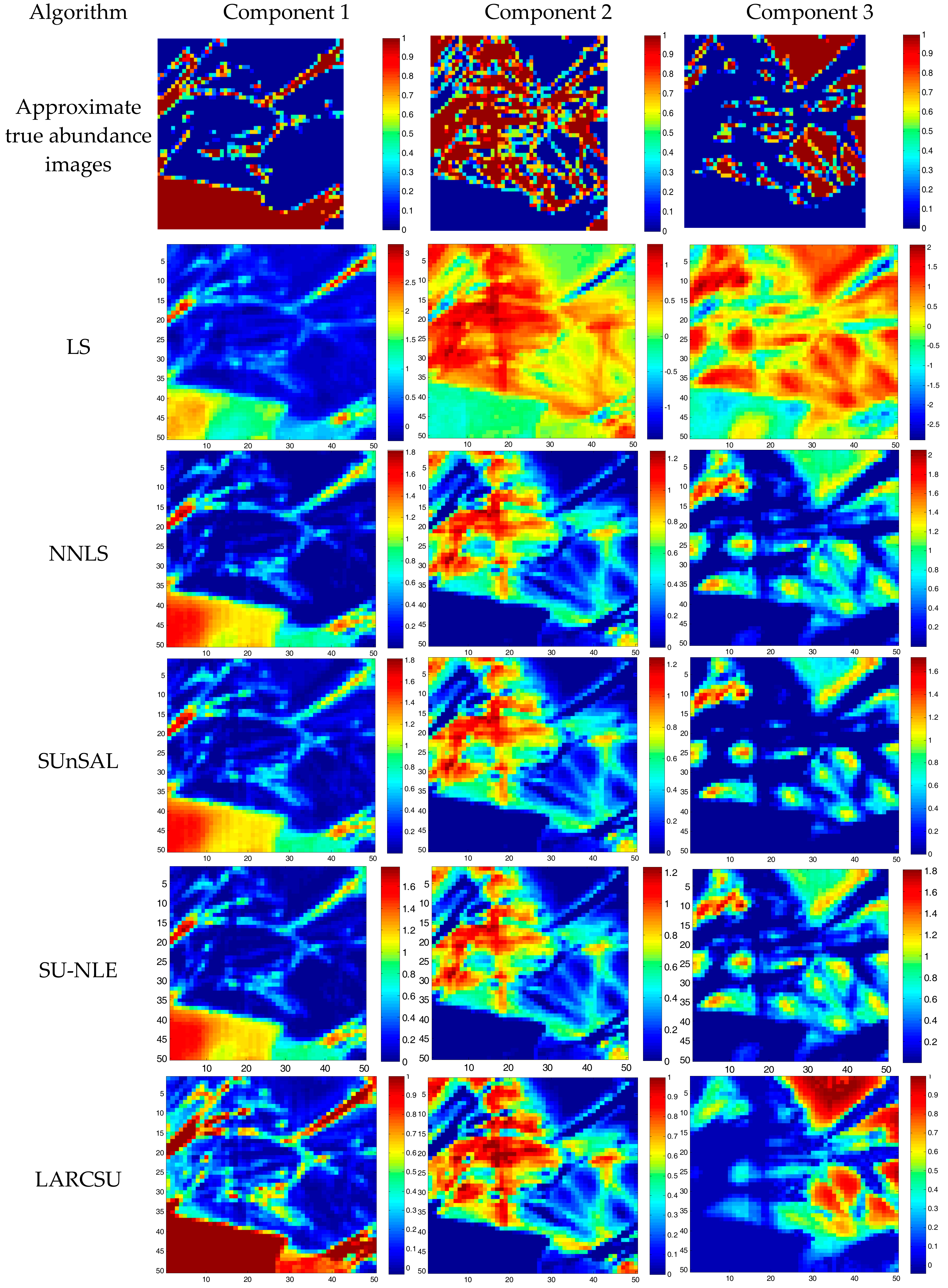

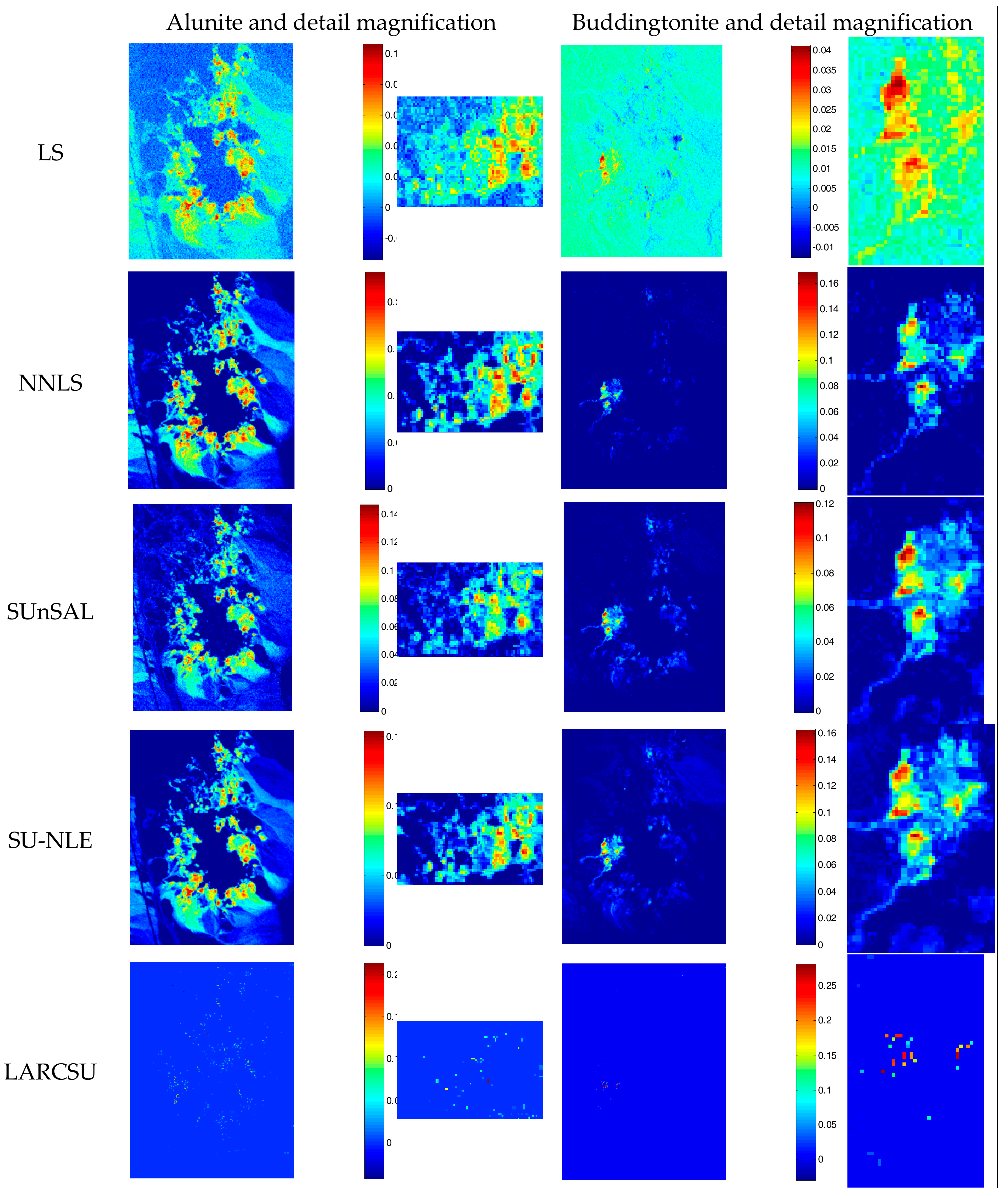

3.2. Results and Analysis

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Data | Metric | LS | NNLS | SUnSAL | SU-NLE | LARCSU |
|---|---|---|---|---|---|---|
| S-1 | SRE (dB) | 14.863 | 15.147 | 15.148 | 15.706 | 17.000 |
|  | RMSE | 0.0435 | 0.0421 | 0.0421 | 0.0394 | 0.0340 |
|  | Time (s) | 0.0156 | 0.5156 | 0.7500 | 13.0323 | 8.1563 |
| S-2 | SRE (dB) | 7.528 | 15.710 | 15.886 | 15.88 | 17.000 |
|  | RMSE | 0.1068 | 0.0416 | 0.0408 | 0.0408 | 0.0359 |
|  | Time (s) | 0.0781 | 0.8281 | 2.7531 | 50.9219 | 20.5313 |
| R-1 | SRE (dB) | 1.233 | 4.377 | 4.928 | 4.8570 | 6.366 |
|  | RMSE | 0.4669 | 0.3251 | 0.3051 | 0.3120 | 0.2586 |
|  | Time (s) | 0.0156 | 0.1406 | 2.9234 | 3.6563 | 2.5469 |
| R-2 | Time (s) | 1.875 | 923.1094 | 1.3239 × 10³ | 3.0393 × 10⁴ | 594.7188 |
| R-3 | Time (s) | 0.3281 | 11.7031 | 118.4375 | 928.3750 | 170.4219 |
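The SRE (dB) and RMSE rows compare estimated abundances with reference abundances, but their formulas are not given in this part of the text. The sketch below assumes the definitions commonly used in the sparse unmixing literature: SRE = 10 log10(E[‖x‖²] / E[‖x − x̂‖²]) with the expectations taken as averages over pixels, and RMSE as the root of the mean squared abundance error over all entries. The function names `sre_db` and `rmse` are hypothetical.

```python
import numpy as np


def sre_db(X_true, X_est):
    """Signal-to-reconstruction error in dB (assumed common definition).

    X_true, X_est : (m, N) abundance matrices, one column per pixel.
    """
    signal = np.mean(np.sum(X_true ** 2, axis=0))           # E[||x||^2] over pixels
    error = np.mean(np.sum((X_true - X_est) ** 2, axis=0))  # E[||x - x_hat||^2]
    return float(10.0 * np.log10(signal / error))


def rmse(X_true, X_est):
    """Root-mean-square abundance error over all entries (assumed definition)."""
    return float(np.sqrt(np.mean((X_true - X_est) ** 2)))
```

Under these definitions, a higher SRE and a lower RMSE both indicate abundances closer to the reference, which is how the SRE and RMSE rows of the table are read.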

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Feng, R.; Wang, L.; Zhong, Y. Least Angle Regression-Based Constrained Sparse Unmixing of Hyperspectral Remote Sensing Imagery. Remote Sens. 2018, 10, 1546. https://doi.org/10.3390/rs10101546
