Mobile Laser Scanned Point-Clouds for Road Object Detection and Extraction: A Review

Abstract

1. Introduction

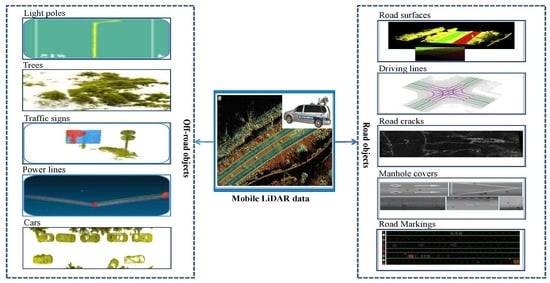

2. Mobile Laser Scanning: The State-of-the-Art

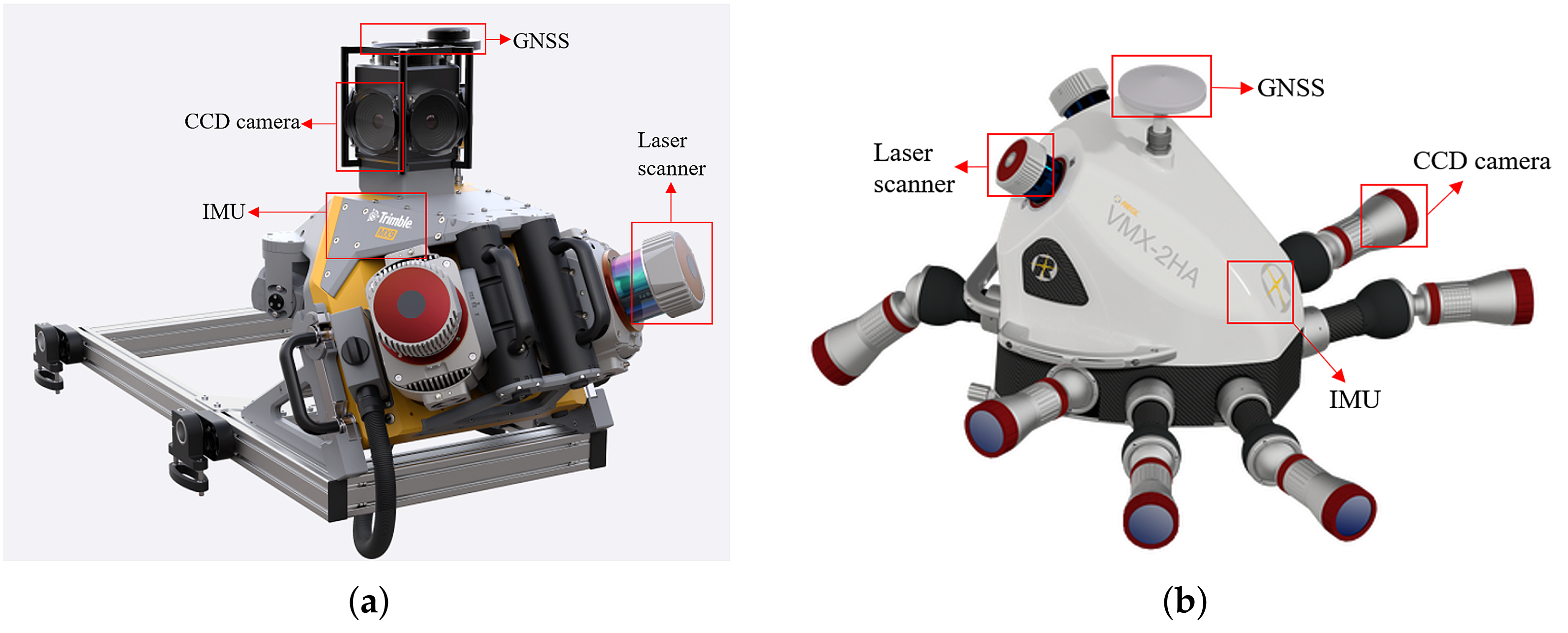

2.1. Components of MLS System

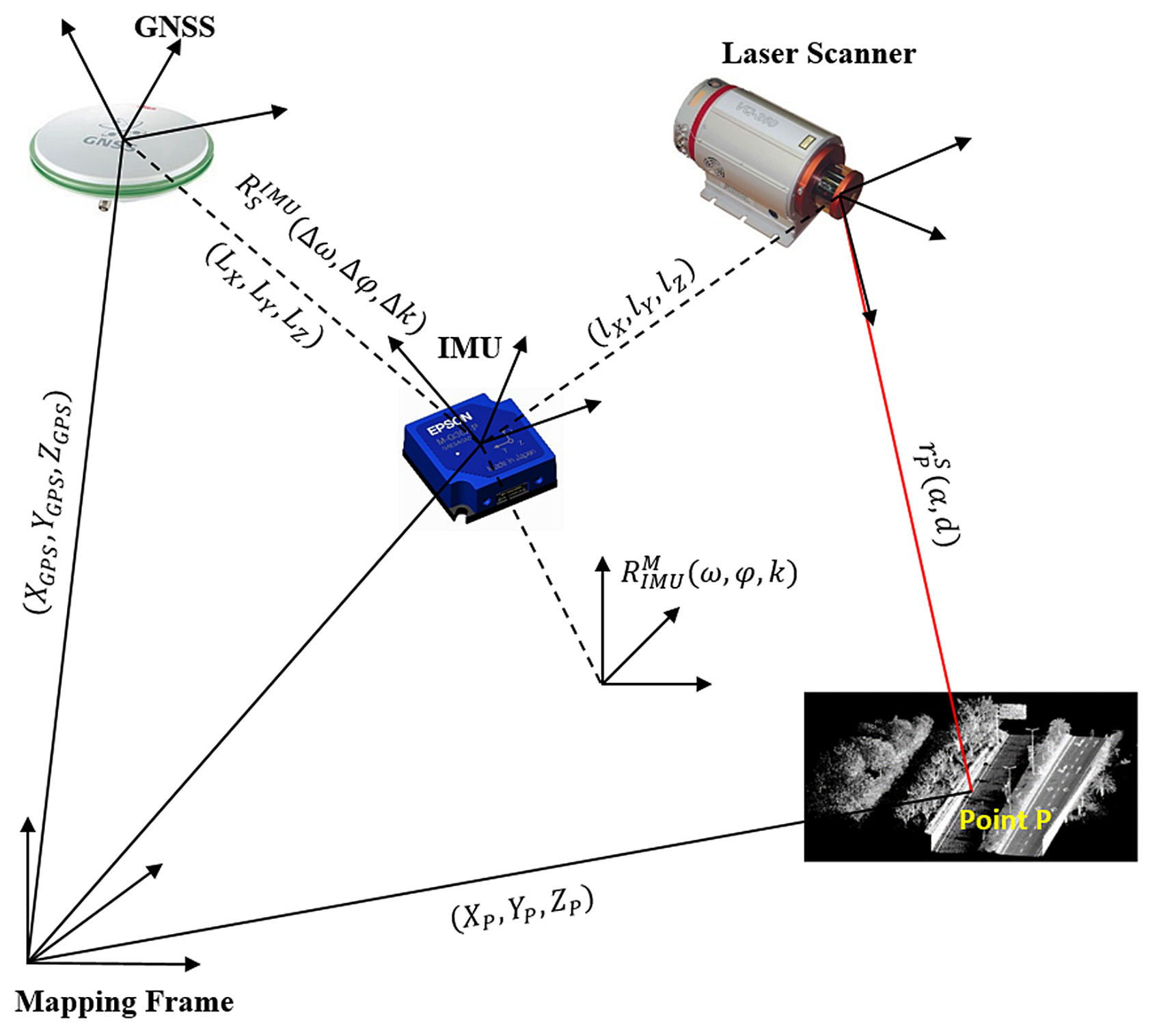

2.2. Direct Geo-Referencing

2.3. Error Analysis for MLS Systems

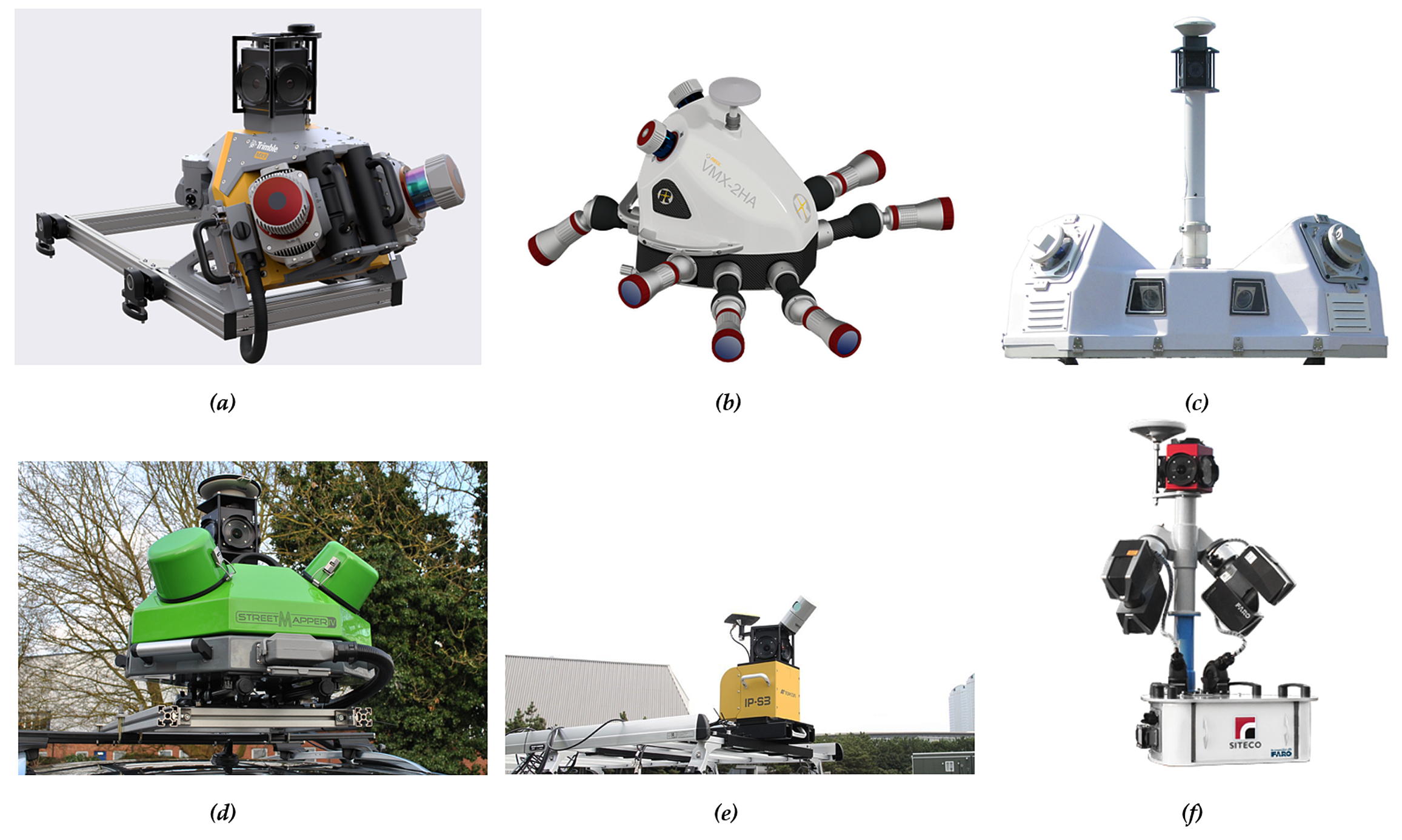

2.4. Introduction of Several Commercial MLS Systems

2.5. Open Access MLS Datasets

3. On-Road Information Extraction

3.1. Road Surface Extraction

3.1.1. 3D Point-Based Extraction

3.1.2. 2D Feature Image-Based Extraction

3.1.3. Other Data-Based Extraction

3.2. Road Marking Extraction and Classification

3.2.1. 2D GRF Image-Driven Extraction

3.2.2. 3D Point-Driven Extraction

3.3. Driving Line Generation

3.4. Road Crack Detection

3.5. Road Manhole Detection

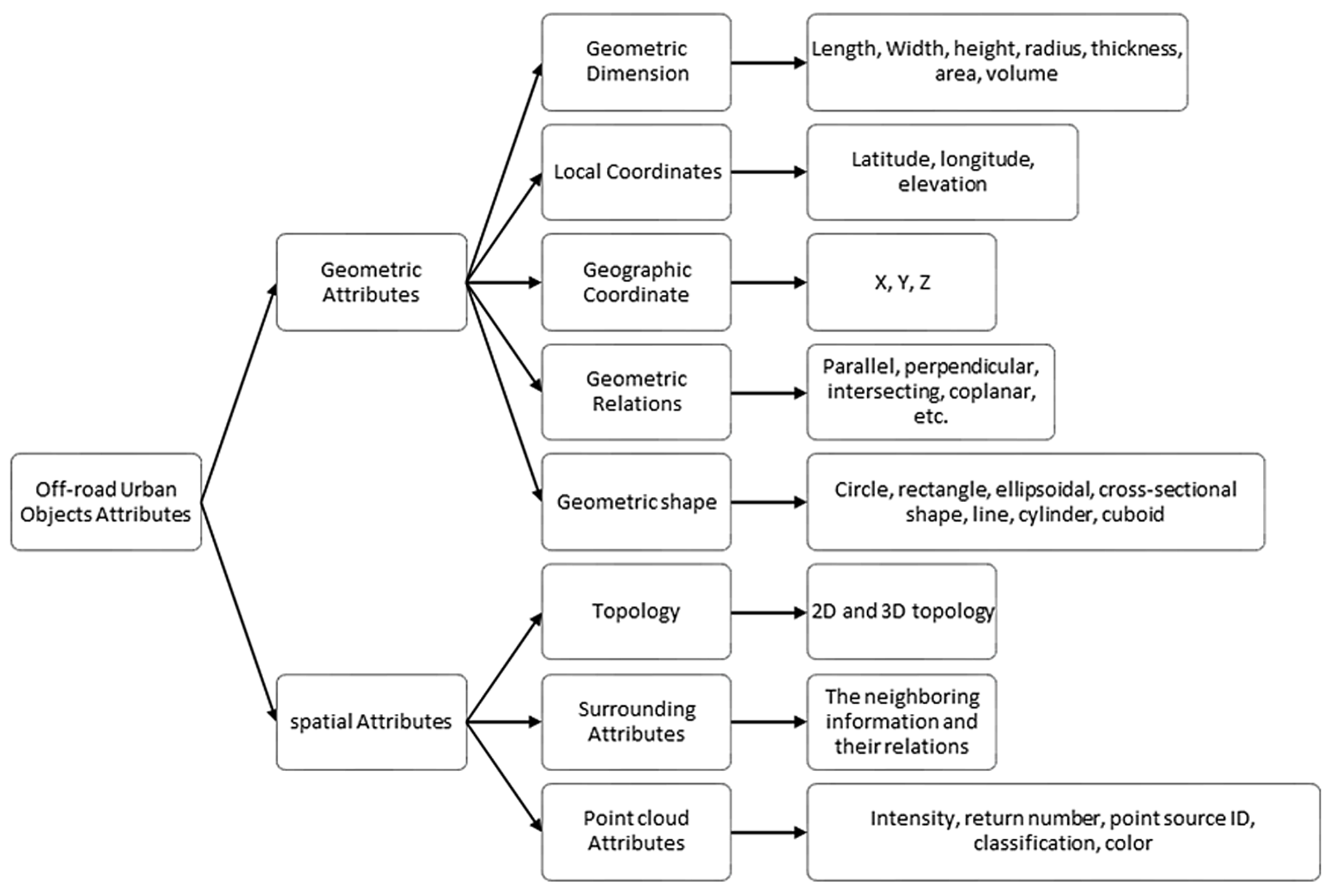

4. Off-Road Information Extraction

- Euclidean clustering. This method computes the Euclidean distances between each point and its neighbors and groups points whose distances fall below a threshold. It is simple and efficient, but it has no initial seeding strategy and provides no control over over- or under-segmentation. In [2,97], Euclidean clustering was applied to segment the off-terrain points into different clusters. To compensate for the lack of under-segmentation control, Yu et al. [7,66,96] proposed an extended voxel-based normalized cut segmentation method to split clusters produced by Euclidean distance clustering that contain overlapping or occluded objects.

- DBSCAN. DBSCAN is the most commonly used clustering method that requires no a priori knowledge of the number of clusters. In addition, the algorithm labels points that do not belong to any cluster as noise or outliers. Riveiro et al. [99] and Soilán et al. [64] used the DBSCAN algorithm to cluster a down-sampled set of points. Before clustering, a Gaussian mixture model (GMM) was used to determine the distribution of the points that matched sign panels, and PCA-based curvature analysis was used to filter out noise points unrelated to traffic signs. Yan et al. [100] performed DBSCAN with the implementation provided by scikit-learn: points with height-normalized above-ground features were clustered, and the resulting clusters were identified and classified as potential light poles and towers.
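The Euclidean clustering step described above can be sketched as breadth-first region growing with a fixed distance tolerance. This is a minimal illustration, not the implementation used in [2,97]: the function name, the brute-force neighbor search, and the `min_size` filter are assumptions made for brevity (real MLS pipelines would use a k-d tree or voxel grid for neighbor queries).

```python
import numpy as np
from collections import deque


def euclidean_clustering(points, tolerance, min_size=1):
    """Group points connected by chains of neighbors closer than `tolerance`.

    points: (N, 3) array. Returns an (N,) array of cluster ids; points in
    clusters smaller than `min_size` are labeled -1.
    """
    points = np.asarray(points, dtype=float)
    n = len(points)
    labels = np.full(n, -1, dtype=int)
    visited = np.zeros(n, dtype=bool)
    next_id = 0
    for seed in range(n):
        if visited[seed]:
            continue
        # Grow a region from an arbitrary unvisited point: there is no seeding
        # strategy, which is one of the drawbacks noted in the text.
        visited[seed] = True
        queue = deque([seed])
        members = []
        while queue:
            i = queue.popleft()
            members.append(i)
            # Brute-force neighbor search (O(N) per query), for illustration only.
            dist = np.linalg.norm(points - points[i], axis=1)
            for j in np.flatnonzero((dist <= tolerance) & ~visited):
                visited[j] = True
                queue.append(j)
        if len(members) >= min_size:
            labels[members] = next_id
            next_id += 1
    return labels
```

Because membership depends only on the single `tolerance` value, two touching objects end up in one cluster, which is the under-segmentation problem that the normalized cut refinement of Yu et al. addresses.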
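For comparison, a minimal DBSCAN sketch follows. Yan et al. [100] relied on scikit-learn, whose `sklearn.cluster.DBSCAN(eps=..., min_samples=...)` exposes the same two parameters and marks noise with the label -1; the stand-alone NumPy version below only makes the core-point and border-point logic explicit, and its O(N²) distance matrix limits it to small point sets. The function name is an assumption.

```python
import numpy as np

NOISE = -1


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN: returns one cluster id per point, NOISE (-1) for outliers."""
    points = np.asarray(points, dtype=float)
    n = len(points)
    # Full pairwise distance matrix: fine for a sketch, far too large for MLS scenes.
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    # Each neighborhood includes the point itself, as in scikit-learn.
    neighbors = [np.flatnonzero(row <= eps) for row in dists]
    is_core = np.array([len(nb) >= min_samples for nb in neighbors])
    labels = np.full(n, NOISE, dtype=int)
    cluster = 0
    for i in range(n):
        if labels[i] != NOISE or not is_core[i]:
            continue
        # Start a new cluster from an unlabeled core point and expand it.
        labels[i] = cluster
        stack = [i]
        while stack:
            p = stack.pop()
            if not is_core[p]:
                continue  # border points join the cluster but do not expand it
            for q in neighbors[p]:
                if labels[q] == NOISE:
                    labels[q] = cluster
                    stack.append(q)
        cluster += 1
    return labels
```

Unlike Euclidean clustering, the number of clusters is never specified, and isolated points are returned as noise rather than as tiny clusters.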

4.1. Pole-Like Objects Extraction

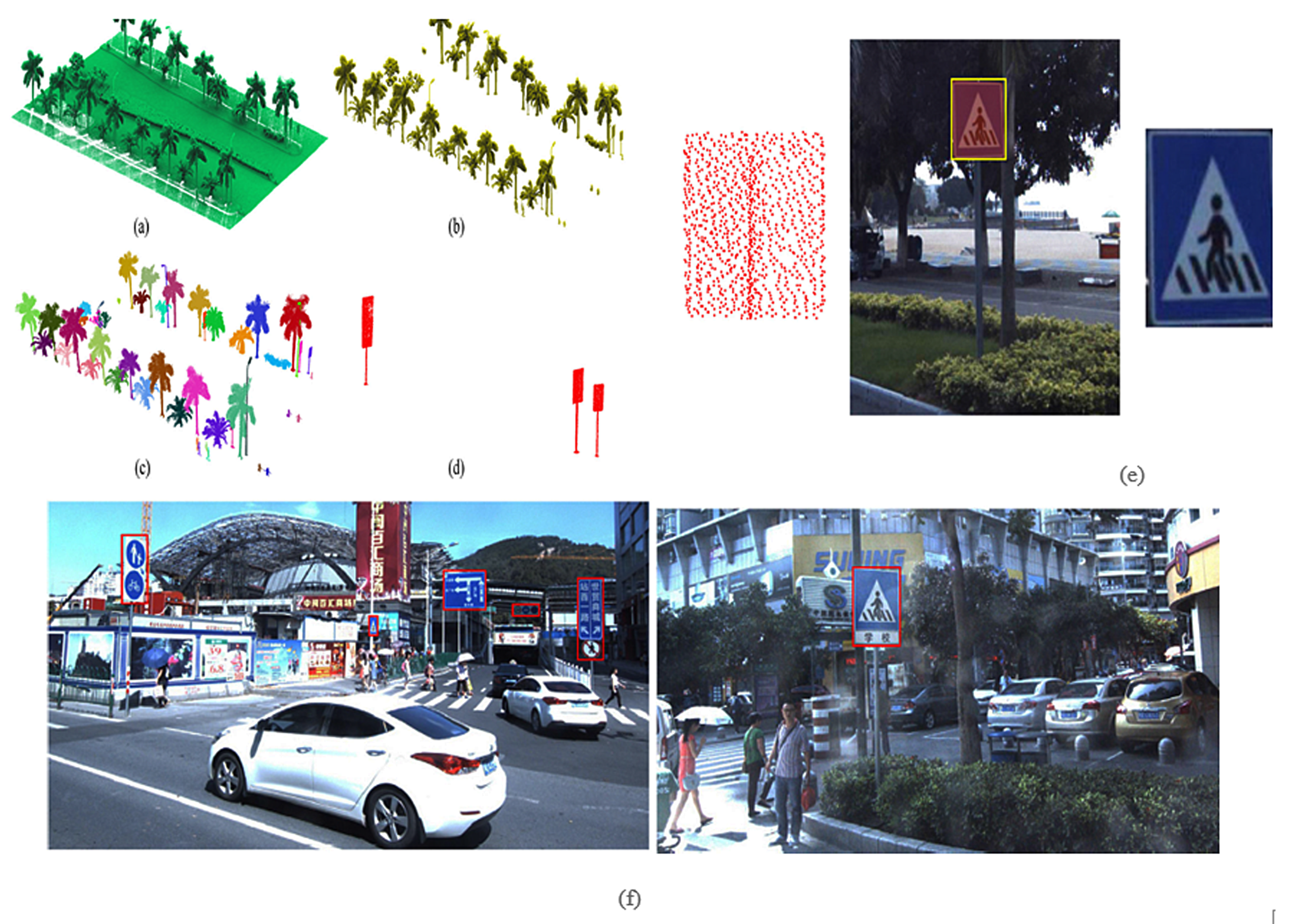

4.1.1. Traffic Sign Detection

- Machine learning methods. Machine learning-based traffic sign recognition (TSR) methods have been the most commonly used approach to image-based TSR in recent years. Wen et al. [65] assigned traffic sign types with a support vector machine (SVM) classifier trained on integral features composed of the Histogram of Oriented Gradients (HOG) and a color descriptor. Tan et al. [109] developed a latent structural SVM-based weakly supervised metric learning (WSMLR) method for TSR that learned a distance metric between the captured images and the corresponding sign templates. Each sign was then recognized by soft voting over the recognition results of its corresponding multi-view images. However, these methods require manually designed features, which are subjective and rely heavily on the researchers' prior knowledge.

- Deep learning methods. Recently, deep learning methods have been applied to MLS data because of their powerful learning capacity and superior performance without hand-designed features. By learning multilevel feature representations, deep learning models are effective in TSR. Yu et al. [96] applied the Gaussian–Bernoulli deep Boltzmann machine (GB-DBM) model to TSR. The detected traffic-sign point clouds were projected onto 2D images to produce bounding boxes, and TSR was performed within each bounding box. To train the TSR classifier, each normalized image was arranged into a feature vector and fed into a hierarchical classifier, for which a GB-DBM model was constructed to classify traffic signs. This model contains three hidden layers and one logistic regression layer whose activation units are each linked to a predefined class. Guan et al. [108] also performed TSR with a GB-DBM model and showed that their method can rapidly handle large-volume MLS point clouds for traffic sign detection (TSD) and TSR. Arcos-García et al. [63] applied a deep neural network (DNN) to perform TSR on segmented RGB images. This DNN combined convolutional, spatial transformer, non-linearity, contrast normalization, and max-pooling layers, which proved useful in the TSR stage. These deep learning methods have produced good experimental results. However, recognition depends on the preceding point-cloud traffic sign detection: if a traffic sign is missed in the detection stage, the subsequent TSR results will be inaccurate.
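As a rough illustration of the HOG-plus-color features used with an SVM in [65], the sketch below concatenates a single-cell, HOG-style histogram of gradient orientations with per-channel color histograms. It is a simplified stand-in, not the descriptor of Wen et al.: real HOG uses a grid of cells with block normalization, and the function names and bin counts here are arbitrary assumptions.

```python
import numpy as np


def orientation_histogram(gray, n_bins=9):
    """Single-cell, HOG-style histogram of unsigned gradient orientations,
    weighted by gradient magnitude and L2-normalized."""
    gy, gx = np.gradient(gray.astype(float))  # derivatives along rows, then columns
    magnitude = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned orientation in [0, 180)
    hist, _ = np.histogram(angle, bins=n_bins, range=(0.0, 180.0), weights=magnitude)
    return hist / (np.linalg.norm(hist) + 1e-9)


def color_histogram(rgb, n_bins=8):
    """Per-channel intensity histograms of an 8-bit RGB patch, each summing to 1."""
    feats = []
    for c in range(3):
        hist, _ = np.histogram(rgb[..., c], bins=n_bins, range=(0, 256))
        feats.append(hist / max(hist.sum(), 1))
    return np.concatenate(feats)


def sign_feature(rgb):
    """Concatenate shape (gradient) and color cues into one feature vector."""
    gray = rgb.mean(axis=2)
    return np.concatenate([orientation_histogram(gray), color_histogram(rgb)])
```

Vectors of this kind, computed for labeled sign patches, could then be used to train an SVM classifier such as scikit-learn's `sklearn.svm.SVC`; the hand-chosen bins and histograms illustrate why such features depend on the designer's prior knowledge.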
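The step in which detected sign points are projected onto an image to obtain a bounding box can be sketched with a pinhole camera model. The intrinsic matrix `K`, the world-to-camera rotation `R` and translation `t`, and the function name are assumptions for illustration; the calibration model of the MLS systems used in [96] is not described here.

```python
import numpy as np


def project_bbox(points_world, K, R, t, image_size):
    """Project 3D sign points into an image and return their 2D bounding box.

    Assumes a pinhole camera: x_cam = R @ X + t, pixel = K @ x_cam / z.
    Returns (u_min, v_min, u_max, v_max) clipped to the image, or None if no
    point lies in front of the camera.
    """
    X = np.asarray(points_world, dtype=float)
    cam = X @ R.T + t              # world -> camera coordinates
    cam = cam[cam[:, 2] > 0]       # keep only points in front of the camera
    if len(cam) == 0:
        return None
    uvw = cam @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]  # perspective division
    w, h = image_size
    u_min, v_min = np.clip(uv.min(axis=0), 0, [w - 1, h - 1])
    u_max, v_max = np.clip(uv.max(axis=0), 0, [w - 1, h - 1])
    return float(u_min), float(v_min), float(u_max), float(v_max)
```

The image region inside the returned box is what a recognition model would then classify, so any sign points lost during detection also shrink or remove this box.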

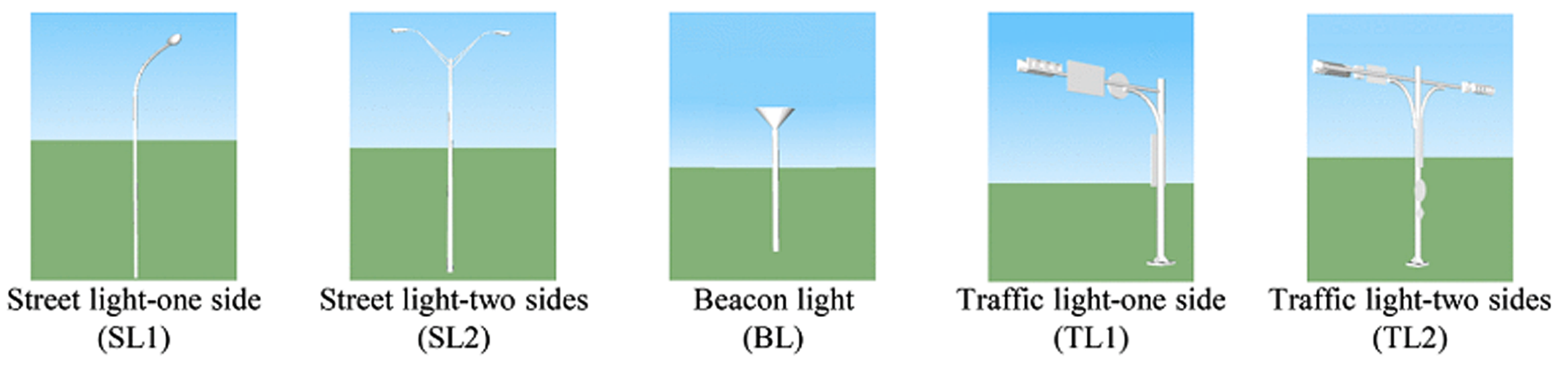

4.1.2. Light Pole Detection

- Matching-based extraction. Matching-based extraction is a representative knowledge-driven method that extracts objects by matching them against pre-selected templates. Yu et al. [66] extracted light poles using a pairwise 3D shape context, in which a shape descriptor was designed to model the geometric structure of a 3D point-cloud object; one-by-one matching, local dissimilarity, and global dissimilarity were then calculated to extract light poles. In [7], light poles were extracted by matching each segmented object with a pre-selected point cloud prototype chosen on the basis of prior knowledge. For each voxel, the point nearest the voxel centroid was chosen as the feature point. The matching cost of the feature points between the prototype and each segmented object was then computed with a 3D object matching framework, and light poles were finally extracted by filtering the matching costs of all segmented objects. Cabo et al. [97] spatially discretized the initial point cloud into voxels and used a reduced set of representative points (20–30% of the initial points) as input. Because each point was linked to its voxel, this data reduction kept the model tractable without losing any information. They then selected and clustered small 2D segments compatible with a component of a target pole. Finally, the 3D voxels representing the detected pole-like objects were determined, and the points of the initial point cloud belonging to each pole-like object were recovered. Wang et al. [113] introduced a similar matching method to recognize lamp poles and street signs. First, the raw data were organized into multiple levels with an octree to build the 3D SigVox descriptor. Then, PCA was applied to compute the eigenvalues and eigenvectors of the points in each voxel, and the principal directions were projected onto the corresponding triangles of an icosahedron approximating a sphere; this step was repeated at multiple scales. Finally, street lights were identified by measuring the similarity of 3D SigVox descriptors between candidate point clusters and training objects. However, these algorithms rely heavily on parameter settings and template selection, which makes fully automatic extraction challenging. Moreover, one-by-one matching is computationally expensive compared with other methods, so these methods are poorly suited to large-volume data processing.

- Rule-based extraction. Rule-based extraction is the other typical knowledge-driven approach, in which geometric and spatial features of light poles are set as rules during extraction. Teo et al. [114] used several geometric features to remove non-pole-like road objects. Their algorithm extracts features for each object and removes non-pole-like objects using geometric features (e.g., cross-sectional area, position, size, orientation, and shape). In addition, pole parameters (e.g., location, radius, and height) were computed as features, and hierarchical filtering was used to remove non-pole-like objects. A height threshold of 3 m was used to split the data into two parts, which were then segmented in the horizontal and vertical directions. However, not all pole-like objects are taller than 3 m, so this parameter choice is subjective. Rodríguez-Cuenca et al. [115] exploited a specific characteristic of poles: because poles stand vertically on the ground, the Reed–Xiaoli (RX) anomaly detection method was applied to pre-organized point clouds to detect linear vertical pole structures. The detected vertical elements were then classified as man-made poles or trees by clustering. However, if pole-like objects overlap with other objects, their disconnected upper parts are discarded as noise. Li et al. [116] used a slice-based method to extract pole-like road objects, applying a set of common rules to split and merge groups; for example, connected components containing vertical poles were analyzed for merging or separation, and detached components and their neighboring components were checked for further merging. Rule-based methods are fast and efficient, but the appropriate number of rules is hard to determine: numerous rules over-segment the data, whereas insufficient rules under-segment it. In particular, true objects with poorly distributed points are often removed by strict rules.
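The voxel-based data reduction used in the matching-based methods above can be sketched as follows: each voxel keeps the point nearest its centroid as a feature point (as described for [7]), and every original point keeps a link to its voxel so that the full-resolution points of any detected object can be recovered (as described for [97]). The function names and the cubic voxel grid are assumptions, and the subsequent shape matching is omitted.

```python
import numpy as np


def voxelize(points, voxel_size):
    """Return (representatives, point_to_voxel).

    representatives[k] is the index of the point closest to the centroid of
    voxel k; point_to_voxel[i] is the voxel index of point i.
    """
    points = np.asarray(points, dtype=float)
    keys = np.floor(points / voxel_size).astype(np.int64)
    # Unique voxel keys; the inverse maps each point to its voxel.
    _, point_to_voxel = np.unique(keys, axis=0, return_inverse=True)
    point_to_voxel = point_to_voxel.ravel()
    n_vox = point_to_voxel.max() + 1
    counts = np.bincount(point_to_voxel, minlength=n_vox)
    centroids = np.zeros((n_vox, points.shape[1]))
    np.add.at(centroids, point_to_voxel, points)  # sum the points of each voxel
    centroids /= counts[:, None]
    dist = np.linalg.norm(points - centroids[point_to_voxel], axis=1)
    representatives = np.empty(n_vox, dtype=np.int64)
    for k in range(n_vox):
        members = np.flatnonzero(point_to_voxel == k)
        representatives[k] = members[np.argmin(dist[members])]
    return representatives, point_to_voxel


def points_of_voxels(voxel_ids, point_to_voxel):
    """Recover the original point indices that fall in the given voxels."""
    return np.flatnonzero(np.isin(point_to_voxel, voxel_ids))
```

Matching then operates on the much smaller set of representatives, while `points_of_voxels` restores the full-resolution points of whichever voxels are accepted.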
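A toy version of a rule-based pole filter is sketched below. Only the 3 m split height comes from the description of [114]; the slimness and minimum-height rules, their thresholds, and the function name are hypothetical choices for illustration, which also shows how directly the outcome depends on such hand-set parameters.

```python
import numpy as np


def is_pole_like(cluster, split_height=3.0, max_radius=0.3, min_height=2.0):
    """Toy rule set for one above-ground cluster of shape (N, 3), with z up.

    Hypothetical rules:
      1. the object is at least `min_height` tall;
      2. the part below `split_height` (measured from the cluster base) is slim,
         i.e. every point lies within `max_radius` of that part's horizontal centroid.
    """
    pts = np.asarray(cluster, dtype=float)
    z = pts[:, 2]
    base = z.min()
    if z.max() - base < min_height:
        return False
    lower = pts[z - base < split_height]  # the lower part below the split height
    center = lower[:, :2].mean(axis=0)
    radius = np.linalg.norm(lower[:, :2] - center, axis=1).max()
    return bool(radius <= max_radius)
```

A short sign post under `min_height`, or a pole whose lower part is merged with a neighboring object, would be rejected by these rules, mirroring the over-strict behavior discussed above.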
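The Reed–Xiaoli (RX) detector mentioned for [115] scores each sample by its squared Mahalanobis distance from the background statistics, (x − μ)ᵀ Σ⁻¹ (x − μ). The sketch below is the generic global RX formulation; how Rodríguez-Cuenca et al. organized the point cloud into samples and which features they used are not described here, so the input feature vectors and the function name are placeholders.

```python
import numpy as np


def rx_scores(features):
    """Global Reed-Xiaoli (RX) anomaly detector.

    features: (N, d) array, one feature vector per sample (e.g. per raster cell).
    Returns the squared Mahalanobis distance of each sample from the sample
    mean and covariance; large scores indicate anomalies.
    """
    X = np.asarray(features, dtype=float)
    mu = X.mean(axis=0)
    cov = np.cov(X, rowvar=False)
    # Pseudo-inverse guards against a singular covariance matrix.
    cov_inv = np.linalg.pinv(np.atleast_2d(cov))
    D = X - mu
    # Row-wise quadratic form D_i^T * cov_inv * D_i.
    return np.einsum("ij,jk,ik->i", D, cov_inv, D)
```

Samples are then flagged by thresholding the scores; since vertical poles are rare relative to the ground and facades, they stand out as anomalies in a suitable feature space.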

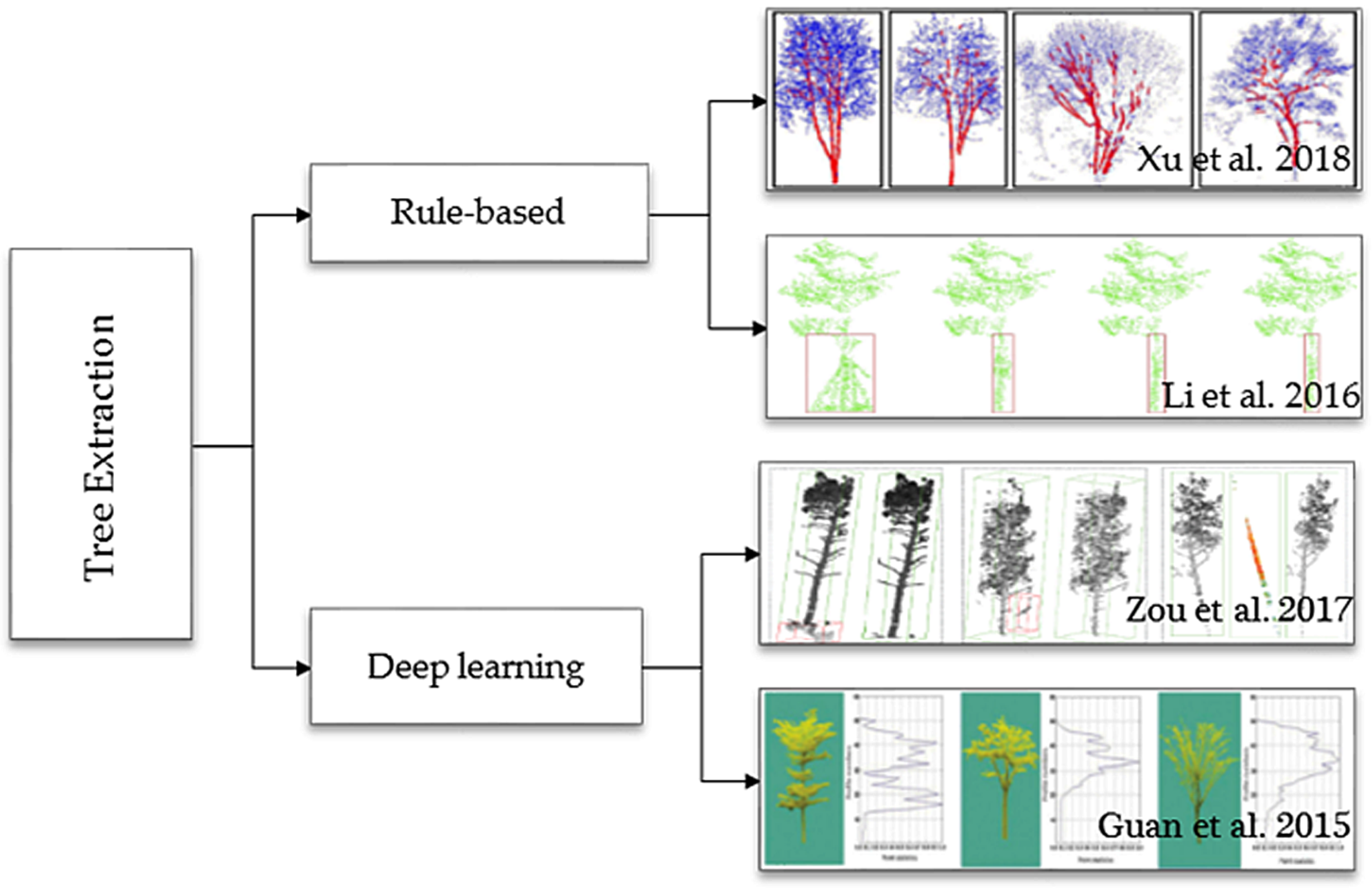

4.1.3. Roadside Trees Detection

4.2. Power Lines Extraction

4.3. Multiple Road Objects Extraction

5. Challenges and Trend

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Yang, B.; Dong, Z.; Zhao, G.; Dai, W. Hierarchical extraction of urban objects from mobile laser scanning data. ISPRS J. Photogramm. Remote Sens. 2015, 99, 45–57.

- Leichtle, T.; Geiß, C.; Wurm, M.; Lakes, T.; Taubenböck, H. Unsupervised change detection in VHR remote sensing imagery—An object-based clustering approach in a dynamic urban environment. Int. J. Appl. Earth Obs. Geoinform. 2017, 54, 15–27.

- Crommelinck, S.; Bennett, R.; Gerke, M.; Nex, F.; Yang, M.Y.; Vosselman, G. Review of automatic feature extraction from high-resolution optical sensor data for UAV-based cadastral mapping. Remote Sens. 2016, 8, 689.

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293.

- Wang, R.; Peethambaran, J.; Chen, D. LiDAR point clouds to 3-D urban models: a review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 606–627.

- Lehtomäki, M.; Jaakkola, A.; Hyyppä, J.; Lampinen, J.; Kaartinen, H.; Kukko, A.; Puttonen, E.; Hyyppä, H. Object classification and recognition from mobile laser scanning point clouds in a road environment. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1226–1239.

- Yu, Y.; Li, J.; Guan, H.; Wang, C. Automated extraction of urban road facilities using mobile laser scanning data. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2167–2181.

- Guan, H.; Li, J.; Cao, S.; Yu, Y. Use of mobile LiDAR in road information inventory: A review. Int. J. Image Data Fusion 2016, 7, 219–242.

- Vosselman, G.; Maas, H.G. Airborne and Terrestrial Laser Scanning; CRC Press: Boca Raton, FL, USA, 2010.

- Lindner, M.; Schiller, I.; Kolb, A.; Koch, R. Time-of-flight sensor calibration for accurate range sensing. Comput. Vis. Image Underst. 2010, 114, 1318–1328.

- Puente, I.; González-Jorge, H.; Martínez-Sánchez, J.; Arias, P. Review of mobile mapping and surveying technologies. Measurement 2013, 46, 2127–2145.

- Kumar, P.; McElhinney, C.P.; Lewis, P.; McCarthy, T. Automated road markings extraction from mobile laser scanning data. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 125–137.

- Toth, C.K. R&D of mobile LiDAR mapping and future trends. In Proceedings of the ASPRS 2009 Annual Conference, Baltimore, MD, USA, 9–13 March 2009; pp. 829–835.

- Guan, H.; Li, J.; Yu, Y.; Chapman, M.; Wang, H.; Wang, C.; Zhai, R. Iterative tensor voting for pavement crack extraction using mobile laser scanning data. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1527–1537.

- Olsen, M.J. NCHRP Report 748 Guidelines for the Use of Mobile Lidar in Transportation Applications; Transportation Research Board: Washington, DC, USA, 2013.

- McCormac, J.C.; Sarasua, W.; Davis, W. Surveying; Wiley Global Education: Hoboken, NJ, USA, 2012.

- Trimble. Trimble MX9 Mobile Mapping Solution. 2018. Available online: https://geospatial.trimble.com/products-and-solutions/mx9 (accessed on 11 May 2018).

- RIEGL. RIEGL VMX-450 Compact Mobile Laser Scanning System. 2017. Available online: http://www.riegl.com/uploads/tx_pxpriegldownloads/DataSheet_VMX-450_2015-03-19.pdf (accessed on 11 May 2018).

- RIEGL. RIEGL VMX-2HA. 2018. Available online: http://www.riegl.com/nc/products/mobile-scanning/produktdetail/product/scanner/56 (accessed on 11 May 2018).

- Teledyne Optech. Lynx SG Mobile Mapper Summary Specification Sheet. 2018. Available online: https://www.teledyneoptech.com/index.php/product/lynx-sg1 (accessed on 11 May 2018).

- Topcon. IP-S3 Compact+. 2018. Available online: https://www.topconpositioning.com/mass-data-and-volume-collection/mobile-mapping/ip-s3 (accessed on 11 May 2018).

- Vallet, B.; Brédif, M.; Serna, A.; Marcotegui, B.; Paparoditis, N. TerraMobilita/iQmulus urban point cloud analysis benchmark. Comput. Gr. 2015, 49, 126–133.

- Roynard, X.; Deschaud, J.E.; Goulette, F. Paris-Lille-3D: A large and high-quality ground truth urban point cloud dataset for automatic segmentation and classification. CVPR 2017. under review.

- Gehrung, J.; Hebel, M.; Arens, M.; Stilla, U. An Approach to Extract Moving Objects from Mls Data Using a Volumetric Background Representation. ISPRS. Ann. 2017, 4, 107–114.

- Pandey, G.; McBride, J.R.; Eustice, R.M. Ford campus vision and lidar data set. Int. J. Robot. Res. 2011, 30, 1543–1552.

- Carlevaris-Bianco, N.; Ushani, A.K.; Eustice, R.M. University of Michigan North Campus long-term vision and lidar dataset. Int. J. Robot. Res. 2016, 35, 1023–1035.

- Menze, M.; Geiger, A. Object scene flow for autonomous vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3061–3070.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 3213–3223.

- Hackel, T.; Savinov, N.; Ladicky, L.; Wegner, J.D.; Schindler, K.; Pollefeys, M. Semantic3D. net: A new large-scale point cloud classification benchmark. ISPRS Ann. 2017, 4, 91–98.

- Wu, B.; Yu, B.; Huang, C.; Wu, Q.; Wu, J. Automated extraction of ground surface along urban roads from mobile laser scanning point clouds. Remote Sens. Lett. 2016, 7, 170–179.

- Wang, H.; Luo, H.; Wen, C.; Cheng, J.; Li, P.; Chen, Y.; Wang, C.; Li, J. Road boundaries detection based on local normal saliency from mobile laser scanning data. IEEE Trans. Geosci. Remote Sens. Lett. 2015, 12, 2085–2089.

- Kumar, P.; Lewis, P.; McElhinney, C.P.; Boguslawski, P.; McCarthy, T. Snake energy analysis and result validation for a mobile laser scanning data-based automated road edge extraction algorithm. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 763–773.

- Kumar, P.; McElhinney, C.P.; Lewis, P.; McCarthy, T. An automated algorithm for extracting road edges from terrestrial mobile LiDAR data. ISPRS J. Photogramm. Remote Sens. 2013, 85, 44–55.

- Zai, D.; Li, J.; Guo, Y.; Cheng, M.; Lin, Y.; Luo, H.; Wang, C. 3-D road boundary extraction from mobile laser scanning data via supervoxels and graph cuts. IEEE Trans. Intell. Transp. Syst. 2018, 19, 802–813.

- Wang, H.; Wang, C.; Luo, H.; Li, P.; Chen, Y.; Li, J. 3-D point cloud object detection based on supervoxel neighborhood with Hough forest framework. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1570–1581.

- Hervieu, A.; Soheilian, B. Semi-automatic road pavement modeling using mobile laser scanning. ISPRS Ann. 2013, 2, 31–36.

- Hata, A.Y.; Osorio, F.S.; Wolf, D.F. Robust curb detection and vehicle localization in urban environments. In Proceedings of the IEEE Intelligent Vehicles Symposium, Dearborn, MI, USA, 8–11 June 2014; pp. 1257–1262.

- Guan, H.; Li, J.; Yu, Y.; Wang, C.; Chapman, M.; Yang, B. Using mobile laser scanning data for automated extraction of road markings. ISPRS J. Photogramm. Remote Sens. 2014, 87, 93–107.

- Xu, S.; Wang, R.; Zheng, H. Road curb extraction from mobile LiDAR point clouds. IEEE Geosci. Remote Sens. 2017, 2, 996–1009.

- Riveiro, B.; González-Jorge, H.; Martínez-Sánchez, J.; Díaz-Vilariño, L.; Arias, P. Automatic detection of zebra crossings from mobile LiDAR data. Opt. Laser Technol. 2015, 70, 63–70.

- Pu, S.; Rutzinger, M.; Vosselman, G.; Elberink, S.O. Recognizing basic structures from mobile laser scanning data for road inventory studies. ISPRS J. Photogramm. Remote Sens. 2011, 66, 28–39.

- Wu, F.; Wen, C.; Guo, Y.; Wang, J.; Yu, Y.; Wang, C.; Li, J. Rapid localization and extraction of street light poles in mobile LiDAR point clouds: a supervoxel-based approach. IEEE Trans. Intell. Transp. Syst. 2017, 18, 292–305.

- Cabo, C.; Kukko, A.; García-Cortés, S.; Kaartinen, H.; Hyyppä, J.; Ordoñez, C. An algorithm for automatic road asphalt edge delineation from mobile laser scanner data using the line clouds concept. Remote Sens. 2016, 8, 740.

- Yang, B.; Fang, L.; Li, J. Semi-automated extraction and delineation of 3D roads of street scene from mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2013, 79, 80–93.

- Zhou, Y.; Wang, D.; Xie, X.; Ren, Y.; Li, G.; Deng, Y.; Wang, Z. A fast and accurate segmentation method for ordered LiDAR point cloud of large-scale scenes. IEEE Trans. Geosci. Remote Sens. Lett. 2014, 11, 1981–1985.

- Fan, H.; Yao, W.; Tang, L. Identifying man-made objects along urban road corridors from mobile LiDAR data. IEEE Trans. Geosci. Remote Sens. Lett. 2014, 11, 950–954.

- Ma, L.; Li, J.; Li, Y.; Zhong, Z.; Chapman, M. Generation of horizontally curved driving lines in HD maps using mobile laser scanning point clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens 2018. under review.

- Guo, J.; Tsai, M.J.; Han, J.Y. Automatic reconstruction of road surface features by using terrestrial mobile lidar. Autom. Constr. 2015, 58, 165–175.

- Jaakkola, A.; Hyyppä, J.; Hyyppä, H.; Kukko, A. Retrieval algorithms for road surface modelling using laser-based mobile mapping. Sensors 2008, 8, 5238–5249.

- Díaz-Vilariño, L.; González-Jorge, H.; Bueno, M.; Arias, P.; Puente, I. Automatic classification of urban pavements using mobile LiDAR data and roughness descriptors. Constr. Build. Mater. 2016, 102, 208–215.

- Serna, A.; Marcotegui, B.; Hernández, J. Segmentation of façades from urban 3D point clouds using geometrical and morphological attribute-based operators. ISPRS Int. Geo-Inf. 2016, 5, 6.

- Kumar, P.; Lewis, P.; McCarthy, T. The potential of active contour models in extracting road edges from mobile laser scanning data. Infrastructures 2017, 2, 9–25.

- Yang, B.; Dong, Z.; Liu, Y.; Liang, F.; Wang, Y. Computing multiple aggregation levels and contextual features for road facilities recognition using mobile laser scanning data. ISPRS J. Photogramm. Remote Sens. 2017, 126, 180–194.

- Choi, M.; Kim, M.; Kim, G.; Kim, S.; Park, S.C.; Lee, S. 3D scanning technique for obtaining road surface and its applications. Int. J. Precis. Eng. Manuf. 2017, 18, 367–373.

- Boyko, A.; Funkhouser, T. Extracting roads from dense point clouds in large scale urban environment. ISPRS J. Photogramm. Remote Sens. 2011, 66, 2–12.

- Zhou, L.; Vosselman, G. Mapping curbstones in airborne and mobile laser scanning data. Int. J. Appl. Earth Obs. Geoinf. 2012, 18, 293–304.

- Cavegn, S.; Haala, N. Image-based mobile mapping for 3D urban data capture. Photogramm. Eng. Remote Sens. 2016, 82, 925–933.

- Cheng, M.; Zhang, H.; Wang, C.; Li, J. Extraction and classification of road markings using mobile laser scanning point clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1182–1196.

- Yu, Y.; Li, J.; Guan, H.; Wang, C. Automated detection of three-dimensional cars in mobile laser scanning point clouds using DBM-Hough-Forests. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4130–4142.

- Guan, H.; Yu, Y.; Ji, Z.; Li, J.; Zhang, Q. Deep learning-based tree classification using mobile LiDAR data. Remote Sens. Lett. 2015, 6, 864–873.

- Huang, P.; Cheng, M.; Chen, Y.; Luo, H.; Wang, C.; Li, J. Traffic sign occlusion detection using mobile laser scanning point clouds. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2364–2376.

- Yu, Y.; Li, J.; Guan, H.; Wang, C.; Wen, C. Bag of contextual-visual words for road scene object detection from mobile laser scanning data. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3391–3406.

- Arcos-García, Á.; Soilán, M.; Álvarez-García, J.A.; Riveiro, B. Exploiting synergies of mobile mapping sensors and deep learning for traffic sign recognition systems. Expert Syst. Appl. 2017, 89, 286–295.

- Soilán, M.; Riveiro, B.; Martínez-Sánchez, J.; Arias, P. Traffic sign detection in MLS acquired point clouds for geometric and image-based semantic inventory. ISPRS J. Photogramm. Remote Sens. 2016, 114, 92–101.

- Wen, C.; Li, J.; Luo, H.; Yu, Y.; Cai, Z.; Wang, H.; Wang, C. Spatial-related traffic sign inspection for inventory purposes using mobile laser scanning data. IEEE Trans. Intell. Transp. Syst. 2016, 17, 27–37.

- Yu, Y.; Li, J.; Guan, H.; Wang, C.; Yu, J. Semiautomated extraction of street light poles from mobile LiDAR point-clouds. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1374–1386.

- Yan, L.; Li, Z.; Liu, H.; Tan, J.; Zhao, S.; Chen, C. Detection and classification of pole-like road objects from mobile LiDAR data in motorway environment. Opt. Laser Technol. 2017, 97, 272–283.

- Smadja, L.; Ninot, J.; Gavrilovic, T. Road extraction and environment interpretation from LiDAR sensors. IAPRS 2010, 38, 281–286.

- Yan, L.; Liu, H.; Tan, J.; Li, Z.; Xie, H.; Chen, C. Scan line based road marking extraction from mobile LiDAR point clouds. Sensors 2016, 16, 903.

- Li, Y.; Li, L.; Li, D.; Yang, F.; Liu, Y. A density-based clustering method for urban scene mobile laser scanning data segmentation. Remote Sens. 2017, 9, 331.

- Yang, B.; Fang, L.; Li, Q.; Li, J. Automated extraction of road markings from mobile LiDAR point clouds. Photogramm. Eng. Remote Sens. 2012, 78, 331–338.

- Chen, X.; Kohlmeyer, B.; Stroila, M.; Alwar, N.; Wang, R.; Bach, J. Next generation map making: geo-referenced ground-level LiDAR point clouds for automatic retro-reflective road feature extraction. In Proceedings of the 17th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 4–6 November 2009; pp. 488–491.

- Kim, H.; Liu, B.; Myung, H. Road-feature extraction using point cloud and 3D LiDAR sensor for vehicle localization. In Proceedings of the 14th International Conference on URAI, Maison Glad Jeju, Jeju, Korea, 28 June–1 July 2017; pp. 891–892.

- Yu, Y.; Li, J.; Guan, H.; Jia, F.; Wang, C. Learning hierarchical features for automated extraction of road markings from 3-D mobile LiDAR point clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 709–726.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Soilán, M.; Riveiro, B.; Martínez-Sánchez, J.; Arias, P. Segmentation and classification of road markings using MLS data. ISPRS J. Photogramm. Remote Sens. 2017, 123, 94–103.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

- Li, Y.; Bu, R.; Sun, M.; Chen, B. PointCNN. arXiv, 2018; arXiv:1801.07791.

- Weiskircher, T.; Wang, Q.; Ayalew, B. Predictive guidance and control framework for (semi-)autonomous vehicles in public traffic. IEEE Trans. Control Syst. Technol. 2017, 5, 2034–2046.

- Li, J.; Zhao, H.; Ma, L.; Jiang, H.; Chapman, M. Recognizing features in mobile laser scanning point clouds towards 3D high-definition road maps for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2018. under review.

- Li, J.; Ye, C.; Jiang, H.; Zhao, H.; Ma, L.; Chapman, M. Semi-automated generation of road transition lines using mobile laser scanning data. IEEE Trans. Intell. Transp. Syst. 2018. under revision.

- Chen, X.; Li, J. A feasibility study on use of generic mobile laser scanning system for detecting asphalt pavement cracks. ISPRS Arch. 2016, 41.

- Tanaka, N.; Uematsu, K. A crack detection method in road surface images using morphology. In Proceedings of the IAPR Workshop on Machine Vision Applications, Chiba, Japan, 17–19 November 1998; pp. 17–19.

- Tsai, Y.C.; Kaul, V.; Mersereau, R.M. Critical assessment of pavement distress segmentation methods. J. Transp. Eng. 2009, 136, 11–19.

- Yu, Y.; Li, J.; Guan, H.; Wang, C. 3D crack skeleton extraction from mobile LiDAR point clouds. In Proceedings of the IEEE Geoscience and Remote Sensing Symposium, Quebec, QC, Canada, 13–18 July 2014; pp. 914–917.

- Saar, T.; Talvik, O. Automatic asphalt pavement crack detection and classification using neural networks. In Proceedings of the 12th Biennial Baltic Electronics Conference, Tallinn, Estonia, 4–6 October 2010; pp. 345–348.

- Gavilán, M.; Balcones, D.; Marcos, O.; Llorca, D.F.; Sotelo, M.A.; Parra, I.; Ocaña, M.; Aliseda, P.; Yarza, P.; Amírola, A. Adaptive road crack detection system by pavement classification. Sensors 2011, 11, 9628–9657.

- Cheng, Y.; Xiong, Z.; Wang, Y.H. Improved classical Hough transform applied to the manhole cover’s detection and location. Opt. Tech. 2006, 32, 504–508.

- Timofte, R.; Van Gool, L. Multi-view manhole detection, recognition, and 3D localisation. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops, 6–13 November 2011; pp. 188–195.

- Niigaki, H.; Shimamura, J.; Morimoto, M. Circular object detection based on separability and uniformity of feature distributions using Bhattacharyya coefficient. In Proceedings of the 21st International Conference on Pattern Recognition, Stockholm, Sweden, 11–15 November 2012; pp. 2009–2012.

- Guan, H.; Yu, Y.; Li, J.; Liu, P.; Zhao, H.; Wang, C. Automated extraction of manhole covers using mobile LiDAR data. Remote Sens. Lett. 2014, 5, 1042–1050.

- Yu, Y.; Guan, H.; Ji, Z. Automated detection of urban road manhole covers using mobile laser scanning data. IEEE Trans. Intell. Transp. Syst. 2015, 16, 3258–3269.

- Balali, V.; Jahangiri, A.; Machiani, S.G. Multi-class US traffic signs 3D recognition and localization via image-based point cloud model using color candidate extraction and texture-based recognition. Adv. Eng. Inf. 2017, 32, 263–274.

- Ai, C.; Tsai, Y.C. Critical assessment of an enhanced traffic sign detection method using mobile lidar and ins technologies. J. Transp. Eng. 2015, 141, 04014096.

- Serna, A.; Marcotegui, B. Detection, segmentation and classification of 3d urban objects using mathematical morphology and supervised learning. ISPRS J. Photogramm. Remote Sens. 2014, 93, 243–255.

- Yu, Y.; Li, J.; Wen, C.; Guan, H.; Luo, H.; Wang, C. Bag-of-visual-phrases and hierarchical deep models for traffic sign detection and recognition in mobile laser scanning data. ISPRS J. Photogramm. Remote Sens. 2016, 113, 106–123.

- Cabo, C.; Ordoñez, C.; García-Cortés, S.; Martínez, J. An algorithm for automatic detection of pole-like street furniture objects from mobile laser scanner point clouds. ISPRS J. Photogramm. Remote Sens. 2014, 87, 47–56.

- Wang, H.; Cai, Z.; Luo, H.; Wang, C.; Li, P.; Yang, W.; Ren, S.; Li, J. Automatic road extraction from mobile laser scanning data. In Proceedings of the 2012 International Conference on Computer Vision in Remote Sensing, Xiamen, China, 16–18 December 2012; pp. 136–139.

- Riveiro, B.; Díaz-Vilariño, L.; Conde-Carnero, B.; Soilán, M.; Arias, P. Automatic segmentation and shape-based classification of retro-reflective traffic signs from mobile LiDAR data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 295–303.

- Yan, W.Y.; Morsy, S.; Shaker, A.; Tulloch, M. Automatic extraction of highway light poles and towers from mobile LiDAR data. Opt. Laser Technol. 2016, 77, 162–168.

- Xing, X.F.; Mostafavi, M.A.; Chavoshi, S.H. A knowledge base for automatic feature recognition from point clouds in an urban scene. ISPRS Int. Geo-Inf. 2018, 7, 28.

- Seo, Y.W.; Lee, J.; Zhang, W.; Wettergreen, D. Recognition of highway workzones for reliable autonomous driving. IEEE Trans. Intell. Transp. Syst. 2015, 16, 708–718.

- Golovinskiy, A.; Kim, V.; Funkhouser, T. Shape-based recognition of 3D point clouds in urban environments. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2154–2161.

- Gonzalez-Jorge, H.; Riveiro, B.; Armesto, J.; Arias, P. Evaluation of road signs using radiometric and geometric data from terrestrial lidar. Opt. Appl. 2013, 43, 421–433. [Google Scholar]

- Levinson, J.; Askeland, J.; Becker, J.; Dolson, J.; Held, D.; Kammel, S.; Kolter, J.; Langer, D.; Pink, O.; Pratt, V. Towards fully autonomous driving: systems and algorithms. In Proceedings of the IEEE Intelligent Vehicles Symposium, Baden-Baden, Germany, 5–9 June 2011; pp. 163–168. [Google Scholar]

- Wang, H.; Wang, C.; Luo, H.; Li, P.; Cheng, M.; Wen, C.; Li, J. Object detection in terrestrial laser scanning point clouds based on Hough forest. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1807–1811.

- Yang, B.; Dong, Z. A shape-based segmentation method for mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2013, 81, 19–30.

- Guan, H.; Yan, W.; Yu, Y.; Zhong, L.; Li, D. Robust traffic-sign detection and classification using mobile LiDAR data with digital images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1715–1724.

- Tan, M.; Wang, B.; Wu, Z.; Wang, J.; Pan, G. Weakly supervised metric learning for traffic sign recognition in a LIDAR-equipped vehicle. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1415–1427.

- Luo, H.; Wang, C.; Wang, H.; Chen, Z.; Zai, D.; Zhang, S.; Li, J. Exploiting location information to detect light pole in mobile LiDAR point clouds. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016; pp. 453–456.

- Zheng, H.; Wang, R.; Xu, S. Recognizing street lighting poles from mobile LiDAR data. IEEE Trans. Geosci. Remote Sens. 2017, 55, 407–420.

- Li, L.; Li, Y.; Li, D. A method based on an adaptive radius cylinder model for detecting pole-like objects in mobile laser scanning data. Remote Sens. Lett. 2016, 7, 249–258.

- Wang, J.; Lindenbergh, R.; Menenti, M. SigVox–A 3D feature matching algorithm for automatic street object recognition in mobile laser scanning point clouds. ISPRS J. Photogramm. Remote Sens. 2017, 128, 111–129.

- Teo, T.A.; Chiu, C.M. Pole-like road object detection from mobile lidar system using a coarse-to-fine approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4805–4818.

- Rodríguez-Cuenca, B.; García-Cortés, S.; Ordóñez, C.; Alonso, M.C. Automatic detection and classification of pole-like objects in urban point cloud data using an anomaly detection algorithm. Remote Sens. 2015, 7, 12680–12703.

- Li, F.; Oude Elberink, S.; Vosselman, G. Pole-like road furniture detection and decomposition in mobile laser scanning data based on spatial relations. Remote Sens. 2018, 10, 531.

- Guan, H.; Yu, Y.; Li, J.; Liu, P. Pole-like road object detection in mobile LiDAR data via super-voxel and bag-of-contextual-visual-words representation. IEEE Geosci. Remote Sens. Lett. 2016, 13, 520–524.

- Xu, S.; Xu, S.; Ye, N.; Zhu, F. Automatic extraction of street trees’ non-photosynthetic components from MLS data. Int. J. Appl. Earth Obs. Geoinf. 2018, 69, 64–77.

- Li, L.; Li, D.; Zhu, H.; Li, Y. A dual growing method for the automatic extraction of individual trees from mobile laser scanning data. ISPRS J. Photogramm. Remote Sens. 2016, 120, 37–52.

- Zou, X.; Cheng, M.; Wang, C.; Xia, Y.; Li, J. Tree classification in complex forest point clouds based on deep learning. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2360–2364.

- Cheng, L.; Tong, L.; Wang, Y.; Li, M. Extraction of urban power lines from vehicle-borne LiDAR data. Remote Sens. 2014, 6, 3302–3320.

- Zhu, L.; Hyyppa, J. The use of airborne and mobile laser scanning for modeling railway environments in 3D. Remote Sens. 2014, 6, 3075–3100.

- Guan, H.; Yu, Y.; Li, J.; Ji, Z.; Zhang, Q. Extraction of power-transmission lines from vehicle-borne lidar data. Int. J. Remote Sens. 2016, 37, 229–247.

- Luo, H.; Wang, C.; Wen, C.; Cai, Z.; Chen, Z.; Wang, H.; Yu, Y.; Li, J. Patch-based semantic labeling of road scene using colorized mobile LiDAR point clouds. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1286–1297.

- Zhang, J.; Lin, X.; Ning, X. SVM-based classification of segmented airborne LiDAR point clouds in urban areas. Remote Sens. 2013, 5, 3749–3775.

- Tootooni, M.S.; Dsouza, A.; Donovan, R.; Rao, P.K.; Kong, Z.J.; Borgesen, P. Classifying the dimensional variation in additive manufactured parts from laser-scanned three-dimensional point cloud data using machine learning approaches. J. Manuf. Sci. Eng. 2017, 139, 091005.

- Ghamisi, P.; Höfle, B. LiDAR data classification using extinction profiles and a composite kernel support vector machine. IEEE Geosci. Remote Sens. Lett. 2017, 14, 659–663.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5105–5114.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-end learning for point cloud based 3D object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017.

- Wang, L.; Huang, Y.; Shan, J.; He, L. MSNet: Multi-scale convolutional network for point cloud classification. Remote Sens. 2018, 10, 612.

- Balado, J.; Díaz-Vilariño, L.; Arias, P.; González-Jorge, H. Automatic classification of urban ground elements from mobile laser scanning data. Autom. Construct. 2018, 86, 226–239.

- Li, J.; Yang, B.; Chen, C.; Huang, R.; Dong, Z.; Xiao, W. Automatic registration of panoramic image sequence and mobile laser scanning data using semantic features. ISPRS J. Photogramm. Remote Sens. 2018, 136, 41–57.

| Parameter | Source |
|---|---|
| Coordinates of the laser point P in the mapping frame | Mapping frame |
| Rotation aligning the IMU frame with the mapping frame | IMU |
| Transformation parameters from the laser scanner to the IMU coordinate system | System calibration |
| Relative position of point P in the laser scanner coordinate system | Laser scanners |
| Offsets between the IMU origin and the laser scanner origin | System calibration |
| Offsets between the GNSS origin and the IMU origin | System calibration |
| Position of the GNSS sensor in the mapping frame | GNSS antenna |
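The parameters in the table above are combined by the direct geo-referencing equation. A frequently used form is r_P^M = r_GNSS^M(t) + R_IMU^M(t) · (R_S^IMU · r_P^S(t) + l_S − l_GNSS). The Python/NumPy sketch below evaluates this form for a single point. It assumes that both lever arms (l_S, l_GNSS) are expressed in the IMU frame and point away from the IMU origin, and that attitude follows a Z-Y-X (heading, pitch, roll) rotation order; these conventions, and the function names, are illustrative assumptions rather than the convention of any particular MLS system.

```python
import numpy as np


def rotation_from_rph(roll: float, pitch: float, heading: float) -> np.ndarray:
    """IMU-to-mapping rotation R = Rz(heading) @ Ry(pitch) @ Rx(roll), angles in radians.

    The Z-Y-X composition is an assumed convention; navigation systems differ
    (axis definitions, sign of heading), so check the IMU documentation.
    """
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    ch, sh = np.cos(heading), np.sin(heading)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[ch, -sh, 0.0], [sh, ch, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def georeference_point(r_gnss_m, R_imu_m, R_s_imu, r_p_s, l_s, l_gnss) -> np.ndarray:
    """Compute r_P^M = r_GNSS^M + R_IMU^M (R_S^IMU r_P^S + l_S - l_GNSS).

    r_gnss_m : GNSS antenna position in the mapping frame
    R_imu_m  : IMU attitude (IMU frame -> mapping frame)
    R_s_imu  : boresight rotation (scanner frame -> IMU frame), from calibration
    r_p_s    : point P measured in the laser scanner frame
    l_s      : lever arm from the IMU origin to the scanner origin (IMU frame)
    l_gnss   : lever arm from the IMU origin to the GNSS antenna (IMU frame)
    """
    # Express the point in the IMU frame, then shift it so it is relative to the GNSS antenna.
    r_p_imu = R_s_imu @ np.asarray(r_p_s, dtype=float)
    offset_imu = r_p_imu + np.asarray(l_s, dtype=float) - np.asarray(l_gnss, dtype=float)
    # Rotate the offset into the mapping frame and add the antenna position.
    return np.asarray(r_gnss_m, dtype=float) + R_imu_m @ offset_imu


if __name__ == "__main__":
    # Vehicle heading rotated by 90 degrees; scanner sees a point 10 m ahead along its x-axis.
    R = rotation_from_rph(0.0, 0.0, np.pi / 2)
    p = georeference_point([100.0, 200.0, 50.0], R, np.eye(3),
                           [10.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 2.0])
    print(np.round(p, 6))  # approximately [100. 210. 49.]
```

In a real pipeline, the GNSS position and IMU attitude would be interpolated to the timestamp t of each laser return before this equation is applied, which is why the trajectory terms carry the argument t.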

| Component | Parameter | Trimble MX-9 | RIEGL VMX-450 | RIEGL VMX-2HA | Teledyne Optech Lynx SG | 3D Laser Mapping Ltd. & IGI mbH StreetMapper IV | TOPCON IP-S3 Compact+ | Siteco ROAD SCANNER-C |
|---|---|---|---|---|---|---|---|---|
| Laser scanner | Model (number of units) | - | RIEGL VQ-450 (2) | RIEGL VUX-1HA (2) | Optech Lynx HS-600 Dual | RIEGL VUX-1HA (2) | SICK LMS 291 (5) | Faro Focus3D (2) |
| | Laser wavelength | Near infrared | Near infrared | Near infrared | 1550 nm | Near infrared | 905 nm | 905 nm |
| | Measuring principle | TOF measurement, echo signal digitization | TOF measurement, echo signal digitization | TOF measurement, echo signal digitization | TOF | TOF, echo signal digitization | TOF | Phase difference |
| | Maximum range | 420 m | 800 m | 420 m | 250 m | 420 m | 100 m | 330 m |
| | Minimum range | 1.2 m | 1.5 m | 1.2 m | - | 1.2 m | 0.7 m | 0.6 m |
| | Measurement precision | 3 mm | 5 mm | 3 mm | 5 mm | 3 mm | 10 mm | 2 mm |
| | Absolute accuracy | 5 mm | 8 mm | 5 mm | 5 cm | 5 mm | 35 mm | 10 mm |
| | Scan frequency | 500 scans/s | Up to 200 Hz | 500 scans/s | Up to 600 scans/s | 500 scans/s | 75–100 Hz | 96 Hz |
| | Angle measurement resolution | 0.001° | 0.001° | 0.001° | 0.001° | 0.001° | 0.667° | H 0.00076°, V 0.009° |
| | Scanner field of view | 360° | 360° | 360° | 360° | 360° | 180°/90° | H 360°, V 320° |
| GNSS/IMU | Positioning system | POS LV 520 | POS LV 510 | POS LV 520 | POS LV 420 | POS LV 510 | 40 channels, dual constellation, dual frequency GPS + GLONASS L1/L2 | Dual frequency GPS + GLONASS L1/L2 |
| | Roll & pitch accuracy (°) | 0.005 | 0.005 | 0.005 | 0.015 | 0.005 | 0.4 | 0.020 |
| | Heading accuracy (°) | 0.015 | 0.015 | 0.015 | 0.020 | 0.015 | 0.2 | 0.015 |
| Imagery | Camera types | Point Grey Grasshopper® GRAS-50S5C (4) | 500 MP (6) | Nikon® D810 (up to 9) or FLIR Ladybug® 5+ | Up to four 5-MP cameras and one Ladybug® camera | Ladybug 3 & Ladybug 5 (6) | - | Ladybug 5 30-megapixel spherical camera |
| | Sensor format | 2/3” CCD | 2/3” CCD | 2/3” CCD | 1/1.8” or 2/3” CCD | 2/3” | 1/3” CCD | - |
| | Field of view | - | 80° × 65° | 104° × 81° | 57° × 47° | - | - | 360° |
| | Exposure | - | 8 | - | 3 | 2 | 15 | Max. 30 |
| Reference | | [17] | [18] | [19] | [20] | - | [21] | - |

| Method | Description | Reference |
|---|---|---|
| Voxel-based upward growing method | First, split the original point cloud into grids of a certain width in the XOY plane. Second, organize the voxels in each grid with an octree data structure. Third, grow each voxel upward to its nine neighbors, continue searching upward through their corresponding neighbors, and terminate when no more voxels can be found. Finally, the elevation of the highest voxel is compared with a threshold to label it as ground or off-ground. | [7,59,60,61,62,63] |
| Ground segmentation | First, voxelize the input data into blocks. Second, cluster neighboring voxels whose differences in vertical mean and variance are below given thresholds, and choose the largest partition as the ground. Third, select ground seeds using the trajectory. Finally, use the K-nearest neighbor algorithm to determine the closest voxel for each point in the trajectory. | [63,64] |
| Trajectory-based filter | First, filter the original data by their distance to the trajectory. Second, rasterize the filtered data onto the horizontal plane and compute different point cloud features in each cell; the resulting raster is visualized as an intensity image. Finally, binarize the image using two different features and apply a logical AND to the two binary images to extract the ground points. | [41,65] |
| Terrain filter | First, partition the input data into grids along the horizontal XY-plane. Second, determine a representative point in each cell based on a given percentile, and use it together with the representative points of neighboring cells to estimate a local plane. Third, define a rectangular box at a known distance from the estimated plane; points located outside the box are labeled as “off-terrain” points. The box is then partitioned into four smaller groups, and Steps 1–3 are repeated until the termination condition is met. Finally, apply Euclidean clustering to group the off-terrain points into clusters. | [66] |
| Voxel-based ground removal method | First, the point cloud is vertically segmented into voxels of equal length and width on the XOY plane. Then, ground points are removed with an elevation threshold in each voxel. | [67,68] |
| RANSAC-based filter | First, divide the entire point cloud into partitions along the trajectory and use plane fitting to estimate the average ground height. Second, iteratively apply the RANSAC algorithm to fit a plane. The iteration terminates when the elevation of a point is higher than the mean elevation or when the number of points in the RANSAC plane remains unchanged. | [42] |
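Two of the simpler filters in the table above can be sketched in a few lines of Python/NumPy. The first function assumes that the "elevation threshold in each voxel" of the voxel-based ground removal method [67,68] is applied relative to the lowest point of each XY column. The second shows only the core RANSAC plane-fitting step, not the full trajectory-partitioned, iterative procedure of [42]. Function names, cell size, distance thresholds, and iteration counts are hypothetical.

```python
import numpy as np


def ground_mask_by_column(points: np.ndarray, cell_size: float = 1.0,
                          height_threshold: float = 0.2) -> np.ndarray:
    """Voxel-based ground removal (sketch). Returns True for points labeled ground.

    Points are binned into XY columns of equal length and width; within each
    column, points less than `height_threshold` above the column's lowest point
    are labeled ground. Using the column minimum as reference is an assumption.
    """
    if len(points) == 0:
        return np.zeros(0, dtype=bool)
    cells = np.floor(points[:, :2] / cell_size).astype(np.int64)
    # Map every occupied (ix, iy) cell to a compact column id.
    _, column = np.unique(cells, axis=0, return_inverse=True)
    column = column.ravel()
    # Lowest elevation per column, accumulated without a Python loop.
    lowest = np.full(column.max() + 1, np.inf)
    np.minimum.at(lowest, column, points[:, 2])
    return points[:, 2] - lowest[column] < height_threshold


def ransac_plane(points: np.ndarray, distance_threshold: float = 0.05,
                 iterations: int = 200, seed: int = 0):
    """Fit a plane n . p + d = 0 with RANSAC; return (normal, d, inlier_mask).

    normal and d are None if every sampled triple was degenerate.
    """
    if len(points) < 3:
        raise ValueError("RANSAC plane fitting needs at least three points")
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(points), dtype=bool)
    best_normal, best_d = None, None
    for _ in range(iterations):
        # Hypothesis: the plane through three randomly chosen points.
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-12:  # collinear sample, no unique plane
            continue
        normal = normal / norm
        d = -normal @ sample[0]
        # Consensus: points whose distance to the plane is below the threshold.
        mask = np.abs(points @ normal + d) < distance_threshold
        if mask.sum() > best_mask.sum():
            best_mask, best_normal, best_d = mask, normal, d
    return best_normal, best_d, best_mask
```

The column-minimum reference keeps the first filter robust on gently sloping roads, since each column is compared only with its own lowest return; RANSAC, in contrast, tolerates a large share of off-ground points because the plane is chosen by consensus rather than by a least-squares fit over all points.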

| Categories | Method |
|---|---|
| 2D image-based extraction | Multiple thresholding segmentation [13,40] |
| | Hough Transform [71] |
| | Multi-scale Tensor Voting [14] |
| 3D MLS-based extraction | Deep learning neural network [74] |
| | Profile-based intensity analysis [73] |

| Attributes | Geometric Dimension | Geometric Relations | Geometric Shape | Topology | Intensity |
|---|---|---|---|---|---|
| Traffic signs | Height: 1.2–10 m | Perpendicular to ground; parallel to pole-like objects | Circle, rectangle, triangle | Planar structures | High |
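The attributes in this table can be read as rules in a knowledge base. As a minimal sketch only, the Python function below checks a candidate cluster against four of them: height above ground, planar topology, perpendicularity to the ground (a near-horizontal panel normal estimated by PCA), and high intensity. The function name, the use of the centroid height for "Height", intensities normalized to [0, 1], and every threshold except the 1.2–10 m height range are hypothetical; geometric shape and the relation to pole-like objects are not checked.

```python
import numpy as np


def matches_traffic_sign_rules(
    points: np.ndarray,
    intensity: np.ndarray,
    ground_z: float,
    min_height: float = 1.2,
    max_height: float = 10.0,
    min_mean_intensity: float = 0.6,  # hypothetical; intensity assumed scaled to [0, 1]
    max_planarity: float = 0.01,      # hypothetical; share of the smallest eigenvalue
    max_normal_z: float = 0.2,        # hypothetical; |n_z| allowed for a vertical panel
) -> bool:
    """Rule-based check of a candidate cluster (N x 3 points) against sign attributes."""
    # Geometric dimension: centroid height above the local ground elevation.
    centroid = points.mean(axis=0)
    height = centroid[2] - ground_z
    if not (min_height <= height <= max_height):
        return False
    # Topology: a planar structure has one covariance eigenvalue close to zero.
    centered = points - centroid
    eigvals, eigvecs = np.linalg.eigh(centered.T @ centered / len(points))
    if eigvals[0] / eigvals.sum() > max_planarity:
        return False
    # Geometric relation: a panel perpendicular to the ground has a near-horizontal normal.
    normal = eigvecs[:, 0]  # eigh sorts eigenvalues ascending
    if abs(normal[2]) > max_normal_z:
        return False
    # Intensity: retro-reflective sign faces return high intensities.
    return bool(intensity.mean() >= min_mean_intensity)
```

For example, a 0.6 m × 0.6 m vertical panel centered 2.3 m above the ground with uniformly high intensity passes all four rules, whereas the same panel lying flat on the road fails the height rule.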

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ma, L.; Li, Y.; Li, J.; Wang, C.; Wang, R.; Chapman, M.A. Mobile Laser Scanned Point-Clouds for Road Object Detection and Extraction: A Review. Remote Sens. 2018, 10, 1531. https://doi.org/10.3390/rs10101531
