An Identification Key for Selecting Methods for Sustainability Assessments

Abstract

1. Introduction

- (1) the perspective of the assessment (e.g., biophysical limits or human wellbeing);

- (2) desired features of the assessment (e.g., spatial or temporal focus);

- (3) the acceptability criterion of Pope et al. (2004) [24] (e.g., is the goal of the assessment to reduce impacts or to reach explicitly defined sustainability goals?);

- (4) values of the stakeholders (e.g., focus on general human well-being, personal well-being, or ecosystem well-being).

- (i) identify a method based on explicit choices and on all available methods, not necessarily the method best known to the analyst;

- (ii) guide method selection from a demand perspective (articulation of the question) rather than a supply perspective;

- (iii) report the results of the assessment with reference to the explicit choices made during question articulation, making results easier to understand, interpret and compare with other assessments;

- (iv) make method selection transparent and reproducible.

- (i) to confront the available assessment methods with the sustainability questions posed by society, so as to propose a new organizing framework for the selection of sustainability assessment methods: the sustainability assessment identification key (SA-IK);

- (ii) to present the design of the SA-IK;

- (iii) to show how the SA-IK works.

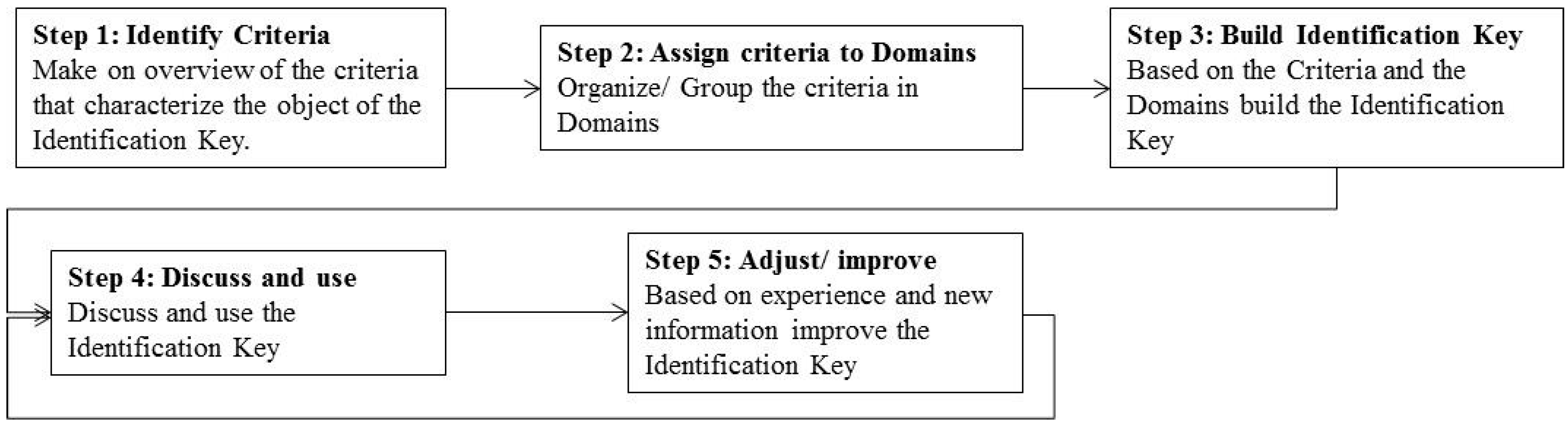

2. Methods: Development of a Sustainability Assessment Identification Key

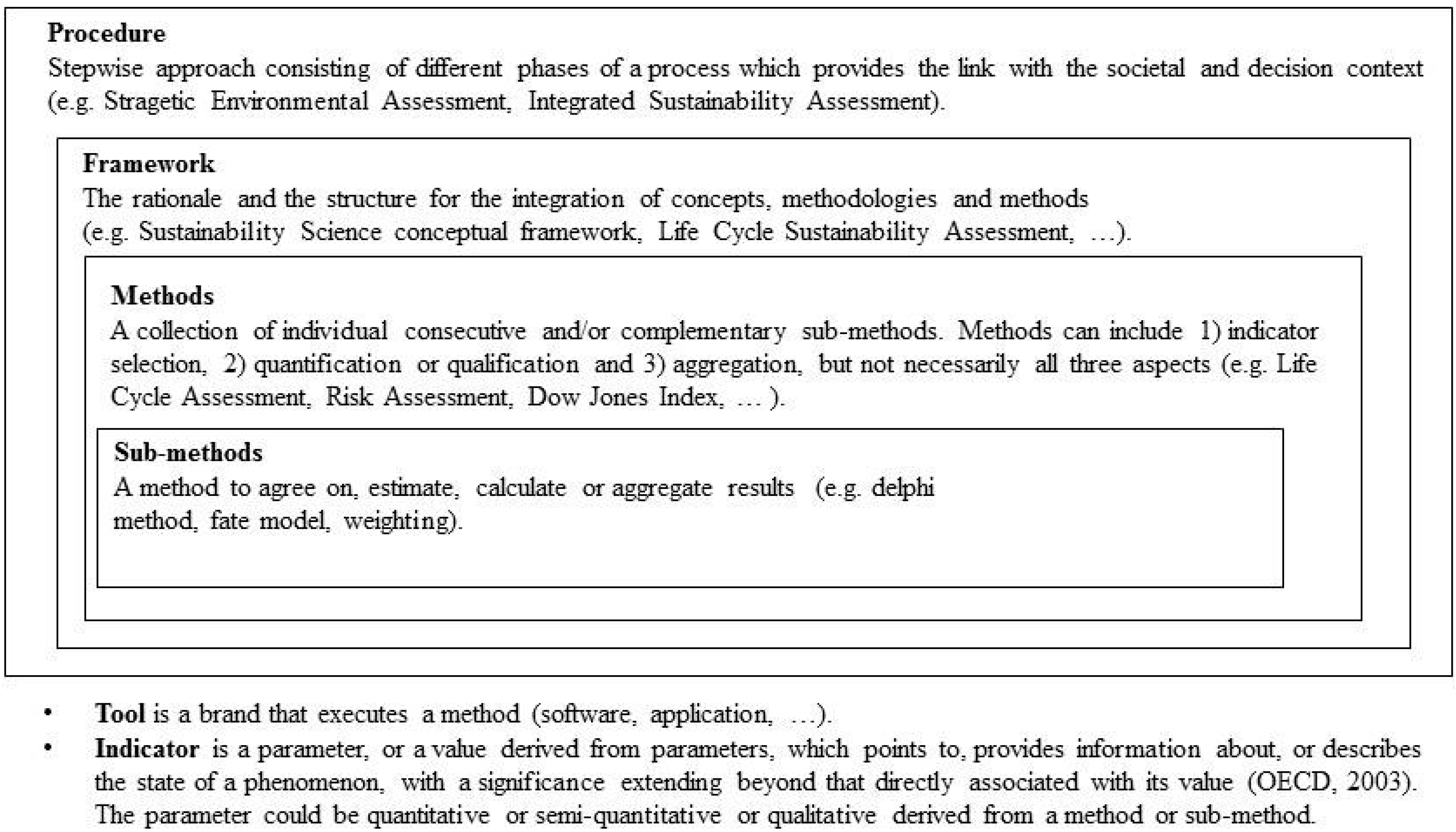

2.1. Terminology Used in This Article

2.2. Review on the Derivation of an Identification Key in General

2.3. Step 1: Identify Criteria

| Criteria | Explanation | References |

|---|---|---|

| Domain: System boundaries/Inventory | ||

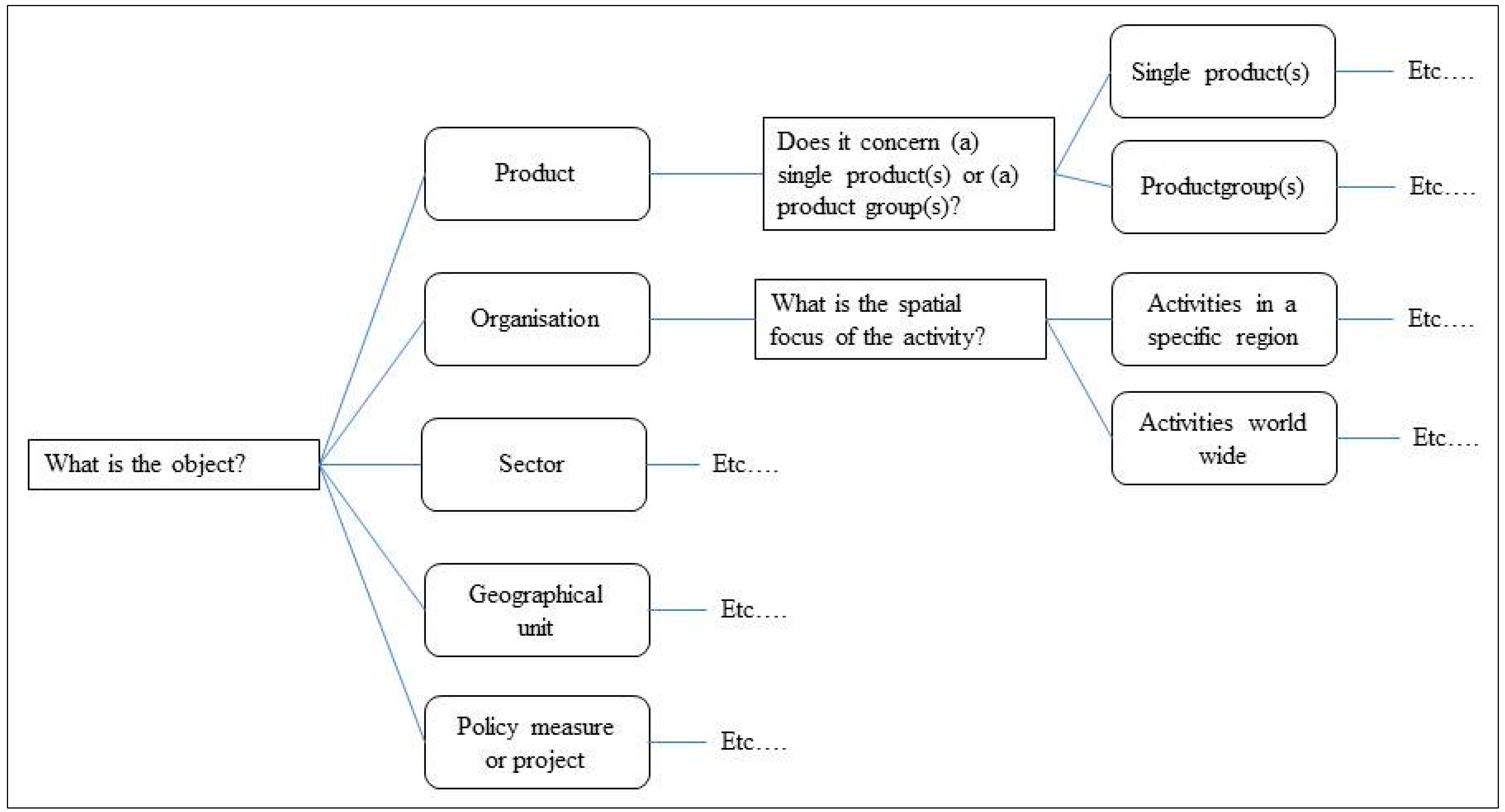

| Object | What is the object of the assessment? Is it a physical object (product, chemical, process), an organization, a region, a policy measure, an activity, etc.? | [12,13,14,17,18,32] |

| Spatial focus | What is the spatial focus of the activity? Is the activity assessed at micro or macro scale and, if at macro scale, at local, regional or global level? | [8,12,13,17,18,32,33] |

| Temporal focus | What is the temporal focus of the assessment? Is the activity assessed retrospectively or prospectively, or does a snapshot suffice? | [7,8,16,18,24,32] |

| Life cycle thinking | Which parts of the life cycle or supply chain are included in the assessment? Only one phase, the whole life cycle, or something in between? | [8,15,17,34] |

| Domain: Impact Assessment/Theme selection | ||

| What is to be sustained | What is to be sustained? Are these environmental, social, economic and/or institutional endpoints? | [8,16,18,32,33,35,36] |

| Theme and indicator selection | Which themes are selected? Is the method transparent in the selection and use of indicators? Where on the cause-effect chain are the indicators located? | [12,14,17,35,37] |

| Spatial focus of impact | What is the spatial scale of the impacts that should be taken into account? Does the assessment include intra-generational impacts? In other words, does the assessment aim at internal or external sustainability? Are the impacts site-specific, site-dependent or site-independent? | [13,16,17,34,37] |

| Temporal focus of the impact | What is the temporal scale of the impacts that should be taken into account? Does the assessment include inter-generational impacts? What time-frame should be included for the impacts? | [7,16,33,36,38] |

| Domain: Aggregation/Interpretation | ||

| Sustainability target | Is a sustainability target necessary? If the goal is to compare alternatives, to perform a hotspot analysis or to improve an object, a sustainability target is not essential. If the goal of the analysis is to determine the sustainability of an object, a target is required. This distinction is also referred to as direction to target (no target needed) versus distance from target (target needed), and as impact-led assessment (least impact, no target needed), objective-led assessment (best positive contribution, no target needed) or assessment for sustainability (like the other two, but in relation to a specific sustainability target). | [7,8,16,24,34] |

| Values/View on sustainability | What view on sustainability should be leading in the assessment? Is sustainability understood as weak, strong or partly substitutional? In short: weak means that the various capitals are interchangeable; strong means that each capital should be preserved independently; partly substitutional means weak until a critical level is reached, e.g., Critical Natural Capital (CNC) or a planetary boundary. One’s world view (personal beliefs or risk perception) can also influence the assessment. | [7,8,9,12,24,36,38,39,40,41,42,43] |

| View on integration of pillars | How should aggregation of information from different disciplines take place in the assessment? In a multi (separate), inter (connected) or trans (combined/holistic) disciplinary way? | [7,8,15,16,18,36,38] |

| Normalisation/weighting/aggregation method | Which aggregation level is preferred and which methods are used? Normalisation (making data comparable), weighting (specifying interrelationships) and aggregation (obtaining functional relationships) all need careful consideration. | [9,12,17,33,34,35,36] |

| Domain: Method Design | ||

| View on stakeholder involvement | Who should be involved in the assessment, and in what way? Also referred to as legitimacy, in relation to indices or composite indicators. | [7,8,19,33,36,38,40,44] |

| Context of the assessment | How and by whom are the results used? In which (phase of a) procedure are the results of the assessment used? Is the goal of the measure: decision making and management, advocacy, participation and consensus building or research and analysis? Or is it a strategic, capital investment, design and development, communication and marketing or operational question? | [8,13,14,19,32,33] |

| Uncertainties | How are uncertainties to be handled? Salience, credibility and variability? Should an uncertainty, sensitivity and/or perturbation analysis be included? | [7,8,13,16,33,36,45] |

| Domain: Organisational restrictions | ||

| Formal requirements | Should the method be formally recognized? ISO, EC, etc. | [13,33] |

| Expertise requirements and availability | Is there capacity for hiring expertise? Expertise requirements and availability | [12,13,36] |

| Software requirements and availability | Is there capacity for acquiring software? Software requirements and availability | [12,13] |

| Data requirements and availability | Is there capacity for gathering data? Data requirements and availability | [13,33,34,46] |
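The grouping of criteria into domains in the table above can be written down as a small lookup structure. The following is only a minimal sketch: the criterion and domain names are taken from the table, while the dictionary layout and the helper names `domain_of` and `open_criteria` are illustrative assumptions, not part of the published key.

```python
# Criteria grouped by domain, as listed in the table above.
# The data layout and the helper functions are hypothetical.
CRITERIA_BY_DOMAIN = {
    "System boundaries/Inventory": [
        "Object",
        "Spatial focus",
        "Temporal focus",
        "Life cycle thinking",
    ],
    "Impact Assessment/Theme selection": [
        "What is to be sustained",
        "Theme and indicator selection",
        "Spatial focus of impact",
        "Temporal focus of the impact",
    ],
    "Aggregation/Interpretation": [
        "Sustainability target",
        "Values/View on sustainability",
        "View on integration of pillars",
        "Normalisation/weighting/aggregation method",
    ],
    "Method Design": [
        "View on stakeholder involvement",
        "Context of the assessment",
        "Uncertainties",
    ],
    "Organisational restrictions": [
        "Formal requirements",
        "Expertise requirements and availability",
        "Software requirements and availability",
        "Data requirements and availability",
    ],
}


def domain_of(criterion: str) -> str:
    """Return the domain a criterion is assigned to."""
    for domain, criteria in CRITERIA_BY_DOMAIN.items():
        if criterion in criteria:
            return domain
    raise KeyError(f"unknown criterion: {criterion!r}")


def open_criteria(answers: dict) -> dict:
    """Group the criteria that have not been answered yet by domain."""
    missing = {}
    for domain, criteria in CRITERIA_BY_DOMAIN.items():
        todo = [c for c in criteria if c not in answers]
        if todo:
            missing[domain] = todo
    return missing
```

A structure like this would make it easy to check, before a method is chosen, which criteria in each domain still lack an explicit choice.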

2.4. Step 2: Assign the Criteria to Domains

2.5. Step 3: Build the Identification Key

2.6. Note on Theme Selection

3. Results: Examples of How the Identification Key Works and What Type of Problems It Solves

3.1. Example of Sustainability Assessment Identification Key Application

| Example 1 | | Example 2 | | Example 3 | | |

|---|---|---|---|---|---|---|

| Question | How sustainable is our food pattern? | Question | How sustainable is our food pattern? | Question | How sustainable is our food pattern? | |

| Sub Identification Key on System boundaries/Inventory | ||||||

| What is the object? | Products | What is the object? | Products | What is the object? | Geographical unit (river catchment) | |

| Single product(s) or product group(s)? | Single products | Single product(s) or product group(s)? | Product groups | |||

| Should the product(s) life cycles be included? | Yes | Should a chain analysis be included? | Yes | Should a chain analysis be included? | Yes | |

| Which part of the life cycle? | Cradle to grave | Which part of the chain? | Upstream | Which part of the chain? | Upstream | |

| What is the spatial focus of the activity? | Local | What is the spatial focus of the activity? | Regional | What is the spatial focus of the activity? | Continental | |

| What is the temporal focus of the activity? | Snapshot | What is the temporal focus of the activity? | Snapshot | What is the temporal focus of the activity? | Prospective | |

| Results sub IK System boundaries/Inventory | Life Cycle Inventory | Results sub IK System boundaries/Inventory | Input Output Analysis, Material Flow Analysis, Substance Flow Analysis, … | Results sub IK System boundaries/Inventory | Input Output type of analysis in combination with scenario building | |

| Sub Identification Key on Impact assessment/Theme selection | ||||||

| What is to be sustained? | Environment | What is to be sustained? | Resources | What is to be sustained? | Biodiversity | |

| Which location on the cause effect chain is required? | Impact at endpoint | Which location on the cause effect chain is required? | Pressure | Which location on the cause effect chain is required? | Impact midpoint | |

| Select themes | Climate change, acidification, eutrophication | Select themes | Economy, energy and material use | Select themes | Toxicity | |

| Results sub IK Impact assessment/Theme selection | Endpoint Life Cycle Impact Assessment (LCIA) method | Results sub IK Impact assessment/Theme selection | Input Output Analysis and Material Flow Analysis | Results sub IK Impact assessment/Theme selection | Chemical Footprint method or Midpoint LCIA method | |

| Sub Identification Key on Aggregation/Interpretation | ||||||

| What type of analysis is required? | Direction to target | What type of analysis is required? | Direction to target | What type of analysis is required? | Distance from target | |

| What type of sustainability goal is required? | A natural boundary | |||||

| Which level of aggregation is required? | Capitals | Which level of aggregation is required? | Total | Which level of aggregation is required? | Categories | |

| What is the view on sustainability? | Ecocentric | What is the view on sustainability? | Weak | |||

| Result sub IK aggregation | LCIA endpoint damage method | Result sub IK aggregation | A Multi Criteria Analysis (MCA) like weighted summation or Multi Attribute Value Theory (MAVT) | Result sub IK aggregation | Footprint method | |

| Match of sub IKs → method selection | Life Cycle Assessment with Endpoint LCIA method (e.g., ReCiPe) | Match of sub IKs → method selection | Material Flow Analysis and Input Output Analysis aggregated with MCA, e.g., MAVT | Match of sub IKs → method selection | Chemical pollution footprint method in combination with scenario building | |
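The way a sub identification key leads from answers to a method type can be sketched as a small decision tree. The example below is a hedged illustration only: it encodes just the three answer paths shown for the sub key on System boundaries/Inventory in the table above, so every other branch of the real key is absent. The names `EXAMPLE_PATHS`, `build_key` and `identify` are hypothetical.

```python
# Answer paths for the sub key on System boundaries/Inventory,
# copied from the three examples in the table above.
# Only these paths are encoded; the full key has more branches.
EXAMPLE_PATHS = [
    ([("What is the object?", "Products"),
      ("Single product(s) or product group(s)?", "Single products"),
      ("Should the product(s) life cycles be included?", "Yes"),
      ("Which part of the life cycle?", "Cradle to grave"),
      ("What is the spatial focus of the activity?", "Local"),
      ("What is the temporal focus of the activity?", "Snapshot")],
     "Life Cycle Inventory"),
    ([("What is the object?", "Products"),
      ("Single product(s) or product group(s)?", "Product groups"),
      ("Should a chain analysis be included?", "Yes"),
      ("Which part of the chain?", "Upstream"),
      ("What is the spatial focus of the activity?", "Regional"),
      ("What is the temporal focus of the activity?", "Snapshot")],
     "Input Output Analysis, Material Flow Analysis, "
     "Substance Flow Analysis, …"),
    ([("What is the object?", "Geographical unit (river catchment)"),
      ("Should a chain analysis be included?", "Yes"),
      ("Which part of the chain?", "Upstream"),
      ("What is the spatial focus of the activity?", "Continental"),
      ("What is the temporal focus of the activity?", "Prospective")],
     "Input Output type of analysis in combination with scenario building"),
]


def build_key(paths):
    """Merge answer paths into a nested {question: {answer: subtree}} tree."""
    root = {}
    for steps, result in paths:
        node = root
        for question, answer in steps:
            node = node.setdefault(question, {}).setdefault(answer, {})
        node["result"] = result
    return root


def identify(key, answers):
    """Follow the key using answers (question -> answer) until a result."""
    node = key
    while "result" not in node:
        (question, branches), = node.items()  # one question per node
        answer = answers.get(question)
        if answer not in branches:
            raise KeyError(f"no branch for {question!r} -> {answer!r}")
        node = branches[answer]
    return node["result"]


key = build_key(EXAMPLE_PATHS)
example_3 = dict(EXAMPLE_PATHS[2][0])
print(identify(key, example_3))
```

Because each question appears once per node, the answer given at one step determines which question is asked next, which is the defining property of an identification key. An answer that is not covered by the three encoded paths raises a `KeyError` rather than guessing a method.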

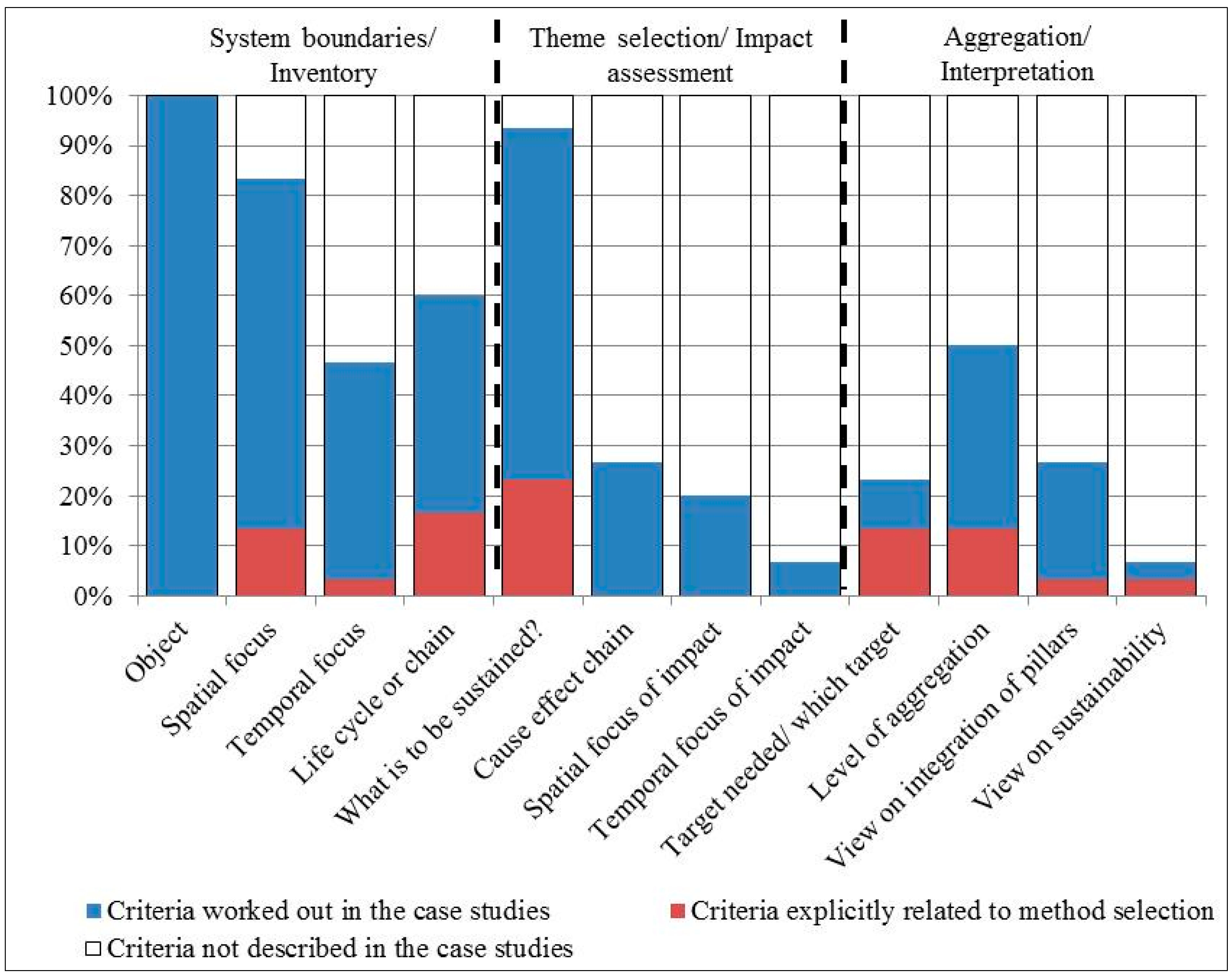

3.2. Confronting Sustainability Assessments in Scientific Literature with the Identification Key

3.2.1. Transparency on Method Selection

3.2.2. Prevent Inconsistencies between Introductions/Case Descriptions and Method Selection

3.2.3. Prevent Inconsistencies in Methodological Design

4. Discussion and Conclusions

- (a) guide and make explicit the choices in method selection and design, revealing assumptions that remain hidden in many studies;

- (b) yield a better understanding of the question raised and of how the question guides method selection;

- (c) enable a more robust interpretation of the results, because the results can be placed in the context of methodological choices;

- (d) eventually produce more transparent and reproducible assessments.

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Hoekstra, A.Y.; Wiedmann, T.O. Humanity’s unsustainable environmental footprint. Science 2014, 344, 1114–1117.

- Waas, T.; Hugé, J.; Block, T.; Wright, T.; Benitez-Capistros, F.; Verbruggen, A. Sustainability assessment and indicators: Tools in a decision-making strategy for sustainable development. Sustainability 2014, 6, 5512–5534.

- ICLEI. Sustainable cities. Available online: http://www.sustainablecities.eu (accessed on 9 April 2014).

- UN. Outcome Document of Rio+20 Conference; United Nations: Rio de Janeiro, Brazil, 2012.

- EC. Commission Recommendation of 9 April 2013 on the Use of Common Methods to Measure and Communicate the Life Cycle Environmental Performance of Products and Organisations. Off. J. Eur. Union 2013, 56, 1–210.

- EC. European innovation partnership “agricultural productivity and sustainability”. Available online: http://ec.europa.eu/agriculture/eip/index_en.htm (accessed on 9 April 2014).

- Gasparatos, A.; Scolobig, A. Choosing the most appropriate sustainability assessment tool. Ecol. Econ. 2012, 80, 1–7.

- Sala, S.; Ciuffo, B.; Nijkamp, P. A Meta-Framework for Sustainability Assessment; Free University of Amsterdam: Amsterdam, The Netherlands, 2013.

- Özdemir, E.D.; Härdtlein, M.; Jenssen, T.; Zech, D.; Eltrop, L. A confusion of tongues or the art of aggregating indicators: Reflections on four projective methodologies on sustainability measurement. Renew. Sustain. Energy Rev. 2011, 15, 2385–2396.

- Browne, D.; O’Regan, B.; Moles, R. Comparison of energy flow accounting, energy flow metabolism ratio analysis and ecological footprinting as tools for measuring urban sustainability: A case-study of an Irish city-region. Ecol. Econ. 2012, 83, 97–107.

- De Ridder, W. Sustainability A-Test Inception Report: Progress to Date and Future Tasks. Available online: http://www.pbl.nl/sites/default/files/cms/publicaties/555000001.pdf (accessed on 30 November 2014).

- Singh, R.K.; Murty, H.R.; Gupta, S.K.; Dikshit, A.K. An overview of sustainability assessment methodologies. Ecol. Indic. 2012, 15, 281–299.

- Wrisberg, N.; Udo de Haes, H.A.; Triebswetter, U.; Eder, P.; Clift, R. Analytical Tools for Environmental Design and Management in a Systems Perspective; Centre of Environmental Science, Leiden University: Leiden, The Netherlands, 2000.

- Finnveden, G.; Moberg, A. Environmental systems analysis tools: An overview. J. Cleaner Prod. 2005, 13, 1165–1173.

- Sala, S.; Farioli, F.; Zamagni, A. Progress in sustainability science: Lessons learnt from current methodologies for sustainability assessment: Part 1. Int. J. Life Cycle Assess. 2013, 18, 1653–1672.

- Hacking, T.; Guthrie, P. A framework for clarifying the meaning of triple bottom-line, integrated, and sustainability assessment. Environ. Impact Assess. Rev. 2008, 28, 73–89.

- Udo de Haes, H.A.; Sleeswijk, A.W.; Heijungs, R. Similarities, differences and synergisms between HERA and LCA: An analysis at three levels. Hum. Ecol. Risk Assess. 2006, 12, 431–449.

- Ness, B.; Urbel-Piirsalu, E.; Anderberg, S.; Olsson, L. Categorising tools for sustainability assessment. Ecol. Econ. 2007, 60, 498–508.

- De Ridder, W.; Turnpenny, J.; Nilsson, M.; von Raggamby, A. A framework for tool selection and use in integrated assessment for sustainable development. J. Environ. Assess. Policy Manag. 2007, 9, 423–441.

- Van Passel, S.; Meul, M. Multilevel and multi-user sustainability assessment of farming systems. Environ. Impact Assess. Rev. 2012, 32, 170–180.

- Florin, M.J.; van Ittersum, M.K.; van de Ven, G.W.J. Selecting the sharpest tools to explore the food-feed-fuel debate: Sustainability assessment of family farmers producing food, feed and fuel in Brazil. Ecol. Indic. 2012, 20, 108–120.

- Binder, C.R.; Feola, G.; Steinberger, J.K. Considering the normative, systemic and procedural dimensions in indicator-based sustainability assessments in agriculture. Environ. Impact Assess. Rev. 2010, 30, 71–81.

- Carof, M.; Colomb, B.; Aveline, A. A guide for choosing the most appropriate method for multi-criteria assessment of agricultural systems according to decision-makers’ expectations. Agric. Syst. 2013, 115, 51–62.

- Pope, J.; Annandale, D.; Morrison-Saunders, A. Conceptualising sustainability assessment. Environ. Impact Assess. Rev. 2004, 24, 595–616.

- Schneider, A.G.; Townsend-Small, A.; Rosso, D. Impact of direct greenhouse gas emissions on the carbon footprint of water reclamation processes employing nitrification-denitrification. Sci. Total Environ. 2015, 505, 1166–1173.

- Nickerson, R.C.; Varshney, U.; Muntermann, J. A method for taxonomy development and its application in information systems. Eur. J. Inf. Syst. 2013, 22, 336–359.

- Bailey, K.D. Typologies and Taxonomies: An Introduction to Classification Techniques; SAGE: Thousand Oaks, CA, USA, 1994.

- Peters, I. Folksonomies: Indexing and Retrieval in Web 2.0; De Gruyter, Saur: Berlin, Germany, 2009.

- Kendal, S.; Creen, M. An Introduction to Knowledge Engineering; Springer: London, UK, 2007.

- Dijkers, M.P.; Hart, T.; Tsaousides, T.; Whyte, J.; Zanca, J.M. Treatment taxonomy for rehabilitation: Past, present, and prospects. Arch. Phys. Med. Rehabil. 2014, 95, S6–S16.

- Sala, S.; Farioli, F.; Zamagni, A. Life cycle sustainability assessment in the context of sustainability science progress (part 2). Int. J. Life Cycle Assess. 2013, 18, 1686–1697.

- Jeswani, H.K.; Azapagic, A.; Schepelmann, P.; Ritthoff, M. Options for broadening and deepening the LCA approaches. J. Cleaner Prod. 2010, 18, 120–127.

- Parris, T.M.; Kates, R.W. Characterizing and Measuring Sustainable Development. Annu. Rev. Env. Resour. 2003, 28, 559–586.

- Mayer, A.L. Strengths and weaknesses of common sustainability indices for multidimensional systems. Environ. Int. 2008, 34, 277–291.

- Böhringer, C.; Jochem, P.E.P. Measuring the immeasurable: A survey of sustainability indices. Ecol. Econ. 2007, 63, 1–8.

- Gasparatos, A.; El-Haram, M.; Horner, M. A critical review of reductionist approaches for assessing the progress towards sustainability. Environ. Impact Assess. Rev. 2008, 28, 286–311.

- Joumard, R. Environmental sustainability assessments: Towards a new framework. Int. J. Sustain. Soc. 2011, 3, 133–150.

- Bond, A.J.; Morrison-Saunders, A. Re-evaluating sustainability assessment: Aligning the vision and the practice. Environ. Impact Assess. Rev. 2011, 31, 1–7.

- Dietz, S.; Neumayer, E. Weak and strong sustainability in the SEEA: Concepts and measurement. Ecol. Econ. 2007, 61, 617–626.

- Robèrt, K.H.; Schmidt-Bleek, B.; Aloisi De Larderel, J.; Basile, G.; Jansen, J.L.; Kuehr, R.; Price Thomas, P.; Suzuki, M.; Hawken, P.; Wackernagel, M. Strategic sustainable development: Selection, design and synergies of applied tools. J. Cleaner Prod. 2002, 10, 197–214.

- Svarstad, H.; Petersen, L.K.; Rothman, D.; Siepel, H.; Wätzold, F. Discursive biases of the environmental research framework DPSIR. Land Use Policy 2008, 25, 116–125.

- De Schryver, A.M.; van Zelm, R.; Humbert, S.; Pfister, S.; McKone, T.E.; Huijbregts, M.A.J. Value choices in life cycle impact assessment of stressors causing human health damage. J. Ind. Ecol. 2011, 15, 796–815.

- Zoeteman, K. Sustainability of nations. Tracing stages of sustainable development of nations with integrated indicators. Int. J. Sustain. Dev. World Ecol. 2001, 8, 93–109.

- Thabrew, L.; Wiek, A.; Ries, R. Environmental decision making in multi-stakeholder contexts: Applicability of life cycle thinking in development planning and implementation. J. Cleaner Prod. 2009, 17, 67–76.

- Pintér, L.; Hardi, P.; Martinuzzi, A.; Hall, J. Bellagio STAMP: Principles for sustainability assessment and measurement. Ecol. Indic. 2012, 17, 20–28.

- Olsen, S.I.; Christensen, F.M.; Hauschild, M.; Pedersen, F.; Larsen, H.F.; Tørsløv, J. Life cycle impact assessment and risk assessment of chemicals: A methodological comparison. Environ. Impact Assess. Rev. 2001, 21, 385–404.

- OECD. OECD Environmental Indicators: Development, Measurement and Use; OECD: Paris, France, 2003.

- Niemeijer, D.; de Groot, R.S. Framing environmental indicators: Moving from causal chains to causal networks. Environ. Dev. Sustain. 2008, 10, 89–106.

- Blok, K.; Huijbregts, M.; Roes, L.; van Haaster, B.; Patel, M.; Hertwich, E.; Wood, R.; Hauschild, M.; Sellke, P.; Antunes, P.; et al. A Novel Methodology for the Sustainability Impact Assessment of New Technologies; Copernicus Institute: Utrecht, The Netherlands, 2013.

- Niemeijer, D.; de Groot, R.S. A conceptual framework for selecting environmental indicator sets. Ecol. Indic. 2008, 8, 14–25.

- Mata, T.M.; Martins, A.A.; Neto, B.; Martins, M.L.; Salcedo, R.L.R.; Costa, C.A.V. LCA tool for sustainability evaluations in the pharmaceutical industry. Mech. Chem. Eng. Trans. 2012, 26, 261–266.

- Ibáñez-Forés, V.; Bovea, M.D.; Azapagic, A. Assessing the sustainability of best available techniques (BAT): Methodology and application in the ceramic tiles industry. J. Cleaner Prod. 2013, 51, 162–176.

- Traverso, M.; Asdrubali, F.; Francia, A.; Finkbeiner, M. Towards life cycle sustainability assessment: An implementation to photovoltaic modules. Int. J. Life Cycle Assess. 2012, 17, 1068–1079.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zijp, M.C.; Heijungs, R.; Van der Voet, E.; Van de Meent, D.; Huijbregts, M.A.J.; Hollander, A.; Posthuma, L. An Identification Key for Selecting Methods for Sustainability Assessments. Sustainability 2015, 7, 2490-2512. https://doi.org/10.3390/su7032490
