Identifying Challenges to Building an Evidence Base for Restoration Practice

Abstract

1. Introduction

2. Materials and Methods

2.1. Questionnaire Design and Administration

- rate the adequacy of baseline information collection in their projects (we use the dictionary meaning [28] of the word "adequate", that is, fit for the respondent’s particular purposes or needs);

- identify obstacles to adequate baseline information collection;

- comment on what could be done to increase the adequacy of baseline information collection;

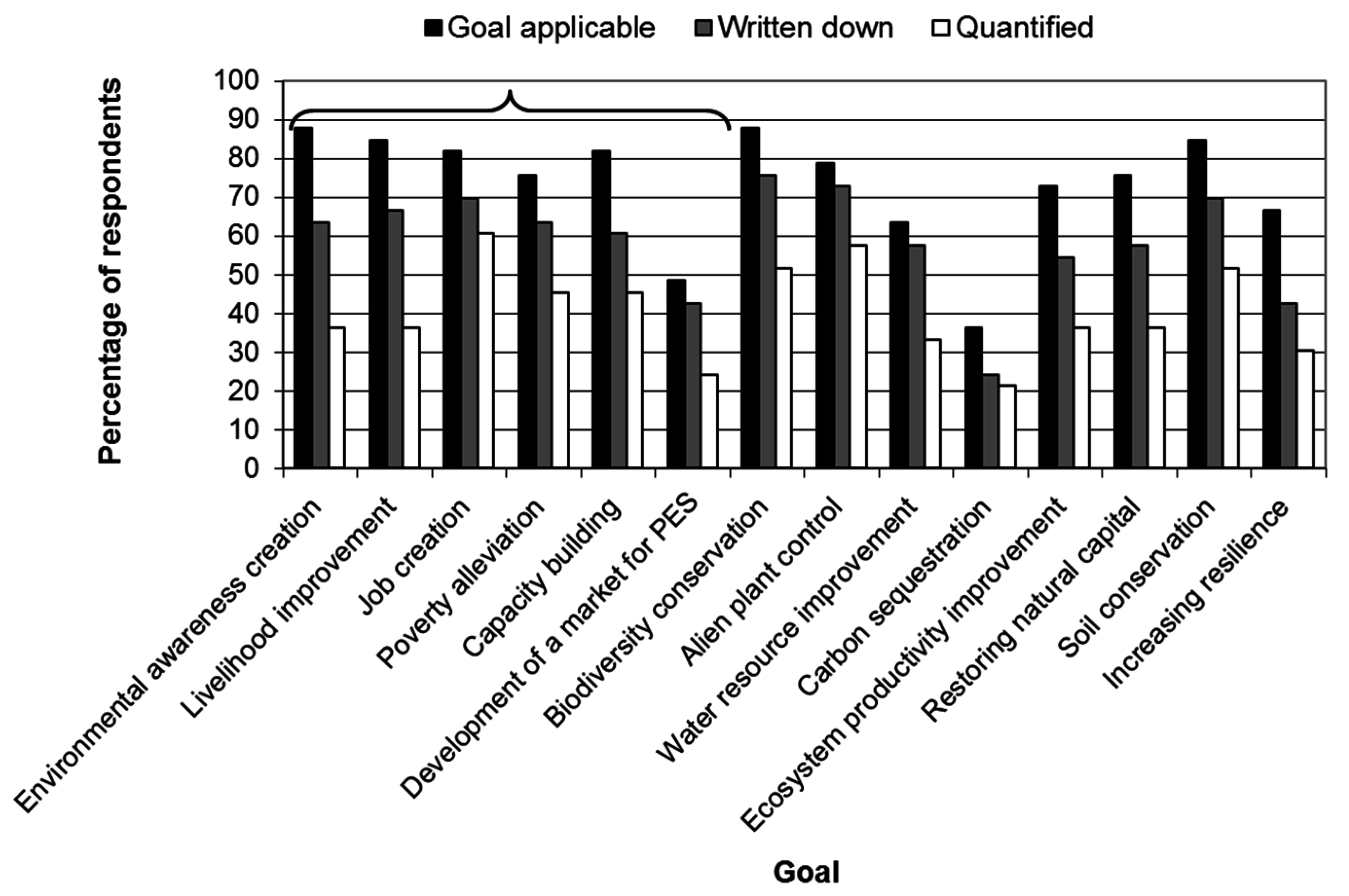

- identify goals applicable to their projects as well as obstacles to the documentation and quantification of goals;

- comment on the setting of goals of restoration;

- identify obstacles to monitoring and comment on what is needed to increase the incidence of long-term monitoring.

| Baseline Measure | Goal | Monitored Indicator |
|---|---|---|
| Environmental awareness levels | Job creation | Person hours worked |
| Unemployment rate | Poverty alleviation | Training provided |
| People living in poverty | Livelihood improvement | Awareness campaigns held |
| Household income | Development of a market for PES | Number of jobs created |
| Literacy | Capacity building | Livelihood impacts |
| Plant species composition | Environmental awareness creation | Environmental awareness levels |
| Density/cover of indigenous species | Alien plant control | Area cleared of invasives |
| Water quality | Water resource improvement | Fences erected |
| Water quantity | Biodiversity conservation | Solid structures built |
| Aquatic diversity | Soil conservation | Area revegetated |
| Above-ground carbon stocks | Carbon sequestration | Water quantity |
| Soil chemical quality | Ecosystem productivity improvement | Water quality |
| Geomorphology | Restoring natural capital | Species richness |
| Erosion/bare patches | Increasing resilience | Soil erodability |
| Levels of degradation | Other (please specify) | Carbon sequestered |
| Other (please specify) | | Plant survival/growth |
| | | Biomass accumulation |
| | | Other (please specify) |

2.2. Sample Selection

2.3. Data Analysis

3. Results

3.1. Response Rates and Roles of Respondents in Restoration

3.2. Baseline Information Collection, Goal Setting and Monitoring

3.3. Perceived Obstacles

| Variable | Obstacle | % Identifying Obstacle |
|---|---|---|
| Baseline measures | Not part of ToR | 9 |
| | Lack of funds | 6 |
| | Lack of time | 6 |
| | Lack of expertise | 3 |
| Goals | Not all goals can be quantified | 36 |
| | Stakeholders are vague about what they want | 15 |
| | Resource constraints | 12 |
| | Not necessary to specify and quantify goals | 12 |
| | Goal not part of Terms of Reference | 6 |
| | Goals change all the time | 3 |
| Monitoring | Lack of funds | 12 |
| | Capacity constraints | 12 |
| | Lack of knowledge | 9 |

4. Discussion

4.1. Strength and Perception of the Evidence Base

4.2. Obstacles to Building the Evidence Base

4.3. Overcoming Obstacles

5. Conclusions

Supplementary Files

Supplementary File 1

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Society for Ecological Restoration (SER) International Science and Policy Working Group. The SER International Primer on Ecological Restoration; Society for Ecological Restoration International: Washington, DC, USA, 2004; Available online: http://www.ser.org/resources/resources-detail-view/ser-international-primer-on-ecological-restoration (accessed on 5 August 2012).

- Aronson, J.; Alexander, S. Ecosystem restoration is now a global priority: Time to roll up our sleeves. Restor. Ecol. 2013, 21, 293–296.

- Hobbs, R.J.; Harris, J.A. Restoration ecology: Repairing the earth’s ecosystems in the new millennium. Restor. Ecol. 2001, 9, 239–246.

- Kapos, V.; Balmford, A.; Aveling, R.; Bubb, P.; Carey, P.; Entwistle, A.; Hopkins, J.; Mulliken, T.; Safford, R.; Stattersfield, A.; et al. Outcomes, not implementation predict conservation success. Oryx 2009, 43, 336–342.

- Cook, C.N.; Hockings, M.; Carter, R.W. Conservation in the dark? The information used to support management decisions. Front. Ecol. Environ. 2009, 8, 181–186.

- Ferraro, P.J.; Pattanayak, S.K. Money for nothing? A call for empirical evaluation of biodiversity conservation investments. PLoS Biol. 2006, 4, 482–488.

- Pullin, A.S.; Stewart, G.B. Guidelines for systematic review in conservation and environmental management. Conserv. Biol. 2006, 20, 1647–1656.

- Roberts, P.D.; Stewart, G.B.; Pullin, A.S. Are review articles a reliable source of evidence to support conservation and environmental management? A comparison with medicine. Biol. Conserv. 2006, 132, 409–423.

- Pullin, A.S.; Knight, T.M. Doing more good than harm—Building an evidence-base for conservation and environmental management. Biol. Conserv. 2009, 142, 931–934.

- Sutherland, W.J.; Pullin, A.S.; Dolman, P.M.; Knight, T.M. The need for evidence-based conservation. Trends Ecol. Evol. 2004, 19, 305–308.

- Hobbs, R. Looking for the silver lining: Making the most of failure. Restor. Ecol. 2009, 17, 1–3.

- Holl, K.D.; Howarth, R.B. Paying for restoration. Restor. Ecol. 2000, 8, 260–267.

- Holmes, P.; Esler, K.J.; Richardson, D.; Witkowski, E. Guidelines for improved management of riparian zones invaded by alien plants in South Africa. S. Afr. J. Bot. 2008, 74, 538–552.

- Fule, P.Z.; Covington, W.W.; Smith, H.B.; Springer, J.D.; Heinlein, T.A.; Huisinga, K.D.; Moore, M.M. Comparing ecological restoration alternatives: Grand Canyon, Arizona. For. Ecol. Manag. 2002, 170, 19–41.

- Ntshotsho, P.; Reyers, B.; Esler, K.J. Assessing the evidence base for restoration in South Africa. Restor. Ecol. 2011, 19, 578–586.

- Bash, J.S.; Ryan, C.M. Stream restoration and enhancement projects: Is anyone monitoring? Environ. Manag. 2002, 29, 877–885.

- Christian-Smith, J.; Merenlender, A.M. The disconnect between restoration goals and practices: A case study of watershed restoration in the Russian River Basin, California. Restor. Ecol. 2010, 18, 95–102.

- Caughlan, L.; Oakley, K.L. Cost considerations for long-term ecological monitoring. Ecol. Indic. 2001, 1, 123–134.

- Havstad, K.M.; Herrick, J.E. Long-term ecological monitoring. Arid Land Restor. Manag. 2003, 17, 389–400.

- Legg, C.J.; Nagy, L. Why most conservation monitoring is, but need not be, a waste of time. J. Environ. Manag. 2006, 78, 194–199.

- Field, S.A.; O’Connor, P.J.; Tyre, A.J.; Possingham, H.P. Making monitoring meaningful. Austral Ecol. 2007, 32, 485–491.

- Hobbs, R.J. Setting effective and realistic restoration goals: Key directions for research. Restor. Ecol. 2007, 15, 354–357.

- Morton, S.R.; Hoegh-Guldberg, O.; Lindenmayer, D.B.; Olson, M.H.; Hughes, L.; McCulloch, M.T.; McIntyre, S.; Nix, H.A.; Prober, S.M.; Saunders, D.A.; et al. The big ecological questions inhibiting effective environmental management in Australia. Austral Ecol. 2009, 34, 1–9.

- Roux, D.J.; Rogers, K.H.; Biggs, H.C.; Ashton, P.J.; Sergeant, A. Bridging the Science-Management Divide: Moving from Unidirectional Knowledge Transfer to Knowledge Interfacing and Sharing. 2006. Available online: http://www.ecologyandsociety.org/vol11/iss1/art4/ (accessed on 11 June 2010).

- Gibbons, P.; Zammit, C.; Youngentob, K.; Possingham, H.P.; Lindenmayer, D.B.; Bekessy, S.; Burgman, M.; Colyvan, M.; Considine, M.; Felton, A.; et al. Some practical suggestions for improving engagement between researchers and policy-makers in natural resource management. Ecol. Manag. Restor. 2008, 9, 182–186.

- Biggs, D.; Abel, N.; Knight, A.T.; Leitch, A.; Langston, A.; Ban, N.C. The implementation crisis in conservation planning: Could “mental models” help? Conserv. Lett. 2011, 4, 169–183.

- Urgenson, L.S.; Prozesky, H.E.; Esler, K.J. Stakeholder perceptions of an ecosystem services approach to clearing invasive alien plants on private land. Ecol. Soc. 2013, 18, Article 26.

- Oxford Advanced Learner’s Dictionary. 2011. Available online: http://oald8.oxfordlearnersdictionaries.com/dictionary/adequate (accessed on 25 June 2013).

- Babbie, E.; Mouton, J. The Practice of Social Research; Oxford University Press: Cape Town, South Africa, 2001; pp. 163–206.

- Kapos, V.; Balmford, A.; Aveling, R.; Bubb, P.; Carey, P.; Entwistle, A.; Hopkins, J.; Mulliken, T.; Safford, R.; Stattersfield, A.; et al. Calibrating conservation: New tools for measuring success. Conserv. Lett. 2008, 1, 155–164.

- Walpole, M.; Almond, R.E.A.; Besancon, C.; Butchart, S.H.M.; Campbell-Lendrum, D.; Carr, G.M.; Collen, B.; Collette, L.; Davidson, N.C.; Dulloo, E.; et al. Tracking progress toward the 2010 biodiversity target and beyond. Science 2009, 325, 1503–1504.

- Tear, T.H.; Kareiva, P.; Angermeier, P.L.; Comer, P.; Czech, B.; Kautz, R.; Landon, L.; Mehlman, D.; Murphy, K.; Ruckelshaus, M.; et al. How much is enough? The recurrent problem of setting measurable objectives in conservation. BioScience 2005, 55, 835–849.

- Bernhardt, E.S.; Sudduth, E.B.; Palmer, M.A.; Allan, J.D.; Meyer, J.L.; Alexander, G.; Follastad-Shah, J.; Hassett, B.; Jenkinson, R.; Lave, R.; et al. Restoring rivers one reach at a time: Results from a survey of U.S. river restoration practitioners. Restor. Ecol. 2007, 15, 482–493.

- Hassett, B.; Palmer, M.A.; Bernhardt, E.S. Evaluating stream restoration in the Chesapeake Bay watershed through practitioner interviews. Restor. Ecol. 2007, 15, 463–472.

- Pullin, A.S.; Knight, T.M.; Stone, D.A.; Charman, K. Do conservation managers use scientific evidence to support their decision-making? Biol. Conserv. 2004, 119, 245–252.

- Cabin, R.J.; Clewell, A.; Ingram, M.; McDonald, T.; Temperton, V. Bridging restoration science and practice: Results and analysis of a survey from the 2009 Society for Ecological Restoration International meeting. Restor. Ecol. 2010, 18, 783–788.

- Fisher, R.J. Social desirability bias and the validity of indirect questioning. J. Consum. Res. 1993, 20, 303–315.

- Rumps, J.M.; Katz, S.L.; Barnas, K.; Morehead, M.D.; Jenkinson, R.; Clayton, S.R.; Goodwin, P. Stream restoration in the Pacific Northwest: Analysis of interviews with project managers. Restor. Ecol. 2007, 15, 506–551.

- Danielsen, F.; Mendoza, M.M.; Tagtag, A.; Alviola, P.A.; Balete, D.S.; Jensen, A.E.; Enghoff, M.; Poulsen, M.K. Increasing conservation management action by involving local people in natural resource monitoring. Ambio 2007, 36, 566–570.

- Everson, T.M.; Everson, C.S.; Zuma, K.D. Community Based Research on the Influence of Rehabilitation Techniques on the Management of Degraded Catchments; Water Research Commission: Pretoria, South Africa, 2007.

- Independent Evaluation Group (IEG). Writing Terms of Reference for An Evaluation: A How-to Guide. 2011. Available online: http://siteresources.worldbank.org/EXTEVACAPDEV/Resources/ecd_writing_TORs.pdf (accessed on 15 February 2013).

- Ryder, D.S.; Miller, W. Setting goals and measuring success: Linking patterns and processes in stream restoration. Hydrobiologia 2005, 552, 147–158.

- Slocombe, D.S. Defining goals and criteria for ecosystem-based management. Environ. Manag. 1998, 22, 483–493.

- Ludwig, D. The era of management is over. Ecosystems 2001, 4, 758–764.

- Gonzalo-Turpin, H.; Couix, N.; Hazard, L. Rethinking partnerships with the aim of producing knowledge with practical relevance: A case study in the field of ecological restoration. Ecol. Soc. 2008, 13, Article 53.

- Cohn, J.P. Citizen Science: Can volunteers do real research? BioScience 2008, 58, 192–197.

- Sunderland, T.; Sunderland-Groves, J.; Shanley, P.; Campbell, B. Bridging the gap: How can information access and exchange between conservation biologists and field practitioners be improved for better conservation outcomes? Biotropica 2009, 41, 549–554.

- Esler, K.J.; Prozesky, H.; Sharma, G.P.; McGeoch, M. How wide is the “knowing-doing” gap in invasion biology? Biol. Invasions 2010, 12, 4065–4075.

- Folke, C.; Hahn, T.; Olsson, P.; Norberg, J. Adaptive governance of social-ecological systems. Annu. Rev. Environ. Resour. 2005, 30, 441–473.

- Armitage, D.; Marschke, M.; Plummer, R. Adaptive co-management and the paradox of learning. Glob. Environ. Chang. 2008, 18, 86–98.

- Williams, B.K. Passive and active adaptive management: Approaches and an example. J. Environ. Manag. 2011, 92, 1371–1378.

- Ingram, M. Editorial: You don’t have to be a scientist to do science. Ecol. Restor. 2009, 27.

- Segan, D.B.; Bottrill, M.C.; Baxter, P.W.J.; Possingham, H.P. Using conservation evidence to guide management. Conserv. Biol. 2010, 25, 200–202.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Ntshotsho, P.; Esler, K.J.; Reyers, B. Identifying Challenges to Building an Evidence Base for Restoration Practice. Sustainability 2015, 7, 15871-15881. https://doi.org/10.3390/su71215788
