The Persistence Puzzle: Bibliometric Insights into Dropout in MOOCs

Abstract

1. Introduction

1.1. Setting the Scene

1.2. The Objective of the Study

- How has MOOC abandonment research evolved over time?

- What are the characteristics of the articles that stood out in the field of MOOC dropout?

- Who are the top authors in MOOC dropout research?

- Which journals do researchers in this field prefer for publishing their work?

- Which are the leading universities in publishing work on MOOC dropout?

1.3. Manuscript Contribution

1.4. Paper Roadmap

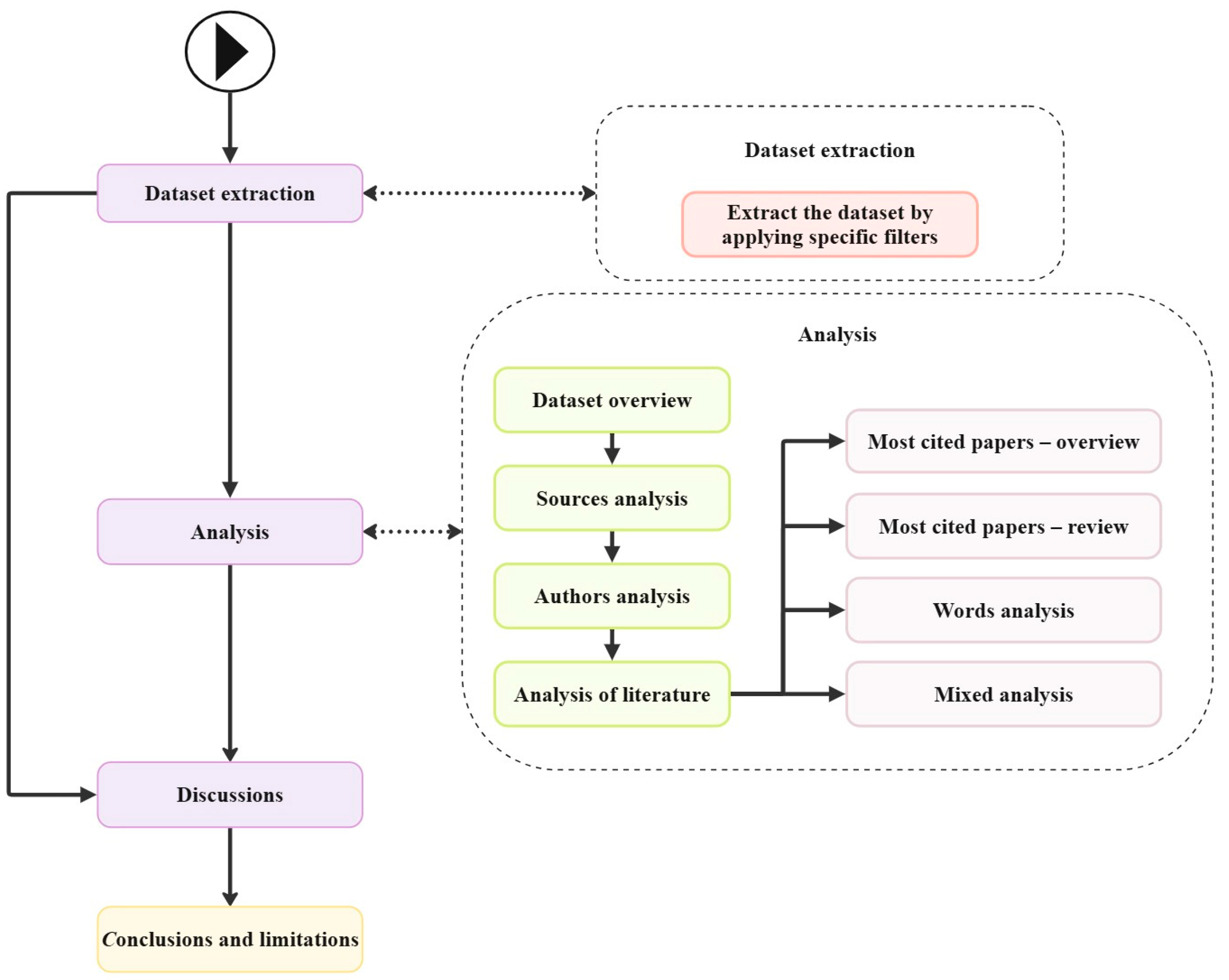

2. Materials and Methods

- Dataset extraction: Downloading the dataset from the selected platform, in our case Clarivate Analytics’ Web of Science Core Collection, commonly known as the Web of Science (WoS) database;

- Analysis: Conducting the analyses with dedicated software, in our case the Biblioshiny 4.2.3 library in R version 4.4.1;

- Discussions;

- Conclusions and limitations.
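The first two steps can be illustrated with a minimal Python sketch. It is not the pipeline used in this study, which relied on Biblioshiny in R; it only assumes that the records were exported from WoS as field-tagged plain text (two-letter tags such as `AU` and `PY`, continuation lines indented by three spaces, `ER` closing each record). The sample records are trimmed stand-ins built from author names and years that appear in the reference list, and `parse_wos` is a hypothetical helper name.

```python
from collections import Counter

# Miniature stand-in for a WoS plain-text export. Author names and years come
# from entries in the reference list; author lists are trimmed and all other
# fields are omitted for brevity.
SAMPLE_EXPORT = """\
PT J
AU Xing, W
   Du, D
PY 2019
ER

PT J
AU Chen, J
   Feng, J
   Sun, X
PY 2019
ER

PT J
AU Gao, Y
   Sun, X
   Feng, J
PY 2020
ER
"""


def parse_wos(text):
    """Parse field-tagged text into a list of {tag: [values]} records."""
    records, current, tag = [], {}, None
    for line in text.splitlines():
        if not line.strip():
            continue  # blank separator between records
        if line.startswith("ER"):
            records.append(current)  # "ER" marks the end of a record
            current, tag = {}, None
        elif line.startswith("   ") and tag:
            current[tag].append(line.strip())  # continuation of the last tag
        else:
            tag, _, value = line.partition(" ")
            current.setdefault(tag, []).append(value.strip())
    return records


records = parse_wos(SAMPLE_EXPORT)

# Two simple descriptive indicators: annual output and papers per author.
per_year = Counter(r["PY"][0] for r in records if "PY" in r)
per_author = Counter(a for r in records for a in r.get("AU", []))

print(sorted(per_year.items()))
print(per_author.most_common(2))
```

A real export also carries file-level header and footer lines and many more fields per record, so a production workflow would more likely rely on an established tool than on a hand-written parser like this one.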

2.1. Dataset Extraction

2.2. Analysis

2.3. Discussion

2.4. Conclusions and Limitations

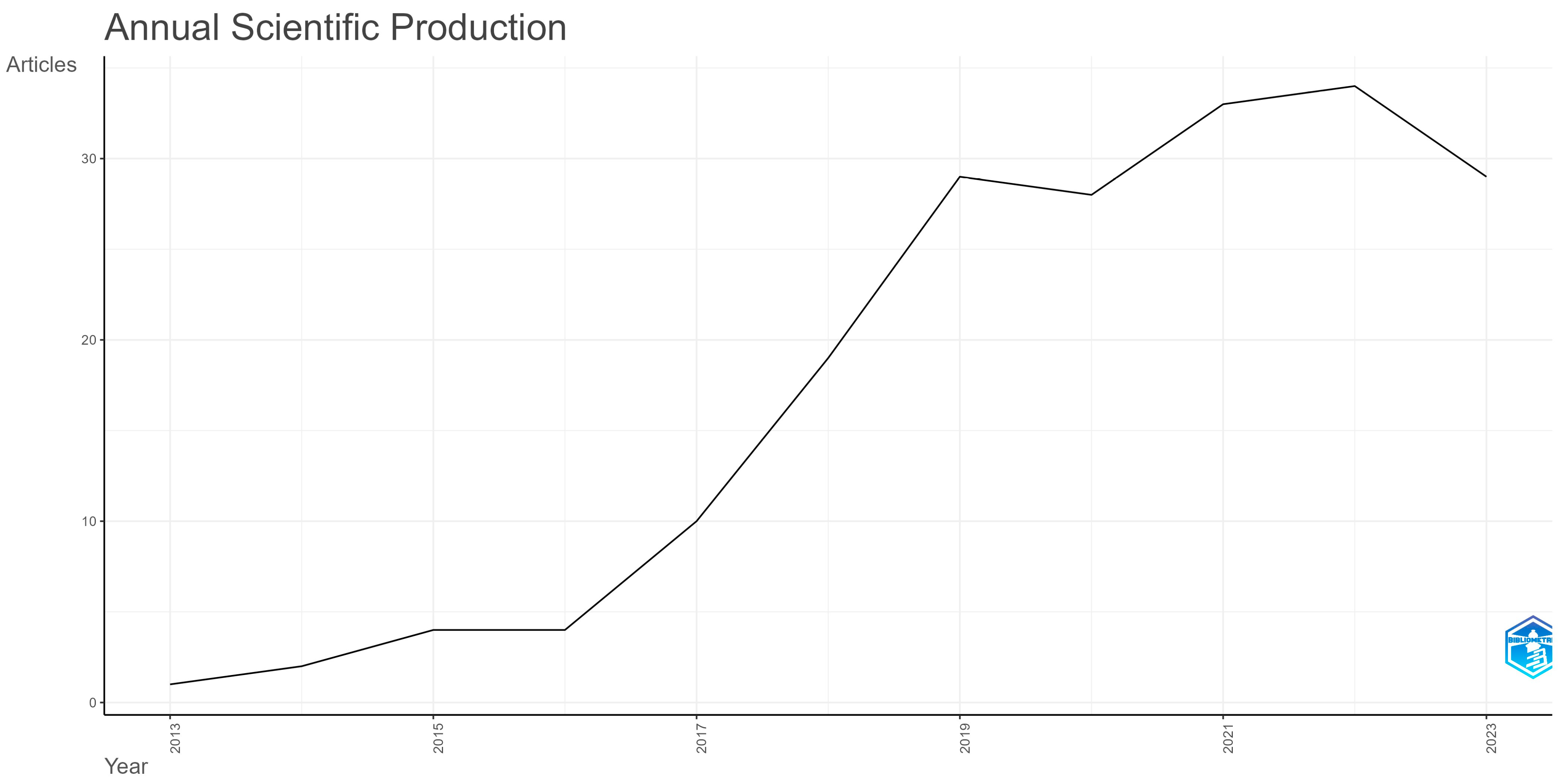

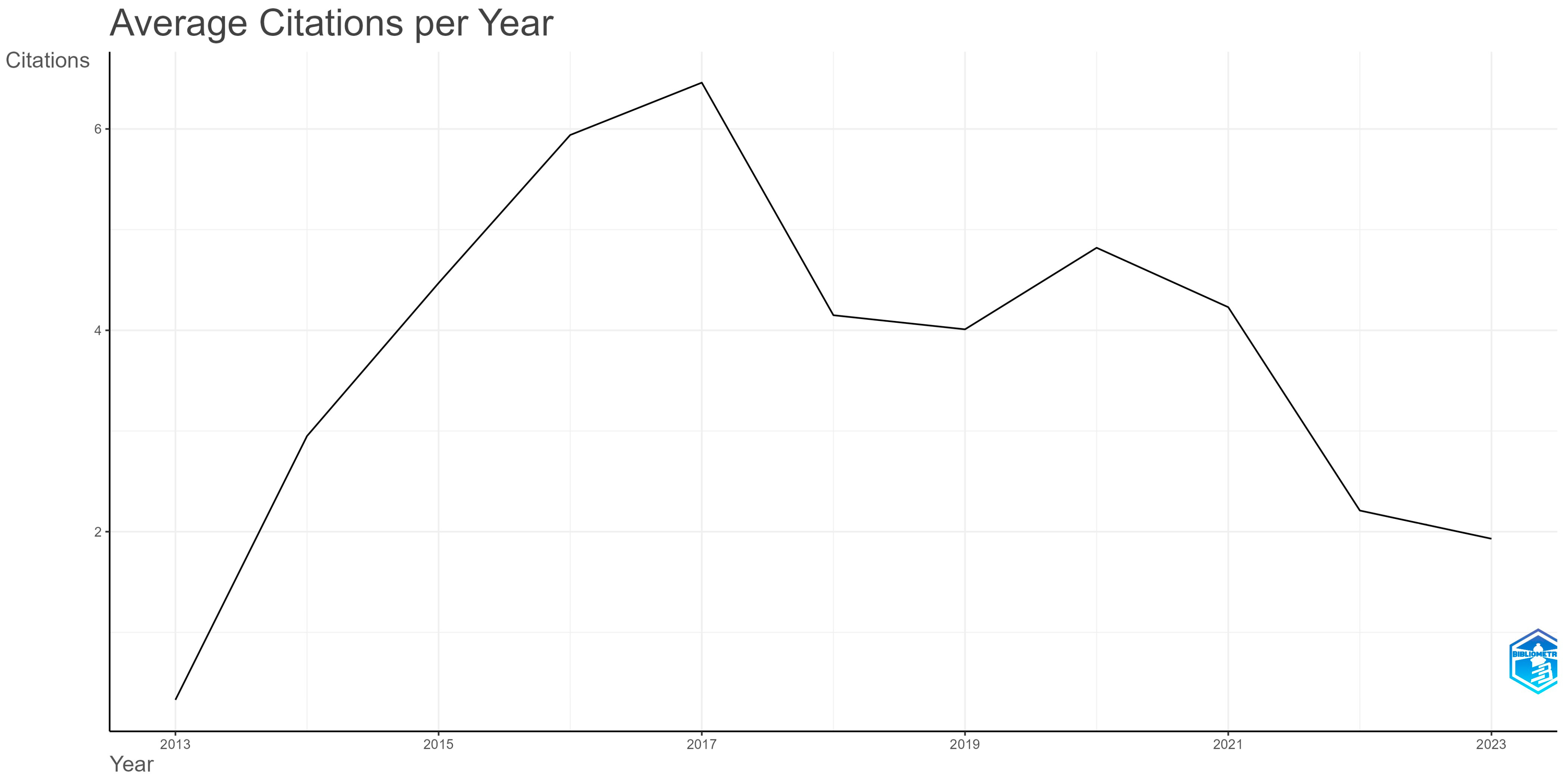

3. Dataset Analysis

3.1. Dataset Overview

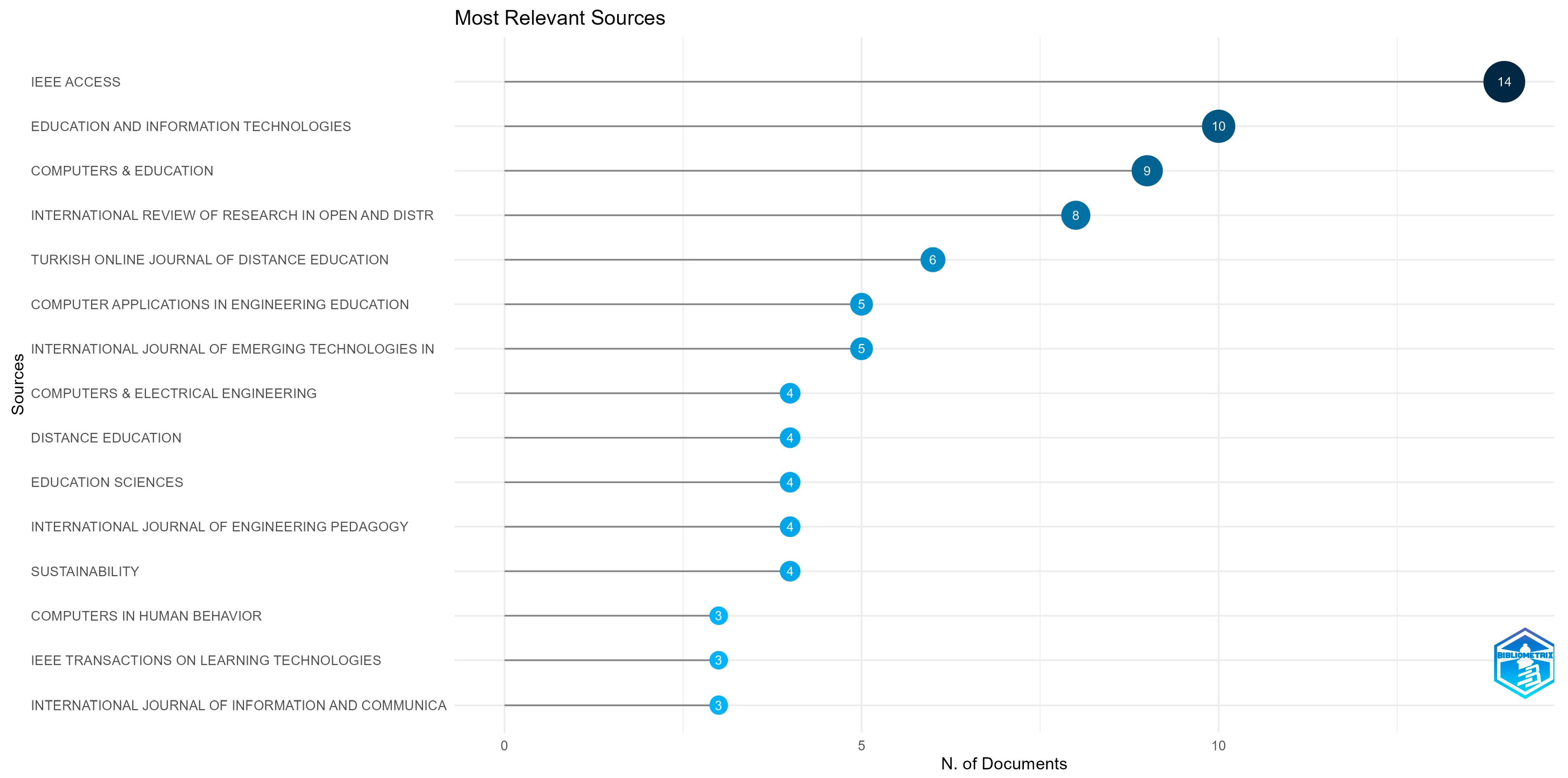

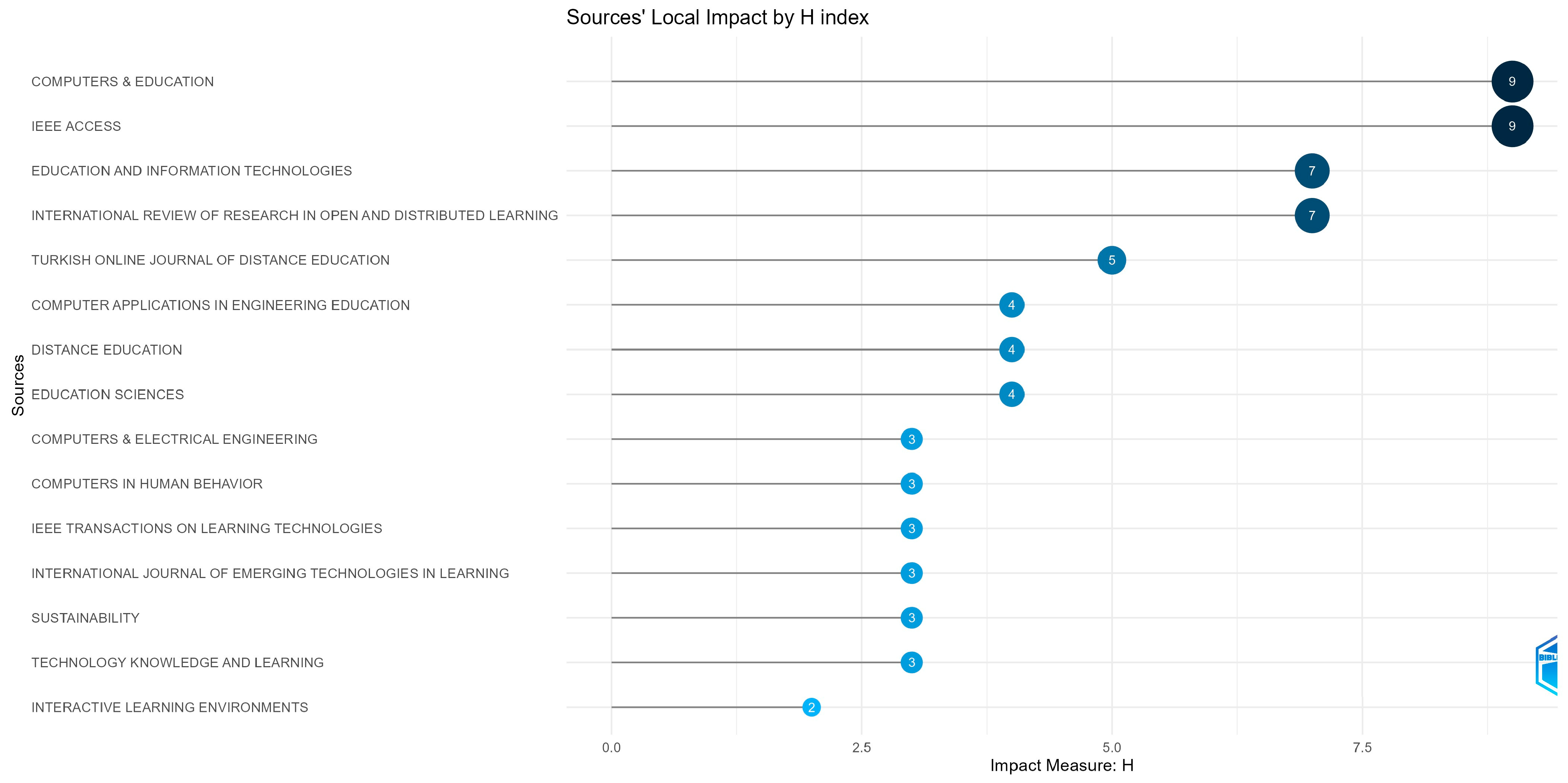

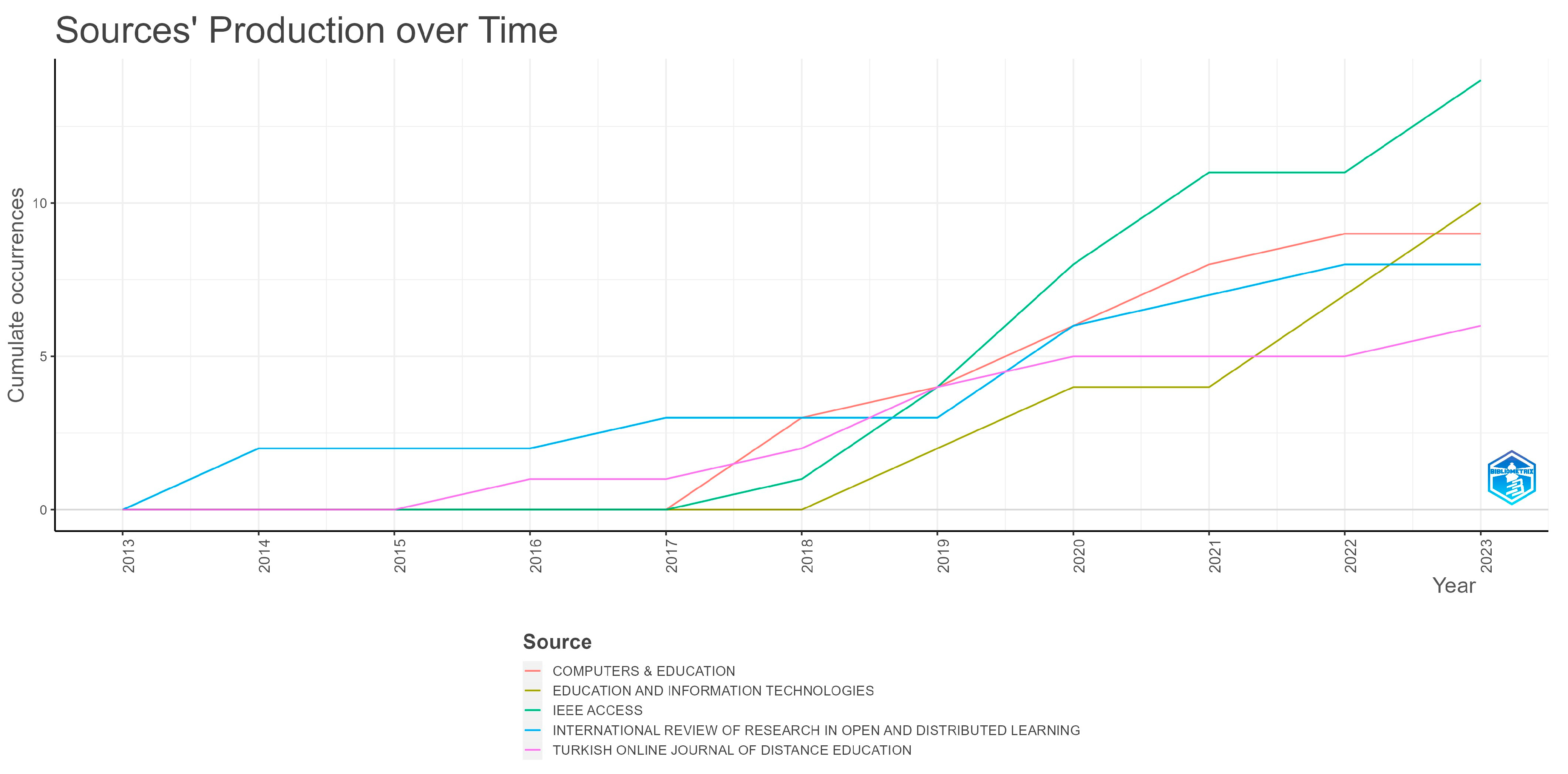

3.2. Sources

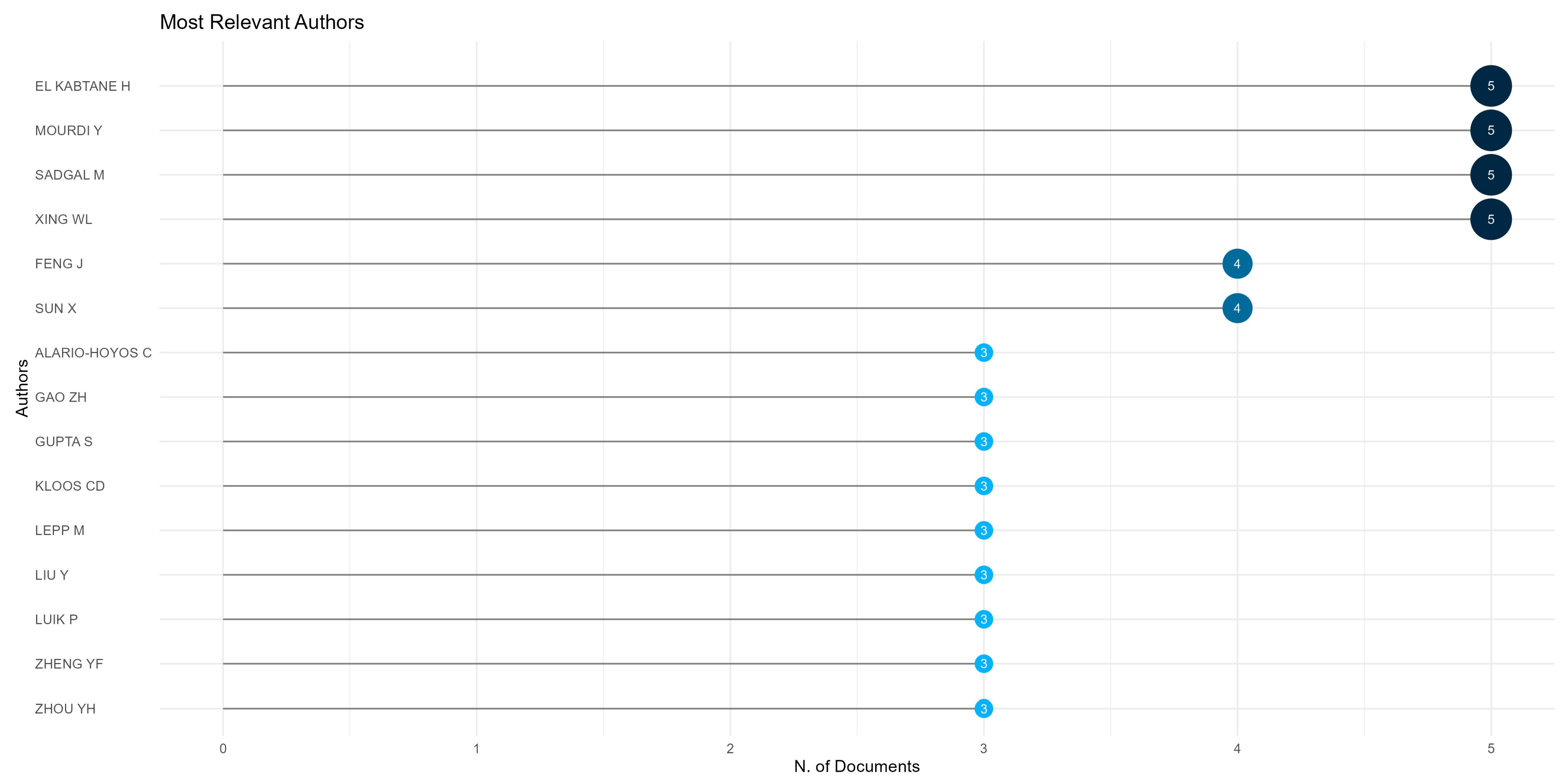

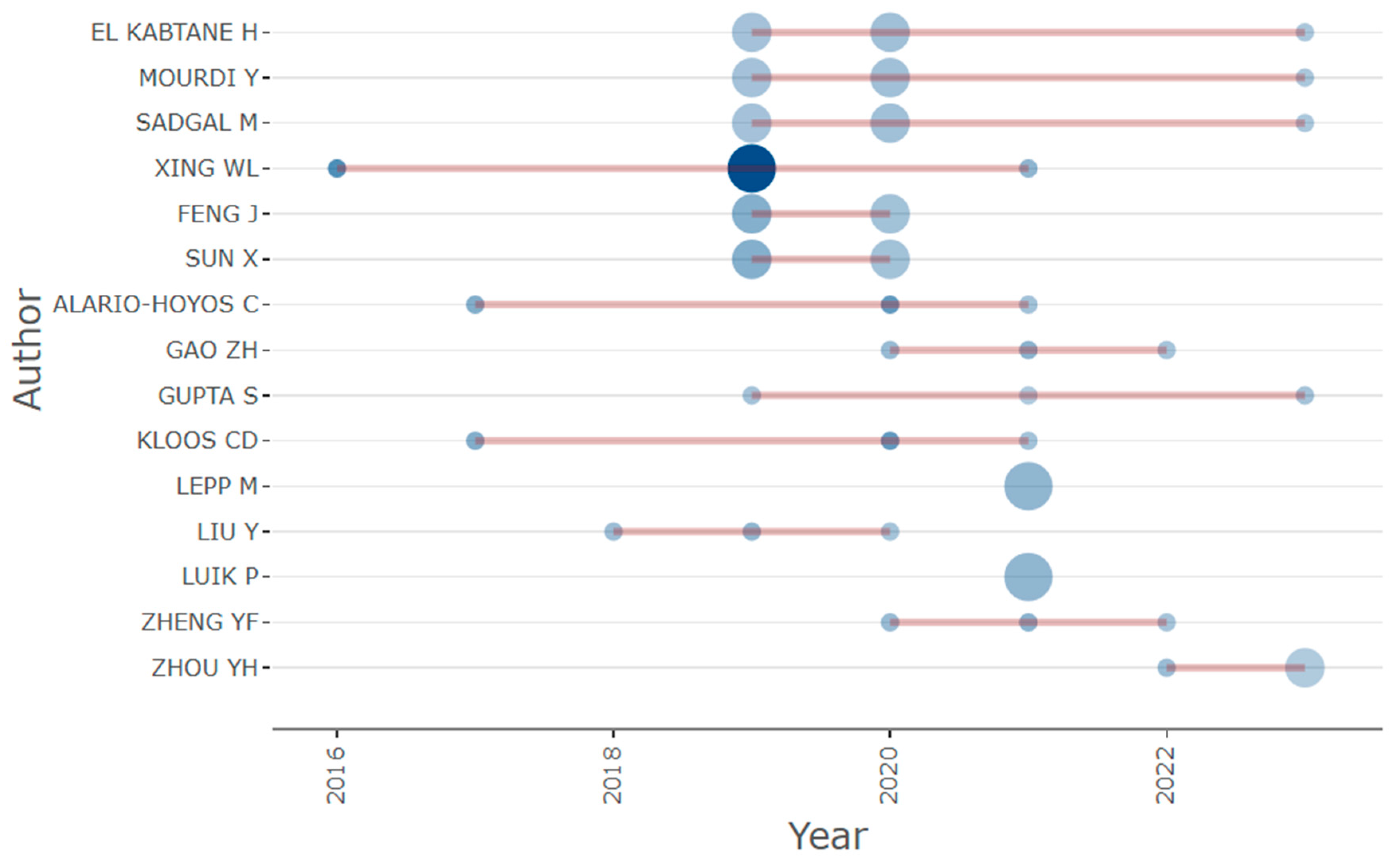

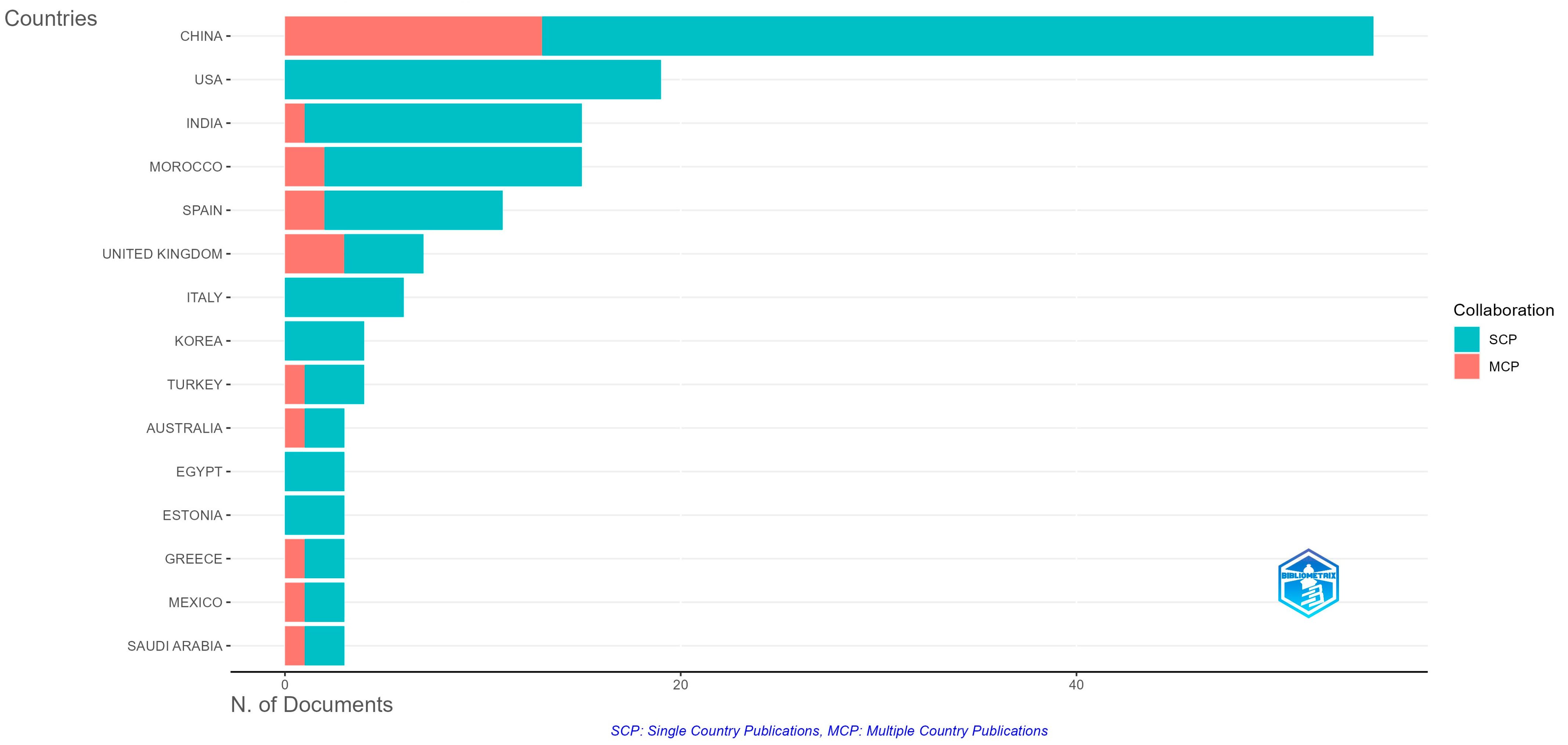

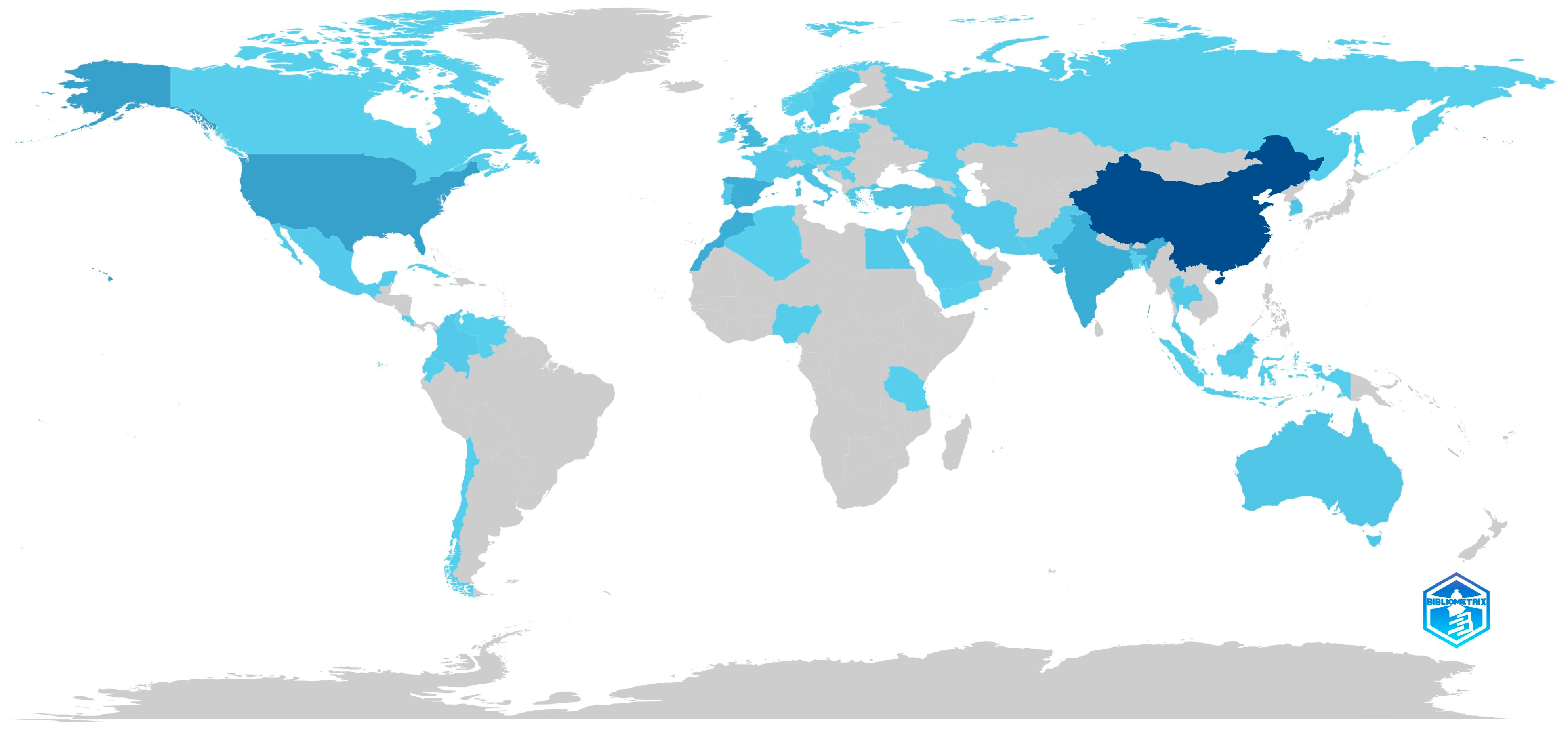

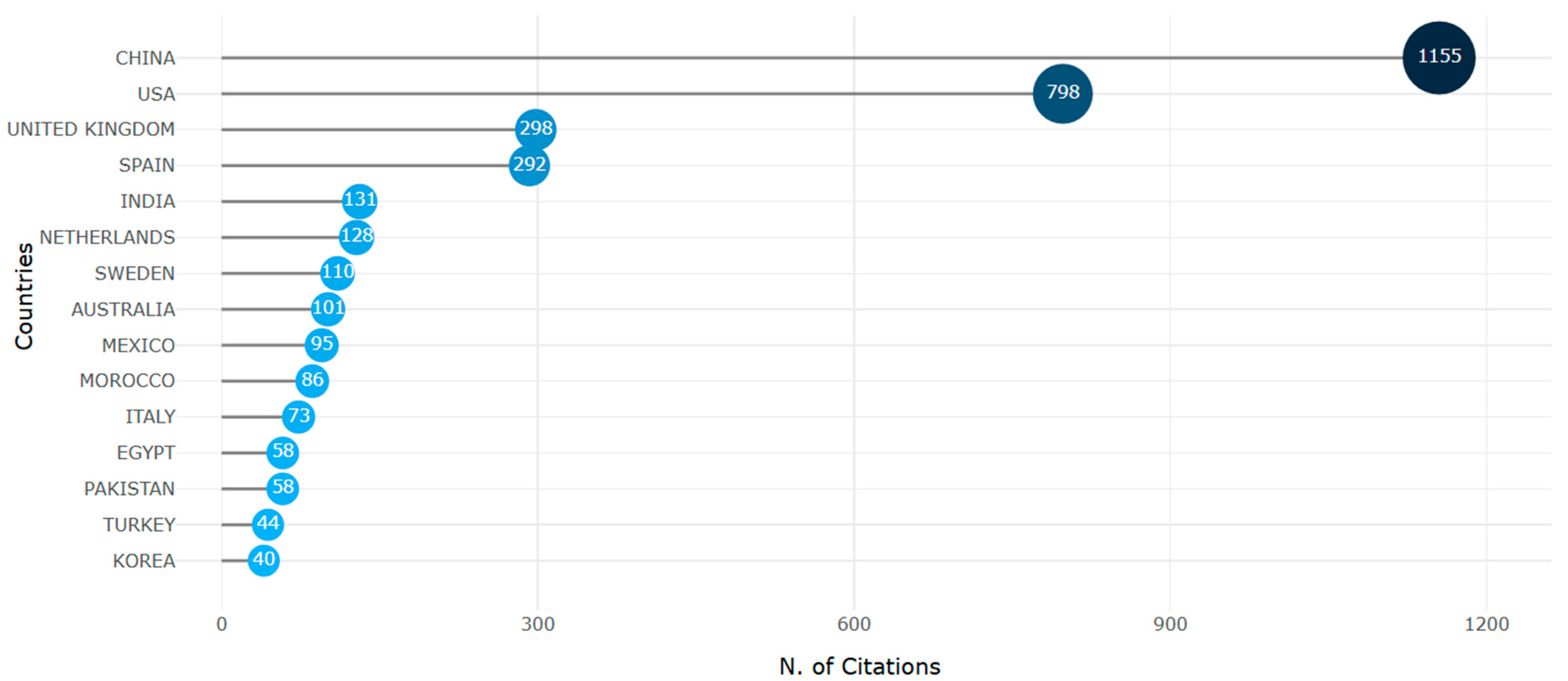

3.3. Authors

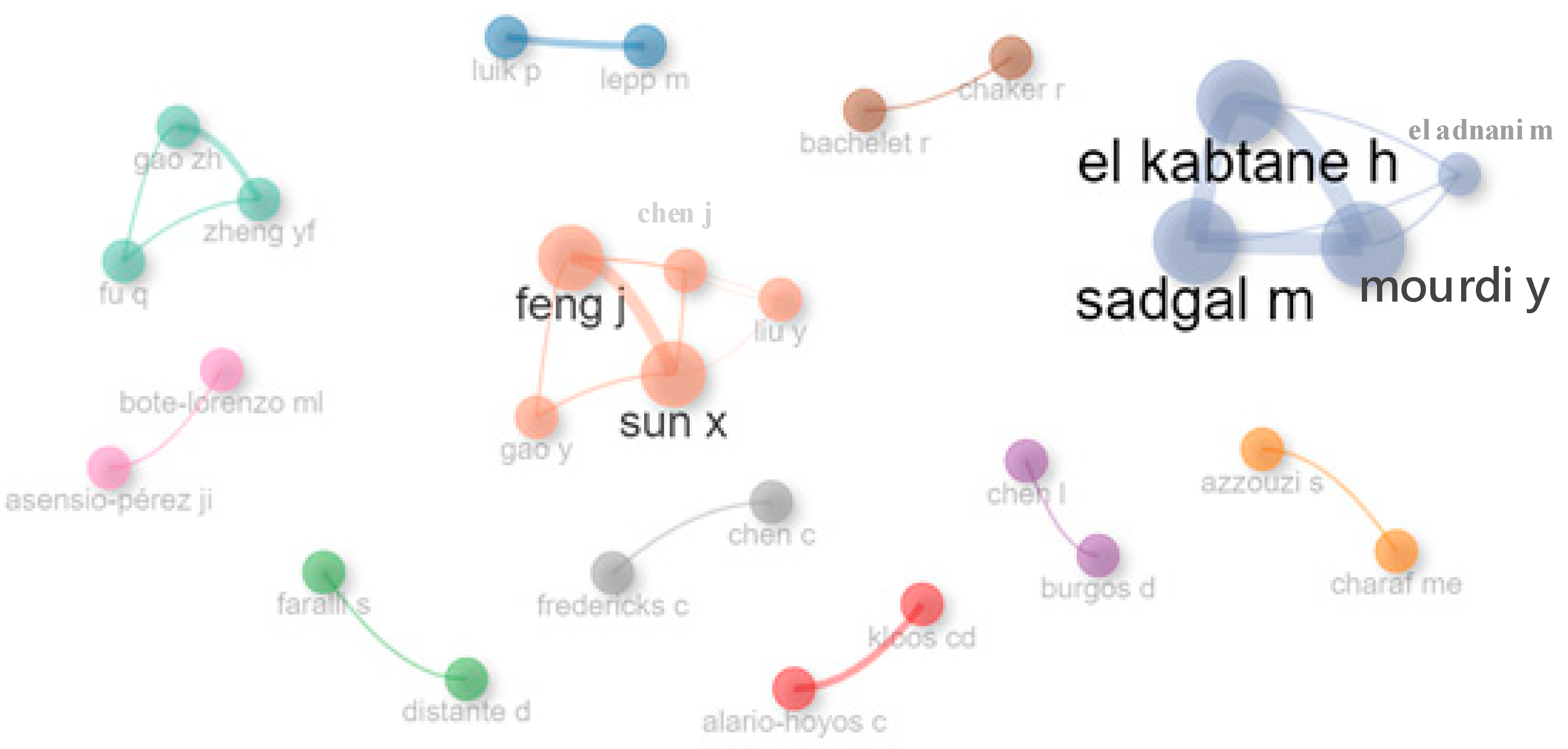

- Cluster #1 (in red): This consists of Alario-Hoyos C and Kloos CD. These two researchers have focused on analyzing how self-regulated learning strategies (SRLs), particularly event-driven and self-reported SRLs, can be integrated into predictive models for self-paced MOOCs [90]. The authors have also investigated the relationships between SRLs and the information obtained from MOOC learners [91].

- Cluster #2 (in blue): This consists of Lepp M and Luik P. They investigated performance metrics recorded before dropout and identified the periods with the highest dropout rates in MOOCs dedicated to computer programming [92]. Using non-parametric tests and descriptive statistics, they also analyzed assessment performance among learners who did not complete the courses, learners who completed them, and completers grouped by their involvement or the difficulty they encountered [93].

- Cluster #6 (in brown): This cluster includes Bachelet R and Chaker R. They employed structural equation modeling and path analysis to investigate causal links between theoretical self-experience, MOOC learning outcomes, and social intentions [100].

- Cluster #10 (in orange): This is the largest cluster, consisting of Feng J, Sun X, Liu Y, Chen J, and Gao Y. The authors focused on developing a new algorithm that combines extreme learning machines and decision trees for more accurate dropout prediction [107], creating a hybrid neural network for identifying forum posts that need immediate teacher attention [108], and developing a parallel neural network for classifying sentiment in MOOC discussion forums [109].

- Cluster #11 (in navy blue): This is the second largest cluster, made up of El Kabtane H, Mourdi Y, Sadgal M, and El Adnani M. Their research covered predicting learner behavior leading to dropout [27,110], creating individual learner behavior profiles throughout courses [25], and incorporating online manipulations into MOOCs [28].
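How such collaboration clusters can arise from co-authorship data is sketched below in Python. This is a simplified illustration, not the clustering procedure applied by Biblioshiny in this study: it assumes that two authors are linked when they share at least two papers, and it reports connected components of the resulting graph. The author lists are taken from reference entries [27,28,92,93,107–109]; `coauthor_clusters` and `min_joint_papers` are hypothetical names. Because only seven papers are used and name variants are not merged, the output should not be expected to reproduce the clusters in the figure.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Author lists copied from reference entries [92], [93], [107], [108], [109],
# [27] and [28]; name variants (e.g. "Guo SX" vs. "Guo S") are left unmerged.
PAPERS = [
    ["Rõõm M", "Lepp M", "Luik P"],
    ["Feklistova L", "Lepp M", "Luik P"],
    ["Chen J", "Feng J", "Sun X", "Wu N", "Yang Z", "Chen S"],
    ["Guo SX", "Sun X", "Wang SX", "Gao Y", "Feng J"],
    ["Gao Y", "Sun X", "Wang X", "Guo S", "Feng J"],
    ["Mourdi Y", "Sadgal M", "El Kabtane H", "Berrada Fathi W"],
    ["El Kabtane H", "El Adnani M", "Sadgal M", "Mourdi Y"],
]


def coauthor_clusters(papers, min_joint_papers=2):
    """Group authors linked by at least `min_joint_papers` shared papers."""
    # Count how many papers each pair of authors has written together.
    pair_counts = Counter()
    for authors in papers:
        for a, b in combinations(sorted(set(authors)), 2):
            pair_counts[(a, b)] += 1

    # Keep only sufficiently strong links as undirected edges.
    graph = defaultdict(set)
    for (a, b), n in pair_counts.items():
        if n >= min_joint_papers:
            graph[a].add(b)
            graph[b].add(a)

    # Collect connected components with an iterative depth-first search.
    seen, clusters = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(graph[node] - component)
        seen |= component
        clusters.append(sorted(component))
    return sorted(clusters, key=len, reverse=True)


clusters = coauthor_clusters(PAPERS)
for members in clusters:
    print(members)
```

Raising or lowering `min_joint_papers` changes how readily occasional co-authors are pulled into a cluster, which is one reason different tools and settings can yield different groupings from the same dataset.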

3.4. Analysis of the Literature

3.4.1. Top 10 Most Cited Papers—Overview

3.4.2. Top 10 Most Cited Papers—Review

3.4.3. Words Analysis

3.5. Mixed Analysis

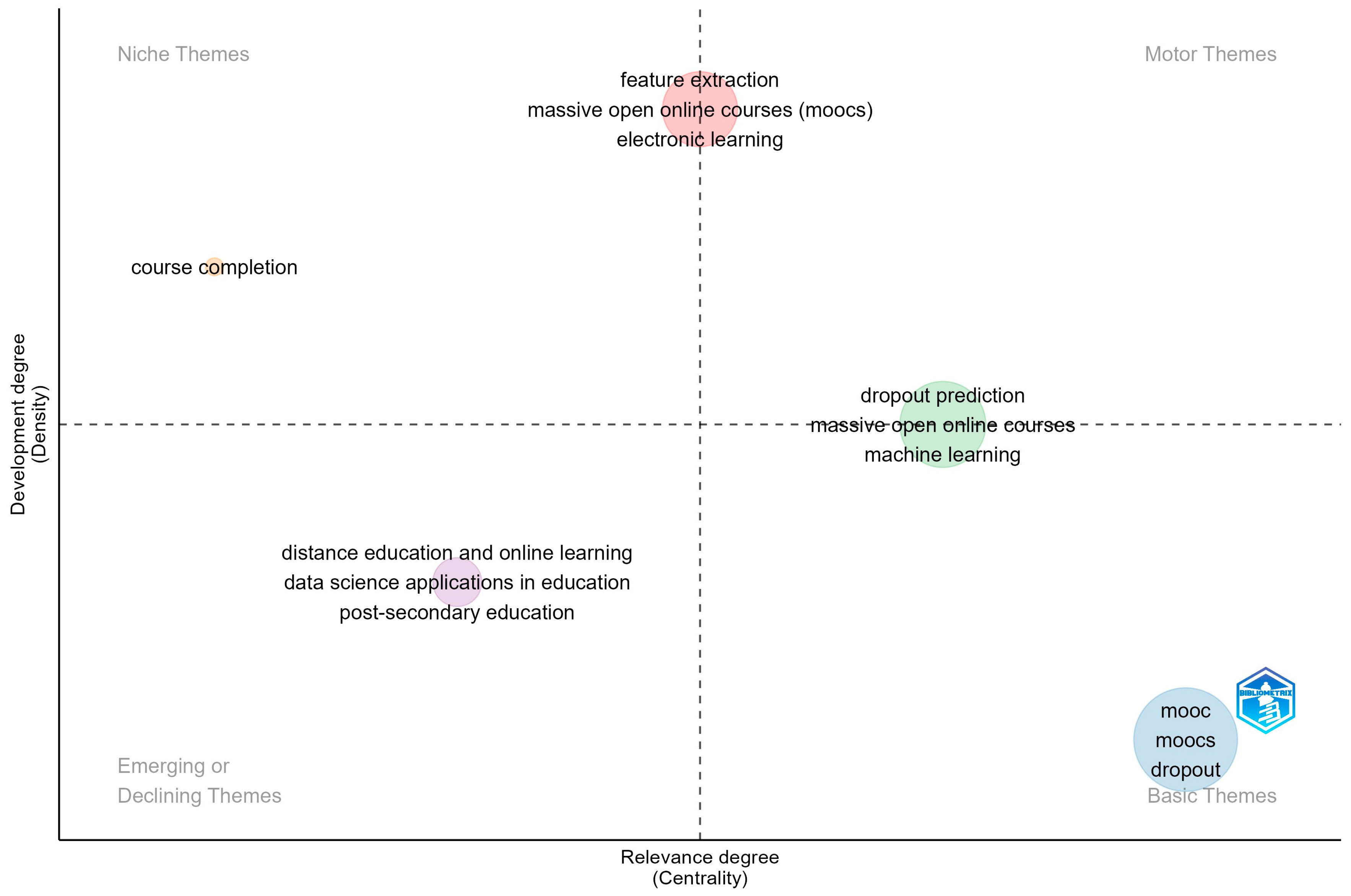

3.5.1. Thematic Map

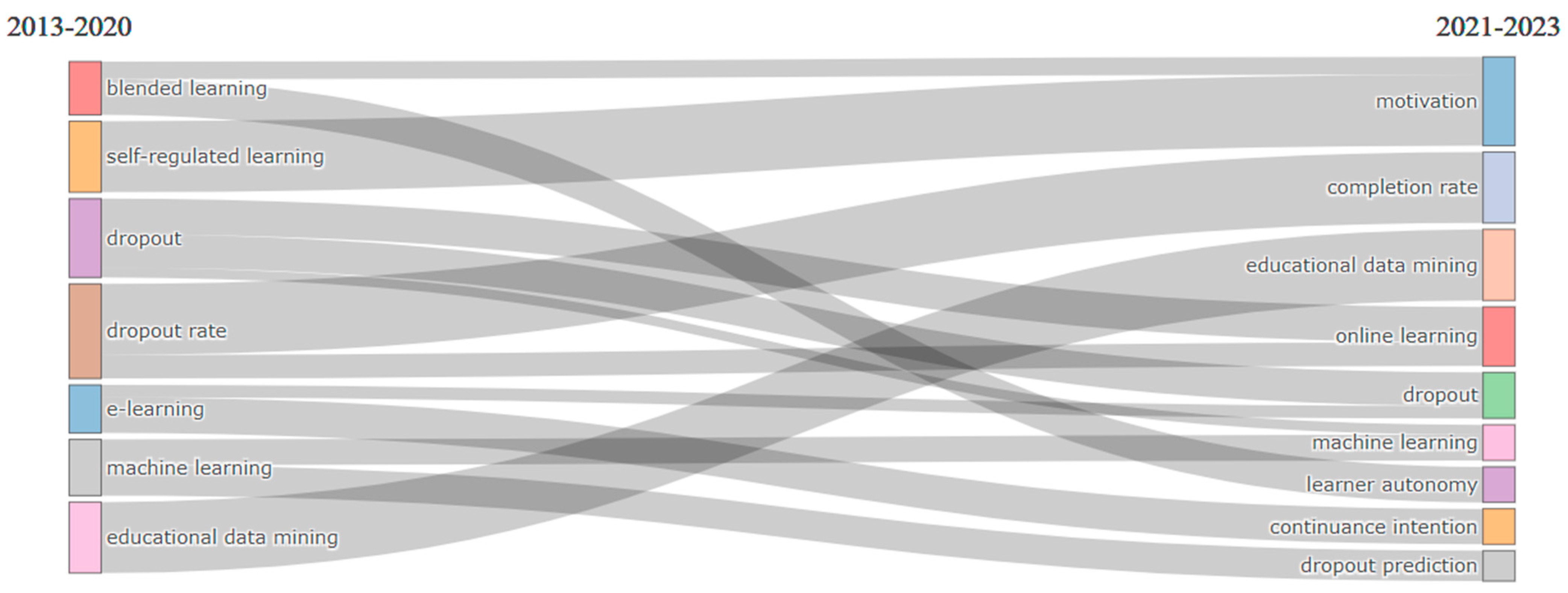

3.5.2. Thematic Map Evolution

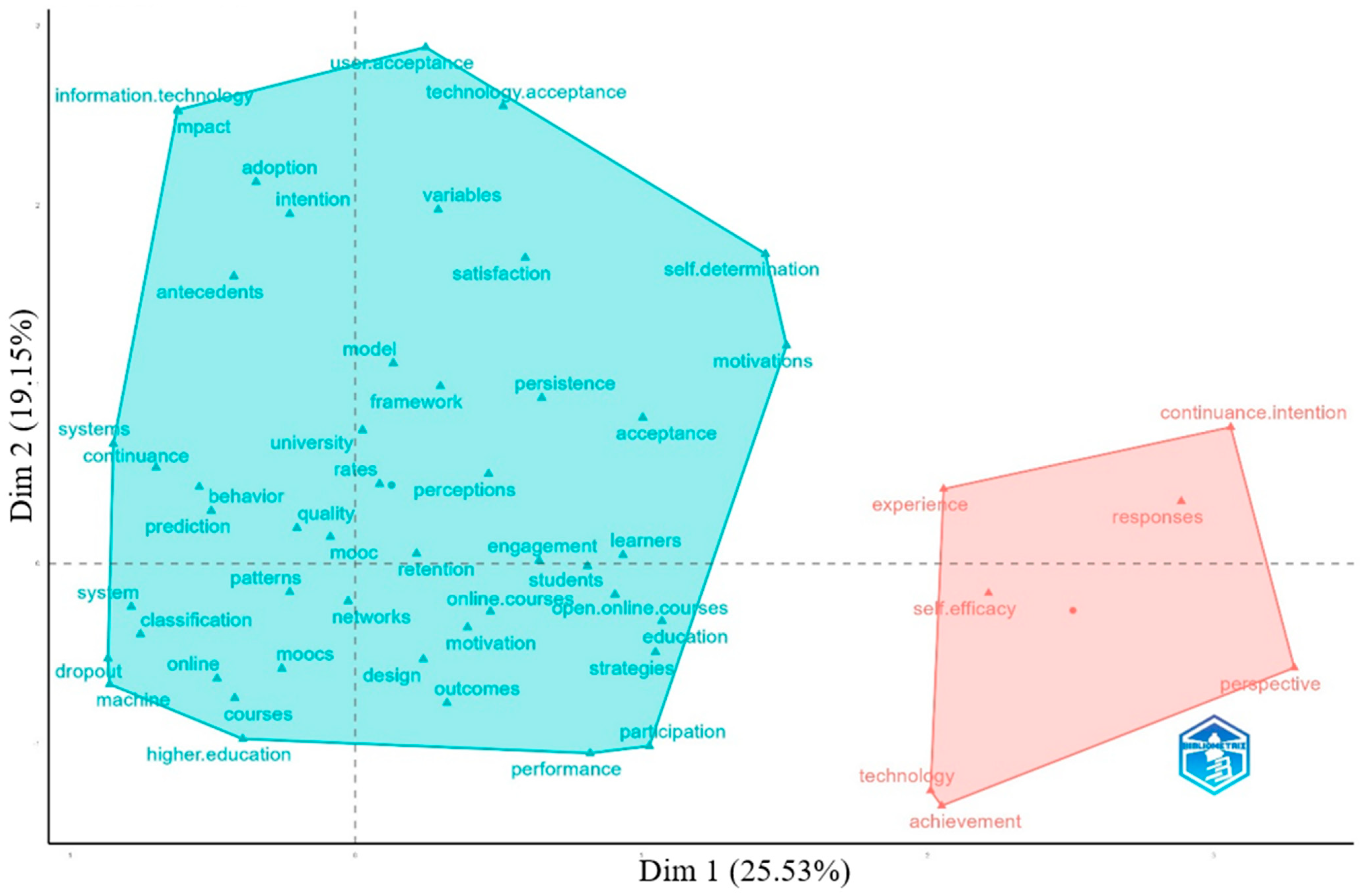

3.5.3. Factorial Analysis

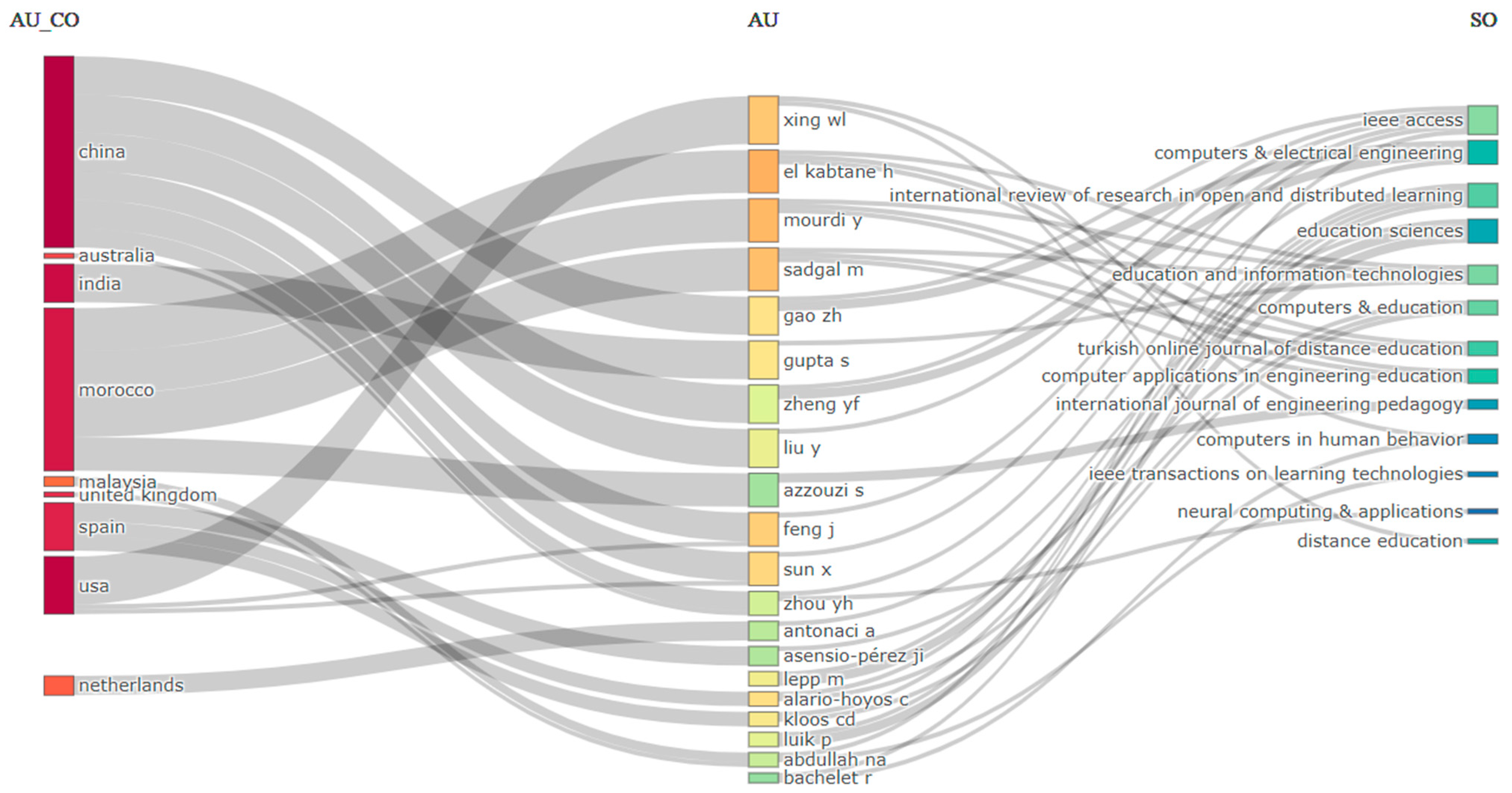

3.5.4. Three-Fields Plot

4. Conclusions and Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Li, S.; Zhao, Y.; Guo, L.; Ren, M.; Li, J.; Zhang, L.; Li, K. Quantification and Prediction of Engagement: Applied to Personalized Course Recommendation to Reduce Dropout in MOOCs. Inf. Process. Manag. 2024, 61, 103536.

- Galikyan, I.; Admiraal, W.; Kester, L. MOOC Discussion Forums: The Interplay of the Cognitive and the Social. Comput. Educ. 2021, 165, 104133.

- Knox, J. Massive Open Online Courses (MOOCs). In Encyclopedia of Educational Philosophy and Theory; Peters, M.A., Ed.; Springer: Singapore, 2017; pp. 1372–1378. ISBN 978-981-287-588-4.

- Margaryan, A.; Bianco, M.; Littlejohn, A. Instructional Quality of Massive Open Online Courses (MOOCs). Comput. Educ. 2015, 80, 77–83.

- Clark, R.; Marks, L. MOOCs and Medical Education: Hope or Hype? MedEdPublish 2020, 9, 124.

- da Silva, J.M.C.; Pedroso, G.H.; Veber, A.B.; Maruyama, Ú.G.R. Learner Engagement and Demographic Influences in Brazilian Massive Open Online Courses: Aprenda Mais Platform Case Study. Analytics 2024, 3, 178–193.

- Huang, S.; Cheng, H.; Luo, M. Comparative Study on Barriers of Supply Chain Management MOOCs in China: Online Review Analysis with a Novel TOPSIS-CoCoSo Approach. J. Theor. Appl. Electron. Commer. Res. 2024, 19, 1793–1811.

- Kaplan, A.M.; Haenlein, M. Higher Education and the Digital Revolution: About MOOCs, SPOCs, Social Media, and the Cookie Monster. Bus. Horiz. 2016, 59, 441–450.

- Gong, Z. The Development of Medical MOOCs in China: Current Situation and Challenges. Med. Educ. Online 2018, 23, 1527624.

- Kumar, G. Massive Open Online Courses’ (MOOCS’) Role in Promoting Educational Equity and SDG 4. Int. Educ. Sci. Res. J. 2024, 10, 18–23.

- Harting, K.; Erthal, M.J. History of Distance Learning. Inf. Technol. Learn. Perform. J. 2005, 23, 35–44.

- Kovanovic, V.; Joksimovic, S.; Gasevic, D.; Siemens, G.; Hatala, M. What Public Media Reveals about MOOCs: A Systematic Analysis of News Reports. Br. J. Educ. Technol. 2015, 46, 510–527.

- Crosslin, M.; et al. Chapter 1: Overview of Online Courses. In Creating Online Learning Experiences; Mavs Open Press: Arlington, TX, USA, 2018.

- Xiong, Y.; Ling, Q.; Li, X. Ubiquitous E-Teaching and e-Learning: China’s Massive Adoption of Online Education and Launching MOOCs Internationally during the COVID-19 Outbreak. Wirel. Commun. Mob. Comput. 2021, 2021, 6358976.

- Alamri, M.M. Investigating Students’ Adoption of MOOCs during COVID-19 Pandemic: Students’ Academic Self-Efficacy, Learning Engagement, and Learning Persistence. Sustainability 2022, 14, 714.

- Chen, C.; Sonnert, G.; Sadler, P.M.; Sasselov, D.D.; Fredericks, C.; Malan, D.J. Going over the Cliff: MOOC Dropout Behavior at Chapter Transition. Distance Educ. 2020, 41, 6–25.

- Jin, C. MOOC Student Dropout Prediction Model Based on Learning Behavior Features and Parameter Optimization. Interact. Learn. Environ. 2023, 31, 714–732.

- Deng, R.; Benckendorff, P.; Gannaway, D. Learner Engagement in MOOCs: Scale Development and Validation. Br. J. Educ. Technol. 2020, 51, 245–262.

- Almatrafi, O.; Johri, A.; Rangwala, H. Needle in a Haystack: Identifying Learner Posts That Require Urgent Response in MOOC Discussion Forums. Comput. Educ. 2018, 118, 1–9.

- Vilkova, K.; Shcheglova, I. Deconstructing Self-Regulated Learning in MOOCs: In Search of Help-Seeking Mechanisms. Educ. Inf. Technol. 2021, 26, 17–33.

- Narayanasamy, S.K.; Elçi, A. An Effective Prediction Model for Online Course Dropout Rate. Int. J. Distance Educ. Technol. (IJDET) 2020, 18, 94–110.

- Huang, H.; Jew, L.; Qi, D. Take a MOOC and Then Drop: A Systematic Review of MOOC Engagement Pattern and Dropout Factor. Heliyon 2023, 9, e15220.

- Celik, B.; Cagiltay, K. Did You Act According to Your Intention? An Analysis and Exploration of Intention–Behavior Gap in MOOCs. Educ. Inf. Technol. 2024, 29, 1733–1760.

- Aldowah, H.; Al-Samarraie, H.; Alzahrani, A.I.; Alalwan, N. Factors Affecting Student Dropout in MOOCs: A Cause and Effect Decision-making Model. J. Comput. High. Educ. 2020, 32, 429–454.

- Mourdi, Y.; Sadgal, M.; Elalaoui Elabdallaoui, H.; El Kabtane, H.; Allioui, H. A Recurrent Neural Networks Based Framework for At-Risk Learners’ Early Prediction and MOOC Tutor’s Decision Support. Comput. Appl. Eng. Educ. 2023, 31, 270–284.

- El Kabtane, H.; El Adnani, M.; Sadgal, M.; Mourdi, Y. Virtual Reality and Augmented Reality at the Service of Increasing Interactivity in MOOCs. Educ. Inf. Technol. 2020, 25, 2871–2897.

- Mourdi, Y.; Sadgal, M.; El Kabtane, H.; Berrada Fathi, W. A Machine Learning-Based Methodology to Predict Learners’ Dropout, Success or Failure in MOOCs. Int. J. Web Inf. Syst. 2019, 15, 489–509.

- El Kabtane, H.; El Adnani, M.; Sadgal, M.; Mourdi, Y. Augmented Reality-Based Approach for Interactivity in MOOCs. Int. J. Web Inf. Syst. 2018, 15, 134–154.

- Zhu, M.; Sari, A.R.; Lee, M.M. A Comprehensive Systematic Review of MOOC Research: Research Techniques, Topics, and Trends from 2009 to 2019. Educ. Technol. Res. Dev. 2020, 68, 1685–1710.

- Zhu, M.; Sari, A.; Lee, M. A Systematic Review of Research Methods and Topics of the Empirical MOOC Literature (2014–2016). Internet High. Educ. 2018, 37, 31–39.

- Doulani, A. A Bibliometric Analysis and Science Mapping of Scientific Publications of Alzahra University during 1986–2019. Libr. Hi Tech 2020, 39, 915–935.

- Khan, M.A.; Pattnaik, D.; Ashraf, R.; Ali, I.; Kumar, S.; Donthu, N. Value of Special Issues in the Journal of Business Research: A Bibliometric Analysis. J. Bus. Res. 2021, 125, 295–313.

- Donthu, N.; Kumar, S.; Pattnaik, D.; Lim, W.M. A Bibliometric Retrospection of Marketing from the Lens of Psychology: Insights from Psychology & Marketing. Psychol. Mark. 2021, 38, 834–865.

- Sandu, A.; Cotfas, L.-A.; Stănescu, A.; Delcea, C. Guiding Urban Decision-Making: A Study on Recommender Systems in Smart Cities. Electronics 2024, 13, 2151.

- Sandu, A.; Cotfas, L.-A.; Delcea, C.; Ioanăș, C.; Florescu, M.-S.; Orzan, M. Machine Learning and Deep Learning Applications in Disinformation Detection: A Bibliometric Assessment. Electronics 2024, 13, 4352.

- Domenteanu, A.; Delcea, C.; Florescu, M.-S.; Gherai, D.S.; Bugnar, N.; Cotfas, L.-A. United in Green: A Bibliometric Exploration of Renewable Energy Communities. Electronics 2024, 13, 3312.

- Donthu, N.; Kumar, S.; Lim, W.M. Research Constituents, Intellectual Structure, and Collaboration Patterns in Journal of International Marketing: An Analytical Retrospective. J. Int. Mark. 2021, 29, 1–25.

- Verma, S.; Gustafsson, A. Investigating the Emerging COVID-19 Research Trends in the Field of Business and Management: A Bibliometric Analysis Approach. J. Bus. Res. 2020, 118, 253–261.

- Delcea, C.; Oprea, S.-V.; Dima, A.M.; Domenteanu, A.; Bara, A.; Cotfas, L.-A. Energy Communities: Insights from Scientific Publications. Oeconomia Copernic. 2024, 15, 1101–1155.

- Domenteanu, A.; Cotfas, L.-A.; Diaconu, P.; Tudor, G.-A.; Delcea, C. AI on Wheels: Bibliometric Approach to Mapping of Research on Machine Learning and Deep Learning in Electric Vehicles. Electronics 2025, 14, 378.

- Herther, N.K. Research Evaluation and Citation Analysis: Key Issues and Implications. Electron. Libr. 2009, 27, 361–375.

- Passas, I. Bibliometric Analysis: The Main Steps. Encyclopedia 2024, 4, 1014–1025.

- Zong, B.; Sun, Y.; Li, L. Advances, Hotspots, and Trends in Outdoor Education Research: A Bibliometric Analysis. Sustainability 2024, 16, 10034.

- Hallinger, P.; Jayaseelan, S.; Speece, M.W. The Evolution of Educating for Sustainable Development in East Asia: A Bibliometric Review, 1991–2023. Sustainability 2024, 16, 8900.

- Basheer, N.; Ahmed, V.; Bahroun, Z.; Anane, C. Exploring Sustainability Assessment Practices in Higher Education: A Comprehensive Review through Content and Bibliometric Analyses. Sustainability 2024, 16, 5799.

- Dönmez, İ. Sustainability in Educational Research: Mapping the Field with a Bibliometric Analysis. Sustainability 2024, 16, 5541.

- Alghamdi, S.; Soh, B.; Li, A. A Comprehensive Review of Dropout Prediction Methods Based on Multivariate Analysed Features of MOOC Platforms. Multimodal Technol. Interact. 2025, 9, 3.

- Alsuhaimi, R.; Almatrafi, O. Identifying Learners’ Confusion in a MOOC Forum Across Domains Using Explainable Deep Transfer Learning. Information 2024, 15, 681.

- Luo, Z.; Li, H. The Involvement of Academic and Emotional Support for Sustainable Use of MOOCs. Behav. Sci. 2024, 14, 461.

- Swacha, J.; Muszyńska, K. Predicting Dropout in Programming MOOCs through Demographic Insights. Electronics 2023, 12, 4674.

- Donthu, N.; Kumar, S.; Mukherjee, D.; Pandey, N.; Lim, W.M. How to Conduct a Bibliometric Analysis: An Overview and Guidelines. J. Bus. Res. 2021, 133, 285–296.

- Crețu, R.F.; Țuțui, D.; Banța, V.C.; Șerban, E.C.; Barna, L.E.L.; Crețu, R.C. The Effects of the Implementation of Artificial Intelligence-Based Technologies on the Skills Needed in the Automotive Industry—A Bibliometric Analysis. Amfiteatru Econ. 2024, 3, 658–673.

- Moreno-Guerrero, A.-J.; López-Belmonte, J.; Marín-Marín, J.-A.; Soler-Costa, R. Scientific Development of Educational Artificial Intelligence in Web of Science. Future Internet 2020, 12, 124.

- Yu, J.; Muñoz-Justicia, J. A Bibliometric Overview of Twitter-Related Studies Indexed in Web of Science. Future Internet 2020, 12, 91.

- Berniak-Woźny, J.; Szelągowski, M. A Comprehensive Bibliometric Analysis of Business Process Management and Knowledge Management Integration: Bridging the Scholarly Gap. Information 2024, 15, 436.

- Ravšelj, D.; Umek, L.; Todorovski, L.; Aristovnik, A. A Review of Digital Era Governance Research in the First Two Decades: A Bibliometric Study. Future Internet 2022, 14, 126.

- Fatma, N.; Haleem, A. Exploring the Nexus of Eco-Innovation and Sustainable Development: A Bibliometric Review and Analysis. Sustainability 2023, 15, 12281.

- Stefanis, C.; Giorgi, E.; Tselemponis, G.; Voidarou, C.; Skoufos, I.; Tzora, A.; Tsigalou, C.; Kourkoutas, Y.; Constantinidis, T.C.; Bezirtzoglou, E. Terroir in View of Bibliometrics. Stats 2023, 6, 956–979.

- Marín-Rodríguez, N.J.; González-Ruiz, J.D.; Valencia-Arias, A. Incorporating Green Bonds into Portfolio Investments: Recent Trends and Further Research. Sustainability 2023, 15, 14897.

- Anaç, M.; Gumusburun Ayalp, G.; Erdayandi, K. Prefabricated Construction Risks: A Holistic Exploration through Advanced Bibliometric Tool and Content Analysis. Sustainability 2023, 15, 11916.

- Cibu, B.; Delcea, C.; Domenteanu, A.; Dumitrescu, G. Mapping the Evolution of Cybernetics: A Bibliometric Perspective. Computers 2023, 12, 237.

- Modak, N.M.; Merigó, J.M.; Weber, R.; Manzor, F.; Ortúzar, J.d.D. Fifty Years of Transportation Research Journals: A Bibliometric Overview. Transp. Res. Part A Policy Pract. 2019, 120, 188–223.

- Profiroiu, C.M.; Cibu, B.; Delcea, C.; Cotfas, L.-A. Charting the Course of School Dropout Research: A Bibliometric Exploration. IEEE Access 2024, 12, 71453–71478.

- Tătaru, G.-C.; Domenteanu, A.; Delcea, C.; Florescu, M.S.; Orzan, M.; Cotfas, L.-A. Navigating the Disinformation Maze: A Bibliometric Analysis of Scholarly Efforts. Information 2024, 15, 742.

- Ciucu-Durnoi, A.N.; Delcea, C.; Stănescu, A.; Teodorescu, C.A.; Vargas, V.M. Beyond Industry 4.0: Tracing the Path to Industry 5.0 through Bibliometric Analysis. Sustainability 2024, 16, 5251.

- Mulet-Forteza, C.; Martorell-Cunill, O.; Merigó, J.M.; Genovart-Balaguer, J.; Mauleon-Mendez, E. Twenty Five Years of the Journal of Travel & Tourism Marketing: A Bibliometric Ranking. J. Travel Tour. Mark. 2018, 35, 1201–1221.

- Bakır, M.; Özdemir, E.; Akan, Ş.; Atalık, Ö. A Bibliometric Analysis of Airport Service Quality. J. Air Transp. Manag. 2022, 104, 102273.

- Using VOSviewer as a Bibliometric Mapping or Analysis Tool in Business, Management & Accounting. Available online: https://library.smu.edu.sg/topics-insights/using-vosviewer-bibliometric-mapping-or-analysis-tool-business-management (accessed on 28 July 2024).

- Aria, M.; Cuccurullo, C. Bibliometrix: An R-Tool for Comprehensive Science Mapping Analysis. J. Informetr. 2017, 11, 959–975.

- Liu, F. Retrieval Strategy and Possible Explanations for the Abnormal Growth of Research Publications: Re-Evaluating a Bibliometric Analysis of Climate Change. Scientometrics 2023, 128, 853–859.

- Liu, W. The Data Source of This Study Is Web of Science Core Collection? Not Enough. Scientometrics 2019, 121, 1815–1824.

- Billsberry, J.; Alony, I. The MOOC Post-Mortem: Bibliometric and Systematic Analyses of Research on Massive Open Online Courses (MOOCs), 2009 to 2022. J. Manag. Educ. 2024, 48, 634–670.

- Kurulgan, M. A Bibliometric Analysis of Research on Dropout in Open and Distance Learning. Turk. Online J. Distance Educ. 2024, 25, 200–229.

- Raman, A.; Thannimalai, R.; Don, Y.; Rathakrishnan, M. A Bibliometric Analysis of Blended Learning in Higher Education: Perception, Achievement and Engagement. Int. J. Learn. Teach. Educ. Res. 2021, 20, 126–151.

- Irwanto, I.; Wahyudiati, D.; Saputro, A.; Lukman, I.R. Massive Open Online Courses (MOOCs) in Higher Education: A Bibliometric Analysis (2012–2022). Int. J. Inf. Educ. Technol. 2023, 13, 223–231.

- Alazaiza, M.Y.D.; Alzghoul, T.M.; Al Maskari, T.; Amr, S.A.; Nassani, D.E. Analyzing the Evolution of Research on Student Awareness of Solid Waste Management in Higher Education Institutions: A Bibliometric Perspective. Sustainability 2024, 16, 5422.

- Rojas-Sánchez, M.A.; Palos-Sánchez, P.R.; Folgado-Fernández, J.A. Systematic Literature Review and Bibliometric Analysis on Virtual Reality and Education. Educ. Inf. Technol. 2023, 28, 155–192.

- Donner, P. Document Type Assignment Accuracy in the Journal Citation Index Data of Web of Science. Scientometrics 2017, 113, 219–236.

- Cretu, D.M.; Morandau, F. Initial Teacher Education for Inclusive Education: A Bibliometric Analysis of Educational Research. Sustainability 2020, 12, 4923.

- Swacha, J. State of Research on Gamification in Education: A Bibliometric Survey. Educ. Sci. 2021, 11, 69.

- Desai, N.; Veras, L.; Gosain, A. Using Bradford’s Law of Scattering to Identify the Core Journals of Pediatric Surgery. J. Surg. Res. 2018, 229, 90–95.

- Hjørland, B.; Nicolaisen, J. Bradford’s Law of Scattering: Ambiguities in the Concept of “Subject”. In Information Context: Nature, Impact, and Role, Proceedings of the 5th International Conference on Conceptions of Library and Information Sciences, CoLIS 2005, Glasgow, UK, 4–8 June 2005; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3507, p. 106. ISBN 978-3-540-26178-0.

- Ireland, T.; MacDonald, K.; Stirling, P. The H-Index: What Is It, How Do We Determine It, and How Can We Keep Up With It? In Science and the Internet; Düsseldorf University Press: Düsseldorf, Germany, 2012.

- Shah, D. By The Numbers: MOOCs in 2019; The Report by Class Central; Class Central: Santa Clara, CA, USA, 2019.

- Impey, C.; Formanek, M. MOOCS and 100 Days of COVID: Enrollment Surges in Massive Open Online Astronomy Classes during the Coronavirus Pandemic. Soc. Sci. Humanit. Open 2021, 4, 100177.

- Mayr, P. Relevance Distributions across Bradford Zones: Can Bradfordizing Improve Search? In Proceedings of the ISSI 2013—14th International Society of Scientometrics and Informetrics Conference, Vienna, Austria, 15–19 July 2013; Volume 2.

- Venable, G.; Shepherd, B.; Loftis, C.; McClatchy, S.; Roberts, M.; Fillinger, M.; Tansey, B.; Klimo, P. Bradford’s Law: Identification of the Core Journals for Neurosurgery and Its Subspecialties. J. Neurosurg. 2015, 124, 569–579.

- Tan, X.; Tasir, Z. A Systematic Review on Massive Open Online Courses in China from 2019 to 2023. Int. J. Acad. Res. Progress. Educ. Dev. 2024, 13, 160–182.

- Ma, R.; Mendez, M.C.; Bowden, P. Massive List of Chinese Online Course Platforms in 2025. The Report by Class Central. 2025. Available online: https://www.classcentral.com/report/chinese-mooc-platforms/ (accessed on 15 February 2025).

- Moreno-Marcos, P.M.; Muñoz-Merino, P.J.; Maldonado-Mahauad, J.; Pérez-Sanagustín, M.; Alario-Hoyos, C.; Delgado Kloos, C. Temporal Analysis for Dropout Prediction Using Self-Regulated Learning Strategies in Self-Paced MOOCs. Comput. Educ. 2020, 145, 103728.

- Alonso-Mencía, M.E.; Alario-Hoyos, C.; Estévez-Ayres, I.; Kloos, C.D. Analysing Self-Regulated Learning Strategies of MOOC Learners through Self-Reported Data. Australas. J. Educ. Technol. 2021, 37, 56–70.

- Rõõm, M.; Lepp, M.; Luik, P. Dropout Time and Learners’ Performance in Computer Programming MOOCs. Educ. Sci. 2021, 11, 643.

- Feklistova, L.; Lepp, M.; Luik, P. Learners’ Performance in a MOOC on Programming. Educ. Sci. 2021, 11, 521.

- Prenkaj, B.; Distante, D.; Faralli, S.; Velardi, P. Hidden Space Deep Sequential Risk Prediction on Student Trajectories. Future Gener. Comput. Syst. 2021, 125, 532–543.

- Prenkaj, B.; Velardi, P.; Stilo, G.; Distante, D.; Faralli, S. A Survey of Machine Learning Approaches for Student Dropout Prediction in Online Courses. ACM Comput. Surv. 2020, 53, 1–34.

- Zheng, Q.; Chen, L.; Burgos, D. Emergence and Development of MOOCs. In The Development of MOOCs in China; Zheng, Q., Chen, L., Burgos, D., Eds.; Springer: Singapore, 2018; pp. 11–24. ISBN 978-981-10-6586-6.

- Zheng, Q.; Chen, L.; Burgos, D. Learning Support of MOOCs in China. In The Development of MOOCs in China; Zheng, Q., Chen, L., Burgos, D., Eds.; Springer: Singapore, 2018; pp. 229–244. ISBN 978-981-10-6586-6.

- Smaili, E.M.; Daoudi, M.; Oumaira, I.; Azzouzi, S.; Charaf, M.E.H. Towards an Adaptive Learning Model Using Optimal Learning Paths to Prevent MOOC Dropout. Int. J. Eng. Pedagog. (IJEP) 2023, 13, 128–144.

- Sraidi, S.; Smaili, E.M.; Azzouzi, S.; Charaf, M.E.H. A Neural Network-Based System to Predict Early MOOC Dropout. Int. J. Eng. Pedagog. (IJEP) 2022, 12, 86–101.

- Chaker, R.; Bouchet, F.; Bachelet, R. How Do Online Learning Intentions Lead to Learning Outcomes? The Mediating Effect of the Autotelic Dimension of Flow in a MOOC. Comput. Hum. Behav. 2022, 134, 107306.

- Ortega-Arranz, A.; Er, E.; Martínez-Monés, A.; Bote-Lorenzo, M.L.; Asensio-Pérez, J.I.; Muñoz-Cristóbal, J.A. Understanding Student Behavior and Perceptions toward Earning Badges in a Gamified MOOC. Univers. Access Inf. Soc. 2019, 18, 533–549.

- Ortega-Arranz, A.; Bote-Lorenzo, M.L.; Asensio-Pérez, J.I.; Martínez-Monés, A.; Gómez-Sánchez, E.; Dimitriadis, Y. To Reward and beyond: Analyzing the Effect of Reward-Based Strategies in a MOOC. Comput. Educ. 2019, 142, 103639.

- Chen, C.; Sonnert, G.; Sadler, P.M.; Sasselov, D.; Fredericks, C. The Impact of Student Misconceptions on Student Persistence in a MOOC. J. Res. Sci. Teach. 2020, 57, 879–910.

- Zheng, Y.; Gao, Z.; Wang, Y.; Fu, Q. MOOC Dropout Prediction Using FWTS-CNN Model Based on Fused Feature Weighting and Time Series. IEEE Access 2020, 8, 225324–225335.

- Fu, Q.; Gao, Z.; Zhou, J.; Zheng, Y. CLSA: A Novel Deep Learning Model for MOOC Dropout Prediction. Comput. Electr. Eng. 2021, 94, 107315.

- Zheng, Y.; Shao, Z.; Deng, M.; Gao, Z.; Fu, Q. MOOC Dropout Prediction Using a Fusion Deep Model Based on Behaviour Features. Comput. Electr. Eng. 2022, 104, 108409.

- Chen, J.; Feng, J.; Sun, X.; Wu, N.; Yang, Z.; Chen, S. MOOC Dropout Prediction Using a Hybrid Algorithm Based on Decision Tree and Extreme Learning Machine. Math. Probl. Eng. 2019, 2019, 8404653.

- Guo, S.X.; Sun, X.; Wang, S.X.; Gao, Y.; Feng, J. Attention-Based Character-Word Hybrid Neural Networks with Semantic and Structural Information for Identifying of Urgent Posts in MOOC Discussion Forums. IEEE Access 2019, 7, 120522–120532.

- Gao, Y.; Sun, X.; Wang, X.; Guo, S.; Feng, J. A Parallel Neural Network Structure for Sentiment Classification of MOOCs Discussion Forums. J. Intell. Fuzzy Syst. 2020, 38, 4915–4927.

- Youssef, M.; Mohammed, S.; Hamada, E.K.; Wafaa, B.F. A Predictive Approach Based on Efficient Feature Selection and Learning Algorithms’ Competition: Case of Learners’ Dropout in MOOCs. Educ. Inf. Technol. 2019, 24, 3591–3618.

- Xing, W.; Chen, X.; Stein, J.; Marcinkowski, M. Temporal Predication of Dropouts in MOOCs: Reaching the Low Hanging Fruit through Stacking Generalization. Comput. Hum. Behav. 2016, 58, 119–129.

- Carhuallanqui-Ciocca, E.I.; Echevarría-Quispe, J.Y.; Hernández-Vásquez, A.; Díaz-Ruiz, R.; Azañedo, D. Bibliometric Analysis of the Scientific Production on Inguinal Hernia Surgery in the Web of Science. Front. Surg. 2023, 10, 1138805.

- Dai, H.M.; Teo, T.; Rappa, N.A.; Huang, F. Explaining Chinese University Students’ Continuance Learning Intention in the MOOC Setting: A Modified Expectation Confirmation Model Perspective. Comput. Educ. 2020, 150, 103850.

- Xing, W.; Du, D. Dropout Prediction in MOOCs: Using Deep Learning for Personalized Intervention. J. Educ. Comput. Res. 2019, 57, 547–570.

- Tsai, Y.; Lin, C.; Hong, J.; Tai, K. The Effects of Metacognition on Online Learning Interest and Continuance to Learn with MOOCs. Comput. Educ. 2018, 121, 18–29.

- Henderikx, M.A.; Kreijns, K.; Kalz, M. Refining Success and Dropout in Massive Open Online Courses Based on the Intention–Behavior Gap. Distance Educ. 2017, 38, 353–368.

- Eriksson, T.; Adawi, T.; Stöhr, C. “Time Is the Bottleneck”: A Qualitative Study Exploring Why Learners Drop out of MOOCs. J. Comput. High. Educ. 2017, 29, 133–146.

- Sunar, A.S.; White, S.; Abdullah, N.A.; Davis, H.C. How Learners’ Interactions Sustain Engagement: A MOOC Case Study. IEEE Trans. Learn. Technol. 2017, 10, 475–487.

- Jadrić, M.; Garača, Ž.; Čukušić, M. Student Dropout Analysis with Application of Data Mining Methods. Manag. J. Contemp. Manag. Issues 2010, 15, 31–46.

- Lykourentzou, I.; Giannoukos, I.; Nikolopoulos, V.; Mpardis, G.; Loumos, V. Dropout Prediction in E-Learning Courses through the Combination of Machine Learning Techniques. Comput. Educ. 2009, 53, 950–965.

- Lee, S.; Chung, J.Y. The Machine Learning-Based Dropout Early Warning System for Improving the Performance of Dropout Prediction. Appl. Sci. 2019, 9, 3093.

- Dilaver, I.; Karakullukcu, S.; Gurcan, F.; Topbas, M.; Ursavas, O.F.; Beyhun, N.E. Climate Change and Non-Communicable Diseases: A Bibliometric, Content, and Topic Modeling Analysis. Sustainability 2025, 17, 2394.

- Liu, T.; Wang, Y.; Zhang, L.; Xu, N.; Tang, F. Outdoor Thermal Comfort Research and Its Implications for Landscape Architecture: A Systematic Review. Sustainability 2025, 17, 2330.

- Nica, I.; Chiriță, N.; Georgescu, I. Triple Bottom Line in Sustainable Development: A Comprehensive Bibliometric Analysis. Sustainability 2025, 17, 1932.

- Nica, I. Bibliometric Mapping in the Landscape of Cybernetics: Insights into Global Research Networks. Kybernetes 2024. ahead of print.

- Nasir, A.; Shaukat, K.; Hameed, I.A.; Luo, S.; Alam, T.M.; Iqbal, F. A Bibliometric Analysis of Corona Pandemic in Social Sciences: A Review of Influential Aspects and Conceptual Structure. IEEE Access 2020, 8, 133377–133402.

- Azhar, K.A.; Iqbal, N.; Shah, Z.; Ahmed, H. Understanding High Dropout Rates in MOOCs—A Qualitative Case Study from Pakistan. Innov. Educ. Teach. Int. 2024, 61, 764–778.

- Bozkurt, A.; Akbulut, Y. Dropout Patterns and Cultural Context in Online Networked Learning Spaces. Open Praxis. Available online: https://openpraxis.org/articles/10.5944/openpraxis.11.1.940 (accessed on 15 February 2025).

- Chi, Z.; Zhang, S.; Shi, L. Analysis and Prediction of MOOC Learners’ Dropout Behavior. Appl. Sci. 2023, 13, 1068.

- Hong, B.; Wei, Z.; Yang, Y. A Two-Layer Cascading Method for Dropout Prediction in MOOC. Mechatron. Syst. Control. 2019, 47, 91–97.

- Sr, N.; Saravanan, U. MOOC Dropout Prediction Using FIAR-ANN Model Based on Learner Behavioral Features. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 607–617.

- Şahin, M. A Comparative Analysis of Dropout Prediction in Massive Open Online Courses. Arab. J. Sci. Eng. 2021, 46, 1845–1861.

- Tlili, A.; Altinay, F.; Altinay, Z.; Aydin, C.H.; Huang, R.; Sharma, R. Reflections on Massive Open Online Courses (MOOCS) During the COVID-19 Pandemic: A Bibliometric Mapping Analysis. Turk. Online J. Distance Educ. 2022, 23, 1–17.

- Aljarrah, A.; Ababneh, M.; Cavus, N. The Role of Massive Open Online Courses during the COVID-19 Era. New Trends Issues Proc. Humanit. Soc. Sci. 2020, 7, 142–152.

- Liu, C.; Zou, D.; Chen, X.; Xie, H.; Chan, W.H. A Bibliometric Review on Latent Topics and Trends of the Empirical MOOC Literature (2008–2019). Asia Pac. Educ. Rev. 2021, 22, 515–534.

- Wahid, R.; Ahmi, A.; Alam, A.S.A.F. Growth and Collaboration in Massive Open Online Courses: A Bibliometric Analysis. IRRODL 2020, 21, 292–322.

| Reference | Focus |

|---|---|

| Zong et al. [43] | Outdoor education, highlighting the thematic transition from environmental governance to environmental education. |

| Hallinger et al. [44] | Developing research on education for sustainable development in East Asia between 1991 and 2023. |

| Basheer et al. [45] | Engaging higher education institutions in achieving the SDGs. |

| Dönmez [46] | Sustainability in education, highlighting the increase in the number of publications and the shift in emphasis from environmental education to education for sustainable development. |

| Alghamdi et al. [47] | Limitations of traditional models for predicting dropout in MOOCs and exploration of the use of advanced artificial intelligence methods to improve prediction accuracy and support effective interventions in online education. |

| Alsuhaimi and Almatrafi [48] | Applying a transferable deep learning method for automatically classifying MOOC forum posts based on confusability indicators, thus improving student support and reducing dropout rates through early and personalized responses. |

| Luo and Li [49] | Examination of the impact of academic and emotional support on the sustainable use of MOOC platforms in English language learning. |

| Swacha and Muszyńska [50] | Use of demographic data to predict early student dropout in MOOCs, identifying factors such as age, educational level, student status, nationality and disability as predictors of dropout. |

| Index Name | Period |

|---|---|

| Science Citation Index Expanded (SCIE) | 1900–present |

| Social Sciences Citation Index (SSCI) | 1975–present |

| Emerging Sources Citation Index (ESCI) | 2005–present |

| Arts & Humanities Citation Index (A&HCI) | 1975–present |

| Conference Proceedings Citation Index—Social Sciences and Humanities (CPCI-SSH) | 1990–present |

| Conference Proceedings Citation Index—Science (CPCI-S) | 1990–present |

| Book Citation Index—Science (BKCI-S) | 2010–present |

| Book Citation Index—Social Sciences and Humanities (BKCI-SSH) | 2010–present |

| Current Chemical Reactions (CCR-Expanded) | 2010–present |

| Index Chemicus (IC) | 2010–present |

| Exploration Steps | Filters on WoS | Description | Query | Query Number | Count |

|---|---|---|---|---|---|

| 1 | Title/Abstract/Keywords | Contains specific keywords related to MOOCs | ((TI=(MOOC*)) OR AB=(MOOC*)) OR AK=(MOOC*) | #1 | 8443 |

| | | Contains specific keywords related to dropout | ((TI=(dropout)) OR AB=(dropout)) OR AK=(dropout) | #2 | 32,480 |

| | | Contains specific keywords related to MOOCs and dropout | #1 AND #2 | #3 | 455 |

| 2 | Language | Limited to English | (#3) AND LA=(English) | #4 | 432 |

| 3 | Document Type | Limited to Articles | (#4) AND DT=(Article) | #5 | 212 |

| 4 | Year published | Excludes 2024 | (#5) NOT PY=(2024) | #6 | 193 |
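The exploration steps in the table above amount to a chain of boolean filters. The following is a minimal Python sketch of that logic, not the WoS query engine itself. It assumes records held as dictionaries with hypothetical field names (`title`, `abstract`, `keywords`, `language`, `doc_type`, `year`) that are illustrative and not the tags of a real WoS export, and it approximates the `MOOC*` wildcard with a simple prefix match.

```python
import re

# Hypothetical record layout: the field names below are illustrative
# assumptions, not the tags used in a real WoS export.
MOOC_RE = re.compile(r"\bmooc\w*", re.IGNORECASE)        # approximates MOOC*
DROPOUT_RE = re.compile(r"\bdropout\b", re.IGNORECASE)   # the term "dropout"


def mentions(pattern, record):
    """True if the pattern occurs in the title, abstract, or author keywords."""
    fields = (
        record.get("title", ""),
        record.get("abstract", ""),
        " ".join(record.get("keywords", [])),
    )
    return any(pattern.search(text) for text in fields)


def select(records):
    """Apply the four exploration steps in order and return the survivors."""
    step1 = [r for r in records
             if mentions(MOOC_RE, r) and mentions(DROPOUT_RE, r)]  # query #3
    step2 = [r for r in step1 if r.get("language") == "English"]   # query #4
    step3 = [r for r in step2 if r.get("doc_type") == "Article"]   # query #5
    step4 = [r for r in step3 if r.get("year") != 2024]            # query #6
    return step4


# Made-up records, one per exclusion rule plus one that passes every step.
sample = [
    {"title": "Dropout prediction in MOOCs", "abstract": "", "keywords": [],
     "language": "English", "doc_type": "Article", "year": 2021},
    {"title": "MOOC design", "abstract": "No attrition terms here",
     "keywords": [], "language": "English", "doc_type": "Article", "year": 2020},
    {"title": "MOOC dropout in Spain", "abstract": "", "keywords": [],
     "language": "Spanish", "doc_type": "Article", "year": 2019},
    {"title": "MOOC dropout: a review", "abstract": "", "keywords": [],
     "language": "English", "doc_type": "Review", "year": 2022},
    {"title": "MOOC dropout trends", "abstract": "", "keywords": [],
     "language": "English", "doc_type": "Article", "year": 2024},
]

selected = select(sample)
print([r["title"] for r in selected])
```

Applying the filters in sequence mirrors the way each query in the table is built on the previous query number, so the count can only shrink from one step to the next.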

| Indicator | Value |

|---|---|

| Timespan | 2013:2023 |

| Sources | 101 |

| Documents | 193 |

| Average citations per document | 19.68 |

| References | 6560 |

| Keywords plus | 255 |

| Author’s keywords | 573 |

| Indicator | Value of the Indicator |

|---|---|

| Authors | 566 authors |

| Authors of single-authored documents | 20 authors |

| Authors of multi-authored documents | 546 authors |

| Indicator | Value of the Indicator |

|---|---|

| Single-authored documents | 21 documents |

| Documents per author | 0.34 documents/author |

| Authors per document | 2.93 authors/document |

| Co-authors per document | 3.45 co-authors/document |
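Two of the ratios in the table above can be reproduced directly from the document and author counts reported in the preceding tables. The short Python check below is only an arithmetic illustration. The co-author figure cannot be recomputed from those totals alone: it is assumed here to be the mean number of author appearances per document, which would need the per-document author lists.

```python
# Values taken from the indicator tables above.
documents = 193
authors = 566

# Documents per author: distinct documents divided by distinct authors.
docs_per_author = documents / authors

# Authors per document: distinct authors divided by distinct documents.
authors_per_doc = authors / documents

print(f"{docs_per_author:.2f} documents/author")
print(f"{authors_per_doc:.2f} authors/document")
```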

| Affiliations | Articles | Percentage |

|---|---|---|

| Beijing Normal University | 11 | 5.70% |

| Cadi Ayyad University of Marrakech | 6 | 3.11% |

| Harvard University | 5 | 2.59% |

| Ibn Tofail University of Kenitra | 5 | 2.59% |

| Texas Tech University | 5 | 2.59% |

| Mohammed V University in Rabat | 4 | 2.07% |

| Cadi Ayyad University of Marrakech | 4 | 2.07% |

| Guilin University of Electronic Technology | 4 | 2.07% |

| Central China Normal University | 4 | 2.07% |

| Northwest University Xi’an | 4 | 2.07% |

| Texas Tech University System | 4 | 2.07% |

| Universidad Carlos III de Madrid | 3 | 1.55% |

| Abdelmalek Essaadi University of Tetouan | 3 | 1.55% |

| Chulalongkorn University | 3 | 1.55% |

| Nanjing Agricultural University | 3 | 1.55% |

| No. | Paper (First Author, Year, Journal, Reference) | Number of Authors | Region | Total Citations (TC) | TC per Year (TCY) | Normalized TC (NTC) |

|---|---|---|---|---|---|---|

| 1 | Xing WL, 2016, Computers in Human Behavior [111] | 4 | USA | 157 | 17.44 | 2.93 |

| 2 | Dai HM, 2020, Computers & Education [113] | 4 | China | 147 | 29.40 | 6.10 |

| 3 | Xing WL, 2019, Journal of Educational Computing Research [114] | 2 | USA | 133 | 22.17 | 5.53 |

| 4 | Tsai YH, 2018, Computers & Education [115] | 4 | Taiwan | 124 | 17.71 | 4.27 |

| 5 | Henderikx MA, 2017, Distance Education [116] | 3 | Netherlands | 97 | 12.13 | 1.88 |

| 6 | Eriksson T, 2017, Journal of Computing in Higher Education [117] | 3 | Sweden | 97 | 12.13 | 1.88 |

| 7 | Aldowah H, 2020, Journal of Computing in Higher Education [24] | 4 | United Kingdom | 87 | 17.40 | 3.61 |

| 8 | Almatrafi O, 2018, Computers & Education [19] | 3 | USA | 87 | 12.43 | 2.99 |

| 9 | Sunar AS, 2017, IEEE Transactions on Learning Technologies [118] | 4 | United Kingdom, Asia | 85 | 10.63 | 1.64 |

| 10 | Moreno-Marcos PM, 2020, Computers & Education [90] | 6 | Spain, Chile | 78 | 15.60 | 3.24 |
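The TCY column is consistent with dividing total citations by the number of years since publication, counted inclusively up to 2024. That reference year is inferred from the table values rather than stated in the table, so the Python sketch below treats it as an assumption and simply compares the computed ratio with a few reported values.

```python
REFERENCE_YEAR = 2024  # assumption inferred from the table, not stated in it


def tc_per_year(total_citations, pub_year, reference_year=REFERENCE_YEAR):
    """Total citations divided by the inclusive number of years since publication."""
    years = reference_year - pub_year + 1
    return total_citations / years


# (first author, publication year, TC, reported TCY) copied from the table.
rows = [
    ("Xing WL", 2016, 157, 17.44),
    ("Dai HM", 2020, 147, 29.40),
    ("Tsai YH", 2018, 124, 17.71),
    ("Moreno-Marcos PM", 2020, 78, 15.60),
]

for name, year, tc, reported in rows:
    computed = tc_per_year(tc, year)
    print(f"{name} ({year}): computed {computed:.2f}, reported {reported:.2f}")
```

Because the divisor grows with the age of a paper, a recent article such as Dai HM (2020) can rank higher on TCY than an older article with more total citations.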

| No. | Paper (First Author, Year, Journal, Reference) | Title | Methods Used | Data | Purpose |

|---|---|---|---|---|---|

| 1 | Xing WL, 2016, Computers in Human Behavior [111] | Temporal predication of dropouts in MOOCs: Reaching the low hanging fruit through stacking generalization | Machine learning methods, principal components analysis | 14 discussion forums and 12 multiple-choice quizzes, gathered from a course that had 3617 students enrolled | To create a mechanism to identify students at risk of dropping out as accurately as possible |

| 2 | Dai HM, 2020, Computers & Education [113] | Explaining Chinese university students’ continuance learning intention in the MOOC setting: A modified expectation confirmation model perspective | Expectation confirmation model, structural equation modeling, confirmatory factor analysis | 192 Chinese students were recruited as participants to complete a questionnaire | To identify and explore the factors that influence students to continue MOOC studies |

| 3 | Xing WL, 2019, Journal of Educational Computing Research [114] | Dropout Prediction in MOOCs: Using Deep Learning for Personalized Intervention | Deep learning techniques; K-nearest neighbors, support vector machines, and decision tree as baseline algorithms | 3617 student participants in a course organized by Canvas. JSON data for test scores or discussion forum data, as well as trace or click-stream data | To optimize a MOOC dropout prediction model for personalized intervention |

| 4 | Tsai YH, 2018, Computers & Education [115] | The effects of metacognition on online learning interest and continuance to learn with MOOCs | First order confirmatory factor analysis, structural equation modeling | Data were collected from a total of 126 respondents | To create a unified model that combines both learning interest and metacognition to investigate MOOCs’ continuance intention |

| 5 | Henderikx MA, 2017, Distance Education [116] | Refining success and dropout in massive open online courses based on the intention–behavior gap | The traditional approach tracking course success rates | Data were collected using two questionnaires (before and after the course) completed by the participants of two MOOCs. The first questionnaire had a total of 689 respondents, subsequently completed by 163 respondents, and the second questionnaire initially had 821 respondents and subsequently had 126 respondents | The aim was to test the applicability of the typology by conducting an exploratory study |

| 6 | Eriksson T, 2017, Journal of Computing in Higher Education [117] | “Time is the bottleneck”: a qualitative study exploring why learners drop out of MOOCs | Qualitative case study approach | Semi-structured interviews with a total of 34 learners who recorded different degrees of course completion for two MOOCs | To identify the reasons that influence participants to either complete or abandon the MOOC |

| 7 | Aldowah H, 2020, Journal of Computing in Higher Education [24] | Factors affecting student dropout in MOOCs: a cause and effect decision-making model | Multi-criteria decision-making | Identification of 12 factors from the literature | To find the underlying factors and possible causal relationships that are responsible for the rather high dropout rate in MOOCs |

| 8 | Almatrafi O, 2018, Computers & Education [19] | Needle in a haystack: Identifying learner posts that require urgent response in MOOC discussion forums | Linguistic inquiry and word count, metadata, term frequency, classification methods and sampling groups | The Stanford MOOCPosts dataset (29,604 posts; 29,584 after excluding posts with insignificant information) | To create a model that is able to identify posts of an urgent nature that need the immediate attention of the coordinator |

| 9 | Sunar AS, 2017, IEEE Transactions on Learning Technologies [118] | How Learners’ Interactions Sustain Engagement: A MOOC Case Study | Social network analysis techniques, prediction model | Discussions in a FutureLearn MOOC, which had a total of 9855 registered learners | To investigate the social behaviors learners exhibit in MOOCs and the impact of engagement on course completion |

| 10 | Moreno-Marcos PM, 2020, Computers & Education [90] | Temporal analysis for dropout prediction using self-regulated learning strategies in self-paced MOOCs | Predictive models, self-regulated learning | Questionnaire for MOOC participants on Electronics, named “Electrons in Action”, Open edX platform | To explore how self-regulated learning (SRL) strategies can be integrated into predictive models for self-paced MOOCs; it also introduces a new temporal analysis methodology for self-paced MOOCs aimed at early detection of learners at risk of dropout |

| Keywords Plus | Occurrences Keywords Plus | Authors Keywords | Occurrences Authors Keywords |

|---|---|---|---|

| students | 31 | mooc/moocs | 103 |

| engagement | 19 | dropout prediction | 21 |

| motivation | 18 | massive open online courses | 21 |

| education | 15 | machine learning | 20 |

| performance | 15 | dropout | 19 |

| online | 13 | learning analytics | 11 |

| model | 12 | online learning | 11 |

| motivations | 10 | deep learning | 10 |

| open online courses | 10 | distance education | 9 |

| participation | 10 | e-learning | 9 |

| Bigrams in Abstracts | Occurrences | Bigrams in Titles | Occurrences |

|---|---|---|---|

| dropout rate/rates | 160 | dropout prediction | 29 |

| online courses | 137 | online courses | 20 |

| moocs | 92 | mooc dropout | 14 |

| dropout prediction | 54 | student dropout | 11 |

| online learning | 54 | machine learning | 9 |

| machine learning | 43 | mooc learners | 8 |

| student dropout | 30 | deep learning | 7 |

| neural network | 29 | data mining | 5 |

| completion rates | 24 | discussion forums | 5 |

| continuance intention | 24 | continuance intention | 5 |

| Trigrams in Abstracts | Occurrences | Trigrams in Titles | Occurrences |

|---|---|---|---|

| online courses mooc/moocs | 97 | mooc dropout prediction | 10 |

| machine learning algorithms | 14 | student dropout prediction | 6 |

| dropout prediction model | 12 | mooc discussion forums | 3 |

| convolutional neural network/networks | 16 | online courses moocs | 3 |

| structural equation modeling | 8 | self-regulated learning strategies | 3 |

| low completion rates | 6 | Chinese university students | 2 |

| neural network model | 6 | convolutional neural networks | 2 |

| self-regulated learning srl | 6 | deep learning model | 2 |

| student dropout prediction | 6 | dropout prediction model | 2 |

| accuracy precision recall | 5 | educational data mining | 2 |
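The bigram and trigram tables above report how often sequences of two or three consecutive words occur in abstracts and titles. The following Python sketch shows one simple way such counts can be obtained. It is an illustration under stated assumptions, not the procedure used by the analysis software: the function names and the three sample titles are invented for the example, and no stop-word removal, stemming, or merging of singular and plural forms is applied.

```python
import re
from collections import Counter


def ngrams(text, n):
    """Return the word n-grams of a text, lowercased, keeping hyphenated words whole."""
    tokens = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def count_ngrams(texts, n):
    """Count n-gram occurrences across a collection of texts."""
    counts = Counter()
    for text in texts:
        counts.update(ngrams(text, n))
    return counts


# Made-up titles used only to exercise the functions.
titles = [
    "MOOC dropout prediction with deep learning",
    "Student dropout prediction in online courses",
    "A deep learning model for MOOC dropout prediction",
]

bigrams = count_ngrams(titles, 2)
trigrams = count_ngrams(titles, 3)

print(bigrams.most_common(3))
print(trigrams.most_common(2))
```

Without stop-word filtering, n-grams such as "prediction with" are also counted, which is why published term tables usually rely on some additional cleaning step.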

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cișmașu, I.-D.; Cibu, B.R.; Cotfas, L.-A.; Delcea, C. The Persistence Puzzle: Bibliometric Insights into Dropout in MOOCs. Sustainability 2025, 17, 2952. https://doi.org/10.3390/su17072952