1. Introduction

With the increasing proportion of installed photovoltaic (PV) power generation, PV power generation has begun to show a mode of “large-scale decentralised development, low-voltage access, and local consumption” [1]. Compared with centralised PVs, distributed PVs can be installed on buildings and other carriers, which gives them higher flexibility and can significantly improve the utilisation rate of PV devices [2]. However, distributed PV installation sites are scattered and have small installed capacities, which has a greater impact on the stability of the power system [3,4]. Therefore, accurate power prediction for distributed PVs is of great significance for ensuring the reliability of the power system and formulating a power market strategy [5,6]. Although distributed PVs have been developed for some time, the historical statistical data are not standardised [7]. Moreover, distributed PVs generally do not measure real-time power but only upload the total power generation data to the power grid [8]. It is therefore difficult to establish a power prediction model for distributed PVs. PV power generation uses electronic components to convert solar radiation energy into electrical energy, and the power can be calculated from the solar irradiance using an empirical formula. The accurate prediction of solar irradiance is thus the basis for the power prediction of distributed PV generation, and it also has important practical significance for siting new distributed PV stations [9,10].

Currently, research on solar irradiance prediction follows two main approaches: clear sky models derived from physical formulas [11], and machine learning models that use historical data and numerical weather prediction (NWP) information to predict solar irradiance directly. The first approach uses models derived from physical and empirical formulas for clear weather, such as the ASHRAE model [12,13], the Hottel model [14], the REST2 model [15], etc. [16]. The input of this type of model is simple, and the predicted irradiance can be obtained from only the longitude and latitude of the station to be measured. Because weather factors are not considered, the forecasts of a clear sky model are generally higher than the actual values, and performance is poor in complex weather; it is therefore difficult to meet practical demand [17]. The second approach is a machine learning prediction model based on historical irradiance and NWP data. In general, the prediction accuracy of such a model is higher than that of a clear sky model. Commonly used models include the artificial neural network (ANN) [18], back propagation neural network (BPNN) [19], convolutional neural network (CNN) [20], recurrent neural network (RNN) [21], and various combined network models [22]. The inputs of these models are different combinations of NWP information, and the prediction accuracy depends heavily on that information. In pursuit of higher prediction accuracy, more meteorological information and historical data are being fed into the models [23]. As NWP technology develops, NWP includes an increasing number of products, and the cost of NWP increases with the amount of information. Owing to cost constraints, it is difficult for distributed PV power stations to meet the input requirements of such models.

Because clouds are one of the main factors affecting solar irradiance, various scholars have begun to model the characteristics of clouds and use them together with NWP to predict PV power and solar irradiance [24,25,26]. Cloud features are primarily obtained from ground-based and satellite-based cloud images. Ground-based cloud images come from ground-based total sky imagers (TSIs), which can provide sky images with high spatial and temporal resolution [27]. However, a TSI is expensive and is not suitable for distributed PV stations with low investment costs. In recent years, with the continuous development of meteorological satellites, the spatial and temporal resolution of satellite-based cloud images has also improved greatly. At the same time, many countries have begun to provide free satellite-based cloud images, which greatly facilitates solar irradiance prediction for distributed PVs. Kim et al. [28] and Prasad et al. [29] extracted the thickness characteristics of clouds from satellite-based cloud images and improved the prediction accuracy of solar irradiance and power. However, cloud thickness is not the only factor that explains the shielding effect of clouds, and different types of clouds have different shielding effects [30]. Chu et al. [31] established different models for different types of clouds to predict solar irradiance; however, as cloud types are subdivided further, the final prediction model becomes larger and more complex. Texture is an attribute that reflects the surface structure and organisation of objects, and it is an important feature used to distinguish object categories [32]. Texture features of clouds have been used several times as the basis for cloud classification. Previous studies have shown that using the texture features and thickness features of cloud images together can further improve the prediction accuracy of solar irradiance. Therefore, this study focuses on how to better extract texture features from satellite-based cloud images.

With the continuous development of machine learning, CNNs have shown strong image-processing abilities and are often used for the classification and feature extraction of cloud images. Wang et al. [33] used a CNN model for cloud classification and recognition. Zhao et al. [34] and Qin et al. [35] extracted features from cloud images using a CNN model and used them for solar irradiance and power prediction. However, the texture features of cloud images are not considered in these studies. The initial convolution kernel is mostly the Sobel operator, which pays more attention to image edge features [36], and it is also unclear whether the trained convolution kernel contains texture features. The grey-level co-occurrence matrix (GLCM) is one of the earliest methods used to study image texture, and 14 features are defined on it to describe image texture [37]. The texture features extracted by the GLCM have achieved excellent results in many image classification tasks [38,39], and the effectiveness of using texture features extracted by the GLCM to predict solar irradiance has been confirmed in Ref. [1]. Therefore, this paper combines the CNN with the GLCM and proposes a novel textural convolution kernel to extract texture features from satellite cloud images.

In this study, an ultra-short-term solar irradiance prediction framework based on satellite cloud images and a clear sky model is proposed. Ultra-short-term solar irradiance prediction is conducted by combining the theoretical irradiance in a clear sky, the textural features of clouds, and NWP information. By introducing a clear sky model and satellite cloud images, the strong dependence of the prediction results on NWP information is reduced, and higher prediction accuracy can be obtained from a small amount of NWP information. In addition, a textural convolution kernel is proposed to extract cloud texture features from satellite-based cloud images, further improving the prediction accuracy of solar irradiance. The textural convolution kernel is compared with other common convolution kernels in terms of its ability to extract cloud image features. The results demonstrate that the textural convolution kernel and texture features better describe the shielding effect of clouds on solar irradiance.

The main contributions of this study are summarised as follows:

This study combines a convolution kernel and GLCM and proposes a textural convolution kernel that can extract textural features from satellite cloud images. The textural convolution kernel is applied to the prediction of solar irradiance, and it is proved that the textural features of clouds can better reflect the shielding effect of clouds on solar irradiance.

Considering the different changes in irradiance in different areas, the parameters of the ASHRAE model of the station to be measured are determined by the least squares method so that the irradiance obtained by the ASHRAE model is more consistent with the change trend in the actual irradiance of the station.

The irradiance obtained by the ASHRAE model is used as the reference value in a clear sky, and the NWP information and cloud textural features are used to correct it, further improving the prediction accuracy of solar irradiance.

The rest of this paper is organised as follows:

Section 2 introduces the framework of the integrated model;

Section 3 describes the methods used in the proposed model in detail;

Section 4 introduces the case and prepares the data;

Section 5 evaluates and discusses the performance of the framework proposed in this paper; and

Section 6 summarises all the studies.

3. Methodology

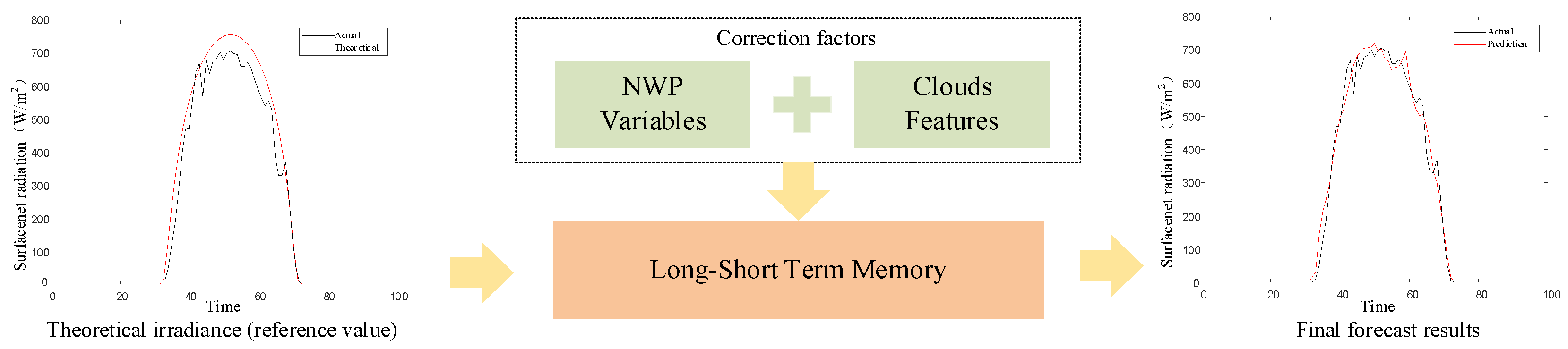

In this section, we introduce our novel solar irradiance forecasting framework, which is based on three main models: (1) a CNN with a textural convolution kernel to extract the textural features of clouds, (2) an improved clear sky model to calculate the theoretical irradiance, and (3) an LSTM to predict solar irradiance. The details of these models will be provided in the following sections.

3.1. CNN with Textural Convolution Kernel

We first utilise the CNN with the textural convolution kernel as a feature extraction tool for clouds. The aim of using the textural convolution kernel is to obtain the textural features of clouds. Texture is a feature that reflects the homogeneous phenomenon in an image [40]; it reflects the arrangement of the object surface with slow or periodic change and is a fundamental attribute for distinguishing objects. Therefore, the textural features of clouds can express different cloud types, and they have advantages in PV power prediction over the cover features of clouds.

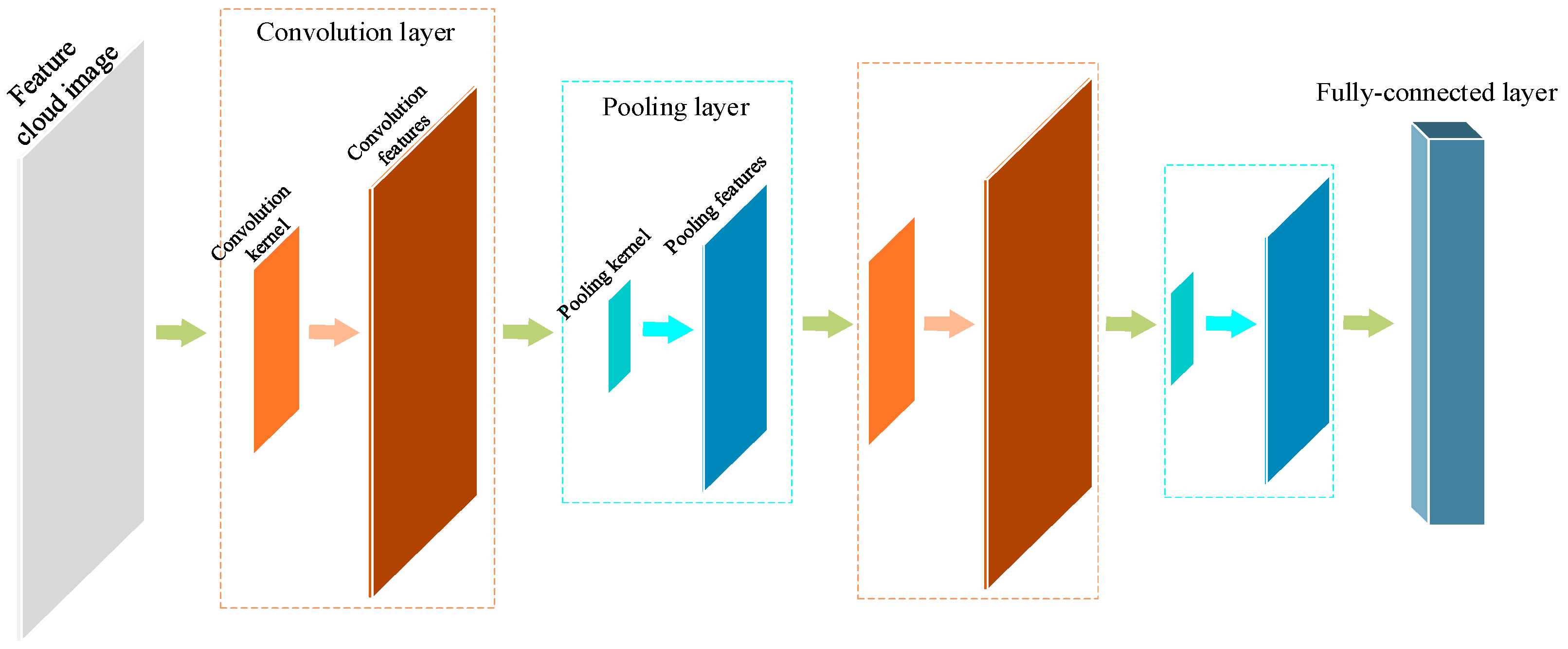

Based on image textural expression, we propose a textural convolution kernel and design a CNN model to extract the textural features of clouds. The model includes two convolution layers, pooling layers, and a fully connected layer. The structure of the model is presented in Figure 2.

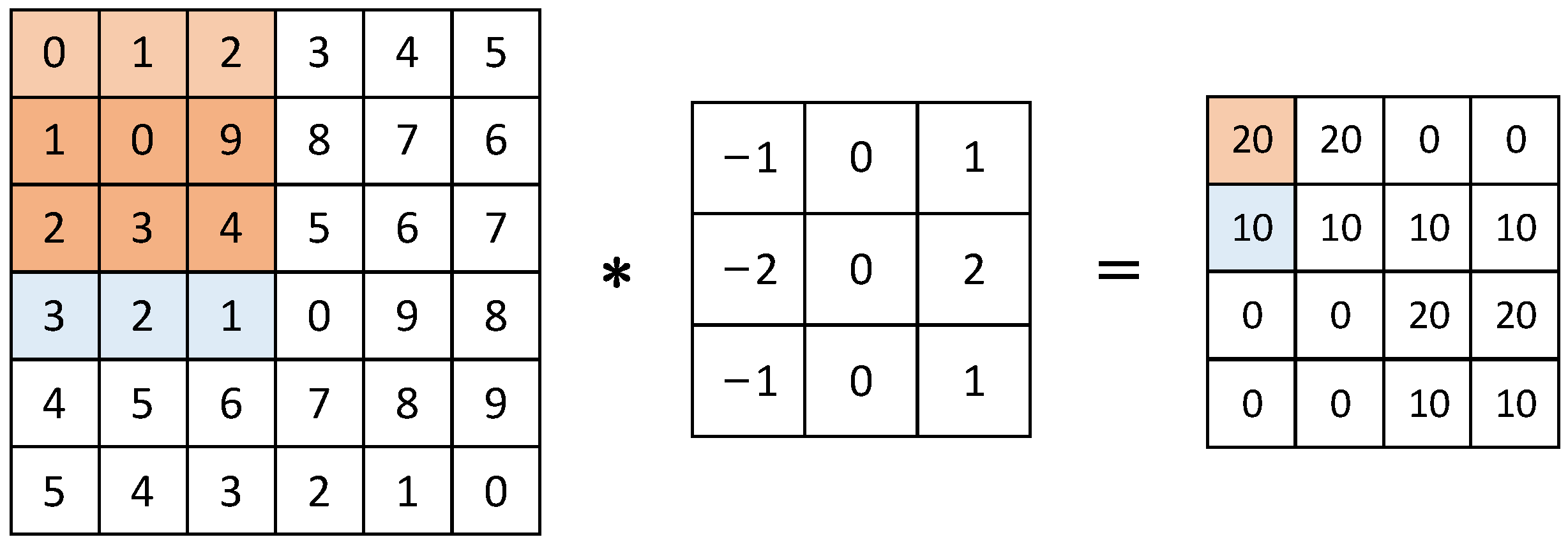

The convolution layer is the core unit of the CNN, which performs convolution operations on images using a convolution kernel. As shown in Figure 3, when performing the convolution operation, the convolution kernel traverses the entire image, calculates the dot product between the pixels in the current region and the convolution kernel, and writes the results, in order, into the feature matrix.
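The sliding dot product described above can be sketched in a few lines. This is a minimal illustration that assumes stride 1 and no padding; the function name `convolve2d_valid` and the toy image are hypothetical and are not taken from the paper.

```python
import numpy as np

def convolve2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature matrix."""
    image = np.asarray(image, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    features = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            # Dot product between the current image region and the kernel.
            window = image[r:r + kh, c:c + kw]
            features[r, c] = np.sum(window * kernel)
    return features

# Toy 4 x 4 image with pixel values 0..15 and an all-ones 3 x 3 kernel.
image = np.arange(16).reshape(4, 4)
kernel = np.ones((3, 3))
features = convolve2d_valid(image, kernel)
```

As in most CNN libraries, the kernel is not flipped here, so strictly speaking this is a cross-correlation; for symmetric kernels the two operations coincide.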

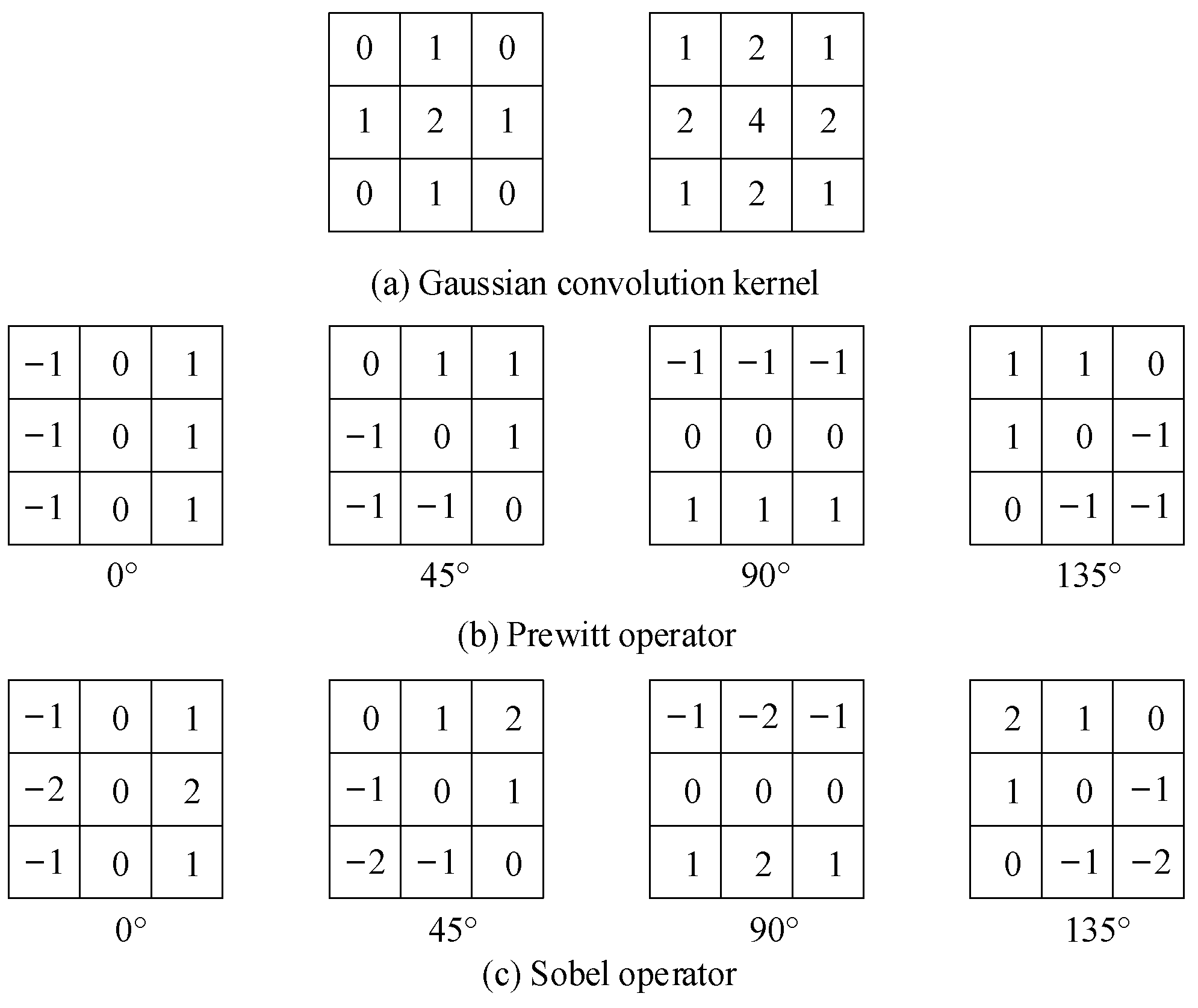

Convolving an input image with different convolution kernels yields different feature matrices, so different features can be extracted by designing the size and values of the convolution kernel. Common convolution kernels include the Gaussian convolution kernel [41], the Prewitt operator [42,43], and the Sobel operator [44,45], as illustrated in Figure 4. The Gaussian convolution kernel has smoothing and broadening effects and is mainly used for image filtering and feature enhancement. The Prewitt operator is a differential operator for image edge detection; it detects edges from the differences between the grey values of pixels in a specific area. The Sobel operator can not only detect the edges of the image through first-order differential calculation but also obtain the first-order gradient of the image. The Prewitt and Sobel operators are the most common convolution kernels in CNN models and are often used for image edge feature extraction in target detection and image classification. These edge features differ from the textural features that this study aims to extract in order to distinguish cloud types.
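For orientation, the sketch below writes out the textbook 3 × 3 forms of these three kernels and applies each to one image patch. The exact values shown in Figure 4 may differ; the patches and the helper `response` are hypothetical.

```python
import numpy as np

# Textbook 3 x 3 kernels (horizontal-gradient variants for Prewitt and Sobel).
gaussian = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]]) / 16.0
prewitt_x = np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], dtype=float)
sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)

# Hypothetical patches: a vertical edge (dark left, bright right) and a flat region.
edge_patch = np.array([[0, 0, 10], [0, 0, 10], [0, 0, 10]], dtype=float)
flat_patch = np.full((3, 3), 10.0)

def response(patch, kernel):
    """Single convolution output: element-wise product summed over the patch."""
    return float(np.sum(patch * kernel))

edge_responses = {
    "gaussian": response(edge_patch, gaussian),
    "prewitt": response(edge_patch, prewitt_x),
    "sobel": response(edge_patch, sobel_x),
}
flat_responses = {
    "gaussian": response(flat_patch, gaussian),
    "prewitt": response(flat_patch, prewitt_x),
    "sobel": response(flat_patch, sobel_x),
}
```

The edge operators respond strongly to the step edge and return zero on the flat patch, whereas the Gaussian kernel only averages. This is why neither family directly measures texture.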

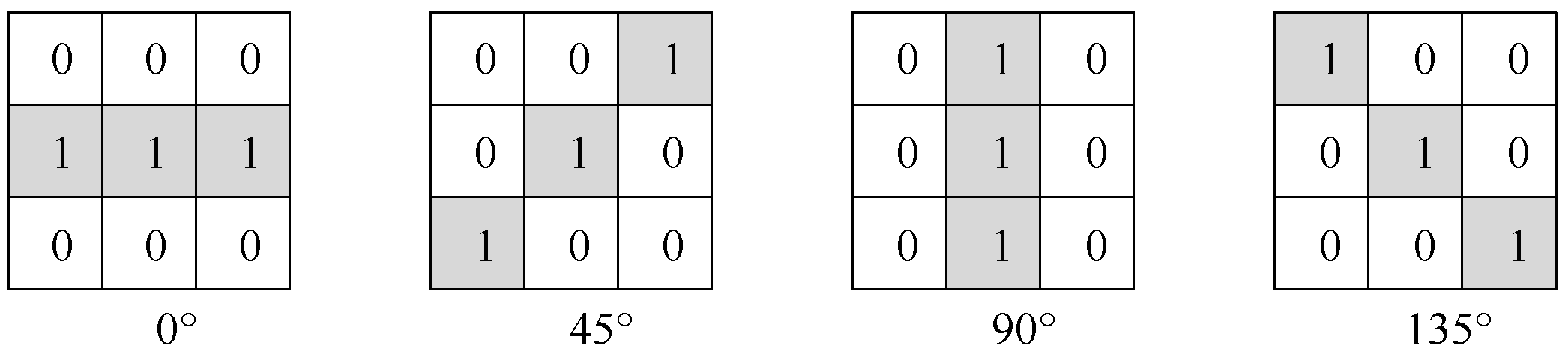

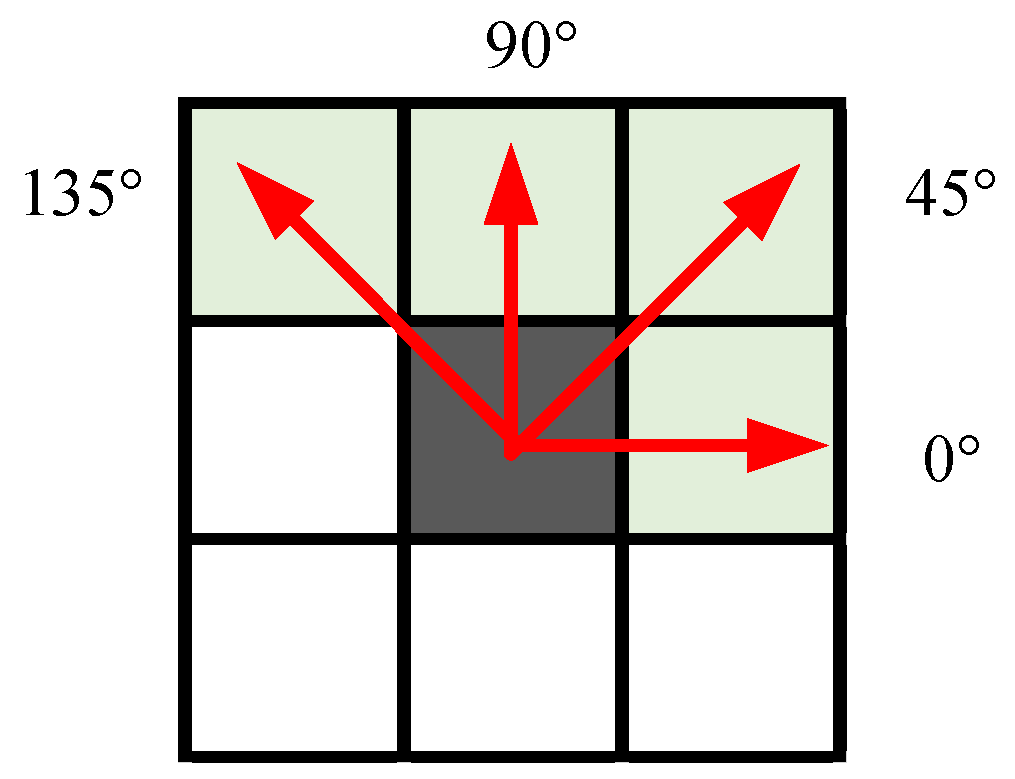

Accordingly, a novel textural convolution kernel of the CNN is proposed that simulates the calculation method of the grey-level co-occurrence matrix (GLCM) and is used to extract textural features of images in a specified gradient direction, as shown in Figure 5.

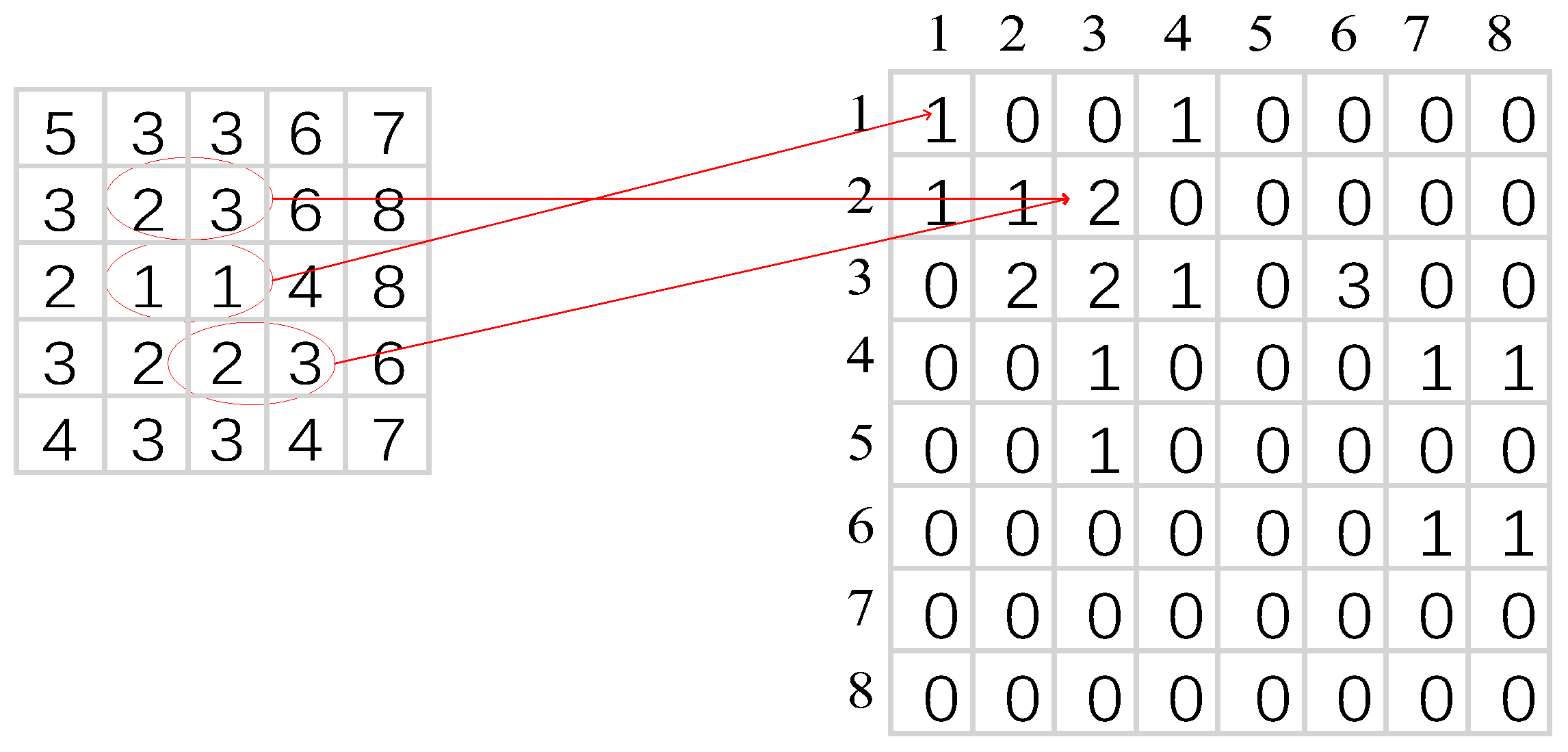

GLCM [46] is a method for the statistical analysis of the spatial characteristics of image pixels, which is widely used in image texture analysis. It describes the textural information of an image in different gradient directions by extracting the intensity relationship between adjacent pixels in those directions, as shown in Figure 6.

An image is converted into a matrix that describes how pixel values co-occur within a specified distance. This process counts the frequency of occurrence of pixel pairs at varying distances and directions, which are defined as horizontal, vertical, and diagonal orientations [46]. Here, $P_{d,\theta}$ is the GLCM for distance $d$ and angle $\theta$; $I(x, y)$ and $I(m, n)$ are the pixel intensities at positions $(x, y)$ and $(m, n)$; and the second pixel $(m, n)$ is offset from $(x, y)$ by $\Delta x$ and $\Delta y$, which depend on the direction and are evaluated using Table 1.
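The display equations for the GLCM did not survive in this text. A common way to write the definition that matches the symbols described above is sketched below; the names $P_{d,\theta}$, $\Delta x$, and $\Delta y$ are assumptions based on the usual convention and may differ from the notation of the original equations.

```latex
P_{d,\theta}(i, j) = \sum_{x}\sum_{y}
\begin{cases}
1, & I(x, y) = i \ \text{and} \ I(m, n) = j,\\
0, & \text{otherwise},
\end{cases}
\qquad (m, n) = (x + \Delta x,\; y + \Delta y)
```

Under this convention, entry $(i, j)$ counts how often a pixel of grey level $i$ has a neighbour of grey level $j$ at the offset fixed by $d$ and $\theta$.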

Figure 7 shows an example of GLCM calculation, where the first matrix is a 5 × 5 image with eight grey levels, 1–8, and the second matrix is the GLCM. Consider the pixel pair (2, 3) with $d = 1$ and the selected angle $\theta$, marked with red circles: it occurs twice in the image, so the GLCM value for this pair is two. The other pairs are counted in the same way.
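The counting procedure can be sketched as follows. The image below is a hypothetical 3 × 4 example with three grey levels, not the image of Figure 7, and the offset convention (`dx` to the right, `dy` downward) is an assumption that may differ from Table 1.

```python
def glcm(image, dx, dy, levels):
    """Count co-occurrences of grey levels (i, j) for the pixel offset (dx, dy).

    Grey levels are assumed to run from 1 to `levels`, as in the text.
    """
    rows, cols = len(image), len(image[0])
    matrix = [[0] * levels for _ in range(levels)]
    for y in range(rows):
        for x in range(cols):
            m, n = x + dx, y + dy  # position of the paired pixel
            if 0 <= m < cols and 0 <= n < rows:
                i = image[y][x]
                j = image[n][m]
                matrix[i - 1][j - 1] += 1
    return matrix

# Hypothetical image; d = 1 in the horizontal direction means (dx, dy) = (1, 0).
image = [
    [1, 2, 3, 2],
    [2, 3, 1, 1],
    [3, 2, 3, 3],
]
horizontal = glcm(image, dx=1, dy=0, levels=3)
pair_2_3 = horizontal[1][2]  # occurrences of the pair (2, 3)
```

In this toy image the pair (2, 3) appears once in each row, so its GLCM entry is three.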

The textural convolution kernel sets a valid value of 1 in the direction of the gradient, while the value is set to 0 in all other positions. According to the convolution algorithm, when the textural convolution kernel is used for convolution and feature extraction, the result is the superposition of pixel values in the current gradient direction, and the extracted features are more in line with the definition of textural features. When a CNN is used for feature extraction from satellite cloud images, the textural convolution kernels slide over the image to collect textural features in different regions, so the similarities and differences in textural features between adjacent regions are also reflected. Therefore, the textural convolution kernels and CNNs proposed in this study can extract textural features that describe the type of clouds.

In the study of image texture, the textural gradient directions are generally set to 0°, 45°, 90°, and 135°, so the textural convolution kernel also uses these four gradient directions. In CNNs, the convolution kernel is usually a square of size 3 × 3, 5 × 5, or 7 × 7. All three sizes were considered, and a detailed comparison is made in the solar irradiance prediction example in Section 5.2.
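One possible reading of this kernel is sketched below: a line of ones through the kernel centre along the chosen direction, and zeros elsewhere. This layout is an assumption made for illustration (Figure 5 defines the actual kernels), and `textural_kernel` is a hypothetical helper name.

```python
import numpy as np

def textural_kernel(size, angle_deg):
    """Build a size x size kernel with ones along one direction through the centre."""
    if size % 2 == 0:
        raise ValueError("size must be odd, e.g. 3, 5, or 7")
    kernel = np.zeros((size, size))
    centre = size // 2
    idx = np.arange(size)
    if angle_deg == 0:        # horizontal line
        kernel[centre, :] = 1
    elif angle_deg == 90:     # vertical line
        kernel[:, centre] = 1
    elif angle_deg == 45:     # bottom-left to top-right (row index grows downward)
        kernel[idx, idx[::-1]] = 1
    elif angle_deg == 135:    # top-left to bottom-right
        kernel[idx, idx] = 1
    else:
        raise ValueError("angle_deg must be 0, 45, 90, or 135")
    return kernel

# One 3 x 3 kernel per gradient direction.
kernels = {angle: textural_kernel(3, angle) for angle in (0, 45, 90, 135)}
```

Convolving with any of these kernels sums the pixel values along one direction, which is the superposition described in the text.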

3.2. Improved Clear Sky Model

The second step obtains the theoretical irradiance in a clear sky, which supplies a reference value of irradiance at the locations of distributed PVs and is fundamental data for solar irradiance forecasting. The ASHRAE model is a general prediction model of surface irradiance in clear skies developed by the American Society of Heating, Refrigerating, and Air-Conditioning Engineers based on research by Threlkeld and Jordan [13]. The ASHRAE model assumes that the weather is clear (there are no or almost no clouds in the sky, and the air quality is very good) and calculates the surface irradiance from historical empirical data and physical formulas. Therefore, the calculation result is the theoretical irradiance that can be received on the ground in a clear sky.

The solar irradiance received by the ground is shown in Figure 8. According to the ASHRAE model, the direct normal irradiance $I_b$ reaching the Earth's surface, the diffuse radiation $I_d$, and the net surface radiation $I$ are given by Equations (3)–(5), respectively. In Equations (3)–(5), $A$ is the radiation flux outside the atmosphere, $k$ is a dimensionless factor representing the optical depth, $\theta_z$ is the zenith angle of the sun, and $n$ is the number of days from 1 January. $A$ and $k$ are functions that account for the changing distance between the sun and the Earth over the Earth's revolution; they are obtained from the iterative regression of the actual irradiance and are given by Equations (6) and (7), in which $a_1$, $a_2$, $a_3$, $k_1$, $k_2$, and $k_3$ are the coefficients obtained via iterative regression.
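Equations (3)–(7) are not reproduced in this text. For reference, the usual textbook form of the ASHRAE clear-day model is sketched below, written with the symbols defined above. The sinusoidal forms of $A$ and $k$ agree with the curve $y = a_2\sin(2\pi(n - a_3)/365)$ used in the fitting steps, while the diffuse factor $C(n)$ is an assumed symbol for a day-dependent coefficient; the exact expressions in the original equations may differ.

```latex
\begin{align}
I_b &= A \exp\!\left(-\frac{k}{\cos\theta_z}\right) \tag{3}\\
I_d &= C(n)\, I_b \tag{4}\\
I   &= I_b \cos\theta_z + I_d \tag{5}\\
A   &= a_1 + a_2 \sin\!\left(\frac{2\pi (n - a_3)}{365}\right) \tag{6}\\
k   &= k_1 + k_2 \sin\!\left(\frac{2\pi (n - k_3)}{365}\right) \tag{7}
\end{align}
```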

The ASHRAE model does not consider surface and climatic characteristics. To adapt to the climatic and geographical conditions of the station to be measured, the parameters of the ASHRAE model must be adjusted so that the model follows the irradiance trend of the station as closely as possible. The ASHRAE model primarily relies on the sine functions in $A$ and $k$ to reflect the annual and diurnal changes in solar irradiance [13]. $A$ mainly affects the annual solar irradiance change, and $k$ mainly affects the change in solar irradiance within the day. $a_1$ mainly affects the annual solar irradiance amplitude, $a_2$ mainly affects the annual variation in solar irradiance, and $a_3$ mainly affects the variation trend in solar irradiance over the year. $k_1$ mainly affects the amplitude of intraday solar irradiance, whereas $k_2$ and $k_3$ have weak effects on the annual and diurnal changes in solar irradiance.

In this study, the parameters of the ASHRAE model are fitted using the ordinary least squares method, and the specific steps are as follows:

(1) The sine function in $A$ is a function of the day. The function $y = a_2\sin(2\pi(n - a_3)/365)$, with $a_3$ as the variable, is defined as the annual solar irradiance change curve. $a_2$ and $a_3$ are fitted by the least squares method according to the annual change in the actual irradiance amplitude of the station, so that the ASHRAE model conforms to the annual solar irradiance trend of the station.

(2) The actual irradiance is compensated using the function $y = a_2\sin(2\pi(n - a_3)/365)$ with the determined parameters, so that the variation in solar irradiance over the year is removed and what remains is the basic annual amplitude of solar irradiance. Parameter $a_1$ in $A$ is then solved according to Equations (3) and (6).

(3) According to the change in solar irradiance within the day, the parameter $k_1$ in $k$ is refined using the least squares method.

(4) According to the prediction results of annual solar irradiance, the parameters $k_2$ and $k_3$ are refined using the least squares method.
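The fitting of the annual curve can be illustrated with a short least squares sketch. It is a simplification of the stepwise procedure above: $a_1$, $a_2$, and $a_3$ are estimated jointly by rewriting the sinusoid as a linear combination of $\sin$ and $\cos$ terms, which turns the fit into an ordinary linear least squares problem. The function name and the synthetic data are hypothetical.

```python
import numpy as np

def fit_annual_sinusoid(days, values, period=365.0):
    """Least squares fit of values ~ a1 + a2 * sin(2*pi*(n - a3) / period)."""
    days = np.asarray(days, dtype=float)
    values = np.asarray(values, dtype=float)
    omega = 2.0 * np.pi / period
    # a2*sin(w*(n - a3)) = p*sin(w*n) + q*cos(w*n),
    # with p = a2*cos(w*a3) and q = -a2*sin(w*a3).
    design = np.column_stack(
        [np.ones_like(days), np.sin(omega * days), np.cos(omega * days)]
    )
    (a1, p, q), *_ = np.linalg.lstsq(design, values, rcond=None)
    a2 = np.hypot(p, q)
    a3 = (np.arctan2(-q, p) / omega) % period
    return a1, a2, a3

# Synthetic, noise-free amplitudes generated from known (hypothetical) parameters.
days = np.arange(1, 366)
true_a1, true_a2, true_a3 = 1100.0, 80.0, 275.0
amplitude = true_a1 + true_a2 * np.sin(2.0 * np.pi * (days - true_a3) / 365.0)
a1, a2, a3 = fit_annual_sinusoid(days, amplitude)
```

With measured data the recovered parameters would only approximate the underlying trend, and the remaining parameters $k_1$, $k_2$, and $k_3$ would need a nonlinear fit because $k$ appears inside the exponential.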

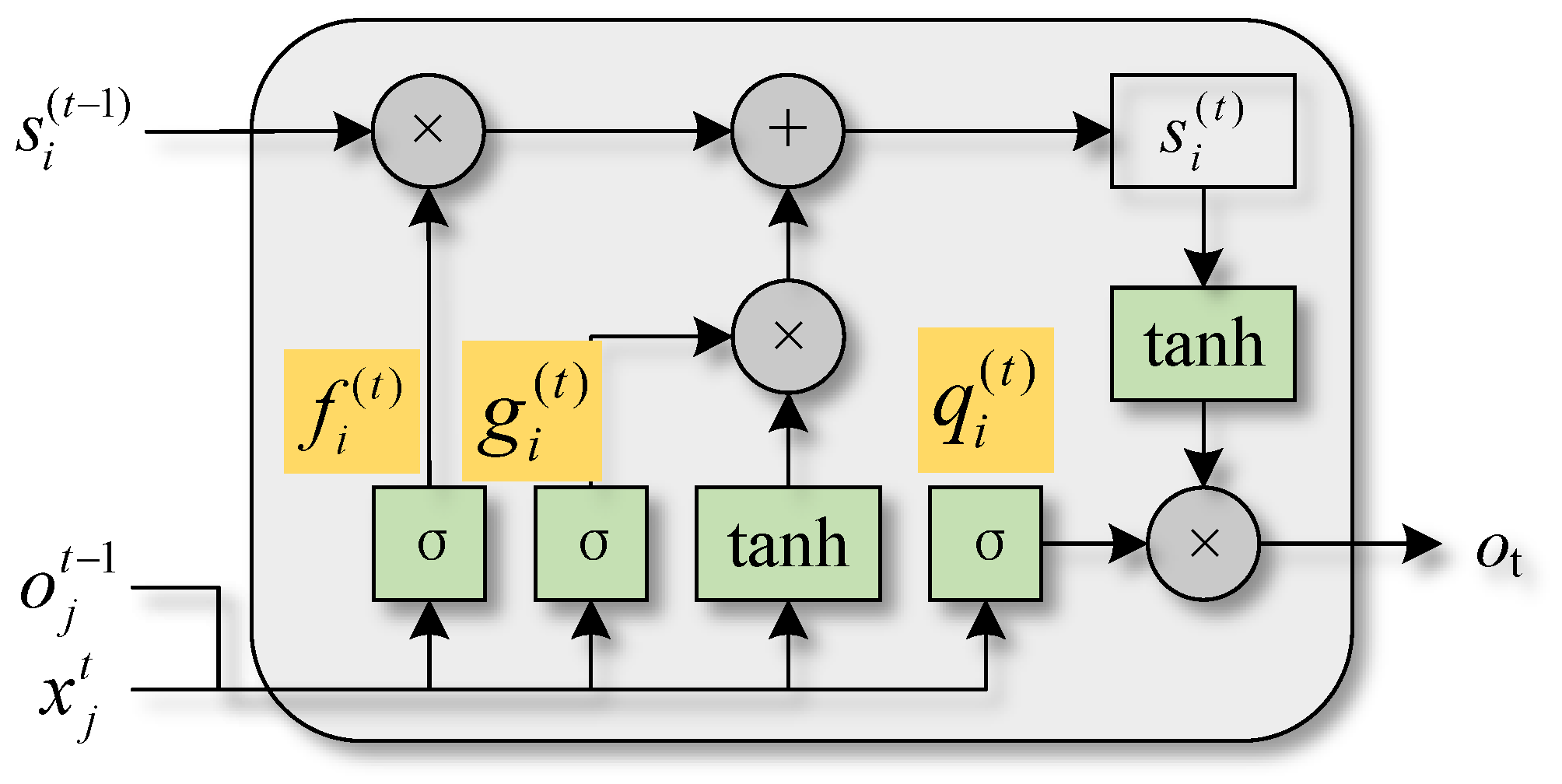

3.3. LSTM Model

A long short-term memory (LSTM) network is a kind of recurrent neural network designed to solve the long-term dependence problem of the RNN. It saves information for later use so that earlier information does not gradually disappear during processing, and it controls the state of its memory cells through a mechanism called a ‘gate’. Figure 9 shows the structure of a recurrent unit [47].

The information transmission process can be expressed as Equations (8)–(12).

where $\sigma$ and $\tanh$ are the bipolar sigmoid and hyperbolic tangent activation functions, respectively. $f_i^{(t)}$ indicates the forget gate output of the $i$th unit at the current time, $g_i^{(t)}$ indicates the value of the input gate at the current time, and $q_i^{(t)}$ indicates the value of the output gate. $x^{(t)}$ represents the input vector at the current moment, and $j$ is the index of its elements. $h^{(t-1)}$ contains the outputs of all cells at the previous moment and serves as the hidden layer vector fed into the current step. $b^f$, $U^f$, and $W^f$ represent the input bias, input weight, and recurrent weight of the forget gate; $b^g$, $U^g$, and $W^g$ represent the input bias, input weight, and recurrent weight of the input gate; and $b^o$, $U^o$, and $W^o$ are the input bias, input weight, and recurrent weight of the output gate. $s_i^{(t)}$ indicates the value of the state unit at the current time. The self-loop weight of the state unit is the output of the forget gate $f_i^{(t)}$.
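Equations (8)–(12) are missing from this text. A standard formulation of the LSTM cell that matches the quantities described above is sketched below; the symbol names follow a common textbook convention and are assumptions, as is the use of $\tanh$ for the candidate state.

```latex
\begin{align}
f_i^{(t)} &= \sigma\Big(b_i^{f} + \sum_j U_{i,j}^{f} x_j^{(t)} + \sum_j W_{i,j}^{f} h_j^{(t-1)}\Big) \tag{8}\\
g_i^{(t)} &= \sigma\Big(b_i^{g} + \sum_j U_{i,j}^{g} x_j^{(t)} + \sum_j W_{i,j}^{g} h_j^{(t-1)}\Big) \tag{9}\\
q_i^{(t)} &= \sigma\Big(b_i^{o} + \sum_j U_{i,j}^{o} x_j^{(t)} + \sum_j W_{i,j}^{o} h_j^{(t-1)}\Big) \tag{10}\\
s_i^{(t)} &= f_i^{(t)} s_i^{(t-1)} + g_i^{(t)} \tanh\Big(b_i + \sum_j U_{i,j} x_j^{(t)} + \sum_j W_{i,j} h_j^{(t-1)}\Big) \tag{11}\\
h_i^{(t)} &= \tanh\big(s_i^{(t)}\big)\, q_i^{(t)} \tag{12}
\end{align}
```

The first term of Equation (11) shows the forget gate acting as the self-loop weight of the state unit.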

The solar irradiance obtained in Section 3.2 is the theoretical irradiance that can be received by the ground under clear sky conditions (good weather, cloudless sky). Taking this theoretical irradiance as the reference value, the LSTM model is trained to fit the attenuation effect of meteorological factors (NWP variables and cloud features) on the theoretical irradiance, which yields the solar irradiance prediction model shown in Figure 10.

4. Case Study

4.1. Data Description and Preprocessing

This study used the historical data of the station (in Wuwei, Gansu Province, China) from 20 September 2017 to 29 December 2018 and selected a relatively complete 113-day period to validate the proposed method.

The satellite cloud image was a free infrared cloud image provided by the FY-2E satellite of the China Satellite Meteorological Center. The longitude and latitude of the station are 102.07° E and 38.35° N; therefore, the range of the satellite cloud images selected in this study is 101°–103° E and 37.5°–39.5° N.

To describe the features of clouds more accurately, the regional cloud image was transformed from the spatial domain to the frequency domain by Fourier transform, and then the textural details of the cloud image were enhanced by homomorphic filtering.

Homomorphic filtering simultaneously compresses the brightness range of an image and enhances its contrast in the frequency domain. It standardises the brightness so that the illumination of the image is more uniform, and it enhances image details in dark areas without losing details in bright areas by eliminating the multiplicative noise in the image [48]; a specific flowchart is shown in Figure 11.

The image $f(x, y)$, composed of pixel points $(x, y)$, usually consists of two parts, the irradiation function $i(x, y)$ and the reflection function $r(x, y)$, as shown in Equation (13).

$i(x, y)$ describes the illuminated portion of the image, which lies in the low frequencies, and $r(x, y)$ describes the texture details of the image, which lie in the high frequencies.

The two parts multiply together to form the image and cannot be processed separately, so homomorphic filtering uses a logarithmic function to turn the multiplication into an addition, as shown in Equation (14).

The image $F(u, v)$, the irradiation function $I(u, v)$, and the reflection function $R(u, v)$ in the frequency domain are obtained by Fourier transform, as shown in Equation (15).

The image is enhanced using the filter function $H(u, v)$, as shown in Equation (16).

Since the reflection function is concentrated in the high-frequency band, $H(u, v)$ is chosen as a high-pass filter function in order to obtain more texture details in the image, and its expression is shown in Equation (17),

where $\gamma_H$ and $\gamma_L$ are the high-frequency and low-frequency gain values, respectively (selecting $\gamma_H > 1$ and $\gamma_L < 1$ attenuates the low frequencies and enhances the high frequencies); $c$ controls the sharpness of the slope of the filter function; and $D(u, v)$ and $D_0$ denote the distance from the centre of the frequency plane and the cutoff frequency, respectively.
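Equations (13)–(17) are not reproduced in this text. The standard homomorphic filtering derivation that matches the description above is sketched below; the symbols $\gamma_H$ and $\gamma_L$ for the two gains and the Gaussian-type form of $H(u, v)$ are the usual textbook choices and are assumptions here.

```latex
\begin{align}
f(x, y) &= i(x, y)\, r(x, y) \tag{13}\\
\ln f(x, y) &= \ln i(x, y) + \ln r(x, y) \tag{14}\\
F(u, v) &= I(u, v) + R(u, v) \tag{15}\\
H(u, v) F(u, v) &= H(u, v) I(u, v) + H(u, v) R(u, v) \tag{16}\\
H(u, v) &= (\gamma_H - \gamma_L)\left[1 - \exp\!\left(-c\,\frac{D^2(u, v)}{D_0^2}\right)\right] + \gamma_L \tag{17}
\end{align}
```

The enhanced image is then recovered by the inverse Fourier transform followed by an exponential, which undoes the logarithm of Equation (14).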

Taking the cloud image at 00:30 on 27 September 2017 as an example, the results after homomorphic filtering are shown in Figure 12. Compared with the original image, the cloud characteristics are clearer and more accurately delineated after homomorphic filtering.
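The filtering pipeline (logarithm, Fourier transform, high-pass gain, inverse transform, exponential) can be sketched with NumPy as follows. This is a minimal illustration using the textbook Gaussian-type filter; the default gains, `c`, and `d0` are hypothetical values rather than the settings used in this study, and `log1p`/`expm1` are used instead of a plain logarithm to avoid taking the logarithm of zero.

```python
import numpy as np

def homomorphic_filter(image, gamma_h=1.5, gamma_l=0.5, c=1.0, d0=10.0):
    """Enhance high-frequency detail of a greyscale image by homomorphic filtering."""
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape
    # Logarithm turns the product of illumination and reflectance into a sum.
    log_image = np.log1p(image)
    # Centred spectrum: the zero frequency sits at index (rows // 2, cols // 2).
    spectrum = np.fft.fftshift(np.fft.fft2(log_image))
    u = np.arange(rows) - rows // 2
    v = np.arange(cols) - cols // 2
    dist_sq = u[:, None] ** 2 + v[None, :] ** 2
    # High-pass gain: gamma_l at the centre, approaching gamma_h far from it.
    h = (gamma_h - gamma_l) * (1.0 - np.exp(-c * dist_sq / d0 ** 2)) + gamma_l
    filtered = np.fft.ifft2(np.fft.ifftshift(h * spectrum)).real
    # Undo the logarithm.
    return np.expm1(filtered)

# A constant image has only a zero-frequency component, which is scaled by gamma_l.
flat = np.full((8, 8), 99.0)
flat_out = homomorphic_filter(flat)
```

For the constant test image the output equals $(1 + 99)^{0.5} - 1 = 9$ everywhere, which shows the low-frequency (illumination) component being compressed.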

4.2. Determination of the Input Variables

The choice of suitable input variables is crucial to the forecasting accuracy of artificial intelligence models. Insufficient input data can lead to poor forecasting performance, while including too many variables can cause the curse of dimensionality, which may result in longer computation times and reduced model efficiency [20].

The input variables for the ultra-short-term solar irradiance prediction model are divided into three parts. The first part is the textural features of clouds, which are extracted by the CNN with the textural convolution kernel and predicted for the next 1–4 h using an ARIMA model; the second part is the theoretical irradiance in a clear sky calculated by the improved ASHRAE model; and the third part is the NWP variables that are strongly related to solar irradiance, including temperature, humidity, precipitation, shortwave radiation, and sensible heat flux. The Pearson correlation coefficients are presented in Table 2.

The satellite cloud image enhanced by homomorphic filtering is feature-mapped to obtain the feature cloud image, and then the textural features of the cloud are extracted from the feature cloud image by the CNN with the textural convolution kernel (TCK).

Based on the cloud textural features extracted at historical times, ARIMA models are established to predict future cloud textural features. First, a stationarity test is performed on the cloud textural features. If the series is non-stationary, it is differenced until it becomes stationary. The number of differencing steps is the order of difference $d$ in the ARIMA($p$, $d$, $q$) model. Then, based on the autocorrelation function (ACF) and partial autocorrelation function (PACF) of the feature sequence, the AIC and SC function values are calculated to determine the optimal autoregressive order $p$ and moving average order $q$. In the ARIMA model, $p$ indicates that the future value is related to the previous $p$ values, and $q$ indicates that the future noise is related to the previous $q$ noise terms. The order of the ARIMA model is therefore determined by the values of $p$ and $q$. Following these steps, the ARIMA time-series prediction model for cloud texture features was trained and tuned, and its parameters were finally determined to be $p = 7$, $d = 1$, and $q = 3$. The prediction results of each textural feature as the prediction horizon increases are shown in Figure 13. The predictions within 1–4 h closely follow the changes in cloud cluster characteristics over a short period, whereas the prediction quality worsens between 5 and 8 h. The mean absolute percentage error (MAPE) is shown in Table 3, from which it can be seen that the prediction errors of all four features are relatively small for 1–4 h and larger for 5–8 h. These results show that the predicted cloud textural features are applicable to solar irradiance prediction up to 4 h ahead.

The time resolution of the satellite cloud image used in this study was 1 h, while the time resolution of the predicted power of the photovoltaic power station was generally 15 min. The textural features of clouds at 15 min intervals were obtained by linear interpolation.
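The resampling step can be sketched with `numpy.interp`, which performs piecewise linear interpolation. The hourly feature values below are hypothetical and serve only to illustrate the conversion from 1 h to 15 min resolution.

```python
import numpy as np

# Hourly sample times (in minutes) and a hypothetical textural feature value at each.
hourly_minutes = np.array([0, 60, 120])
hourly_feature = np.array([0.20, 0.60, 0.40])

# Target grid at 15 min resolution covering the same interval.
quarter_minutes = np.arange(0, 121, 15)
quarter_feature = np.interp(quarter_minutes, hourly_minutes, hourly_feature)
```

Each hourly interval contributes three interpolated points in addition to its end points, so two hours of data yield nine values.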

Based on the correction steps of the clear sky model described in Section 3.2, we set the parameters of $A$ and $k$ in Equations (6) and (7) as follows.

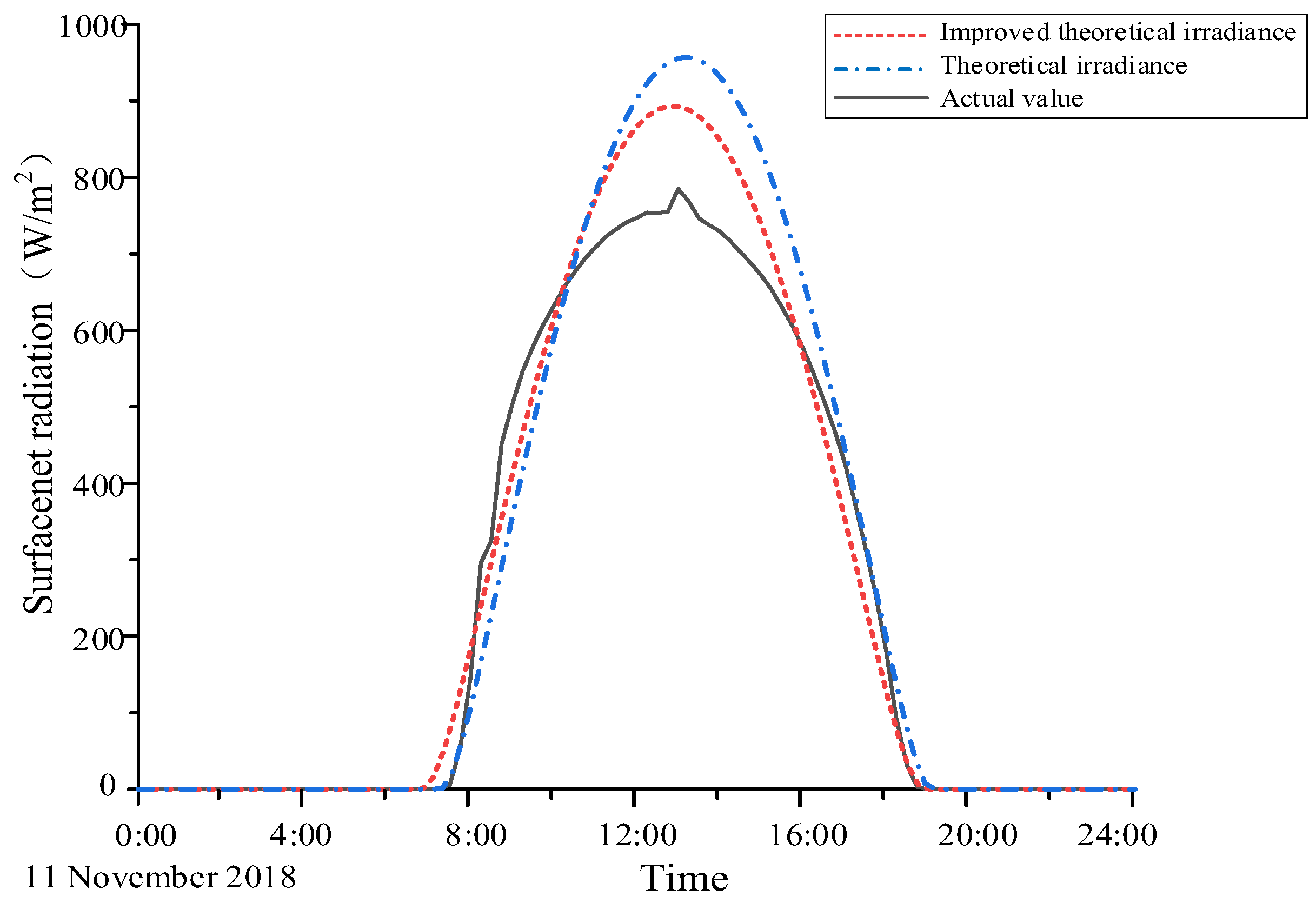

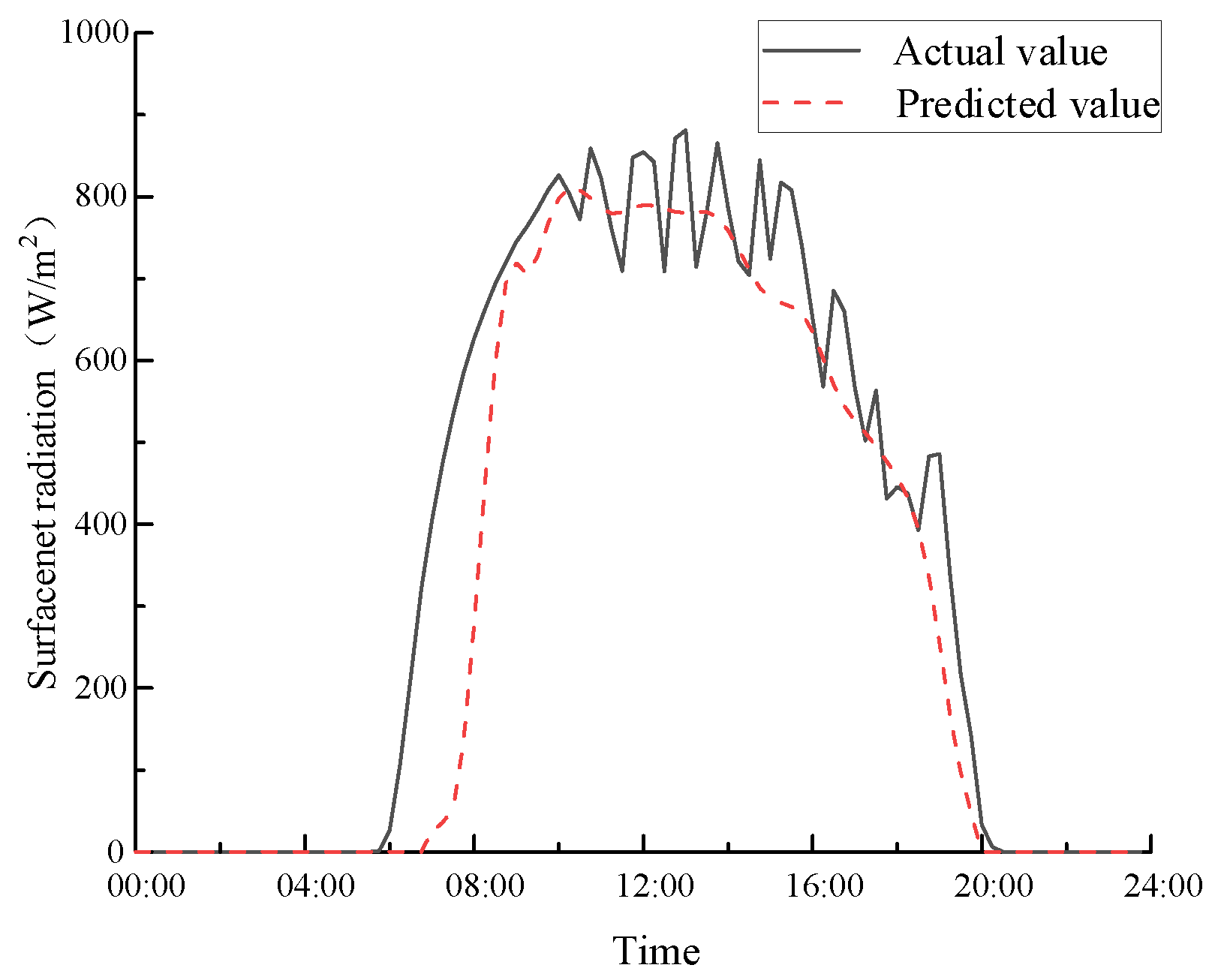

The theoretical clear-sky irradiance calculated with the improved ASHRAE model is shown in Figure 14, and the error calculations are shown in Table 4. The theoretical irradiance obtained from the improved clear-sky model is more consistent with the trend in actual irradiance and has a smaller error. However, the ASHRAE model does not consider complex weather changes, and its prediction is the theoretical value under clear sky conditions. Although the trend in solar irradiance can be fitted, the predicted value is higher than the actual value. Therefore, it is necessary to correct the theoretical irradiance of the clear sky model with other weather information.

4.3. Compared Models

To highlight the effectiveness of the proposed integrated framework, comprehensive comparisons are made in this study by constructing the other models. The experiments are divided into four groups: the first group is the NWP result, which is represented by the WRF-Solar model; the second group contains three common models, which are the ANN, SVR, and LSTM prediction results; the third group of experiments are the results of the partially integrated framework, CNN-LSTM and CS-LSTM; and the fourth group are the results of three feature extraction methods—PO-CS-LSTM, SO-CS-LSTM, and TCK-CS-LSTM—where PO represents Prewitt operator and SO represents Sobel operator.

The NWP and CS models display the rough solar radiation without any machine learning process to show the necessity of machine learning. The performance of the two popular machine learning methods, including ANN and SVR, is evaluated to highlight the performance of the standalone LSTM model. The partially integrated models CNN-LSTM and CS-LSTM are constructed to demonstrate the effectiveness of cloud feature extraction with CNN and the CS calculation in forecasting solar radiation. The feature extraction-based models including PO-CS-LSTM and SO-CS-LSTM are also constructed to compare with the proposed TCK-CS-LSTM model to further validate the effectiveness of the TCK method in extracting textural features of clouds and improving the solar radiation forecasting.

4.4. Performance Criteria

Three widely used metrics were used to evaluate model performance: the mean absolute error (MAE), the root mean square error (RMSE), and the correlation coefficient ($R^2$), defined as follows,

where $y_i$ and $\hat{y}_i$ are the actual and forecasted solar radiation at point $i$, respectively, and $n$ represents the total number of forecasting points.
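The three metrics can be computed as in the sketch below. MAE and RMSE follow their standard definitions; for $R^2$ the sketch assumes the coefficient of determination, $1 - SS_{res}/SS_{tot}$, which is the most common meaning of the symbol but may differ from the formula used here. The sample values are hypothetical.

```python
import math

def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)

def rmse(actual, forecast):
    """Root mean square error."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))

def r_squared(actual, forecast):
    """Coefficient of determination: 1 - SS_res / SS_tot (assumed definition)."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - f) ** 2 for a, f in zip(actual, forecast))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot

# Hypothetical actual and forecasted values.
actual = [1.0, 2.0, 3.0, 4.0]
forecast = [1.5, 2.0, 2.5, 4.0]
scores = (mae(actual, forecast), rmse(actual, forecast), r_squared(actual, forecast))
```

Lower MAE and RMSE and a higher $R^2$ indicate a better forecast; RMSE penalises large individual errors more strongly than MAE.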

5. Results and Discussion

The nine models constructed in this study are applied to the solar radiation datasets for forecasting. The model performances are evaluated by three statistical measures: RMSE, MAE, and $R^2$. This section compares the results of the proposed TCK-CS-LSTM model with those of the contrast methods, namely NWP, ANN, SVR, LSTM, CS-LSTM, CNN-LSTM, PO-CS-LSTM, and SO-CS-LSTM. The models can be categorised into four groups. The first group includes the NWP model, which provides predictions without machine learning. The second group includes three traditional models (ANN, SVR, and LSTM) to highlight the performance of LSTM. The third group consists of the partially integrated models CNN-LSTM and CS-LSTM, which use either CNN extraction of cloud features or CS computation of clear-sky solar radiation to improve prediction. The last group compares three models based on cloud feature extraction, the PO-CS-LSTM, the SO-CS-LSTM, and the proposed TCK-CS-LSTM, where PO and SO stand for Prewitt operator and Sobel operator, respectively, and TCK is the textural convolution kernel proposed in this paper.

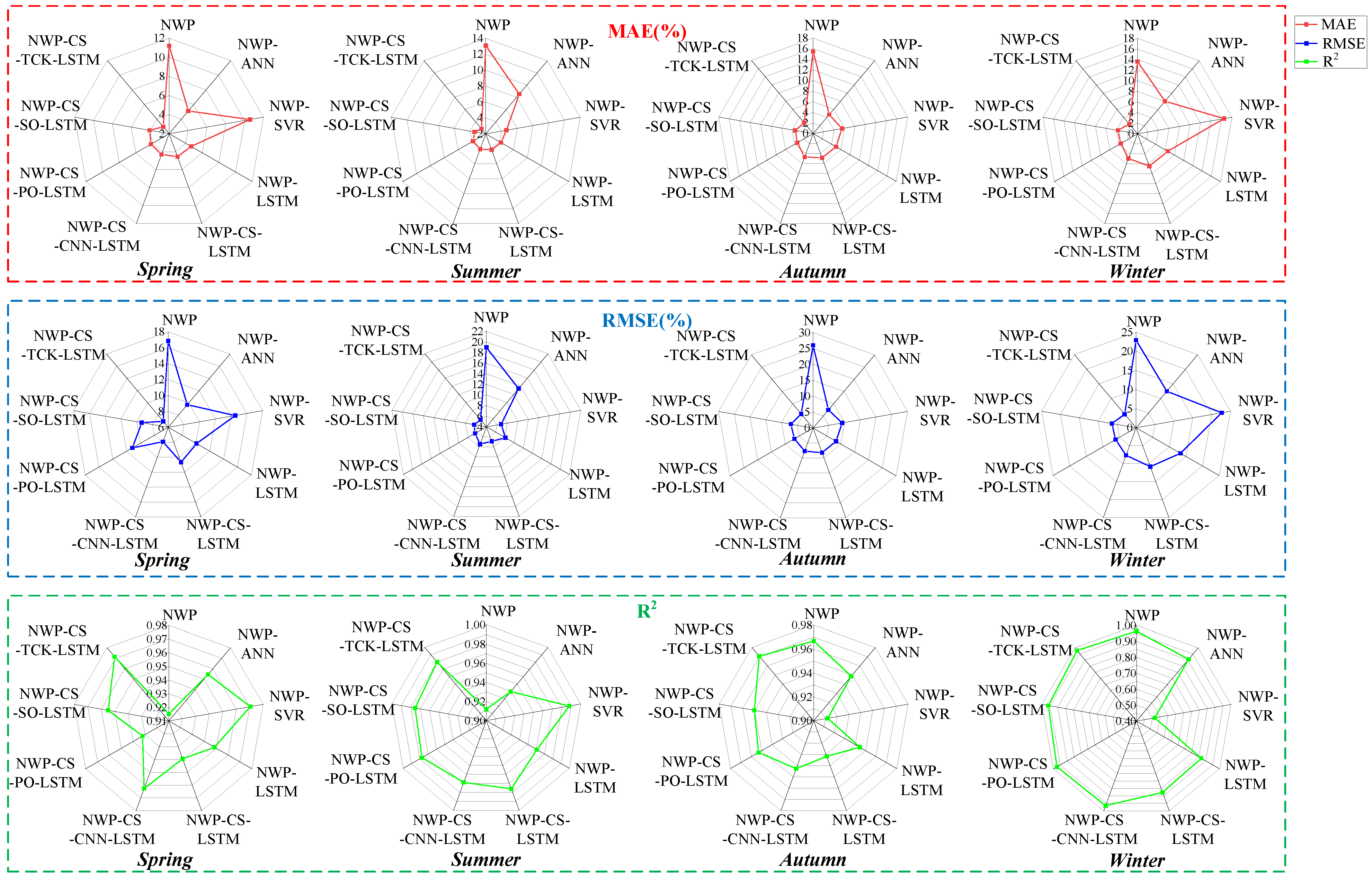

5.1. Comparison of Prediction Results Using Different Input Variables

The solar radiation forecasting results obtained with different input variables are first compared and analysed in this section. The values of the three statistical measures, RMSE, MAE, and $R^2$, are listed in Table 5 for the proposed and the contrast models. To exhibit the error measures more intuitively, the RMSE, MAE, and $R^2$ values of the nine models for the four datasets are illustrated using radar plots in Figure 15. The experimental results in Table 5 show the solar irradiance prediction performance of the different models in different seasons. Comparing MAE, RMSE, and $R^2$ shows that the proposed TCK-CS-LSTM model exhibits the best prediction performance in all seasons.

Several conclusions can be drawn from the detailed analysis of Table 5 and Figure 15.

NWP lacks sufficient accuracy in predicting solar radiation; thus, relying only on numerical weather prediction for solar radiation prediction is limiting, and the introduction of machine learning methods is necessary.

Among the ANN, SVR, and LSTM models, LSTM generally has the most balanced performance across seasons, demonstrating its advantage in dealing with complex nonlinear relationships. ANN performs well in some cases but is not as robust as LSTM in some seasons. SVR performs well under specific conditions, but its performance fluctuates greatly under complex weather changes and is clearly less stable than that of LSTM. LSTM is therefore more adaptable and stable across diverse weather conditions.

The comparison between the partially integrated models (CNN-LSTM and CS-LSTM) and the comprehensive integrated models (PO-CS-LSTM, SO-CS-LSTM, and TCK-CS-LSTM) shows that the comprehensive integrated models exhibit superior performance in solar radiation prediction. Each partially integrated model improves the prediction accuracy by passing either the cloud features (CNN-LSTM) or the clear-sky irradiance (CS-LSTM) to the LSTM model. The comprehensive integrated models build on this by combining the extracted cloud features with the calculated clear-sky irradiance, which further improves the prediction accuracy and stability of the model.

The comparison between the integrated models shows that the TCK-CS-LSTM model outperforms PO-CS-LSTM and SO-CS-LSTM in all the metrics, indicating that the cloud features extracted using the texture convolution kernel provide more accurate prediction results than those extracted with the Prewitt and Sobel operators. By introducing the texture convolution kernel, TCK-CS-LSTM improves the accuracy and stability of cloud feature extraction and thus achieves the best prediction performance under complex weather conditions. TCK-CS-LSTM provides the most accurate and reliable solar radiation prediction among the compared models.

5.2. Comparison of Prediction Results with Different Convolution Kernels

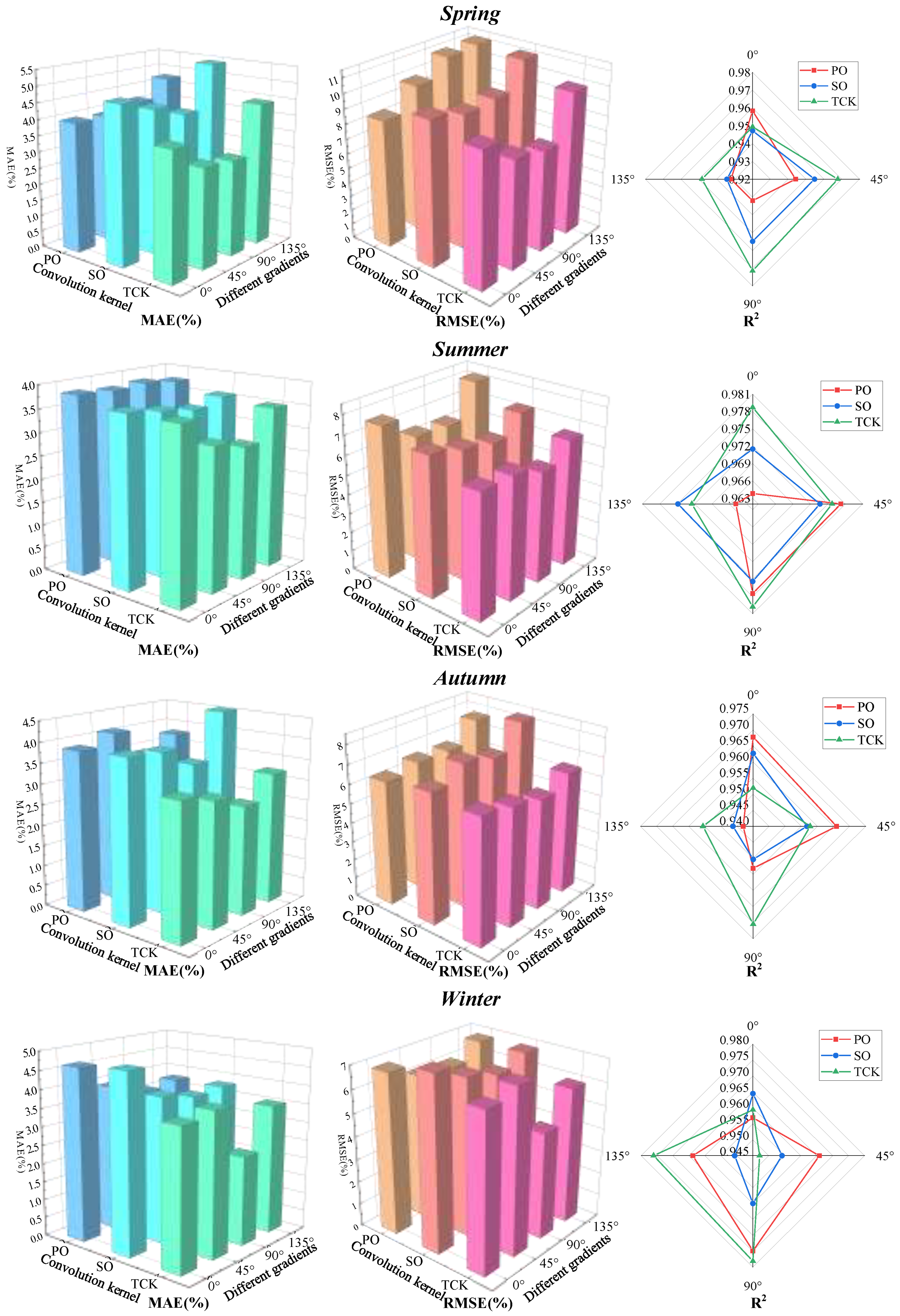

The experimental results in Table 6 and Figure 16 show the effect of cloud features extracted from four gradient orientations with different convolution kernels on the solar irradiance prediction accuracy in different seasons. In terms of MAE, RMSE, and R², TCK performs much better in all seasons and metrics. The Prewitt and Sobel operators, although fairly stable, are less accurate overall than TCK in MAE and RMSE. This suggests that TCK extracts the cloud texture features better and therefore provides higher prediction accuracy and stability. A further analysis of the cloud features extracted by TCK under different gradient orientations shows that the 90° orientation performs particularly well. For example, in spring and winter, the MAE of the 90° direction is as low as 2.95% and 2.41%, respectively, which is more accurate than the results of the other directions. The corresponding RMSE and R² values also indicate that the convolution kernel in the 90° gradient direction is more capable of capturing cloud details and complex features.
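To make the role of gradient orientation concrete, the sketch below applies the standard 3 × 3 Prewitt and Sobel operators in two perpendicular directions to a toy image. It is only an illustration under stated assumptions: the TCK weights are not reproduced here, the helper names `filter2d_valid` and `edge_strength` are hypothetical, and the mapping of the x and y directions to specific angles such as 0° or 90° depends on a convention that this section does not state.

```python
import numpy as np

# Standard 3 x 3 operators for the x direction (left-to-right intensity change).
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
PREWITT_X = np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], dtype=float)


def filter2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def edge_strength(image, kernel):
    """Mean absolute filter response, used here as a toy scalar feature."""
    return float(np.mean(np.abs(filter2d_valid(image, kernel))))


# Toy image: dark left half, bright right half (a single vertical boundary).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

sobel_x = edge_strength(image, SOBEL_X)        # responds to the boundary
sobel_y = edge_strength(image, SOBEL_X.T)      # perpendicular direction
prewitt_x = edge_strength(image, PREWITT_X)
prewitt_y = edge_strength(image, PREWITT_X.T)

print(sobel_x, sobel_y, prewitt_x, prewitt_y)
```

The same boundary produces a strong response in one direction and none in the perpendicular one, which is why the choice of gradient orientation can change the extracted cloud features and, in turn, the prediction accuracy.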

Table 7 and Figure 17 compare TCKs of different sizes. The 3 × 3 convolution kernel has the best overall performance, outperforming the other sizes in terms of both prediction error (MAE and RMSE) and model fit (R²). In contrast, the 1 × 1 convolution kernel is too small to effectively capture complex cloud features, resulting in lower prediction accuracy. The 5 × 5 and 7 × 7 convolution kernels are able to capture more information about image features, but the larger sizes may introduce more noise or redundant information, which in turn reduces the prediction performance of the model.
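The limitation of the 1 × 1 kernel can be illustrated with a short sketch. It assumes a plain unpadded filtering operation and uses hypothetical names and a random toy image, so it shows only how the neighbourhood seen by each output value grows with kernel size, not the noise behaviour of the actual TCK.

```python
import numpy as np


def filter2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


rng = np.random.default_rng(0)
image = rng.random((8, 8))  # toy stand-in for a cloud image patch

# A 1 x 1 kernel only rescales each pixel: no neighbouring pixels are used,
# so no texture or gradient information can be encoded.
out_1x1 = filter2d_valid(image, np.array([[0.5]]))
same_as_scaling = bool(np.allclose(out_1x1, 0.5 * image))

# Larger kernels mix progressively more neighbouring pixels per output value.
pixels_per_output = {}
output_shapes = {}
for size in (1, 3, 5, 7):
    kernel = np.ones((size, size)) / size**2  # simple averaging weights
    pixels_per_output[size] = kernel.size
    output_shapes[size] = filter2d_valid(image, kernel).shape

print(same_as_scaling, pixels_per_output, output_shapes)
```

Each 3 × 3 output already combines 9 pixels, while a 7 × 7 output combines 49, which is consistent with the observation that larger kernels take in more context but also more potentially redundant information.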

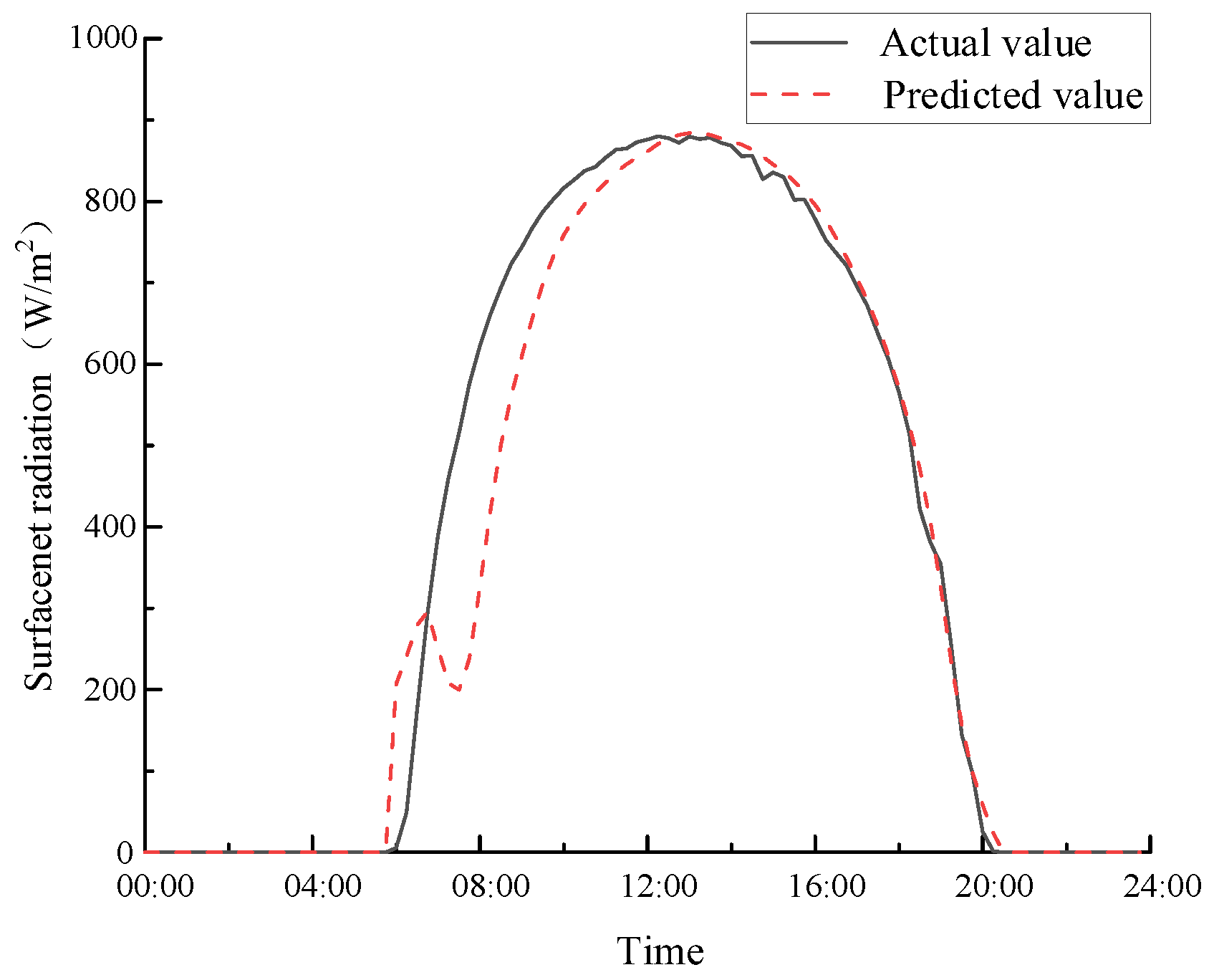

5.3. Predicted Results Under Different Weather Conditions

The validity of the predictions under different weather conditions is verified using the TCK-CS-LSTM model with a texture convolution kernel of size 3 × 3 and a 90° gradient direction. Figure 18, Figure 19 and Figure 20 show the prediction results under sunny and cloudy skies, from which it can be seen that the model is able to make accurate predictions under different weather conditions.