This section presents a detailed analysis of the performance of the convolutional neural network models used for waste classification. First, the classification metrics used to evaluate the models are described: accuracy, precision, recall, F1-score, and the Matthews correlation coefficient (MCC). The evolution of each model's accuracy during training is then analyzed, providing an overview of their ability to generalize to test data.

4.2. Performance Analysis of CNN Models

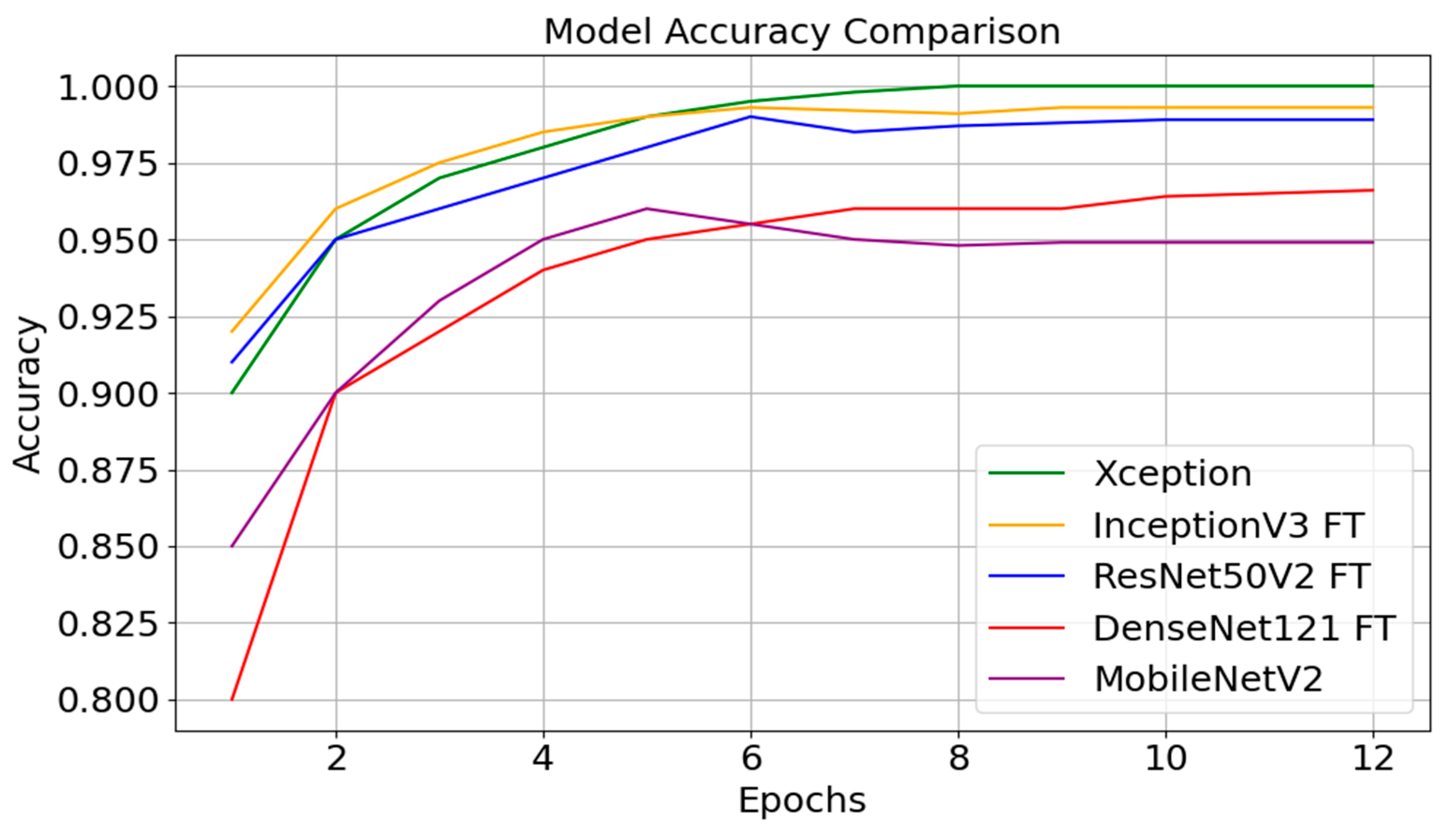

Figure 3 shows the evolution of accuracy, the percentage of correct predictions over the total number of samples evaluated, for the different neural network models over 12 epochs. All models experienced a marked increase in accuracy during the early epochs, reflecting an accelerated learning phase. This initial trend suggests that the models captured the most distinctive features of the classes early in training. From epochs 4 to 6 onward, the accuracy of most models stabilized, indicating that they had achieved an adequate representation of the training data with no apparent overfitting.
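As a minimal sketch of how the quantities behind Figure 3 can be computed, the Python snippet below (an illustration, not the authors' code) defines accuracy as correct predictions over total samples and flags the first epoch after which a per-epoch accuracy curve changes by less than a tolerance for a given number of consecutive epochs. The tolerance, the `patience` parameter, the helper names, and the curve values in the example are hypothetical.

```python
from typing import Hashable, List, Optional, Sequence


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def plateau_epoch(history: List[float], tol: float = 0.005, patience: int = 2) -> Optional[int]:
    """Return the 1-based epoch from which accuracy changes by less than `tol`
    for `patience` consecutive epochs, or None if the curve never settles."""
    for start in range(len(history) - patience):
        window = history[start:start + patience + 1]
        if all(abs(b - a) < tol for a, b in zip(window, window[1:])):
            return start + 1
    return None


# Hypothetical per-epoch accuracy curve (not data from Figure 3).
curve = [0.70, 0.85, 0.92, 0.960, 0.962, 0.963, 0.963]
print(plateau_epoch(curve))  # 4
print(accuracy(["metal", "paper", "plastic", "paper"],
               ["metal", "paper", "paper", "paper"]))  # 0.75
```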

In terms of overall performance, the Xception and InceptionV3 (FT) models stood out, achieving over 98% accuracy in the final epochs, which demonstrates their ability to classify images accurately and consistently. ResNet50V2 (FT) also performed strongly, approaching 98%, although it showed slight variations in the final epochs. These fluctuations may indicate some sensitivity to configuration changes or less stable convergence than Xception and InceptionV3. Although none of these models incurs licensing costs for training, the time required to train them varies significantly. Deeper architectures such as Xception and ResNet50V2 require more computational resources and longer training times than lighter models such as MobileNetV2, which train faster but achieve lower accuracy. Likewise, at inference time, Xception and InceptionV3 (FT) required slightly longer processing times than MobileNetV2 because of their higher complexity. These results indicate a trade-off between classification accuracy, training time, and processing speed, so the model should be selected according to the specific needs of the application.
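The trade-off can be made explicit with a simple selection rule: among the models whose inference latency fits a budget, pick the most accurate one. The sketch below is a hypothetical illustration; the accuracies are the test accuracies reported in Table 6 for Xception, InceptionV3, and MobileNetV2, but the latency figures are placeholders, not measurements from this study, and the function name is invented for this example.

```python
from typing import Dict, Optional

Candidate = Dict[str, float]  # keys: "accuracy", "latency_ms"


def pick_model(candidates: Dict[str, Candidate], max_latency_ms: float) -> Optional[str]:
    """Return the most accurate model whose latency is within the budget, or None."""
    feasible = {name: c for name, c in candidates.items() if c["latency_ms"] <= max_latency_ms}
    if not feasible:
        return None
    return max(feasible, key=lambda name: feasible[name]["accuracy"])


candidates = {
    # accuracy: test accuracy reported in Table 6; latency_ms: PLACEHOLDER values
    "Xception": {"accuracy": 0.9813, "latency_ms": 40.0},
    "InceptionV3 (FT)": {"accuracy": 0.9743, "latency_ms": 35.0},
    "MobileNetV2": {"accuracy": 0.9497, "latency_ms": 10.0},
}
print(pick_model(candidates, max_latency_ms=50.0))  # Xception
print(pick_model(candidates, max_latency_ms=20.0))  # MobileNetV2
```

With a generous budget the rule favors Xception; with a tight one it falls back to the lighter MobileNetV2, mirroring the trade-off described above.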

In contrast, the DenseNet121 (FT) and MobileNetV2 models performed comparatively worse, stabilizing at around 94% and 92%, respectively. This suggests that these architectures may be less suitable for this specific task or may require further hyperparameter tuning. Their failure to reach the accuracy levels of Xception and InceptionV3 suggests limitations in extracting the features critical for accurate classification on this dataset.

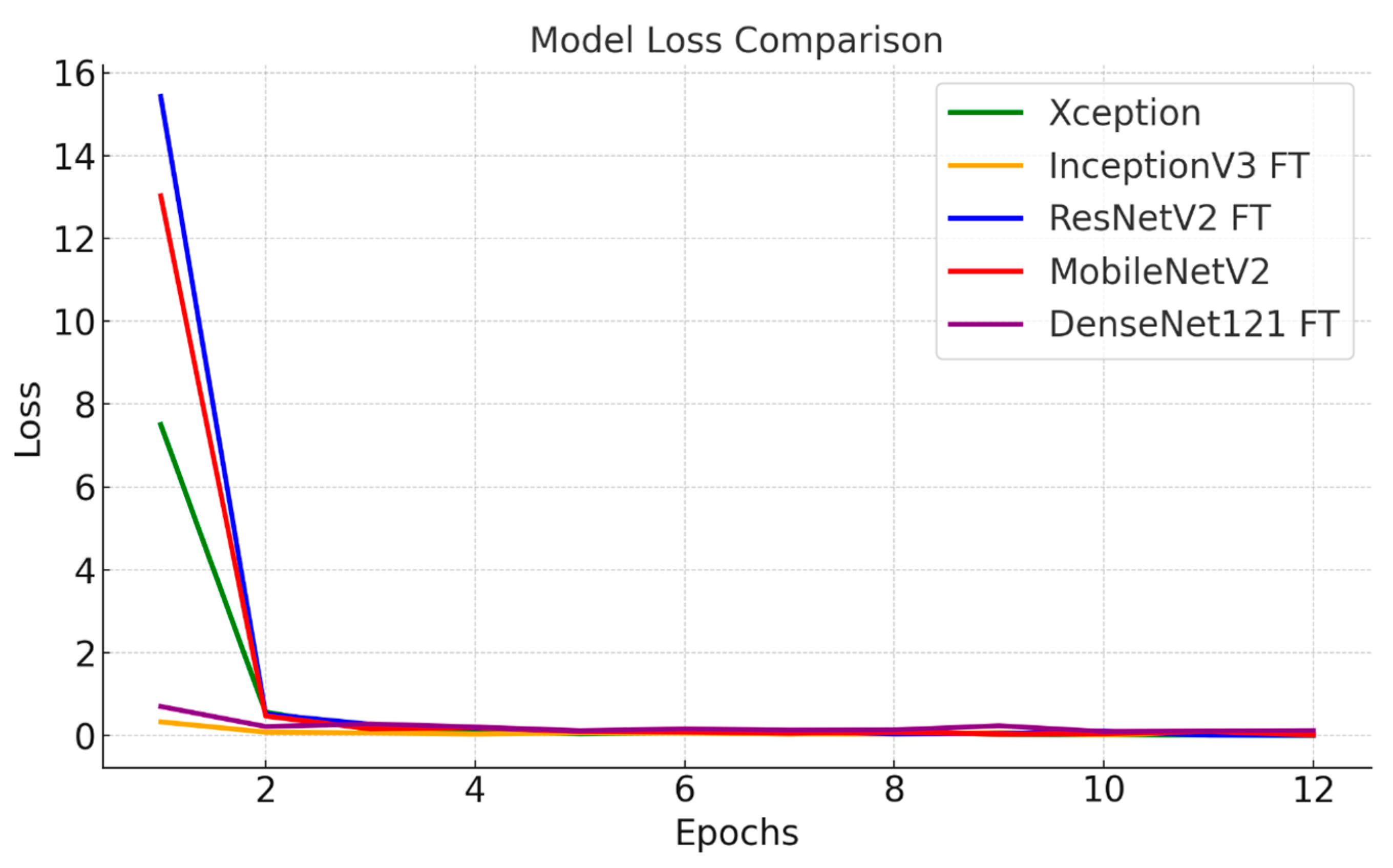

Figure 4 illustrates the evolution of loss across the different classification models over 12 epochs, reflecting the quality of each model’s fit to the correct labels. Loss is a crucial indicator of learning quality, with its reduction signifying progress toward improved predictions. In this case, the loss function used is categorical cross-entropy, which is suitable for multiclass classification problems.
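For $N$ samples and $C$ classes with one-hot labels $y_{i,c}$ and predicted probabilities $\hat{y}_{i,c}$, categorical cross-entropy is $L = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\log\hat{y}_{i,c}$. The minimal Python sketch below computes this mean loss. Clipping the probabilities to $[\epsilon, 1-\epsilon]$ is an assumption added here to avoid $\log 0$ (deep learning frameworks apply a similar safeguard), and the example labels and probabilities are hypothetical.

```python
import math
from typing import Sequence


def categorical_cross_entropy(y_true: Sequence[Sequence[float]],
                              y_pred: Sequence[Sequence[float]],
                              eps: float = 1e-7) -> float:
    """Mean categorical cross-entropy over a batch of one-hot labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    total = 0.0
    for labels, probs in zip(y_true, y_pred):
        # Clip probabilities so log() is always defined.
        total -= sum(t * math.log(min(max(p, eps), 1.0 - eps))
                     for t, p in zip(labels, probs))
    return total / len(y_true)


# Classes ordered as (metal, paper, plastic); values are illustrative.
labels = [[1, 0, 0], [0, 0, 1]]
probs = [[0.7, 0.2, 0.1], [0.2, 0.2, 0.6]]
print(categorical_cross_entropy(labels, probs))  # ≈ 0.434
```

Only the probability assigned to the true class contributes to each sample's loss, which is why the loss approaches zero as the models become confident and correct.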

Across the first two epochs, all models exhibited a notable reduction in loss, indicating that the initial parameters quickly adjusted to the dataset’s fundamental characteristics. This sharp decline suggests that the optimization process effectively captured the key relationships between features and target labels early on, rapidly improving the learning performance.

As training progressed, from the third epoch onward, the loss of all models decreased to values close to zero and remained stable. This indicates that the models achieved stable convergence and fitted the training data well; the absence of significant fluctuations in the final epochs is consistent with well-behaved training, although ruling out overfitting also requires comparison with the validation loss.
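One common way to check for overfitting is to monitor validation loss alongside training loss: if validation loss stops improving for several epochs while training loss keeps decreasing, the model is starting to fit peculiarities of the training set. The Python sketch below implements such a check in the spirit of early stopping; the function name, the `patience` value, and the loss values are hypothetical and are not taken from Figure 4.

```python
from typing import Optional, Sequence


def overfitting_epoch(train_loss: Sequence[float], val_loss: Sequence[float],
                      patience: int = 2) -> Optional[int]:
    """Return the 1-based epoch with the best validation loss if validation loss
    then fails to improve for `patience` epochs while training loss keeps falling;
    return None if no such pattern appears."""
    if len(train_loss) != len(val_loss):
        raise ValueError("loss curves must have the same length")
    best_val, best_idx, wait = float("inf"), 0, 0
    for i, val in enumerate(val_loss):
        if val < best_val:
            best_val, best_idx, wait = val, i, 0
            continue
        wait += 1
        if wait >= patience and train_loss[i] < train_loss[best_idx]:
            return best_idx + 1
    return None


# Illustrative curves: validation loss bottoms out at epoch 3.
train = [1.0, 0.5, 0.3, 0.2, 0.1, 0.05]
val = [1.1, 0.6, 0.4, 0.45, 0.5, 0.55]
print(overfitting_epoch(train, val))  # 3
```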

Notably, although some models started with slightly higher loss values, all of them converged toward similar values by the end of training. The stability observed in the final epochs suggests that hyperparameter tuning and optimization techniques, such as fine-tuning, were applied effectively, improving the models’ predictive capacity while avoiding oscillation or divergence.

4.3. Training Results

Table 5 presents a detailed evaluation of the performance of the different neural network models when using the RMSprop optimizer with variations in the hyperparameters: learning rate, epochs, and batch size.
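RMSprop keeps an exponentially decaying average of squared gradients and divides each step by its square root, so the effective step size adapts to the gradient scale. The scalar Python sketch below uses the textbook update $v_t = \rho v_{t-1} + (1-\rho) g_t^2$, $w_t = w_{t-1} - \eta\, g_t / (\sqrt{v_t} + \epsilon)$. The values $\rho = 0.9$ and $\epsilon = 10^{-7}$ are assumptions (framework implementations may differ in details such as where $\epsilon$ is added), and the quadratic objective is a toy example, not one of the CNN models.

```python
import math
from typing import Callable


def rmsprop_minimize(grad: Callable[[float], float], w0: float, lr: float = 0.01,
                     rho: float = 0.9, eps: float = 1e-7, steps: int = 500) -> float:
    """Minimize a 1-D function with plain (non-centered, no momentum) RMSprop."""
    w, v = w0, 0.0
    for _ in range(steps):
        g = grad(w)
        v = rho * v + (1.0 - rho) * g * g      # running mean of squared gradients
        w -= lr * g / (math.sqrt(v) + eps)     # gradient scaled by its RMS
    return w


# Toy objective f(w) = w**2 with gradient 2w, using the learning rates discussed for Table 5.
for lr in (0.02, 0.01, 0.005):
    print(lr, rmsprop_minimize(lambda w: 2.0 * w, w0=1.0, lr=lr))
```

Because each step is normalized by the gradient's running RMS, the step length stays close to the learning rate, so a larger learning rate reaches the minimum faster but then oscillates around it with a larger amplitude.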

The evaluation of the different neural network models under specific hyperparameter configurations revealed significant differences in performance. With the RMSprop optimizer, the Xception, DenseNet121, and MobileNetV2 models performed favorably with a learning rate of 0.02, achieving high validation and test accuracy. Xception stood out with a validation accuracy of 98.78% and a test accuracy of 98.12%, with a training time of 2820 s. DenseNet121 also performed very well, achieving a test accuracy of 96.60% with a training time of only 823 s, a strong balance between accuracy and computational efficiency.

MobileNetV2, although less accurate than Xception and DenseNet121, showed solid validation and test performance with a learning rate of 0.02, offering an adequate balance between accuracy and processing time.

The InceptionV3 and ResNet50V2 models achieved their best results with a learning rate of 0.01, recording validation accuracies above 97% and shorter training times, indicating that both models converged efficiently with a lower learning rate. In contrast, MobileNetV3 exhibited the most limited performance: it achieved its maximum validation accuracy with a learning rate of 0.005, but its results remained significantly lower than those of the other architectures, leaving considerable room for improvement before it could be applied in this context.
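The configuration search summarized in Table 5 can be sketched as an exhaustive grid over learning rate, number of epochs, and batch size, keeping the configuration with the highest validation accuracy. In the Python sketch below, the learning rates are those mentioned above and the 12 epochs match Figures 3 and 4, while the batch sizes and the `toy_evaluate` scoring function are hypothetical stand-ins for training a model with RMSprop and measuring its validation accuracy.

```python
import itertools
from typing import Callable, Iterable, Tuple

Config = Tuple[float, int, int]  # (learning_rate, epochs, batch_size)


def grid_search(evaluate: Callable[[float, int, int], float],
                learning_rates: Iterable[float],
                epochs: Iterable[int],
                batch_sizes: Iterable[int]) -> Tuple[Config, float]:
    """Try every combination and return the best config and its score."""
    best_cfg, best_score = None, float("-inf")
    for lr, ep, bs in itertools.product(learning_rates, epochs, batch_sizes):
        score = evaluate(lr, ep, bs)
        if score > best_score:
            best_cfg, best_score = (lr, ep, bs), score
    if best_cfg is None:
        raise ValueError("empty search space")
    return best_cfg, best_score


def toy_evaluate(lr: float, epochs: int, batch_size: int) -> float:
    # HYPOTHETICAL stand-in for "train with RMSprop, return validation accuracy".
    return 0.9 - abs(lr - 0.01) - 0.001 * abs(batch_size - 32)


cfg, score = grid_search(toy_evaluate, [0.02, 0.01, 0.005], [12], [16, 32, 64])
print(cfg, score)  # (0.01, 12, 32) 0.9
```

Running the grid separately for each architecture is what allows different models to settle on different learning rates, as observed for Xception (0.02) and InceptionV3 (0.01).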

The difference in accuracy between the Xception and ResNet50V2 models may be attributed to ResNet50V2 adapting less effectively to this classification task. Characteristics of the data may introduce variations that ResNet50V2 does not handle as well as Xception, and despite having more parameters (23.6 M vs. 20.8 M), its architecture is less effective at extracting relevant features. The difference therefore stems not from training speed but from the model’s ability to generalize to the validation and test data.

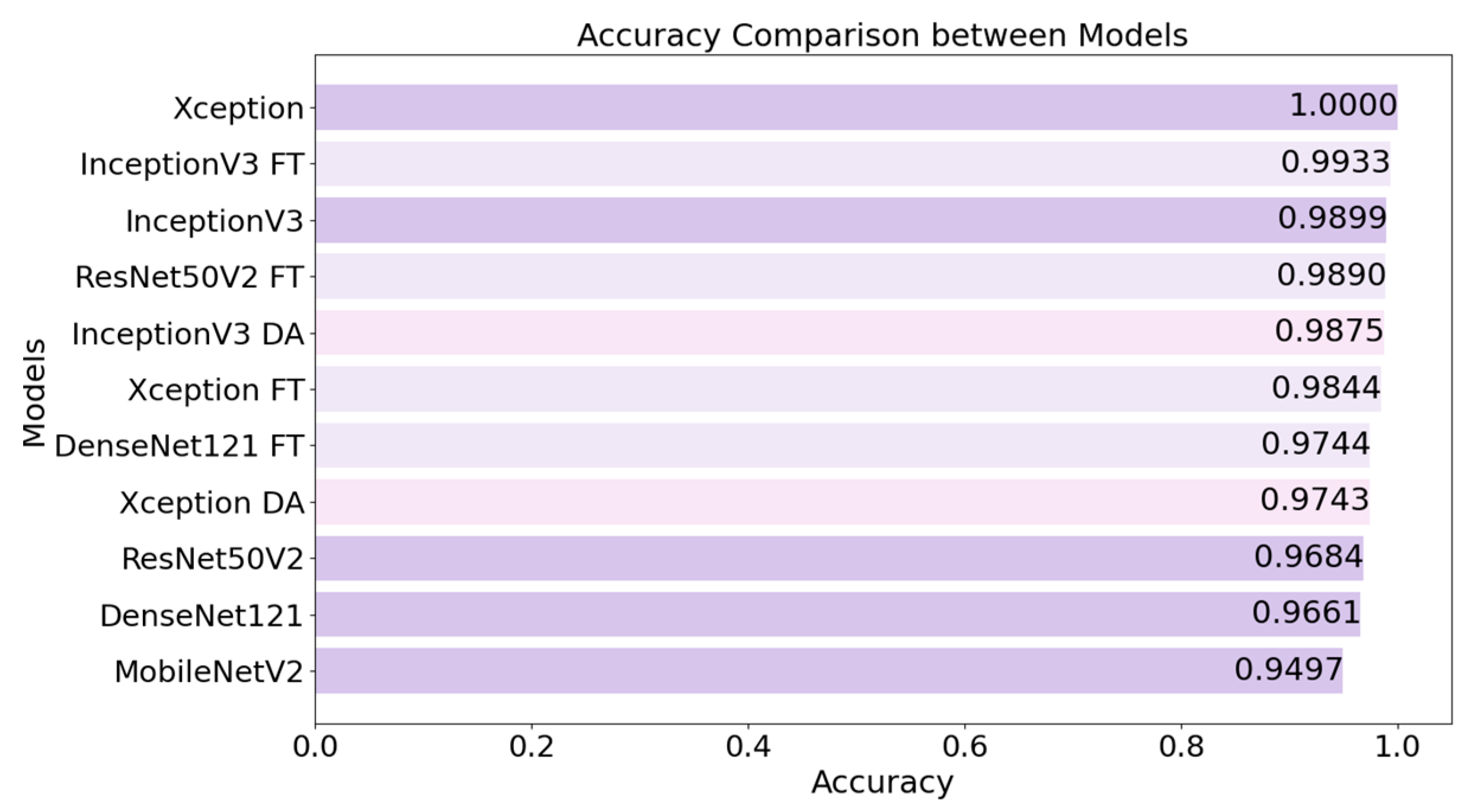

The accuracy comparison between image classification models highlighted the outstanding performance of Xception, which achieved a perfect accuracy of 1.0.

Figure 5 reports the proportion of correct predictions over all samples in the test set. InceptionV3 also performed very well with fine-tuning (FT), without data augmentation, and with data augmentation (DA), reaching accuracies above 0.98 in every configuration, which suggests that the model generalizes well under both techniques, although fine-tuning offers a slight advantage.

The ResNet50V2 and DenseNet121 models also achieved high accuracy in both configurations (FT and without data augmentation), with fine-tuning providing a small additional improvement. In contrast, MobileNetV2 showed lower accuracy, peaking at 0.9497. This suggests that although MobileNetV2 suits applications with limited computational resources, it falls short of more robust models such as Xception or InceptionV3 in final accuracy.

Figure 5 shows that the Xception, InceptionV3 FT, and ResNet50V2 architectures achieved the highest accuracy, making them the most effective options for image classification in this context. Moreover, none of the CNN models proposed for the automatic waste classification system improved its accuracy when data augmentation was applied, so data augmentation is not considered necessary for this type of system.

The comparative results for the image classification models emphasize the superior performance of Xception, which achieved a test accuracy of 98.13% and high scores across the other metrics: precision (98.17%), recall (98.12%), F1-score (98.13%), and MCC (0.9722). These results position Xception as the most balanced and robust model for this task. InceptionV3 also ranked among the high-performing models, with a test accuracy of 97.43% and consistent values across all metrics, including an MCC of 0.9614, underscoring its reliability in classification tasks.

DenseNet121 and ResNet50V2 delivered solid performances, with test accuracies around 96% and precision, recall, and F1-score values near 0.961, making them reliable options, albeit slightly behind Xception and InceptionV3. Conversely, MobileNetV2, despite reaching a test accuracy of 94.97%, fell short of the more complex models in accuracy and generalizability. MobileNetV3 performed markedly worse, with a test accuracy of 55.20% and an MCC of 0.3616. This drop is likely attributable to its limited ability to handle the complexity of the dataset: it is designed for lightweight applications and lacks the depth required to model complex patterns effectively. The reduced number of parameters and layers in MobileNet models, while advantageous for lowering computational cost, limits their ability to capture fine-grained features, reducing accuracy in tasks that demand high precision, such as waste classification. These findings underscore the trade-off between computational efficiency and classification performance and the need to align model selection with the requirements and complexity of the application. These results, detailed in Table 6, show that Xception, InceptionV3, and DenseNet121 were the most effective models in terms of accuracy and consistency, so they are highly recommended for classification tasks where accuracy is critical.
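All of the Table 6 metrics can be derived from a multiclass confusion matrix. The Python sketch below computes accuracy, macro-averaged precision, recall, and F1-score, and the multiclass MCC (the generalization of the Matthews coefficient to $K$ classes). Macro averaging is an assumption, since the averaging scheme behind Table 6 is not stated here, and the example matrix is hypothetical.

```python
import math
from typing import Dict, List


def classification_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Metrics from a confusion matrix with rows = true class, columns = predicted class."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    true_counts = [sum(cm[i]) for i in range(k)]                       # row sums
    pred_counts = [sum(cm[r][i] for r in range(k)) for i in range(k)]  # column sums

    precisions, recalls, f1s = [], [], []
    for i in range(k):
        tp = cm[i][i]
        p = tp / pred_counts[i] if pred_counts[i] else 0.0
        r = tp / true_counts[i] if true_counts[i] else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)

    # Multiclass MCC: (c*s - sum_k p_k*t_k) / sqrt((s^2 - sum p_k^2)(s^2 - sum t_k^2))
    num = correct * total - sum(p * t for p, t in zip(pred_counts, true_counts))
    den = math.sqrt((total ** 2 - sum(p * p for p in pred_counts))
                    * (total ** 2 - sum(t * t for t in true_counts)))
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,
        "mcc": num / den if den else 0.0,
    }


# Hypothetical counts for (metal, paper, plastic); not the study's data.
cm = [[8, 1, 1],
      [0, 10, 0],
      [1, 1, 8]]
for name, value in classification_metrics(cm).items():
    print(f"{name}: {value:.4f}")
```

Unlike accuracy, MCC penalizes errors against the class balance of both the true and predicted labels, which is why it separates weak models such as MobileNetV3 more sharply than accuracy alone.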

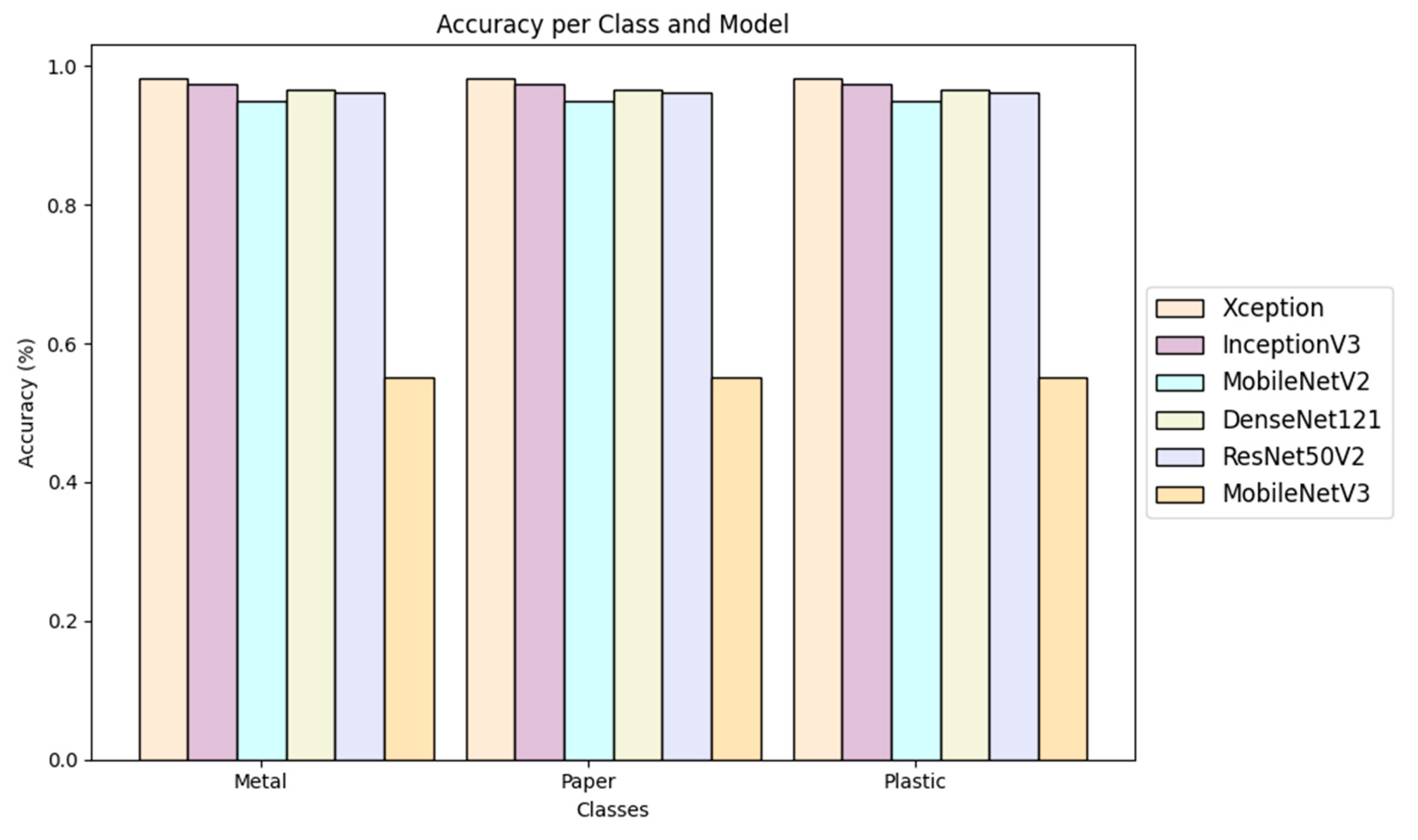

The Xception and InceptionV3 models demonstrated superior performance in waste categorization, achieving accuracies close to 100% for metal, paper, and plastic, which highlights their effectiveness and consistency in this task. MobileNetV2, DenseNet121, and ResNet50V2 also performed well overall, though their accuracy decreased slightly, particularly for plastics. This indicates that, despite their robustness, these models may struggle with certain materials because of variations in visual features [12].

Conversely, MobileNetV3 showed significantly lower accuracy across all categories, underscoring its limitations in the context of this specific waste sorting application.

Figure 6 illustrates how the model selection significantly impacted the ability to distinguish between material categories, with Xception and InceptionV3 emerging as the most reliable and accurate architectures for this task.

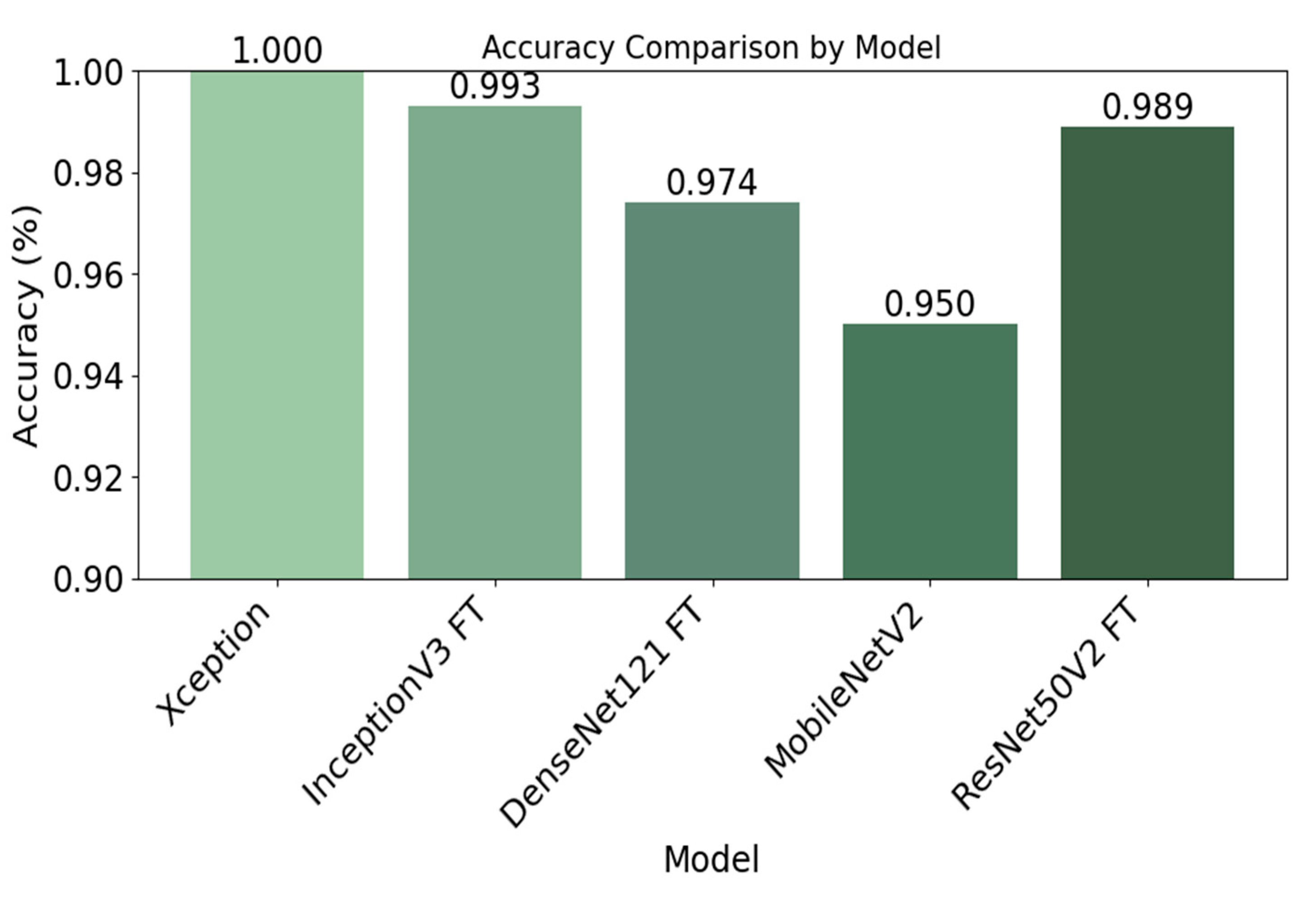

After data augmentation (DA) and fine-tuning (FT) were applied, the results revealed significant differences in the accuracy achieved by each model. Xception achieved optimal performance, a perfect accuracy of 1.0, even without data augmentation or fine-tuning, establishing itself as the most effective model in terms of pure accuracy. InceptionV3 reached 0.993 with fine-tuning, highlighting how this technique enhances its generalizability. Similarly, ResNet50V2 improved substantially, reaching 0.989 with fine-tuning, indicating that it benefits clearly from this technique.

These findings, illustrated in Figure 7, emphasize the effectiveness of fine-tuning in improving the accuracy of models like InceptionV3 and ResNet50V2, while Xception maintained its peak performance without requiring additional modifications.

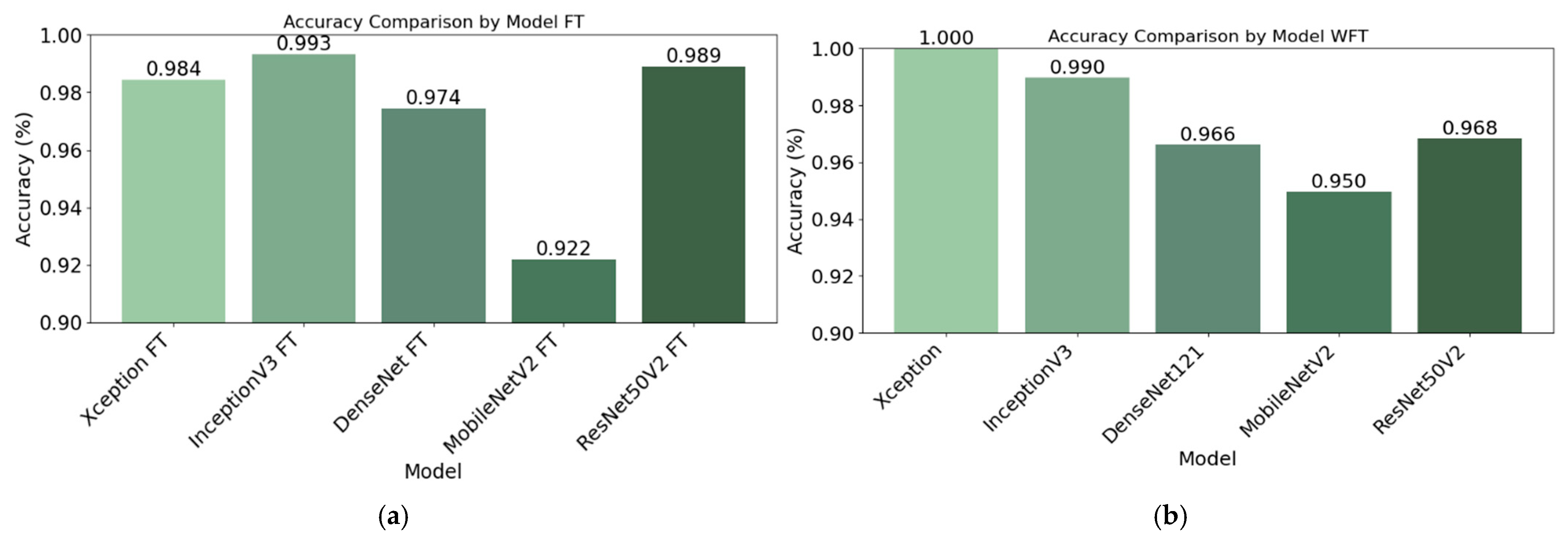

The fine-tuning technique demonstrated a positive impact on models such as InceptionV3, DenseNet121, and ResNet50V2 (see Figure 8a). For instance, InceptionV3 achieved an accuracy of 0.993 with fine-tuning (see Figure 8b), compared with 0.990 without fine-tuning. Similarly, DenseNet121 and ResNet50V2 improved their performance with fine-tuning, reaching accuracies of 0.974 and 0.989, respectively, compared with their baseline values.

However, Xception and MobileNetV2 showed decreased accuracy with fine-tuning, suggesting that this technique is not universally beneficial, particularly for lightweight, computationally efficient architectures such as MobileNetV2. These results highlight the importance of tailoring optimization techniques to the structural characteristics of each model.

The results of the study demonstrated that, based on accuracy and overall performance metrics, the Xception model emerged as the most robust and effective option for the image classification task, achieving perfect accuracy without fine-tuning. InceptionV3 with fine-tuning (FT) and ResNet50V2 also exhibited outstanding performance, with near-perfect accuracy values, particularly when fine-tuning was applied.

These findings indicate that both InceptionV3 FT and ResNet50V2 FT can achieve high generalizability and are reliable for tasks requiring high precision. However, Xception distinguishes itself as the most effective and accurate model, maximizing performance without the need for additional adjustments. This makes it the optimal choice for high-demand sorting applications where accuracy and stability are critical.

4.4. Confusion Matrix

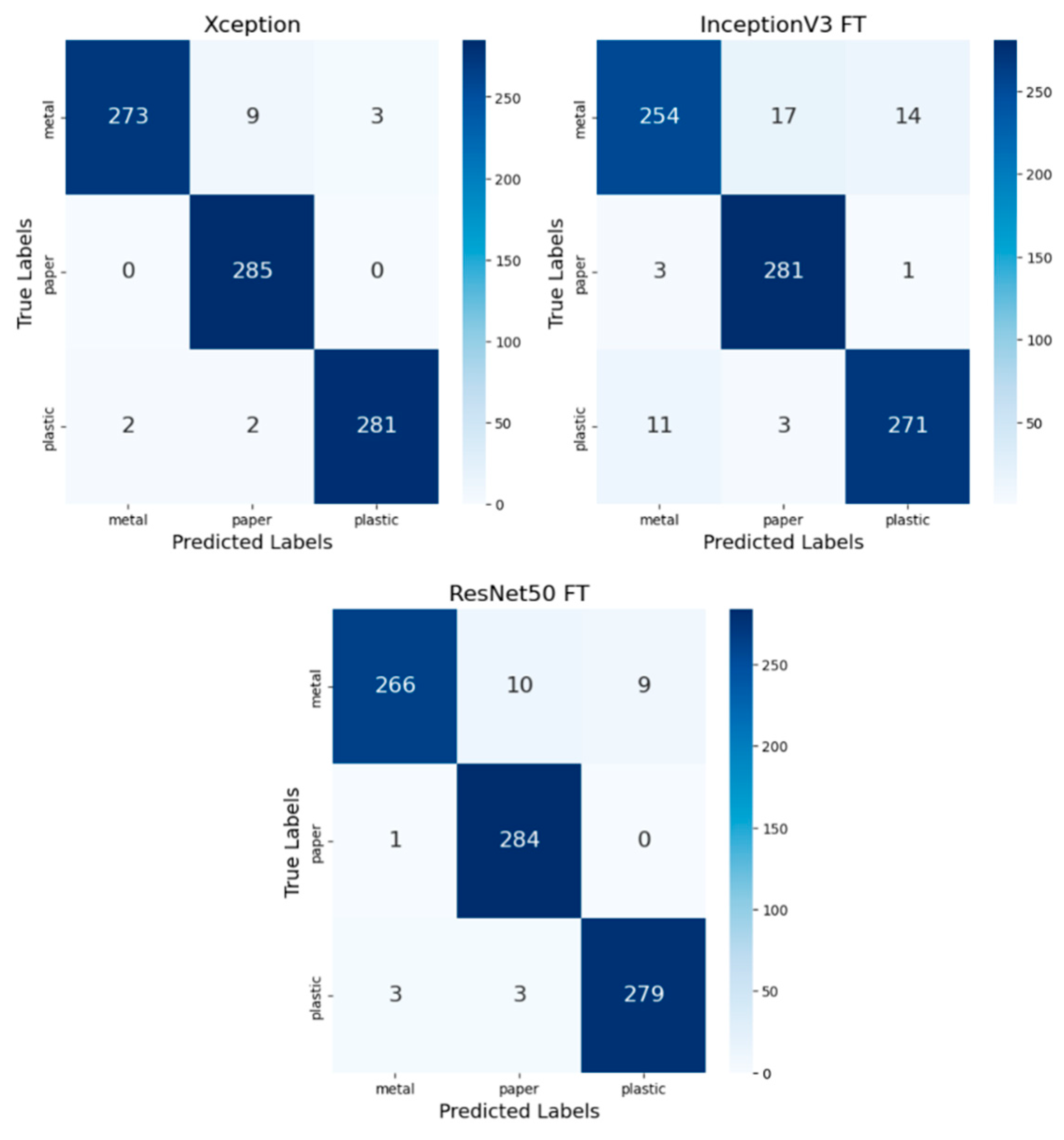

The values along the diagonal of each matrix represent the correct predictions made during the testing phase on the set of images in the “test” folder [30]. Analysis of the confusion matrices (see Figure 9) reveals key differences in the performance of the Xception, InceptionV3 FT, and ResNet50 FT models.

Xception demonstrated the highest overall accuracy, with only 12 errors in the “metal” class (9 misclassified as paper and 3 as plastic) and perfect performance in the “paper” class, with no classification errors. In the “plastic” class, Xception recorded four errors (two misclassified as metal and two as paper), establishing itself as the most robust model.

InceptionV3 FT, while accurate, produced more errors than Xception: 31 in the “metal” class (17 misclassified as paper and 14 as plastic), 4 in “paper” (3 as metal and 1 as plastic), and 14 in “plastic” (11 as metal and 3 as paper). These results suggest lower accuracy, particularly in the “metal” and “plastic” classes.

ResNet50 FT delivered an acceptable performance, with 19 errors in the “metal” class (10 misclassified as paper and 9 as plastic), 1 error in the “paper” class (misclassified as metal), and 6 in the “plastic” class (3 as metal and 3 as paper). Although ResNet50 FT demonstrated solid accuracy, it fell short of the performance achieved by Xception.
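The error counts reported above can be tallied directly from the off-diagonal cells of the three confusion matrices. The Python sketch below stores only those reported off-diagonal counts (the diagonal values are not listed in the text, so they are omitted) and computes the errors per true class, the total number of errors, and the most frequent confusion for each model; the data layout and helper names are illustrative.

```python
from typing import Dict, Tuple

# True class -> {predicted class: count}, off-diagonal cells only, as reported in Section 4.4.
OffDiagonal = Dict[str, Dict[str, int]]

REPORTED_ERRORS: Dict[str, OffDiagonal] = {
    "Xception": {"metal": {"paper": 9, "plastic": 3},
                 "paper": {},
                 "plastic": {"metal": 2, "paper": 2}},
    "InceptionV3 FT": {"metal": {"paper": 17, "plastic": 14},
                       "paper": {"metal": 3, "plastic": 1},
                       "plastic": {"metal": 11, "paper": 3}},
    "ResNet50 FT": {"metal": {"paper": 10, "plastic": 9},
                    "paper": {"metal": 1},
                    "plastic": {"metal": 3, "paper": 3}},
}


def errors_per_class(errors: OffDiagonal) -> Dict[str, int]:
    """Sum the misclassifications for each true class (one matrix row)."""
    return {true_cls: sum(row.values()) for true_cls, row in errors.items()}


def most_frequent_confusion(errors: OffDiagonal) -> Tuple[str, str, int]:
    """Return (true class, predicted class, count) for the largest off-diagonal cell."""
    return max(((t, p, n) for t, row in errors.items() for p, n in row.items()),
               key=lambda cell: cell[2])


for model, errors in REPORTED_ERRORS.items():
    per_class = errors_per_class(errors)
    print(model, per_class, "total:", sum(per_class.values()),
          "most frequent:", most_frequent_confusion(errors))
```

Summed this way, the reported counts give 16 errors for Xception, 49 for InceptionV3 FT, and 26 for ResNet50 FT, and metal misclassified as paper is the most frequent confusion for all three models.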