Enhancing Personal Identity Proofing Services Through AI for a Sustainable Digital Society in Korea

Abstract

1. Introduction

2. Literature Review

2.1. Digital Trust

2.2. AI-Enabled Identity Risks and Synthetic Identities

2.3. AI and Sustainability in Identity Proofing

2.4. Comparative Governance for Digital Identity

2.5. Sustainability and SDG Alignment in Prior Research

2.6. Synthesis and Research Gaps

2.7. Methods

- Data sources and inclusion criteria: This study draws on publicly available Korean legal and regulatory sources—specifically the Information and Communications Network Act and the Personal Information Protection Act—and the national Identity Proofing Service Guideline, together with designation/audit checklists and international standards for digital identity. Items were included if they had official provenance (statutes, regulations, or formally issued guidance) or were peer reviewed; opinion pieces and undocumented claims were excluded.

- Expert panel and selection: This study convened an expert panel of eight participants—regulators (n = 2), AIPI operators (n = 3), and academics (n = 3)—purposively sampled for role and domain diversity. All experts had at least seven years of relevant experience; no current conflicts of interest were reported.

- Structured-judgment protocol: This study used a two-round Delphi process. In Round 1, experts independently rated the relevance and feasibility of candidate capabilities and indicators on a five-point scale. In Round 2, anonymized group feedback was shared (item-level median and interquartile range, IQR, with rationales), and experts re-rated the items. The consensus threshold was pre-specified as median ≥ 4 and IQR ≤ 1; items that did not meet the threshold were revised or dropped.

- Instruments and reproducibility: This study describes the indicator codebook—summarizing construct definitions, formulas, typical data sources, and measurement cadence—in the text. Owing to legal and institutional constraints, detailed audit criteria and templates are not publicly released; however, they may be made available on a limited basis under confidentiality upon reasonable request and subject to institutional approvals. Reproducibility is supported by the specification of inclusion criteria, Delphi consensus thresholds, and indicator formulas in the text.

- Indicator derivation and mapping to SDGs: This study retained indicators that achieved consensus and mapped them to SDGs 9 and 16. The final set prioritizes observable, audit-ready signals, e.g., PAD performance (AUC/FAR/FRR), detection and response latency (MTTD/MTTR), fairness gaps (ΔFNR/ΔFPR across salient groups), incident-disclosure timeliness, and energy per 1000 verifications.
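The consensus rule in the structured-judgment protocol (median ≥ 4 and IQR ≤ 1 on a five-point scale) can be illustrated with a short sketch. The ratings and item names below are hypothetical, and the quartile convention (`method="inclusive"`) is an assumption, since the text does not state how the IQR was computed.

```python
from statistics import median, quantiles


def reaches_consensus(ratings, min_median=4.0, max_iqr=1.0):
    """Return True when ratings satisfy median >= min_median and IQR <= max_iqr."""
    # Assumption: inclusive quartiles; the study does not specify a convention.
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    return median(ratings) >= min_median and (q3 - q1) <= max_iqr


# Hypothetical Round 2 ratings from an eight-member panel (not study data).
round2 = {
    "pad_performance": [4, 4, 5, 4, 5, 4, 4, 5],
    "energy_per_1k": [2, 3, 5, 4, 5, 1, 4, 5],
    "drift_frequency": [3, 3, 4, 3, 4, 3, 3, 4],
}

# Items that fail the rule would be revised or dropped, as described above.
retained = [item for item, ratings in round2.items() if reaches_consensus(ratings)]
print(retained)
```

In this illustration the second item fails on dispersion (IQR above 1) and the third fails on the median, so only the first item would be retained.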

3. Analysis of Korea’s AIPI Audit Framework and Its Limitations

3.1. Legal Basis and Audit Lifecycle

3.2. Evaluation Domains and Items

3.2.1. Organizational/Operational Controls (Physical, Technical, and Administrative)

3.2.2. Technical Capability

3.2.3. Financial Capability

3.2.4. Adequacy of Facility Scale

3.3. Structural Limitations and the Sustainability Gap

3.3.1. Limitations in Physical and Environmental Controls

3.3.2. Limitations in Network and System Security Operations

3.3.3. Limitations in Personal Data Protection and User Rights

3.3.4. Limitations in Substitute Credential and CI Management

3.3.5. Limitations in Access Log Management

4. AI-Integrated Framework

4.1. Need for Improvement

4.2. Domain-Specific Improvement Measures

4.2.1. Physical and Environmental Controls

- Improvement Plan: introduce active controls that integrate and analyze access logs, CCTV streams, and environmental sensor data for real-time alerts on abnormal behavior. Apply AI-based predictive maintenance [17] to detect early signs of failure in power and cooling systems and enable preemptive response.

- Contribution to Sustainability (SDG 9): improves predictive resilience and infrastructure reliability, strengthening technical and operational sustainability.

4.2.2. Network and System Security Operations

- Improvement Plan: to overcome rule- or signature-based FDS limitations, mandate machine learning–based FDS [45] capable of detecting novel patterns such as synthetic identity fraud and account laundering through large-scale analysis of issuance, renewal, and inquiry histories, network indicators, and user behavior.

- Contribution to Sustainability (SDG 9): enhances proactive defense against new attacks, stabilizes the digital economy, and protects economic sustainability.

4.2.3. Personal Data Protection and User Rights

- Improvement Plan: implement dynamic consent mechanisms [18], enabling users to modify or withdraw consent for AI model training and data use in real time. Mandate explainable AI (XAI) in automated decision-making and institutionalize regular algorithmic bias audits.

- Contribution to Sustainability (SDG 16): reinforces data self-determination, transparency and accountability, building trust as the core of social sustainability.

4.2.4. CI/DI Management

- Improvement Plan: integrate PAD and liveness detection [43] into the identity proofing process to detect deepfakes and synthetic identities. Establish graph-based analytics across issuance, renewal, and access histories to uncover organized misuse patterns.

- Contribution to Sustainability (SDG 16): protects individuals from identity fraud and strengthens system adaptiveness, advancing trustworthy institutions.

4.2.5. Access Log Management

- Improvement Plan: revise log-retention and review requirements to include AI-based risk scoring and prioritization, and establish AI-automated audit reporting for periodic assessments.

- Contribution to Sustainability (SDG 9 and 16): shifts from retrospective traceability to real-time response and automated risk management, reinforcing technical and operational sustainability.
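As a rough illustration of risk scoring and prioritization for access logs, the sketch below bins already-computed risk scores into tiers and orders events for review. It does not model the scoring itself. The event records and field names are hypothetical, and the cut-offs (0.30 and 0.60) and the 15% ceiling on high-risk events are taken as illustrative values in line with the risk score distribution metric listed in the economic indicators.

```python
from collections import Counter


def tier(score):
    """Map a risk score in [0, 1] to a tier (assumed cut-offs: 0.30 and 0.60)."""
    if score < 0.30:
        return "low"
    if score <= 0.60:
        return "medium"
    return "high"


def high_share_percent(events):
    """Percentage of events whose score falls in the high tier."""
    counts = Counter(tier(e["score"]) for e in events)
    return counts["high"] * 100 / len(events)


def review_queue(events):
    """Order events so that the highest-risk entries are reviewed first."""
    return sorted(events, key=lambda e: e["score"], reverse=True)


# Hypothetical scored access-log events (not real data).
scores = [0.05, 0.12, 0.31, 0.45, 0.60, 0.72, 0.91, 0.20, 0.28, 0.10]
events = [{"event_id": i, "score": s} for i, s in enumerate(scores)]

share = high_share_percent(events)
first = review_queue(events)[0]
print(share, first["event_id"], share <= 15.0)
```

A periodic audit report could then state the tier distribution and whether the high-risk share stays within the chosen ceiling.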

4.3. Proposed New Audit Criteria for Sustainability

4.3.1. Synthetic Identity and Presentation Attack Detection

- Criterion title: detection of synthetic identities and presentation attacks

- Assessment description: evaluate whether presentation-attack and deepfake detection technologies are integrated into the identity proofing process to identify manipulated biometric data (including synthetic video, voice, fingerprints, or facial information) and whether a regular performance verification system is in place.

- Assessment scope: identity proofing processes and biometric authentication modules

- Evidence materials: presentation-attack/forgery-detection reports, performance validation documentation

- Interview subjects: service operators and security managers

- On-site inspection: demonstration of presentation-attack detection functionality
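The regular performance verification named in this criterion could, for instance, recompute error rates on a labeled evaluation set. The sketch below is a minimal illustration under stated assumptions: each sample carries a liveness score where higher means "more likely genuine", a presentation is accepted when its score is at or above a threshold, FAR is the share of attack presentations accepted, and FRR is the share of bona fide presentations rejected. The scores, labels, and threshold are hypothetical. ISO/IEC 30107-3 reports analogous quantities under the names APCER and BPCER.

```python
def far_frr(scores, is_attack, threshold):
    """Return (FAR, FRR) for liveness scores at a given acceptance threshold.

    scores    -- liveness scores, higher = more likely bona fide (assumption)
    is_attack -- parallel list of booleans, True for attack presentations
    """
    attacks = [s for s, a in zip(scores, is_attack) if a]
    bona_fide = [s for s, a in zip(scores, is_attack) if not a]
    far = sum(s >= threshold for s in attacks) / len(attacks)   # attacks accepted
    frr = sum(s < threshold for s in bona_fide) / len(bona_fide)  # genuine rejected
    return far, frr


# Hypothetical evaluation set: four attack and five bona fide presentations.
scores = [0.10, 0.20, 0.55, 0.05, 0.90, 0.80, 0.45, 0.95, 0.70]
is_attack = [True, True, True, True, False, False, False, False, False]

far, frr = far_frr(scores, is_attack, threshold=0.5)
print(far, frr)
```

An auditor would compare such rates against the operator's documented targets, evaluated separately for each attack type (for example spoof, replay, and deepfake).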

4.3.2. Anomalous Behavior Analytics

- Criterion title: Anomalous behavior analytics based on transaction and access logs

- Assessment description: modify the existing FDS operation and access log management criteria to mandate the integration of machine learning– and deep learning–based anomaly detection. Evaluate whether the institution automatically detects abnormal patterns in access records, transaction logs, and issuance histories, and applies these results to real-time incident response.

- Assessment scope: transaction servers, log management systems, and issuance record management systems

- Evidence materials: AI detection result reports, anomaly detection logs

- Interview subjects: security monitoring personnel, data analysis staff

- On-site inspection: demonstration of AI-based anomaly detection system

4.3.3. AI Explainability and Bias Governance

- Criterion title: XAI and algorithmic bias governance.

- Assessment description: AI algorithms used in automated identity proofing and personal data processing must provide explainable decision-making processes. Institutions should regularly assess, report, and remediate data bias and the potential for discrimination.

- Assessment scope: personal data processing systems and automated decision-making modules.

- Evidence materials: XAI reports, bias verification results, records of corrective actions

- Interview subjects: chief privacy officer, AI developers.

- On-site inspection: demonstration of explainability functions and bias governance procedures.

4.3.4. Predictive Security Management

- Criterion title: Predictive management for disaster and system failure response.

- Assessment description: revise the current disaster recovery and emergency response standards to assess whether the institution has implemented AI-driven predictive security management. This includes verifying the use of sensor data and system logs to detect abnormal facility conditions, trigger automated alerts, and enable preemptive corrective actions.

- Assessment scope: data centers, internet data centers, and disaster recovery centers.

- Evidence materials: Reports on predictive model operations, analytical results from sensor data.

- Interview subjects: facility operators and security administrators.

- On-site inspection: demonstration of AI-based predictive maintenance and alert systems.

4.3.5. International Compliance and Interoperability

- Criterion title: Compliance with international standards and mutual recognition.

- Assessment description: assess whether the AIPI’s security and certification systems conform to international standards. In particular, verify whether institutional and technical measures are in place to facilitate mutual recognition when offering services to overseas users.

- Assessment scope: overall operation of the PIPS.

- Evidence materials: international standards compliance reports, mutual recognition review documents.

- Interview subjects: international standards officers, policy managers.

- On-site inspection: verification of compliance measures and current implementation status related to international standards.

| Audit Criterion | Assessment Description | Contribution to Sustainability |
|---|---|---|
| Detection of Synthetic Identities and AI-Based Forgery | Assess whether presentation-attack/deepfake-detection technologies are implemented to identify manipulated biometric data (synthetic video, voice, or facial information) and whether the institution has a regular performance verification system. | Reduces the risk of personal data breaches and identity fraud, thereby enhancing social trust and minimizing economic losses. |
| Anomalous Behavior Analysis Based on Transaction and Access Logs | Expand the existing FDS criteria to require machine learning and deep learning–based anomaly detection. Automatically detect abnormal patterns in access, transaction, and issuance logs and utilize results for real-time response. | Strengthens resilience against emerging intelligent attack types, enhances the stability of the digital economy, and improves technical resilience. |
| Explainability (XAI) and Algorithmic Bias Verification | Ensure that AI algorithms used in automated identity verification and data processing are explainable and that data bias and discrimination risks are regularly verified and corrected. | Reinforces user data self-determination and enhances the fairness and transparency of algorithms, contributing to social sustainability. |
| Predictive Management for Disaster and System Failure Response | Revise the disaster recovery criteria to include AI-based predictive security management using sensor data and system logs, enabling automated alerts and preemptive actions. | Enhances resilience against unpredictable disasters and ensures service continuity, improving technical and operational sustainability. |
| Compliance with International Standards and Mutual Recognition | Evaluate whether the institution’s certification system conforms to global standards (e.g., NIST SP 800-63, EU eIDAS) and whether institutional and technical measures are in place to ensure cross-border mutual recognition. | Secures global compatibility of national systems, promotes cross-border digital identity interoperability, and supports the development of a trust-based global ecosystem. |

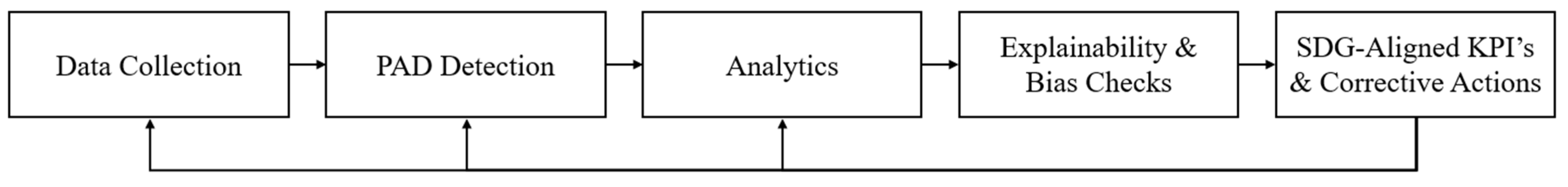

4.4. Adaptive Audit Flow

5. Policy Implications with Respect to SDGs 9 and 16

5.1. Social Sustainability (SDG 16–16.6, 16.10)

- Indicative policy actions: mandate model cards and incident registers; require periodic fairness audits and public summaries; ensure assisted channels and human escalation; require accessibility conformance and low-spec UI modes.

- Indicative assessment metrics: availability of model documentation; bias metrics; rate of substantiated user complaints; incident-disclosure timeliness; accessibility coverage; assisted-channel success rate; Δ usage/access gap across vulnerable cohorts; explainability coverage.

- Metric definitions and formulas: Table 5 quantifies the social sustainability dimensions—transparency, fairness, accountability, and inclusiveness—aligned with SDG 16 (targets 16.6 and 16.10). Each indicator specifies computation and linkage to governance outcomes in AI-integrated identity-proofing [14,48,49,50,51].
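To make the fairness-gap indicators concrete, the sketch below computes ΔFNR and ΔFPR in percentage points from per-group confusion counts. The counts are hypothetical and were chosen so that the false-negative rates (1.8% and 2.5%) match the worked example given with the metric table. The rate definitions FNR = FN/(FN + TP) and FPR = FP/(FP + TN) are the standard ones and are assumed here.

```python
def false_negative_rate(fn, tp):
    """FNR = FN / (FN + TP): share of genuine positives that were missed."""
    return fn / (fn + tp)


def false_positive_rate(fp, tn):
    """FPR = FP / (FP + TN): share of genuine negatives that were flagged."""
    return fp / (fp + tn)


def gap_pp(rate_a, rate_b):
    """Absolute difference between two rates, in percentage points."""
    return abs(rate_a - rate_b) * 100.0


# Hypothetical per-group confusion counts (not study data).
group_a = {"tp": 982, "fn": 18, "fp": 12, "tn": 988}
group_b = {"tp": 975, "fn": 25, "fp": 20, "tn": 980}

delta_fnr = gap_pp(
    false_negative_rate(group_a["fn"], group_a["tp"]),
    false_negative_rate(group_b["fn"], group_b["tp"]),
)
delta_fpr = gap_pp(
    false_positive_rate(group_a["fp"], group_a["tn"]),
    false_positive_rate(group_b["fp"], group_b["tn"]),
)

# Compare both gaps with the illustrative target of 1.0 percentage point.
print(round(delta_fnr, 2), round(delta_fpr, 2), delta_fnr <= 1.0 and delta_fpr <= 1.0)
```

With more than two groups, one reasonable extension (an assumption, not specified in the text) is to report the largest pairwise gap.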

5.2. Economic Sustainability (SDG 9–9.1, 9.c)

- Indicative policy actions: publish an audit profile and certification cadence; introduce proportional oversight tied to risk scores; apply risk-tiered profiles with scaled cadence/sampling/evidence; link procurement/certification eligibility to profile conformance; provide performance-based incentives.

- Indicative assessment metrics: fraud loss rate per 10 k verifications; MTTD/MTTR improvement over baseline; percentage of remediation completed on time; false-positive block rate; risk score distribution; profile conformance rate.
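The MTTD/MTTR indicator can be derived directly from incident-response tickets. The sketch below follows the definitions used in the metric table (MTTD as the mean of detection time minus incident start, MTTR as the mean of restore time minus incident start). The ticket records and field names are hypothetical.

```python
from datetime import datetime
from statistics import mean


def mean_minutes(incidents, end_field):
    """Mean elapsed minutes from incident start to the given timestamp field."""
    return mean(
        (i[end_field] - i["start"]).total_seconds() / 60 for i in incidents
    )


# Hypothetical incident tickets (not real data).
incidents = [
    {
        "start": datetime(2025, 1, 6, 9, 0),
        "detected": datetime(2025, 1, 6, 9, 6),
        "restored": datetime(2025, 1, 6, 9, 24),
    },
    {
        "start": datetime(2025, 1, 9, 14, 0),
        "detected": datetime(2025, 1, 9, 14, 12),
        "restored": datetime(2025, 1, 9, 14, 40),
    },
]

mttd = mean_minutes(incidents, "detected")
mttr = mean_minutes(incidents, "restored")

# Illustrative targets from the metric table: MTTD <= 10 min, MTTR <= 30 min.
print(mttd, mttr, mttd <= 10 and mttr <= 30)
```

In this illustration the detection target is met but the restore target is not, which is the kind of result that would feed the "improvement over baseline" comparison.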

5.3. Technological and Operational Sustainability (SDG 9–9.1)

- Indicative policy actions: require PAD/liveness in proofing workflows; require periodic disaster recovery (DR)/business continuity planning (BCP) drills that include AI-enabled attack scenarios; set alert-response SLOs (e.g., notification-to-response ≤ X minutes) and model-drift monitoring with retraining workflows.

- Indicative assessment metrics: PAD performance (AUC, FAR/FRR under attack conditions); drill success rates; RTO/RPO adherence in recovery tests; energy per 1 k verifications (kWh/1000); carbon intensity (kgCO2e/1000 verifications); water use efficiency—WUE (L/kWh); power usage effectiveness—PUE (unitless); time-to-contain (minutes); drift detection frequency (events/month).
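The energy and carbon indicators reduce to simple normalizations per 1000 verifications. The sketch below reuses the figures from the worked example in the metric table (1.60 kWh for 3700 verifications). The grid emission factor is a hypothetical placeholder, since actual factors depend on the operator's electricity supply.

```python
def energy_per_1k(kwh, verifications):
    """Energy per 1000 verifications, in kWh/1000."""
    return kwh / verifications * 1000


def carbon_per_1k(kwh, verifications, grid_factor_kg_per_kwh):
    """Carbon intensity per 1000 verifications, in kgCO2e/1000."""
    return energy_per_1k(kwh, verifications) * grid_factor_kg_per_kwh


# Figures from the document's worked example.
weekly_kwh = 1.60
weekly_verifications = 3700

# Hypothetical grid emission factor (kgCO2e per kWh); replace with a sourced value.
grid_factor = 0.45

energy = energy_per_1k(weekly_kwh, weekly_verifications)
carbon = carbon_per_1k(weekly_kwh, weekly_verifications, grid_factor)

# Illustrative energy target from the metric table: <= 0.50 kWh per 1000.
print(round(energy, 2), round(carbon, 3), energy <= 0.50)
```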

5.4. International Interoperability (SDG 9 and 16)

- Indicative policy actions: adopt mapping matrices to international clauses; pilot mutual-recognition pathways with peer regulators; publish LoA mappings and conformance gap lists with dated remediation plans.

- Indicative assessment metrics: conformance score against selected profiles; number of services operating under mutual recognition; gap-closure lead time (days); number/duration of cross-border pilots; proportion of controls covered by LoA mapping.
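Two of the interoperability indicators can be computed from a remediation tracker and a mapping register. The sketch below is a minimal illustration with hypothetical records: gap-closure lead time is taken as remediation completion date minus gap identification date, summarized by the median, and LoA mapping coverage is assumed to be the percentage of in-scope controls that have a documented mapping.

```python
from datetime import date
from statistics import median


def gap_closure_median_days(gaps):
    """Median number of days between gap identification and remediation."""
    return median((g["closed"] - g["identified"]).days for g in gaps)


def loa_mapping_coverage(mapped_controls, in_scope_controls):
    """Assumed definition: mapped in-scope controls / in-scope controls x 100."""
    return mapped_controls * 100 / in_scope_controls


# Hypothetical remediation-tracker entries (not real data).
gaps = [
    {"identified": date(2025, 3, 1), "closed": date(2025, 3, 21)},
    {"identified": date(2025, 3, 10), "closed": date(2025, 4, 14)},
    {"identified": date(2025, 4, 2), "closed": date(2025, 4, 30)},
]

lead_time = gap_closure_median_days(gaps)
coverage = loa_mapping_coverage(mapped_controls=46, in_scope_controls=50)

# Illustrative targets from the metric table: median <= 30 days, 100% coverage.
print(lead_time, coverage, lead_time <= 30, coverage == 100.0)
```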

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AAL | Authenticator Assurance Level |
| AIPI | Accredited Identity Proofing Institution |
| AUC | Area Under the ROC Curve |
| CI | Connecting Information |
| DI | Duplication Information |
| eIDAS | electronic Identification, Authentication and trust Services |
| FAL | Federation Assurance Level |
| FDS | Fraud Detection System(s) |
| FNR | False Negative Rate |
| FPR | False Positive Rate |
| IAL | Identity Assurance Level |
| IDP | Identity Provider |
| KCC | Korea Communications Commission |
| LoA | Level of Assurance |
| MTTD | Mean Time to Detect |
| MTTR | Mean Time to Restore |
| NIST | National Institute of Standards and Technology |
| PAD | Presentation Attack Detection |
| PIPS | Personal Identity Proofing Service |
| PUE | Power Usage Effectiveness |
| RRN | Resident Registration Number |
| SDG | Sustainable Development Goal |
| WUE | Water Usage Effectiveness |
| XAI | Explainable AI |

References

- Jung, K.; Yeom, H.G.; Choi, D. A Study on Big Data Based Non-Face-to-Face Identity Proofing Technology. KIPS Trans. Comput. Commun. Syst. 2017, 6, 421–428.

- Laurent, M.; Levallois-Barth, C. 4-Privacy Management and Protection of Personal Data. Digit. Identity Manag. 2015, 137–205.

- Kim, J.B. Personal Identity Proofing for E-Commerce: A Case Study of Online Service Users in the Republic of Korea. Electronics 2024, 13, 3954.

- Kim, S.G.; Kim, S.K. An Exploration on Personal Information Regulation Factors and Data Combination Factors Affecting Big Data Utilization. J. Korea Inst. Inf. Secur. Cryptol. 2020, 30, 287–304.

- Kim, J. Improvement of Digital Identify Proofing Service through Trend Analysis of Online Personal Identification. Int. J. Internet Broadcast. Commun. 2023, 15, 1–8.

- Hwang, J.; Oh, J.; Kim, H. A study on the progress and future directions for MyData in South Korea. In Proceedings of the 2023 IEEE/ACIS 8th International Conference on Big Data, Cloud Computing, and Data Science (BCD), Hochimin City, Vietnam, 14–16 December 2023; pp. 49–51.

- Kim, J.B. A Study on the Quantified Point System for Designation of Personal Identity Proofing Service Provider based on Resident Registration Number. Int. J. Adv. Smart Converg. 2022, 11, 20–27.

- eIDAS Regulation. Available online: https://digital-strategy.ec.europa.eu/en/policies/eidas-regulation (accessed on 12 November 2025).

- Paul, A.G.; Michael, E.G.; James, L.F. Digital Identity Guidelines; NIST Special Publication: Gaithersburg, MD, USA, 2017.

- Manby, B. The Sustainable Development Goals and “Legal Identity for All”: “First, Do No Harm”. World Dev. 2021, 139, 105343.

- Madon, S.; Masiero, S. Digital Connectivity and the SDGs: Conceptualising the Link through an Institutional Resilience Lens. Telecommun. Policy 2025, 49, 102879.

- Lubis, S.; Purnomo, E.P.; Lado, J.A.; Hung, C.-F. Electronic Governance in Advancing Sustainable Development Goals: A Systematic Literature Review (2018–2023). Discov. Glob. Soc. 2024, 2, 77.

- Sanina, A.; Styrin, E.; Vigoda-Gadot, E.; Yudina, M.; Semenova, A. Digital Government Transformation and Sustainable Development Goals: To What Extent Are They Interconnected? Bibliometric Analysis Results. Sustainability 2024, 16, 9761.

- National Institute of Standards and Technology. NIST SP 800-63A-4: Digital Identity Guidelines—Identity Proofing and Enrollment; NIST: Gaithersburg, MD, USA, 2025.

- Çınar, O.; Doğan, Y. Novel Deepfake Image Detection with PV-ISM: Patch-Based Vision Transformer for Identifying Synthetic Media. Appl. Sci. 2025, 15, 6429.

- Sohail, S.; Sajjad, S.M.; Zafar, A.; Iqbal, Z.; Muhammad, Z.; Kazim, M. Deepfake Image Forensics for Privacy Protection and Authenticity Using Deep Learning. Information 2025, 16, 270.

- Bhattacharyya, S.; Jha, S.; Tharakunnel, K.; Westland, J.C. Data Mining for Credit Card Fraud: A Comparative Study. Decis. Support Syst. 2011, 50, 602–613.

- Adadi, A.; Berrada, M. Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access 2018, 6, 52138–52160.

- Kim, J.; Yum, K. Enhancing Continuous Usage Intention in E-Commerce Marketplace Platforms: The Effects of Service Quality, Customer Satisfaction, and Trust. Appl. Sci. 2024, 14, 7617.

- Farhat, R.; Yang, Q.; Ahmed, M.A.O.; Hasan, G. E-Commerce for a Sustainable Future: Integrating Trust, Product Quality Perception, and Online-Shopping Satisfaction. Sustainability 2025, 17, 1431.

- Ebrahimi, S.; Eshghi, K. A Meta-Analysis of the Factors Influencing the Impact of Security Breach Announcements on Stock Returns of Firms. Electron. Mark. 2022, 32, 2357–2380.

- Chen, S.J.; Tran, K.T.; Xia, Z.R.; Waseem, D.; Zhang, J.A.; Potdar, B. The Double-Edged Effects of Data Privacy Practices on Customer Responses. Int. J. Inf. Manag. 2023, 69, 102600.

- Nastoska, A.; Jancheska, B.; Rizinski, M.; Trajanov, D. Evaluating Trustworthiness in AI: Risks, Metrics, and Applications Across Industries. Electronics 2025, 14, 2717.

- Sebestyen, H.; Popescu, D.E.; Zmaranda, R.D. A Literature Review on Security in the Internet of Things: Identifying and Analyzing Critical Categories. Computers 2025, 14, 61.

- Abdullah, M.; Nawaz, M.M.; Saleem, B.; Zahra, M.; Ashfaq, E.b.; Muhammad, Z. Evolution Cybercrime—Key Trends, Cybersecurity Threats, and Mitigation Strategies from Historical Data. Analytics 2025, 4, 25.

- Federal Trade Commission. Consumer Sentinel Network Data Book 2023; FTC: Washington, DC, USA, 2024. Available online: https://www.ftc.gov/reports/consumer-sentinel-network-data-book-2023 (accessed on 12 November 2025).

- Statista. Cybercrime Expected to Skyrocket in Coming Years. Available online: https://www.statista.com/chart/28878/expected-cost-of-cybercrime-until-2027/?utm_source=chatgpt.com (accessed on 12 November 2025).

- Ghiurău, D.; Popescu, D.E. Distinguishing Reality from AI: Approaches for Detecting Synthetic Content. Computers 2025, 14, 1.

- Ramachandra, R.; Busch, C. Presentation Attack Detection Methods for Face Recognition Systems: A Comprehensive Survey. ACM Comput. Surv. 2017, 50, 1–37.

- Fan, Z.; Yan, Z.; Wen, S. Deep Learning and Artificial Intelligence in Sustainability: A Review of SDGs, Renewable Energy, and Environmental Health. Sustainability 2023, 15, 13493.

- Vinuesa, R.; Azizpour, H.; Leite, I.; Balaam, M.; Dignum, V.; Domisch, S.; Felländer, A.; Langhans, S.D. The role of artificial intelligence in achieving the Sustainable Development Goals. Nat. Commun. 2020, 11, 233.

- de Vries, A. The Growing Energy Footprint of Artificial Intelligence. Joule 2023, 7, 2191–2194.

- Alonso, Á.; Pozo, A.; Gordillo, A.; López-Pernas, S.; Munoz-Arcentales, A.; Marco, L.; Barra, E. Enhancing University Services by Extending the eIDAS European Specification with Academic Attributes. Sustainability 2020, 12, 770.

- Gregušová, D.; Halásová, Z.; Peráček, T. eIDAS Regulation and Its Impact on National Legislation: The Case of the Slovak Republic. Adm. Sci. 2022, 12, 187.

- Wihlborg, E. Secure electronic identification (eID) in the intersection of politics and technology. Int. J. Electron. Gov. 2013, 6, 143.

- Shemshi, V.; Jakimovski, B. Extended Model for Efficient Federated Identity Management with Dynamic Levels of Assurance Across eIDAS, REFEDS, and Kantara Frameworks for Educational Institutions. Information 2025, 16, 385.

- Weitzberg, K.; Cheesman, M.; Martin, A.; Schoemaker, E. Between Surveillance and Recognition: Rethinking Digital Identity in Aid. Big Data Soc. 2021, 8, 20539517211006744.

- Temoshok, D.; Choong, Y.-Y.; Galluzzo, R.; LaSalle, M.; Regenscheid, A.; Proud-Madruga, D.; Gupta, S.; Lefkovitz, N. NIST SP 800-63-4: Digital Identity Guidelines; NIST Special Publication: Gaithersburg, MD, USA, 2025.

- Inza, J. The European Digital Identity Wallet as Defined in the EIDAS 2 Regulation. In Governance and Control of Data and Digital Economy in the European Single Market; Law, Governance and Technology Series; Springer: Berlin/Heidelberg, Germany, 2025; pp. 433–452.

- Raji, I.D.; Smart, A.; White, R.N.; Mitchell, M.; Gebru, T.; Hutchinson, B.; Smith-Loud, J.; Theron, D.; Barnes, P. Closing the AI Accountability Gap: Defining an End-to-End Framework for Internal Algorithmic Auditing. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, 27–30 January 2020; pp. 33–44.

- McGregor, S. Preventing Repeated Real-World AI Failures by Cataloging Incidents: The AI Incident Database. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 15458–15463.

- Mökander, J.; Morley, J.; Taddeo, M.; Floridi, L. Ethics-Based Auditing of Automated Decision-Making Systems: Nature, Scope, and Limitations. Sci. Eng. Ethics 2021, 27, 44.

- Babaei, R.; Cheng, S.; Duan, R.; Zhao, S. Generative Artificial Intelligence and the Evolving Challenge of Deepfake Detection: A Systematic Analysis. J. Sens. Actuator Netw. 2025, 14, 17.

- Zhang, W.; Yang, D.; Wang, H. Data-Driven Methods for Predictive Maintenance of Industrial Equipment: A Survey. IEEE Syst. J. 2019, 13, 2213–2227.

- Kaye, J.; Whitley, E.; Lund, D.; Morrison, M.; Teare, H.; Melham, K. Dynamic consent: A patient interface for twenty-first century research networks. Eur. J. Hum. Genet. 2015, 23, 141–146.

- Abbasi, M.; Váz, P.; Silva, J.; Martins, P. Comprehensive Evaluation of Deepfake Detection Models: Accuracy, Generalization, and Resilience to Adversarial Attacks. Appl. Sci. 2025, 15, 1225.

- ISO/IEC 29115:2013; Information Technology—Security Techniques—Entity Authentication Assurance Framework. International Organization for Standardization: Geneva, Switzerland, 2013. Available online: https://www.iso.org/standard/45138.html (accessed on 12 November 2025).

- Namani, Y.; Reghioua, I.; Bendiab, G.; Labiod, M.A.; Shiaeles, S. DeepGuard: Identification and Attribution of AI-Generated Synthetic Images. Electronics 2025, 14, 665.

- Schwartz, R.; Vassilev, A.; Greene, K.; Perine, L.; Burt, A.; Hall, P. Towards a Standard for Identifying and Managing Bias in AI; US Department of Commerce, National Institute of Standards and Technology: Gaithersburg, MD, USA, 2022.

- European Union. Directive (EU) 2022/2555 on Measures for a High Common Level of Cybersecurity across the Union (NIS 2 Directive). Off. J. Eur. Union 2022, 12, 80–152. Available online: https://eur-lex.europa.eu/eli/dir/2022/2555/oj/eng (accessed on 12 November 2025).

- ISO 10002:2018; Quality Management—Customer Satisfaction—Guidelines for Complaints Handling in Organizations. International Organization for Standardization: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/71580.html (accessed on 12 November 2025).

- NIST. Measurement Guide for Information Security: Volume 1—Identifying and Selecting Measures; NIST Special Publication 800-55v1; NIST: Gaithersburg, MD, USA, 2024.

- Association of Certified Fraud Examiners (ACFE). Occupational Fraud 2024: A Report to the Nations; ACFE: Austin, TX, USA, 2024. Available online: https://legacy.acfe.com/report-to-the-nations/2024/ (accessed on 12 November 2025).

- ISO/IEC 30107-3:2023; Information Technology—Biometric Presentation Attack Detection—Part 3: Testing and Reporting. ISO/IEC: Geneva, Switzerland, 2023. Available online: https://www.iso.org/standard/79520.html (accessed on 12 November 2025).

- European Commission. Commission Implementing Regulation (EU) 2015/1502 of 8 September 2015 on Minimum Technical Specifications and Procedures for Assurance Levels for Electronic Identification Means. Off. J. Eur. Union 2015, L235, 7–20. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX%3A32015R1502 (accessed on 12 November 2025).

| Dimension | Positive Impacts on Sustainability | Negative Impacts on Sustainability |
|---|---|---|
| Social | | |
| Economic | | |
| Technological/Environmental | | |

| Category | Korea | European Union | United States |
|---|---|---|---|
| Core System | CI/DI | eIDAS | NIST SP 800-63 (Digital Identity Guidelines) |
| Personal Linkage Method | CI | National/EU-level eID infrastructure (eID Hub) | |
| Cross-Service Identity Recognition | CI | National/EU eID infrastructure | Always Required |
| Duplicate Registration Prevention | DI | None | Federated ID Provider |
| Legal Basis | Act on Promotion of Information and Communications Network Utilization and Information Protection | eIDAS Regulation | None |
| Personal Data Protection Principles | | Pseudonymization, minimal disclosure of personal information | |
| Key Features | | Unified authentication via national/EU-level trusted eID | |

| Domains | Current Audit Criteria | Proposed Improvement Plans | Expected Effects |
|---|---|---|---|
| Physical and Environmental Control | Operation of access control devices, retention of access logs for at least six months, installation and storage of CCTV footage, verification of disaster recovery center establishment, etc. | | Enables proactive threat detection and disaster prevention, moving beyond simple record retention and facility possession |
| Network and System Security Operations | Verification of traditional security infrastructure such as firewalls, IDS/IPS, and FDS, retention and periodic review of logs, etc. | | Enhances responsiveness to emerging attacks, zero-day vulnerabilities, and AI-driven threats |
| Personal Data Protection and User Rights | Verification of user consent procedures, adherence to data minimization principles, management of access, correction, deletion, and disposal processes, etc. | | Strengthens user data self-determination and ensures algorithmic transparency and accountability |
| Substitute Credential and CI Management | Management of CI/DI issuance, storage, and deletion, assignment and maintenance of CP codes, tracking of CI provision history | | Advances from procedural safety to intelligent forgery/presentation-attack detection and automated integrity assurance |
| Access Log Management | Retention of access logs for system administrators and users, periodic review and reporting, etc. | | Evolves from retrospective traceability to real-time response and automated risk management, reinforcing technical and operational sustainability |

| Metric | Formula | Data source | Cadence | Threshold |
|---|---|---|---|---|
| Explainability coverage | | Audit/logging system | Monthly | ≥99.0% |
| Fairness gap: FNR (False Negative Rate) | ΔFNR = ∣FNR_A − FNR_B∣, where FNR_g = FN_g/(FN_g + TP_g) for group g | Model evaluation logs with group labels | Quarterly | ≤1.0 pp |
| Example: if the false-negative rate for Group A is 1.8% and for Group B is 2.5%, then ΔFNR = ∣2.5 − 1.8∣ = 0.7 percentage points, which meets the illustrative target (≤1.0 pp). | | | | |
| Fairness gap: FPR (False Positive Rate) | ΔFPR = ∣FPR_A − FPR_B∣, where FPR_g = FP_g/(FP_g + TN_g) for group g | Model evaluation logs with group labels | Quarterly | ≤1.0 pp |
| Substantiated complaint rate | | Customer support logs | Monthly | <2/10 k |
| Incident-disclosure timeliness | | Incident reports | Per incident | ≤24 h |
| Accessibility coverage | | UX accessibility audits | Quarterly | ≥95.0% |
| Assisted-channel success rate | | Contact center/branch logs | Monthly | ≥90.0% |
| Usage/access gap | | Authentication logs | Monthly | ≤5.0 pp |
| Adverse-decision notice coverage | (# adverse automated decisions with notice incl. reasons & recourse / # adverse automated decisions) × 100 | Decision/notification logs | Monthly | ≥99.5% |
| Appeal resolution time | median (decision on appeal − appeal submission) | Appeals/case-management tickets | Monthly | ≤5 days |

| Metric | Formula | Data source | Cadence | Threshold |
|---|---|---|---|---|
| Fraud loss rate | | Fraud claims register | Monthly | ≤org target |
| MTTD/MTTR | MTTD = mean (Detection Time − Incident Start); MTTR = mean (Restore Time − Incident Start) | Incident-response tickets | Monthly | MTTD ≤ 10 min; MTTR ≤ 30 min |
| On-time remediation rate | | Audit follow-up log | Monthly | ≥95.0% |
| False-positive block rate | | Risk engine logs | Monthly | ≤0.5% |
| Risk score distribution | L: <0.30, M: 0.30–0.60, H: >0.60 | Scoring logs | Weekly | High ≤ 15% |
| Profile conformance rate | | Control checklist | Quarterly | ≥98% overall, 100% critical |

| Metric | Formula | Data Source | Cadence | Threshold |
|---|---|---|---|---|
| PAD performance (AUC, FAR/FRR) | AUC, FAR/FRR under attack conditions (spoof, replay, deepfake) | PAD evaluation logs | Monthly | AUC ≥ 0.98; FAR ≤ 1.0%; FRR ≤ 2.0% |
| DR/BCP drill success rate | | DR/BCP drill records | Quarterly | ≥95% |
| RTO/RPO adherence | | DR test logs | Quarterly | ≥95% |
| Energy per 1 k verifications | (Energy consumed in kWh / # verifications) × 1000 | Metering/billing | Monthly | ≤0.50 kWh/1 k |
| Example: if a service consumes 1.60 kWh while processing 3700 verifications over a week, then Energy/1 k = (1.60 kWh/3700) × 1000 = 0.43 kWh per 1000 verifications, satisfying the illustrative target (≤0.50). | | | | |
| Carbon intensity | | Electricity use × grid factors | Quarterly | ≤org target |
| WUE | | Data-center facilities metrics | Quarterly | ≤1.5 L/kWh |
| PUE | | DC utilities | Monthly | ≤1.30 |
| Time-to-contain | avg (Containment Time − Incident Start) | IR tickets | Monthly | ≤15 min |
| Drift detection frequency | | Model-monitoring system | Weekly | <5/week |

| Metric | Formula | Data Source | Cadence | Threshold |
|---|---|---|---|---|
| Conformance score | | Conformance test reports | Quarterly | Overall ≥ 98% |
| Mutual-recognition service count | # services operating under mutual recognition | MRA agreements/MoUs | Quarterly | ≥roadmap target |
| Gap-closure lead time (days) | Remediation Completion Date − Gap Identification Date | Remediation tracker (JIRA/GRC) | Monthly | Median ≤ 30 days |
| Cross-border pilots (count/duration) | Count; total pilot-days | Project/PoC reports | Quarterly | ≥2 pilots/quarter or ≥60 pilot-days |
| LoA mapping coverage (%) | | LoA mapping register | Quarterly | 100% of in-scope controls |


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, J. Enhancing Personal Identity Proofing Services Through AI for a Sustainable Digital Society in Korea. Sustainability 2025, 17, 10486. https://doi.org/10.3390/su172310486
