1. Introduction

University educational quality does not rely solely on academic processes, curricula, or research activities. It is also conditioned by the physical environments that support these functions. In this sense, infrastructure becomes a visible component of institutional quality, capable of facilitating or limiting the experiences of students and faculty. Aware of this, universities increasingly allocate resources to buildings and services with the expectation of improving learning, research, and campus life. Despite this trend, in countries such as Peru, little is yet known about the relationship between investment in infrastructure and the actual quality of academic services [1,2].

Within this discussion, the notion of constructability acquires a central role. The Construction Industry Institute defines it as the integration of construction expertise into all phases of a project, from design through maintenance, to maximize outcomes [3]. This approach has been applied in different productive sectors, but its potential within universities is particularly noteworthy, as it allows for the assessment not only of the technical efficiency of projects but also of their long-term sustainability [4,5].

In the Peruvian context, studies on constructability have been partial and generally concentrated on the initial stages of projects [6]. However, the most valuable feedback on the quality of a building is obtained during its operational phase, when end users can express perceptions about its functionality and performance [7,8]. This highlights the importance of integrating post-occupancy evaluation (POE) with constructability analysis to capture both technical and user-centered perspectives of infrastructure performance.

Internationally, this idea has also gained importance. For instance, in Uganda, the quality of classrooms and university services has been shown to significantly influence student engagement [9]. In Mexico, studies have demonstrated that continuous improvement initiatives focused on infrastructure directly affect satisfaction and loyalty toward the institution [10]. In Lima, recent analyses confirmed that perceptions of service quality, including physical facilities, almost fully explain levels of student satisfaction and contribute to strengthening institutional image [11]. This is not a phenomenon limited to Latin America. Research conducted in Pakistani universities also identifies physical infrastructure as a key determinant of student satisfaction and persistence within the educational system [12]. These examples reinforce that the built environment in higher education has measurable impacts on learning outcomes, student loyalty, and institutional reputation.

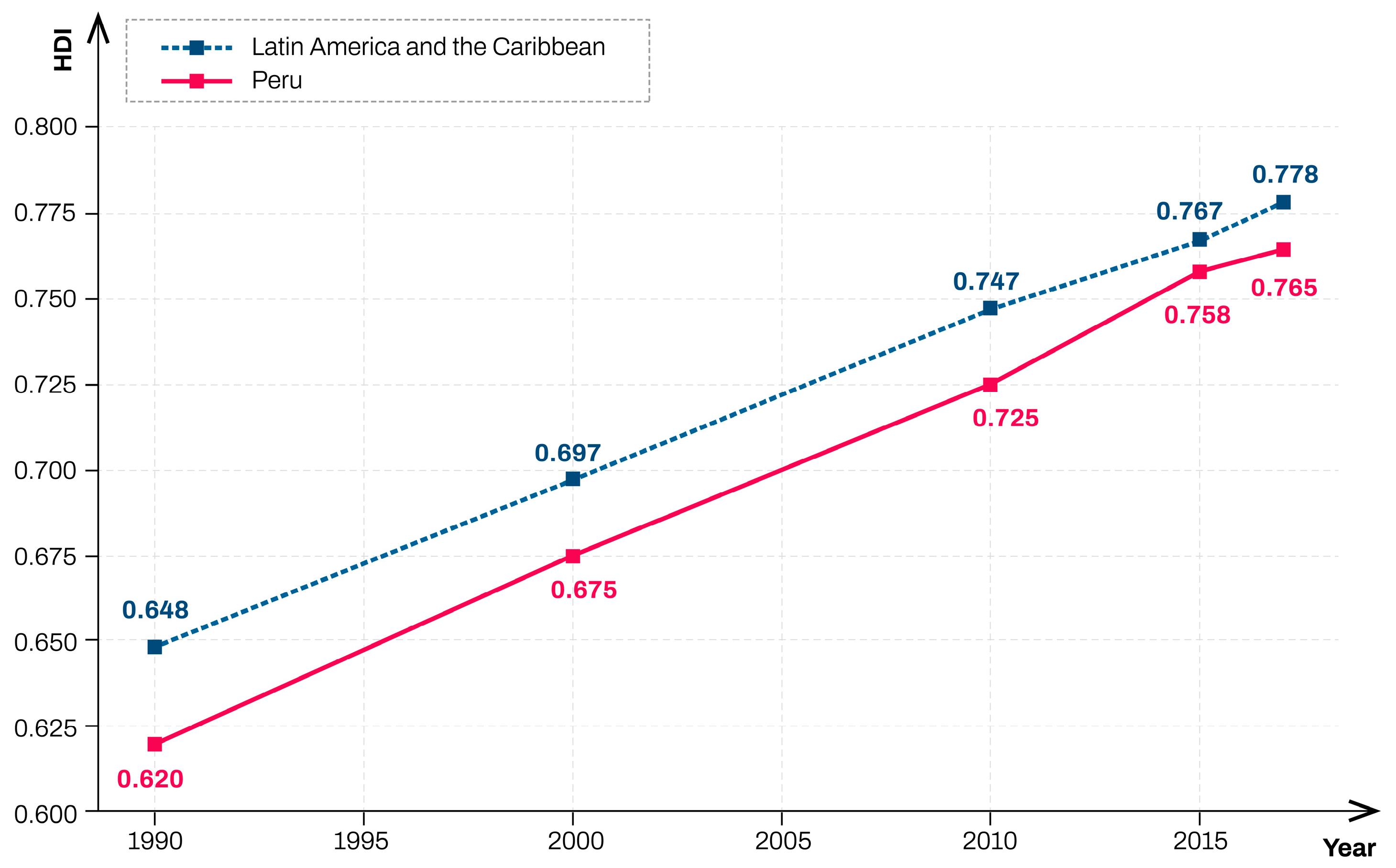

On a broader scale, the debate on university quality is linked to indicators of human development and competitiveness. The United Nations Development Programme reported that Peru reached a Human Development Index of 0.740 in 2017 (Figure 1), a level considered high but still below that of other countries in the region [13]. Similarly, the World Economic Forum reported a decline in the country’s competitiveness ranking, underscoring weaknesses in infrastructure, innovation, and higher education [14].

Table 1 presents a regional comparison of competitiveness, human development, and higher education enrollment indicators in selected Latin American countries. While Chile and Uruguay display high Human Development Index (HDI) values and broader access to higher education, Peru still faces challenges in expanding university enrollment and ensuring quality standards in educational infrastructure. This situation is reflected in the regional comparison, where Peru occupies an intermediate position in competitiveness and human development, but records lower university enrollment rates than countries with comparable economies.

In the Peruvian case, the National Superintendence of Higher Education (SUNEDU) has emphasized that economic development cannot be achieved without educational development, and that infrastructure constitutes an essential criterion for licensing and accreditation [15]. This requirement responds to the need for universities to provide suitable environments for teaching and research. Private institutions have also followed this trend, incorporating innovation, sustainability, and collaborative spaces into their campuses [16].

Beyond the Peruvian case, the literature on constructability highlights additional benefits such as cost reduction, productivity improvement, and the extension of the service life of buildings. Previous research has shown that applying constructability principles across all phases of a project generates sustained positive impacts [15,16].

By combining users’ perceptions with technical and economic evaluations, the study provides a replicable protocol for universities to assess the sustainability and service performance of their facilities. Although limited to a single campus, the approach contributes to the ongoing debate on how to connect infrastructure investment with service quality in higher education.

In this context, the present study seeks to contribute by analyzing the relationship between post-construction constructability efficiency and perceived service quality in a Peruvian university campus. Constructability was assessed across six operational dimensions, while service quality was evaluated through users’ satisfaction with facilities. The objective is not only to provide insights for the Peruvian higher education system but also to propose a replicable methodological framework for similar contexts in the region.

Constructability and POE can be framed as complementary stages of the same performance cycle. Constructability principles—buildability, construction knowledge feedback, and delivery efficiency—establish the technical preconditions during design and construction, whereas POE captures users’ post-handover perceptions of service quality (comfort, functionality, and usability). Linking these stages enables an examination of whether upstream constructability efficiency is reflected downstream in perceived service quality, thereby providing a coherent explanatory framework for facility performance.

2. Materials and Methods

2.1. Methodological Scheme

The study was conducted using an applied and explanatory approach, aimed at examining the relationship between constructability in the maintenance of university buildings and the perceived quality of educational services. This type of research is particularly relevant in the context of Latin American higher education, where infrastructure represents a decisive criterion in processes of institutional licensing and accreditation [15]. To address this objective, a mixed-method framework was employed, integrating both quantitative and qualitative techniques, which made it possible to contrast findings from multiple perspectives and strengthen the validity of the results [6].

Figure 2 presents the methodological scheme that synthesizes the phases and procedures applied in the study. As illustrated, the process sequentially integrates the literature review, data collection, application of instruments, development of the analysis, and the discussion stage. This articulation made it possible to establish a clear correspondence between the stated objectives, the information sources employed, the selection criteria applied, and the results obtained. In this way, the internal coherence of the methodological design was ensured, and the validity of the findings was reinforced by linking both the technical evaluation of infrastructure and users’ perceptions within a framework of educational sustainability.

2.2. Study Design

The research was designed as a non-experimental, cross-sectional study with descriptive and comparative procedures. This type of design has proven to be appropriate for evaluating educational and architectural phenomena when it is not possible to directly manipulate the variables of interest [19]. This design was selected because it allowed the evaluation of real academic spaces without experimental intervention, focusing on the relationships between post-construction constructability and users’ perceived quality at a specific point in time.

Besides the global constructability efficiency index and the two post-handover covariates (economic gap and operational age), the analysis also considered the remaining constructability dimensions outlined in the methodology—namely: (i) requirements compliance, (ii) design conformity, (iii) architectural comfort (ILU–VEN–CIR: illumination, ventilation, circulation), (iv) social impact, and (v) environmental impact. Given the ordinal nature of the data and N = 15 buildings, Spearman’s ρ was used to assess monotonic associations with service quality. Exact p-values are reported; sensitivity checks with category collapsing (low = 1–2; high = 3–4) were also performed.
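The rank-based association and the category-collapsing check described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the study's SPSS or R syntax. The function names are hypothetical, and the 15 paired scores are invented for demonstration: their marginal counts follow the percentages reported in the Results, but the pairing is an assumption, so the output is not expected to reproduce the published coefficients.

```python
from statistics import mean

def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        tied_rank = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            ranks[order[k]] = tied_rank
        pos = end + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on tie-aware ranks."""
    return pearson(average_ranks(x), average_ranks(y))

def collapse(level):
    """Sensitivity recoding: levels 1-2 -> 0 (low), levels 3-4 -> 1 (high)."""
    return 0 if level <= 2 else 1

# Hypothetical ordinal scores (1-4) for 15 buildings; NOT the study's data.
efficiency = [1, 1, 1, 1, 1, 1, 1, 2, 3, 3, 3, 4, 4, 4, 4]
quality = [1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 4, 4, 4, 4, 4]

rho_full = spearman_rho(efficiency, quality)
rho_collapsed = spearman_rho([collapse(v) for v in efficiency],
                             [collapse(v) for v in quality])
print(f"rho (four levels) = {rho_full:.3f}")
print(f"rho (collapsed)   = {rho_collapsed:.3f}")
```

Computing rho on tie-aware ranks matters here because four-point scales over 15 buildings inevitably produce many ties; the simplified formula based on squared rank differences is only exact when there are none.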

2.3. Multiple Case Design, Participants and Context

The study was conducted at Ricardo Palma University, a private institution in Lima that has carried out a sustained process of investment in academic infrastructure over the past decades. Classrooms, laboratories, and service buildings that accommodate many users and represent different types of academic spaces were selected. The institutional portfolio comprised 45 infrastructure projects developed between 1996 and 2016. However, only 15 of them met the conditions required for comparative analysis, namely, being fully completed, of significant investment, and with complete technical and post-occupancy documentation. These projects were therefore selected as the final sample, representing all comparable buildings within the institutional stock.

The study adopted a multiple-case design using replication logic, selecting buildings expected to provide literal and theoretical replications across types rather than relying on statistical sampling [20,21]. From the university’s stock of 45 projects (1996–2016), a criterion-based selection of 15 buildings was conducted by expert judgment to ensure coverage of key academic/service typologies, usage intensity, construction periods, structural systems, and documentation availability. In line with post-occupancy evaluation (POE) practice, the building was the unit of analysis, and occupant responses were aggregated at building level for cross-building benchmarking (WBDG POE overview; CBE Occupant Survey and benchmarking; BUS Methodology). Given the technical measurement load (on-site inspections and constructability audits plus user surveys), the 15-building panel is substantial relative to typical campus POEs, which often examine one or two buildings in depth [22]. This approach ensured that the subset of 15 cases was comprehensive rather than reduced, since it includes every project that fulfilled the comparability criteria.

The population consisted of students, faculty, and administrative staff, who constitute the main users of university infrastructure. In total, 120 students, 25 faculty members, and 15 administrative/technical staff were surveyed, applying purposive non-probabilistic sampling. Inclusion criteria considered regular users of the selected spaces with at least one year of experience. Exclusion criteria removed occasional users or visitors without permanent activities in the buildings. Each building had a different group of respondents, corresponding to its regular occupants, and no participant was surveyed in more than one facility. Given that the unit of analysis in this study was the building rather than the individual respondent, survey responses (students, faculty, and staff) were aggregated at the building level. For each of the six dimensions, we calculated the mean Likert score per building, combining all valid responses from its regular users. This procedure generated a single constructability profile and a single perceived service quality score for each of the 15 buildings. This aggregation is consistent with post-occupancy evaluation approaches, where the building is considered the analytic unit. Nevertheless, it is acknowledged that this method reduces individual variability, and thus results should be interpreted as exploratory and building-centered rather than individual-level findings.

Survey responses from students, faculty, and administrative staff were aggregated at the building level by calculating mean scores for each of the six dimensions. This aggregation generated a single constructability and service quality profile per building (N = 15), which allowed alignment with the technical assessments conducted at the same level of analysis.
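The building-level aggregation described above can be illustrated with a short Python sketch. The record layout, building identifiers, and scores below are hypothetical and serve only to show the pooling step (all respondent groups combined, one mean per building and dimension); they are not taken from the study's dataset.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: (building_id, respondent_group, dimension, Likert score 1-4).
responses = [
    ("B01", "student", "social_impact", 3),
    ("B01", "faculty", "social_impact", 4),
    ("B01", "staff", "social_impact", 2),
    ("B01", "student", "time", 2),
    ("B02", "student", "social_impact", 1),
    ("B02", "student", "social_impact", 2),
    ("B02", "faculty", "time", 4),
]

def aggregate_by_building(rows):
    """Mean Likert score per (building, dimension), pooling all respondent groups."""
    pooled = defaultdict(list)
    for building, _group, dimension, score in rows:
        if 1 <= score <= 4:  # keep only valid four-point responses
            pooled[(building, dimension)].append(score)
    profiles = defaultdict(dict)
    for (building, dimension), scores in pooled.items():
        profiles[building][dimension] = mean(scores)
    return dict(profiles)

profiles = aggregate_by_building(responses)
for building, dims in sorted(profiles.items()):
    print(building, dims)
```

Because each building ends up with a single score per dimension, within-building variability between students, faculty, and staff is no longer visible after this step, which is the trade-off acknowledged in the text.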

2.4. Instruments and Data Collection

Three main instruments were employed for data collection. The first was a perception survey administered to students and faculty, structured on a four-point Likert scale, which is widely used in studies assessing perceptions of educational quality and built environments [23]. This instrument contained 30 items grouped into six dimensions: conformity with quality requirements, conformity with initial design, economic valuation, time, social impact, and environmental impact.

The second instrument consisted of a technical evaluation form developed based on constructability principles proposed by the Construction Industry Institute and adapted to the educational context [24]. This form included indicators related to material durability, cost efficiency, and regulatory compliance, aligned with the National Building Regulations (RNE) and ISO 21001.

The third instrument was an observation guide applied during site visits, which allowed for the structured recording of the physical, spatial, and service conditions of the facilities under study. All instruments were validated by a panel of five experts in architecture and educational management, obtaining Aiken’s V coefficients above 0.80. Reliability was tested through Cronbach’s alpha, reaching values >0.85 across all survey dimensions. Constructability efficiency was operationalized across six dimensions: compliance with quality requirements, adherence to the initial design, economic valuation, execution time, social impact, and environmental impact. Each dimension was scored on a four-point Likert-type scale based on technical evaluations and expert judgment. Service quality was measured through users’ satisfaction surveys, also on a four-point scale, with indicators related to comfort, functionality, and adequacy of facilities [25,26].
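For readers unfamiliar with the two validation statistics mentioned above, the following Python sketch shows their standard textbook formulas. The expert rating scale is not specified in the text, so a four-point scale is assumed here, and all ratings and item scores are hypothetical.

```python
from statistics import variance

def aikens_v(ratings, lowest=1, categories=4):
    """Aiken's V for one item: sum(r - lowest) / (n * (categories - 1)).

    Assumes experts rate relevance on a scale with `categories` levels
    starting at `lowest` (an assumption; the source does not state the scale).
    """
    n = len(ratings)
    return sum(r - lowest for r in ratings) / (n * (categories - 1))

def cronbach_alpha(item_scores):
    """Cronbach's alpha from a list of items, each a list of respondent scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(item_scores)
    item_vars = [variance(item) for item in item_scores]
    totals = [sum(per_respondent) for per_respondent in zip(*item_scores)]
    return k / (k - 1) * (1 - sum(item_vars) / variance(totals))

# Hypothetical ratings from a five-expert panel for a single item.
expert_ratings = [4, 4, 3, 4, 3]
print(f"Aiken's V = {aikens_v(expert_ratings):.3f}")

# Hypothetical scores: three items answered by six respondents.
items = [
    [3, 4, 2, 4, 1, 3],
    [3, 4, 2, 3, 1, 3],
    [4, 4, 2, 4, 2, 3],
]
print(f"Cronbach's alpha = {cronbach_alpha(items):.3f}")
```

Aiken's V ranges from 0 to 1 and reaches 1 only when every expert gives the top rating, which is why a threshold such as 0.80 indicates strong but not unanimous agreement.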

2.5. Data Analysis

Given the relatively small sample size (N = 15 buildings) and the ordinal nature of the data, non-parametric statistics were selected. The analysis combined quantitative and qualitative procedures. On the quantitative side, descriptive statistics were applied to characterize the variables, along with inferential tests using a 5% significance level. Spearman’s rank correlation (rho) was used to examine associations between constructability and service quality, and bootstrap resampling (1000 iterations) provided robust confidence intervals under small-sample conditions and reduced the risk of inflated significance levels. On the qualitative side, content analysis was applied to the observation records and the open-ended responses of the participants. Triangulation was achieved by integrating user perceptions, technical evaluations, and observational data, strengthening internal validity and allowing cross-checking of results.
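One common way to obtain bootstrap confidence intervals for a rank correlation is the percentile method, sketched below in plain Python. The text does not state which bootstrap variant was used, so the percentile interval is an assumption, as are the function names, the fixed seed, and the invented 15-building scores.

```python
import random
from statistics import mean

def average_ranks(values):
    """1-based ranks with ties replaced by the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        for k in range(pos, end + 1):
            ranks[order[k]] = (pos + end) / 2 + 1
        pos = end + 1
    return ranks

def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of tie-aware ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5

def bootstrap_ci(x, y, iterations=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for rho, resampling buildings with replacement."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    while len(estimates) < iterations:
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        ys = [y[i] for i in idx]
        if len(set(xs)) < 2 or len(set(ys)) < 2:
            continue  # rho is undefined for a constant resample; draw again
        estimates.append(spearman_rho(xs, ys))
    estimates.sort()
    lower = estimates[round(alpha / 2 * iterations)]
    upper = estimates[round((1 - alpha / 2) * iterations) - 1]
    return lower, upper

# Hypothetical ordinal scores (1-4) for 15 buildings; NOT the study's data.
efficiency = [1, 1, 1, 1, 1, 1, 1, 2, 3, 3, 3, 4, 4, 4, 4]
quality = [1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 4, 4, 4, 4, 4]

low, high = bootstrap_ci(efficiency, quality)
print(f"95% percentile bootstrap CI for rho: [{low:.3f}, {high:.3f}]")
```

Resampling whole buildings (pairs of scores) rather than individual values preserves the pairing between constructability and service quality, which is the quantity of interest.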

2.6. Statistical Analysis Plan

The analysis began with the description of the variables using measures of central tendency and dispersion, complemented by normality tests appropriate to the sample size [27]. Associations were estimated with Spearman’s correlations using two-tailed tests at 5% significance. Confidence intervals were estimated through non-parametric bootstrap. Effect sizes were calculated to improve interpretation. Sensitivity analysis included partial correlations controlling for contextual variables (faculty, building age).
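A first-order partial correlation of the kind mentioned in the sensitivity analysis can be computed directly from three pairwise coefficients. The sketch below applies the standard formula to Spearman coefficients; this is one possible approach and is an assumption, since the text does not describe the exact procedure. The function name is hypothetical, and the covariate correlations in the example are invented; only the value 0.857 comes from the reported results.

```python
from math import sqrt

def partial_corr(r_xy, r_xz, r_yz):
    """Correlation between x and y after removing the linear effect of z.

    r_xy.z = (r_xy - r_xz * r_yz) / sqrt((1 - r_xz^2) * (1 - r_yz^2))
    """
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

# x = constructability efficiency, y = service quality, z = building age.
rho_xy = 0.857  # reported overall association
rho_xz = 0.40   # hypothetical: efficiency vs. building age
rho_yz = 0.45   # hypothetical: service quality vs. building age

print(f"partial rho = {partial_corr(rho_xy, rho_xz, rho_yz):.3f}")
```

If the covariate is unrelated to both variables, the partial coefficient equals the original one; the adjustment only matters when the covariate correlates with both.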

Analyses were conducted using SPSS v27 and R v4.2.2; syntax files and replication codes were archived to ensure reproducibility [28,29].

3. Results

3.1. Overall Relationship Between Constructability Efficiency and Service Quality

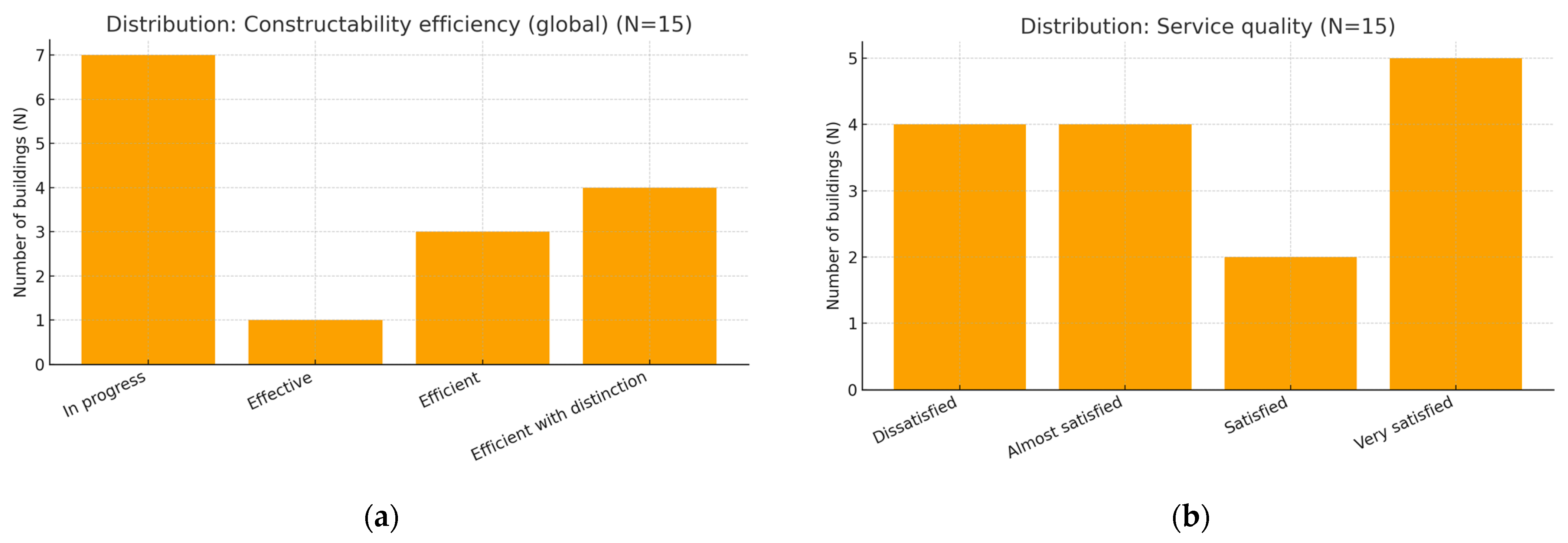

The descriptive analysis indicates that constructability efficiency is predominantly distributed across intermediate and high levels: 46.7% of projects were classified as “effective in process,” 6.7% as “effective,” 20.0% as “efficient,” and 26.6% as “efficient with distinction.” Concurrently, user-reported service quality displayed a bimodal distribution: 27% “unsatisfied,” 27% “somewhat satisfied,” 13% “satisfied,” and 33% “very satisfied.” This pattern suggests that, as shown in Table 2 and Figure 3, although one-third of the cases achieved high levels of satisfaction, more than half remained at intermediate or low levels.

The contingency analysis highlights a clear alignment between high efficiency and positive service evaluations: all “very satisfied” observations occurred in projects classified as “efficient” or “efficient with distinction,” whereas “unsatisfied” and “somewhat satisfied” cases were exclusively associated with lower efficiency categories.
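A contingency table of this kind can be built by counting paired category codes. The Python sketch below uses invented pairings for 15 buildings: the row and column totals follow the percentages reported above, but the cell-level pairing is an assumption chosen only to be consistent with the stated pattern, and it should not be read as the study's Table 2.

```python
from collections import Counter

EFFICIENCY = {1: "effective in process", 2: "effective",
              3: "efficient", 4: "efficient with distinction"}
QUALITY = {1: "unsatisfied", 2: "somewhat satisfied",
           3: "satisfied", 4: "very satisfied"}

# Hypothetical paired codes for 15 buildings; NOT the study's data.
efficiency = [1, 1, 1, 1, 1, 1, 1, 2, 3, 3, 3, 4, 4, 4, 4]
quality = [1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 4, 4, 4, 4, 4]

def crosstab(rows, cols, levels=(1, 2, 3, 4)):
    """Count buildings in each (row level, column level) cell."""
    counts = Counter(zip(rows, cols))
    return [[counts[(r, c)] for c in levels] for r in levels]

table = crosstab(efficiency, quality)
print("columns:", list(QUALITY.values()))
for level, row in zip(EFFICIENCY, table):
    print(f"{EFFICIENCY[level]:<28}", row)
```

Reading across a row shows how satisfaction is distributed within one efficiency class; empty cells in the upper-right and lower-left corners correspond to the alignment described in the text.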

Spearman correlation analysis (Table 3) confirms a strong positive association between overall constructability efficiency and service quality (ρ = 0.857; p < 0.001) [30,31], indicating that higher post-construction efficiency translates into higher perceived service quality.

Given the small sample size (N = 15), however, this result should be interpreted as exploratory evidence rather than a definitive conclusion.
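As a rough plausibility check on the reported significance level, the usual t approximation for a rank correlation can be applied to the overall coefficient. This is offered only as an illustration under that approximation; the study reports exact p-values, which may have been obtained by a different procedure.

$$
t = \rho\,\sqrt{\frac{N-2}{1-\rho^{2}}} = 0.857\,\sqrt{\frac{13}{1-0.857^{2}}} \approx 0.857 \times 7.00 \approx 6.00
$$

With N − 2 = 13 degrees of freedom, the two-tailed critical value at the 0.001 level is approximately 4.22, so t ≈ 6.00 is consistent with the reported p < 0.001.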

3.2. Economic Gap (Commercial Value—Present Cost) and Service Quality

The economic gap indicator shows a strong skew toward high-performance levels: 73.3% of projects were classified as “efficient with distinction,” 6.7% as “efficient,” and 20% as “effective in process.” Nevertheless, this concentration at the upper levels did not consistently translate into higher satisfaction, as some “unsatisfied” responses were also recorded in buildings with favorable economic gap scores.

In Table 4, contingency analysis reveals that “very satisfied” ratings are concentrated in high-gap categories, while “unsatisfied” and “somewhat satisfied” responses are more prevalent when the gap is low. However, variability was observed even among high-gap projects, suggesting that economic efficiency alone does not fully explain user perceptions.

The Spearman correlation was positive and statistically significant (ρ = 0.637; p = 0.011), suggesting that better commercial value–cost relationships are associated with higher service perceptions [27], although the effect size was lower compared to the overall constructability–service relationship.

3.3. Operational Age and Service Quality

The operational-age typology indicates a relatively modern building stock: 46.7% classified as “Type 4 (modern),” 40% as “Type 3 (almost modern),” and 13.3% as “Type 2 (slightly older),” with no projects in “Type 1 (old).”

In Table 5, comparison with service quality showed that more modern buildings tended to receive higher satisfaction scores. However, this relationship was not uniform, as some recently built projects were still rated as “unsatisfied,” suggesting that operational age only partially explains user perceptions.

3.4. Architectural Standards (Lighting, Ventilation, and Circulation or ILU-VEN-CIR) and Service Quality

Frequency analysis reveals that 53.3% of projects did not reach comfortable levels in lighting, ventilation, and circulation, while 46.7% were perceived as comfortable. This suggests that, despite recent investments, several facilities still face limitations in basic habitability conditions.

In Table 6, contingency tables indicate that “very satisfied” responses are concentrated in projects with high standards, while dissatisfaction is associated with lower-standard projects. The Spearman correlation was positive and significant (ρ = 0.603; p ≈ 0.008), indicating an association between architectural conditions and user satisfaction [32]. Nevertheless, this finding should be considered exploratory, as the limited sample size (N = 15) may inflate the strength of association.

3.5. Summary of Associations or Synthesis of Findings

The analysis of post-construction technical variables and their relationship with service quality reveals a consistent and significant pattern across all dimensions. Higher constructability efficiency, more modern operational age, favorable economic gap, and improved architectural standards are all positively associated with increased user satisfaction. Among these variables, overall constructability efficiency shows the strongest correlation, positioning it as a central factor within this specific case study [33] (Table 7).

These results indicate that post-construction performance cannot be understood through a single dimension alone. Although operational age, economic efficiency, and environmental comfort contribute to user satisfaction, their combined integration within an efficient framework amplifies the effect.

Nevertheless, given the reduced sample size (N = 15), these associations should be interpreted as exploratory evidence. They highlight trends that merit validation in larger and more diverse samples.

From a practical standpoint, the findings point to the relevance of monitoring closure processes, preventive maintenance, and basic comfort conditions (lighting, ventilation, circulation) [34]. While these strategies appear promising in this case, further research is required to determine their generalizability across higher education facilities.

4. Discussion

4.1. Integrating Constructability and POE

The present study examined the association between post-construction technical variables and service quality in 15 university buildings. The findings indicate that higher constructability efficiency, favorable economic gaps, more modern operational age, and improved architectural standards are positively associated with user satisfaction.

These results are consistent with prior literature highlighting the influence of post-occupancy performance on user perception and sustainability outcomes. However, the contribution of this study lies less in the discovery of new causal relationships and more in the proposal of a methodological framework that integrates constructability assessment with post-occupancy evaluation in higher education facilities. This dual approach offers an exploratory tool that other institutions can adapt and test.

Despite the observed statistical significance, the results must be interpreted with caution. The small sample size (N = 15) increases the risk of inflated correlation coefficients and limits the generalizability of the findings. Additionally, the cross-sectional and non-experimental design does not allow for strong causal claims. For this reason, the study should be understood as an exploratory case analysis that identifies patterns and methodological pathways rather than definitive evidence.

The extended analysis indicates that multiple constructability dimensions—not only the global index—track with service quality in a coherent direction. Technical compliance (requirements/design) and architectural comfort show the clearest gradients, whereas social and environmental indicators contribute modestly yet consistently. This strengthens the interpretation of constructability–POE integration as a portfolio of mutually reinforcing levers, rather than a single-metric effect.

The practical implications, however, are noteworthy. The associations observed suggest that facility managers should not treat economic efficiency, operational age, or environmental comfort in isolation. Instead, these variables gain explanatory power when integrated into a holistic constructability framework. This reinforces the importance of considering both technical and experiential dimensions in post-construction evaluations, echoing trends in the facility management and sustainability literature.

4.2. Beyond the Global Perspective

Across regions, quantitative POEs consistently link campus infrastructure to user satisfaction. In the UAE, 55% of occupants felt too cold in winter and 99% of recorded temperatures were below standards, with 45% reporting “stuffy air” and 30% headaches; these conditions yielded overall “slightly dissatisfied” ratings and clear correlations between IEQ defects and lower comfort [35]. In Saudi Arabia, only 36% were satisfied with temperature (the lowest of eight IEQ factors) versus up to 73% satisfied with electric lighting; acoustics also underperformed, and maintenance drew mixed views (48% approval; about one-third dissatisfied) [36]. In Nigeria, a survey of four campus buildings found high user ratings for quietness, lighting, cleanliness, and surrounding services, but flagged inconsistent technical quality and low POE awareness as barriers [37]. In Australia, an 11-factor survey comparing a 1996 dorm to newer residences found subpar thermal and acoustic comfort in both, with the older building significantly worse on IAQ, lighting, maintenance/management, layout/furniture, and privacy, areas where newer facilities scored much higher [38]. Synthesizing these results, thermal and acoustic comfort repeatedly emerge as the weakest areas, maintenance and cleanliness as pivotal, and upgrades to design, construction, and operations as measurably improving satisfaction. These patterns mirror the Peruvian case, where higher post-construction constructability aligns with better user ratings.

In Italy, a survey of 381 dorm residents reported overall satisfaction of 3.27/5, with staff courtesy at 3.44, study rooms at 3.40, and room temperature at 3.3, but lower scores for maintenance (2.6) and laundry (2.4) [39]. A UK/Saudi smart-building POE using 49 indicators (35 respondents) found favorable overall performance yet persistent ventilation/thermal control and daylight shortfalls affecting satisfaction [40]. In Turkey, a study of 685 students showed that the physical environment of educational spaces was among the top contributors to student QoL and satisfaction in structural models, and a meta-analysis of 428 studies estimated a medium effect size (Cohen’s d = 0.45) for the effect of facility quality on student satisfaction [41]. Taken together, this evidence quantifies meaningful links between infrastructure/IEQ and satisfaction, while our Peruvian case shows a stronger association, likely reflecting context and measurement differences.

Beyond the Latin American evidence, recent global scholarship shows higher education increasingly adopting “smart campus” paradigms (integrating IoT sensing, BIM/Digital Twins, and AI-driven analytics) to optimize operations, sustainability, and learning outcomes at scale. Conceptual and scoping reviews synthesize governance, interoperability, cybersecurity, and data-ethics enablers for smart campuses and propose frameworks that align facility management with pedagogical goals and carbon-reduction targets [30,31]. Empirical implementations of Digital Twin–enabled facility management in university settings demonstrate measurable gains in energy management, predictive maintenance, and service quality, positioning campuses as living laboratories for smart-infrastructure innovation [32,42]. In parallel, comprehensive reviews in smart education document how intelligent technologies, such as learning analytics, adaptive systems, and generative AI, are reshaping didactics, assessment, and student engagement, linking infrastructure digitalization to academic performance and institutional resilience [43]. Collectively, this literature shows that AI-enabled, digital-twin campuses can improve sustainability, operational efficiency, and educational quality.

4.3. Limitations and Future Research

This single-institution case study draws on a rich, building-level dataset (N = 15) assembled from two decades of project records and multi-stakeholder surveys. The single-site scope limits external validity and precludes complex models, though the campus-scale portfolio remains substantive [44]. To manage uncertainty, we employed non-parametric statistics, reported exact p-values, and ran sensitivity checks; findings are thus indicative for similar contexts, rather than definitive for all settings.

The framework has six domains, but the results quantify associations only for those with complete, comparable records across all 15 buildings (economic gap, operational age/typology, and architectural comfort, ILU–VEN–CIR). For requirements compliance, design conformity, social impact, and environmental impact, project-level data were not consistently available in analyzable form for the full portfolio, precluding domain-specific correlation and contingency analyses. As a result, evidence for these four domains remains descriptive within the aggregate constructability measure, and external validity should be interpreted cautiously.

Future studies should test AI-based adaptive buildings [45], where real-time data and machine learning adjust lighting, ventilation, and space allocation to optimize user comfort and resource efficiency.

Recent scholarship highlights that leveraging smart campus technologies, notably AI-driven analytics, digital twin models [46], and IoT sensor networks, can significantly enhance post-construction facility performance and service quality in university settings. These tools enable a shift from reactive maintenance to proactive management: for example, digital twin platforms allow facility managers to visualize and simulate campus operations in real time, improving decision-making in space utilization, energy management, and scheduling [32,47]. The integration of AI and IoT has been shown to increase operational efficiency and sustainability while also fostering more adaptive, user-responsive environments [48]. In effect, a “smart campus” functions as a living laboratory where building performance data and user feedback continuously inform each other, leading to higher user satisfaction and comfort [49]. This theoretical perspective suggests that constructability in the post-construction phase is no longer limited to physical attributes; it now encompasses digital constructability, i.e., the capacity of built infrastructure to interface with intelligent systems for self-monitoring and improvement. By embedding these technologies, universities can transform traditional facilities into agile, sustainable, and service-oriented campuses, aligning technical quality with the expectations of digitally empowered students and staff.

5. Conclusions

This study explored the relationship between constructability efficiency and service quality within a single university campus comprising 15 buildings. Constructability efficiency showed a strong correlation with user satisfaction; economic gap, operational age, and architectural standards were also positive but comparatively weaker.

The main contribution of this research lies in its methodological design: a replicable framework that combines systematic constructability assessment with post-occupancy evaluation to analyze service quality. This framework can be adapted in future studies to broaden the understanding of how construction-phase decisions affect long-term user perceptions.

Nevertheless, the limitations of the study must be acknowledged. The small and context-specific sample prevents broad generalization of the results. Future research should replicate and expand this framework across multiple institutions, larger datasets, and diverse geographic settings, ideally incorporating statistical modeling techniques to control for confounding variables.

From a managerial perspective, jointly monitoring technical quality, economic efficiency, maintenance, and comfort can enhance user experience, though evidence remains preliminary. These insights can inform preventive maintenance strategies, design adjustments, and sustainability initiatives; however, they remain provisional and should be validated through larger-scale empirical research.

Overall, the results suggest that constructability assessment can serve as a practical mechanism for enhancing the performance and sustainability of university infrastructure. By linking technical efficiency with users’ experiences, this study contributes to a more holistic approach to educational facility management and supports the advancement of SDG 4 (Quality Education), SDG 9 (Industry, Innovation and Infrastructure), and SDG 11 (Sustainable Cities and Communities).