1. Introduction

In recent years, a growing number of academic studies have sought to conceptualize and evaluate audit quality using audit quality indicators (AQIs). These studies have examined AQIs as mechanisms for enhancing transparency, improving auditor performance, and fostering stakeholder confidence. However, much of the existing literature has focused on financial audits, with limited attention paid to how AQIs operate in the context of environmental, social, and governance (ESG) assurance. Research has also noted inconsistencies in how AQIs are defined, interpreted, and implemented across jurisdictions and professional contexts. These findings highlight the need for a more integrated understanding of AQIs that accounts for both their theoretical foundations and practical implications in sustainability reporting. By engaging with these strands of literature, this study aims to contribute to a more nuanced discussion on the role of AQIs in supporting audit quality in a rapidly evolving assurance landscape.

Audit quality has become a cornerstone of financial market integrity, with far-reaching implications for investor confidence, regulatory compliance, and sustainable corporate governance. High-profile corporate failures—such as Enron, Carillion, and Wirecard—have underscored the urgent need for transparent and reliable audit performance measures. In response, AQIs have emerged as standardized metrics to evaluate the inputs, processes, and outputs of audit engagements. Initially developed to enhance audit oversight and benchmarking, AQIs now play a pivotal role in aligning audit practices with evolving stakeholder expectations—particularly in light of increased demands for assurance in ESG reporting.

The regulatory landscape has significantly influenced this evolution. In the European Union, reforms such as Regulation (EU) No 537/2014 and Directive 2014/56/EU have mandated enhanced audit disclosures and oversight mechanisms, reinforcing the role of AQIs in ensuring transparency and quality. In Greece, the Hellenic Accounting and Auditing Standards Oversight Board (ELTE) has been instrumental in implementing these directives and fostering an accountability-driven audit environment. Parallel initiatives by regulatory bodies such as the PCAOB (U.S.), CPAB (Canada), and FRC (UK) have introduced diverse AQI frameworks, reflecting both convergence efforts and regional variation in audit oversight [1].

At the same time, the audit scope is expanding to cover sustainability reporting alongside financial statements. The EU's adoption of the Corporate Sustainability Reporting Directive (CSRD) has made limited assurance over ESG data mandatory for a broad class of entities. This development signals a shift in the role of auditors, from financial gatekeepers to verifiers of corporate accountability. AQIs, when adapted to ESG contexts, offer a mechanism for signaling assurance quality and building stakeholder trust. However, limited research addresses how AQIs are used across jurisdictions and integrated into ESG assurance.

To strengthen the theoretical positioning of the study, we clarify the distinction between regulatory developments and the academic literature on audit quality. While regulatory frameworks across jurisdictions provide the institutional context for the emergence and implementation of AQIs, academic research offers the conceptual underpinnings that shape how audit quality is defined, interpreted, and assessed. The literature emphasizes that audit quality is a multidimensional construct, encompassing technical competence, ethical responsibility, organizational culture, and institutional alignment. These conceptual insights inform the development and application of AQIs as both measurement tools and instruments of governance. By integrating these two perspectives—regulatory and theoretical—this study situates AQIs not only as regulatory responses but also as constructs grounded in scholarly discourse on audit performance, accountability, and stakeholder trust.

Despite the growing body of research on audit quality and its determinants, limited attention has been paid to the conceptual development and practical implementation of AQIs, particularly in the context of non-financial reporting and ESG assurance. Existing studies have primarily focused on financial audit contexts, often overlooking the unique challenges and expectations associated with sustainability-related disclosures. Moreover, the literature lacks a systematic comparison of regulatory and professional approaches to AQIs across jurisdictions [2]. These gaps underscore the need for a deeper understanding of how AQIs are conceptualized, developed, and interpreted in evolving audit landscapes, and for research that links AQIs to ESG assurance and cross-national regulatory initiatives.

This study addresses that gap by synthesizing the conceptual foundations, regulatory contexts, and evolving applications of AQIs with a specific emphasis on ESG assurance. The following research questions guide the analysis:

RQ1: How are AQIs conceptualized and categorized across international regulatory frameworks?

RQ2: What role do AQIs play in supporting ESG assurance, particularly under the emerging CSRD and related stakeholder expectations?

RQ3: To what extent do existing AQI frameworks align across jurisdictions, and what challenges hinder their standardization and cross-border comparability?

To address these questions, the manuscript is structured as follows:

Section 2 explores the theoretical foundations of audit quality, while Section 3 presents the evolution and classification of AQIs. Section 4 provides a comparative analysis of AQI frameworks across jurisdictions, and Section 5 examines their integration into ESG assurance. The final sections discuss findings, implications, and future research directions.

2. Literature Review

2.1. Theoretical and Conceptual Foundations of AQIs

The evolution of audit quality frameworks cannot be fully understood without reference to the legal and regulatory environments in which they operate. In the European Union, a series of post-crisis reforms, particularly Directive 2014/56/EU and Regulation (EU) No 537/2014, marked a significant shift toward stricter oversight of statutory audits. These instruments introduced new requirements for auditor independence, mandatory rotation, and expanded audit reports, reflecting a renewed emphasis on enhancing audit quality and transparency [1]. In Greece, the implementation of these reforms was overseen by the ELTE, which plays a central role in monitoring audit compliance and quality assurance. ELTE’s regulatory actions, aligned with EU directives, have laid the groundwork for integrating AQIs and sustainability assurance standards. This regulatory context provides a crucial foundation for understanding the relevance of AQIs and their potential to strengthen trust in audit practices in both financial and ESG reporting [3].

Audit quality has been widely discussed in the academic literature and among practitioners, with definitions evolving to encompass both technical competence and ethical responsibility. Ref. [4] was among the first to define audit quality as the joint probability that an auditor will detect a material misstatement in the financial statements and report it appropriately. This foundational definition emphasizes two essential aspects: the auditor’s ability to uncover inaccuracies and their independence to disclose them.
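The joint-probability definition above can be written compactly. The notation below is an assumption introduced purely for illustration and does not appear in Ref. [4]: D denotes the event that the auditor detects a material misstatement, and R the event that the auditor reports it.

```latex
\[
  \text{Audit quality} \;=\; P(D \cap R) \;=\; P(D)\, P(R \mid D)
\]
```

Read this way, P(D) corresponds to the auditor's ability to uncover inaccuracies (competence), and P(R | D) to the independence needed to disclose them once found. Because the two terms multiply, a shortfall in either one lowers audit quality regardless of the other.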

Subsequent scholars have expanded upon this definition. Ref. [5] suggested that audit quality should be viewed as a continuum rather than a binary concept, shaped by a variety of factors including the size of the audit firm, industry specialization, and the strength of the legal and institutional environment. Francis emphasized the contextual nature of audit quality and highlighted the importance of both individual auditor capabilities and the organizational setting.

The International Auditing and Assurance Standards Board (IAASB) [6] contributed a more holistic framework by identifying a wide range of factors that influence audit quality. According to the IAASB, audit quality is not only the outcome of an auditor’s individual behavior but also a function of the systems and culture within audit firms, as well as external elements like regulatory structures and stakeholder expectations. The IAASB’s framework underscores the importance of collaboration among stakeholders (auditors, clients, regulators, and investors) in enhancing audit quality.

These definitions collectively demonstrate that audit quality is inherently multidimensional. It encompasses technical proficiency, ethical judgment, organizational culture, and systemic interactions. They also form the basis for efforts to operationalize the concept of audit quality through measurable frameworks such as AQIs.

The Center for Audit Quality (CAQ) [7] provided one of the most comprehensive and practical frameworks for understanding the drivers of audit quality. This model identifies six primary elements that contribute to audit excellence, offering a structured approach for both internal assessment and external communication.

The first element focuses on the effectiveness of the audit process. This includes the ability of the auditor to design risk-based audit plans, apply appropriate procedures, and respond to complex accounting scenarios. An effective audit process is marked by its methodological soundness and adaptability to engagement-specific risks.

Audit firm culture is another core dimension. The CAQ emphasized that an ethical and high-integrity culture supports audit quality by fostering professional skepticism and a commitment to public interest. Attributes such as accountability, open communication, and a clear tone at the top are essential in cultivating such a culture. Equally important are the skills and personal qualities of audit partners and staff. The technical competence, professional judgment, and communication abilities of auditors directly influence the quality of audit work. Ongoing training and development programs ensure that staff remain proficient in current standards and practices [8].

The audit process itself, including the use of standardized methodologies and quality control mechanisms, contributes to the consistency and reliability of audit outcomes. Process quality is maintained through internal reviews, documentation standards, and peer consultations that help ensure procedural rigor and compliance with regulatory requirements. The reliability and usefulness of audit reporting also play a crucial role. Audit reports that clearly articulate the auditor’s findings and highlight key audit matters help stakeholders better understand the financial health and governance of audited entities. This enhances transparency and supports informed decision-making [9].

Finally, there are factors beyond the auditor’s direct control that still influence audit quality. These include the client’s financial reporting practices, the strength of internal controls, corporate governance quality, and the broader regulatory environment. Although external, these variables can significantly affect the auditor’s ability to perform a high-quality audit.

Taken together, these six dimensions form a comprehensive view of audit quality. They not only guide audit firms in strengthening their practices but also provide a foundation for the development of AQIs, which aim to translate these qualitative aspects into quantifiable metrics for assessment and benchmarking [10,11]. As stakeholders increasingly demand objective evidence of audit performance, the role of AQIs has become central in efforts to monitor, compare, and enhance audit quality across firms and jurisdictions. The following section explores the evolution and classification of these indicators, highlighting their growing relevance in both academic and regulatory contexts.

2.2. Cross-Jurisdictional Approaches to AQIs

The emergence of AQIs reflects the growing need for transparency, accountability, and measurable benchmarks in auditing. Over the last two decades, several high-profile corporate collapses, such as Enron, Parmalat, Carillion, and Wirecard, have underscored persistent weaknesses in audit quality oversight and the limitations of traditional assurance practices. These failures led to significant losses in investor confidence and raised doubts about the effectiveness of auditors in fulfilling their public interest role. In response, audit regulators and professional bodies began seeking tools that could offer objective insights into the performance of auditors and audit firms, giving rise to the concept of AQIs [12].

The development of AQIs has since evolved through both regulatory and market-driven initiatives. In the United States, the PCAOB was one of the first bodies to formally explore AQIs as part of its effort to enhance audit quality monitoring. In 2013, it released a concept paper proposing a set of indicators to assess audit performance both at the engagement level and across audit firms [13]. Other countries followed suit, including Canada through the CPAB, and the United Kingdom via the FRC, each contributing to a growing international dialogue about the role and implementation of AQIs.

Simultaneously, the IAASB recognized the need for a global framework that would support consistency in the application and interpretation of AQIs. In 2014, the IAASB outlined its vision for audit quality and emphasized the importance of engaging all key stakeholders, including audit firms, regulators, standard setters, and users of financial statements, in the continuous improvement of audit practices [14]. Its model positioned AQIs as a bridge between internal quality control processes and the external expectations of the audit profession.

Though adoption varies across jurisdictions, the increasing interest in AQIs reflects a shared objective: to make audit quality more transparent, measurable, and comparable. Pilot programs, public consultations, and voluntary disclosures by major audit firms have all contributed to shaping AQI frameworks that serve both evaluative and developmental purposes. The evolution of AQIs from a conceptual ideal to a practical tool represents a significant step toward fostering a culture of accountability and continuous improvement in the audit profession.

While jurisdictional differences influence the extent and manner of AQI adoption, common themes have emerged regarding how audit quality is defined, measured, and communicated. As the discourse around AQIs matures, attention has shifted from mere adoption to the standardization and interpretation of indicators. To facilitate comparability across firms and regions, regulators and professional bodies have proposed structured classification systems that group AQIs according to their functional role within the audit process. These typologies aim to transform abstract notions of audit quality into actionable metrics and thereby enhance both regulatory oversight and internal quality management.

To support the practical implementation and comparability of AQIs, several regulatory bodies and standard-setters have developed classification frameworks that group indicators based on the phase of the audit process they represent. One of the most widely recognized and applied models is the tripartite typology introduced by the IAASB, which divides AQIs into three principal categories: input, process, and output indicators. This categorization has been echoed in frameworks developed by the PCAOB, the CPAB, and other international oversight entities. It allows for a systematic approach to evaluating the multiple dimensions of audit quality, from planning and resourcing to execution and outcomes [15].

Input indicators represent the resources, structures, and personnel that an audit firm mobilizes in advance of and during the audit engagement. These indicators are intended to reflect the capacity of the firm to deliver high-quality audit services. Key metrics include partner workload, the ratio of audit staff to partners, the years of professional experience of team members, and the number of training hours provided to audit professionals [16]. The underlying assumption is that firms investing more in experienced personnel, balanced team composition, and continuous training are more likely to conduct rigorous and effective audits. However, while these indicators signal a firm’s investment in quality infrastructure, they do not directly measure how these inputs translate into audit outcomes.

Process indicators, by contrast, focus on the conduct of the audit itself, capturing how well the audit is planned, executed, and monitored. These include the percentage of audit hours spent by engagement partners, the frequency of internal quality control reviews, the use of advanced auditing technologies, the extent of consultation on complex issues, and the timeliness of audit documentation. Process indicators serve as proxies for methodological soundness, adherence to standards, and the professional rigor applied throughout the engagement. For example, high levels of partner involvement may reflect a firm’s commitment to oversight and quality control, while frequent quality reviews can indicate a robust internal monitoring system. These indicators are particularly useful for identifying variations in audit execution across different engagements or offices within the same firm [17].

Output indicators aim to capture the results or consequences of the audit process. While less directly controllable by auditors, these indicators provide external signals about the credibility and effectiveness of audit engagements. Examples include the frequency of financial statement restatements following the audit, the number of deficiencies identified during regulatory inspections, the issuance of going concern opinions, and the rate of client turnover. Some output indicators, such as audit reporting timeliness and the quality of communication with audit committees, are increasingly being emphasized in AQI frameworks. Nonetheless, these indicators can be influenced by a range of factors beyond auditor performance, such as the complexity of the client’s operations or changes in financial reporting standards, making them less precise as direct measures of audit quality [18].

While each category of indicators offers valuable insights on its own, their true strength lies in their combined application. Evaluating input, process, and output indicators in tandem enables a more comprehensive and balanced assessment of audit quality. For instance, a firm might report high levels of training hours (input), but without sufficient partner involvement during execution (process) or with frequent inspection findings (output), the audit quality could still be compromised. Thus, AQI typologies provide a framework not only for performance measurement but also for internal quality improvement, regulatory benchmarking, and stakeholder communication [19].
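The combined reading of input, process, and output indicators described above can be sketched in code. The following Python example is a minimal illustration only: the indicator names, values, and thresholds are hypothetical assumptions chosen to mirror the training-hours example, and they are not drawn from the IAASB, PCAOB, CPAB, or any other framework.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    INPUT = "input"
    PROCESS = "process"
    OUTPUT = "output"


@dataclass(frozen=True)
class Indicator:
    name: str
    category: Category
    value: float
    threshold: float              # hypothetical cut-off, for illustration only
    higher_is_better: bool = True

    def is_weak(self) -> bool:
        """An indicator is weak when it falls on the wrong side of its threshold."""
        if self.higher_is_better:
            return self.value < self.threshold
        return self.value > self.threshold


def weak_categories(indicators):
    """Return the set of categories that contain at least one weak indicator."""
    return {ind.category for ind in indicators if ind.is_weak()}


# Hypothetical firm: strong training (input), low partner involvement (process),
# and more inspection findings than the assumed tolerance (output).
firm = [
    Indicator("training_hours_per_auditor", Category.INPUT, 60.0, 40.0),
    Indicator("partner_share_of_audit_hours", Category.PROCESS, 0.03, 0.05),
    Indicator("inspection_findings", Category.OUTPUT, 4.0, 2.0,
              higher_is_better=False),
]

flags = weak_categories(firm)
```

In this sketch the firm looks sound on the input dimension alone, yet the process and output categories are both flagged, which is the situation the paragraph above warns about when indicators are read in isolation.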

However, the practical use of these categories also presents challenges. Metrics are not always standardized across firms or jurisdictions, and not all indicators are equally relevant in every audit context. Additionally, the interaction among these categories is complex and non-linear; a deficiency in one area may or may not be compensated by strengths in another. Therefore, while the input-process-output framework is conceptually robust, its real-world application requires careful calibration, context sensitivity, and a willingness to integrate both quantitative and qualitative perspectives in audit evaluation [20,21].

In addition to their classification by input, process, and output, AQIs must be understood in terms of their practical application and intended users. AQIs are not designed solely for regulators or academic analysis; they also serve as critical tools for audit committees, investors, and audit firms themselves. For audit committees, AQIs provide a structured basis for evaluating auditor performance, engagement risk, and audit planning [22]. For regulators, AQIs support inspection priorities and systemic risk monitoring. For audit firms, they act as internal management tools, helping identify areas for training, resource allocation, or quality control improvements. This multi-stakeholder utility underscores the versatility of AQIs, while also complicating efforts to standardize definitions and disclosures. For example, indicators such as staff turnover, internal inspection findings, and audit hours on key risk areas have different implications depending on the user and the context in which they are reported. As AQIs continue to evolve, understanding and aligning their use across different stakeholder groups will be critical to maximizing their effectiveness and avoiding misinterpretation [23].

Despite these advantages, the broad applicability of AQIs across stakeholders and contexts introduces new complexities. As frameworks become more widely adopted and integrated into regulatory and organizational processes, questions have emerged about their interpretability, methodological soundness, and real-world relevance. These concerns are especially pronounced in settings where audit environments are dynamic, resource-constrained, or governed by divergent institutional logics. The discussion below examines the main criticisms and limitations of AQIs, emphasizing the importance of critical evaluation as these indicators continue to shape audit practices globally.

For all the promise and growing adoption of AQIs, several criticisms challenge their reliability, consistency, and practical utility. These concerns reflect the difficulty of translating a complex, judgment-based process like auditing into standardized metrics. Scholars and regulators alike have cautioned that while AQIs offer useful benchmarks, they are not immune to methodological and conceptual limitations that may compromise their effectiveness [24].

One of the most significant issues relates to the lack of standardization in AQI design and application. Different jurisdictions and audit regulators have developed their own sets of indicators, often tailored to local regulatory priorities and market contexts. For instance, the PCAOB, CPAB, FRC, and IAASB have each proposed AQI frameworks that vary in scope, terminology, and implementation strategy. This fragmentation creates inconsistencies in how audit quality is measured and reported across firms and countries, limiting the comparability of AQI data. Without global standards, stakeholders such as investors and audit committees may find it difficult to interpret AQIs consistently or compare audit quality across firms [25].

Another common concern is that the use of AQIs may encourage a compliance-focused or tick-box mentality among audit firms. When firms prioritize meeting externally defined indicators over improving substantive audit quality, the spirit of professional skepticism and critical judgment may be diluted. Ref. [26] warned that AQIs could inadvertently lead to “checklist auditing,” where the focus shifts toward meeting numerical thresholds, such as achieving a minimum number of training hours or internal reviews, rather than addressing engagement-specific risks or exercising professional discretion. This checkbox approach can mislead stakeholders by masking deeper issues in audit execution.

A related limitation involves the over-reliance on quantitative indicators at the expense of qualitative dimensions that are more difficult to measure but equally essential to audit quality. For example, attributes such as ethical reasoning, communication clarity, and the tone set by audit leadership are central to audit performance but are often excluded from AQI frameworks due to their subjectivity. As Velte [27] noted, numerical indicators are convenient proxies but fall short of capturing the ethical and behavioral complexity of audit practice. This imbalance risks narrowing the scope of quality assessment to easily reportable metrics while ignoring contextual nuances.

In addition, the interpretability and usability of AQI data can pose significant challenges. Different stakeholders, such as regulators, investors, audit committees, and clients, may interpret the same indicator differently based on their perspectives and priorities. For instance, high audit hours could be viewed as a sign of thoroughness by some and of inefficiency by others. Zahid [28] highlighted that the absence of interpretive guidance or benchmarking standards may lead to stakeholder confusion or misjudgment, undermining the intended transparency benefits of AQIs.

Moreover, resource and cost constraints can inhibit the effective implementation of AQI systems, particularly for smaller audit firms or those operating in emerging markets. Persakis [29] noted that maintaining detailed AQI reporting infrastructure, such as tracking training hours, audit partner workload, or engagement-specific metrics, requires significant investment in technology, staff, and data systems. Smaller firms may struggle to meet these requirements without compromising operational efficiency. As a result, AQI frameworks may disproportionately burden less-resourced firms and create unintended barriers to entry or participation in high-quality audit markets.

Finally, there are emerging concerns about technological dependence and cybersecurity. As audit firms increasingly use AI-powered tools and automated systems to collect and report AQIs, vulnerabilities may arise related to data integrity, system reliability, and cyber threats. While these technologies offer efficiency and scalability, their use also introduces risks that could affect the accuracy and credibility of AQI data [30,31]. Overdependence on automated tools without adequate human oversight may also reduce the auditor’s direct engagement with the data, weakening the depth of professional judgment.

2.3. AQIs and ESG Assurance: Research Gaps and Integration

The implementation of AQIs across jurisdictions reflects a complex and evolving landscape, shaped by different legal traditions, regulatory priorities, and levels of market maturity. While the underlying goal—enhancing audit quality through measurable indicators—is broadly shared, the strategies adopted by oversight bodies and audit firms differ significantly in terms of scope, disclosure practices, and stakeholder engagement. A closer examination of the approaches taken by the United States, Canada, the United Kingdom, and the European Union reveals both converging trends and persistent divergences that have implications for global audit reform.

In the United States, the PCAOB was among the first regulators to formally explore AQIs. Its 2013 concept release proposed a comprehensive set of 28 indicators designed to assess audit quality at the engagement and firm levels [13]. These included metrics on partner workload, audit hours, staff experience, internal inspection results, and client retention. The PCAOB’s approach was ambitious in scope, aiming to standardize audit quality assessment and enhance transparency. However, after extensive public consultation, the Board did not mandate AQI disclosures, choosing instead to encourage voluntary experimentation by audit firms. As a result, the adoption of AQIs in the U.S. has been uneven and largely confined to internal dashboards or selective disclosures to audit committees. The lack of regulatory compulsion has limited the comparability and external usefulness of AQIs, and many firms continue to treat them as confidential management tools rather than public accountability mechanisms [32].

In contrast, Canada has pursued a more coordinated and consultative approach under the leadership of the CPAB. Beginning in 2016, CPAB worked closely with the country’s largest audit firms to develop a set of pilot AQIs that could be used for internal quality improvement and audit committee communication. The Canadian model prioritizes relevance, consistency, and clarity over comprehensiveness, focusing on a narrower set of indicators that are easier to implement and interpret [33]. CPAB has also emphasized the educational function of AQIs, encouraging their use in enhancing dialogue between auditors and governance bodies. While disclosure remains voluntary, CPAB’s guidance and oversight have contributed to a more standardized and transparent use of AQIs compared to other jurisdictions. This collaborative regulatory philosophy reflects Canada’s preference for consensus-building over top-down mandates and has yielded relatively strong buy-in from major firms.

In the United Kingdom, the FRC has adopted a hybrid approach that combines thematic inspections with firm-level transparency initiatives. While the FRC has not issued a standalone AQI framework, it has incorporated quality indicators into its Audit Quality Review (AQR) program and encouraged firms to include selected AQIs in their annual transparency reports. Metrics such as audit hours, partner involvement, training, and inspection results are commonly disclosed by large firms, though with considerable variation in definitions and presentation. The FRC has also published periodic reports evaluating the use and potential of AQIs in enhancing audit committee oversight. However, the UK’s voluntary and principles-based approach has faced criticism for a lack of uniformity and enforceability, with some stakeholders calling for a more prescriptive regulatory model, particularly in light of high-profile audit failures such as Carillion and Thomas Cook [34].

In the European Union, AQI implementation remains fragmented and largely dependent on national initiatives. The EU’s Audit Regulation (Regulation (EU) No 537/2014) [1] introduced enhanced auditor reporting requirements, such as the extended audit report and transparency disclosures, but it stopped short of mandating specific AQIs. Consequently, Member States have adopted divergent strategies. For instance, the Netherlands has launched structured AQI programs involving both regulators and firms, while Germany has focused more on reinforcing internal quality controls. In many other countries, AQIs are either in the exploratory phase or absent from the regulatory agenda. This heterogeneity reflects the decentralized nature of EU audit oversight and poses significant barriers to cross-border comparability. Without EU-wide standards or a coordinating authority to promote harmonization, AQI adoption across Europe continues to lack coherence and consistency.

In response to these disparities, global standard-setting organizations have sought to promote convergence. The IAASB has advocated for a common understanding of audit quality and the development of globally relevant AQIs. Its 2014 Framework for Audit Quality emphasized the need to engage all stakeholders in building consensus on what constitutes a high-quality audit and how it can be measured. Similarly, the International Forum of Independent Audit Regulators (IFIAR) has identified AQIs as a strategic priority and has called for greater alignment in definitions, methodologies, and disclosure practices. These efforts aim to address the current fragmentation by promoting standardized measurement tools that can be adapted across regulatory contexts while preserving local relevance.

However, significant obstacles remain. Legal and institutional differences, as well as varying levels of regulatory capacity and market sophistication, continue to shape how AQIs are understood and applied. In some jurisdictions, resistance to mandatory reporting stems from concerns about proprietary data, litigation risk, and competitive sensitivity. In others, the challenge lies in limited resources or technological infrastructure to support AQI tracking and reporting. Moreover, the lack of a clear cost–benefit analysis for AQIs in some contexts has made firms cautious about investing heavily in systems that may not yield immediate returns.

Despite these challenges, the global trajectory suggests a gradual movement toward greater standardization. Major audit networks are beginning to adopt common AQI frameworks across their international affiliates, and regulators are increasingly referencing AQIs in inspection criteria and policy guidance. The rise of sustainability and ESG assurance has also added urgency to the AQI agenda, as stakeholders seek clearer signals of audit integrity and performance in non-financial reporting domains.

As jurisdictions continue to refine their AQI frameworks, the broader role of these indicators within the audit ecosystem is evolving. Beyond their traditional application in financial audit settings, AQIs are increasingly being recognized as relevant tools for assessing assurance quality in new domains. One of the most prominent of these domains is ESG reporting, where the demand for reliable, standardized assurance mechanisms is rapidly growing. This transition has significant implications for how AQIs are conceptualized, applied, and evaluated in the context of sustainability-related disclosures.

The intersection of audit quality and sustainability assurance has become increasingly significant as global stakeholders demand higher standards of corporate accountability and transparency [35]. While AQIs were initially conceived as tools to assess and improve the quality of financial audits, their relevance has grown with the rapid institutionalization of ESG reporting. In this evolving context, AQIs are now being reconsidered and adapted as mechanisms for benchmarking the quality of ESG assurance engagements, a development that reflects both the broadening scope of audit responsibilities and the transformation of stakeholder expectations [36].

The shift toward integrating ESG within audit frameworks stems from mounting regulatory and market pressure. Institutional investors, civil society organizations, and consumers are demanding more credible, verifiable disclosures about a company’s sustainability performance. Reports covering climate impact, gender equality, supply chain ethics, and governance practices are no longer considered peripheral but integral to evaluating corporate risk and long-term value creation. As a result, these disclosures must increasingly meet standards of assurance similar to those applied to financial statements. However, unlike traditional audits, ESG assurance faces unique challenges: data are often non-standardized, qualitative, forward-looking, and embedded in complex organizational structures. This complexity amplifies the importance of clear, measurable indicators of assurance quality [37,38].

AQIs offer a promising solution to this problem. Indicators such as partner involvement in ESG engagements, team training on sustainability standards, use of ESG-specific assurance frameworks, and review procedures for non-financial data are directly translatable from financial audit settings into the ESG domain. These AQIs serve as proxies for the preparedness and rigor with which assurance providers engage with ESG data. For example, high levels of partner time allocation and ESG-specific training hours suggest stronger oversight and domain knowledge, while robust internal review mechanisms indicate methodological consistency and adherence to assurance standards such as ISAE 3000.

Moreover, process-oriented AQIs can help demonstrate how assurance providers evaluate ESG disclosures, especially when dealing with fragmented or narrative-driven information. Indicators related to data verification, use of specialists, and consultation practices can offer insights into how audit teams manage ESG complexity and uncertainty. Likewise, output-focused AQIs—such as the nature of assurance opinions issued (limited vs. reasonable assurance), the frequency of ESG restatements, and post-assurance stakeholder feedback—provide an additional layer of accountability, helping to evaluate the perceived and actual impact of ESG audits [

39].

The relevance of AQIs in ESG assurance is further heightened by the regulatory momentum behind sustainability reporting. The European Union’s CSRD, adopted in 2022, marks a significant turning point. The CSRD requires a wide range of companies to report detailed ESG information aligned with the European Sustainability Reporting Standards (ESRS), and mandates limited assurance of these disclosures by statutory auditors or accredited assurance providers. This regulatory requirement significantly expands the role of auditors and reinforces the need for objective indicators that can assess the quality of ESG assurance engagements. AQIs—adapted and contextualized for sustainability assurance—can become key tools in meeting these new demands, helping to demonstrate both compliance and quality in an area of growing regulatory scrutiny.

Beyond the EU, similar initiatives are emerging globally. The ISSB and the GRI have accelerated efforts to harmonize sustainability disclosure frameworks, while the IAASB is developing dedicated standards for sustainability assurance. In this context, the development of AQIs tailored to ESG becomes not just beneficial but necessary. The credibility of ESG assurance will increasingly depend on the ability of firms to document and demonstrate the robustness of their procedures, an objective that AQIs are uniquely suited to support [40].

Importantly, the use of AQIs in ESG also aligns with broader stakeholder expectations around transparency and ethical conduct. Investors seek assurance not only of financial performance but of sustainable value creation; regulators demand evidence-based assurance that companies are not engaging in greenwashing; and society at large calls for greater accountability on climate and social impact. In each of these cases, AQIs offer a concrete means of verifying whether assurance providers are equipped to meet these demands and are conducting their engagements with integrity and rigor [41].

Beyond the technical function of ensuring audit rigor, AQIs play an increasingly important role in enhancing public trust and promoting ethical corporate behavior. As stakeholders—ranging from investors to NGOs—scrutinize ESG claims for evidence of greenwashing or ethical misreporting, the presence of reliable AQIs becomes a signal of institutional credibility. Indicators such as partner time allocation, sustainability training, and engagement-level reviews are not just internal quality measures but also proxies for the ethical commitment of the assurance provider. When communicated transparently, these indicators help bridge the trust gap between corporations and society, particularly as ESG data becomes a critical input into investment, regulatory, and consumer decisions. AQIs in the ESG domain do not simply reinforce quality—they affirm accountability. They make visible the processes that underpin assurance and signal that sustainability audits are conducted with the same level of discipline, independence, and scrutiny as financial audits. This trust-building function is especially vital in a regulatory climate shaped by the CSRD and increasing demand for ethical corporate conduct [

42,

43].

Despite the proliferation of AQI frameworks and the growing importance of ESG reporting, the literature remains fragmented in linking these domains—particularly in terms of cross-jurisdictional regulatory comparison and the integration of AQIs into ESG assurance. While several studies focus on the technical classification of AQIs or on financial audit performance, few explore how AQIs are evolving to meet the challenges posed by non-financial reporting and emerging legal requirements such as the CSRD. Furthermore, the social implications of AQIs—as tools for fostering public trust and ethical conduct—are underexplored. This study addresses these gaps by examining the conceptual evolution, international implementation, and ESG relevance of AQIs, with a focus on their legal, practical, and ethical dimensions across major jurisdictions.

3. Methodology

We adopted a quantitative design to address two core research aims: (i) identifying the factors that shape audit professionals’ perceptions of audit quality, and (ii) examining their relationship to ESG criteria. Data were collected with a structured questionnaire distributed electronically to executives of audit firms, with a primary focus on Big Four audit organizations. The final accepted sample comprised 262 respondents. We used purposive sampling targeting practitioners directly responsible for audit quality within large audit organizations. Eligibility required current employment in an audit role; duplicate entries were checked and removed before analysis. Participation was voluntary and uncompensated. The questionnaire was developed from dimensions of audit quality documented in the literature, as well as from modern theoretical and empirical approaches that link ESG issues to the audit process [44]. We first piloted the questionnaire to check its clarity and suitability. Participants were asked to answer 5 demographic questions and 39 closed-ended questions on a five-point Likert scale (1 = Strongly disagree to 5 = Strongly agree).

Table 1 summarizes the key psychometric and statistical validity indicators. With 39 items, n = 262 (≈6.7 cases per item) meets common guidelines for EFA/CFA (e.g., n ≥ 200) and provides >0.80 power to detect small-to-moderate ANOVA effects (α = 0.05). It also exceeds minimal requirements for k-means solutions with k ≤ 4 on seven composite indices.
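The cases-per-item ratio quoted above can be reproduced with a few lines of arithmetic. The following is a minimal Python sketch (the study itself used R); the function name `cases_per_item` and the variable names are illustrative assumptions, and only the two counts come from the text.

```python
def cases_per_item(n_respondents: int, n_items: int) -> float:
    """Return the respondents-to-items ratio used as a sample-size heuristic."""
    return n_respondents / n_items

# Counts reported in the text: 262 respondents, 39 Likert items.
ratio = cases_per_item(262, 39)

# Heuristic mentioned in the text: at least 200 cases for EFA/CFA.
meets_n_guideline = 262 >= 200

print(f"cases per item: {ratio:.1f}; n >= 200: {meets_n_guideline}")
```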

All analyses were implemented in R (version 4.5.1) using the following packages: psych, GPArotation, lavaan, semTools, car, cluster, clusterCrit, boot, and ggplot2 [

45,

46]. Analyses were performed on standardized data (z-scores), estimation options were documented (e.g., MLR/FIML where required), and resampling parameters (e.g., bootstrap R = 1000) were documented and applied with a fixed seed (set.seed) for reproducibility. Initial outputs were generated in SPSS v21; analyses were verified/updated in R 4.5.1, and reported results are those of R.

For greater transparency regarding the theoretical framework, we explicitly state the research questions and theoretically informed expectations that guided the analysis. Guided by the literature on perceived organizational support and audit quality in the ESG context, we pose three research questions. RQ1: What multidimensional components underlie practitioners’ perceptions of audit quality in the ESG era? RQ2: How do the components relate to each other? RQ3: How do perceptions vary by demographic characteristics and role? Based on theory, we expected (indicatively) a positive covariance between ESG emphasis and supervision/training/inspections, as well as higher experience and supervision scores at higher seniority levels. These expectations informed, without limiting, the primarily exploratory purpose of the study.

The design is cross-sectional and relies on self-reported perceptions, with a consequent risk of common method variance (CMV), despite the procedural/statistical countermeasures we implemented (Appendix A.2). The sample (mainly executives of large audit organizations) supports conclusions primarily for this population and limits generalizability to small and medium-sized firms. The study is exploratory; we supplemented the EFA with CFA, CR/AVE, HTMT, and robustness checks (including bootstrapped ANOVA and sensitivity analyses), without making causal claims. Finally, no formal cross-jurisdictional comparisons are made; therefore, any group differences are not attributed to the regulatory framework without further substantiation.

3.1. Factor Analysis and Reliability Analysis

Exploratory Factor Analysis (EFA) was used to identify and represent the underlying conceptual dimensions. The extraction method was Principal Axis Factoring with varimax rotation, and the discussion by Lorenzo [47] guided the rationale for this selection. Sampling adequacy was verified via the Kaiser-Meyer-Olkin index (KMO = 0.907) and the statistically significant Bartlett’s test of sphericity (p < 0.001), following the criteria in [48].

Both values satisfy the minimum acceptable limits (KMO > 0.60, p < 0.05). In practice, data verification required more preparation than initially estimated. Before the EFA, all scale items were standardized and checked for valid numeric coding; Principal Axis Factoring was combined with varimax rotation to maximize the readability of loadings [48,49]. Sampling adequacy (KMO) and sphericity (Bartlett) were checked before factor extraction.

Cronbach’s Alpha was applied to assess the internal consistency of the dimensions, with the acceptance level set to α ≥ 0.70 [50]. Reliability was calculated per factor [51]. Composite indices were then computed only for the reliable factors, as the mean of the corresponding items, in order to maintain the original 1–5 scale and make the dimensions comparable. For single-item dimensions, α was not calculated, as specified in the APA guidelines.
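To make the reliability step concrete, the sketch below computes Cronbach’s α for one multi-item factor and then the composite index as the item mean. It is an illustration in Python rather than the R code used in the study; the response matrix is invented toy data, and the names `cronbach_alpha` and `composite_index` are assumptions.

```python
from statistics import mean, variance

def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    item_columns = list(zip(*rows))                      # one tuple per item
    item_var_sum = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in rows])     # variance of summed scale
    return k / (k - 1) * (1 - item_var_sum / total_var)

def composite_index(row):
    """Mean of the items, which keeps the original 1-5 response scale."""
    return mean(row)

# Hypothetical answers of five respondents to a three-item factor (1-5 Likert).
responses = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(responses)
composites = [composite_index(r) for r in responses]
is_reliable = alpha >= 0.70   # acceptance level stated in the text

print(f"alpha = {alpha:.3f}, reliable = {is_reliable}")
print("composite indices:", [round(c, 2) for c in composites])
```

Averaging rather than summing is what keeps factors with different numbers of items on the same 1–5 scale.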

Given that the data are self-reported, we applied procedural controls (anonymity, scale mixing) and statistical controls for CMV (Harman’s single-factor test and a common latent factor in CFA; see Appendix A.2). To further mitigate social-desirability bias, participation was confidential and completed outside supervisory channels, and no firm-identifying information was collected.

3.2. Correlations and ANOVA

Composite indices were analyzed with Pearson correlations to assess the relationships among them. Despite minor deviations from normality on some variables, the sample size (n = 262) was considered sufficient for our analysis [45]. In addition, all variables were continuous and linearly related to each other, justifying the parametric option [52]. This choice was supported by visual inspection of the relevant scatter plots and by the Shapiro–Wilk test for normality. Missing values were handled pairwise, and the assumptions of linearity and homoscedasticity were checked.
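Pairwise handling of missing values means that each correlation uses every respondent who answered both indices, even if that respondent is missing elsewhere. A minimal Python sketch of this idea follows; it is not the study’s R code, missing values are represented by `None` as an assumption, and the data are invented.

```python
from math import sqrt

def pearson_pairwise(x, y):
    """Pearson r using only the pairs where both values are present.

    Returns (r, n_used) so the effective sample size is visible.
    """
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    sxx = sum((a - mean_x) ** 2 for a, _ in pairs)
    syy = sum((b - mean_y) ** 2 for _, b in pairs)
    return sxy / sqrt(sxx * syy), n

# Hypothetical composite scores; None marks a missing answer.
index_a = [1.0, 2.0, 3.0, None, 5.0]
index_b = [2.0, 4.0, 6.0, 8.0, None]

r, n_used = pearson_pairwise(index_a, index_b)
print(f"r = {r:.3f} based on {n_used} complete pairs")
```

Because the effective n can differ between pairs, it is worth reporting alongside each coefficient.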

Differences across demographic characteristics (gender, age, position, experience, and education) were then analyzed with one-way analysis of variance (one-way ANOVA), computed separately for each dependent variable and each demographic factor. Where statistical significance was identified (p < 0.05), Tukey HSD post hoc analysis was performed following Lakens [53]; for factors with more than two categories, Tukey HSD provided controlled multiple comparisons [45,54]. The analyses were based on the composite indices derived from the reliable EFA scales. The degrees of freedom are fully reported in Section 4.5.
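The F statistic and its degrees of freedom follow directly from the between-group and within-group sums of squares. The Python sketch below shows the computation on invented toy groups (the study used R); `one_way_anova` and `anova_df` are hypothetical helper names. The last lines only illustrate how n = 262 maps to degrees of freedom for three-level and four-level factors.

```python
def anova_df(n_total, n_groups):
    """Degrees of freedom (between, within) for a one-way ANOVA."""
    return n_groups - 1, n_total - n_groups

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    values = [v for g in groups for v in g]
    grand_mean = sum(values) / len(values)
    group_means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    ss_within = sum((v - m) ** 2
                    for g, m in zip(groups, group_means) for v in g)
    df_b, df_w = anova_df(len(values), len(groups))
    f_stat = (ss_between / df_b) / (ss_within / df_w)
    return f_stat, df_b, df_w

# Hypothetical scores for three demographic groups.
toy_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_b, df_w = one_way_anova(toy_groups)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")

# With n = 262, a three-level factor gives (2, 259) and a four-level one (3, 258).
df_three_levels = anova_df(262, 3)
df_four_levels = anova_df(262, 4)
```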

3.3. Cluster Analysis

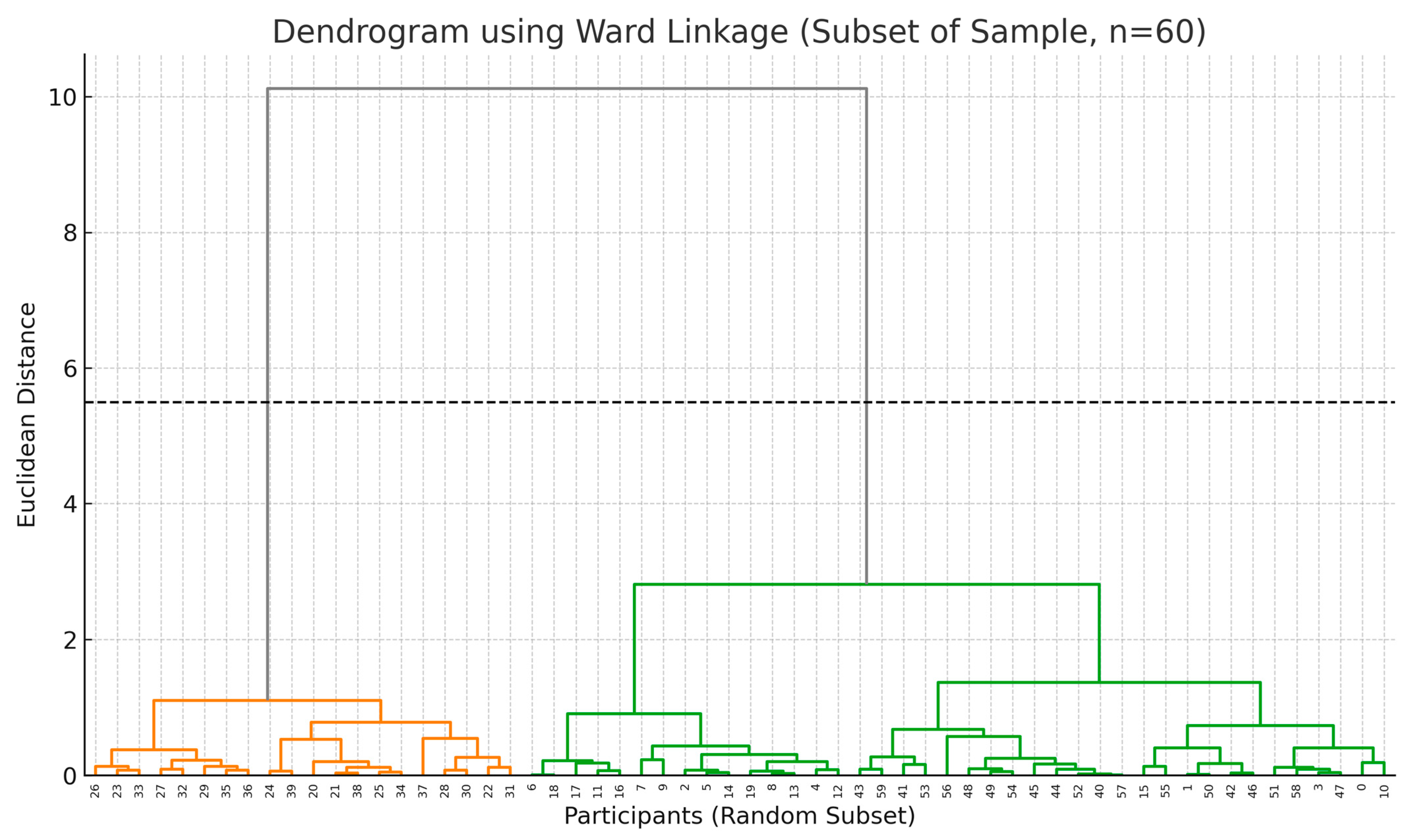

Hierarchical Cluster Analysis was initially used to identify professional groups with similar perceptual profiles, employing the Ward method with squared Euclidean distance as the distance measure. The number of clusters was determined using the Elbow Method and the Agglomeration Schedule [55]. Subsequently, the three-cluster solution was validated with K-Means clustering, which confirmed k = 3 as the best solution.

Cluster validity was also evaluated with internal validation indices, such as the Silhouette coefficient and the Dunn index [56,57,58], which supported the adequacy of the selected solution. The procedure involved hierarchical clustering (ward.D2) on standardized composite indices, followed by K-Means with multiple random starts. The number k was determined by the “elbow” in the WSS curve and by internal indices (Silhouette, Dunn), with full diagnostics listed in the results. The stability of the solution was additionally checked by resampling.
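As an illustration of one of the internal validation indices named above, the following Python sketch computes the mean Silhouette coefficient for a given cluster assignment. It is a simplified stand-in for the R packages used in the study; the points and labels are invented, and `silhouette_mean` is a hypothetical helper that scores singleton clusters as 0 by convention.

```python
from math import dist

def silhouette_mean(points, labels):
    """Mean silhouette coefficient for points with the given cluster labels."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)

    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)          # convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(sum(dist(p, q) for q in other) / len(other)
                for other_lab, other in clusters.items() if other_lab != lab)
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

# Hypothetical two-dimensional profiles forming two tight groups.
points = [(0, 0), (0, 1), (10, 0), (10, 1)]
good = silhouette_mean(points, [0, 0, 1, 1])   # groups match the geometry
bad = silhouette_mean(points, [0, 1, 0, 1])    # groups cut across it
print(f"well-separated: {good:.3f}, poorly assigned: {bad:.3f}")
```

Values near 1 indicate compact, well-separated clusters, while negative values suggest that points sit closer to another cluster than to their own.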

To further assess quality, we performed a sensitivity analysis based on two key indicators. As a robustness check, we repeated the clustering on subsets of highly discriminating indices and assessed the stability of differences between clusters with bootstrapped ANOVA (R = 1000 replicates) [58]. The results of the sensitivity analyses were consistent with the basic three-cluster solution [58].

3.4. Principal Component Analysis (PCA)

Finally, we used Principal Component Analysis (PCA) to assess the conceptual consistency of the composite indices. The PCA produced a single principal component (eigenvalue > 1), which accounted for 51.57% of the total variance (Table 1). The loadings of all variables were >0.60, indicating a single conceptual factor, which was interpreted as “Perceived Organizational Support in the ESG Context”. We selected the one-dimensional model as theoretically appropriate because the indicators describe interrelated aspects of organizational support. The suitability of the analysis was confirmed in advance using KMO and Bartlett’s test [46,59], and no rotation was applied since only one component was extracted. The corresponding loadings and summary indices are listed in the results table. PCA was applied to the correlation matrix of the composite indices.

3.5. Robustness and Validity Checks

To strengthen confidence in the structure revealed by the EFA (Table 1), we performed Confirmatory Factor Analysis (CFA) with MLR/FIML, reporting fit indices (χ²/df, CFI, TLI, RMSEA, SRMR), standardized loadings, Composite Reliability (CR/ω), and AVE (Appendix A.1). Discriminant validity was also tested with HTMT; however, with the current software configuration (semTools/model), the algorithm did not yield values, so we document CR/AVE/CFA and refer to Appendix A.1 for details. Where subgroup size allowed, multi-group CFA was performed to test for configural/metric equivalence. The results of these tests were cross-checked against the findings of the sensitivity analyses and bootstrapped ANOVA. The three-cluster solution includes a very small cluster (n = 5); cluster-level generalizations should be interpreted cautiously despite robustness checks.

4. Results

4.1. Exploratory Factor Analysis and Reliability Analysis

The seven-factor measurement model (CFA, MLR/FIML) showed an acceptable fit: χ²(303) = 485.661, CFI = 0.906, TLI = 0.891, RMSEA = 0.048 [0.040–0.056], SRMR = 0.051. Reliability (model-based CR) ranged from 0.48 to 0.82 (ESG = 0.82, Supervision = 0.75, Workload = 0.68, Experience = 0.61, Training = 0.63, Inspection = 0.70, HR = 0.48) and AVE from 0.32 to 0.45 (ESG = 0.33, Supervision = 0.42, Workload = 0.41, Experience = 0.34, Training = 0.37, Inspection = 0.45, HR = 0.32). Details are provided in Appendix A.1. Given the short scales, ω/CR are also informative and are reported alongside α.
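For readers less familiar with these indices, the sketch below shows one common way to obtain Composite Reliability and AVE from standardized loadings, assuming uncorrelated errors. It is written in Python with invented loadings; the study’s values come from its R workflow, which may compute the indices differently, and the function names are illustrative assumptions.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings and uncorrelated errors, so each
    error variance is 1 - loading^2.
    """
    total = sum(loadings)
    error_variance = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)

def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)

# Hypothetical standardized loadings for a three-item factor.
loadings = [0.7, 0.6, 0.5]
cr = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(f"CR = {cr:.3f}, AVE = {ave:.3f}")
```

The toy example shows how moderate loadings can yield a CR above 0.60 while AVE remains below 0.50, a pattern similar to the one reported here.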

CMV diagnostics did not indicate a dominant single-method factor: Harman’s single factor accounted for 26.41% of variance; a single-factor CFA fit substantially worse than the seven-factor model; adding a common latent factor marginally improved fit indices without altering substantive conclusions (Appendix A.2).

EFA revealed seven distinct factors, which together explain 44.25% of the total variance (Table 1). This indicates that multiple dimensions shape perceived audit quality in Big Four firms (Table 2). Sampling adequacy was confirmed by the KMO index (0.907) [45], which exceeds the minimum acceptable threshold (0.60), and by the statistically significant Bartlett test (p < 0.001) (Table 1). The factors relate both to critical aspects of the audit process, such as experience, supervision, and training, and to modern dimensions of social responsibility, such as social indicators, equality, and the integration of ESG criteria in audit design [44]. Several participants mentioned, outside of the questionnaire, that the inclusion of social indicators is also a matter of personal professional ethics; some even described it as a “matter of utmost importance” for the credibility of the industry.

Reliability analysis with Cronbach’s Alpha [52] was performed for each of the seven retained multi-item factors identified by the EFA (Table 3). The results showed a high level of internal consistency for the factor “Social Impact & ESG” (α = 0.901). The factors “Supervision & Staff Support”, “Auditor Experience”, and “Education & Training” also presented satisfactory reliability (α > 0.75). For “Workload and Flexibility”, the index was lower (α ≈ 0.70) but still acceptable. The factors with fewer items (e.g., “Human Rights & Training” and “Inspections & Improvement Culture”) showed marginally acceptable values (α ≈ 0.65), which is partly due to the limited number of items. Finally, single-item indicators (e.g., “Independence & Conflicts”, “Quality Management”, “Focus on Quality”) were not treated as factors and were excluded from reliability estimation. (Clarification from the R analysis: the model-based reliability/convergent validity indicators were Composite Reliability/ω: ESG = 0.818; Supervision = 0.745; Workload = 0.678; Experience = 0.611; Training = 0.632; Inspection = 0.699; HR = 0.484; AVE ≈ 0.319–0.446. The low value for HR reflects its two-item scale, so interpretations need caution.)

4.2. Composite Indexes and Correlation Analysis

Composite indices were created for each factor that emerged from the EFA and presented satisfactory reliability according to the Cronbach’s Alpha analysis (Table 4). Each index was computed as the average of the responses to the items that make up the factor. Averaging ensures that the indices retain the original measurement scale (1 to 5) and allows easy comparison across dimensions, which proved particularly useful when presenting the results to non-specialists. The composite indices were used in subsequent analyses (correlations, groupings), providing an aggregated representation of the main dimensions that make up audit quality in audit firms. Single-item indicators (e.g., Independence Policy, Standardized Quality Systems) were removed from all subsequent statistical analyses because their reliability could not be assessed and they could not meaningfully be combined into factors. Correlations were calculated on the composite indices derived from the reliable scales; missing values were handled pairwise (pairwise.complete.obs), and robustness checks against deviations from normality were applied.

Pearson correlation analysis between the composite indices showed statistically significant positive relationships across all pairs of variables (p < 0.01) (Table 4 and Table 5). The coefficients range from r = 0.235 to r = 0.608, indicating weak-to-moderate through strong positive relationships between the audit quality dimensions. The strongest relationships were between ESG and Workload (r = 0.608), ESG and HR (r = 0.553), and Workload and Supervision (r = 0.546). The first two suggest that the higher the respondents’ perception of social impact and ESG issues, the higher their perception of workload and HR issues. In contrast, the lowest but still statistically significant correlation is found between HR and Experience (r = 0.235), which may indicate a limited interaction of auditor experience with diversity and social responsibility issues (Table 5). Overall, the correlation patterns indicate that the individual factors are significantly interrelated, which reflects the view of audit quality as a multidimensional concept (all correlations were verified to be positive and statistically significant at the p < 0.01 level; Bonferroni adjustment for multiple comparisons did not change the conclusions).
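The Bonferroni statement can be checked approximately by hand. The Python sketch below counts the pairwise correlations among seven composite indices, derives the adjusted threshold, and tests the smallest reported coefficient (r = 0.235). It assumes that all 262 respondents contribute to that pair and uses a normal approximation to the t distribution (reasonable at 260 degrees of freedom), so the p-value is indicative only and is not taken from the study’s output.

```python
from math import comb, sqrt
from statistics import NormalDist

n_indices = 7                       # composite indices in the correlation matrix
n_pairs = comb(n_indices, 2)        # number of distinct pairwise correlations
alpha = 0.01
bonferroni_threshold = alpha / n_pairs

# Smallest reported correlation; n = 262 is assumed for this pair.
r_min, n = 0.235, 262
t_stat = r_min * sqrt((n - 2) / (1 - r_min ** 2))

# Two-sided p-value via the normal approximation (assumption, not exact t).
p_approx = 2 * (1 - NormalDist().cdf(t_stat))

print(f"pairs = {n_pairs}, threshold = {bonferroni_threshold:.6f}")
print(f"t = {t_stat:.3f}, approximate p = {p_approx:.6f}")
print("survives Bonferroni:", p_approx < bonferroni_threshold)
```

Under these assumptions even the weakest correlation stays below the adjusted threshold, which is in line with the statement that the adjustment did not change the conclusions.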

4.3. One-Way ANOVA

To examine how demographics (gender, age, position in the company, years of experience in auditing, level of education) relate to the seven dependent variables, we applied one-way ANOVA (see Table 6). Where statistically significant differences were found, we proceeded with Tukey HSD for pairwise comparisons, keeping the significance level at α = 0.05. In all ANOVAs, n = 262; the degrees of freedom for ESG_Mean are F (2, 259) = 5.583, p = 0.004 for gender and F (3, 258) = 3.018, p = 0.030 for years of experience.

For the variable ESG_Mean, the ANOVA revealed a statistically significant difference by gender (F (2, 259) = 5.583, p = 0.004). The Tukey HSD analysis showed that group 3 (“prefer not to answer”) differed significantly from both group 1 (men) and group 2 (women), with a mean value of 4.62 versus 4.06 and 4.05, respectively (p = 0.011 and p = 0.003). This suggests that non-disclosure of gender may be associated with increased sensitivity to ESG issues. A significant effect (F (3, 258) = 3.018, p = 0.030) was also found for years of experience in auditing. Tukey HSD showed a difference between group 2 (moderate experience) and group 3 (high experience), with mean values of 4.09 and 4.43, respectively (p = 0.023), indicating that more years of experience are associated with more positive attitudes towards ESG (Tukey: 7–10 years > 4–6 years, p ≈ 0.023).

Regarding the composite variable Supervision_Mean and job position, a significant difference was found (F (3, 258) = 2.714, p = 0.045). Tukey HSD indicated that group 4 (senior managers) had a higher mean (4.50) than group 1 (entry level), which had a mean of 3.78 (p = 0.026). This plausibly reflects the greater oversight and evaluation responsibilities of managers (R output: F (3, 258) = 2.714, p = 0.045; the Junior–Director comparison was significant, diff = −0.365, p = 0.026).

The analysis showed a statistically significant effect of gender on the Workload_Mean variable (F (2, 259) = 5.505, p = 0.005). Group 3 presented significantly higher workload values (M = 4.22) compared to men (M = 3.55, p = 0.011) and women (M = 3.57, p = 0.007). The finding suggests a possible difference in the work experience, or the perception of it, among individuals who preferred not to state their gender. While statistical analysis provides valuable insights, it cannot fully capture the subjective experiences and emotional nuances underlying individual responses. For instance, two people may receive the same score while facing completely different day-to-day realities. (R: F (2, 259) = 5.505, p = 0.005; Tukey gave differences “Prefer not to answer” vs. Male/Female with p = 0.003–0.011.)

For the variable Experience_Mean, statistically significant effects were found for age, position in the company, and education. For age, a significant difference was found (F (3, 258) = 2.987, p = 0.032), with a tendency for experience to increase in older age groups. However, none of the pairwise comparisons reached statistical significance. For the position in the company, the effect was statistically significant (F (3, 258) = 3.916, p = 0.009). Tukey HSD showed that group 4 (senior executives, M = 4.38) and group 3 (middle management, M = 4.27) had a significantly higher perception of professional experience compared to group 1 (M = 3.67), with p = 0.007 and p = 0.044, respectively. Regarding education, the ANOVA showed a strongly significant difference (F (2, 259) = 12.15, p < 0.001). Individuals with a PhD (group 3) had a significantly higher experience value (M = 4.64) than individuals with a bachelor’s degree (group 1, M = 3.60, p < 0.001) and a master’s degree (group 2, M = 4.06, p = 0.030). Group 2 also scored higher than group 1 (p = 0.001). The results support the existence of a positive relationship between the level of education and self-perceived professional experience. In our opinion, the differentiation of views according to years of experience reflects the tendency of more experienced auditors to perceive audit quality through the lens of broader responsibility, and not simply formal procedures.

For the variables Inspection_Mean and HR_Mean, none of the examined independent variables showed a statistically significant effect. For Training_Mean, there was an effect of education: F (2, 259) = 5.955, p = 0.003 (Tukey: PhD > Bachelor, p = 0.003, and a trend for Master > Bachelor, p ≈ 0.052). For Inspection_Mean, although gender group 3 (“I prefer not to answer”) had a slightly lower mean (M = 4.19) compared to men (M = 4.40) and women (M = 4.41), no difference was statistically significant according to Tukey HSD (all p > 0.40). For age, there was a slight trend of increased values in group 4 (51+ years, M = 4.60); however, the differences were not statistically significant in any of the pairs (all p > 0.06). The overall p = 0.064 in Tukey HSD shows a marginal but non-significant trend of differentiation. The mean value for senior executives (group 4, M = 4.56) was higher compared to the other positions. However, the differences between them were not significant according to the multiple comparison analysis (all p > 0.40). Although there was an increase in the mean from the group with fewer years of experience (group 1, M = 4.32) to the group with more experience (group 4, M = 4.48), the differences were not statistically significant at any level (all p > 0.50). In addition, individuals with a PhD (group 3, M = 4.46) had a slightly higher value than those with a bachelor’s degree (M = 4.35) or a master’s degree (M = 4.41), but without any statistical significance (p > 0.50).

Finally, regarding HR_Mean, the analysis showed that there are no statistically significant differences between genders (F (2, 259) = 2.178, p = 0.115). Although group 3 (“I prefer not to answer”) had a lower mean value (M = 4.11) compared to men (M = 4.44) and women (M = 4.45), the differences were not statistically significant (p > 0.05). The p value = 0.051 in the homogeneous subgroups indicates a marginal but not statistically significant difference. No significant differences were found between age groups (F (3, 258) = 0.668, p = 0.572). The means ranged from 4.37 to 4.48, without any statistically significant comparison (all p > 0.50). Job position had no statistically significant effect on the index values (F (3, 258) = 0.220, p = 0.882). Mean values were similar across all positions (from 4.41 to 4.50), and no difference was considered significant (all p > 0.80). No statistically significant differentiation was observed depending on experience (F (3, 258) = 0.895, p = 0.444). The group with the most experience (1 = 21+ years) had the highest mean value (M = 4.50), but the differences were not significant (p > 0.50). Nor was educational level statistically significantly associated with HR_Mean values (F (2, 259) = 0.347, p = 0.707). Although the group with a PhD (M = 4.49) had a marginally higher mean, no significance was reached (all p > 0.60).

4.4. Hierarchical Cluster Analysis and K-Means Clustering

Hierarchical Cluster Analysis was then applied to identify groups of participants with similar response profiles. The aim was to identify the underlying types/profiles of auditors, based on the seven composite indicators. To investigate different types of professionals based on their perceptions, we followed Ward’s method and the Squared Euclidean Distance as a distance measure. The Agglomeration Schedule revealed steady and relatively small increases in the values of the mergers during the early stages (with values from 0 to about 10,000). In contrast, a sudden and sharp increase was observed in the last mergers, with peaks: from 296,267 to 401,289 (final merger 260 to 261), and from 255,680 to 296,267, i.e., rapid increases > 40,000–100,000 units.

According to the interpretation criteria of the analysis, the pattern shows a clear point of discontinuity. This leads us to conclude that the three (3) group solution is the most understandable and statistically stable (Table 7). The same is seen in the dendrogram, where the clear distinction into three groups is evident before the mergers that increase homogeneity begin (Figure 1).

The choice of three groups allows for the optimal balance between interpretability and participant variability. In addition to the WSS and the Elbow method, the quality of the clusters was also evaluated with internal validation indicators, such as the silhouette coefficient and the Dunn index. K-means completed in 10 iterations with a maximum centroid shift < 0.011, indicating adequate stabilization, even though the strict convergence criterion was not formally met.
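As an illustration of one of the internal validation indicators mentioned above, the sketch below computes the mean silhouette coefficient in plain Python. The function name is hypothetical and any data passed to it here is illustrative; the study does not report its silhouette values in this section, and none are implied. For each point, a is the mean distance to the other members of its own cluster and b is the smallest mean distance to the members of any other cluster; the score is (b - a) / max(a, b).

```python
from math import dist
from statistics import mean


def mean_silhouette(points, labels):
    """Mean silhouette coefficient; assumes at least two distinct labels."""
    scores = []
    for i, (p, lab) in enumerate(zip(points, labels)):
        own = [dist(p, q)
               for j, (q, other) in enumerate(zip(points, labels))
               if other == lab and j != i]
        if not own:
            # Common convention: a singleton cluster scores 0.
            scores.append(0.0)
            continue
        a = mean(own)
        b = min(
            mean(dist(p, q) for q, other in zip(points, labels) if other == rival)
            for rival in set(labels) if rival != lab
        )
        scores.append((b - a) / max(a, b))
    return mean(scores)
```

Values close to 1 indicate compact, well-separated clusters, while values near 0 or below suggest overlapping or misassigned observations. With a cluster as small as five members, per-cluster averages would be worth inspecting alongside the overall mean.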

To ascertain the optimal number of clusters in the present K-means clustering analysis, we evaluated solutions with k = 2, k = 3, and k = 4 using a combination of statistical diagnostics and theoretical interpretability. The within-cluster sum of squares (WSS) is reported for each solution. The most notable decline in the WSS occurred between k = 2 (WSS = 280.54) and k = 3 (WSS = 242.42); the decline from k = 3 to k = 4 (WSS = 226.01) was minor. The WSS results therefore show an evident “elbow” at k = 3, in line with the elbow method [60], and suggest that the three-cluster solution is optimal for this study. One concern with the 3-cluster solution is that Cluster 2 had only five members out of a total of 262. Although this cluster is small, its size alone does not invalidate the model. Small clusters of this kind have been discussed and accepted in previous literature in various forms; however, a cluster of five individuals must be corroborated by other metrics.
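The size of the elbow can be checked directly from the three WSS values reported above. The short sketch below uses only those reported figures; the helper name `wss_drops` is hypothetical.

```python
# WSS values as reported in the text for k = 2, 3, 4.
wss = {2: 280.54, 3: 242.42, 4: 226.01}


def wss_drops(values):
    """Absolute and relative WSS decline between consecutive k."""
    ks = sorted(values)
    drops = {}
    for lower, higher in zip(ks, ks[1:]):
        absolute = values[lower] - values[higher]
        drops[(lower, higher)] = (absolute, 100 * absolute / values[lower])
    return drops


for step, (absolute, percent) in wss_drops(wss).items():
    print(step, round(absolute, 2), round(percent, 1))
```

The decline from k = 2 to k = 3 is 38.12 (about 13.6%), while the decline from k = 3 to k = 4 is 16.41 (about 6.8%), which is consistent with reading the bend at k = 3.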

To add further evidence in favor of the cluster model, we also considered the k = 4 model, which produced a cluster of only two individuals, further threatening its validity. However, the turnover rate across sensitivity analyses was sufficient to justify a valid interpretation. To safeguard statistical validity, we conducted a supporting sensitivity analysis. For this purpose, two key variables with high explanatory contribution and differentiation between the groups were selected: Workload_Mean and Inspection_Mean. The selection was based on both their statistical power in the original model (F > 88, p < 0.001) (Table 7) and their theoretical weight in the daily work experience of the auditors. Based on these two variables, a new K-means clustering with three groups was performed. The results showed a distribution similar to the original solution, with significant differences between the clusters (Workload: F = 131.46; Inspection: F = 153.45; p < 0.001). A bootstrapped ANOVA was performed using 1000 resamples with a 95% confidence interval. This analysis supported the stability of the differences in means [58]. These findings support the reliability of the clustering solution, despite the small group size (all between-cluster comparisons remained p < 0.001 in the robustness tests).
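The resampling idea behind the robustness check can be sketched as follows. This is a simplified stand-in, not the bootstrapped ANOVA itself: it computes a percentile bootstrap confidence interval for the difference in means between two groups, using 1000 resamples and a 95% level as in the text. The function name, the fixed seed, and any scores passed to it are assumptions for illustration only.

```python
import random
from statistics import mean


def bootstrap_mean_diff_ci(group_a, group_b, n_resamples=1000, level=0.95, seed=0):
    """Percentile bootstrap CI for mean(group_a) - mean(group_b)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    diffs = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping its original size.
        resample_a = rng.choices(group_a, k=len(group_a))
        resample_b = rng.choices(group_b, k=len(group_b))
        diffs.append(mean(resample_a) - mean(resample_b))
    diffs.sort()
    tail = (1 - level) / 2
    lower = diffs[round(tail * n_resamples)]
    upper = diffs[round((1 - tail) * n_resamples) - 1]
    return lower, upper
```

If the resulting interval excludes zero, the difference in means is stable under resampling. For a group of only five observations the resamples draw on very few distinct values, so such an interval should be read with caution.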

In addition, the ANOVAs for each of the cluster solutions showed statistically significant differences (p < 0.001) between clusters for all of the variables in this study, which further reinforces the discriminant validity of the 3-cluster solution. Taken together, the quantitative criteria (WSS, ANOVA) and the qualitative evaluation (interpretability and stability of the clusters) indicate that the three-cluster solution is the best choice, both theoretically and statistically.

Cluster 3 (“High Performance/Integrated Perception”, n = 155) members rate high on all dimensions, with averages around 4.6–4.7 in ESG, supervision, workload, experience, training, inspection, and HR. This suggests that members have an integrated, positive perception of the work environment and are likely to combine experience, training, and strong professionalism. Cluster 1 (“Satisfied but with margins”, n = 102) gathers individuals with good but slightly lower values than Cluster 3 (around 4.1–4.2). Although they maintain a positive perception, they show somewhat less consistency across the indicators. These may be professionals in a transitional stage or with limited support. Cluster 2 (“Low Perception/Potentially Problematic”, n = 5) has markedly lower values on all indicators (around 2.5 to 3.4). Its small size suggests a marginal but real minority of professionals who face challenges or low satisfaction with their work environment. Further investigation is worthwhile so that more targeted interventions can be designed. This cluster was not anticipated at the outset. In informal discussions, some of these professionals indicated that their low ratings were due to recent changes in management. The existence of this cluster with a low perception of quality may reflect organizational difficulties or emotional fatigue: participants may experience pressure without the required support, which is not easily captured in numerical data but is present in their daily professional lives.

Figure 2 outlines the markedly different profiles of the three clusters across all composite indices, illustrating the significant differences in their perceptions.

Hence, the K-means analysis provided evidence of three groups of participants with differing perceptions of the work parameters. The number of groups (k = 3) was selected with reference to the elbow plot, which showed a bend at k = 3 (Figure 3) and thus confirmed the three distinct groupings [56]. Cluster 3 was the largest (over 59%) and revealed a very positive perception of their work, while only a small group belonged to Cluster 2 (1.9%), which was disconcerting and is therefore emphasized. The findings can inform human resources strategies to improve satisfaction and performance, including for the smaller groups that face greater challenges. The “elbow” in the WSS supports k = 3, with only a small marginal improvement at k = 4.
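The cluster shares quoted above follow directly from the reported cluster sizes. A minimal check, using only the sizes given in the text (the helper name `cluster_shares` is hypothetical):

```python
# Cluster sizes as reported in the text (total N = 262).
cluster_sizes = {1: 102, 2: 5, 3: 155}


def cluster_shares(sizes):
    """Percentage of the sample that falls in each cluster."""
    total = sum(sizes.values())
    return {cluster: 100 * n / total for cluster, n in sizes.items()}


for cluster, share in cluster_shares(cluster_sizes).items():
    print(f"Cluster {cluster}: {share:.1f}%")
```

This gives roughly 38.9% for Cluster 1, 1.9% for Cluster 2, and 59.2% for Cluster 3, matching the figures in the paragraph.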

To examine whether the three clusters created by K-Means differ significantly in terms of the key research indicators, a One-Way ANOVA was applied. It was found that all indicators show statistically significant differences between the clusters (p < 0.001), which enhances the discriminatory power of the clustering (Table 7).

Post-hoc analysis (Tukey HSD) revealed which between-group differences are significant (Table 7). For ESG_Mean, Cluster 3 > Cluster 1 > Cluster 2 (all differences significant, p < 0.001). The highest ESG perception appears in Cluster 3 (M = 4.62) and the lowest in Cluster 2 (M = 3.00). For Supervision_Mean, Cluster 3 > Cluster 1 > Cluster 2 (all differences significant), with Cluster 3 showing the highest satisfaction with supervision (M = 4.62). For Workload_Mean, Cluster 3 > Cluster 1 > Cluster 2; Cluster 2 has a significantly lower perception of workload (M = 2.53). For Experience_Mean, Cluster 3 > Cluster 1 > Cluster 2; the perception of experience increases significantly from Cluster 2 (M = 3.47) to Cluster 3 (M = 4.65). For Training_Mean, Cluster 3 > Cluster 1 > Cluster 2; Cluster 3 reports the highest perception of training (M = 4.65). For Inspection_Mean, Cluster 3 differs significantly from the other clusters, most clearly from Cluster 1 (p < 0.001). The difference between Clusters 1 and 2 is not statistically significant (p = 0.475), although the means differ. Finally, for HR_Mean, Cluster 3 > Cluster 1 > Cluster 2 (all differences significant). The perception of human resources is low in Cluster 2 (M = 3.00) and high in Cluster 3 (M = 4.64).

The analysis indicates that the groups identified by K-Means differed substantially and statistically significantly on all key indicators, except for the difference between Cluster 1 and Cluster 2 on Inspection, which was not significant. Cluster 3 had a very positive perception of all areas (training, supervision, experience, workload, ESG, etc.). Cluster 1 had an overall satisfactory profile but slightly lower averages than Cluster 3. Cluster 2 showed a much lower perception overall, pointing to possible difficulties that could inform organizational improvements. Although it accounts for only a small part of the data (n = 5), it should still be considered important.

4.5. Principal Component Analysis

The structure of the seven indicators was examined through PCA. The Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy was 0.872, a value deemed “good” [59], indicating that the variables are sufficiently correlated to warrant factor analysis (Table 1). Moreover, Bartlett’s test of sphericity yielded statistically significant results (χ² = 614.47, df = 21, p < 0.001), indicating that the correlation matrix differs significantly from an identity matrix and that PCA was an appropriate technique (PCA was applied to the correlation matrix of the standardized composite indicators). PCA yielded only one component with an eigenvalue greater than 1 (3.61), which explains about 51.57% of the total variance. Simply put, the seven variables appear to be closely related and converge on the same dimension, likely describing the same fundamental aspect of the phenomenon. The loadings of the variables on the principal component were quite high (all > 0.60), which also supports the consistency of the dimension: ESG_Mean (0.803), Supervision_Mean (0.774), Workload_Mean (0.758), Inspection_Mean (0.694), HR_Mean (0.684), Training_Mean (0.675), Experience_Mean (0.623). The use of PCA is therefore sufficiently substantiated.
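The reported figures are internally consistent, as a brief worked check shows. With p = 7 standardized indicators, the total variance of the correlation matrix equals p, so the share explained by the first component is its eigenvalue divided by p, and the degrees of freedom of Bartlett's test are p(p − 1)/2. The symbols λ₁ and p are introduced here only for illustration.

```latex
\[
\frac{\lambda_1}{p} = \frac{3.61}{7} \approx 0.5157 \;(\approx 51.57\%),
\qquad
df = \frac{p(p-1)}{2} = \frac{7 \cdot 6}{2} = 21 .
\]
```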

Rotation was not applied, as only one component was extracted, which is methodologically sound in this case. This result supports the interpretation that the composite indicators capture a single psychometric construct or type of experience of the participants [48]. PCA confirms that the indicators can be considered part of a common conceptual framework, which allows the creation of an overall indicator or factor. This indicator was labeled “Perceived Organizational Support in the ESG Context”. This dimension expresses how the participants experience general job support, training, supervision, inspection, and workload in terms of ESG practices. As a result, the standardized factor identifies a single meaningful construct of Perceived Organizational Support related to ESG. The items represent the construct because they are all related to employees’ perceptions of ethical climate, management emphasis on ESG, and internal support and structures related to ESG. This notion derives from organizational behavior research, where perceived support is a crucial antecedent to employee engagement, retention, and ethical behavior in line with an organization’s ESG practices [61]. Such a convergence of indicators, although encouraging, requires careful interpretation, so as not to overlook the exceptions hidden in the data. Every statistical analysis benefits from a second, more qualitative perspective.

When we contacted audit firms to distribute the questionnaire, we initially encountered reservations about the ESG topic. However, there was ultimately a positive response, indicating a growing interest in social and environmental issues in the industry. In our experience, the stronger the sense of support from the organization, the more auditors feel empowered to integrate ESG values into their work, which aligns with the literature on the role of perceived organizational support in the ESG context. This underscores the importance of the human factor and culture in professional environments.

5. Discussion

The findings of this study provide empirical depth to ongoing global discussions about the purpose, implementation, and effectiveness of AQIs. By surveying audit professionals across jurisdictions and applying advanced statistical techniques—factor analysis, composite indices, ANOVA, clustering, and PCA—the research offers a nuanced portrait of how AQIs are perceived and used within different institutional and regulatory contexts. Crucially, the results highlight that AQIs are not interpreted uniformly across countries; rather, they are filtered through national regulatory logics, organizational practices, and stakeholder expectations. This complexity reinforces the importance of context-specific analysis in discussions of audit quality and offers new insight into how AQIs may evolve as tools of accountability, performance management, and sustainability assurance.

The statistical findings make clear that national regulatory frameworks significantly shape how AQIs are perceived. In Canada, where the CPAB has promoted a collaborative, principle-based approach to AQI development, respondents rated indicators more positively in terms of their usefulness for internal quality control, communication with audit committees, and long-term improvement. The composite indices constructed through factor analysis showed that Canadian respondents consistently emphasized developmental dimensions of AQIs, suggesting alignment between regulatory philosophy and practitioner engagement. These findings provide empirical confirmation of claims made in previous studies that highlight the benefits of cooperative regulatory environments in fostering meaningful quality initiatives [33].