Data-Driven Framework for Aligning Artificial Intelligence with Inclusive Development in the Global South

Abstract

1. Introduction

2. Literature Review

2.1. Inclusion, Diversity, and Data Justice

2.2. From Ethics to Governance: Translating Principles into Organizational Practice

2.3. Societal Risks, Surveillance, and the Politics of Framing

2.4. Digital Divides, Access, and Territorial Inequalities

2.5. Applications, Sectoral Transformations, and Methodological Advances

2.6. Synthesis and Implications for This Study

3. Materials and Methods

3.1. Study Design and Rationale

3.2. Setting and Participants

3.3. Measures and Instrument Development

3.4. Data Collection Procedures

3.5. Analytical Strategy

3.6. Robustness and Bias Mitigation

3.7. Ethics and Consent

3.8. Reproducibility, Data, and Code Availability

4. Results

4.1. Sample Characteristics

4.2. Measurement Results: Dimensionality, Reliability, and Validity

4.3. Index Scores and Site Differences

4.4. Structural Relations with Development-Relevant Outcomes

Disaggregation of Harms and Framework Correlations

4.5. Heterogeneity, Invariance, and Sensitivity Analyses

4.6. Qualitative Integration and Mechanisms

5. Conclusions from Results

6. Discussion

6.1. Interpretation of Principal Findings

6.2. How the Findings Relate to and Extend Prior Work

6.3. Distributional, Sectoral, and Environmental Implications

6.4. Implications for Policy and Practice

6.5. Implications for Research

6.6. Limitations

6.7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Theme | Key Insights from the Literature | Gap Addressed by Framework |
|---|---|---|
| Inclusion and Data Justice | Context-sensitive, participatory governance required; risks of universal templates | Framework operationalizes inclusion via access, agency, accountability, adaptation |
| Ethics to Governance | Ethical principles often fail in translation to practice | Framework specifies measurable constructs and governance levers |
| Societal Risks | AI tied to surveillance, extraction, market logics | Framework foregrounds agency and accountability to mitigate harms |
| Digital Divides | Access and affordability remain foundational | Framework integrates infrastructural, linguistic, and cultural fit |
| Sectoral Applications | AI impacts uneven across domains, with methodological challenges | Framework validated with mixed methods and field data |

| Construct | Operational Definition | Measurement Indicators | Primary Harm Types Addressed |
|---|---|---|---|
| Access | Availability and affordability of digital infrastructure and services | Connectivity, device access, affordability, reliability | Exclusionary harms (unequal access, denial of services) |
| Agency | Capacity of individuals and communities to understand, contest, and influence automated decisions | Comprehension, appeal channels, consent, collective redress pathways | Procedural harms (opacity, lack of remedy) |
| Accountability | Institutional mechanisms for transparency, auditability, and responsibility | Clear assignment of responsibility, grievance resolution records, audit trails | Privacy and security harms (data breaches, surveillance), procedural harms |
| Adaptation | Fit of systems to local languages, cultural practices, and infrastructural realities | Local language support, offline/low-bandwidth modes, socio-cultural responsiveness | Exclusionary harms (bias against marginalized groups, cultural misfit) |

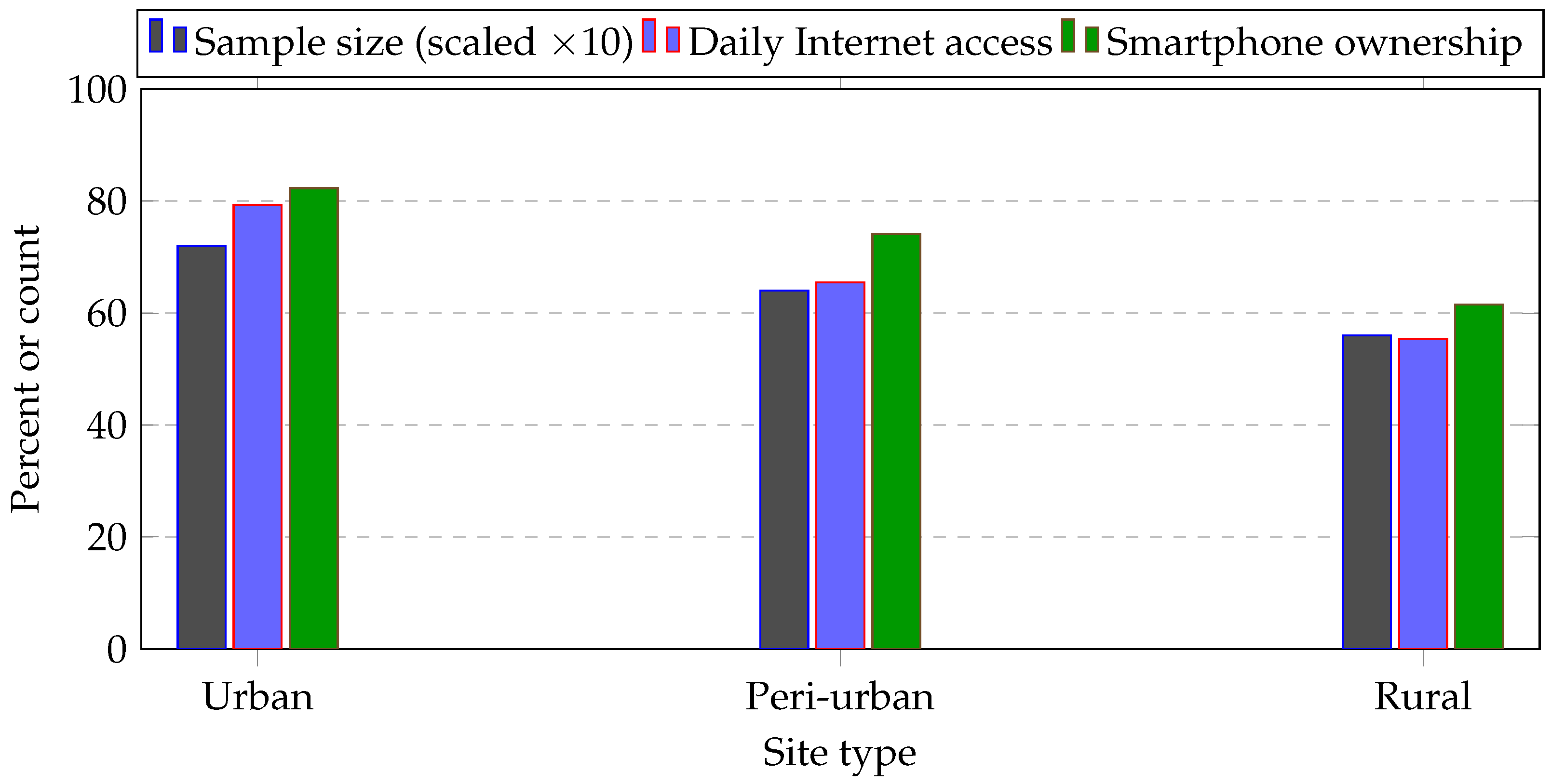

| Characteristic | Urban (n = 720) | Peri-Urban (n = 640) | Rural (n = 560) |
|---|---|---|---|
| Female (%) | 50.7 | 53.4 | 52.7 |
| Age, median (interquartile range) | 31 (23–42) | 34 (25–45) | 36 (26–47) |
| Daily internet access (%) | 79.3 | 65.5 | 55.4 |
| Smartphone ownership (%) | 82.3 | 74.1 | 61.5 |
| Uses AI-enabled public service a (%) | 46.8 | 39.1 | 28.9 |
| Completed secondary education (%) | 77.6 | 63.8 | 48.2 |
| Household income below national median (%) | 28.1 | 46.7 | 62.4 |
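
Because the three sites differ in size, a pooled figure for any row of the table above is a sample-size-weighted average, not a simple mean of the three percentages. The following is a minimal sketch of that arithmetic for the daily internet access row. The function name `pooled_percentage` is illustrative and not taken from the study, and because the published percentages are rounded, the pooled value is only approximate.

```python
def pooled_percentage(site_sizes, site_percentages):
    """Return the sample-size-weighted average of per-site percentages."""
    if len(site_sizes) != len(site_percentages):
        raise ValueError("one percentage is required per site")
    total = sum(site_sizes)
    weighted = sum(n * p for n, p in zip(site_sizes, site_percentages))
    return weighted / total


# Site sizes and daily internet access (%) as reported in the table.
site_sizes = [720, 640, 560]          # urban, peri-urban, rural
daily_internet = [79.3, 65.5, 55.4]

print(round(pooled_percentage(site_sizes, daily_internet), 1))
```

With the rounded table values this comes to roughly 67.7% across the 1920 respondents, compared with an unweighted mean of about 66.7%.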

| Dimension | Items Retained | Loading Range | Composite Reliability | Average Variance Extracted |
|---|---|---|---|---|
| Access | 5 | 0.58–0.82 | 0.84 | 0.54 |
| Agency | 5 | 0.61–0.85 | 0.88 | 0.60 |
| Accountability | 5 | 0.57–0.81 | 0.83 | 0.55 |
| Adaptation | 5 | 0.59–0.83 | 0.86 | 0.63 |
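
For readers who want to see how composite reliability and average variance extracted relate to item loadings, the sketch below applies the standard Fornell and Larcker formulas to standardized loadings. It assumes uncorrelated item errors, so each error variance is one minus the squared loading. The individual item loadings are not given in this section, so the five values used here are hypothetical numbers chosen inside the reported Access range (0.58–0.82); the output is illustrative and is not a reproduction of the table.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    sum_loadings_sq = sum(loadings) ** 2
    error_variance = sum(1.0 - l ** 2 for l in loadings)
    return sum_loadings_sq / (sum_loadings_sq + error_variance)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


# Hypothetical standardized loadings for a five-item scale (assumed values,
# not the study's estimates).
example_loadings = [0.58, 0.70, 0.75, 0.78, 0.82]

print("CR :", round(composite_reliability(example_loadings), 3))
print("AVE:", round(average_variance_extracted(example_loadings), 3))
```

Conventional rules of thumb treat composite reliability above 0.70 and average variance extracted above 0.50 as adequate, and every dimension in the table meets both thresholds.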

| Predictor | Service Reach | Time Savings | Grievance Resolved | Reported Harms |
|---|---|---|---|---|
| Access | 0.41 (0.03) *** | 0.22 (0.03) *** | 0.08 (0.03) ** | (0.03) ** |
| Agency | 0.12 (0.03) ** | 0.06 (0.03) † | 0.28 (0.04) *** | (0.03) *** |
| Accountability | 0.07 (0.03) * | 0.05 (0.03) | 0.16 (0.04) *** | (0.04) *** |
| Adaptation | 0.10 (0.04) ** | 0.19 (0.04) *** | 0.06 (0.03) † | (0.03) * |
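
As a rough reading aid for the table above, the sketch below converts a coefficient and its standard error into a Wald z statistic and a two-sided p-value. It assumes that each cell reports a coefficient with its standard error in parentheses and that a large-sample normal approximation is acceptable; neither assumption is confirmed in this section. The function name `wald_z_and_p` is illustrative. Because the published values are rounded to two decimals, recomputed p-values can differ from the significance markers in the table.

```python
import math


def wald_z_and_p(coefficient, standard_error):
    """Return (z, two-sided p) under a standard normal approximation."""
    if standard_error <= 0:
        raise ValueError("standard error must be positive")
    z = coefficient / standard_error
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for the standard normal.
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


# Access -> Service Reach, as reported: 0.41 (0.03).
z, p = wald_z_and_p(0.41, 0.03)
print(f"z = {z:.2f}, p = {p:.3g}")
```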

| Construct | Qualitative Mechanism | Quantitative Signal |
|---|---|---|
| Access | Bandwidth volatility and shared device use lead to deferred transactions and abandonment. | Strong positive association with service reach and time savings; modest negative association with harms. |
| Agency | Absence of clear explanations and appeal pathways limits user contestation and remedy. | Positive association with grievance resolution and service reach; negative association with harms. |
| Accountability | Ambiguity in institutional responsibility reduces traceability of adverse events. | Negative association with harms; positive association with grievance resolution. |
| Adaptation | Offline-first modes and local-language prompts reduce cognitive and transaction costs. | Positive association with time savings and service reach. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

de Silva, G.H.B.A. Data-Driven Framework for Aligning Artificial Intelligence with Inclusive Development in the Global South. Sustainability 2025, 17, 9360. https://doi.org/10.3390/su17219360
