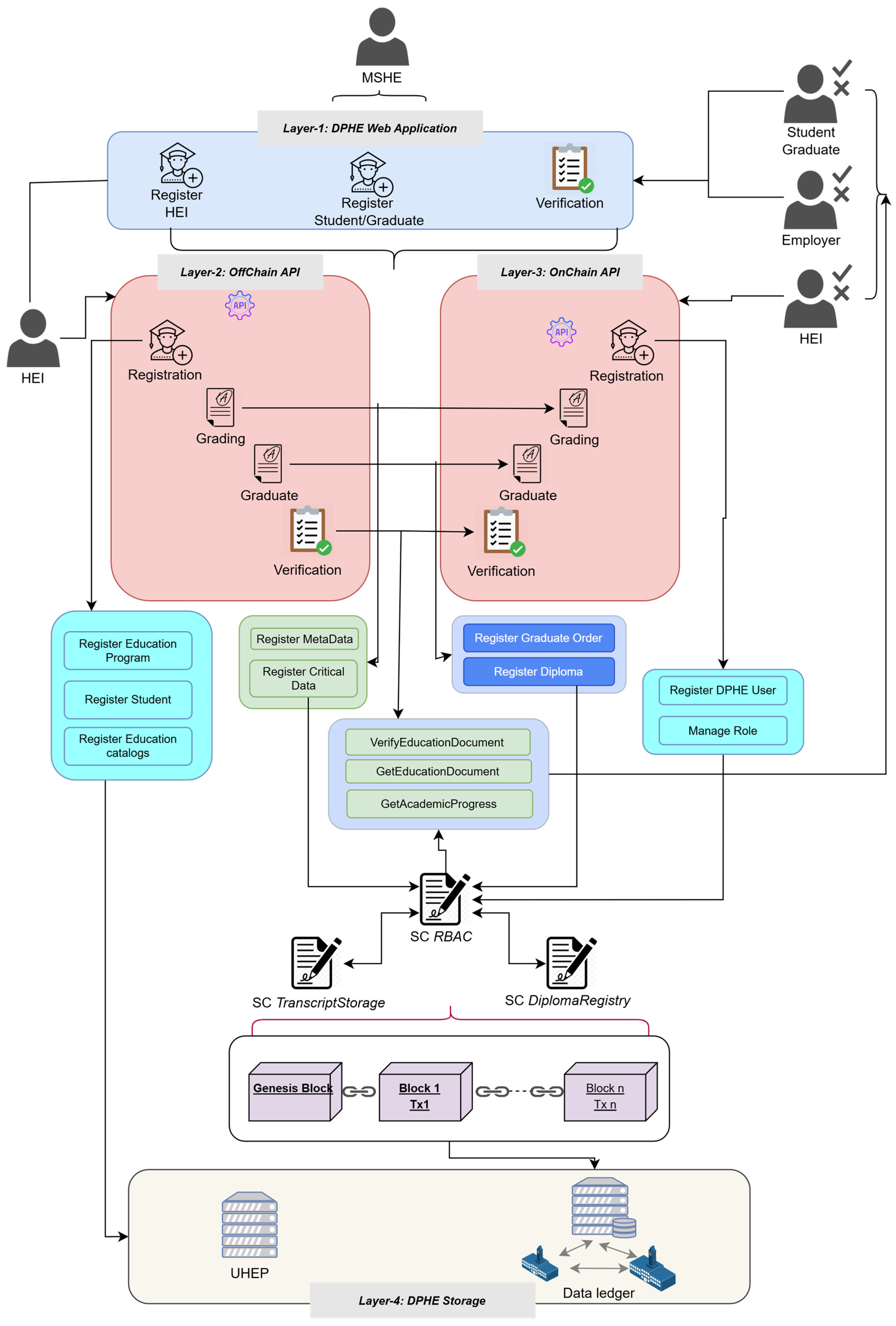

3.1. System Architecture and Performance Considerations

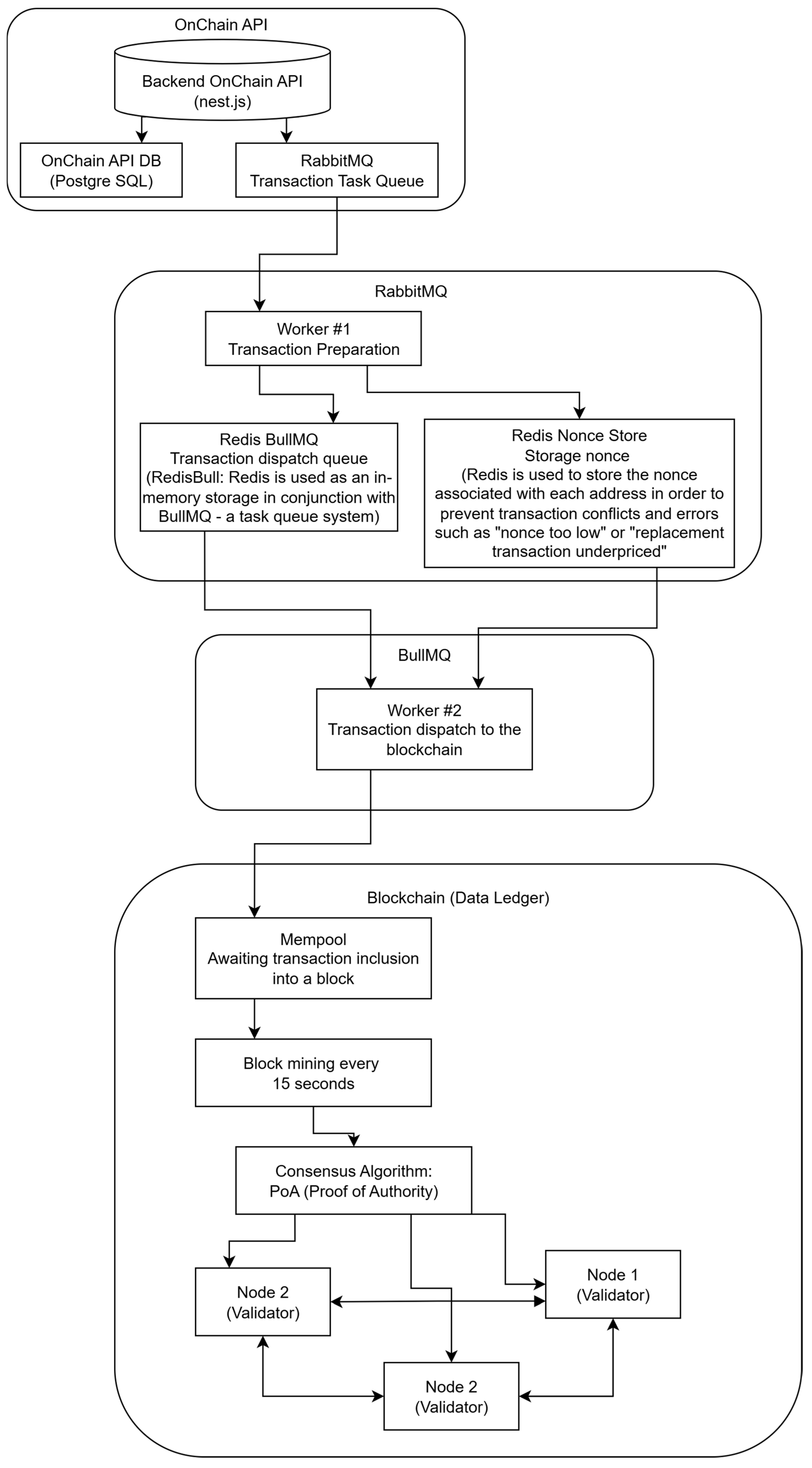

The illustrated architecture (Figure 11) outlines the core components and operational workflow of a blockchain-based transaction processing system designed for high performance, security, and deterministic execution within a permissioned blockchain network. The system follows a microservices architecture and relies on asynchronous processing to achieve efficiency, scalability, and fault isolation.

At the application layer, the OnChain API, developed with the NestJS framework, serves as the main interface to the blockchain. It handles incoming requests and connects to a PostgreSQL database for persistent data storage. A RabbitMQ-based transaction task queue decouples the API from the transaction execution logic, enabling non-blocking, asynchronous processing.

When a transaction request is submitted, Worker #1 retrieves the task from RabbitMQ and begins transaction preparation. This phase involves structuring transaction metadata, validating input data, and ensuring nonce consistency. A Redis-based Nonce Store mitigates nonce-related transaction errors such as “nonce too low” or “replacement transaction underpriced.” The store maintains a mapping between blockchain addresses and their respective nonces, ensuring that transactions are sequenced correctly in a concurrent environment.
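The nonce-allocation step can be sketched as follows. This is a minimal in-memory stand-in for the Redis Nonce Store, assuming one counter per address that is seeded once from the chain (for example, from the node's pending transaction count) and then advanced atomically; in Redis, an atomic counter command such as `INCR` would play the role of the lock used here. The class, its methods (`allocate`, `resync`), and the `fetch_chain_nonce` callback are hypothetical names chosen for illustration.

```python
import threading
from typing import Callable, Dict


class NonceStore:
    """Hypothetical in-memory stand-in for a Redis-backed nonce store.

    Keeps the next nonce to use for each sender address and hands out
    nonces atomically, so concurrent workers never reuse a value.
    """

    def __init__(self, fetch_chain_nonce: Callable[[str], int]) -> None:
        # Assumption: fetch_chain_nonce(address) returns the pending nonce
        # reported by the node for that address.
        self._fetch_chain_nonce = fetch_chain_nonce
        self._next: Dict[str, int] = {}
        self._lock = threading.Lock()  # plays the role of Redis' atomic increment

    def allocate(self, address: str) -> int:
        """Return the next nonce for `address` and advance the counter."""
        key = address.lower()  # normalize hex case so one address maps to one counter
        with self._lock:
            if key not in self._next:
                # First use of this address: seed the counter from the chain.
                self._next[key] = self._fetch_chain_nonce(key)
            nonce = self._next[key]
            self._next[key] = nonce + 1
            return nonce

    def resync(self, address: str) -> None:
        """Reset the counter from the chain, e.g. after a 'nonce too low' error."""
        key = address.lower()
        with self._lock:
            self._next[key] = self._fetch_chain_nonce(key)


if __name__ == "__main__":
    store = NonceStore(lambda addr: 7)  # pretend the chain reports nonce 7
    allocated = []
    workers = [
        threading.Thread(target=lambda: allocated.append(store.allocate("0xABC")))
        for _ in range(50)
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    print(sorted(allocated) == list(range(7, 57)))  # True: no duplicates, no gaps
```

Serializing allocation per store keeps the sketch simple; a production store would also need to release or re-sync nonces of transactions that fail before reaching the mempool.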

The prepared transaction is then pushed into a BullMQ transaction dispatch queue, which uses Redis as its underlying in-memory store. This enables high-speed queuing and delivery to downstream worker processes. Worker #2, responsible for the dispatch phase, pulls transactions from BullMQ and submits them to the blockchain network for inclusion in the ledger.
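The separation of preparation and dispatch into two workers can be illustrated with threads connected by in-process queues. This is a minimal sketch under stated assumptions: Python's `queue.Queue` stands in for both the RabbitMQ task queue and the BullMQ dispatch queue, a plain dictionary stands in for the Redis Nonce Store, and a list that records submissions replaces the JSON-RPC call Worker #2 would make. All function names are hypothetical.

```python
import queue
import threading
from typing import Callable, Dict, List, Optional

STOP = None  # sentinel that tells a worker to shut down


def worker_prepare(task_queue: queue.Queue, dispatch_queue: queue.Queue) -> None:
    """Worker #1: validate each request, attach a nonce, hand it to dispatch."""
    next_nonce: Dict[str, int] = {}  # simplified stand-in for the Redis Nonce Store
    while True:
        task: Optional[dict] = task_queue.get()
        if task is STOP:
            dispatch_queue.put(STOP)  # propagate shutdown downstream
            return
        if not task.get("to"):
            continue  # invalid request: dropped here, no nonce consumed
        sender = task["from"]
        nonce = next_nonce.get(sender, 0)
        next_nonce[sender] = nonce + 1
        dispatch_queue.put({**task, "nonce": nonce})


def worker_dispatch(dispatch_queue: queue.Queue, send: Callable[[dict], None]) -> None:
    """Worker #2: pull prepared transactions and submit them."""
    while True:
        tx = dispatch_queue.get()
        if tx is STOP:
            return
        send(tx)


def run_pipeline(requests: List[dict]) -> List[dict]:
    task_queue: queue.Queue = queue.Queue()      # stand-in for RabbitMQ
    dispatch_queue: queue.Queue = queue.Queue()  # stand-in for BullMQ
    sent: List[dict] = []                        # records what would be sent to a node
    t1 = threading.Thread(target=worker_prepare, args=(task_queue, dispatch_queue))
    t2 = threading.Thread(target=worker_dispatch, args=(dispatch_queue, sent.append))
    t1.start()
    t2.start()
    for req in requests:  # the API side only enqueues and returns immediately
        task_queue.put(req)
    task_queue.put(STOP)
    t1.join()
    t2.join()
    return sent


if __name__ == "__main__":
    out = run_pipeline([
        {"from": "0xA", "to": "0xC", "data": "issue"},
        {"from": "0xA", "to": "", "data": "bad"},
        {"from": "0xA", "to": "0xC", "data": "revoke"},
    ])
    print([tx["nonce"] for tx in out])  # [0, 1]
```

Because each stage has a single consumer, submission order per sender matches nonce order; scaling out to several dispatch workers would require ordering per sender to be enforced separately.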

Submitted transactions are held in the mempool, a temporary staging area, until they are included in a block. The blockchain uses a Proof of Authority (PoA) consensus mechanism, in which a pre-authorized set of validator nodes (Node 1, Node 2, and Node 3) validates and finalizes blocks. The network is configured to produce new blocks at fixed intervals (e.g., every 15 s), ensuring timely and consistent transaction processing.
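The effect of a fixed block interval on timeliness can be quantified with simple arithmetic. The sketch below assumes that transactions arrive at uniformly random times and that every pending transaction fits into the next block; under those assumptions the wait until inclusion averages half the interval and never exceeds one full interval. The function names are illustrative.

```python
from typing import Tuple

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s


def blocks_per_day(interval_s: float) -> float:
    """Blocks produced per day when a block is sealed every `interval_s` seconds."""
    return SECONDS_PER_DAY / interval_s


def wait_for_next_block(interval_s: float) -> Tuple[float, float]:
    """(mean, worst-case) wait until the next block.

    Assumes arrivals are uniformly distributed over the interval and that the
    transaction fits into the next block (no backlog).
    """
    return interval_s / 2, float(interval_s)


if __name__ == "__main__":
    print(blocks_per_day(15))       # 5760.0
    print(wait_for_next_block(15))  # (7.5, 15.0)
```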

This modular, layered architecture addresses several key requirements of enterprise and institutional blockchain applications. By combining asynchronous processing, consistent nonce management, and deterministic consensus, the system guards against nonce collisions between concurrent transactions, increases throughput, and preserves data integrity. PoA consensus further supports trust and governance within the network, making this architecture particularly suitable for applications such as academic credential verification, governmental record-keeping, and regulated financial transactions.

In summary, the architecture integrates asynchronous messaging via RabbitMQ and BullMQ, nonce conflict prevention through Redis, and PoA consensus for block validation. Separating transaction preparation and dispatch into distinct workers, and combining in-memory with persistent storage, gives the system high throughput, fault tolerance, and deterministic execution, which suits institutional applications such as credential verification and secure record management.

Predicting the deployment patterns or complexity of smart contracts on a public Ethereum blockchain is nearly impossible, as any participant can freely deploy contracts. This openness makes it difficult to estimate future performance or storage requirements. In contrast, on a consortium-based Ethereum blockchain, performance and storage requirements can be estimated more accurately because of the following characteristics: participants are authorized nodes; smart contracts are relatively fixed, and their complexity is determined by the underlying business model; and transaction volume is bounded by the scale of the specific enterprise use case.

In Ethereum, transaction execution time can be divided into two main components: the time spent in the Ethereum Virtual Machine (EVM) and the cost of updating the “World State” [32]. Similarly, storage overhead is primarily determined by the size of the transaction and the changes made to the World State data. Because consortium blockchains typically operate with predetermined, stable contracts, EVM execution time and transaction size remain relatively constant. Variations in performance and storage utilization are therefore driven mainly by modifications to the World State.
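The decomposition can be written compactly. The symbols are introduced here for illustration and are not the notation of [32]: T_EVM is the EVM execution time, T_state the World State update time, S_raw the size of the transaction itself, S_state the World State growth it causes, and c_T, c_S constants for a given consortium contract. Once the contract-dependent terms are treated as constants, only the World State terms vary:

```latex
T_{\text{tx}} = T_{\text{EVM}} + T_{\text{state}}, \qquad
S_{\text{tx}} = S_{\text{raw}} + S_{\text{state}}
\\[4pt]
T_{\text{EVM}} \approx c_T,\; S_{\text{raw}} \approx c_S
\;\Longrightarrow\;
T_{\text{tx}} \approx c_T + T_{\text{state}}, \qquad
S_{\text{tx}} \approx c_S + S_{\text{state}}
```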

Each transaction executed through a smart contract ultimately alters nodes of the World State tree. The Ethereum state maps each 160-bit address to the corresponding account state, which is stored in a data structure known as the State Trie. The state of an account is a leaf node, and the path from the root to that leaf is derived from the account’s address (in Ethereum’s implementation, from the Keccak-256 hash of the address). Smart contracts are treated as a special type of account: each contract account has a separate data structure for persistent storage, the Storage Trie, which is also implemented as a Merkle Patricia Trie (MPT) [33].

Consequently, Ethereum’s World State consists of two hierarchical layers: the Account Trie (upper layer) and the Storage Trie (lower layer). In the current Ethereum implementation, whenever a node is searched for or inserted along a path, the client computes the sha3() hash and performs read/write operations on LevelDB. These operations take measurable time, and the size of each new node corresponds to the incremental data produced by the modification. Therefore, if the height at which a new leaf node will be inserted can be predicted, its performance and storage implications can be inferred. When state changes occur in the MPT, transaction performance and storage increments can be projected either by analyzing the modification time of the World State or by empirically testing business-specific smart contracts. This analysis focuses on the current height of World State nodes within the MPT.
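How trie height grows with the number of keys can also be explored empirically. The following sketch builds the branching structure of a path-compressed hexary trie (branch nodes with 16 children, runs of shared nibbles collapsed the way MPT extension nodes collapse them) over hashed keys and reports the depth of each leaf, counted in branch nodes. It is a simplification under stated assumptions: Python's `hashlib.sha3_256` is used as a stand-in for Ethereum's Keccak-256 (the two differ in padding, but both yield uniformly distributed keys, which is all the sketch relies on); only branch nodes are counted; and node encoding and storage are ignored. The function names are hypothetical.

```python
import hashlib
import math
from collections import defaultdict
from typing import List


def leaf_depths(keys: List[str], pos: int = 0, depth: int = 0) -> List[int]:
    """Depths (in branch nodes) of all leaves in a path-compressed hexary trie.

    `keys` are distinct hex strings of equal length; `pos` is the nibble
    currently examined and `depth` the number of branch nodes above it.
    """
    if len(keys) == 1:
        return [depth]  # a single remaining key becomes a leaf
    groups = defaultdict(list)
    for k in keys:
        groups[k[pos]].append(k)  # split on the next nibble
    if len(groups) == 1:
        # All keys share this nibble: an extension, no new branch node.
        return leaf_depths(keys, pos + 1, depth)
    depths: List[int] = []
    for group in groups.values():  # one child per distinct nibble
        depths.extend(leaf_depths(group, pos + 1, depth + 1))
    return depths


def hashed_keys(n: int) -> List[str]:
    """n pseudo-random 256-bit keys as 64-nibble hex strings."""
    return [hashlib.sha3_256(i.to_bytes(8, "big")).hexdigest() for i in range(n)]


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        d = leaf_depths(hashed_keys(n))
        # n, mean leaf depth, maximum depth (trie height), log16(n) for reference
        print(n, round(sum(d) / n, 2), max(d), round(math.log(n, 16), 2))
```

Comparing the mean and maximum depths with log16(n) gives a quick sanity check of logarithmic growth; the precise expected height should be taken from the analytical result rather than from this simulation.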

According to Devroye [34], the expected height of a Merkle Patricia Trie (MPT) can be calculated using the following formula:

When the number of transactions reaches n and a new transaction is executed, the average time cost associated with modifying the Merkle Patricia Trie (MPT) is determined as follows:

where a is the number of contract deployments in the network, n is the transaction volume, and t is the time required to create a node in the MPT (sha3() calculation and database access).

Ethereum uses LevelDB as its key–value storage database. Because hash outputs are effectively random, the keys used to access the database are irregular. While LevelDB performs well for sequential reads and writes, its efficiency decreases with random key access patterns [26]. As a result, the LevelDB access time t increases as the volume of stored data grows. Experimental results also show that when n becomes sufficiently large, t rises, and performance degrades significantly for data entries that are no longer held in LevelDB’s cache.

Subsequently, for smart contract execution within the Ethereum Virtual Machine (EVM), after processing n transactions, the average execution time and the maximum transaction time are defined as follows:

where the additional term is the time required to execute the contract on the virtual machine.

After n transactions have been executed, the storage increment resulting from modifications to the Merkle Patricia Trie (MPT) can be computed as follows:

where the terms denote, respectively, the size of a filled branch node, the size of the end (leaf) node, the storage space occupied by the account state, and the total size of the storage tries of all accounts. Including the size of the transaction itself, the final formula becomes:

The authors conduct experiments to validate the proposed performance prediction method. Three key metrics are collected during the experiment: the height of the State Trie in the Ethereum “World State,” the transaction execution time, and the storage utilization after each transaction. The experimental setup involves the deployment of a single test node, with each transaction encapsulated in an individual block to eliminate the impact of Ethereum’s caching mechanisms on performance measurements. A smart contract with minimal logic is utilized, and one million transactions are executed to ensure sufficient data for analysis.

Based on the empirical data obtained from the conducted experiment, the following conclusions were drawn:

The actual heights of the State Trie did not exceed the values predicted by Equation (1). The accuracy of the predictive model improves further when the empirical relationship between the LevelDB read time t and the volume of stored data is incorporated, whether obtained from experimental observations or from theoretical estimation.

Under the one-transaction-per-block setting, the observed storage growth in the test environment closely matched the predicted values.

This study is significant for validating the choice of blockchain network architecture in our proposed solution: an Ethereum-based consortium blockchain. HEIs are not profit-oriented organizations; their primary objective is delivering quality education rather than generating financial returns. Promoting their digital transformation through public blockchain platforms (e.g., Ethereum mainnet, Bitcoin), which incur high operational and computational costs due to cryptocurrency incentives and infrastructure maintenance, is therefore economically infeasible.

In contrast, a consortium Ethereum network allows for accurate predictions of blockchain performance and storage utilization under clearly defined and controlled conditions. The ability to estimate transaction scale supports the design of smart contracts that minimize performance and storage overhead. As a result, optimal data distribution between the State Trie and Storage Trie can be achieved, enabling a performance–storage trade-off that significantly lowers operational costs.

3.4. Key Performance Indicators for Network, Dapp and Infrastructure

3.4.1. Comparing Indicator Results with Benchmark Values

In addition to the parameters outlined above, we compared our experimental results with benchmark indicators commonly used in blockchain technology to assess the network’s performance, underlying infrastructure, and decentralized applications. The comparative analysis revealed that not all optimal or recommended values were achieved during the experiments. This discrepancy can be attributed to the platform’s specific operational characteristics and the predefined input parameters that govern its operation.

The analysis of the observed performance indicators, summarized in Table 4, shows a generally stable and operationally viable blockchain environment tailored for academic credential management within a private network. Several network-level metrics approach or meet their target thresholds. For instance, block propagation time was approximately 856 milliseconds, within the optimal range (≤1 s). The fork/error rate remained at 0% owing to the single-validator configuration, which provides deterministic finality without chain splits.

However, block finalization and transaction response times notably exceeded typical enterprise-grade application benchmarks. The 15 s interval, predetermined in the genesis block for block creation, directly impacted transaction throughput (TPS), limiting it to 8.26 transactions per second, which is well below the ≥100 TPS target considered optimal for high-load systems. This is acceptable within the current use case, where transaction volumes are low and periodic, but it may pose scalability challenges with increased usage.
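The link between the block interval and throughput is simple arithmetic: sustained throughput equals the number of transactions per block divided by the block interval. The sketch below assumes only that relationship; it back-calculates the per-block load implied by the measured 8.26 TPS at a 15 s interval and the per-block capacity that a 100 TPS target would require at the same interval. The function names are illustrative.

```python
def tps(txs_per_block: float, block_interval_s: float) -> float:
    """Sustained throughput for a given block load and block interval."""
    return txs_per_block / block_interval_s


def txs_per_block_for(target_tps: float, block_interval_s: float) -> float:
    """Transactions each block must carry to sustain `target_tps`."""
    return target_tps * block_interval_s


if __name__ == "__main__":
    print(round(txs_per_block_for(8.26, 15), 1))  # 123.9 transactions per block
    print(txs_per_block_for(100, 15))             # 1500 transactions per block
    print(round(tps(123.9, 5), 2))                # 24.78 TPS if the interval were 5 s
```

The last line illustrates that shortening the interval raises throughput only if blocks continue to fill to the same level, which in turn depends on the block gas limit and on demand.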

From an application performance standpoint, smart contract code coverage (90%) and gas utilization (80–90%) indicate a well-optimized deployment. However, gas utilization exceeds the recommended 50–70% range by 10–20 percentage points, suggesting a risk of congestion in more intensive usage scenarios. Interface uptime and monitoring coverage reached 99.9% and 100%, respectively, reflecting a mature infrastructure setup with strong observability through tools such as Zabbix and Blockscout.
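Assuming gas utilization is measured per block as gas used relative to the block gas limit (the `gasUsed` and `gasLimit` fields of an Ethereum block header), a monitoring job could compute it and compare it with the recommended 50–70% band as sketched below. The function names and the band-classification logic are illustrative assumptions, not the tooling used in the experiments.

```python
from typing import Iterable, Tuple


def utilization_pct(gas_used: int, gas_limit: int) -> float:
    """Gas used as a percentage of the gas limit."""
    if gas_limit <= 0:
        raise ValueError("gas_limit must be positive")
    return 100.0 * gas_used / gas_limit


def classify(pct: float, low: float = 50.0, high: float = 70.0) -> str:
    """Compare a utilization value with a recommended band."""
    if pct < low:
        return "below band"
    if pct > high:
        return "above band"
    return "within band"


def mean_utilization(blocks: Iterable[Tuple[int, int]]) -> float:
    """Average utilization over (gas_used, gas_limit) pairs."""
    values = [utilization_pct(used, limit) for used, limit in blocks]
    if not values:
        raise ValueError("no blocks supplied")
    return sum(values) / len(values)


if __name__ == "__main__":
    pct = utilization_pct(8_500_000, 10_000_000)
    print(pct, classify(pct))  # 85.0 above band
```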

Strategically, the system demonstrated full validator engagement (100%) and maintained a low-complexity upgrade cycle, with updates completed in 1–2 h. This highlights the environment’s maintainability and operational readiness, supporting its use in semi-critical academic and cross-institutional scenarios.

In conclusion, the experimental results affirm the system’s viability for its intended purpose, highlighting performance bottlenecks related to design-time parameters. These factors should be considered in future iterations that require greater scalability or responsiveness.

3.4.2. On-Chain Storage Growth Dynamic

Table 5 shows the monthly growth dynamics of blockchain storage, differentiating between empty and non-empty block sizes. Data from January and February are excluded from percentage growth calculations due to the initial network bootstrapping phase. A significant increase in storage consumption is noted in March (+43.2%) and April (+72.63%), signaling an upward trend in data usage as transaction activity intensifies.

The reported figures are split into the size contributions from empty blocks—typically produced to maintain the block generation schedule—and non-empty blocks, which contain actual transaction data. The combined monthly storage increments reflect both network protocol characteristics (e.g., block time, block size limit) and user behavior (e.g., transaction frequency, contract interaction density).
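The split between empty and non-empty blocks, and the month-over-month percentages, can be reproduced with a few lines of arithmetic. This is a sketch under stated assumptions: the block interval is fixed, every interval produces a block (so any interval without transactions yields an empty block), and the per-block sizes are inputs to be measured on the actual network. No values from Table 5 are used; the numbers in the usage line are hypothetical.

```python
from typing import Dict

SECONDS_PER_DAY = 86_400


def monthly_storage_bytes(days: int, interval_s: float, tx_blocks: int,
                          empty_block_bytes: int,
                          avg_tx_block_bytes: int) -> Dict[str, int]:
    """Split one month's on-chain storage growth into empty/non-empty blocks.

    Assumes a block is produced every `interval_s` seconds without gaps.
    """
    total_blocks = int(days * SECONDS_PER_DAY // interval_s)
    if tx_blocks > total_blocks:
        raise ValueError("more non-empty blocks than block slots")
    empty_blocks = total_blocks - tx_blocks
    return {
        "blocks": total_blocks,
        "empty_bytes": empty_blocks * empty_block_bytes,
        "non_empty_bytes": tx_blocks * avg_tx_block_bytes,
    }


def growth_pct(previous: float, current: float) -> float:
    """Percentage change between two consecutive monthly values."""
    return 100.0 * (current - previous) / previous


if __name__ == "__main__":
    # Hypothetical inputs; replace with sizes measured on the network.
    print(monthly_storage_bytes(days=31, interval_s=15, tx_blocks=2_000,
                                empty_block_bytes=600, avg_tx_block_bytes=3_000))
```

With a 15 s interval, a 30-day month contains 172,800 block slots, so even modest empty-block sizes contribute a steady baseline independent of user activity.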

This progression underscores the importance of proactive storage resource planning in permissioned blockchain networks. The predictable yet cumulative nature of block data calls for efficient indexing, compression, and archival strategies to ensure long-term operability, particularly in domains such as higher education, where transaction frequency may follow academic cycles.

3.4.3. Impact of Transaction Volume per Block

The volume of transactions stored within a single blockchain block is crucial for determining the system’s overall performance, scalability, and responsiveness. A high transaction density per block can influence not only execution efficiency but also the interaction between system components and the retrieval of specific transaction records.

One of the immediate consequences of an increased transaction volume is the rise in transaction processing latency. Since each transaction in a block must be validated and executed independently, the overall computational burden grows proportionally with the number of transactions. This added complexity can slow down block verification, leading to delays in block propagation across the network and reduced system responsiveness, particularly in high-throughput environments.

Besides processing latency, a high transaction load affects transaction search efficiency. Specifically, when queries target certain attributes like transaction hashes, performance can decline if suitable indexing mechanisms are not in place. Without indexing, search operations within a block may involve linear time complexity, causing delays as each transaction must be scanned sequentially. This problem becomes more noticeable as the number of transactions per block increases.
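The difference between scanning and indexing can be shown with a toy example. The sketch assumes each block is a list of transaction dictionaries with a `hash` field (an illustrative layout, not a node's internal format): the linear search inspects transactions one by one across blocks, whereas a hash-keyed index, built once, answers each lookup in expected constant time.

```python
from typing import Dict, List, Optional, Tuple

Tx = Dict[str, str]
Block = List[Tx]


def find_linear(blocks: List[Block], tx_hash: str) -> Optional[Tuple[int, int]]:
    """O(total transactions): scan every block and transaction in order."""
    for b, block in enumerate(blocks):
        for i, tx in enumerate(block):
            if tx["hash"] == tx_hash:
                return b, i
    return None


def build_index(blocks: List[Block]) -> Dict[str, Tuple[int, int]]:
    """Map each transaction hash to (block number, position in block)."""
    return {tx["hash"]: (b, i)
            for b, block in enumerate(blocks)
            for i, tx in enumerate(block)}


if __name__ == "__main__":
    blocks = [[{"hash": f"0x{b:02x}{i:02x}"} for i in range(100)] for b in range(50)]
    index = build_index(blocks)
    print(find_linear(blocks, "0x3142"), index.get("0x3142"))  # (49, 66) (49, 66)
```

The index trades extra storage and an update on every new block for fast lookups, which is the same trade-off explorers and databases make when indexing by transaction hash.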

Scalability is another area negatively affected by large block sizes. Higher transaction volumes require greater computational resources to ensure timely validation and execution. At the same time, storage needs increase, as blocks with dense transaction payloads take up more disk space, putting further pressure on nodes throughout the network. The necessity to store the entire blockchain state worsens memory and storage limitations, especially for nodes operating with limited resources.

Furthermore, the propagation of large blocks across the network may be delayed due to increased data volume, especially in consensus mechanisms like Proof of Work (PoW) or Proof of Stake (PoS), where every node must verify each block. This latency can impede synchronization among full nodes, ultimately impacting consensus finality and transaction finalization timeframes.

State rollback and system recovery also become increasingly complex when managing large transaction sets per block. In the event of an error or failure within a transaction, the rollback mechanism must consider numerous state transitions, making error handling and system restoration more computationally intensive and error-prone.

To address these challenges, several optimization strategies can be employed. Transaction indexing can significantly enhance query efficiency, particularly by transaction hash or relevant metadata. Moreover, distributing transaction loads across multiple smaller blocks may improve scalability and reduce computational strain, depending on the consensus protocol used. Storage optimization techniques, such as data compression, can reduce disk usage, while parallel processing of transactions, if supported by the blockchain architecture, can speed up block validation and boost system throughput.

In summary, storing numerous transactions in a block can negatively affect search and processing efficiency, as well as overall system performance if not properly optimized. However, these effects can be alleviated through effective indexing, storage optimization, and architectural enhancements.

3.4.4. Transaction Gas Limit

Efficient management of transaction gas consumption is essential for optimizing the performance and reliability of a blockchain-based system. One key strategy is to cap the gas usage of individual transactions, for example at no more than 80% of the estimated limit. This prevents resource exhaustion and helps maintain predictable system behavior.

Three primary control layers are commonly used:

Sender-Level Control (Off-chain): The sender manually restricts the gas limit during transaction formation, usually setting it to 80% of the estimated gas. Although this method provides strict control, it may result in transaction failures if the actual gas consumption exceeds the set threshold.

Infrastructure-Level Control (Middleware/API): Intermediate layers such as RPC endpoints or middleware can dynamically estimate gas values and reject transactions deemed too resource-intensive. This enables real-time filtering and enforces system-wide gas usage policies.

Monitoring and Post-Factum Analysis: Tools like Blockscout can perform SQL-based analytics on recent transactions to evaluate gas consumption patterns. Although this method does not prevent overuse in real time, it provides valuable insights that can inform infrastructure tuning and smart contract optimization.
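The infrastructure-level check and the post-factum analysis described above can be sketched together. This assumes the 80% threshold is applied to a reference limit chosen by the operator (for example, the block gas limit), that the gas estimate comes from the node (e.g., via `eth_estimateGas`), and that the post-factum step works on (gas used, gas limit) pairs exported from an explorer rather than on any specific Blockscout schema. The function names are hypothetical.

```python
import math
from typing import Iterable, Tuple

THRESHOLD = 0.8  # policy ratio from the text, treated here as an assumption


def gas_cap(reference_limit: int, ratio: float = THRESHOLD) -> int:
    """Largest amount of gas a single transaction may request under the policy."""
    return math.floor(reference_limit * ratio)


def admit(estimated_gas: int, reference_limit: int, ratio: float = THRESHOLD) -> bool:
    """Middleware-style check: reject transactions whose estimate exceeds the cap."""
    return estimated_gas <= gas_cap(reference_limit, ratio)


def share_over_threshold(txs: Iterable[Tuple[int, int]],
                         ratio: float = THRESHOLD) -> float:
    """Post-factum: fraction of (gas_used, gas_limit) pairs above `ratio` of their limit."""
    pairs = list(txs)
    if not pairs:
        return 0.0
    over = sum(1 for used, limit in pairs if used > ratio * limit)
    return over / len(pairs)


if __name__ == "__main__":
    print(gas_cap(30_000_000))            # 24000000
    print(admit(25_000_000, 30_000_000))  # False
    print(share_over_threshold([(50_000, 100_000), (95_000, 100_000)]))  # 0.5
```

The admission check enforces the policy before submission, while the post-factum share tracks how often deployed contracts approach their limits, which can guide contract optimization or a revised threshold.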

Combining these approaches creates a comprehensive gas management strategy, ensuring that transaction execution stays within acceptable resource limits. This enhances system stability, improves user experience, and supports the scalability of the underlying decentralized application.