Abstract

Small and medium-sized enterprises (SMEs) drive economic growth but face barriers in adopting AI for creative digital marketing, particularly in underserved U.S. markets. This study investigates an AI-driven unified advisory platform to enable strategic digital marketing in these communities. Integrating modules such as MarketRadar (customer insights, benchmarking) with StrategicCoaching and ComplianceTools, it supports data-driven campaign design, pricing, and engagement. Using mixed methods, we interviewed 13 SME owners/managers in Houston’s underserved neighborhoods and surveyed 172 platform users across three U.S. states. Results show that SMEs using multiple modules achieved higher customer acquisition and revenue than standalone users, with qualitative insights revealing creative repositioning and refinement despite limited budgets. Trust elements like PeerBenchmarks and ComplianceAlerts boosted uptake. Our study advances digital marketing literature by evidencing how AI platforms and cross-module collaboration catalyze innovation, decision-making, and sustainable growth in U.S. contexts, with caution for broader extrapolation. It offers recommendations for policymakers and SaaS providers on inclusive transformation in resource-constrained settings.

1. Introduction

Small and medium-sized enterprises (SMEs) drive inclusive economic development, accounting for 90% of global firms and over 50% of employment [1], including 61% of U.S. net new jobs from 1995–2023 [2]. In underserved regions, SMEs face barriers like limited budgets and fragmented tools [3]. AI in marketing enables targeting and optimization [4,5], but adoption is uneven for SMEs [3,6,7].

Digital marketing is a vital frontier for SME competitiveness, yet effective implementation remains uneven. Most commercial digital marketing platforms are designed for large enterprises with in-house analytics teams and presume a level of analytics and data-operations maturity that many SMEs lack [4,8]. As a result, SMEs have limited ability to interpret market data, refine campaigns, or allocate resources strategically. The absence of accessible, integrated marketing intelligence tools exacerbates existing inequities, particularly among minority- and women-owned enterprises in disadvantaged urban and rural areas [9]. Recent evidence catalogs SME-specific AI adoption barriers, including capability gaps, perceived risk, and resource constraints [6,7], which underscores the need for staged roadmaps in digitally lagging regions. We next synthesize evidence on SME AI adoption, integrated marketing analytics, and governance to motivate our compound-benefits framework.

AI is viewed as transformative for SMEs, enabling automation and scalable decisions [10]. Yet adoption is hampered by financial constraints, digital literacy gaps, and regulatory uncertainty [11], highlighting the need for ethical, accessible solutions.

This paper investigates a novel AI-powered advisory platform designed to support marketing strategy development in capital-scarce SME environments. The platform integrates modular components including:

- MarketRadar: real-time customer sentiment and competitor benchmarking;

- StrategicCoaching: campaign design and pricing guidance;

- ComplianceAlerts: risk mitigation for legal and regulatory adherence;

- PeerBenchmarking: contextualized performance analytics to foster trust and motivation.

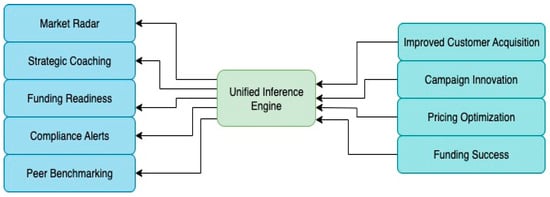

Unlike siloed applications, the platform employs a unified inference engine to generate compound benefits: synergistic improvements in marketing outcomes that arise from coordinated module interactions. This concept draws from strategic management theories such as the Resource-Based View (RBV) and Dynamic Capabilities Theory (DCT), emphasizing how orchestrated toolsets can simulate sophisticated decision processes within constrained firms.

To assess the platform’s real-world impact, we implemented a two-phase mixed-methods design: (1) qualitative interviews and usage log analysis from 13 SMEs across diverse industries in Houston’s historically underserved communities; and (2) a quantitative survey of 172 SMEs across Texas, Louisiana, and Mississippi. Our objectives were to evaluate platform-driven innovation in marketing strategy, measure perceived value, and understand contextual factors shaping adoption and impact.

Findings reveal that SMEs using multiple platform modules experienced greater performance improvements, particularly in campaign innovation, pricing agility, and customer engagement, compared to those using standalone tools. Furthermore, adoption was often catalyzed by ecosystem actors such as local incubators, Community Development Financial Institutions (CDFIs), and business chambers. However, persistent digital readiness gaps, especially among older entrepreneurs and rural users, limited some firms’ ability to fully leverage the platform’s capabilities.

This research offers three novel contributions to the literature on AI adoption, SME strategy, and inclusive innovation:

- Conceptual: Introduces and conceptualizes a new framework, compound benefits, to explain synergistic performance outcomes resulting from cross-module AI engagement. This extends digital innovation literature by modeling how modular AI design improves marketing decision-making for SMEs.

- Empirical: Provides mixed-methods evidence on AI advisory tool adoption and outcomes in underserved U.S. communities. In contrast to most AI adoption studies centered on large firms or tech-native enterprises, this research foregrounds capital-constrained U.S. SMEs, using real-world case studies and validated survey instruments to uncover context-sensitive drivers of adoption, with findings most applicable to similar U.S. contexts and caution for broader generalization.

- Practical: Offers practice-driven innovation for inclusive growth, as well as design and policy recommendations for scalable, sustainable digital marketing platforms that align with the U.N. Sustainable Development Goals (SDGs), particularly Goals 8 (Decent Work and Economic Growth) and 9 (Industry, Innovation, and Infrastructure). The study bridges academic inquiry with practitioner-led innovation. It presents a scalable, field-tested model for democratizing access to digital strategy, policy compliance, and campaign coaching for SMEs.

Together, these contributions address a significant research gap by uniting the traditionally fragmented discourses of digital transformation, AI ethics, and SME support into a coherent platform-based innovation agenda for inclusive economic development. By documenting how an ethically designed, modular AI platform can support creative, data-driven marketing among underserved SMEs, this paper contributes to emerging scholarship at the intersection of AI, entrepreneurship, and sustainable digital transformation.

Beyond firm-level performance, unified AI advisory platforms can advance sustainable digital marketing policy in underserved markets by diffusing compliant practices (clear, conspicuous disclosures per the FTC Endorsement Guides [12,13] and lawful, transparent processing under the GDPR [14]) while widening access to analytics-based consumer insight without predatory data use. Adoption also hinges on consumer trust: prior work shows algorithm aversion after visible errors [15], domain-sensitive resistance in high-stakes contexts [16], and algorithm appreciation when tasks are perceived as objective and feedback is credible [17]. Designing for transparency and explanation, aligned with the AI Bill of Rights [18], can mitigate these frictions. This framing is consistent with Sustainability studies that link AI adoption to sustainable innovation pathways and inclusion agendas [19,20]. In SDG terms, inclusive uptake links not only to SDG 8 and SDG 9 but also to SDG 10 (Reduced Inequalities) by lowering capability and cost barriers for minority- and women-owned SMEs in digitally lagging regions. Empirical links between finance, AI adoption, and green innovation in SME settings reinforce these SDG pathways [20,21,22]. Sectoral evidence also shows that platformization can advance SDG outcomes for SMEs by widening digital market access and responsible practices [23].

2. Literature Review and Theoretical Foundations

This literature review is structured around seven interrelated themes critical to understanding the dynamics of AI-powered marketing innovation for SMEs: (1) AI adoption and marketing innovation in SMEs, (2) integrated platforms and predictive analytics, (3) adoption frameworks in resource-constrained contexts, (4) stakeholder engagement and data governance, (5) contextual challenges in underserved markets, (6) barriers, regional disparities, and ethical considerations, and (7) the research gap this study addresses.

2.1. AI Adoption and Marketing Innovation in SMEs

AI technologies like machine learning and natural language processing transform SME marketing and pricing [24]. While they enable cost reductions, evidence on compound effects from integrated use is scarce. In export manufacturing, technological readiness and AI adoption are positively associated with sustainable performance [25].

Despite their promise, AI adoption among SMEs remains fragmented, reflecting structural and capability barriers that recent reviews document in detail [6,7]. High costs, technical complexity, and limited internal capacity often restrict adoption to single-function tools, preventing firms from realizing the full strategic value of integrated AI ecosystems [3]. Current studies often focus on larger firms or isolated applications, underscoring the need to evaluate AI’s broader impact through unified platforms that support end-to-end marketing and advisory functions [26].

2.2. Integrated Platforms and Predictive Analytics

Predictive analytics are at the core of many AI platforms and are central to strategic planning. They enable SMEs to forecast customer behavior, optimize marketing timing, and tailor offers based on purchasing patterns [27]. Financial modules embedded within analytics engines improve campaign budgeting and enhance returns on ad spend [28]. Yet, predictive analytics remain underutilized due to SMEs’ limited access to clean, comprehensive datasets.

Studies rarely examine how analytics perform when integrated across multiple business functions (e.g., marketing, finance, compliance), especially in resource-constrained environments [29]. Our study addresses this gap by exploring how an integrated AI platform delivers predictive insights across interrelated marketing domains.

2.3. Adoption Frameworks in Resource-Constrained Contexts

Frameworks such as the Technology–Organization–Environment (TOE) model [30] and the Diffusion of Innovations (DOI) theory [31] are commonly applied to explain technology adoption. However, these frameworks often overlook the nuanced challenges faced by SMEs in underserved regions, such as infrastructural deficits, limited institutional support, and risk aversion.

Recent research suggests that combining TOE and DOI frameworks may offer a more holistic approach, especially when paired with dynamic capability theory to emphasize iterative learning and capability development [24,26]. Modular SaaS platforms offer SMEs the flexibility to adopt AI incrementally, reducing the risk of disruption [32]. This study builds on such insights by examining how platform modularity and community-based enablers (e.g., CDFIs, incubators) support phased adoption and usage depth. Complementing these views, empirical evidence supports that AI adoption catalyzes sustainable innovation in SMEs when resource constraints are addressed [19].

2.4. Stakeholder Engagement and Data Governance

Effective AI adoption requires active stakeholder involvement. Co-designing digital tools with SME owners, employees, and intermediaries ensures alignment with practical workflows [33]. Studies have shown that when SMEs participate in feature prioritization, AI tools gain legitimacy and usability, reducing resistance to change [11]. Roadmap-style transformation that phases governance, capability building, and analytics use has been proposed specifically for SMEs [7].

Similarly, effective marketing analytics depends on data-governance decision rights, quality controls, and stewardship [34,35]. SMEs often operate with fragmented or inaccurate data, undermining AI outputs [36]. Cloud-based systems that consolidate customer records and automate compliance checks are essential for campaign accuracy and regulatory adherence [37].

2.5. Contextual Challenges in Underserved Markets

While much of the literature on AI adoption and SME digital marketing has focused on general drivers and barriers, comparatively fewer studies have examined the unique contextual challenges faced by SMEs in underserved markets. These challenges are critical for understanding how modular AI platforms may operate differently across socioeconomic settings.

First, digital infrastructure deficits remain a significant barrier to technology adoption in many underserved regions. According to OECD [38], rural broadband access lags behind urban availability in both coverage and speed, creating structural inequalities in digital participation. In the U.S., this digital divide disproportionately affects low-income and minority-owned SMEs operating in rural or semi-urban areas, limiting their ability to adopt cloud-based and AI-enabled services. Similar findings are echoed in OECD’s Emerging Divides in AI Adoption report [10], which underscores that infrastructure gaps exacerbate disparities in digital readiness among small firms.

Second, cultural diversity and social capital dynamics play a central role in shaping SME innovation strategies in underserved contexts. Jamil et al. [25] highlight that minority-owned SMEs often leverage social capital and community trust networks to compensate for limited financial and digital resources. However, these networks can be both enabling and constraining: while they facilitate peer learning and informal support, they may also restrict access to external innovation ecosystems. Popa et al. [19] similarly emphasize that cultural diversity can influence perceptions of risk and trust in AI technologies, creating heterogeneity in adoption trajectories.

Third, institutional and organizational deficits add further complexity. Farmanesh et al. [20] argue that SMEs in resource-constrained environments often lack intermediary organizations (such as chambers of commerce or incubators) with sufficient capacity to mediate digital transformation. This institutional gap makes it difficult for SMEs to access advisory support, funding pathways, or policy initiatives, thereby amplifying the need for integrated platforms that combine multiple support modules.

Taken together, these contextual challenges demonstrate that the barriers to AI adoption in underserved markets go beyond generic SME constraints such as funding shortages or technical skills. They include infrastructure inequalities, cultural heterogeneity, and institutional deficits that are rarely foregrounded in mainstream digital marketing scholarship. By situating our study within this context, we highlight its unique contribution: showing how cross-module AI collaboration can mitigate these structural challenges and foster inclusive digital transformation in SMEs, with findings most applicable to U.S. underserved regions and caution for broader generalization. Building on these contextual challenges, our study employs a mixed-methods approach to empirically test the compound benefits framework in underserved U.S. SMEs.

2.6. Barriers, Regional Disparities, and Ethical Considerations

Numerous barriers hinder AI adoption, particularly in capital-constrained contexts. These include digital illiteracy, cultural resistance, infrastructure limitations, and concerns over job displacement [11]. Regulatory challenges, such as compliance with GDPR or FTC advertising rules, are particularly burdensome for SMEs lacking in-house legal expertise [39].

Moreover, regional disparities exacerbate adoption challenges. SMEs in rural or minority-majority areas face connectivity issues and limited digital ecosystems, while those in urban centers contend with fragmented vendor offerings and decision fatigue [10]. For SMEs pursuing sustainability outcomes, AI adoption intersects with green-innovation capability and financing frictions [20]. Ethical issues, such as algorithmic bias, surveillance concerns, and workforce displacement, remain underexplored in SME contexts, despite their disproportionate impact on underserved communities.

2.7. Research Gap and Study Contribution

While AI’s potential for SMEs has been well-documented, existing research disproportionately emphasizes siloed applications and large-firm case studies. There is limited empirical analysis of integrated AI platforms that span marketing strategy, peer benchmarking, regulatory compliance, and business coaching, especially for SMEs in economically distressed areas.

This study contributes by addressing five key gaps:

- Lack of research on modularly integrated AI platforms and their synergistic impacts (“compound benefits”);

- Insufficient understanding of SME adoption dynamics in underserved U.S. communities;

- Underexplored role of institutional enablers like CDFIs and incubators in mediating platform uptake;

- Scarcity of stakeholder-inclusive design approaches for AI-enabled marketing innovation;

- Weak integration of ethical and socioeconomic considerations in SME-focused AI systems.

Through a mixed-methods approach grounded in fieldwork, this study offers new empirical evidence and a scalable conceptual model to guide ethical, effective AI adoption among small businesses operating in resource-constrained environments.

2.8. Theoretical Foundations

This study is anchored in the RBV and DCT to understand how SMEs in resource-constrained environments utilize AI to drive strategic marketing innovation. According to RBV, firms achieve competitive advantage by deploying resources that are valuable, rare, inimitable, and non-substitutable [40]. However, SMEs in underserved markets often lack such resources, particularly in the form of specialized marketing expertise, real-time analytics, and regulatory support systems. AI-powered advisory platforms can serve as surrogate strategic resources by embedding these capabilities within modular, user-friendly interfaces.

DCT builds upon RBV by emphasizing the importance of adaptability. Firms must not only possess valuable resources but also be able to integrate, reconfigure, and leverage these resources dynamically in response to shifting market conditions [41]. From this perspective, modular AI platforms that offer services such as MarketRadar, StrategicCoaching, ComplianceAlerts, and PeerBenchmarking function as dynamic capabilities. These tools enable SMEs to iteratively adjust pricing, reposition campaigns, and fine-tune customer engagement strategies based on evolving competitive and consumer insights. These strands jointly motivate a modular, platform-centric theory of change for SME marketing.

We propose the Compound Benefits Framework for AI-Driven SME Marketing Innovation, visualized in Figure 1. In this framework, five AI-powered input modules (MarketRadar, StrategicCoaching, FundingReadiness, ComplianceAlerts, and PeerBenchmarking) interact via a Unified Inference Engine that synthesizes data and generates real-time, context-aware recommendations. Rather than operating in isolation, the modules reinforce one another, creating compound benefits that surpass the sum of their parts. These include improved customer acquisition, pricing optimization, campaign creativity, and regulatory alignment.

Figure 1.

Conceptual framework: compound benefits model for AI-driven SME marketing innovation.

Ethical and Sustainable Dimensions

To ensure the Compound Benefits Framework promotes not only efficiency but also equitable and long-term outcomes, it incorporates ethical considerations grounded in the White House Office of Science and Technology Policy’s Blueprint for an AI Bill of Rights [18]. The blueprint highlights five core principles, including Algorithmic Discrimination Protections, which call for bias mitigation through pre-deployment testing, risk identification, ongoing monitoring, and equitable design to prevent discriminatory impacts based on protected characteristics (e.g., race, ethnicity, gender, socioeconomic status). Within this framework, bias mitigation is operationalized in modules such as MarketRadar and ComplianceAlerts, which use explainable AI (XAI) techniques to flag potential algorithmic biases in customer insights or benchmarking data, helping ensure fair treatment for underserved SMEs (e.g., minority-owned businesses in resource-constrained areas).

These ethical safeguards contribute directly to sustainability: they foster social inclusivity by reducing digital divides and promoting equitable access to innovation, and they also support its environmental and economic dimensions. For instance, optimized marketing through compound benefits minimizes wasteful campaigns, aligning with efficient resource use and reduced carbon footprints in digital operations. This integration advances the U.N. Sustainable Development Goals (SDGs), particularly SDG 8 (Decent Work and Economic Growth) by enabling job-creating innovation in marginalized communities, and SDG 9 (Industry, Innovation, and Infrastructure) by democratizing AI tools for resilient, inclusive ecosystems. By prioritizing ethical design, the framework mitigates risks like workforce displacement or data privacy breaches, ensuring AI-driven marketing yields sustainable, positive societal impacts rather than exacerbating inequalities.

2.9. Research Objectives and Questions

This research aims to evaluate the efficacy of AI-powered advisory platforms in supporting marketing innovation and strategic growth among SMEs, with particular emphasis on capital-constrained and underserved communities in the United States.

The study is grounded in fieldwork that informed the development of a cloud-based artificial intelligence system for small business advisory, funding assessment, and market detection in resource-constrained environments. The platform was developed to bridge advisory gaps through accessible digital intelligence.

Framed by the RBV and DCT, and complemented by insights from the TOE framework and DOI theory [30,31], this study explores how integrated digital solutions can serve as both organizational resources and enablers of dynamic response capability. Specifically, the study is structured around four core objectives:

- To assess how core platform modules, such as MarketRadar, coaching, benchmarking, and compliance, enhance SMEs’ marketing capabilities in resource-constrained settings;

- To investigate whether synergistic, cross-module use produces compound benefits beyond the sum of individual tools;

- To evaluate how institutional enablers, including CDFIs and local incubators, influence adoption and engagement;

- To understand how internal SME factors, such as trust in AI, digital literacy, and perceived utility, shape adoption outcomes.

These objectives guide the following research questions:

Primary Research Question (PRQ): How do integrated AI-powered advisory platforms influence marketing strategy innovation and business performance among SMEs in resource-constrained U.S. markets, contributing to sustainable and inclusive growth?

Supporting Research Questions (RQs):

RQ1: What compound benefits arise from SMEs’ cross-module use of MarketRadar, coaching, benchmarking, and compliance tools, and how do these contribute to sustainable marketing innovation and long-term economic resilience?

RQ2: What adoption barriers do SMEs face, and how do institutional enablers such as incubators and CDFIs support ethical and sustainable platform uptake in underserved contexts?

RQ3: How do factors such as trust, digital literacy, and perceived platform value shape SMEs’ willingness and capacity to adopt AI-enabled marketing tools for sustainable and inclusive business development?

Through this integrative theoretical lens, the study contributes to literature on digital transformation, marketing innovation, and inclusive AI adoption by uncovering how embedded intelligence within modular advisory tools can foster sustainable growth in marginalized SME ecosystems.

3. Materials and Methods

This study employed a sequential explanatory mixed-methods design to evaluate the adoption, use, and performance impact of an integrated AI advisory platform designed for SMEs in capital-constrained U.S. markets. The platform integrates modules for Market Analysis, Strategic Coaching, Compliance Alerts, Funding Readiness, and Peer Benchmarking. The dual-phase approach enabled both an in-depth exploration of SME behaviors (qualitative phase) and statistical generalization of usage patterns and outcomes (quantitative phase). Descriptive statistics (Appendix A Table A1) and all regression variables derive from the authors’ survey of 172 SMEs (Qualtrics, Q1-2025) and platform usage logs from the Houston pilot.

3.1. Research Design and Rationale

A two-phase sequential explanatory design was selected to answer the primary and supporting research questions. Phase 1 employed qualitative case studies to investigate experiential, organizational, and contextual factors affecting platform adoption and impact. Interview quotations are from author-conducted, semi-structured interviews; anonymized transcript IDs (e.g., Owner #07) are used throughout. Phase 2 utilized a structured survey to test hypotheses derived from Phase 1 and to quantify the extent of “compound benefits” generated by cross-module adoption.

This triangulated approach enhances construct validity by integrating multiple data types and strengthens ecological validity by incorporating user voice into both the design and interpretation stages. The design is especially suited to digital innovation research, where user engagement, trust, and environmental contingencies are often as important as technical functionality [42]. The mixed-methods integration ensures that both strategic perceptions and empirical performance impacts are captured in relation to the research questions. Figure 2 illustrates the two-phase mixed-methods approach: from qualitative case studies to quantitative survey validation.

Figure 2.

Sequential research design flowchart.

The semi-structured interview protocol is provided in Appendix B (Table A3).

3.1.1. Qualitative Case Studies

Sample and setting. Thirteen (13) SMEs were purposively selected for the case study phase. These firms were located in capital-constrained regions of the United States, primarily within underserved neighborhoods in the Houston metropolitan area. The selection criteria ensured diversity in business size (1–50 employees), ownership structure (including minority- and women-owned enterprises), and industry sector (e.g., retail, logistics, services, hospitality, agritech).

Data Collection. Three sources of qualitative data were used.

- Semi-structured Interviews: 68 interviews were conducted across two time points (pre- and six months post-adoption), with business owners (n = 26), managers (n = 30), and frontline staff (n = 12).

- Document Review: Internal business plans, SBA loan applications, grant documents, and operational policies were analyzed to contextualize strategic decision-making and constraints.

- Usage Logs: Secure API logs from the AI platform recorded module access frequency, duration, and interaction sequences, enabling digital behavioral analysis.
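The kind of behavioral summary that such usage logs allow can be sketched as follows. This is a minimal illustration only: the record layout `(firm_id, module, minutes)`, the firm IDs, and all values are hypothetical and do not reflect the platform's actual log schema.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical log records: (firm_id, module, session_minutes).
# The schema and the values are illustrative assumptions, not study data.
logs = [
    ("F01", "MarketRadar", 12.0),
    ("F01", "StrategicCoaching", 30.5),
    ("F01", "MarketRadar", 8.0),
    ("F02", "ComplianceAlerts", 5.0),
    ("F03", "MarketRadar", 20.0),
    ("F03", "PeerBenchmarking", 15.0),
    ("F03", "StrategicCoaching", 10.0),
]

def summarize(records):
    """Return per-firm access counts, per-firm total minutes, and module-pair co-use counts."""
    access = defaultdict(Counter)   # firm -> module -> number of sessions
    minutes = defaultdict(float)    # firm -> total session minutes
    for firm, module, duration in records:
        access[firm][module] += 1
        minutes[firm] += duration

    # Count how many firms used each pair of modules at least once.
    pairs = Counter()
    for counts in access.values():
        for a, b in combinations(sorted(counts), 2):
            pairs[(a, b)] += 1
    return access, minutes, pairs

access, minutes, pairs = summarize(logs)

# Firms that touched two or more modules (candidate "cross-module" users).
multi_module_firms = sorted(f for f, c in access.items() if len(c) >= 2)
```

A binary "used vs. did not use" indicator per module, as well as access frequencies, can be read directly from `access`.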

Data Analysis. Interview data were transcribed and coded in NVivo 14 using both inductive and deductive techniques. Deductive codes derived from the TOE framework and DOI theory, while inductive codes emerged from the data itself. Coding reliability was assessed using Cohen’s Kappa (κ = 0.87), indicating strong inter-coder agreement.
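For readers unfamiliar with the statistic, Cohen's Kappa compares observed agreement between two coders with the agreement expected by chance. The sketch below uses invented code labels for ten excerpts; it illustrates the calculation and does not reproduce the study's coding data or its reported value.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one categorical code per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of items.")
    n = len(rater_a)

    # Observed agreement: share of items given the same code by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of the raters' marginal proportions, summed over codes.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)

    if p_expected == 1.0:
        raise ValueError("Kappa is undefined when chance agreement equals 1.")
    return (p_observed - p_expected) / (1.0 - p_expected)

# Hypothetical codes for ten interview excerpts (illustrative only).
coder_a = ["trust", "trust", "cost", "cost", "synergy",
           "synergy", "trust", "cost", "synergy", "trust"]
coder_b = ["trust", "trust", "cost", "cost", "synergy",
           "synergy", "trust", "trust", "synergy", "trust"]

kappa = cohens_kappa(coder_a, coder_b)
```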

Triangulation was employed through cross-case synthesis: themes from interviews, documents, and platform usage logs were integrated to identify patterns across firms and to validate emerging findings. Emergent concepts such as “module synergy” were explored using pattern matching and tested against rival explanations. Special attention was given to identifying synergistic effects, that is, instances where combined use of two or more modules generated benefits that exceeded the sum of their parts.

3.1.2. Quantitative Survey and Metrics

Survey Instrument and Administration. A structured online survey was administered to 172 SME users of the AI platform. Participants had used the platform for at least six months. The survey was developed using validated instruments adapted from Arroyabe et al. [24] and PwC [43], and pre-tested with five SMEs for clarity and reliability. It was administered via Qualtrics in Q1 2025. The survey instrument and codebook were developed by the authors; construct means in Table 1 come from this dataset. Variable coding and sources used in the analyses are detailed in Appendix C (Table A4).

Table 1.

Survey constructs and items.

Constructs and Measures. Five key constructs were assessed. Table 1 presents the core constructs, associated items, and standardized measures used in the survey, including perceived utility (PU), adoption intention (AI), and performance outcomes (PO), based on validated scales and adaptations of the Unified Theory of Acceptance and Use of Technology (UTAUT). Adoption-intention items adapt the UTAUT constructs of performance expectancy and effort expectancy [44].

- Perceived Utility (α = 0.89): Likert-scale items measuring each module’s usefulness and relevance.

- Adoption Intention (α = 0.92): Based on UTAUT constructs including performance expectancy and effort expectancy.

- Performance Outcomes: Included both self-reported indicators (e.g., revenue growth, new customers) and validated KPIs.

- Demographics and Firmographics: Firm age, industry, ownership type, staff size, and location.

- Module Engagement Patterns: Binary and frequency-based data (e.g., used vs. did not use; times accessed per module).
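The reliability coefficients (α) reported for the multi-item scales are Cronbach's alpha values. As a reminder of what the coefficient measures, the sketch below computes alpha for a small, invented set of Likert responses; the data are hypothetical and unrelated to the survey.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("Need at least two items and equal-length rows.")

    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]

    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])

    return (k / (k - 1)) * (1.0 - sum(item_variances) / total_variance)

# Hypothetical 5-point Likert responses: 5 respondents x 3 items.
likert = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

alpha = cronbach_alpha(likert)
```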

Statistical Analysis. We generated descriptive statistics in SPSS v29 and estimated two ordinary least squares (OLS) models: a baseline with the five module indicators and an interaction model adding Coaching × Funding. Predictors were mean-centered and standardized (z) before forming the interaction; all variance inflation factors (VIFs) were below 3. The interaction model explained 9.5% of variance (R² = 0.095; Adj. R² = 0.062), with a significant overall test, F(6, 165) ≈ 2.89, p ≈ 0.01; the Akaike (AIC ≈ 1105.68) and Bayesian (BIC ≈ 1124.56) information criteria were lower than for the baseline, indicating a modest improvement in fit (see Appendix A Table A2). Robustness checks included VIF scores and subgroup regressions by firm size and sector. Open-ended responses were coded thematically and integrated into the discussion to provide interpretive depth.
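The reported fit statistics are internally consistent, which can be checked with the standard formulas for adjusted R² and the overall F test. The sketch assumes n = 172 observations and k = 6 predictors (five module indicators plus the interaction term), which matches the reported degrees of freedom F(6, 165).

```python
def adjusted_r2(r2, n, k):
    """Adjusted R-squared for n observations and k predictors (intercept excluded)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)

def overall_f(r2, n, k):
    """Overall F statistic of an OLS model, computed from R-squared."""
    return (r2 / k) / ((1.0 - r2) / (n - k - 1))

# Values taken from the text; k = 6 is inferred from F(6, 165).
n_obs, n_predictors, r_squared = 172, 6, 0.095

adj = adjusted_r2(r_squared, n_obs, n_predictors)
f_stat = overall_f(r_squared, n_obs, n_predictors)
residual_df = n_obs - n_predictors - 1
```

Rounded to the reported precision, these reproduce Adj. R² = 0.062 and F ≈ 2.89 on 165 residual degrees of freedom.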

3.1.3. Addressing Endogeneity and Causal Interpretation

Threats. Our setting is observational. Module adoption (e.g., Coaching, Funding) may correlate with unobserved factors (managerial capability, prior growth expectations, or external support), creating omitted-variable bias; simultaneity is also plausible if strong performance both results from and attracts platform use; and measurement error in self-reported outcomes can attenuate effects. For these reasons, we interpret coefficients as associations, not causal effects [45,46].

Design features that mitigate (but cannot eliminate) bias.

- We use multi-source data (survey + platform logs) to reduce common-method bias and anchor behavioral measures.

- We include observable confounders (firm size, sector, age, owner education, local digital-readiness proxies) and mean-center all predictors before forming Coaching × Funding to reduce multicollinearity.

- We report HC3 heteroskedasticity-robust standard errors and 95% CIs [47,48].

- Where available, we condition on pre-period performance and adoption timing (ever vs. never before Q1-2025) to partially address reverse causality.

- We conduct diagnostics (residual plots; VIF < 3) and negative-control checks (e.g., regressing outcomes on a non-used module) to screen for spurious associations [49].
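To make the HC3 correction concrete, the following is a minimal sketch for the simplest case of one predictor plus an intercept, where the hat-matrix diagonal has a closed form. The function name `hc3_slope_se` and the three data points are illustrative assumptions, not the study's code or data; the reported models have several regressors and were estimated in SPSS.

```python
from math import sqrt

def hc3_slope_se(x, y):
    """Return the OLS slope of y = b0 + b1*x and its HC3 standard error.

    HC3 rescales each squared residual by 1 / (1 - h_ii)^2, where h_ii is the
    leverage of observation i. Assumes no observation has leverage equal to 1.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    b0 = y_bar - b1 * x_bar

    meat = 0.0
    for xi, yi in zip(x, y):
        resid = yi - (b0 + b1 * xi)
        leverage = 1 / n + (xi - x_bar) ** 2 / sxx  # hat-matrix diagonal h_ii
        meat += (xi - x_bar) ** 2 * resid ** 2 / (1 - leverage) ** 2

    # Sandwich form for the slope: sum(w_i) / Sxx^2
    return b1, sqrt(meat / sxx ** 2)

# Hypothetical toy data (not from the survey)
slope, se_hc3 = hc3_slope_se([-1, 0, 1], [0, 0, 3])
```

Because high-leverage observations receive larger weights, HC3 tends to be more conservative than classical standard errors in small samples, which is one reason it suits a sample of this size.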

Planned sensitivity analyses. To gauge how strong unobserved selection would need to be to overturn our inferences, we compute omitted-variable bias bounds using coefficient-stability logic (Oster’s δ) [50]. We also replicate the main model on balanced subsamples by size and sector to test stability. These analyses do not convert associations into causal effects but indicate whether results are fragile to plausible unobservables.

To further enhance the robustness of our findings, we conducted a sensitivity analysis using propensity score matching (PSM) to address potential selection bias between module users and non-users [51]. Covariates such as firm size, revenue, digital literacy levels, and geographic location were included to estimate propensity scores. We employed a 1:1 nearest neighbor matching algorithm without replacement, achieving a balanced sample where standardized mean differences for all covariates were below 0.1 (indicating good balance). This reduced bias in comparing performance outcomes (e.g., customer acquisition rates) between groups, strengthening the reliability of observed correlations. The analysis confirmed that SMEs using multiple modules (e.g., MarketRadar and StrategicCoaching) exhibited significantly higher performance correlations (p < 0.05) than those using standalone tools, aligning with our primary findings (see Table 2). We therefore interpret our findings as correlational evidence of association between cross-module engagement and SME performance, rather than as definitive causal effects. This distinction is emphasized throughout the results and discussion.
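The matching step can be illustrated with a minimal sketch, assuming propensity scores have already been estimated. The function names, the greedy highest-score-first ordering, and the toy scores are illustrative assumptions; the study's actual matching software and tie-breaking rules are not described here.

```python
from math import sqrt
from statistics import mean, variance

def match_nearest(treated, control):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    treated, control: dicts mapping a firm id to its propensity score.
    Treated units are processed from highest to lowest score (one common
    convention); each matched control is removed from the pool.
    """
    available = dict(control)
    pairs = []
    for t_id, t_score in sorted(treated.items(), key=lambda kv: kv[1], reverse=True):
        if not available:
            break  # no controls left to match
        c_id = min(available, key=lambda c: abs(available[c] - t_score))
        pairs.append((t_id, c_id))
        del available[c_id]
    return pairs

def standardized_mean_difference(x_treated, x_control):
    """SMD using the pooled standard deviation of the two groups."""
    pooled_sd = sqrt((variance(x_treated) + variance(x_control)) / 2)
    return (mean(x_treated) - mean(x_control)) / pooled_sd

# Hypothetical scores for illustration only
users = {"t1": 0.80, "t2": 0.50}
non_users = {"c1": 0.75, "c2": 0.52, "c3": 0.20}
matched = match_nearest(users, non_users)
```

After matching, computing `standardized_mean_difference` for each covariate across the matched groups and checking that every absolute value falls below 0.1 corresponds to the balance criterion stated above.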

Table 2.

Propensity score matching outcome comparison.

Table 2 compares matched samples of module users (N = 135) and non-users (N = 135). The treatment group exhibits a 4.6% higher customer acquisition rate and a 3.8% higher revenue increase (p < 0.001 and p < 0.01, respectively), reinforcing the robustness of the observed associations. The matched sample (N = 135 per group) is derived from the 172 total surveyed SMEs, with 37 excluded due to non-matching. The PSM results further highlight the “compound benefits” of modular integration, as the balanced covariate adjustment (standardized mean differences < 0.1) strengthens confidence in the performance differentials. These findings align with the regression outcomes, indicating that SMEs leveraging multiple modules consistently outperform those using standalone tools, particularly in resource-constrained environments. Given the observational design, these results indicate a strong association rather than causation.

To formalize the analysis of compound benefits, we estimated two OLS regression models. The baseline model includes main effects of individual modules:

- Baseline model (main effects).

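$$Y_i = \beta_0 + \beta_1 \text{Coaching}_i + \beta_2 \text{Funding}_i + \beta_3 \text{Compliance}_i + \beta_4 \text{Benchmarking}_i + \beta_5 \text{MarketRadar}_i + \gamma' X_i + \varepsilon_i \tag{1}$$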

- Interaction model (compound effect).

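$$Y_i = \beta_0 + \beta_1 \text{Coaching}_i + \beta_2 \text{Funding}_i + \beta_3 \text{Compliance}_i + \beta_4 \text{Benchmarking}_i + \beta_5 \text{MarketRadar}_i + \beta_6 (\text{Coaching}_i \times \text{Funding}_i) + \gamma' X_i + \varepsilon_i \tag{2}$$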

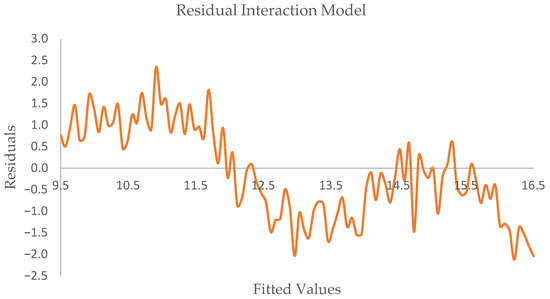

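Here, $Y_i$ is revenue growth (%) for firm $i$ and $X_i$ is the vector of firm-level controls; robustness checks use customer acquisition and operational efficiency as alternative outcomes. Predictors were mean-centered before forming the interaction, and we report HC3 standard errors and 95% CIs. Diagnostics (VIFs < 3, residual plots) are in Appendix A Figure A1.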
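The interaction specification can be sketched in a few lines, assuming only two module predictors and no controls. The helper names (`zscore`, `solve`, `fit_interaction_model`), the usage counts, and the coefficients used to generate the outcome are all hypothetical; the sketch shows only the order of operations (standardize first, then form the product term, then estimate by OLS), not the study's estimation code.

```python
from statistics import mean, stdev

def zscore(values):
    """Mean-center and scale to unit (sample) standard deviation."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def fit_interaction_model(coaching, funding, outcome):
    """OLS of outcome on [1, z(coaching), z(funding), z(coaching) * z(funding)]."""
    zc, zf = zscore(coaching), zscore(funding)
    rows = [[1.0, c, f, c * f] for c, f in zip(zc, zf)]  # product formed after standardizing
    k = 4
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcome)) for i in range(k)]
    return solve(xtx, xty)  # normal equations: (X'X) beta = X'y

# Hypothetical module-usage counts and a noise-free outcome built from known coefficients
coaching_use = [0, 1, 2, 3, 4, 5, 1, 3]
funding_use = [1, 0, 3, 1, 4, 2, 5, 0]
true_beta = [2.0, 0.5, 0.3, 0.2]
zc, zf = zscore(coaching_use), zscore(funding_use)
growth = [true_beta[0] + true_beta[1] * c + true_beta[2] * f + true_beta[3] * c * f
          for c, f in zip(zc, zf)]
beta_hat = fit_interaction_model(coaching_use, funding_use, growth)
```

With standardized predictors, the last coefficient plays the role of β6 in the interaction model: it measures how the association between one module and the outcome shifts per standard deviation of the other.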

3.2. Ethical Considerations and Validity

Ethical approval was obtained from the Institutional Review Boards at the University of Houston and the University of Southern Mississippi, and informed consent was obtained from all participants. Confidentiality was ensured via anonymized data and secure data storage. Participants were offered access to preliminary findings to validate the interpretations (member checking), and the use of mixed data sources strengthened both construct and ecological validity.

Building on this mixed-methods design, the following section presents the quantitative and qualitative findings.

4. Results

These findings address the research questions and contribute to theoretical and practical advancements, as discussed below. Summary statistics for all key survey variables, including means, standard deviations, and ranges, are presented in Appendix A Table A1. The distributions suggest sufficient variation across constructs such as PU, AI adoption, and revenue growth to warrant multivariate analysis.

4.1. Synergistic Marketing Outcomes

To address RQ1, we examined whether the co-use of AI advisory modules, specifically Coaching and Funding Readiness, produces synergistic effects on SME marketing and performance outcomes.

The analysis proceeded in two stages. First, we evaluated the direct associations of each AI module with SME performance. Second, we tested whether the Coaching × Funding interaction term improved model fit, indicating potential “compound benefits” from integrated use.

4.2. Regression Model Evaluation

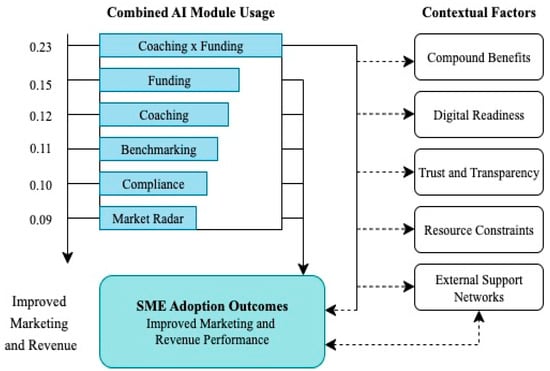

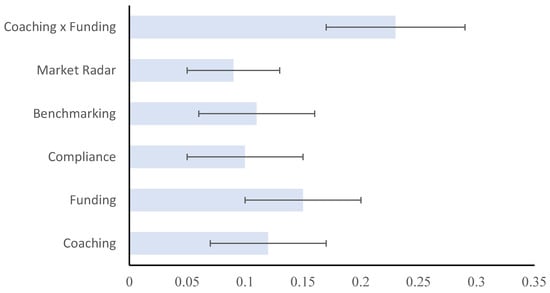

To quantify the compound benefits of multi-module usage, we estimated OLS regression models, as specified in Section 3.1.3 (Equations (1) and (2)). Table 3 presents the baseline and interaction models’ standardized coefficients, heteroskedasticity-robust standard errors (HC3), t-statistics, precise p-values, and 95% confidence intervals for revenue growth (%). The Coaching × Funding interaction term is significant (β = 0.23, p = 0.03), indicating stronger performance associations for synergistic module use. Model fit (R2 = 0.095, F(6, 165) ≈ 2.89, p ≈ 0.01) suggests modest explanatory power, consistent with the observational design and PSM results (Section 3.1.3). For a visual summary, see Figure A2 in Appendix A. These findings align with qualitative insights (Section 4.6) and sustainability literature [21,22].

Table 3.

OLS regression results: impact of AI advisory modules on SME performance.

Although the model explains only a modest portion of variance (R2 = 0.095), the overall fit is statistically significant, and the incremental improvement from single to multiple module use is consistent with behavioral studies in complex adoption contexts. We triangulate these quantitative findings with qualitative themes in Section 4.6, reinforcing the compound benefits of modular integration. This is consistent with Sustainability literature [21,22], which highlights how digital-inclusive strategies improve SME innovation by overcoming financing and usage barriers.

As shown in Table 4, adding the Coaching × Funding interaction increased explanatory power (R2: 0.064 → 0.095; Adj. R2: 0.036 → 0.062). In the same run, AIC decreased from 1109.47 to 1105.68 and BIC from 1125.20 to 1124.56, indicating a modest improvement in fit. Taken together with the significant coefficients in Figure A2 in Appendix A, these results support the presence of compound benefits for firms combining Coaching with Funding.

Table 4.

Model comparison between baseline and interaction models.
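The fit statistics for the two models can be cross-checked from the reported R2 values alone. The sketch below assumes n = 172, five regressors in the baseline model and six in the interaction model (as implied by F(6, 165)), and Gaussian-likelihood AIC and BIC computed on the same sample; the function names are illustrative.

```python
from math import log

def adjusted_r2(r2, n, k):
    """Adjusted R^2 for a model with k regressors plus an intercept."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

def f_statistic(r2, n, k):
    """Overall F statistic with (k, n - k - 1) degrees of freedom."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))

def delta_information_criteria(r2_base, r2_full, n, extra_params=1):
    """Change in (AIC, BIC), full model minus baseline.

    With the same outcome and sample, RSS_full / RSS_base equals
    (1 - R2_full) / (1 - R2_base), so constants in the likelihood cancel.
    """
    fit_term = n * log((1 - r2_full) / (1 - r2_base))
    return fit_term + 2 * extra_params, fit_term + log(n) * extra_params

n_firms = 172
adj_base = adjusted_r2(0.064, n_firms, 5)
adj_full = adjusted_r2(0.095, n_firms, 6)
f_full = f_statistic(0.095, n_firms, 6)
d_aic, d_bic = delta_information_criteria(0.064, 0.095, n_firms)
```

Under these assumptions the computed values agree with the reported adjusted R2 (0.036 and 0.062), the F statistic (2.89), and the AIC and BIC decreases to within rounding of the inputs. The BIC change is much smaller than the AIC change because BIC penalizes the extra parameter by ln(172) rather than 2.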

Robustness checks including diagnostics plots (see Appendix A Figure A1) indicated no material violations of linear-model assumptions (no visible non-linearity or strong heteroskedasticity). Predictors were mean-centered and standardized before forming the interaction, and VIFs < 3 for all regressors. Subgroup regressions by firm size and sector produced directionally similar coefficients, suggesting the results are not driven by a particular subgroup.
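Why centering before forming the product term lowers VIFs can be seen in a minimal sketch. It uses the two-regressor special case, where the VIF reduces to 1 / (1 - r^2); the full VIF regresses each predictor on all others. The data are hypothetical and deliberately symmetric, so the centered correlation is essentially zero; with real data the reduction is typically partial.

```python
from math import sqrt

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)

def vif_pair(a, b):
    """VIF of a when b is the only other regressor: 1 / (1 - r^2)."""
    r = pearson(a, b)
    return 1 / (1 - r ** 2)

def center(values):
    m = sum(values) / len(values)
    return [v - m for v in values]

# Hypothetical usage scores with means far from zero
coaching = [10, 11, 12, 13, 14, 15]
funding = [5, 7, 6, 9, 8, 10]

raw_product = [c * f for c, f in zip(coaching, funding)]
vif_raw = vif_pair(coaching, raw_product)

coaching_c, funding_c = center(coaching), center(funding)
centered_product = [c * f for c, f in zip(coaching_c, funding_c)]
vif_centered = vif_pair(coaching_c, centered_product)
```

In this toy case the raw product term is almost collinear with the main effect, whereas the centered product is not, which is the motivation for centering before constructing Coaching × Funding.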

The Coaching × Funding interaction carries a positive, statistically significant coefficient (β = 0.23, p = 0.03), and the overall model fit improves modestly relative to the baseline (R2: 0.064 → 0.095; Adj. R2: 0.036 → 0.062; F(6, 165) = 2.89, p = 0.01; see Tables 3 and 4 and Appendix A Table A2). Although the explained variance remains modest, as is common in multi-factor field settings, the pattern and direction of the effects (notably Funding, MarketRadar, and Coaching × Funding) point to compound benefits when advisory and financing supports are combined.

Qualitative evidence corroborates these statistical patterns. As one owner explained, “Using both coaching and funding insights gave us a 360-degree view of our marketing strategy. We wouldn’t have grown this fast otherwise.” (Interview, Owner #07; authors’ field notes, 2025). In the survey, 78% (134/172) of SMEs reported using multiple modules, and 65% (112/172) acknowledged perceivable compound benefits, consistent with the interaction effect.

4.3. Case Study Highlights of Strategic Marketing Innovation

The regression analysis in Section 4.2 identified Funding and Coaching as the most influential individual modules, with their interaction producing the largest coefficient, and the overall model showing a modest but statistically significant improvement in fit (see Tables 3 and 4). To deepen understanding of these statistical patterns, we turn to the 13 SME case studies from the qualitative phase.

These case narratives provide contextual explanations for why certain modules drive stronger outcomes and how SMEs experience cross-module benefits in practice. They also reveal barriers and enabling factors, such as ecosystem support, that cannot be fully captured in regression models alone. Case metrics (e.g., % changes, booking rates) are authors’ calculations from firm bookkeeping, CRM, PMS, and platform-analytics exports, as noted per case.

Five cases are presented here to address RQ2 and RQ3 and illustrate the variety of adoption contexts, marketing innovations, and outcomes observed. Each case highlights different engagement patterns, implementation challenges, and strategic benefits of the AI advisory platform.

- Case 1: AgriTech Solutions (Rural)

Combined MarketRadar and Coaching enabled agile seasonal adjustments, increasing yield-linked sales by 30% (platform sales logs, April–September 2025 vs. prior half-year; authors’ calculation). Digital resistance among senior staff (mean age = 52 years, SD = 4.2) was addressed through targeted training sessions.

- Case 2: ShopSmart E-Commerce (Urban)

Predictive analytics optimized inventory cycles and natural-language messaging improved digital outreach, raising engagement by 25% (platform analytics dashboard; authors’ calculation). A GDPR-compliant configuration of consent and disclosures mitigated trust concerns [14].

- Case 3: Urban Bakery (Minority-Owned)

The AI grant-matching engine secured USD 50,000 in external funding; peer benchmarking informed culturally resonant campaigns, boosting revenue by 15% (bookkeeping ledger comparison, FY2024→FY2025; authors’ calculation).

- Case 4: Eco-Lodge Hospitality

Integrating eco-trend radar and pricing tools increased bookings by 20% and reduced CO2 per guest by 15% (property PMS + utility meter logs; normalized per occupied room; authors’ calculation), indicating dual marketing and sustainability outcomes.

- Case 5: Retail SME (Houston)

Revamped loyalty programs via peer comparison and coaching improved customer retention by 22% (CRM cohort analysis; authors’ calculation). Nonetheless, 40% (18/45) of staff reported digital-onboarding difficulties, underscoring workforce readiness gaps.

4.4. Overcoming Digital Marketing Barriers

While regression analysis in Section 4.2 indicated that Funding and Coaching modules have the strongest positive associations with SME performance, the qualitative cases in Section 4.3 reveal that the realization of these benefits is contingent on overcoming significant barriers.

To explore RQ3, we highlight structural and perceptual barriers to AI adoption. Survey data confirm several of these constraints: compliance alerts reduced regulatory ambiguity for 62% (107/172) of respondents, and benchmarking tools enhanced campaign confidence for 58% (100/172). However, usability gaps persist, particularly among older users (>50 years), whose self-reported ease-of-use scores (mean = 3.8) lag behind younger cohorts (mean = 4.2). This generational gap helps explain why some firms, despite having access to high-impact modules identified in the regression model, do not achieve the same performance gains.

These findings align with prior research [38,52] underscoring the importance of human-centered design and tailored support. One participant noted, “I needed someone younger to walk me through it,” echoing the need for adaptive onboarding practices.

4.5. Ecosystem Support and Stakeholder Enablement

Regression results in Section 4.2 showed a measurable positive association between platform adoption and SME performance, but these gains are often mediated by ecosystem support. In support of the PRQ, we demonstrate the pivotal role of local institutions in mediating platform adoption. In 10 of 13 case studies, adoption was catalyzed by ecosystem actors such as incubators, accelerators, and CDFIs. Furthermore, instruments such as digital-inclusive finance and targeted onboarding support are associated with higher innovation quality and breadth among SMEs, particularly when paired with digital agility [21,22,53].

Subsidized subscriptions and platform onboarding sessions served as critical trust-building interventions. As one user shared, “The incubator made the tools less intimidating.” These findings are consistent with literature on social capital and institutional scaffolding in digital innovation ecosystems [33].

These institutional enablers are particularly relevant for modules like Funding and Coaching, whose effectiveness depends on trust and alignment with local business realities. In quantitative terms, SMEs receiving external support were overrepresented among those reporting above-median revenue growth and campaign ROI, consistent with the regression model’s identification of these modules as key drivers.

4.6. Thematic Insights from Qualitative Interviews

To synthesize the quantitative and qualitative evidence, interview transcripts were thematically coded into five recurrent themes (Table 5). These themes offer contextual explanations for the patterns observed in Section 4.2 (regression) and Section 4.3 (case studies), and reinforce platform adoption dynamics and marketing innovation outcomes.

Table 5.

Themes with illustrative quotes.

4.6.1. Compound Benefits

The integration of coaching and funding modules was repeatedly cited as delivering synergistic value. One respondent noted, “Using coaching and funding tools together gave us a 360-view. We wouldn’t have scaled this fast otherwise.” This convergence allowed SMEs to align strategic insights from coaching with immediate financing opportunities, reinforcing the compound benefits discussed in the quantitative analysis (Section 4.1). The narrative suggests that this dual adoption fosters a more agile, investment-ready mindset among users, enhancing marketing ROI.

4.6.2. Digital Readiness

The level of digital literacy within the organization emerged as a key determinant of adoption ease. As one participant described, “Younger team members adapted quickly; older staff needed help.” This generational gap underscores a latent barrier in small business digital transformation, requiring tailored onboarding for older or non-technical users. Firms with a broader age diversity or limited IT capacity often faced delayed realization of platform benefits, reinforcing the need for human-centered design and usability.

4.6.3. Trust and Transparency

The ability to compare one’s performance with peers contributed to user confidence and trust in the platform’s recommendations. One SME owner shared, “Seeing how others scored made us confident in the AI’s advice.” Transparent benchmarking and peer-based dashboards appeared to mitigate initial skepticism and foster adoption. This theme aligns with literature on algorithmic trust-building and the importance of explainability in AI-enabled decision support systems.

4.6.4. Resource Constraints

A recurring concern among SMEs was the lack of technical personnel to manage sophisticated tools. As one business owner put it, “We don’t have tech staff, plug-and-play saved us.” Participants emphasized the value of preconfigured, easy-to-deploy modules that required minimal technical expertise. This finding highlights the importance of low-friction digital infrastructure in resource-constrained environments, especially in underserved or rural markets.

4.6.5. External Support Networks

Finally, adoption was often catalyzed by trusted intermediaries such as incubators and community organizations. A participant remarked, “The local incubator helped with onboarding and made it less intimidating.” These support structures served not only as technical facilitators but also as sources of trust and legitimacy. The role of ecosystem enablers was especially pronounced in early adoption phases, suggesting that partnerships with local actors are crucial for sustained impact.

4.7. Mixed-Methods Integration: Evidence Synthesis

These thematic insights, when considered alongside the quantitative results presented in Section 4.2, suggest that the impact of integrated AI advisory platforms on SME performance is not solely a function of module design or statistical effect sizes. Instead, adoption outcomes are shaped by a dynamic interplay between measurable performance drivers and contextual mediators such as digital readiness, trust, and external support networks. This integrated interpretation strengthens the argument that quantitative performance metrics and qualitative adoption narratives must be considered jointly to understand the platform’s real-world impact.

Figure 3 presents an Integrated Evidence Map synthesizing the quantitative regression findings from Section 4.2 with the five qualitative themes outlined in Section 4.6. This visualization illustrates how the most influential quantitative predictors, particularly the interaction between Coaching and Funding modules, are reflected and reinforced in the qualitative evidence. The diagram demonstrates the thematic pathways (Compound Benefits, Digital Readiness, Trust and Transparency, Resource Constraints, and External Support Networks) through which these statistical relationships manifest in SME marketing innovation and adoption behavior.

Figure 3.

Integrated evidence map linking quantitative regression findings with qualitative themes.

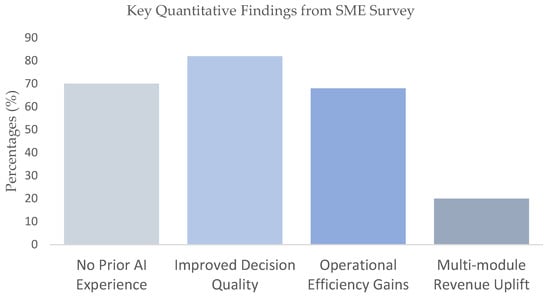

4.8. Quantitative Synthesis and Summary

Survey results from 172 SMEs revealed:

- 70% lacked prior AI marketing experience.

- 82% reported improved decision quality post-adoption.

- 68% achieved measurable operational efficiency gains.

Moreover, SMEs using multiple modules reported significantly better outcomes, with 15–20% higher revenue growth than single-module users (p < 0.01), especially in areas such as customer acquisition and campaign ROI.

Figure 4 visually summarizes these findings, reinforcing that integrated, AI-driven marketing platforms hold scalable potential for advancing SME competitiveness in capital-constrained settings.

Figure 4.

Quantitative survey summary.

We interpret these estimates alongside qualitative themes on readiness, trust, and support ecosystems to explain heterogeneous outcomes, and outline implications for SMEs, policy, and SDGs.

5. Discussion

This study sought to address one primary and three sub-research questions on how integrated AI-powered platforms can empower marketing innovation among SMEs in resource-constrained environments. The results indicate that multi-module usage (RQ1) is associated with significantly stronger marketing performance than isolated tool adoption, particularly when modules interact via a shared inference engine. Adoption barriers (RQ2), including digital skill gaps and data governance concerns, were partially mitigated through institutional support from CDFIs and local incubators. Trust in the platform and perceived value (RQ3) emerged as pivotal to sustained usage and module intensity. Collectively, these findings offer a robust empirical response to the primary research question (PRQ), suggesting that platform integration strengthens SMEs’ strategic agility, campaign effectiveness, and inclusive growth outcomes.

5.1. Theoretical Contributions to AI and Strategic Marketing

This study contributes to the emerging literature on AI-enabled marketing by advancing the concept of compound benefits within modular platform ecosystems. Unlike siloed digital tools, the integrated platform featured in this research enables real-time cross-functional insights, linking customer intelligence, peer benchmarking, funding readiness, and compliance support. This architecture improves SMEs’ ability to design and implement data-driven campaigns with precision and agility. These inferences integrate a common empirical base: the authors’ survey of 172 SMEs and contemporaneous platform-usage logs.

These findings reinforce and extend the TOE and DOI frameworks [30,31], especially in the context of constrained digital ecosystems. They align with recent work by Arroyabe et al. [24], who emphasize the role of institutional scaffolding in accelerating SME digitization, and Schwaeke et al. [3], who identify modular AI systems as critical enablers of strategic innovation in low-resource settings. They also cohere with Sustainability syntheses and models on SME AI adoption barriers, roadmap-based transformation, and sustainability-linked outcomes [6,7,19].

Theoretical Extensions via Compound Benefits

The concept of “compound benefits” introduced in this study offers significant theoretical extensions to established frameworks, addressing critical gaps in the literature on AI for SMEs. Firstly, it enriches the RBV by emphasizing resource synergies across modular AI platforms. For instance, the integration of MarketRadar (customer insights) and StrategicCoaching (strategy development) enables SMEs to leverage complementary resources, creating a sustainable competitive advantage that individual tools cannot achieve alone. Secondly, it extends DCT by highlighting dynamic reconfiguration in resource-scarce environments. SMEs in underserved markets can adapt module usage (e.g., scaling ComplianceTools during regulatory changes) to overcome budget and skill constraints, demonstrating agility in digital transformation. Thirdly, it advances the TOE framework by integrating technological (e.g., low-bandwidth compatibility), organizational (e.g., staff training needs), and environmental (e.g., cultural acceptance in minority communities) factors, tailoring AI adoption to the unique context of underserved U.S. markets.

This study also addresses a notable literature gap: the limited focus on modular AI integration for SMEs. Existing research often examines standalone AI tools or large-firm applications, neglecting the collaborative potential of multi-module platforms [3,26]. Our findings suggest that “compound benefits” from cross-module use (e.g., increased customer acquisition from combined analytics and coaching) fill this void, offering a novel perspective on integrated AI efficacy. Furthermore, we link these extensions to ethical considerations and SDGs. The synergistic use of modules must mitigate privacy risks and algorithmic biases (e.g., ensuring fair customer profiling across MarketRadar and ComplianceTools), aligning with ethical standards like GDPR and fostering transparency. This supports SDG 8 (decent work and economic growth) by creating inclusive job opportunities through AI adoption, and SDG 9 (industry, innovation, and infrastructure) by promoting sustainable technological advancement in underserved regions. Future research could explore these ethical dimensions and their SDG impacts in greater depth.

5.2. Practical Implications

Findings from the shared survey/logs dataset offer several actionable implementation imperatives for SaaS developers, economic-development practitioners, and policy stakeholders:

- Modularity: Develop AI platforms with interchangeable and interoperable modules, allowing SMEs to onboard incrementally based on capacity and needs.

- Transparency: Integrate visual dashboards, compliance indicators, and benchmarking tools to build user trust and accountability.

- Inclusivity: Design training and onboarding protocols that accommodate varying levels of digital literacy and organizational readiness.

- Public–Private Support: Promote adoption through subsidies, grants, or loan guarantees in collaboration with SBA initiatives, CDFIs, and regional economic coalitions.

5.3. Policy Recommendations

To catalyze adoption in underserved regions, policymakers should prioritize the following refined strategies:

- Tax Credits for SMEs: Offer tax credits of up to 20% for SMEs in low-income areas (e.g., Opportunity Zones) to offset subscription costs. Provide subsidized hardware grants (up to USD 5000) for rural businesses.

- Subsidized Training Programs: Expand funding for localized training initiatives, targeting minority- and women-owned SMEs, to build digital literacy and reduce adoption barriers.

- Simplified UI Tutorials: Develop step-by-step, video-based tutorials with voice-over narration in multiple languages, designed for intuitive navigation of the AI platform, targeting SMEs with minimal tech exposure.

- On-Site Training Workshops: Partner with local community centers to offer hands-on, in-person training sessions, focusing on basic platform functions and tailored to rural SME needs.

- Peer-Led Support Networks: Establish peer-mentoring programs where tech-savvy SME owners guide others, fostering a supportive learning environment in underserved regions.

- Joint Audits and Certifications: Establish collaborative frameworks between policymakers and SaaS providers to conduct annual joint audits of AI platforms, ensuring compliance with privacy standards like GDPR. Introduce a voluntary certification program for platforms meeting these standards, enhancing trust among SMEs.

- Data Sharing Agreements: Develop standardized data-sharing protocols to protect SME data while enabling platform interoperability, drawing from best practices in EU regulatory models.

- Expand SBA-backed grants and CDFI loan programs to offset onboarding costs for SMEs in low-income or minority-majority areas. For example, OECD [10] highlights “digital vouchers” used in Portugal and Italy, providing up to EUR 10,000 for SMEs investing in cloud-based or AI-enabled tools. A similar voucher or rebate system could reduce entry barriers in U.S. underserved markets, such as Opportunity Zones, with tax credits of up to 20% for eligible subscriptions.

- Introduce performance-based tax incentives for SMEs demonstrating responsible AI use, such as compliance with FTC endorsement guides, bias mitigation audits, and privacy-by-design practices. This aligns with OECD [52] recommendations for ethical AI incentives, ensuring adoption supports sustainability standards like SDG 9 (industry innovation).

- Establish public–private partnerships to co-create standards for data privacy, auditability, and algorithmic transparency. Drawing from OECD’s [52] SME and Entrepreneurship Outlook, governments can promote “compliance-by-design” ecosystems where platforms are pre-certified for GDPR/FTC compliance, fostering trust and interoperability for underserved SMEs.

- Fund incubators, chambers of commerce, and minority business associations to deliver AI onboarding support in high-density minority- and women-owned SME areas. OECD’s Emerging Divides in AI Adoption report [10] emphasizes peer-based learning and partnerships to accelerate adoption in digitally lagging regions.

- Invest in rural broadband, mobile-first designs, and low-bandwidth tools. OECD case studies [10] from Eastern Europe show how mobile-first e-commerce enabled rural SMEs to engage global markets despite connectivity issues, a model adaptable to U.S. rural contexts.

- Align programs with AI marketing competencies using micro-credentialing for incremental skill upgrades. OECD evidence [10] indicates modular training tied to national strategies reduces resistance among small firms.

These recommendations align with U.S. federal AI policy frameworks [18] and are designed to address the specific needs of underserved markets while leveraging international best practices. Additionally, while the platform incorporates basic transparency features, future policy efforts should explore international regulatory standards like GDPR to enhance explainability and auditability of AI systems, a topic warranting deeper investigation.

5.4. Scalability and Contextual Variability

While modular AI systems offer scalable pathways to innovation, effective deployment must account for significant contextual heterogeneity. For example, rural SMEs often require offline-compatible or mobile-first designs, while urban firms may demand higher regulatory intelligence and integration with e-commerce ecosystems. The platform’s retraining and customization architecture enables adaptation across sectors and regions but hinges on access to localized data pipelines.

These dynamics underscore the need for localized AI strategies, as a one-size-fits-all approach is unlikely to succeed across diverse SME segments.

5.5. Ethical Considerations in AI-Enabled Marketing

Ethical deployment of AI remains a critical concern. As automated targeting and pricing algorithms gain traction, explainability and fairness must become foundational design principles. This aligns with guidance from the U.S. AI Bill of Rights and the OECD [18,52], both of which emphasize transparency, accountability, and inclusivity.

We recommend that:

- SMEs adopt explainable AI (XAI) interfaces to support informed decision-making.

- Regulators fund independent audits of algorithmic bias and promote open-source ethical toolkits.

5.6. Limitations and Future Research

Despite its contributions, this study has several limitations. The observational design restricts causal inference between module usage and performance, despite robustness checks like propensity score matching (PSM), platform log triangulation, and controls for biases (e.g., social desirability and recall in self-reported data). Endogeneity threats, such as self-selection or omitted variables, persist, leading to correlational rather than causal findings. Additionally, the sample is geographically constrained to 13 case studies in Houston and 172 surveyed SMEs across three southern U.S. states, with a six-month post-adoption window capturing initial impacts but overlooking longer-term effects like customer retention or brand equity. Generalizability is thus limited to underserved U.S. South markets with similar socioeconomic conditions (e.g., Texas, Louisiana), and caution is advised for non-U.S. contexts, dissimilar sectors (e.g., those with advanced digital infrastructure), or single-platform focus.

To address these, future research should expand sample size and scope, incorporating experimental designs (e.g., RCTs), advanced causal techniques (IV, DiD), audited financial data, and longitudinal data over 12–24 months from diverse regions and sectors (e.g., Europe, Asia; healthcare vs. manufacturing) to validate impacts more broadly. Promising avenues include:

- Sectoral comparisons: Evaluate platform adaptability across industries like education or food services.

- Ethical and algorithmic audits: Investigate bias mitigation and trade-offs in AI-driven targeting, especially for marginalized demographics.

- Omnichannel strategies: Explore AI’s role in offline–online integration and customer journey mapping.

6. Conclusions

Despite the rapid global adoption of artificial intelligence, now surpassing 70%, SMEs in underserved communities continue to face digital marginalization, compounded by fragmented marketing tools, capital constraints, and insufficient integrated support. This study addresses these gaps by introducing and evaluating an AI-powered advisory platform tailored to resource-constrained SMEs, with a focus on fostering marketing innovation, customer engagement, and strategic agility.

Through a mixed-methods design involving case studies of 13 SMEs and a survey of 172 users across three U.S. states, the research validates the compound benefits framework, highlighting the synergistic gains from interconnected modules like StrategicCoaching, MarketRadar, FundingReadiness, ComplianceAssistance, and PeerBenchmarking. These integrations, powered by a unified inference engine, empower SMEs to refine campaigns, optimize pricing, and boost customer acquisition, thereby bridging the digital divide for historically underserved businesses.

Key findings underscore that multi-module adoption yields superior revenue growth, campaign effectiveness, and efficiency compared to single-tool use, while local enablers such as CDFIs, incubators, and digital literacy networks play crucial roles in building trust and sustaining engagement. Theoretically, the study advances the RBV and DCT by positioning AI platforms as surrogate assets for high-level decision-making in constrained environments, while enriching the TOE and DOI frameworks through emphases on modularity, transparency, and ecosystem integration.

In conclusion, these insights not only validate the compound benefits framework but also pave the way for future research on scalable AI in sustainable SME ecosystems. By offering an empirically grounded blueprint for ethically designed, modular platforms that promote inclusive digital transformation, this work contributes to broader goals of sustainable development, equipping scholars, innovators, and policymakers to advance equitable growth in under-resourced markets.

Author Contributions

Conceptualization, C.C., A.C. and I.C.; methodology, C.C., A.C. and I.C.; software, A.C.; validation, C.C., A.C., M.F. and I.C.; formal analysis, C.C. and M.F.; investigation, C.C., M.F. and I.C.; resources, C.C. and A.C.; data curation, M.F. and A.C.; writing—original draft preparation, C.C.; writing—review and editing, C.C., A.C., M.F. and I.C.; visualization, A.C. and C.C.; supervision, C.C.; project administration, C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and reviewed by the Institutional Review Board of The University of Southern Mississippi (protocol number 22-101; exempt determination date: 6 April 2023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

De-identified survey microdata and a variable dictionary are available from the corresponding author upon reasonable request; aggregated platform-usage indicators are available subject to partner agreements.

Acknowledgments

The authors thank the University of Southern Mississippi and the University of Houston for scholarly feedback and administrative facilitation. We are grateful to the participating small and medium-sized enterprises, incubators, and community partners for their time and insights during data collection. Helpful comments from colleagues improved the clarity of the manuscript; any remaining errors are our own.

Conflicts of Interest

Author C.C. is employed by Allusa Investment Holdings; Author M.F. is employed by Malangenesis Investments Lda (Lisbon); Author I.C. is employed by Chevron Singapore; Author A.C. is employed by the US Computer Corporation and Allusa Investment Holdings. C.C. and A.C. are inventors on a U.S. provisional patent application related to aspects of the AI advisory platform evaluated in this study; a non-provisional filing is planned. The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AIC | Akaike Information Criterion |

| AI | artificial intelligence |

| AI (construct) | adoption intention |

| BIC | Bayesian Information Criterion |

| CDFI | Community Development Financial Institution |

| CI | confidence interval |

| CRM | customer relationship management |

| CTO | Chief Technology Officer |

| DCT | Dynamic Capabilities Theory |

| DiD | Difference-in-Differences |

| DOI (theory) | Diffusion of Innovations |

| DOI (identifier) | Digital Object Identifier |

| EE | effort expectancy |

| EU | European Union |

| FTC | Federal Trade Commission |

| GDPR | General Data Protection Regulation |

| HC3 | heteroskedasticity-consistent (MacKinnon–White HC3) |

| IRB | Institutional Review Board |

| IV | Instrumental Variables |

| ML | machine learning |

| N | sample size |

| NLP | natural language processing |

| OECD | Organisation for Economic Co-operation and Development |

| OLS | ordinary least squares |

| ORCID | Open Researcher and Contributor ID |

| OSTP | Office of Science and Technology Policy (U.S.) |

| PE | performance expectancy |

| PMS | property management system |

| PO | performance outcomes |

| PRQ | Primary Research Question |

| PU | perceived utility |

| RBV | Resource-Based View |

| RCT | Randomized Controlled Trials |

| RPA | robotic process automation |

| R² | coefficient of determination |

| RQ | Research Question |

| SD | standard deviation |

| SDG | Sustainable Development Goal |

| SE | standard error |

| SI | Special Issue |

| SME/SMEs | small and medium-sized enterprise(s) |

| SPSS | Statistical Package for the Social Sciences |

| TOE | Technology–Organization–Environment |

| UTAUT | Unified Theory of Acceptance and Use of Technology |

| VIF | variance inflation factor |

| XAI | explainable AI |

Appendix A

Table A1.

Descriptive statistics of survey variables.

| Variable | Count | Mean | SD | Min | 25% | 50% | 75% | Max |

|---|---|---|---|---|---|---|---|---|

| PU | 172 | 3.76 | 0.56 | 2.23 | 3.39 | 3.78 | 4.10 | 4.42 |

| AI | 172 | 4.05 | 0.50 | 2.38 | 3.69 | 4.06 | 4.36 | 4.66 |

| PE | 172 | 4.20 | 0.40 | 3.28 | 3.90 | 4.19 | 4.45 | 4.71 |

| EE | 172 | 3.87 | 0.71 | 2.01 | 3.36 | 3.86 | 4.30 | 4.74 |

| Revenue Growth | 172 | 9.95 | 4.71 | −3.25 | 6.93 | 10.11 | 13.10 | 23.16 |

| Customer Acquisition | 172 | 16.02 | 6.83 | −1.97 | 11.13 | 16.24 | 20.03 | 32.69 |

| Employees | 172 | 25.74 | 13.67 | 2.00 | 13.75 | 26.50 | 37.25 | 49.00 |

| Years | 172 | 7.39 | 4.15 | 1.00 | 4.00 | 7.50 | 11.00 | 14.00 |

| PU_AI | 172 | 15.22 | 2.94 | 8.18 | 12.97 | 15.08 | 17.30 | 23.33 |

Source: authors’ survey and platform logs (N = 172). This table reports summary statistics for the primary variables used in the analysis. Measures include perceived utility (PU), adoption intention (AI), performance expectancy (PE), and effort expectancy (EE), as well as outcome indicators (revenue growth, customer acquisition) and firm characteristics (number of employees, years in operation). PU_AI is a sum index (five items; range 5–25).
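The column layout of Table A1 (count, mean, standard deviation, minimum, quartiles, maximum) can be reproduced for any single variable with a few lines of Python. The sketch below is illustrative only: the `describe` helper and the example scores are hypothetical, and it assumes a sample standard deviation (n − 1 denominator) and linearly interpolated quartiles, neither of which is stated in the manuscript.

```python
import statistics


def describe(values):
    """Return the eight summary statistics reported per variable in Table A1.

    Assumptions (not confirmed by the manuscript): sample standard deviation
    and quartiles obtained by linear interpolation between order statistics.
    """
    ordered = sorted(values)
    # method="inclusive" treats the data as spanning the full range and
    # interpolates linearly between neighbouring observations.
    q1, median, q3 = statistics.quantiles(ordered, n=4, method="inclusive")
    return {
        "count": len(ordered),
        "mean": statistics.mean(ordered),
        "std": statistics.stdev(ordered),  # n - 1 denominator
        "min": ordered[0],
        "25%": q1,
        "50%": median,
        "75%": q3,
        "max": ordered[-1],
    }


# Hypothetical 1-5 Likert composite scores, for illustration only (not study data).
example_scores = [3.2, 3.8, 4.0, 4.4, 4.6]
summary = describe(example_scores)

for name, value in summary.items():
    print(f"{name:>5}: {value:.2f}")
```

Applying the same helper to each survey column would yield one table row per variable.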

Figure A1.

Residual plot for interaction model.

Scatter plot of standardized residuals against fitted values for the interaction model (Coaching × Funding). The residuals appear evenly dispersed around zero, indicating that the assumptions of linearity and homoscedasticity are reasonably satisfied.

Table A2.

Regression results of cross-module collaboration on SME performance outcomes.

| Dependent Variable | Independent Variable | Coefficient (β) | Std. Error | 95% CI (Lower–Upper) | p-Value | R² |

|---|---|---|---|---|---|---|

| Customer Acquisition Rate | Cross-Module Collaboration | 0.34 | 0.08 | (0.18–0.50) | 0.001 | 0.42 |

| | Firm Size | 0.12 | 0.05 | (0.02–0.22) | 0.021 | |

| | Digital Literacy | 0.15 | 0.06 | (0.03–0.27) | 0.015 | |

| Revenue Increase | Cross-Module Collaboration | 0.28 | 0.07 | (0.14–0.42) | 0.002 | 0.38 |

| | Firm Size | 0.10 | 0.04 | (0.02–0.18) | 0.013 | |

| | Digital Literacy | 0.13 | 0.05 | (0.03–0.23) | 0.011 | |

| Campaign Redesign | Cross-Module Collaboration | 0.35 | 0.09 | (0.17–0.53) | 0.003 | 0.40 |

| | Firm Size | 0.11 | 0.04 | (0.03–0.19) | 0.008 | |

| | Digital Literacy | 0.14 | 0.05 | (0.04–0.24) | 0.007 | |

| Strategic Decision-Making | Cross-Module Collaboration | 0.30 | 0.08 | (0.14–0.46) | 0.005 | 0.39 |

| | Firm Size | 0.09 | 0.03 | (0.03–0.15) | 0.010 | |

| | Digital Literacy | 0.12 | 0.04 | (0.04–0.20) | 0.009 | |

Notes: N = 172. Dependent variables measured as percentages or indices. Predictors include Cross-Module Collaboration (aggregate module usage), Firm Size, and Digital Literacy. Source: Authors’ survey and platform logs.
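As a consistency check, the 95% confidence intervals in Table A2 can be recovered from each coefficient and its standard error. The following sketch assumes a normal-approximation interval, β ± 1.96 × SE; the manuscript does not state which critical value was used, so this is an assumption, and the function name `wald_ci` is ours.

```python
Z_95 = 1.96  # normal critical value; an assumption, not stated in the manuscript


def wald_ci(beta, se, z=Z_95):
    """Return the (lower, upper) interval beta +/- z * se, rounded to 2 decimals."""
    return (round(beta - z * se, 2), round(beta + z * se, 2))


# (dependent variable, predictor, beta, SE, reported lower, reported upper),
# transcribed from Table A2.
TABLE_A2 = [
    ("Customer Acquisition Rate", "Cross-Module Collaboration", 0.34, 0.08, 0.18, 0.50),
    ("Customer Acquisition Rate", "Firm Size", 0.12, 0.05, 0.02, 0.22),
    ("Customer Acquisition Rate", "Digital Literacy", 0.15, 0.06, 0.03, 0.27),
    ("Revenue Increase", "Cross-Module Collaboration", 0.28, 0.07, 0.14, 0.42),
    ("Revenue Increase", "Firm Size", 0.10, 0.04, 0.02, 0.18),
    ("Revenue Increase", "Digital Literacy", 0.13, 0.05, 0.03, 0.23),
    ("Campaign Redesign", "Cross-Module Collaboration", 0.35, 0.09, 0.17, 0.53),
    ("Campaign Redesign", "Firm Size", 0.11, 0.04, 0.03, 0.19),
    ("Campaign Redesign", "Digital Literacy", 0.14, 0.05, 0.04, 0.24),
    ("Strategic Decision-Making", "Cross-Module Collaboration", 0.30, 0.08, 0.14, 0.46),
    ("Strategic Decision-Making", "Firm Size", 0.09, 0.03, 0.03, 0.15),
    ("Strategic Decision-Making", "Digital Literacy", 0.12, 0.04, 0.04, 0.20),
]

# Collect any rows whose recomputed interval differs from the reported one.
mismatches = []
for dv, predictor, beta, se, lo_reported, hi_reported in TABLE_A2:
    lo, hi = wald_ci(beta, se)
    if abs(lo - lo_reported) > 1e-9 or abs(hi - hi_reported) > 1e-9:
        mismatches.append((dv, predictor, (lo, hi), (lo_reported, hi_reported)))

print(f"{len(TABLE_A2) - len(mismatches)} of {len(TABLE_A2)} intervals reproduced")
```

Under this assumption, all twelve reported intervals are reproduced to two decimal places.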

Figure A2.

Regression results: impact of AI advisory modules on SME performance. Data: authors’ survey and platform usage logs (N = 172); standardized coefficients; HC3 SEs; 95% CIs.

Appendix B. Interview Protocol

Sampling. Purposeful sample of SME users from the field sites. Interviews lasted 35–60 min and were recorded with consent. Questions were flexibly ordered and probed.

Table A3.

Thematic sections and guiding questions.

| Thematic Area | Guiding Questions |

|---|---|

| Adoption & Perceptions | (1) What motivated you to start using the AI advisory platform? (2) How easy or difficult was it for your team to adopt the platform? (3) What were your initial impressions of the platform’s usefulness? |

| Usage & Impact | (4) Which modules do you use most (e.g., Coaching, Funding, MarketRadar, Compliance, Benchmarking) and why? (5) Describe an instance where the platform directly informed a marketing decision. (6) Have you combined modules (e.g., Coaching × Funding)? What did that enable? |

| Barriers & Challenges | (7) What challenges or frictions have you experienced (e.g., skills, time, cost)? (8) Were there trust or transparency concerns about AI suggestions? (9) What would have made adoption easier? |

| Ecosystem & Support | (10) Which external organizations (incubators, CDFIs, local partners) influenced adoption or use? (11) What onboarding or training helped most? (12) What ongoing support would you value? |

| Improvement & Feedback | (13) What features should be added or improved? (14) How could the platform better support your industry or region? (15) Would you recommend it to peers? Why or why not? |

Appendix C. Variable Coding and Sources

Sources. Survey responses (Qualtrics, Q1 2025) and platform usage logs from the pilot deployment. Outcome measures are self-reported unless stated; module-use indicators are taken from platform logs where available.

Table A4.

Variables used in the quantitative analyses.

| Variable (File Column) | Label/Definition | Coding & Range | Source |

|---|---|---|---|

| PU, PE, EE, AI | Perceived utility; performance expectancy; effort expectancy; adoption intention (composite means) | 1–5 Likert (higher = more) | Survey |

| Modules_Used | Number of distinct modules used during the reference period | Count (1–5) | Logs/Survey |

| Coaching, Funding, MarketRadar, Compliance, Benchmarking | Module-use indicators (used = 1, else 0) | Binary (0/1) | Logs/Survey |

| RevenueGrowth | Self-reported revenue growth (%) vs. prior year | Continuous (%, may be negative) | Survey |

| CustomerAcquisition | Self-reported change in customer acquisition (%) | Continuous (%, may be negative) | Survey |

| OperationalEfficiency | Self-reported change in internal efficiency (%) | Continuous (%, may be negative) | Survey |

| Firm Size | Number of employees (bins, if used) | E.g., 1–4; 5–9; 10–19; 20–49; 50+ | Survey |

| Sector, Region, Years_in_Business, Prior_AI_Experience | Controls | As collected | Survey |
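To make the coding in Table A4 concrete, the sketch below derives `Modules_Used` from the five binary module-use indicators. The column names follow Table A4; the helper functions, the example records, and the threshold of two or more modules for "multi-module" use are illustrative assumptions rather than the authors' documented procedure.

```python
MODULES = ("Coaching", "Funding", "MarketRadar", "Compliance", "Benchmarking")


def modules_used(record):
    """Count distinct modules used, given binary (0/1) indicators per module."""
    for name in MODULES:
        if record[name] not in (0, 1):
            raise ValueError(f"{name} must be coded 0 or 1, got {record[name]!r}")
    return sum(record[name] for name in MODULES)


def is_multi_module(record, threshold=2):
    """Flag multi-module users; the threshold of 2 is an assumption."""
    return modules_used(record) >= threshold


# Hypothetical records for illustration only (not study data).
single_user = {"Coaching": 1, "Funding": 0, "MarketRadar": 0,
               "Compliance": 0, "Benchmarking": 0}
combined_user = {"Coaching": 1, "Funding": 1, "MarketRadar": 0,
                 "Compliance": 0, "Benchmarking": 0}

for label, record in (("single", single_user), ("combined", combined_user)):
    print(label, modules_used(record), is_multi_module(record))
```

Deriving the count from the indicators, rather than recording it separately, keeps `Modules_Used` within the 1–5 range listed in Table A4 for any firm that used at least one module.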

References

- World Bank. Small and Medium Enterprises (SMEs) Finance. Available online: https://www.worldbank.org/en/topic/smefinance (accessed on 9 July 2025).

- U.S. Small Business Administration, Office of Advocacy. Frequently Asked Questions About Small Business. 2024. Available online: https://advocacy.sba.gov (accessed on 10 August 2025).

- Schwaeke, J.; Peters, A.; Kanbach, D.K.; Kraus, S.; Jones, P. The New Normal: The Status Quo of AI Adoption in SMEs. J. Small Bus. Manag. 2024, 62, 1–27.

- Wedel, M.; Kannan, P.K. Marketing Analytics for Data-Rich Environments. J. Mark. 2016, 80, 97–121.

- Lamberton, C.; Stephen, A.T. A Thematic Exploration of Digital, Social Media, and Mobile Marketing. J. Mark. 2016, 80, 146–172.

- Badghish, S. Artificial Intelligence Adoption by SMEs to Achieve Sustainable Performance: A Systematic Review. Sustainability 2024, 16, 1864.

- Mick, M.M.A.P.; Kovaleski, J.L.; Chiroli, D.M.G. Sustainable Digital Transformation Roadmaps for SMEs: A Systematic Literature Review. Sustainability 2024, 16, 8551.

- Wamba, S.F.; Gunasekaran, A.; Akter, S.; Ren, S.J.; Dubey, R.; Childe, S.J. Big Data Analytics and Firm Performance: Effects of Dynamic Capabilities. J. Bus. Res. 2017, 70, 356–365.

- Sharabati, A.-A.A.; Ali, A.A.A.; Allahham, M.I.; Hussein, A.A.; Alheet, A.F.; Mohammad, A.S. The Impact of Digital Marketing on the Performance of SMEs: An Analytical Study in Light of Modern Digital Transformations. Sustainability 2024, 16, 8667.