1. Introduction

The rapid development of Internet of Things (IoT) technologies has significantly transformed public healthcare by enabling continuous monitoring of vital signs [1, 2], activity patterns, and environmental conditions. Wearable and ambient sensors generate large volumes of real-time data, creating opportunities for early disease detection, personalized interventions, and large-scale health surveillance [3]. As health systems move toward data-driven and sustainable care, managing these data streams securely and efficiently has become a key research challenge [4].

At the same time, reliance on sensitive health data raises concerns regarding privacy, security, and ethics. Conventional IoT healthcare systems usually assume that raw data must be sent to centralized servers for storage and analysis. This design increases the attack surface and introduces complex issues such as long-term retention, consent management, and compliance with frameworks like GDPR and HIPAA [5]. In many cases, retaining the exact health values is unnecessary; the real requirement is to determine whether a patient’s health status satisfies clinical criteria without disclosing underlying values.

Existing studies have explored solutions such as secure data storage, encryption-based access control, and federated learning. While these approaches mitigate certain risks, they still depend on the availability of raw values or intermediate results at computational nodes, leaving the possibility of information leakage. They do not fully solve the tension between the need for accurate health judgments and the requirement to minimize data disclosure. This issue is particularly important for sustainable healthcare, where scalability, trust, and compliance must coexist over long-term deployments.

To address these challenges, this paper proposes a privacy-preserving collaborative framework for IoT-based health monitoring. In this framework, local sensor nodes encrypt measurements and send only ciphertexts to a secure computation core, which performs statistical and functional evaluations directly in the encrypted domain using homomorphic encryption [6]. The results are masked and transferred to a compliance verification client that produces zero-knowledge range proofs [7], demonstrating whether health indicators fall within specified thresholds.

The proposed approach provides several benefits. First, data confidentiality is maintained since raw values and intermediate statistics are not revealed beyond the data owner. Second, the system enables verifiable compliance checks without exposing real values, supporting external verification while maintaining privacy. Third, it reduces ethical and sustainability concerns by avoiding unnecessary long-term storage of sensitive data, thus lowering risks associated with consent, access control, and data retention. These properties form a secure foundation for IoT-enabled public healthcare.

Furthermore, the framework aligns with several United Nations Sustainable Development Goals (SDGs). By enabling continuous yet privacy-preserving assessments, it supports SDG 3 (Good Health and Well-Being) through secure health monitoring and timely clinical checks. Its verifiability-by-design architecture, based on commitments, range proofs, and append-only audit logs, contributes to SDG 16 (Peace, Justice, and Strong Institutions) by improving accountability and governance. The inclusion of sustainability metrics such as latency decomposition, CPU time, memory footprint, and network load promotes resource-efficient health infrastructures, supporting SDG 9 (Industry, Innovation, and Infrastructure). Finally, by separating compliance judgments from raw-value storage, the framework contributes to SDG 10 (Reduced Inequalities) by reducing data exposure disparities.

The contributions of this paper are as follows:

An end-to-end privacy-preserving framework for IoT-based healthcare is proposed. By combining homomorphic encryption with zero-knowledge range proofs, the system supports compliance verification under a minimal-disclosure, three-party trust model (data owner, computation service, and adjudicator).

A method is developed to compute health metrics (e.g., mean and variance) directly on ciphertexts, allowing encrypted-domain results to be checked against integer-based range proofs consistent with healthcare thresholds and clinical standards.

The system’s robustness against statistical and learning-based inference attacks is evaluated. Results show attacker performance close to random guessing, confirming the high entropy and inference resistance of the commitment process.

The rest of this paper is organized as follows. Section 2 reviews related work. Section 3 gives a methodological overview of the framework. Section 4 introduces the system model and problem formulation. Section 5 presents the experimental design and results. The final section concludes this paper and discusses future research directions.

2. Related Works

In recent years, the Internet of Things (IoT) has become a cornerstone technology in transforming public health. It enables continuous data collection, real-time monitoring, and intelligent decision support across a variety of healthcare contexts. However, the highly sensitive nature of health data introduces significant privacy and security challenges, requiring advanced mechanisms that balance efficiency with strong protection of patient information. Research on IoT-based public health monitoring has therefore evolved along two complementary directions: (1) leveraging IoT devices, wearables, and cloud–edge architectures to improve health monitoring and patient-centric care and (2) developing robust privacy-preserving, authentication, and secure data-sharing mechanisms to ensure reliable operation in real-world healthcare systems.

2.1. IoT for Public Health Monitoring

In public health monitoring, IoT technologies are shifting practice from episodic, facility-based measurements to continuous and context-aware sensing that supports patient-centered care, population-level surveillance, and timely intervention. Reviews and surveys show that wearables, home sensors, mobile devices, and hospital equipment can generate longitudinal records of vital signs and activities. These data are fused through cloud and edge analytics to enable personalized care pathways and faster clinical decisions, reducing readmissions and improving operational efficiency [8, 9].

Concrete implementations include intelligent surveillance and activity recognition systems, where pose and gait estimation from cameras are combined with spatial features to achieve real-time fall detection on GPU-backed IoT video platforms, delivering high recall and competitive false alarm rates in elder care settings [10]. On-body sensing using multimodal wearables also supports posture recognition by coupling lightweight on-device inference with cloud-based classification, improving reliability. This setup represents a hybrid intelligence model in which edge devices ensure low latency and cloud services enhance accuracy under varying conditions [11].

Beyond single applications, surveys highlight remote monitoring frameworks for chronic disease management and community healthcare, describing sensors, gateways, communication protocols, and microcontroller implementations that provide real-time dashboards for clinicians and caregivers [12, 13]. With the development of 5G connectivity and embedded machine learning, integrated IoT and WSN architectures have achieved near real-time collection of vital signs with on-device neural inference, offering accuracy comparable to clinical devices while maintaining responsiveness in emergency scenarios [14, 15]. Complementary work on feature learning and ensemble methods for cardiac risk prediction shows that classical clinical datasets can be effectively combined with IoT data to achieve high screening accuracy through appropriate preprocessing and model design [16].

Together, these studies demonstrate that IoT-enabled health monitoring is evolving from isolated solutions to layered systems that integrate sensing, connectivity, and intelligence to deliver continuous and scalable healthcare services [17]. However, they also highlight a growing dependence on sensitive data streams, emphasizing the need for strong privacy protection in future deployments.

2.2. Privacy and Security in IoT-Based Public Health

Because health-related signals are highly sensitive, IoT-based healthcare systems must incorporate comprehensive privacy and security protection at the device, network, and service levels. A broad range of research efforts have been proposed, generally categorized as federated learning (FL), secure multi-party computation (MPC), lightweight cryptography, blockchain-based solutions, homomorphic encryption (HE), zero-knowledge proofs (ZKPs), and differential privacy (DP).

Federated learning (FL) has become a prominent paradigm because it keeps data local and shares only model updates. Variants such as FedImpPSO improve robustness in unstable IoT networks [18]. Extensions combining FL with homomorphic encryption and secure aggregation prevent inference from gradients and achieve high performance in medical image classification and intrusion detection tasks [19, 20, 21]. Clustered FL with adaptive local differential privacy further mitigates heterogeneous data distributions by using clipping and weight compression to reduce leakage while maintaining accuracy [22, 23]. These approaches demonstrate the potential of FL in balancing accuracy and privacy but still incur communication overhead and residual leakage risks from shared gradients.

Secure multi-party computation (MPC) offers another way to support collaborative health analytics without exposing raw data. IoT-enabled MPC has been applied to pneumonia diagnosis, achieving accuracies above 96% while maintaining data confidentiality [24]. Offloading frameworks incorporating trust and incentive models help reduce computation on constrained devices and verify results without third-party involvement [25]. Blockchain-assisted MPC systems, such as BELIEVE, validate mobility and healthcare data in real time without revealing raw information, improving both privacy and auditability [26]. However, MPC schemes often face scalability challenges due to communication and synchronization costs.

Lightweight cryptography remains important for IoT medical devices with limited resources. Hybrid encryption combining AES, ECC, and Serpent has been proposed to protect electronic medical records [27], and ECC-based authentication achieves anonymity and forward secrecy with low overhead [28]. Chaos-based cryptographic schemes exploit the unpredictability of chaotic systems for real-time biosignal encryption on wearable sensors [29, 30]. These methods offer good efficiency and robustness but rely mainly on symmetric primitives and key management, limiting their ability to provide fine-grained verifiability.

Blockchain-based solutions are widely used to eliminate single points of failure, enforce decentralized trust, and maintain immutable audit trails. Systems such as LoRaChainCare combine blockchain with fog and LPWAN technologies to support secure elderly health monitoring [31], while blockchain-based identity management frameworks enhance decentralized authentication in healthcare IoT [32]. Electronic health record (EHR) systems use blockchain with hierarchical access control, redactable signatures, or interplanetary file systems to ensure secure and interoperable data sharing [33, 34]. However, blockchain frameworks often introduce latency, scalability, and energy challenges that complicate real-time healthcare scenarios.

Homomorphic encryption (HE) allows computation over encrypted values. Applications range from secure data aggregation in medical wireless sensor networks [35] to privacy-preserving federated learning [19, 20] and BFV- or CKKS-based designs that protect against reconstruction attacks while maintaining utility [36, 37]. Some recent studies combine HE with blockchain for access control and auditable sharing [38]. While HE provides strong confidentiality, it often increases computational and storage costs.

Zero-knowledge proofs (ZKPs) have been introduced to enhance authentication and compliance verification. Efficient ZKP schemes provide scalable authentication for large IoT networks with reduced verification costs [39]. Blockchain-integrated rollups group multiple access control requests into a single proof, reducing latency in high-traffic environments [40]. Other works employ ZKP to enhance anonymity and integrity in IoT systems through blockchain integration [41, 42]. However, most current ZKP applications focus on identity management rather than encrypted health computation.

Differential privacy (DP) complements these methods by adding random noise to shared data or gradients to reduce inference risk. Particle swarm optimization-enhanced DP improves the trade-off between utility and privacy in IoT data sharing [43], while PoA-blockchain with Gaussian DP secures federated aggregation in industrial IoT fault detection [44]. Federated deep fingerprinting also uses adaptive gradient noise to protect radio feature learning in constrained IoT settings [23]. DP approaches are lightweight and scalable but may distort statistical relationships, reducing precision in clinical applications.

Synthesis. Each privacy-preserving approach for IoT healthcare offers unique advantages suited to specific scenarios. Federated learning limits raw data exposure but remains vulnerable to gradient inference and high communication cost. MPC guarantees correctness and privacy across institutions but scales poorly in real-time settings. Lightweight cryptography is efficient but focuses only on channel security. Blockchain ensures decentralized trust but increases latency and energy use. Homomorphic encryption protects computation yet lacks compliance verification. ZKP enhances authentication privacy but has seen limited use in health data analysis. DP improves scalability but may harm analytical accuracy.

As shown in Table 1, our framework differs by performing all computations within the encrypted domain and outputting only zero-knowledge proofs of compliance. It combines the confidentiality of HE with the verifiability of ZKP. Only local sensors access raw data, while all other entities handle encrypted or masked information, so no data leave the sensors in plaintext. This supports verifiable compliance without revealing sensitive values. The approach directly mitigates disclosure risks that remain unresolved in prior work and supports sustainable, large-scale public health monitoring.

3. Methodological Overview

To bridge the gap between existing privacy-preserving methods and the need for secure IoT-based health monitoring, this section outlines the methodological foundation of our framework. The design follows three core principles: (1) all computations must remain correct within the encrypted domain without revealing plaintext data, (2) compliance must be verified through cryptographic proofs instead of disclosing intermediate results, and (3) accountability must be built in through commitments and auditable logs. These principles ensure that the system achieves privacy, correctness, and verifiability at the same time.

3.1. Workflow Description

The proposed workflow consists of four coordinated stages involving three distinct entities:

Sensor Nodes (SNs): Each sensor node collects physiological data (e.g., heart rate and activity traces) and immediately encrypts them using CKKS homomorphic encryption. This process ensures that no plaintext values ever leave the device, and each sensor only retains access to its own data.

Secure Computation Core (SCC): The SCC aggregates encrypted data from multiple SNs and performs statistical operations such as mean, variance, or other metrics directly on ciphertexts. Random masking factors are introduced to prevent potential reverse inference. The SCC operates entirely in the encrypted domain and has no access to any plaintext values.

Compliance Verification Client (CVC): The CVC receives masked encrypted results from the SCC, decrypts them locally, and generates Pedersen commitments along with zero-knowledge range proofs showing that the decrypted value v lies within a specified threshold. A fresh random factor r is used for each commitment to maintain indistinguishability. The CVC is the only entity capable of both generating commitments and constructing proofs, without revealing any actual data.

Verifier/Audit Log: External verifiers or audit servers validate the commitment and proof pair to confirm compliance without accessing raw data. Verification results are stored in append-only audit logs, which ensure transparency and accountability across organizations.

This structure provides a clear link between existing IoT-based health monitoring approaches and the detailed system model presented later. By separating computation, verification, and auditing under a minimum-disclosure trust model, the framework addresses key challenges of privacy, ethical governance, and sustainability in digital healthcare.

3.2. Algorithmic Steps

To illustrate the workflow more clearly, Algorithm 1 summarizes the main message flows and cryptographic operations for each entity.

| Algorithm 1 Privacy-preserving IoT health monitoring framework. |
| --- |
| 1: Input: Physiological data from each Sensor Node |
| 2: Output: Compliance decision recorded in the audit log |
| 3: for each sensor node do |
| 4: Encrypt the local measurements using CKKS |
| 5: Transmit the ciphertext to SCC |
| 6: end for |
| 7: SCC: Aggregate and compute encrypted metric |
| 8: SCC: Apply random mask and forward to CVC |
| 9: CVC: Decrypt masked result |
| 10: CVC: Generate Pedersen commitment |
| 11: CVC: Construct ZK range proof for the committed value |
| 12: Transmit the commitment and proof to Verifier |
| 13: Verifier: Verify the proof with respect to C and threshold T |
| 14: Verifier: Record compliance result in append-only audit log |

The design guarantees that (i) raw sensor data remain private at the edge, (ii) computations are executed entirely on encrypted inputs, (iii) compliance is verified through zero-knowledge proofs without revealing intermediate values, and (iv) auditability is preserved through tamper-resistant logs. Together, these properties provide a reliable and sustainable foundation for IoT-enabled public health monitoring.

3.3. Stakeholder Visibility

Table 2 summarizes what information each stakeholder can access and what remains hidden within the system. Only local sensors observe raw physiological values, while all transmitted and stored information is encrypted or masked, ensuring strict end-to-end confidentiality.

4. System Model and Problem Formulation

In the context of IoT-enabled public health, continuous monitoring of physiological signals and health indicators provides essential evidence for timely assessment and disease prevention. Traditional methods typically rely on centralized collection and plaintext analysis of raw sensor data, which not only increases the risk of privacy leakage but also fails to meet compliance and ethical requirements in cross-institutional collaborations. To address these challenges, this paper proposes an end-to-end privacy-preserving and verifiable system model that enables health indicator evaluation and compliance verification without disclosing individual raw data or computed metric values. By combining homomorphic encryption (HE) and zero-knowledge proof techniques, the model supports encrypted-domain computation of health-related metrics and outputs only binary compliance judgments to healthcare providers or monitoring authorities. This design lowers trust barriers and facilitates secure data sharing across multiple parties. This directly supports SDG 3 (Good Health and Well-being) by enabling sustainable health monitoring without compromising privacy and SDG 16 (Peace, Justice and Strong Institutions) by enhancing trust in data governance across institutions.

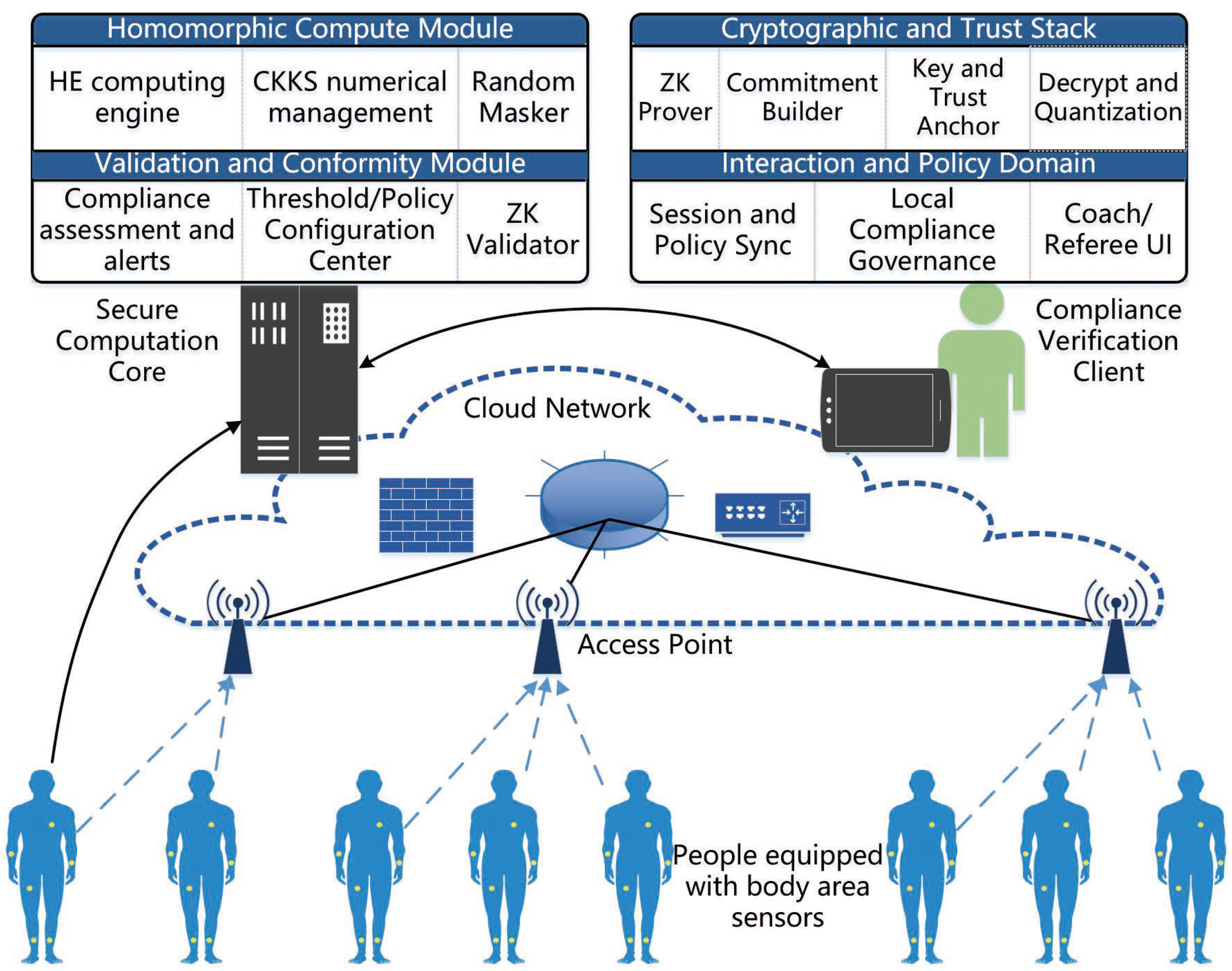

The system model consists of three core entities, as shown in Figure 1: the SNs, which are responsible for real-time collection of physiological signals; the SCC, which performs encrypted computation with random masking; and the CVC, which decrypts masked results and generates zero-knowledge proofs. Sensor nodes are deployed on patients or in healthcare environments to capture raw health data and transmit it in encrypted form. The SCC computes averages, variances, or other health-related indicators without decryption capability, while masking ensures that intermediate results cannot be inverted. The CVC, holding the decryption key, obtains only masked values, generates commitments and range proofs, and returns them to the SCC for verification. Together, these entities form an integrated framework that achieves both real-time responsiveness and privacy-preserving security with minimal disclosure.

To demonstrate practicality, we instantiate the proposed framework in the scenario of IoT-enabled public health monitoring. Physiological data such as heart rate, blood oxygen saturation, or activity patterns are continuously collected by wearable sensors, and their privacy-sensitive nature makes conventional centralized analysis problematic. In practice, many healthcare applications do not require storing or transmitting exact raw values; instead, the key requirement is to judge whether certain indicators fall within clinically acceptable thresholds. This motivates our system design: sensor nodes encrypt data at the source, the SCC performs encrypted-domain computation to derive health metrics, and the CVC generates zero-knowledge proofs to verify compliance without revealing true values. By grounding the design in this representative scenario, the framework demonstrates applicability to real-world healthcare contexts where privacy, security, and auditability are critical.

4.1. Data Acquisition and Encryption

In the proposed framework, privacy-preserving public health analysis begins with data acquisition at the sensor nodes. Patients are equipped with IoT-based body area sensors (e.g., ECG monitors, pulse oximeters, and accelerometers), which capture raw physiological streams such as heart rate, blood pressure, or blood oxygen saturation. Let us denote the $j$-th patient’s time-series data as

$$X_j = \{x_{j,t}\}_{t=1}^{T}, \qquad j = 1, \dots, N,$$

where $N$ is the number of monitored individuals and $T$ is the number of sampling instants. Each $x_{j,t}$ may represent a scalar (e.g., heart rate at time $t$) or a multidimensional vector of features.

To ensure privacy at the earliest stage, each $x_{j,t}$ is transformed from plaintext into ciphertext using homomorphic encryption (HE). Specifically, we adopt a CKKS-type approximate homomorphic encryption scheme, which is suitable for real-valued vector operations commonly required in healthcare analytics. The CKKS encoding maps a real value $x_{j,t}$ into a polynomial ring element with scaling factor $\Delta = 2^{p}$:

$$m_{j,t} = \lfloor \Delta \cdot x_{j,t} \rceil,$$

where $p$ determines the precision of the fixed-point representation. The encrypted ciphertext is then obtained as

$$c_{j,t} = \mathrm{Enc}_{pk}(m_{j,t}),$$

with $pk$ denoting the public key distributed by the system. The patient-side nodes hold no decryption capability, thus preventing any intermediate agent from recovering the raw health data once uploaded.
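As a rough illustration of the fixed-point idea behind the scaling factor, the sketch below rounds a real value to an integer at scale 2^p and maps it back. It is a simplification under stated assumptions: real CKKS encoding also packs values into polynomial coefficients through a canonical embedding, which is omitted here, and the names `encode_fixed` and `decode_fixed` as well as the choice p = 20 are hypothetical.

```python
def encode_fixed(x: float, p: int) -> int:
    """Scale a real value by 2**p and round to the nearest integer."""
    return round(x * (1 << p))


def decode_fixed(m: int, p: int) -> float:
    """Map a scaled integer back to an approximate real value."""
    return m / (1 << p)


p = 20                     # hypothetical precision parameter
heart_rate = 72.3456789    # illustrative sample in bpm
m = encode_fixed(heart_rate, p)
recovered = decode_fixed(m, p)

# Rounding moves the scaled value by at most 0.5, so the round-trip
# error is at most 2**-(p + 1).
assert abs(recovered - heart_rate) <= 2.0 ** -(p + 1)
```

A larger p lowers the rounding error but consumes more of the ciphertext modulus budget, which is the precision trade-off the text refers to.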

For efficient transmission, sensor nodes often batch multiple samples into a vectorized form before encryption. Thus, the ciphertext at time window $\tau$ can be written as

$$\mathbf{c}_{j,\tau} = \mathrm{Enc}_{pk}\big(x_{j,\tau,1}, x_{j,\tau,2}, \dots, x_{j,\tau,K}\big),$$

where $x_{j,\tau,k}$ is the $k$-th sample of patient $j$ in window $\tau$ and $K$ denotes the batch size. This vectorized encryption enables the secure computation core (SCC) to later perform SIMD-like (single instruction, multiple data) operations, such as summation and threshold checks, directly in the encrypted domain.
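The batching step can be pictured as slicing a sample stream into fixed-size windows before each window is packed and encrypted. The helper below is a minimal sketch; the name `batch_windows` is hypothetical, and holding back an incomplete trailing window is an assumption, not something the framework specifies.

```python
from typing import List, Sequence


def batch_windows(samples: Sequence[float], k: int) -> List[List[float]]:
    """Split a sample stream into consecutive windows of exactly k values.

    Trailing samples that do not fill a whole window are held back, on the
    assumption that the node waits for more data before encrypting.
    """
    if k <= 0:
        raise ValueError("batch size must be positive")
    full = len(samples) - len(samples) % k
    return [list(samples[i:i + k]) for i in range(0, full, k)]


stream = [70.1, 71.4, 69.8, 72.0, 70.6]
windows = batch_windows(stream, 2)  # two full windows, last sample held back
```

Each inner list would then be encoded into the slots of one ciphertext.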

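It is important to note that, unlike traditional plaintext transmission, the data available to any external party is limited to the ciphertexts $\mathbf{c}_{j,\tau}$, which semantically hide both the individual sample values and the aggregate patterns. Formally, the semantic security property ensures

$$\big|\Pr[\mathcal{A}(\mathrm{Enc}_{pk}(x_0)) = 1] - \Pr[\mathcal{A}(\mathrm{Enc}_{pk}(x_1)) = 1]\big| \le \mathrm{negl}(\lambda) \quad \text{for all } x_0, x_1 \in \mathcal{X},$$

where $\mathcal{A}$ is any polynomial-time adversary, $\mathcal{X}$ is the domain of possible sensor values, and $\mathrm{negl}(\lambda)$ is a negligible function of the security parameter $\lambda$. This guarantees that an attacker cannot distinguish between encryptions of different data, thereby preventing privacy leakage at the acquisition stage.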

In summary, this stage accomplishes two objectives: (1) it establishes a trust boundary where sensitive physiological signals never leave the patient’s device in plaintext form, and (2) it produces structured encrypted data streams that can be processed by the SCC without decryption. This forms the foundation for all subsequent privacy-preserving computations and verifications in the system.

4.2. Privacy-Preserving Computation in SCC

Once encrypted health data streams are transmitted, the secure computation core (SCC) is responsible for conducting analytics without decryption. The central idea is to leverage homomorphic encryption (HE) to compute health-related metrics directly in the ciphertext domain. This ensures that sensitive data remain hidden while still enabling meaningful assessment.

Let us denote the ciphertext batch of patient $j$ at window $\tau$ as $\mathbf{c}_{j,\tau}$, which corresponds to the encrypted sequence $\{x_{j,\tau,k}\}_{k=1}^{K}$. The SCC can aggregate encrypted data across all patients and compute averages, deviations, or other statistical functions needed for monitoring. For example, for patient $j$, a variance-like health indicator over window $\tau$ can be defined as

$$v_{j,\tau} = \frac{1}{K}\sum_{k=1}^{K}\big(x_{j,\tau,k} - \mu_{j,\tau}\big)^{2},$$

where the window mean is

$$\mu_{j,\tau} = \frac{1}{K}\sum_{k=1}^{K} x_{j,\tau,k}.$$

If needed, a group-level indicator can be obtained in the same way by averaging across all $N$ patients, with the group average value taking the place of the window mean. In the encrypted domain, this can be evaluated by computing encrypted deviations, squaring them homomorphically, and aggregating the results. More generally, the SCC can support composite metrics beyond variance, depending on healthcare requirements.
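The sequence of homomorphic operations for a variance-like indicator (sum, scale, subtract, square, sum, scale) can be traced with a plaintext stand-in. The sketch below performs no encryption: `MockCiphertext` and `variance_circuit` are hypothetical names, the slot-sum stands in for the rotate-and-add pattern of a packed scheme, and the `depth` counter tracks only ciphertext-by-ciphertext multiplications.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MockCiphertext:
    """Plaintext stand-in for a packed ciphertext. Nothing is encrypted."""
    slots: List[float]
    depth: int = 0  # ciphertext-ciphertext multiplications used so far

    def add(self, other: "MockCiphertext") -> "MockCiphertext":
        vals = [a + b for a, b in zip(self.slots, other.slots)]
        return MockCiphertext(vals, max(self.depth, other.depth))

    def sub(self, other: "MockCiphertext") -> "MockCiphertext":
        vals = [a - b for a, b in zip(self.slots, other.slots)]
        return MockCiphertext(vals, max(self.depth, other.depth))

    def mul(self, other: "MockCiphertext") -> "MockCiphertext":
        vals = [a * b for a, b in zip(self.slots, other.slots)]
        return MockCiphertext(vals, max(self.depth, other.depth) + 1)

    def scale(self, c: float) -> "MockCiphertext":
        """Multiply every slot by a public constant."""
        return MockCiphertext([a * c for a in self.slots], self.depth)

    def sum_slots(self) -> "MockCiphertext":
        """Place the slot total in every slot (rotate-and-add in a real scheme)."""
        total = sum(self.slots)
        return MockCiphertext([total] * len(self.slots), self.depth)


def variance_circuit(ct: MockCiphertext) -> MockCiphertext:
    """Population variance of the slots using only the operations above."""
    k = len(ct.slots)
    mean = ct.sum_slots().scale(1.0 / k)   # window mean in every slot
    dev = ct.sub(mean)                     # deviations from the mean
    sq = dev.mul(dev)                      # one multiplicative level
    return sq.sum_slots().scale(1.0 / k)   # average squared deviation


window = MockCiphertext([68.0, 70.0, 72.0, 74.0])
result = variance_circuit(window)  # every slot holds the variance
```

The circuit needs a single squaring, which is why a short coefficient modulus chain can suffice for this metric; deeper composite metrics would need more levels.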

To further reduce inference risk, the SCC applies random masking before transmitting results to downstream components. Let $v$ denote the health indicator computed by the SCC, and let $\rho$ be a randomly chosen scalar known only to the SCC. The masked ciphertext is given by

$$\tilde{c} = \rho \cdot \mathrm{Enc}_{pk}(v).$$

The purpose of this masking is to prevent the decryption party from inferring the true value of $v$. Instead, it only recovers $\rho \cdot v$, which is indistinguishable from other possible values unless combined with the SCC’s secret multiplier $\rho$. In our implementation, each masking factor is generated using a cryptographically secure pseudo-random number generator (CSPRNG) with full entropy (≥128 bits). Every $\rho$ is single-use and bound to one computation window, ensuring a lifetime of at most one proof session. Values are held only in the volatile memory of the SCC and are never stored persistently. If a particular $\rho$ were compromised, only the masked result of the corresponding session could be exposed, while all other sessions remain secure. To further mitigate risk, session identifiers and commitments are bound to fresh randomness, ensuring forward security. Formally, the leakage bound of one-time exposure is limited to at most one masked statistic $\rho \cdot v$, which without the corresponding plaintext remains computationally indistinguishable from random.
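The single-use, session-bound lifecycle of a masking factor can be sketched as a small in-memory store. This is an illustration under assumptions: the class name `MaskStore` and its methods are hypothetical, the mask is applied here to a plain integer, whereas in the framework the multiplication would be carried out on the ciphertext, and a strictly positive mask is assumed.

```python
import secrets
from typing import Dict


class MaskStore:
    """Keeps single-use masking factors in memory only, keyed by session id."""

    def __init__(self, bits: int = 128) -> None:
        self._bits = bits
        self._masks: Dict[str, int] = {}

    def new_session(self) -> str:
        """Create a session id and draw a fresh positive mask from the OS CSPRNG."""
        sess_id = secrets.token_hex(16)
        # Uniform over 1 .. 2**bits - 1, so the mask is never zero.
        self._masks[sess_id] = secrets.randbelow(2 ** self._bits - 1) + 1
        return sess_id

    def mask(self, sess_id: str, value: int) -> int:
        """Multiply an integer statistic by the session's mask."""
        return self._masks[sess_id] * value

    def consume(self, sess_id: str) -> int:
        """Return the mask once and forget it, so it cannot be reused."""
        return self._masks.pop(sess_id)


store = MaskStore()
sid = store.new_session()
masked = store.mask(sid, 25)   # what the decrypting party would see
rho = store.consume(sid)       # SCC retrieves the mask once for verification
assert masked == rho * 25
```

Because `consume` removes the entry, a second lookup for the same session raises `KeyError`, which mirrors the one-proof-session lifetime described above.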

Thus, the SCC fulfills two crucial roles: (1) it performs accurate health indicator computation in the encrypted domain using homomorphic operations and (2) it injects controlled randomness to enforce the principle of computability without observability.

4.3. Result Decryption and Proof Generation in CVC

After the SCC completes encrypted computation and applies random masking, the resulting ciphertext $\tilde{c}$ is transmitted to the compliance verification client (CVC). The CVC holds the private key $sk$, which enables decryption of the masked ciphertext. Let

$$w = \mathrm{Dec}_{sk}(\tilde{c}) = \rho \cdot v,$$

where $v$ denotes the true health indicator and $\rho$ is the random masking factor known only to the SCC.

The masking prevents the CVC from inferring the unmasked result $v$, and the decrypted value $w$ itself is never disclosed directly. Instead, the CVC generates a cryptographic commitment to $w$. Using a Pedersen-style scheme, the commitment is computed as

$$C = g^{w} h^{r},$$

where $g$ and $h$ are independent generators of a cyclic group $G$, and $r$ is a random blinding factor. This commitment is both hiding (concealing $w$) and binding (preventing equivocation).
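A Pedersen-style commitment can be tried out with toy parameters. The sketch below is for intuition only: the 11-bit group is far too small to be secure, the constants `P`, `Q`, `G`, `H` and the function names are chosen here for illustration, and in a real deployment the generators must be set up so that nobody knows the discrete logarithm of one with respect to the other.

```python
import secrets

# Toy parameters, far too small for real use: P = 2Q + 1 with P and Q prime.
P = 2039
Q = 1019
G = 4   # 2**2 mod P, generates the subgroup of order Q
H = 9   # 3**2 mod P, a second generator of the same subgroup


def commit(w: int, r: int) -> int:
    """Pedersen commitment C = G^w * H^r mod P."""
    return (pow(G, w % Q, P) * pow(H, r % Q, P)) % P


def open_ok(c: int, w: int, r: int) -> bool:
    """Check that (w, r) is a valid opening of commitment c."""
    return c == commit(w, r)


w = 123                      # illustrative masked value
r = secrets.randbelow(Q)     # fresh blinding factor for this commitment
c = commit(w, r)

assert open_ok(c, w, r)          # the correct opening is accepted
assert not open_ok(c, w + 1, r)  # a different value does not open c
# Commitments combine additively in the exponent.
assert (commit(10, 3) * commit(20, 4)) % P == commit(30, 7)
```

Drawing a new `r` for every commitment is what makes two commitments to the same value look unrelated.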

Subsequently, the CVC constructs a zero-knowledge range proof showing that the hidden value $w$ lies within the scaled compliance interval:

$$w \in [\rho L,\ \rho U],$$

where $[L, U]$ is the original compliance threshold defined by healthcare providers. The range proof guarantees that the underlying health indicator $v$ is within $[L, U]$, while concealing its exact value.

The zero-knowledge proof can be instantiated using Bulletproofs or other range proof protocols. Formally, the proof object is denoted as $\pi$.

Finally, the CVC returns the tuple $(C, \pi, \mathrm{sess\_id})$ to the SCC. This tuple binds the proof to a specific session and prevents replay attacks. Importantly, the CVC never reveals the actual value of $w$ or $r$, ensuring strict preservation of privacy.

4.4. Compliance Verification and Feedback Loop

After the CVC generates the commitment and range proof, these objects are transmitted back to the SCC for verification. The SCC at this stage does not perform any decryption but instead validates the correctness of the zero-knowledge proof against the pre-established compliance interval.

Formally, the SCC receives the tuple

$$(C, \pi, \mathrm{sess\_id}),$$

where $C$ is the Pedersen commitment to the masked value $w$, $\pi$ is the zero-knowledge proof, and sess_id binds the proof to a unique session.

The SCC checks the following two conditions:

Proof validity: $\mathrm{Verify}(C, \pi) = 1$, ensuring that the zero-knowledge proof is cryptographically valid.

Range consistency: $w \in [\rho L,\ \rho U]$, where the interval endpoints are computed using the random masking factor $\rho$ generated by the SCC and the original compliance thresholds $L$ and $U$. This check guarantees that the unmasked health indicator $v$ falls within the compliance interval.

If both conditions are satisfied, the SCC outputs a positive verdict:

$$\mathrm{Verdict} = \mathrm{Pass}.$$

Otherwise, it returns

$$\mathrm{Verdict} = \mathrm{Fail}.$$

This binary feedback is then communicated to healthcare providers through the system interface. Importantly, the SCC and external stakeholders only learn the compliance status (Pass/Fail), not the actual value of $v$ or the raw data of any individual.
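Why a check on the masked value says something about the unmasked indicator can be seen in one line. The notation is assumed here for illustration: $v$ for the unmasked indicator, $\rho$ for the SCC’s masking factor, $w = \rho v$ for the masked value, and $[L, U]$ for the original compliance interval. The step also assumes $\rho > 0$; a negative factor would reverse the inequalities.

$$\rho > 0 \;\Longrightarrow\; \big(\rho L \le w \le \rho U \iff \rho L \le \rho v \le \rho U \iff L \le v \le U\big).$$

Under these assumptions, a valid range proof over the scaled interval is equivalent to compliance of the unmasked indicator, up to whatever rounding is applied before the proof is generated.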

Through this process, the system closes the loop between encrypted computation and compliance reporting, achieving the principle of verifiability without disclosure. Healthcare providers thus obtain actionable feedback, while patients’ privacy and sensitive health metrics remain protected.

The proposed framework simultaneously achieves privacy preservation, computational correctness, verifiability, and auditability. Raw sensor data never leave the patient’s device in plaintext; by the semantic security of homomorphic encryption, ciphertexts reveal no exploitable information to the SCC or to any external observer. The homomorphic operations carried out at the SCC preserve the semantics of plaintext computations, ensuring that encrypted summation, averaging, and other computations yield results consistent with those obtained in the clear, up to the precision limits of CKKS encoding. To enable compliance checks without disclosing metric values, the framework integrates commitments and range proofs: the zero-knowledge property guarantees that a verifier learns nothing beyond the fact that the masked result lies within the designated interval, preventing leakage of actual values. Robustness against inference is further enhanced by the introduction of random masking factors, which decouple the decrypted masked result from the underlying indicator, leaving the attacker with residual uncertainty that remains close to maximal entropy. Finally, every compliance verification is bound to session identifiers and timestamps, forming an immutable audit log. This mechanism ensures that all verdicts can be traced and certified without exposing sensitive intermediate data, thereby offering a trustworthy, privacy-preserving, and verifiable infrastructure for IoT-based public health monitoring. Collectively, these properties not only guarantee cryptographic soundness but also align with SDG 10 (Reduced Inequalities) by ensuring equitable participation of diverse populations in secure health data sharing.
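One common way to make an audit log tamper-evident is to chain entries by hash, so that altering an earlier verdict invalidates every later entry. The sketch below illustrates that idea; the paper does not state how its log is realized, so the hash chaining, the class name `AuditLog`, and the field names are all assumptions.

```python
import hashlib
import json
import time
from typing import Dict, List, Optional

GENESIS = "0" * 64  # placeholder hash preceding the first entry
FIELDS = ("sess_id", "verdict", "timestamp", "prev")


def _digest(body: Dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only list of verdicts, each bound to the hash of its predecessor."""

    def __init__(self) -> None:
        self._entries: List[Dict] = []

    def append(self, sess_id: str, verdict: str,
               timestamp: Optional[float] = None) -> Dict:
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        body = {
            "sess_id": sess_id,
            "verdict": verdict,
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev": prev,
        }
        entry = dict(body, hash=_digest(body))
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain links are intact."""
        prev = GENESIS
        for entry in self._entries:
            body = {key: entry[key] for key in FIELDS}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("session-1", "Pass", timestamp=1.0)
log.append("session-2", "Fail", timestamp=2.0)
assert log.verify()
```

Only the session identifier, verdict, and timestamp are stored, which matches the goal of certifying verdicts without retaining intermediate values.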

5. Performance Evaluation

5.1. Simulation Settings

All experiments are conducted in a controlled simulation environment to evaluate the feasibility and effectiveness of the proposed privacy-preserving framework. The system integrates homomorphic encryption for secure computation of health metrics and zero-knowledge range proofs for compliance verification. In addition to technical validation, the experiments illustrate how secure and verifiable data processing can support sustainable healthcare infrastructures, aligning with SDG 3 (Good Health and Well-being) through trustworthy monitoring and SDG 10 (Reduced Inequalities) by enabling inclusive participation without compromising privacy.

For encryption, we adopt the CKKS scheme implemented in Microsoft SEAL. The encryption parameters are configured as follows: polynomial modulus degree of 8192, coefficient modulus chain

, and scale factor

. These settings balance precision, performance, and noise growth. During ciphertext operations (addition, multiplication, squaring, relinearization, and rescaling), level and scale are explicitly aligned to ensure numerical stability and prevent decryption errors. The main simulation parameters used in the experiments are summarized in Table 3.
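The scale alignment described above can be illustrated with a plain fixed-point analogy. The sketch below is not CKKS and does not use Microsoft SEAL; it only mimics how a value carries a scale, how multiplication squares that scale, and why a product must be rescaled before it can be added to a freshly encoded value. The scale `DELTA = 2**40` and all function names are assumptions chosen for illustration, not the configuration used in the experiments.

```python
from dataclasses import dataclass

DELTA = 2 ** 40  # assumed scale factor, for illustration only


@dataclass(frozen=True)
class FixedPoint:
    """Toy stand-in for an encoded value: an integer plus the scale it carries."""
    value: int
    scale: int


def encode(x: float, scale: int = DELTA) -> FixedPoint:
    # Real numbers are stored as rounded integers at the given scale.
    return FixedPoint(round(x * scale), scale)


def decode(a: FixedPoint) -> float:
    return a.value / a.scale


def add(a: FixedPoint, b: FixedPoint) -> FixedPoint:
    # Addition is only meaningful when both operands share the same scale.
    if a.scale != b.scale:
        raise ValueError("scale mismatch: rescale before adding")
    return FixedPoint(a.value + b.value, a.scale)


def multiply(a: FixedPoint, b: FixedPoint) -> FixedPoint:
    # The product carries the product of the scales (DELTA**2 for fresh inputs).
    return FixedPoint(a.value * b.value, a.scale * b.scale)


def rescale(a: FixedPoint, divisor: int = DELTA) -> FixedPoint:
    # Divide value and scale by the same factor, rounding to the nearest integer.
    return FixedPoint((a.value + divisor // 2) // divisor, a.scale // divisor)


x, y = encode(3.5), encode(-2.25)
product = rescale(multiply(x, y))      # back at scale DELTA
total = add(product, encode(1.0))      # scales now match, so addition is valid
```

In this analogy, adding `multiply(x, y)` directly to `x` raises an error because the scales differ, which is the kind of mismatch that explicit alignment is meant to avoid.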

For verification, Pedersen commitments and Bulletproofs range proofs are implemented in a Rust dynamic library linked into the C++ simulation environment. Because the commitment scheme operates on integers, each decrypted (masked) numerical result is rounded to the nearest integer before proof generation. A fresh random blinding factor r is generated for each commitment to maintain indistinguishability and resist inference attacks.
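As a rough illustration of the commitment step, the following sketch implements a Pedersen-style commitment in a deliberately tiny multiplicative group rather than on an elliptic curve. The parameters `P`, `Q`, `G`, `H` and every function name are hypothetical and insecure (the discrete logarithm of `H` with respect to `G` is trivial to find at this size); the experiments described here use a Rust library instead. The sketch only shows the rounding step, the role of a fresh blinding factor, and the additive homomorphism.

```python
import secrets

# Toy parameters (assumed): P = 2*Q + 1 with P and Q prime. G and H are squares
# modulo P, so both generate the subgroup of prime order Q.
P = 2039
Q = 1019
G = 4
H = 9


def commit(value: int, blind: int) -> int:
    """Return G^value * H^blind mod P."""
    return (pow(G, value % Q, P) * pow(H, blind % Q, P)) % P


def fresh_blind() -> int:
    # A new blinding factor is drawn for every commitment.
    return secrets.randbelow(Q)


def commit_rounded(x: float):
    """Round a numerical result to an integer, then commit with a fresh blind."""
    value = round(x)
    blind = fresh_blind()
    return commit(value, blind), value, blind


def verify_opening(commitment: int, value: int, blind: int) -> bool:
    return commitment == commit(value, blind)


c, v, r = commit_rounded(812.4)
assert verify_opening(c, v, r)
```

Two commitments to the same value under different blinds differ, and the product of two commitments opens to the sum of the values and the sum of the blinds. In this toy group, values wrap modulo `Q`, so it is suitable only for demonstration.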

Simulated health data represent physiological indicators such as heart rate. Samples are drawn from Gaussian distributions centered near realistic values (e.g., 70 bpm) with adjustable standard deviations to reflect variability. Each time window corresponds to one patient, with

samples per window representing short-term fluctuations. Statistical indicators are computed homomorphically as follows:

where

is the local mean.

corresponds to

in

Section 2. For clarity, masking factors

are omitted in experimental plots without affecting interpretation.

All experiments are implemented in C++ using Microsoft SEAL v4.1 for encryption, Rust Bulletproofs v3.0 for proof generation and verification, and Python 3.9 (matplotlib v3.7) for visualization. This setup ensures consistency across modules and supports reproducible evaluation.

5.2. Compliance Verification of Encrypted Health Indicators

This experiment verifies whether encrypted-domain computations can be correctly validated against compliance thresholds without revealing actual values. Heart rate data are simulated over short windows, with samples per window and total windows. Each is computed homomorphically, and instead of revealing numeric results, a zero-knowledge proof of is generated. The thresholds represent variance-like sums consistent with physiological variations of approximately , , and bpm after scaling. Thus, although the encrypted-domain values appear large, they correspond to realistic clinical ranges.

In deployment, thresholds can be personalized per patient. Physicians define clinically suitable ranges during registration, which are incorporated into the encrypted and masked computation pipeline. Since only masked comparisons are performed, personalized thresholds reveal no raw information.

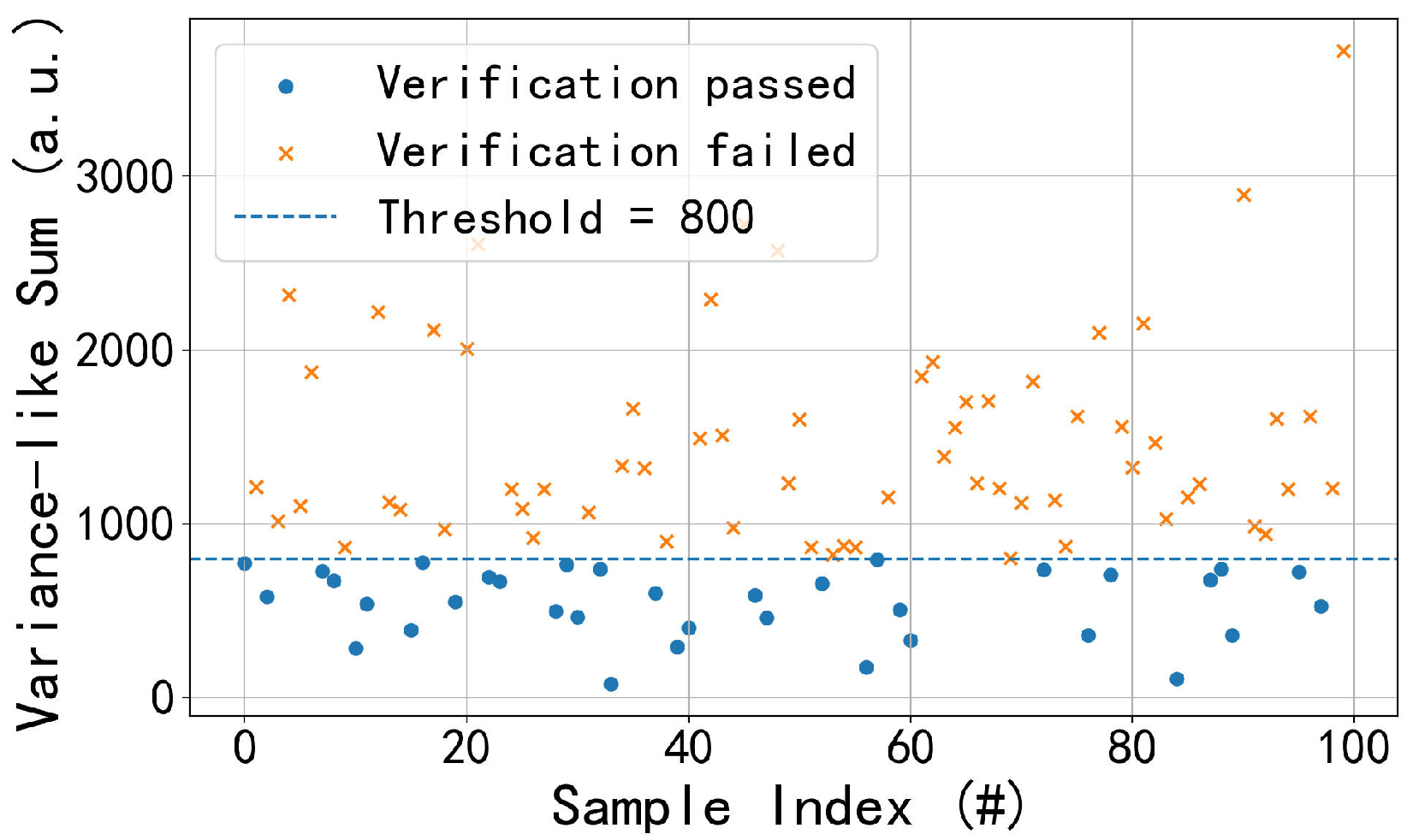

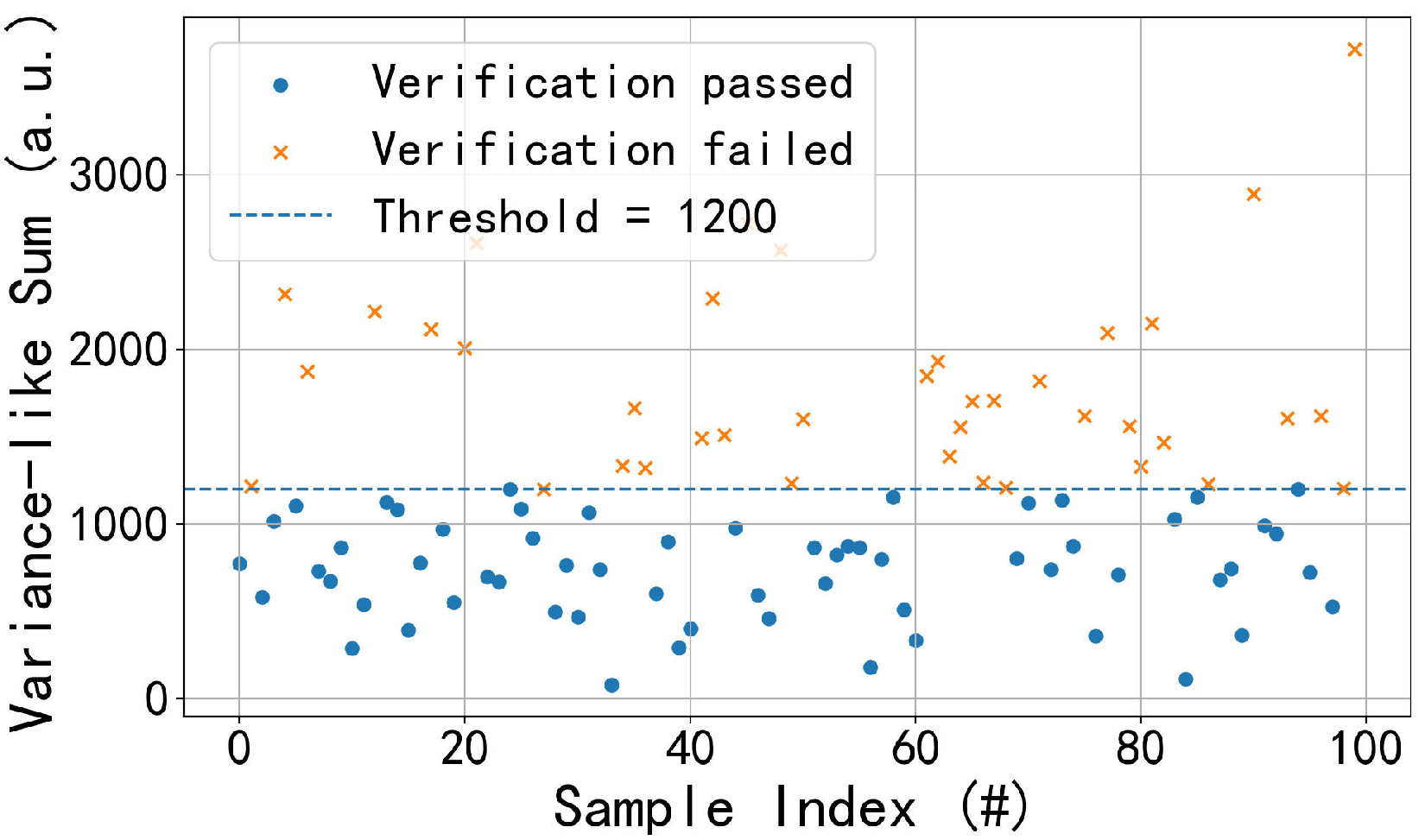

The results in Figure 2, Figure 3 and Figure 4 show that as the threshold increases, the acceptance rate rises. Under

and sample size

m, the normalized variance satisfies

, giving

Substituting

yields

. Hence, thresholds of 400, 800, and 1200 represent progressively relaxed criteria. Lower thresholds filter out most samples, while higher ones increase acceptance, demonstrating adjustable compliance verification suitable for different medical contexts.
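The relationship between threshold and acceptance rate can be reproduced in plaintext with a short simulation. The sketch below assumes that the indicator is the sum of squared deviations from the window mean, which is one reading of the "variance-like sums" mentioned above; the window size `m = 32`, standard deviation `sigma = 5.0`, and number of windows are illustrative assumptions, not the values used in the experiments. For independent Gaussian samples, this sum divided by sigma squared follows a chi-square distribution with m − 1 degrees of freedom, so its expected value is (m − 1) times sigma squared, which is 775 under these assumed values.

```python
import random


def sum_sq_dev(samples):
    """Sum of squared deviations from the window mean (assumed indicator)."""
    mean = sum(samples) / len(samples)
    return sum((x - mean) ** 2 for x in samples)


def acceptance_rates(thresholds, m=32, sigma=5.0, mu=70.0, windows=2000, seed=0):
    """Simulate heart-rate windows and return the pass rate per threshold."""
    rng = random.Random(seed)
    indicators = [
        sum_sq_dev([rng.gauss(mu, sigma) for _ in range(m)])
        for _ in range(windows)
    ]
    rates = {
        t: sum(s <= t for s in indicators) / windows
        for t in thresholds
    }
    return rates, indicators


rates, indicators = acceptance_rates([400, 800, 1200])
for threshold, rate in rates.items():
    print(f"threshold {threshold}: acceptance rate {rate:.3f}")
```

Because the accepted sets are nested, the pass rate can only grow as the threshold is relaxed, which matches the qualitative trend reported for the three thresholds.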

5.3. Inference Resistance of Commitments

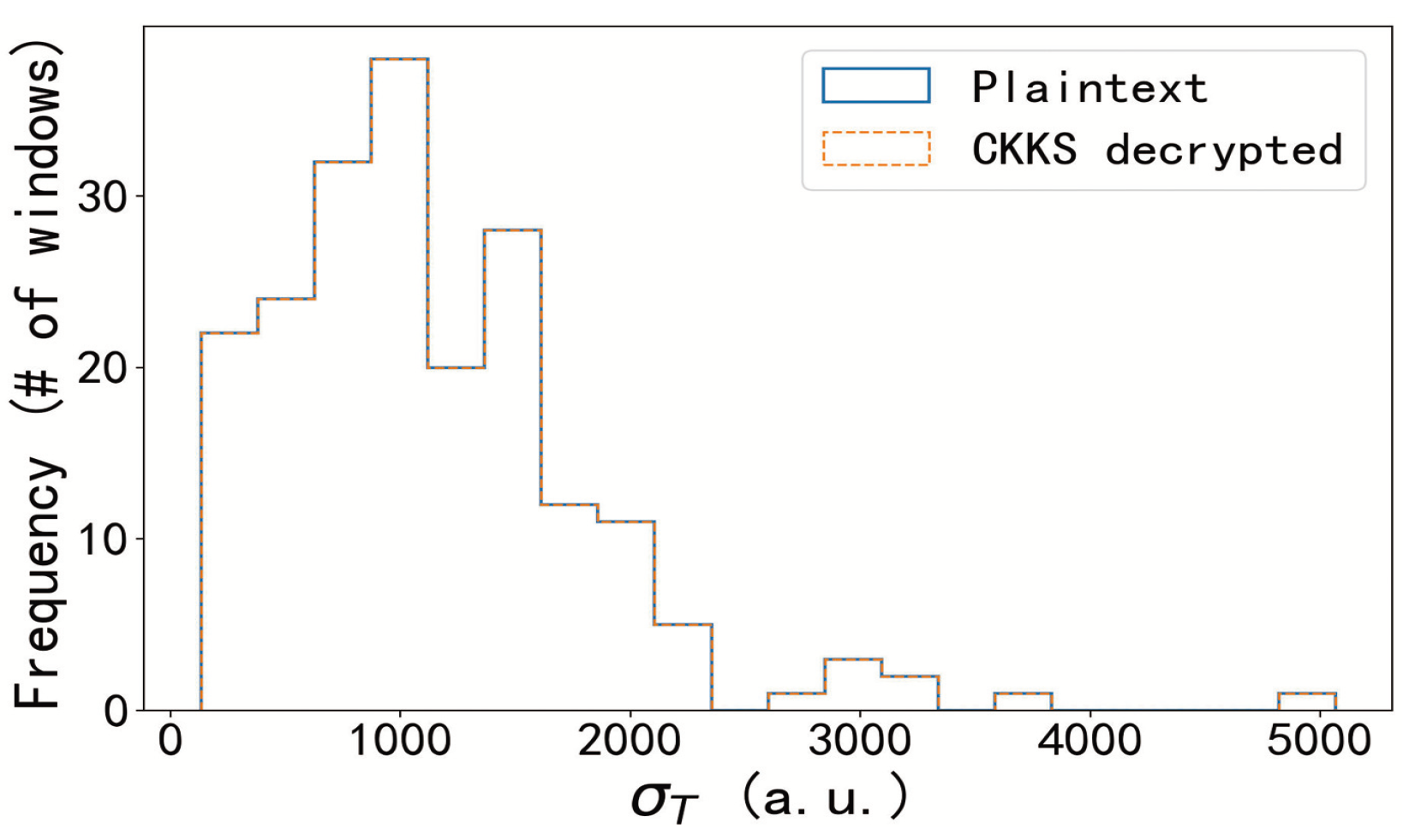

This experiment evaluates whether commitments leak information about underlying health data. The attacker model assumes that an adversary tries to distinguish between commitments to a fixed value and those for random values. Commitments are generated using the Pedersen scheme with fresh random blinds r. A logistic regression classifier is trained on the raw bytes of commitments to test learnability. Classification accuracy, ROC, and AUC metrics are recorded. The Shannon entropy of commitment encodings is also analyzed across increasing sample sizes. Finally, encrypted and plaintext computations of are compared to confirm the absence of systematic bias.

Results confirm the inference resistance of commitments. The logistic regression attack achieved an accuracy of around 0.53, close to random guessing (Figure 5). The ROC curve gave an AUC of 0.53, consistent with theoretical indistinguishability. Entropy analysis (Figure 6) shows per-byte entropy rising from about 5.4 to 7.4 bits and stabilizing near that level. The slight deviation from the ideal 8 bits stems from elliptic curve point compression, not information leakage. Finally, Figure 7 shows nearly identical distributions for plaintext and decrypted values, confirming that encrypted computations introduce no statistical bias.
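For readers who wish to reproduce the entropy analysis, a per-byte Shannon entropy estimate can be computed as sketched below. Uniformly random bytes from `os.urandom` are used here as a hypothetical stand-in for commitment encodings, and the function names are illustrative. Note that this plug-in estimate cannot exceed the base-2 logarithm of the number of bytes observed, so it necessarily starts low for small samples and rises as more data are included.

```python
import math
import os
from collections import Counter


def byte_entropy(data: bytes) -> float:
    """Plug-in Shannon entropy of the byte distribution, in bits per byte."""
    if not data:
        return 0.0
    n = len(data)
    counts = Counter(data)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def entropy_curve(sizes):
    """Estimate per-byte entropy of random data at increasing sample sizes."""
    return [(n, byte_entropy(os.urandom(n))) for n in sizes]


for size, bits in entropy_curve([32, 256, 4096, 65536]):
    print(f"{size:>6} bytes: {bits:.2f} bits/byte")
```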

5.4. Efficiency and Sustainability Discussion

In addition to correctness and privacy, we assess efficiency, scalability, and sustainability. These are key for real-world IoT health applications and directly relate to SDG 3 and SDG 10 by ensuring lightweight and inclusive secure processing.

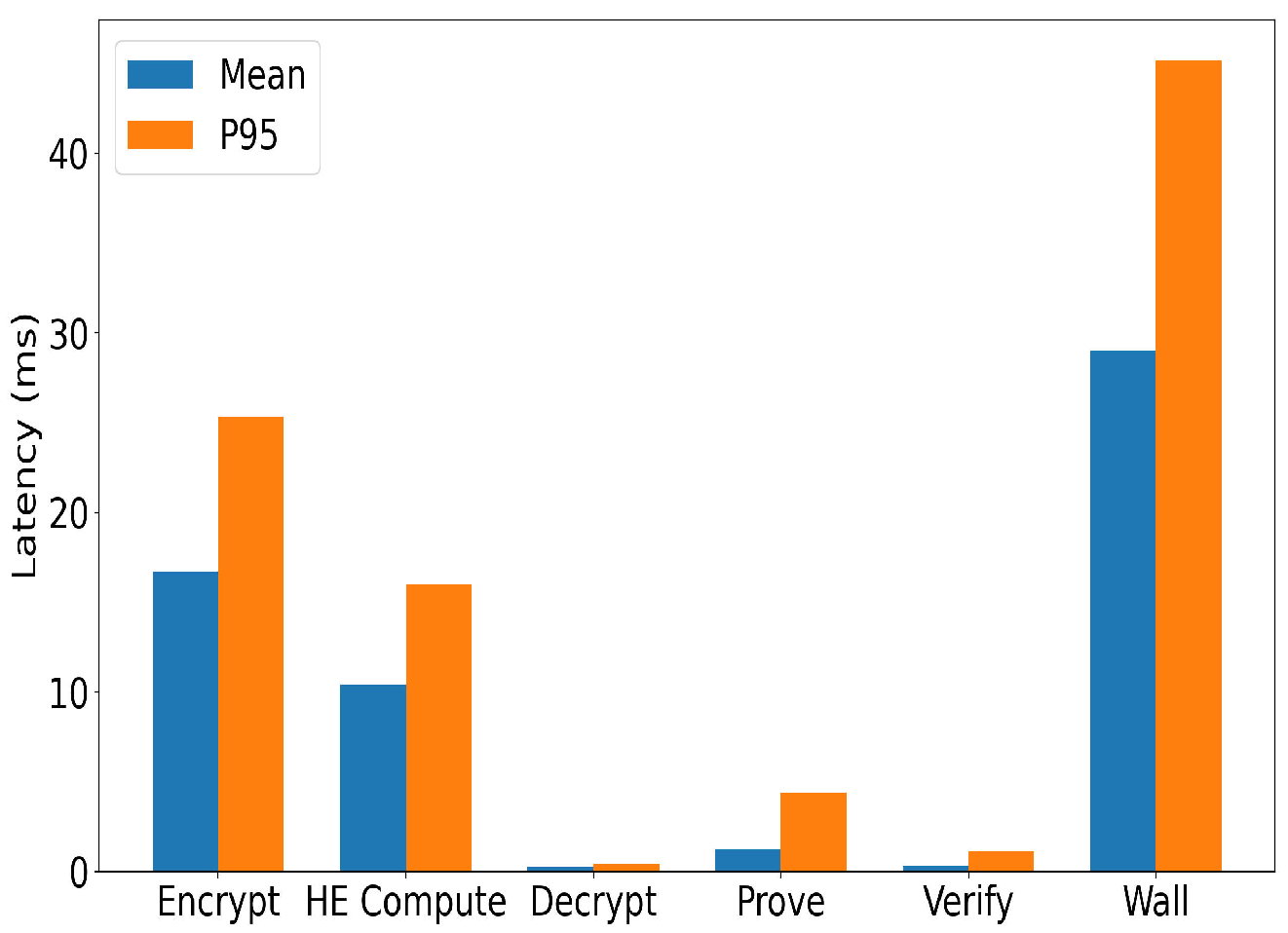

Figure 8 shows the latency for each processing stage—encryption, computation, decryption, proof generation, and verification. Both mean and 95th percentile values remain within tens of milliseconds, with total per-window time under 50 ms, demonstrating near real-time capability.
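The per-stage statistics reported here (mean and 95th percentile) can be gathered with a small timing harness such as the one sketched below. The function names are hypothetical, the nearest-rank percentile definition is an assumption (the source does not state which definition was used), and the timed callable is a placeholder for an actual encryption, computation, or proof stage.

```python
import time
from statistics import mean


def percentile(samples, p: int) -> float:
    """Nearest-rank percentile for an integer p in 1..100."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Integer ceiling of p * n / 100 avoids floating-point rounding issues.
    rank = max(1, (p * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def time_stage(fn, repeats: int = 100):
    """Run fn repeatedly and return per-call latencies in milliseconds."""
    latencies = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies_ms):
    return {
        "mean": mean(latencies_ms),
        "p50": percentile(latencies_ms, 50),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
    }


# Placeholder workload standing in for one processing stage.
stats = summarize(time_stage(lambda: sum(range(1000)), repeats=50))
```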

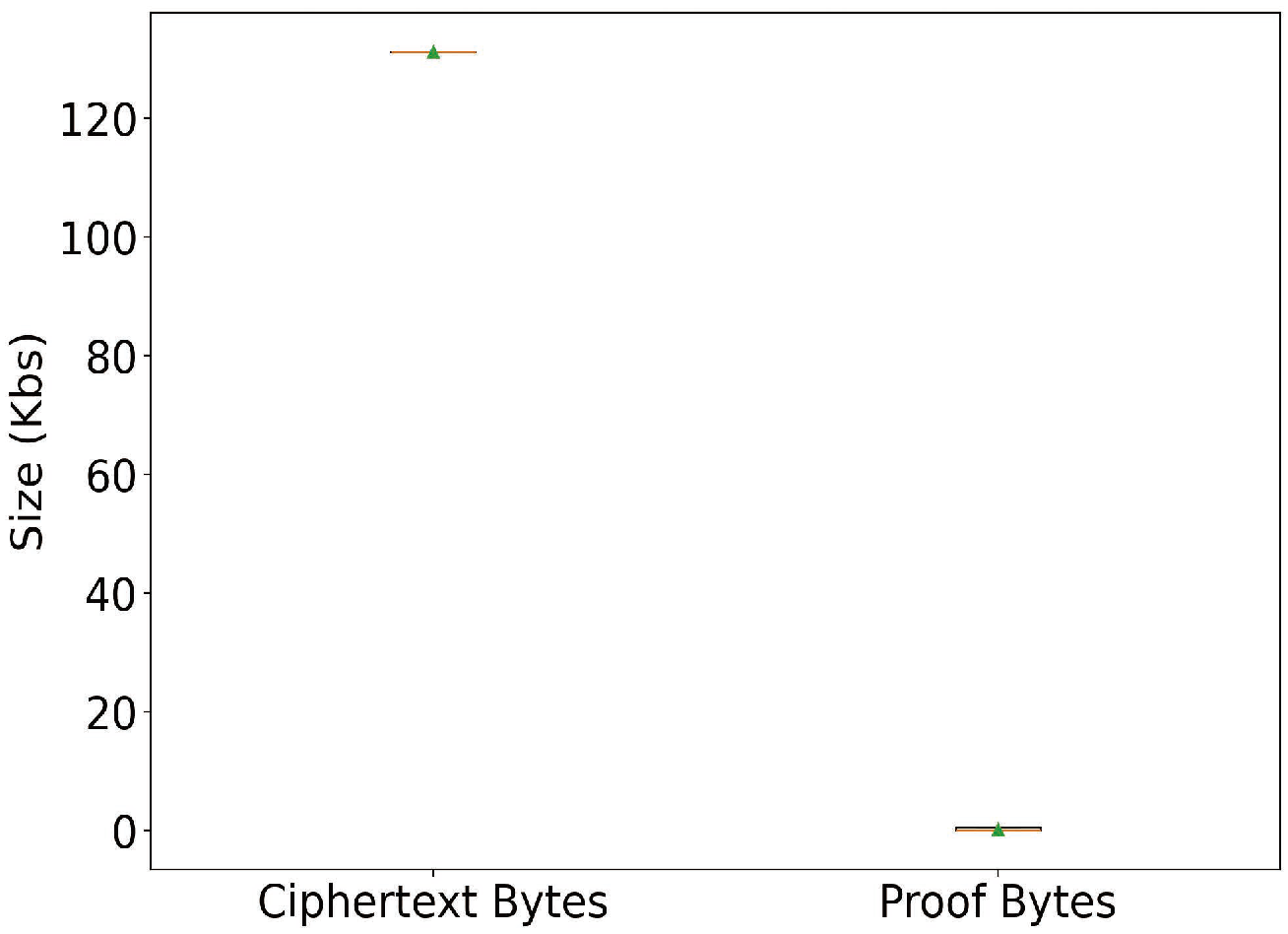

Figure 9 shows that ciphertexts average around 120 KB while proofs remain about 2 KB, indicating minimal verification overhead.

Figure 10 shows compliance pass rates under different thresholds, illustrating flexible and tunable criteria for different monitoring contexts.

Overall, results show balanced performance in latency, scalability, and privacy. Commitments remain indistinguishable from random data, ensuring strong inference resistance. By combining low overhead with flexible verification, the framework supports trustworthy and sustainable digital health monitoring.

Compared with existing methods, MPC-based approaches [24,25] offer strong privacy but require heavy synchronization and communication, making sub-50 ms latency difficult (Figure 8). Lightweight cryptography [27,29] protects individual devices but still transmits raw data. Differential privacy methods [22,23] reduce inference risk but compromise accuracy, degrading compliance verification, as in Figure 10. Fully homomorphic encryption in federated learning [19,20] preserves privacy but often lacks verifiability. Blockchain frameworks [32,33] improve auditability but incur storage and energy costs.

In contrast, our framework never exposes raw or intermediate data beyond the originating sensor. Compact zero-knowledge proofs (2 KB, Figure 9) enable verifiable compliance with negligible communication cost. This combination achieves a sustainable balance among privacy, efficiency, and scalability, providing a practical pathway for SDG-aligned public health monitoring.

The reported latency reflects a controlled desktop-class simulation and serves as a baseline. In practice, latency variance will depend on device heterogeneity and network conditions. Future work will analyze P50/P95/P99 latency distributions across real deployment scenarios.

6. Conclusions

This paper presented a framework that combines homomorphic encryption and zero-knowledge range proofs for privacy-preserving health indicator evaluation in IoT-enabled public health monitoring. By separating the roles of sensor nodes, the secure computation core (SCC), and the compliance verification client (CVC), the system achieves verifiability without disclosure, providing healthcare providers with compliance results while safeguarding sensitive data.

Experiments validated both accuracy and privacy. Encrypted computations of variance-based metrics matched plaintext baselines, and compliance verification behaved as expected: stricter thresholds rejected more cases, while relaxed ones increased acceptance. These results demonstrate that the proposed framework offers a practical, auditable, and secure foundation for digital health applications, enabling cross-institutional collaboration without revealing raw data.

The framework also contributes to the United Nations Sustainable Development Goals: SDG 3 by enabling secure and sustainable health monitoring, SDG 10 by promoting equitable access to privacy-preserving technologies, and SDG 16 by strengthening trust and transparency in health data governance. Future work will extend the system to larger datasets, more complex indicators, and real-world deployment.

7. Limitations

Although the proposed framework provides strong privacy guarantees and verifiable compliance, several limitations remain. First, true physiological values are not stored; only encrypted or masked representations exist. This makes the framework unsuitable for applications requiring long-term historical data, such as chronic disease tracking or retrospective analysis. Second, while the system efficiently handles basic indicators (e.g., mean and variance), extending encrypted computations to complex or multimodal features increases computational cost. Third, the strict privacy design ensures that only sensors can access raw data, which complicates scenarios where clinicians need direct signal access for diagnosis, requiring parallel conventional pipelines. Finally, scalability to ultra-low-power devices may be limited by the computational load of homomorphic encryption and proof generation, though batching and hardware acceleration can mitigate this overhead. These limitations highlight directions for further optimization toward broader real-world adoption.