1. Introduction

Wind energy represents a cornerstone in the global transition toward sustainable and low-carbon power systems due to its environmental benefits, economic competitiveness, and capacity to mitigate greenhouse gas emissions. Over the past decades, installed wind power capacity has expanded rapidly worldwide, reflecting both technological progress and the urgency of reducing reliance on fossil fuels [1]. In Europe, wind power has become central to achieving climate and energy objectives through large-scale deployment and supportive policy frameworks such as the European Green Deal and the Fit for 55 package [2,3]. Spain ranks among the leading countries in this field, with production levels comparable to global benchmarks such as Germany and India. However, the inherent variability of wind resources poses persistent integration challenges, as forecasting errors can compromise grid stability, increase operational costs, and limit the sustainable deployment of renewable energy [4,5].

Accurate short-term wind power forecasting is therefore essential for the reliable integration of renewables into modern power systems and for the development of sustainable smart grids. Despite significant progress, existing approaches still face difficulties in capturing the nonlinear and long-range temporal dependencies that characterize wind power generation. Traditional physical and statistical models, such as numerical simulations and Autoregressive Integrated Moving Average (ARIMA)-family methods, offer efficient short-horizon forecasts but are constrained by assumptions of linearity and stationarity, which limits their effectiveness in highly dynamic environments [4,6,7]. These limitations motivate the exploration of advanced data-driven approaches capable of delivering accurate and robust forecasts to enhance energy system sustainability.

Machine learning (ML) and deep learning (DL) methods have been increasingly explored for wind power forecasting, offering notable improvements over traditional statistical approaches. Random Forests, Gradient Boosting, and hybrid decomposition-based models have demonstrated competitive accuracy by learning from historical data without rigid assumptions [4,8]. Nevertheless, these methods face practical limitations, as their performance often depends on large, high-quality datasets and extensive feature engineering, while recurrent and convolutional networks, despite their advances, still struggle to fully capture the long-range sequential dependencies critical for wind power dynamics [9,10]. Recent developments in attention mechanisms and Transformer architectures provide a promising alternative by explicitly modeling long-range temporal dependencies. Early applications in wind forecasting confirm their capacity to leverage complex temporal patterns, but most rely on high-frequency, turbine-level meteorological inputs [11]. This highlights a gap: streamlined forecasting frameworks based only on aggregated historical generation, which reflect the data actually available in many national power systems, remain underexplored. Addressing this gap is important to improve predictive performance while supporting the sustainable integration of wind energy into smart grids.

In light of the challenges discussed above, this study pursues two primary objectives. The first is to develop a Transformer-based architecture for short-term wind power forecasting using historical generation data as input. By leveraging the self-attention mechanism, the approach aims to capture long-range and multi-scale temporal dependencies inherent in aggregated power series, providing a data-efficient solution suitable for operational contexts with limited access to high-resolution meteorological variables. The second objective is to apply this framework to a case study of hourly wind power generation in Spain during the 2020–2024 period, using data reported by the European Network of Transmission System Operators for Electricity (ENTSO-E). The performance of the Transformer model is benchmarked against two widely adopted DL architectures, LSTM and GRU networks, in order to assess predictive accuracy and robustness under realistic system constraints. To guide this analysis, the following research questions (RQs) are formulated:

RQ1: Can Transformer architectures achieve higher accuracy than recurrent models in short-term wind power forecasting when only historical generation data are available?

RQ2: How do input sequence length and key architectural choices of the Transformer model affect forecasting performance?

The remainder of this paper is structured as follows. Section 2 provides a background on the Spanish wind power sector and reviews existing forecasting methodologies, highlighting the specific gaps this study addresses. Section 3 introduces self-attention and Transformer models for time series prediction. Section 4 details the methodological design, including data preprocessing, model architecture, and training strategy. Section 5 reports the empirical results, focusing on the comparative performance of Transformer, LSTM, and GRU models under different input configurations. Section 6 discusses the findings. Finally, Section 7 summarizes the main findings, answers the research questions, and discusses avenues for future research in the context of sustainable energy systems.

3. Self-Attention and Transformer Models in Time Series Prediction

The Transformer architecture is a sequence modeling framework that eliminates the need for recurrence and convolution, relying exclusively on self-attention mechanisms to capture contextual dependencies. This design allows the model to effectively learn relationships between all elements within a sequence, independent of their position, and has proven highly scalable and effective across a wide range of sequence prediction tasks [27].

Formally, let $X \in \mathbb{R}^{T \times d}$ denote an input sequence of length $T$, where each element is represented by a $d$-dimensional feature vector [28]. To compute self-attention, the input sequence is linearly projected into three distinct representations: the query matrix $Q$, the key matrix $K$, and the value matrix $V$:

$$Q = XW^{Q}, \qquad K = XW^{K}, \qquad V = XW^{V},$$

where $W^{Q}, W^{K}, W^{V} \in \mathbb{R}^{d \times d_k}$ are trainable parameters, and $d_k$ is the latent dimension. Scaled dot-product attention is then defined as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V.$$

This operation produces a weighted combination of the values, where weights reflect the learned relevance of each position in the sequence. The result is projected back into the original feature space:

$$Z = \mathrm{Attention}(Q, K, V)\,W^{O},$$

with $W^{O} \in \mathbb{R}^{d_k \times d}$. This allows residual connections and normalization to be applied in subsequent layers. To further enrich the model’s representational capacity, the Transformer introduces the multi-head attention mechanism. In this case, instead of computing a single attention operation, the model projects the input into multiple subspaces and performs self-attention independently in each [29]. Specifically, for $h$ attention heads, the input is transformed using $h$ distinct sets of projection matrices:

$$\mathrm{head}_i = \mathrm{Attention}\big(XW_i^{Q},\, XW_i^{K},\, XW_i^{V}\big), \qquad i = 1, \dots, h,$$

where each $W_i^{Q}, W_i^{K}, W_i^{V} \in \mathbb{R}^{d \times d_h}$, with $d_h = d/h$. These heads capture different types of dependencies across the sequence. The outputs of all heads are then concatenated and linearly transformed:

$$\mathrm{MultiHead}(X) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\,W^{\mathrm{MH}},$$

where $W^{\mathrm{MH}} \in \mathbb{R}^{h d_h \times d}$ is another learnable matrix. This structure enables the model to jointly attend to information from diverse representation subspaces at different positions, increasing the expressiveness and flexibility of the attention mechanism without increasing its computational complexity. The use of multi-head attention has proven to capture complex, long-range patterns in sequences, particularly in tasks such as time series forecasting where both short-term fluctuations and long-term trends coexist [30].
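As a minimal sketch of the operations described above, the following pure-Python example computes scaled dot-product attention and a multi-head variant on a toy sequence. The sizes (`T = 6`, `d = 4`, `h = 2`), the random weights, and all function names are illustrative assumptions and do not correspond to the configurations or code used in this study.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention; returns (output, attention weights)."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

def random_matrix(rows, cols, rng):
    """Toy stand-in for a trained projection matrix."""
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

def multi_head_attention(x, heads, w_out):
    """heads: list of (W_q, W_k, W_v) triples; w_out: final projection."""
    outputs = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))[0]
               for wq, wk, wv in heads]
    # Concatenate the head outputs along the feature axis, then project.
    concat = [sum((out[t] for out in outputs), []) for t in range(len(x))]
    return matmul(concat, w_out)

rng = random.Random(0)
T, d, h = 6, 4, 2          # toy sizes, not the paper's settings
d_h = d // h               # per-head dimension
x = random_matrix(T, d, rng)
heads = [tuple(random_matrix(d, d_h, rng) for _ in range(3)) for _ in range(h)]
w_out = random_matrix(h * d_h, d, rng)

z = multi_head_attention(x, heads, w_out)
wq0, wk0, wv0 = heads[0]
_, weights = attention(matmul(x, wq0), matmul(x, wk0), matmul(x, wv0))
print(len(z), len(z[0]))   # output keeps the input shape (T, d)
```

Each row of `weights` sums to one, so every output position is a convex combination of the value vectors, which is the property that attention maps visualize.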

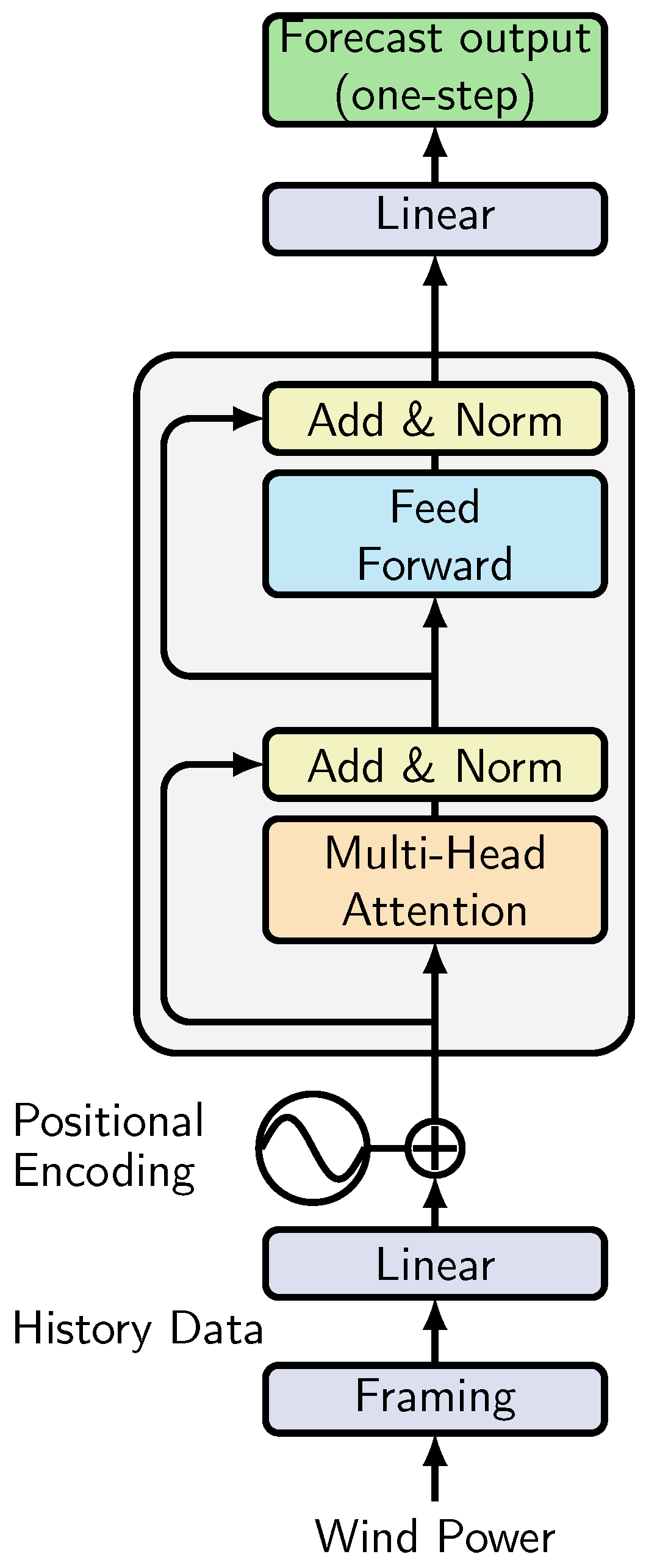

For the present study, which focuses on single-step forecasting, only the encoder stack of the Transformer is employed. Each encoder layer consists of multi-head self-attention followed by a position-wise feed-forward network:

$$H' = \mathrm{LayerNorm}\big(X + \mathrm{MultiHead}(X)\big), \qquad H = \mathrm{LayerNorm}\big(H' + \mathrm{FFN}(H')\big),$$

where $\mathrm{FFN}(\cdot)$ is a two-layer feed-forward network with ReLU activation:

$$\mathrm{FFN}(x) = \max(0,\, xW_1 + b_1)\,W_2 + b_2.$$

The final encoder output is aggregated and passed through a linear projection layer to produce the next value $\hat{y}_{T+1}$. Unlike multi-step autoregressive decoding, this formulation avoids the need for a decoder with masked attention, reducing parameters and computation while aligning with the single-step prediction objective. The architecture adopted in this study, illustrated in Figure 1, is an encoder-only Transformer tailored for short-term wind power forecasting. By relying on self-attention, the model captures both short-term fluctuations and long-range temporal dependencies within the input window. Unlike language models, no sequence tokens (e.g., start-of-sequence or end-of-sequence markers) are required, since the task involves continuous numerical values. The representation of the historical window is directly mapped through a linear output layer to forecast the next observation, which simplifies the design while preserving the ability to model complex temporal patterns.
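The encoder-only forward pass can be sketched in a few lines of pure Python. This is an illustrative simplification rather than the implementation used in this study: it assumes a single attention head, one encoder layer with post-layer normalization, a scalar-to-vector input embedding (`w_in`), mean pooling as the aggregation step, random untrained weights, and no positional encoding, which a practical model would normally add. All names are hypothetical.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two equally shaped matrices (residual connection)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def layer_norm(a, eps=1e-5):
    """Normalize each row to zero mean and unit variance (no learned gain or bias)."""
    out = []
    for row in a:
        mu = sum(row) / len(row)
        var = sum((v - mu) ** 2 for v in row) / len(row)
        out.append([(v - mu) / math.sqrt(var + eps) for v in row])
    return out

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, p):
    """Single-head scaled dot-product self-attention with output projection."""
    q, k, v = matmul(x, p["wq"]), matmul(x, p["wk"]), matmul(x, p["wv"])
    scale = math.sqrt(len(k[0]))
    scores = matmul(q, [list(col) for col in zip(*k)])
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(matmul(weights, v), p["wo"])

def ffn(x, p):
    """Two-layer position-wise feed-forward network with ReLU."""
    hidden = [[max(0.0, v + b) for v, b in zip(row, p["b1"])] for row in matmul(x, p["w1"])]
    return [[v + b for v, b in zip(row, p["b2"])] for row in matmul(hidden, p["w2"])]

def encoder_layer(x, p):
    h = layer_norm(add(x, self_attention(x, p)))
    return layer_norm(add(h, ffn(h, p)))

def forecast_next(window, p):
    x = [[y * w for w in p["w_in"]] for y in window]      # embed each scalar
    h = encoder_layer(x, p)
    pooled = [sum(col) / len(h) for col in zip(*h)]       # mean over time steps
    return sum(v * w for v, w in zip(pooled, p["w_out"])) + p["b_out"]

def init_params(d_model, d_ff, rng):
    def mat(rows, cols):
        return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]
    return {
        "w_in": [rng.gauss(0.0, 0.3) for _ in range(d_model)],
        "wq": mat(d_model, d_model), "wk": mat(d_model, d_model),
        "wv": mat(d_model, d_model), "wo": mat(d_model, d_model),
        "w1": mat(d_model, d_ff), "b1": [0.0] * d_ff,
        "w2": mat(d_ff, d_model), "b2": [0.0] * d_model,
        "w_out": [rng.gauss(0.0, 0.3) for _ in range(d_model)], "b_out": 0.0,
    }

params = init_params(d_model=8, d_ff=16, rng=random.Random(0))
window = [math.sin(t / 4.0) for t in range(36)]   # stand-in for 36 standardized hourly values
y_next = forecast_next(window, params)
print(y_next)
```

Because the whole window is mapped to a single scalar, no decoder or masking is involved, which mirrors the single-step design choice described above.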

5. Experimental Results

Before implementing the forecasting models, an exploratory analysis was conducted to characterize the statistical properties and temporal dynamics of wind power generation in Spain. The resulting dataset, composed of 43,848 hourly records spanning five years, is presented in

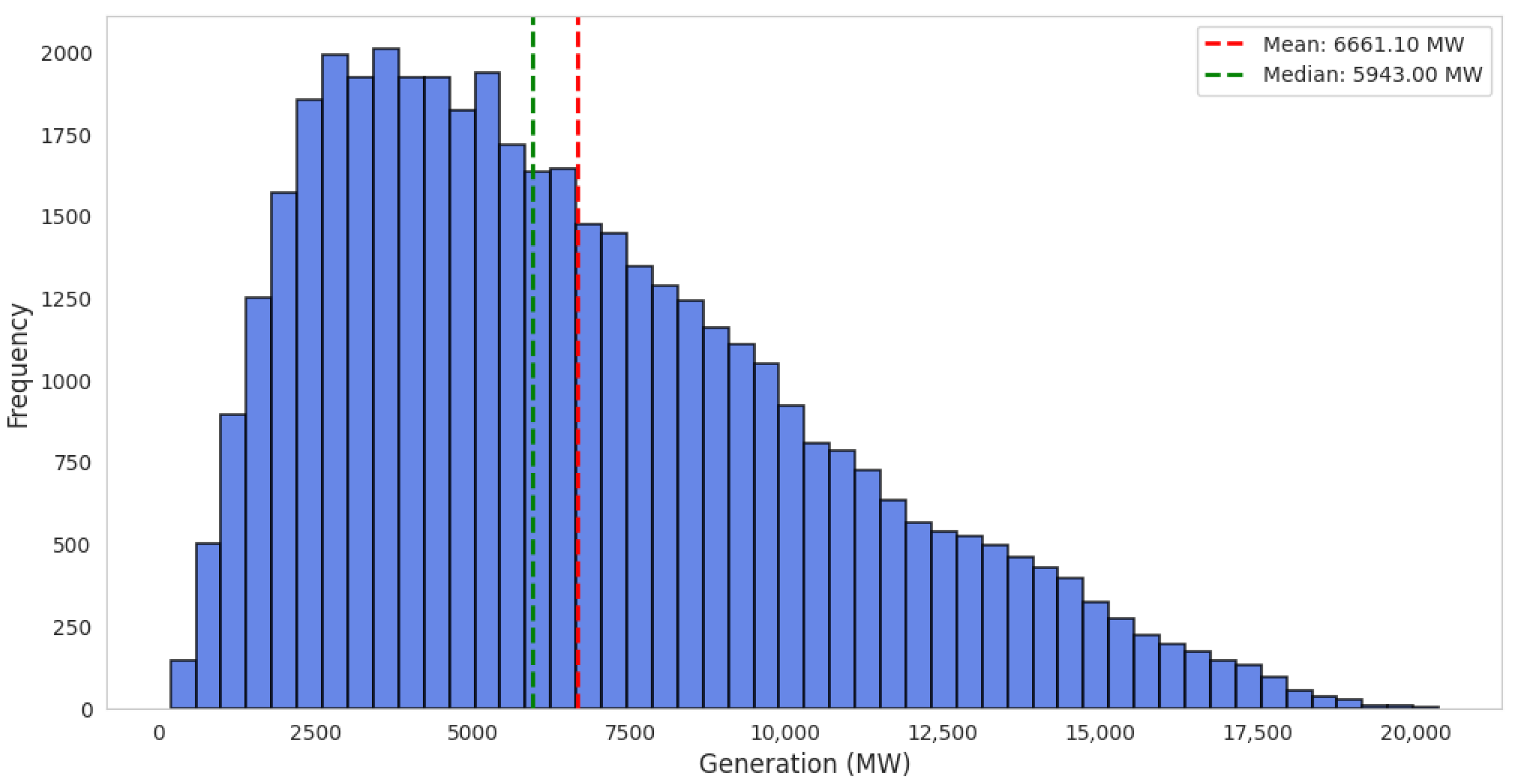

Table 1. The average generation is approximately 6661 MW, with a standard deviation of 3867 MW, indicating substantial variability in wind power output. The distribution is positively skewed, as evidenced by the higher mean compared to the median (5943 MW), and values range from a minimum of 196 MW to a maximum of 20,321 MW.
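The record count and the degree of variability quoted above can be checked with simple arithmetic. The sketch below assumes a gap-free hourly series covering 1 January 2020 through 31 December 2024 on a fixed-offset clock (no daylight-saving shifts); the coefficient of variation is derived here from the reported mean and standard deviation and is not a figure stated in the source.

```python
from datetime import datetime

# Hours in the 2020-2024 window, assuming a contiguous, fixed-offset hourly index.
start = datetime(2020, 1, 1)
end = datetime(2025, 1, 1)
n_hours = int((end - start).total_seconds() // 3600)
print(n_hours)  # 43848, matching the reported number of records

# Coefficient of variation from the reported summary statistics (MW).
mean_mw, std_mw, median_mw = 6661.0, 3867.0, 5943.0
cv = std_mw / mean_mw
right_skew_hint = mean_mw > median_mw   # mean above median suggests a right tail
print(round(cv, 2), right_skew_hint)
```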

Figure 2 provides a histogram of wind power generation frequencies, further illustrating the right-skewed nature of the data. Most observations fall within the range of 2000 to 8000 MW, while extreme values are less frequent but relevant for understanding the volatility of the series.

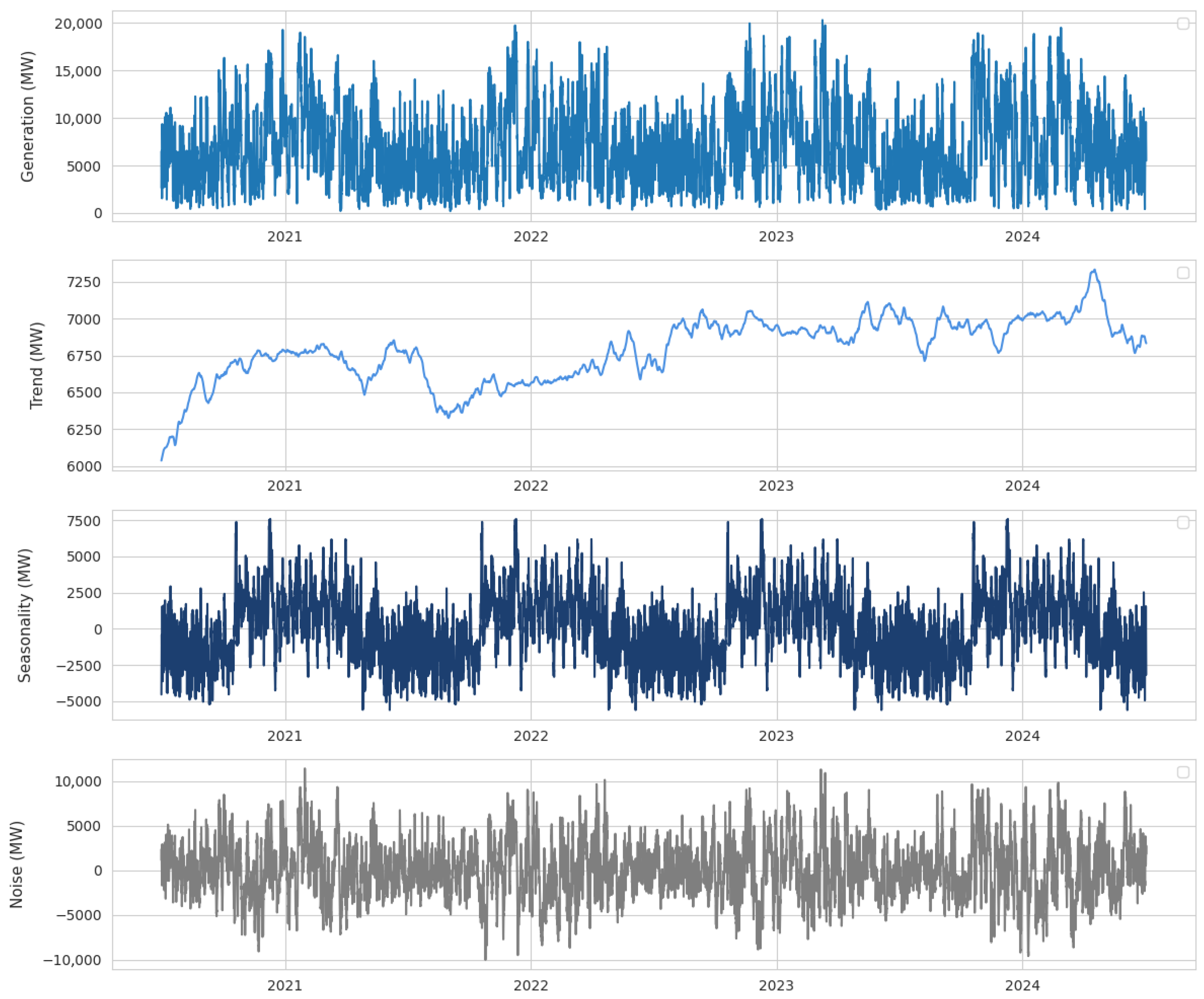

To gain deeper insights into the temporal structure of the series, Figure 3 presents a classical additive decomposition into trend, seasonal, and residual components, with an annual cycle specified on hourly data. The trend component shows a progressive increase in wind power generation over the years, reflecting broader structural changes in the energy mix. The seasonal component highlights recurrent annual cycles, indicating systematic variability linked to meteorological conditions. At the same time, the residual component captures high-frequency fluctuations and abrupt changes, pointing to short-term dependencies that characterize the volatility of wind generation. Together, these patterns confirm that the series exhibits both short-term and long-term dependencies, thereby justifying the adoption of a Transformer-based architecture capable of capturing temporal dynamics across multiple scales.
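For readers unfamiliar with the technique, a classical additive decomposition can be sketched as follows. This is a textbook procedure (centered moving-average trend, phase-averaged seasonal component, remainder as residual) applied to a synthetic series with a toy period of 4; the software and exact period used for Figure 3 are not stated in this section, and an annual cycle on hourly data would correspond to a period of roughly 8760 samples.

```python
def centered_moving_average(y, m):
    """Trend estimate; uses the 2 x m average when the period m is even."""
    half = m // 2
    trend = [None] * len(y)
    for t in range(half, len(y) - half):
        if m % 2:
            trend[t] = sum(y[t - half:t + half + 1]) / m
        else:
            inner = sum(y[t - half + 1:t + half])
            trend[t] = (0.5 * y[t - half] + inner + 0.5 * y[t + half]) / m
    return trend

def decompose_additive(y, m):
    """Return (trend, seasonal, residual) with y = trend + seasonal + residual."""
    trend = centered_moving_average(y, m)
    detrended = [(t, v - tr) for t, (v, tr) in enumerate(zip(y, trend)) if tr is not None]
    phase_means = []
    for phase in range(m):
        vals = [d for t, d in detrended if t % m == phase]
        phase_means.append(sum(vals) / len(vals))
    offset = sum(phase_means) / m          # center the seasonal pattern on zero
    seasonal = [phase_means[t % m] - offset for t in range(len(y))]
    residual = [None if tr is None else v - tr - s
                for v, tr, s in zip(y, trend, seasonal)]
    return trend, seasonal, residual

# Synthetic series: linear trend plus a zero-mean pattern of period 4.
pattern = [2.0, -1.0, 0.0, -1.0]
y = [0.1 * t + pattern[t % 4] for t in range(40)]
trend, seasonal, residual = decompose_additive(y, m=4)
print(seasonal[:4])
```

On this noise-free toy series the residual is numerically zero; on real generation data it retains the abrupt, high-frequency fluctuations discussed above.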

As described in Section 4, the forecasting pipeline was implemented by restructuring the hourly time series into overlapping input–output pairs using a sliding-window mechanism, thereby enabling temporal dependencies to be learned from fixed-length historical sequences. To preserve temporal consistency and prevent information leakage, the dataset was partitioned chronologically into training (70%), validation (15%), and testing (15%) subsets. Standardization was performed through z-score normalization, with mean and standard deviation computed exclusively on the training set and subsequently applied to validation and test data to ensure consistency. To investigate RQ2, the effect of input resolution and architectural choices on predictive accuracy, three sequence lengths (12, 24, and 36 h) were evaluated. For each input horizon, the Transformer was trained across a grid of hyperparameter settings, including model dimensionality, number of attention heads, encoder depth, dropout rate, and batch size (see Section 4.3). Standard error metrics were employed to evaluate all configurations, and for each horizon (12 h, 24 h, and 36 h), the model with the lowest RMSE on the test set was selected as the best-performing configuration. While RMSE guided this selection due to its sensitivity to large deviations, the final benchmarking and comparative analysis also report complementary metrics, ensuring a comprehensive assessment of predictive accuracy across models. The results corresponding to the 12 h input configuration, where half a day of historical observations was used to predict the subsequent value, are presented first, with the five best-performing Transformer models on the test set reported in Table 2.
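The preprocessing steps described above (chronological 70/15/15 split, z-score statistics fitted on the training subset only, and sliding windows that pair a fixed-length history with the next value) can be sketched as follows. The synthetic series, the function names, and the choice to split before windowing are illustrative assumptions; the source does not specify the order of these two operations.

```python
import math

def chronological_split(series, train_frac=0.70, val_frac=0.15):
    """Split in time order, without shuffling, so later data never leaks backward."""
    n = len(series)
    i_train = round(n * train_frac)
    i_val = round(n * (train_frac + val_frac))
    return series[:i_train], series[i_train:i_val], series[i_val:]

def fit_zscore(train):
    """Mean and (population) standard deviation of the training subset only."""
    mean = sum(train) / len(train)
    std = math.sqrt(sum((v - mean) ** 2 for v in train) / len(train))
    return mean, std

def standardize(part, mean, std):
    """Apply training-set statistics to any subset."""
    return [(v - mean) / std for v in part]

def make_windows(series, length):
    """Pairs (x, y): x holds `length` consecutive values and y is the next one."""
    return [(series[i:i + length], series[i + length])
            for i in range(len(series) - length)]

# Synthetic stand-in for the hourly generation series (MW).
series = [5000.0 + 1000.0 * math.sin(t / 5.0) + 3.0 * t for t in range(200)]

train, val, test = chronological_split(series)
mean, std = fit_zscore(train)
windows = {
    name: make_windows(standardize(part, mean, std), length=12)
    for name, part in (("train", train), ("val", val), ("test", test))
}
print({name: len(pairs) for name, pairs in windows.items()})
```

Windowing each subset separately keeps every input window inside its own subset, at the cost of the first `length` targets of the validation and test subsets.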

Among the evaluated configurations for the 12 h input horizon, the top-performing Transformer models achieved test RMSE values in the range of 380–382 MW, with corresponding MAE values between 267 and 270 MW and MAPE below 5.3%. The best-ranked configuration (Dim = 16, heads = 2, layers = 3, dropout = 0.1, and batch = 16) attained a test RMSE of 380.62 MW, with validation and training RMSE values of 360.16 MW and 336.73 MW, respectively. This relatively narrow gap across splits indicates stable generalization and limited overfitting. Other high-performing configurations, such as those with Dim = 32 and four attention heads, yielded comparable results, reinforcing that model capacity beyond a certain threshold does not necessarily translate into improved accuracy. Instead, consistent patterns emerged across the top-ranked models: dropout rates of 0.1, batch sizes of 16 or 32, and encoder depths of two to three layers. These findings suggest that moderate regularization and architectural depth are sufficient to achieve robust performance under the 12 h horizon. Importantly, increasing the number of attention heads did not produce systematic gains, indicating that additional attention diversity offers limited benefits for relatively short input sequences.

Following the analysis of the 12 h input configuration, the performance of Transformer models trained with a 24 h sequence length was subsequently examined. This setting allows the model to incorporate a full day of historical wind power generation when predicting the subsequent value, thereby potentially capturing daily periodicities and longer-term dependencies that shorter windows may overlook. Table 3 presents the performance of the five best-performing configurations under this new temporal scope. As in the 12 h setup, each model reflects a distinct combination of the architectural hyperparameters introduced in Section 4.3, including dimensionality, number of attention heads, encoder depth, dropout rate, and batch size. This selection allows for a focused comparison of the most accurate configurations under the 24 h input scenario.

Among the tested configurations, the best performance for the 24 h input horizon was achieved by the model with a dimensionality of 16, two attention heads, three encoder layers, a dropout rate of 0.1, and a batch size of 32. This configuration yielded a test RMSE of 375.75 MW, MAE of 263.02 MW, and MAPE of 5.08%, while also exhibiting balanced results across training and validation, thus indicating effective generalization. The remaining top-performing models followed a consistent pattern, relying on relatively compact architectures with either 16 or 32 dimensions, two to three encoder layers, and predominantly a dropout rate of 0.1. In terms of batch size, while the 12 h horizon favored configurations with a batch size of 16, the 24 h setting showed a preference for 32, suggesting that slightly larger batches contribute to more stable training dynamics when longer input sequences are used. As in the 12 h horizon, the number of attention heads did not show a clear performance advantage beyond two or four, suggesting that increasing attention diversity does not necessarily translate into improved accuracy for short- to medium-range horizons.

Finally, the evaluation of Transformer models trained with a 36 h input sequence is presented in Table 4. This configuration provides the model with an extended temporal context, allowing the learning of broader temporal dependencies and delayed effects in wind generation patterns. However, longer input sequences may also introduce noise or redundant information, increasing the risk of overfitting if not adequately regularized. Therefore, assessing the performance under this extended horizon is relevant to determine whether the additional historical context leads to tangible improvements or diminishing returns compared to shorter input lengths. As in the previous cases, the top five performing configurations under this setting were identified and analyzed based on their predictive accuracy on the test set.

Among the 36 h input configurations, the best predictive performance was achieved by the model with a dimensionality of 32, four attention heads, two encoder layers, a dropout rate of 0.1, and a batch size of 16. This model reached an RMSE of 370.71 MW, MAE of 258.77 MW, and MAPE of 4.92% on the test set. Notably, the gap between training and test performance (RMSE: 331.52 vs. 370.71 MW) remained narrow, indicating effective generalization and limited overfitting. Compared to the 12 h configuration, where smaller dimensionality (16) was generally favored, the results for the 36 h horizon suggest that larger model dimensionality (32) becomes beneficial when capturing longer temporal dependencies. Across the top-performing models, relatively shallow architectures with two encoder layers predominated, indicating that greater depth does not necessarily improve performance in extended horizons. Dropout values of 0.1 were consistently observed, highlighting the importance of regularization as the sequence length increases. Furthermore, smaller batch sizes (mostly 16) continued to be preferred, reinforcing the observation that reduced batch regimes promote stable convergence across horizons.

In addition to its predictive accuracy, this configuration also demonstrated strong computational efficiency. Although the training schedule allowed for a maximum of 50 epochs, convergence was reached after only 11 epochs due to the application of early stopping, resulting in a total training time of 439.10 s, with a median epoch duration of 40.02 s. From a deployment perspective, the model further exhibited low inference latency, averaging 3.698 ms (p95 = 4.768 ms) when evaluated on batches of 16 sequences of length 36. These results show not only the accuracy of the architecture under extended input horizons but also its ability to achieve rapid convergence and efficient inference, reinforcing its practical viability for real-world forecasting applications.
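Mean and 95th-percentile (p95) latency figures of this kind are typically obtained by timing repeated forward passes on a fixed batch. The sketch below illustrates one way to do so; the warm-up count, the number of repetitions, the linear-interpolation percentile, and the `dummy_predict` stand-in for a trained model are all assumptions, not the measurement protocol of this study.

```python
import statistics
import time

def percentile(values, q):
    """Percentile with linear interpolation between order statistics, q in [0, 100]."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)

def measure_latency_ms(predict, batch, repeats=200, warmup=20):
    """Return (mean, p95) latency in milliseconds for predict(batch)."""
    for _ in range(warmup):            # discard initial calls (caches, lazy setup)
        predict(batch)
    timings = []
    for _ in range(repeats):
        t0 = time.perf_counter()
        predict(batch)
        timings.append((time.perf_counter() - t0) * 1000.0)
    return statistics.mean(timings), percentile(timings, 95)

def dummy_predict(batch):
    """Hypothetical placeholder for a trained model's forward pass."""
    return [sum(window) / len(window) for window in batch]

batch = [[0.0] * 36 for _ in range(16)]    # 16 sequences of length 36
mean_ms, p95_ms = measure_latency_ms(dummy_predict, batch)
print(f"mean = {mean_ms:.4f} ms, p95 = {p95_ms:.4f} ms")
```

Reporting p95 alongside the mean exposes occasional slow calls that an average alone would hide, which matters for near-real-time use.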

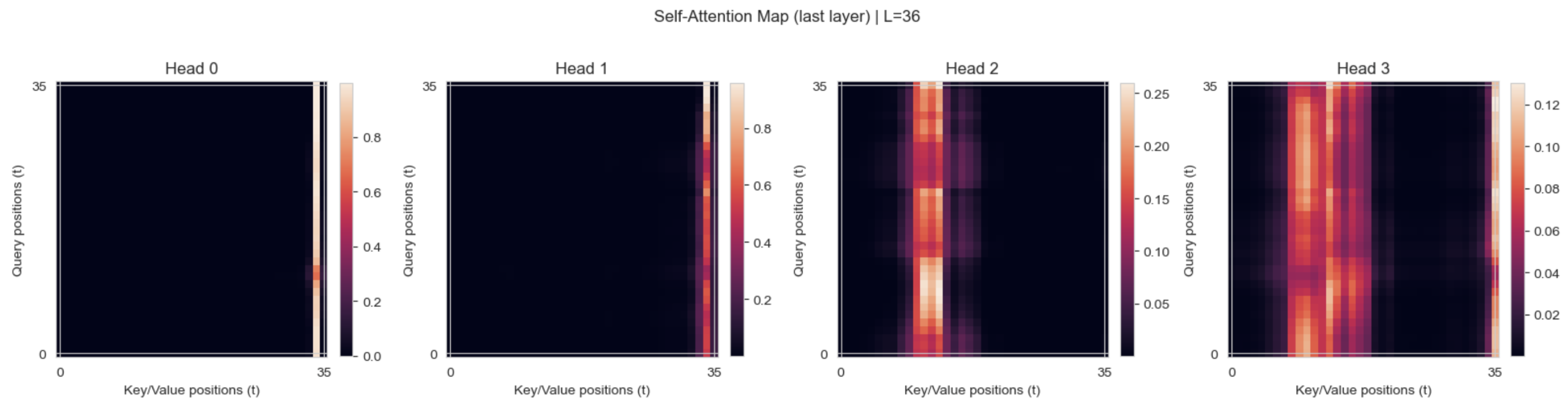

Importantly, the attention maps for the 36 h horizon revealed that most heads concentrated their weights on the most recent hours, highlighting the dominant role of short-term dynamics in wind power variability. Nevertheless, certain heads distributed attention more broadly across intermediate and even earlier positions within the input window, suggesting that the model simultaneously captured longer-range dependencies. This division of roles across attention heads indicates a complementary mechanism in which recent fluctuations are emphasized while broader temporal patterns are also considered (see Figure 4). Consequently, the 36 h input horizon demonstrated that extended context lengths enable the Transformer to leverage both short- and long-term information.

Complementary error stratification further validated the robustness of the 36 h configuration. As reported in Table 5, mean absolute ramp errors increased moderately with the ramp horizon, from 356 MW at 1 h to 377 MW at 6 h. This progression indicates that, although absolute ramp prediction errors rise with longer intervals, the growth remains limited, which highlights that the model preserves a reasonable capacity to adapt to temporal ramps of larger magnitude. Under extreme conditions (Table 6), the model exhibited higher errors, particularly for strong ramps. The largest deviations occurred at short horizons (1 h, MAE = 679 MW), reflecting the challenge of anticipating abrupt fluctuations. At longer horizons (3 h and 6 h), both MAE and RMSE declined, showing that broader aggregation intervals mitigate the impact of short-term variability. Regarding peak generation events, errors remained substantial (MAE = 376 MW, RMSE = 526 MW), confirming the intrinsic difficulty of accurately forecasting rare and highly volatile extreme values.
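One plausible way to compute ramp-stratified errors is sketched below. The definitions are assumptions made for illustration: a ramp over horizon `k` is taken as the change `y[t] - y[t-k]`, the ramp error compares the ramp of the forecast series with the ramp of the observed series, and "strong ramps" are selected with a user-supplied `threshold`. The exact definitions and thresholds behind Tables 5 and 6 are not recoverable from this section.

```python
def ramps(series, k):
    """Change over k steps: series[t] - series[t - k] for every valid t."""
    return [series[t] - series[t - k] for t in range(k, len(series))]

def mean_abs_ramp_error(actual, predicted, k):
    """Mean absolute difference between predicted and observed k-step ramps."""
    errors = [abs(p - a) for a, p in zip(ramps(actual, k), ramps(predicted, k))]
    return sum(errors) / len(errors)

def strong_ramp_mae(actual, predicted, k, threshold):
    """MAE of point forecasts restricted to times with |observed ramp| > threshold."""
    selected = [abs(actual[t] - predicted[t]) for t in range(k, len(actual))
                if abs(actual[t] - actual[t - k]) > threshold]
    return sum(selected) / len(selected) if selected else float("nan")

# Toy observed and forecast values (MW), not data from the study.
actual = [100.0, 120.0, 90.0, 150.0, 160.0, 130.0]
predicted = [100.0, 110.0, 100.0, 140.0, 165.0, 135.0]

err_1h = mean_abs_ramp_error(actual, predicted, k=1)
err_2h = mean_abs_ramp_error(actual, predicted, k=2)
strong_1h = strong_ramp_mae(actual, predicted, k=1, threshold=25.0)
print(err_1h, err_2h, round(strong_1h, 3))
```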

To establish a robust benchmark against the Transformer-based architectures, complementary experiments were conducted using recurrent neural networks, specifically LSTM and GRU models. Both architectures were implemented with a comparable design, consisting of stacked recurrent layers followed by a fully connected output layer to produce point forecasts. The evaluation protocol was kept identical to that of the Transformer experiments: chronological data partitioning (70% training, 15% validation, and 15% testing); z-score normalization based exclusively on the training set; and input sequence lengths of 12, 24, and 36 h. Model selection was carried out through an exhaustive grid search across key hyperparameters, including the number of hidden units (16, 32, and 64), the number of recurrent layers (1, 2, and 3), dropout regularization levels (0.1, 0.2, and 0.3), and batch sizes (16, 32, and 64). Training was performed using the Adam optimizer, and early stopping was applied on the validation loss with a patience of five epochs and a minimum improvement threshold. This procedure ensured that model complexity, convergence dynamics, and generalization ability were systematically evaluated under conditions directly comparable to those of the Transformer models.
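The search space and stopping rule described above can be made concrete with a short sketch. The grid values are taken from the text; the `min_delta` default of 0.0 and the example loss curve are placeholders chosen for illustration, since the actual threshold and training curves are not given in this section.

```python
from itertools import product

# Hyperparameter grid for the recurrent baselines, as listed in the text.
grid = {
    "hidden": [16, 32, 64],
    "layers": [1, 2, 3],
    "dropout": [0.1, 0.2, 0.3],
    "batch": [16, 32, 64],
}
configs = [dict(zip(grid, values)) for values in product(*grid.values())]
print(len(configs))  # 81 combinations per architecture and sequence length

def early_stopping_epoch(val_losses, patience=5, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 1-indexed.

    Training stops once `patience` consecutive epochs fail to improve the best
    validation loss by more than `min_delta` (placeholder value, an assumption).
    """
    best = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses), best_epoch

# Illustrative validation curve: improves until epoch 6, then stalls.
losses = [1.0, 0.8, 0.7, 0.65, 0.64, 0.63, 0.66, 0.67, 0.65, 0.64, 0.66, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(losses)
print(stop_epoch, best_epoch)
```

With three sequence lengths, an exhaustive search over this grid implies 243 training runs per recurrent architecture.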

Table 7 summarizes the best-performing configurations of the Transformer, LSTM, and GRU architectures across the three input horizons. The Transformer model, trained with a 36 h input sequence, consistently outperformed the recurrent baselines, achieving an RMSE of 370.71 MW, MAE of 258.77 MW, and MAPE of 4.92%. These values represent a substantial improvement over the naïve 1 h lag benchmark, whose MAE ranged between 378.08 and 378.39 MW depending on the window length, thus confirming the ability of the model to capture temporal dependencies beyond short-term persistence. For the recurrent models, the best LSTM configuration (36 h, hidden = 32, layers = 1, dropout = 0.3) reached an RMSE of 397.59 MW and MAE of 292.37 MW, while the best GRU (36 h, hidden = 32, layers = 1, dropout = 0.1) slightly outperformed the LSTM, with an RMSE of 395.47 MW and MAE of 289.11 MW. Although both models reduced errors relative to the naïve baseline, their performance lagged behind the Transformer in all error measures, particularly in MAE and sMAPE, where reductions were less pronounced. The comparison of normalized errors provides further insights. While the LSTM and GRU achieved nRMSE values around 6.3–6.5%, the Transformer reduced this figure to 5.92%. Similarly, sMAPE decreased from 5.71–6.09% in the recurrent networks to 4.85% in the Transformer. These differences, though moderate in relative terms, are significant in operational contexts where small percentage improvements translate into large absolute reductions in forecast error. Overall, the results support RQ1 by demonstrating that the Transformer architecture generalizes better across horizons, improving not only absolute accuracy (RMSE, MAE, and MAPE) but also scale-independent and relative metrics (nRMSE, MASE, and sMAPE). The recurrent baselines exhibited performance gains when provided with extended input windows (36 h). However, even under their best-performing configurations, they consistently lagged behind the Transformer, underscoring the latter’s superior capacity to capture long-range dependencies and complex temporal dynamics.
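For reference, the error measures named above can be written out as follows. RMSE, MAE, MAPE, and sMAPE follow their usual textbook forms; normalizing nRMSE by the mean of the observations and scaling MASE by the MAE of a 1 h persistence forecast on the same evaluation set are assumptions made for this sketch, since the exact normalizations are not stated in this section. The numbers are toy values, not results from the study.

```python
import math

def mae(y, yhat):
    return sum(abs(a - p) for a, p in zip(y, yhat)) / len(y)

def rmse(y, yhat):
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(y, yhat)) / len(y))

def mape(y, yhat):
    """Mean absolute percentage error (%); undefined when an observation is zero."""
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in zip(y, yhat)) / len(y)

def smape(y, yhat):
    """Symmetric MAPE (%), using the mean of |y| and |yhat| as denominator."""
    return 100.0 * sum(2.0 * abs(a - p) / (abs(a) + abs(p)) for a, p in zip(y, yhat)) / len(y)

def nrmse(y, yhat):
    """RMSE as a percentage of the mean observation (assumed normalization)."""
    return 100.0 * rmse(y, yhat) / (sum(y) / len(y))

def mase(y, yhat, y_previous):
    """MAE scaled by the MAE of a persistence forecast (assumed scaling)."""
    return mae(y, yhat) / mae(y, y_previous)

y = [100.0, 200.0, 400.0]            # observed values (MW)
yhat = [110.0, 190.0, 380.0]         # model forecasts (MW)
y_previous = [90.0, 100.0, 200.0]    # value one hour earlier, i.e., the naive forecast

scores = {
    "MAE": mae(y, yhat), "RMSE": rmse(y, yhat), "MAPE": mape(y, yhat),
    "sMAPE": smape(y, yhat), "nRMSE": nrmse(y, yhat), "MASE": mase(y, yhat, y_previous),
}
for name, value in scores.items():
    print(f"{name}: {value:.3f}")
```

A MASE below 1 indicates that the model improves on persistence, which is the comparison the naïve 1 h lag benchmark provides in Table 7.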

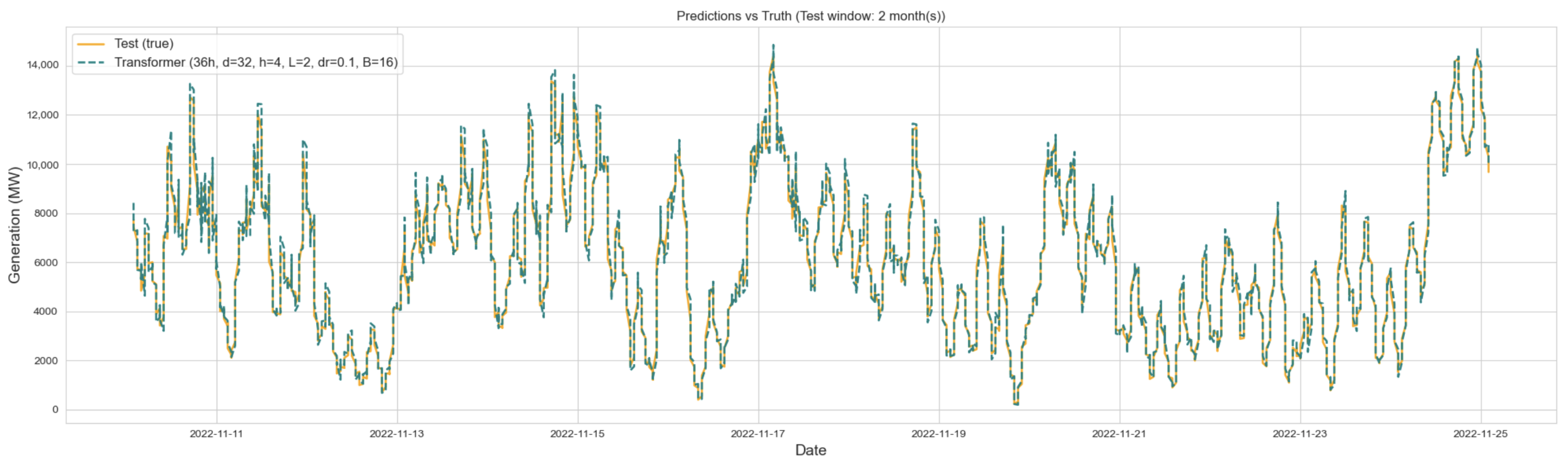

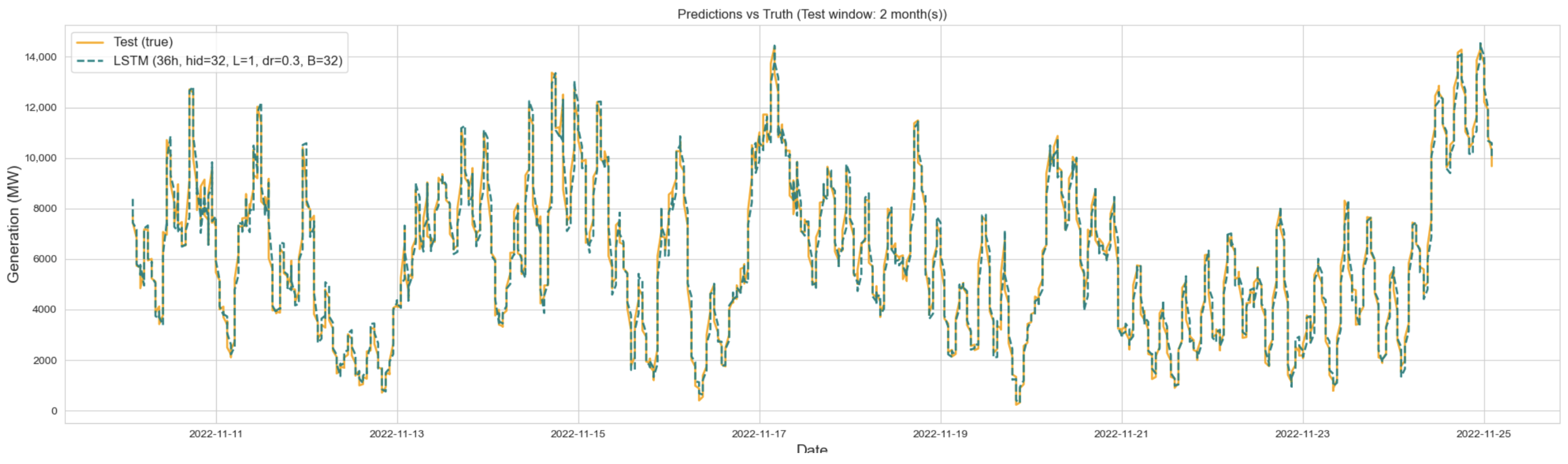

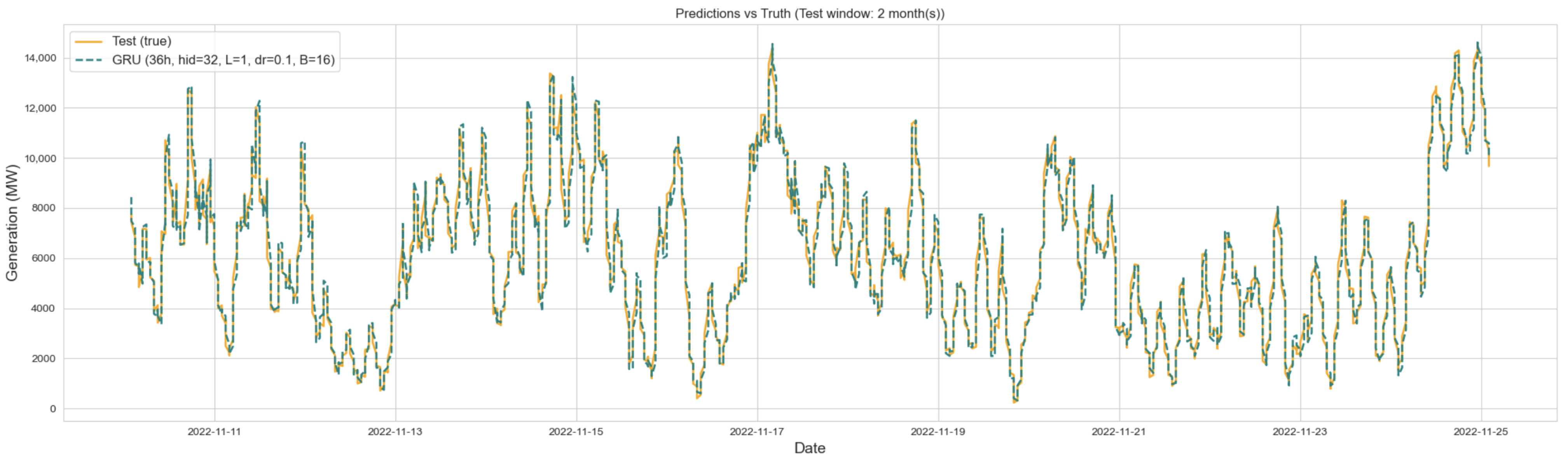

To complement the tabular results and provide further insight into model behavior, Figure 5, Figure 6 and Figure 7 illustrate the alignment between actual wind power generation and predictions from the best-performing configurations of Transformer, LSTM, and GRU over a two-month segment of the test set. The comparison highlights clear differences in the models’ ability to reproduce temporal variability, especially at peak values. The GRU model, while capable of capturing overall trends, systematically underpredicts sharp peaks and exhibits lagged responses to sudden ramps, which reflects the limitations of its simpler recurrent gating structure. In contrast, the LSTM model reacts more promptly to fluctuations and tracks moderate variations with reasonable accuracy. Nonetheless, its forecasts occasionally overshoot during rapid transitions, showing instability under highly volatile conditions. The Transformer model achieves the closest alignment with the ground truth across the full range of dynamics, successfully capturing both smooth transitions and abrupt surges in wind generation.

6. Discussion of Findings

The empirical results of this study demonstrate that Transformer-based architectures achieve superior predictive accuracy in short-term wind power forecasting compared to recurrent models. The best-performing configuration, trained with a 36 h input window and a compact architecture of two encoder layers, four attention heads, and moderate dropout (0.1), reached an RMSE of 370.71 MW, MAE of 258.77 MW, and MAPE of 4.92% on the test set. From a computational standpoint, the model converged efficiently, requiring only 11 epochs out of a maximum of 50 due to early stopping (total training time of 439.10 s and median epoch duration of 40.02 s), and exhibited low inference latency, averaging 3.698 ms (p95 = 4.768 ms) for batches of 16 sequences. Attention maps showed that, while most heads concentrated on the most recent lags, others distributed weights across earlier positions, confirming that the Transformer simultaneously leverages short-term fluctuations and longer-range dependencies. These results show that performance gains do not stem from indiscriminate model complexity, but rather from balanced architectures with tuned hyperparameters that provide an advantageous trade-off between accuracy and interpretability.

In contrast, the best LSTM and GRU models, both using 36 h input sequences, achieved higher errors. The LSTM configuration (hidden = 32, layers = 1, dropout = 0.3, and batch = 32) reached an RMSE of 397.59 MW and MAE of 292.37 MW, while the GRU (hidden = 32, layers = 1, dropout = 0.1, and batch = 16) slightly outperformed the LSTM with an RMSE of 395.47 MW and MAE of 289.11 MW. Although both recurrent models improved substantially over the naïve 1 h lag benchmark (MAE ≈ 378 MW), they lagged behind the Transformer in all metrics. Particularly, scale-independent measures such as nRMSE and sMAPE showed consistent advantages for the Transformer (5.92% and 4.85%, respectively) compared to the recurrent baselines (nRMSE ≈ 6.3–6.6% and sMAPE ≈ 5.7–6.1%). Visual inspections over a two-month segment of the test set further confirmed these results, with the GRU systematically underpredicting peaks and lagging during ramps, the LSTM tracking moderate fluctuations but occasionally overshooting in highly volatile conditions, while the Transformer provided the closest alignment across both gradual and abrupt dynamics.

Placed in the context of the existing literature, these results reinforce recent advances that highlight the benefits of attention mechanisms and hybrid designs [20,21,22,23,25]. Unlike prior work that relies on meteorological covariates or turbine-level signals, this study demonstrates that a Transformer trained exclusively on historical, aggregated generation data can achieve competitive performance. This has practical significance for the Spanish power system, where forecasting accuracy directly influences imbalance costs and operational reliability in day-ahead and intraday markets. The ability of a lightweight Transformer to deliver accurate forecasts under these constraints provides a scalable tool for system operators and market agents who may not have access to high-resolution meteorological data, thereby supporting grid stability and Spain’s broader sustainability objectives within the European energy transition.

Nevertheless, several limitations should be acknowledged. First, the analysis is restricted to deterministic forecasts at the national level, leaving open questions regarding regional variability and probabilistic forecasting. As highlighted by recent reviews [26], interpretability and uncertainty quantification remain pressing challenges for the deployment of DL models in energy forecasting. Future research could therefore extend this work by incorporating probabilistic frameworks, attention-based interpretability analyses, and cross-country validations to test generalization under different system conditions.

7. Conclusions

The increasing penetration of variable renewables, particularly wind power, underscores the need for accurate short-term forecasting to support system reliability and efficient market operation. Using high-resolution Spanish data, this study assessed a Transformer-based approach to one-step-ahead wind power forecasting and contrasted it against strong recurrent baselines (LSTM/GRU) under a common, chronologically consistent evaluation protocol.

Three main findings emerged. First, in response to RQ1, the Transformer consistently outperformed LSTM and GRU across absolute and scale-independent metrics. The best Transformer, trained with a 36 h context, achieved RMSE = 370.71 MW, MAE = 258.77 MW, and MAPE = 4.92%, improving upon the 1 h naïve persistence baseline (test MAE_naive ≈ 378 MW) and surpassing the best LSTM/GRU configurations (RMSE ≈ 398/395 MW; MAE ≈ 292/289 MW). Normalized errors corroborate this advantage (nRMSE = 5.92%, sMAPE = 4.85% versus ≈ 6.3–6.6% and 5.7–6.1% for recurrent models). Second, addressing RQ2, input resolution and architectural choices proved to be relevant. Extending the window to 36 h yielded the most accurate results, while shallow encoders with two layers, moderate dimensionality of 32, and dropout of 0.1 provided the best balance between capacity and regularization. Additional attention heads or deeper stacks did not result in systematic improvements. Third, attention maps revealed a division of labor across heads, where most emphasized the most recent hours, whereas some attended to intermediate or earlier lags. This indicates that the Transformer is capable of jointly capturing short-term fluctuations and longer-range patterns. Error stratification also showed a moderate increase in mean absolute ramp error with ramp horizon, rising from 356 MW at 1 h to 377 MW at 6 h, and highlighted larger errors for strong ramps and peaks, which reflects the intrinsic difficulty of forecasting rare and highly volatile events.

From an operational standpoint, the preferred Transformer proved computationally efficient. With a maximum budget of 50 epochs, early stopping led to convergence in 11 epochs (total 439.10 s, median per epoch 40.02 s), and inference was low-latency, with a mean of 3.698 ms and a p95 of 4.768 ms for batches of 16 sequences of length 36. These characteristics make the approach suitable for near-real-time deployment in market and system-operation workflows, where small percentage improvements imply large absolute reductions in forecast error. Future work should extend this framework to probabilistic and multi-step settings, incorporate exogenous meteorological signals and multi-resolution inputs, and explore uncertainty quantification and interpretability at finer spatial granularity. Cross-system validations would test external validity under diverse climatic regimes. Overall, the evidence supports the use of compact, well-tuned Transformers as a practical and scalable tool for short-term wind forecasting, contributing to grid stability and facilitating the integration of renewables in Spain’s energy transition.