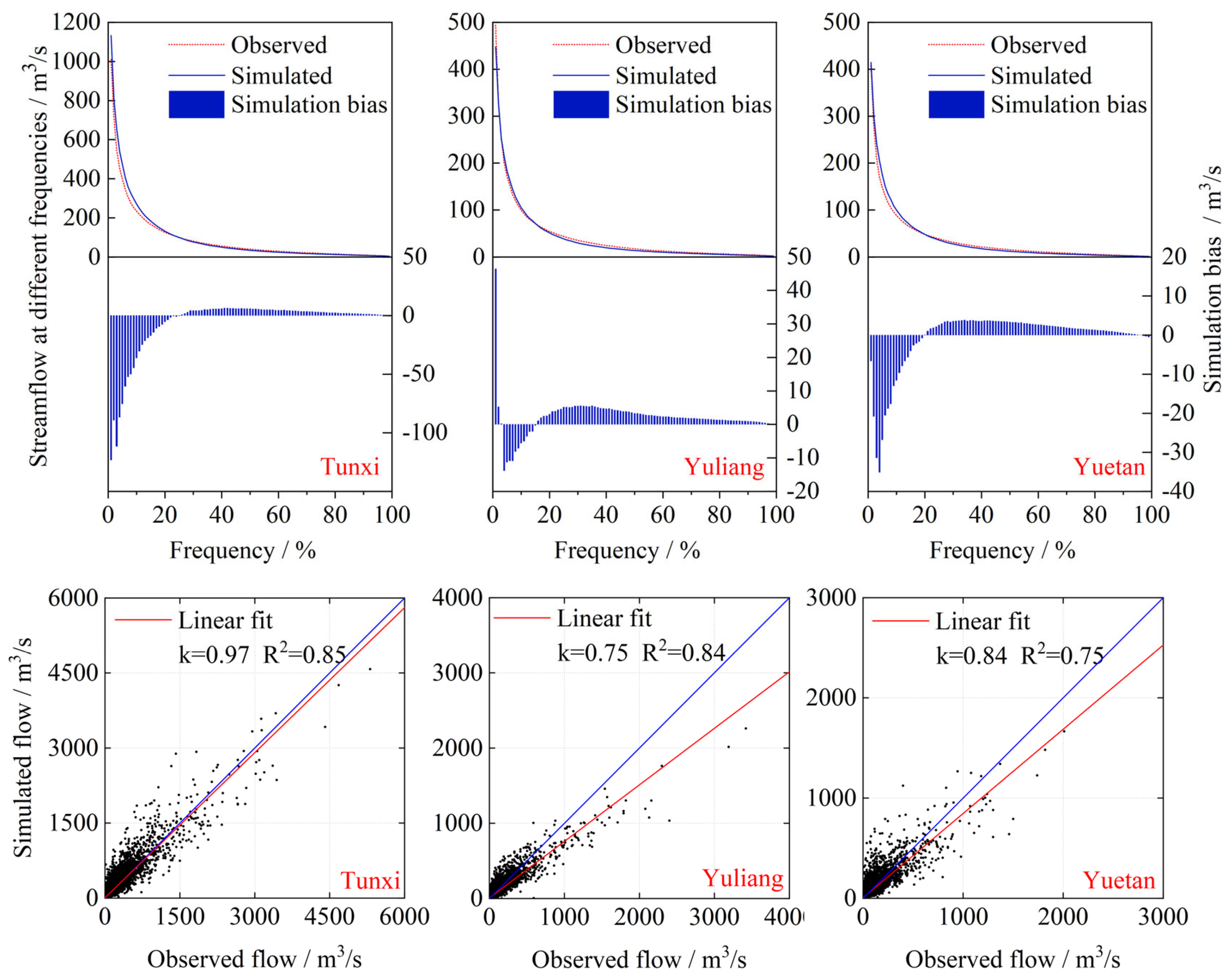

The results proceed in three stages: GBHM baseline skill and peak bias; RRT/RC-RRT performance on long-sequence and extreme events; and RCGR improvements over both physical and data-driven baselines, highlighting peak-flow fidelity and generalization.

4.2. Evaluation and Analysis of Data-Driven Model

- (1)

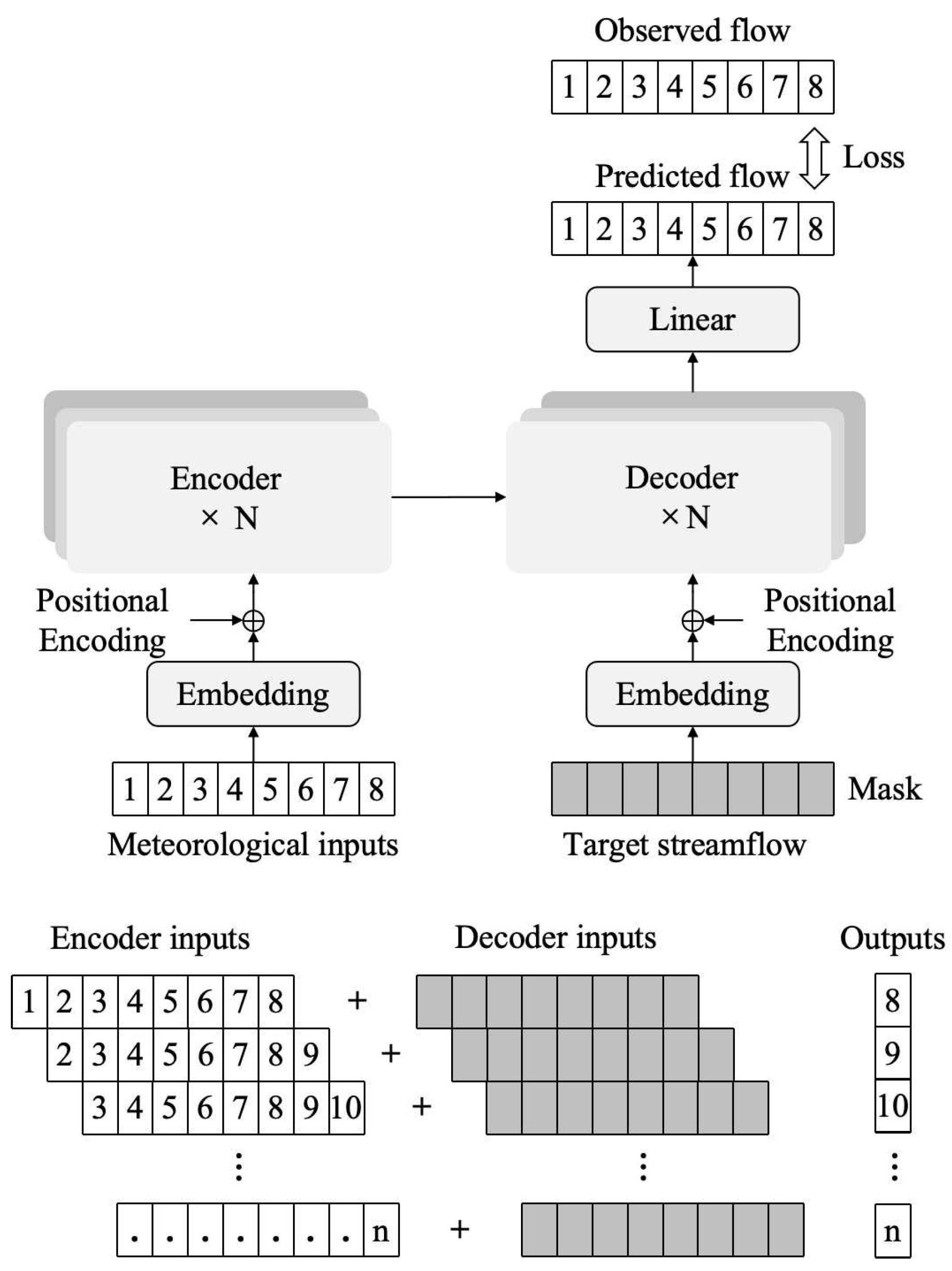

Introducing a Time Window Mechanism for the Transformer Model

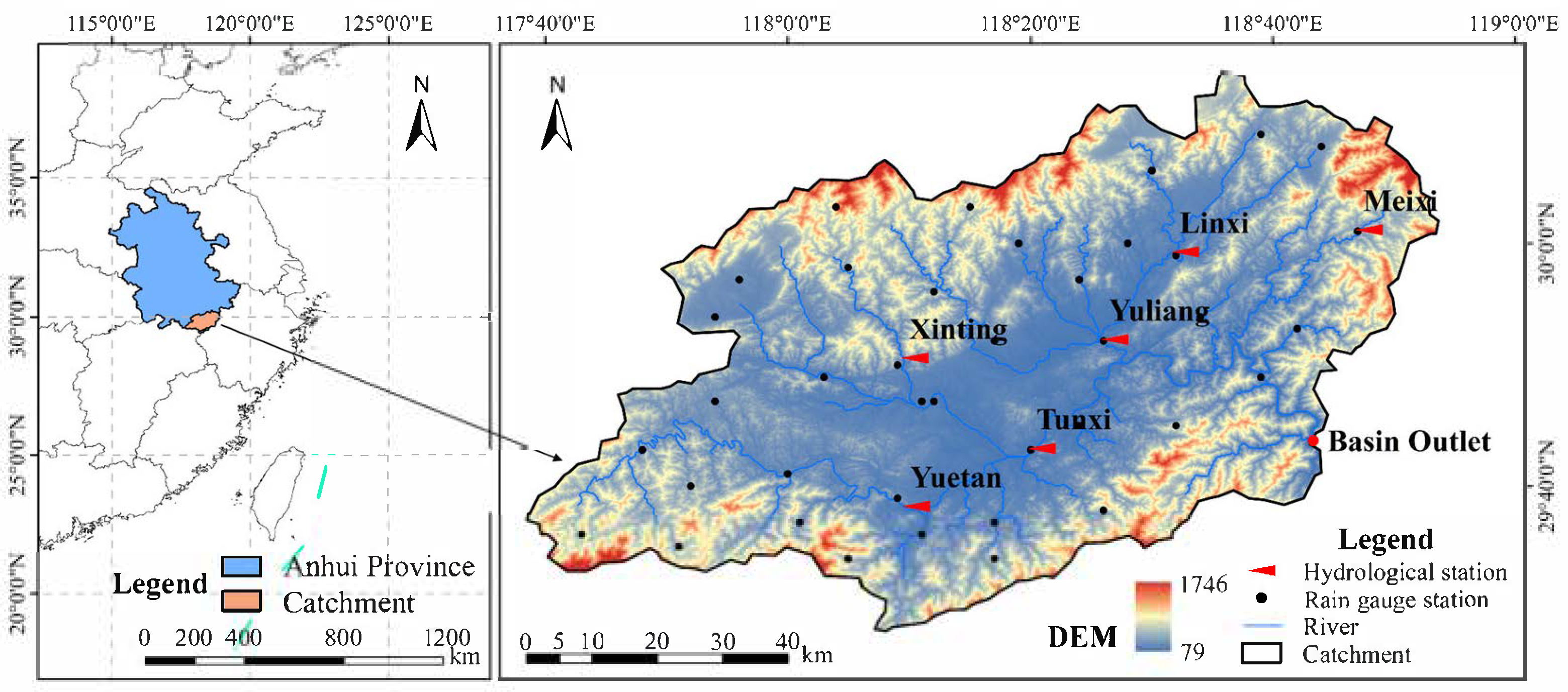

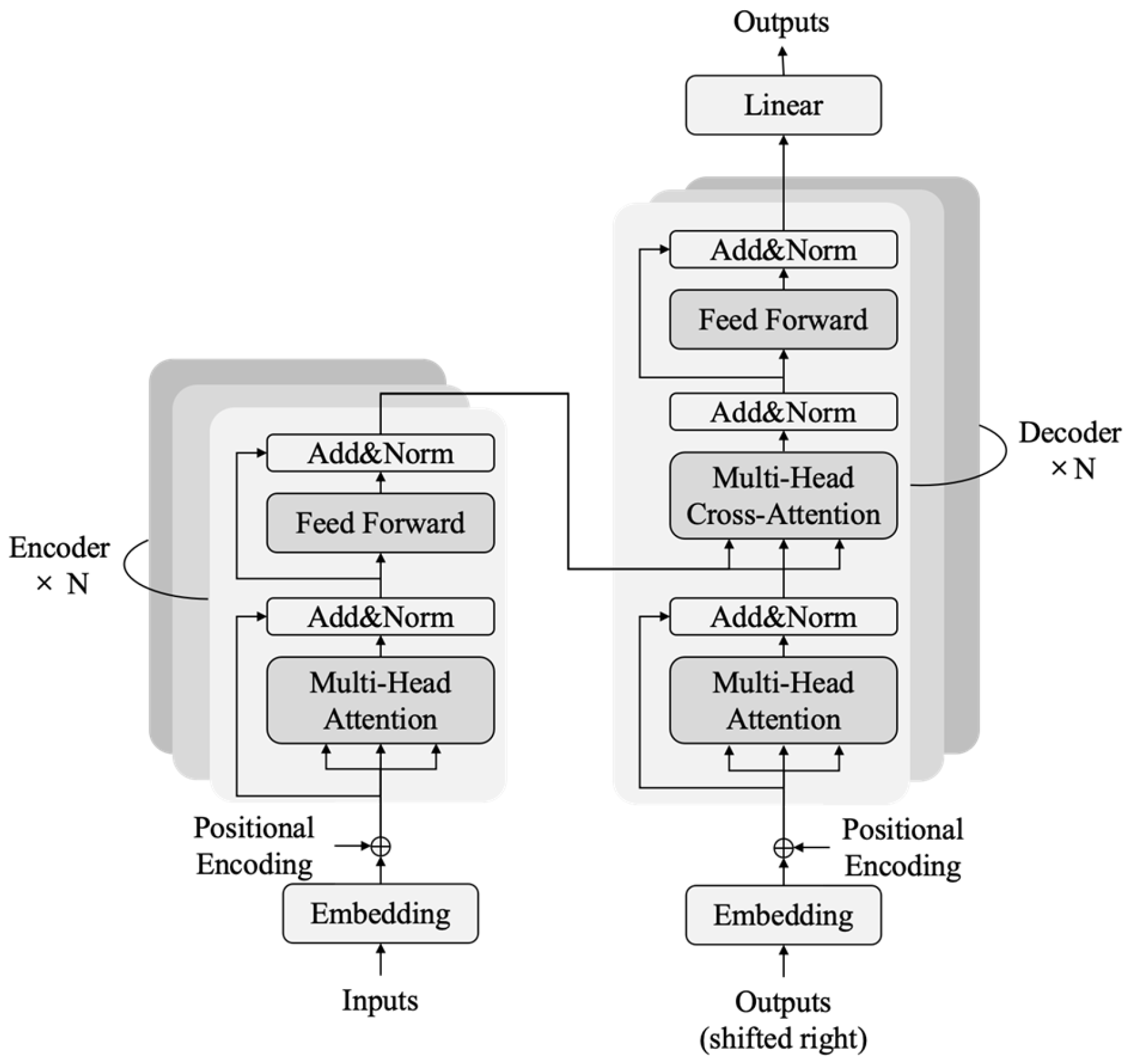

In the original Transformer architecture, the decoder masks the target flow so that the model cannot access the true flow values it is asked to predict during training, which ensures that backpropagation is driven by prediction errors. Autoregressive decoding suffers from error accumulation at extended lead times, whereas non-autoregressive decoding, although accurate in short-term forecasts, relies on observed flow at each step and is therefore unsuitable for long-term prediction. This study therefore proposes a fully masked structure, the Fully Masked Rainfall–Runoff Transformer (RRT) model, which omits explicit flow inputs in the encoder and uses only meteorological data to predict streamflow (Figure 7). By exploiting the relationship between meteorological sequences and flow, the approach avoids dependence on prior flow conditions and eliminates error accumulation, making it theoretically applicable to long-term flow simulation.
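The core idea of the fully masked design, that each day's flow is computed from a window of meteorological data alone, can be sketched as a single self-attention layer in NumPy. This is a minimal illustration under stated assumptions, not the authors' RRT: the weights are random and untrained, and the layer normalization, feed-forward blocks, positional encoding, and multi-head structure of a full Transformer are omitted. The names `fully_masked_forward`, `self_attention`, and the `params` keys are hypothetical.

```python
import numpy as np

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over the time axis.

    x: (window, d_model) embedded meteorological sequence.
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v

def fully_masked_forward(met_window, params):
    """Map a (window, n_features) block of meteorological data to one flow value.

    No observed or simulated streamflow enters the computation: met_window is the only input.
    """
    h = met_window @ params["embed"]                                      # (window, d_model)
    h = h + self_attention(h, params["wq"], params["wk"], params["wv"])  # residual connection
    # Read out the last time step, i.e. the day whose flow is predicted.
    return float(h[-1] @ params["head"])

rng = np.random.default_rng(0)
window, n_features, d_model = 7, 6, 16  # 7-day window, six meteorological variables
params = {
    "embed": rng.normal(size=(n_features, d_model)) * 0.1,
    "wq": rng.normal(size=(d_model, d_model)) * 0.1,
    "wk": rng.normal(size=(d_model, d_model)) * 0.1,
    "wv": rng.normal(size=(d_model, d_model)) * 0.1,
    "head": rng.normal(size=d_model) * 0.1,
}
met_window = rng.normal(size=(window, n_features))  # synthetic inputs
q_hat = fully_masked_forward(met_window, params)
```

Because no flow value is fed back as input, the prediction for one day does not depend on the prediction for any other day, which is why errors cannot accumulate along the simulated sequence.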

To ensure comparability with the GBHM model, the RRT model uses the same meteorological input variables, including daily precipitation, mean air temperature, mean atmospheric pressure, mean relative humidity, sunshine duration, and mean wind speed. The model outputs daily streamflow at three hydrological stations: Tunxi, Yuliang, and Yuetan. The RRT model was trained on data from 1972 to 2007, comprising 12,783 valid daily records. The validation period spans 2008 to 2012 (1827 days), and the testing period spans 2014 to 2018 (1826 days). Certain years were excluded because of missing streamflow data at some stations; after these exclusions, the training, validation, and testing sets account for 78%, 11%, and 11% of the data, respectively. To mitigate overfitting, an early stopping strategy was adopted: model performance on the validation set was monitored at each training iteration, and training was terminated when the validation metrics showed no significant improvement over multiple consecutive epochs. Performance on the testing set was then used as the primary basis for evaluating simulation accuracy and robustness.
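The reported day counts and split proportions can be checked with a few lines of Python. The training count of 12,783 records is taken from the text; the validation and testing counts are computed from the calendar, assuming both end dates are inclusive.

```python
from datetime import date

# Day counts implied by the stated periods (both endpoints inclusive).
val_days = (date(2012, 12, 31) - date(2008, 1, 1)).days + 1
test_days = (date(2018, 12, 31) - date(2014, 1, 1)).days + 1
train_days = 12783  # valid daily records for 1972-2007, as reported

total = train_days + val_days + test_days
shares = {
    name: 100.0 * n / total
    for name, n in [("train", train_days), ("validation", val_days), ("test", test_days)]
}
```

With these counts the shares come out at roughly 77.8%, 11.1%, and 11.1%, which round to the stated 78/11/11 split.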

Antecedent hydrometeorological conditions strongly influence flow forecasts, so the time window must be chosen carefully during model development to ensure accuracy and robustness. Each prediction step requires sufficient meteorological history, yet excessive historical input can induce overfitting and weaken predictive performance. This study therefore evaluated five time window sizes (3, 5, 7, 14, and 21 days) when constructing the fully masked RRT model to determine the optimal structure. Even under the same early-stopping strategy, training runs whose validation performance appeared optimal often produced substantially different testing results, indicating persistent overfitting. To obtain stable and reliable outcomes, multiple training runs were performed under the same early-stopping conditions for each time window setting, and model snapshots were saved. For each time window, the snapshot with the best evaluation metrics and the most consistent predictive performance was selected as the optimal model.
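A sliding window turns the daily record into supervised samples, one per day, each pairing the preceding days of meteorology (including the target day itself) with that day's flow. The sketch below is an assumption about how such windows are typically built; it uses synthetic arrays and a hypothetical `make_windows` helper to show how the sample shape changes across the five window sizes tested.

```python
import numpy as np

def make_windows(met, flow, window):
    """Build (X, y) pairs: X[i] holds `window` consecutive days of meteorological
    data ending on day t (inclusive), and y[i] is the flow on that same day t."""
    n_days = met.shape[0]
    X = np.stack([met[t - window + 1 : t + 1] for t in range(window - 1, n_days)])
    y = flow[window - 1 :]
    return X, y

rng = np.random.default_rng(0)
met = rng.random((30, 6))   # 30 days x 6 meteorological variables (synthetic)
flow = rng.random(30)       # synthetic daily streamflow

# Sample tensor shape for each candidate window length.
shapes = {w: make_windows(met, flow, w)[0].shape for w in (3, 5, 7, 14, 21)}
```

Input size grows linearly with window length, and the cost of self-attention over the window grows quadratically, which is consistent with the higher memory demand reported for windows of 14 days or more.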

Table 2 presents the simulation results for different time windows. When the time window is set to 3 days, the RRT model fails to incorporate sufficient antecedent meteorological information, leading to unsatisfactory performance, with PBIAS reaching 24.2% in the validation period. At a 7-day window, the model achieves optimal results, exhibiting similar performance between validation and training, with NSE and RSR reaching 0.79 and 0.46, respectively, and PBIAS within 6%. The model thus demonstrates both accuracy and stability, while the 5-day window ranks second in performance. Consequently, 4–6 days of historical meteorological data appear the most influential in runoff forecasting using meteorological inputs alone. When the window extends to 14 days or more, training performance declines relative to the 5-day and 7-day windows, indicating overfitting caused by excessive data. Moreover, windows of at least 14 days markedly slow training and increase memory demands (approximately 15 GB at 14 days). Therefore, in subsequent analyses, the fully masked RRT model employs a 7-day window.
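For reference, the evaluation metrics can be written as short NumPy functions. These follow the standard Nash–Sutcliffe efficiency and the usual RSR and PBIAS definitions used in hydrological model evaluation; the PBIAS sign convention (positive means underestimation) is an assumption, since the text does not state it.

```python
import numpy as np

def nse(obs, sim):
    """Nash-Sutcliffe efficiency: 1 - SSE / variance of observations about their mean."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    return 1.0 - np.sum((obs - sim) ** 2) / np.sum((obs - obs.mean()) ** 2)

def rsr(obs, sim):
    """RMSE divided by the standard deviation of observations; equals sqrt(1 - NSE)."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    return np.sqrt(np.sum((obs - sim) ** 2)) / np.sqrt(np.sum((obs - obs.mean()) ** 2))

def pbias(obs, sim):
    """Percent bias; under this (assumed) convention, positive values mean underestimation."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    return 100.0 * np.sum(obs - sim) / np.sum(obs)

obs = [10, 20, 30, 40]
sim = [12, 18, 27, 35]
scores = (nse(obs, sim), rsr(obs, sim), pbias(obs, sim))
```

Because RSR equals the square root of 1 − NSE, the reported pair NSE = 0.79 and RSR = 0.46 is internally consistent (√0.21 ≈ 0.46).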

Figure 8 illustrates the runoff predictions for the 7-day window, revealing comparable performance in validation and testing. Although mid-to-low flows are fitted satisfactorily, high flows are notably underestimated. For instance, on 4 June 2008 the simulated flow is 1439 m³/s versus an observed 3420 m³/s, a 58% underestimate; on 8 June 2011 the model simulates 2507 m³/s against an observed 4410 m³/s, a 43% underestimate. Extreme-flow simulations in the testing period (APE-2% = 24.46%) nevertheless outperform those in the validation period, partly because peak flows in the test set are generally smaller. However, peak flows are still frequently underestimated.
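The quoted peak deviations are signed relative errors of the simulated peak with respect to the observed peak. A minimal check in Python, using the flows given in the text (the helper name is hypothetical):

```python
def relative_error_pct(observed, simulated):
    """Signed relative error of a simulated peak, in percent (negative = underestimate)."""
    return 100.0 * (simulated - observed) / observed

peaks = {
    "2008-06-04": (3420.0, 1439.0),  # (observed, simulated) in m^3/s
    "2011-06-08": (4410.0, 2507.0),
}
errors = {day: relative_error_pct(obs, sim) for day, (obs, sim) in peaks.items()}
```

The two values come out at about −57.9% and −43.2%, matching the 58% and 43% underestimates reported.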

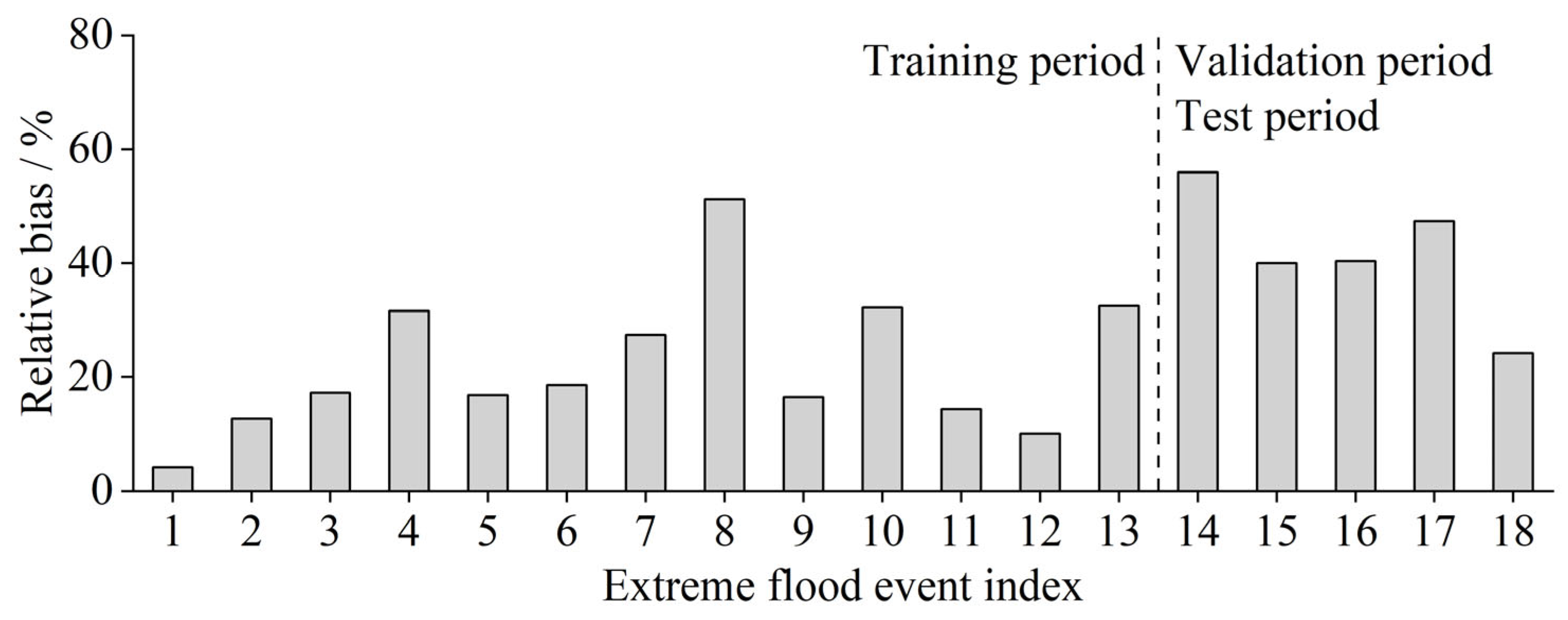

Figure 9 shows the model’s performance for extreme flood events, with relative errors ranging from 4.1% to 56.0% and an average of 27.4%. Two extreme events exceed 50% error. Notably, during training, the mean error for extreme events is lower (21.9%) but still considerable. This result arises from the limited number of extreme event samples relative to the full dataset, making them challenging to predict precisely.

Although some discrepancy persists in extreme flood simulations, the model achieves an overall “good” performance rating, accurately tracking runoff variations and surpassing traditional distributed hydrological models for low-flow conditions. Future work may focus on refining peak-flow estimates to enhance simulation accuracy.

- (2) Introducing a Runoff Classification Mechanism for the RRT Model

Although the fully masked RRT model has been successfully applied to long-term runoff forecasting, it still struggles to simulate extreme flow events accurately. Because extreme events are rare, they are underrepresented in the training dataset, which leads to systematic underestimation of these events in actual forecasts. To address this limitation, we adopt a classification-based approach in which the meteorological inputs associated with low-to-moderate flows, high flows, and extremely high flows are separated into three datasets, each of which is used to train an independent RRT model.

For classification, we modify the Transformer model to support a cross-entropy loss function and one-hot encoded labels, where [1, 0, 0], [0, 1, 0], and [0, 0, 1] correspond to low-to-moderate, high, and extremely high flows, respectively. A Softmax activation function outputs the probabilities for each category, and classification accuracy measures the proportion of correctly categorized samples. Based on historical flow data and frequency analysis, we classify flows below the 80th percentile, between the 80th and 95th percentiles, and above the 95th percentile into three subsets, along with their corresponding meteorological sequences. To rigorously evaluate model performance, we split each subset into training (80%), validation (10%), and testing (10%) sets. Training ceases once the validation accuracy plateaus for multiple iterations, and the final model is assessed using the test set.
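The labeling and loss described above can be sketched in NumPy. The 80th and 95th percentile thresholds come from the text; the use of `np.digitize`, the tie-breaking at exactly the threshold values, and the helper names are assumptions for illustration. The Transformer itself is not reproduced here; `logits` stands in for its pre-Softmax output.

```python
import numpy as np

def flow_classes(flow, p_high=80, p_extreme=95):
    """Label each day 0 (low-to-moderate), 1 (high) or 2 (extremely high)
    using percentile thresholds of the historical flow record."""
    q_high, q_extreme = np.percentile(flow, [p_high, p_extreme])
    # digitize: x < q_high -> 0, q_high <= x < q_extreme -> 1, x >= q_extreme -> 2
    return np.digitize(flow, [q_high, q_extreme])

def one_hot(labels, n_classes=3):
    """Map integer labels to one-hot rows, e.g. 0 -> [1, 0, 0]."""
    return np.eye(n_classes)[labels]

def softmax_cross_entropy(logits, targets):
    """Mean cross-entropy between softmax(logits) and one-hot targets."""
    z = logits - logits.max(axis=1, keepdims=True)              # numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))  # log-softmax
    return -np.mean(np.sum(targets * log_probs, axis=1))

flow = np.arange(1, 101, dtype=float)  # synthetic record of 100 daily flows
labels = flow_classes(flow)
targets = one_hot(labels)
```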

Classification results are shown in Table 3. The Transformer model demonstrates strong classification performance, achieving up to 99.4% accuracy on the training set and 97.5% on both the validation and testing sets, which underscores its robustness and stability. Even the relatively scarce “extremely high” flow category exceeds 90% accuracy. The classification outcomes are then used to partition the data for the RRT model. To ensure that each time step has sufficient antecedent hydrometeorological inputs, a sliding-window approach is employed. After partitioning, each subset is used to train a fully masked RRT model. The same procedure is applied to the validation and testing sets, and the predictions from the three models are merged in their original time order to assess overall performance.
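Merging the three models' outputs back into one sequence amounts to routing each window by its predicted class and writing the predictions back at their original positions. In the sketch below, the three regressors are hypothetical placeholder callables standing in for the class-specific RRT models, and `labels` is assumed to be the classifier's output for each window.

```python
import numpy as np

def route_and_merge(X, labels, models):
    """Send each window to the regressor trained for its predicted flow class and
    reassemble the outputs in the original time order."""
    y_hat = np.full(len(X), np.nan)  # NaN marks any window whose class has no model
    for cls, model in models.items():
        idx = np.flatnonzero(labels == cls)  # positions of this class in the sequence
        if idx.size:
            y_hat[idx] = model(X[idx])
    return y_hat

# Usage with placeholder "models" that return a constant per class.
rng = np.random.default_rng(1)
X = rng.random((6, 7, 6))                 # 6 windows x 7 days x 6 variables
labels = np.array([0, 2, 1, 0, 1, 2])     # hypothetical classifier output
models = {
    0: lambda x: np.full(len(x), 10.0),
    1: lambda x: np.full(len(x), 100.0),
    2: lambda x: np.full(len(x), 1000.0),
}
merged = route_and_merge(X, labels, models)
```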

As shown in Table 4, the RRT model with runoff classification (RC-RRT) yields favorable results in both validation and testing, with NSE, RSR, and PBIAS reaching 0.91, 0.29, and 3.96%, respectively, in validation.

Figure 10 indicates reliable fitting in the low-to-moderate flow range and reasonable peak-flow forecasts between 2008 and 2011, although a substantial error persists for the June 2011 peak (observed 4410 m³/s vs. modeled 3032 m³/s). While this 31% discrepancy improves upon the 43% error of the fully masked RRT model, further refinement is needed. Training samples at such high flows are scarce: only one event since 1970 exceeded this level (5310 m³/s at Tunxi Station in July 1996), which points to the limits of data-driven extrapolation. Nonetheless, the classification-based approach achieves an overall “good” performance, with a particular gain in peak-flow simulation accuracy.

A comparison of the fully masked RRT model and its runoff-classification-based variant during the testing period (Figure 11) shows that the classification mechanism significantly improves peak-flow predictions without compromising mid-to-low flow accuracy. Specifically, APE-2% drops from 24.5% to 21.5%, and flow deviations above the 90th percentile decrease from 26% to 22%, effectively mitigating underestimation.
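The text does not define APE-2% explicitly; one common reading is the absolute percentage error computed over the highest 2% of observed flows. The sketch below implements that assumed definition, with the percentile cut-off as a parameter so that the same function also covers the above-90th-percentile deviation (`top_pct=10`). The function name is hypothetical.

```python
import numpy as np

def high_flow_ape(obs, sim, top_pct=2.0):
    """Absolute percent error over the days whose observed flow lies in the
    top `top_pct` percent of the record (assumed definition of APE-2%)."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    threshold = np.percentile(obs, 100.0 - top_pct)
    mask = obs >= threshold  # days counted as high flows
    return 100.0 * np.sum(np.abs(sim[mask] - obs[mask])) / np.sum(obs[mask])

obs = np.arange(1, 101, dtype=float)   # synthetic observed flows
sim = 0.8 * obs                        # a model that underestimates by 20% everywhere
ape_2 = high_flow_ape(obs, sim)                 # top 2% of observed flows
ape_10 = high_flow_ape(obs, sim, top_pct=10.0)  # flows above the 90th percentile
```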

Figure 12 shows the model’s performance on extreme flood events, with errors ranging from 0.4% to 40.9% (mean 14.7%). Apart from a 41% error in June 2016, the relative errors of all other events remain below 30%. Although errors in the validation and testing phases exceed those in training, the classification step reduces the average relative error from 41.6% to 26.5%; further improvement nonetheless remains possible.

While the RC-RRT model performs strongly overall, a closer look at the 2008–2018 validation and testing periods reveals declining performance in later years, with NSE decreasing from 0.91 to 0.85. This decline may be linked to global climate change and human activities altering the underlying hydrometeorological relationships, which the RRT model, relying solely on meteorological data, cannot fully capture. The study therefore couples the model with a physically based hydrological model, leveraging its ability to represent changes in underlying surface conditions to enhance simulation stability.

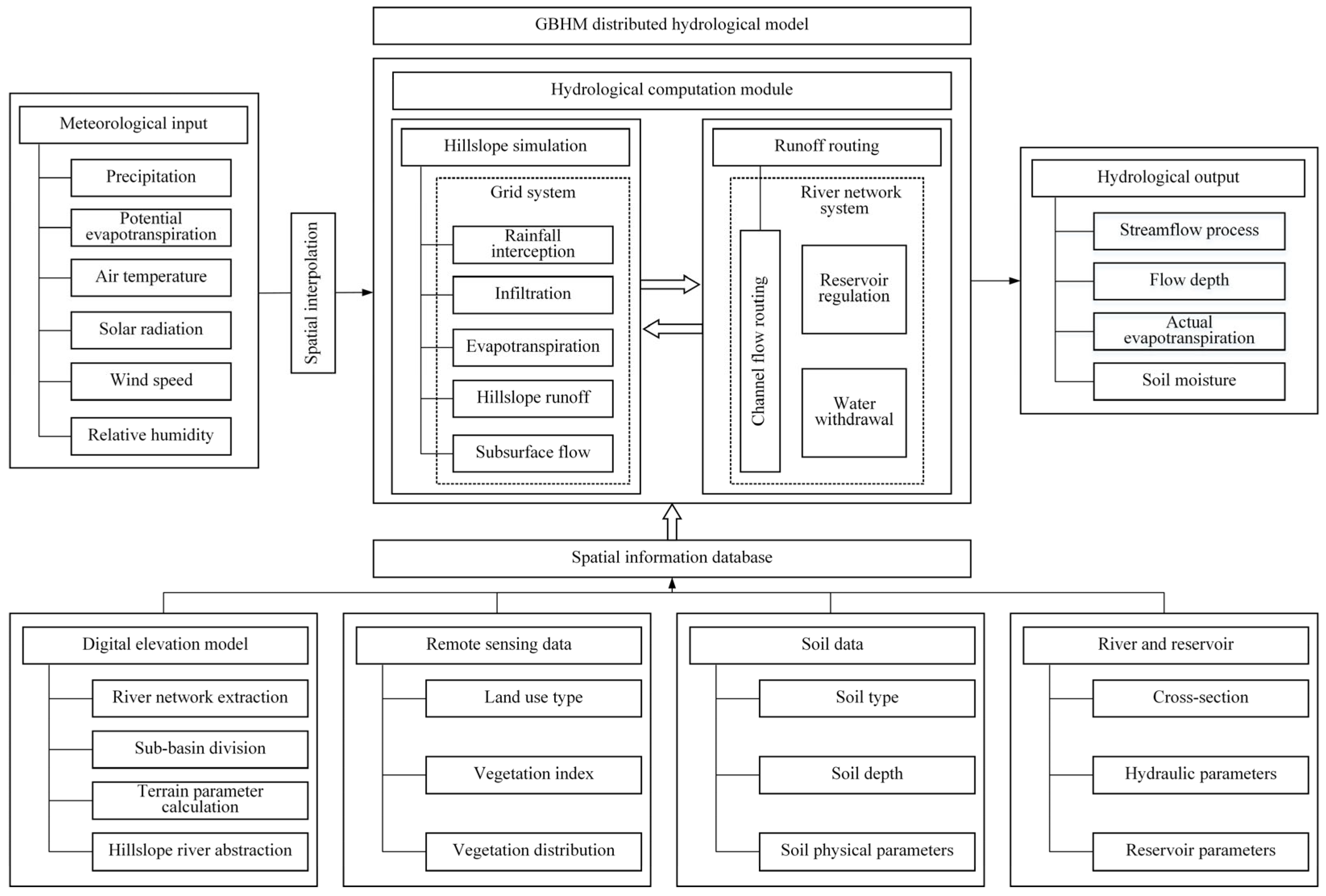

4.3. Simulation Performance and Analysis of the Coupled Model

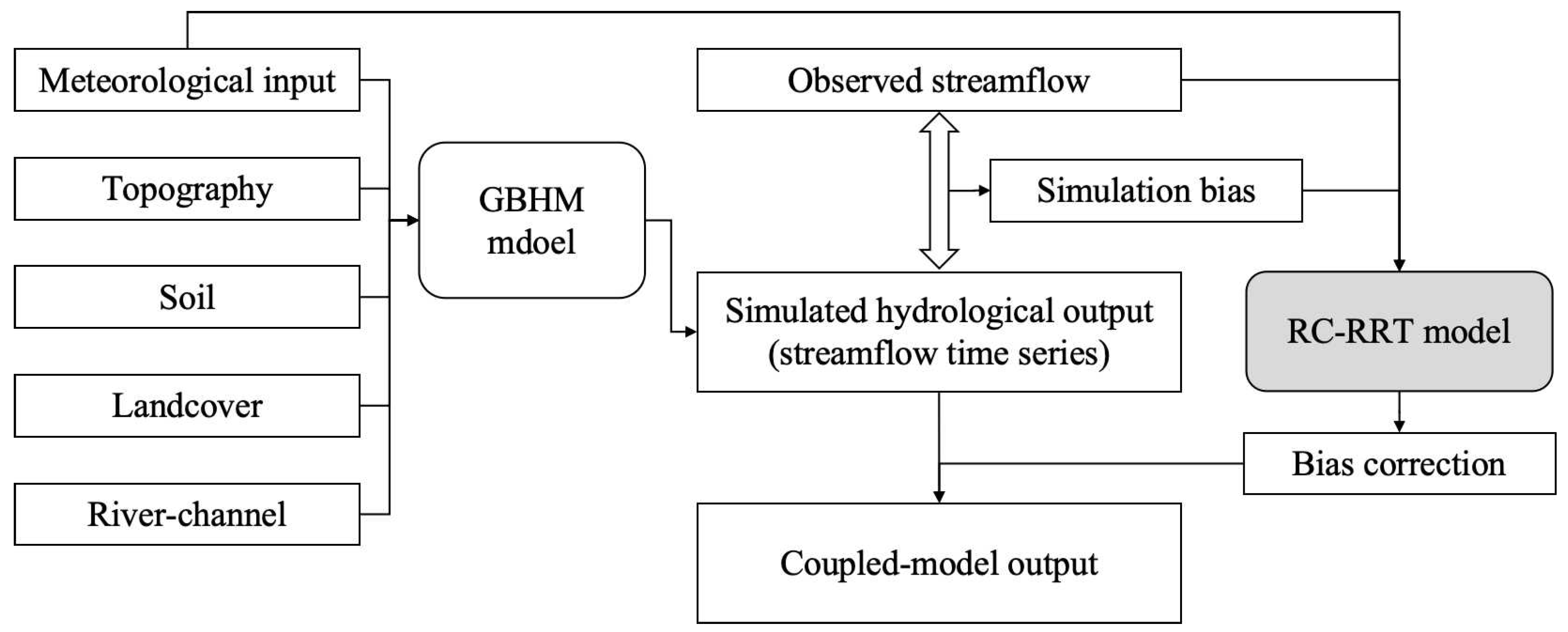

Owing to limitations in data accuracy and theoretical assumptions, simulation outputs from physically based models inevitably contain biases. These biases, however, are often autocorrelated, so machine learning models can be used to model the residuals and enhance predictive accuracy. In this approach, the observed streamflow is expressed as the sum of the physically based model output and a learnable error component. As illustrated in Figure 13, this residual correction strategy effectively mitigates systematic errors and improves overall simulation performance.
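In symbols, the residual formulation is Q_obs(t) = Q_GBHM(t) + ε(t), and the corrected simulation is Q̂(t) = Q_GBHM(t) + ε̂(t), where ε̂ is learned from the inputs. The sketch below illustrates this with synthetic data and ordinary least squares as a deliberately simple stand-in for the RC-RRT error model; the variable names, the damped "physical model", and the feature set are all assumptions made for illustration.

```python
import numpy as np

def nse(obs, sim):
    return 1.0 - np.sum((obs - sim) ** 2) / np.sum((obs - obs.mean()) ** 2)

rng = np.random.default_rng(42)
n = 2000
rain = rng.gamma(shape=0.5, scale=10.0, size=n)          # synthetic daily forcing
signal = np.convolve(rain, [0.5, 0.3, 0.2])[:n] + 5.0    # synthetic catchment response
q_obs = signal + rng.normal(scale=1.0, size=n)           # "observed" flow with noise
q_phys = 0.75 * signal + 2.0                             # stand-in physical model: damped peaks

# Residual target: the part of the observation the physical model misses.
residual = q_obs - q_phys
features = np.column_stack([np.ones(n), q_phys, rain])   # includes the physical output as input

split = 1500  # fit the error model on early days, evaluate on later days
coef, *_ = np.linalg.lstsq(features[:split], residual[:split], rcond=None)
q_corrected = q_phys + features @ coef                   # Q_hat = Q_phys + learned residual

nse_raw = nse(q_obs[split:], q_phys[split:])
nse_corrected = nse(q_obs[split:], q_corrected[split:])
```

Here the physical stand-in has a systematic, input-dependent bias, so even a linear residual model recovers most of it on the held-out period; the RC-RRT error model plays the same role with a far more flexible learner.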

As shown in Table 5, the GBHM–RRT coupled model based on runoff classification and bias correction (RCGR) substantially outperforms the RC-RRT model: at Tunxi Station, NSE rises from 0.82 to 0.93, RSR falls from 0.43 to 0.27, and APE-2% falls from 26.89% to 19.07%, while PBIAS remains below 0.5%. Moreover, the similar performance in validation and testing underscores the stability and reliability of the RCGR model.

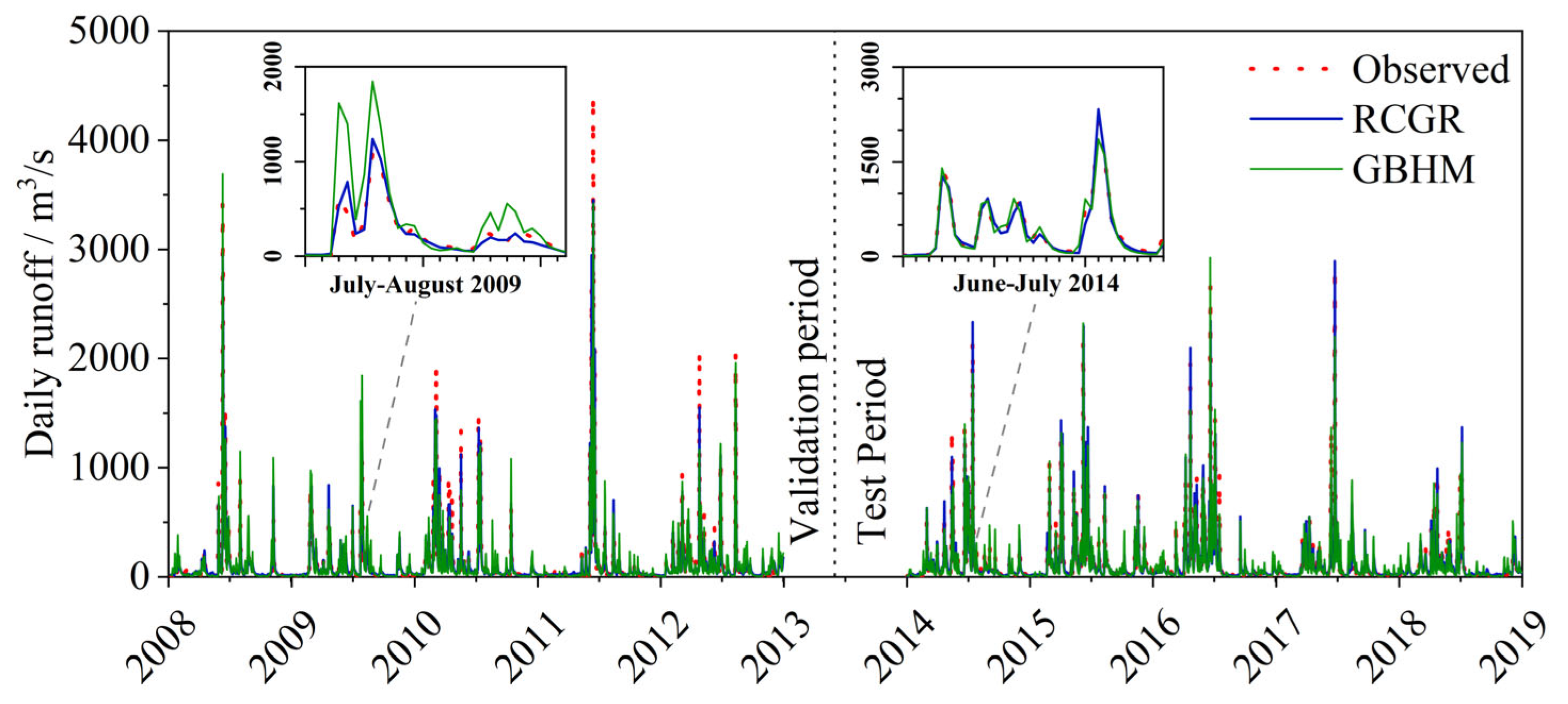

Since the GBHM model simulations are categorized only into calibration and validation periods, data from 2008 to 2018 were extracted for comparison with the RCGR-coupled model. After applying the RC-RRT model to learn and correct the simulation errors of GBHM, model performance improved significantly. For instance, at Tunxi Station during the testing period, the NSE increased from 0.86 to 0.93, RSR decreased from 0.37 to 0.27, and PBIAS improved from −4.49% to −0.50%. While the APE-2% for extreme flow simulation remained comparable, the average APE-2% across the three stations slightly decreased from 22.78% to 20.98%. As illustrated in

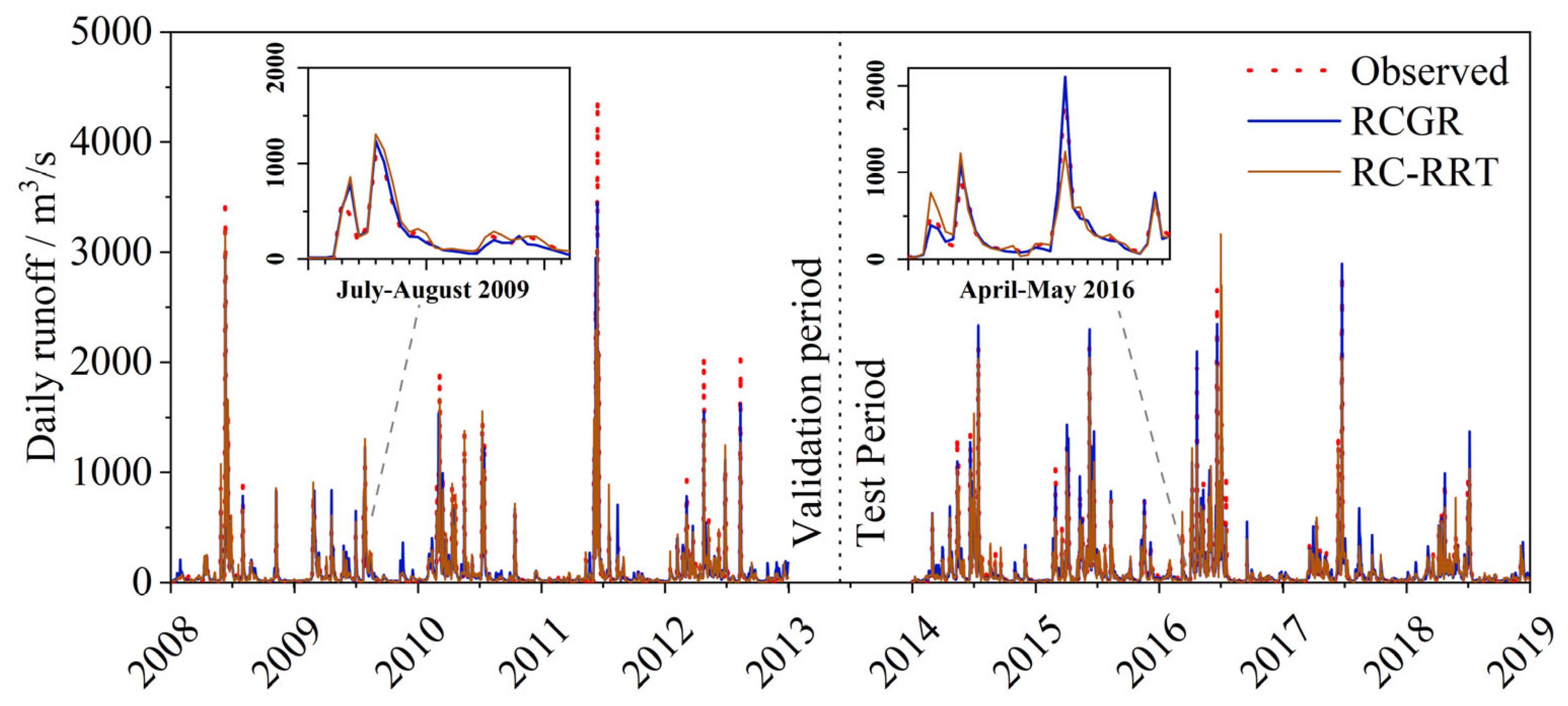

Figure 14, the RCGR -coupled model outperformed the standalone GBHM model, with the RRT component effectively correcting most peak-flow biases in GBHM, such as the overestimation during July–August 2009 and the underestimation in June–July 2014. However, for certain extreme events (e.g., June 2008 and June 2011), the correction capability of the RC-RRT model was limited.

Figure 15 contrasts the RCGR model with the RC-RRT model. Their validation performance differs only marginally (NSE of 0.93 versus 0.91, and peak-flow errors differing by less than 3%). Both reliably capture most flow variations and peaks (e.g., July–August 2009). The RCGR model, however, excels during testing, particularly for the April–May 2016 peak of around 1950 m³/s. Although the RC-RRT approach significantly improves peak simulations, it remains constrained by the inherent generalization limits of data-driven methods and incurs a 35% error for this peak, whereas the coupled approach reduces the error to under 10%, reflecting its superior modeling capability.

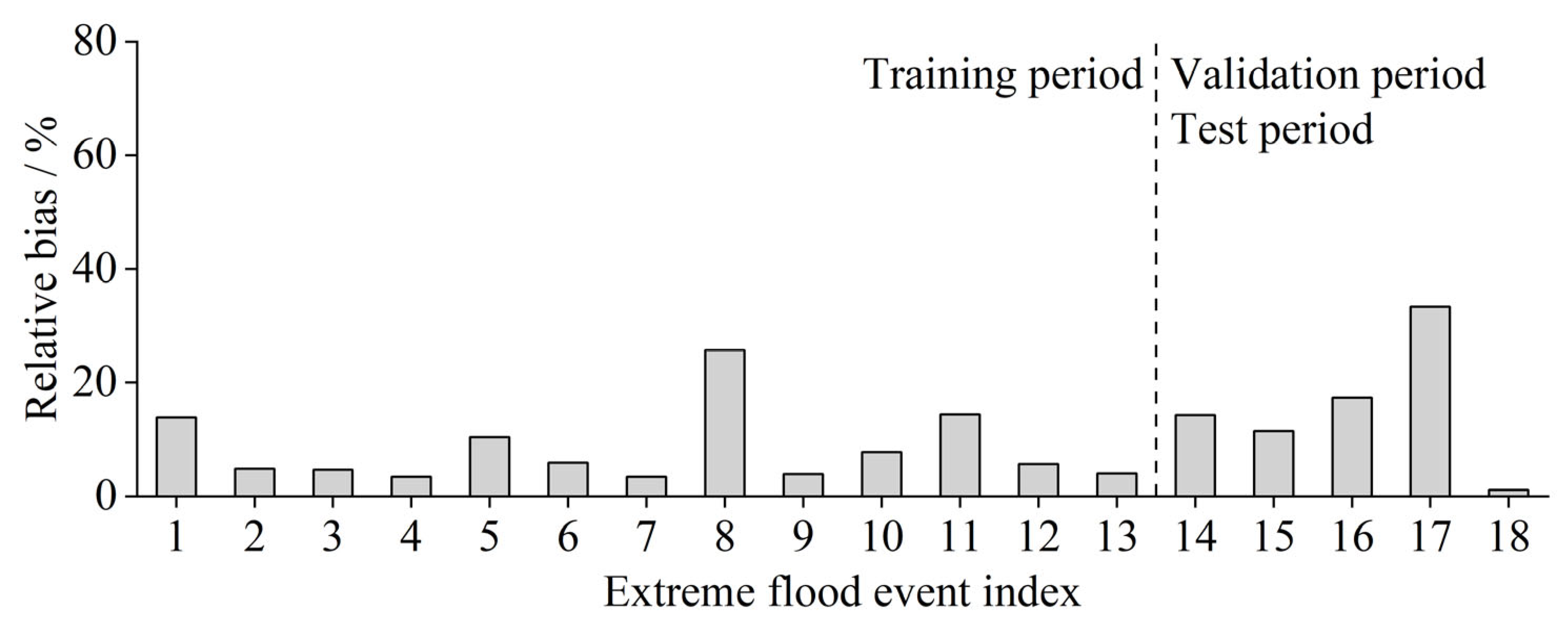

Figure 16 presents the RCGR model’s extreme flood simulations, with errors ranging from 1.1% to 33.3% (mean 10.3%). Apart from deviations of 25.7% and 33.3% in July 1996 and June 2016, all other extreme events remain below 20% error. The mean error is 8.3% in training and rises to 15.5% in validation and testing, which is still markedly lower than that of the standalone data-driven models and illustrates the improved stability gained through coupling. By incorporating GBHM outputs as an additional input, the coupled model benefits from the strong correlation between GBHM and observed flows, which helps alleviate overfitting and thereby extends temporal generalization. Validation and testing at Yuliang Station yield NSE values of 0.89 and 0.87, respectively, while Yuetan Station achieves 0.91 and 0.90, confirming that the coupled model generalizes better than the RC-RRT model.

4.4. Discussion

This section synthesizes the main findings and benchmarks them against standard baselines, including physically based (GBHM, XAJ, SWAT) and data-driven (ANN, LSTM, WLSTM, BiLSTM, RC-RRT) models.

- (1) Comparison with physically based hydrological models

The RCGR model was compared with the widely adopted XAJ and SWAT models applied in the XRB region [66,83], as summarized in Table 6. Across all evaluation periods, the RCGR-coupled model consistently outperformed the standalone physically based hydrological models and data-driven models in terms of NSE, RSR, PBIAS, and APE-2%. NSE improved from 0.70–0.85 to above 0.90, and PBIAS was kept within 7% at all stations. The coupled model also performed comparably in the validation and testing phases, indicating strong model stability.

Compared with the GBHM model, the RCGR model achieved over a 10% increase in NSE, particularly at Yuetan Station, where the relatively weaker performance of GBHM (NSE = 0.74) was significantly enhanced to 0.93 through deep learning of meteorological-hydrological relationships. When benchmarked against the standalone data-driven RRT model, both models exhibited similar performance during the validation period. However, in the testing phase, the RRT model showed slightly reduced accuracy at Tunxi and Yuliang stations. This suggests that data-driven models rely heavily on learned correlations within meteorological-hydrological sequences, which remain relatively stable over short periods. Under the dual influences of climate change and anthropogenic activities, these correlations may shift, and data-driven models—limited by the scope of meteorological input—fail to capture such changes, leading to diminished predictive performance and reduced generalization capabilities in extended forecasts. In contrast, coupling with a physically based distributed model mitigates this limitation, significantly enhancing model robustness.

For extreme flood events, Figure 17 presents a comparative error analysis of the coupled model and the standalone models. The SWAT model yielded relative errors ranging from 2.7% to 77.8%, with an average of 30.1%, while the Xin’anjiang model showed a range of 5.8% to 72.7%, averaging 28.4%. Both models performed consistently during the calibration (events 1–10) and validation (events 11–18) periods. Notably, the GBHM model maintained stable average relative errors of 31.4% and 31.2% for the two periods, which is attributed to its capacity to account for meteorological and land use changes. Although both the RC-RRT and RCGR models showed some discrepancies between the training (events 1–13) and validation/testing (events 14–18) phases, the coupled model markedly reduced the variability in extreme-event simulation accuracy across periods. It also preserved the robustness of the GBHM model under changing environmental conditions while achieving higher precision in simulating extreme hydrological events.

Physically based hydrological models demonstrate high accuracy and stability in simulating average hydrological conditions; however, they often exhibit considerable deviations when modeling extreme events. In contrast, data-driven models are adept at uncovering latent relationships among variables, granting them a significant advantage in simulating extremes. Therefore, integrating physically based models with data-driven approaches offers a promising strategy for enhancing the accuracy of extreme hydrological event simulations.

- (2) Comparison with data-driven models

Several widely used machine learning models for rainfall–runoff simulation were selected for comparison, including the Artificial Neural Network (ANN) [84], Long Short-Term Memory network (LSTM) [85], Wavelet-LSTM model (WLSTM) [74,86], and Bidirectional LSTM (BiLSTM) [87]. Because the primary objective of this study is long-sequence runoff simulation, all data-driven models followed the architectural design of the fully masked RRT model to ensure a consistent input data structure. A temporal window of 7 days was adopted, in which the meteorological data of each 7-day window were used to predict the runoff on the 7th day. The predicted runoff values for each 7th day were then concatenated to form the complete forecast sequence.

To ensure consistency across models, the dataset was partitioned identically to that of the Transformer model, with the training, validation, and testing periods accounting for 78%, 11%, and 11% of the total dataset, respectively. Model hyperparameters were tuned manually by trial and error, and the early stopping strategy matched that of the Transformer: training was terminated once the validation NSE showed no significant improvement over successive iterations, and the iteration with the best validation NSE was selected as the final model configuration. To account for the stochastic nature of model training, each model underwent 500 independent runs, and the best-performing run was adopted as the final model outcome. A detailed summary of model performance at Tunxi Station is presented in Table 7.
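The stopping rule and run selection described above can be expressed as plain Python over per-epoch validation NSE values. The `patience` and `min_delta` settings are assumptions (the text says only that training stops after successive iterations without significant improvement), and the precomputed score lists stand in for an actual training loop. Both function names are hypothetical.

```python
import math

def early_stopping_epoch(val_scores, patience=10, min_delta=1e-3):
    """Return (best_epoch, best_score) for a sequence of per-epoch validation NSE values,
    stopping once `patience` epochs pass without an improvement larger than min_delta."""
    best_epoch, best_score, waited = -1, -math.inf, 0
    for epoch, score in enumerate(val_scores):
        if score > best_score + min_delta:
            best_epoch, best_score, waited = epoch, score, 0  # new best checkpoint
        else:
            waited += 1
            if waited >= patience:
                break  # no significant improvement for `patience` epochs
    return best_epoch, best_score

def best_of_runs(runs, **kwargs):
    """Pick the run whose early-stopped checkpoint has the highest validation NSE."""
    results = [early_stopping_epoch(r, **kwargs) for r in runs]
    best_run = max(range(len(runs)), key=lambda i: results[i][1])
    return best_run, results[best_run]

# Two hypothetical training runs (validation NSE per epoch).
runs = [
    [0.5, 0.6, 0.7, 0.71, 0.705, 0.70, 0.69],
    [0.4, 0.8, 0.79, 0.78, 0.77],
]
choice = best_of_runs(runs, patience=3, min_delta=0.005)
```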

In both the validation and testing phases, apart from the RCGR coupled model, the RC-RRT model and the other data-driven approaches performed comparably, with NSE values generally exceeding 0.7 and PBIAS kept at relatively low levels. This indicates that the RC-RRT model performs on par with widely adopted and validated models such as ANN, LSTM, and their variants in long-sequence rainfall–runoff simulation. The simulation errors for extreme flood events shown in Figure 18 indicate that the relative errors of the ANN, LSTM, WLSTM, and BiLSTM models range over 0.7–45.7%, 2.3–40.8%, 0.8–41.4%, and 7.4–60.6%, respectively, with corresponding average errors of 14.5%, 15.4%, 16.0%, and 27.0%. These results suggest that, excluding BiLSTM, the extreme-event simulation accuracy of the data-driven models is generally comparable to that of the RC-RRT model, with no significant performance disparity. Although the BiLSTM model performs well overall, its accuracy in simulating extreme events remains suboptimal. The similar performance of the various data-driven models in extreme-event scenarios implies that model architecture exerts limited influence on simulation accuracy under such conditions; instead, the primary limitation stems from the scarcity of extreme-event samples in the training dataset. This is further corroborated by the observation that the RC-RRT model, which incorporates runoff classification, achieved over a 50% improvement in extreme-event simulation accuracy compared with the fully masked RRT model. Additionally, standalone data-driven models show notable discrepancies in extreme-event performance across the training, validation, and testing periods, highlighting their limited robustness and generalizability. In contrast, the RCGR coupled model performs consistently across all periods, delivering high and stable accuracy in simulating extreme hydrological events.