1. Introduction

As a low-emission energy source, natural gas serves as a pivotal component in global energy systems and has become a crucial choice for the energy transition in numerous countries. It is well known that accurate prediction of natural gas prices can facilitate effective policy formulation by governments, help ensure a secure energy supply, and optimize investment returns on energy projects, thereby supporting sustainable development. However, natural gas prices are subject to significant volatility due to geopolitical tensions, supply disruptions, and fluctuating demand, posing challenges for both market participants and policymakers. From a macroeconomic perspective, persistent price increases in natural gas can trigger cost–push inflation dynamics, where rising input costs propagate through production chains and contribute to broader price-level pressures [1,2]. At the same time, the efficiency of energy markets, in which prices are expected to reflect all publicly available information, imposes inherent limits on predictability, consistent with the semi-strong form of the Efficient Market Hypothesis [3]. In recent years, the application of natural gas has expanded significantly across various sectors, including power generation, petroleum, and transportation. Driven by the global transition away from coal and the increasing adoption of natural gas as a low-emission energy source, the accurate forecasting of natural gas prices has emerged as a vital concern across energy markets, industrial operations, and policy planning [4].

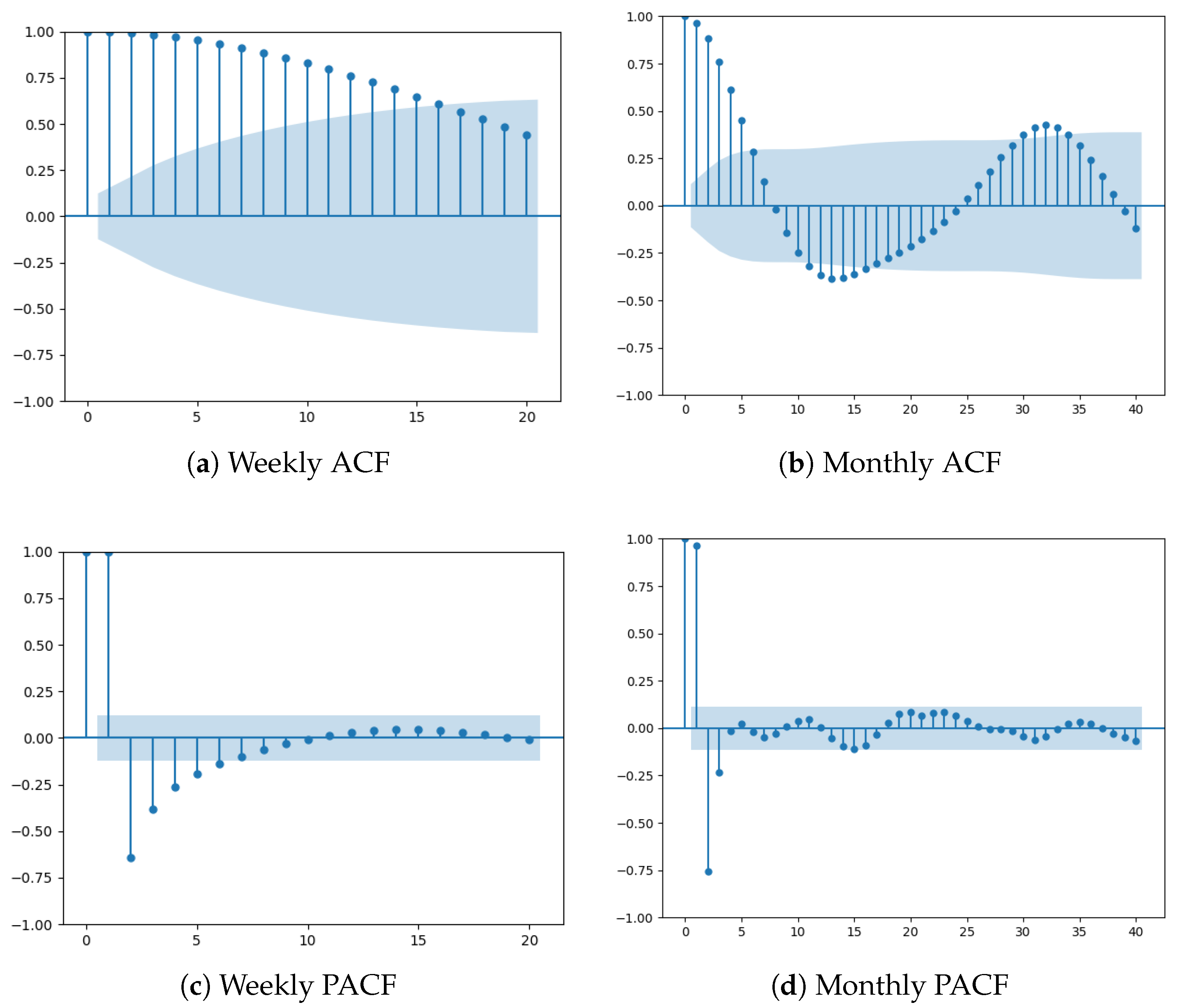

Owing to the criticality of natural gas price forecasting, some work has studied how to predict commodity prices through different models. For example, Li and Kong [

5] constructed the ARIMA, the SARIMA, and the ARIMA-GARCH models to predict natural gas prices. The corresponding shows that SARIMA is the best model for time series data with the seasonal effect and trend effect. Moreover, Su et al. [

6] conducted predictive modeling of natural gas prices using four individual machine learning methods, and evaluated their forecasting performance separately. Bilgili and Pinar [

7] employed a LSTM network to forecast gross energy consumption (GEC) in Turkey and compared its performance with the SARIMA model, with results indicating that the LSTM model generally outperforms SARIMA. For forecasting liquefied natural gas prices, Kim et al. [

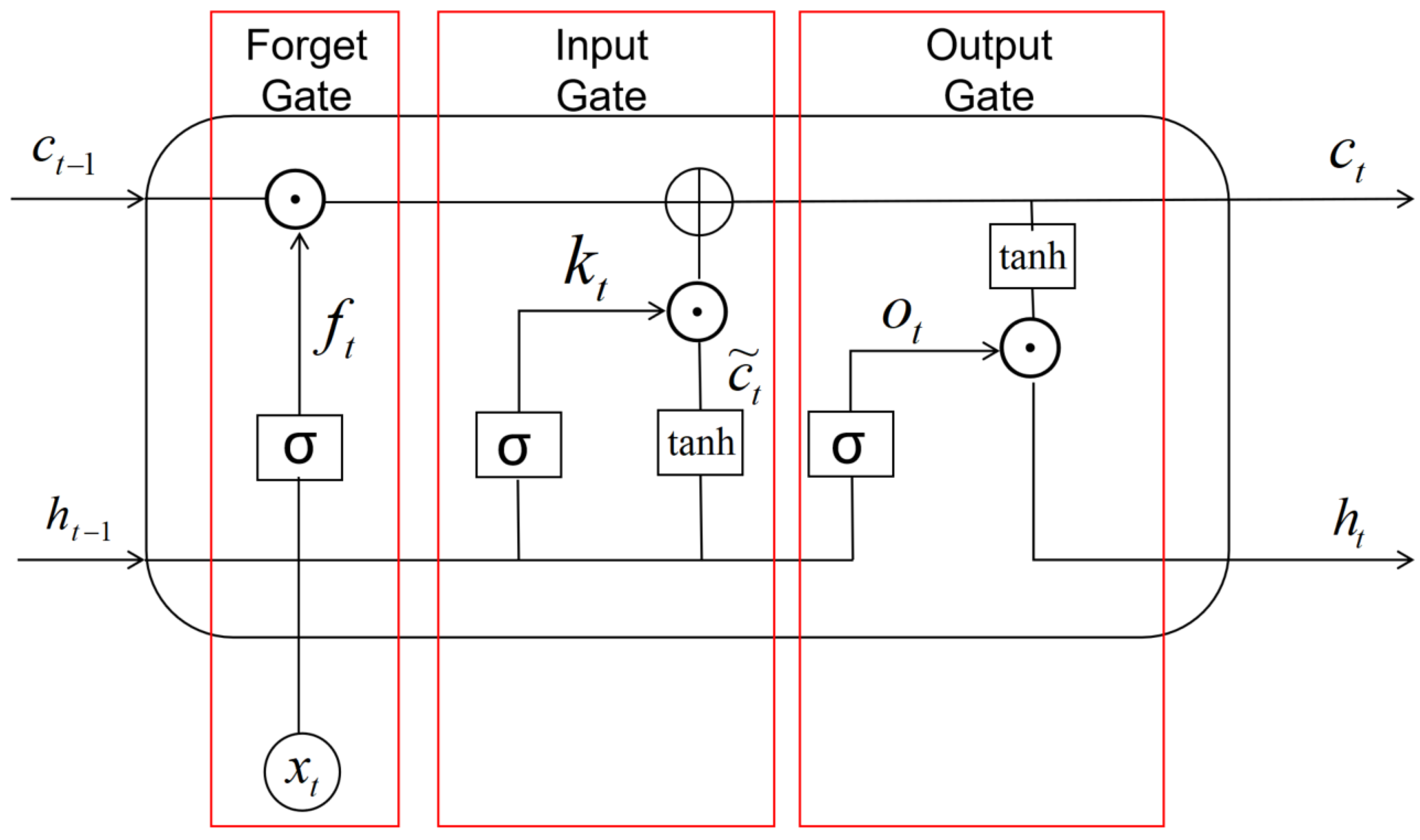

8] compared simple recurrent neural networks, LSTM network, and the gated recurrent unit model. The LSTM network demonstrates superior performance in modeling nonlinear and volatile time series by efficiently capturing long-term dependencies and mitigating the vanishing gradient issue.

The aforementioned works focus mainly on comparing the predictive performance of individual models such as ARIMA, SARIMA, and LSTM. Ensemble models, by contrast, can combine the strengths of several individual models, and this approach has recently received considerable attention. He et al. [9] proposed a novel hybrid framework that integrates the SARIMA model with a Convolutional Neural Network (CNN) and an LSTM network for high-frequency tourism demand forecasting. The model leverages SARIMA to extract linear components, CNN to capture hierarchical data structures, and LSTM to model long-term temporal dependencies. Parasyris et al. [10] evaluated the efficacy of SARIMA, LSTM, and hybrid models in forecasting meteorological variables for a two-day weather prediction in Greece, revealing that hybrid methodologies outperformed the others for temperature and wind speed. Moreover, Peirano et al. [11] proposed a hybrid SARIMA-LSTM framework designed to robustly capture linear and nonlinear temporal dependencies in inflation rate forecasting for five emerging economies in Latin America, demonstrating superior predictive accuracy compared to standalone models and other combined approaches. Tahyudin et al. [12] demonstrated that the hybrid SARIMA-LSTM framework significantly enhances forecasting accuracy for US COVID-19 case predictions, with reduced RMSE and MAE compared to individual SARIMA and LSTM models. Yu and Song [13] developed a multi-stage hybrid model (VMD-GRU-AE-MLP-RF) that combines decomposition, correlation-based feature grouping, group-specific deep learning networks, and ensemble integration through random forest to enhance natural gas price forecasting performance. However, few existing studies have systematically compared the impact of different ensemble methods on forecasting performance.

Despite the proliferation of hybrid and decomposition-based models, most adopt fixed integration mechanisms without systematic evaluation of alternative ensemble strategies. To illustrate the landscape and limitations of recent studies, Table 1 summarizes representative works in natural gas price forecasting.

As summarized in Table 1, an increasing number of studies have adopted hybrid and data-driven approaches for natural gas price forecasting. However, several key limitations remain in the existing literature. For instance, many models rely on fixed ensemble strategies. Deep learning models such as LSTM have demonstrated strong performance but often suffer from overfitting and high computational complexity. In contrast, traditional statistical models are constrained by linear assumptions and are therefore unable to capture nonlinear dynamics inherent in time series data.

To overcome these challenges, some works have investigated various ensemble approaches to integrating individual models, such as stacking, bagging, and weighted averaging. For example, Abdellatif et al. [20] proposed a stacking ensemble learning model to forecast day-ahead PV power. In addition, Nguyen and Byeon [21] combined five base learners in a stacking ensemble model, with logistic regression (LR) as the meta-classifier, to improve the early detection of depression in Parkinson’s disease patients. Li et al. [22] employed four machine learning models as base learners and adopted logistic regression as the meta-learner to construct a stacking ensemble model for predicting regional CO₂ concentrations. This hybrid model demonstrated high accuracy and reliability in the forecasting task. Duan et al. [23] improved SVR performance through complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) preprocessing and a bagging ensemble. Moreover, Adnan et al. [24] integrated the Locally Weighted Learning (LWL) algorithm with the bagging ensemble technique to forecast streamflow in the Jhelum Basin, Pakistan, while Wang et al. [25] presented an ensemble model that combines three individual models using a weighted approach to forecast natural gas prices. Shashvat et al. [26] introduced a weighted averaging framework for forecasting the incidence rates of infectious diseases such as typhoid fever and evaluated its performance against conventional predictive models through comparative analysis.
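Of the three integration strategies mentioned above, weighted averaging is the simplest to illustrate. The following is a minimal sketch, assuming that each base model is weighted by the inverse of its mean squared error on a validation window; this weighting rule, the function names, and the toy numbers are illustrative assumptions and are not taken from any of the cited studies.

```python
from typing import List, Sequence


def mean_squared_error(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Average squared difference between actual and predicted values."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def inverse_error_weights(actual: Sequence[float],
                          forecasts: Sequence[Sequence[float]]) -> List[float]:
    """Weight each base model by 1 / MSE, normalised so the weights sum to 1.

    Assumes no base model has zero validation error (otherwise 1 / MSE is undefined).
    """
    inverse = [1.0 / mean_squared_error(actual, f) for f in forecasts]
    total = sum(inverse)
    return [w / total for w in inverse]


def weighted_average(forecasts: Sequence[Sequence[float]],
                     weights: Sequence[float]) -> List[float]:
    """Combine the base forecasts point by point using fixed weights."""
    horizon = len(forecasts[0])
    return [sum(w * f[t] for w, f in zip(weights, forecasts)) for t in range(horizon)]


# Toy validation data (illustrative numbers only, not results from any study).
actual = [3.0, 3.2, 3.1, 3.5]
model_a = [3.1, 3.1, 3.2, 3.4]  # hypothetical forecasts from a statistical model
model_b = [2.6, 3.6, 2.7, 3.9]  # hypothetical forecasts from a neural model

weights = inverse_error_weights(actual, [model_a, model_b])
combined = weighted_average([model_a, model_b], weights)
```

In this toy case the more accurate base model receives most of the weight. Stacking would replace the fixed weights with a meta-learner trained on the base forecasts, and bagging would instead average models fitted to bootstrap resamples of the training data.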

To facilitate a more intuitive comparison of ensemble methods, Table 2 summarizes recent studies with respect to different integration approaches.

As shown in Table 2, ensemble learning is increasingly applied in forecasting. However, most studies have focused on a single integration strategy and lack a unified comparative evaluation.

To better assess the contribution of forecasting models to sustainability, this study reviews and compares the temporal scale choices adopted by previous researchers in energy forecasting, with a systematic summary provided in Table 3.

As illustrated in Table 3, most existing research is confined to a single time scale or aggregates high-frequency data to a lower temporal resolution, with limited systematic assessment of forecasting performance across temporal resolutions. This paper addresses this gap through a comparative analysis of natural gas price predictions on two time scales, weekly and monthly.

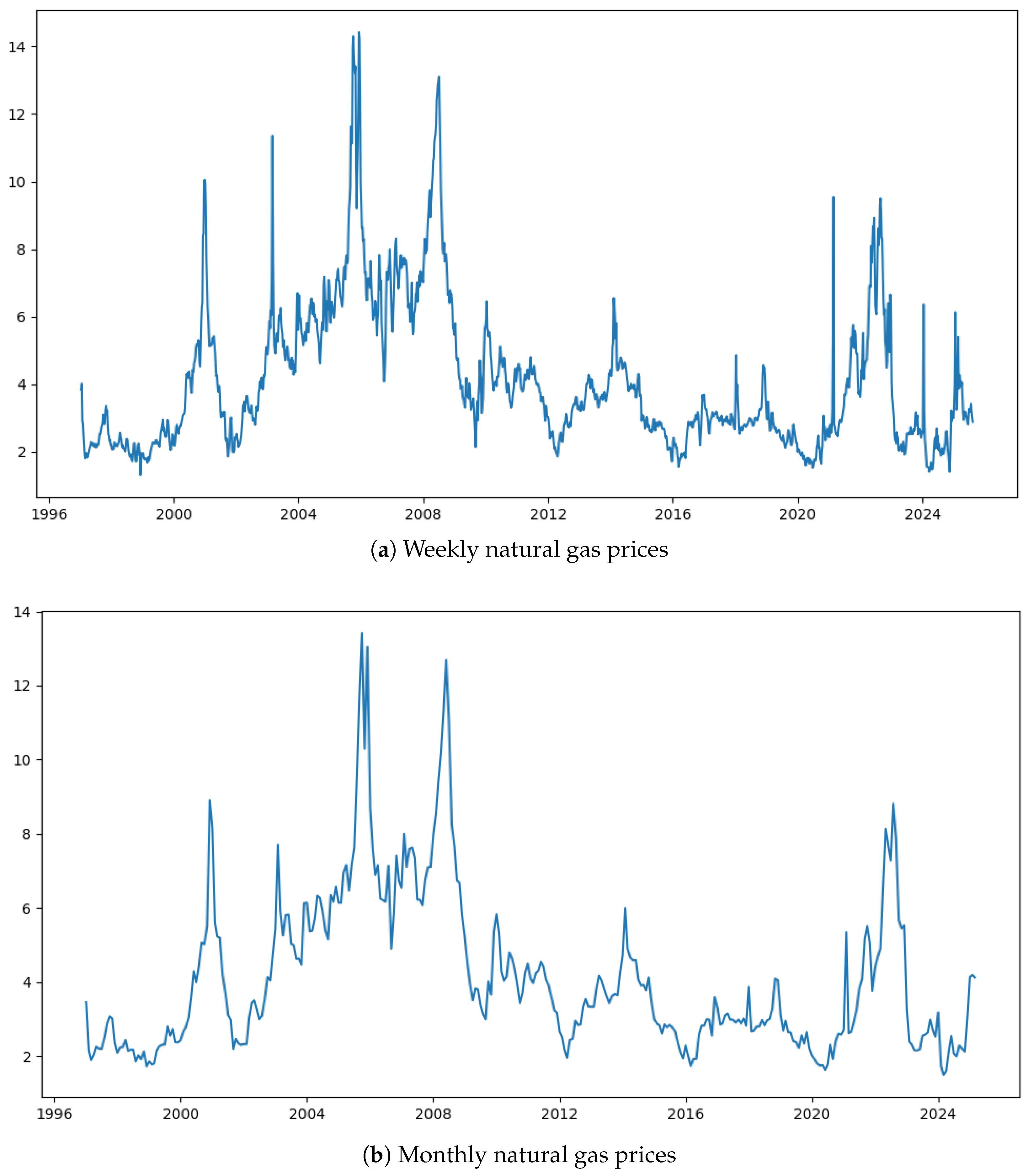

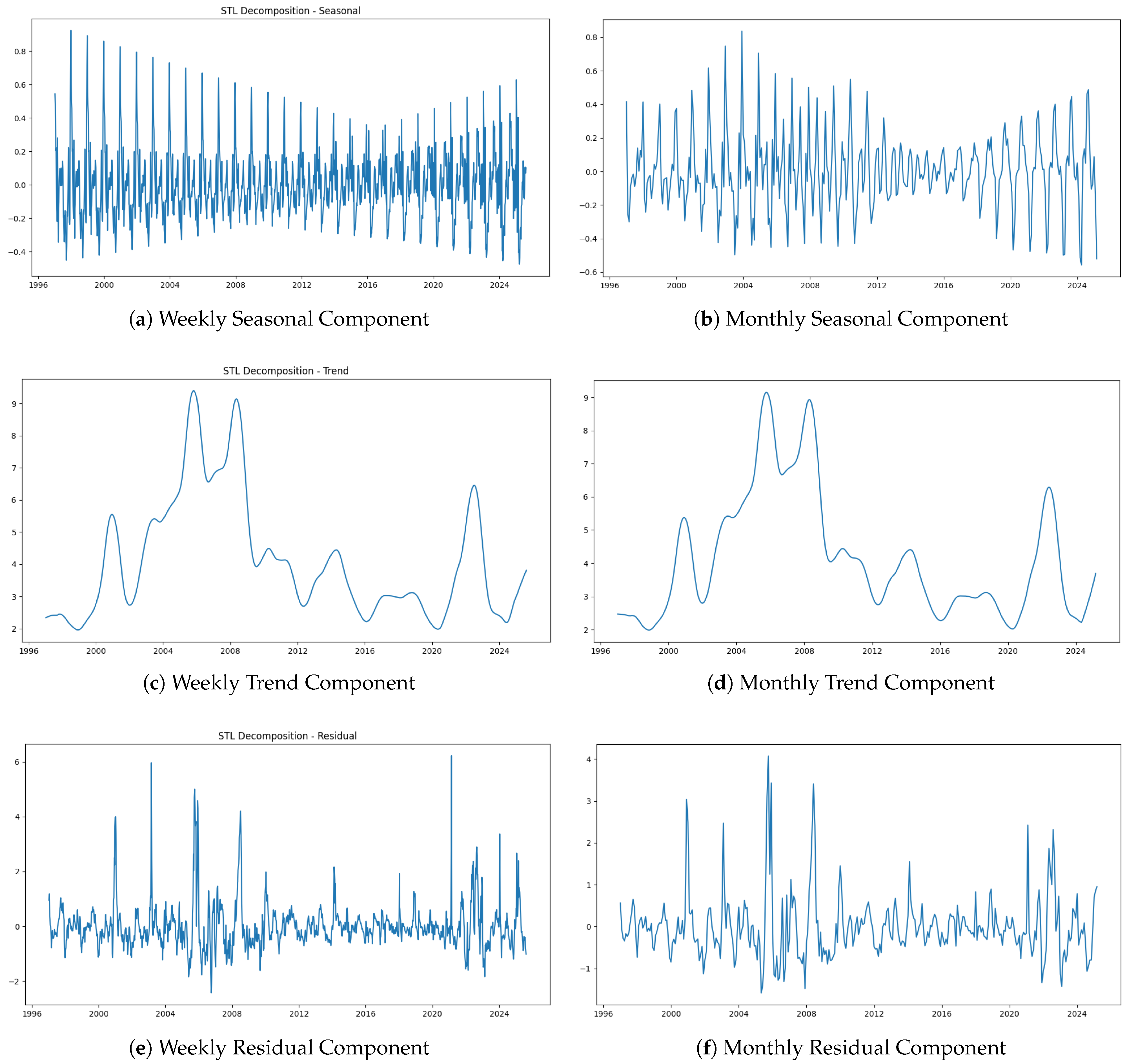

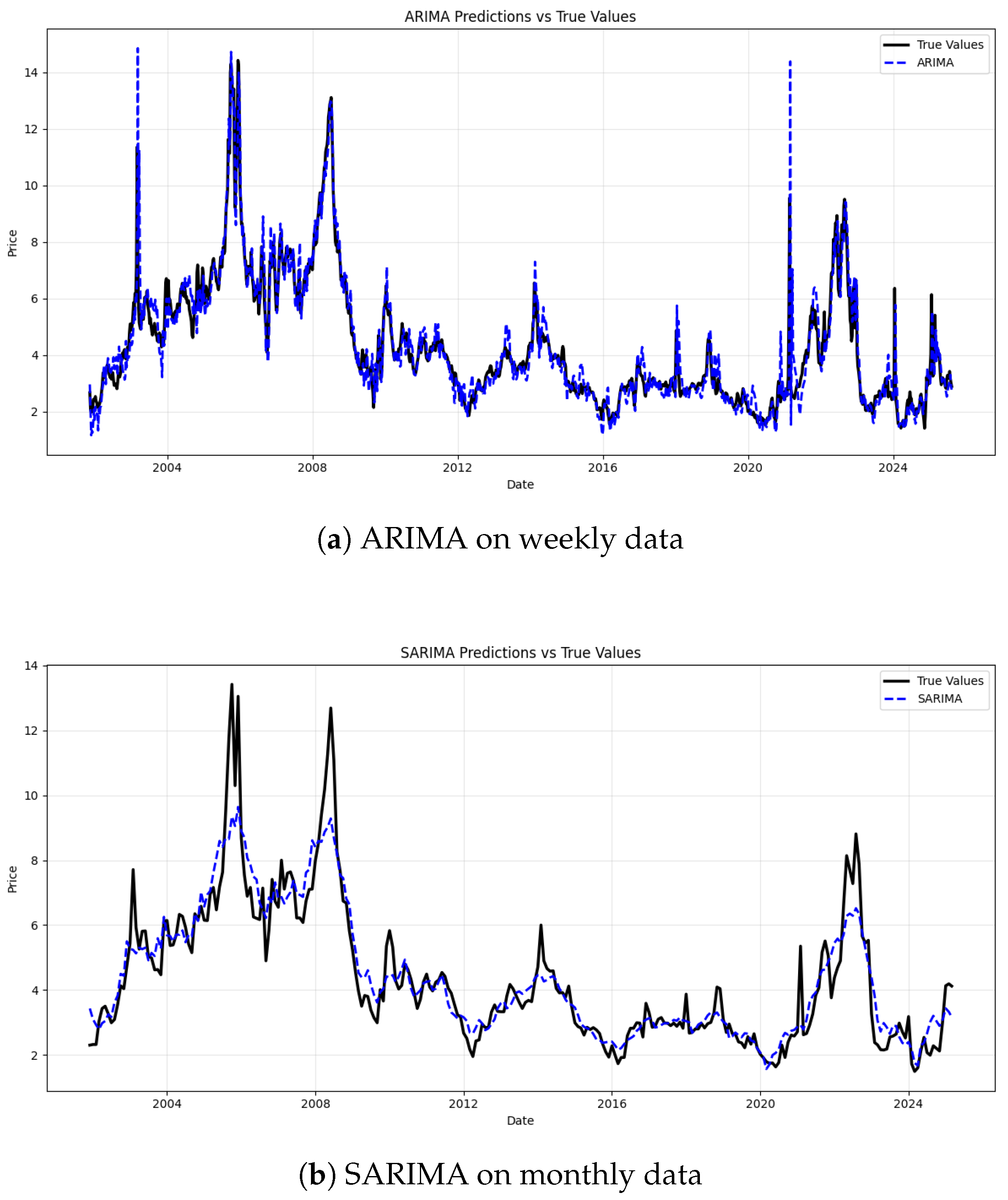

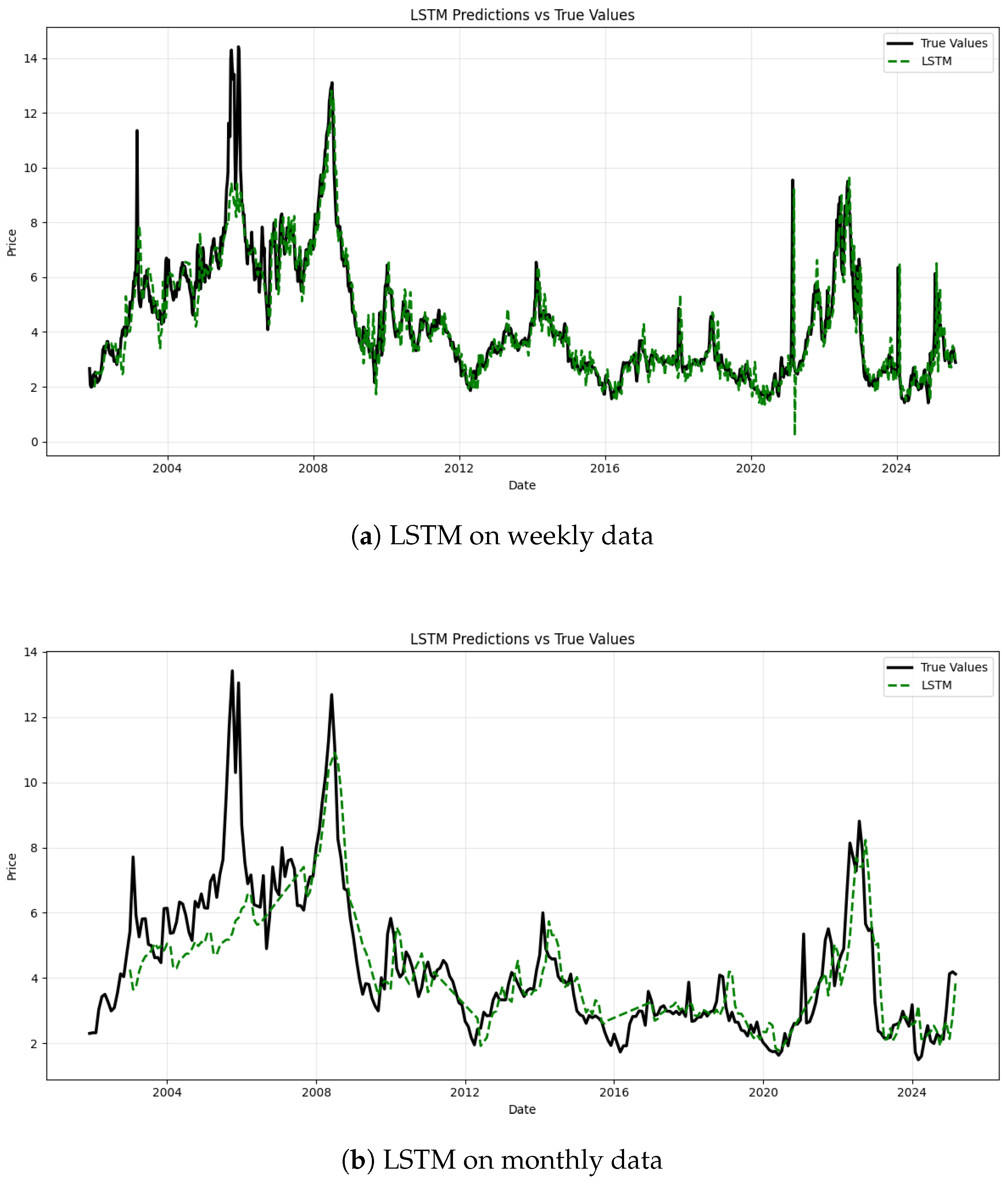

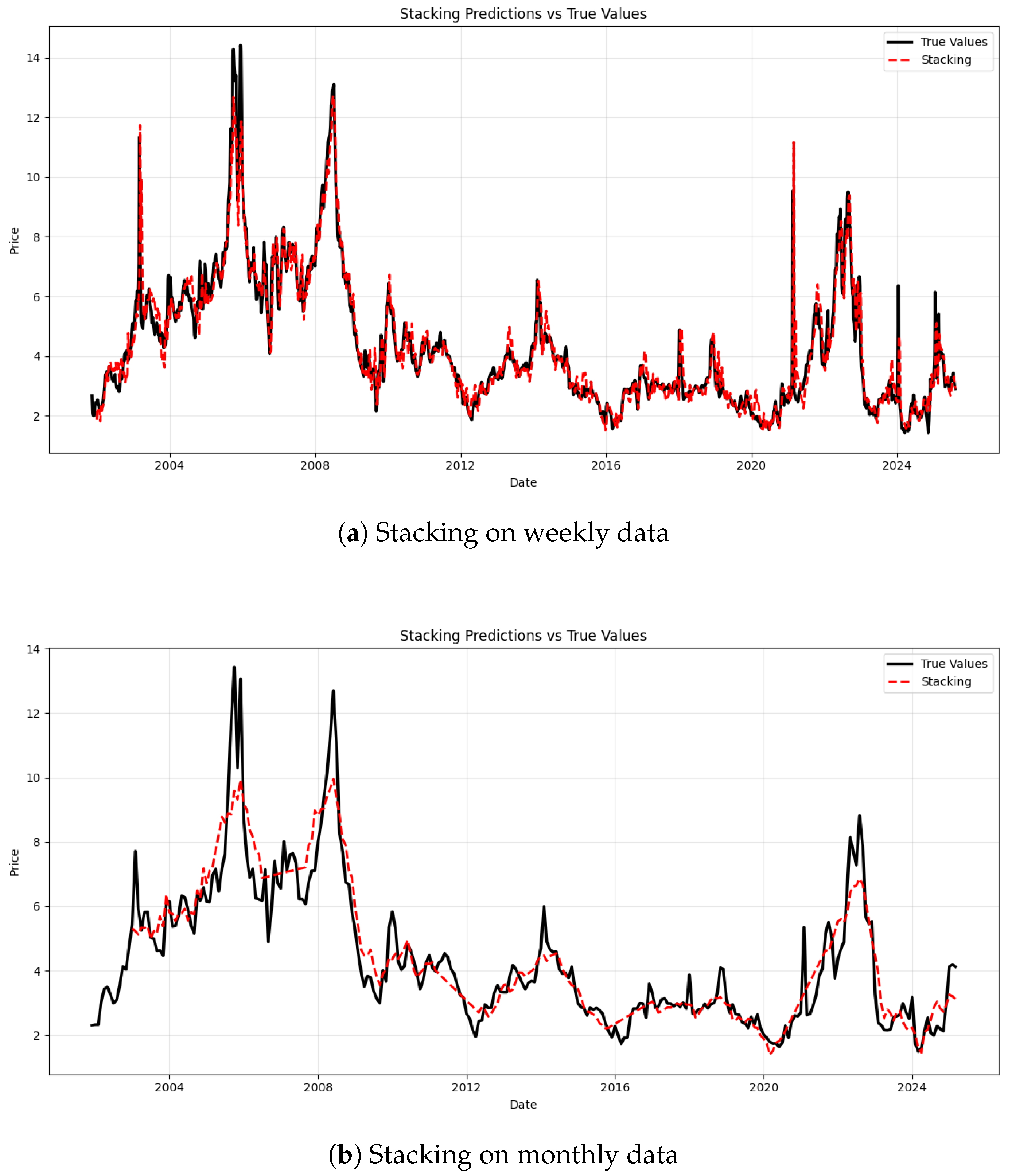

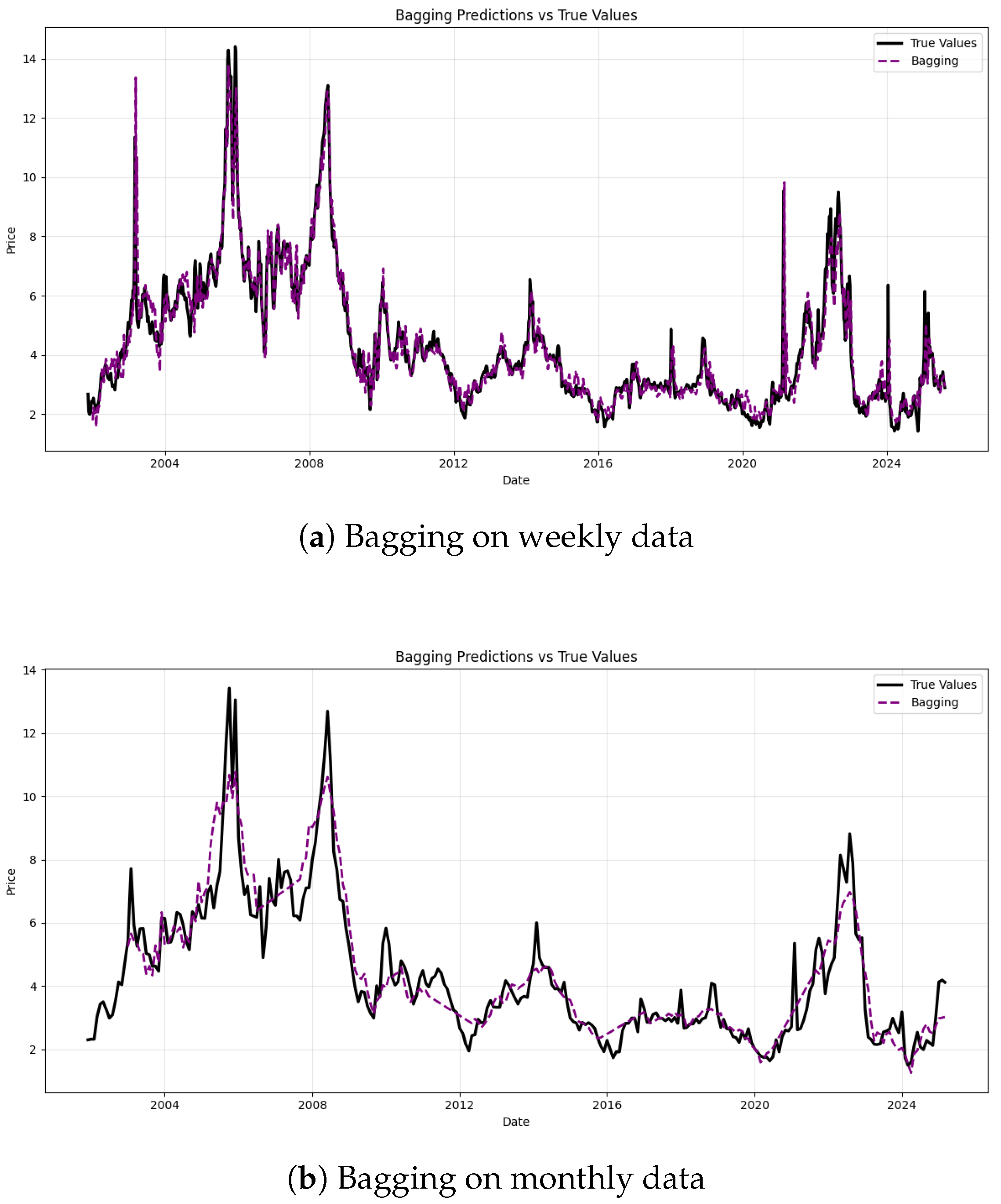

Based on the aforementioned studies, it is evident that relying solely on a single model, a single integration approach, or a single time scale entails certain limitations. To address these limitations, this paper investigates natural gas price forecasting accuracy across two distinct time scales by constructing multiple hybrid models and exploring various ensemble strategies. Firstly, the models are constructed at two distinct temporal scales, using weekly and monthly data. The natural gas price series are decomposed via the STL method into interpretable trend, seasonal, and residual components. Considering the trade-off between model complexity and predictive accuracy, for the weekly data, a 5-fold cross-validation procedure is applied to fit an ARIMA model that captures linear patterns in the time series, while an LSTM network is employed to model temporal dependencies and uncover potential nonlinear dynamics within the residual component. For the monthly data, 5-fold cross-validation is similarly used to fit both ARIMA and SARIMA models to the linear component, and their forecasting performance is compared based on error metrics; the superior model is selected for subsequent ensemble integration. Concurrently, the LSTM network is applied to extract nonlinear features from the residual series. Finally, to explore the optimal strategy for enhancing predictive performance, this study conducts a comprehensive comparison of three ensemble methods: stacking, bagging, and weighted averaging. The primary objective is to identify the most effective integration approach under different temporal scales, thereby optimizing the synergy between the statistical (ARIMA or SARIMA) and deep learning (LSTM) components.
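To make the decomposition step concrete, the following sketch splits a toy series into additive trend, seasonal, and residual components. It is a simplified classical decomposition (centred moving average plus per-position seasonal means) used here as a stand-in for STL, which relies on LOESS smoothing instead; the function names, the period of 4, and the synthetic series are assumptions made for illustration and do not reproduce the procedure or data of this study.

```python
from typing import List, Optional, Sequence


def centred_trend(series: Sequence[float], period: int) -> List[Optional[float]]:
    """Centred moving average; points without a full window are left as None."""
    half = period // 2
    trend: List[Optional[float]] = [None] * len(series)
    for t in range(half, len(series) - half):
        window = series[t - half : t + half + 1]
        if period % 2 == 0:
            # Even period: 2 x m moving average, half weight on the two end points.
            total = sum(window) - 0.5 * (window[0] + window[-1])
        else:
            total = sum(window)
        trend[t] = total / period
    return trend


def seasonal_component(series: Sequence[float],
                       trend: Sequence[Optional[float]],
                       period: int) -> List[float]:
    """Average the detrended values at each seasonal position, centred on zero."""
    buckets: List[List[float]] = [[] for _ in range(period)]
    for t, (x, tr) in enumerate(zip(series, trend)):
        if tr is not None:
            buckets[t % period].append(x - tr)
    means = [sum(b) / len(b) for b in buckets]
    offset = sum(means) / period
    return [means[t % period] - offset for t in range(len(series))]


# Synthetic series: linear trend plus a repeating zero-sum seasonal pattern.
period = 4
pattern = [0.5, -0.2, -0.4, 0.1]
series = [3.0 + 0.1 * t + pattern[t % period] for t in range(16)]

trend = centred_trend(series, period)
seasonal = seasonal_component(series, trend, period)
# Residual = series - trend - seasonal wherever the trend is defined.
residual = [None if tr is None else x - tr - s
            for x, tr, s in zip(series, trend, seasonal)]
```

Because the toy series contains no noise, the residual is numerically zero here. In a hybrid scheme of the kind described above, the residual of a real price series is the component a nonlinear model such as an LSTM would be asked to learn.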

The subsequent sections are arranged as follows. Section 2 gives the definitions of the ARIMA and SARIMA models and the LSTM network and briefly introduces the evaluation metrics for forecasting performance. Section 3 presents three ensemble methods (stacking, bagging, and weighted averaging) and describes the construction of the ARIMA, SARIMA, and LSTM models, as well as their ensemble counterparts, at two temporal scales for natural gas price forecasting. Finally, a short discussion and conclusion are given in Section 4.

4. Conclusions and Discussion

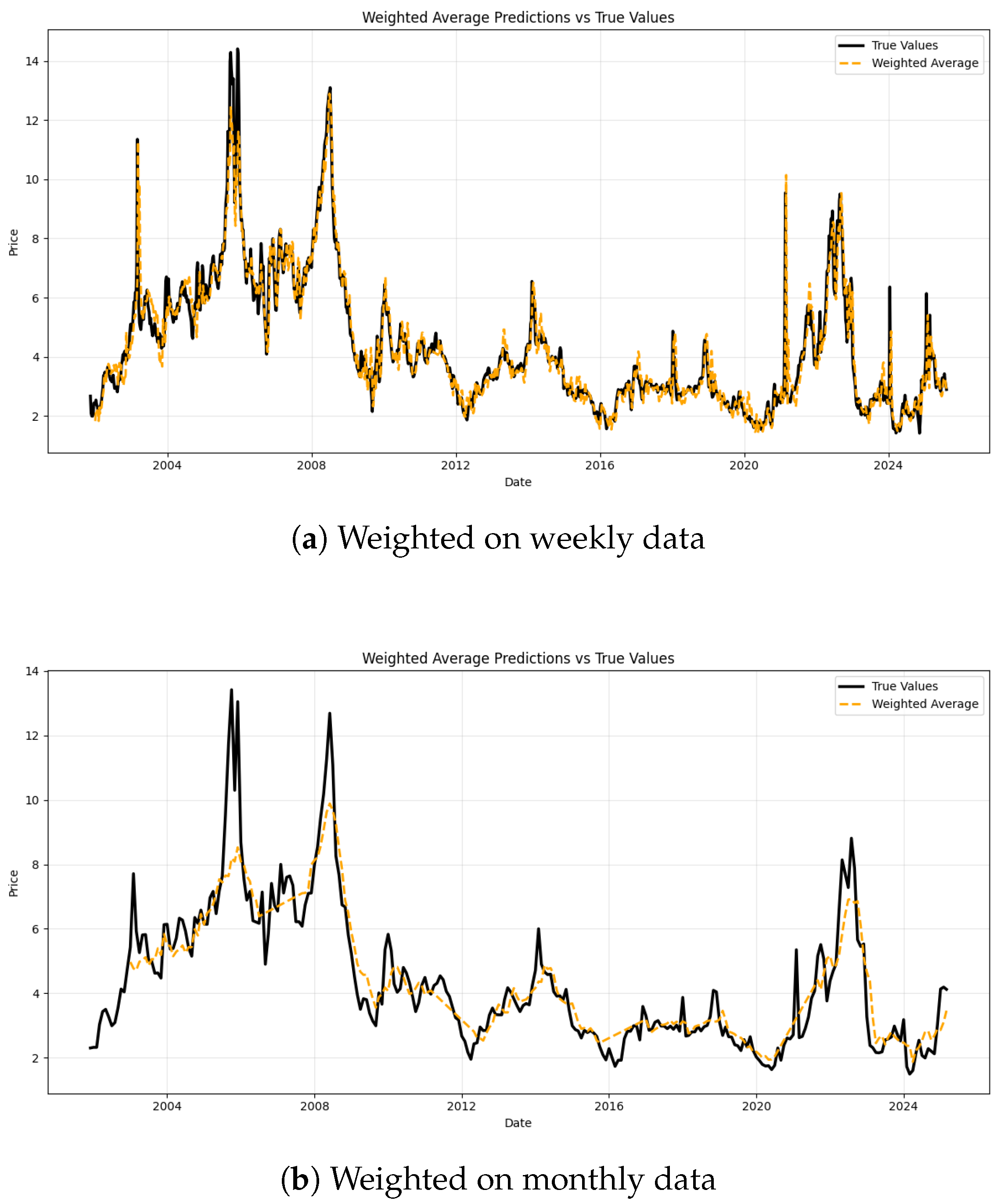

To develop a more accurate ensemble method for natural gas price forecasting across time scales while considering model complexity and computational cost, this paper compares two ensemble models. For weekly data, the first combines LSTM with an ARIMA model optimized through 5-fold cross-validation. For monthly data, the second integrates LSTM with whichever of ARIMA and SARIMA performs better on the error metrics. Both ensemble models use STL decomposition to separate seasonal, trend, and residual components: the statistical model (ARIMA or SARIMA) captures linear patterns, and LSTM models the nonlinear dependencies in the residual. This paper also evaluates stacking, bagging, and weighted averaging to find the optimal hybrid approach.

Despite the contributions of this study, several limitations should be acknowledged. Firstly, the analysis relies exclusively on a single dataset without incorporating regional variations, potentially limiting the generalizability of the findings. Secondly, external factors such as macroeconomic indicators, weather patterns, and geopolitical events are not integrated into the modeling framework, which may overlook critical drivers of natural gas price fluctuations. Thirdly, owing to data constraints, nonlinear meta-learners such as gradient boosting machines or neural network-based ensemblers were not explored, which prevents further refinement of the ensemble framework. Finally, this study does not explicitly account for the impacts of market-specific characteristics (e.g., storage capacity and regulatory policies) on model performance.

To address these limitations, future research could prioritize two key directions. On the one hand, the proposed framework should be validated on an expanded dataset: collecting multi-regional and multi-temporal natural gas price data and integrating external variables such as economic indices and climate factors would capture broader market interactions and allow a better assessment of cross-market robustness. On the other hand, diversifying the ensemble framework by developing additional base models and optimizing their combinations could enhance predictive accuracy through complementary modeling strengths. These improvements could ultimately lead to more robust and universally applicable forecasting methodologies.

Research on improving the efficiency of natural gas price forecasting models facilitates timely policy formulation by governments and supports sustainable development goals. This paper presents a comparative analysis of ensemble models for natural gas price forecasting at two temporal scales, weekly and monthly. The findings lead to the following conclusions. On the weekly dataset, the bagging ensemble method shows a marginal edge over the other models, with a MAPE of 9.60%, MAE of 0.3865, RMSE of 0.5780, and R² of 0.8287. On the monthly dataset, the bagging ensemble maintains this slight superiority, achieving a MAPE of 11.43%, MAE of 0.5302, RMSE of 0.6944, and R² of 0.7813. The bagging ensemble method thus slightly outperforms the other two ensemble approaches in predictive accuracy at both temporal scales, which highlights the robustness and effectiveness of the bagging framework across different data frequencies. More accurate forecasts can aid risk management by enabling traders and firms to hedge against price uncertainty, and can support cost structure optimization in the industry by anticipating input cost volatility. The results also suggest patterns consistent with the semi-strong form of the efficient market hypothesis, connecting the forecasting exercise to market efficiency theory. Finally, by linking forecast accuracy to broader macroeconomic stability and market dynamics, this study provides a valuable reference for future energy price forecasting research.
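For readers who wish to reproduce figures of this kind, the sketch below computes the four reported metrics using their standard textbook definitions. It is assumed, not confirmed here, that these definitions match those introduced in Section 2; the function names and the toy values are illustrative and unrelated to the results above.

```python
import math
from typing import Sequence


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent; assumes no actual value is zero."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def r_squared(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Toy values for illustration only.
actual = [2.0, 4.0, 5.0, 4.0]
predicted = [2.5, 3.5, 5.0, 4.5]

scores = {
    "MAE": mae(actual, predicted),
    "RMSE": rmse(actual, predicted),
    "MAPE": mape(actual, predicted),
    "R2": r_squared(actual, predicted),
}
```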