Resolving the Classic Resource Allocation Conflict in On-Ramp Merging: A Regionally Coordinated Nash-Advantage Decomposition Deep Q-Network Approach for Connected and Automated Vehicles

Abstract

1. Introduction

- (1) Unlike Nash-Q, Nash DQN, QMix, and MADDPG, the proposed RC-NashAD-DQN introduces a region-based coordination mechanism that treats ramp–main road interaction areas as unified decision regions, enabling consistent yet independent policy execution. A conflict-aware Q-fusion term is embedded into the loss function to resolve inter-regional action conflicts while preserving local autonomy. Furthermore, an advantage decomposition structure is integrated into the Nash equilibrium computation to enhance policy stability and joint value estimation, providing a more robust and coordinated control framework for complex traffic interactions.

- (2) Unlike existing methods that typically target a single vehicle type, this study considers mixed traffic environments with both passenger cars and container trucks. By modeling heterogeneous vehicle interactions, the proposed method improves the realism and practical applicability of merging control strategies.

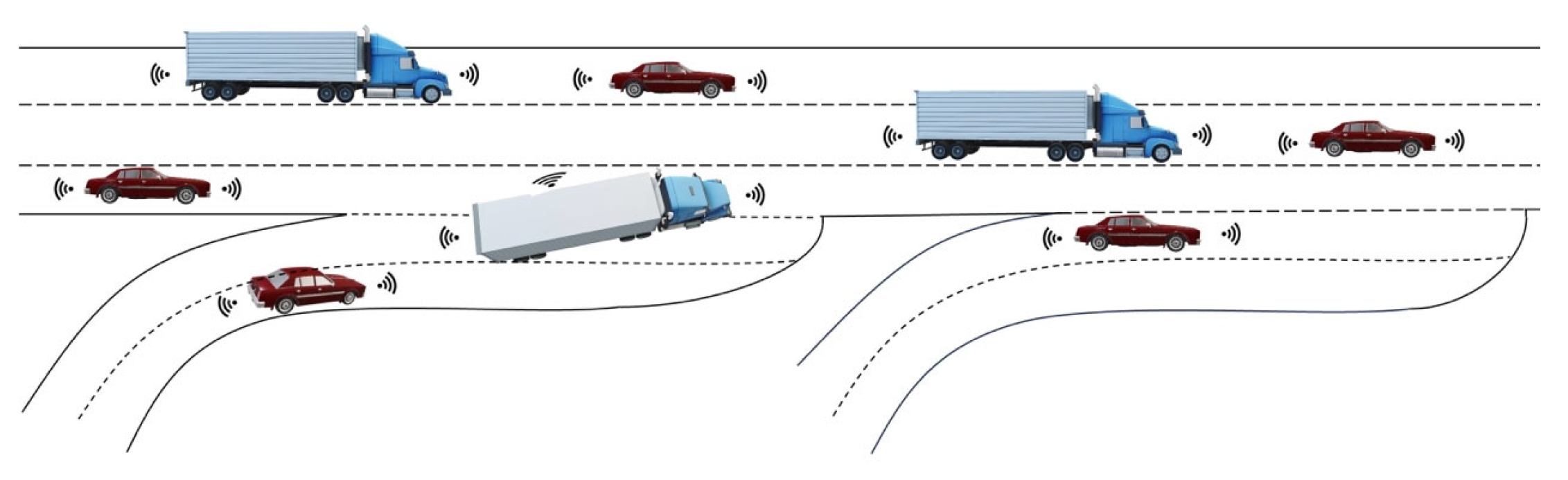

2. Problem Description

3. Methodology

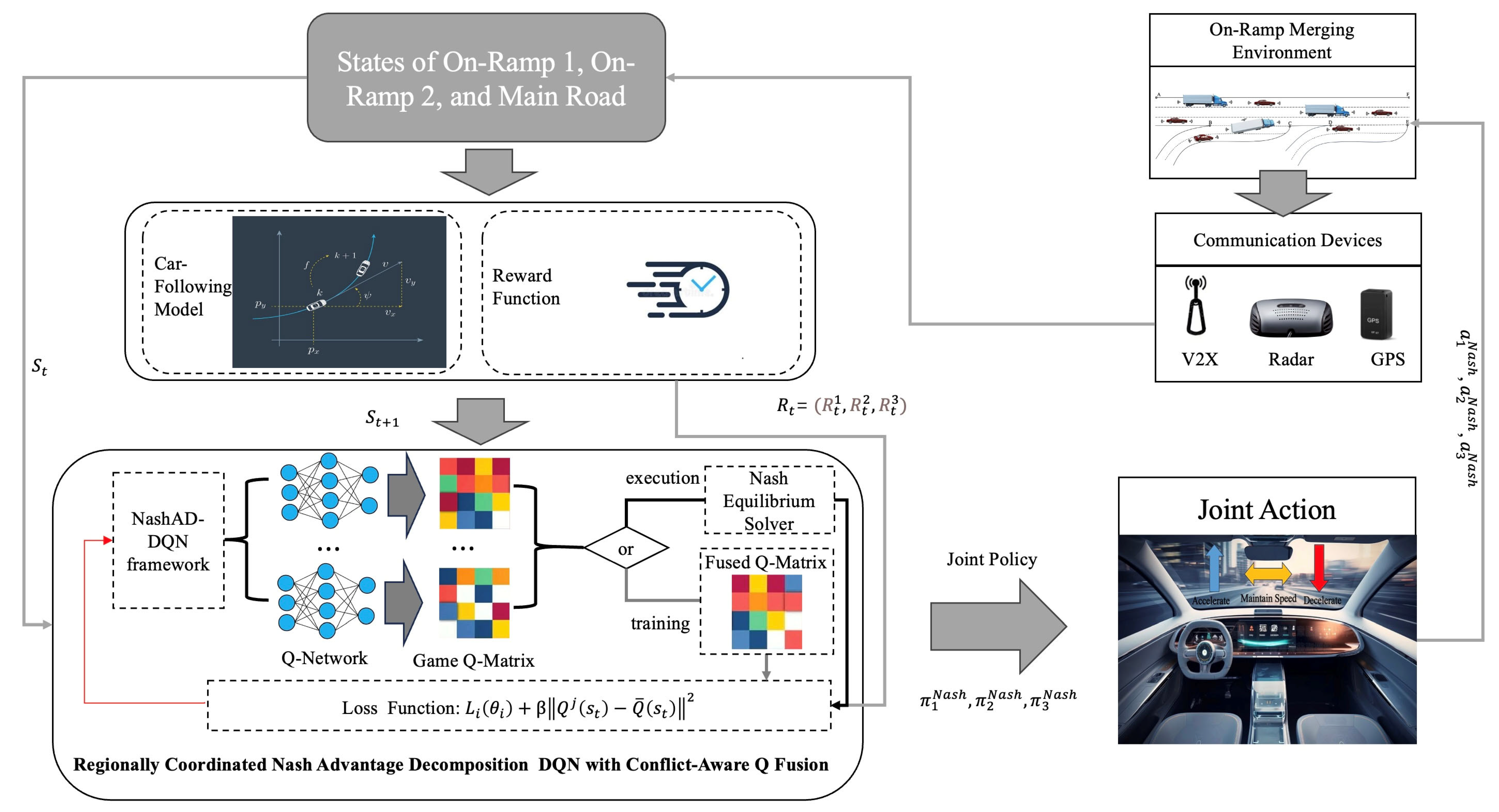

3.1. Model Overview

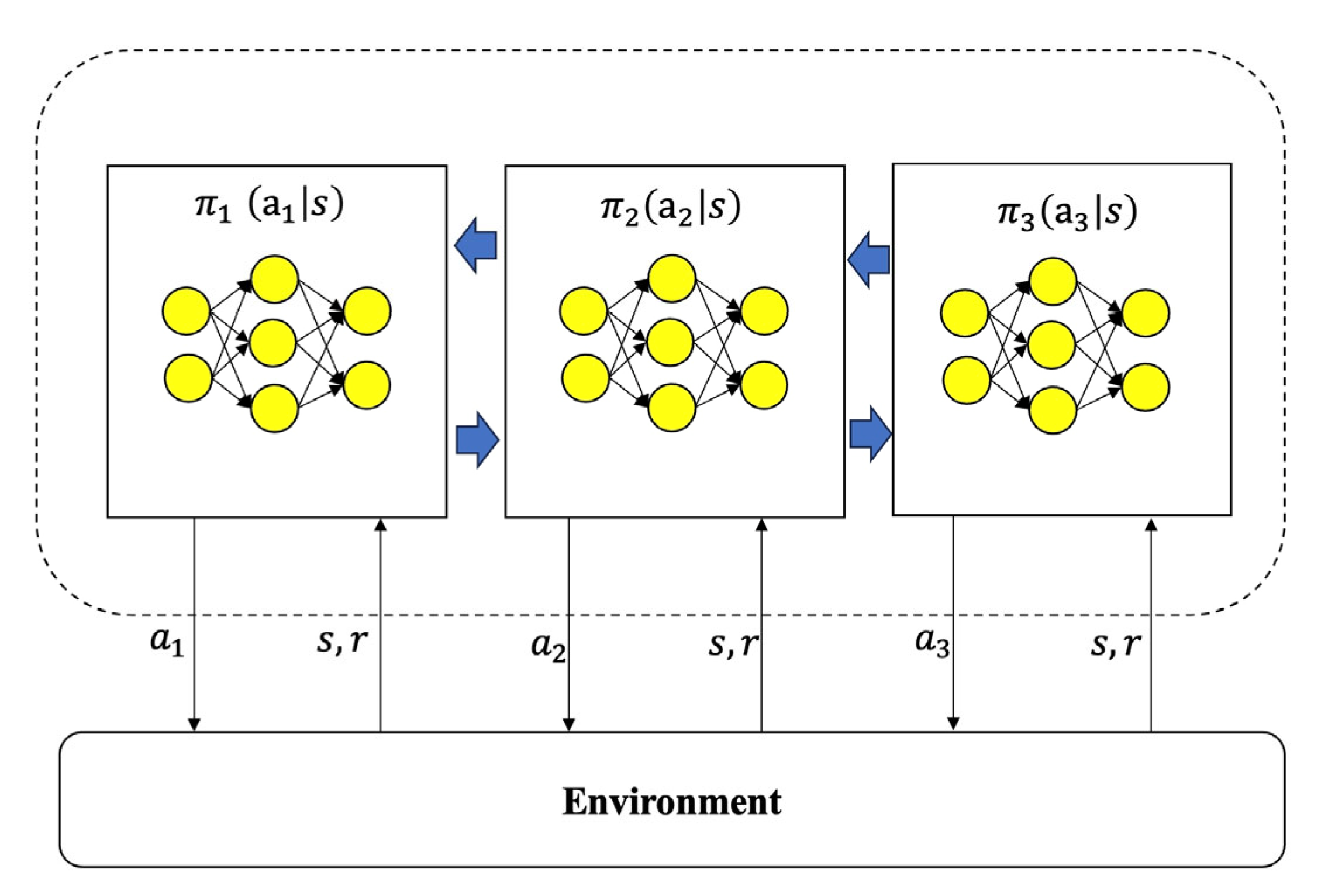

3.2. Multi-Agent Reinforcement Learning

Algorithm 1. Pseudocode of regional coordination per control interval Δt.

Inputs:

2: ← read_all_sensors() # raw detections

3: ← aggregate_features() # speed, density, flow, gap, type mix

4: Dynamic region partitioning + overlap detection

5: R ← partition_roadway(; method = density/conflict clustering)

6: O ← compute_overlaps(R) # O = | regions share a merging boundary window}

7: Neighbor communication (bounded delay/loss)

8: for each region ∈ R do

9: ← ⟨ |), (|)⟩

10: CommManager.broadcast()

11: end for

12: M ← CommManager.collect(TTL = τ, retry = 1) # receive neighbor summaries; discard stale/duplicates

13: for any missing neighbor do # partial observability handling

14: use last_known(j) with time-decay weighting

15: end for

16: Regional Q-value construction with advantage decomposition

17: for each region ∈ R do

18: ← compose_local_joint_state |, M|N(i)) # include neighbors’ summaries near boundaries

19: build = + −

20: end for

21: Overlap-aware conflict scoring (short-horizon rollout on overlaps only)

22: for each overlap ∈ O do

23: ← conflict_score , , horizon H, )

24: end for

25: C ← } # used as weights for boundary actions

26: Nash-based decision (per-step equilibrium over joint actions)

27: a* ← solve_nash_equilibrium({}, overlaps = C) # joint action , …, ); the superscript “*” denotes the optimal solution (Nash equilibrium action)

28: U ← map_actions_to_controls(a*) # , , }

29: Feedback execution and logging

30: apply_controls(U) # actuators/V2X commands

31: log_transition , , ) # for offline analysis/training

32: t ← t + Δt
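Two steps of Algorithm 1 can be illustrated with a minimal sketch: the advantage decomposition used to build the regional Q-values, and the time-decay weighting applied to the last known summary of a missing neighbor. The sketch assumes the standard dueling form Q(s, a) = V(s) + A(s, a) − mean(A), and an exponential decay with a fixed half-life. The function names `compose_q` and `decayed_summary` and the `half_life_s` parameter are hypothetical and not taken from the paper.

```python
def compose_q(value, advantages):
    """Dueling-style composition (assumed form): Q(a) = V + A(a) - mean(A)."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def decayed_summary(last_known, age_s, half_life_s=2.0):
    """Down-weight a stale neighbor summary.

    Assumption: the weight halves every `half_life_s` seconds. The paper only
    states that a time-decay weighting is used, not its functional form.
    """
    weight = 0.5 ** (age_s / half_life_s)
    return [weight * x for x in last_known], weight


if __name__ == "__main__":
    # One regional state value and three action advantages.
    print(compose_q(10.0, [1.0, 2.0, 3.0]))        # [9.0, 10.0, 11.0]
    # A neighbor summary that is exactly one half-life old.
    print(decayed_summary([4.0, 8.0], age_s=2.0))  # ([2.0, 4.0], 0.5)
```

Subtracting the mean advantage keeps the value and advantage streams identifiable: adding a constant to every advantage leaves the resulting Q-values unchanged.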

3.2.1. State Space Considering Vehicle Heterogeneity

- Macroscopic traffic flow indicators: average speed of vehicles in the region, vehicle density, and traffic flow (veh/time).

- Behavioral statistics: speed variance (reflecting flow stability), average headway, and vehicle type ratios.

- Inter-agent interaction context:
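As a rough illustration of how the macroscopic indicators and behavioral statistics listed above could be assembled into a regional state vector, consider the sketch below. It is an assumption-laden example: the `Vehicle` and `RegionState` classes, the field names, the units, and the use of the fundamental relation flow = density × mean speed are all choices made here for illustration, and the inter-agent interaction context is left out.

```python
from dataclasses import dataclass, astuple
from statistics import mean, pvariance


@dataclass
class Vehicle:
    speed: float     # m/s
    headway: float   # s, time gap to the leader
    is_truck: bool   # container truck (True) or passenger car (False)


@dataclass
class RegionState:
    mean_speed: float      # m/s
    density: float         # veh/m
    flow: float            # veh/s, computed as density * mean_speed
    speed_variance: float  # (m/s)^2, population variance
    mean_headway: float    # s
    truck_ratio: float     # share of trucks in the region

    def as_vector(self):
        """Flatten the features in declaration order for a network input."""
        return list(astuple(self))


def aggregate_features(vehicles, region_length_m):
    """Aggregate per-vehicle detections into one regional state."""
    if not vehicles:
        return RegionState(0.0, 0.0, 0.0, 0.0, 0.0, 0.0)
    speeds = [v.speed for v in vehicles]
    v_mean = mean(speeds)
    density = len(vehicles) / region_length_m
    return RegionState(
        mean_speed=v_mean,
        density=density,
        flow=density * v_mean,
        speed_variance=pvariance(speeds),
        mean_headway=mean(v.headway for v in vehicles),
        truck_ratio=sum(v.is_truck for v in vehicles) / len(vehicles),
    )


if __name__ == "__main__":
    fleet = [Vehicle(20.0, 1.5, False), Vehicle(30.0, 2.5, True)]
    print(aggregate_features(fleet, region_length_m=100.0).as_vector())
```

Keeping the truck ratio as an explicit feature is one simple way to expose vehicle heterogeneity to the network. A finer breakdown by leader–follower pair type would need additional fields.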

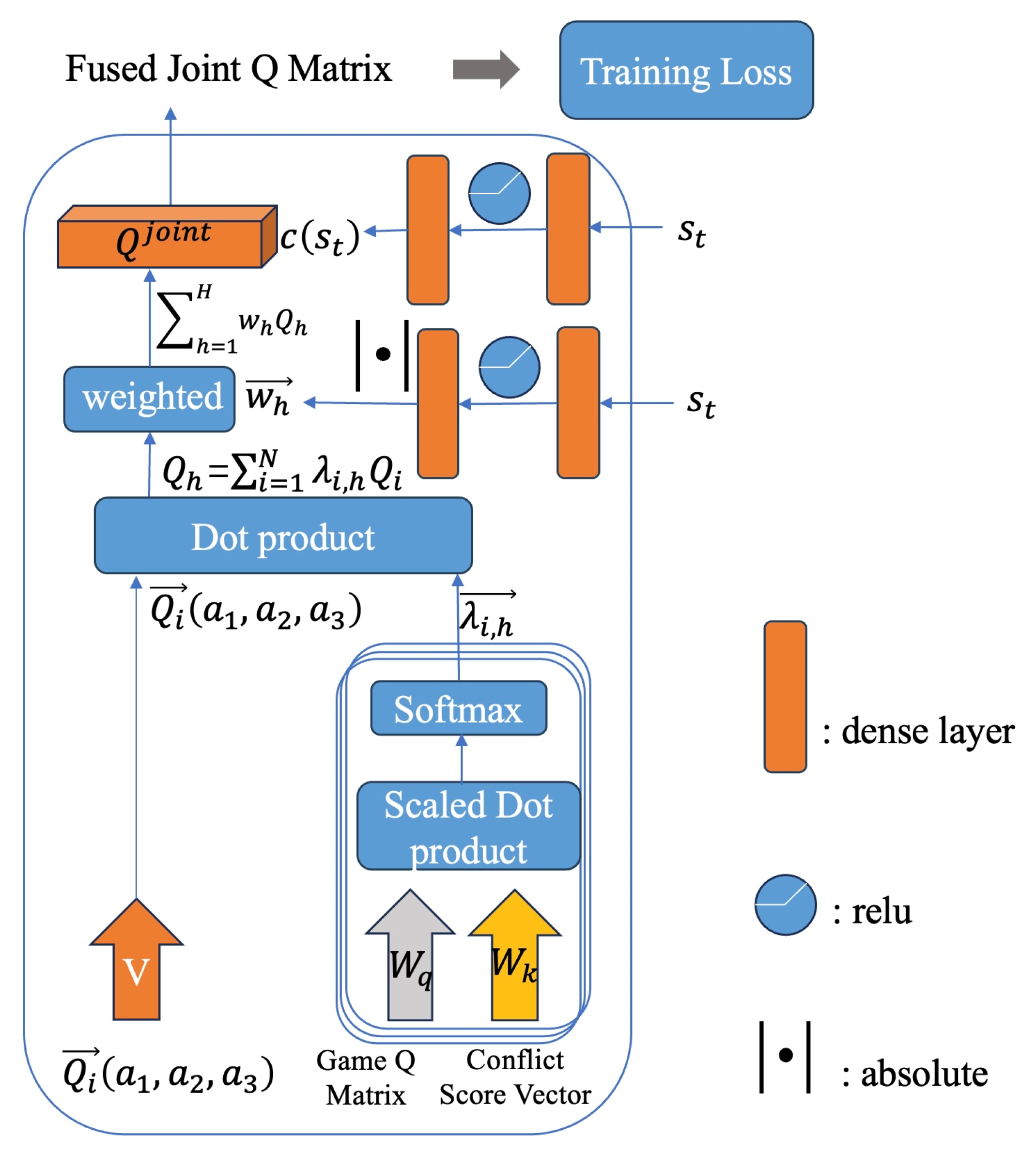

3.2.2. Deep Network Structure

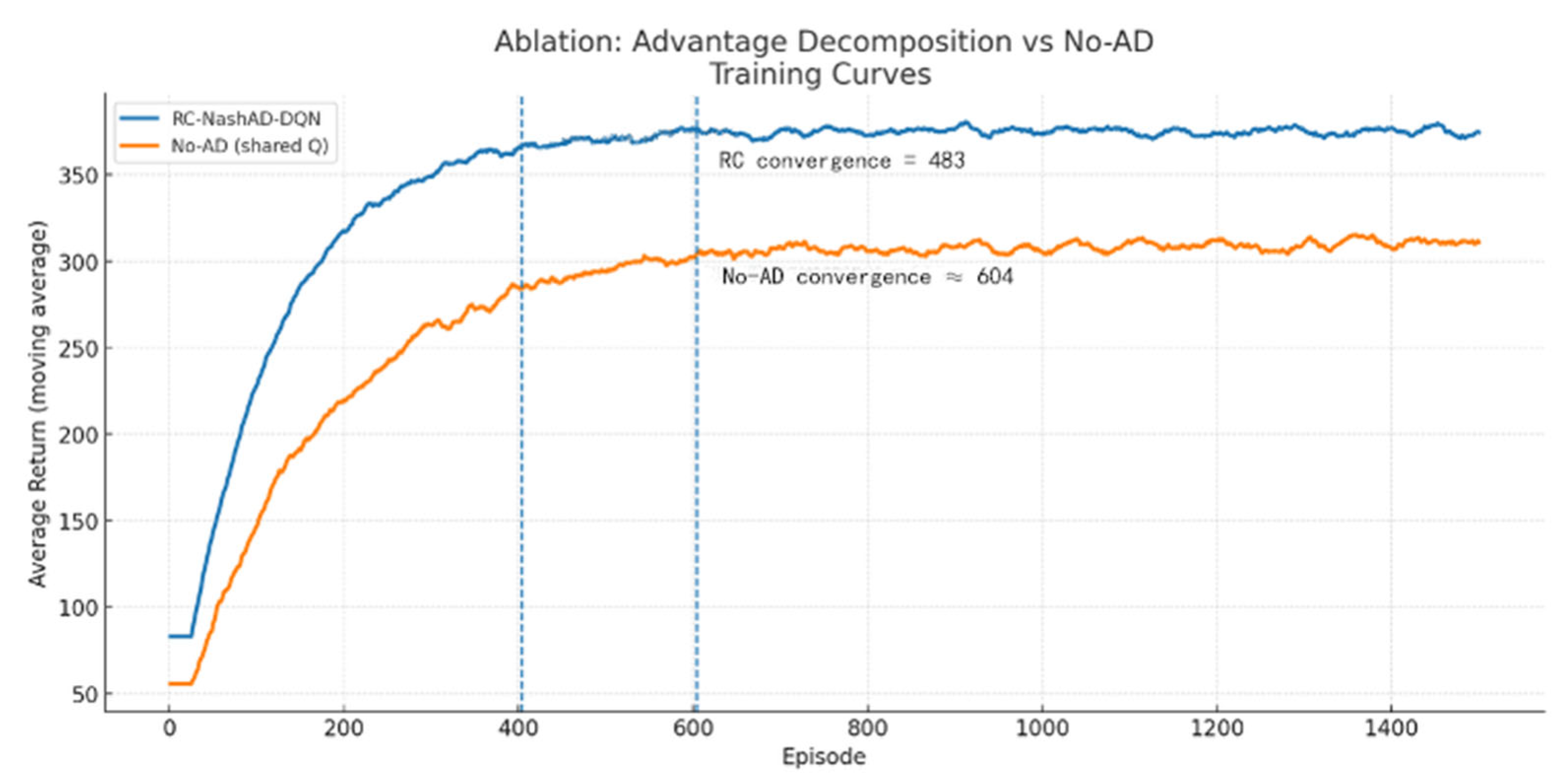

3.2.3. Nash-Advantage Decomposition Deep Q-Network Design and Training

3.3. Conflict-Aware Q-Value Fusion Module and New Loss Function Design

3.3.1. Fusion Process

- (1) Trajectory Rollout

- (2) Spatiotemporal Conflict Detection

- (3) Conflict Score Vector Construction

- (4) Attention-Based Q Fusion
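The four steps above can be sketched end to end on a one-dimensional merge coordinate. This is only an illustrative reading of the pipeline, not the paper's formulation: the constant-acceleration rollout, the linear gap-based conflict score, the softmax attention over the horizon, and the penalty form `Q - beta * penalty` are all assumptions, as are the names `rollout`, `conflict_scores`, `fuse_q`, `safe_gap`, and `beta`.

```python
import math


def rollout(pos, speed, accel, horizon, dt=1.0):
    """Step 1: constant-acceleration positions for steps 1..horizon."""
    out = []
    for _ in range(horizon):
        speed = max(0.0, speed + accel * dt)
        pos += speed * dt
        out.append(pos)
    return out


def conflict_scores(traj_a, traj_b, safe_gap=10.0):
    """Steps 2-3: per-step score in [0, 1]; 1 at zero gap, 0 beyond safe_gap."""
    return [max(0.0, 1.0 - abs(a - b) / safe_gap) for a, b in zip(traj_a, traj_b)]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_q(q_local, scores_per_action, beta=5.0):
    """Step 4: attention over the horizon, then penalize each action's Q-value."""
    fused = []
    for q, scores in zip(q_local, scores_per_action):
        weights = softmax(scores)  # riskier steps receive more attention
        penalty = sum(w * c for w, c in zip(weights, scores))
        fused.append(q - beta * penalty)
    return fused


if __name__ == "__main__":
    H = 3
    main_traj = rollout(pos=5.0, speed=20.0, accel=0.0, horizon=H)
    accels = [-2.0, 0.0, 2.0]   # decelerate, keep speed, accelerate
    q_local = [1.0, 1.2, 1.1]   # made-up local Q-values for the ramp vehicle
    scores = [
        conflict_scores(rollout(0.0, 20.0, a, H), main_traj) for a in accels
    ]
    fused = fuse_q(q_local, scores)
    print(fused.index(max(fused)))  # 0: decelerating opens the largest gap
```

In this toy case the local Q-values slightly prefer keeping speed, but the fused values favor decelerating because that rollout is the only one whose gap to the main-road vehicle grows beyond the safety threshold.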

3.3.2. New Loss Function Design

Algorithm 2. Pseudocode of NashAD-DQN with conflict-aware Q fusion.

Initialize:

2: Initialize environment

3: for time step t = 1 to T do

4: for each agent i ∈ N do

5: Sample joint actions based on Nash equilibrium and strategy

6: end for

7: Execute joint actions and observe next state and rewards

8: Store experience ,, , in replay buffer M

9: Update , ,

10: if t = T then

11: Reset S0 and t

12: end if

13: end for

14: if then

15: Sample batch M from buffer: , , , )

16: Use target network to compute target value = target network

17: Compute Nash equilibrium value and strategy

18: if j is terminal then

19: =

20: else

21: =

22: end if

23: end if

Conflict-aware Q-fusion (training only)

24: for each agent i:

25: Flatten into

26: Compute conflict score vector V

27: for h in do

28: for each agent i do

29:

30: end for

31: =

32: end for

33: =

34: for h do:

35:

36: end for

37: =

38: =

39: end for

Total loss for each agent

40: for each agent i do

41: TD loss:

42: Fusion regularization:

43: Total loss: TD loss + Fusion regularization

44: Update using gradient descent

45: Every C steps, update target network: .

46: end for
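The training step in Algorithm 2 combines three ingredients: a Nash equilibrium over the agents' Q-values, a TD target that is the reward alone for terminal transitions and the discounted Nash value otherwise, and a total loss made of a TD term plus a fusion regularizer. The sketch below illustrates them for two agents with small payoff tables. It is a simplified, assumption-based example: it enumerates pure-strategy equilibria only, and the regularizer is taken to be the mean squared gap between predicted and fused Q-values weighted by a hypothetical coefficient `lam`. Neither choice is confirmed by the paper.

```python
def pure_nash(q1, q2):
    """All pure-strategy Nash equilibria (i, j) of a two-player matrix game.

    q1[i][j] and q2[i][j] are the Q-values of agents 1 and 2 when agent 1
    plays action i and agent 2 plays action j.
    """
    n, m = len(q1), len(q1[0])
    equilibria = []
    for i in range(n):
        for j in range(m):
            best_for_1 = all(q1[i][j] >= q1[k][j] for k in range(n))
            best_for_2 = all(q2[i][j] >= q2[i][l] for l in range(m))
            if best_for_1 and best_for_2:
                equilibria.append((i, j))
    return equilibria


def td_target(reward, nash_value_next, terminal, gamma=0.99):
    """Reward only for terminal transitions, else reward + gamma * Nash value."""
    return reward if terminal else reward + gamma * nash_value_next


def total_loss(q_pred, targets, q_fused, lam=0.1):
    """TD loss plus fusion regularization (assumed squared-error form)."""
    n = len(q_pred)
    td = sum((y - q) ** 2 for q, y in zip(q_pred, targets)) / n
    reg = sum((qf - q) ** 2 for q, qf in zip(q_pred, q_fused)) / n
    return td + lam * reg


if __name__ == "__main__":
    # Toy merge game: action 0 = yield, action 1 = go. Both going is a crash.
    q_ramp = [[0.0, 1.0], [2.0, -10.0]]
    q_main = [[0.0, 2.0], [1.0, -10.0]]
    print(pure_nash(q_ramp, q_main))  # [(0, 1), (1, 0)]
    print(total_loss([1.0, 2.0], [2.0, 2.0], [1.0, 1.0], lam=0.5))  # 0.75
```

The toy game has two pure equilibria, in each of which exactly one vehicle yields, so an equilibrium selection rule is still needed in practice. Games without a pure equilibrium would require a mixed-strategy solver, which this sketch does not cover.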

4. Numerical Settings

4.1. Experimental Process

4.2. Experimental Results

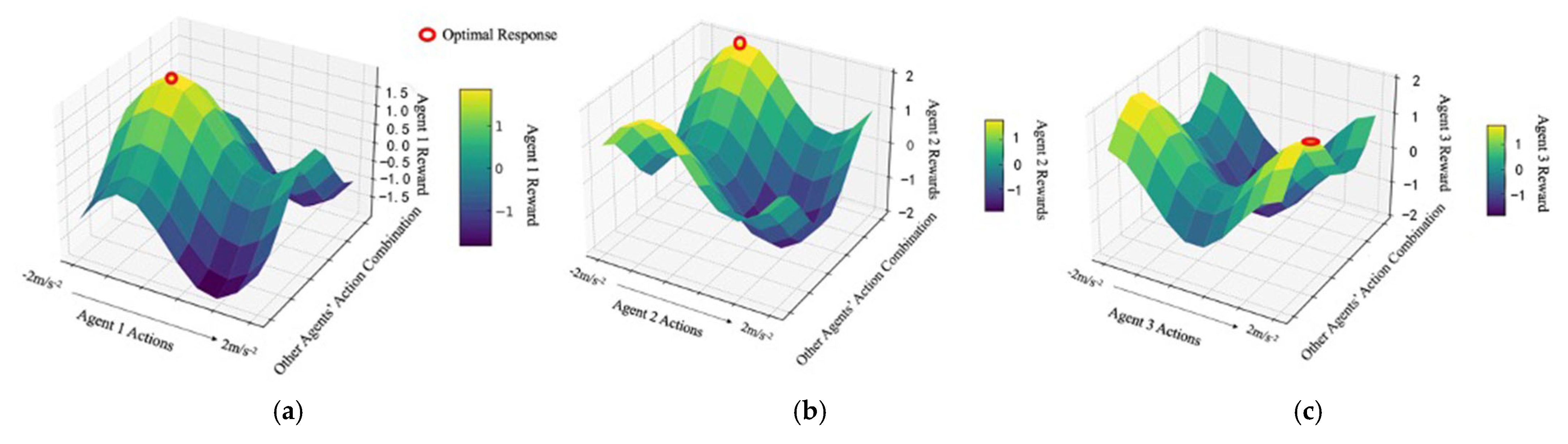

4.2.1. Nash Equilibrium State

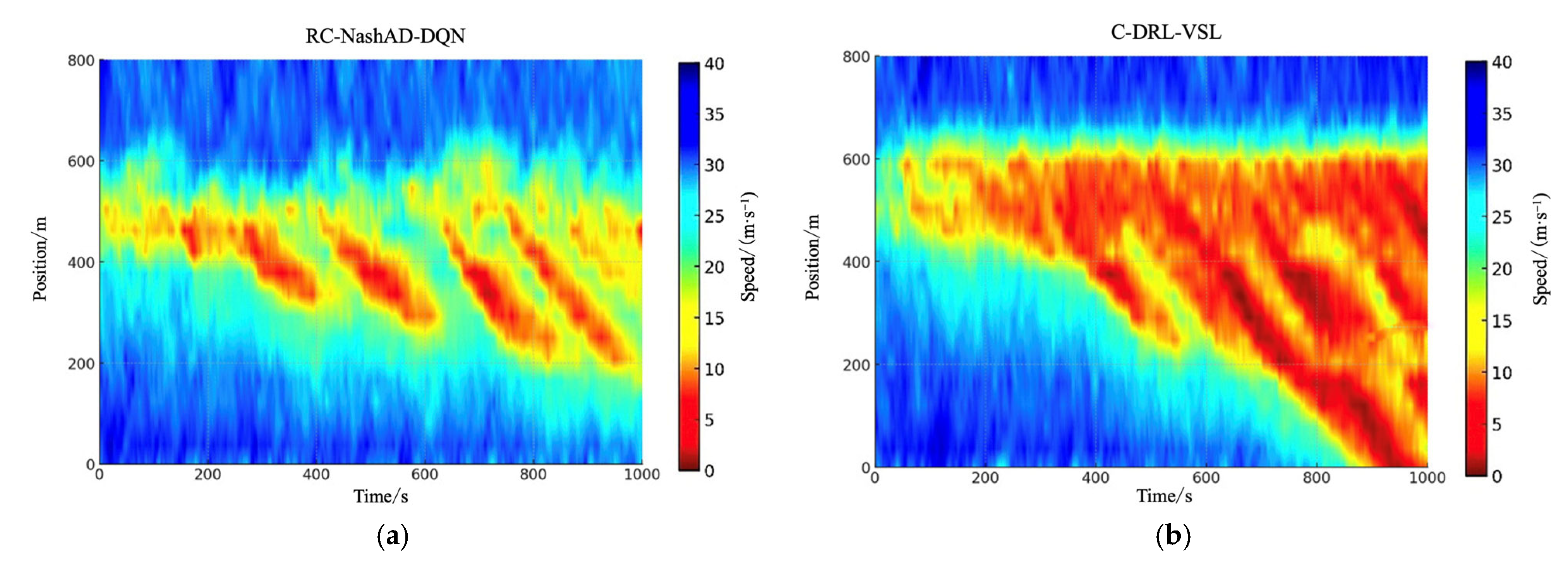

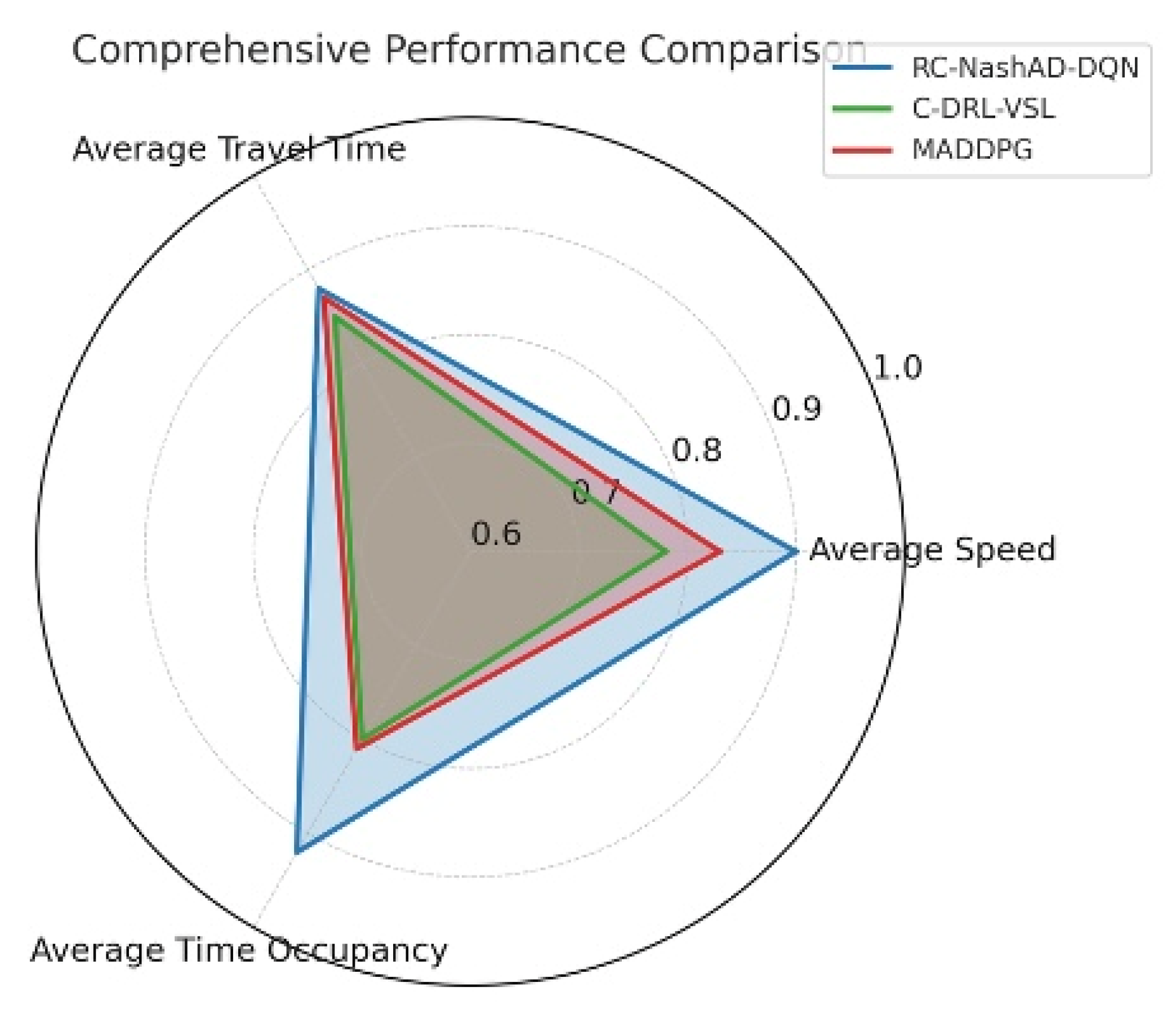

4.2.2. Average Speed

4.2.3. Average Travel Time

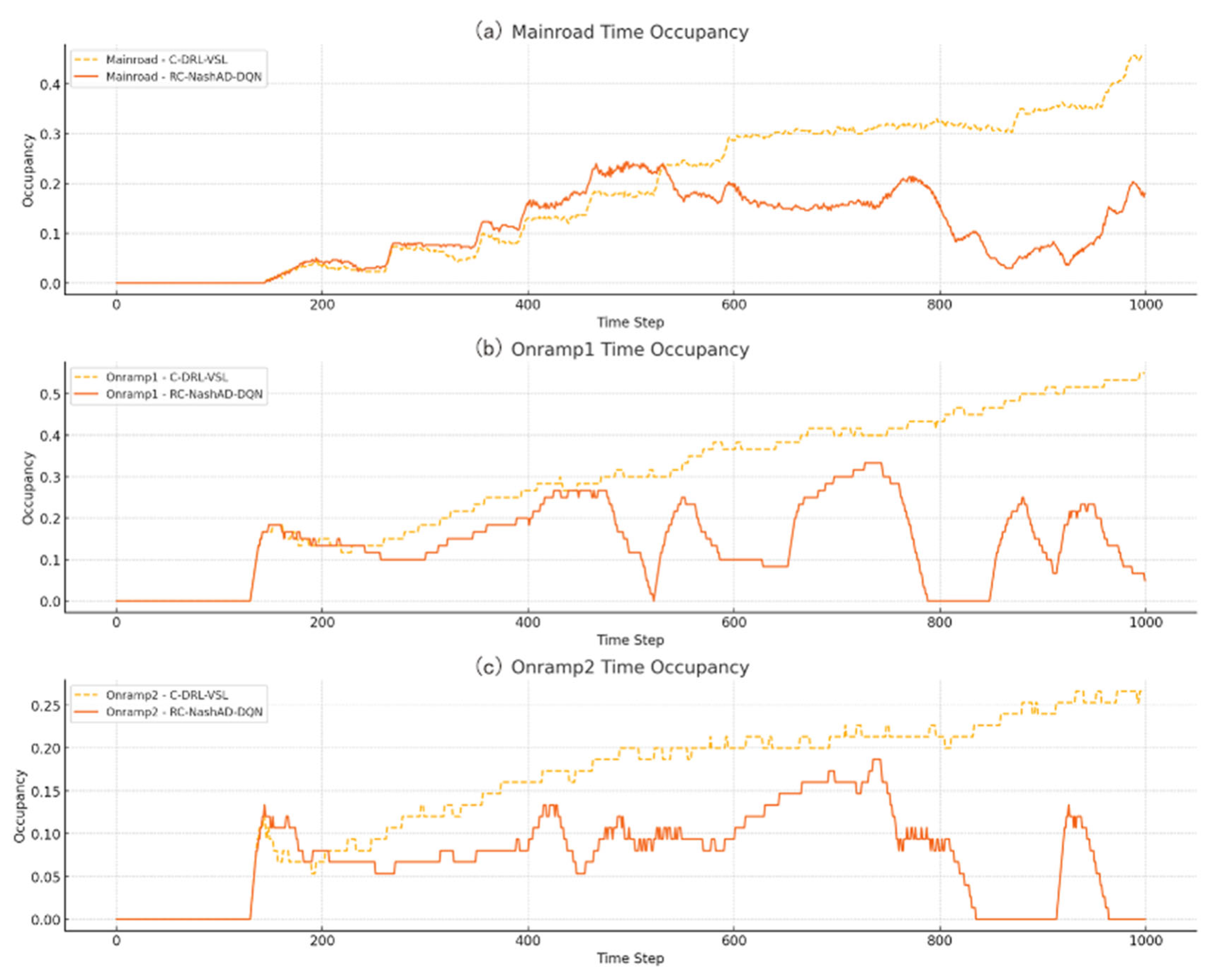

4.2.4. Time Occupancy

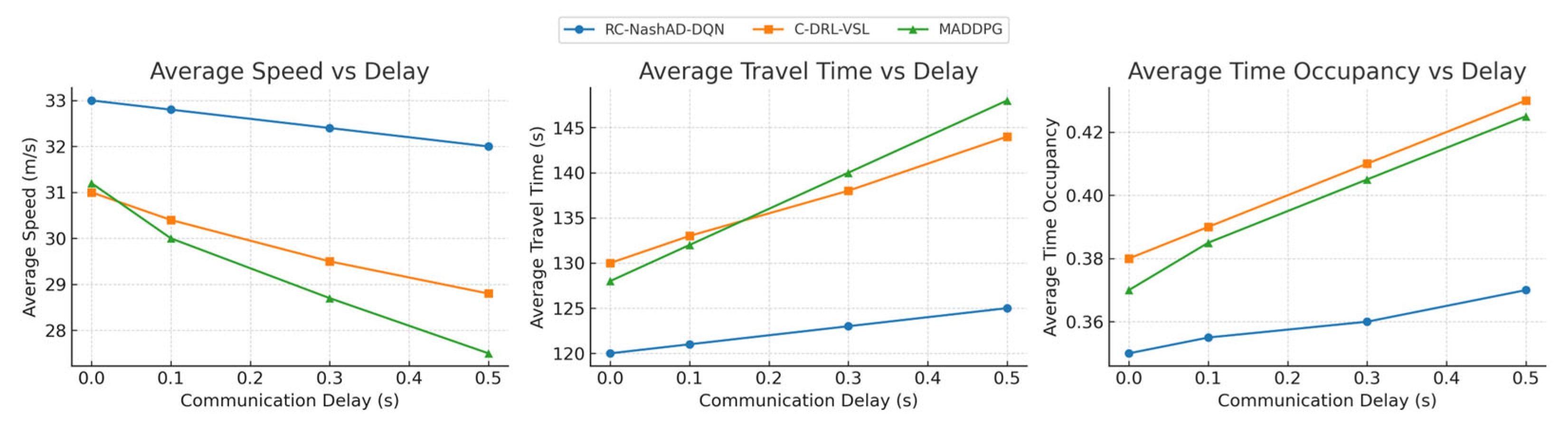

4.3. Computational Cost and Robustness

4.3.1. Computational Cost

4.3.2. Robustness

5. Conclusions

- The proposed framework addresses two major limitations in existing multi-agent reinforcement learning traffic control models: instability in equilibrium strategy learning and the lack of fine-grained conflict resolution across regions. By embedding a conflict-aware Q-fusion mechanism into the Nash Advantage Decomposition Deep Q-Network, the model effectively balances global coordination with local adaptability, achieving higher strategy stability and reduced solution overhead. Moreover, the region-level control design supports scalable deployment in complex traffic networks.

- Simulation experiments conducted on real-world highway merging settings show that the proposed approach significantly improves average speed, reduces time occupancy, and lowers travel time compared to the C-DRL-VSL baseline. These results demonstrate the effectiveness of embedding spatio-temporal conflict resolution into Nash-based learning and underscore the practical value of region-level coordination in balancing local interactions and global traffic efficiency.

- Future work will simulate individual vehicle behavior, such as lane-changing, acceleration, and deceleration, more precisely by encoding ramp-merging and main-road traffic rules in the model, so that the interactions among vehicles are captured accurately.

- It will develop adaptive traffic control strategies for mixed traffic flows in which CAVs coexist with human-driven vehicles.

- It will explore more complex, multi-layered traffic management architectures to further enhance the cooperation and competition capabilities of CAVs, contributing to sustainable intelligent transportation development.

- It will extend the evaluation to more diverse traffic configurations, enabling broader validation of the proposed approach across different roadway geometries and operational conditions.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CAV | Connected and Automated Vehicle |
| V2V | Vehicle-to-Vehicle |
| CC | Car–Car |
| CT | Car–Truck |
| TC | Truck–Car |
| TT | Truck–Truck |
| IMU | Inertial Measurement Unit |
| GPS | Global Positioning System |
| MDP | Markov Decision Process |
| DQN | Deep Q-Network |
| Nash Double Q | Nash Double Q-based Multi-Agent Deep Reinforcement Learning |
| C-DRL-VSL | Deep Q-Network-based expressway variable speed limit control |
| RC-NashAD-DQN | Regionally Coordinated Nash-Advantage Decomposition Deep Q-Network with Conflict-Aware Q Fusion |


| Parameter | Description | Value |
|---|---|---|
| ALPHA | Learning rate | 0.1 |
| GAMMA | Discount factor | 0.99 |
| EPSILON_START | Initial exploration rate | 0.5 |
| EPSILON_END | Final exploration rate | 0.01 |
| DECAY_RATE | Exploration rate decay | 0.995 |
| N_ACTIONS | Number of actions | 3 |
| MEMORY_SIZE | Experience replay buffer size | 2000 |
| BATCH_SIZE | Mini-batch size for training | 64 |
| TAU | Target network soft update parameter | 0.1 |
| SYNC_TARGET_STEPS | Target network synchronization interval | 200 |
| UPDATE_STEPS | Model update interval | 4 |
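To make the exploration and target-network settings in the table concrete, the sketch below derives an epsilon schedule and a soft target update from the listed values. It rests on two assumptions that the table does not settle: that the exploration rate is decayed multiplicatively once per step (it could equally be per episode), and that TAU is used in a Polyak-style update of the form target ← (1 − τ)·target + τ·online. The helper names are hypothetical.

```python
EPSILON_START = 0.5
EPSILON_END = 0.01
DECAY_RATE = 0.995
TAU = 0.1


def epsilon_at(step):
    """Exploration rate after `step` multiplicative decays, floored at EPSILON_END."""
    return max(EPSILON_END, EPSILON_START * DECAY_RATE ** step)


def steps_to_floor():
    """First decay step at which the exploration rate reaches its floor."""
    step = 0
    while epsilon_at(step) > EPSILON_END:
        step += 1
    return step


def soft_update(target, online, tau=TAU):
    """Polyak averaging of parameter lists (assumed use of TAU)."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target, online)]


if __name__ == "__main__":
    print(epsilon_at(0))       # 0.5
    print(steps_to_floor())    # 781
    print(soft_update([0.0, 1.0], [1.0, 1.0]))
```

Under these assumptions the exploration rate reaches its floor after 781 decays, since 0.5 × 0.995^n first drops below 0.01 at n = 781. The table lists both a soft-update parameter and a synchronization interval, and it does not say how the two are combined.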


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, L.; Lu, L. Resolving the Classic Resource Allocation Conflict in On-Ramp Merging: A Regionally Coordinated Nash-Advantage Decomposition Deep Q-Network Approach for Connected and Automated Vehicles. Sustainability 2025, 17, 7826. https://doi.org/10.3390/su17177826
