Enhancing Sustainability Through Quality Controlled Energy Data: The Horizon 2020 EnerMaps Project

Abstract

1. Introduction

- How can energy data be made more FAIR?

- How can energy data be assessed for its overall quality in terms of FAIR principles?

2. Materials and Methods

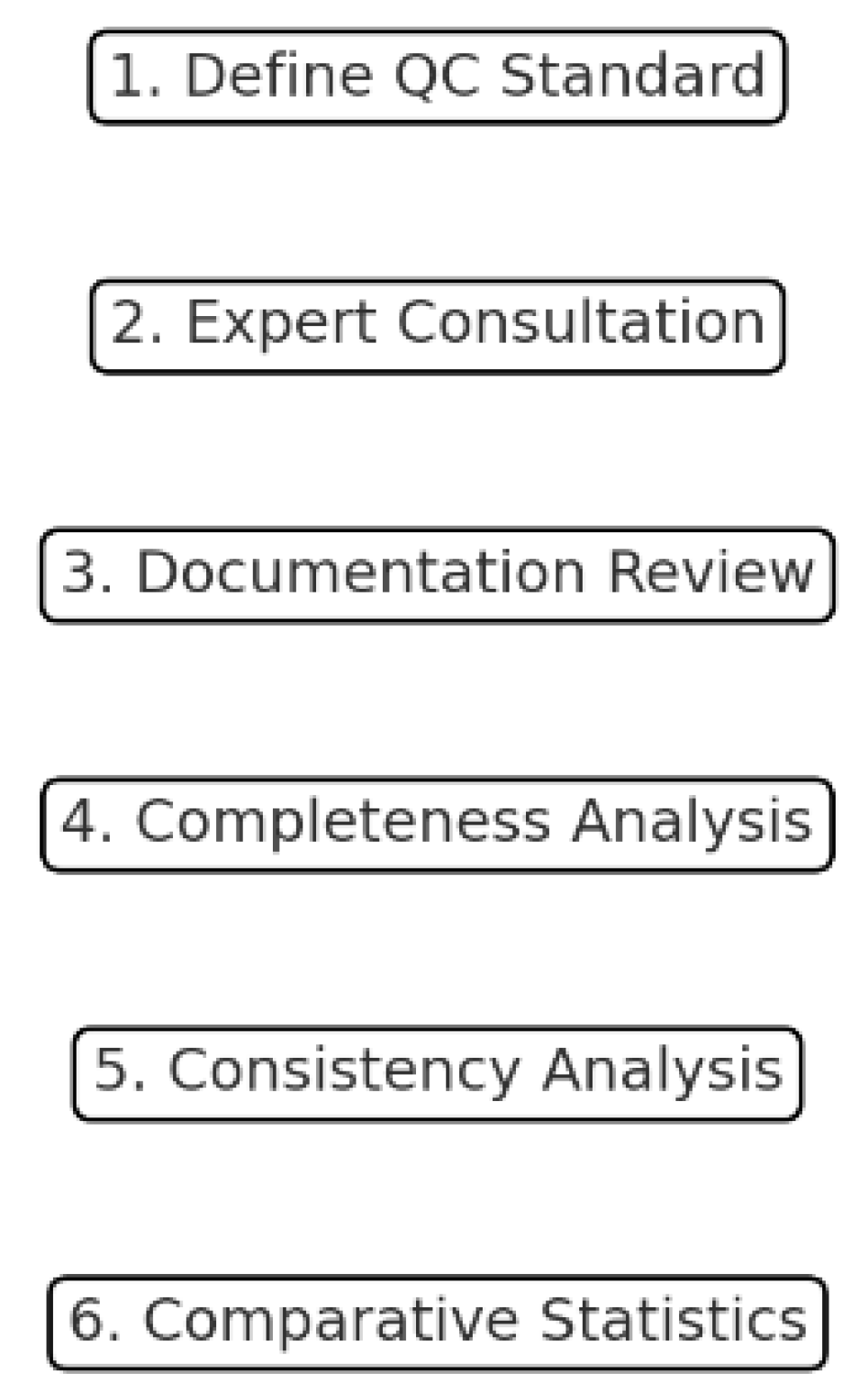

- Establish a standard for high-quality data;

- Consultation with external experts;

- Documentation review;

- Consistency analysis;

- Statistical comparative assessment;

- Community feedback.

2.1. Case Study: EnerMaps

2.1.1. Project

2.1.2. EnerMaps Initial Dataset Inventory

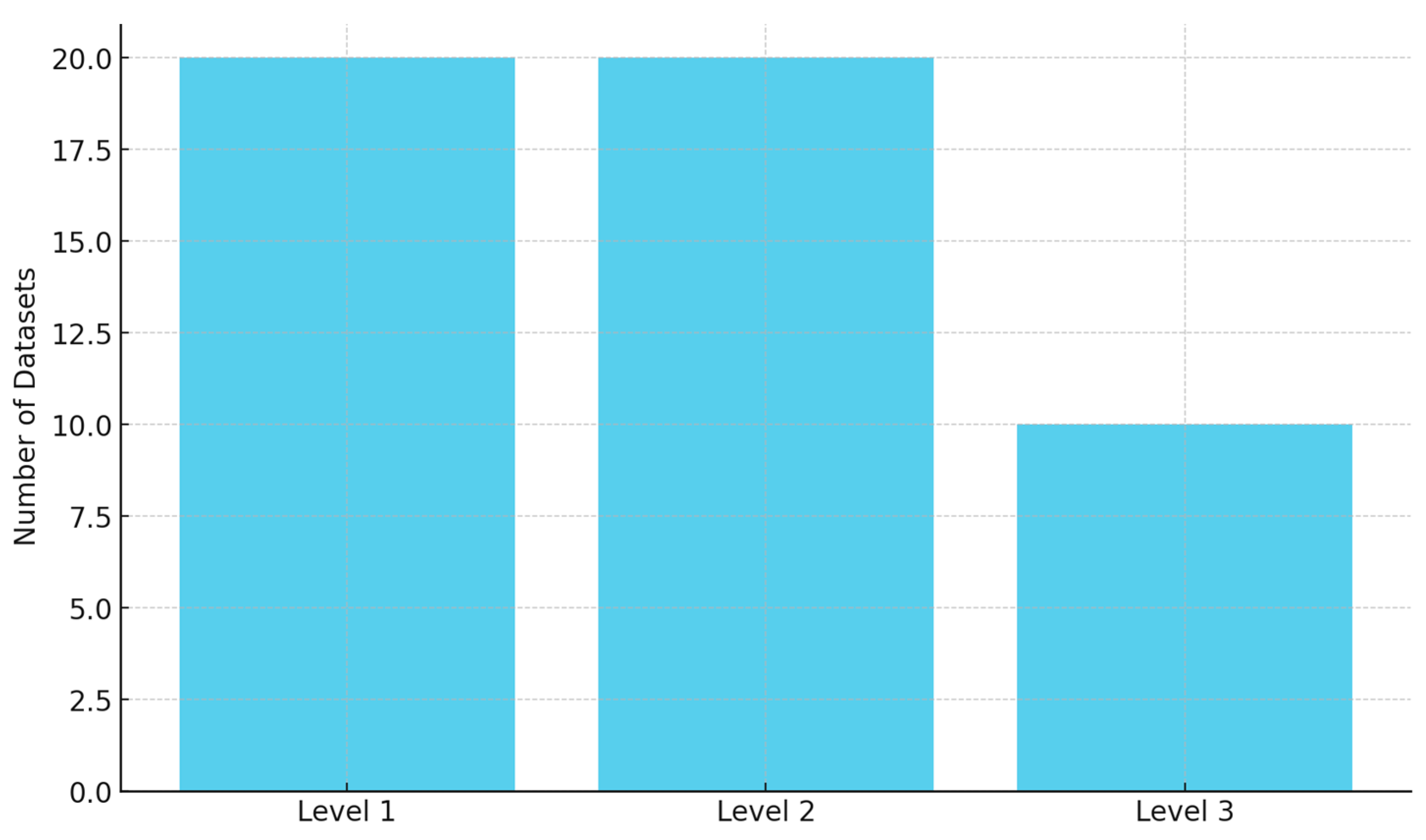

- Level 1 datasets (n = 20) were selected through stakeholder consultation and comprised thematically relevant datasets that nonetheless lacked sufficient metadata or documentation to justify more in-depth checks.

- Level 2 datasets (n = 20) were internally selected by the EnerMaps team based on criteria such as completeness, metadata structure, and their potential impact on policy or modelling.

- Level 3 datasets (n = 10) were prioritized for their analytical depth, reliability, and relevance for comparative assessments. These underwent the most extensive QC, including statistical accuracy and consistency checks.

2.2. Research Steps

2.2.1. Establish a Standard for High-Quality Data

- Follows FAIR principles;

- Contains relevant metadata;

- Includes transparent documentation describing dataset creation;

- Meets QC indicators.

- Quality and completeness of dataset metadata;

- Quality of the documentation describing the methodology and accuracy of the data (i.e., what is behind the data);

- Dataset completeness;

- Dataset consistency.

2.2.2. Consultation with External Experts

- How familiar are you with the selected datasets? (For example, have you used any of the datasets or do you know/trust any of the data providers?)

- What are your thoughts on the selected datasets with regard to your own work? (For example, do they cover important areas of analysis in your field?)

- Quality control provides a basis for assessing the accuracy, completeness, and consistency of the datasets. Do you feel more confident in the accuracy and quality of the selected datasets since they underwent this process?

- If possible, please provide a user story from your perspective so that we may better identify ways EnerMaps can provide value to energy research and analysis.

2.2.3. Existence of Relevant Metadata

2.2.4. Documentation Review

2.2.5. Completeness Analysis

2.2.6. Consistency Analysis

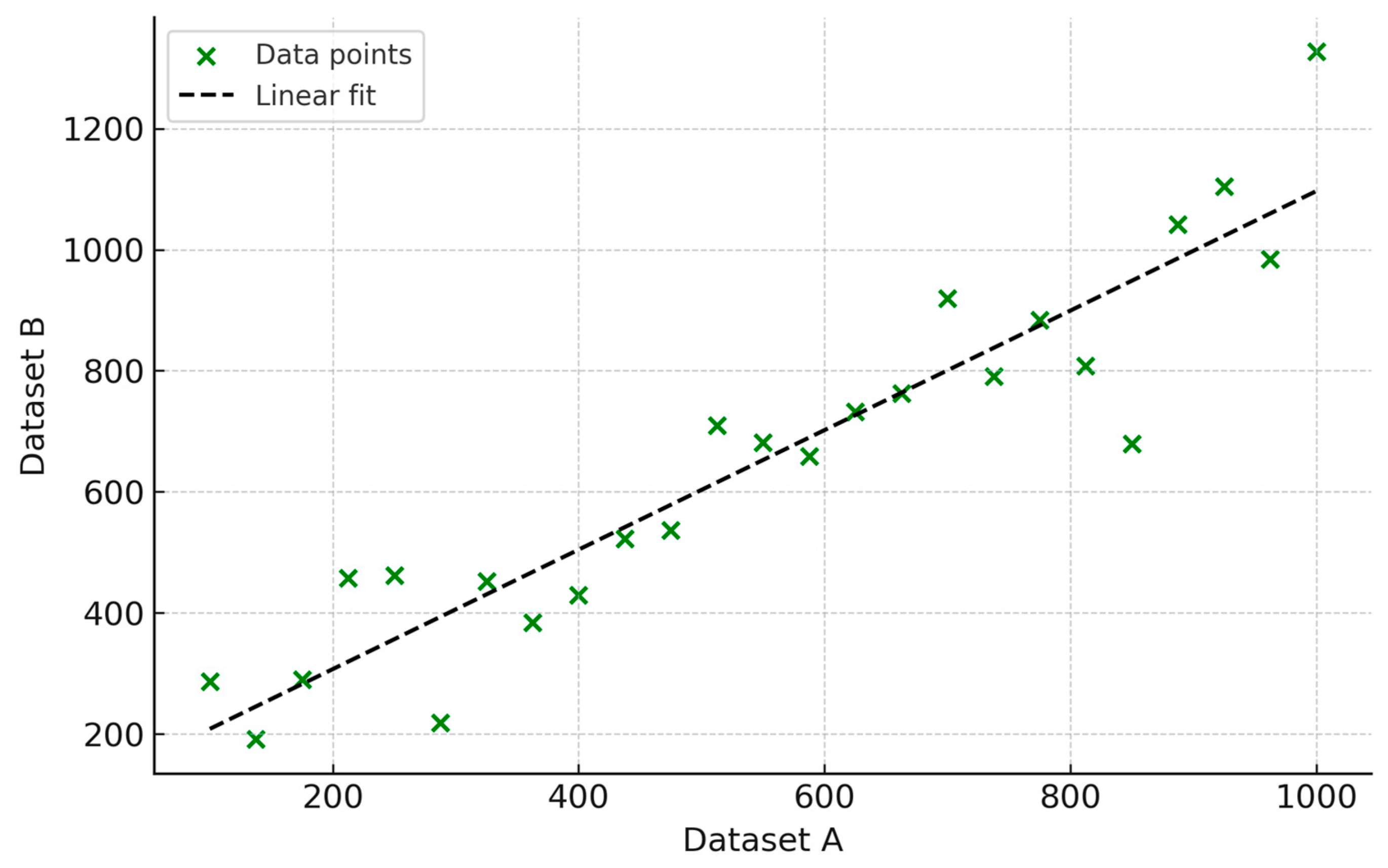

- A related dataset was selected that is expected to be linearly correlated with the dataset of interest.

- To keep the analysis simple, both the dataset of interest and the related dataset were subsetted to reduce their dimensionality and make them comparable. This usually meant subsetting a panel dataset into a cross-sectional or temporal subset (e.g., one year across multiple locations or one location over numerous years).

- A simple linear regression was conducted with the dataset of interest set as the independent variable. The correlation between the variables (i.e., datasets) was then assessed with a hypothesis test at the 5% significance level (95% confidence level), with the null hypothesis that no correlation exists. A p-value below 0.05 was taken as evidence of correlation between the two subsetted datasets.
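
The three steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the EnerMaps implementation: the function name `consistency_check` and the sample values are hypothetical, and a two-sided permutation test on Pearson's r stands in for the usual t-test on the regression slope so that the sketch needs only the standard library.

```python
import math
import random


def consistency_check(x, y, n_perm=2000, alpha=0.05, seed=0):
    """Fit y = intercept + slope * x and test H0: no linear correlation.

    x: subsetted dataset of interest (independent variable)
    y: subsetted related dataset (dependent variable)
    The p-value comes from a two-sided permutation test on Pearson's r
    (an assumption of this sketch, not the method named in the text).
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("x and y must have equal length of at least 3")

    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must each contain varying values")

    def pearson_r(ys):
        # mean_y and syy do not change when ys is a reordering of y.
        sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, ys))
        return sxy / math.sqrt(sxx * syy)

    r_obs = pearson_r(y)
    slope = r_obs * math.sqrt(syy / sxx)  # equals sxy / sxx
    intercept = mean_y - slope * mean_x

    # Shuffle y repeatedly and count how often |r| is at least as extreme.
    rng = random.Random(seed)
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(pearson_r(shuffled)) >= abs(r_obs):
            hits += 1
    p_value = (hits + 1) / (n_perm + 1)

    return {"slope": slope, "intercept": intercept, "r": r_obs,
            "p_value": p_value, "correlated": p_value < alpha}


# Hypothetical subset: one year, eight locations.
interest = [10.0, 12.5, 15.1, 18.0, 21.2, 24.9, 30.3, 33.8]
related = [21.0, 24.1, 31.5, 35.2, 43.9, 48.7, 61.8, 66.0]
result = consistency_check(interest, related)
print(result["correlated"], round(result["r"], 3))
```

A pair of datasets would pass this check when `result["correlated"]` is true, that is, when the p-value falls below the chosen `alpha` of 0.05.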

2.2.7. Statistical Comparative Assessment

3. Results

3.1. Results for the Establishment of a Standard for High-Quality Data

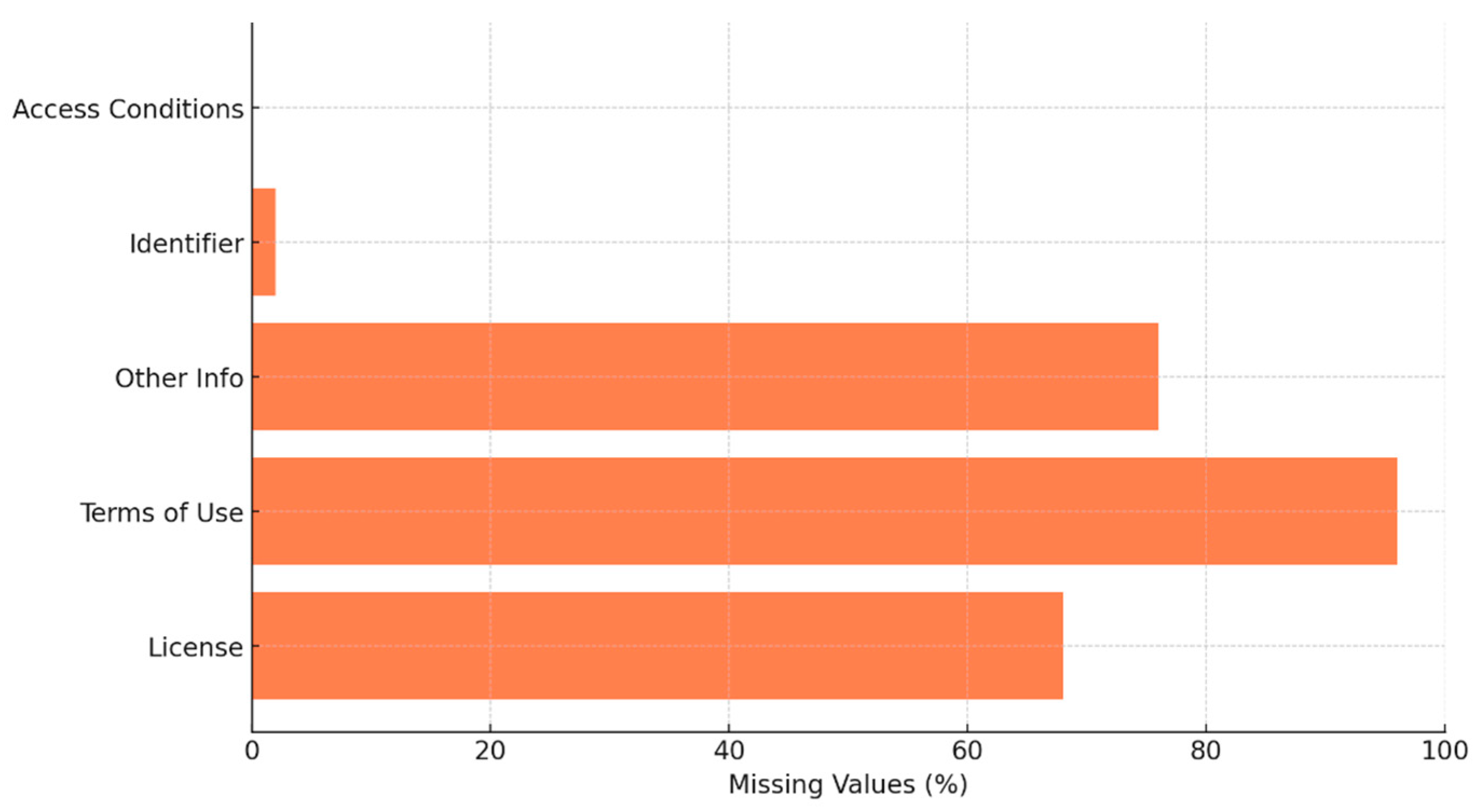

3.2. Check of the Existence of Relevant Metadata

3.3. Results of the Documentation Review

3.3.1. Methodology Check

3.3.2. Statistical Accuracy Check

3.4. Results of the Completeness Analysis

3.5. Results of the Consistency Analysis

3.6. Results of the Statistical Comparative Assessment

4. Discussion

5. Conclusions

- The proposed QC framework, aligned with the FAIR principles, was successfully applied to 50 spatially referenced datasets with varying structure and completeness.

- All datasets satisfied the FAIR dimensions of findability and accessibility, confirming that these criteria can be met with relatively limited effort.

- Major gaps remain in transparency and reusability: 68% of the datasets lacked an explicit license, 96% omitted terms-of-use statements, and 23% did not include methodology documentation.

- The completeness analysis revealed that more than 80% of the assessed datasets contained missing values, with over one-quarter missing more than 50% of key fields.

- Consistency checks based on linear regression indicated statistically significant correlations (p < 0.05) for most dataset pairs, supporting the internal coherence of the data.

- The workflow provides a replicable and scalable model for other domains or platforms, allowing the calibration of QC thresholds based on specific risk tolerance or analytical objectives.

- There is a clear need for standardized metadata templates and documentation protocols to improve data transparency, provenance, and reuse.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Stage Within the Quality Assurance Workflow | Third Level | Second Level | First Level |
|---|---|---|---|
| Gathering input from subject-matter experts | X | X | X |
| Availability of user feedback on the Kialo platform | X | X | X |
| Verification that pertinent metadata are present | X | X | |
| Review of the methodology applied to the datasets | X | X | |
| Assessment of dataset completeness | X | X | |
| Evaluation of statistical accuracy | X | X | |
| Examination of intra- and cross-dataset consistency | X | X | |
| Benchmarking against comparable datasets | X | | |

| Metadata Element | Description |
|---|---|
| Level | Geographic focus of the dataset. |
| Spatial resolution/granularity | Spatial resolution represented (e.g., the minimum spatial unit). |
| Identifier | Persistent identifier or locator that uniquely resolves to the dataset. |
| Identifier type/scheme | Identifier scheme/type used in the “Identifier” field. |
| Data creator/author | Entity responsible for data production (organization and/or individuals). |
| Title | Title shown on the dataset’s landing page. |
| Issuing body (publisher) | Repository/organization that hosts and disseminates the data. |
| Date of publication | Publication date (day–month–year): if multiple updates exist, record the most recent; if only a partial date is provided, retain the available granularity (e.g., month–year or year only). |
| Year of publication | Publication year. |
| Temporal resolution | Temporal resolution of the dataset. |
| Time references (coverage markers) | Temporal coverage (dates/years) referenced by the dataset; a range for longitudinal series or a single year for cross-sectional data. |
| URLs (external links) | URL for the dataset or its landing page. |
| Content descriptors (keywords) | Keywords describing the dataset’s content. |
| Origin/provenance | Provenance/source initiative (e.g., the project that generated the dataset). |
| Geographic coverage/extent | Geographic extent/zone covered (distinct from the “Level” field only for raster products). |
| CRS/map projection | Projected coordinate reference system employed (applicable to projected data). |
| Access conditions | Statement on openness and download availability. |
| Licensing information | License specifying permitted uses and conditions. |
| Terms of use | Concise notes on terms of use. |
| Availability | Where the dataset can be accessed (for publicly available resources). |
| Resource category/type | Resource type (e.g., “dataset”). |
| Data/file format | File format. |
| File size (bytes/MB) | File size of the downloaded dataset (and of any compressed archive, if relevant). |
| Other pertinent information | Additional pertinent notes (e.g., login required for access). |

| Metadata Element | Share of Missing Entries (%) |
|---|---|
| Level | 0 |
| Spatial resolution/granularity | 0 |
| Identifier | 2 |
| Identifier type/scheme | 2 |
| Data creator/author | 0 |
| Title | 2 |
| Issuing body (publisher) | 0 |
| Date of publication | 2 |
| Year of publication | 2 |
| Temporal resolution | 2 |
| Time references (coverage markers) | 2 |
| URLs (external links) | 2 |
| Content descriptors (keywords) | 0 |
| Origin/provenance | 0 |
| Geographic coverage/extent | 0 |
| CRS/map projection | 2 |
| Access conditions | 0 |
| Licensing information | 68 |
| Terms of use | 96 |
| Availability | 0 |
| Resource category/type | 0 |
| Data/file format | 0 |
| File size (bytes/MB) | 0 |
| Other pertinent information | 76 |
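
Missing-entry shares like those in the table above could be computed along the following lines. This is a minimal sketch, not the project's tooling: the function name `missing_share`, the dictionary-based record layout, and the four sample records are all hypothetical, and treating absent keys, `None`, and blank strings as missing is an assumption.

```python
def missing_share(records, elements):
    """Return the percentage of records in which each element is missing.

    A value counts as missing if the key is absent, None, or blank text
    (an assumed definition for this sketch).
    """
    if not records:
        raise ValueError("records must not be empty")

    def is_missing(value):
        return value is None or (isinstance(value, str) and not value.strip())

    shares = {}
    for element in elements:
        n_missing = sum(is_missing(rec.get(element)) for rec in records)
        shares[element] = 100 * n_missing / len(records)
    return shares


# Hypothetical four-record inventory for illustration only.
inventory = [
    {"Issuing body (publisher)": "Org A", "Licensing information": "CC BY 4.0", "Terms of use": ""},
    {"Issuing body (publisher)": "Org B", "Licensing information": None},
    {"Issuing body (publisher)": "Org C", "Licensing information": "  ", "Terms of use": "Cite source"},
    {"Issuing body (publisher)": "Org D", "Licensing information": None, "Terms of use": None},
]
elements = ["Issuing body (publisher)", "Licensing information", "Terms of use"]
shares = missing_share(inventory, elements)
print(shares)
# {'Issuing body (publisher)': 0.0, 'Licensing information': 75.0, 'Terms of use': 75.0}
```

With the 50 datasets in the EnerMaps inventory, each missing entry shifts an element's share by 2 percentage points, which is consistent with the even values in the table above.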


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pezzutto, S.; Bottino-Leone, D.; Wilczynski, E.J. Enhancing Sustainability Through Quality Controlled Energy Data: The Horizon 2020 EnerMaps Project. Sustainability 2025, 17, 7684. https://doi.org/10.3390/su17177684