1. Introduction

With the continuous growth of the global population and the intensification of climate change, agricultural production is confronting unprecedented challenges [1,2,3,4,5]. Globally, pests and pathogens are responsible for approximately 10–40% of crop yield losses, resulting in substantial economic impacts across regions [6]. In northern China, climatic fluctuations have led to frequent outbreaks of pests and diseases, posing severe threats to crop security and yield stability [7]. Leafy vegetables, one of the most important daily-consumed crops in China, are particularly vulnerable because of their short cultivation cycles and exposure to open environments. In some production and distribution chains, postharvest losses of leafy vegetables can exceed 50% [8]. These factors contribute to high pathogen diversity, rapid disease transmission [9], and considerable diagnostic difficulties [10], ultimately exerting significant impacts on both yield and quality [12]. Moreover, current disease detection systems may show reduced accuracy (77–90%) and error rates of 10–23% under real-field conditions [11]. Against this backdrop, efficient detection and precise identification of leafy vegetable diseases have become critical to safeguarding agricultural productivity and food safety [13].

Traditional methods for disease identification typically rely on visual inspection and expert judgment [14]. These approaches are not only inefficient and costly, but their accuracy also depends heavily on the skill of the inspectors [15], making them ill-suited for large-scale, high-frequency monitoring [16]. In recent years, the adoption of modern agricultural models such as aquaponics has offered new avenues for intensive vegetable production, but it has also introduced new challenges for disease management. In closed or semi-closed recirculating systems, once a disease outbreak occurs, it can spread rapidly, severely compromising system stability and water quality. Therefore, it is imperative to develop an intelligent disease recognition system tailored to leafy vegetables in aquaponic environments, enabling efficient and fine-grained monitoring of multiple diseases to support precise intervention and sustainable control.

With the ongoing progress of agricultural modernization and rapid advancements in computer vision technologies [17,18,19], image-based approaches for detecting plant diseases have gained increasing attention [20,21,22]. These techniques generally function by analyzing visual features such as color distribution, texture patterns, and structural shapes within images to locate diseased areas [23]. Typical methodologies include threshold-based segmentation, edge detection, and texture characterization [24]. Despite demonstrating acceptable results under controlled or simple conditions, these traditional image processing methods are inherently dependent on handcrafted features and fixed rule sets, which limits their adaptability to real-world, complex agricultural environments. When faced with a broad variety of disease types and constantly changing field conditions, conventional methods often fail to effectively distinguish between healthy and infected crops, leading to significant performance degradation [25,26,27]. Moreover, their limited generalization capacity becomes apparent in scenarios involving coexisting diseases, inconsistent growing environments, or scarce or imbalanced data across disease categories [28,29,30]. These challenges significantly hinder their applicability in practical agricultural settings, especially in rural regions where digital infrastructure is underdeveloped. Therefore, there is a pressing need for more intelligent and adaptable solutions for the effective detection of leafy vegetable diseases.

In recent years, deep learning, particularly convolutional neural networks (CNNs), has emerged as a powerful alternative for visual analysis in agriculture [31,32]. Unlike traditional image-based techniques that rely on predefined features, deep learning models are capable of automatically learning hierarchical representations directly from large datasets, reducing manual intervention. These models have shown remarkable success in various computer vision tasks such as image classification, object localization, and semantic segmentation [33,34,35,36]. For instance, Abdu et al. introduced a disease recognition framework that efficiently captures both local and global lesion features, reducing redundancy in feature vectors and achieving a recall rate exceeding 99% [37]. Similarly, Rahman et al. developed an automated tomato leaf disease detection system that computed 13 statistical descriptors and used a support vector machine (SVM) for classification, yielding accuracy above 85% [38]. Li et al. enhanced the YOLOv5s model by modifying components such as the CSP, FPN, and NMS modules to improve feature extraction across multiple scales and adapt to environmental variability, achieving a mean average precision (mAP) of 93.1% [39]. Tiwari et al. employed transfer learning to construct a potato disease detection model, resulting in a classification accuracy of 97.8% [40]. Wang et al. proposed a two-stage framework combining DeepLabV3+ and U-Net for identifying the severity of cucumber leaf diseases under complex background conditions; their system reached a segmentation accuracy of 93.27%, a Dice coefficient of 0.6914 for lesion areas, and an average disease severity classification accuracy of 92.85% [41]. Lastly, Jiang et al. utilized CNNs for feature extraction on rice leaf disease images and adopted an SVM classifier, achieving an overall accuracy of 96.8% [42].

Although deep learning–based models have achieved remarkable progress in agricultural disease detection, they still face numerous challenges in real-world applications. These include the large variety of disease types, highly similar visual characteristics among diseases, and significant interference from complex backgrounds, which often lead to target recognition confusion and unclear boundary segmentation. To effectively address these challenges, this study proposes a disease detection and segmentation model incorporating a prototype attention mechanism—ProtoLeafNet—specifically designed to meet the demands of multi-disease coexistence and fine-grained recognition in leafy vegetables. The proposed approach substantially improves both the accuracy and robustness of disease identification. The main contributions of this work are as follows:

Leafy vegetable disease dataset: We collected and organized a large-scale image dataset covering ten typical disease categories across five leafy vegetable species, spanning diverse scenarios and disease morphologies.

Prototype-guided attention mechanism design: We propose a class-prototype–based attention mechanism to guide the model in focusing on key discriminative features of disease regions, effectively suppressing background interference and enhancing its ability to distinguish among multiple diseases.

Prototype loss optimization strategy: By optimizing the distance relationships between sample embeddings and class prototypes, we enhance inter-class separability and intra-class compactness in the feature space, thereby improving classification and segmentation accuracy.

Dual-task network structure for detection and segmentation: We construct a multi-task model that integrates object detection and semantic segmentation, enabling both precise localization of disease regions and fine-grained segmentation, thus improving the overall recognition capability of the system.

The remainder of this paper is organized as follows:

Section 2 reviews related work in plant disease detection and segmentation.

Section 3 introduces the overall architecture of ProtoLeafNet, including the prototype attention mechanism.

Section 4 details the dataset construction and experimental setup.

Section 5 presents quantitative and qualitative results, including ablation studies and specific case discussions.

Finally, Section 6 concludes the paper and outlines future research directions.

3. Methods and Materials

3.1. Dataset Construction

Constructing a high-quality dataset is a foundational step in this study, as the data quality directly affects the performance and generalization ability of the model. In this work, an image dataset was created specifically for leafy vegetable disease detection and segmentation tasks. The dataset includes several common vegetable types and their corresponding disease symptoms.

The images were collected from two sources: (1) public online platforms including PlantVillage, Kaggle, and Google Image Search, and (2) field photographs taken at the experimental agricultural base of China Agricultural University in Haidian District, Beijing. When searching online, we used disease-specific and crop-specific keywords (e.g., “lettuce downy mildew”, “spinach anthracnose”) and manually filtered the results based on image clarity, completeness, and relevance. Low-resolution, watermarked, or duplicate images were excluded.

In the field collection, we used handheld cameras (mainly mobile phone cameras) under natural lighting to photograph various disease conditions. The selected vegetable species included spinach, lettuce, water spinach, Chinese cabbage, and celtuce. The disease types covered in the dataset include downy mildew, white rust, anthracnose, virus disease, gray mold, soft rot, black rot, black spot, sclerotinia, and powdery mildew. For each disease type, about 1000 to 2000 images were collected to ensure sufficient variation and coverage.

Although healthy leaves were not labeled as a separate class, most disease symptoms are localized, so each image naturally contains areas of healthy leaf tissue that serve as negative examples during training. A summary of the number of images per disease type is provided in Table 1. To support reproducibility, we are willing to share the dataset upon reasonable request.

To guarantee high visual fidelity and preserve fine-grained details during image acquisition, high-resolution digital cameras were employed, primarily digital single-lens reflex (DSLR) cameras manufactured by Nikon and Canon. To further enrich the dataset and enhance its diversity, additional images were sourced from publicly available online platforms. Only images that met strict selection standards, such as high pixel density, clearly distinguishable disease symptoms, and credible origins, were included. An example of the image quality and selection rationale is shown in Figure 1.

The dataset was partitioned into training (80%), validation (10%), and test (10%) subsets, which were used for model fitting, parameter adjustment, and final performance evaluation, respectively.
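The 80/10/10 partition can be sketched as a seeded shuffle of sample indices followed by two cuts. This is a minimal illustration only; `split_indices` and the fixed seed are hypothetical choices, and the text does not state whether the split was stratified by disease class.

```python
import random


def split_indices(n, train=0.8, val=0.1, seed=0):
    """Shuffle indices 0..n-1 and cut them into train/val/test lists (80/10/10 by default)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # seeded so the split is reproducible
    n_train = int(n * train)
    n_val = int(n * val)
    # Whatever remains after train and val becomes the test set.
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train_idx, val_idx, test_idx = split_indices(1000)
```

With roughly 1000 to 2000 images per disease type, applying the same function separately to each class would keep the class proportions equal across the three subsets.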

3.2. Data Augmentation

In the context of deep learning, data augmentation serves as a vital strategy to improve the robustness and predictive performance of models. It involves generating additional training instances by applying a range of transformations to the original dataset, thereby enhancing generalization capabilities and reducing overfitting tendencies. As deep learning research progresses, a number of advanced augmentation techniques—such as Cutout, Mixup, and CutMix—have emerged and gained significant attention. These methods introduce unique perturbations to training data, greatly enriching the variability of input distributions and contributing to performance improvements across diverse application domains.

3.2.1. Cutout

Among these, Cutout stands out for its conceptual simplicity and practical effectiveness. The method randomly masks a rectangular patch within the input image, setting all pixel values in the selected area to zero. This localized dropout encourages the model to rely on more comprehensive contextual cues during training. A notable advantage of Cutout is its minimal computational overhead, since the operation requires only a single masking step. The Cutout procedure is formally expressed in Equation (3):

$$\tilde{I} = M \odot I \tag{3}$$

where $\odot$ denotes element-wise multiplication.

In the Cutout method, let $I$ represent the original input image and $\tilde{I}$ the resulting image after augmentation. A binary mask $M$ with the same dimensions as the input image is used to apply the occlusion. Within this mask, a rectangular region is randomly selected and assigned a value of 0 to indicate masked pixels, while all other positions retain a value of 1, preserving the corresponding original pixel values. By introducing such masked-out areas, Cutout compels the neural network to learn more generalized and spatially diverse features, thereby improving robustness.
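The masking step can be sketched in plain Python as below, assuming a single-channel image stored as nested lists; the function name `cutout` and the rule that the patch always lies fully inside the image are illustrative assumptions rather than details taken from this work.

```python
import random


def cutout(image, patch_h, patch_w, rng=random):
    """Zero out one randomly placed patch_h x patch_w rectangle of a 2-D image.

    `image` is a list of H rows, each a list of W pixel values.
    Returns (augmented_image, mask); mask is 1 where pixels are kept and 0 where masked.
    """
    h, w = len(image), len(image[0])
    # Choose the top-left corner so the patch lies fully inside the image
    # (a simplification: the original Cutout may also clip patches at the border).
    top = rng.randint(0, h - patch_h)
    left = rng.randint(0, w - patch_w)
    mask = [[0 if (top <= r < top + patch_h and left <= c < left + patch_w) else 1
             for c in range(w)]
            for r in range(h)]
    # Element-wise product of mask and image: masked pixels become 0.
    augmented = [[image[r][c] * mask[r][c] for c in range(w)] for r in range(h)]
    return augmented, mask


# Example: a 5 x 6 all-ones image with a 2 x 3 patch removed.
out, m = cutout([[1] * 6 for _ in range(5)], 2, 3, random.Random(0))
```

For multi-channel images the same mask would be applied to every channel.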

3.2.2. Mixup

Mixup is a data augmentation strategy that synthesizes new examples by blending two input images in a randomly sampled proportion. This process involves pixel-wise interpolation and label fusion through weighted averaging. Its core benefit lies in promoting model generalization by mitigating issues such as noisy annotations, data scarcity, and unclear class boundaries. The Mixup procedure is given by Equations (4) and (5):

$$\tilde{x} = \lambda x_i + (1 - \lambda) x_j \tag{4}$$

$$\tilde{y} = \lambda y_i + (1 - \lambda) y_j \tag{5}$$

In Mixup, $x_i$ and $x_j$ refer to two distinct input samples, and $y_i$ and $y_j$ denote their respective labels. A mixing parameter $\lambda \in [0, 1]$ is drawn from a uniform distribution and determines the ratio used to blend both the images and their labels, yielding the mixed sample $\tilde{x}$ and label $\tilde{y}$. This interpolation not only exposes the model to transitional cases between different classes but also reduces sensitivity to label noise and sharp decision boundaries, ultimately enhancing generalization performance and mitigating the risk of overfitting.
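A minimal sketch of Equations (4) and (5), assuming images flattened to lists of floats and one-hot labels; `mixup` is a hypothetical helper, and $\lambda$ is sampled uniformly as the text describes.

```python
import random


def mixup(x_i, y_i, x_j, y_j, rng=random):
    """Blend two flattened images and their one-hot labels with one shared weight lam."""
    lam = rng.uniform(0.0, 1.0)  # uniform sampling, as described in the text
    x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_i, y_j)]
    return x_mix, y_mix, lam


x_mix, y_mix, lam = mixup([0.0, 1.0], [1.0, 0.0], [1.0, 0.0], [0.0, 1.0], random.Random(1))
```

The original Mixup formulation samples $\lambda$ from a Beta$(\alpha, \alpha)$ distribution instead; replacing the `lam` line with `rng.betavariate(alpha, alpha)` would switch to that variant without changing anything else.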

3.2.3. CutMix

CutMix is an alternative augmentation technique that constructs synthetic samples by cutting a region from one image and pasting it into another. This method promotes the learning of localized patterns and strengthens the model's resistance to overfitting and noise. The operation of CutMix can be formulated as Equations (6) and (7):

$$\tilde{x} = M \odot x_A + (\mathbf{1} - M) \odot x_B \tag{6}$$

$$\tilde{y} = \lambda y_A + (1 - \lambda) y_B \tag{7}$$

Specifically, $x_A$ and $x_B$ denote two input images with corresponding labels $y_A$ and $y_B$, respectively. A binary mask $M$ is generated to indicate the rectangular region cropped from image A and pasted onto image B. The proportion of the cropped region is determined by a scalar $\lambda$ sampled from a uniform distribution. The resulting label $\tilde{y}$ corresponds to the augmented image $\tilde{x}$. CutMix improves the model's understanding of local image regions and enhances its ability to recognize combinations of features from different objects. The data augmentation effect is shown in Figure 2.
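The cut-and-paste operation of Equations (6) and (7) can be sketched as follows, assuming same-sized single-channel images stored as nested lists. The helper name `cutmix`, the box shape (same aspect ratio as the image), and the recomputation of the label weight from the actual pasted area after rounding are all illustrative assumptions.

```python
import math
import random


def cutmix(x_a, y_a, x_b, y_b, rng=random):
    """Paste a random rectangle of image A onto image B and mix the labels by area."""
    h, w = len(x_b), len(x_b[0])
    lam = rng.uniform(0.0, 1.0)  # target fraction of the image taken from A
    # Box with area close to lam * h * w and the same aspect ratio as the image.
    box_h = int(round(h * math.sqrt(lam)))
    box_w = int(round(w * math.sqrt(lam)))
    top = rng.randint(0, h - box_h)
    left = rng.randint(0, w - box_w)
    # M = 1 inside the pasted region (pixels from A), 0 elsewhere (pixels from B).
    mask = [[1 if (top <= r < top + box_h and left <= c < left + box_w) else 0
             for c in range(w)]
            for r in range(h)]
    mixed = [[mask[r][c] * x_a[r][c] + (1 - mask[r][c]) * x_b[r][c] for c in range(w)]
             for r in range(h)]
    # Label weight taken from the exact pasted area after rounding the box size.
    lam_eff = box_h * box_w / (h * w)
    y_mix = [lam_eff * a + (1.0 - lam_eff) * b for a, b in zip(y_a, y_b)]
    return mixed, y_mix


img_a = [[1] * 8 for _ in range(8)]
img_b = [[0] * 8 for _ in range(8)]
mixed, y_mix = cutmix(img_a, [1.0, 0.0], img_b, [0.0, 1.0], random.Random(3))
```

Because image A is all ones and image B all zeros in the example, the fraction of ones in `mixed` equals the label weight assigned to A.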

3.3. Proposed Method

3.3.1. Network Architecture Overview

This paper proposes a vegetable disease detection and segmentation model based on a Transformer architecture combined with a prototype enhancement mechanism, as illustrated in Figure 3. The model integrates the local perception capability of convolutional neural networks with the global modeling strength of Transformers, and significantly improves the detection and segmentation performance in complex diseased regions through foreground-guided and prototype optimization mechanisms. The overall network employs ResNet-50 as the backbone feature extractor, encoding the input image into multi-scale convolutional features, which are then projected to a fixed dimension and flattened into a sequence fed into the Transformer.

During the semantic modeling process, the Transformer encoder utilizes multi-layer, multi-head self-attention to capture global relationships among different image regions, yielding semantically enriched representations. To enhance the model’s focus on key regions, a class-agnostic foreground prediction mechanism is introduced to estimate the probability of each encoded token belonging to the foreground. The top-k tokens with the highest confidence are selected as query vectors for the decoder, enabling a foreground-driven decoding process. The Transformer decoder further integrates global semantics and local spatial information to produce task-discriminative representations.

To improve the category distinctiveness and semantic consistency of the decoder outputs, a prototype enhancement module is introduced following the decoder. This module extracts prototype vectors for each disease category from the training samples and computes the similarity between the decoder features and the prototypes, refining the model’s response to target regions through weighted fusion. Finally, the enhanced features are fed into a multi-task predictor comprising three branches for object classification, bounding box regression, and lesion mask generation.

The entire model is trained end-to-end by jointly optimizing multiple loss functions, including standard classification, bounding box, and segmentation losses, as well as an additional prototype loss that constrains the distance between the decoder output and the corresponding category prototype. This design enhances the discriminability and stability of semantic modeling. The proposed architecture supports end-to-end training and achieves high-precision object detection and semantic segmentation in agricultural scenarios characterized by imbalanced data distributions and diverse disease region morphologies.

3.3.2. Feature Encoding and Semantic Modeling Module

As illustrated in Figure 4, the model first takes an input image $I$ and employs ResNet-50 as the backbone feature extractor to obtain multi-scale convolutional features. To achieve efficient information fusion and construct a unified representation, features from multiple stages of the backbone, denoted as $\{F_s\}$, corresponding to different spatial resolutions and semantic depths, are selected. Each feature map is first passed through a convolution that adjusts its channel dimension to a common size $C$, and the resulting maps are then combined through upsampling and concatenation to produce a unified global feature representation $F$.

To incorporate global contextual information, the fused convolutional features $F$ are flattened into a token sequence $X$ of length $N$, where $N$ is the number of spatial positions in $F$. A learnable positional encoding $P$ of the same shape is then added to $X$ to form the input to the Transformer encoder (Equation (8)):

$$Z^{(0)} = X + P \tag{8}$$

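Within each encoder layer, the query matrix $Q$, key matrix $K$, and value matrix $V$ for each token are obtained via linear projections of the layer's input sequence $H$, and self-attention is computed for each head as in Equation (9), where $W^{Q}$, $W^{K}$, and $W^{V}$ are learnable projection matrices and $d_k$ denotes the dimension of each attention head:

$$Q = H W^{Q}, \quad K = H W^{K}, \quad V = H W^{V}, \quad \mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{9}$$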
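A single-head version of this computation can be sketched in plain Python, assuming tokens stored as nested lists. Multi-head splitting, residual connections, layer normalization, and the feed-forward sublayer of a full encoder layer are deliberately omitted, and the helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both as nested lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_layer(x, pos, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention on tokens x plus positional encoding."""
    h = [[a + b for a, b in zip(xr, pr)] for xr, pr in zip(x, pos)]  # X + P
    q, k, v = matmul(h, w_q), matmul(h, w_k), matmul(h, w_v)
    d_k = len(w_k[0])
    k_t = [list(col) for col in zip(*k)]  # K transposed
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


eye = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
out, attn = attention_layer(tokens, [[0.0, 0.0]] * 3, eye, eye, eye)
```

With three identical tokens every attention weight is 1/3, so each output row is simply the average of the value vectors.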

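After passing through $L$ layers of the encoder, the globally modeled output sequence $Z = \{z_i\}_{i=1}^{N}$ is obtained. This sequence encodes rich semantic and contextual information and serves as the foundation for foreground token selection (Equation (10)):

$$s_i = \sigma\left(w^{\top} z_i + b\right) \tag{10}$$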

For the $i$-th token $z_i$, a foreground score is computed as $s_i = \sigma(w^{\top} z_i + b)$, where $w$ and $b$ are learnable parameters and $\sigma(\cdot)$ denotes the sigmoid function. Based on the foreground scores, the top-$k$ tokens are selected to form the foreground feature set $Z_f$, which serves as the query input to the Transformer decoder.
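The foreground scoring and top-$k$ selection can be sketched as below, assuming tokens are lists of floats and that `w` and `b` have already been learned. `select_foreground_queries` is a hypothetical name, and breaking ties by token index is an assumption, since the text does not specify tie handling.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def select_foreground_queries(z, w, b, k):
    """Score tokens with s_i = sigmoid(w . z_i + b) and keep the k highest-scoring ones."""
    scores = [sigmoid(sum(wi * zi for wi, zi in zip(w, tok)) + b) for tok in z]
    # Stable sort on descending score, so equal scores keep their original order.
    top = sorted(range(len(z)), key=lambda i: -scores[i])[:k]
    return [z[i] for i in top], top, scores


encoder_out = [[0.1, 0.0], [2.0, 0.0], [-1.0, 0.0], [1.0, 0.0]]
queries, idx, scores = select_foreground_queries(encoder_out, w=[1.0, 0.0], b=0.0, k=2)
```

Here the score grows with the first feature, so tokens 1 and 3 are chosen as decoder queries.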

The decoder takes the selected foreground tokens $Z_f$ as queries and the encoder output $Z$ as keys and values. Through cross-attention, it fuses these features and generates task-discriminative representations $D = \{d_j\}_{j=1}^{k}$. These output tokens are subsequently used for target classification, localization, and mask prediction.

This module effectively combines local convolutional perception with global Transformer-based semantic modeling, which enhances the model's perception of disease regions and provides a solid semantic foundation for subsequent prototype enhancement and multi-task prediction.

3.3.3. Prototype Enhancement Module

Although the Transformer decoder possesses strong global modeling capability, relying solely on the attention mechanism may lead to inaccurate category discrimination when disease regions are irregularly distributed and inter-class differences are subtle, particularly in multi-class disease images with confusing regions. To address this issue, a prototype enhancement module is introduced, which uses category-level semantic representations as guidance to improve feature discriminability from a categorical perspective. This module consists of two parts, a prototype extraction module and a prototype attention mechanism, which respectively construct category prototypes during training and use them to semantically enhance the decoder outputs.

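Prototype Extraction Module: During training, for each disease category $c$, a semantic centroid vector, referred to as the category prototype vector $p_c$, is computed by aggregating the features of all samples belonging to category $c$. By summarizing the shared semantics of its samples, this prototype serves as the most representative feature of the category (Equation (11)):

$$p_c = \frac{1}{|\mathcal{S}_c|} \sum_{i \in \mathcal{S}_c} f_i \tag{11}$$

where $\mathcal{S}_c$ denotes the set of training samples of category $c$ and $f_i$ is the feature of sample $i$.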
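The centroid computation can be sketched as a per-class mean, assuming features are lists of floats paired with class labels. The text does not say whether prototypes are recomputed per batch, accumulated over an epoch, or updated with a moving average; this sketch simply averages whatever (feature, label) pairs it is given, and `class_prototypes` is a hypothetical name.

```python
def class_prototypes(features, labels):
    """Return {class: mean feature vector} over all samples of each class."""
    sums, counts = {}, {}
    for f, c in zip(features, labels):
        if c not in sums:
            sums[c] = [0.0] * len(f)
            counts[c] = 0
        sums[c] = [s + v for s, v in zip(sums[c], f)]
        counts[c] += 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}


protos = class_prototypes([[1.0, 0.0], [3.0, 0.0], [0.0, 2.0]],
                          ["downy_mildew", "downy_mildew", "white_rust"])
```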

Prototype Attention Mechanism: During training and inference, the output of the Transformer decoder is denoted as $D = \{d_j\}_{j=1}^{k}$. To enhance the category-specific expressiveness of each target token, a prototype attention mechanism based on cosine similarity is designed. Specifically, the similarity between each token $d_j$ and each category prototype $p_c$ is computed as Equation (12):

$$\mathrm{sim}(d_j, p_c) = \frac{d_j^{\top} p_c}{\lVert d_j \rVert_2 \, \lVert p_c \rVert_2} \tag{12}$$

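The attention weights are then normalized via a softmax function over the categories to obtain the attention distribution (Equation (13)):

$$\alpha_{jc} = \frac{\exp\left(\mathrm{sim}(d_j, p_c)\right)}{\sum_{c'} \exp\left(\mathrm{sim}(d_j, p_{c'})\right)} \tag{13}$$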

Based on these weights, the prototype-enhanced representation of each token is formulated as Equation (14).

The enhanced token sequence incorporates category-guided information, resulting in more discriminative and semantically consistent features, which are beneficial for subsequent multi-task branches performing classification, localization, and mask prediction.
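The similarity and softmax steps can be sketched as follows, assuming tokens and prototypes are lists of floats. Because the exact fusion rule of Equation (14) is not recoverable from the text, the residual form $d_j + \sum_c \alpha_{jc} p_c$ used here is an assumption chosen for illustration, and `prototype_attention` is a hypothetical name.

```python
import math


def cosine(u, v, eps=1e-12):
    """Cosine similarity between two vectors (eps avoids division by zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + eps)


def prototype_attention(tokens, prototypes):
    """Enhance decoder tokens with a similarity-weighted mix of class prototypes.

    tokens: list of feature vectors d_j; prototypes: dict class -> vector p_c.
    The residual fusion d_j + sum_c a_jc * p_c is an ASSUMED stand-in for Eq. (14).
    """
    classes = sorted(prototypes)
    enhanced, weights_per_token = [], []
    for d in tokens:
        sims = [cosine(d, prototypes[c]) for c in classes]  # cosine similarity
        top = max(sims)
        exps = [math.exp(s - top) for s in sims]
        total = sum(exps)
        weights = [e / total for e in exps]                 # softmax over classes
        mix = [sum(wt * prototypes[c][i] for wt, c in zip(weights, classes))
               for i in range(len(d))]
        enhanced.append([a + b for a, b in zip(d, mix)])    # assumed residual fusion
        weights_per_token.append(dict(zip(classes, weights)))
    return enhanced, weights_per_token


protos = {"downy_mildew": [1.0, 0.0], "white_rust": [0.0, 1.0]}
enh, attn = prototype_attention([[2.0, 0.0]], protos)
```

A token aligned with the downy mildew prototype receives most of its attention weight from that prototype, which is the behavior the module is meant to exploit.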

3.3.4. Multi-Task Predictor and Loss Function Design

After completing the Transformer decoding and prototype enhancement, the output feature sequence is fed into three task-specific branches, which are responsible for target classification, bounding box regression, and pixel-level mask prediction of the diseased regions, respectively. This constitutes an end-to-end multi-task learning framework. The structure of each branch is as follows:

Target Classification Branch: The classification branch employs a multi-layer perceptron (MLP) to predict the category probability distribution for each token. Let $\hat{p}_j$ denote the predicted category distribution of the $j$-th token and $y_j$ its ground-truth category label. The classification loss is defined as the average multi-class cross-entropy (Equation (15)):

$$\mathcal{L}_{cls} = -\frac{1}{k} \sum_{j=1}^{k} \log \hat{p}_j(y_j) \tag{15}$$

where $\hat{p}_j(y_j)$ is the predicted probability of the ground-truth class.
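The average cross-entropy can be sketched directly, assuming each prediction is a list of class probabilities and each label an integer index; the small floor `eps` is an added safeguard against taking the logarithm of zero, not something stated in the text.

```python
import math


def classification_loss(probs, labels, eps=1e-12):
    """Average multi-class cross-entropy: -(1/k) * sum_j log p_j[y_j]."""
    return -sum(math.log(max(p[y], eps)) for p, y in zip(probs, labels)) / len(labels)


loss = classification_loss([[0.5, 0.5], [1.0, 0.0]], [0, 0])
```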

Bounding Box Regression Branch. The regression branch outputs the four-dimensional bounding box parameters $\hat{b}_j$ for each target, encoding the box's center position together with its width and height. The loss function combines the L1 loss with the Generalized IoU (GIoU) loss (Equation (16)), where $b_j$ denotes the ground-truth bounding box of the $j$-th target.
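Since the exact form of Equation (16) is not recoverable here, the sketch below assumes the common detection-transformer pattern of a weighted L1 term plus a $1 - \mathrm{GIoU}$ term on boxes given as (center x, center y, width, height). The weights `w_l1` and `w_giou` are hypothetical parameters set to 1 for illustration.

```python
def to_corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def giou(box_p, box_g):
    """Generalized IoU: IoU minus the fraction of the enclosing box not covered by the union."""
    x1, y1, x2, y2 = to_corners(box_p)
    gx1, gy1, gx2, gy2 = to_corners(box_g)
    inter = max(0.0, min(x2, gx2) - max(x1, gx1)) * max(0.0, min(y2, gy2) - max(y1, gy1))
    union = (x2 - x1) * (y2 - y1) + (gx2 - gx1) * (gy2 - gy1) - inter
    # Smallest axis-aligned box enclosing both boxes.
    enclose = (max(x2, gx2) - min(x1, gx1)) * (max(y2, gy2) - min(y1, gy1))
    return inter / union - (enclose - union) / enclose


def box_loss(pred, gt, w_l1=1.0, w_giou=1.0):
    """Weighted L1 distance plus (1 - GIoU) for one predicted/ground-truth box pair."""
    l1 = sum(abs(p - g) for p, g in zip(pred, gt))
    return w_l1 * l1 + w_giou * (1.0 - giou(pred, gt))


same = giou((0.5, 0.5, 1.0, 1.0), (0.5, 0.5, 1.0, 1.0))
apart = giou((0.5, 0.5, 1.0, 1.0), (2.5, 0.5, 1.0, 1.0))
```

Unlike plain IoU, GIoU stays informative for non-overlapping boxes: the disjoint pair above scores $-1/3$ rather than 0. In practice the per-target losses would be averaged over all matched targets.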

Mask Prediction Branch. The mask prediction branch generates a binary mask $\hat{m}_j$ for each detected diseased region. To obtain precise and complete segmentation, the loss function combines the binary cross-entropy (BCE) and Dice losses (Equation (17)), where $m_j$ is the ground-truth mask and $\hat{m}_j$ is the predicted mask for the $j$-th target.
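A sketch of a combined BCE and Dice loss on one flattened mask, assuming predicted probabilities in $[0, 1]$ and 0/1 targets. The unweighted sum BCE $+ (1 - \mathrm{Dice})$ is an assumption, since the relative weighting in Equation (17) is not stated here, and `mask_loss` is a hypothetical name.

```python
import math


def mask_loss(pred, target, eps=1e-6):
    """BCE + (1 - Dice) for a flattened mask; pred holds probabilities, target 0/1 values."""
    n = len(pred)
    # Pixel-wise binary cross-entropy, with log arguments floored at eps.
    bce = -sum(t * math.log(max(p, eps)) + (1 - t) * math.log(max(1 - p, eps))
               for p, t in zip(pred, target)) / n
    # Soft Dice coefficient measuring region overlap.
    inter = sum(p * t for p, t in zip(pred, target))
    dice = (2 * inter + eps) / (sum(pred) + sum(target) + eps)
    return bce + (1 - dice)


perfect = mask_loss([1.0, 0.0, 1.0], [1, 0, 1])
uncertain = mask_loss([0.5, 0.5], [1, 0])
```

BCE supervises each pixel independently, while the Dice term scores the overlap of the whole region, which helps when lesions occupy only a small part of the leaf.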

Prototype Loss. To further improve the model's ability to discriminate among disease categories, a prototype loss ($\mathcal{L}_{proto}$) is introduced. This loss encourages each target feature to move closer to the semantic prototype of its corresponding category and is defined as Equation (18), where $f_j$ is the feature representation of the $j$-th target and $p_{y_j}$ is the prototype vector of its ground-truth category. This loss minimizes the intra-class distance while enlarging the inter-class distance, improving feature compactness and separability, which is particularly beneficial for distinguishing confusing disease categories.
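Equation (18) is not recoverable here, so the sketch below substitutes one common formulation with the described behavior: the prototypical-network loss, a cross-entropy over negative squared Euclidean distances to all class prototypes. It is a swapped-in technique for illustration, not necessarily the paper's definition, and `prototype_loss` is a hypothetical name.

```python
import math


def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def prototype_loss(features, labels, prototypes):
    """Cross-entropy over negative squared distances to the class prototypes.

    Minimizing it pulls each feature toward its own prototype and, through the
    softmax denominator, pushes it away from the other prototypes.
    """
    classes = sorted(prototypes)
    total = 0.0
    for f, y in zip(features, labels):
        logits = [-sq_dist(f, prototypes[c]) for c in classes]
        m = max(logits)
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))  # log-sum-exp
        total += log_z - logits[classes.index(y)]
    return total / len(features)


protos = {"a": [0.0, 0.0], "b": [4.0, 0.0]}
good = prototype_loss([[0.0, 0.0]], ["a"], protos)
bad = prototype_loss([[0.0, 0.0]], ["b"], protos)
```

A feature sitting on its own prototype yields a loss near zero, while the same feature labeled with the distant class costs about 16, the squared distance between the two prototypes.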

3.3.5. Multi-Task Joint Loss Function

To jointly optimize the model for classification, localization, segmentation, and semantic consistency, the total loss function is defined as a weighted sum of the individual loss terms (Equation (19)):

$$\mathcal{L}_{total} = \lambda_{cls}\mathcal{L}_{cls} + \lambda_{box}\mathcal{L}_{box} + \lambda_{mask}\mathcal{L}_{mask} + \lambda_{proto}\mathcal{L}_{proto} \tag{19}$$

where $\lambda_{cls}$, $\lambda_{box}$, $\lambda_{mask}$, and $\lambda_{proto}$ are hyperparameters controlling the relative contributions of the classification loss, bounding box regression loss, mask prediction loss, and prototype loss, respectively. This joint optimization enables the model to learn a balanced representation that is both semantically discriminative and spatially precise.

3.4. Evaluation Metrics

In this research, several quantitative indicators, namely accuracy, precision, recall, and mAP (mean Average Precision), are used to assess model performance. Accuracy is a straightforward yet fundamental indicator that quantifies the proportion of correctly classified instances relative to the total number of samples, that is, how many predictions match the ground truth across the entire dataset. Precision evaluates how reliably the model makes positive predictions: it is the proportion of correctly classified positives among all instances the system marks as positive. Recall, in contrast, measures the model's capacity to detect actual positive cases and is calculated as the number of true positives divided by the total number of ground-truth positive examples. While precision emphasizes prediction correctness, recall emphasizes coverage of all relevant positives. To provide a thorough evaluation across multiple object classes and varying confidence levels, mAP is adopted. Its calculation proceeds in several stages: first, a PR (precision–recall) curve is plotted for each category from the model predictions; next, the area under each PR curve is computed to yield the AP (Average Precision) for that class; finally, mAP is obtained by averaging the AP values across all object categories. The formal mathematical expressions for these evaluation measures are as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{N}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\mathrm{AP} = \int_{0}^{1} P(r)\,dr, \qquad \mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} \mathrm{AP}_i$$

where $TP$ and $TN$ indicate the counts of correctly predicted positive and negative instances, while $FP$ and $FN$ correspond to incorrect positive and negative predictions. The symbol $N$ denotes either the total sample count (for accuracy) or the number of object classes (for mAP). Additionally, $P(r)$ reflects the precision value at a given recall level $r$.
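These measures can be sketched in plain Python as below. The AP function uses all-point interpolation (precision made monotonically non-increasing before integrating), as in PASCAL VOC evaluation from 2010 onward; whether the paper used this or another interpolation scheme is not stated, and matching detections to ground truth at an IoU threshold (0.5 for mAP@50, 0.75 for mAP@75) is assumed to be done beforehand. Function names are hypothetical.

```python
def precision_recall_accuracy(tp, fp, tn, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return precision, recall, accuracy


def average_precision(scores, is_tp, num_gt):
    """Area under the PR curve for one class (all-point interpolation).

    scores: confidence of each detection; is_tp: whether it matched a ground truth.
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    rec, prec = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        rec.append(tp / num_gt)
        prec.append(tp / (tp + fp))
    mrec = [0.0] + rec + [1.0]
    mpre = [0.0] + prec + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # make precision non-increasing
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_ap(ap_values):
    return sum(ap_values) / len(ap_values)


p, r, acc = precision_recall_accuracy(tp=8, fp=2, tn=5, fn=5)
ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
```

In the example, the ranked detections are hit, miss, hit against two ground-truth lesions, which gives an AP of 5/6.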

4. Results

4.1. Experimental Setup

The experiments were executed on a high-efficiency computing system configured with GPU-enabled infrastructure tailored for deep learning workloads.

Table 2 outlines the experimental environment, including both software frameworks and hardware specifications utilized during the evaluation.

To ensure consistency and fairness in performance comparison, all models were trained for a unified total of 200 epochs. For ProtoLeafNet, the Transformer decoder is composed of layers, which was empirically selected to balance representational depth and computational cost.

The training process employed a carefully tuned set of hyperparameters to achieve stable model behavior under data-scarce conditions. Specifically, the learning rate was initialized at 0.001 and decayed by a factor of 10 every 10 epochs to facilitate convergence. A batch size of 16 was chosen to balance computational efficiency and gradient stability.
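The step-decay rule described above can be written as a one-line function of the (0-based) epoch index; `step_lr` is a hypothetical helper whose defaults mirror the stated values.

```python
def step_lr(epoch, base_lr=0.001, factor=10.0, step=10):
    """Learning rate at a 0-based epoch: divide base_lr by `factor` every `step` epochs."""
    return base_lr / (factor ** (epoch // step))


schedule = [step_lr(e) for e in range(200)]
```

In PyTorch, the same schedule corresponds to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)`. Over 200 epochs this rule applies 19 decay steps, so the later epochs train with a very small learning rate.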

Prior to training, all input images were resized to pixels to match the input dimension expectations of standard convolutional architectures. To enhance generalization and reduce the variance introduced by dataset partitioning, we employed a five-fold cross-validation strategy. The dataset was evenly divided into five subsets; in each round, one subset was used as validation while the remaining four were used for training. The final results were reported as the average across all five folds.
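The five-fold protocol can be sketched as follows, assuming a seeded shuffle and interleaved fold assignment; `k_fold_indices` is a hypothetical helper, and scikit-learn's `KFold(n_splits=5, shuffle=True, random_state=...)` offers the same kind of split.

```python
import random


def k_fold_indices(n, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k roughly equal folds of shuffled indices."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # every k-th shuffled index forms one fold
    for i in range(k):
        val = folds[i]
        train = [j for fold in folds[:i] + folds[i + 1:] for j in fold]
        yield train, val


folds = list(k_fold_indices(23, k=5))
```

Each sample appears in exactly one validation fold, so averaging the five validation scores uses every image once for evaluation.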

To prevent overfitting, both L2 regularization (with a weight decay of 0.001) and dropout (with a rate of 0.5) were applied during training. Optimization was performed using the Adam algorithm with hyperparameters , , and .

For all baseline models, including YOLOv8, YOLOv9, YOLOv10, YOLOv11, and TinySegformer, we adhered to the best-performing configurations and training procedures as reported in their respective original publications to ensure a fair and reliable comparison.

4.2. Multi-Task Evaluation Results for Disease Recognition

This study conducts a comparative analysis of several representative models in object detection and segmentation, aiming to verify the proposed method's capability in identifying disease regions on leafy vegetables. The evaluation relies on multiple performance indicators, including precision, recall, classification accuracy, and mAP calculated at IoU thresholds of 50% (mAP@50) and 75% (mAP@75), providing a holistic measurement of both localization precision and general robustness.

The experimental outcomes are reported in Table 3 and Table 4, which summarize the effectiveness of each model on the detection and segmentation tasks. The results suggest that architectures with enhanced multi-scale feature extraction, such as YOLOv10 and TinySegformer, outperform earlier baselines. Importantly, the proposed approach consistently surpasses all benchmarks, especially in complex environments and scenarios with limited training data, highlighting its practical advantages and generalization strength.

In the object detection evaluation, YOLOv9 surpassed DETR by a margin of 2.94% on both mAP@50 and mAP@75, reflecting a clear performance uplift. Building on this, YOLOv10 demonstrated a strong balance between precision and recall, achieving mAP scores of 85.26% at 50% IoU and 84.28% at 75% IoU. These gains stem from its refined detection head and enhanced multi-scale feature integration, which notably improve the identification of small-scale targets. Continuing this progression, YOLOv11 sustained high accuracy in detection scenarios.

Advancing beyond existing frameworks, the method proposed in this work reached 91.07% and 90.25% for mAP@50 and mAP@75, respectively—exceeding all comparative models. Its superior performance is largely due to the integration of a prototype-driven feature encoder and attention-guided refinement module. These components boost the distinctiveness of extracted representations and intensify focus on semantically critical regions, especially in scenes with dense background interference or limited annotated data, thereby affirming its robust generalization capability.

Within the segmentation evaluation, TinySegformer exhibited strong performance in capturing global contextual dependencies, attributed to its Transformer-based design. It achieved mAP scores of 89.82% at 50% IoU and 88.83% at 75% IoU. However, the method introduced in this study yielded even better outcomes, recording 90.80% for both mAP@50 and mAP@75.

Furthermore, the proposed model reported an accuracy of 93.77%, recall of 90.80%, and precision of 91.79%. These metrics validate the model’s strength in improving segmentation fidelity and its resilience under adverse conditions, such as cluttered scenes and category overlap—challenges commonly encountered in agricultural disease imagery.

From the modeling standpoint, DETR employs self-attention to capture holistic feature interactions across the image. Nonetheless, its detection capability is hindered by substantial computational overhead and inadequate handling of small-scale objects, limiting its overall applicability in practical detection scenarios. In contrast, the YOLO family improves inference efficiency and detection accuracy through architectural simplification and multi-scale feature pyramid enhancement. TinySegformer integrates a lightweight Transformer backbone with multi-level receptive field fusion strategies, offering certain advantages in contextual modeling, though its performance remains limited by the spatial resolution constraints inherent to multi-head attention.

In contrast to previous approaches, the method introduced here integrates prototype-based representation learning and attention-driven refinement to reinforce intra-class consistency while maximizing inter-class distinction within the embedding space. This structural design strengthens the model’s ability to differentiate complex and texture-rich disease types. Additionally, a prototype-aware loss formulation is employed to explicitly minimize the distance between feature vectors and their respective class centers during optimization, thereby enhancing generalization performance, particularly in data-constrained environments.
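A prototype-aware loss of this kind can be sketched as a center-loss-style penalty. The sketch below assumes that each class prototype is the mean embedding of that class’s samples and that the penalty is the mean squared Euclidean distance between each embedding and its assigned prototype. The helper names `class_prototypes` and `prototype_loss` are hypothetical, and the exact formulation used in ProtoLeafNet (distance metric, weighting, any inter-class term) is not specified here.

```python
import numpy as np

def class_prototypes(features, labels, num_classes):
    """Hypothetical helper: mean embedding per class (rows of zeros for absent classes)."""
    protos = np.zeros((num_classes, features.shape[1]))
    for c in range(num_classes):
        mask = labels == c
        if mask.any():
            protos[c] = features[mask].mean(axis=0)
    return protos

def prototype_loss(features, labels, prototypes):
    """Assumed form: mean squared Euclidean distance from each embedding to its class prototype."""
    diffs = features - prototypes[labels]
    return float(np.mean(np.sum(diffs ** 2, axis=1)))

# Toy embeddings: two classes, two samples each, in a 2-D embedding space.
features = np.array([[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0]])
labels = np.array([0, 0, 1, 1])
protos = class_prototypes(features, labels, num_classes=2)  # [[2, 0], [0, 3]]
loss = prototype_loss(features, labels, protos)
print(loss)  # 1.0: every sample lies at distance 1 from its class center
```

Minimizing this term pulls embeddings toward their class centers (intra-class compactness); a practical variant would usually learn the prototypes jointly with the network rather than recomputing batch means.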

In summary, the presented approach delivers leading results across object detection and semantic segmentation benchmarks, highlighting its versatility and practical value in the context of agricultural image analysis.

4.3. Comparative Ablation of Attention Mechanisms

This investigation explores how various attention mechanisms influence model performance, with a specific focus on their effectiveness in detecting and segmenting diseases in leafy vegetables. Three attention approaches were evaluated: the traditional self-attention mechanism, the CBAM (Convolutional Block Attention Module), and the newly proposed prototype-guided attention mechanism. Each method was assessed for its contribution to detection accuracy and segmentation quality within the agricultural disease analysis task.

Using precision, recall, classification accuracy, and mAP computed at IoU thresholds of 50% and 75% as indicators, this study investigates the impact of the three attention mechanisms on feature expressiveness, detection robustness, and overall recognition performance. The outcomes, presented in Table 5, highlight clear performance variations across the three evaluated strategies.
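The IoU threshold determines when a predicted region counts as a correct match, which is why mAP@75 is stricter than mAP@50. The minimal sketch below uses a hypothetical `box_iou` helper for axis-aligned boxes and shows a single prediction that is accepted at 50% IoU but rejected at 75% IoU. It illustrates only the matching criterion, not the confidence ranking and precision-recall averaging that together produce mAP.

```python
def box_iou(a, b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Ground-truth lesion box and a prediction shifted 2 pixels to the right.
gt = (0, 0, 10, 10)
pred = (2, 0, 12, 10)
iou = box_iou(gt, pred)  # intersection 80, union 120, so IoU = 2/3

for threshold in (0.50, 0.75):
    # The same prediction is a true positive at 0.50 but a false positive at 0.75.
    print(threshold, "TP" if iou >= threshold else "FP")
```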

As shown in Table 5, the ablation study on different attention mechanisms demonstrates that the proposed prototype attention mechanism outperforms the baseline methods across all evaluation metrics, exhibiting superior feature focusing and discriminative capabilities. Specifically, the standard self-attention mechanism yielded relatively poor performance, with a precision of 72.85%, recall of 69.86%, accuracy of 71.86%, mAP@50 of 71.86%, and mAP@75 of 70.86%. Although self-attention is effective in modeling global dependencies, it is prone to interference from redundant information in scenarios with complex backgrounds or subtle inter-class differences. This reduces the selectivity of feature extraction and degrades overall performance.
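The global-dependency behavior described above follows from how single-head scaled dot-product self-attention weights every spatial position against every other. The sketch below flattens a toy feature map into tokens; the random projection matrices stand in for learned weights and are purely illustrative. Because each row of softmax weights sums to one over all positions, nothing in the formulation by itself prevents background positions from receiving substantial weight, which is consistent with the interference discussed above.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention.

    tokens: (N, d) flattened spatial features; wq, wk, wv: (d, d_k) projections.
    """
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])  # (N, N): every position scores every other
    weights = softmax(scores, axis=-1)      # each row is a distribution over all positions
    return weights @ v, weights

rng = np.random.default_rng(0)
h, w, d = 4, 4, 8
feature_map = rng.standard_normal((h, w, d))
tokens = feature_map.reshape(h * w, d)      # 16 spatial tokens of dimension 8
wq, wk, wv = (rng.standard_normal((d, d)) for _ in range(3))
out, attn = self_attention(tokens, wq, wk, wv)
print(out.shape, attn.shape)  # (16, 8) (16, 16)
```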

Conversely, the Convolutional Block Attention Module (CBAM), which integrates spatial and channel-wise attention components, notably strengthens the model’s resilience and feature discrimination capability. This enhancement translated into consistent gains across evaluation criteria, yielding a precision of 84.83%, recall of 80.84%, accuracy of 82.83%, mAP@50 of 83.83%, and mAP@75 of 82.83%.
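For reference, a simplified CBAM forward pass can be written in NumPy: channel attention applies a shared two-layer MLP to average- and max-pooled channel descriptors, and spatial attention convolves the channel-wise mean and max maps with a 7 × 7 kernel. This is a sketch under stated assumptions, not the configuration used in the experiments: weights are random rather than learned, biases and normalization are omitted, and the reduction ratio `r = 4` is an arbitrary choice.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """x: (C, H, W). Shared two-layer MLP on average- and max-pooled channel descriptors."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU hidden layer, no biases in this sketch
    avg = x.mean(axis=(1, 2))
    mx = x.max(axis=(1, 2))
    scale = sigmoid(mlp(avg) + mlp(mx))      # one weight in (0, 1) per channel
    return x * scale[:, None, None]

def spatial_attention(x, kernel):
    """kernel: (2, k, k), applied to the stacked [channel-mean, channel-max] maps."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(desc, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H x W
    height, width = x.shape[1:]
    logits = np.empty((height, width))
    for i in range(height):
        for j in range(width):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return x * sigmoid(logits)[None, :, :]   # one weight in (0, 1) per location

def cbam(x, w1, w2, kernel):
    # CBAM applies channel attention first, then spatial attention.
    return spatial_attention(channel_attention(x, w1, w2), kernel)

rng = np.random.default_rng(0)
c, h, w, r = 16, 8, 8, 4  # r: channel reduction ratio (assumed)
x = rng.standard_normal((c, h, w))
w1 = rng.standard_normal((c // r, c)) * 0.1
w2 = rng.standard_normal((c, c // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
y = cbam(x, w1, w2, kernel)
print(y.shape)  # (16, 8, 8)
```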

Remarkably, the introduced prototype-guided attention module delivered the highest overall performance, achieving 94.81% in precision, 91.82% in recall, 92.81% in accuracy, and identical mAP values of 91.82% at both 50% and 75% IoU thresholds. This mechanism integrates prototype vector modeling with an adaptive weighting strategy, enabling the extraction of representative features for each disease class at the global semantic level. It dynamically emphasizes relevant regions in local feature maps via attention weighting, thereby enhancing the response to disease-affected areas while effectively suppressing background noise.
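One plausible reading of prototype-guided attention is sketched below: each spatial feature is compared with every class prototype by cosine similarity, the best match per location is turned into a gating weight, and the feature map is rescaled by that weight. The function name `prototype_attention`, the max-over-classes rule, the sigmoid gating, and the temperature `tau` are all assumptions made for illustration; the adaptive weighting strategy used in ProtoLeafNet may differ.

```python
import numpy as np

def prototype_attention(feats, prototypes, tau=0.1):
    """Hypothetical prototype-guided spatial gating.

    feats: (C, H, W) feature map; prototypes: (K, C), one vector per disease class.
    Returns the reweighted features and the (H, W) attention map.
    """
    c, h, w = feats.shape
    tokens = feats.reshape(c, h * w).T  # (HW, C)
    tokens_n = tokens / (np.linalg.norm(tokens, axis=1, keepdims=True) + 1e-8)
    protos_n = prototypes / (np.linalg.norm(prototypes, axis=1, keepdims=True) + 1e-8)
    sim = tokens_n @ protos_n.T          # (HW, K) cosine similarity to each prototype
    score = sim.max(axis=1)              # best-matching prototype per location
    attn = 1.0 / (1.0 + np.exp(-score / tau))  # sigmoid gate; small tau sharpens it
    attn_map = attn.reshape(h, w)
    return feats * attn_map[None, :, :], attn_map

# Toy example: 2 channels, 1 x 2 map; the left location matches the prototype,
# the right location is orthogonal to it (e.g. background).
feats = np.array([[[1.0, 0.0]],
                  [[0.0, 1.0]]])      # shape (2, 1, 2)
prototypes = np.array([[1.0, 0.0]])   # one hypothetical "lesion" prototype
out, attn_map = prototype_attention(feats, prototypes)
print(attn_map)  # left location close to 1.0, right location exactly 0.5
```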

Overall, the prototype attention mechanism demonstrates superior performance in both detection and segmentation tasks, confirming its strong adaptability to the challenges of high-variance backgrounds, complex textures, and inter-class feature overlap in vegetable disease imagery. These findings highlight its potential as an effective solution for intelligent disease recognition in precision agriculture applications.

4.4. Ablation Study on Different Loss Functions

To assess how different loss formulations affect model behavior in detecting and segmenting diseases on leafy vegetables, an ablation analysis was performed. The analysis compares three loss formulations: two widely adopted ones, namely conventional cross-entropy and focal loss designed to handle class imbalance, and the prototype-oriented loss proposed in this work.

A comprehensive evaluation was carried out using several performance indicators, including precision, recall, accuracy, and mAP, assessed at IoU thresholds of 50% (mAP@50) and 75% (mAP@75). This investigation explores how various loss functions influence model convergence behavior, prediction reliability, and general robustness. As detailed in Table 6, experimental outcomes indicate that the prototype-guided loss consistently delivers the best performance, surpassing both the conventional cross-entropy and focal losses across all measured dimensions.

The experimental outcomes reveal that the model trained with the cross-entropy loss performs relatively poorly across the evaluation metrics, with mAP@50 and mAP@75 values of 65.87% and 64.87%, respectively. This suggests that, despite its extensive use in multi-class classification tasks, cross-entropy loss struggles to model the discriminative boundaries between classes in scenarios involving fine-grained recognition and class imbalance.

In contrast, the introduction of the focal loss leads to a substantial improvement in model performance, with both mAP@50 and mAP@75 increasing to 80.84%. This enhancement can be attributed to the focal loss’s emphasis on hard-to-classify samples, which alleviates the performance degradation caused by class imbalance.
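The effect of focal loss on easy versus hard samples can be seen by comparing it with cross-entropy for a single prediction. This sketch uses the standard focal-loss form, where `p_true` is the predicted probability of the ground-truth class and `gamma` is the focusing parameter; the values `gamma = 2` and `alpha = 1` are illustrative and are not the settings used in this study.

```python
import math

def cross_entropy(p_true):
    """Cross-entropy for one sample, given the predicted probability of the true class."""
    return -math.log(p_true)

def focal_loss(p_true, gamma=2.0, alpha=1.0):
    """Standard focal-loss form: cross-entropy scaled by alpha * (1 - p_true) ** gamma."""
    return -alpha * (1.0 - p_true) ** gamma * math.log(p_true)

for p in (0.9, 0.3):  # an easy sample and a hard sample
    ce, fl = cross_entropy(p), focal_loss(p)
    print(f"p_t={p}: CE={ce:.4f}, focal={fl:.4f}, ratio={fl / ce:.2f}")
```

With `alpha = 1`, the ratio of focal loss to cross-entropy is (1 - p_t)^gamma, so an easy sample (p_t = 0.9) keeps only 1% of its cross-entropy loss while a hard sample (p_t = 0.3) keeps 49%. Training signal therefore shifts toward the hard, often minority-class, examples.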

Furthermore, the prototype-based loss function yielded the highest performance across all evaluation indicators, achieving mAP scores of 91.82% at both the 50% and 75% IoU thresholds. By explicitly regulating the embedding-to-prototype distance during training, this loss formulation markedly improves the model’s capability to differentiate between low-frequency categories and reduces the impact of irrelevant background features. Consequently, it leads to accuracy gains in both classification and segmentation tasks. This prototype-driven strategy introduces an effective mechanism for strengthening feature separability under complex visual conditions.

4.5. Specific Case Discussions

This experiment assesses how effectively the proposed model identifies various plant disease types, with particular emphasis on challenging conditions such as cluttered visual backgrounds and feature-level ambiguity, in order to expose both its strengths and its potential shortcomings in fine-grained disease recognition. Detection outcomes for multiple disease types, namely downy mildew, gray mold, black rot, sclerotinia, and brown spot, are comparatively analyzed, as summarized in Table 7 and Table 8.

The combined results of the detection and segmentation tasks indicate that the proposed model has considerable potential for application in aquaponic systems, particularly for identifying diseases affecting various leafy vegetables. For instance, for spinach downy mildew, the model achieved an accuracy of 94.56%, a recall of 91.61%, and an mAP@50 of 92.59% in object detection. In the segmentation task, the precision reached 97.80%, with mAP@50 and mAP@75 values of 94.47% and 93.47%, respectively. These results indicate that the model is highly capable of recognizing and segmenting diseases with clear lesion edges and distinct morphological features, making it suitable for early disease monitoring in aquaponic systems.

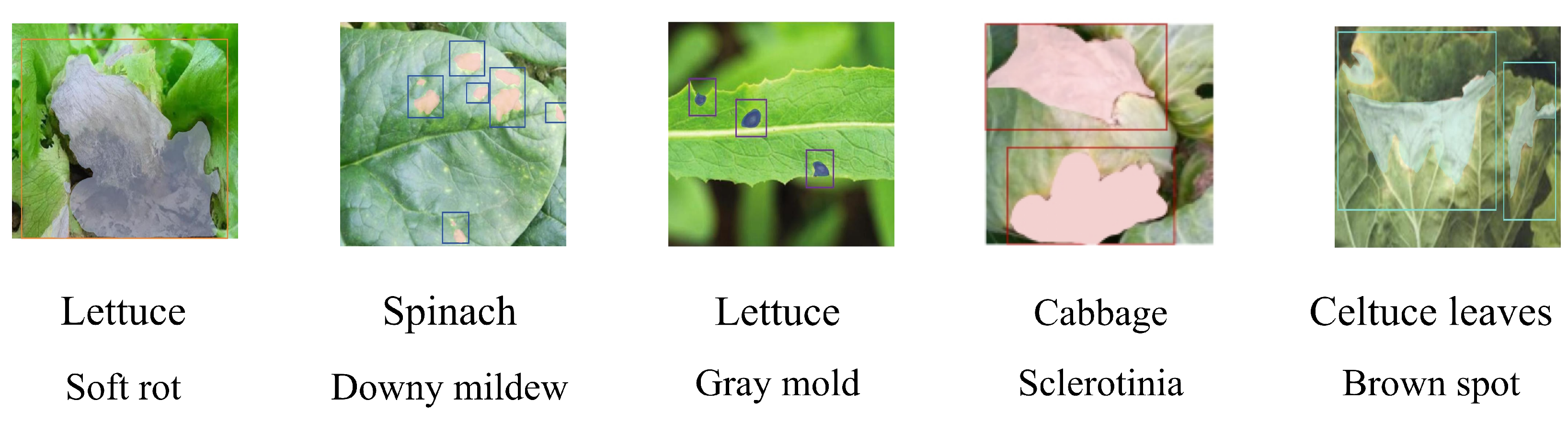

In contrast, for diseases with less prominent features or stronger background interference, such as cabbage sclerotinia and lettuce leaf brown spot, the model’s mAP@75 values in both detection and segmentation tasks were slightly lower (88.65% and 90.45%, respectively). This suggests that the model still faces challenges in handling lesions with blurred textures, diffuse spots, or unclear boundaries. It also reflects the heterogeneous distribution of different diseases in the image feature space, which places stricter demands on inter-class differentiation. To further illustrate these findings, we visualized representative detection results, as shown in Figure 5.

Figure 5 presents representative qualitative detection results for five common leafy vegetable diseases: soft rot and gray mold on lettuce, downy mildew on spinach, sclerotinia on cabbage, and brown spot on celtuce leaves. The predicted bounding boxes accurately highlight symptomatic areas of varying shapes, colors, and textures, demonstrating the model’s robustness across diverse species and disease manifestations. These visual results complement the quantitative metrics by confirming that the proposed method can localize and classify disease symptoms consistently, even under variations in leaf morphology and lesion appearance, thereby reinforcing the overall reliability of the detection framework.

5. Discussion

5.1. Advantages

ProtoLeafNet strengthens feature discrimination and attention to key regions in disease images by introducing a prototype-guided attention mechanism and a prototype loss function. The model delivers strong results in both detection and segmentation tasks, exhibiting notable resilience and generalization in agricultural environments characterized by visual clutter and overlapping disease categories. In the evaluation, it obtains mAP scores of 91.07% at 50% IoU and 90.25% at 75% IoU for object detection, and an mAP@75 of 90.80% for the delineation of pathological areas. Compared with mainstream models such as YOLOv10 and TinySegformer, ProtoLeafNet shows clear advantages in boundary discrimination and feature differentiation. Its multi-task joint training strategy further promotes feature sharing and collaborative optimization, substantially enhancing the model’s overall disease recognition capability. Additionally, the prototype-enhanced mechanism improves the model’s interpretability and semantic consistency, making it particularly suitable for fine-grained recognition tasks involving multiple co-existing diseases, thus providing theoretical and algorithmic support for smart agricultural disease monitoring systems.

Given its intended use in precision agriculture and aquaponic environments, we further analyzed ProtoLeafNet’s computational efficiency and deployment feasibility on resource-constrained edge devices. The model comprises approximately 38.2 million parameters and requires 144 MB in storage (FP32). Inference tests on an NVIDIA RTX 4090D GPU showed an average processing time of 28.4 ms per 224 × 224 image and a peak memory usage of 1.7 GB. While slightly heavier than TinySegformer, ProtoLeafNet offers superior accuracy and robustness, and remains compatible with edge AI accelerators, making it practical for real-time deployment in smart greenhouses, vertical farms, and other embedded agricultural systems.
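The reported deployment figures can be sanity-checked with simple arithmetic. The sketch below estimates weight storage from the parameter count (weights only, ignoring activations, optimizer state, and file-format overhead) and converts the per-image latency into throughput. The FP16 and INT8 sizes are hypothetical estimates for quantized deployment, not measured results.

```python
def weight_storage_mb(num_params, bytes_per_param):
    """Weights-only storage in decimal megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6

NUM_PARAMS = 38.2e6  # reported parameter count
LATENCY_MS = 28.4    # reported mean latency per 224 x 224 image on an RTX 4090D

fp32_mb = weight_storage_mb(NUM_PARAMS, 4)
fp32_mib = NUM_PARAMS * 4 / 2**20
throughput = 1000.0 / LATENCY_MS  # images per second, assuming one image at a time

# Hypothetical weights-only sizes after quantization (not measured)
fp16_mb = weight_storage_mb(NUM_PARAMS, 2)
int8_mb = weight_storage_mb(NUM_PARAMS, 1)

print(f"FP32: {fp32_mb:.1f} MB ({fp32_mib:.1f} MiB), FP16: {fp16_mb:.1f} MB, INT8: {int8_mb:.1f} MB")
print(f"Throughput: {throughput:.1f} images/s")
```

The FP32 estimate of about 152.8 MB (roughly 145.7 MiB) is close to the reported 144 MB; the exact figure depends on the unit convention and on how the checkpoint is serialized. A latency of 28.4 ms corresponds to roughly 35 images per second. The 1.7 GB peak memory is far larger than the weights alone because it also covers activations and framework overhead.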

5.2. Challenges and Limitations

Despite the strong overall performance of ProtoLeafNet, several limitations were observed during experimental evaluation. First, the model exhibits performance degradation under extreme lighting variations, such as overexposure or severe shadows, which can distort disease color and texture cues. Second, in cases where multiple leaves overlap significantly, occlusion may lead to missegmentation or missed detections, particularly for small or early-stage lesions. Additionally, some visually ambiguous symptoms—such as early powdery mildew versus dust or leaf aging effects—may challenge the model’s feature differentiation capacity. These limitations suggest that while ProtoLeafNet generalizes well across most conditions, its performance may be affected in low-quality image settings or ambiguous disease scenarios. Future work will consider incorporating illumination-invariant pre-processing and occlusion-aware attention mechanisms to further improve robustness.

5.3. Future Perspectives

Future research can further expand and optimize the findings of this study in several key areas. Firstly, it would be beneficial to combine the prototype mechanism with a dynamic update strategy to enable adaptive updates of the prototype vectors during both training and inference phases. This would enhance the model’s ability to adapt to diverse agricultural environments. Secondly, in terms of model architecture, exploring more lightweight Transformer variants or incorporating techniques such as knowledge distillation and sparse attention mechanisms could improve deployment efficiency and inference performance, facilitating its application in edge computing and IoT devices for agricultural monitoring. Additionally, multi-modal fusion approaches could be further explored, such as integrating image data with environmental variables like dissolved oxygen and pH values from aquaponic systems, to build a multi-source perception disease recognition model that handles visually complex cases. Finally, while this model demonstrates promising performance, there is still room for improvement in its generalization ability and domain adaptability. Future work could employ strategies like distribution shift mitigation to increase this model’s transferability across regions and crops, thereby laying the foundation for building more universally applicable smart agricultural vision systems to serve aquaponic systems.
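A dynamic prototype update of the kind proposed here is often implemented as an exponential moving average (EMA). The sketch below is a hypothetical illustration, not part of ProtoLeafNet: the function name, the momentum value, and the choice to leave classes absent from a batch unchanged are all assumptions. During inference, the labels would have to come from confident predictions (pseudo-labels), which introduces a risk of prototype drift that a real implementation would need to control.

```python
import numpy as np

def ema_update_prototypes(prototypes, features, labels, momentum=0.9):
    """Hypothetical EMA update: move each class prototype toward the mean embedding of
    that class in the current batch; classes absent from the batch are left unchanged."""
    updated = prototypes.copy()
    for c in np.unique(labels):
        batch_mean = features[labels == c].mean(axis=0)
        updated[c] = momentum * prototypes[c] + (1.0 - momentum) * batch_mean
    return updated

protos = np.array([[0.0, 0.0], [1.0, 1.0]])
feats = np.array([[1.0, 0.0], [3.0, 0.0]])
labels = np.array([0, 0])
new = ema_update_prototypes(protos, feats, labels, momentum=0.5)
# Class 0 moves halfway toward its batch mean (2, 0), giving (1, 0); class 1 is unchanged.
print(new)
```

A high momentum keeps prototypes stable against noisy batches, while a lower value lets them track environmental changes faster.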

To better contextualize the model’s technical performance within the framework of sustainable agriculture, we emphasize that the improvement in detection performance, from 85.26% for YOLOv10 to 91.07% for ProtoLeafNet, has practical implications beyond numerical gains. Higher accuracy in disease detection enables earlier and more precise identification of affected plants, allowing farmers to apply treatments only when and where necessary. This targeted intervention reduces excessive or preventive pesticide use, thereby lowering environmental toxicity and input costs. Furthermore, precise lesion segmentation supports quantification of disease severity, enabling informed decisions about culling, nutrient adjustment, or environmental control in hydroponic systems. By improving detection robustness under real-world conditions, ProtoLeafNet contributes to reducing crop losses due to late or missed diagnoses. Collectively, these outcomes align with sustainable agriculture goals by enhancing resource efficiency, minimizing ecological impact, and supporting high-yield, low-input production systems.

6. Conclusions

This paper proposed ProtoLeafNet, a model for identifying and segmenting diseases in green-leaf vegetables that integrates a prototype-guided attention mechanism, aiming to address the challenges of multi-disease recognition, complex backgrounds, and high sample diversity in agricultural systems like aquaponics.

In performance assessments, ProtoLeafNet attained 93.12% accuracy, alongside mAP scores of 91.07% at 50% IoU and 90.25% at 75% IoU for object detection. For segmentation, the model reached 90.80% at both mAP@50 and mAP@75, outperforming leading models such as YOLOv10 and TinySegformer across several key metrics. Specifically, for Spinacia oleracea infected with downy mildew, it recorded a detection mAP@50 of 92.59% and a segmentation precision of 97.80%. These findings underscore the model’s effectiveness in fine-grained feature targeting, detailed classification, and generalization across challenging visual conditions.

Moreover, ablation studies comparing different attention strategies and supervision signals revealed that the prototype-based attention mechanism markedly enhances focus on semantically important areas, improving mAP by roughly 8 to 9 points over CBAM and by about 20 points over standard self-attention. When contrasted with conventional cross-entropy loss, the prototype-guided loss displayed superior consistency and class discriminability, especially for subtle category distinctions. Collectively, these enhancements contribute to better learning under data scarcity and improve the model’s interpretability in practical deployments.

Despite the promising experimental results, ProtoLeafNet still faces several challenges in practical applications. Firstly, the expressiveness of the prototype vectors depends heavily on high-quality annotated data and a balanced class distribution. When some disease categories have very few samples or poor-quality labels, the model’s attention allocation may become skewed, degrading overall recognition performance. Secondly, while the Transformer module enhances the model’s semantic modeling and global perception, its substantial computational burden restricts its use on low-resource edge platforms and hinders real-time application on smart agricultural terminals. Furthermore, the current model relies primarily on a single visual modality. Under interference from factors such as lighting variations, leaf occlusion, or image blur, the model’s robustness and stability still have room for improvement.

Future research may explore strategies to enhance model flexibility and streamline its deployment in practical settings. One possible avenue is to incorporate a dynamic prototype update mechanism, allowing the prototype vectors to be self-adjusted during both training and inference phases, thereby enhancing the model’s response to real-time environmental changes. In terms of architecture, exploring more lightweight model designs, such as MobileViT or TinyFormer, combined with techniques like knowledge distillation or sparse attention mechanisms, could reduce inference overhead and meet the requirements for edge deployment. Additionally, multi-modal fusion of image data with environmental sensor data from aquaponic systems, such as water temperature, pH value, and dissolved oxygen concentration, could help construct a more comprehensive disease perception model and improve its understanding of complex agricultural environments. Finally, adopting meta-learning or domain adaptation strategies could enhance the model’s transferability across crop varieties, cultivation regions, and even across systems, thereby improving its generalization ability. With these improvements, ProtoLeafNet could become more practical and broadly applicable, providing stable and reliable technical support for efficient disease monitoring in smart agriculture, especially within aquaponic systems.