Joint Training Method for Assessing the Thermal Aging Health Condition of Oil-Immersed Power Transformers

Abstract

1. Introduction

- (1) A wide and deep model is proposed for transformer health condition assessment, employing joint training and unified optimization to enhance the learning capabilities of both components.

- (2) The joint training mechanism, facilitated by Markov chain Monte Carlo (MCMC) sampling, optimizes the parameters of the wide and deep components together. This integration ensures efficient learning of global feature interactions while also strengthening the model's ability to learn complex feature patterns.

- (3) The model offers the following specific advantages:

- (a) The wide component memorizes global feature interrelationships, while the deep neural network component, optimized through MCMC, generalizes well, enabling it to recognize intricate health patterns across different transformer conditions.

- (b) Jointly training both components significantly improves the overall accuracy of transformer thermal aging health assessments, offering a more reliable and robust tool for predictive maintenance and informed operational decision-making.
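The joint training idea in item (2) can be illustrated with a small sketch. It assumes a Wide & Deep layout in the style of Cheng et al. (2016), where the wide logits (a linear model on raw plus crossed features) and the deep logits (a one-hidden-layer network) are summed into a single softmax over the five health grades, and it uses a random-walk Metropolis sampler, one basic MCMC method, over the concatenated parameter vector of both parts. The hidden width `HIDDEN`, the crossed-feature map `phi`, the Gaussian prior width `prior_sd`, the proposal size `step`, and `burn_in` are all hypothetical choices; the paper's actual architecture, prior, and sampler settings are not given here.

```python
import math
import random
from itertools import combinations

K = 5        # health grades VG, G, M, B, VB
HIDDEN = 4   # hypothetical width of the single hidden layer in the deep part

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def phi(x):
    """Wide-part input: raw features plus pairwise crossed features (assumed)."""
    return list(x) + [a * b for a, b in combinations(x, 2)]

def n_params(n_in):
    """Length of the flat parameter vector shared by both parts."""
    d_wide = len(phi([0.0] * n_in))
    return K * d_wide + HIDDEN * (n_in + 1) + K * HIDDEN + K

def joint_logits(theta, x):
    """Wide logits + deep logits + shared bias, combined in one output layer."""
    pos = 0
    def take(n):
        nonlocal pos
        chunk = theta[pos:pos + n]
        pos += n
        return chunk
    f = phi(x)
    wide = [dot(take(len(f)), f) for _ in range(K)]
    hidden = []
    for _ in range(HIDDEN):
        w = take(len(x))
        b = take(1)[0]
        hidden.append(math.tanh(dot(w, x) + b))
    deep = [dot(take(HIDDEN), hidden) for _ in range(K)]
    bias = take(K)
    return [wi + di + bi for wi, di, bi in zip(wide, deep, bias)]

def log_softmax(z):
    m = max(z)
    lse = m + math.log(sum(math.exp(v - m) for v in z))
    return [v - lse for v in z]

def log_posterior(theta, data, prior_sd=1.0):
    """Gaussian prior on all weights + categorical log-likelihood of the grades."""
    log_prior = -0.5 * sum(t * t for t in theta) / prior_sd ** 2
    log_lik = sum(log_softmax(joint_logits(theta, x))[y] for x, y in data)
    return log_prior + log_lik

def metropolis(data, n_in, n_steps=2000, step=0.05, burn_in=1000, seed=0):
    """Random-walk Metropolis over the joint (wide + deep) parameter vector."""
    rng = random.Random(seed)
    theta = [rng.gauss(0.0, 0.1) for _ in range(n_params(n_in))]
    lp = log_posterior(theta, data)
    samples, accepted = [], 0
    for i in range(n_steps):
        proposal = [t + rng.gauss(0.0, step) for t in theta]
        lp_new = log_posterior(proposal, data)
        # Accept with probability min(1, exp(lp_new - lp)).
        if rng.random() < math.exp(min(0.0, lp_new - lp)):
            theta, lp = proposal, lp_new
            accepted += 1
        if i >= burn_in:
            samples.append(theta)
    return samples, accepted / n_steps

def predict_proba(samples, x):
    """Class probabilities averaged over the retained posterior samples."""
    total = [0.0] * K
    for theta in samples:
        probs = [math.exp(v) for v in log_softmax(joint_logits(theta, x))]
        total = [t + p for t, p in zip(total, probs)]
    return [t / len(samples) for t in total]

if __name__ == "__main__":
    # Placeholder normalized inputs (6 features) with grade indices 0..4.
    data = [([0.1, 0.1, 0.9, 0.1, 0.2, 0.1], 0),
            ([0.4, 0.3, 0.6, 0.3, 0.4, 0.2], 1),
            ([0.9, 0.8, 0.2, 0.9, 0.8, 0.9], 4)]
    samples, rate = metropolis(data, n_in=6)
    print(f"acceptance rate: {rate:.2f}")
    print([round(p, 3) for p in predict_proba(samples, data[0][0])])
```

Because every proposal perturbs the wide and deep weights simultaneously and is accepted or rejected against one shared posterior, neither part is trained in isolation. In practice, gradient-based samplers (for example Hamiltonian Monte Carlo) usually mix far better than this random walk for networks of realistic size.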

2. Framework and Method

2.1. Input Selection

- (1) Water dynamics:

- (a) Solubility and migration: As temperature increases, the solubility of water in transformer oil rises, disturbing the equilibrium distribution of water between the oil and the paper insulation. Initially, moisture may migrate from the paper into the oil, reducing the water content of the paper insulation. Over time, however, water accumulates in the oil, degrading its insulating properties and ultimately compromising the effectiveness of both the oil and the paper insulation.

- (b) Aging acceleration: At lower temperatures, the solubility of water in oil decreases, driving water back into the paper insulation. This accelerates aging of the insulation material and may raise the risk of localized electrical discharges.

- (2) Acidification:

- (3) Furfural formation:

- (4) Direct electrical property impacts:

- (a) Dielectric breakdown voltage (DBV): A rise in temperature reduces oil viscosity, which affects the oil's insulating behavior and lowers the DBV. Elevated temperatures also increase the solubility of water in the oil and accelerate acidification; both effects reduce the oil's dielectric strength and thereby increase the risk of electrical breakdown.

- (b) Dissipation factor (DF): Higher temperatures increase the oil's electrical conductivity and modify polarization processes, raising the dissipation factor. A higher DF means greater energy loss in alternating electric fields, and elevated temperatures also speed up chemical reactions that produce polar molecules, further degrading insulation quality.
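The temperature dependence in item (1) is often quantified through the relative saturation of the oil: the measured water content divided by the oil's saturation limit at the current temperature. A minimal sketch follows, assuming an empirical Arrhenius-type solubility law of the form log10(Ws) = A - B/T. The constants `A` and `B` are values commonly quoted for new mineral oil and are an assumption here, not parameters from this paper; they differ with oil type and degree of aging.

```python
import math

# Assumed empirical constants for log10(Ws) = A - B / T (T in kelvin).
# These are values commonly quoted for new mineral transformer oil; they are
# not taken from the paper and vary with oil type and aging.
A = 7.0895
B = 1567.0

def water_solubility_ppm(temp_c):
    """Saturation water content of the oil (ppm) at temp_c degrees Celsius."""
    return 10 ** (A - B / (temp_c + 273.15))

def relative_saturation(water_ppm, temp_c):
    """Measured water content as a fraction of the saturation limit."""
    return water_ppm / water_solubility_ppm(temp_c)

if __name__ == "__main__":
    water = 21.7  # ppm, sample 1 of the data table in this article
    for t in (20, 40, 60, 80):
        print(f"{t:>3} degC: Ws = {water_solubility_ppm(t):6.1f} ppm, "
              f"RS = {relative_saturation(water, t):.2f}")
```

For a fixed water content, relative saturation falls as the oil heats and rises as it cools. This matches item (b): on cooling, the oil can hold less water, and the excess tends to move back into the paper.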

2.2. Wide and Deep Framework

2.3. Wide Part

- k = 1 corresponds to VG.

- k = 2 corresponds to G.

- k = 3 corresponds to M.

- k = 4 corresponds to B.

- k = 5 corresponds to VB.
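With the five grades indexed by k, the wide part can be read as a multinomial linear model that outputs one logit per grade. The sketch below assumes the generalized linear form used in the Wide & Deep architecture of Cheng et al. (2016), applied to raw inputs plus pairwise cross-product features, followed by a softmax over k = 1..5. The function names and the choice of all pairwise products as crossed features are hypothetical; the exact feature crosses used in this paper are not given here.

```python
import math
from itertools import combinations

CLASSES = ["VG", "G", "M", "B", "VB"]  # k = 1..5, as listed above

def cross_product_features(x):
    """Raw features followed by all pairwise products x_i * x_j (i < j).

    Hypothetical transformation: the paper's exact crossed features are not
    stated in this section.
    """
    return list(x) + [a * b for a, b in combinations(x, 2)]

def softmax(z):
    m = max(z)  # shift by the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def wide_predict_proba(x, weights, bias):
    """P(k | x) = softmax_k(w_k . phi(x) + b_k) for the five grades.

    weights: one weight vector per grade, each of length len(phi(x)).
    """
    phi = cross_product_features(x)
    logits = [sum(w * f for w, f in zip(w_k, phi)) + b_k
              for w_k, b_k in zip(weights, bias)]
    return softmax(logits)

if __name__ == "__main__":
    x = [0.5, 0.2, 0.1, 0.4, 0.3, 0.6]     # placeholder normalized inputs
    d = len(cross_product_features(x))      # 6 raw + 15 crossed = 21
    weights = [[0.0] * d for _ in CLASSES]  # untrained: all-zero weights
    bias = [0.0] * len(CLASSES)
    probs = wide_predict_proba(x, weights, bias)
    print(dict(zip(CLASSES, probs)))        # uniform 0.2 per grade
```

The crossed terms let a purely linear model "memorize" specific feature co-occurrences (for example, high water together with high acidity), which is the role the contributions list assigns to the wide component.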

2.4. Deep Part

2.5. Wide and Deep Model Uses a Joint Method

3. Experiment Design and Results

3.1. Data Preprocessing

- (1)

- Raw electricity consumption data:

- (2)

- Data augmentation:

3.2. Experimental Settings

3.3. Joint Prediction Results

- (1)

- Joint weight determinations:

- (2)

- Case analysis and prediction accuracy:

4. Comparative Analysis

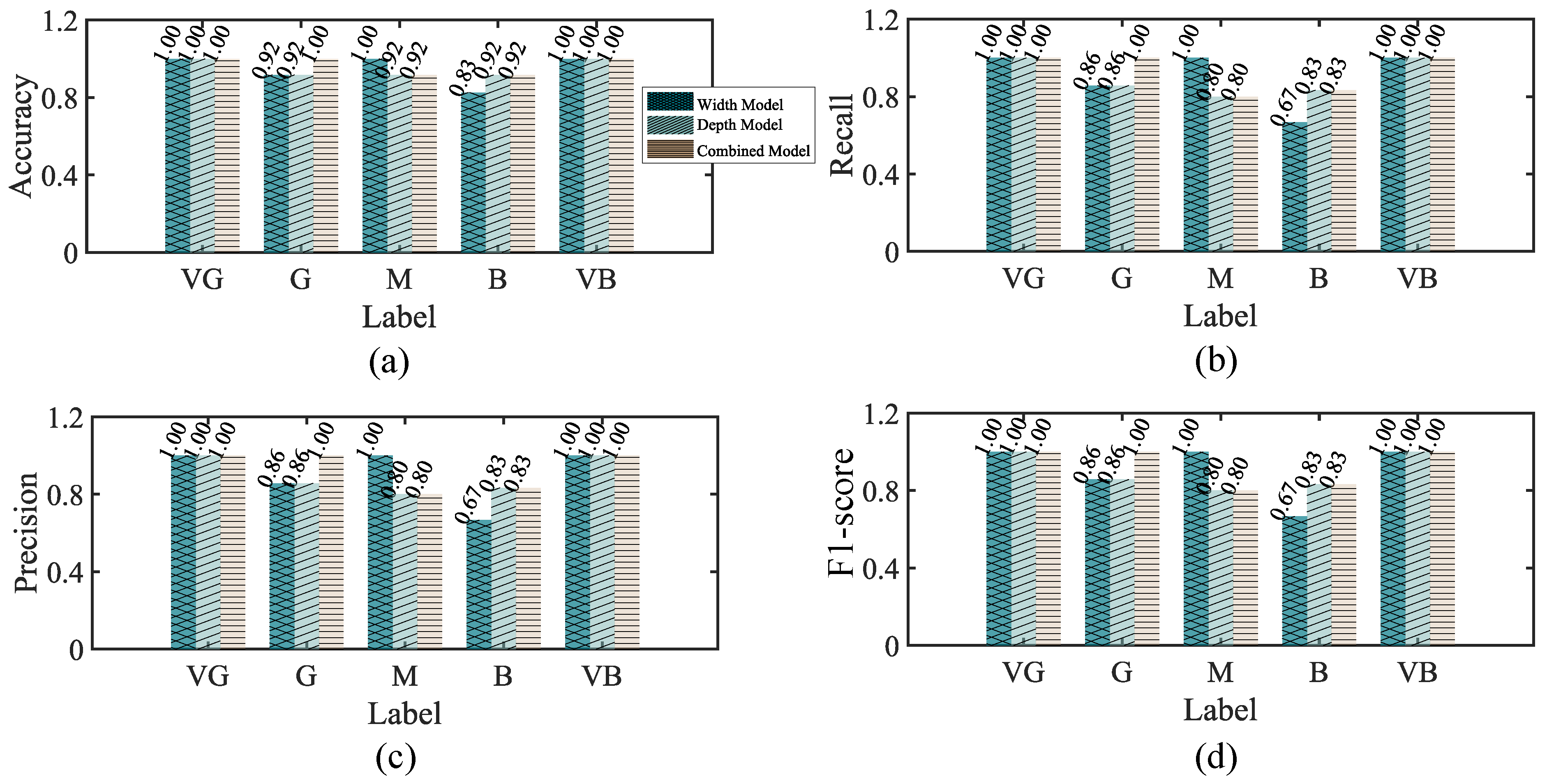

4.1. Performance Comparison of Independent Wide and Deep Models

4.2. Advantages of the Joint Wide and Deep Model

4.3. Detailed Confusion Matrix Analysis

4.4. Performance Metrics
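For a five-grade classifier such as this one, the confusion matrix and the usual per-class metrics can be computed as in the following minimal sketch. Rows are true grades and columns are predicted grades; undefined ratios (no predictions or no samples for a grade) are reported as 0.0, which is a convention assumed here. The labels in the usage example are hypothetical and are not results from this study.

```python
CLASSES = ["VG", "G", "M", "B", "VB"]

def confusion_matrix(y_true, y_pred, labels=CLASSES):
    """cm[i][j] counts samples of true grade i predicted as grade j."""
    index = {lab: i for i, lab in enumerate(labels)}
    cm = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        cm[index[t]][index[p]] += 1
    return cm

def per_class_metrics(cm, labels=CLASSES):
    """(precision, recall, F1) per grade; 0.0 where a ratio is undefined."""
    n = len(labels)
    out = {}
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, truly other
        fn = sum(cm[k]) - tp                       # truly k, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        out[labels[k]] = (precision, recall, f1)
    return out

def accuracy(cm):
    total = sum(map(sum, cm))
    return sum(cm[k][k] for k in range(len(cm))) / total if total else 0.0

if __name__ == "__main__":
    y_true = ["G", "G", "B", "VG", "M"]  # hypothetical labels
    y_pred = ["G", "M", "B", "VG", "M"]
    cm = confusion_matrix(y_true, y_pred)
    for label, row in zip(CLASSES, cm):
        print(label, row)
    print("accuracy:", accuracy(cm))
    print(per_class_metrics(cm))
```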

4.5. Performance Evaluation Using ROC Curve Analysis
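For a multi-class health assessment, ROC analysis is commonly done one-vs-rest: each grade in turn is the positive class, and that grade's predicted probability is the score. The sketch below is a minimal, assumption-level implementation (equal scores are grouped into a single ROC step, and the area is computed with the trapezoidal rule); it is not necessarily the procedure used to produce the ROC curves in this paper, and the probabilities in the usage example are hypothetical.

```python
def roc_points(scores, positives):
    """(FPR, TPR) points of a one-vs-rest ROC curve.

    scores[i] is the predicted probability of the positive grade for sample i;
    positives[i] is True if sample i truly belongs to that grade.
    """
    n_pos = sum(positives)
    n_neg = len(positives) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    ranked = sorted(zip(scores, positives), key=lambda sp: -sp[0])
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Count every sample tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points

def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

def one_vs_rest_auc(prob_rows, y_true, labels):
    """AUC per grade; grades absent from (or covering all of) y_true are skipped."""
    result = {}
    for k, label in enumerate(labels):
        positives = [t == label for t in y_true]
        if 0 < sum(positives) < len(positives):
            scores = [row[k] for row in prob_rows]
            result[label] = auc(roc_points(scores, positives))
    return result

if __name__ == "__main__":
    labels = ["VG", "G", "M", "B", "VB"]
    # Hypothetical predicted probabilities for four samples (rows sum to 1).
    probs = [[0.7, 0.2, 0.1, 0.0, 0.0],
             [0.1, 0.6, 0.2, 0.1, 0.0],
             [0.0, 0.3, 0.5, 0.2, 0.0],
             [0.0, 0.1, 0.2, 0.6, 0.1]]
    print(one_vs_rest_auc(probs, ["VG", "G", "M", "B"], labels))
```

An AUC of 1.0 means every sample of a grade is scored above every sample of the other grades, and 0.5 corresponds to chance-level ranking.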

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Number | Parameter |
|---|---|
| 1 | Water (ppm) |
| 2 | Acidity (mg KOH/g) |
| 3 | DBV (kV) |
| 4 | DF (%) |
| 5 | TDCG (ppm) |
| 6 | Furan (ppm) |

| No. | Water (ppm) | Acidity (mg KOH/g) | TDCG (ppm) | Furan (ppm) | DBV (kV) | DF (%) | HI | Label |
|---|---|---|---|---|---|---|---|---|
| 1 | 21.7 | 0.024 | 483 | 0.86 | 32.5 | 0.075 | 0.377 | G |
| 2 | 26.9 | 0.098 | 254 | 0.65 | 40.5 | 0.894 | 0.334 | G |
| 3 | 14.5 | 0.033 | 78 | 0.26 | 58 | 0.14 | 0.29 | G |
| 4 | 21.2 | 0.226 | 215 | 5.53 | 48.7 | 0.424 | 0.7 | B |
| 5 | 10 | 0.01 | 126 | 0.06 | 75 | 0.111 | 0.102 | VG |
| 6 | 15.5 | 0.075 | 38 | 0.53 | 71 | 0.143 | 0.274 | G |
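Before the six oil-test parameters enter a model, they are usually brought to a common scale, since TDCG is in the hundreds of ppm while acidity is in hundredths of mg KOH/g. A minimal sketch, assuming per-column min-max scaling (a common choice, not confirmed as the preprocessing used in Section 3.1), applied to the six sample rows in the table above with columns kept in table order. No direction adjustment is made, so for DBV a higher scaled value still means better oil, whereas for the other columns it means worse.

```python
# The six sample rows from the table above, columns in table order:
# Water (ppm), Acidity (mg KOH/g), TDCG (ppm), Furan (ppm), DBV (kV), DF (%)
SAMPLES = [
    [21.7, 0.024, 483, 0.86, 32.5, 0.075],
    [26.9, 0.098, 254, 0.65, 40.5, 0.894],
    [14.5, 0.033, 78, 0.26, 58, 0.14],
    [21.2, 0.226, 215, 5.53, 48.7, 0.424],
    [10, 0.01, 126, 0.06, 75, 0.111],
    [15.5, 0.075, 38, 0.53, 71, 0.143],
]
LABELS = ["G", "G", "G", "B", "VG", "G"]

def min_max_scale(rows):
    """Scale every column to [0, 1] using that column's min and max."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0
             for v, lo, hi in zip(row, lows, highs)]
            for row in rows]

if __name__ == "__main__":
    for row, label in zip(min_max_scale(SAMPLES), LABELS):
        print(label, [round(v, 3) for v in row])
```

With a real training set, the column minima and maxima should be computed on the training split only and then reused for validation and test samples, so that no information leaks from the evaluation data.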


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, C.; Ruan, J.; Deng, Y.; Xie, Y. Joint Training Method for Assessing the Thermal Aging Health Condition of Oil-Immersed Power Transformers. Sustainability 2025, 17, 7218. https://doi.org/10.3390/su17167218


Zhang, Chen, Jiangjun Ruan, Yongqing Deng, and Yiming Xie. 2025. "Joint Training Method for Assessing the Thermal Aging Health Condition of Oil-Immersed Power Transformers" Sustainability 17, no. 16: 7218. https://doi.org/10.3390/su17167218
