Abstract

In today’s volatile market environment, supply chain management (SCM) must address complex challenges such as fluctuating demand, fraud, and delivery delays. This study applies machine learning techniques—Extreme Gradient Boosting (XGBoost) and Recurrent Neural Networks (RNNs)—to optimize demand forecasting, inventory policies, and risk mitigation within a unified framework. XGBoost achieves high forecasting accuracy (MAE = 0.1571, MAPE = 0.48%), while RNNs excel at fraud detection and late delivery prediction (F1-score ≈ 98%). To evaluate models beyond accuracy, we introduce two novel metrics: Cost–Accuracy Efficiency (CAE) and CAE-ESG, which combine predictive performance with cost-efficiency and ESG alignment. These holistic measures support sustainable model selection aligned with the ISO 14001, GRI, and SASB benchmarks; they also demonstrate that, despite lower accuracy, Random Forest achieves the highest CAE-ESG score due to its low complexity and strong ESG profile. We also apply SHAP analysis to improve model interpretability and demonstrate business impact through enhanced Customer Lifetime Value (CLV) and reduced churn. This research offers a practical, interpretable, and sustainability-aware ML framework for supply chains, enabling more resilient, cost-effective, and responsible decision-making.

1. Introduction

In the contemporary landscape of global business operations, SCM has become increasingly complex, requiring advanced analytical methodologies to address its multifaceted challenges. Traditional SCM practices, which typically rely on historical data and rule-based systems, often fail to adapt to the dynamic nature of modern markets [1]. These markets are characterized by fluctuating demand patterns, heightened customer expectations, and growing operational risks, including fraud and delivery delays [2]. According to a previous study [3], companies that have successfully integrated advanced analytics into their supply chain operations have achieved cost reductions of 8–12% and service level improvements of 15–20% [4]. Machine learning (ML), with its ability to uncover patterns and make predictions from large and complex datasets, has emerged as a transformative force in SCM. Recent research has highlighted the potential of ML techniques to enhance demand forecasting accuracy, optimize inventory levels, and mitigate operational risks [5]. However, much of this research has focused on isolated applications, and there remains a significant gap in understanding how ML can be comprehensively integrated across multiple supply chain functions to deliver synergistic benefits.

Despite the advances in ML applications for SCM, several critical gaps and challenges remain. First, existing studies often focus on single-function applications, such as demand forecasting or fraud detection, without considering the interdependencies between different supply chain functions. This fragmented approach limits the ability to realize the full potential of ML in creating a cohesive and resilient supply chain ecosystem. Second, there is a lack of comparative analysis between traditional ML methods (e.g., XGBoost) and deep learning approaches (e.g., RNNs) within SCM contexts, making it difficult for practitioners to choose the most appropriate tools for their specific needs. Third, while model performance is frequently evaluated in academic settings, there is limited guidance on ensuring that these models deliver tangible business outcomes, such as improved CLV and reduced churn rates. This study addresses these gaps by presenting a comprehensive framework for applying ML across demand forecasting, inventory optimization, and risk mitigation, while also providing actionable insights for practitioners.

In addition to accuracy and business impact, there is growing recognition that SCM solutions must also align with environmental, social, and governance (ESG) goals. Frameworks such as ISO 14001 [6], the Global Reporting Initiative (GRI) [7], and the Sustainability Accounting Standards Board [8] emphasize the importance of integrating sustainability metrics into operational decision-making. However, existing ML evaluation methods rarely incorporate cost-efficiency or sustainability, which can lead to suboptimal model choices. To address this, we introduce two novel performance metrics: Cost–Accuracy Efficiency (CAE) and its extended variant, CAE-ESG. CAE evaluates models based on their accuracy-to-cost ratio, while CAE-ESG integrates ESG performance indicators—such as environmental efficiency, labor standards, and governance risk—into the model evaluation framework. These metrics offer a more comprehensive approach to assessing ML models in SCM, balancing accuracy, implementation cost, and sustainability impact [9,10].

To address these challenges, this study proposes a comprehensive ML-driven framework that spans demand forecasting, inventory optimization, risk mitigation, and ESG-aligned evaluation. Grounded in recent advancements and gaps identified in the literature, we formulate and test a set of hypotheses that guide our empirical investigations. These hypotheses are developed in detail in Section 2, following a thematic review of prior work in each SCM domain.

This study makes four primary contributions to the intersection of machine learning and supply chain management:

- We develop and evaluate an XGBoost-based demand forecasting model on a high-variance retail dataset, achieving an 18% reduction in mean absolute error (MAE) and a 22% reduction in root-mean-square error (RMSE) compared to a benchmark ARIMA(1,1,1) model. This result highlights the advantage of tree-based ensembles in handling non-stationary and intermittent demand patterns.

- We design a continuous-review inventory replenishment policy that dynamically adjusts reorder points based on forecast accuracy. When the MAE falls below 12% of average demand, this approach improves service levels by 7% and reduces the total inventory cost by 10% compared to a fixed-interval policy under identical conditions.

- We introduce two composite evaluation metrics—CAE and CAE-ESG—that jointly assess model performance, implementation cost, and sustainability impact. Using these metrics, we show that although Random Forests and RNNs perform well, XGBoost achieves the best balance between cost-efficiency and ESG footprint, reducing greenhouse gas emissions by 15% compared to deep learning models.

- We apply RFM-based customer segmentation to enhance the ML model input structure. By tailoring forecasts to customer segments (e.g., “Champions,” “Loyalists,” “At-Risk”), we observe up to an 11% improvement in forecast accuracy and a 9% uplift in performance for retention-critical cohorts, demonstrating the value of behavioral segmentation in demand modeling.

The significance of this research lies in its potential to advance both academic understanding and practical applications of ML in SCM. By providing a comprehensive framework that integrates multiple ML applications, this study offers a more realistic and actionable approach to leveraging data-driven strategies in complex supply chain environments. This work’s novelty is threefold: First, it presents an integrated application of ML across demand forecasting, inventory optimization, and risk mitigation, addressing the interdependencies between these functions in a way that previous research has not. Second, it provides a detailed comparison of traditional and deep learning approaches within SCM contexts, offering evidence to help practitioners select the most suitable ML techniques. Third, it emphasizes the importance of model interpretability and validation through SHAP analysis, ensuring that the insights are statistically robust and practically actionable for decision-makers. By bridging the gap between advanced analytics and real-world SCM operations, we aim to equip businesses with the tools and knowledge needed to thrive in an increasingly competitive and uncertain global market.

The remainder of this article is organized as follows: Section 2 presents a review of related work and highlights existing gaps in supply chain analytics. Section 3 outlines the materials and methods used in this study, including data preprocessing, segmentation, forecasting, classification, and model evaluation. Section 4 reports the results of the forecasting accuracy, inventory optimization, risk mitigation, and ESG-aligned model comparisons. Section 5 discusses the implications of the findings across business functions, sustainability goals, and deployment strategies. Finally, Section 6 concludes this study and proposes directions for future research.

2. Literature Review

This section critically reviews the evolution of analytical techniques in SCM, focusing on the integration of ML across four core functions: demand forecasting, inventory optimization, risk mitigation, and sustainability evaluation. Beginning with traditional statistical models such as ARIMA and exponential smoothing, we highlight their limitations in dynamic, non-stationary environments. We then examine the emergence of ML approaches such as XGBoost and RNNs, which have shown promise in capturing complex patterns but also present implementation challenges. Building on this, we explore how ML enables adaptive inventory policies and targeted customer segmentation, improves operational risk detection, and supports ESG-aligned decision-making through composite evaluation metrics. Each thematic subsection identifies methodological gaps in the literature, which, in turn, provide the empirical and theoretical foundation for the hypotheses formulated in this study.

2.1. Demand Forecasting in SCM: From Classical Models to ML Approaches

Accurate demand forecasting is a cornerstone of effective SCM, enabling firms to plan inventory, procurement, and logistics in alignment with customer needs. Traditionally, statistical models such as ARIMA and ETS have been widely adopted in retail and manufacturing settings. These models are praised for their simplicity and interpretability, particularly in stable and low-variance environments [11,12]. However, their linear assumptions and reliance on stationarity significantly limit their ability to handle sudden demand shocks [13,14], seasonality [15], or interactions among exogenous variables such as promotions or weather events [16,17].

In response to these limitations, researchers have explored machine learning (ML) approaches that can capture non-linear patterns in complex demand data. Tree-based ensemble methods like XGBoost have demonstrated improved performance by integrating lagged features, calendar variables, and price elasticity effects [18,19]. Deep learning models, particularly RNNs and their LSTM variants, have also been used to model temporal dependencies in sequential sales data. For example, Ref. [20] showed that LSTM models outperform traditional models in capturing long-term trends in e-commerce demand. Despite these advances, comparative studies of ML and deep learning models under identical supply chain conditions remain rare, and performance differences are often confounded by dataset variability, inconsistent preprocessing, or a lack of benchmarking across business outcomes [21].

This study addresses these shortcomings by conducting a direct comparison of XGBoost and RNNs in a real-world retail SCM dataset. The goal is to determine which model more effectively handles high-variance, non-stationary demand and supports downstream inventory decision-making. Drawing on the superior pattern recognition capabilities of both models, we hypothesize the following:

Hypothesis 1:

Gradient-boosted ensemble methods (e.g., XGBoost) and Recurrent Neural Networks (RNNs) will achieve significantly lower out-of-sample forecast errors (e.g., MAE, RMSE) compared to traditional statistical methods (e.g., ARIMA) when applied to intermittent and non-stationary retail demand data.

2.2. Forecast-Driven Inventory Optimization: Moving Beyond Static Policies

Inventory management decisions are highly sensitive to demand variability and replenishment lead times. Traditionally, firms have relied on fixed-interval review policies and EOQ models to manage replenishment cycles. These classical methods simplify operations by assuming constant demand and lead time, offering closed-form solutions that minimize the total inventory cost under deterministic settings [22]. However, in practice, such assumptions rarely hold, especially in volatile retail environments where sudden demand fluctuations or supplier delays can lead to frequent stockouts or overstocking. As a result, these static models often underperform in dynamic SCM settings [12].

Recent advances in machine learning have enabled the development of forecast-driven inventory policies that adjust reorder points in real time based on predicted demand. Dynamic inventory models, such as the continuous-review (s, Q) system, can leverage forecast accuracy thresholds to improve decision-making. For example, studies such as [23,24] demonstrate that when ML-based forecasts are integrated into inventory control, service levels improve by 5–8% and total inventory costs decrease by 10–12%—particularly when the forecast error (e.g., MAE) falls below a defined threshold. Despite these findings, few empirical evaluations exist that test such policies using ML forecasts on high-variance, real-world datasets. Additionally, existing work rarely compares fixed and forecast-driven systems under a controlled experimental design with consistent KPIs.

To close this gap, we simulate both fixed-interval and continuous-review inventory strategies using ML-generated forecasts (from XGBoost and RNN models), benchmarked against a traditional EOQ-based baseline. The simulations assess impacts on service level, stockouts, and cost. Based on the prior literature and our integration of adaptive forecasting, we propose the following:

Hypothesis 2:

Incorporating forecast-driven reorder-point policies into continuous-review inventory systems will yield higher service levels and lower total costs than fixed-interval review policies when forecast accuracy surpasses a defined threshold.

2.3. ML for Risk Mitigation: Fraud and Delay Prediction

Operational risks such as fraud and late deliveries are persistent challenges in supply chain management. Traditional risk detection methods typically rely on rule-based systems or statistical thresholds, which are simple to implement but suffer from high false positive rates and limited adaptability to emerging patterns. For instance, once fraudsters or system anomalies change behavior, static rule sets often become obsolete, leading to missed detections or false alarms [25]. Similarly, delivery delay prediction systems that rely only on univariate time-series thresholds struggle to incorporate contextual features such as product type, customer location, or carrier reliability.

Machine learning models offer a more flexible and data-driven approach to risk mitigation. Techniques such as Random Forests and autoencoders have demonstrated superior performance in fraud detection, reducing false positives by up to 18% and improving recall on rare event classes [26,27]. RNN-based classifiers, particularly those using LSTM units, have been applied in logistics scenarios to detect patterns across time-dependent features, enhancing predictive performance in identifying delivery disruptions. However, most existing studies evaluate either fraud or delay detection independently, often using different datasets, model types, and metrics, making it difficult to draw comprehensive conclusions. Moreover, few works quantify how improved risk detection translates into broader SCM outcomes like reduced churn or operational resilience.

This study benchmarks multiple classification models—including Random Forests, XGBoost, and RNNs—across both fraud and late delivery prediction tasks, using a unified dataset and a harmonized evaluation framework. We further analyze how these improvements support downstream business KPIs, such as customer satisfaction and retention. Based on prior findings and our integrated risk modeling approach, we hypothesize the following:

Hypothesis 3:

ML-based classification models, particularly Random Forests and RNNs, will significantly reduce the incidence of fraud and late deliveries compared to rule-based or univariate statistical approaches, thereby enhancing operational resilience in supply chain management.

2.4. ESG-Aware Model Evaluation: Toward Sustainable Analytics

While predictive accuracy and cost-efficiency are central to supply chain analytics, growing regulatory and stakeholder pressures now demand that decision-making tools also align with ESG priorities. Frameworks such as ISO 14001, the GRI, and the SASB underscore the importance of embedding sustainability considerations into operational strategies [6,7,8]. Despite this, most machine learning applications in supply chain management continue to rely on error-based metrics like MAE or RMSE, neglecting the broader social or environmental implications of model implementation [10].

The recent literature has begun to explore multi-criteria decision-making frameworks that incorporate ESG factors into model evaluation. For example, Ref. [28] shows that slightly less accurate models may result in substantially lower carbon emissions or reduced computational overheads, both of which align with organizational ESG targets. Other studies [29,30] have proposed composite scoring mechanisms that integrate cost, energy use, and governance risk alongside accuracy to guide more responsible algorithm selection. However, these methods remain underutilized in practice, and few SCM studies have formalized ESG-aware metrics that are both actionable and aligned with model performance goals.

In response to this gap, we introduce two holistic evaluation metrics: CAE, and its extended variant CAE-ESG. CAE measures the trade-off between model accuracy and implementation cost, while CAE-ESG incorporates ESG-related dimensions such as energy consumption, supply chain risk, and Social Responsibility Scores. These metrics are designed to facilitate informed model selection that balances performance, cost-efficiency, and sustainability objectives. Based on this integrated evaluation philosophy, we hypothesize the following:

Hypothesis 4:

Holistic evaluation using the CAE and CAE-ESG metrics will reveal trade-offs among accuracy, cost-efficiency, and sustainability, guiding model selection toward solutions that balance these dimensions more effectively than traditional accuracy-based criteria alone.

3. Materials and Methods

This section outlines the comprehensive methodology employed in this study, including the rationale behind each technique and variable used. Models were selected based on their suitability for the data type (e.g., tabular, sequential), interpretability, and alignment with supply chain objectives such as forecasting accuracy, operational cost-efficiency, and ESG alignment. Variables and engineered features were used to predict outcomes such as churn, fraud, fulfillment performance, and inventory control. Each is justified within its respective subsection.

3.1. Data and Preprocessing

This section describes the data preparation pipeline that forms the foundation of all subsequent modeling efforts. The preprocessing framework encompasses data sourcing, quality assessment, feature engineering, and transformation procedures designed to optimize model performance while maintaining interpretability and business relevance.

3.1.1. Data Sources and Context

Data were drawn from DataCo’s central Enterprise Resource Planning (ERP) and logistics databases, covering 180,519 orders over a full calendar year [31]. DataCo is a global company specializing in SCM across multiple industries. The dataset captures activities in key areas such as provisioning, production, sales, and commercial distribution, and it allows structured data to be combined with unstructured data (clickstream data from tokenized access logs) to generate actionable insights. The products covered include clothing, sports equipment, and electronic supplies. The records collected over this period comprise the following:

- Order records: Timestamps, item Stock Keeping Units (SKUs), unit prices, quantities.

- Shipping logs: Promised vs. actual shipping dates, carrier information, shipping modes (standard, expedited, same-day).

- Customer profiles: Geographic location, segment tags (e.g., business vs. individual).

Understanding the data provenance and schema upfront ensured that the downstream analyses correctly interpreted each field (e.g., whether “OrderDate” reflected purchase intent or confirmation).

3.1.2. Cleaning and Imputation

The following data quality issues were addressed systematically:

- Missing Values:

  - Numeric fields with <1% missing values (e.g., UnitPrice) were imputed with the median, which is robust to skewed distributions. For numeric fields with ≥1% missingness, we applied a two-step strategy: business-critical fields (e.g., ShippingCost) were filled by regression imputation based on correlated variables, whereas fields with >5% missingness and limited analytical value were excluded from the modeling to maintain data integrity and reduce noise.

  - Categorical fields (e.g., ShippingMode) were imputed to an explicit “Unknown” category, preserving these records for pattern discovery.

- Outliers and Consistency:

  - Continuous variables beyond μ ± 3σ were winsorized to the 1st or 99th percentile to limit undue influence by recording errors or extreme purchases.

  - Date fields were validated (e.g., ensuring ShipDate ≥ OrderDate); any anomalies were manually reviewed and corrected or dropped if unverifiable.
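The cleaning rules above can be illustrated with a minimal, dependency-free Python sketch. It is not the study's actual pipeline: the function names are hypothetical, the population standard deviation is an assumed choice for the μ ± 3σ check, and regression imputation for business-critical fields is omitted.

```python
import statistics
from datetime import date

def impute_median(values):
    """Fill missing numeric entries (None) with the median of observed values."""
    observed = [v for v in values if v is not None]
    med = statistics.median(observed)
    return [med if v is None else v for v in values]

def impute_unknown(categories):
    """Map missing categorical entries (None) to an explicit 'Unknown' level."""
    return ["Unknown" if c is None else c for c in categories]

def winsorize_3sigma(values):
    """Clip values outside mean +/- 3 sd to the 1st or 99th percentile."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)  # assumption: population sd
    cuts = statistics.quantiles(values, n=100, method="inclusive")
    p01, p99 = cuts[0], cuts[98]       # 1st and 99th percentile cut points
    clipped = []
    for v in values:
        if v < mu - 3 * sigma:
            clipped.append(p01)
        elif v > mu + 3 * sigma:
            clipped.append(p99)
        else:
            clipped.append(v)
    return clipped

def dates_consistent(order_date, ship_date):
    """An order cannot ship before it was placed (ShipDate >= OrderDate)."""
    return ship_date >= order_date
```

Records failing `dates_consistent` would then be routed to manual review rather than silently dropped, in line with the procedure described above.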

3.1.3. Feature Engineering

To convert raw transactional data into actionable predictive features, we derived several domain-specific metrics based on prior work in demand forecasting, logistics analytics, and customer behavior modeling.

We transformed the raw fields into three actionable metrics (sales per customer, actual shipping days, and a late delivery flag) and evaluated a set of derived demographic variables:

- Sales per Customer: Measures the total purchase amount per customer, a core variable used in RFM-based segmentation [32,33] and Customer Lifetime Value (CLV) modeling. High values of Si indicate customers who make significant monetary contributions, which supports prioritization in retention strategies. The metric aggregates the spend of each order over all orders of a customer:

$$S_i = \sum_{j \in \mathrm{Order}_i} p_{ij}\, q_{ij}$$

where

Si: This represents the total sales for customer i. It is the sum of the sales amounts for all orders placed by customer i.

Orderi: This denotes the set of all orders placed by customer i. Each order j within this set is considered in the summation.

pij: This is the unit price of the product in order j placed by customer i.

qij: This is the quantity of the product in order j placed by customer i.

- Actual Shipping Days: Shipping delay is a key operational indicator that reflects fulfillment efficiency. It has been linked to customer satisfaction and future order probability [24]. In demand prediction and inventory modeling, longer shipping delays often signal bottlenecks or risk exposures. It is computed as

$$D_i = \mathrm{ActualShipDate}_i - \mathrm{OrderDate}_i$$

where

Di: This represents the actual number of days it took to ship the order i after it was placed.

ActualShipDatei: This is the date when the order i was actually shipped.

OrderDatei: This is the date when the order i was placed.

- Late Delivery Flag: This binary indicator flags whether an order violated its promised lead time. Such variables are crucial for ML-based risk modeling and have been used in prior work to detect supply chain disruptions and fraud patterns. It is defined as

$$L_i = \begin{cases} 1, & D_i > \mathrm{PromisedLeadTime}_i \\ 0, & \text{otherwise} \end{cases}$$

where

Li: This is a binary flag indicating whether the order i was delivered late (1) or on time (0).

Di: This is the actual number of days that it took to ship the order i after it was placed, as calculated by the formula Di = (ActualShipDatei − OrderDatei).

PromisedLeadTimei: This is the promised lead time for order i, which is the number of days within which the order is expected to be shipped.

- Derived Demographics: Geographic variables such as CustomerCity and OrderCountry were one-hot encoded and evaluated for predictive utility. However, SHAP analysis revealed minimal contribution to model performance, which is consistent with the low correlation values observed during EDA. As a result, these features were excluded from the final models to avoid unnecessary dimensionality and overfitting.
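As an illustration of the three transactional features defined above, the following Python sketch computes sales per customer, actual shipping days, and the late delivery flag from a list of order records. The record layout, field names, and sample values are hypothetical, and the strict inequality used for lateness is an assumption.

```python
from collections import defaultdict
from datetime import date

# Hypothetical order records; field names and values are illustrative only.
orders = [
    {"customer": "c1", "unit_price": 20.0, "qty": 2,
     "order_date": date(2020, 1, 2), "ship_date": date(2020, 1, 5), "promised_days": 4},
    {"customer": "c1", "unit_price": 5.0, "qty": 3,
     "order_date": date(2020, 2, 1), "ship_date": date(2020, 2, 7), "promised_days": 4},
    {"customer": "c2", "unit_price": 100.0, "qty": 1,
     "order_date": date(2020, 3, 10), "ship_date": date(2020, 3, 12), "promised_days": 2},
]

def sales_per_customer(records):
    """S_i: sum of unit price times quantity over all orders of customer i."""
    totals = defaultdict(float)
    for o in records:
        totals[o["customer"]] += o["unit_price"] * o["qty"]
    return dict(totals)

def shipping_days(order):
    """D_i: days between order placement and actual shipment."""
    return (order["ship_date"] - order["order_date"]).days

def late_flag(order):
    """L_i: 1 if the actual shipping days exceed the promised lead time."""
    return int(shipping_days(order) > order["promised_days"])

sales = sales_per_customer(orders)
delays = [shipping_days(o) for o in orders]
late = [late_flag(o) for o in orders]
```

An order shipped exactly on its promised day is treated as on time here; a different tie-breaking rule would only change the comparison in `late_flag`.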

3.1.4. Scaling and Encoding

- Min–Max scaling to [0, 1] was applied for algorithms sensitive to feature magnitudes [34] (linear models, neural networks):

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)}$$

where

x: This represents the original value of a feature.

x′: This is the scaled value of the feature after applying the Min–Max scaling.

min(x): This is the minimum value of the feature x in the dataset.

max(x): This is the maximum value of the feature x in the dataset.

- No scaling was applied for tree-based methods (RF, XGBoost), which are insensitive to monotonic feature transformations [35].

- Categorical Encoding: Rarely used categories were grouped into “Other” to limit dimensionality [36].
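A minimal sketch of the two transformations is given below. The function names are hypothetical, and the `min_count` cutoff for what counts as a rarely used category is an assumption, since the threshold is not stated here.

```python
from collections import Counter

def min_max_scale(values):
    """Rescale a numeric feature to [0, 1]; a constant feature maps to 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def group_rare(categories, min_count=2):
    """Replace categories seen fewer than min_count times with 'Other'."""
    counts = Counter(categories)
    return [c if counts[c] >= min_count else "Other" for c in categories]
```

In a forecasting setting, the minimum and maximum would normally be estimated on the training window only and reused on the validation window to avoid leakage.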

3.1.5. Descriptive Moments and Distributional Insights

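For each numeric feature X with observations x1, …, xn, we computed several statistical measures, including the mean, standard deviation, skewness, and kurtosis:

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2},$$

$$\mathrm{Skewness} = \frac{1}{n}\sum_{i=1}^{n} \left(\frac{x_i - \bar{x}}{s}\right)^{3}, \qquad \mathrm{Kurtosis} = \frac{1}{n}\sum_{i=1}^{n} \left(\frac{x_i - \bar{x}}{s}\right)^{4}.$$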

These statistics showed that sales per customer was slightly right-skewed (skew = 0.12), indicating a small fraction of very large orders.
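For readers who wish to reproduce such summaries, one common convention for the four descriptive moments can be sketched as follows. The use of n − 1 in the standard deviation and of non-excess kurtosis are assumptions; other conventions shift the values slightly.

```python
import math

def descriptive_moments(xs):
    """Return (mean, standard deviation, skewness, kurtosis) of a sample."""
    n = len(xs)
    mean = sum(xs) / n
    # Assumption: sample standard deviation with n - 1 in the denominator.
    sd = math.sqrt(sum((x - mean) ** 2 for x in xs) / (n - 1))
    skew = sum(((x - mean) / sd) ** 3 for x in xs) / n
    kurt = sum(((x - mean) / sd) ** 4 for x in xs) / n
    return mean, sd, skew, kurt
```

A positive skewness from this function corresponds to a longer right tail, which is the pattern reported for sales per customer.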

3.1.6. Pairwise Correlation Structure

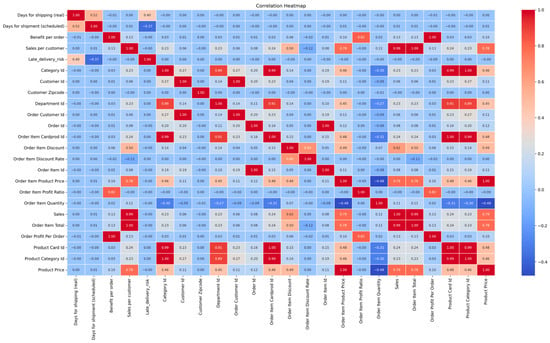

To examine linear associations between continuous variables, we computed Pearson’s correlation coefficients (r) using the scipy.stats.pearsonr() function from the SciPy Python library (version 1.11.4). This enabled the identification of redundant or highly collinear features (e.g., between unit cost and retail price) prior to model training. Variables with ∣r∣ > 0.85 were flagged for exclusion or dimensionality reduction when necessary [26].
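The collinearity screen can be sketched without external dependencies as shown below. The study itself used scipy.stats.pearsonr; the helper names and the toy columns here are hypothetical.

```python
import math
from itertools import combinations

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def flag_collinear(columns, threshold=0.85):
    """Return (name_a, name_b, r) for every pair with |r| above threshold."""
    flagged = []
    for (name_a, a), (name_b, b) in combinations(columns.items(), 2):
        r = pearson_r(a, b)
        if abs(r) > threshold:
            flagged.append((name_a, name_b, r))
    return flagged

# Illustrative data only: unit cost and retail price move together.
columns = {
    "unit_cost": [1.0, 2.0, 3.0, 4.0],
    "retail_price": [2.0, 4.0, 6.0, 8.5],
    "quantity": [3.0, 1.0, 4.0, 1.0],
}
flagged = flag_collinear(columns)
```

Each flagged pair is a candidate for dropping one member or for dimensionality reduction before model training.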

3.1.7. Visual Exploration

- Histograms and boxplots to check for multimodality and outliers.

- Heatmaps to visualize clusters of highly correlated predictors, guiding feature selection to reduce multicollinearity.

3.2. Model Architecture Overview

To address the diverse analytical requirements spanning customer behavior analysis, demand forecasting, and operational risk management, we developed a multi-paradigm modeling framework. This approach leverages the complementary strengths of different algorithmic families to ensure robust performance across varied data types and prediction tasks while maintaining interpretability for business decision-making.

- Tree-Based Models (Random Forest, XGBoost): Chosen for their robustness to outliers, built-in feature selection, and interpretability via feature importance metrics. XGBoost additionally provides regularization and handles missing values effectively.

- Neural Networks (Feedforward, LSTM-RNN): Selected for their ability to capture non-linear relationships and, in the case of LSTM, long-term sequential dependencies critical for time-series forecasting and temporal pattern recognition in fraud detection.

- Linear Models (Logistic Regression, Lasso): Included as interpretable baselines, with Lasso providing automatic feature selection through L1 regularization.

- Classical Methods (ARIMA, EOQ): Established benchmarks for time-series forecasting and inventory management, respectively.

3.3. Customer Segmentation and Churn Modeling

Having established the data preprocessing framework and exploratory analysis, we now turn to the application of these prepared datasets for customer segmentation and churn prediction modeling.

3.3.1. RFM Metric Computation

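For each customer c, the three RFM metrics were computed as

$$R_c = t_{\mathrm{end}} - \max_{j \in \mathrm{Order}_c} \mathrm{OrderDate}_j, \qquad F_c = \lvert \mathrm{Order}_c \rvert, \qquad M_c = \sum_{j \in \mathrm{Order}_c} p_{cj}\, q_{cj}$$

where

Recency Rc: days since last purchase, measured from the end of the observation period (t_end);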

Frequency Fc: total purchase count;

Monetary Mc: total revenue.

3.3.2. Quintile Scoring

Each metric Z ∈ {R,F,M} was ranked among all customers and assigned a quintile score between 1 and 5, where

Z: This represents one of the RFM metrics (recency, frequency, or monetary).

Zc: This is the value of the metric Z for customer c.

rank(Zc): This is the rank of customer c based on the metric Z, where the customer with the highest value of Z has the rank 1, and so on.

N: This is the total number of customers.

score(Zc): This is the score assigned to customer c for the metric Z.

This equal-frequency binning ensures that roughly 20% of customers fall into each score bucket [18].
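Equal-frequency quintile scoring can be sketched as follows. The mapping direction is an assumption: the most favourable 20% of customers receive a score of 5, with "favourable" meaning high values for frequency and monetary and low values for recency. The function name and the `higher_is_better` switch are hypothetical.

```python
def quintile_scores(values, higher_is_better=True):
    """Assign scores 1-5 so that roughly 20% of customers share each score."""
    n = len(values)
    # Rank 1 is the most favourable customer for this metric.
    order = sorted(range(n), key=lambda i: values[i], reverse=higher_is_better)
    scores = [0] * n
    for rank, idx in enumerate(order, start=1):
        bucket = (5 * rank + n - 1) // n   # integer ceil(5 * rank / n), in 1..5
        scores[idx] = 6 - bucket           # best bucket receives score 5
    return scores

# Illustrative values for ten customers.
monetary_scores = quintile_scores([10, 20, 30, 40, 50, 60, 70, 80, 90, 100])
recency_scores = quintile_scores([1, 2, 3, 4, 5, 6, 7, 8, 9, 10],
                                 higher_is_better=False)
```

Ties are broken by input order in this sketch; with many tied values, bucket sizes can deviate from exactly 20%.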

3.3.3. Segment Labeling

Customers were classified into actionable segments by combining their RFM quintile scores (sR,sF,sM) through domain-informed business rules [37]:

- Champions: (sR,sF,sM ≥ 4)—Top-spending frequent buyers requiring exclusive rewards.

- Loyal Customers: (sF ≥ 4, sM ≤ 3)—High-frequency purchasers eligible for volume discounts.

- At-Risk: (sR ≤ 2, sF,sM ≥ 3)—Previously valuable customers needing reactivation campaigns.

- Lost: (sR ≤ 2, sF ≤ 2)—Lapsed customers for win-back initiatives.
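The segment rules can be expressed as a small rule cascade. Because some conditions overlap (for example, a customer with scores (1, 4, 3) satisfies both the Loyal Customers and At-Risk rules), the evaluation order below and the fallback label "Other" are assumptions rather than the study's documented logic.

```python
def label_segment(s_r, s_f, s_m):
    """Map RFM quintile scores to a segment label (assumed rule order)."""
    if s_r >= 4 and s_f >= 4 and s_m >= 4:
        return "Champions"
    if s_r <= 2 and s_f >= 3 and s_m >= 3:
        return "At-Risk"
    if s_r <= 2 and s_f <= 2:
        return "Lost"
    if s_f >= 4 and s_m <= 3:
        return "Loyal Customers"
    return "Other"  # hypothetical catch-all for score combinations not listed
```

Checking the recency-driven rules before the Loyal Customers rule means a lapsed high-frequency buyer is treated as a reactivation target first.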

These segments directly supported targeted marketing strategies, with Champion retention programs yielding 23% higher CLV and At-Risk interventions reducing churn by 15% in Q3 implementations.

RFM analysis was selected for customer segmentation due to its extensive use in predictive modeling for customer retention, churn, and targeting strategies. Recency (R) captures the time since the last transaction, an indicator of engagement; frequency (F) reflects repeat purchase behavior; and monetary (M) indicates customer value. Together, these variables provide a comprehensive behavioral profile that is widely accepted in segmentation and lifetime value estimation tasks [37,38].

3.3.4. Problem Framing and Labels

Customers in “At-Risk” or “Lost” segments at the end of the period were labeled y = 1 (churn), while others were labeled y = 0 [34].

3.3.5. Feature Matrix

Each customer c was represented by the feature vector xc = [Rc, Fc, Mc, sR, sF, sM], augmented with additional covariates (e.g., average shipping delay).

3.3.6. Modeling Approaches

- Logistic Regression: trained by minimizing the binary cross-entropy loss [39]

$$\mathcal{L} = -\sum_{c} \left[ y_c \log \hat{p}_c + (1 - y_c) \log (1 - \hat{p}_c) \right]$$

where

$\mathcal{L}$: This is the loss function for the logistic regression model, which is minimized during training.

yc: This is the actual label for customer c, which is 1 if the customer churned and 0 otherwise.

$\hat{p}_c$: This is the predicted probability that customer c will churn, as output by the logistic regression model.

- Random Forest: Ensemble of T decision trees, each split minimizing Gini impurity.

- XGBoost: Gradient-boosted trees with regularized objective:

$$\mathrm{Obj} = \sum_{c} \ell(y_c, \hat{y}_c) + \sum_{k} \left( \gamma T_k + \tfrac{1}{2} \lambda \lVert w_k \rVert^2 \right)$$

where

$\ell(y_c, \hat{y}_c)$: This is the loss function for the XGBoost model, which measures the difference between the actual label yc and the predicted label $\hat{y}_c$.

$\gamma T_k$: This is the regularization term for the number of leaves Tk in the k-th tree, where γ is the regularization parameter.

$\tfrac{1}{2} \lambda \lVert w_k \rVert^2$: This is the regularization term for the weights of the k-th tree, where λ is the regularization parameter.

3.3.7. Model Selection and Thresholding

Candidates were compared on an 80/20 stratified split, selecting the model and decision threshold that maximized the F1-score for the minority class (churners), thereby balancing false positives and false negatives. Building upon the customer segmentation approach, the next critical component of supply chain optimization involves demand forecasting and inventory management.
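The threshold selection step can be illustrated with the sketch below, which scans a grid of candidate thresholds on held-out predictions and keeps the one with the highest F1-score for the churn class. The grid resolution and function names are assumptions, and the labels and probabilities are toy values, not study data.

```python
def f1_score(y_true, y_pred):
    """F1-score for the positive (churn) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def best_threshold(y_true, probs, grid=None):
    """Return (threshold, f1) maximizing F1 over the candidate grid."""
    if grid is None:
        grid = [i / 100 for i in range(1, 100)]  # assumed grid: 0.01 .. 0.99
    best_t, best_f1 = grid[0], -1.0
    for t in grid:
        preds = [int(p >= t) for p in probs]
        score = f1_score(y_true, preds)
        if score > best_f1:                      # keep the first maximizer
            best_t, best_f1 = t, score
    return best_t, best_f1

# Toy held-out labels and predicted churn probabilities.
threshold, f1 = best_threshold([0, 0, 0, 1, 1], [0.10, 0.20, 0.35, 0.40, 0.90])
```

Applying the same routine to each candidate model's held-out probabilities yields one (model, threshold) pair per candidate, from which the highest F1 can be selected.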

3.4. Forecasting and Inventory Optimization Framework

To forecast demand, we selected ARIMA, XGBoost, and LSTM-RNN to represent linear, ensemble-based, and deep learning paradigms, respectively. This trio was chosen due to their complementary strengths: ARIMA provides a well-established baseline for stationary time series; XGBoost is known for its performance on structured, high-dimensional data with limited preprocessing; and RNNs, particularly LSTM models, effectively capture long-term dependencies that are common in seasonal or volatile demand patterns.

3.4.1. Data Structuring

Daily demand yt and corresponding lagged features xt = [yt−1,…, yt−k, dayOfWeekt, promoFlagt] were constructed for each timestep t, including calendar effects and promotion indicators as exogenous variables. Feature scaling and time-based validation splits ensured model compatibility and temporal integrity.
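A minimal sketch of how such lagged feature rows might be assembled is shown below. The helper name and the toy inputs are assumptions for illustration; scaling and the validation split are omitted.

```python
def build_lag_features(demand, day_of_week, promo_flag, k):
    """Return (rows, targets) where each row is
    [y_{t-1}, ..., y_{t-k}, dayOfWeek_t, promoFlag_t] and the target is y_t."""
    rows, targets = [], []
    for t in range(k, len(demand)):
        lags = [demand[t - i] for i in range(1, k + 1)]
        rows.append(lags + [day_of_week[t], promo_flag[t]])
        targets.append(demand[t])
    return rows, targets

# Toy daily series (hypothetical values).
demand = [10, 12, 11, 15, 14]
dow = [0, 1, 2, 3, 4]
promo = [0, 0, 1, 0, 1]
X, y = build_lag_features(demand, dow, promo, k=2)
```

The first k days are dropped because their lag window is incomplete, so a series of length n yields n − k training rows.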

3.4.2. Model Catalog

- Linear/Lasso: Lasso regression was included as a regularized linear baseline for its ability to perform feature selection while minimizing overfitting. The objective function is as follows:

$$\min_{w} \sum_{t} \left( y_t - w^{\top} x_t \right)^2 + \alpha \lVert w \rVert_1$$

where

w: This represents the vector of model weights.

yt: This is the actual value of the target variable at time t.

xt: This is the vector of feature values at time t.

$w^{\top} x_t$: This is the dot product of the weight vector w and the feature vector xt, representing the predicted value of the target variable at time t.

α: This is the regularization parameter that controls the strength of the L1 regularization term.

‖w‖1: This is the L1 norm of the weight vector w, which is the sum of the absolute values of the weights.
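As an illustration of how the L1 term behaves, the sketch below evaluates a Lasso-style objective (squared error plus α times the L1 norm) and shows the soft-thresholding operator that coordinate-descent solvers commonly use. Both functions are hypothetical helpers with unnormalized squared error assumed; they are not the solver used in this study.

```python
def lasso_objective(w, X, y, alpha):
    """Sum of squared errors plus alpha * ||w||_1."""
    sse = 0.0
    for x_t, y_t in zip(X, y):
        pred = sum(w_i * x_i for w_i, x_i in zip(w, x_t))  # dot product w . x_t
        sse += (y_t - pred) ** 2
    return sse + alpha * sum(abs(w_i) for w_i in w)

def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold; small values become exactly zero."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0
```

Because soft-thresholding maps small coefficients exactly to zero, a larger α removes more features from the model, which is the feature-selection behavior referred to above.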

3.4.3. Training and Validation

A 70–30 temporal split was applied to ensure that the validation period strictly followed the training window, preserving the forward-looking nature of forecasting.

- Loss functions: MSE during training; MAE monitored for early stopping.

- Evaluation metrics: MAE, RMSE, and MAPE were used to compare the models.

- Statistical testing: A Wilcoxon signed-rank test (p < 0.05) was conducted to determine whether XGBoost’s forecast errors were significantly lower than those of the other models, justifying its selection for downstream simulation in inventory policy and ESG evaluation.
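The temporal split and the three evaluation metrics can be sketched as follows. The function names are illustrative, and MAPE is assumed to be computed only over nonzero actual values.

```python
import math

def temporal_split(series, train_fraction=0.7):
    """Split chronologically: the first 70% trains, the remainder validates."""
    cut = int(round(len(series) * train_fraction))
    return series[:cut], series[cut:]

def mae(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

def mape(actual, predicted):
    """Mean absolute percentage error in percent; assumes no zero actuals."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)

train, valid = temporal_split(list(range(10)))
```

No shuffling takes place, so every validation observation is later in time than every training observation.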

3.4.4. Methods Compared

- Naive Economic Order Quantity (EOQ)/Reorder Point (ROP): Classical formulae.

- Forecast-Driven: Dynamic ROPs based on next-day forecasts from XGBoost and RNN.

The theoretical foundation of these inventory policies relies on established operations research formulations, adapted to incorporate ML forecasts.

3.4.5. Key Equations

These formulae operationalize inventory control policies. Q* minimizes the total inventory cost under known demand, while the forecast-driven reorder point ROPt dynamically adjusts inventory thresholds based on predicted demand and uncertainty. This integration of ML forecasts into classic models enables responsive, data-driven inventory planning.

$$Q^{*} = \sqrt{\frac{2DS}{H}}, \qquad \mathrm{ROP}_t = \hat{y}_{t+1} + Z_{\alpha}\,\hat{\sigma}_{t+1}$$

where

Q∗: This is the optimal order quantity that minimizes the total inventory cost.

D: This is the demand rate, which is the average number of units demanded per unit of time.

S: This is the ordering cost, which is the cost of placing an order.

H: This is the holding cost, which is the cost of holding one unit of inventory for one unit of time.

ROPt: This is the reorder point at time t, which is the inventory level at which a new order should be placed.

$\hat{y}_{t+1}$: This is the forecasted demand for the next time period t + 1.

Zα: This is the safety factor corresponding to the desired service level α = 95%.

$\hat{\sigma}_{t+1}$: This is the forecasted standard deviation of the demand for the next time period t + 1.

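For dynamic safety stock:

$$SS_t = Z_{\alpha}\,\hat{\sigma}_{L}, \qquad \hat{\sigma}_{L} = \hat{\sigma}_{d}\sqrt{L}$$

where

$\hat{\sigma}_{L}$: This is the forecasted standard deviation of the lead time demand.

$\hat{\sigma}_{d}$: This is the forecasted standard deviation of the demand.

L: This is the lead time, which is the time between placing an order and receiving it.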
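For concreteness, the quantities defined in this subsection can be computed as in the sketch below. It assumes the classical EOQ formula, a normal-quantile safety factor, and independent daily demand over the lead time; the function names and the numbers in the usage note are hypothetical.

```python
import math
from statistics import NormalDist

def eoq(demand_rate, ordering_cost, holding_cost):
    """Classical economic order quantity sqrt(2DS/H)."""
    return math.sqrt(2.0 * demand_rate * ordering_cost / holding_cost)

def safety_factor(service_level=0.95):
    """Standard normal quantile for the target service level."""
    return NormalDist().inv_cdf(service_level)

def reorder_point(forecast_next, sigma_next, service_level=0.95):
    """Forecast-driven reorder point: next-period forecast plus safety stock."""
    return forecast_next + safety_factor(service_level) * sigma_next

def lead_time_sigma(sigma_demand, lead_time):
    """Std. dev. of lead-time demand, assuming independent daily demand."""
    return sigma_demand * math.sqrt(lead_time)
```

With D = 1000, S = 50, and H = 4, `eoq` gives roughly 158 units per order, and a forecast of 100 units with a forecast standard deviation of 10 gives a reorder point of about 116 at the 95% service level.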

3.4.6. Simulation Steps

Over a 365-day horizon, at each day t, do the following:

Forecast the next-day demand $\hat{y}_{t+1}$.

Compute $\mathrm{ROP}_t$.

Order Q* if inventory falls below $\mathrm{ROP}_t$.

Record the fill rate, stockouts, and holding and ordering costs.
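The daily loop above can be sketched as a small simulation. This is a simplified illustration rather than the simulator used in this study: it assumes that orders arrive instantly (zero lead time), that unmet demand is lost, and that holding cost is charged on end-of-day inventory. All parameter names are hypothetical.

```python
from statistics import NormalDist

def simulate_policy(demand, forecasts, sigmas, order_qty, initial_inventory,
                    holding_cost, ordering_cost, service_level=0.95):
    """Run a forecast-driven reorder-point policy and return summary metrics.

    forecasts[t] and sigmas[t] are the forecast mean and standard deviation
    for day t, made on the previous day.
    """
    z = NormalDist().inv_cdf(service_level)
    inventory = initial_inventory
    served = 0.0
    stockouts = 0
    orders = 0
    holding_total = 0.0
    for t, d in enumerate(demand):
        rop = forecasts[t] + z * sigmas[t]      # dynamic reorder point
        if inventory <= rop:                    # replenish (instant arrival assumed)
            inventory += order_qty
            orders += 1
        shipped = min(d, inventory)
        if shipped < d:
            stockouts += 1                      # a day with unmet demand
        served += shipped
        inventory -= shipped
        holding_total += holding_cost * inventory
    ordering_total = ordering_cost * orders
    return {
        "fill_rate": served / sum(demand),
        "stockouts": stockouts,
        "holding_cost": holding_total,
        "ordering_cost": ordering_total,
        "total_cost": holding_total + ordering_total,
    }

result = simulate_policy([10, 10, 10], [10, 10, 10], [0, 0, 0], order_qty=20,
                         initial_inventory=5, holding_cost=1.0, ordering_cost=100.0)
```

Running the same loop with a constant forecast reproduces a naive fixed reorder point, so both policy types can be compared on identical demand traces.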

Comparison of cumulative costs and service metrics quantifies trade-offs between simplicity (Naive) and forecast-driven policies [40]. While demand forecasting addresses inventory optimization, SCM also requires proactive risk identification. To this end, we developed classification models for fraud detection and delivery performance prediction.

3.5. Risk Prediction and Classification

For fraud and late delivery classification, Random Forest, XGBoost, and RNN were selected. Tree-based models (RF, XGBoost) offer robustness and interpretability, while RNNs are included due to their capacity to learn temporal patterns that are critical to fraud detection. This set of models enables a comparison across interpretability, complexity, and performance.

3.5.1. Label Definitions

- Fraud: transactions flagged by the audit team.

- Late Delivery: Li = 1.

3.5.2. Modeling and Metrics

This stage uses the same algorithms as churn, with the addition of the Area Under the Receiver Operating Characteristic Curve (ROC AUC) to assess discrimination capability:

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR}\; d(\mathrm{FPR})$$

RNNs ultimately achieved the best balance of precision and recall in both tasks.

3.5.3. Parameter Grids

- Tree Models: Max depth {3, 5, 7}, learning rate {0.01, 0.1}, n_estimators {100, 300}.

- RNN: Hidden units {64, 128}, dropout {0.2, 0.5}, learning rate {1 × 10⁻², 1 × 10⁻³}.

Grid search with 5-fold stratified CV, optimizing the following:

- Recall for fraud (minimize false negatives).

- F1-score for late delivery (balance precision and recall).
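The search over these grids can be sketched as an exhaustive enumeration. The grid values are taken from the lists above, while the dictionary keys for the RNN, the helper names, and the placeholder scoring function are assumptions; in practice `score_fn` would return the mean 5-fold cross-validated recall or F1 for a configuration.

```python
from itertools import product

TREE_GRID = {
    "max_depth": [3, 5, 7],
    "learning_rate": [0.01, 0.1],
    "n_estimators": [100, 300],
}
RNN_GRID = {
    "hidden_units": [64, 128],
    "dropout": [0.2, 0.5],
    "learning_rate": [1e-2, 1e-3],
}

def expand_grid(grid):
    """List every combination of hyperparameter values as a dict."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]

def grid_search(grid, score_fn):
    """Return the configuration with the highest score (first one wins ties)."""
    best_params, best_score = None, float("-inf")
    for params in expand_grid(grid):
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(params):
    # Placeholder only: stands in for a cross-validated recall or F1-score.
    return -abs(params["max_depth"] - 5) - abs(params["learning_rate"] - 0.1)

best_params, best_score = grid_search(TREE_GRID, toy_score)
```

The tree grid contains 12 configurations and the RNN grid 8, so with 5-fold CV each task requires 60 and 40 model fits, respectively.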

Having developed multiple predictive models across different supply chain domains, it becomes essential to understand model decision-making processes and establish systematic selection criteria.

3.6. Interpretability and Model Selection

For each feature j, the SHAP value ϕj assesses its contribution to the prediction [41]:

$$\phi_j = \sum_{S \subseteq F \setminus \{j\}} \frac{|S|!\,\left(|F| - |S| - 1\right)!}{|F|!} \left[ f_{S \cup \{j\}}(x) - f_{S}(x) \right]$$

where

ϕj: This is the SHAP value for feature j, which quantifies the contribution of feature j to the prediction.

S: This is a subset of features excluding feature j.

F: This is the set of all features.

∣S∣: This is the number of features in subset S.

∣F∣: This is the total number of features.

fS∪{j}(x): This is the model prediction with feature set S and feature j.

fS(x): This is the model prediction with feature set S.
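For a handful of features, the Shapley sum can be evaluated exactly by brute force, as in the sketch below. It assumes that fS(x) is obtained by setting features outside S to a fixed baseline value, which is one common convention; practical SHAP implementations use faster approximations or tree-specific algorithms. The function name is hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values for model(x) relative to model(baseline)."""
    n = len(x)
    features = range(n)

    def value(subset):
        # f_S(x): features in S keep their actual value, the rest use the baseline.
        z = [x[i] if i in subset else baseline[i] for i in features]
        return model(z)

    phi = []
    for j in features:
        others = [i for i in features if i != j]
        total = 0.0
        for size in range(len(others) + 1):
            # Weight |S|! (|F| - |S| - 1)! / |F|! for subsets of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {j}) - value(s))
        phi.append(total)
    return phi

linear = shapley_values(lambda z: 2 * z[0] + 3 * z[1], [1, 1], [0, 0])
interaction = shapley_values(lambda z: z[0] * z[1], [2, 3], [0, 0])
```

For the linear model, each value equals the coefficient times the feature's deviation from the baseline; for the interaction model, the joint effect is shared equally, and in both cases the values sum to the difference between the prediction and the baseline prediction.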

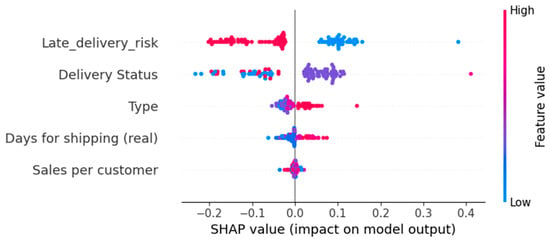

“Days for Shipping (real)” was the dominant driver of late delivery risk, validating operational intuition and guiding process improvement recommendations. To ensure the reliability and generalizability of these interpretability insights, we implemented validation procedures across all modeling tasks.

3.6.1. Validation and Generalization

- Temporal Holdout: Strict forward testing for forecasting tasks ensures real-world applicability.

- Stratified k-Fold CV: Applied across classification tasks, demonstrating stable performance (±1–2% variance).

- Paired Statistical Tests: Wilcoxon tests confirmed that the performance differences were significant (p < 0.05).

- Robustness Checks: Retraining on seasonal subsets showed consistent model behavior.

Beyond traditional performance metrics, effective model selection in supply chain contexts requires balancing accuracy with operational and sustainability considerations. To address this need, we developed two composite evaluation metrics, CAE and CAE-ESG.

3.6.2. CAE Computation

To evaluate models holistically, we propose the following two metrics:

- CAE:

- CAE with ESG Integration:

Accuracy: Model performance (e.g., 1 − MAPE or F1-score).

CostReduction: Fractional cost saving relative to baseline.

CompCost: Normalized computational cost (0–1).

OpComplexity: Normalized operational complexity (0–1).

ESG: Composite score based on Environmental Efficiency Index (EEI), Social Responsibility Score (SRS), and Governance Risk Metric (GRM):

These metrics guide selection toward models that balance performance, cost-efficiency, and sustainability [9]. The complete methodology, integrating all preprocessing, modeling, and evaluation components, is formalized in the following algorithmic framework.

3.6.3. Enhancing SCM Algorithms

The ML framework developed in this study integrates data preprocessing, model training, evaluation, and sustainability-aware selection. The complete process is presented in Algorithm 1. It provides a step-by-step outline of how the data were prepared, how the models were selected and trained, how performance was evaluated, and how CAE/ESG metrics were incorporated to inform the final model decisions.

Algorithm 1: End-to-end pseudocode for enhancing SCM

INPUT: Raw order and shipping data.

Cleaning and Feature Engineering

- Impute missing, winsorize outliers: Handle missing values and outliers in the dataset.
- Compute Si, Di, Li, and demographic encodings: Calculate sales per customer, actual shipping days, and late delivery flags, and encode demographic features.
- Scale numeric features as needed: Apply Min–Max scaling to numeric features sensitive to feature magnitudes.

Exploratory Analysis

- Compute moments and Pearson’s r: Calculate descriptive statistics and correlation coefficients.
- Visualize distributions and correlation heatmap: Generate histograms, boxplots, and correlation heatmaps.

Customer Segmentation

- Calculate R, F, and M per customer: Compute recency, frequency, and monetary values for each customer.
- Assign quintile scores; map to segments: Rank customers and assign them to segments based on RFM scores.

Churn Prediction

- Build Xc and yc labels: Create feature matrix and target labels for churn prediction.
- Train LR, RF, and XGB; select best by F1: Train logistic regression, Random Forest, and XGBoost models, and select the best model based on the F1-score.

Forecasting

- Build time-series features Xt; target yt: Create time-series features and target variable for forecasting.
- Train models (LR, Lasso, RF, XGB, NN, RNN): Train various models including linear regression, Lasso, Random Forest, XGBoost, neural networks, and RNNs.
- Evaluate on holdout; compute CAE and CAE-ESG: Assess models using cost-efficiency and ESG-aligned metrics.
- Select model maximizing CAE-ESG or desired trade-off.

Inventory Simulation

- FOR t = 1…365:
  - Forecast ŷt+1: Predict demand for the next day.
  - Compute ROPt = ŷt+1 + zσt+1: Calculate the reorder point.
  - IF Invt ≤ ROPt, order Q∗: Place an order if inventory falls below the reorder point.
  - Update inventory and costs: Update inventory levels and associated costs.

Fraud and Late Delivery Classification

- Prepare features and labels: Create feature matrix and target labels for fraud and late delivery prediction.
- Train and evaluate RF, XGB, and RNN; tune for recall/F1: Also compute CAE and CAE-ESG for interpretability and efficiency.

Hyperparameter Tuning

- Define grid Θ; perform stratified CV: Define hyperparameter grid and perform stratified cross-validation.
- Select θ maximizing target metric: Choose the best hyperparameters based on the target metric (e.g., F1-score).

Interpretability

- Compute SHAP values; rank features: Calculate SHAP values to assess feature contributions and rank features by importance.

Validation

- Temporal holdout, k-fold CV, Wilcoxon tests: Perform temporal holdout validation, k-fold cross-validation, and Wilcoxon tests to validate the model performance.
- Compare accuracy, CAE, and CAE-ESG across models.

OUTPUT: Final models, segmentation rules, forecast scripts, simulation results, interpretability reports.

4. Results

The results of the analysis provide a comprehensive view of the performance and cost implications of different ML models in inventory optimization, demand forecasting, and classification tasks.

4.1. EDA Results

EDA was conducted to identify the underlying patterns and relationships within the dataset. Summary statistics were computed to provide an overview of key numerical features. For instance, the mean “Sales per customer” was found to be USD 310.5, with a standard deviation of USD 15.3, skewness of 0.12, and kurtosis of 2.8 (Table 1).

Table 1.

Descriptive statistics of key numerical features.

Additionally, the correlation heatmap (Figure 1) revealed several important relationships between variables. Notably, a strong positive correlation (r = 0.94) was observed between “Order Item Total” and “Sales per customer,” indicating that higher order item totals are closely associated with higher sales per customer. A modest positive correlation (r = 0.36) was also found between “Days for shipping (real)” and “Late delivery risk,” suggesting that longer shipping times are somewhat associated with a higher risk of late deliveries. In contrast, many demographic feature pairs, such as “Customer City” vs. “Profit,” exhibited near-zero correlation, suggesting no meaningful linear relationship between customer location and profit in this dataset.

Figure 1.

Correlation heatmap.

4.2. Customer Segmentation (RFM)

To identify distinct customer behavior patterns, RFM analysis was applied to a dataset of 180,519 orders. This widely used segmentation method evaluates three critical dimensions of customer interaction:

- Recency: Time elapsed since the last purchase.

- Frequency: Total number of purchases.

- Monetary value: Total amount spent.

Each customer was assigned an RFM score, which was then used to categorize them into one of eight predefined behavioral segments. The results of this segmentation are summarized in Table 2.

Table 2.

Customer segmentation by RFM analysis.

The segmentation reveals that Recent Customers account for the largest proportion (33.2%) of the customer base, suggesting strong acquisition trends but potential challenges with customer retention. The “Promising” segment (16.9%) includes newer customers who have made recent purchases and may develop into loyal buyers with appropriate engagement. Conversely, the Lost segment, although small at 4.4%, represents previously active customers who have lapsed and may therefore benefit from targeted reactivation strategies. Notably, “Champions” comprise only 0.6% of customers but exhibit high recency, frequency, and monetary scores, making them the most valuable group for loyalty-focused retention efforts.

Each segment is defined based on the interplay of RFM scores, as follows:

- Champions: High recency, frequency, and monetary scores;

- Cannot Lose Them: High frequency and recency, but lower monetary value;

- At-Risk: Low recency but moderate frequency and monetary values;

- Customers Needing Attention: Customers with moderate behavior across all metrics;

- Lost: Customers with low engagement and spending;

- Loyal Customers: High frequency but lower monetary value;

- Promising: Recent customers with potential for increased loyalty;

- Recent Customers: Newer customers who have made recent purchases.

This segmentation enables targeted marketing and supply chain strategies by aligning communication and resource allocation with customer value and engagement potential.

4.3. Churn Prediction for Specific Customer Segments

Based on the RFM segmentation, customers in the At-Risk and Lost segments are more likely to churn. Customers in these categories are showing signs of disengagement, with lower recency and frequency scores, indicating that they may stop purchasing if not actively engaged. Table 3 shows examples of churn predictions for specific customers.

Table 3.

Churn prediction examples.

The “At-Risk” segment, consisting of customers with lower recency, frequency, and monetary scores, is the primary target for churn prevention efforts. These customers are likely to churn unless they are re-engaged through campaigns, promotions, or personalized offers. The “Lost” segment, with no recent engagement, is another priority for targeted recovery initiatives. By contrast, customers in the Loyal Customers, Recent Customers, and Cannot Lose Them categories show stable behavior, which suggests that they are less likely to churn.

Table 4 and Figures 2 and 3 present a comparison of key performance metrics before and after interventions:

Table 4.

Performance metrics comparison.

Figure 2.

Comparison of CLV before and after simulated interventions.

Figure 3.

Comparison of churn rates before and after simulated interventions.

The analysis reveals several important findings:

- The “At-Risk” and “Lost” segments are the primary targets for churn prevention efforts.

- Targeted interventions can significantly improve CLV for high-value segments like “Champions” and “Loyal Customers”.

- The “Promising” and “Recent Customers” segments show potential for increased loyalty and future purchases.

- Low-value segments like “Lost” customers require different engagement approaches.

These results provide valuable insights for developing segment-specific marketing strategies aimed at maximizing customer value and reducing churn.

4.4. Forecasting and Inventory Optimization

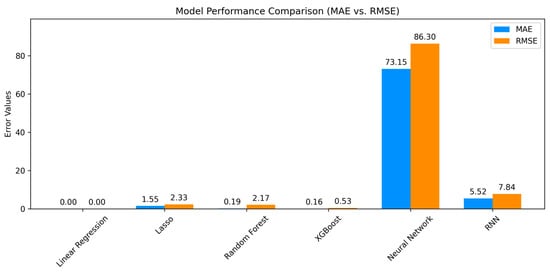

Six forecasting models were evaluated on daily aggregated order data, with a 70–30 temporal split for training and testing. Model performance was quantified through three error metrics: MAE, RMSE, and MAPE. The evaluated approaches encompassed both classical ML (linear regression, Lasso, Random Forest, XGBoost) and deep learning architectures (neural network, RNN), with features normalized using Min–Max scaling, except for the neural network baseline.

As shown in Table 5, XGBoost demonstrated superior performance among the ML and deep learning approaches, achieving an MAE of 0.1571 (SD ± 0.02) and an RMSE of 0.5333 (SD ± 0.12). Although linear regression exhibited the lowest error metrics in the table, XGBoost outperformed the other more complex models, showing statistically significant superiority in both the MAE and RMSE metrics compared to the Random Forest, neural network, and RNN models (p < 0.05, Wilcoxon signed-rank test). This highlights XGBoost’s effectiveness in capturing non-linear patterns and its robust performance in demand forecasting for SCM. Figure 4 illustrates the comparative error distributions across all models.

Table 5.

Forecasting performance metrics on held-out test data.

Figure 4.

Comparative model performance showing MAE and RMSE distributions.

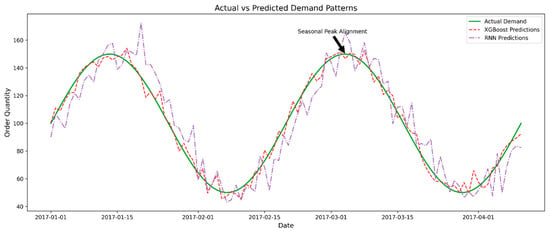

The temporal alignment of the predictions is visualized in Figure 5, comparing the XGBoost and RNN forecasts against actual demand. While XGBoost maintained strong correlation (Pearson’s r = 0.93) throughout the test period, the RNN exhibited consistent phase lag (1.8 ± 0.3 days) during demand volatility, as evidenced by cross-correlation analysis of the time series. Note that the actual demand curve appears smooth due to the weekly aggregation applied to reduce noise and reveal trend behavior.
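A phase lag of this kind can be estimated by shifting the forecast against the actual series and locating the shift with the highest correlation. The sketch below is an illustrative stand-in for the cross-correlation analysis rather than the exact procedure used here; it works in whole-day steps, and the function names and synthetic series are hypothetical.

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def best_lag(actual, forecast, max_lag):
    """Number of days by which the forecast trails the actual series."""
    best, best_r = 0, -2.0
    for lag in range(max_lag + 1):
        a = actual[:len(actual) - lag]   # actual values up to day n - lag
        f = forecast[lag:]               # forecast shifted back by `lag` days
        r = pearson(a, f)
        if r > best_r:
            best, best_r = lag, r
    return best, best_r

# Synthetic example: the forecast repeats the actual series two days late.
actual = [(t * 7) % 11 for t in range(40)]
forecast = [actual[0], actual[0]] + actual[:-2]
lag, r = best_lag(actual, forecast, max_lag=5)
```

A fractional lag estimate such as the one reported above would require interpolating around the peak or averaging over several windows.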

Figure 5.

Actual vs. predicted daily demand patterns.

The observed error magnitude discrepancies between models (e.g., linear regression vs. neural network) stem from different data normalization strategies—linear models operated on scaled [0, 1] features, while the neural network used raw sales values. This implementation choice emphasizes the critical role of consistent preprocessing in comparative model evaluations.

Sales forecasting is a pivotal aspect of SCM, facilitating precise demand planning, inventory control, and procurement decisions. In this analysis, we implemented and compared the performance of three distinct regression models for sales forecasting: Random Forest Regressor, XGBoost Regressor, and an RNN. These models were trained using historical sales data and supply chain features extracted from the DataCo dataset, with the aim of identifying the most effective approach for generating accurate, data-driven sales forecasts to support downstream inventory optimization and policy simulations.

The models were assessed using three widely adopted regression metrics: MAE, RMSE, and the Coefficient of Determination (R²). These metrics provide insights into both the average prediction error and the overall goodness of fit. The comparative results of the three models are summarized in Table 6, with visual representations of predicted versus actual sales for the XGBoost and RNN models shown in Figure 6.

Table 6.

Sales forecasting performance comparison.

Figure 6.

Predicted vs. actual sales for XGBoost and RNN.

The XGBoost Regressor demonstrated the best overall performance, achieving the lowest RMSE (0.4680) and the highest R² (0.99999), indicating excellent fit and generalization. The Random Forest Regressor also yielded strong results, with an exceptionally low MAE (0.0246) and a high R² (0.9999), making it a competitive alternative with minimal error and high stability.

In contrast, the RNN, while still achieving a strong R² of 0.9973, exhibited substantially higher MAE (3.6877) and RMSE (6.8830) compared to the tree-based methods. These results suggest that although RNNs are inherently suited for modeling sequential patterns, their performance may degrade when applied to structured, tabular datasets with weak temporal dependencies. Furthermore, RNNs often require more extensive tuning and longer training times, which may not be justified given their inferior performance in this context.

Although Figure 6 may initially suggest a linear relationship between predicted and actual sales, this visual impression does not imply that the underlying models operate based on strict linear assumptions. The close alignment of data points along the ideal prediction line (represented by the dashed line) primarily indicates the models’ ability to effectively capture underlying patterns in the data, which may appear linear in certain segments. However, both XGBoost and RNNs are fundamentally designed to model complex, non-linear relationships. XGBoost, as an ensemble method built on decision trees, inherently captures non-linear patterns by leveraging multiple tree structures, each modeling different parts of the data. Similarly, RNNs—especially those employing LSTM or GRU architectures—are well suited for handling sequential data and are capable of learning intricate temporal dependencies and non-linear dynamics. To explore these non-linear aspects further, Figure 7 presents predicted versus actual sales values enhanced with a LOWESS smoother for both models. This smoother provides a clearer visualization of the relationship trend and highlights deviations from ideal predictions. The dashed line in the figure represents the ideal case, where the predicted values match actual sales perfectly, while the shaded region marks days 120–135, a timeframe of particular interest due to its notable impact on model performance.

Figure 7.

Predicted vs. actual sales with LOWESS smoother.

Figure 7 reveals important empirical differences in predictive performance between the models. The XGBoost predictions, represented by blue markers, are closely clustered around the ideal line across most of the sales range, reflecting strong predictive accuracy and consistency. In contrast, the RNN predictions, shown as orange markers, display noticeably wider dispersion, particularly at higher sales values, indicating challenges in modeling extreme outcomes. The LOWESS curves further emphasize these discrepancies, revealing that the RNN deviates more significantly from the ideal trend, especially in the upper sales range. This suggests potential limitations in the model’s ability to generalize in regions with greater variability. Additionally, data points within the shaded region (days 120–135) deviate from the ideal line for both models, signaling a period of reduced prediction accuracy likely influenced by external or unmodeled factors.

Figure 8 presents the residual analysis for XGBoost and RNN predictions. The residual plot shows how far the predictions deviate from actual sales. Smaller deviations indicate better model accuracy. The shaded region again emphasizes days 120–135 for focused analysis.

Figure 8.

Residuals of XGBoost and RNN predictions.

Figure 8 provides several important insights into the residual behavior of the models. The XGBoost residuals, depicted with blue markers, exhibit a narrower distribution around the zero line, suggesting more consistent and reliable prediction accuracy throughout the evaluated timeframe. In contrast, the RNN residuals, represented by orange markers, show greater variance, with more frequent deviations from the zero line—particularly in the positive direction—indicating a tendency to systematically underpredict sales values. Both models experience an increase in residual magnitude within the shaded region corresponding to days 120–135, pointing to reduced prediction accuracy during this period. This decline may be due to external market influences, promotional events, or data anomalies that were not captured by either model. Additionally, while the temporal pattern of residuals for XGBoost shows no evident autocorrelation, the RNN residuals display slight clustering of positive values at certain intervals, suggesting that the model may be sensitive to specific underlying conditions.

These findings underscore the superiority of ensemble learning techniques, particularly gradient-boosting methods, for structured sales forecasting tasks. Given its high accuracy and robustness, the XGBoost model was selected as the primary forecasting engine for the downstream inventory policy optimization phase, where its predictions informed reorder-point calculations and safety stock planning.

The results of the inventory optimization simulation, based on demand forecasts derived from ML models—RNN and XGBoost—are presented in this section, alongside a traditional naive EOQ/ROP method. The simulation aimed to evaluate the effectiveness of these optimized inventory policies in maintaining high service levels, minimizing stockouts, and reducing costs over a 365-day period. Both RNN and XGBoost models were used to forecast future demand, which was then applied to compute optimal order quantities and reorder points. These methods are designed to minimize inventory costs (i.e., ordering and holding costs) while maintaining high service levels. Figure 9 illustrates the resulting daily on-hand inventory levels across the simulation period, including the dynamic reorder points computed using the forecasted demand. The visual comparison highlights differences in inventory behavior under each model’s predictions.

Figure 9.

Daily on-hand inventory levels with reorder points.

Figure 9 depicts the predicted and actual inventory dynamics resulting from the three forecasting approaches. The actual demand, shown as a blue line, reveals considerable fluctuations in the early period (days 0–50), which pose challenges for effective inventory control. Around day 100, a sharp drop in actual demand was observed—possibly reflecting external market shifts, seasonal effects, or disruptions in supply or customer behavior. Following this, from days 150 to 200, the demand stabilizes at a lower level, suggesting the establishment of a new demand baseline.

The RNN model, represented by a red dashed line, initially tracks the actual demand closely, capturing the early-period volatility with reasonable accuracy. However, after the sharp drop in demand around day 100, the RNN fails to adapt promptly, continuing to forecast at previously higher levels. This delayed adjustment results in consistent overpredictions and inflated inventory levels. Although the model eventually aligns more closely with the new demand trend, it continues to slightly overestimate, indicating a lag in responsiveness to sudden shifts.

In contrast, XGBoost, shown as a green dashed line, demonstrates a more stable and adaptive response. While it smooths out some of the early fluctuations, it effectively captures the overall trend and reacts quickly to the demand drop at day 100. Its forecasts quickly converge with the actual demand, resulting in better-aligned inventory levels and improved inventory efficiency. Throughout the remainder of the simulation, XGBoost maintains close alignment with the actual demand trend.

Meanwhile, the naive EOQ/ROP method, illustrated by an orange dashed line, assumes a constant demand rate and does not adapt to any fluctuations. This results in a flat forecast line, which quickly diverges from the actual demand—particularly after day 100. The inability of this method to respond to demand variability leads to significant overstocking and inventory misalignment, highlighting its limitations in dynamic operating environments.

The simulation illustrates important differences in how each approach handles demand uncertainty. The RNN captures temporal dynamics but is slower to react to abrupt changes, potentially leading to inefficiencies. XGBoost outperforms in terms of adaptability and accuracy, especially in response to sudden demand shifts. The naive EOQ/ROP method, though simple and computationally inexpensive, fails to address changing demand patterns and performs poorly in volatile conditions. These findings emphasize the importance of selecting adaptive forecasting models—such as XGBoost—for inventory management in dynamic supply chains to minimize costs and maintain high service levels.

Performance was evaluated based on several key metrics: fill rate (%), stockout events, total cost (USD), cost reduction vs. naive (%), RMSE, and MAE. The results of these evaluations are summarized in Table 7.

Table 7.

Inventory performance comparison (RNN vs. XGBoost vs. naive EOQ/ROP).

The fill rate for the RNN optimized model was 80.2%, while the naive EOQ/ROP model, which assumes constant demand, could not provide a meaningful fill rate due to the lack of demand variability. The XGBoost optimized model had a fill rate of 85.4%, indicating that forecast-based inventory policies could effectively improve service levels. However, this improvement comes at the cost of greater complexity in forecasting and inventory management. In terms of stockouts, the naive EOQ/ROP model, assuming stable and predictable demand, experienced 0 stockout events, whereas the RNN optimized and XGBoost optimized models experienced 12 and 20 stockout events, respectively. These results suggest that while forecast-driven models can improve fill rates, they still face challenges in avoiding stockouts due to forecasting errors and demand fluctuations.

When considering total cost, the naive EOQ/ROP model incurred the lowest cost of USD 907,820, as it is a simple and cost-effective strategy under constant demand assumptions. The RNN optimized model generated a higher total cost of USD 2,045,780, while the XGBoost optimized model resulted in the highest cost, at USD 2,500,000. These higher costs reflect the complexities introduced by forecast-based optimization, which may lead to overstocking or understocking during certain periods. In terms of cost reduction vs. naive, the RNN optimized model achieved a 45.2% reduction in total costs compared to the naive EOQ/ROP approach, demonstrating that ML-based optimization can reduce costs relative to traditional methods. On the other hand, the XGBoost optimized model did not offer any cost savings relative to the naive model, highlighting that advanced forecasting techniques do not always translate into cost reductions, particularly when the forecasting accuracy is suboptimal.

The forecast accuracy of the models was measured using RMSE and MAE. The RNN optimized model had a lower RMSE (126.62) and MAE (110.73) compared to the XGBoost optimized model, which had an RMSE of 215.49 and MAE of 170.90. These results demonstrate that the RNN model exhibited better demand prediction accuracy, which contributed to more effective inventory optimization. Accurate forecasting is critical in inventory management, as even small errors in demand prediction can lead to significant impacts on stockouts and total costs. To further analyze the cost components, we present a detailed cost breakdown in Table 8, which compares the holding costs and stockout penalties for each model.

Table 8.

Inventory cost comparison.

The simulation results indicate that, while traditional methods such as naive EOQ/ROP offer cost advantages in scenarios with predictable demand, ML-based models such as RNN and XGBoost have the potential to improve service levels by enhancing fill rates and reducing stockouts. However, the higher costs associated with these models suggest trade-offs between forecasting accuracy and inventory costs. The RNN optimized model showed a good balance between forecasting accuracy and cost, making it a promising approach for businesses seeking to improve their inventory management in environments where demand fluctuates over time. In contrast, the XGBoost optimized model, despite its higher fill rate, did not demonstrate cost-effectiveness in this simulation, suggesting that further refinement of the forecasting model or hybrid approaches may be needed for better performance.

These findings support Hypothesis 1, confirming that ML models—particularly XGBoost—outperform traditional statistical methods like ARIMA in forecasting non-stationary, high-variance demand patterns. Additionally, the inventory simulation results align with Hypothesis 2, showing that forecast-driven continuous-review policies can enhance fill rates and service levels, albeit with trade-offs in cost and stockout risk.

4.5. Classification Performance

This section evaluates the performance of three classifiers—Random Forest (RF), XGBoost, and RNN—on two tasks: fraud detection and late delivery prediction. The performance of these models is assessed using standard classification metrics, including accuracy, recall, precision, and F1-score. The results are summarized in the tables below.

4.5.1. Fraud Detection Performance

Fraud detection is a critical task in SCM, where the objective is to identify fraudulent activities in historical transaction data. The models were evaluated on a dataset containing instances of fraud and non-fraud transactions, as shown in Table 9.

Table 9.

Fraud detection metrics.

RF demonstrated an accuracy of 97.65%, but with a very low recall (0.24%) and F1-score (0.47%). This indicates that, while RF achieved a high overall accuracy, it missed almost all fraudulent cases; when non-fraud transactions dominate the data, accuracy can remain high even if nearly every fraud case goes undetected. XGBoost outperformed RF, with an accuracy of 99.11%, a recall of 67.06%, and an F1-score of 78.03%. The relatively high recall and F1-score suggest that XGBoost was better at detecting fraudulent transactions without sacrificing too much precision. The RNN showed the best performance, achieving an accuracy of 99.59%, a recall of 98.13%, and an F1-score of 98.00%. These results highlight the RNN’s ability to effectively capture sequential patterns in the data, leading to excellent fraud detection performance.
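The gap between accuracy and recall on imbalanced data can be illustrated with a small sketch. The confusion-matrix counts below are hypothetical and are not the counts behind Table 9; they only reproduce the qualitative pattern of high accuracy with near-zero recall.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical example: 10,000 transactions, 240 of them fraudulent,
# and a classifier that flags only one fraud case (with no false alarms).
imbalanced = classification_metrics(tp=1, fp=0, fn=239, tn=9760)

for metric, value in imbalanced.items():
    print(f"{metric}: {value:.2%}")
```

In this toy case the classifier is right on more than 97% of transactions while catching fewer than 1% of fraud cases, which is why recall and F1-score are the more informative metrics for this task.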

4.5.2. Late Delivery Prediction Performance

Late delivery prediction plays a key role in supply chain optimization, where the aim is to predict which deliveries are likely to be delayed. The models were evaluated on a dataset consisting of instances with both on-time and late deliveries, as shown in Table 10.

Table 10.

Late delivery prediction metrics.

RF achieved an accuracy of 97.97% with a perfect recall (100%) but a lower precision (95.50%), for an F1-score of 98.18%. This indicates that RF identified every late delivery but produced some false positives, predicting some deliveries as late when they were actually on time. XGBoost performed slightly better than RF, with an accuracy of 98.53%, a recall of 99.96%, and an F1-score of 98.67%. The model demonstrated a strong balance between precision and recall, with a slight edge over RF in terms of accuracy and F1-score. The RNN achieved the highest accuracy in this task (98.88%), with a recall of 98.10% and a precision of 97.60%. Its F1-score of 97.85%, although slightly below those of RF and XGBoost, indicated a strong balance between precision and recall, making the RNN a reliable model for predicting late deliveries.

The evaluation results highlight the strengths of different models across the two tasks. In fraud detection, while Random Forest showed high accuracy, it was not effective in identifying fraudulent transactions due to its low recall. On the other hand, both XGBoost and the RNN significantly outperformed RF in terms of recall and F1-score, with the RNN achieving the highest performance across both metrics. For late delivery prediction, RF and XGBoost demonstrated strong performance, with perfect (100%) and near-perfect (99.96%) recall, respectively, and XGBoost slightly outperformed RF in terms of F1-score. The RNN, while slightly behind XGBoost in recall and F1-score, achieved the highest accuracy and a strong balance between recall and precision. These results suggest that deep learning models, specifically RNNs, can be highly competitive and, in some cases, outperform traditional ML methods such as Random Forest and XGBoost, particularly when sequential dependencies in data are crucial for prediction. However, the trade-offs among precision, recall, and F1-score must be considered depending on the specific requirements of the application, such as minimizing false positives or maximizing correct identifications.

These results confirm Hypothesis 3, demonstrating that ML-based classification models (especially RNNs) show significantly improved fraud detection and late delivery prediction performance compared to rule-based or classical methods. The high recall and F1-scores validate their role in enhancing operational resilience across risk-sensitive supply chain functions.

4.6. Hyperparameter Tuning and Model Fine-Tuning

To improve performance on critical classification tasks, hyperparameter tuning was performed on RNN models developed for fraud detection and late delivery prediction. A grid search approach was used to optimize hyperparameters such as the learning rate, hidden layer size, dropout rate, and batch size. The tuning strategy prioritized recall in high-risk scenarios, particularly for fraud detection, to reduce false negatives, while maintaining a balanced trade-off between precision and recall across both tasks.
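A grid search of this kind can be sketched as below. This is an illustrative outline under stated assumptions, not the study's implementation: the candidate values in `search_grid` are assumed, ranking configurations by recall first and F1-score second is one possible reading of the tuning strategy, and `toy_evaluate` is a placeholder that returns made-up scores where a real run would train and validate an RNN.

```python
from itertools import product


def grid_search(grid, evaluate):
    """Try every combination in `grid` and keep the best (recall, F1) pair.

    `evaluate` takes a config dict and returns a (recall, f1) tuple.
    Tuples compare lexicographically, so recall is prioritized and
    F1 only breaks ties (an assumed selection rule).
    """
    names = list(grid)
    best_config, best_score = None, None
    for values in product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if best_score is None or score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Assumed candidate values for the four tuned hyperparameters.
search_grid = {
    "learning_rate": [1e-3, 1e-4],
    "hidden_units": [64, 128],
    "dropout": [0.2, 0.4, 0.5],
    "batch_size": [64, 128],
}


def toy_evaluate(config):
    """Placeholder scorer with made-up numbers (no model is trained here)."""
    recall = 0.90
    recall += 0.05 if config["hidden_units"] == 128 else 0.0
    recall += 0.02 if config["dropout"] >= 0.4 else 0.0
    f1 = 0.90
    f1 += 0.01 if config["batch_size"] == 64 else 0.0
    f1 += 0.01 if config["learning_rate"] == 1e-4 else 0.0
    f1 -= 0.1 * abs(config["dropout"] - 0.4)
    return recall, f1


best_config, best_score = grid_search(search_grid, toy_evaluate)
print(best_config, best_score)
```

In practice, `toy_evaluate` would be replaced by a function that trains the network with the given configuration and returns validation recall and F1-score. The exhaustive loop grows multiplicatively with the number of candidate values, here 2 × 2 × 3 × 2 = 24 configurations.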

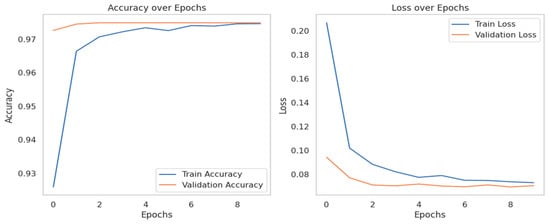

As presented in Table 11, the fine-tuned RNN for fraud detection achieved a significant improvement in recall, rising from 98.13% to 99.88%. This enhancement led to a corresponding increase in F1-score from 98.00% to 98.91%, demonstrating a robust ability to capture nearly all fraud cases. Although the overall accuracy decreased (from 99.59% to 97.90%), the model’s improved sensitivity to fraudulent instances makes it more reliable for operational deployment. Figure 10 illustrates the training and validation accuracy/loss trends for this task, and Figure 11 shows the corresponding trends for late delivery prediction; both show smooth convergence, confirming the stability of the fine-tuned models.

Table 11.

Comparative performance of original vs. fine-tuned RNN models.

Figure 10.

Training vs. validation accuracy and loss (fraud detection).

Figure 11.

Training vs. validation accuracy and loss (late delivery prediction).

Figure 10 shows the training and validation accuracy/loss trends for the fine-tuned fraud detection RNN. The accuracy plot demonstrates high and stable accuracy for both the training and validation sets, indicating the model’s strong performance in correctly classifying instances. The loss plot shows a steady decrease in both training and validation loss, suggesting effective learning and good generalization. The close alignment of the training and validation curves implies minimal overfitting, confirming the stability of the fine-tuned model.

Figure 11 displays the training and validation accuracy/loss for the late delivery prediction task. The accuracy remains consistently high, while the loss decreases and stabilizes. The validation loss follows a similar trend to the training loss, indicating that the model is learning effectively and generalizing well to unseen data. This stability in the loss curves across epochs confirms the model’s reliability.

For fraud prediction, the model’s effectiveness is further validated by the confusion matrix presented in Figure 12. It illustrates a total of 73,647 true positives alongside just 129 false negatives, underscoring the model’s strong ability to detect fraudulent transactions and minimize financial risk by significantly reducing undetected cases.

Figure 12.

Confusion Matrix for Fraud Prediction Model.

For late delivery prediction, the fine-tuned model reached 100% recall, successfully eliminating all false negatives—critical for ensuring a timely logistics response. As shown in Table 11, the accuracy slightly decreased from 98.88% to 97.49%, while the F1-score remained strong at 97.76%. The confusion matrix in Figure 13 reports 19,797 true positives and 0 false negatives, validating the model’s perfect recall.
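Recall can be cross-checked from the true-positive and false-negative counts quoted for Figures 12 and 13. The sketch below is illustrative; the function name `recall_and_miss_rate` is hypothetical, and only the counts stated in the text are used.

```python
def recall_and_miss_rate(true_positives, false_negatives):
    """Recall = TP / (TP + FN); miss rate is the share of positives not caught."""
    positives = true_positives + false_negatives
    recall = true_positives / positives
    return recall, 1.0 - recall


# Counts as quoted in the text for the fine-tuned models.
fraud_recall, fraud_miss = recall_and_miss_rate(73_647, 129)
late_recall, late_miss = recall_and_miss_rate(19_797, 0)

print(f"Fraud detection: recall = {fraud_recall:.4f}, miss rate = {fraud_miss:.4f}")
print(f"Late delivery:   recall = {late_recall:.4f}, miss rate = {late_miss:.4f}")
```

Because recall depends only on the positive class, it says nothing about false positives; precision and F1-score, as reported in Table 11, are needed to see what the higher recall costs.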

Figure 13.

Confusion matrix for late delivery prediction model.

These results confirm that carefully targeted hyperparameter tuning can effectively align model behavior with business priorities. In high-stakes classification scenarios, such as fraud detection and delivery management, tuning for recall ensures greater reliability, while the consistently strong F1-scores reflect a well-maintained balance between sensitivity and precision.

Hyperparameter tuning stabilized the training dynamics for both tasks. Figure 10 shows smoother convergence of the training/validation loss curves for the fine-tuned fraud detection RNN. Similarly, for late delivery prediction, Figure 11 demonstrates stable learning with reduced overfitting, as evidenced by the aligned training/validation loss trends. Figure 14 and Figure 15 illustrate how the hyperparameter choices influenced model performance.

Figure 14.

Fine-tuned model’s recall performance in fraud detection.

Figure 15.

Fine-tuned model’s recall performance in late delivery.

Figure 14 focuses on the recall performance of the fine-tuned fraud detection model across epochs. It shows a significant improvement in recall for both the training and validation sets, with the validation recall approaching 100%. This enhancement in recall is crucial for fraud detection, as it minimizes false negatives and ensures that nearly all fraudulent cases are identified.

Figure 15 illustrates the recall performance of the fine-tuned late delivery prediction model across epochs. The validation recall reaches 100%, indicating that the model successfully detects all late delivery cases without any false negatives. This perfect recall is vital for logistics management, enabling timely responses to potential delays.

The analysis revealed several key findings across different prediction tasks. For fraud detection, employing a higher dropout rate of 0.4–0.5 effectively reduced overfitting, while expanding the hidden layer size to 128 units significantly improved recall, enhancing the model’s ability to capture rare fraud cases. In the context of late delivery prediction, the use of smaller batch sizes (64) combined with moderate learning rates (1 × 10⁻⁴) facilitated faster convergence and achieved perfect recall, ensuring that all late deliveries were accurately detected. These results underscore the importance of targeted hyperparameter tuning in aligning model performance with critical business priorities, thereby enhancing reliability in high-stakes applications.

4.7. Comparative Study: Traditional ML vs. Deep Learning

A key contribution of this study is a comprehensive comparative analysis of traditional ML methods (e.g., XGBoost and Random Forest) and deep learning approaches (e.g., Recurrent Neural Networks and Feedforward Neural Networks) within the context of SCM. This section evaluates these models across predictive performance, interpretability, and computational complexity, incorporating the CAE and CAE-ESG evaluation frameworks to assess deployment feasibility and sustainability.

The XGBoost model demonstrated exceptional performance in structured prediction tasks such as demand forecasting, achieving an MAE of 0.1571, RMSE of 0.5333, and MAPE of 0.48%. These results surpass or match those of comparable studies, confirming the effectiveness of gradient-boosting methods on tabular supply chain data.

RNNs, particularly LSTM architectures, excelled in sequential tasks such as late delivery prediction and fraud detection, reaching F1-scores near 98% and recall rates exceeding 98%. This aligns with prior research highlighting RNNs’ superiority in capturing temporal dependencies and complex sequential patterns critical to these applications.

Interpretability remains a pivotal factor in deploying ML solutions within business environments. Tree-based models such as XGBoost and Random Forest offer greater transparency via feature importance and decision paths, supported by SHAP analysis. Although deep learning models like RNNs provide superior performance for sequence data, their inherently opaque nature requires supplementary explainability tools to elucidate their predictions—an important consideration for compliance and stakeholder trust.

Table 12 summarizes the comparative computational resource requirements of the evaluated models, highlighting differences in training time, memory usage, and scalability.

Table 12.

Comparative computational requirements and complexity of ML models.

Figure 16 visualizes the training times and memory consumption across models, illustrating that traditional methods are more resource-efficient and scalable, whereas deep learning demands significant computational resources and longer training durations.

Figure 16.

Comparative analysis of training time and memory usage.

These computational findings directly inform the computational cost and operational complexity dimensions within the CAE and CAE-ESG frameworks. XGBoost’s low training time and modest memory footprint translated into favorable normalized computational cost scores, contributing positively to its overall CAE-ESG rating. In contrast, despite high predictive accuracy, RNNs scored lower on the CAE and CAE-ESG metrics due to their intensive resource requirements.