1. Introduction

In the field of programming education, the use of visual representations of program flow has been shown to be effective in helping beginners comprehend abstract grammatical structures and logical operations [1]. One early example is the TRAKLA2 system proposed by Malmi et al. [2], which enables students to interactively manipulate data structures (such as arrays, lists, and trees) and observe algorithm behavior through a graphical interface. This initiative marked a significant step in integrating visualization into programming instruction. Building upon this, Rajala et al. [3] incorporated automated feedback and real-time testing mechanisms into TRAKLA2, allowing learners to identify and correct errors during their operations. This advancement reinforced students’ conceptual understanding of algorithms and demonstrated that combining visualization with formative assessment can greatly enhance learning outcomes.

More recently, visualization technologies have advanced further. For instance, Dr. Scratch not only provides a graphical interface but also performs behavioral analysis and assesses computational thinking skills based on students’ learning processes. This development represents a shift from simple “instructional assistance” to “diagnostic feedback” [4]. In another study, Paredes-Velasco et al. [5] introduced the TutoApp platform, where students design teaching modules for their peers. This “learning-by-teaching” approach was found to improve comprehension of conditional logic and control structures, although the researchers noted that the absence of scaffolded guidance could impose additional cognitive load on learners.

Additionally, Venigalla and Chimalakonda [6] developed FlowARP, an augmented reality visualization tool designed to demonstrate program flow. A user study involving 44 undergraduate students revealed that users solved code snippet-based problems more quickly when using FlowARP. In a similar vein, Hu et al. [7] integrated a visual debugger within an IDE to allow students to observe variable changes and control flow in real time, thereby enhancing their early-stage debugging and testing abilities.

In summary, the evolution of visual programming instruction has progressed from image-assisted learning to more interactive environments featuring automatic feedback and diagnostic support. This trend continues to move toward more strategic and context-sensitive applications. In terms of learning outcomes, Visual Programming Instruction (VPI), especially when integrating flowchart tools with robotics, has been shown to significantly enhance students’ programming performance and fluency. These benefits are observed regardless of learners’ prior programming experience and are closely linked to increased motivation to pursue further learning [8, 9].

At the level of conceptual understanding, visualization tools such as augmented reality and animated control flow diagrams help learners grasp abstract concepts like variables, conditionals, and recursion more effectively. They also reduce problem-solving time and enhance mastery of algorithmic logic [6, 7]. Moreover, interactive and visually oriented learning environments (e.g., robot programming and AR-based platforms) have been found to increase student engagement and motivation, making programming more accessible and appealing [6, 8, 9].

The aforementioned studies primarily focus on introductory programming, especially the visual representation of programming syntax [10]. However, once students move beyond the beginner stage and face more advanced courses or assignments, their challenges shift from syntax comprehension to solving complex programming problems. Even with foundational knowledge in syntax, data structures, and algorithms, students may still struggle with advanced problem solving. This indicates that, in programming education, the core challenge lies not merely in understanding syntax but in applying learned concepts to practical real-world problems. The failure to cultivate such essential skills may hinder students’ problem-solving capabilities and, by extension, impede progress toward the Sustainable Development Goals (SDGs) [11].

SDG 4, “Quality Education,” specifically aims to promote educational equity and inclusion, while enhancing learners’ skills and literacy [12]. Programming, an essential problem-solving tool, is not only a fundamental technical skill but also a catalyst for achieving other SDGs, such as those concerning smart cities, sustainable energy, and reduced inequality. Without effective programming education, students may struggle to meet the demands of a digital and sustainability-driven future, weakening society’s overall problem-solving and innovation capacities, and thereby hindering SDG progress [13].

To advance SDG 4, a practical educational framework that fosters comprehensive competencies is essential. The CDIO (Conceive, Design, Implement, Operate) framework offers an ideal structure to meet this need. CDIO emphasizes a student-centered approach that spans the full cycle from problem conception to system operation [14]. This practice-oriented model fosters systematic thinking, critical analysis, and integrated problem solving, which are precisely the competencies targeted by SDG 4 [15]. Furthermore, CDIO promotes interdisciplinary knowledge application and skill development, equipping students with the agility to tackle real-world challenges and aligning education more closely with the principles of sustainable development [16, 17].

Additionally, CDIO aligns naturally with programming education, as programming inherently follows a structured process: defining and decomposing problems (Conceive), designing solutions (Design), implementing logic (Implement), and validating and refining functions (Operate). These stages mirror the four core phases of CDIO [18]. Programming also demands multilayered thinking, including abstraction, modularity, and logical reasoning, all of which are systematically cultivated through CDIO’s pedagogical model [19, 20]. Moreover, CDIO supports project-based learning, a common practice in programming instruction. By encouraging students to design and develop real-world projects, CDIO not only supports the needs of programming education but also enhances students’ practical abilities, preparing them to meet the diverse challenges of their future careers [21, 22].

In light of these considerations, this study adopts the CDIO framework as the core instructional model to promote innovative teaching in programming language courses. It integrates an automatic architecture diagram generation system as the primary tool and incorporates project-oriented learning to holistically enhance students’ programming proficiency and practical application capabilities. The application of the CDIO framework in this study is described as follows:

Conceive (C): In the “Conceive” phase, students are guided toward problem identification and the development of corresponding solutions. At the beginning of the course, students are introduced to the fundamental concepts, logical structures, and application scenarios of programming languages. They are encouraged to recognize real-world development challenges, such as complex program logic and the cognitive load associated with learning syntax. With the aid of the automatic architecture diagram generation system, students can intuitively comprehend the logical relationships within programs and begin to consider how to apply programming knowledge to address practical problems.

Design (D): The “Design” phase emphasizes students’ ability to plan and structure their projects. In this study’s experimental course, students are tasked with implementing an interpreter project. They are required to design the architecture of the project—from lexical analysis to syntax parsing—by gradually defining the functional modules of the interpreter. The automatic architecture diagram generation system serves as a visual aid, helping students better understand program structure and operational flow. During this phase, students also learn how to design efficient data structures while balancing system performance and maintainability.

Implement (I): After completing the design phase, students proceed to the “Implement” phase, where they transform their design into executable code. The implementation process is supported by an automatic grading system that provides real-time feedback, enabling students to identify execution errors and make necessary adjustments and optimizations.

Operate (O): The “Operate” phase focuses on the presentation and evaluation of the project’s application value. Upon completion of the interpreter project, students demonstrate the functionality of their implementations and critically assess their effectiveness and applicability. In-class higher-order activities, such as peer feedback and improvement proposals, further support students in reflecting on the design and development process, thereby enhancing their capacity for continuous improvement.

The primary aim of this study is to develop a system that automatically generates architecture diagrams from source code, helping students understand the structure and logic of programming solutions more quickly and accurately. By introducing a tool that visualizes program code, this study aims to alleviate learning difficulties commonly encountered in programming courses. In addition to enhancing students’ learning outcomes and project completion rates, the system is also expected to serve as a valuable instructional aid for educators. The specific research objective is as follows:

Evaluate the impact of the system on students’ learning, comprehension, and completion of the interpreter project, particularly in terms of reducing the difficulty and time associated with manually drawing architecture diagrams.

Manually generating architecture diagrams is often a challenging and time-consuming task for students, which not only increases their cognitive load but may also negatively affect their learning outcomes. The automatic diagram generation system developed in this study aims to alleviate this burden, enabling students to concentrate more on solving programming problems. To assess the impact of this tool, student feedback will be collected through a post-implementation questionnaire, allowing for a systematic evaluation of the system’s effectiveness in improving learning outcomes and project performance.

In the context of higher education programming curricula, this study specifically targets a third-year required course titled Programming Languages. This course is situated after foundational courses in C/C++ and data structures, and focuses on deeper concepts such as language semantics, interpreter development, and syntax analysis. Given the complexity of tasks involved, including implementing a Scheme-based interpreter, the course naturally aligns with the CDIO model’s emphasis on design, implementation, and problem solving. This pedagogical context differs from introductory programming education and better illustrates how CDIO can support higher level cognitive and practical learning in programming.

3. Methods

3.1. Experimental Course

The experimental course in this study is “Programming Languages”, a compulsory course for third-year students in the Department of Information Engineering. The course aims to provide students with a deeper understanding of the fundamental concepts underlying programming languages, covering topics ranging from language design principles to practical issues encountered in language implementation.

The course begins by introducing the core elements of programming languages, including syntax, semantics, and execution mechanisms, which lay the foundation for comprehending more advanced features. Subsequently, students are introduced to the design philosophies of various languages, equipping them with the ability to evaluate and compare the advantages, disadvantages, and application contexts of different programming paradigms. In addition, the course explores key technical and conceptual challenges in programming language design, such as language expressiveness, compiler construction, and execution efficiency. Through this, students are trained to identify and resolve issues that arise in real-world programming language development.

The primary assignment in this course is to implement an interpreter for the Scheme language. This implementation requires students to address both syntactic and semantic analysis (hereinafter collectively referred to as “grammar analysis”). The interpreter must be capable of executing Scheme expressions only if the grammar analysis is successful; otherwise, it should return appropriate error-handling messages.

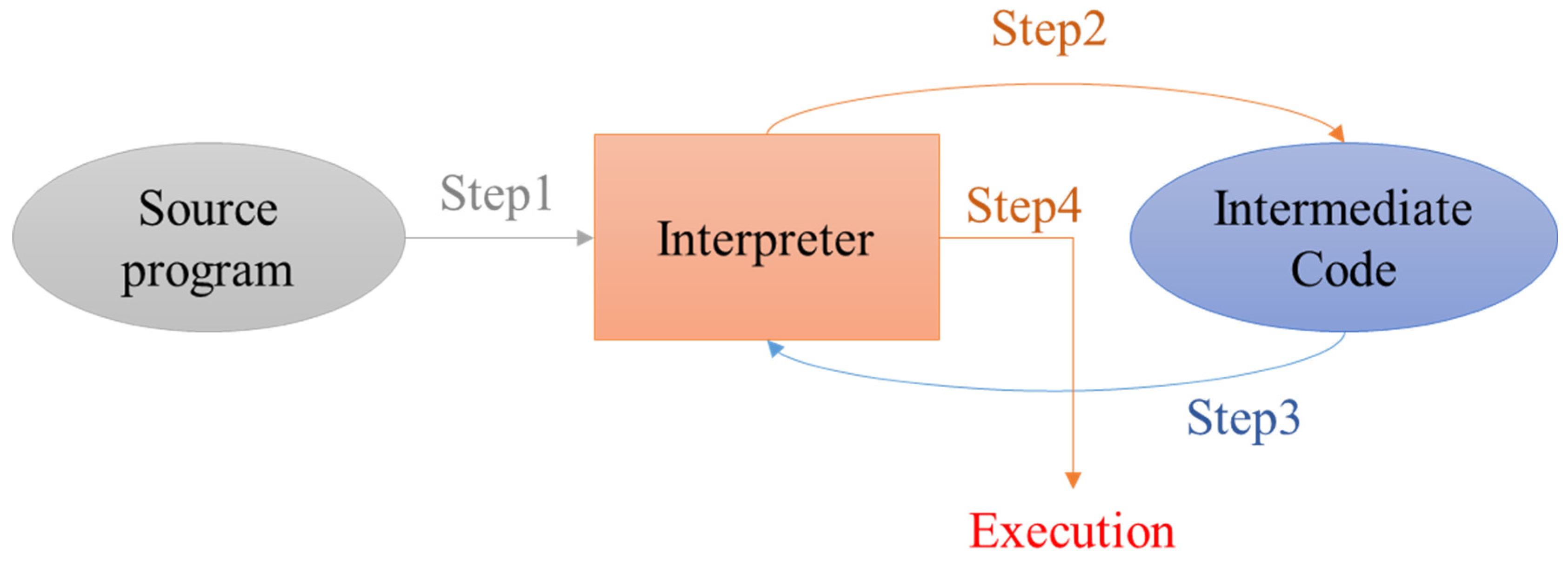

Figure 1 illustrates the interpreter’s execution flow. First, the original Scheme program is passed to the interpreter (Step 1). The interpreter then parses and translates each line into intermediate code (Step 2), which is fed back into the interpreter (Step 3) for execution using corresponding functions (Step 4). This process repeats from Step 2 to Step 4 until the program is fully executed.
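The loop in Figure 1 can be sketched in a few lines of Python. This is only an illustrative sketch, not the course's reference implementation: the function names (`tokenize`, `parse`, `evaluate`, `run`) and the three-operator `BUILTINS` table are hypothetical, nested Python lists stand in for the intermediate code, and the loop advances one expression at a time rather than one line at a time.

```python
import operator

# Hypothetical table of built-in functions; Step 4 dispatches into it.
BUILTINS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def tokenize(source):
    """Split source text into parentheses and atoms."""
    return source.replace("(", " ( ").replace(")", " ) ").split()

def parse(tokens):
    """Step 2: consume the tokens of one expression and return a nested list."""
    token = tokens.pop(0)
    if token == "(":
        expr = []
        while tokens[0] != ")":
            expr.append(parse(tokens))
        tokens.pop(0)  # discard the closing parenthesis
        return expr
    try:
        return int(token)   # numeric atom
    except ValueError:
        return token        # symbol atom

def evaluate(expr):
    """Steps 3-4: execute the intermediate form with the matching function."""
    if isinstance(expr, int):
        return expr
    if isinstance(expr, str):
        raise NameError(f"unbound symbol: {expr}")
    func = BUILTINS[expr[0]]
    args = [evaluate(arg) for arg in expr[1:]]
    result = args[0]
    for arg in args[1:]:
        result = func(result, arg)
    return result

def run(program):
    """Step 1: accept the program, then repeat Steps 2-4 until no tokens remain."""
    tokens = tokenize(program)
    results = []
    while tokens:
        results.append(evaluate(parse(tokens)))
    return results

print(run("(+ 1 2) (* 2 (- 5 3))"))  # [3, 4]
```

The sketch omits error handling for malformed input; a real interpreter would report a grammar error instead of raising a Python exception.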

To implement such an interpreter, students must first understand the fundamental characteristics of the target language. In this course, the target is Scheme, a functional programming language that is significantly different from imperative languages such as C/C++, Java, and Python. Scheme is a dialect of Lisp, a language family originally developed for artificial intelligence research, and is based on a minimalist syntax centered on atoms and lists. Unlike imperative languages that rely on explicit data types and variable declarations (e.g., int a; in C), Scheme treats the atom, which can be either a symbol or a number, as its smallest unit.

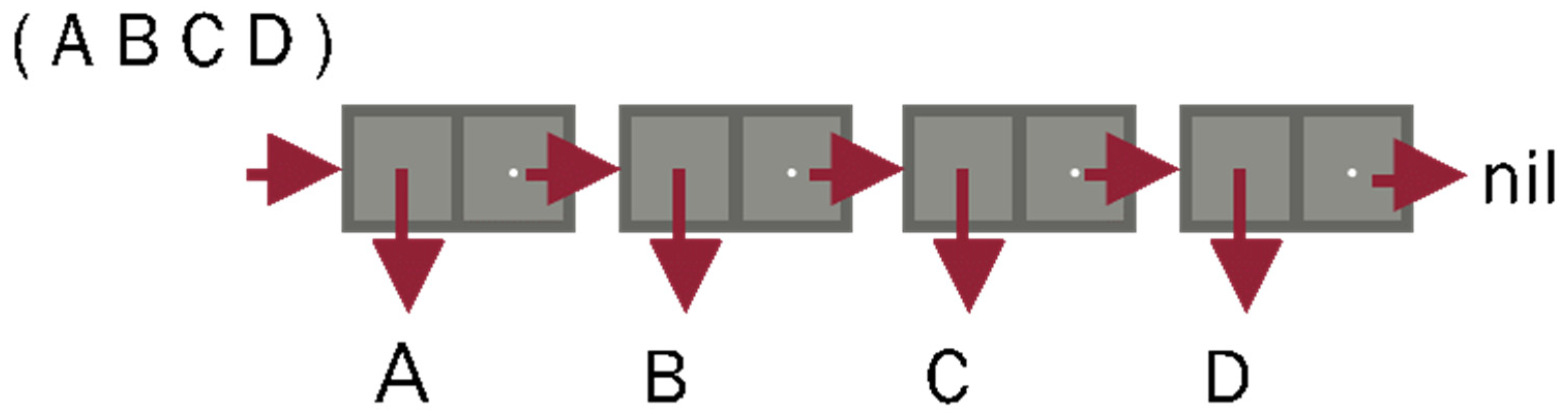

Another aspect that challenges students is Scheme’s list-based expression format. Lists in Scheme are enclosed in parentheses and represent a series of elements, such as (A B C D). If visualized as a linked list data structure, each list element corresponds to a node, where each node contains two pointers: one pointing to the content (e.g., A), and the other pointing to the next node. As shown in Figure 2, the first arrow represents the starting reference of the list, which points to the first node. Each subsequent node follows the same structure, linking together to form the entire list.

This visual interpretation of lists not only helps students understand how Scheme internally represents data but also bridges the gap between abstract functional constructs and familiar data structures like linked lists, which they have previously encountered in imperative programming.
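The two-pointer node described above can be written out directly. The following is a minimal sketch; the names `Node`, `build_list`, and `to_text` are illustrative and do not come from the course materials.

```python
class Node:
    """One list node: `content` points to the element, `next` to the following node."""
    def __init__(self, content, next=None):
        self.content = content
        self.next = next

def build_list(elements):
    """Build the chain back to front and return the reference to the first node."""
    head = None
    for element in reversed(elements):
        head = Node(element, head)
    return head

def to_text(head):
    """Walk the chain and print it in parenthesized list notation."""
    parts = []
    node = head
    while node is not None:
        parts.append(str(node.content))
        node = node.next
    return "(" + " ".join(parts) + ")"

head = build_list(["A", "B", "C", "D"])
print(to_text(head))  # (A B C D)
```

Here `head` plays the role of the first arrow in Figure 2, and the last node's `next` pointer is empty, marking the end of the list.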

The architecture diagram helps to make program implementation more comprehensible. Therefore, instructors often use such architectural descriptions during lectures to explain program execution. As shown in Figure 3, the Scheme language provides the built-in function list to create lists in the format of (arg1 ... argn). For example, when the interpreter receives the input (list 3 '(4 5) 6 '(7 8)), it should output (3 (4 5) 6 (7 8)). If visualized graphically, the structure of the output can be seen in Figure 4. In this example, since list treats each argument as an element of a new list, the number 3 becomes the first element, the list (4 5) becomes the second element, 6 the third, and (7 8) the fourth.
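The behavior of list in this example can be mimicked in Python. This is a sketch under the assumption that quoted Scheme lists are modeled as Python lists; `scheme_list` and `show` are hypothetical helper names.

```python
def scheme_list(*args):
    """Mimic the built-in `list`: each argument becomes one element of a new list."""
    return list(args)

def show(value):
    """Render a nested Python list in parenthesized Scheme notation."""
    if isinstance(value, list):
        return "(" + " ".join(show(item) for item in value) + ")"
    return str(value)

# Corresponds to (list 3 '(4 5) 6 '(7 8)); the quoted lists are passed as Python lists.
result = scheme_list(3, [4, 5], 6, [7, 8])
print(show(result))  # (3 (4 5) 6 (7 8))
print(len(result))   # 4
```

The nested lists are kept as single elements rather than being flattened, which is why the result has four elements, not six.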

From the examples in Figures 2 through 4, it is evident that Scheme differs significantly from commonly used imperative programming languages in terms of syntax and expression. As a result, when students practice, they often spend a considerable amount of time just trying to understand the correct structure of the expected output for a given input. Even though instructors and teaching assistants provide a variety of examples in class, students still frequently require assistance in drawing architecture diagrams to comprehend the structure of various Scheme expressions.

To prevent students from simply tailoring their programs to pass fixed test cases—which would compromise the generality and reusability of their implementations—instructors often vary the problem requirements. However, this also causes students to feel confused or overwhelmed when attempting to create architecture diagrams before coding. This uncertainty can ultimately affect their implementation and timely submission of assignments.

To address this issue, the present study aims to develop a system that automatically generates architecture diagrams, helping students visualize abstract program structures more efficiently. This system not only alleviates the burden of manual diagram drawing but also enhances students’ understanding of program behavior and improves the practical completion rate of programming assignments.

3.2. Experimental Subjects

The participants in this study were students enrolled in the “Programming Languages” course, a required class focusing on the principles of programming language design and implementation, with a particular emphasis on the development and application of interpreters. In the spring semester of the 2025 academic year, a total of 118 students enrolled in this course and served as the experimental group. To evaluate the impact of the automatic architecture diagram generation system on students’ learning outcomes, this study compared the project completion rate of the experimental group with that of students who took the same course during the 2024 academic year (control group, n = 101), who did not use the system.

To minimize potential cohort bias, both the control group (2024) and experimental group (2025) enrolled in the same course during the post-pandemic period, when higher education in Taiwan had returned to stable in-person instruction. Therefore, no pandemic-related disruptions were present during data collection. Additionally, this course is offered in the third year, and all students across both cohorts had completed the same required prerequisite programming and computer science courses, ensuring equivalent learning histories. To further verify equivalence in programming competence, we compared students’ grades in a core prerequisite course (Data Structures) from the previous semester and found no statistically significant difference between the two groups. These findings support the assumption that the control and experimental groups were comparable in baseline programming proficiency.

All participants were between the ages of 20 and 21 and possessed basic programming knowledge. Prior to the experiment, the research team explained this study’s purpose and procedures to all participants and obtained written informed consent in accordance with research ethics standards. Both groups were taught by the same instructor, covered identical course content, and completed the same project assignment. The only difference was that the experimental group received support from the automatic architecture diagram system.

To verify whether the sample size had adequate statistical power, this study conducted a post hoc power analysis using G*Power 3.1. Based on the mean and standard deviation of the project scores for the two groups (control: M = 11.36, SD = 9.02; experimental: M = 15.76, SD = 19.96), the Cohen’s d effect size was calculated to be approximately 0.29, indicating a small to medium effect. Using a significance level of α = 0.05 and a two-tailed test, the statistical power was found to be 0.86, exceeding the commonly accepted threshold of 0.80. This result suggests that the sample size was sufficient to detect a meaningful difference between the groups.
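The effect size can be recomputed from the reported summary statistics. The sketch below assumes the sample-size-weighted pooled standard deviation and assumes that all enrolled students (n = 101 and n = 118) contributed project scores; the text does not state which pooling convention was used. The function name `cohens_d` is illustrative.

```python
import math

def cohens_d(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Cohen's d with a sample-size-weighted pooled standard deviation."""
    pooled_var = ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / (n_a + n_b - 2)
    return (mean_b - mean_a) / math.sqrt(pooled_var)

# Control: M = 11.36, SD = 9.02, n = 101; experimental: M = 15.76, SD = 19.96, n = 118.
d = cohens_d(11.36, 9.02, 101, 15.76, 19.96, 118)
print(round(d, 2))  # 0.28
```

Under these assumptions the result is about 0.28, in the neighborhood of the reported value of approximately 0.29; the small gap may come from a different pooling convention or from rounding.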

3.3. Experimental Procedure

The experimental procedure of this study was divided into the following stages (see the research procedure in Figure 5) to effectively evaluate the influence of the automatic architecture diagram system on student learning outcomes and instructional workload:

This study used the Programming Languages course as the main experimental setting. The experimental group consisted of students currently enrolled in the course, while the control group was composed of students who completed the course in the previous academic year. Students in the experimental group used the automatic architecture diagram system throughout the semester, while those in the control group learned through traditional instruction methods, such as slide presentations and blackboard explanations. Both groups received the same teaching content and were required to complete the same interpreter implementation project.

2. Data Collection Phase

During the semester, multiple sources of data were collected to analyze the system’s effectiveness. At the beginning of the term, students in the experimental group completed a pre-test questionnaire assessing learning motivation and cognitive load. The system was then used throughout the 17-week semester. Afterward, students completed a post-test questionnaire to assess changes in their learning experience. Additionally, this study recorded the number of interpreter project tasks completed and project grades, which served as quantitative indicators of project completion.

3. Data Analysis Phase

After the data collection, statistical analyses were conducted to compare the project performance of the experimental and control groups. In addition, paired-sample analyses were used to examine changes in learning motivation and cognitive load within the experimental group. These analyses aimed to determine whether the use of the automatic architecture diagram system significantly affected students’ learning engagement and perceived cognitive effort.
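For the within-group comparison, one common choice is a paired-sample t statistic computed on each student's pre-test and post-test scores. The sketch below assumes that test; the text only says "paired-sample analyses". The scores are hypothetical placeholders, not study data, and `paired_t` is an illustrative name.

```python
import math
from statistics import mean, stdev

def paired_t(pre, post):
    """t statistic for paired samples: mean difference over its standard error."""
    diffs = [after - before for before, after in zip(pre, post)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))

# Hypothetical six-point Likert averages for four students (not study data).
pre_scores = [3, 4, 5, 4]
post_scores = [4, 5, 7, 5]
print(paired_t(pre_scores, post_scores))
```

The statistic is then compared against a t distribution with n - 1 degrees of freedom to obtain a p-value.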

3.4. Experimental Tools

3.4.1. Automatic Architecture Diagram System

To support students in the implementation of the interpreter project, the course provided a structured model illustrating the detailed processes of instruction reading, parsing, execution, and output. Due to the syntactic structure of the Scheme language, a Skewed Binary Tree (SBT) was adopted as the core data structure for command representation. However, students often found it difficult to translate these structures into actual program code using only textual explanations. To address this challenge, this study developed a visualization system based on a front-end/back-end architecture, referred to as the SBT visualization system.

Figure 6 shows that students interact with the system via a web browser by entering Scheme expressions into an input field. Upon submission, the API server handles the request and invokes the Scheme parser through a subprocess. The parser decomposes the input command and returns a structural description as a string. The server then converts this output into JSON format, which is passed to the front-end for rendering using D3.js to generate a real-time visualization of the SBT.
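The conversion step on the server can be illustrated as follows. This is a sketch under several assumptions: the parser output is taken to be already available as a nested Python list (the actual string format returned by the parser is not described), and the `name`/`children` keys and the `node`/`nil` labels are illustrative choices. The `children` key matches the default accessor of D3's `d3.hierarchy`.

```python
import json

def to_tree(expr):
    """Turn a nested Python list into a right-skewed binary tree of dictionaries."""
    if not isinstance(expr, list):
        return {"name": str(expr)}   # atom: leaf node
    if not expr:
        return {"name": "nil"}       # end of a list
    return {
        "name": "node",
        # left child: the first element; right child: the rest of the list
        "children": [to_tree(expr[0]), to_tree(expr[1:])],
    }

# Serialized payload that a front-end could pass to a tree layout.
payload = json.dumps(to_tree(["A", "B", "C", "D"]))
print(payload)
```

Each list element hangs off the left side of a node while the remainder of the list continues down the right side, which is what makes the tree skewed.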

For instance, the input (A B C D) would be visualized as shown in Figure 7. The figure illustrates how students can visualize nested Scheme expressions, such as recursive list applications, with the system automatically generating a node-based tree structure. This visual output allows students to verify whether their expression structures align with the expected recursion logic.

To ensure system scalability, the API server and Scheme parser are developed as independent components. This modular design allows for flexible parser implementation in other languages, thus enabling future support for non-Scheme programming languages in the course. The actual user interface is shown in Figure 8. Students can input any S-expression in the designated input area. For example, entering (list 3 '(4 5) 6 '(7 8)) and clicking the analyze button will immediately display the corresponding SBT structure. This system significantly reduces the instructional burden and improves students’ learning efficiency by enabling instant in-class visualizations and serving as an exploratory tool during project work. This tool is evaluated with the key objectives of assessing its impact on students’ learning, comprehension, and completion of the interpreter project, particularly by reducing the difficulty and time required for manual architecture diagram construction.

In addition, the system incorporates an input validation mechanism that checks the structural integrity of the submitted S-expressions before rendering. This feature ensures that users are notified when syntactic errors—such as unmatched parentheses or malformed list structures—are detected in their Scheme code. Instead of proceeding with incorrect visualization or execution, the system halts the rendering process and provides a real-time error message indicating the nature of the problem. This not only prevents confusion caused by erroneous outputs but also reinforces the importance of syntactic precision, thereby serving as a formative feedback tool to support students’ debugging and code-refinement skills.
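A parenthesis check of the kind described can be sketched as below. This is not the system's actual validator; `check_parentheses` and its message wording are hypothetical, and the sketch ignores cases such as parentheses inside string literals.

```python
def check_parentheses(source):
    """Return None when parentheses balance, otherwise a short error message."""
    depth = 0
    for position, char in enumerate(source):
        if char == "(":
            depth += 1
        elif char == ")":
            depth -= 1
            if depth < 0:
                # A closing parenthesis appeared before its opening partner.
                return f"unexpected ')' at position {position}"
    if depth > 0:
        return f"{depth} unclosed '('"
    return None

print(check_parentheses("(list 3 '(4 5) 6 '(7 8))"))  # None
print(check_parentheses("(A (B C)"))                  # 1 unclosed '('
```

Running the check before rendering lets the interface show the message instead of a misleading diagram.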

From a system architecture perspective, the current implementation utilizes a Scheme expression parser that translates student-written code into S-expressions represented in JSON format, which are then rendered via a custom canvas using D3.js. This architecture allows real-time updates and tree-structured visualization of nested list expressions. Performance testing showed that the system can render structures up to 10 nested levels with acceptable latency (<500 ms), beyond which layout clarity and user interaction deteriorate.
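A front-end or server could guard the reported rendering limit with a simple depth check. The sketch assumes that a "nested level" means one level of parenthesized list nesting, which the text does not define precisely; `MAX_DEPTH`, `nesting_depth`, and `can_render` are illustrative names.

```python
MAX_DEPTH = 10  # nesting limit taken from the performance test described above

def nesting_depth(expr):
    """Depth of list nesting: 0 for an atom, 1 for a flat list, and so on."""
    if not isinstance(expr, list):
        return 0
    return 1 + max((nesting_depth(item) for item in expr), default=0)

def can_render(expr):
    """True when the expression stays within the tested nesting limit."""
    return nesting_depth(expr) <= MAX_DEPTH

print(nesting_depth([3, [4, 5], 6, [7, 8]]))  # 2
print(can_render([3, [4, 5], 6, [7, 8]]))     # True
```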

It is important to clarify that the term “architecture diagram” in this context does not align with the conventional notion of software architecture diagrams in software engineering (e.g., UML class diagrams, call graphs, or module interaction maps). Rather, the system serves as a syntax-level structural visualization tool, aimed specifically at helping students mentally construct and analyze the internal logic of their programs. The terminology reflects the pedagogical intention of enabling learners to visualize and decompose abstract code structures in a manner that reduces cognitive load and fosters accurate mental models of recursion and list processing.

In the context of this study, the visualizations are primarily used during interpreter design and debugging stages, helping students to clarify how lists are composed, how expressions are evaluated, and how function applications are logically structured. While the current system does not yet support higher level representations such as control flow diagrams or module interaction views, it has proven effective in scaffolding students’ understanding of the data and logic structures specific to functional languages in this study. Future development aims to extend the system to support imperative constructs and broader program architecture visualizations, such as those applicable to C or Java.

It should be noted that Figure 4 illustrates the type of diagrams originally created by instructors using PowerPoint’s built-in shapes and connectors during classroom teaching. As such, the diagrams were relatively rudimentary and time-consuming to prepare, especially when dealing with deeply nested Scheme expressions. The automatic architecture diagram system developed in this study was specifically designed to alleviate this burden. By automating the visualization process, the system not only increases instructional efficiency but also ensures consistency in diagrammatic representation. Furthermore, the system supports zoom functionality, enabling users to scale and inspect complex diagrams in detail, thereby enhancing readability and interaction for both students and teachers.

3.4.2. Learning Motivation and Cognitive Load Questionnaire

To assess students’ learning experiences, this study adopted and adapted the motivation and cognitive load scales developed by Hwang et al. [31]. The learning motivation questionnaire was based on the ARCS model and included seven items measured on a six-point Likert scale. The items were revised to better reflect the context of this study. The cognitive load questionnaire was also adapted from the study by Hwang, Yang, and Wang [31] and contained eight items, likewise measured on a six-point Likert scale. Both questionnaires were reviewed by subject experts to ensure content validity, and reliability analysis was conducted using pre-test data. The Cronbach’s alpha values for the learning motivation and cognitive load scales were 0.939 and 0.904, respectively. Both values exceed the commonly accepted threshold of 0.70, indicating high internal consistency reliability.
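Cronbach's alpha is computed from the item variances and the variance of the total score: alpha = k / (k - 1) × (1 - Σ item variances / variance of totals), where k is the number of items. The sketch below applies this standard formula to hypothetical responses (not study data); `cronbach_alpha` is an illustrative name.

```python
from statistics import variance

def cronbach_alpha(responses):
    """responses: one list of item scores per respondent (all the same length)."""
    k = len(responses[0])
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical answers of four respondents to three six-point Likert items.
sample = [[4, 5, 4], [2, 3, 3], [5, 5, 6], [1, 2, 1]]
print(round(cronbach_alpha(sample), 3))
```

Values closer to 1 indicate that the items vary together, which is the sense in which the scale is internally consistent.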

3.4.3. Project Test System

Upon completing the Scheme interpreter project, students were required to submit their work to a designated platform. The project score was determined based on the number of test cases successfully passed, with a maximum score of 70 points. This automated evaluation mechanism ensured a consistent and objective measure of students’ project implementation performance.

3.5. Educational Context

The proposed instructional model was implemented in a third-year undergraduate course, Programming Languages, offered in the Department of Computer Science. This course is positioned after introductory programming (e.g., C/C++, Java, Python) and data structures, and introduces students to the theoretical and practical foundations of language design. Topics include lexical and syntax analysis, abstract syntax tree construction, interpreter implementation, and modular evaluation strategies. The major project required students to design and implement a simplified Scheme interpreter, which involves multi-stage system thinking, functional decomposition, and structural debugging—all of which align with CDIO principles.

While this study focused on an intermediate-to-advanced programming course, the visual architecture diagram system and pedagogical model proposed have the potential to be adapted to introductory-level courses with simplified features. This will be considered in future studies.

6. Conclusions, Limitations, and Future Research

6.1. Conclusions

While this study was conceptually grounded in the CDIO framework and aligned with the SDG 4 objective of promoting quality education, the findings also present several actionable insights for programming instruction in higher education. First, the integration of real-time structural visualization tools can significantly reduce students’ confusion when learning recursive or nested expression logic—an area traditionally difficult for novices. Second, embedding a staged interpreter project encourages iterative refinement and promotes deeper conceptual learning through practical engagement. Finally, the alignment of formative assessment checkpoints with visual feedback accelerates debugging skills and supports autonomous learning. These strategies are transferable across functional and imperative language courses and may serve as references for instructors aiming to enhance engagement and conceptual clarity in intermediate-level programming courses.

Compared with previous studies, most of which apply visual aids or animation tools primarily at the introductory grammar level to support learning of basic programming concepts such as variables, loops, or pointers, this study extends visualization tools into advanced project development, focusing on interpreter implementation in the Scheme language. This approach integrates two key aspects: “architectural comprehension” and “modular logic planning”, helping students synthesize knowledge and apply skills to context-specific challenges. Notably, to our knowledge, this study is the first to apply an automatic diagram generation system within a real-world course that aligns Education for Sustainable Development (ESD) and CDIO principles, validating its pedagogical impact through empirical data.

The main contributions of this study lie not only in the system design and its measurable outcomes, but also in the construction of an innovative sustainability-oriented teaching model suitable for higher education. This model emphasizes ability cultivation, practical application, structural understanding, and problem solving. If motivational design and feedback mechanisms are integrated in future iterations, the proposed framework has the potential to evolve into a scalable programming pedagogy aligned with SDG 4, offering a powerful bridge between educational innovation and sustainable development.

Although this study focused on Scheme—a functional and declarative programming language—as the target for interpreter implementation, the core instructional approach is not language-dependent. The visual architecture diagram system emphasizes structural thinking, function nesting, and evaluation logic, which are equally applicable to imperative languages such as C or Java, especially when teaching control flow, recursion, and data structure operations.
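To make the notion of function nesting concrete, the following minimal Python sketch parses a Scheme-style expression into a nested list and prints an indented outline of its structure. It is an illustration only and not the diagram system used in this study; the function names (`tokenize`, `parse`, `outline`, `max_depth`) and the `(call)` label are hypothetical choices made for this example.

```python
def tokenize(source):
    """Split a Scheme-style expression into parentheses and atoms."""
    return source.replace("(", " ( ").replace(")", " ) ").split()


def parse(tokens):
    """Parse one expression from the front of the token list (consumed in place)."""
    if not tokens:
        raise SyntaxError("unexpected end of input")
    token = tokens.pop(0)
    if token == "(":
        node = []
        while tokens and tokens[0] != ")":
            node.append(parse(tokens))
        if not tokens:
            raise SyntaxError("missing ')'")
        tokens.pop(0)  # discard the closing parenthesis
        return node
    if token == ")":
        raise SyntaxError("unexpected ')'")
    return token  # an atom: symbol or number literal, kept as text


def outline(node, depth=0):
    """Return indented lines that expose the nesting of the expression."""
    indent = "  " * depth
    if isinstance(node, list):
        lines = [indent + "(call)"]
        for child in node:
            lines.extend(outline(child, depth + 1))
        return lines
    return [indent + node]


def max_depth(node):
    """Nesting depth: 0 for an atom, 1 for a flat list, and so on."""
    if isinstance(node, list):
        return 1 + max((max_depth(child) for child in node), default=0)
    return 0


tree = parse(tokenize("(+ 1 (* 2 3))"))
print("\n".join(outline(tree)))
print("nesting depth:", max_depth(tree))
```

The same outline and depth functions operate on any nested-list tree, so a front end for another language would only need to produce a comparable tree.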

We acknowledge that learning Scheme while simultaneously implementing an interpreter constitutes a dual cognitive challenge. However, this deliberate blending is pedagogically motivated for the following reason: students are required to internalize the syntax and semantics of a new language while also applying that understanding in a structured design process. This dual task, guided by visual feedback and staged CDIO activities, promotes deeper engagement and reflection.

Although this study adopts the CDIO framework and integrates visual tools, an approach that shares conceptual parallels with prior research (CDIO-ML) [56], the educational focus and technical implementation differ substantially. CDIO-ML emphasizes competition-driven activities and multi-disciplinary data fusion within the context of machine learning. In contrast, the current study targets the conceptual and practical difficulties encountered in programming language courses, specifically interpreter implementation in a functional language (Scheme). This curricular context demands high-level abstraction, including recursive structure processing and symbolic expression parsing, which distinguishes it from general programming or data science courses.

Although the average project performance increase (6.91 points) may appear modest when compared with skill enhancements reported in CDIO-ML (e.g., 15%), it is important to note that the project in this study involves significantly higher cognitive load and complexity. The improvements occurred in the context of a single-semester implementation with no competition-based incentives, underscoring the system’s value in scaffolding advanced programming tasks through visualization and formative assessment. This supports the CDIO framework’s goal of promoting sustainable and reflective learning in technically challenging contexts.

Moreover, although this study was conducted in a more advanced programming course, the core idea of integrating CDIO principles and visual programming tools offers promising potential for earlier-stage programming education. In future work, we plan to adapt the system for simplified syntax structures to support novice learners and explore its use in foundational courses such as Introduction to Programming and Data Structures.

Although the primary focus of this study was on enhancing the quality of programming education through structured visual scaffolding and CDIO-aligned project learning, it also aligns with the broader vision of Education for Sustainable Development (ESD) and Sustainable Development Goal 4 (SDG 4), particularly in fostering equitable access to complex knowledge through scaffolding, cognitive support, and formative assessment. In this sense, the system contributes to sustainability by supporting students with diverse prior knowledge and reducing dropout risks in advanced programming courses, which are often bottlenecks in computing curricula.

Rather than emphasizing environmental sustainability directly, the pedagogical model aims to advance sustainability in terms of instructional equity, learning continuity, and support for cognitive diversity—key principles of inclusive and lifelong learning emphasized in SDG 4.

6.2. Future Research

Although this study demonstrates a statistically significant improvement in project performance, we acknowledge limitations regarding the assessment of higher-order learning outcomes such as systems thinking and knowledge transfer. The observed variability in project scores, evident in the relatively high standard deviation of the experimental group, suggests that the tool’s impact may differ across learner profiles.

Future research should incorporate longitudinal designs to assess knowledge retention over time and evaluate transferability to other programming paradigms and tasks. In addition, the integration of qualitative data (e.g., structured interviews, concept mapping, or diagram-based reasoning tasks) could yield deeper insights into students’ structural comprehension and metacognitive development.

Moreover, while the current system effectively functions as a cognitive scaffold for structuring recursive logic and list-based evaluation, its design has not yet fully incorporated motivational strategies aligned with the ARCS model (Attention, Relevance, Confidence, Satisfaction). The observed post-test decline in student motivation suggests that the system may lack elements that sustain engagement, especially over extended project cycles.

Future versions of the system will explore the integration of motivational design features, such as gamified progression (e.g., milestone unlocking), peer-based benchmarking, and adaptive goal setting. These features have been widely adopted in platforms like Codecademy to improve engagement and persistence in programming education. Additionally, competition-based strategies, as demonstrated successfully in CDIO-ML, will be considered—particularly in the form of peer review showcases or design challenges that align with the CDIO “Operate” phase.

We also acknowledge that this study did not measure resource efficiency metrics such as instructor workload reduction, nor did it assess environmental or social impacts such as deployment energy consumption or digital equity. These aspects will be incorporated into future research through indicators such as time-on-task reduction for teachers, energy usage profiles of cloud-based deployment models, and accessibility evaluation for students with differing technological access. Future iterations will explore how the system can contribute not only to learning outcomes but also to sustained engagement, thereby more fully realizing the inclusivity and sustainability values articulated in SDG 4.

To enhance the applicability of the proposed system, future work should also explore its adaptability to other programming paradigms. While this study focuses on functional programming using Scheme, the underlying visualization framework could be extended to support imperative languages such as C or Python by incorporating control flow elements and variable tracking features. Additionally, given its web-based architecture and automatic feedback capabilities, the system shows promise for integration into online learning environments, such as MOOCs or flipped classrooms for introductory programming courses. Adapting the interface and scaffolding levels for beginners could further broaden its utility across diverse educational contexts.

6.3. Study Limitations

Although the findings of this study demonstrate the effectiveness of the automatic architecture diagram generation system in programming instruction, several limitations in the research design may affect the explanatory power and generalizability of the results.

First, this study adopted a quasi-experimental design, with the experimental and control groups drawn from different academic years. Although the course content, instructional materials, and teaching arrangements were kept consistent across both cohorts, potential confounding factors—such as historical effects or cohort differences (e.g., variations in learning styles, prior programming experience, or academic backgrounds)—could not be fully controlled.

Second, the primary measure of learning achievement was students’ project scores, which, while reflective of practical performance, may not comprehensively capture changes in students’ underlying skills such as syntactic understanding, logical reasoning, and problem-solving ability. Relying solely on project-based evaluation may therefore limit the depth of insight into students’ cognitive skill development.

Third, this study employed quantitative questionnaires as the primary instruments for assessing learning motivation and cognitive load. While these tools offer standardized and scalable measurements, the absence of qualitative data (e.g., interviews, open-ended responses, or classroom observations) restricts the ability to explore students’ in-depth experiences, learning strategies, and cognitive processes while interacting with the system.

Future research is encouraged to adopt mixed-method approaches, integrating both quantitative and qualitative data, to gain a more holistic and nuanced understanding of students’ learning experiences and the mechanisms through which visual programming support tools influence cognitive and motivational outcomes.

Additionally, the current implementation of the architecture diagram system was tailored for functional programming in Scheme, which may limit its immediate applicability to other programming paradigms. While the underlying visualization mechanism is language-agnostic in theory, its parser and rendering logic are currently Scheme-specific. This restricts its use in imperative or object-oriented programming environments where structural complexity may require different forms of representation, such as control flow graphs or class hierarchies. Future development could focus on extending the system’s parser and visualization logic to support languages such as C, Python, or Java. This may involve implementing support for visualizing branching, loops, function calls, and object hierarchies. Moreover, the tool could be adapted for upper-level courses such as compiler construction, software testing, or software architecture, where students deal with complex program structures and require modular design thinking. These adaptations would increase the scalability and applicability of the system beyond its current scope.
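As a rough indication of what such an extension might involve, the sketch below uses Python’s standard `ast` module to extract a nested outline of function definitions, branches, loops, and return statements from imperative source code. It is a hypothetical illustration under our own assumptions, not part of the current system; the name `structure_outline`, the set of reported node types, and the sample program are chosen only for this example.

```python
import ast

# Node types reported in the outline (an illustrative selection).
STRUCTURAL = (ast.FunctionDef, ast.If, ast.For, ast.While, ast.Return)


def structure_outline(source):
    """Return indented labels for the structural nodes found in the source."""
    lines = []

    def visit(node, depth):
        if isinstance(node, STRUCTURAL):
            label = type(node).__name__
            if isinstance(node, ast.FunctionDef):
                label += " " + node.name
            lines.append("  " * depth + label)
            depth += 1  # nested structures are indented one level deeper
        for child in ast.iter_child_nodes(node):
            visit(child, depth)

    visit(ast.parse(source), 0)
    return lines


SAMPLE = """
def fact(n):
    if n <= 1:
        return 1
    return n * fact(n - 1)
"""

print("\n".join(structure_outline(SAMPLE)))
```

A full extension would still require dedicated rendering for control flow graphs or class hierarchies; the sketch covers only the extraction of nesting structure.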