1. Introduction

The transition to a more sustainable, resilient, and decarbonized energy system is accelerating the deployment of distributed generation (DG), mainly from renewable sources. However, integrating DG into distribution networks introduces several technical challenges, including the risk of unintentional islanding [1]. These islands occur when a radial distribution grid, which is not prepared for islanded operation, loses its connection with the main grid but remains energized by the downstream connected DG units [2]. The island’s electrical variables become uncontrollable, which constitutes a risk to the safety of users and maintenance personnel [3,4]. To prevent this problem, each DG must have special protection to identify islanding and disconnect itself. This protection is called anti-islanding (also known as loss of mains) and is mandatory in many regulations and grid codes [4,5,6,7,8,9].

A critical characteristic of anti-islanding protection is sensitivity, defined as its ability to rapidly detect the formation of an island [10]. Another essential characteristic is reliability, defined as the ability not to trigger false alarms under various disturbances [10]. These disturbances include both distribution system disturbances and bulk power system (BPS) disturbances, which the protection should not mistakenly identify as islanding events.

In a context of high DG penetration, the unreliable behavior of anti-islanding protection following a power system disturbance can trigger a massive disconnection of DG if the anti-islanding protections mistakenly interpret this event as an islanding event. This is precisely what caused the Low Frequency Demand Disconnection (LFDD) on 9 August 2019, following a disturbance in the UK bulk power system, which began with a lightning strike and was followed by other events in the system [11].

Similarly, on 10 April 2023, a significant disturbance in the Southwestern United States highlighted ongoing challenges with anti-islanding protection in large-scale solar photovoltaic (PV) installations. During this event, one solar PV plant in Utah incorrectly triggered its anti-islanding protections in response to a normally cleared fault in the BPS. This led to the disconnection of over 900 MW of generation, as the anti-islanding protections misinterpreted the transient grid disturbance as an islanding event. These protections, although intended for distribution-level applications, had not been adequately adjusted for BPS-connected inverters, further emphasizing the need for enhanced coordination and configuration of protection schemes in high-penetration DG scenarios [12]. This incident, alongside the 2019 UK event, underscores the systemic risk that unreliable anti-islanding behavior poses to grid stability in systems with substantial DG.

The literature presents various methodologies for island detection, including remote methods, based on communications, and local methods, which are classified as active or passive depending on whether a disturbance is actively introduced into the network [13,14]. Among these, passive local methods are particularly significant, as they can be applied across a broader range of generation technologies, whereas active methods are typically limited to converter-based generation. For this reason, this work focuses on passive methods.

Passive islanding detection methods are generally divided into traditional and advanced categories. Conventional methods include the frequency and voltage threshold approach (FVT) identified by ANSI 81U/O and ANSI 27/59, the rate of change of frequency (RoCoF) approach as per ANSI 81R [15], and the voltage vector shift (VVS) detection approach [16,17,18]. These are among the most widely employed techniques in distribution systems with significant DER penetration [7,19,20].

The primary challenge associated with classical passive anti-islanding protections lies in their large non-detection zones (NDZs). These NDZs represent operational conditions where the electrical variables, such as voltage, frequency, or RoCoF, remain within acceptable limits despite the formation of an island, making detection difficult. This issue arises because passive methods rely on thresholds to trigger detection. With minimal mismatch between generation and load, the system remains stable within those thresholds, leading to undetected islanding conditions [21,22,23,24].
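The link between thresholds and the NDZ can be illustrated with a minimal sketch. It assumes the classical swing-equation approximation for the initial RoCoF of an island, RoCoF ≈ f0·ΔP/(2H), with ΔP in per unit of the island's rated power. The inertia constant, nominal frequency, and pick-up values are hypothetical illustration values and are not taken from this work.

```python
def initial_rocof(delta_p_pu, f0_hz=60.0, inertia_s=3.0):
    """Initial RoCoF (Hz/s) from the swing equation: f0 * dP / (2 * H)."""
    return f0_hz * delta_p_pu / (2.0 * inertia_s)


def in_ndz(delta_p_pu, pickup_hz_per_s, f0_hz=60.0, inertia_s=3.0):
    """True when the island's |RoCoF| stays below the pick-up (island undetected)."""
    return abs(initial_rocof(delta_p_pu, f0_hz, inertia_s)) < pickup_hz_per_s


def ndz_half_width(pickup_hz_per_s, f0_hz=60.0, inertia_s=3.0):
    """Largest |dP| (pu) that remains undetected for a given RoCoF pick-up."""
    return 2.0 * inertia_s * pickup_hz_per_s / f0_hz


if __name__ == "__main__":
    # Hypothetical pick-up values in Hz/s: the NDZ widens linearly with them.
    for pickup in (0.5, 1.0, 2.0):
        print(pickup, ndz_half_width(pickup))
```

Under this simplified model, any increase in the pick-up widens the undetected power-mismatch band in direct proportion, which is why a less sensitive threshold enlarges the NDZ.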

Concerning the current regulations, the IEEE 1547-2018 standard [5] establishes ride-through requirements, specifying the voltage, frequency, and RoCoF ranges within which DG must remain connected to the grid during adverse events. However, these requirements necessitate the adjustment of passive anti-islanding protections with calibration thresholds that increase the NDZs, thereby reducing their effectiveness in detecting specific critical islanding scenarios.

Advanced passive methods, in contrast, employ advanced signal processing, machine learning, and classification techniques to enhance their performance and reduce false tripping. However, despite their advantages, these methods require devices with high processing capabilities and significant computational resources for implementation due to the extensive data sets needed for training [25,26,27]. Like traditional methods, advanced approaches still depend on accurately defining operational thresholds, which remains challenging for passive detection methods [28].

In [29], the Automatic Setting Map Methodology (ASMM) is presented. It proposes calculating optimal settings through an algorithm that maximizes a weighted objective function, where the weights can be chosen arbitrarily according to a preference for higher sensitivity or stability. Significant computational effort is required due to the number of cases employed to maximize the objective function. Moreover, the methodology has not been evaluated for frequency transients caused by disturbances in the electric power system (EPS), whose duration can be longer than the times involved in islanding detection. This complicates the application of the method, which is based on the superposition of the variables’ dynamics in a temporal framework. Additionally, because it relies on a dynamic analysis of the variables, applying it to the threshold adjustment of other protections requires modifications to the original algorithm, further increasing the computational effort. This is shown in [30], where the ASMM is used to find the best under-voltage block setting to improve the performance of commonly used passive islanding detection methods (IDMs).

In [31], a methodology based on dynamic security regions is presented to identify the minimum requirements for RoCoF-based (ANSI 81R) anti-islanding protections for DERs that ensure the safe operation of the Brazilian Interconnected Power System. While this methodology successfully identified minimal safety conditions for setting the lowest RoCoF thresholds, it did not analyze the performance of these settings in terms of sensitivity across various islanding scenarios, which limits its contribution to the study of security in the EPS.

The Bayesian Entropy Methodology (BEM) offers a novel approach for optimizing anti-islanding protection settings, balancing sensitivity and reliability. BEM uses entropy to measure the uncertainty in detecting islanding events and minimizes that uncertainty in protection decisions. By combining Bayesian inference with entropy modeling, it determines the optimal calibration thresholds (such as time delay and pickup settings) that enhance stability and reliability while reducing NDZs. The advantage of BEM lies in its ability to achieve these settings without the extensive computational resources or large datasets required by other works that optimize anti-islanding protection settings [32].

This work presents an improvement to the BEM by introducing a modification in the entropy modeling, incorporating specific penalties to eliminate low-entropy zones with no practical application in protection systems. This modification enhances the original BEM. Additionally, the modified entropy within BEM has been applied to obtain the optimal adjustment settings for passive protections in the IEEE 34-node test system. The results indicate that BEM allows for identifying optimal settings for passive protections in response to a wide range of events, including BPS disturbances, while utilizing reduced datasets. This highlights one of the main contributions of BEM: significantly reducing the computational effort required to achieve optimal anti-islanding protection settings for passive systems.

The remainder of this work is organized as follows:

Section 2 presents the BEM and proposed modifications in the entropy modeling through the modified entropy function.

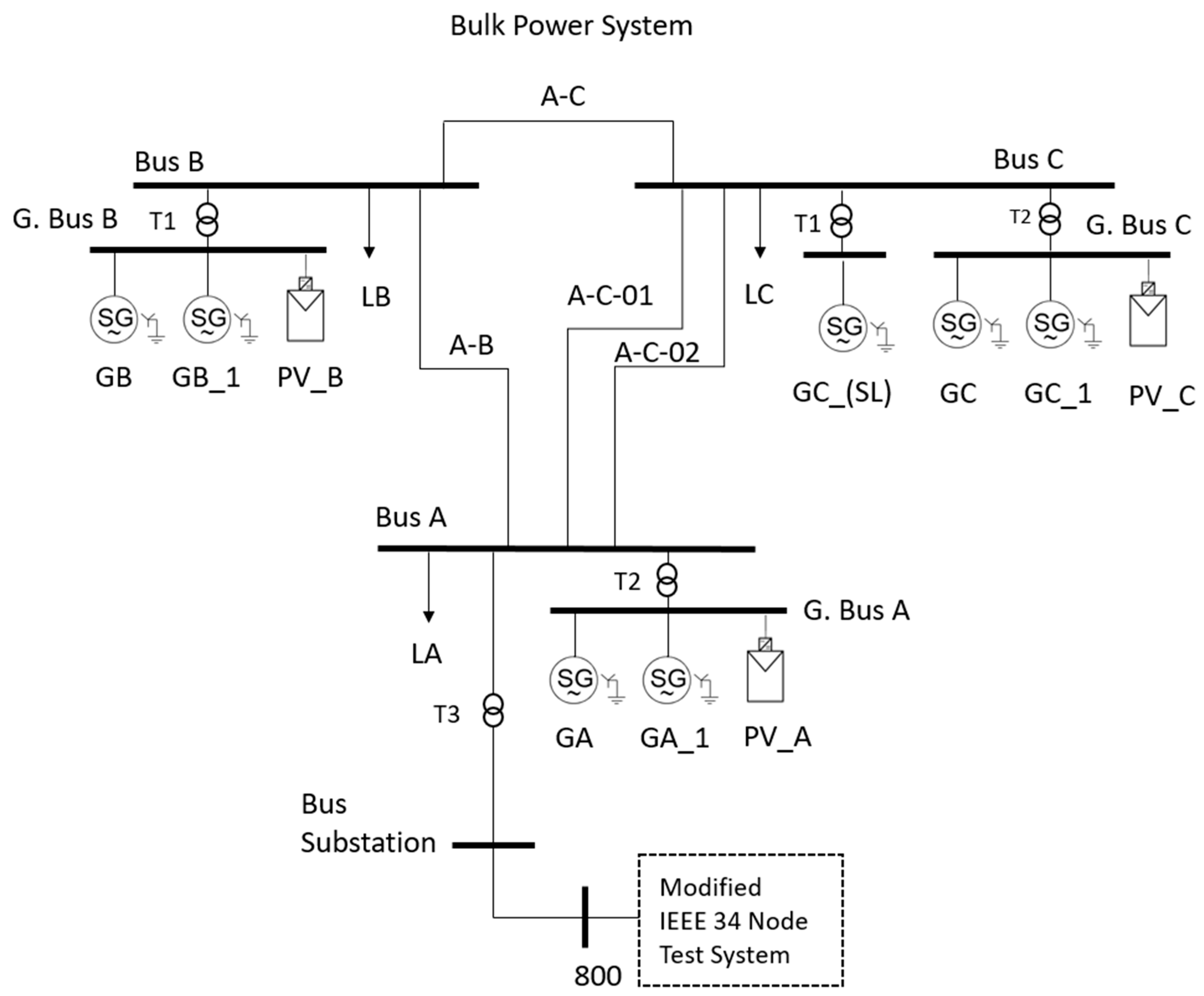

Section 3 introduces the IEEE 34-node test system and its changes, including the connection to a three-bus BPS to generate disturbance cases in this power system. Also, the datasets of cases obtained from this test system are presented.

Section 4 presents the results obtained and the validation instances. Finally, Section 5 presents the conclusions.

2. Modified Entropy Surface and Bayesian Entropy Methodology

The BEM treats an anti-islanding protection system as a black box that processes information from the Point of Common Coupling (PCC) and decides whether to disconnect the Distributed Generator (DG) during a disturbance. The simplified protection model shown in Figure 1 assumes that the ability to detect islanding events primarily depends on the calibration of the protection system, specifically the time-delay (td) and pick-up (pk) settings. Thus, for each event that occurs (whether a disturbance or an islanding case), the protection system will have a certain probability of correctly detecting the event based on these settings. For islanding events, the probability of correctly detecting the island is denoted as

, which represents the probability of detection given that an island has occurred. Similarly, for non-islanding events,

represents the probability of not incorrectly detecting an island when no islanding event has occurred.

The calibration parameters td and pk of the simplified protection model presented in Figure 1 define its overall behavior. An ideal protection system would have both of these success probabilities equal to one, meaning perfect detection of islanding events and perfect avoidance of false positives. On the other hand, a chaotic protection system, where decisions are predominantly random, would have these probabilities close to 0.5, indicating erratic and unpredictable behavior, which is precisely the opposite of what is desired in a protection system. The objective of BEM is to associate each calibration (time-delay td and pick-up pk settings) with a specific entropy value. In this model, entropy serves as a measure of the uncertainty or chaos in the decisions made by the anti-islanding protection system.
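The role of the two calibration parameters can be sketched with a simple definite-time element. This is only an illustrative model, assuming that the protection trips when the absolute value of the monitored signal stays above the pick-up continuously for at least the time delay. The function name and the sampled-signal representation are assumptions made for the example.

```python
def detection_time(signal, dt, pk, td):
    """Return the trip time in seconds, or None if the element never trips.

    The element trips once |signal| has stayed above the pick-up `pk`
    continuously for at least `td` seconds; `dt` is the sample period.
    """
    # Samples needed to span `td` seconds, counting the first exceeding sample.
    needed = int(round(td / dt)) + 1
    run = 0
    for i, x in enumerate(signal):
        run = run + 1 if abs(x) > pk else 0
        if run >= needed:
            return i * dt
    return None


if __name__ == "__main__":
    sustained = [0.0] * 5 + [2.0] * 20                 # persistent deviation
    transient = [0.0] * 5 + [2.0] * 5 + [0.0] * 10     # short-lived deviation
    print(detection_time(sustained, dt=0.01, pk=1.0, td=0.1))
    print(detection_time(transient, dt=0.01, pk=1.0, td=0.1))
```

In this sketch a longer time delay filters out short transients at the cost of slower detection, while a higher pick-up ignores small deviations, which is the trade-off between sensitivity and reliability that the calibration has to resolve.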

Equation (1) presents the entropy of a set of probabilities. In this context, entropy quantifies the uncertainty associated with protection actions—whether the protection system correctly detects an islanding event or mistakenly identifies a disturbance as an island.

In Equation (1), the total entropy (entropy of the protection) is obtained by the weighted sum of the information from the probability associated with each event defined for the operation of the electrical protection, according to the defined probabilistic tree model [32]. Using these probabilities, presented in Equation (2), the protection entropy is calculated by treating it as a source of messages (trip or no trip) based on Shannon’s definition of entropy [33].

In Equation (2), the set of forward and backward success and failure probabilities is presented [32]. The subscripts stand for D (detect), I (islanding), NI (no-islanding), T (trip), and NT (no-trip). Each probability in this set represents a specific outcome:

is the probability of detecting an island when it has actually occurred (forward success).

is the probability of mistakenly identifying a disturbance as an island (forward failure).

is the probability of failing to detect an island when it has occurred (forward failure).

is the probability of correctly identifying a disturbance without mistaking it for an island (forward success).

represents the probability that, given the protection was triggered, the cause was an actual islanding event (backward success).

is the probability that, given the protection was triggered, the cause was a disturbance (backward failure).

is the probability that, given the protection did not trigger, an islanding event still occurred (backward failure).

is the probability that, given the protection did not trigger, the event was indeed a disturbance and not an island (backward success).

From the set of probabilities presented in Equation (2), the independent variables are the two forward success probabilities, as the remaining probabilities can be derived from these two [32]. Therefore, by estimating these two probabilities, it is possible to calculate the protection entropy using Shannon’s entropy formula, as shown in Equation (1).

The entropy model in Equation (1) reaches its maximum (maximum uncertainty) when all the probabilities defined in Equation (2) are equal, that is, when each of them is 0.5. It is zero when the two forward success probabilities are equal to one, representing an ideally perfect protection that always detects islanding events with no NDZ and never trips erroneously. However, this entropy model also results in zero under certain mathematical conditions that make no practical sense. When both forward success probabilities approach zero, the entropy model in Equation (1) also tends to zero, representing an ideal protection that never trips under any circumstances. In this hypothetical case, there is no uncertainty, but it leads to an unrealistic scenario for modeling electrical protection [32].
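The limiting cases described above can be checked numerically with a short sketch. It assumes that the entropy is the plain sum of -p·log2(p) over the four forward and four backward probabilities, with the backward ones derived by Bayes' rule from equal priors for island and non-island events. These assumptions, and all function and argument names, are illustrative and are not a reproduction of Equations (1) and (2).

```python
import math


def _info(p):
    """Contribution -p*log2(p) of one probability, with 0*log(0) taken as 0."""
    return 0.0 if p <= 0.0 else -p * math.log2(p)


def protection_entropy(p_detect, p_reject, prior_island=0.5):
    """Sum of -p*log2(p) over four forward and four backward probabilities.

    p_detect: probability of tripping given an island (forward success).
    p_reject: probability of not tripping given a disturbance (forward success).
    """
    pi, pn = prior_island, 1.0 - prior_island
    forward = [p_detect, 1.0 - p_reject, 1.0 - p_detect, p_reject]
    p_trip = p_detect * pi + (1.0 - p_reject) * pn
    p_no_trip = 1.0 - p_trip
    backward = []
    if p_trip > 0.0:       # cause of a trip: island or disturbance
        backward += [p_detect * pi / p_trip, (1.0 - p_reject) * pn / p_trip]
    if p_no_trip > 0.0:    # cause of a no-trip: island or disturbance
        backward += [(1.0 - p_detect) * pi / p_no_trip, p_reject * pn / p_no_trip]
    return sum(_info(p) for p in forward + backward)


if __name__ == "__main__":
    print(protection_entropy(0.5, 0.5))  # random decisions: maximum uncertainty
    print(protection_entropy(1.0, 1.0))  # ideal protection
    print(protection_entropy(0.0, 0.0))  # degenerate case, also zero
```

Under these assumptions the degenerate zero appears alongside the ideal one, which is the practical problem with using this surface directly as a search objective.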

To address this issue in the original uncertainty model of Equation (1) [33], this work introduces a more practical model, fitted to the search for minimum entropy in electrical protection systems. This improved model, defined as modified entropy (C), is presented in Equation (3).

The modification of the model proposed in Equation (3) includes two terms that act as penalties when both probabilities

and

approach zero. These penalties can be adjusted using the constants

and

, which allow fine-tuning the modified entropy model (C) relative to the original entropy model (H). In this way, it is possible to obtain an entropy model that penalizes calibrations that lead to protection having probabilities

and

close to zero and that adjusts as closely as possible to the original Shannon entropy model, allowing fine-tuning through the parameters

k,

y, and

a defined in Equation (3). The values of

a and

k control how “quickly” the C surface converges to the H surface as the probabilities approach unity. The adjustment of these parameters was carried out through trial and error, determining the most appropriate values as shown in

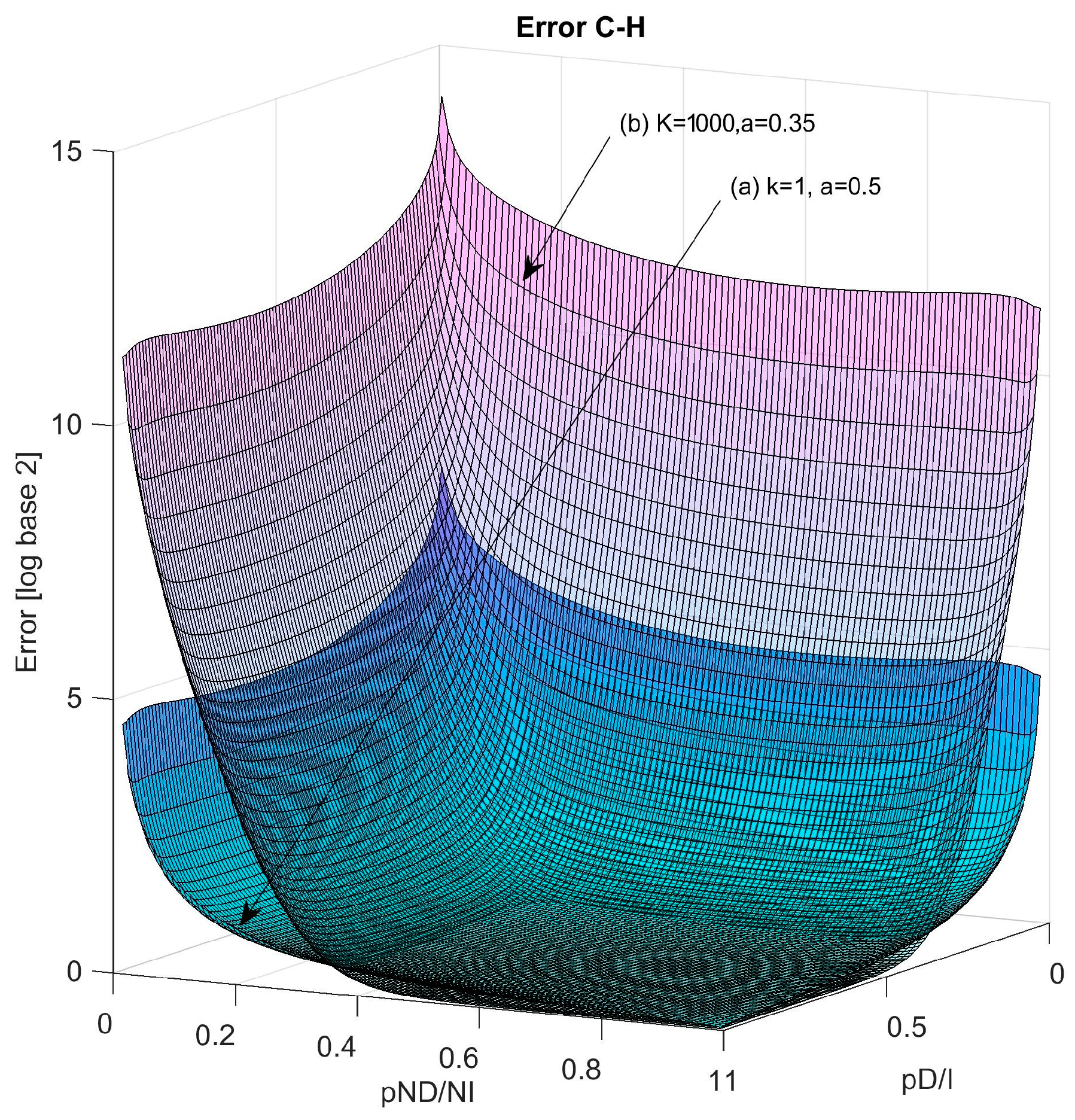

Figure 2. These parameters introduce degrees of freedom that allow for fine-tuning, optimizing the application of the proposed methodology.
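The effect of such penalties can be illustrated with a purely hypothetical example. The penalty form used below, k·(1 − P)^a for detection and y·(1 − P)^a for rejection, is an assumption chosen only because it vanishes as the probabilities approach one and is largest when they approach zero; it is not the expression of Equation (3). The entropy function likewise assumes an eight-term sum with equal priors, and the parameter values are arbitrary.

```python
import math


def shannon_h(p_detect, p_reject):
    """Eight-term sum of -p*log2(p), assuming equal island/non-island priors."""
    def info(p):
        return 0.0 if p <= 0.0 else -p * math.log2(p)
    p_trip = 0.5 * (p_detect + 1.0 - p_reject)
    terms = [p_detect, 1.0 - p_detect, p_reject, 1.0 - p_reject]
    if p_trip > 0.0:
        terms += [0.5 * p_detect / p_trip, 0.5 * (1.0 - p_reject) / p_trip]
    if p_trip < 1.0:
        terms += [0.5 * (1.0 - p_detect) / (1.0 - p_trip),
                  0.5 * p_reject / (1.0 - p_trip)]
    return sum(info(p) for p in terms)


def modified_entropy(p_detect, p_reject, k=1.0, y=1.0, a=4.0):
    """H plus two HYPOTHETICAL penalties that vanish as probabilities reach 1."""
    penalty = k * (1.0 - p_detect) ** a + y * (1.0 - p_reject) ** a
    return shannon_h(p_detect, p_reject) + penalty


if __name__ == "__main__":
    for p in (0.0, 0.5, 0.9, 0.99):
        print(p, shannon_h(p, p), modified_entropy(p, p))
```

With this illustrative form, the degenerate corner where both probabilities are zero is lifted away from zero, while near the useful minimum the two surfaces are practically indistinguishable.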

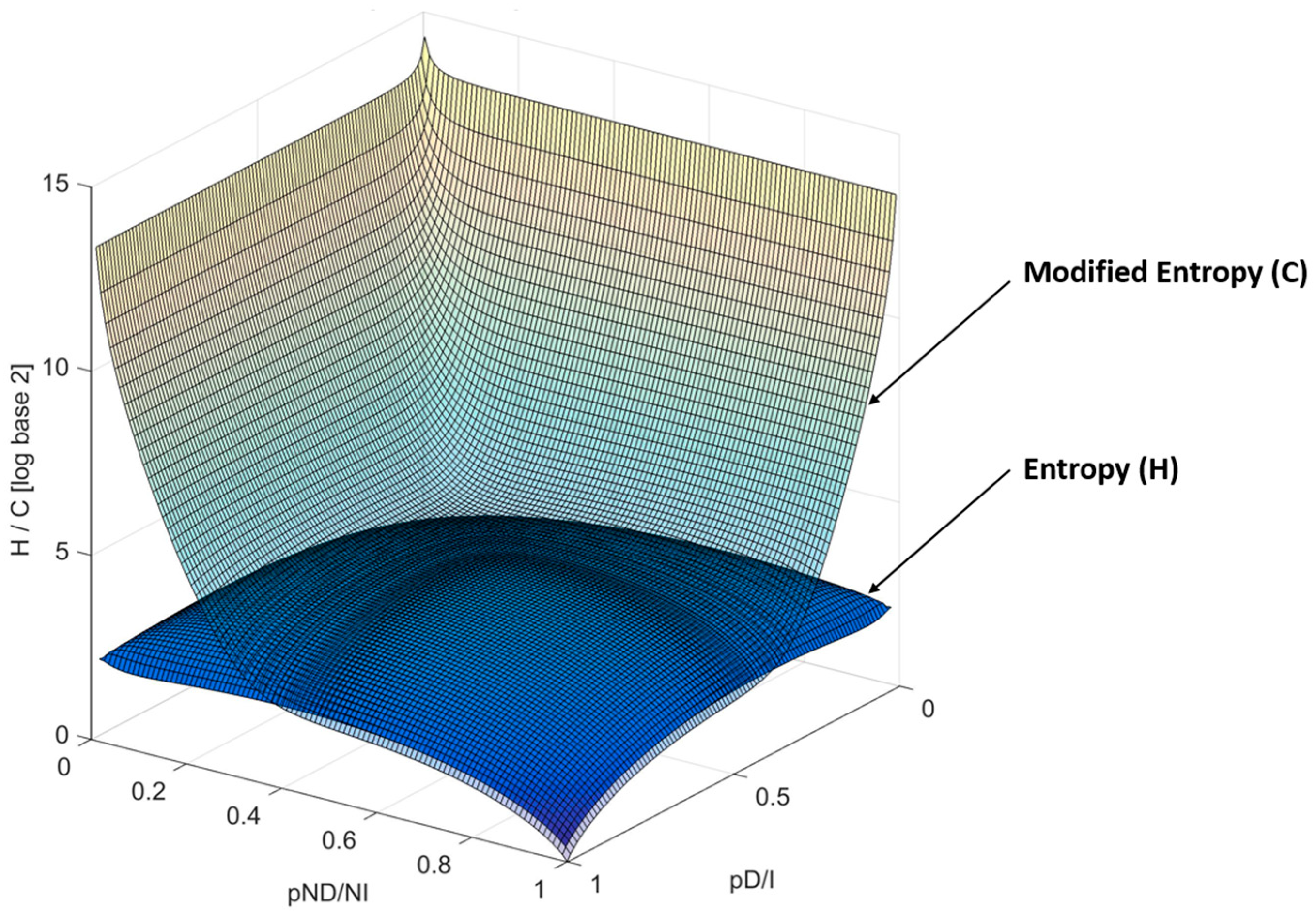

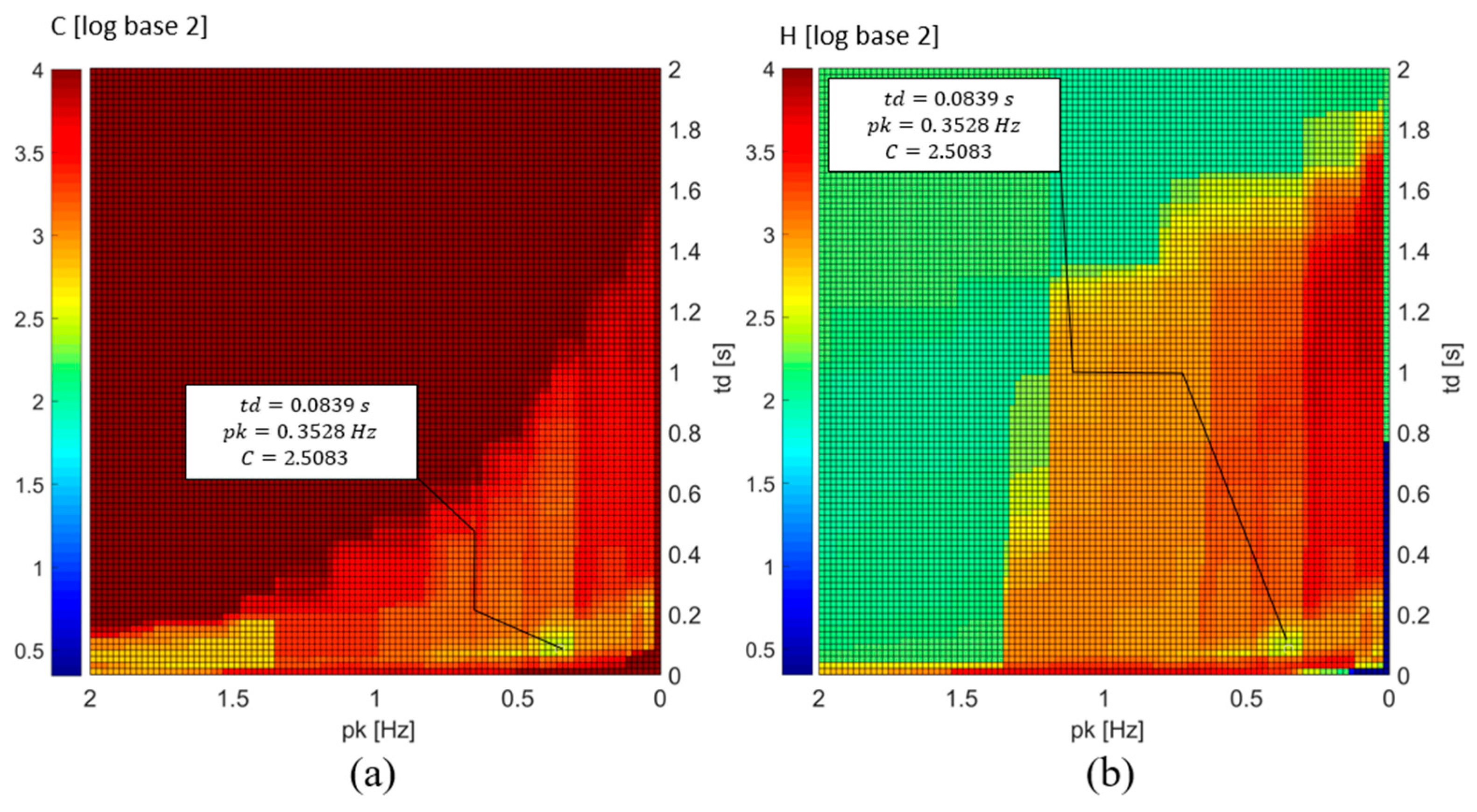

Figure 3 presents a comparison of entropy surfaces (H) and modified entropy (C).

Figure 2 shows error surfaces for different values of the adjustment parameters k, y, and a.

The application of the entropy surface (H) described in Equation (1) is not suitable as a direct objective function due to the presence of mathematical minima that lack practical relevance in protection systems. In contrast, the modified entropy surface (C) serves as a cost function that facilitates the search for minimum uncertainty in the protection system, and allows for simultaneous modeling of the uncertainty or chaos within the protection system. Furthermore, near the global minimum, this modified surface enables direct calculation of entropy, converging almost without error to the Shannon entropy model.

To estimate the two forward success probabilities, the BEM defines a statistical experiment by modeling islanding detection as a Bernoulli trial. This experiment uses Bayesian inference, which efficiently computes these probabilities based on a limited dataset, reducing the computational burden.

Figure 4 presents the flowchart for the application of the BEM.

Through the Bayesian approach, the probabilities of success are calculated as presented in Equation (4):

Equation (4) is the Bayes estimate of the success probability under the squared-error loss function. It depends on the average number of successful cases, the number of times the statistical island detection experiment is repeated (three in three-phase systems), and the number of cases analyzed in the defined dataset. In the specific problem addressed here, the parameters being estimated are the two forward success probabilities of the anti-islanding protection. This result allows for the point estimation of forward population success probabilities based on fixed anti-islanding settings td and pk [32].
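A point estimate of this kind can be sketched as follows. The prior is not stated in this section, so the example assumes a uniform Beta(1, 1) prior, for which the Bayes estimate of a Bernoulli success probability under squared-error loss is the posterior mean (successes + 1)/(trials + 2). The function name and the counts used are hypothetical.

```python
from fractions import Fraction


def bayes_success_probability(successes, trials):
    """Posterior-mean estimate of a Bernoulli success probability.

    Assumes a uniform Beta(1, 1) prior, for which the Bayes estimate under
    squared-error loss is (successes + 1) / (trials + 2).
    """
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    return Fraction(successes + 1, trials + 2)


if __name__ == "__main__":
    # Hypothetical experiment: 30 cases evaluated on 3 phases (90 trials),
    # with 84 correct decisions in total.
    estimate = bayes_success_probability(84, 90)
    print(estimate, float(estimate))
```

Note that, under this assumption, the estimate never reaches exactly zero or one, even when every trial fails or succeeds.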

To carry out probability estimation using Bayesian inference, it is necessary to define a statistical experiment with a dataset of island events and a dataset of non-island events, each containing a defined number of cases. These events must be randomly selected from a test system. Defining the size of the datasets determines the minimum achievable entropy, as seen in Equations (5) and (6). This is one of the main contributions of the BEM, as the desired minimum entropy in turn fixes the required size of these case sets [32].

Equation (5) gives the maximum probability values that can be estimated through the definition of a given experiment (defined by the island and non-island events randomly selected). This experiment also determines the minimum possible entropy, given by Equation (6). To achieve lower entropy values, the size of the datasets must be increased. In this way, the necessary dataset size can be precisely determined to obtain a desired entropy value using the BEM.

It is important to highlight that, according to the statistical experiment and Bayesian inference approach defined in this study, the minimum achievable entropy shown in Equation (6) depends exclusively on the size of the dataset. As such, it is scalable to any test power system size, given the assumption that islanding and non-islanding cases are randomly sampled from a universe of infinite possible events. These cases could even correspond to real operating conditions from an actual power system or a combination of real and simulated events.

The size of the power system does not matter—both the minimum achievable entropy and the maximum probabilities depend solely on the size of the dataset and are related through Equations (5) and (6).

If 30 islanding cases and 30 non-islanding events are selected, a dataset of only 60 cases is formed, and Equation (5) gives the resulting maximum probabilities. This maximum probability is interpreted as the highest probability that the BEM can “observe”. In other words, even if a theoretically perfect protection system is tested (one with probabilities of success equal to one), the BEM would only be able to estimate its performance using these maximum probabilities.

In this sense, Equation (5), which relates the maximum observable probabilities to the size of the dataset, defines the limit of uncertainty that can be “seen” under a given experiment, determined by the dataset size. This is a key result, as it allows for accurately determining the dataset size required to achieve a desired level of performance. In the example of the ideal protection system, the gap between a perfect probability (equal to one) and the maximum probability estimated by the BEM represents the residual uncertainty intrinsic to the experiment itself.
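The relation between dataset size and the entropy floor can be explored with a small sketch. It rests on three assumptions that are not confirmed by the text: a uniform prior (so that an all-success experiment with N trials is estimated as (N + 1)/(N + 2)), three per-phase trials per case, and an entropy equal to the sum of -p·log2(p) over eight forward and backward probabilities with equal priors. Under these assumptions, 30 island and 30 non-island cases give a floor of about 0.346, which agrees with the value H = 0.34602 quoted in Section 4.

```python
import math


def max_observable_probability(n_cases, repeats=3):
    """Bayes estimate when every one of repeats*n_cases trials succeeds."""
    trials = repeats * n_cases
    return (trials + 1) / (trials + 2)


def min_achievable_entropy(n_cases, repeats=3):
    """Entropy floor for equal island / non-island dataset sizes.

    With equal priors the four backward probabilities equal the forward
    ones, so the eight-term sum is four times the binary entropy.
    """
    p = max_observable_probability(n_cases, repeats)
    binary = -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)
    return 4.0 * binary


def cases_needed(target_entropy, repeats=3):
    """Smallest per-class dataset size whose entropy floor meets the target."""
    if target_entropy <= 0.0:
        raise ValueError("target_entropy must be positive")
    n = 1
    while min_achievable_entropy(n, repeats) > target_entropy:
        n += 1
    return n


if __name__ == "__main__":
    print(max_observable_probability(30))
    print(min_achievable_entropy(30))
    print(cases_needed(0.35))
```

A helper such as `cases_needed` shows how the sizing could be inverted: given a target uncertainty, it returns the smallest number of cases per class that makes that target reachable.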

4. Results

In the evaluation of the BEM in the IEEE 34-node system, five passive characteristic signals were considered: RoCoF, Frequency at the Point of Common Coupling (PCC), Voltage Deviation at the PCC, Rate of Change of Voltage (RoCoV), and Total Harmonic Distortion (THD) of the voltage waveform. These signals were obtained by processing the voltage signals at the PCC from EMT simulations, sampled at a rate of 40 kHz, using MATLAB R2023a. For the evaluation, the deviations of the signals from their nominal or pre-fault values were considered. Since the direction of the deviation does not influence detection (which is defined by threshold violation and time delay settings), the absolute values of the deviations were used for each characteristic signal. These five characteristic signals were key to applying the BEM in the IEEE 34-node system.

4.1. Process for Extracting the Characteristic Signals Analyzed at the PCC

Figure 8 illustrates the process for extracting characteristic signals from the simulated voltage of each case at Node 848-B. The simulation cases were first run in DIgSILENT PowerFactory, and the voltage signals were then processed in MATLAB with a sampling frequency of 40 kHz. This sampling rate is high enough for the signals to capture transient behavior and relevant variations.

For each simulation, the characteristic signals were calculated based on the diagrams presented in Figure 8. In diagram (a), the frequency deviation and the rate of change of frequency (RoCoF) are calculated by first filtering the voltage signal and then determining the frequency. The deviation from the nominal frequency and the RoCoF are then computed, and their absolute values are extracted. The results are presented in Figure 9.
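The frequency-based features can be sketched in a few lines. The example below is not the processing chain of Figure 8: it omits the filtering stage, estimates frequency from rising zero crossings (one possible technique among several), and obtains the RoCoF by finite differences. The function names and the 60 Hz nominal value used in the usage example are assumptions.

```python
import math


def zero_crossing_frequency(v, fs):
    """Estimate (time, frequency) pairs from rising zero crossings of v."""
    crossings = []
    for i in range(1, len(v)):
        if v[i - 1] < 0.0 <= v[i]:
            # Linear interpolation of the crossing instant between samples.
            frac = -v[i - 1] / (v[i] - v[i - 1])
            crossings.append((i - 1 + frac) / fs)
    times, freqs = [], []
    for t0, t1 in zip(crossings, crossings[1:]):
        times.append(t1)
        freqs.append(1.0 / (t1 - t0))  # one estimate per full cycle
    return times, freqs


def abs_deviation_and_rocof(times, freqs, f_nominal):
    """Absolute frequency deviation and absolute RoCoF (finite differences)."""
    dev = [abs(f - f_nominal) for f in freqs]
    rocof = [abs((f1 - f0) / (t1 - t0))
             for t0, t1, f0, f1 in zip(times, times[1:], freqs, freqs[1:])]
    return dev, rocof


if __name__ == "__main__":
    fs = 40000.0  # sampling rate used in the text
    # Synthetic test voltage: frequency falling linearly from 60 Hz at 1 Hz/s.
    v = [math.sin(2 * math.pi * (60.0 * (i / fs) - 0.5 * (i / fs) ** 2))
         for i in range(20000)]
    times, freqs = zero_crossing_frequency(v, fs)
    dev, rocof = abs_deviation_and_rocof(times, freqs, 60.0)
    print(max(dev), sum(rocof) / len(rocof))
```

Taking absolute values at the end mirrors the convention that the direction of the deviation does not matter for threshold-based detection.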

Diagram (b) shows the process for calculating the voltage deviation and the rate of change of voltage (RoCoV). After filtering the voltage signal, the envelope of the signal is calculated, and deviations from the average voltage are measured. Lastly, in diagram (c), total harmonic distortion (THD) is computed using a 0.2 s window (8000 samples at 40 kHz) to evaluate the distortion up to the 50th harmonic.
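A THD calculation over such a window can be sketched as follows. The example evaluates a single-bin discrete Fourier transform at each harmonic frequency, which is one straightforward way to do it and is not necessarily the implementation used in this work. The fundamental frequency is passed as a parameter because the text does not state it; the 60 Hz value in the usage example is an assumption.

```python
import cmath
import math


def harmonic_amplitude(window, fs, freq):
    """Amplitude of the `freq` component via a single-bin DFT."""
    n = len(window)
    step = cmath.exp(-2j * math.pi * freq / fs)
    acc, phasor = 0j, 1 + 0j
    for x in window:
        acc += x * phasor
        phasor *= step  # advance the reference phasor by one sample
    return 2.0 * abs(acc) / n


def thd_percent(window, fs, f1, max_harmonic=50):
    """THD (%): RMS sum of harmonics 2..max_harmonic over the fundamental."""
    fundamental = harmonic_amplitude(window, fs, f1)
    harmonics = sum(harmonic_amplitude(window, fs, h * f1) ** 2
                    for h in range(2, max_harmonic + 1))
    return 100.0 * math.sqrt(harmonics) / fundamental


if __name__ == "__main__":
    fs, f1 = 40000.0, 60.0
    # 0.2 s window (8000 samples): fundamental plus a 10 % fifth harmonic.
    window = [math.sin(2 * math.pi * f1 * i / fs)
              + 0.1 * math.sin(2 * math.pi * 5 * f1 * i / fs)
              for i in range(8000)]
    print(thd_percent(window, fs, f1))
```

The single-bin approach is exact only when the window holds a whole number of fundamental cycles. A 0.2 s window satisfies this for both 50 Hz (10 cycles) and 60 Hz (12 cycles) at nominal frequency, and leakage appears as the frequency drifts away from nominal.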

4.2. Optimal Settings Achieved with the BEM

Table 5 presents the results for the optimal settings achieved through exploration of the modified entropy surfaces (C) using the BEM. The table indicates the values of the time-delay (td) and pick-up (pk) taken from the centroid of the regions of minimum entropy for each of the five analyzed features.
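The surface exploration and centroid selection can be sketched on a small grid. The example is a simplified stand-in, not the procedure of this work: it uses a plain eight-term entropy with equal priors instead of the modified entropy (C), a uniform-prior Bayes estimate, a basic definite-time trip rule, and synthetic signals. All names and data are hypothetical.

```python
import math


def trips(signal, dt, pk, td):
    """True if |signal| stays above pk continuously for at least td seconds."""
    needed = int(round(td / dt)) + 1
    run = 0
    for x in signal:
        run = run + 1 if abs(x) > pk else 0
        if run >= needed:
            return True
    return False


def entropy(p_detect, p_reject):
    """Eight-term sum of -p*log2(p), assuming equal island/non-island priors."""
    def info(p):
        return 0.0 if p <= 0.0 else -p * math.log2(p)
    terms = [p_detect, 1 - p_detect, p_reject, 1 - p_reject]
    p_trip = 0.5 * (p_detect + 1 - p_reject)
    if p_trip > 0:
        terms += [0.5 * p_detect / p_trip, 0.5 * (1 - p_reject) / p_trip]
    if p_trip < 1:
        terms += [0.5 * (1 - p_detect) / (1 - p_trip),
                  0.5 * p_reject / (1 - p_trip)]
    return sum(info(p) for p in terms)


def entropy_map(islands, disturbances, dt, td_values, pk_values):
    """Entropy for every (td, pk) pair, using Bayes estimates (uniform prior)."""
    grid = {}
    for td in td_values:
        for pk in pk_values:
            hits = sum(trips(s, dt, pk, td) for s in islands)
            rejects = sum(not trips(s, dt, pk, td) for s in disturbances)
            p_d = (hits + 1) / (len(islands) + 2)
            p_r = (rejects + 1) / (len(disturbances) + 2)
            grid[(td, pk)] = entropy(p_d, p_r)
    return grid


def centroid_of_minimum(grid, tol=1e-9):
    """Mean (td, pk) over all grid points within tol of the minimum entropy."""
    h_min = min(grid.values())
    best = [key for key, h in grid.items() if h <= h_min + tol]
    td_c = sum(td for td, _ in best) / len(best)
    pk_c = sum(pk for _, pk in best) / len(best)
    return td_c, pk_c, h_min


if __name__ == "__main__":
    islands = [[1.0] * 50 for _ in range(3)]                    # sustained
    disturbances = [[1.5] * 5 + [0.0] * 45 for _ in range(3)]   # short burst
    grid = entropy_map(islands, disturbances, 0.01,
                       [0.0, 0.1, 0.2], [0.5, 2.0])
    print(centroid_of_minimum(grid))
```

Choosing the centroid of the minimum region, rather than an arbitrary point on its edge, leaves a margin on both settings, which is a reasonable property for a calibration that must tolerate cases outside the calculation set.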

For the five studied features, it was not possible to find minimum entropy protections, meaning that the uncertainty of the experiment could not be fully reduced under the DS 848 02 calculation set. It is important to note, however, that a trend was observed toward lower uncertainty in features that are temporal derivatives of others. The RoCoF feature achieved a lower uncertainty value than the frequency feature, and similarly, RoCoV showed a reduction in uncertainty compared to the voltage deviation. This finding suggests that the time derivative of a signal enhances its sensitivity and reliability for island detection.

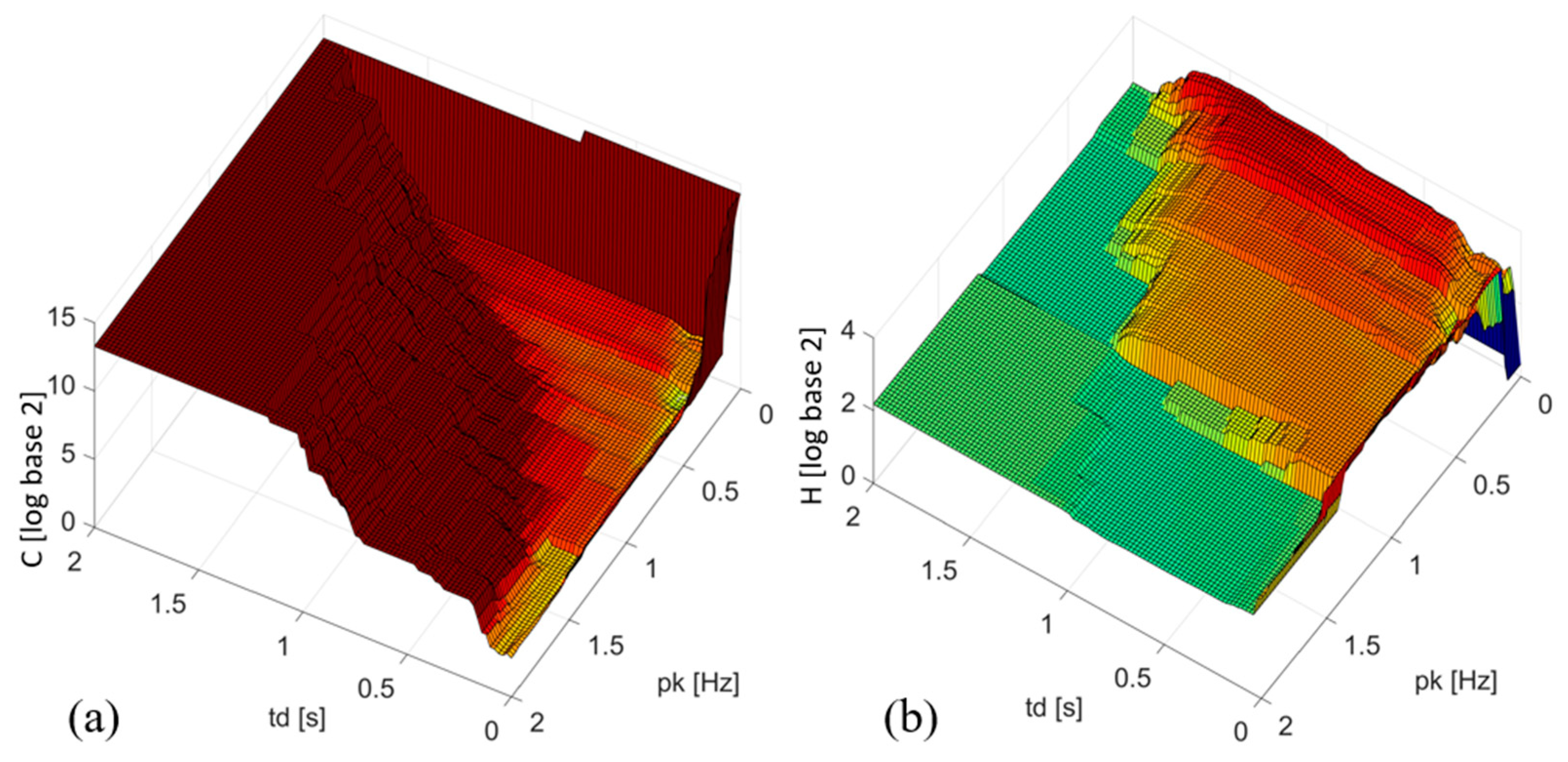

In the following, the focus will be placed on the results for

and

, as these features yielded the most promising outcomes. The optimal settings for

and

resulted in higher entropy values, indicating less reliable and less sensitive adjustments. This can be concluded from a simple inspection of the surfaces shown in

Figure 10, where no significant minima can be observed. Although a low entropy value was obtained for the THD % feature, it was a marginal result, as shown in the details presented in

Figure 11b, with no significant margin for calibration, and it fell within the permissible harmonic distortion thresholds set by regulations, making it impractical for islanding detection. Therefore,

and

will be prioritized in the analysis, as they demonstrate the highest potential for achieving optimal anti-islanding protection settings.

In

Figure 12 and

Figure 13, the modified entropy (C) and entropy (H) surfaces for the

feature signal are presented, obtained by exploring the

and

parameters from 100 × 100 uniformly distributed configurations in a Cartesian plane using the DS 848 02 calculation set. The comparison of the entropy surfaces verifies the hypothesis used to model the modified entropy function presented in Equation (3), where the goal was to penalize non-useful or local minima. The minimum entropy value (H = 0.34602) is only visible in the entropy surface (H), not in the modified entropy surface (C). This occurs because the settings that reach the minimum entropy in surface (H) correspond to a scenario without uncertainty according to the first model but with no practical use for a protection system. However, in the modified entropy model (C), as formulated in Equation (3), these settings are penalized, resulting in higher values.

Figure 13 shows the top views of the entropy surfaces from

Figure 12, highlighting the optimal settings found. In this region, the surfaces tend to converge, adopting similar shapes and heights. As analyzed in

Figure 2, surface (C) gradually aligns with surface (H). For this reason, the uncertainty of the optimal settings is expressed in terms of (C) rather than (H) as they reach identical values at that point. The analysis concludes that (C) improves the surface exploration process, as there are no local or non-useful mathematical minima for protection.

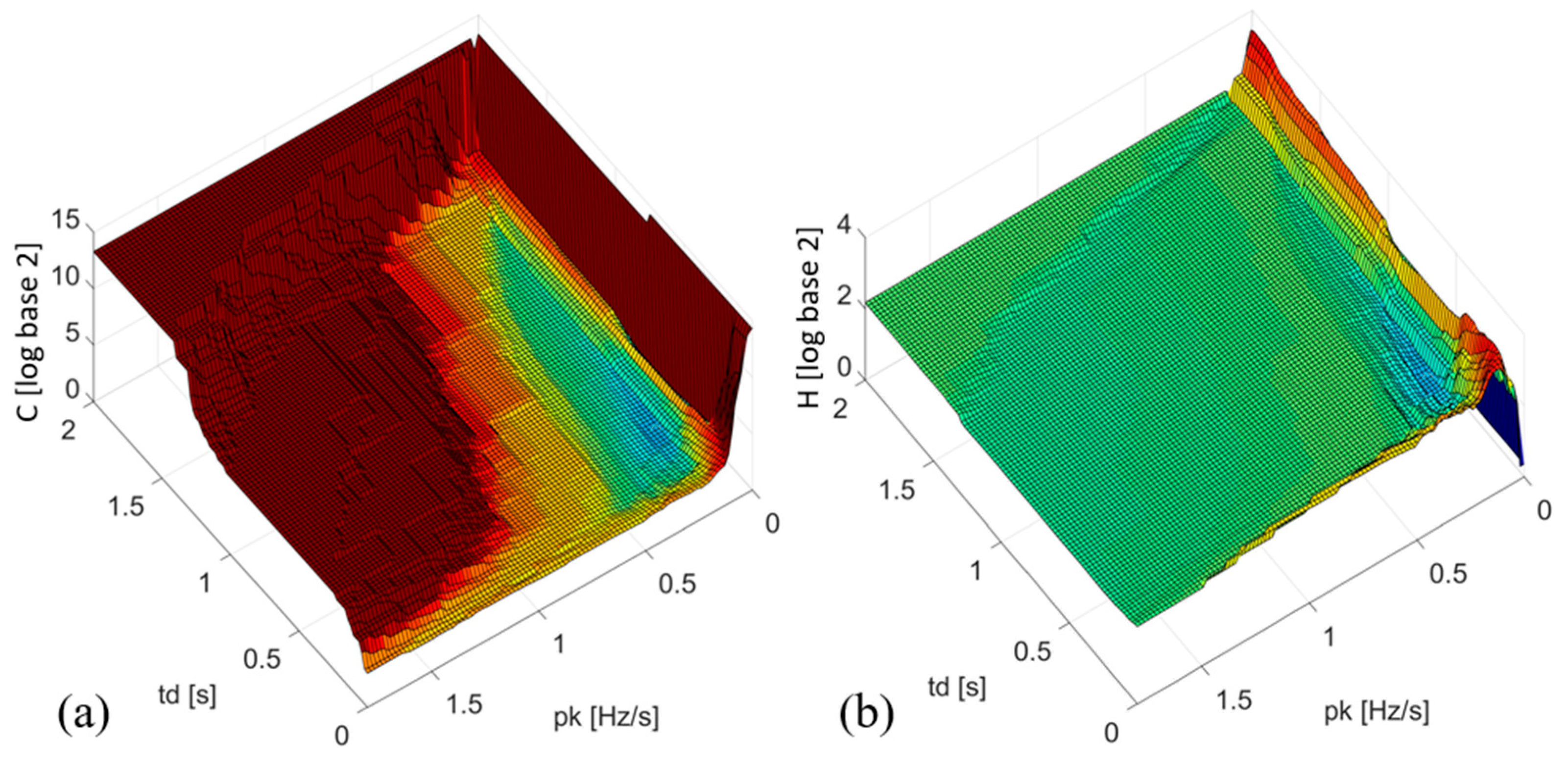

Figure 14 and

Figure 15 present the modified entropy (C) and entropy (H) surfaces for the

feature. These were calculated in the same way as for the

feature by exploring the

and

parameters from 100 × 100 = 10,000 uniformly distributed configurations in the defined region of the plane using the DS 848 02 calculation dataset. For the

feature, the convergence of (C) over (H) near the region of minimal uncertainty is even more evident.

Figure 15 illustrates the top views of the entropy surfaces previously shown in

Figure 14, highlighting the optimal settings identified on surface (C).

As additional validation of the settings obtained using the BEM, the optimal setting for RoCoF-based anti-islanding protection obtained in [29] is presented. This was obtained for the IEEE 34 system using the ASMM. Despite differences in the cases, number of events, and optimization methods used in both approaches, the setting achieved using the BEM closely matches that obtained through the ASMM. This result supports the robustness and effectiveness of the BEM in achieving optimal settings under diverse operating conditions with significant computational savings, as the ASMM used 4606 cases, while the BEM only required 60 cases from the DS 848 02 calculation set.

4.3. Validation Instance

The optimal settings calculated with the BEM, as presented in

Table 5, were evaluated using the validation cases from validation datasets. Each of these datasets contains 200 cases, resulting in a total of 1200 validation cases when considering that the detection for each phase is independent (600 island cases and 600 non-island cases). With this large number of cases, it was possible to perform a frequency-based evaluation of detection success and non-detection errors by calculating the probabilities

and

, which represent favorable cases over possible cases. These probabilities are denoted

and

, to differentiate them from the Bayesian inference-based probabilities initially approximated by Bayes estimators, denoted as

and

.
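The difference between the two kinds of estimate can be illustrated with a short sketch. The frequency-based value is the ratio of favorable to possible cases, as described above, while the Bayes value assumes a uniform prior, which is an assumption of this example. The counts are hypothetical and are not results from the validation datasets.

```python
def frequency_estimate(favorable, possible):
    """Relative frequency: favorable cases over possible cases."""
    if possible <= 0:
        raise ValueError("possible must be positive")
    return favorable / possible


def bayes_estimate(favorable, possible):
    """Posterior mean under an assumed uniform prior."""
    return (favorable + 1) / (possible + 2)


if __name__ == "__main__":
    # Hypothetical outcome: 594 correct decisions out of 600 island trials.
    p_freq = frequency_estimate(594, 600)
    p_bayes = bayes_estimate(594, 600)
    print(p_freq, p_bayes)
```

With several hundred trials the two estimates nearly coincide, whereas with a small calculation set the prior has a visible influence, which is why the frequency-based evaluation is reserved for the larger validation sets.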

In Table 6, where the validation results are presented, the optimal settings for the

feature from [

29] have been included for comparison. The settings obtained using the Bayesian Entropy Methodology (BEM) demonstrate an improvement in terms of both sensitivity and reliability when compared to the previously published adjustments. Specifically, the BEM-derived settings provide a lower entropy value, indicating more robust performance in detecting islanding events while avoiding false detections during non-islanding events.

Furthermore, the settings from [29] (R3) showed higher probabilities of false positives in the non-islanding validation set (DS 848 01), resulting in an increase in entropy values for those configurations. On the contrary, the optimal settings obtained using BEM allowed for better discrimination between islanding and non-islanding scenarios. The pk threshold and optimized td timing in the BEM calibration were crucial in minimizing false detections.

On the other hand, it is clear that the analyzed features are not sufficiently appropriate to achieve an adequate balance between sensitivity and reliability, as they fail to find calibrations with entropy equal to the entropy of the experiment defined by the number of cases considered in the optimal settings calculation dataset (H = C = 0.34602). This suggests that alternative approaches should be explored regarding the use of passive features for island detection with minimal entropy.

The graph presented in

Figure 16 illustrates the detection time [s] versus active power imbalance

for the

feature under Generation Scenario 1, where the island is formed with 75% synchronous generation (DG1) and 25% converter-based generation (DG2). The results compare three calibrations: R1 obtained using the BEM and R2 and R3 obtained from ASMM [

29]. The x-axis represents the active power imbalance, with negative values corresponding to power deficits and positive values to power excess. The y-axis denotes the detection time in seconds.

The three-phase responses for each calibration are plotted, where R1 (in red) shows a detection time slightly longer than that obtained with calibration R3 but much better than calibration R2, which failed to detect any of these cases satisfactorily. Calibration R3 demonstrates a similar performance to R1 but exhibits slightly more sensitivity with a lower pk setting, although at the cost of increased false detections in other validation scenarios (disturbances), which reduces the probability of avoiding erroneous detections and increases the measured entropy in the validation set, as presented in

Table 6.

Similarly to

Figure 16,

Figure 17 presents the detection time [s] versus the active power imbalance

for the

feature under Generation Scenario 2, which consists of a 50% synchronous generation and 50% converter-based generation mix.

In this scenario, the feature calibrated with R1 (in red) consistently shows a detection time slightly longer than that obtained with calibration R3. Due to the generation scenario, it can be observed that the NDZ has been reduced, as under this hypothesis of island formation (generation Scenario 2), the formed islands have less inertia, and the frequency deviations are much more pronounced than in Scenario 1, facilitating detection.

Regarding generation Scenario 3, the detection time graph has been omitted, but the obtained results are very similar to those of the previous two scenarios, showing a complete reduction in the non-detection zones for all three calibrations. In this generation scenario, it was possible to detect all island cases present in the validation set.

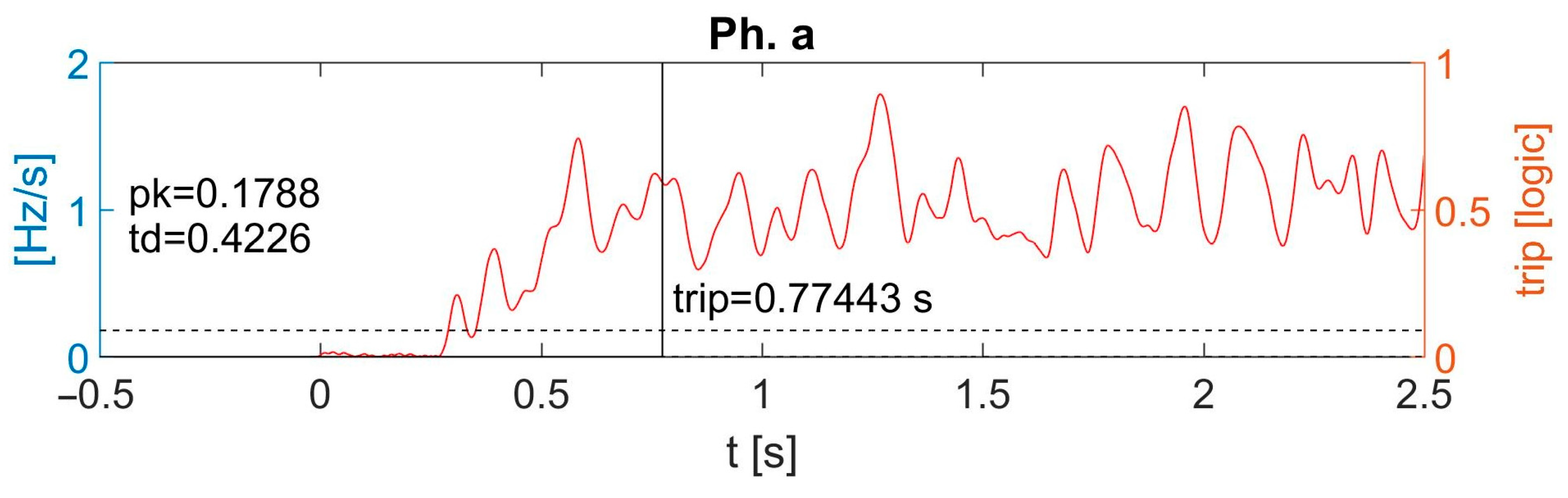

Figure 18 presents the detection test for calibration R1 obtained using the BEM in an island case corresponding to generation Scenario 3. Significant frequency deviations can be observed for small active and reactive power imbalances

and

obtained in this case. These large frequency deviations are due to the reduced inertia of the island formed under the case’s conditions.

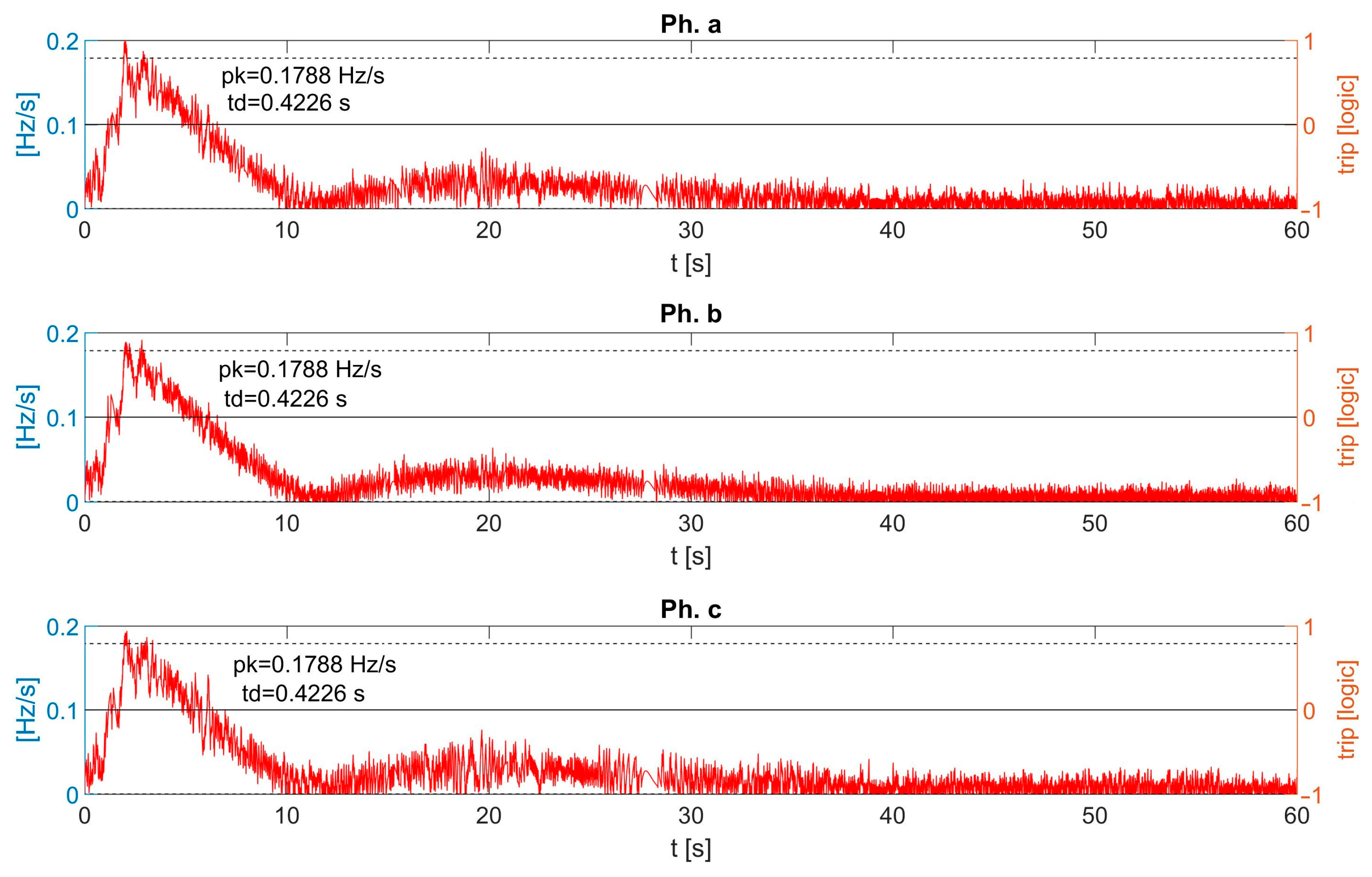

Finally, Figure 19 illustrates the detection signals for calibration R1, using the BEM in all three phases for a severe disturbance case (disturbance_189) from the validation dataset. In this scenario, the generator GA is disconnected, resulting in a significant disturbance with a power imbalance and a maximum rate of change of frequency that exceeds the threshold of the optimal calibration found by the BEM. Despite this, the optimal calibration obtained with the BEM avoids triggering the protection, demonstrating an appropriate balance between sensitivity and reliability.
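One common mechanism by which a protection can ride through a disturbance whose peak exceeds the threshold is a time delay: the signal must remain above the pickup value continuously before a trip is issued. Whether this is the mechanism at work in this case is an assumption. The Python sketch below illustrates the idea with hypothetical pickup, delay, and frequency samples; none of these values are taken from the paper.

```python
from typing import List, Sequence


def rocof(freq_hz: Sequence[float], dt: float) -> List[float]:
    """Finite-difference rate of change of frequency in Hz/s."""
    return [(f1 - f0) / dt for f0, f1 in zip(freq_hz, freq_hz[1:])]


def trips(rocof_hz_s: Sequence[float], dt: float,
          pickup: float, delay: float) -> bool:
    """True if |RoCoF| stays above pickup continuously for at least delay seconds."""
    elapsed = 0.0
    for r in rocof_hz_s:
        if abs(r) > pickup:
            elapsed += dt
            if elapsed >= delay - 1e-12:  # small tolerance for float accumulation
                return True
        else:
            elapsed = 0.0  # excursion ended, restart the timer
    return False


DT = 0.02      # sampling step [s], hypothetical
PICKUP = 0.8   # RoCoF pickup [Hz/s], hypothetical
DELAY = 0.1    # time delay [s], hypothetical

# Hypothetical disturbance: short, steep dip followed by recovery.
disturbance = rocof([50.0, 50.0, 49.96, 49.94, 49.94, 49.95, 49.96, 49.97], DT)
# Hypothetical island: sustained frequency decline.
island = rocof([50.0 - 0.03 * k for k in range(10)], DT)

print(max(abs(r) for r in disturbance) > PICKUP)  # peak exceeds the pickup
print(trips(disturbance, DT, PICKUP, DELAY))
print(trips(island, DT, PICKUP, DELAY))
```

With these values the disturbance exceeds the pickup for only two samples and does not trip, whereas the sustained decline of the island does.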

This is a critical observation: the calibration rides through the severe disturbance without issuing a false trip, which is a key performance indicator for protection systems. Moreover, the NDZ of the BEM-calibrated protection is similar to that of calibration R3 obtained using ASMM. However, the BEM calibration is much more reliable, as it did not falsely trigger for any disturbance in the validation dataset. This highlights the advantage of the BEM, which provides both accurate detection of islands and robust protection against false alarms under various disturbance conditions, while requiring significantly fewer cases in the dataset than the ASMM. The BEM thus achieves reliable calibrations with a reduced dataset, saving computational effort without compromising detection accuracy.

5. Conclusions

This work successfully demonstrated the effectiveness of the Bayesian Entropy Methodology (BEM) for optimizing the calibration settings of anti-islanding protections. The results highlight the BEM’s ability to identify optimal settings for sensitivity and reliability using a significantly reduced dataset compared to other methodologies.

Table 7 compares the amount of data required by the BEM with that required in other works published in the literature. Notably, the BEM required only 60 cases in the calculation dataset to achieve settings comparable to those of other works. This reduction represents a substantial saving in computational effort.

This work has also demonstrated the advantages of using the modified entropy function over the unmodified entropy function. The modified entropy eliminates low-uncertainty regions that have no practical application in protection systems, which reduces the risk of “falling into erroneous zones” with mathematically low entropy but no real physical relevance. Consequently, the modified entropy function enhances the robustness and reliability of the search for optimal calibration settings in protection systems. This improvement also paves the way for future research to automate the search for calibration regions with minimal uncertainty through an optimization algorithm.

The validation of the optimal settings against various disturbance and islanding scenarios confirmed that the BEM-derived settings provide a robust balance between sensitivity and reliability. The reduced non-detection zones (NDZs) and avoidance of false tripping across all tested conditions underscore the method’s superiority in maintaining operational reliability, even under severe power system disturbances.

Future work will focus on exploring combinations of characteristic signals to improve detection performance further. Investigating the potential for multi-signal feature fusion, such as integrating RoCoF with other features, may yield an even more sensitive and reliable detection signal. This approach could lead to the development of a composite detection algorithm that leverages the strengths of multiple features, thereby reducing entropy to even lower levels and improving the overall robustness of passive anti-islanding protections.

In conclusion, the BEM offers a novel, computationally efficient approach to optimizing anti-islanding protections. By incorporating additional signals and enhancing feature combinations in future work, there is significant potential to improve the detection accuracy and reliability of islanding protections in increasingly complex distribution networks with high DER penetration.