Abstract

With the increasing integration of renewable energy sources, distributed shared energy storage (DSES) systems play a critical role in enhancing power system flexibility, operational resilience, and energy sustainability. However, conventional scheduling methods often suffer from excessive communication burdens, limited scalability, and poor real-time responsiveness, especially when handling fast-changing frequency regulation signals and fluctuating renewable energy outputs. To address these challenges, this paper proposes a consensus-driven distributed online convex optimization method that enables decentralized scheduling of energy storage units, using a consensus algorithm for local decision-making while maintaining global consistency. Additionally, an adaptive event-triggered mechanism is designed to dynamically adjust the communication frequency based on system state variations, reducing redundant information exchange while preserving convergence and stability in a fully distributed environment. Simulation results on the IEEE 14-bus test system show that the strategy reduces the communication load by 33–60% and improves the convergence speed by over 40% compared to baseline methods. It also adapts well to the disconnection and reconnection of storage units. By enabling a fast and efficient response to grid services such as frequency regulation and renewable energy balancing, the proposed approach contributes to the development of intelligent and sustainable power systems.

1. Introduction

With the increasing integration of renewable energy, wind and photovoltaic power plants face challenges such as power fluctuations and deviation penalties, necessitating dynamic regulation through energy storage systems [1]. Shared energy storage, as a flexible resource allocation method, not only serves users and grid dispatching but also provides real-time leasing services for renewable energy plants, assisting in power smoothing and deviation management to enhance renewable energy utilization [2].

Shared energy storage has received increasing attention in both academia and industry due to its potential to improve energy flexibility, resource utilization, and cost-effectiveness in multi-user scenarios [3,4,5]. Based on the organization of storage resources, shared energy storage systems can be categorized into Centralized Shared Energy Storage (CSES) and Distributed Shared Energy Storage (DSES) [6]. CSES is typically deployed at specific locations in the form of large-scale storage stations, providing services to multiple users [7]. This model benefits from economies of scale and centralized management but also presents challenges such as high construction costs, spatial constraints, limited flexibility, strong dependence on the grid infrastructure, and vulnerability to single-point failures. In contrast, DSES consists of multiple geographically dispersed storage units that coordinate through intelligent optimization strategies to achieve effective resource sharing [8]. This structure offers greater flexibility and scalability but also introduces higher control complexity due to the distributed architecture.

For a DSES system with multiple distributed storage units, optimization scheduling methods can be broadly categorized into centralized optimization scheduling and distributed optimization scheduling [9,10]. Centralized optimization scheduling relies on a central controller to collect global information and perform a unified optimization. Theoretically, this centralized approach provides an optimal coordination solution from a system-wide perspective [11]. However, due to the high computational complexity, extensive communication requirements, and the risk of single-point failures, its application in large-scale DSES is limited [12]. In particular, it struggles to coordinate a large number of storage units in real time, making it difficult to respond quickly to time-sensitive tasks such as frequency regulation [13]. Distributed coordination methods reduce reliance on a central coordinator by employing decentralized optimization strategies, where the optimization process primarily relies on local information exchange among storage units [14]. Each storage unit performs simplified independent computations based on local information rather than solving the full global optimization problem. Through iterative information sharing with neighboring units, the system gradually converges toward a globally optimal solution, effectively reducing computational complexity and improving optimization efficiency. Additionally, this method supports the dynamic integration and withdrawal of storage units, offering strong plug-and-play adaptability. Newly added storage units do not require a reconstruction of the global optimization model; instead, they can compute their local optimization variables and gradually integrate into the system through information exchange with neighboring units. This feature enhances the flexibility and scalability of DSES.

However, decentralized optimization methods that rely solely on local information exchange still face challenges in convergence speed and global consistency. Since each storage unit can only access limited information from neighboring units, the optimization process may converge slowly or, in some cases, fail to ensure that all units ultimately reach a globally optimal solution. Additionally, system topology and communication delays may cause information desynchronization, further affecting optimization performance. To address these issues, consensus algorithms have been widely applied in distributed optimization frameworks to ensure that all storage units gradually reach a consistent decision during iterations [15,16]. By leveraging consensus algorithms, storage units can not only optimize using neighboring information but also adjust their strategies through an iterative mechanism [17,18]. This approach accelerates convergence, enhances optimization stability, and ensures global consistency.

Moreover, uncertainty challenges remain a significant issue in the real-time scheduling of DSES systems. Current research primarily relies on forecast-based methods for optimizing energy storage scheduling [19,20,21]. For relatively stable and long-timescale signals, such as user demand, forecasting methods can effectively capture future load trends with reasonable accuracy. However, for commands requiring a second-level response, such as frequency regulation signals, the rapid fluctuations and high volatility of these signals—heavily influenced by grid conditions, frequency deviations, and other dynamic factors—make traditional forecasting methods insufficiently accurate [22,23]. As a result, the reliance on forecasting in such scenarios can degrade the effectiveness of real-time optimization and scheduling in shared energy storage systems. To address this issue, this paper employs a distributed online convex optimization approach, enabling DSES units to optimize adjustments based on real-time observation data without requiring accurate predictions of future states. By integrating online optimization and convex optimization theory, this method dynamically updates the optimal solution at each time step, allowing the system to adapt to rapid fluctuations in frequency regulation demand and enhance scheduling responsiveness [24]. Furthermore, the distributed optimization framework enables parallel computation across multiple storage units, avoiding the computational bottlenecks and single-point failure risks associated with centralized methods [25,26]. Additionally, the online optimization algorithm improves system adaptability and robustness in uncertain environments by utilizing incremental learning strategies rather than relying on complete historical data.

Distributed optimization relies on information exchange between energy storage units, requiring state synchronization at each iteration [27]. High-frequency communication not only increases bandwidth usage and computational overhead but also introduces potential communication delays, leading to asynchronous information updates that can slow down convergence [28]. Therefore, reducing communication frequency and redundant data exchange while maintaining optimization accuracy and convergence is a key challenge in distributed optimization. To address this issue, this paper introduces an event-triggered control mechanism, where communication is triggered only when state changes exceed a predefined threshold, effectively reducing unnecessary data transmission and improving computational efficiency. In recent years, event-triggered strategies have been applied to large-scale optimization problems, where information is updated only when specific trigger conditions are met [29,30,31,32,33]. This approach reduces communication frequency while ensuring convergence and the stability of the optimization process. However, existing event-triggered strategies still have notable limitations: (1) Fixed-threshold strategies determine communication triggers based on a preset threshold. However, in dynamic system environments, a fixed threshold may not adapt to varying state changes. When system state fluctuations are large, excessive triggering may occur, increasing communication overhead. Conversely, when state variations are small, delayed updates may degrade optimization performance. (2) Decreasing-threshold strategies progressively lower the trigger threshold to approximate the global optimum. However, if the threshold becomes too small, it may lead to an excessive communication frequency, increasing the information transmission burden rather than effectively reducing communication costs.

To address the aforementioned challenges, this paper proposes a distributed online convex optimization method based on consensus algorithms and adaptive event-triggered optimization, which significantly reduces the communication requirements in DSES while ensuring optimization performance. The main contributions of this work are as follows:

- (1) A novel consensus-based distributed online convex optimization method is proposed for the decentralized scheduling of energy storage units. The method leverages Lagrangian relaxation to address global constraints while maintaining scalability and robustness.

- (2) An adaptive event-triggered communication mechanism is developed to minimize communication overhead by dynamically adjusting the transmission threshold according to system states.

- (3) The algorithm’s robustness is demonstrated through simulations of dynamic disconnection and reconnection scenarios, showing a reliable scheduling performance under varying topologies.

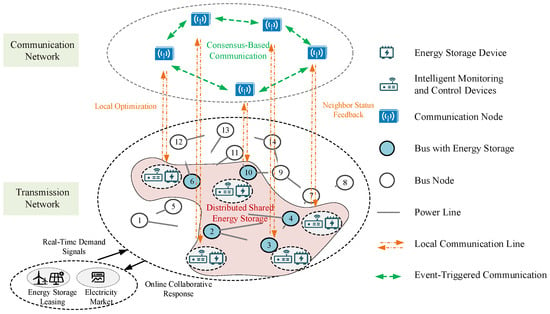

3. Distributed Real-Time Scheduling Framework Based on Consensus Algorithm

3.1. Real-Time Optimization Framework Design Based on Distributed Online Convex Optimization

A distributed energy storage system, due to its geographical dispersion and high communication costs, requires rapid response to scheduling demands. Traditional centralized optimization is constrained by computational burdens and communication latency. Distributed online convex optimization integrates convex optimization, distributed computing, and online optimization, enabling storage units to perform local computations while exchanging critical information via the communication network, thereby achieving decentralized global scheduling.

This method decomposes the global optimization problem into multiple subproblems corresponding to individual storage units. Each unit independently solves its local problem based on local information and exchanges key variables (such as Lagrange multipliers and local solutions) with neighboring units. Through iterative updates, the system gradually converges to the global optimum, enhancing scheduling efficiency and robustness.

In a distributed energy storage system, the objective of the global optimization problem is to achieve a coordinated scheduling of storage units through the communication network. In the context of online convex optimization, this is typically modeled by minimizing a cumulative time-varying loss function, where “loss” refers to a scalar cost function at each time step—not to be confused with physical energy loss. Specifically, the system’s global loss function is defined as follows:

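$$f_t(x) = \sum_{i=1}^{N} f_{i,t}(x)$$

where $N$ is the number of storage units and $f_{i,t}$ represents the local loss function at node $i$ at time $t$, which is typically associated with the operating costs and scheduling objectives of the storage unit. The optimization goal is to minimize the cumulative loss over a finite time horizon $T$:

$$\min_{x_1, \dots, x_T \in \mathcal{X}} \sum_{t=1}^{T} f_t(x_t)$$

where $x_t$ denotes the optimization variables at time $t$; $\mathcal{X}$ represents a compact and convex constraint set.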

To describe the optimization objective over the system’s time horizon in terms of the operational costs and revenues of the shared energy storage system, the loss function can be combined with the optimization objective defined in Section 2 and further expressed as follows:

To evaluate the performance of the distributed optimization algorithm, a dynamic regret metric is typically introduced as an evaluation criterion, defined as follows:

$$\mathrm{Reg}_T^{d} = \sum_{t=1}^{T} f_t(x_t) - \sum_{t=1}^{T} f_t(x_t^{*})$$

where $x_t$ represents the decision solution provided by the algorithm at time $t$; $x_t^{*}$ denotes the optimal solution at time $t$.

If the dynamic regret of the algorithm satisfies the sublinear condition $\lim_{T \to \infty} \mathrm{Reg}_T^{d} / T = 0$, then the time-averaged loss of the algorithm gradually approaches that of the global optimal solution over long-term operation.
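The regret definition above can be illustrated numerically. The following is a minimal sketch, not the paper's experiment: the scalar loss, the drifting target, the step size, and the function names `dynamic_regret` and `run_online_gradient_descent` are all hypothetical choices made for illustration.

```python
import math

def dynamic_regret(losses, decisions, minimizers):
    """Cumulative loss of the played decisions minus that of the per-step minimizers."""
    return sum(f(x) - f(x_star)
               for f, x, x_star in zip(losses, decisions, minimizers))

def run_online_gradient_descent(horizon, eta=0.25):
    """Track a slowly drifting target with online gradient descent.

    Hypothetical toy loss: f_t(x) = (x - c_t)^2 with c_t = 0.1 * sqrt(t),
    so the per-step minimizer is simply x_t^* = c_t.
    """
    targets = [0.1 * math.sqrt(t) for t in range(horizon)]
    losses = [lambda x, c=c: (x - c) ** 2 for c in targets]
    x, decisions = 0.0, []
    for c in targets:
        decisions.append(x)           # the decision is committed before f_t is revealed
        x = x - eta * 2.0 * (x - c)   # gradient step on the loss just observed
    return dynamic_regret(losses, decisions, targets)

for horizon in (100, 400, 1600):
    regret = run_online_gradient_descent(horizon)
    print(f"T={horizon:5d}  regret={regret:.4f}  regret/T={regret / horizon:.6f}")
```

In this toy setting the target drifts more and more slowly, so the regret per step shrinks as the horizon grows, which is the behaviour the sublinear condition describes.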

The distributed optimization algorithm enables each storage unit node to independently compute gradients and achieve coordination by exchanging state information over the communication network. Each node $i$ computes its gradient $\nabla f_{i,t}(x_{i,t})$ at time $t$ and updates the local variable $x_{i,t}$ using the following formula:

$$x_{i,t+1} = x_{i,t} - \eta \nabla f_{i,t}(x_{i,t})$$

where $\eta$ is the learning rate. Subsequently, node $i$ exchanges state information with the other nodes in its neighborhood $\mathcal{N}_i$ and receives the state values from neighboring nodes.

To ensure global consistency in the optimization process, a consensus constraint must be satisfied, requiring that for all $i, j$, the condition $x_{i,t} = x_{j,t}$ holds. This consistency can be achieved through distributed consensus algorithms, for example, using a weighted averaging update formula:

$$x_{i,t+1} = \sum_{j \in \mathcal{N}_i \cup \{i\}} w_{ij} x_{j,t}$$

where $w_{ij}$ is the weight that node $i$ assigns to the state received from node $j$.

Section 3.2 further explores how consensus algorithms enforce the consistency condition and analyzes their application in distributed energy storage systems.
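The combination of a local gradient step and weighted averaging with neighbours can be sketched as follows. This is an illustrative toy, assuming scalar decisions, a five-node ring with equal weights of 1/3, and hypothetical quadratic local losses; the helper names `ring_weights` and `consensus_gradient_round` are not from the paper.

```python
def ring_weights(n):
    """Doubly stochastic weights for a ring (n >= 3): 1/3 for self and each of the two neighbours."""
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i][j % n] = 1.0 / 3.0
    return W

def consensus_gradient_round(x, W, gradients, eta):
    """Local gradient step at every node, followed by weighted averaging with neighbours."""
    n = len(x)
    stepped = [x[i] - eta * gradients[i] for i in range(n)]
    return [sum(W[i][j] * stepped[j] for j in range(n)) for i in range(n)]

# Hypothetical local losses f_i(x) = 0.5 * (x - c_i)^2; the global minimiser is mean(c) = 3.
c = [1.0, 2.0, 3.0, 4.0, 5.0]
W = ring_weights(len(c))
x = [0.0] * len(c)
for _ in range(500):
    grads = [x[i] - c[i] for i in range(len(c))]
    x = consensus_gradient_round(x, W, grads, eta=0.05)

print([round(v, 3) for v in x])
```

With a constant step size the nodes settle in a small neighbourhood of the global minimiser rather than on it exactly; their network average, however, matches the minimiser because the weights are doubly stochastic.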

In practical applications, communication delay is an unavoidable factor in distributed energy storage systems. Communication delays may lead to asynchronous state updates, thereby affecting the sensitivity and efficiency of optimization algorithms. The communication delay represents the time difference in information transmission between node and node , i.e., . To address the delay issue, an asynchronous optimization approach can be adopted, allowing nodes to perform updates even when only partial information has been received.

3.2. The Distributed Optimization Strategy Based on Consensus Algorithm

The consensus algorithm helps distributed storage units agree on a common decision by exchanging information with neighbors. Its core goal is to reach a consistent system state without using a central controller, expressed as follows:

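$$\lim_{t \to \infty} \| x_{i,t} - x^{*} \| = 0, \quad \forall i$$

where $x_{i,t}$ represents the local decision variable of node $i$ at time $t$; $x^{*}$ is the globally consistent optimal value. In the optimization framework of this paper, $x_{i,t}$ corresponds to the power decision variable of storage unit $i$ at time $t$.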

In the optimization problem, the power balance constraint involves the power decisions of multiple storage units and serves as a global coupling constraint. Since this constraint spans all units, it cannot be enforced locally. We use the Lagrange multiplier method to embed the constraint into each unit’s objective. This allows all units to coordinate indirectly and converge toward a global solution through local updates and communication. To simplify notation, is used to denote this constraint. The Lagrangian form of the optimization objective is then given by the following:

For power limits and state of charge constraints, since these constraints only affect local variables, each storage unit can directly impose these constraints in its local optimization problem without requiring relaxation via the Lagrange multiplier.
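Because the power and state-of-charge limits are purely local, each unit can enforce them by clipping its own candidate power. The sketch below is one way to do this under stated assumptions: positive power means discharging, charging and discharging are lossless, and the function name `project_power` and its default bounds are hypothetical.

```python
def project_power(p, soc, p_max, capacity, dt, soc_min=0.1, soc_max=0.9):
    """Clip a requested power so the power limit and next-step SoC bounds both hold.

    p        requested power in kW (assumed sign convention: positive = discharge)
    soc      current state of charge as a fraction of capacity (assumed within bounds)
    p_max    rated power in kW
    capacity energy capacity in kWh
    dt       step length in hours
    """
    lower, upper = -p_max, p_max
    # Assumed lossless SoC model: soc_next = soc - p * dt / capacity
    upper = min(upper, (soc - soc_min) * capacity / dt)  # do not discharge below soc_min
    lower = max(lower, (soc - soc_max) * capacity / dt)  # do not charge above soc_max
    return min(max(p, lower), upper)

print(project_power(80.0, 0.50, p_max=50.0, capacity=100.0, dt=0.25))   # limited by rated power
print(project_power(30.0, 0.12, p_max=50.0, capacity=100.0, dt=0.25))   # limited by low SoC
print(project_power(-30.0, 0.88, p_max=50.0, capacity=100.0, dt=0.25))  # limited by high SoC
```

Only the coupling power balance constraint then needs the multiplier-based coordination described above.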

Thus, the global optimization problem is formulated as follows:

subject to the following:

In real-time operation, the dynamic and uncertain nature of distributed energy storage systems requires the optimization to be executed online. Therefore, we adopt an online optimization framework and update the solution using a gradient descent method. Specifically, at each time step, each storage unit can only obtain local information and its local gradient direction, without access to the globally optimal solution. Consequently, we integrate the local gradient update with a consensus mechanism so that all units optimize in a coordinated manner. The power decision for each unit at each time step is formulated as the following optimization problem:

To facilitate understanding, the structure of Equation (22) can be explained in terms of three key components. The first term is the gradient descent term, which adjusts power in the direction of historical gradients to reduce cost. Since the negative gradient is the direction of steepest descent, this term moves the storage unit toward an optimal schedule. The second term is a regularization term, which penalizes drastic changes in power adjustment and thus prevents excessive fluctuations in power output that may affect system stability. The regularization parameter controls the adjustment rate; larger values result in slower power adjustments and a smoother optimization process. The third term is a constraint correction term, in which the Lagrange multiplier adjusts for the global constraint. The consensus algorithm ensures that each storage unit gradually aligns its power decisions with the global power balance constraint by incorporating the weighted information received from its neighboring nodes.

Since the optimization process adopts the Lagrange multiplier relaxation method, the Lagrange multiplier acts as a penalty factor for the global constraint, and its update must be coordinated among storage units. Under the consensus algorithm, each storage unit can only receive information from its neighboring nodes, so the multiplier update follows a weighted averaging strategy:

Here, the weights are the entries of the adjacency matrix and adjust the influence of transmitted information; the consensus variable is further used to refine the optimization direction in the subsequent steps; and the step size parameter controls the update rate. This update equation ensures that the Lagrange multiplier gradually converges across all nodes, allowing all storage units to achieve coordinated optimization without a centralized controller.

In a distributed environment, an individual storage unit cannot directly obtain information about the global constraint. Therefore, an auxiliary consensus variable is introduced as a local estimate of the global constraint. The update formula for the consensus variable is given by the following:

In this equation, the first term enables information exchange among neighboring nodes via the consensus algorithm, ensuring gradual convergence of the consensus variable across all nodes. The second term represents the variation of the global constraint at the current time step, which corrects the consensus variable to reflect the latest changes in the global system state. The core objective of this update strategy is to ensure that storage units share consistent constraint information, thereby enhancing the global feasibility of the optimization process.
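The two updates described above (weighted averaging of the multiplier plus a step along the consensus variable, and a consensus variable corrected by the change in the constraint) can be sketched as follows. The exact expressions are an assumption in the style of dynamic average consensus, not a transcription of Equations (23) and (24); the names `dual_consensus_round`, `lam`, `y`, `g_new`, `g_old`, and `mu` are hypothetical.

```python
def dual_consensus_round(lam, y, W, g_new, g_old, mu):
    """One round of the assumed multiplier and tracking updates.

    lam[i]  local copy of the Lagrange multiplier at node i
    y[i]    local estimate of the network-average constraint term
    g_*[i]  node i's local contribution to the coupling constraint
    mu      step size of the multiplier update
    """
    n = len(lam)

    def mix(values, i):
        # Weighted average of the values held by node i and its neighbours
        return sum(W[i][j] * values[j] for j in range(n))

    lam_next = [mix(lam, i) + mu * y[i] for i in range(n)]
    y_next = [mix(y, i) + g_new[i] - g_old[i] for i in range(n)]
    return lam_next, y_next

# Four-node ring with equal weights (doubly stochastic by construction).
third = 1.0 / 3.0
W = [[third, third, 0.0, third],
     [third, third, third, 0.0],
     [0.0, third, third, third],
     [third, 0.0, third, third]]

g_old = [0.4, -0.1, 0.3, 0.2]   # hypothetical local constraint contributions
g_now = [0.1, -0.1, 0.0, 0.2]   # contributions after the units adjust their power
lam, y = [0.0] * 4, list(g_old)  # the tracker starts from the local contributions

lam, y = dual_consensus_round(lam, y, W, g_now, g_old, mu=0.1)
for _ in range(30):              # constraint terms stay constant afterwards
    lam, y = dual_consensus_round(lam, y, W, g_now, g_now, mu=0.1)

print([round(v, 4) for v in y])
```

Because the weights are doubly stochastic, the sum of the local estimates always equals the sum of the local constraint terms, so every node ends up holding the network average without any node seeing the global constraint directly.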

4. Adaptive Event-Triggered Mechanism and Algorithm Deployment

4.1. Network Topology and Information Interaction Mechanism

In a DSES system, the communication network serves as a key support for achieving the coordinated optimization and scheduling of storage units. The topology structure not only affects the efficiency and stability of information exchange but also directly influences the system’s response speed to dispatch commands and the feasibility of control strategies.

Under a distributed control framework, storage units exchange information through a neighbor communication mechanism to achieve distributed consensus optimization. The communication network is typically modeled as a graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, where the node set $\mathcal{V}$ represents the set of storage units, with each node corresponding to a storage unit, and the edge set $\mathcal{E}$ represents the communication links between nodes. If there exists a communication link between two nodes $i, j \in \mathcal{V}$, it is defined as an edge $(i, j) \in \mathcal{E}$.

The communication relationship is described using an adjacency matrix $A = [a_{ij}]$, where the matrix elements satisfy $a_{ij} = 1$ if there is communication between nodes $i$ and $j$, and $a_{ij} = 0$ if there is no communication. To ensure the stability and fairness of the network, the weight matrix $W = [w_{ij}]$ typically satisfies a double stochasticity condition, i.e., $\sum_{j} w_{ij} = 1$ for every $i$ and $\sum_{i} w_{ij} = 1$ for every $j$.
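One standard way to obtain a doubly stochastic weight matrix from a 0/1 adjacency matrix is the Metropolis rule. The sketch below uses it as an illustrative choice; the paper does not state which weighting it uses, and the function name `metropolis_weights` is hypothetical.

```python
def metropolis_weights(adjacency):
    """Build symmetric, doubly stochastic weights from a symmetric 0/1 adjacency matrix.

    Off-diagonal: w_ij = 1 / (1 + max(deg_i, deg_j)) for linked nodes, 0 otherwise.
    Diagonal:     w_ii = 1 - sum of the off-diagonal weights in row i.
    """
    n = len(adjacency)
    degree = [sum(row) for row in adjacency]
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and adjacency[i][j]:
                W[i][j] = 1.0 / (1 + max(degree[i], degree[j]))
        W[i][i] = 1.0 - sum(W[i])  # the diagonal entry is still zero when this sum is taken
    return W

# Path graph 0 - 1 - 2 - 3 (unequal degrees, so uniform weights would not work).
A = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]]
W = metropolis_weights(A)
for row in W:
    print([round(w, 3) for w in row])
```

Each node only needs its own degree and the degrees of its neighbours to compute its row, which suits a setting without a central coordinator.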

In the communication network of a DSES system, different topology structures determine the methods and efficiency of information exchange. The following provides adjacency matrix representations for three typical topology models: fully connected topology, ring topology, and randomly sparse connected topology.

In a fully connected topology, all storage units can communicate directly, and each node can exchange information with all the other nodes. Taking a system with five storage units as an example, its adjacency matrix is given by the following:

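$$A_{\text{full}} = \begin{bmatrix} 0 & 1 & 1 & 1 & 1 \\ 1 & 0 & 1 & 1 & 1 \\ 1 & 1 & 0 & 1 & 1 \\ 1 & 1 & 1 & 0 & 1 \\ 1 & 1 & 1 & 1 & 0 \end{bmatrix}$$

where $a_{ij} = 1$ represents a communication link between storage units $i$ and $j$, and $a_{ii} = 0$ indicates no self-connection.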

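In a ring topology, each storage unit only communicates with its two adjacent units, forming a closed ring network. This topology reduces communication overhead but may impair overall communication performance if some nodes fail. For five storage units numbered consecutively around the ring, the adjacency matrix is given by the following:

$$A_{\text{ring}} = \begin{bmatrix} 0 & 1 & 0 & 0 & 1 \\ 1 & 0 & 1 & 0 & 0 \\ 0 & 1 & 0 & 1 & 0 \\ 0 & 0 & 1 & 0 & 1 \\ 1 & 0 & 0 & 1 & 0 \end{bmatrix}$$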

In a randomly sparse connected topology, communication links in the network are randomly selected, with some nodes only communicating with a few others, resulting in a sparse connectivity structure. This topology minimizes communication costs but may lead to higher information transmission delays at certain nodes, potentially affecting the convergence speed of the consensus algorithm. An example adjacency matrix for a randomly sparse connected topology is the following:
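The three topologies can be generated programmatically. The following is a minimal sketch using only the standard library; the function names are hypothetical, and the random sparse generator (a random spanning tree plus a few extra links) is one illustrative way to keep the graph connected, not the construction used in the paper.

```python
import random

def fully_connected(n):
    """Every pair of distinct units is linked."""
    return [[0 if i == j else 1 for j in range(n)] for i in range(n)]

def ring(n):
    """Each unit is linked to its two adjacent units."""
    A = [[0] * n for _ in range(n)]
    for i in range(n):
        A[i][(i + 1) % n] = A[(i + 1) % n][i] = 1
    return A

def random_sparse(n, extra_edge_prob=0.2, seed=0):
    """Random spanning tree (guarantees connectivity) plus a few random extra links."""
    rng = random.Random(seed)
    A = [[0] * n for _ in range(n)]
    for i in range(1, n):
        j = rng.randrange(i)              # attach node i to an earlier node
        A[i][j] = A[j][i] = 1
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < extra_edge_prob:
                A[i][j] = A[j][i] = 1
    return A

def is_connected(A):
    """Depth-first search from node 0 over the undirected graph."""
    seen, stack = {0}, [0]
    while stack:
        i = stack.pop()
        for j, linked in enumerate(A[i]):
            if linked and j not in seen:
                seen.add(j)
                stack.append(j)
    return len(seen) == len(A)

for name, A in [("full", fully_connected(5)), ("ring", ring(5)), ("sparse", random_sparse(5))]:
    links = sum(map(sum, A)) // 2
    print(f"{name:6s} links={links} connected={is_connected(A)}")
```

Counting links per topology gives a quick proxy for the per-round communication load that each structure implies.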

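The neighbor set of each node $i$ is defined as $\mathcal{N}_i = \{ j \in \mathcal{V} : (i, j) \in \mathcal{E} \}$, where neighbors are the storage units that can directly communicate with node $i$. The in-degree and out-degree of a node refer to the sizes of its in-neighbor and out-neighbor sets, defined as $d_i^{\text{in}} = |\mathcal{N}_i^{\text{in}}|$ and $d_i^{\text{out}} = |\mathcal{N}_i^{\text{out}}|$, respectively.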

The communication network is typically assumed to be independent of the physical network of the storage system, allowing for a flexible communication topology design. However, in practical applications, power lines often serve as the medium for data transmission, meaning that the network topology may be constrained by the physical layout of the power infrastructure.

4.2. The Design of the Adaptive Event-Triggered Mechanism

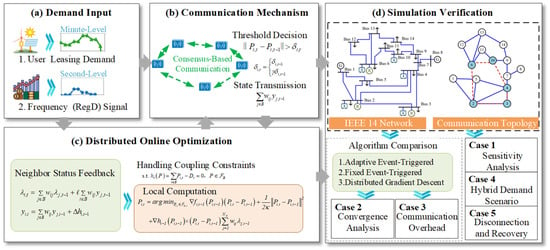

The design of the adaptive event-triggered mechanism is based on the idea that the system should dynamically adjust trigger conditions according to the changes in system state, ensuring that the system does not update information at a fixed frequency regardless of state variations. In the optimization framework of this paper, the adaptive event-triggered scheduling (AETS) is primarily implemented as follows.

At each time step $t$, a storage unit only triggers communication when its state error exceeds a certain threshold. The transmission signal of storage unit $i$ at time $t$ is defined as follows:

$$\hat{x}_{i,t} = \begin{cases} x_{i,t}, & t \in \mathcal{T}_i \\ \hat{x}_{i,t-1}, & \text{otherwise} \end{cases}$$

where $x_{i,t}$ represents the state generated by storage unit $i$ at time $t$, and $\hat{x}_{i,t-1}$ is the previously transmitted state. Only when $x_{i,t}$ satisfies the trigger condition will it be updated and sent to neighboring nodes; otherwise, the previous value is maintained. $\mathcal{T}_i$ denotes the set of specific time steps when storage unit $i$ needs to transmit information.

Traditional event-triggered mechanisms use a fixed threshold, whereas adaptive event-triggered mechanisms allow the threshold to change dynamically over time. If the trigger condition continues to be met, the threshold remains unchanged. However, if a long period has passed without triggering an event, the threshold gradually decreases to ensure that even small state variations are not ignored. The event-trigger condition is defined as follows:

$$t_{k+1}^{i} = \inf \left\{ t > t_{k}^{i} : \| x_{i,t} - \hat{x}_{i,t-1} \| > \epsilon_{i,t-1} \right\}$$

where $t_{k+1}^{i}$ denotes the next triggering time of storage unit $i$, $\hat{x}_{i,t-1}$ is the most recently transmitted state, and $\epsilon_{i,t-1}$ is the dynamically adjusted threshold at the previous time step.
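The transmit-or-hold behaviour can be illustrated with a scalar state. This is a minimal sketch with a fixed threshold for simplicity; the function name `transmit` and the sample values are hypothetical.

```python
def transmit(state, last_sent, threshold):
    """Return the value neighbours will see and whether a transmission was triggered."""
    if abs(state - last_sent) > threshold:
        return state, True       # error too large: send the fresh state
    return last_sent, False      # otherwise neighbours keep the held value

states = [0.0, 0.05, 0.12, 0.13, 0.30, 0.31]  # hypothetical local states over time
last_sent = 0.0
seen_by_neighbours, trigger_steps = [], []
for t, state in enumerate(states):
    last_sent, triggered = transmit(state, last_sent, threshold=0.1)
    seen_by_neighbours.append(last_sent)
    if triggered:
        trigger_steps.append(t)

print(seen_by_neighbours)  # held values between transmissions
print(trigger_steps)       # steps at which a message was actually sent
```

Here only two of six steps cause a transmission, while the value held by neighbours never deviates from the true state by more than the threshold.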

The update rule for is given by the following:

Here, the reduction factor controls the rate of threshold decay over time. Equation (30) describes a classical static event-triggered mechanism based on error reduction, in which the reduction factor is fixed. To improve dynamic responsiveness and reduce communication overhead, we further develop the strategy by incorporating an adaptive event-triggered mechanism that adjusts the threshold based on system state variations (see Equation (35)).

Under the adaptive event-triggered mechanism, the signal error must always satisfy the constraint:

This constraint ensures that even at lower communication frequencies, system state errors remain controlled, preventing excessive error accumulation that could degrade optimization performance.

The Zeno phenomenon refers to a scenario where an infinite number of events are triggered within a finite time, which, in practical applications, can lead to unnecessary computational and communication overhead. To avoid this issue, the adaptive event-triggered mechanism imposes a minimum time interval $\tau_{\min}$ between any two consecutive triggering times $t_{k}^{i}$ and $t_{k+1}^{i}$, ensuring the following:

$$t_{k+1}^{i} - t_{k}^{i} \ge \tau_{\min} > 0$$

This setting effectively prevents the occurrence of the Zeno phenomenon and improves the robustness of the system.

To ensure the convergence of the optimization algorithm, the adaptive event-triggered mechanism must satisfy the following assumption:

This assumption guarantees that as time progresses, the error threshold will not increase indefinitely while allowing global convergence to be achieved under a reasonable communication frequency.

4.3. Algorithm Implementation Process

This paper uses the power of storage units as the state variable in the adaptive event-triggered mechanism. At each time step , the system updates only when certain trigger conditions are met. Specifically, the trigger condition is defined as follows:

where is the adaptive adjustable trigger threshold, updated according to the following rule:

where is the forgetting factor, typically set within , ensuring smooth adjustments of the trigger threshold; is a dynamic adjustment coefficient used to modify the trigger threshold based on the magnitude of state changes.

When the system state changes significantly, the trigger threshold decreases, increasing the triggering frequency; when system state variations are small, the threshold increases, reducing unnecessary information exchange.
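The qualitative behaviour described above can be sketched in a few lines. The rule below does not reproduce Equation (35); it is a hypothetical smoothing rule chosen only because it shrinks the threshold under large power changes and lets it recover when the state is quiet. The names `update_threshold`, `forgetting`, `gain`, and `eps_ref` and all numeric values are assumptions.

```python
def update_threshold(eps, delta_p, forgetting=0.95, gain=0.1, eps_ref=1.0):
    """Hypothetical adaptive rule: smooth the threshold toward a state-dependent target.

    The target equals eps_ref when the power is steady and shrinks as |delta_p| grows,
    so large state changes lower the threshold and make triggering more likely.
    """
    target = eps_ref / (1.0 + gain * abs(delta_p))
    return forgetting * eps + (1.0 - forgetting) * target

eps = 1.0
history = []
# 50 steps of large power swings followed by 50 quiet steps (hypothetical data).
for delta_p in [20.0] * 50 + [0.0] * 50:
    eps = update_threshold(eps, delta_p)
    history.append(eps)

print(f"after burst: {history[49]:.3f}, after quiet period: {history[99]:.3f}")
```

A forgetting factor closer to 1 makes the threshold react more slowly and smoothly, while a larger gain makes it more sensitive to the size of the power change.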

To improve clarity and facilitate understanding, the proposed online optimization strategy is summarized in Algorithm 1 in the form of pseudo-code. This algorithm integrates the adaptive event-triggered mechanism into a consensus-based distributed online optimization process. At each time step, the algorithm checks the power variation against a dynamic threshold to decide whether to execute updates and exchange information. The threshold itself is updated adaptively, enabling a reduced communication overhead while ensuring coordination and convergence.

| Algorithm 1 Adaptive Event-Triggered Distributed Online Optimization Strategy | |
|---|---|
| Input: | Step size, forgetting factor, dynamic adjustment coefficient, initial power, initial threshold, dual variables |
| Output: | Updated power decision variable |
| 1: | Initialize time step |
| 2: | Initialize all variables |
| 3: | repeat |
| 4: | Compute local gradients |
| 5: | Calculate the power variation |
| 6: | if the power variation exceeds the threshold then |
| 7: | Update power variable using Equation (22) |
| 8: | Update Lagrange multiplier using Equation (23) |
| 9: | Update consensus variable using Equation (24) |
| 10: | Exchange info with neighbors |
| 11: | else |
| 12: | Skip update to reduce communication |
| 13: | end if |
| 14: | Update threshold using Equation (35) |
| 15: | Increment time step |
| 16: | until the termination condition is met |

5. Case Study

5.1. Experimental Setup for the Case Study

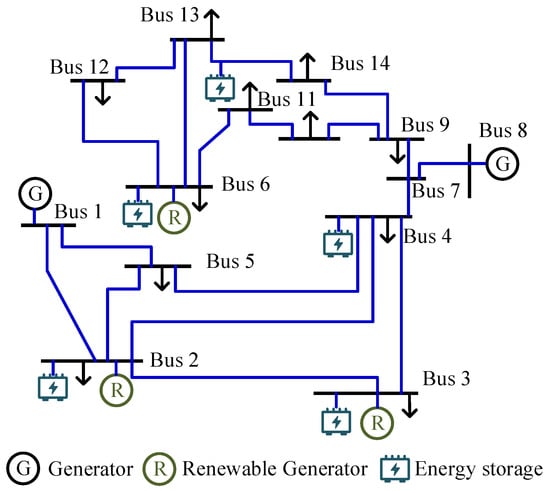

The IEEE 14-bus system, which is widely used for power system optimization and stability analysis, is selected as the test platform. It consists of 5 generator buses, 11 transmission lines, and 11 load buses, with integrated renewable energy sources and energy storage units to simulate an intelligent grid environment (as shown in Figure 3). Detailed node numbering and equipment parameters are provided in Table 1.

Figure 3. IEEE 14-bus power system test case with integrated renewable generation and energy storage.

Table 1. Parameter settings of distributed BESS units.

Since distributed optimization control is used, each storage unit coordinates with neighbors via a communication network modeled by an adjacency matrix. A ring topology is set as the default, while fully connected and randomly sparse topologies are also analyzed in Case Study 2 to assess their impact on communication load.

The focus is on real-time optimization for storage units participating in frequency regulation services and power leasing. The system operates under a hybrid load scenario, where storage units dynamically adjust power allocation based on PJM RegD frequency regulation commands and renewable energy-based user demand.

Experiments are conducted in Python 3.8. The libraries NumPy (v1.21.0), Pandas (v1.3.0), Matplotlib (v3.4.2), and SciPy (v1.7.0) are used to handle data processing, visualization, and optimization, while NetworkX (v2.6.3) is used to construct the communication network.

The hardware setup includes an Intel i7-12700K processor and 32 GB of RAM.

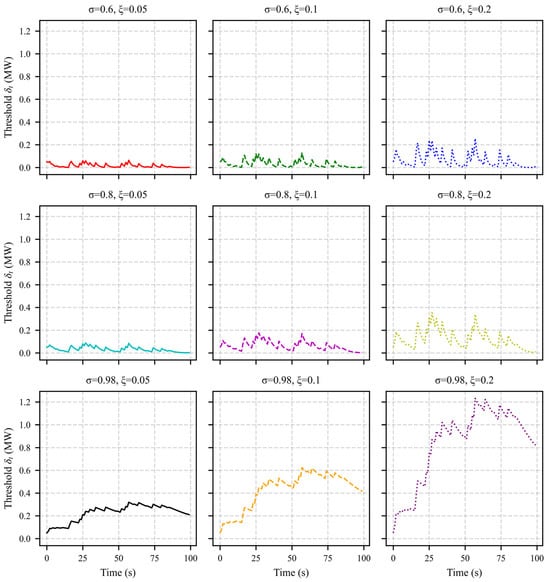

5.2. A Sensitivity Analysis of Adaptive Event-Triggered Parameters

To assess the behavior of the adaptive event-triggered mechanism under different threshold update strategies, we perform a sensitivity analysis using the power data of BESS 1. The analysis focuses on two key parameters: the forgetting factor, which influences the memory length of past thresholds, and the dynamic adjustment coefficient, which determines the responsiveness to power variations. Nine combinations of the two parameters are tested.

Figure 4 illustrates the evolution of the adaptive threshold across these combinations. As the dynamic adjustment coefficient increases, the mechanism becomes more responsive to power fluctuations, resulting in more frequent threshold adjustments and fewer unnecessary communications. Higher values of this coefficient are especially beneficial during rapid system changes.

Figure 4. The variation trends of the adaptive threshold under different values of the forgetting factor and dynamic adjustment coefficient.

In contrast, increasing the forgetting factor slows the decay of the threshold, producing smoother and more stable threshold curves. A high forgetting factor (e.g., 0.98) effectively accumulates historical information, which is advantageous for systems with infrequent or bursty activity.

Overall, for systems with frequent dynamics, a larger dynamic adjustment coefficient (e.g., 0.2) enhances adaptability. For systems with less frequent events, a larger forgetting factor (e.g., 0.98) ensures threshold stability. The setting used in the main implementation is chosen to balance these two effects.

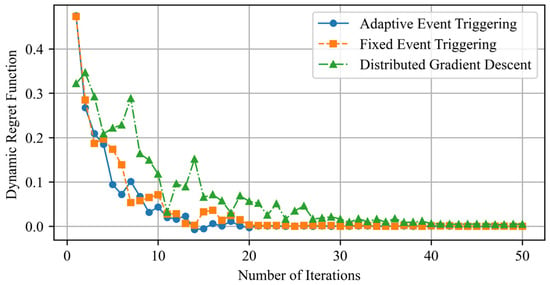

5.3. A Convergence Performance Comparison of Adaptive Event-Triggered and Other Algorithms

To evaluate convergence performance, this study compares the dynamic regret of three methods: adaptive event-triggered optimization, fixed event-triggered optimization, and distributed gradient descent. Adaptive event-triggering dynamically adjusts the threshold, reducing computation and communication while preserving optimization accuracy. In contrast, fixed event-triggering updates at fixed time intervals and cannot adapt its update frequency to the optimization state. Although distributed gradient descent scales well, it performs computation and communication at every time step, which results in slower convergence.

Through comparative experiments, the advantages of adaptive event-triggering in terms of convergence speed, computational efficiency, and stability are verified. The dynamic regret function is used to measure the loss of an algorithm relative to the optimal strategy, providing an intuitive representation of the convergence trend. Figure 5 illustrates the variations in the dynamic regret function for different optimization methods.

Figure 5. Variation trends of the dynamic regret function for different optimization methods.

Experimental results indicate that the adaptive event-triggered algorithm outperforms the other two methods in both convergence speed and stability. It reduces unnecessary computation and communication, adjusts rapidly during the initial optimization phase, and remains stable in later stages, avoiding the overhead caused by frequent updates. The fixed event-triggered method also converges relatively quickly at first but stabilizes only after 15 iterations; because its triggering schedule ignores the optimization state, it may perform unnecessary updates or act on lagging information, which reduces overall efficiency. The distributed gradient descent method converges most slowly, and the persistent small-amplitude fluctuations in its regret curve indicate an oscillating optimization path that makes rapid convergence difficult.
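The dynamic regret metric used in this comparison can be illustrated with a short sketch. It accumulates, over time, the gap between the loss of the decision actually played and the loss of the per-step optimum. The quadratic losses, the target sequence, the step size, and the helper name `dynamic_regret` are all hypothetical and stand in for the storage scheduling costs; the learner is plain online gradient descent rather than the proposed algorithm.

```python
def dynamic_regret(losses, decisions, optima):
    """Cumulative sum over t of f_t(x_t) - f_t(x_t*)."""
    total, curve = 0.0, []
    for f, x, x_star in zip(losses, decisions, optima):
        total += f(x) - f(x_star)
        curve.append(total)
    return curve

# Hypothetical time-varying quadratic losses f_t(x) = 0.5 * (x - c_t)^2,
# whose per-step minimizer is x_t* = c_t.
targets = [1.0 + 0.01 * t for t in range(50)]
losses = [lambda x, c=c: 0.5 * (x - c) ** 2 for c in targets]

# Online gradient descent: play x_t, observe f_t, then step along -grad f_t(x_t).
step = 0.5
x, decisions = 0.0, []
for c in targets:
    decisions.append(x)
    x -= step * (x - c)

regret = dynamic_regret(losses, decisions, targets)
print(f"dynamic regret after {len(regret)} steps: {regret[-1]:.4f}")
```

A curve that flattens quickly corresponds to fast convergence; a curve that keeps growing indicates that the decisions lag behind the moving optimum.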

5.4. A Comparative Analysis of Communication Overhead and Consensus Performance Under Different Communication Mechanisms

In the real-time optimization of DSES systems, communication overhead is a key factor in evaluating algorithm efficiency. Excessive communication increases delays and computational costs and degrades real-time performance, while insufficient communication amplifies consensus errors, leading to suboptimal control strategies.

1. The Impact of Different Communication Mechanisms on Communication Overhead

Firstly, a comparison was conducted among adaptive event-triggered optimization, fixed event-triggered optimization, and distributed gradient descent, analyzing their impact on the total communication occurrences, the average communication frequency, and the communication data volume during the optimization process. The experimental results are presented in Table 2.

Table 2. A comparison of communication overhead under different communication mechanisms.

The total communication volume (in KB) is calculated as the total communication count multiplied by the data size per transmission. Each transmission includes state variables, gradient updates, and other optimization-related information. In this experiment, the data size per transmission is assumed to be 0.047 KB, and the total communication volume is derived accordingly.

Experimental results show that the adaptive event-triggered method incurs the lowest communication overhead, reducing the total communication by 33% compared to the fixed event-triggered method and 60% compared to distributed gradient descent. By triggering communication only when necessary, it minimizes redundant data exchanges while maintaining a low consensus error. The fixed event-triggered method results in a higher communication overhead due to fixed-interval updates, leading to unnecessary transmissions at certain time steps, totaling 180 communications. The distributed gradient descent method has the highest communication burden, performing global communication at every iteration, with a total of 300 communications. While it achieves the lowest consensus error, its high communication frequency imposes a significant system load.
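The arithmetic behind these figures can be checked with a few lines. The counts of 180 and 300 and the 0.047 KB payload come from the text; the adaptive count of 120 is an inferred value that is consistent with the stated 33% and 60% reductions but is not quoted directly here. The function names are hypothetical.

```python
DATA_SIZE_KB = 0.047  # data size per transmission, as assumed in the experiment

def total_volume_kb(count, size_kb=DATA_SIZE_KB):
    """Total communication volume = communication count x size per transmission."""
    return count * size_kb

def reduction_percent(count, baseline):
    """Relative reduction of `count` with respect to `baseline`, in percent."""
    return 100.0 * (1.0 - count / baseline)

counts = {
    "adaptive event-triggered": 120,  # inferred from the reductions, not quoted
    "fixed event-triggered": 180,
    "distributed gradient descent": 300,
}

for name, count in counts.items():
    print(f"{name}: {count} communications, {total_volume_kb(count):.2f} KB")

adaptive = counts["adaptive event-triggered"]
for name in ("fixed event-triggered", "distributed gradient descent"):
    print(f"reduction vs {name}: {reduction_percent(adaptive, counts[name]):.0f}%")
```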

2. The Impact of Network Topology on Communication Overhead

This study further analyzes the impact of different network topologies on communication overhead. Optimization experiments were conducted under ring topology, sparsely connected grid topology, and fully connected topology, with the total communication count, communication per iteration, and consensus error recorded. The results are presented in Table 3.

Table 3. A comparison of communication overhead under different network topologies.
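How topology shapes the cost of reaching consensus can be sketched with plain average consensus. The example below is only illustrative and does not reproduce the values in Table 3: it uses six nodes, Metropolis weights, hypothetical initial estimates, and a tolerance of 0.02 on the maximum deviation from the network average. Messages per iteration are counted as one message per directed link.

```python
def ring(n):
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}

def grid(rows, cols):
    nbrs = {i: set() for i in range(rows * cols)}
    for r in range(rows):
        for c in range(cols):
            i = r * cols + c
            if c + 1 < cols:          # link to the right-hand neighbor
                nbrs[i].add(i + 1)
                nbrs[i + 1].add(i)
            if r + 1 < rows:          # link to the neighbor below
                nbrs[i].add(i + cols)
                nbrs[i + cols].add(i)
    return nbrs

def complete(n):
    return {i: set(range(n)) - {i} for i in range(n)}

def consensus_step(x, nbrs):
    """One synchronous averaging step with Metropolis weights."""
    new = []
    for i, xi in enumerate(x):
        acc = xi
        for j in nbrs[i]:
            w = 1.0 / (1 + max(len(nbrs[i]), len(nbrs[j])))
            acc += w * (x[j] - xi)
        new.append(acc)
    return new

def run(nbrs, x0, tol=0.02, max_iter=1000):
    """Return (iterations, messages per iteration, total messages)."""
    x, mean = list(x0), sum(x0) / len(x0)
    per_iter = sum(len(v) for v in nbrs.values())
    iters = 0
    while max(abs(v - mean) for v in x) > tol and iters < max_iter:
        x = consensus_step(x, nbrs)
        iters += 1
    return iters, per_iter, iters * per_iter

x0 = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical initial local estimates
topologies = {
    "ring": ring(6),
    "grid (2x3)": grid(2, 3),
    "fully connected": complete(6),
}
summary = {name: run(nbrs, x0) for name, nbrs in topologies.items()}
for name, (iters, per_iter, total) in summary.items():
    print(f"{name}: {iters} iterations, {per_iter} msgs/iter, {total} msgs total")
```

Denser graphs need fewer iterations but send more messages per iteration, which is the trade-off this comparison examines.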

3. The Trade-off Analysis Between Communication Efficiency and Consensus Error

Consensus error measures the deviation of decision variables in distributed optimization from the global optimal solution. It is closely related to communication frequency—insufficient communication may result in delayed information exchange, leading to larger optimization deviations among storage units, whereas excessive communication increases computational and communication overhead.

To analyze the trade-off between consensus error and communication overhead, this study records the number of communications required to reach a target consensus error of 0.02 for different optimization methods, along with the iterations needed for convergence. The results are presented in Table 4.

Table 4. The relationship between consensus error and communication overhead.

Experimental results show that the adaptive event-triggered method achieves the target consensus error with minimal communication overhead, requiring only 110 communications and 12 iterations for convergence. By dynamically adjusting communication frequency, it reduces redundancy while maintaining optimization performance.

The fixed event-triggered method requires 170 communications and 18 iterations, indicating that fixed triggering causes unnecessary communication and slower convergence.

The distributed gradient descent method requires 250 communications and 40 iterations, imposing the highest communication overhead. While it ensures high optimization accuracy, its computational burden makes it less suitable for large-scale distributed systems.
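The trade-off between transmissions and accuracy can be seen in a simplified, single-node form. In the sketch below, a node sends its state only when it differs from the last transmitted value by more than a threshold, so neighbors work with a held value in between. The held-value error is a per-node proxy and not the consensus error reported in Table 4; the signal, the thresholds, and the function name `event_triggered_log` are hypothetical.

```python
import math

def event_triggered_log(signal, threshold):
    """Send a sample only when it differs from the last sent value by more
    than `threshold`; return (number of sends, worst held-value error)."""
    last_sent = signal[0]
    sends, worst = 1, 0.0  # the first sample is always sent
    for value in signal[1:]:
        if abs(value - last_sent) > threshold:
            last_sent = value
            sends += 1
        worst = max(worst, abs(value - last_sent))
    return sends, worst

signal = [math.sin(0.2 * t) for t in range(200)]  # hypothetical local state

for threshold in (0.0, 0.02, 0.1):
    sends, worst = event_triggered_log(signal, threshold)
    print(f"threshold={threshold:.2f}: {sends} sends, worst error={worst:.3f}")
```

By construction the held-value error never exceeds the threshold, so the threshold directly bounds how stale the shared information can become while fewer transmissions are made.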

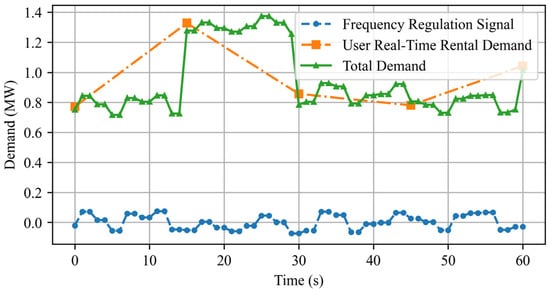

5.5. Real-Time Power Scheduling Optimization for Distributed Energy Storage Systems in a Hybrid Load Scenario

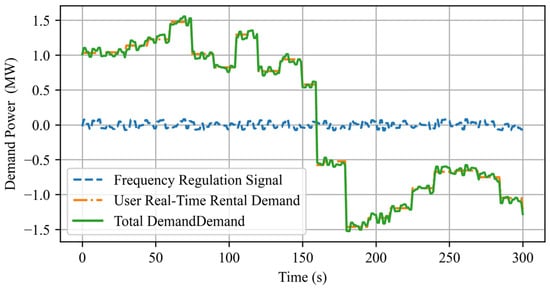

In the real-time scheduling of a distributed shared energy storage (DSES) cluster, optimizing power allocation to meet both frequency regulation needs and fluctuating user leasing demands is crucial. Figure 6 presents the input data, including frequency regulation commands, user leasing demand, and the total demand variations over time. Frequency regulation commands fluctuate rapidly with small amplitudes, reflecting real-time grid balancing needs, while user leasing demand changes less frequently, remaining stable over longer periods. This results in multi-level system demand variations, combining short-term fluctuations from frequency regulation with longer-term stability from user leasing requirements.

Figure 6. Frequency regulation commands, user leasing demand, and the total demand for case 4.

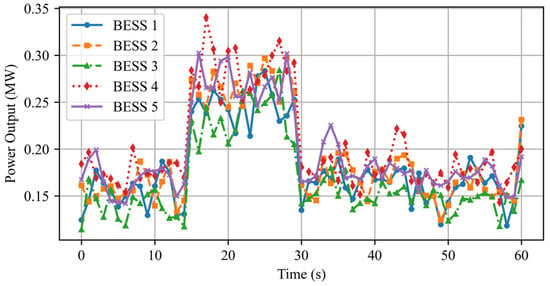

Figure 7 illustrates the power scheduling trajectory of the proposed distributed online optimization algorithm. Under algorithm guidance, storage units dynamically coordinate power output to adapt to demand fluctuations. When frequency regulation commands fluctuate, storage units adjust the power output accordingly. Similarly, during sudden shifts in user leasing demand, the system rapidly reallocates power to maintain supply–demand balance. Notably, during demand surges, power adjustments remain well balanced, demonstrating that the algorithm ensures both rapid response and controlled adjustments, preventing overreactions that could compromise system stability.

Figure 7. Power scheduling response trajectory of the distributed energy storage system.

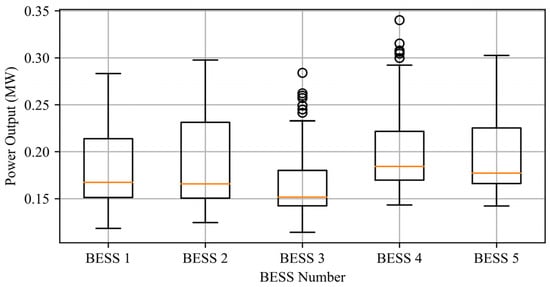

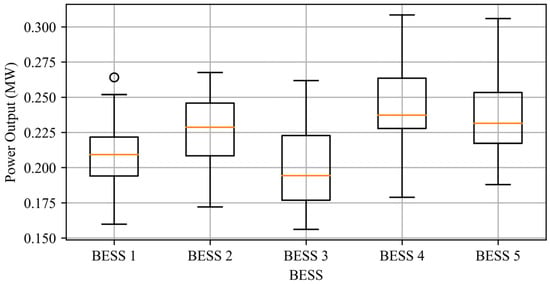

In distributed optimization, maintaining a balanced power allocation is as crucial as flexibility. Overloading a few storage units can shorten their lifespan, increase charge–discharge cycles, and accelerate battery degradation. Figure 8 presents the distribution of power scheduling among storage units using a box plot. The results show a uniform power distribution, with no single unit consistently handling excessive load. The box lengths (interquartile ranges) indicate that power fluctuations remain within a controlled range, ensuring system-wide balance. The outliers suggest that while some units experience short-term high loads, these occurrences are sporadic and not persistent. Additionally, the median power outputs of the storage units are closely aligned, demonstrating that the proposed optimization algorithm effectively maintains load balance and prevents premature aging due to prolonged high loads.

Compared to traditional methods, which may overburden specific storage units, the proposed consensus-based optimization enables dynamic load-sharing across all units. During high-demand fluctuations, storage units coordinate power adjustments locally, avoiding the excessive charging or discharging of individual units. This balanced scheduling not only reduces equipment wear but also enhances system stability under sudden load variations, ensuring long-term efficient operation.

Figure 8. A dispersion analysis of power scheduling in distributed energy storage units.
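The quantities read from such a box plot, the median and the interquartile range of each unit's power, can be computed as sketched below. The per-unit samples are hypothetical and the helper name `dispersion` is illustrative; quartiles follow the default method of Python's `statistics.quantiles`, which may differ slightly from the convention of a plotting library.

```python
import statistics

def dispersion(samples):
    """Median and interquartile range of one unit's power samples."""
    q1, q2, q3 = statistics.quantiles(samples, n=4)
    return {"median": q2, "iqr": q3 - q1}

# Hypothetical power samples (arbitrary units) for three storage units.
unit_power = {
    "BESS 1": [0.8, 1.0, 1.1, 1.2, 1.3, 1.5, 2.4],
    "BESS 2": [0.7, 0.9, 1.0, 1.2, 1.4, 1.5, 1.6],
    "BESS 3": [0.9, 1.0, 1.1, 1.1, 1.3, 1.4, 1.7],
}

stats = {name: dispersion(samples) for name, samples in unit_power.items()}
medians = [s["median"] for s in stats.values()]

for name, s in stats.items():
    print(f"{name}: median={s['median']:.2f}, IQR={s['iqr']:.2f}")
print(f"spread of medians across units: {max(medians) - min(medians):.2f}")
```

Closely aligned medians and similar interquartile ranges across units are what the text interprets as balanced load sharing.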

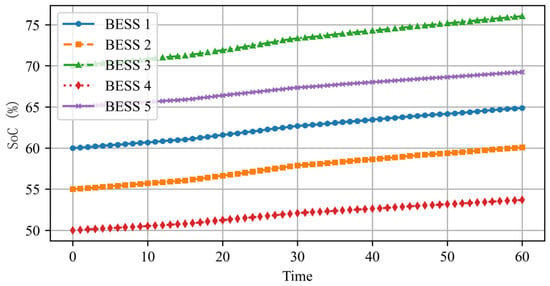

Figure 9 shows the SoC variations during scheduling. The results indicate that SoC levels remain stable and within the 20–80% safety range, avoiding overcharging and deep discharges, which helps preserve battery lifespan. The proposed optimization method effectively regulates charge–discharge behavior while ensuring synchronized SoC trends across storage units through consensus-based coordination, enabling efficient decentralized operation.

Figure 9. State of charge (SoC) variation of the energy storage units for case 4.
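How a 20–80% SoC range constrains the power a unit can deliver is sketched below. This is a simplified model under stated assumptions: SoC changes linearly with energy, conversion losses are ignored, discharge power is positive, and the capacity, time step, and request values are hypothetical. It is not the constraint handling of the proposed algorithm.

```python
SOC_MIN, SOC_MAX = 0.20, 0.80  # safety range quoted in the text

def feasible_power(soc, requested_kw, capacity_kwh, dt_h):
    """Limit requested power (discharge > 0) so the next SoC stays in range."""
    max_discharge = (soc - SOC_MIN) * capacity_kwh / dt_h
    max_charge = (SOC_MAX - soc) * capacity_kwh / dt_h
    return max(-max_charge, min(requested_kw, max_discharge))

def next_soc(soc, power_kw, capacity_kwh, dt_h):
    """Lossless SoC update: discharging lowers SoC, charging raises it."""
    return soc - power_kw * dt_h / capacity_kwh

soc, capacity_kwh, dt_h = 0.5, 100.0, 0.25
requests_kw = [80.0] * 10 + [-120.0] * 10  # hypothetical discharge, then charge

trajectory = [soc]
for requested in requests_kw:
    power = feasible_power(soc, requested, capacity_kwh, dt_h)
    soc = next_soc(soc, power, capacity_kwh, dt_h)
    trajectory.append(soc)

print(f"min SoC={min(trajectory):.2f}, max SoC={max(trajectory):.2f}")
```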

5.6. Adaptability and Scalability Analysis Under Storage Unit Disconnection and Reconnection

In DSES operation, storage units may disconnect or rejoin due to maintenance, faults, or system expansion, requiring optimization algorithms to adapt dynamically for stable power scheduling. The proposed distributed online convex optimization algorithm, incorporating consensus mechanisms and adaptive event-triggering, is tested for scalability in this scenario.

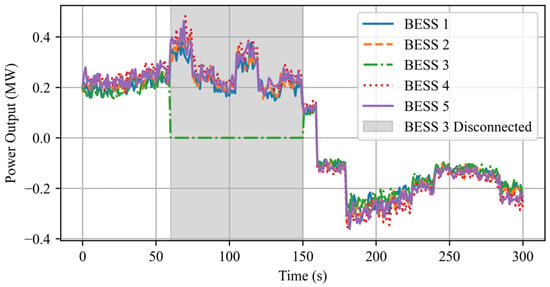

A five-unit DSES is simulated, where units operate independently under decentralized optimization, adjusting power output based on demand. From t = 60 to t = 150, storage unit 3 disconnects, and the remaining units compensate for the supply gap. At t = 151, unit 3 rejoins and resumes normal operation. Figure 10 presents the scenario data.

Figure 10. Frequency regulation commands, user leasing demand, and the total demand for case 5.
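The disconnection scenario can be mimicked with a minimal sketch. The outage window for unit 3 (t = 60 to t = 150) follows the text; the equal-share allocation is an assumption that stands in for the consensus result when all units are identical, and the demand value and function names are hypothetical.

```python
def active_units(t, n_units=5, offline_unit=3, start=60, end=150):
    """Units available at time t; unit 3 is offline for start <= t <= end."""
    return [
        u for u in range(1, n_units + 1)
        if not (u == offline_unit and start <= t <= end)
    ]

def allocate(total_demand, units):
    """Assumed equal sharing of the total demand among the available units."""
    share = total_demand / len(units)
    return {u: share for u in units}

total_demand = 100.0  # hypothetical constant demand
for t in (59, 60, 150, 151):
    allocation = allocate(total_demand, active_units(t))
    print(f"t={t}: units={sorted(allocation)}, share={allocation[1]:.1f}")
```

With five units each carries 20.0; while unit 3 is offline the remaining four carry 25.0 each, and the shares return to 20.0 once it rejoins.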

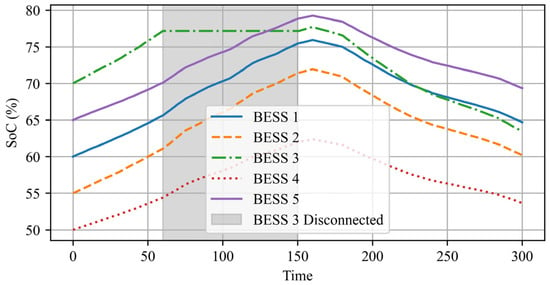

Figure 11 illustrates the power scheduling trajectory under storage unit disconnection and reconnection. When storage unit 3 disconnects, the system quickly adjusts, with other units increasing their output to compensate. Power trajectories remain stable, ensuring a balanced load distribution. Upon reconnection, unit 3 gradually restores output, and the system returns to pre-disconnection levels smoothly. These results confirm that the algorithm enables a rapid adaptation to unit outages and a stable reintegration, preventing scheduling instability. Figure 12 illustrates the SoC variations.

Figure 11. Power scheduling response trajectory.

Figure 12. State of charge (SoC) variation of the energy storage units for case 5.

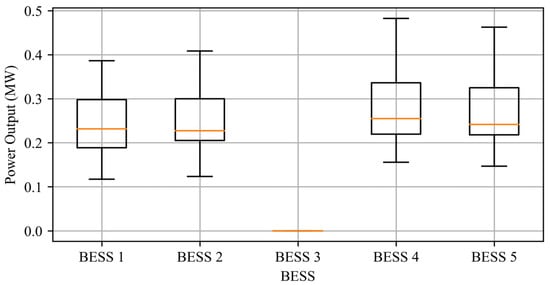

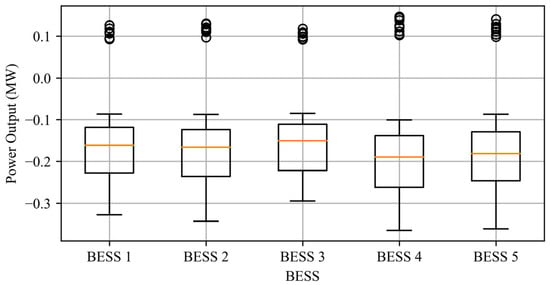

Figures 13–15 illustrate the power output distribution of the storage units before the disconnection of unit 3, during the disconnection, and after its reconnection, using box plots to show power dispersion. Before disconnection, all storage units operate normally with balanced power scheduling, as shown by similar medians and compact interquartile ranges. During disconnection, unit 3's output drops to zero and the other units compensate for the load, leading to higher medians and wider interquartile ranges while load balance is maintained. After reconnection, unit 3 gradually resumes output, the standard deviation decreases, and the system quickly stabilizes, ensuring an equitable power distribution.

Figure 13. A power dispersion analysis of storage units before the disconnection of storage unit 3.

Figure 14. A power dispersion analysis of storage units during the disconnection of storage unit 3.

Figure 15. A power dispersion analysis of storage units after the reconnection of storage unit 3.

6. Conclusions

This paper presents a consensus-driven distributed online convex optimization strategy with an adaptive event-triggered mechanism to enhance the scheduling efficiency and scalability of DSES systems. The proposed approach leverages a consensus algorithm to achieve a decentralized and autonomous scheduling of storage units while maintaining global optimization consistency. By incorporating the Lagrangian relaxation method, the strategy effectively handles global power balance constraints, reducing the reliance on a central controller and enhancing system robustness and scalability.

To further improve communication efficiency, an adaptive event-triggered mechanism is developed to dynamically adjust the communication frequency based on system state variations. Instead of using a fixed triggering threshold, the mechanism employs a self-updating threshold that evolves in response to power changes. Communication is activated only when the state deviation exceeds this dynamically adjusted threshold, thereby reducing redundant transmissions while ensuring convergence stability. This approach minimizes communication overhead and enhances the system’s coordination capability under varying operating conditions.

Simulation experiments conducted on the IEEE 14-bus system validate the effectiveness of the proposed strategy. The results demonstrate that communication overhead is reduced by 33–60%, while convergence speed improves by over 40% compared to conventional methods. Moreover, the strategy exhibits strong adaptability in handling dynamic conditions, such as the integration and disconnection of storage units, ensuring stable and efficient scheduling in practical applications.

The proposed optimization framework not only achieves a balance between communication efficiency and optimization accuracy but also enhances the overall scalability of DSES systems. By reducing the reliance on frequent global information exchange, the method ensures a low consensus error while significantly lowering communication costs, making it particularly suitable for large-scale energy storage networks.

To improve the real-world applicability of the proposed method, future research will focus on several key directions. Firstly, it is important to develop delay-tolerant and noise-resilient control strategies that enhance robustness under practical communication constraints such as latency, data loss, or asynchronous updates. Secondly, the algorithm can be extended to support multi-time-scale coordination, enabling the integration of real-time control with the day-ahead and hour-ahead planning layers commonly used in energy management systems. Thirdly, adaptive parameter tuning mechanisms based on reinforcement learning or real-time performance feedback can be incorporated to improve algorithm adaptability across different system conditions. Finally, hardware-in-the-loop (HIL) simulations and field experiments will be essential to evaluate the deployment feasibility and performance of the proposed strategy in actual shared energy storage systems. These enhancements will collectively strengthen the practicality, robustness, and scalability of the framework, paving the way for real-world implementation.

Author Contributions

Conceptualization, Y.Z.; methodology, Y.Z.; software, Y.Z.; validation, Y.Z. and X.F.; formal analysis, Y.Z.; investigation, Y.Z.; resources, Y.Z.; writing, Y.Z. and X.F.; project administration, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CSES | Centralized Shared Energy Storage |
| DSES | Distributed Shared Energy Storage |
| SoC | State of Charge |
| BESS | Battery Energy Storage System |

References

- Liu, H.; Zhou, S.; Gu, W.; Zhuang, W.; Gao, M.; Chan, C.C.; Zhang, X. Coordinated planning model for multi-regional ammonia industries leveraging hydrogen supply chain and power grid integration: A case study of Shandong. Appl. Energy 2025, 377, 124456.

- Dai, R.-C.; Esmaeilbeigi, R.; Charkhgard, H. The Utilization of Shared Energy Storage in Energy Systems: A Comprehensive Review. IEEE Trans. Smart Grid 2021, 12, 3163–3174.

- Mao, Y.; Cai, Z.; Jiao, X.; Long, D. Multi-timescale optimization scheduling of integrated energy systems oriented towards generalized energy storage services. Sci. Rep. 2025, 15, 8549.

- Mao, Y.; Wan, K.; Xu, D.; Long, D. Collaborative operation strategy of multiple microenergy grids considering demand-side energy-sharing behavior. Energy Sci. Eng. 2024, 12, 3663–3680.

- Mao, Y.; Cai, Z.; Wan, K.; Long, D. Bargaining-based energy sharing framework for multiple CCHP systems with a shared energy storage provider. Energy Sci. Eng. 2024, 12, 1369–1388.

- Gao, X.; Xu, Y.-Q.; Liu, Y.; Li, H.; Wang, X.; Wang, D.; Shi, Y. Differentiated Configuration Options for Centralized and Distributed Energy Storage. J. Phys. Conf. Ser. 2023, 2427, 012042.

- Xu, C.; Wu, X.; Shan, Z.; Zhang, Q.; Dang, B.; Wang, Y.; Shi, C. Bi-level configuration and operation collaborative optimization of shared hydrogen energy storage system for a wind farm cluster. J. Energy Storage 2024, 86, 111107.

- Huang, P.; Sun, Y.; Lovati, M.; Zhang, X. Solar-photovoltaic-power-sharing-based design optimization of distributed energy storage systems for performance improvements. Energy 2021, 222, 119931.

- Babacan, O.; Ratnam, E.L.; Disfani, V.R.; Kleissl, J. Distributed energy storage system scheduling considering tariff structure, energy arbitrage and solar PV penetration. Appl. Energy 2017, 205, 1384–1393.

- Zhang, S.; Li, Y.; Du, E.; Fan, C.; Wu, Z.; Yao, Y.; Zhang, N. A review and outlook on cloud energy storage: An aggregated and shared utilizing method of energy storage system. Renew. Sustain. Energy Rev. 2023, 185, 113606.

- Chang, L.; Zhang, W.; Xu, S.; Spence, K. Review on distributed energy storage systems for utility applications. CPSS Trans. Power Electron. Appl. 2017, 2, 267–276.

- Pan, Y.; Sangwongwanich, A.; Yang, Y.; Blaabjerg, F. Distributed control of islanded series PV-battery-hybrid systems with low communication burden. IEEE Trans. Power Electron. 2021, 36, 10199–10213.

- Zhao, D.; Wang, H.; Huang, J.; Lin, X. Virtual energy storage sharing and capacity allocation. IEEE Trans. Smart Grid 2019, 11, 1112–1123.

- Chen, T.; Ling, Q.; Giannakis, G.B. An online convex optimization approach to proactive network resource allocation. IEEE Trans. Signal Process. 2017, 65, 6350–6364.

- Du, M.; Ma, X.; Zhang, Z.; Wang, X.; Chen, Q. A review on consensus algorithm of blockchain. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; IEEE: Piscataway, NJ, USA; pp. 2567–2572.

- Koppel, A.; Jakubiec, F.Y.; Ribeiro, A. A saddle point algorithm for networked online convex optimization. IEEE Trans. Signal Process. 2015, 63, 5149–5164.

- Yu, Z.; Qiao, Y.; Xu, W.; Ma, R.; Wang, S.; Lu, X. Research on Autonomous Optimization Strategy of Distributed Energy Storage Based on Consensus Algorithm. In Proceedings of the Panda Forum on Power and Energy (PandaFPE), Chengdu, China, 27–30 April 2023; IEEE: Piscataway, NJ, USA; pp. 2288–2295.

- Zhang, X.; Wang, X.; Liao, C.; Zhu, C.; Han, L.; Ying, L.; Tian, T. Distributed optimal scheduling strategy for energy storage units based on consensus algorithm. Power Demand Side Manag. 2021, 23, 41–46.

- Zhu, K.; Chowdhury, S.; Sun, M.; Zhang, J. Grid optimization of shared energy storage among wind farms based on wind forecasting. In Proceedings of the IEEE/PES Transmission and Distribution Conference and Exposition (T&D), Denver, CO, USA, 16–19 April 2018; IEEE: Piscataway, NJ, USA; pp. 1–5.

- Bian, Y.; Lu, P.; Xie, L.; Ye, J.; Ma, L.; Yang, J. Bi-level optimal configuration of hybrid shared energy storage capacity in wind farms considering prediction error. CSEE J. Power Energy Syst. 2024, 1–10.

- Yao, L.; Wang, W.; Liu, J.; Wang, Y.; Chen, Z. Economic optimal control of source-storage collaboration based on wind power forecasting for transient frequency regulation. J. Energy Storage 2024, 84, 111002.

- Qiu, Y.; Lin, J.; Liu, F.; Dai, N.; Song, Y. Continuous random process modeling of AGC signals based on stochastic differential equations. IEEE Trans. Power Syst. 2021, 36, 4575–4587.

- Zhao, T.; Parisio, A.; Milanović, J.V. Distributed control of battery energy storage systems for improved frequency regulation. IEEE Trans. Power Syst. 2020, 35, 3729–3738.

- Li, X.; Yi, X.; Xie, L. Distributed online convex optimization with an aggregative variable. IEEE Trans. Control Netw. Syst. 2021, 9, 438–449.

- Zhang, Y.; Ravier, R.J.; Zavlanos, M.M.; Tarokh, V. A distributed online convex optimization algorithm with improved dynamic regret. In Proceedings of the IEEE 58th Conference on Decision and Control (CDC), Nice, France, 11–13 December 2019; IEEE: Piscataway, NJ, USA; pp. 2449–2454.

- Tu, Z.; Wang, X.; Hong, Y.; Wang, L.; Yuan, D.; Shi, G. Distributed online convex optimization with compressed communication. Adv. Neural Inf. Process. Syst. 2022, 35, 34492–34504.

- Gao, L.; Deng, S.; Li, H.; Li, C. An event-triggered approach for gradient tracking in consensus-based distributed optimization. IEEE Trans. Netw. Sci. Eng. 2021, 9, 510–523.

- Li, H.; Liu, S.; Soh, Y.C.; Xie, L. Event-triggered communication and data rate constraint for distributed optimization of multiagent systems. IEEE Trans. Syst. Man Cybern. Syst. 2017, 48, 1908–1919.

- Li, S.; Nian, X.; Deng, Z. Distributed optimization of second-order nonlinear multiagent systems with event-triggered communication. IEEE Trans. Control Netw. Syst. 2021, 8, 1954–1963.

- Su, X.; Liu, X.; Song, Y.D. Event-triggered sliding-mode control for multi-area power systems. IEEE Trans. Ind. Electron. 2017, 64, 6732–6741.

- Zhang, N.; Sun, Q.; Yang, L.; Li, Y. Event-triggered distributed hybrid control scheme for the integrated energy system. IEEE Trans. Ind. Inform. 2021, 18, 835–846.

- Zhang, H.; Liu, J.; Xu, S. H-infinity load frequency control of networked power systems via an event-triggered scheme. IEEE Trans. Ind. Electron. 2019, 67, 7104–7113.

- Zhang, X.M.; Han, Q.L.; Zhang, B.L. An overview and deep investigation on sampled-data-based event-triggered control and filtering for networked systems. IEEE Trans. Ind. Inform. 2016, 13, 4–16.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).