Ethical Use of Generative Artificial Intelligence Among Ecuadorian University Students

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Inclusion Criteria

2.3. Participants

2.4. Data Collection Instrument

- Affective learning (19 items): measures students' emotional attitudes toward AI and their perceptions of how AI relates to ethics in learning.

- Behavioral learning (11 items): assesses the frequency and manner in which students use AI tools in their academic environment.

- Cognitive learning (9 items): assesses students' knowledge and understanding of AI and its ethical implications.

- Ethical learning (16 items): measures the extent to which students have internalized ethical principles for using AI in an educational context.
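As a sketch of how the four subscales might be scored, the snippet below averages one respondent's numeric answers within each subscale and checks the item counts listed above (19, 11, 9 and 16, for 55 items in total). It assumes, without confirmation from the text, that item codes follow the AA, AC, ACO and AE prefixes used in the factor-loading table and that a subscale score is the unweighted item mean; the names `subscale_scores`, `SUBSCALES` and `EXPECTED_ITEMS` are hypothetical.

```python
import re
from statistics import mean

# ASSUMPTION: item codes follow the prefixes used in the factor-loading table.
SUBSCALES = {
    "AA": "Affective learning",
    "AC": "Behavioral learning",
    "ACO": "Cognitive learning",
    "AE": "Ethical learning",
}

# Item counts per subscale as listed in Section 2.4 (55 items in total).
EXPECTED_ITEMS = {
    "Affective learning": 19,
    "Behavioral learning": 11,
    "Cognitive learning": 9,
    "Ethical learning": 16,
}

# Anchored at both ends, so "ACO3" can only parse as ACO + 3, never AC + "O3".
ITEM_CODE = re.compile(r"^(AA|ACO|AC|AE)(\d+)$")


def subscale_scores(responses: dict[str, float]) -> dict[str, float]:
    """Unweighted mean of one respondent's items per subscale (hypothetical scoring rule)."""
    grouped: dict[str, list[float]] = {name: [] for name in EXPECTED_ITEMS}
    for code, value in responses.items():
        match = ITEM_CODE.match(code)
        if match is None:
            raise ValueError(f"unrecognized item code: {code!r}")
        grouped[SUBSCALES[match.group(1)]].append(value)
    # Refuse to score a respondent whose item set is incomplete.
    for name, values in grouped.items():
        if len(values) != EXPECTED_ITEMS[name]:
            raise ValueError(f"{name}: expected {EXPECTED_ITEMS[name]} items, got {len(values)}")
    return {name: mean(values) for name, values in grouped.items()}


if __name__ == "__main__":
    # Invented responses for a single respondent, used only to exercise the function.
    demo = {f"AA{i}": 4 for i in range(1, 20)}
    demo.update({f"AC{i}": 3 for i in range(1, 12)})
    demo.update({f"ACO{i}": 5 for i in range(1, 10)})
    demo.update({f"AE{i}": 2 for i in range(1, 17)})
    print(subscale_scores(demo))
```

Because "AC" is a prefix of "ACO", grouping items with a plain `startswith("AC")` test would misfile cognitive items as behavioral; the fully anchored pattern avoids that.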

2.5. Procedure

2.6. Ethical Considerations

2.7. Data Analysis

3. Results

3.1. Preferences in the Use of Artificial Intelligence Applications

3.2. KMO Sampling Adequacy Test and Bartlett’s Test of Sphericity

3.3. Instrument Reliability Analysis

3.4. Convergent Validity of the Instrument

3.5. Discriminant Validity Between Constructs

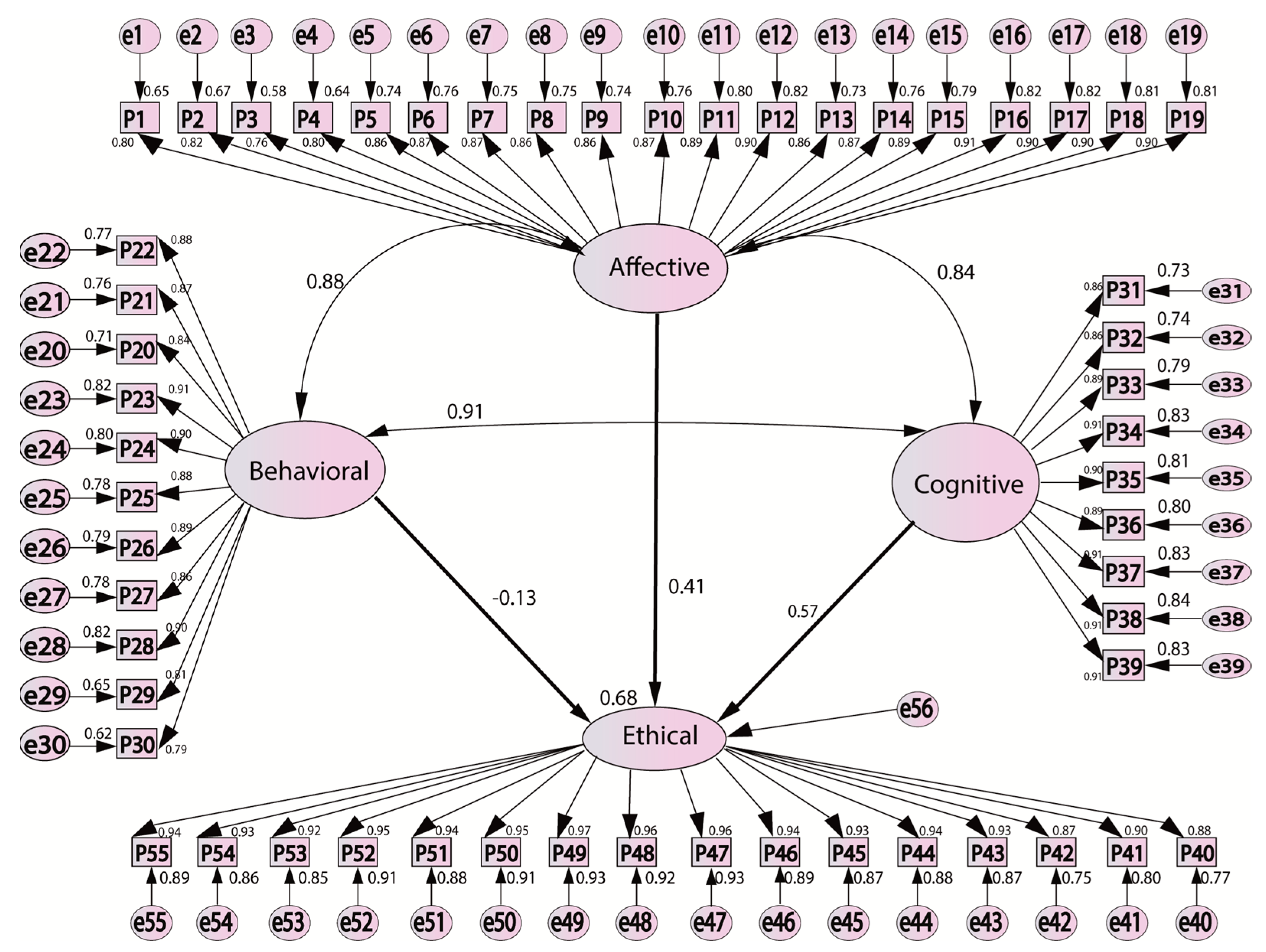

4. Structural Model Analysis

4.1. Model Fit

- CMIN/DF = 4.294 (acceptable if < 5)

- NFI = 0.927

- RFI = 0.925

- IFI = 0.944

- TLI = 0.939

- CFI = 0.944

- RMSEA = 0.062

- AIC = 6506.289
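The RMSEA can be cross-checked against CMIN/DF with the standard identity RMSEA = sqrt(max(χ²/df - 1, 0) / (N - 1)). The sketch below assumes N = 833, the total number of responses in the AI-application table; the section does not report N, χ² or df separately, so this is an illustrative consistency check rather than a reproduction of the authors' computation, and `rmsea_from_ratio` is a hypothetical helper.

```python
import math


def rmsea_from_ratio(chi2_over_df: float, n: int) -> float:
    """Point estimate of RMSEA from chi2/df and sample size N.

    Uses RMSEA = sqrt(max(chi2/df - 1, 0) / (N - 1)); the max() clamps models
    whose chi-square is below their degrees of freedom to an RMSEA of zero.
    """
    if n < 2:
        raise ValueError("sample size must be at least 2")
    return math.sqrt(max(chi2_over_df - 1.0, 0.0) / (n - 1))


if __name__ == "__main__":
    # ASSUMPTION: N = 833, the total of the AI-application frequency table.
    print(f"RMSEA ~ {rmsea_from_ratio(4.294, 833):.4f}")
```

With these inputs the function returns roughly 0.063, close to the reported 0.062; the section does not give enough detail (exact N, χ² and df) to pin down the remaining difference.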

4.2. Structural Hypothesis Validation

5. Discussion

5.1. AI Tools

5.2. Impact of AI Learning on Academic Ethics

5.3. Sustainability Risks and Challenges

5.4. Regulations and Implementation Strategies

5.5. Limitations and Future Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| AI Application | Frequency | Percentage (%) |
|---|---|---|
| ChatGPT | 518 | 62.2 |
| Gemini | 131 | 15.7 |
| Siri | 70 | 8.4 |
| Google Bard | 41 | 4.9 |
| Copilot | 10 | 1.2 |
| Midjourney | 8 | 1.0 |
| DALL-E | 6 | 0.7 |
| Perplexity | 6 | 0.7 |
| Fireflies | 5 | 0.6 |
| Claude | 4 | 0.5 |
| Deepseek | 4 | 0.5 |
| Monica | 3 | 0.4 |
| Others (<0.1%) | 7 | 0.8 |
| None | 20 | 2.4 |
| Total | 833 | 100 |
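Each percentage in the table is the application's frequency divided by the 833 total responses, rounded to one decimal place. The short check below recomputes the column from the frequencies; the dictionary simply transcribes the table, and `percentage_table` is a hypothetical helper name.

```python
# Frequencies transcribed from the table; "Others" and "None" are kept as rows.
FREQUENCIES = {
    "ChatGPT": 518, "Gemini": 131, "Siri": 70, "Google Bard": 41,
    "Copilot": 10, "Midjourney": 8, "DALL-E": 6, "Perplexity": 6,
    "Fireflies": 5, "Claude": 4, "Deepseek": 4, "Monica": 3,
    "Others": 7, "None": 20,
}


def percentage_table(freqs: dict[str, int], decimals: int = 1) -> dict[str, float]:
    """Share of each row in the grand total, as a rounded percentage."""
    total = sum(freqs.values())
    return {name: round(100 * count / total, decimals) for name, count in freqs.items()}


if __name__ == "__main__":
    for name, value in percentage_table(FREQUENCIES).items():
        print(f"{name:<12} {FREQUENCIES[name]:>4} {value:>5.1f}")
```

Running it reproduces every reported percentage, and the rounded values add up to 100, matching the Total row.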

| Factors | Cronbach’s α | McDonald’s Ω | Number of Items |
|---|---|---|---|
| Affective learning | 0.982 | 0.982 | 19 |
| Behavioral learning | 0.970 | 0.970 | 11 |
| Cognitive learning | 0.973 | 0.972 | 9 |
| Ethical learning | 0.991 | 0.991 | 16 |
| Total | 0.992 | 0.992 | 55 |
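For reference, Cronbach's α for a k-item scale is α = k/(k - 1) · (1 - Σσ²ᵢ / σ²ₜ), where σ²ᵢ are the item variances and σ²ₜ is the variance of the summed score. The sketch below implements that formula on a small item-by-respondent matrix; it is not the authors' software, the function name `cronbach_alpha` is hypothetical, and the toy data are invented purely to show the behavior at the two extremes.

```python
from statistics import pvariance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[j][i] is respondent i's score on item j."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item must have the same number of respondents")
    # Sum of the individual item variances.
    item_variance = sum(pvariance(item) for item in items)
    # Variance of each respondent's total score across all k items.
    totals = [sum(item[i] for item in items) for i in range(n)]
    return k / (k - 1) * (1 - item_variance / pvariance(totals))


if __name__ == "__main__":
    # Toy data (invented): three identical items give alpha = 1,
    # two uncorrelated items give alpha = 0.
    print(round(cronbach_alpha([[1, 2, 3, 4, 5]] * 3), 3))
    print(round(cronbach_alpha([[1, 2, 1, 2], [1, 1, 2, 2]]), 3))
```

Population variances are used in both numerator and denominator; the n/(n - 1) sample correction would cancel in the ratio, so the choice does not change α.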

| Factors | Item | Factor Loading (λ) | Cronbach’s Alpha | Composite Reliability (CR) | Average Variance Extracted (AVE) |
|---|---|---|---|---|---|
| Affective learning | AA1 | 0.804 | 0.982 | 0.982 | 0.747 |
| | AA2 | 0.817 | 0.982 | | |
| | AA3 | 0.759 | 0.982 | | |
| | AA4 | 0.797 | 0.982 | | |
| | AA5 | 0.863 | 0.981 | | |
| | AA6 | 0.874 | 0.981 | | |
| | AA7 | 0.865 | 0.981 | | |
| | AA8 | 0.865 | 0.981 | | |
| | AA9 | 0.862 | 0.981 | | |
| | AA10 | 0.873 | 0.981 | | |
| | AA11 | 0.892 | 0.981 | | |
| | AA12 | 0.903 | 0.981 | | |
| | AA13 | 0.857 | 0.982 | | |
| | AA14 | 0.874 | 0.981 | | |
| | AA15 | 0.889 | 0.981 | | |
| | AA16 | 0.906 | 0.981 | | |
| | AA17 | 0.904 | 0.981 | | |
| | AA18 | 0.899 | 0.981 | | |
| | AA19 | 0.902 | 0.981 | | |
| Behavioral learning | AC1 | 0.842 | 0.969 | 0.971 | 0.754 |
| | AC2 | 0.869 | 0.968 | | |
| | AC3 | 0.875 | 0.968 | | |
| | AC4 | 0.905 | 0.967 | | |
| | AC5 | 0.897 | 0.967 | | |
| | AC6 | 0.883 | 0.968 | | |
| | AC7 | 0.887 | 0.967 | | |
| | AC8 | 0.885 | 0.967 | | |
| | AC9 | 0.905 | 0.967 | | |
| | AC10 | 0.807 | 0.969 | | |
| | AC11 | 0.790 | 0.970 | | |
| Cognitive learning | ACO1 | 0.856 | 0.971 | 0.973 | 0.801 |
| | ACO2 | 0.861 | 0.971 | | |
| | ACO3 | 0.889 | 0.970 | | |
| | ACO4 | 0.909 | 0.969 | | |
| | ACO5 | 0.902 | 0.969 | | |
| | ACO6 | 0.892 | 0.970 | | |
| | ACO7 | 0.913 | 0.969 | | |
| | ACO8 | 0.915 | 0.969 | | |
| | ACO9 | 0.913 | 0.969 | | |
| Ethical learning | AE1 | 0.875 | 0.991 | 0.991 | 0.869 |
| | AE2 | 0.897 | 0.990 | | |
| | AE3 | 0.868 | 0.991 | | |
| | AE4 | 0.932 | 0.990 | | |
| | AE5 | 0.936 | 0.990 | | |
| | AE6 | 0.934 | 0.990 | | |
| | AE7 | 0.944 | 0.990 | | |
| | AE8 | 0.963 | 0.990 | | |
| | AE9 | 0.959 | 0.990 | | |
| | AE10 | 0.967 | 0.990 | | |
| | AE11 | 0.952 | 0.990 | | |
| | AE12 | 0.939 | 0.990 | | |
| | AE13 | 0.952 | 0.990 | | |
| | AE14 | 0.922 | 0.990 | | |
| | AE15 | 0.926 | 0.990 | | |
| | AE16 | 0.945 | 0.990 | | |
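Composite reliability and AVE can be recomputed from the standardized loadings in the table with the usual formulas, CR = (Σλ)² / ((Σλ)² + Σ(1 - λ²)) and AVE = Σλ² / k, which assume standardized loadings and uncorrelated measurement errors. This is a minimal check under those assumptions, not a claim about how the authors' software computed the values; the function names are hypothetical and the loading lists are transcribed from the table.

```python
# Standardized loadings transcribed from the table (three decimals as published).
AFFECTIVE = [0.804, 0.817, 0.759, 0.797, 0.863, 0.874, 0.865, 0.865, 0.862, 0.873,
             0.892, 0.903, 0.857, 0.874, 0.889, 0.906, 0.904, 0.899, 0.902]
BEHAVIORAL = [0.842, 0.869, 0.875, 0.905, 0.897, 0.883, 0.887, 0.885, 0.905, 0.807, 0.790]
COGNITIVE = [0.856, 0.861, 0.889, 0.909, 0.902, 0.892, 0.913, 0.915, 0.913]
ETHICAL = [0.875, 0.897, 0.868, 0.932, 0.936, 0.934, 0.944, 0.963, 0.959, 0.967,
           0.952, 0.939, 0.952, 0.922, 0.926, 0.945]


def composite_reliability(loadings: list[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    # With standardized loadings, each item's error variance is 1 - lambda^2.
    error = sum(1 - lam * lam for lam in loadings)
    return total * total / (total * total + error)


def average_variance_extracted(loadings: list[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


if __name__ == "__main__":
    for name, lams in [("Affective", AFFECTIVE), ("Behavioral", BEHAVIORAL),
                       ("Cognitive", COGNITIVE), ("Ethical", ETHICAL)]:
        print(f"{name:<11} CR={composite_reliability(lams):.3f} "
              f"AVE={average_variance_extracted(lams):.3f}")
```

With the published three-decimal loadings, this reproduces the reported CR for all four factors and the reported AVE for affective (0.747), behavioral (0.754) and ethical (0.869) learning; for cognitive learning it gives about 0.800 against the reported 0.801, a gap consistent with the loadings having been rounded before publication.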

| Factors | A-Affective | A-Behavioral | A-Cognitive | A-Ethical |
|---|---|---|---|---|
| A-Affective | - | - | - | - |
| A-Behavioral | 0.884 | - | - | - |
| A-Cognitive | 0.847 | 0.917 | - | - |
| A-Ethical | 0.783 | 0.767 | 0.811 | - |
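The text that would interpret this matrix is missing, so the section does not say whether its entries are inter-construct correlations or heterotrait-monotrait (HTMT) ratios. Assuming they are HTMT ratios in the sense of Henseler, Ringle and Sarstedt (2015), the sketch below shows how one entry is formed from item-level correlations: the mean correlation across the two constructs' items, divided by the geometric mean of the average within-construct correlations. The item-level data are not published here, so the matrix in the example is invented and `htmt` is a hypothetical helper.

```python
from itertools import combinations
from math import sqrt


def _mean(values: list[float]) -> float:
    return sum(values) / len(values)


def htmt(corr: list[list[float]], block_a: list[int], block_b: list[int]) -> float:
    """Heterotrait-monotrait ratio of two constructs.

    corr is a symmetric item-by-item correlation matrix; block_a and block_b
    list the row indices of each construct's items. Raw (signed) correlations
    are used, which presumes all items are coded in the same direction.
    """
    if len(block_a) < 2 or len(block_b) < 2:
        raise ValueError("each construct needs at least two items")
    # Mean correlation between items of different constructs.
    hetero = _mean([corr[i][j] for i in block_a for j in block_b])
    # Mean correlation among items of the same construct (off-diagonal only).
    mono_a = _mean([corr[i][j] for i, j in combinations(block_a, 2)])
    mono_b = _mean([corr[i][j] for i, j in combinations(block_b, 2)])
    return hetero / sqrt(mono_a * mono_b)


if __name__ == "__main__":
    # Invented 4-item matrix: within-construct r = 0.8, cross-construct r = 0.4.
    r = [
        [1.0, 0.8, 0.4, 0.4],
        [0.8, 1.0, 0.4, 0.4],
        [0.4, 0.4, 1.0, 0.8],
        [0.4, 0.4, 0.8, 1.0],
    ]
    print(round(htmt(r, [0, 1], [2, 3]), 3))
```

For the toy matrix the ratio is 0.4 / 0.8 = 0.5. The function returns only the ratio; which cutoff to apply (Henseler and colleagues discuss 0.85 and 0.90) is a separate judgment.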

| Hypothesis | Relationship | β | p-Value | Result |
|---|---|---|---|---|
| H1 | Total → Ethical | 0.675 | *** | Accepted |
| H2 | Behavioral → Ethical | −0.128 | 0.058 | Not accepted |
| H3 | Cognitive → Ethical | 0.567 | *** | Accepted |
| H4 | Affective → Ethical | 0.413 | *** | Accepted |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Buele, J.; Sabando-García, Á.R.; Sabando-García, B.J.; Yánez-Rueda, H. Ethical Use of Generative Artificial Intelligence Among Ecuadorian University Students. Sustainability 2025, 17, 4435. https://doi.org/10.3390/su17104435
