Abstract

Recent advances in generative artificial intelligence (Gen AI) enable financial services firms to enhance operational efficiency and foster innovation through human–AI collaboration, yet they also pose technical and managerial challenges. Drawing on collaboration theory and prior research, this study examines how employee skills, data reliability, trusted systems, and effective management jointly influence innovation capability and managerial performance in Gen AI-supported work environments. Survey data were collected from China’s financial sector and analyzed using multiple regression analyses and fuzzy-set qualitative comparative analysis (fsQCA). The findings show that all four factors exert a positive influence on innovation capability and managerial performance, with innovation capability acting as a partial mediator. Complementarily, fsQCA identifies distinct configurations of these factors that lead to high levels of innovation capability and managerial performance. To fully leverage human–Gen AI collaboration, financial services firms should upskill employees, strengthen data reliability through robust governance, establish trusted AI systems, and effectively integrate Gen AI into workflows through strong managerial oversight. These findings provide actionable insights for talent development, data governance, and workflow optimization, ultimately enhancing firms’ resilience, adaptability, and long-term sustainability in financial services.

1. Introduction

The integration of human expertise with generative artificial intelligence (Gen AI) is reshaping financial services by enabling data-driven insights and collaborative strategies essential for service excellence. Moreover, harnessing AI’s ability to collaborate with human experts is increasingly critical for bolstering long-term resilience and promoting sustainable practices in financial services. For example, Gen AI assists frontline employees by retrieving financial data and conducting market analyses, thereby streamlining operations, enhancing credit evaluations, and enabling customized recommendations [1,2,3]. Existing surveys typically provide descriptive overviews of AI technologies and potential applications [1,2,3,4] or investigate specific industry scenarios [5,6,7,8]. However, empirical evidence clarifying how Gen AI-enabled collaboration impacts firm-level outcomes remains limited, particularly within financial contexts that demand rigorous oversight and continuous innovation. Thus, this study presents an initial investigation into how such collaborative practices enhance innovation capability and managerial performance, offering novel insights for financial services management and underscoring these practices’ relevance to sustainable finance.

Recent studies highlight Gen AI’s broad potential to boost productivity and provide data-driven insights across diverse business functions [5,6,7,8]. However, realizing these benefits depends greatly on human expertise, a factor often overlooked in early explorations of AI use. A related review by Bankins et al. [9] consolidates multiple empirical findings, indicating that individual performance and attitudes toward AI significantly influence collaborative efficiency, reflecting a complex, rather than linear, relationship. Bridging the resulting skill gaps not only fosters effective AI–human synergy but also supports the sustainable development goals (SDGs) [10] that drive inclusive and responsible growth in financial services. This point broadly aligns with multiple SDGs, particularly Goals 4, 8, 9, and 16, underscoring how inclusive skill development, resilient infrastructure, and ethical governance are indispensable for strengthening human–AI collaboration in sustainable financial services.

Despite its considerable advantages, Gen AI also poses technical and firm-specific challenges. For instance, AI models may exhibit “hallucinations” [11] or exacerbate biases stemming from incomplete or biased training data [12]. Inadequate privacy safeguards and ambiguous transparency guidelines can erode employee trust, undermining a firm’s long-term service objectives. A McKinsey report [13] revealed that approximately 44 percent of respondents had experienced at least one adverse AI-related incident in the past year, highlighting the urgent necessity of robust oversight, reliable data and infrastructure, and clear governance practices.

Fostering employee–AI synergy is multifaceted. As firms grapple with escalating operational and regulatory demands, ranging from algorithmic opacity to workforce skill gaps, managers must embrace more effective management strategies to facilitate the adoption of AI. Without vigilant oversight of compliance, process design, and information processing, AI’s transformative potential may remain underrealized [14,15,16], constraining both innovation and overall organizational effectiveness. By contrast, robust managerial frameworks can align AI capabilities with overarching firm objectives, ultimately driving higher levels of innovation and performance.

This study adopts collaboration theory because of its explicit emphasis on the dynamic interplay inherent in human–AI collaboration, which provides insights complementary to existing theoretical perspectives. It focuses on four key factors, namely employee skills, data reliability, trusted systems, and effective management, that influence innovation capability and managerial performance. To examine these relationships, survey data were collected from China’s financial sector and analyzed using regression to assess the effects among key variables. This study also employed fuzzy-set qualitative comparative analysis (fsQCA) to capture the inherent complexity of AI collaboration, thereby revealing multiple configurations that lead to superior outcomes. Consequently, two research questions (RQs) guide this inquiry:

- RQ1: what factors within human–Gen AI collaboration influence innovation capability and managerial performance in financial services?

- RQ2: how do these factors affect innovation capability and managerial performance, and how does innovation capability mediate these effects?

This study offers several novel contributions to research and practice. Theoretically, it integrates collaboration theory with the emerging context of Gen AI-supported teamwork, providing a new lens to examine how human–Gen AI collaboration drives innovation and performance. Additionally, it represents one of the first empirical examinations of Gen AI-enabled collaboration in financial services, offering initial evidence from a critically important yet underexplored context. Methodologically, by combining fsQCA with traditional regression analysis, this study uncovers complex configurations of factors leading to superior innovation and managerial performance outcomes—patterns often obscured by single-method approaches. Practically, the findings offer actionable guidance for managers by highlighting the importance of employee upskilling, data reliability, trustworthy AI systems, and robust managerial oversight to fully capitalize on Gen AI for sustainable innovation and enhanced performance. Finally, this study acknowledges certain limitations and provides directions for future research.

2. Background and Hypotheses

This chapter introduces collaboration theory as the foundation for hypothesis development, emphasizing human–AI collaboration as a dynamic, mutually beneficial, and complementary process in service-oriented contexts. It identifies critical enablers of effective human–AI collaboration, examining their influence on innovation capability and managerial performance. By highlighting human–AI complementarity in fostering responsible innovation and performance, the discussion further illustrates how these synergies advance sustainable financial services.

2.1. Collaboration Theory

Collaboration refers to a process in which participants jointly pursue shared objectives through mutual problem solving and resource exchange, extending beyond traditional teamwork [17,18]. While early formulations of collaboration theory primarily examined human interactions or firm-level dynamics, the advent of AI has shifted focus toward human–AI partnerships. Rather than treating AI as a means of simply automating tasks or substituting labor [16,19,20], this renewed perspective highlights hybrid intelligence [5,21], in which human intuition, creativity, and abstract reasoning converge with AI’s data processing and analytical strengths [22,23,24]. Such synergy can yield outcomes unattainable by humans or AI alone [25]. Drawing on collaboration theory and hybrid intelligence, this study defines human–Gen AI collaboration as a dynamic, mutually beneficial process wherein human employees and AI systems leverage complementary capabilities and exchange information and knowledge, with the ultimate aim of achieving work objectives and enhancing performance.

Despite the promise of hybrid intelligence, existing theoretical frameworks often lack specificity in operationalizing human–Gen AI collaboration within service-oriented contexts. Although socio-technical systems (STS) theory [26,27] and the technology acceptance model (TAM) [28] provide valuable insights into human–technology integration, STS primarily addresses broad systems-level interactions [29], while TAM focuses narrowly on individual perceptions of technological usefulness and ease of use. Consequently, both approaches have limited utility in explicating nuanced collaborative dynamics. In contrast, collaboration theory explicitly targets this theoretical gap by exploring how humans and intelligent machines coordinate actions, communicate, and mutually adapt in pursuit of shared objectives. Despite its relative underutilization, collaboration theory offers a more process-oriented perspective, effectively capturing how complementary human and Gen AI capabilities contribute to superior collective outcomes.

Furthermore, drawing on interdisciplinary insights, this study examines how such collaboration advances firm-level performance. Specifically, AI acceptance and trust, viewed as cornerstones of collaboration [24,30], are critical for ensuring information and system quality, transparency, and broad user adoption, all of which collectively elevate collaborative effectiveness. These considerations align with the information system success model [31,32] and trust-focused research [33,34]. Relatedly, human–Gen AI collaboration fosters the generation, exchange, and utilization of knowledge within the firm [22], reflecting knowledge-based views [35,36] that emphasize how robust knowledge processes spur innovation. Finally, a firm’s AI capability [37], comprising solid technical infrastructure, specialized human capital, and intangible managerial assets, ensures that AI-based initiatives cohere with strategic objectives. Building on these insights, the following sections demonstrate how collaboration theory underpins the conceptual framework and guides hypothesis development in the financial services context.

2.2. Human–Gen AI Collaboration in Financial Services

Unlike traditional AI systems built primarily on rule-based reasoning or predictive algorithms, Gen AI employs advanced deep learning architectures, such as transformer-based language models [38], to autonomously produce context-specific content, including text, visual imagery, videos, and executable code. In financial services, Gen AI automates tasks such as market analysis, investment planning, credit assessment, and report generation [39], delivering insights beyond the structured outputs of traditional AI. Moreover, Gen AI models can continuously improve through reinforcement learning from human feedback [8,40,41,42], aligning their outputs with organizational objectives. ChatABC, an AI assistant developed by the Agricultural Bank of China, exemplifies this human–AI synergy by handling customer inquiries and synthesizing content [43], freeing employees to focus on strategic initiatives. Consequently, Gen AI has become indispensable for tasks such as accurate forecasting and efficient workflow management, especially when integrated with human expertise, offering advantages that traditional AI systems alone cannot provide.

Ensuring high-quality data, especially for sentiment analysis, entity recognition, and real-time reporting, is vital to producing reliable outcomes. When fine-tuned on industry-specific datasets, Gen AI models excel at analysis, prediction, and complex problem solving [20,44]. Domain-specific models such as BloombergGPT [45], trained on finance-focused corpora, together with knowledge graphs [46], further refine contextual understanding. This approach also deepens knowledge practices within the firm, highlighting how human–Gen AI collaboration fosters the creation, exchange, and application of both domain expertise and data-driven insights, which are key drivers of innovation and competitive advantage in sustainable financial services.

In line with service-quality theory, the SERVQUAL model [47] identifies dimensions such as reliability, assurance, responsiveness, and empathy as essential for high-quality service delivery, a notion especially pertinent in finance, where rapid market shifts and complex data analysis demand robust human–AI collaboration. In such contexts, employees must glean broad, accurate insights from real-time data to improve the speed and precision of decision making, an objective that hinges on reliable data and trustworthy AI systems. Meanwhile, well-trained staff provide critical human discernment and an empathetic capacity that machines alone cannot replicate, ensuring that AI-driven insights align with strategic goals and ethical standards. Additionally, Gen AI-enabled agents or chatbots significantly enhance responsiveness by offering real-time support, thus complementing human efforts and enabling more agile, customer-centric service. Hence, these elements (reliable data, trustworthy systems, and proficient staff) coalesce to foster effective human–AI collaboration, ultimately fueling heightened innovation and performance.

Despite these benefits, ethical challenges associated with Gen AI include hallucinations, data biases, and insufficient transparency or privacy safeguards [4,44,48,49]. Such biases can erode trust, while opaque AI processes complicate auditing and regulatory compliance. Addressing these issues requires continuous oversight, rigorous data governance, and comprehensive ethical frameworks, particularly in finance where accuracy and security are paramount [4]. Thus, safeguarding privacy, fairness, and transparency is essential to maintaining data reliability and trusted systems, which underpin effective human–AI collaboration in financial services. Domain specialists remain indispensable for interpreting AI outputs, validating insights, and ensuring regulatory compliance. Hence, effective human–AI collaboration is necessary for experts to monitor, evaluate, and provide feedback on system-generated results while leveraging AI’s computational strengths.

2.3. Innovation Capability

In today’s rapidly evolving financial services landscape, innovation capability is pivotal for delivering personalized offerings, advancing performance, and sustaining competitive advantage. This capability reflects a firm’s aptitude for leveraging technologies, such as AI, to enhance customer experiences and create enduring value. Indeed, AI is widely recognized as a critical enabler of novel solutions in product and service development and of augmented decision making [50], particularly when human–Gen AI collaboration integrates machine intelligence with human judgment [22]. By automating repetitive tasks, Gen AI allows employees to devote more attention to higher-level responsibilities requiring creativity and contextual knowledge, thereby reinforcing a firm’s overall innovativeness.

Knowledge is central to innovation [22,51,52,53]. Gen AI contributes by extracting insights from voluminous datasets and converting complex information into actionable outputs that inform decision making. For example, it can synthesize emerging industry trends, assess consumer sentiment, and uncover latent market opportunities, thereby facilitating knowledge-sharing practices and data-driven strategies. These functions align with the knowledge-based view, wherein knowledge is a principal impetus for innovation. Yet, technology alone is insufficient for success; a supportive firm culture—one that encourages employees to embrace AI tools and adapt to evolving workflows—is crucial [9,54,55,56,57]. By merging technological resources with employee expertise, firms reinforce their innovation capability and secure sustainable growth. Moreover, robust innovation capability sets the stage for broader managerial benefits, establishing a foundation for improved performance and competitive advantage.

2.4. Managerial Performance

Managers in financial firms seek to enhance managerial performance by combining Gen AI with human expertise. This study focuses on indicators such as process efficiency, customer retention, financial outcomes, and risk mitigation to elucidate how managerial strategies can strengthen competitive advantage. Alongside knowledge management and cultural support, innovation capability emerges as a critical driver of managerial performance [50,53,55,58].

Integrating Gen AI into core business processes amplifies efficiency by automating routine tasks and streamlining workflows [7,56,59]. Building on these efficiency gains, firms can refine product portfolios and customize marketing initiatives, thereby improving customer experiences and retention [22]. Simultaneously, data-driven insights inform financial decision making, contributing to enhanced value creation and operational excellence. Gen AI can also reveal hidden patterns and boost fraud detection in financial transactions [43,60,61,62], enabling proactive risk mitigation that preserves both public trust and corporate assets. Additionally, human–Gen AI collaboration fosters creativity and professional development, as employees concentrate on strategic responsibilities once routine tasks are streamlined or automated.

However, particularly within financial services, ethical challenges, such as algorithmic opacity, data complexity, and privacy concerns, necessitate rigorous oversight, comprehensive compliance frameworks, and robust information technology infrastructures. Achieving an appropriate balance between innovation and accountability enables managers to leverage AI’s capabilities effectively while safeguarding stakeholder confidence [3,63,64]. Consequently, emphasizing transparency, fairness, and stringent data governance practices becomes imperative to build trustworthy AI systems and reinforce stakeholder trust in financial firms [4].

2.5. Building Hypotheses

Collaboration theory posits that effective partnerships arise from aligning complementary human and technological resources toward a shared goal. Using this theoretical lens, four key factors emerge as primary enablers of successful human–AI collaboration in the financial sector: employee skills, data reliability, trusted systems, and effective management. Employee skills encompass irreplaceable human qualities, such as creativity, judgment, and domain knowledge, combined with the technical proficiency to work effectively alongside AI. This comprehensive skill set is crucial for complementing AI capabilities. Data reliability ensures that AI systems operate on high-quality, timely information; without reliable data, even the most advanced models can produce flawed outputs. Trusted systems, characterized by transparency and explainability, foster user confidence and thus encourage the adoption of Gen AI-driven recommendations. Effective management orchestrates these elements by allocating resources and leveraging Gen AI-driven insights to enhance organizational adaptability. In practice, this requires structured oversight, clear communication, and active knowledge sharing.

However, despite their prominence in the existing literature, the effects of these specific factors on innovation capability and managerial performance in human–AI collaboration within financial services remain underexplored. Broader organizational conditions, such as technological readiness, leadership style, and organizational culture, are undoubtedly significant determinants of collaborative effectiveness; nonetheless, this study prioritizes employee skills, data reliability, trusted systems, and effective management due to their critical alignment with the financial sector’s stringent requirements for regulatory compliance, data integrity, systemic transparency and security, and managerial accountability. Moreover, concentrating on these elements directly corresponds with collaboration theory’s emphasis on the immediate human–AI synergy that drives optimal performance outcomes. These considerations inform the hypothesis development detailed in the subsequent section.

2.5.1. Employee Skills

Employee skills encompass both business and technical skills [37]. Although machines excel at computation, humans uniquely contribute intuition, insight, abstract reasoning, and creative decision making [23]. Prior studies underscore individual expertise as pivotal for cultivating trust and collaboration between humans and AI [65]. Employees thus require specialized knowledge of markets, products, services, and firm processes [66] to make informed decisions and address complex challenges.

AI literacy is vital for effective communication and collaboration [67]. To optimize interactions with AI, employees must continually refine their technical proficiency [48,55,67,68], enabling them to adapt swiftly to new information and enhance efficiency, quality, and innovation [69]. The capacity to interpret AI-driven decisions is equally important, ensuring human discernment in collaborative efforts. From a resource-based perspective, organizations that cultivate employees with business and technical skills demonstrate enhanced organizational creativity [37]. Although prior research indicates a complex, rather than strictly linear, relationship between individual performance and human–AI collaboration outcomes [9], these firm-level competencies are critically associated with both innovation and performance. Based on these considerations, the following hypotheses are proposed:

H1a:

employee skills in human–Gen AI collaboration positively influence innovation capability.

H1b:

employee skills in human–Gen AI collaboration positively influence managerial performance.

2.5.2. Data Reliability

The integrity, accessibility, and relevance of training data are critical for AI’s ability to extract valuable insights from extensive datasets and generate contextual content. High-quality training data markedly improve AI system performance, particularly in large language models tailored to specific business domains [2,70]. Domain-specific knowledge graphs can enrich specialized comprehension, benefiting firms that require deep industry insight [46].

Baabdullah [71] posits that the quality and reliability of information strongly affect decision making and consequent innovation outcomes. Large language models such as ChatGPT can generate superior content when trained on precise, unbiased data, thereby enabling more profound insights into consumer behavior and market trends [72]. Rigorous data analysis and mining facilitate comprehensive market and competitive evaluations, informing decisions and improving industrial competitiveness [72]. Such precise and relevant information enables firms to identify and exploit new opportunities, ultimately enhancing both their innovation and performance. Therefore, ensuring the impartiality and accuracy of AI system-generated results is imperative for a firm’s reputation and growth. Based on these considerations, the following hypotheses are proposed:

H2a:

data reliability in human–Gen AI collaboration positively influences innovation capability.

H2b:

data reliability in human–Gen AI collaboration positively influences managerial performance.

2.5.3. Trusted Systems

Designing AI systems that inspire adequate trust from humans is critical in AI implementation [14]. Trusted systems, emphasizing explainability, reliability, and safety, are essential for fostering innovation and enhancing performance, forming a core element of human-centered technology [19,73,74]. However, the complexity and opacity of machine learning algorithms often render them “black boxes” to users, reducing transparency and trust [75]. When employees cannot understand AI decision-making processes, it can lead to distrust and unease [30,34,76,77]. Extensive research on technology acceptance supports the notion that, when an AI system is perceived as explainable and transparent, employees are more likely to fully engage with it, experimenting with its suggestions and integrating AI-generated insights into their work.

Additionally, heightened data visibility demands robust privacy protections [77]. Pre-trained models can inadvertently expose confidential information, posing significant challenges to data handling and privacy [56]. Deficient privacy measures can undermine team collaboration and trust. Although firms strive to innovate through data openness, privacy and security threats can constrain data sharing. Cybersecurity measures are thus indispensable for secure AI deployment [50]. Against this backdrop, transparency, explainability, and data protection are fundamental for cultivating employee trust and propelling firm advancement. Based on this, the following hypotheses are proposed:

H3a:

trusted systems in human–Gen AI collaboration positively impact innovation capability.

H3b:

trusted systems in human–Gen AI collaboration positively impact managerial performance.

2.5.4. Effective Management

Effective management is vital for nurturing innovation and optimizing performance through human–AI collaboration, particularly in finance, where technology must align with business goals. Adopting advanced technologies—often facilitated by external partnerships [57]—broadens the scope of AI-driven initiatives while underscoring the necessity of prudent oversight. Managers are central to technology planning, including business process reengineering to leverage advanced tools, realizing returns on technology investments, and minimizing risks [16,78].

In finance, managers should establish clear communication channels, provide guidelines for human–AI interaction, and conduct ongoing evaluations to reinforce trust. In line with Edmondson’s [79] notion of psychological safety and Amabile et al.’s [80] emphasis on supportive leadership for creativity, managers who promote transparent communication and encourage experimentation create an environment conducive to innovation. This is particularly effective when Gen AI initiatives are strategically integrated and accompanied by clear guidance that facilitates translating AI-driven insights into actionable outcomes. As technological advancements reshape work roles, managers must proactively monitor these changes and ensure that employees receive relevant and timely training [63,77,81,82]. Furthermore, cross-functional collaboration, extensive information sharing, and robust knowledge-management practices, enabled by integrated data systems, strengthen team cooperation and innovation [83]. Concurrently, rigorous managerial oversight supports regulatory compliance and mitigates risks, with regular audits and assessments enhancing organizational responsiveness. Hence, the following hypotheses are proposed:

H4a:

effective management in human–Gen AI collaboration positively impacts innovation capability.

H4b:

effective management in human–Gen AI collaboration positively impacts managerial performance.

2.5.5. The Mediating Role of Innovation Capability

Generally, incorporating AI into firm processes offers numerous benefits, including enhanced product innovation, optimized processes, and refined business models [50,84,85]. Implementing Gen AI in key business processes improves efficiency, drives innovation, and boosts overall managerial performance [50].

In financial services, human–Gen AI collaboration nurtures innovation in areas such as customer service and marketing, spurring more effective strategies and campaigns. Rajaram and Tinguely [57] emphasize that transforming cultural practices is vital to fully leveraging AI’s potential. A collaborative innovation-driven culture amplifies AI’s value, a view supported by Chatterjee et al. [54], who assert that data-centric cultures bolster employee innovation consciousness. When employees are encouraged to experiment and innovate, they are more inclined to embrace AI and related technologies, thus generating notable improvements in managerial performance. Based on this discussion, the following hypotheses are proposed:

H5:

innovation capability positively affects managerial performance.

H6a–d:

innovation capability mediates the relationships between each of the four factors in human–Gen AI collaboration (employee skills, data reliability, trusted systems, and effective management) and managerial performance.
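H5 and H6a–d together describe a simple mediation structure: a predictor X (for example, employee skills) affects innovation capability M (path a), M affects managerial performance Y while controlling for X (path b), and X may retain a direct effect c′ on Y. The sketch below illustrates one common way to test such a structure, a percentile bootstrap of the indirect effect a×b; it is offered as an illustration only, not as the estimation procedure used in this study. The simulated data, effect sizes, the 2,000 resamples, and the helper names (`ols_coefs`, `indirect_effect`, `bootstrap_ci`) are all hypothetical; only the sample size of 202 is taken from the text.

```python
import numpy as np


def ols_coefs(y, *predictors):
    """OLS coefficients [intercept, b1, b2, ...] via least squares."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coefs, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coefs


def indirect_effect(x, m, y):
    """Simple mediation x -> m -> y; returns (a, b, c_prime, a * b)."""
    a = ols_coefs(m, x)[1]                # path a: predictor -> mediator
    _, c_prime, b = ols_coefs(y, x, m)    # outcome on predictor and mediator
    return a, b, c_prime, a * b


def bootstrap_ci(x, m, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect a * b."""
    rng = np.random.default_rng(seed)
    n = len(y)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        draws[i] = indirect_effect(x[idx], m[idx], y[idx])[3]
    lo, hi = np.quantile(draws, [alpha / 2, 1 - alpha / 2])
    return lo, hi


# Hypothetical simulated data, NOT the study's survey responses
rng = np.random.default_rng(42)
n = 202
x = rng.normal(size=n)                                   # e.g., employee skills
m = 0.5 * x + rng.normal(scale=0.8, size=n)              # innovation capability
y = 0.3 * x + 0.4 * m + rng.normal(scale=0.8, size=n)    # managerial performance

a, b, c_prime, ab = indirect_effect(x, m, y)
lo, hi = bootstrap_ci(x, m, y)
print(f"a = {a:.3f}, b = {b:.3f}, direct c' = {c_prime:.3f}, indirect ab = {ab:.3f}")
print(f"95% percentile bootstrap CI for ab: [{lo:.3f}, {hi:.3f}]")
```

In this framing, partial mediation corresponds to a bootstrap interval for a×b that excludes zero while the direct effect c′ also remains non-zero; a complete analysis would additionally include the control variables and report a standard error for c′.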

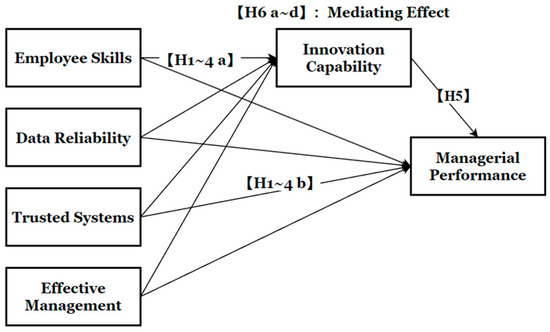

2.6. Research Model

Considering the preceding hypotheses, Figure 1 presents the research model, showing how the four key factors—employee skills, data reliability, trusted systems, and effective management—affect innovation capability and managerial performance, with innovation capability acting as a mediator.

Figure 1.

Research model.

3. Empirical Study

This chapter describes the development of the measurement instrument, outlines the data collection process, and presents the analytical methods and results of both the regression analysis and fsQCA.
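Before fsQCA can evaluate configurations, raw construct scores must be calibrated into fuzzy-set memberships. The sketch below illustrates Ragin’s direct calibration method (the three anchors are mapped to log-odds of −3, 0, and +3) together with the standard sufficiency consistency and coverage measures. It is a minimal illustration under stated assumptions: the anchor values 1.5, 3.0, and 4.5 on the 1–5 scale and the six respondents’ scores are hypothetical, not the thresholds or data used in this study. A full analysis would also construct a truth table and apply Boolean minimization, typically in dedicated software such as fsQCA or the R QCA package.

```python
import numpy as np


def calibrate(raw, full_non, crossover, full_in):
    """Direct calibration: map raw scores to fuzzy membership in (0, 1).

    The anchors correspond to log-odds of -3, 0 and +3, i.e. memberships
    of roughly 0.05, 0.50 and 0.95.
    """
    raw = np.asarray(raw, dtype=float)
    log_odds = np.where(
        raw >= crossover,
        3.0 * (raw - crossover) / (full_in - crossover),
        3.0 * (raw - crossover) / (crossover - full_non),
    )
    return 1.0 / (1.0 + np.exp(-log_odds))


def consistency(x_set, y_set):
    """Sufficiency consistency: sum(min(X, Y)) / sum(X)."""
    return np.minimum(x_set, y_set).sum() / x_set.sum()


def coverage(x_set, y_set):
    """Sufficiency coverage: sum(min(X, Y)) / sum(Y)."""
    return np.minimum(x_set, y_set).sum() / y_set.sum()


# Hypothetical construct means (1-5 scale) for six respondents
skills = [4.6, 4.2, 3.8, 2.4, 4.8, 3.0]
management = [4.4, 4.0, 2.6, 2.2, 4.6, 3.4]
performance = [4.7, 4.1, 3.2, 2.0, 4.9, 3.6]

# Hypothetical anchors: full non-membership, crossover, full membership
anchors = (1.5, 3.0, 4.5)
S = calibrate(skills, *anchors)
M = calibrate(management, *anchors)
P = calibrate(performance, *anchors)

# Configuration "high skills AND high management": fuzzy AND is the minimum
config = np.minimum(S, M)
print(f"consistency = {consistency(config, P):.3f}")
print(f"coverage    = {coverage(config, P):.3f}")
# Fuzzy negation (e.g., "NOT high management") would be 1 - M
```

Because fuzzy AND takes the minimum and negation takes the complement, any combination of the four conditions can be scored in the same way and compared against the outcome set.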

3.1. Research Methods

3.1.1. Measurement

A structured questionnaire employing a five-point Likert scale, ranging from “completely disagree” at 1 to “completely agree” at 5, was designed to capture the key constructs underpinning human–Gen AI collaboration in financial services, with each construct comprising five measurement items. Most items were newly developed following a comprehensive literature review, with several adapted from existing scales; all were refined to reflect the specific context.
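Because each construct is measured with five Likert items, a common first step is to average the items into a composite score and to check internal consistency. The sketch below computes both, using Cronbach’s alpha as the reliability statistic. The choice of statistic, the helper names, and the response matrix are illustrative assumptions, not the study’s reported procedure or data.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) matrix of item scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    sum_item_var = items.var(axis=0, ddof=1).sum()   # sum of per-item sample variances
    total_var = items.sum(axis=1).var(ddof=1)        # variance of respondents' total scores
    return k / (k - 1) * (1.0 - sum_item_var / total_var)


def construct_score(items):
    """Composite score per respondent: mean of the items, staying on the 1-5 scale."""
    return np.asarray(items, dtype=float).mean(axis=1)


# Hypothetical responses: 6 respondents x 5 items of one construct (1-5 Likert)
responses = np.array([
    [4, 5, 4, 4, 5],
    [3, 3, 4, 3, 3],
    [5, 5, 5, 4, 5],
    [2, 3, 2, 2, 3],
    [4, 4, 3, 4, 4],
    [1, 2, 2, 1, 2],
])

print(f"Cronbach's alpha = {cronbach_alpha(responses):.3f}")
print("Construct scores:", construct_score(responses))
```

A value of about 0.70 or higher is a commonly cited rule of thumb for acceptable reliability; in practice, this check is usually complemented by factor-analytic tests of convergent and discriminant validity.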

Building on the distinction made by Mikalef and Gupta [37] between business and technical skills, employee skills were operationalized to include decision-making capabilities, financial literacy, data analytics proficiency, and collaborative technology skills [48,67,69]. Next, data reliability focused on the timeliness, domain alignment, and credibility of data used for content generation, including its congruence with broader societal development goals [12,20,46,70,71,72]. The trusted systems construct incorporated factors such as security, transparency, stringent access controls, advanced technological safeguards, and openly documented modeling algorithms [12,48,73,74]. Similarly, effective management was gauged via organizational practices in technology adoption, compliance supervision, coordination of human–machine tasks, and the facilitation of knowledge exchange [16,57,86]. In addition, innovation capability measured a firm’s capacity to foster an innovative culture, develop new products and services, and optimize business processes [22,40,50,54,55]. Finally, managerial performance was assessed in terms of financial stability, process efficiency, risk management, and customer loyalty [1,7,43,59,60,62]. Operational definitions and sources are summarized in Table 1.

Table 1.

Operational definitions and sources of construct development.

Moreover, firm type (traditional firms vs. fintech firms) and department (banking, insurance, securities, funds, and asset management) were included as control variables to account for structural differences among the surveyed firms.
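Because both control variables are categorical, they must be coded before entering the regressions. The sketch below shows one possible approach, assuming dummy (treatment) coding with banking as the reference department, five department levels as listed above, and a 0/1 indicator for fintech firms; it regresses innovation capability on the four factors plus controls, in the spirit of H1a–H4a. The simulated data, coefficients, and helper names (`dummy_code`, `fit_ols`) are hypothetical and do not reproduce the study’s estimates.

```python
import numpy as np

DEPARTMENTS = ["banking", "insurance", "securities", "funds", "asset management"]


def dummy_code(labels, levels):
    """One-hot encode labels, dropping levels[0] as the reference category."""
    labels = np.asarray(labels)
    return np.column_stack([(labels == lvl).astype(float) for lvl in levels[1:]])


def fit_ols(y, X):
    """OLS with an intercept; returns (coefficients, R-squared)."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    centered = y - y.mean()
    r2 = 1.0 - (resid @ resid) / (centered @ centered)
    return beta, r2


# Hypothetical simulated sample (n matches the 202 retained questionnaires)
rng = np.random.default_rng(7)
n = 202
factors = rng.normal(size=(n, 4))   # skills, data reliability, trusted systems, management
fintech = rng.integers(0, 2, size=n).astype(float)   # 1 = fintech firm, 0 = traditional
dept = rng.choice(DEPARTMENTS, size=n)
controls = np.column_stack([fintech, dummy_code(dept, DEPARTMENTS)])

true_beta = np.array([0.30, 0.20, 0.25, 0.35])        # hypothetical effects
innovation = factors @ true_beta + 0.1 * fintech + rng.normal(scale=0.5, size=n)

beta, r2 = fit_ols(innovation, np.column_stack([factors, controls]))
for name, coef in zip(["skills", "data", "trust", "management"], beta[1:5]):
    print(f"{name:>10}: {coef:.3f}")
print(f"R^2 = {r2:.3f}")
```

Dropping one department level avoids perfect collinearity with the intercept; each department coefficient is then read as a difference relative to banking.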

3.1.2. Data Collection

To meet the objectives of this research, a two-phase purposive sampling strategy was adopted. In light of the rapid advancement of Gen AI in Chinese tech firms and the heightened interest in such tools within the financial sector [3,64,86,87,88], data were gathered in China. Three principles were upheld throughout data collection: (1) only participants with direct experience using Gen AI (e.g., for content creation or decision support) were recruited; (2) all individuals participated voluntarily; and (3) no sensitive or personally identifiable information was collected, to ensure confidentiality.

During the pilot phase, surveys were administered to 32 employees from six financial firms in Shandong Province to evaluate the clarity of the questionnaire items and to determine whether frontline staff found the measured constructs comprehensible. Of these 32 respondents, 21 reported actively using Gen AI (e.g., ERNIE Bot-like systems) in their day-to-day operations, confirming the instrument’s practical relevance to the financial context. Based on the pilot feedback, minor revisions were made to improve clarity, for instance by simplifying technical jargon and reordering items to mirror typical AI usage workflows.

Building on the pilot-phase insights, the scope of data collection was expanded to include financial firms in Shandong and Jiangsu provinces. These regions were selected because early evidence indicated that local financial firms had begun actively adopting Gen AI solutions, whether developed in-house or acquired through external partnerships, to enhance their operational processes [44,89]. Data collection occurred between 3 August and 30 September 2023, under stringent confidentiality protocols that precluded the collection of any personal or sensitive data. During this phase, participants were purposively selected based on their active daily use of Gen AI tools, ensuring that the sample consisted solely of regular users of this technology. This approach captured a broad spectrum of Gen AI-supported work practices, as participants’ interactions with Gen AI ranged from basic prompt–response interactions to sustained complex human–Gen AI collaborations. Initially, one researcher visited branch offices and headquarters to distribute paper questionnaires to employees who met the inclusion criteria, ultimately disseminating 260 questionnaires across 36 institutions and obtaining 151 valid responses (a 58% response rate). To reach institutions not accessible in person, the researcher introduced the study via electronic communication and administered an online survey using the wjx.cn platform. This online outreach yielded an additional 51 valid responses from eight further institutions, bringing the total to 202 valid questionnaires retained for analysis.

Potential outliers were evaluated using Cook’s D [90]; no values exceeded the critical threshold of 1.0. G*Power [91] was then used to verify the adequacy of the sample size. An a priori F-test (effect size f² = 0.15, α = 0.05, power = 0.95, and 5 predictors) indicated a minimum requirement of 138 responses. Hence, the final sample of 202 was deemed sufficient, providing adequate statistical power for the subsequent analyses.
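The G*Power calculation can be approximated with standard-library Python as a cross-check. This is a minimal sketch, assuming the usual "linear multiple regression: R² deviation from zero" setup, in which the noncentrality parameter is λ = f²·N, the numerator degrees of freedom equal the number of predictors, and the denominator degrees of freedom are N − predictors − 1. The function names are hypothetical, and the incomplete-beta routine is a textbook continued-fraction evaluation rather than G*Power's own code, so its output should be compared against the G*Power minimum of 138 rather than treated as authoritative. Where SciPy is available, `scipy.stats.f` and `scipy.stats.ncf` provide the same distributions directly.

```python
import math


def _betacf(a, b, x, max_iter=1000, eps=1e-14):
    """Continued fraction for the regularized incomplete beta (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_cdf(x, d1, d2, lam=0.0):
    """CDF of the (noncentral) F distribution as a Poisson mixture of beta CDFs."""
    if x <= 0.0:
        return 0.0
    y = d1 * x / (d1 * x + d2)
    half = lam / 2.0
    weight = math.exp(-half)  # Poisson(lambda / 2) probability of j = 0
    total, j = 0.0, 0
    while True:
        total += weight * betainc(d1 / 2.0 + j, d2 / 2.0, y)
        j += 1
        weight *= half / j
        if j > half and weight < 1e-16:
            break
    return total


def f_critical(alpha, d1, d2):
    """Upper-tail critical value of the central F distribution, by bisection."""
    lo, hi = 0.0, 1.0
    while f_cdf(hi, d1, d2) < 1.0 - alpha:
        hi *= 2.0
    for _ in range(80):
        mid = (lo + hi) / 2.0
        if f_cdf(mid, d1, d2) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return hi


def regression_power(n, f2, predictors, alpha=0.05):
    """Power of the overall F test of R² > 0, with lambda = f2 * n."""
    d1, d2 = predictors, n - predictors - 1
    crit = f_critical(alpha, d1, d2)
    return 1.0 - f_cdf(crit, d1, d2, lam=f2 * n)


def minimum_sample_size(f2=0.15, predictors=5, alpha=0.05, target=0.95):
    """Smallest n whose power reaches the target (linear search)."""
    n = predictors + 2  # smallest n with one residual degree of freedom
    while regression_power(n, f2, predictors, alpha) < target:
        n += 1
    return n
```

A linear search is slow in principle but cheap at this scale; it also makes the "smallest N reaching the target power" definition explicit.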

Table 2 summarizes the demographic profiles of the 202 participants: 110 men (54.5%) and 92 women (45.5%). The majority (88.2%) were aged 20–40 years. Regarding professional roles, 94 served as general staff (46.5%), 87 as managers (43.1%), 18 as senior managers (8.9%), and 3 as executives (1.5%). By industry, 73 participants (36.1%) were in banking, followed by 35 (17.3%) in securities and 33 (16.3%) in insurance. Overall, this distribution reflects a diverse yet representative sample of financial service professionals who directly use Gen AI within their respective firms.

Table 2.

Demographic information.

3.1.3. Reliability and Validity

In this study, exploratory factor analysis (EFA) was conducted in IBM SPSS Statistics 27, using principal component analysis together with the Kaiser criterion (eigenvalues > 1) and a scree plot to determine the number of factors to extract. Promax oblique rotation was applied so that correlations among factors could be represented, as recommended by Howard [92]. Items were retained if their factor loadings exceeded 0.50; items with cross-loadings above 0.38 were retained only if the difference between the primary loading and the cross-loading exceeded 0.15. Six factors were identified, with eigenvalues ranging from 1.297 to 7.953, comprising a total of 26 items (employee skills item 4, innovation capability items 2 and 5, and managerial performance item 2 were removed). These factors explained 60.290% of the variance, exceeding the 60% threshold [93]. The Kaiser–Meyer–Olkin index was 0.880, and Bartlett’s test was significant (p < 0.001), confirming the suitability of the data for factor analysis.
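The extraction and item-retention rules described above can be written as two small predicates. This is a sketch that assumes each item's row of the rotated pattern matrix is available as a list of loadings; the function names and the example loadings are hypothetical and are not values from this study.

```python
def kaiser_count(eigenvalues):
    """Number of factors retained under the Kaiser criterion (eigenvalue > 1)."""
    return sum(1 for ev in eigenvalues if ev > 1.0)


def retain_item(loadings, primary_min=0.50, cross_min=0.38, min_gap=0.15):
    """Apply the retention rule to one item's rotated loadings.

    The item is kept if its largest absolute loading exceeds primary_min; if
    its second-largest loading exceeds cross_min, it is kept only when the gap
    between the two loadings is greater than min_gap.
    """
    ranked = sorted((abs(x) for x in loadings), reverse=True)
    primary = ranked[0]
    secondary = ranked[1] if len(ranked) > 1 else 0.0
    if primary <= primary_min:
        return False
    if secondary > cross_min and primary - secondary <= min_gap:
        return False
    return True


# Hypothetical loadings on three factors.
items = {
    "item_a": [0.72, 0.20, 0.10],  # clean primary loading: kept
    "item_b": [0.62, 0.42, 0.05],  # cross-loading, but gap of about 0.20: kept
    "item_c": [0.55, 0.45, 0.12],  # cross-loading with gap of about 0.10: dropped
    "item_d": [0.48, 0.15, 0.30],  # primary loading too low: dropped
}
kept = [name for name, row in items.items() if retain_item(row)]
```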

To further validate the EFA structure, a confirmatory factor analysis (CFA) was conducted in AMOS 26; the model showed good fit (χ²/df = 1.239, RMSEA = 0.034, GFI = 0.883, AGFI = 0.855, SRMR = 0.048, CFI = 0.961). The constructs identified in the EFA were confirmed, with all factor loadings exceeding 0.50 and composite reliability (CR) values above 0.70, demonstrating reliability and convergent validity [94]. The average variance extracted (AVE) for some constructs was slightly below the recommended threshold of 0.50. However, Fornell and Larcker [94] (p. 46) note that AVE is a conservative measure and that researchers may conclude that convergent validity is adequate on the basis of CR alone; accordingly, AVE values below 0.50 are acceptable when CR exceeds 0.60, a criterion met here because all constructs had CR values well above 0.70. This approach is consistent with precedents in leading academic journals [95]. Overall, all constructs demonstrated strong reliability and validity (see Table 3).
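The CR and AVE figures referred to above follow from the standardized loadings. The sketch below assumes standardized loadings with error variances of 1 − λ² and uncorrelated measurement errors; the loadings are hypothetical and were chosen to show the situation described in the text, where AVE falls below 0.50 while CR stays above 0.70.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1.0 - l * l for l in loadings)  # error variance of each item
    return s * s / (s * s + error)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


# Hypothetical standardized loadings for a five-item construct.
loadings = [0.70, 0.65, 0.60, 0.72, 0.68]
cr = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(f"CR = {cr:.3f}, AVE = {ave:.3f}")  # CR = 0.803, AVE = 0.451
```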

Table 3.

Factor analysis results (measurement of Gen AI collaboration context).

Table 4 presents the means, standard deviations, and correlation coefficients for the six variables. Discriminant validity was confirmed using the Fornell–Larcker criterion: the square root of each construct’s AVE, shown on the diagonal, exceeds that construct’s correlations with all other constructs.
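The Fornell–Larcker check amounts to a pairwise comparison. In this sketch the AVE values and correlations are hypothetical, and correlations are keyed by unordered construct pairs purely for convenience.

```python
import math
from itertools import combinations


def fornell_larcker_holds(ave, corr):
    """Return True if sqrt(AVE) of every construct exceeds its correlations.

    ave:  dict mapping construct name -> AVE
    corr: dict mapping frozenset({a, b}) -> correlation between a and b
    """
    for a, b in combinations(sorted(ave), 2):
        r = abs(corr[frozenset((a, b))])
        if r >= math.sqrt(ave[a]) or r >= math.sqrt(ave[b]):
            return False
    return True


# Hypothetical values: sqrt(AVE) is about 0.671, 0.693 and 0.721.
ave = {"ES": 0.45, "DR": 0.48, "IC": 0.52}
corr = {
    frozenset(("ES", "DR")): 0.41,
    frozenset(("ES", "IC")): 0.46,
    frozenset(("DR", "IC")): 0.50,
}
```

Note that a construct with AVE slightly below 0.50 can still pass, as long as its correlations with other constructs are lower than the square root of its AVE.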

Table 4.

Correlation analysis results.

3.1.4. Common Method and Non-Response Bias

To mitigate the potential impact of common method bias (CMB) on this study’s validity, several proactive steps were taken during questionnaire design and data collection, following established protocols [96,97,98]. Participation was voluntary, and participants were informed of the survey’s academic purpose and told that there were no right or wrong answers [99]. Anonymity was strictly maintained, and questions were carefully worded to respect organizational and personal privacy [91]. To assess CMB, Harman’s single-factor test was conducted [100]: using principal axis factoring, a single factor explained 28.639% of the variance, well below the 50% threshold indicative of CMB. Because Harman’s test has known limitations [85], a marker variable, “Work Departments”, that is theoretically unrelated to this study’s framework and context was also employed [101]. Its inclusion did not alter the correlations among the core constructs, and it showed no significant associations with them, suggesting that CMB is of minimal concern [102].
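The logic of Harman’s test is to ask whether one factor absorbs most of the common variance. The sketch below swaps in a simplification: it uses the largest eigenvalue of the item correlation matrix (an unrotated principal-component share, found by power iteration), whereas the study used principal axis factoring, so the two numbers would not match exactly. The correlation matrix is hypothetical.

```python
import math


def first_factor_share(corr, iterations=1000, tol=1e-12):
    """Share of total variance captured by the first unrotated component.

    Uses power iteration for the largest eigenvalue of a correlation matrix;
    the trace of a correlation matrix equals the number of items.
    """
    n = len(corr)
    v = [1.0 / math.sqrt(n)] * n  # unit-length starting vector
    eigenvalue = 0.0
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(n)) for i in range(n)]
        new_eigenvalue = sum(v[i] * w[i] for i in range(n))  # Rayleigh quotient
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
        if abs(new_eigenvalue - eigenvalue) < tol:
            break
        eigenvalue = new_eigenvalue
    return new_eigenvalue / n


# Hypothetical four-item matrix with every inter-item correlation equal to 0.3.
R = [[1.0 if i == j else 0.3 for j in range(4)] for i in range(4)]
share = first_factor_share(R)
print(f"first factor share: {share:.1%}; above 50%: {share > 0.5}")
```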

Non-response bias was evaluated by comparing early and late survey responses [103]. An independent-samples t-test compared the first and last 25% of responses on the innovation capability and managerial performance items. No statistically significant differences were found (p = 0.135–0.593, all > 0.05), indicating that non-response bias did not notably affect the findings.
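The early-versus-late comparison can be sketched as follows. The sketch assumes that responses are stored in order of submission and that a pooled-variance (Student) t-test was used, which the text does not specify. The p-value uses a normal approximation to the t distribution, which is reasonable only for large degrees of freedom (around 100 here); an exact p-value would come from a statistics package such as `scipy.stats.ttest_ind`.

```python
import math
import statistics


def early_late_ttest(scores_in_submission_order, fraction=0.25):
    """Pooled-variance t test comparing the earliest and latest respondents.

    Returns (t, df, approximate two-sided p). The p-value uses a normal
    approximation to the t distribution, which is close only for large df.
    """
    k = int(len(scores_in_submission_order) * fraction)
    early = scores_in_submission_order[:k]
    late = scores_in_submission_order[-k:]
    n1, n2 = len(early), len(late)
    pooled_var = ((n1 - 1) * statistics.variance(early)
                  + (n2 - 1) * statistics.variance(late)) / (n1 + n2 - 2)
    t = (statistics.mean(early) - statistics.mean(late)) / math.sqrt(
        pooled_var * (1.0 / n1 + 1.0 / n2))
    df = n1 + n2 - 2
    p_approx = math.erfc(abs(t) / math.sqrt(2.0))
    return t, df, p_approx
```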

3.2. Hypotheses Testing

Before conducting the regression analysis, its underlying assumptions were evaluated. Normality was assessed using skewness (−0.98 to −0.231) and kurtosis (−0.775 to 1.932) indices, all within the acceptable range of ±3 [104]. The relatively large sample further mitigates concerns about non-normality, because by the central limit theorem the sampling distributions of the estimates approach normality as sample size increases [105]. Multicollinearity was examined via variance inflation factors (VIFs; 1.317–1.565), which remained well below the threshold of 10 [106]. Linearity was tested through analysis of variance, which revealed both linear and nonlinear components; OLS regression analysis nevertheless indicated predominantly linear patterns [107], supported by significant Pearson correlations (p < 0.01). Homoscedasticity was evaluated using scatterplots of standardized residuals, which indicated no violations. Overall, the data satisfied the assumptions required for multiple regression analysis.
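The normality and multicollinearity diagnostics above can be reproduced from first principles. This sketch assumes the bias-corrected skewness (G1) and excess kurtosis (G2) estimators that SPSS reports, and computes VIFs as the diagonal of the inverse of the predictor correlation matrix, which is equivalent to 1 / (1 − R²ⱼ) from regressing each predictor on the others. The inputs in the usage lines are hypothetical.

```python
import math


def skewness(xs):
    """Bias-corrected sample skewness (G1)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return math.sqrt(n * (n - 1)) / (n - 2) * (m3 / m2 ** 1.5)


def excess_kurtosis(xs):
    """Bias-corrected sample excess kurtosis (G2)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    g2 = m4 / m2 ** 2 - 3.0
    return ((n + 1) * g2 + 6.0) * (n - 1) / ((n - 2) * (n - 3))


def vif_from_correlations(corr):
    """VIFs as the diagonal of the inverse correlation matrix (Gauss-Jordan)."""
    n = len(corr)
    # Augment the matrix with the identity and reduce it to [I | inverse].
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(corr)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][n + i] for i in range(n)]


print(skewness([1, 2, 3, 4, 5]), excess_kurtosis([1, 2, 3, 4, 5]))
print(vif_from_correlations([[1.0, 0.5], [0.5, 1.0]]))
```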

The regression models yielded coefficients of determination (R²) ranging from 0.199 to 0.328, exceeding the threshold of 0.1 recommended by Falk and Miller [108]. The F-values, ranging from 22.588 to 49.625, were statistically significant, confirming that each model was significant overall. Standardized residual plots indicated that the residuals were normally distributed. The Durbin–Watson statistic, which can take values from 0 to 4, ranged from 1.768 to 2.178 across models; these values are close to 2, indicating negligible autocorrelation among residuals. In summary, the regression models demonstrated strong goodness of fit and significant predictive capability, effectively illustrating the relationships among the variables (see Table 5).
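The Durbin–Watson statistic is the sum of squared differences between successive residuals divided by the residual sum of squares. The short sketch below shows why it is bounded by 0 and 4 and centered on 2. For cross-sectional survey data the statistic depends on the order in which cases are stored, so it mainly detects dependence related to that order. The residual vectors are illustrative.

```python
def durbin_watson(residuals):
    """Sum of squared successive differences divided by the residual sum of squares.

    Values near 2 suggest no first-order autocorrelation; values toward 0
    indicate positive, and values toward 4 negative, autocorrelation.
    """
    num = sum((residuals[t] - residuals[t - 1]) ** 2
              for t in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    return num / den


print(durbin_watson([1, -1, 1, -1]))          # alternating signs: strongly negative
print(durbin_watson([1.0, 1.0, 1.0, 1.0]))    # identical residuals: strongly positive
```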

Table 5.

Regression analysis results.

The findings presented in Table 5 provide statistical support for the first nine hypotheses. In the regression model with innovation capability as the dependent variable, employee skills (B = 0.205, p = 0.011), data reliability (B = 0.250, p = 0.004), trusted systems (B = 0.179, p = 0.016), and effective management (B = 0.207, p = 0.008) all had significant positive effects. At the 0.05 significance level, these results reject the null hypotheses and support H1a, H2a, H3a, and H4a. Including three control variables (organizational type, technology acquisition strategy, and organizational department) resulted in an adjusted R² of 0.297. Importantly, these control variables did not significantly alter the relationships between the main predictors and innovation capability.

Similarly, in the regression model with managerial performance as the dependent variable, significant positive effects were observed for employee skills (B = 0.213, p = 0.003), data reliability (B = 0.187, p = 0.014), trusted systems (B = 0.187, p = 0.004), and effective management (B = 0.183, p = 0.008). Again, at the 0.05 significance level, these findings reject the null hypotheses and support H1b, H2b, H3b, and H4b. Including the control variables resulted in an adjusted R² of 0.309, indicating that the controls had minimal influence on managerial performance. Furthermore, innovation capability (B = 0.398, p < 0.001) significantly influenced managerial performance, supporting H5. With the control variables included, the adjusted R² for this model remained stable at 0.187, highlighting the robust relationship between innovation capability and managerial performance.

3.3. Mediating Effect

Mediation occurs when a third variable, the mediator, transmits part or all of the effect of an independent variable on a dependent variable. Partial mediation occurs when the mediator explains part of this relationship while the direct effect remains significant; complete mediation occurs when the direct effect is no longer significant, indicating that the mediator fully accounts for the relationship. In this study, mediation effects were assessed using the Sobel test [109], which divides the indirect effect (the product of the independent variable → mediator path and the mediator → dependent variable path) by a standard error computed from the standard errors of those two paths, and compares the resulting Z statistic with the standard normal distribution [109].
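The Sobel statistic can be computed directly from the two path estimates. This sketch uses the common first-order formula, z = ab / √(b²·SE_a² + a²·SE_b²), with unstandardized coefficients and standard errors taken from two regressions; the numbers in the usage line are hypothetical.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """Sobel z for the indirect effect a*b and its two-sided normal p-value.

    a, se_a: coefficient and SE of the predictor -> mediator path
    b, se_b: coefficient and SE of the mediator -> outcome path, estimated
             while controlling for the predictor
    """
    se_ab = math.sqrt(b * b * se_a * se_a + a * a * se_b * se_b)
    z = a * b / se_ab
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided standard normal p-value
    return z, p


z, p = sobel_test(a=0.5, se_a=0.1, b=0.4, se_b=0.1)  # hypothetical paths
print(f"z = {z:.3f}, p = {p:.4f}")
```

A |z| above 1.96 corresponds to p < 0.05 (two-sided), which is how Z values such as those in Table 6 are usually interpreted.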

Table 6 shows significant mediating effects of innovation capability for all four factors (Z = 2.405, 2.683, 2.313, and 2.508; all p < 0.05), suggesting that innovation capability partially mediates these relationships. Thus, H6a–d are supported.

Table 6.

Mediating effect.

3.4. fsQCA

After completing the regression analysis, this study employed fsQCA to capture complex configurations that conventional regression methods often overlook. Regression analysis typically assumes symmetrical causality, implying a linear and unidirectional relationship where changes in X directly elicit changes in Y. However, such assumptions may fail to capture the real-world nonlinearity and context-specific nuances inherent in many phenomena [110]. Recent research has demonstrated how differences in individual abilities or performance levels can have an asymmetrical impact on AI collaboration [111,112,113,114], as underscored by Bankins et al. [9]. In other words, the success of human–AI collaboration often hinges on the complementarity among employees’ skills, AI system capabilities, and firm management practices. For instance, highly skilled employees may compensate for a less sophisticated AI system if supported by robust managerial structures, whereas an advanced AI system could offset employees’ lower skill levels when coupled with dedicated training and clear oversight.

According to Woodside [115], complexity theory rests on three fundamental principles: the recipe principle, equifinality, and causal asymmetry. The recipe principle posits that a combination (a “recipe”) of conditions, rather than any single condition, is sufficient for a given outcome. Equifinality indicates that different configurations of factors can yield the same outcome. Causal asymmetry holds that the conditions leading to an outcome do not simply invert to produce its opposite; that is, the absence or negation of those conditions does not necessarily produce the contrasting outcome. Building on these insights, fsQCA identifies particular configurations of antecedent conditions that collectively explain specific outcomes.

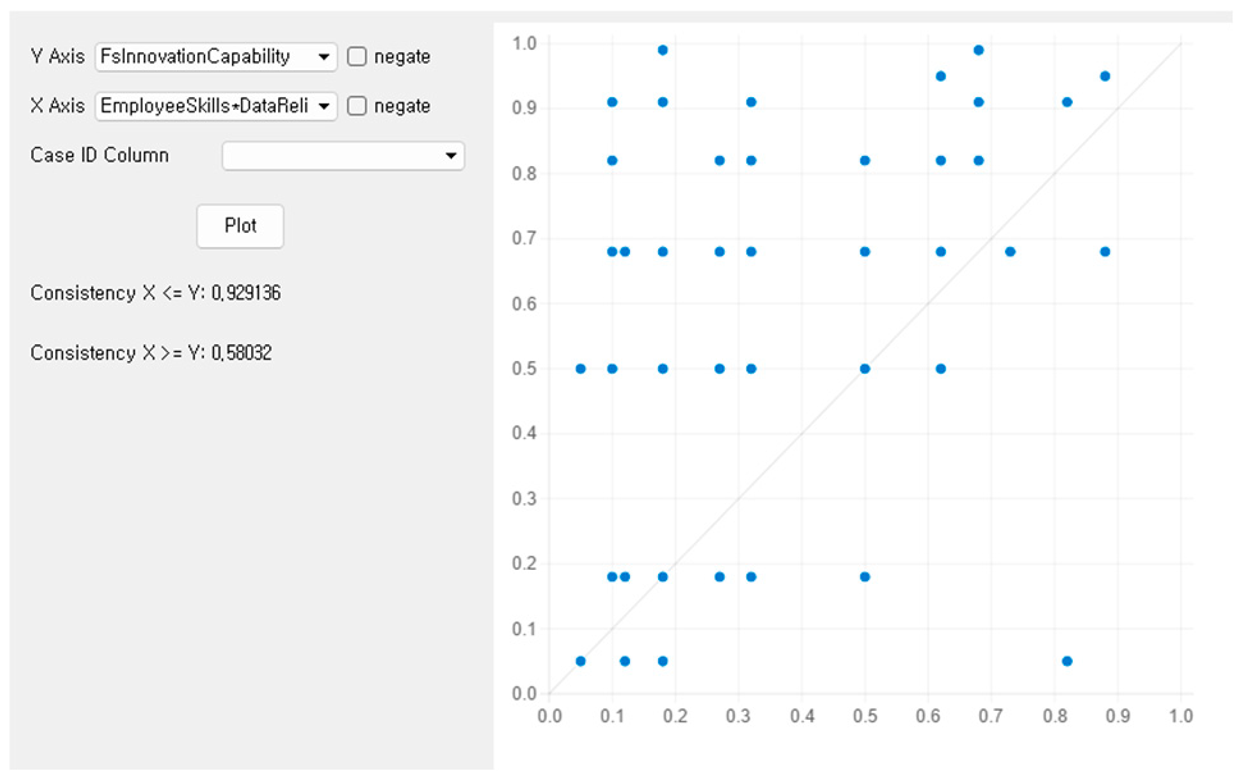

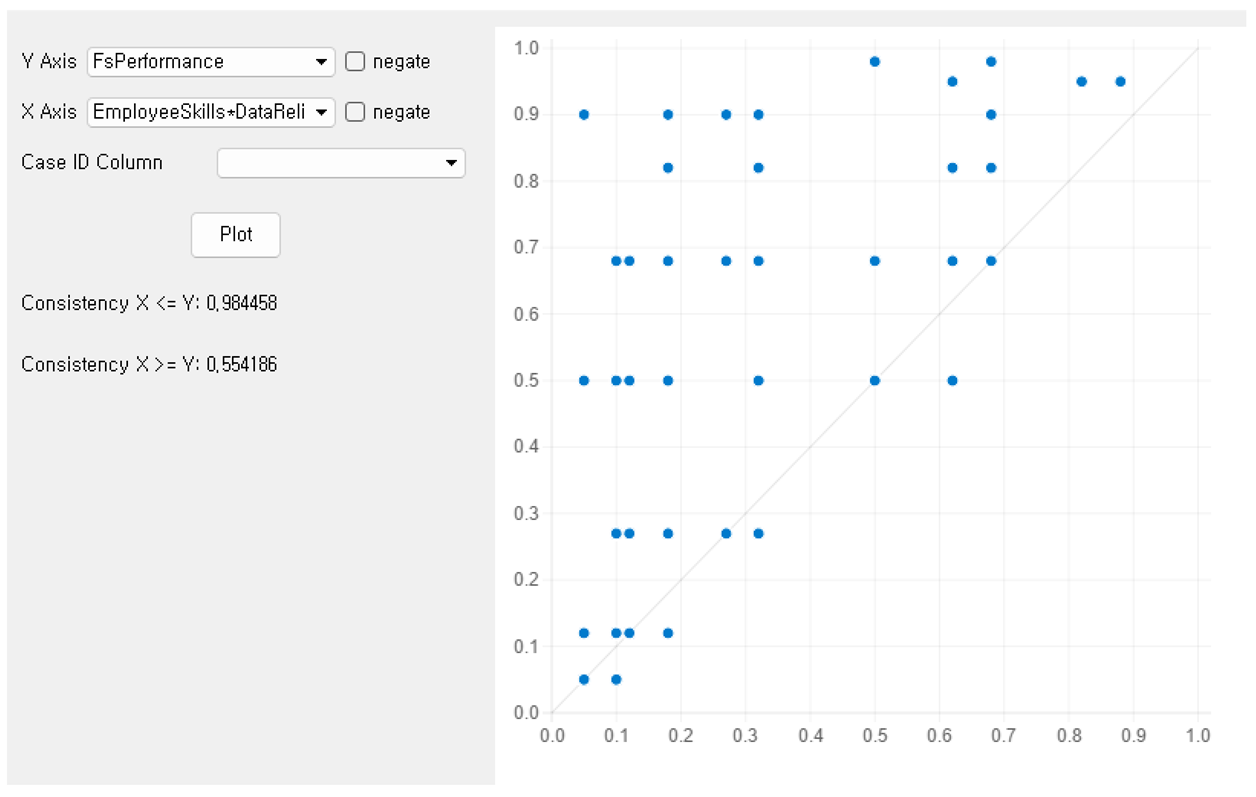

Following the guidelines of Pappas and Woodside [116], a contrarian case analysis (see Appendix A) identified observations not fully explained by the main effects in the correlation and regression analyses, reinforcing the need for fsQCA [115,116]. The data were then calibrated into fuzzy-set membership scores ranging from 0.0 (full non-membership) to 1.0 (full membership), using the 0.95, 0.50, and 0.05 quantiles of each measure as the thresholds for full membership, the crossover point, and full non-membership, respectively. Necessary and sufficient conditions were subsequently examined, and tests of predictive validity and robustness confirmed the reliability of the fsQCA findings.
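The calibration step can be sketched with Ragin’s direct method, in which the full-membership, crossover, and non-membership anchors map to log-odds of +3, 0, and −3 (memberships of about 0.95, 0.50, and 0.05). The sketch assumes the anchors are the 0.95, 0.50, and 0.05 quantiles of each construct score computed with linear interpolation (`statistics.quantiles` with `method="inclusive"`); fsQCA software may use a different quantile convention, and the function names are hypothetical.

```python
import math
import statistics


def direct_calibration(x, full, crossover, non):
    """Map a raw score to fuzzy-set membership with log-odds anchors of +3, 0 and -3."""
    if x >= crossover:
        log_odds = 3.0 * (x - crossover) / (full - crossover)
    else:
        log_odds = 3.0 * (x - crossover) / (crossover - non)
    return 1.0 / (1.0 + math.exp(-log_odds))


def calibrate_by_quantiles(scores):
    """Calibrate construct scores using their 0.95, 0.50 and 0.05 quantiles."""
    cuts = statistics.quantiles(scores, n=20, method="inclusive")  # 5%, 10%, ..., 95%
    non, crossover, full = cuts[0], cuts[9], cuts[18]
    return [direct_calibration(x, full, crossover, non) for x in scores]
```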

3.4.1. Conditions Analysis

The conditions analysis examines both necessary and sufficient conditions. Following Ragin [117], a condition is considered necessary for an outcome when its consistency exceeds 0.9; none of the conditions met this threshold for either innovation capability or managerial performance (see Appendix B). For the sufficiency analysis, a consistency threshold of 0.8 and a frequency threshold of 3 were applied [118,119], and combinations with a proportional reduction in inconsistency (PRI) below 0.7 were excluded [120]. fsQCA yields three types of solution: complex, parsimonious, and intermediate. For clarity of interpretation, the intermediate and parsimonious solutions were combined to distinguish core conditions (those appearing in both) from peripheral conditions (those appearing only in the intermediate solution) [118]. The consistency index serves as an analog to a correlation coefficient, while the coverage index resembles R² in multiple regression.
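The consistency, coverage, and PRI measures used above are ratios of sums of case-wise minima. This sketch follows the standard fuzzy-set formulas; the membership scores are hypothetical. A configuration’s membership is the case-wise minimum across its conditions, and an absent condition (written ~X in Appendix B) uses 1 − x.

```python
def _sum_min(*sets):
    """Sum over cases of the minimum membership across the given sets."""
    return sum(min(values) for values in zip(*sets))


def conjunction(*conditions):
    """Membership in a configuration (logical AND) is the case-wise minimum."""
    return [min(values) for values in zip(*conditions)]


def negate(x):
    """Set negation (~X): membership in the absence of the condition."""
    return [1.0 - v for v in x]


def sufficiency(x, y):
    """Consistency, coverage and PRI for 'X is sufficient for Y'."""
    overlap = _sum_min(x, y)
    both = _sum_min(x, y, negate(y))  # membership in X, Y and ~Y at once
    consistency = overlap / sum(x)
    coverage = overlap / sum(y)
    pri = (overlap - both) / (sum(x) - both)
    return consistency, coverage, pri


def necessity(x, y):
    """Consistency and coverage for 'X is necessary for Y'."""
    overlap = _sum_min(x, y)
    return overlap / sum(y), overlap / sum(x)


x = [0.9, 0.8, 0.2]   # hypothetical condition memberships
y = [0.95, 0.6, 0.1]  # hypothetical outcome memberships
print(sufficiency(x, y), necessity(x, y))
```

Sufficiency and necessity share the same numerator and differ only in which set’s total forms the denominator; this is why a condition can be highly consistent as a sufficient condition without being necessary.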

Table 7 identifies eight solutions: four lead to high innovation capability (IC1–IC4) and four lead to high managerial performance (MP1–MP4). Regarding innovation capability, first, highly trusted systems and data reliability enhance innovation capability independently of employee skills and effective management (IC1). Second, high data reliability can lead to significant innovation capability even with lower levels of employee skills and effective management, irrespective of trusted systems (IC2). Third, the combination of high employee skill levels, data reliability, and effective management substantially boosts innovation capabilities without necessarily relying on trusted systems (IC3). Lastly, effective management can drive high innovation capabilities even in the absence of high levels of employee skills, data reliability, and trusted systems (IC4).

Table 7.

Solutions to innovation capability and managerial performance.

Turning to managerial performance, high data reliability can achieve strong managerial performance regardless of innovation capability, even with lower employee skills, trusted systems, and effective management (MP1). Moreover, combining high data reliability with innovation capability enhances managerial performance, even with lower employee skills and effective management and independently of trusted systems (MP2). Even when employee skills, data reliability, effective management, and innovation capability are low, highly trusted systems that emphasize security, reliability, transparency, and strict access policies can still lead to high managerial performance (MP3). Finally, integrating high employee skills, data reliability, trusted systems, effective management, and innovation capability maximizes overall managerial performance (MP4). Importantly, all solutions exceed the recommended thresholds for consistency (>0.80) and coverage (>0.20) [121], reinforcing their theoretical significance [116,122].

3.4.2. Predictive Validity and Robustness

Following the guidelines of Pappas and Woodside [116], the predictive validity of the fsQCA results was assessed, and the identified pathways showed substantial predictive power. Additional robustness checks using adjusted calibration thresholds (the 0.90, 0.50, and 0.10 quantiles) revealed no significant departures from the initial findings, further supporting the predictive validity and reliability of the fsQCA results (see Appendix C and Appendix D).

4. Discussion

4.1. Summary and Theoretical Implications

This study explores the emergent domain of human–Gen AI collaboration, focusing on four key factors—employee skills, data reliability, trusted systems, and effective management—to discern their effects on innovation capability and managerial performance in financial services. The analysis indicates direct positive influences from each factor on both outcomes, with innovation capability functioning as a partial mediator that amplifies these relationships. Furthermore, fsQCA identifies multiple distinct configurations of factors that jointly foster high innovation capability and managerial performance, illustrating a nuanced interplay among the antecedents.

By examining Gen AI as an active collaborator rather than merely an automated tool, this study broadens the theory of collaboration and underscores how advanced technologies can complement human expertise in financial services. In contrast with earlier AI systems that focused on repetitive or rule-based tasks, Gen AI dynamically produces context-specific outputs, thus requiring greater human judgment and oversight. Although previous research has recognized employee skills, data, and systems as influential in driving innovation and performance, this study provides timely empirical evidence of their critical roles in the context of Gen AI applications. These results align with prior research. For example, Chatterjee et al. [54] found that a strong data-driven culture significantly boosts product and process innovation, thereby enhancing competitiveness. Similarly, Sachan et al. [8] emphasize that high-performing human–AI systems arise from a combination of human expertise, rigorous data validation, and consistent decision auditing. Likewise, Makarius et al. [82] warn that, without employee understanding and engagement, AI is unlikely to add value, underscoring the importance of “AI socialization” among staff. Hence, these findings extend existing frameworks in service management and AI collaboration by demonstrating how flexible, human-centric strategies must incorporate Gen AI’s dynamic content-generation capabilities, updating prior models that often assumed deterministic AI processes. Consequently, the results expand the knowledge-based view by emphasizing the importance of reliable data, trusted systems, managerial practices aligned with specialized employee skills, and the synergy between human judgment and AI capabilities. As such, human–AI partnerships can bolster both innovation and long-term viability, an increasingly important consideration for sustainable financial services.

Unlike studies that rely primarily on linear approaches, the fsQCA perspective highlights the nonlinear set-theoretic nature of these relationships, revealing multiple pathways that underpin effective human–AI collaboration. These fsQCA results align with complexity theory principles (specifically the recipe principle, equifinality, and causal asymmetry), demonstrating that different configurations of factors can lead to similar outcomes. These findings echo recent research emphasizing that individual performance levels significantly influence collaboration efficiency in AI-driven contexts [111,113,114]. Hence, these results indicate that not all four key factors in a given human–AI collaboration configuration need to be at high levels for the desired outcome to occur. Instead, complementary combinations of these factors can produce the outcome even if certain individual factors are not maximized. Moreover, Jarrahi [23] notes that effective AI–human symbiosis arises when AI’s analytical capabilities are paired with humans’ holistic intuitive judgment. In service settings, Mele et al. [15] find that human–robot collaboration improves customer care by establishing a shared activity system around common goals. This configurational perspective highlights the theoretical importance of flexible human-centric strategies for coordinating human–AI collaboration at the firm level, particularly in dynamic service-intensive contexts. Collectively, these insights illuminate how human–Gen AI collaboration can enhance both innovation capacity and managerial effectiveness, prompting further reflection on the evolving partnership between human expertise and AI solutions in pursuit of sustainable financial services.

4.2. Practical Implications

This study provides empirical evidence that human–AI collaboration enhances sustainable financial services by combining human expertise with AI capabilities, thereby offering a forward-looking approach. Managers in financial services firms should integrate AI systems with human expertise to optimize service outcomes. First, it is essential to cultivate employee competencies by bridging professional expertise with technical skills, particularly AI literacy. Building on the notion of AI socialization proposed by Makarius et al. [82], firms could implement practical initiatives such as hands-on workshops or “AI sandbox” projects, enabling employees to engage with Gen AI tools in realistic scenarios. Tailored training programs and adaptive organizational policies further support staff in acquiring advanced analytical and interpretive skills, enhancing service resilience. Additionally, proactively addressing digital and generational divides fosters inclusivity and strengthens trust in AI-driven processes, thereby promoting equitable and responsible innovation in financial services.

Second, ensuring data reliability and establishing trusted AI systems are equally critical for generating accurate outputs and achieving regulatory compliance. As Sachan et al. [8] emphasize, consistent auditing of past human judgments and thorough validation of data alignment with firm policies are essential prior to full-scale AI deployment. Robust data governance frameworks, including regular audits, stringent validation processes, and domain-specific quality assessments, strengthen accountability, mitigate reputational and operational risks, and reinforce sustainable practices. Furthermore, financial firms could explore advanced AI applications, such as sophisticated knowledge graphs and adaptive learning systems, that align human creativity more effectively with machine-generated insights. Concurrently, maintaining secure infrastructure and transparent, clearly documented AI algorithms builds employee and customer trust, facilitating productive human–AI interactions.

Lastly, effective management functions as an integrative mechanism, embedding AI tools into daily workflows while maintaining vigilant oversight. Managers should promote ethical usage standards, encourage cross-functional collaboration, and continuously build trust to fully leverage AI’s potential. Policymakers and regulators could also draw on these findings to establish principle-based guidelines that protect consumer interests while encouraging responsible, future-oriented AI competencies within sustainable financial services.

Notably, the fsQCA results underscore equifinality, i.e., different configurations of employee skills, data practices, trusted systems, and management approaches can achieve high innovation and managerial performance. Therefore, firms should assess and flexibly adopt the configuration best aligned with their existing capabilities and operational context. By emphasizing a strategic combination of employee training, data governance, trustworthy AI infrastructures, and managerial oversight, organizations can enhance their long-term stability. Overall, such integrated strategies directly support multiple SDGs, particularly SDG 4 (Quality Education) through workforce upskilling, SDG 8 (Decent Work and Economic Growth) and SDG 9 (Industry, Innovation and Infrastructure) via improved productivity, and SDG 16 (Peace, Justice and Strong Institutions) through enhanced transparency and accountable governance [10,123,124].

4.3. Limitations and Future Research

Although this study provides valuable insights, several limitations must be acknowledged. Firstly, the exclusive focus on China’s financial sector potentially constrains the generalizability of findings to regions with distinct cultural or regulatory frameworks. Future studies could validate these findings in other regulated sectors (e.g., healthcare, retail, public administration) and diverse geographical contexts, thereby enhancing their applicability. Secondly, reliance on self-reported survey data introduces risks of social desirability and recall biases, as participants’ subjective perceptions and varying technological understandings may influence responses. Integrating objective data sources—such as audited financial reports, transaction logs, or longitudinal analyses—could deepen insights into how critical factors and AI technologies co-evolve to shape innovation trajectories. Additionally, given the cross-sectional design and rapid evolution of Gen AI, future longitudinal or experimental studies are essential to establish causality and capture dynamic changes in human–AI collaborative outcomes.

Furthermore, as Gen AI advances, its role in facilitating sophisticated decision making across diverse business functions and platforms will likely grow [125]. Subsequent research might investigate how organizations integrate multiple AI tools within stringent compliance frameworks and manage diverse stakeholder expectations, enriching the understanding of complex sustainable service delivery. Interdisciplinary insights from management information systems, human resource management [78], and leadership [30] could further illuminate the intricacies of human–AI interactions in progressively AI-intensive service contexts. As discussions around AI autonomy and accountability continue to evolve, effectively balancing innovation with ethical considerations and regulatory oversight will become increasingly important. Lastly, given the novelty of applying collaboration theory to human–AI interactions, future research is encouraged to further examine complementary capabilities, diverse collaboration forms, and dynamic interaction patterns, thereby contributing to a deeper theoretical understanding in this emergent research domain.

5. Conclusions

In conclusion, this study demonstrates that integrating employee skills, data reliability, trusted systems, and effective management collectively enhances innovation capability and managerial performance in financial services. By identifying multiple configurations and underscoring the mediating role of innovation capability, the findings advocate flexible, knowledge-centric, and human-centered approaches to AI implementation. These insights enrich scholarly and practical discussions on human–Gen AI collaboration, guiding organizations toward resilient, human-centric, and sustainable AI practices in service-intensive domains.

Author Contributions

Conceptualization, C.X. and S.-E.C.; methodology, C.X. and S.-E.C.; formal analysis, C.X.; data curation, C.X.; writing—original draft, C.X.; writing—review and editing, C.X. and S.-E.C.; supervision, S.-E.C. All authors have read and agreed to the published version of the manuscript.

Funding

The second author acknowledges that this study is partially supported by Gyeongsang National University Fund for professors on sabbatical leave, 2024.

Institutional Review Board Statement

Given the content and methodologies of this study, and in accordance with the Bioethics and Safety Act and Enforcement Decree of the Bioethics Act in South Korea, this type of research does not typically require ethical approval.

Informed Consent Statement

Informed consent was obtained from all participants involved in this study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Contrarian Case Analysis

Rows are quintiles (1 = lowest, 5 = highest) of each condition; columns are quintiles of innovation capability (IC) and managerial performance (MP).

Employee skills: Phi² = 0.350 with IC and 0.343 with MP (both p < 0.001)

| Employee Skills | IC 1 | IC 2 | IC 3 | IC 4 | IC 5 | Total | MP 1 | MP 2 | MP 3 | MP 4 | MP 5 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 10 | 16 | 6 | 0 | 1 | 33 | 17 | 8 | 2 | 3 | 3 | 33 |
| 2 | 20 | 15 | 11 | 7 | 4 | 57 | 18 | 15 | 14 | 6 | 4 | 57 |
| 3 | 5 | 6 | 12 | 9 | 2 | 34 | 2 | 5 | 11 | 11 | 5 | 34 |
| 4 | 4 | 6 | 4 | 15 | 5 | 34 | 6 | 7 | 5 | 6 | 10 | 34 |
| 5 | 4 | 3 | 10 | 12 | 15 | 44 | 1 | 6 | 7 | 9 | 21 | 44 |
| Total | 43 | 46 | 43 | 43 | 27 | 202 | 44 | 41 | 39 | 35 | 43 | 202 |

Data reliability: Phi² = 0.404 with IC and 0.440 with MP (both p < 0.001)

| Data Reliability | IC 1 | IC 2 | IC 3 | IC 4 | IC 5 | Total | MP 1 | MP 2 | MP 3 | MP 4 | MP 5 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 18 | 15 | 5 | 0 | 5 | 43 | 20 | 10 | 5 | 4 | 4 | 43 |
| 2 | 9 | 15 | 10 | 6 | 0 | 40 | 10 | 14 | 9 | 5 | 2 | 40 |
| 3 | 4 | 8 | 13 | 8 | 2 | 35 | 4 | 7 | 11 | 8 | 5 | 35 |
| 4 | 7 | 6 | 10 | 22 | 6 | 51 | 7 | 7 | 13 | 15 | 9 | 51 |
| 5 | 5 | 2 | 5 | 7 | 14 | 33 | 3 | 3 | 1 | 3 | 23 | 33 |
| Total | 43 | 46 | 43 | 43 | 27 | 202 | 44 | 41 | 39 | 35 | 43 | 202 |

Trusted systems: Phi² = 0.400 with IC and 0.384 with MP (both p < 0.001)

| Trusted Systems | IC 1 | IC 2 | IC 3 | IC 4 | IC 5 | Total | MP 1 | MP 2 | MP 3 | MP 4 | MP 5 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 18 | 13 | 4 | 1 | 2 | 38 | 21 | 9 | 4 | 2 | 2 | 38 |
| 2 | 10 | 19 | 9 | 3 | 6 | 47 | 10 | 18 | 9 | 5 | 5 | 47 |
| 3 | 8 | 5 | 5 | 11 | 3 | 32 | 5 | 4 | 9 | 9 | 5 | 32 |
| 4 | 1 | 4 | 17 | 12 | 2 | 36 | 5 | 4 | 9 | 11 | 7 | 36 |
| 5 | 6 | 5 | 8 | 16 | 14 | 49 | 3 | 6 | 8 | 8 | 24 | 49 |
| Total | 43 | 46 | 43 | 43 | 27 | 202 | 44 | 41 | 39 | 35 | 43 | 202 |

Effective management: Phi² = 0.401 with IC and 0.456 with MP (both p < 0.001)

| Effective Management | IC 1 | IC 2 | IC 3 | IC 4 | IC 5 | Total | MP 1 | MP 2 | MP 3 | MP 4 | MP 5 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 19 | 9 | 5 | 0 | 4 | 37 | 21 | 4 | 6 | 3 | 3 | 37 |
| 2 | 8 | 19 | 9 | 3 | 1 | 40 | 9 | 17 | 9 | 4 | 1 | 40 |
| 3 | 2 | 7 | 8 | 9 | 1 | 27 | 5 | 5 | 10 | 4 | 3 | 27 |
| 4 | 8 | 9 | 14 | 25 | 9 | 65 | 7 | 12 | 11 | 19 | 16 | 65 |
| 5 | 6 | 2 | 7 | 6 | 12 | 33 | 2 | 3 | 3 | 5 | 20 | 33 |
| Total | 43 | 46 | 43 | 43 | 27 | 202 | 44 | 41 | 39 | 35 | 43 | 202 |

Innovation capability: Phi² = 0.483 with MP (p < 0.001)

| Innovation Capability | MP 1 | MP 2 | MP 3 | MP 4 | MP 5 | Total |
|---|---|---|---|---|---|---|
| 1 | 23 | 10 | 2 | 1 | 7 | 43 |
| 2 | 13 | 16 | 10 | 6 | 1 | 46 |
| 3 | 4 | 8 | 12 | 12 | 7 | 43 |
| 4 | 2 | 4 | 12 | 14 | 11 | 43 |
| 5 | 2 | 3 | 3 | 2 | 17 | 27 |
| Total | 44 | 41 | 39 | 35 | 43 | 202 |

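| Note: Rows give the level (1 to 5) of each condition and columns the level (1 to 5) of the outcome named at the top of the panel; the final panel cross-tabulates innovation capability (rows) against managerial performance (columns). The top-left and bottom-right cells illustrate the main effects, whereas the bottom-left and top-right cells depict contrarian cases, which the main effects do not account for. Contrarian cases run counter to the main effect, whose size is indexed by φ² (range 0.05 to 0.50 [116]). |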
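The φ² values above can be read as the chi-square statistic of each cross-tabulation divided by the number of cases (φ² = χ²/N). As a minimal sketch under that reading, the Python below recomputes φ² for the Employee Skills × Innovation Capability panel and counts its two off-diagonal corners. Treating levels 1 to 2 as "low" and levels 4 to 5 as "high" is our assumption for illustration, and `phi_squared`, `contrarian_counts`, and `ES_X_IC` are hypothetical names, not part of the study's software.

```python
from typing import List, Tuple

Table = List[List[int]]

# Employee Skills (rows, levels 1-5) x Innovation Capability (columns, levels 1-5),
# copied from the first panel of the Appendix A table.
ES_X_IC: Table = [
    [10, 16, 6, 0, 1],
    [20, 15, 11, 7, 4],
    [5, 6, 12, 9, 2],
    [4, 6, 4, 15, 5],
    [4, 3, 10, 12, 15],
]


def phi_squared(table: Table) -> float:
    """phi^2 = chi^2 / N for an r x c contingency table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            chi2 += (observed - expected) ** 2 / expected
    return chi2 / n


def contrarian_counts(table: Table, low: int = 2, high: int = 2) -> Tuple[int, int]:
    """Return (top_right, bottom_left) case counts.

    top_right:   low condition (first `low` rows), high outcome (last `high` columns)
    bottom_left: high condition (last `high` rows), low outcome (first `low` columns)
    The default cut-offs (2 of 5 levels on each side) are an illustrative assumption.
    """
    n_rows, n_cols = len(table), len(table[0])
    top_right = sum(table[i][j] for i in range(low) for j in range(n_cols - high, n_cols))
    bottom_left = sum(table[i][j] for i in range(n_rows - high, n_rows) for j in range(low))
    return top_right, bottom_left


if __name__ == "__main__":
    print(f"phi^2 = {phi_squared(ES_X_IC):.3f}")
    print("contrarian cases (top-right, bottom-left):", contrarian_counts(ES_X_IC))
```

Run on the first panel, this gives φ² of about 0.350, in line with the reported value, and 12 top-right plus 17 bottom-left contrarian cases under the assumed low/high cut-offs.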

Appendix B. Necessary Condition Analysis

| Conditions | High Innovation Capability | | Low Innovation Capability | | High Managerial Performance | | Low Managerial Performance | |

| | Consistency | Coverage | Consistency | Coverage | Consistency | Coverage | Consistency | Coverage |

| ES | 0.784 | 0.770 | 0.555 | 0.554 | 0.789 | 0.767 | 0.573 | 0.577 |

| ~ES | 0.546 | 0.548 | 0.770 | 0.783 | 0.565 | 0.560 | 0.769 | 0.791 |

| DR | 0.754 | 0.801 | 0.551 | 0.595 | 0.766 | 0.805 | 0.560 | 0.611 |

| ~DR | 0.619 | 0.576 | 0.816 | 0.771 | 0.630 | 0.580 | 0.821 | 0.784 |

| TS | 0.770 | 0.779 | 0.565 | 0.580 | 0.787 | 0.787 | 0.567 | 0.588 |

| ~TS | 0.585 | 0.570 | 0.785 | 0.776 | 0.588 | 0.567 | 0.795 | 0.795 |

| EM | 0.739 | 0.811 | 0.534 | 0.594 | 0.755 | 0.819 | 0.529 | 0.595 |

| ~EM | 0.629 | 0.571 | 0.830 | 0.764 | 0.626 | 0.562 | 0.839 | 0.780 |

| IC | - | - | - | - | 0.797 | 0.789 | 0.561 | 0.576 |

| ~IC | - | - | - | - | 0.571 | 0.556 | 0.794 | 0.803 |

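| NOTE: ES = employee skills; DR = data reliability; TS = trusted systems; EM = effective management; IC = innovation capability; "~" indicates a low level of the condition; "-" indicates not applicable, because innovation capability is not tested as a condition for itself. |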
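Necessity consistency and coverage in fsQCA are conventionally computed as follows: for a condition X and an outcome Y, consistency is Σmin(xᵢ, yᵢ)/Σyᵢ and coverage is Σmin(xᵢ, yᵢ)/Σxᵢ, with the negated condition ~X scored as 1 − xᵢ. The sketch below assumes memberships have already been calibrated to the [0, 1] range; the function names and the five membership scores are hypothetical and are not the study's data.

```python
from typing import List, Sequence, Tuple


def negate(x: Sequence[float]) -> List[float]:
    """Fuzzy negation: membership in ~X is 1 - x for every case."""
    return [1.0 - v for v in x]


def necessity(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """(consistency, coverage) of X as a necessary condition for outcome Y.

    consistency = sum(min(x_i, y_i)) / sum(y_i)
    coverage    = sum(min(x_i, y_i)) / sum(x_i)
    """
    overlap = sum(min(a, b) for a, b in zip(x, y))
    return overlap / sum(y), overlap / sum(x)


if __name__ == "__main__":
    # Hypothetical calibrated memberships for five cases (not study data).
    es = [0.9, 0.7, 0.4, 0.2, 0.8]  # condition, e.g. employee skills
    ic = [0.8, 0.6, 0.5, 0.1, 0.9]  # outcome, e.g. innovation capability
    for label, cond in (("ES", es), ("~ES", negate(es))):
        cons, cov = necessity(cond, ic)
        print(f"{label:>3} -> IC: consistency={cons:.3f}, coverage={cov:.3f}")
```

Necessity divides the overlap by ΣY, whereas the sufficiency measures used for configurations divide it by ΣX; swapping the two denominators is the main difference between the analyses.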

Appendix C. Robustness Analysis (0.90 Quantile, 0.50 Median, and 0.10 Quantile)

| | Configurations for Innovation Capability | Coverage | Consistency |

| Solution 1 | Data Reliability * Trusted Systems | 0.626 | 0.869 |

| Solution 2 | Employee Skills * ~Data Reliability * ~Effective Management | 0.361 | 0.800 |

| Solution 3 | ~Employee Skills * Data Reliability * ~Effective Management | 0.307 | 0.803 |

| Solution 4 | Employee Skills * Data Reliability * Effective Management | 0.517 | 0.898 |

| Solution coverage: 0.806 |

| Solution consistency: 0.789 |

| | Configurations for Managerial Performance | Coverage | Consistency |

| Solution 1 | ~Employee Skills * Data Reliability * ~Trusted Systems * ~Effective Management | 0.265 | 0.811 |

| Solution 2 | ~Employee Skills * Data Reliability * ~Effective Management * Innovation Capability | 0.265 | 0.852 |

| Solution 3 | ~Employee Skills * ~Data Reliability * Trusted Systems * ~Effective Management * ~Innovation Capability | 0.270 | 0.825 |

| Solution 4 | Employee Skills * Data Reliability * Trusted Systems * Effective Management * Innovation Capability | 0.478 | 0.968 |

| Solution coverage: 0.664 |

| Solution consistency: 0.843 |

| NOTE: The symbol "*" denotes the logical operator "and", whereas "~" indicates a low level of the condition. |
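The three quantiles in this appendix's heading are read here as calibration anchors, which is our assumption: the 0.90 quantile for full membership, the median as the crossover point, and the 0.10 quantile for full non-membership. The sketch below applies the direct (log-odds) calibration method with those anchors, scores a configuration such as Employee Skills * ~Data Reliability by taking the minimum across conditions (with 1 − x for negated ones), and reports sufficiency consistency Σmin(X, Y)/ΣX and coverage Σmin(X, Y)/ΣY. The function names, the `method="inclusive"` quantile choice, and all data are hypothetical; other quantile definitions give slightly different anchors.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple


def quantile_anchors(values: Sequence[float]) -> Tuple[float, float, float]:
    """Return (0.90 quantile, median, 0.10 quantile) of the raw scores.

    Using these three points as calibration anchors is an assumption here.
    """
    deciles = statistics.quantiles(values, n=10, method="inclusive")
    return deciles[8], statistics.median(values), deciles[0]


def calibrate(values: Sequence[float], full: float, crossover: float,
              non: float) -> List[float]:
    """Direct calibration: full -> log odds +3, crossover -> 0, non -> -3,
    i.e. memberships of about 0.95, 0.50 and 0.05."""
    memberships = []
    for v in values:
        d = v - crossover
        scale = 3.0 / (full - crossover) if d >= 0 else 3.0 / (crossover - non)
        memberships.append(1.0 / (1.0 + math.exp(-d * scale)))  # logistic of log odds
    return memberships


def configuration(memberships: Dict[str, List[float]],
                  terms: Sequence[str]) -> List[float]:
    """Membership in a conjunction such as ["ES", "~DR"].

    Fuzzy AND is the minimum across terms; a leading "~" negates as 1 - x.
    """
    n_cases = len(next(iter(memberships.values())))
    scores = []
    for i in range(n_cases):
        parts = [1.0 - memberships[t[1:]][i] if t.startswith("~")
                 else memberships[t][i] for t in terms]
        scores.append(min(parts))
    return scores


def sufficiency(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """(consistency, coverage) of X as a sufficient condition for Y:
    consistency = sum(min(x, y)) / sum(x), coverage = sum(min(x, y)) / sum(y)."""
    overlap = sum(min(a, b) for a, b in zip(x, y))
    return overlap / sum(x), overlap / sum(y)


if __name__ == "__main__":
    raw = list(range(1, 12))                  # hypothetical raw scores 1..11
    full, cross, non = quantile_anchors(raw)  # 10.0, 6, 2.0
    print([round(m, 3) for m in calibrate(raw, full, cross, non)])

    # Hypothetical calibrated memberships for three cases (not study data).
    mem = {"ES": [0.9, 0.2, 0.7], "DR": [0.3, 0.8, 0.4]}
    x = configuration(mem, ["ES", "~DR"])     # Employee Skills * ~Data Reliability
    y = [0.8, 0.1, 0.5]                       # outcome memberships
    print("consistency=%.3f coverage=%.3f" % sufficiency(x, y))
```

With the hypothetical raw scores 1 to 11, the anchors are 10, 6, and 2, so a raw score of 6 maps to a membership of exactly 0.5, while 10 and 2 map to about 0.95 and 0.05.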

Appendix D. Predictive Validity Analysis

| Configurations of Sub-Sample 1 | XY Graph of Sub-Sample 2 |

| Configurations for Innovation Capability | [XY graph not reproduced] |

| Employee Skills * Data Reliability * Effective Management; solution coverage: 0.578; solution consistency: 0.871 | |

| Configurations for Managerial Performance | [XY graph not reproduced] |

| Employee Skills * Data Reliability * Trusted Systems * Effective Management * Innovation Capability; solution coverage: 0.530; solution consistency: 0.945 | |

| NOTE: The symbol “*” denotes the logical operator “and”. | |

To assess predictive validity, the full sample was randomly divided into two sub-samples, Sub-Sample 1 and Sub-Sample 2. The Sub-Sample 1 data were analyzed with the same procedures as the main analysis to derive a solution, and that solution was then tested against the Sub-Sample 2 data by plotting it in an XY graph. The solution derived from Sub-Sample 1 achieved satisfactory consistency and coverage in Sub-Sample 2, indicating high predictive power [116].
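The split-sample check described above can be sketched as follows, assuming cases have already been calibrated into fuzzy memberships. The hypothetical helper `holdout_fit` shuffles case indices with a fixed seed, splits them in half, and reports the sufficiency consistency and coverage of a given configuration on each half. In the study the configuration itself was derived from Sub-Sample 1 by the full fsQCA procedure, which this sketch does not reproduce; the seed and all data are illustrative assumptions.

```python
import random
from typing import Dict, List, Sequence, Tuple

Cases = Dict[str, List[float]]  # condition/outcome name -> calibrated memberships


def split_indices(n_cases: int, seed: int = 2024) -> Tuple[List[int], List[int]]:
    """Randomly split case indices into two halves (Sub-Sample 1, Sub-Sample 2)."""
    idx = list(range(n_cases))
    random.Random(seed).shuffle(idx)  # fixed seed so the split is reproducible
    half = n_cases // 2
    return idx[:half], idx[half:]


def config_scores(cases: Cases, terms: Sequence[str], idx: Sequence[int]) -> List[float]:
    """Configuration membership (minimum across terms, '~' = 1 - x) for selected cases."""
    return [min(1.0 - cases[t[1:]][i] if t.startswith("~") else cases[t][i]
                for t in terms) for i in idx]


def fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Sufficiency consistency and coverage of X for Y."""
    overlap = sum(min(a, b) for a, b in zip(x, y))
    return overlap / sum(x), overlap / sum(y)


def holdout_fit(cases: Cases, terms: Sequence[str], outcome: str,
                seed: int = 2024) -> Dict[str, Tuple[float, float]]:
    """Evaluate one configuration separately on both halves of a random split."""
    sub1, sub2 = split_indices(len(cases[outcome]), seed)
    results = {}
    for name, part in (("Sub-Sample 1", sub1), ("Sub-Sample 2", sub2)):
        x = config_scores(cases, terms, part)
        y = [cases[outcome][i] for i in part]
        results[name] = fit(x, y)
    return results


if __name__ == "__main__":
    # Hypothetical calibrated memberships for eight cases (not study data).
    cases = {
        "ES": [0.9, 0.8, 0.3, 0.6, 0.2, 0.7, 0.95, 0.4],
        "DR": [0.8, 0.9, 0.4, 0.7, 0.1, 0.6, 0.85, 0.3],
        "EM": [0.7, 0.95, 0.2, 0.8, 0.3, 0.9, 0.6, 0.5],
        "IC": [0.85, 0.9, 0.3, 0.75, 0.2, 0.8, 0.9, 0.45],
    }
    for name, (cons, cov) in holdout_fit(cases, ["ES", "DR", "EM"], "IC").items():
        print(f"{name}: consistency={cons:.3f}, coverage={cov:.3f}")
```

Fixing the seed makes the random split reproducible, so the same holdout comparison can be rerun when calibration choices change.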

References

- Dahal, S.B. Utilizing generative AI for real-time financial market analysis: Opportunities and challenges. Adv. Intell. Inf. Syst. 2023, 8, 1–11.

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. “So what if ChatGPT wrote it?” multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.

- Kalia, S. Potential impact of generative artificial intelligence (AI) on the financial industry. Int. J. Cybern. Inform. 2023, 12, 37–51.

- Qureshi, N.I.; Choudhuri, S.S.; Nagamani, Y.; Varma, R.A.; Shah, R. Ethical considerations of AI in financial services: Privacy, bias, and algorithmic transparency. In Proceedings of the 2024 International Conference on Knowledge Engineering and Communication Systems (ICKECS), Chikkaballapur, India, 18–19 April 2024; pp. 1–6.

- Cao, S.; Jiang, W.; Wang, J.; Yang, B. From man vs. machine to man + machine: The art and AI of stock analyses. J. Financ. Econ. 2024, 160, 103910.

- Che, C.; Huang, Z.; Li, C.; Zheng, H.; Tian, X. Integrating generative AI into financial market prediction for improved decision making. arXiv 2024, arXiv:2404.03523.

- Chen, B.; Wu, Z.; Zhao, R. From fiction to fact: The growing role of generative AI in business and finance. J. Chin. Econ. Bus. Stud. 2023, 21, 471–496.

- Sachan, S.; Almaghrabi, F.; Yang, J.B.; Xu, D.L. Human-AI collaboration to mitigate decision noise in financial underwriting: A study on fintech innovation in a lending firm. Int. Rev. Financ. Anal. 2024, 93, 103149.

- Bankins, S.; Ocampo, A.C.; Marrone, M.; Restubog, S.L.D.; Woo, S.E. A multilevel review of artificial intelligence in organizations: Implications for organizational behavior research and practice. J. Organ. Behav. 2024, 45, 159–182.

- Vinuesa, R.; Azizpour, H.; Leite, I.; Balaam, M.; Dignum, V.; Domisch, S.; Felländer, A.; Langhans, S.D.; Tegmark, M.; Nerini, F.F. The role of artificial intelligence in achieving the Sustainable Development Goals. Nat. Commun. 2020, 11, 233.

- Hicks, M.T.; Humphries, J.; Slater, J. ChatGPT is bullshit. Ethics Inf. Technol. 2024, 26, 38.

- Gill, S.S.; Kaur, R. ChatGPT: Vision and challenges. Internet Things Cyber-Phys. Syst. 2023, 3, 262–271.

- McKinsey; McKinsey Digital. The State of AI in Early 2024: Gen AI Adoption Spikes and Starts to Generate Value. 2024. Available online: https://www.mckinsey.com/~/media/mckinsey/business%20functions/quantumblack/our%20insights/the%20state%20of%20ai/2024/the-state-of-ai-in-early-2024-final.pdf?shouldIndex=false (accessed on 14 December 2024).

- Haefner, N.; Wincent, J.; Parida, V.; Gassmann, O. Artificial intelligence and innovation management: A review, framework, and research agenda. Technol. Forecast. Soc. Change 2021, 162, 120392.

- Mele, C.; Russo-Spena, T.; Ranieri, A.; Di Bernardo, I. A system and learning perspective on human–robot collaboration. J. Serv. Manag. 2024; ahead-of-print.

- Wilson, H.J.; Daugherty, P.R. Collaborative intelligence: Humans and AI are joining forces. Harv. Bus. Rev. 2018, 96, 114–123.

- Bedwell, W.L.; Wildman, J.L.; DiazGranados, D.; Salazar, M.; Kramer, W.S.; Salas, E. Collaboration at work: An integrative multilevel conceptualization. Hum. Resour. Manag. Rev. 2012, 22, 128–145.

- Vangen, S. Developing practice-oriented theory on collaboration: A paradox lens. Public Adm. Rev. 2017, 77, 263–272.

- Garibay, O.O.; Winslow, B.; Andolina, S.; Antona, M.; Bodenschatz, A.; Coursaris, C.; Falco, G.; Fiore, S.M.; Garibay, I.; Grieman, K.; et al. Six human-centered artificial intelligence grand challenges. Int. J. Hum. Comput. Interact. 2023, 39, 391–437.

- Ren, C.; Lee, S.J.; Hu, C. Assessing the efficacy of ChatGPT in addressing Chinese financial conundrums: An in-depth comparative analysis of human and AI-generated responses. Comput. Hum. Behav. Artif. Intell. 2023, 1, 100007.

- Zheng, N.N.; Liu, Z.Y.; Ren, P.J.; Ma, Y.Q.; Chen, S.T.; Yu, S.Y.; Xue, J.; Chen, B.; Wang, F.Y. Hybrid-augmented intelligence: Collaboration and cognition. Front. Inf. Technol. Electron. Eng. 2017, 18, 153–179.

- Bouschery, S.G.; Blazevic, V.; Piller, F.T. Augmenting human innovation teams with artificial intelligence: Exploring transformer-based language models. J. Prod. Innov. Manag. 2023, 40, 139–153.

- Jarrahi, M.H. Artificial intelligence and the future of work: Human-AI symbiosis in organizational decision making. Bus. Horiz. 2018, 61, 577–586.

- Noble, S.M.; Mende, M.; Grewal, D.; Parasuraman, A. The fifth industrial revolution: How harmonious human–machine collaboration is triggering a retail and service [R]evolution. J. Retail. 2022, 98, 199–208.

- Malone, T.W. How human-computer “superminds” are redefining the future of work. MIT Sloan Manag. Rev. 2018, 59, 34–41.

- Trist, E.L. The Evolution of Socio-Technical Systems: A Conceptual Framework and an Action Research Program; Occasional Paper No. 2; Ontario Quality of Working Life Centre: Toronto, ON, Canada, 1981.

- Appelbaum, S.H. Socio-technical systems theory: An intervention strategy for organizational development. Manag. Decis. 1997, 35, 452–463.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Vann Yaroson, E.; Abadie, A.; Roux, M. Human-artificial intelligence collaboration in supply chain outcomes: The mediating role of responsible artificial intelligence. Ann. Oper. Res. 2025, 1–35.

- Van Riel, A.C.R.; Tabatabaei, F.; Yang, X.; Maslowska, E.; Palanichamy, V.; Clark, D.; Luongo, M. A new competitive edge: Crafting a service climate that facilitates optimal human–AI collaboration. J. Serv. Manag. 2024; ahead-of-print.

- DeLone, W.H.; McLean, E.R. Information systems success: The quest for the dependent variable. Inf. Syst. Res. 1992, 3, 60–95.

- DeLone, W.H.; McLean, E.R. The DeLone and McLean model of information systems success: A ten-year update. J. Manag. Inf. Syst. 2003, 19, 9–30.

- Siau, K.; Wang, W. Building trust in artificial intelligence, machine learning, and robotics. Cut. Bus. Technol. J. 2018, 31, 47–53.

- Vössing, M.; Kühl, N.; Lind, M.; Satzger, G. Designing transparency for effective human–AI collaboration. Inf. Syst. Front. 2022, 24, 877–895.

- Grant, R.M. Toward a knowledge-based theory of the firm. Strateg. Manag. J. 1996, 17, 109–122.

- Grant, R.M. The knowledge-based view of the firm: Implications for management practice. Long Range Plan. 1997, 30, 450–454.

- Mikalef, P.; Gupta, M. Artificial intelligence capability: Conceptualization, measurement calibration, and empirical study on its impact on organizational creativity and firm performance. Inf. Manag. 2021, 58, 103434.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Holmström, J.; Carroll, N. How organizations can innovate with generative AI. Bus. Horiz. 2024; in press.

- Christiano, P.F.; Leike, J.; Brown, T.; Martic, M.; Legg, S.; Amodei, D. Deep reinforcement learning from human preferences. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Omar, M. The Growing Need for Human Feedback with Generative AI and LLMs. Available online: https://www.forbes.com/sites/forbestechcouncil/2023/05/25/the-growing-need-for-human-feedback-with-generative-ai-and-llms/ (accessed on 25 May 2023).

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744.

- Wang, Y. Generative AI in operational risk management: Harnessing the future of finance. SSRN 2023.

- Ray, P.P. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet Things Cyber-Phys. Syst. 2023, 3, 121–154.

- Wu, S.; Irsoy, O.; Lu, S.; Dabravolski, V.; Dredze, M.; Gehrmann, S.; Kambadur, P.; Rosenberg, D.; Mann, G. BloombergGPT: A large language model for finance. arXiv 2023, arXiv:2303.17564.

- Brecque, C. The Role of Knowledge Graphs in Overcoming LLM Limitations. Available online: https://www.forbes.com/sites/forbesbusinesscouncil/2023/12/07/the-role-of-knowledge-graphs-in-overcoming-llm-limitations/ (accessed on 7 December 2023).

- Parasuraman, A.; Zeithaml, V.A.; Berry, L.L. SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality. J. Retail. 1988, 64, 12–40.