Urban Traffic Flow Prediction Based on Bayesian Deep Learning Considering Optimal Aggregation Time Interval

Abstract

1. Introduction

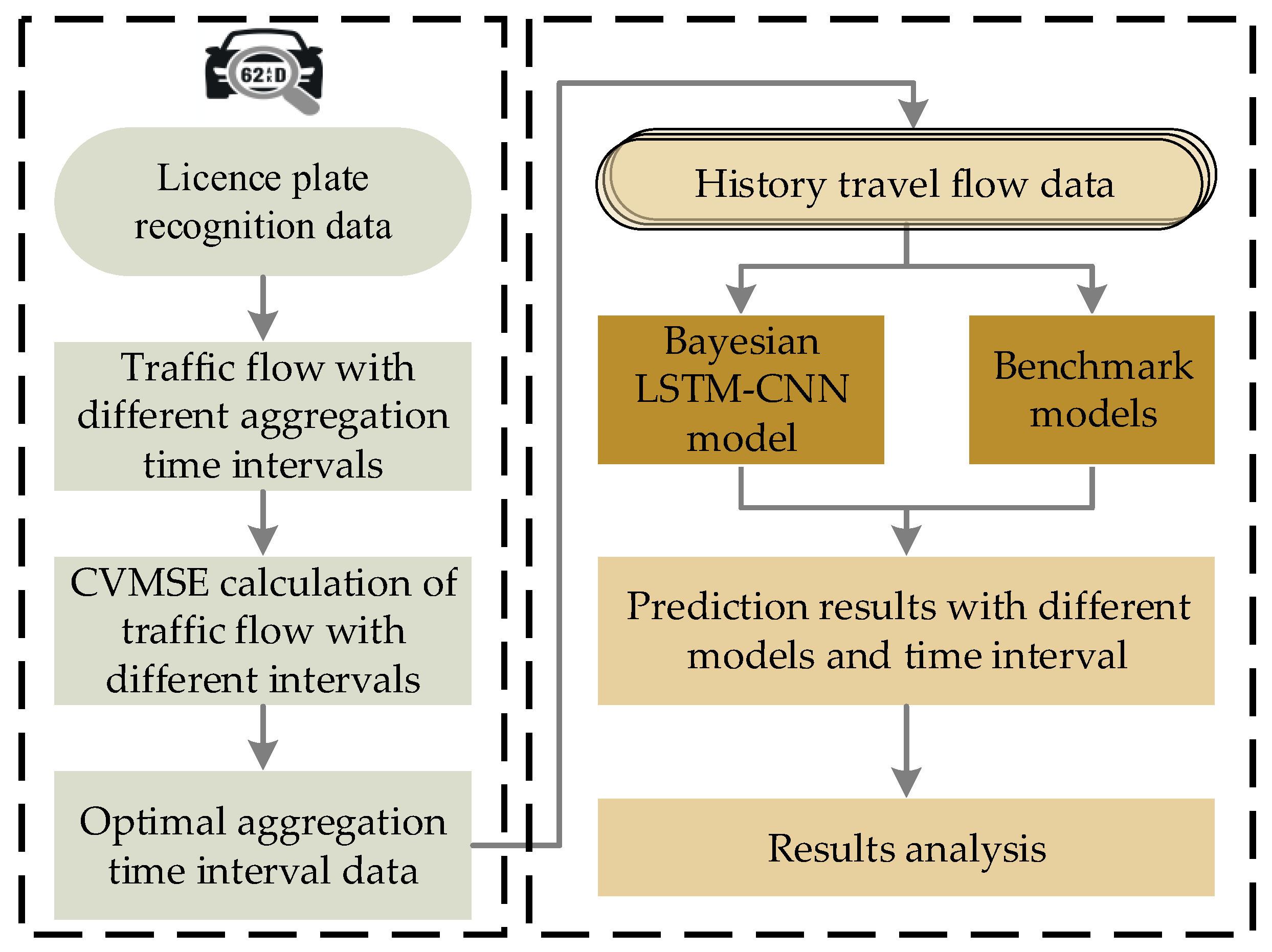

2. Materials and Methods

2.1. Problem Definition

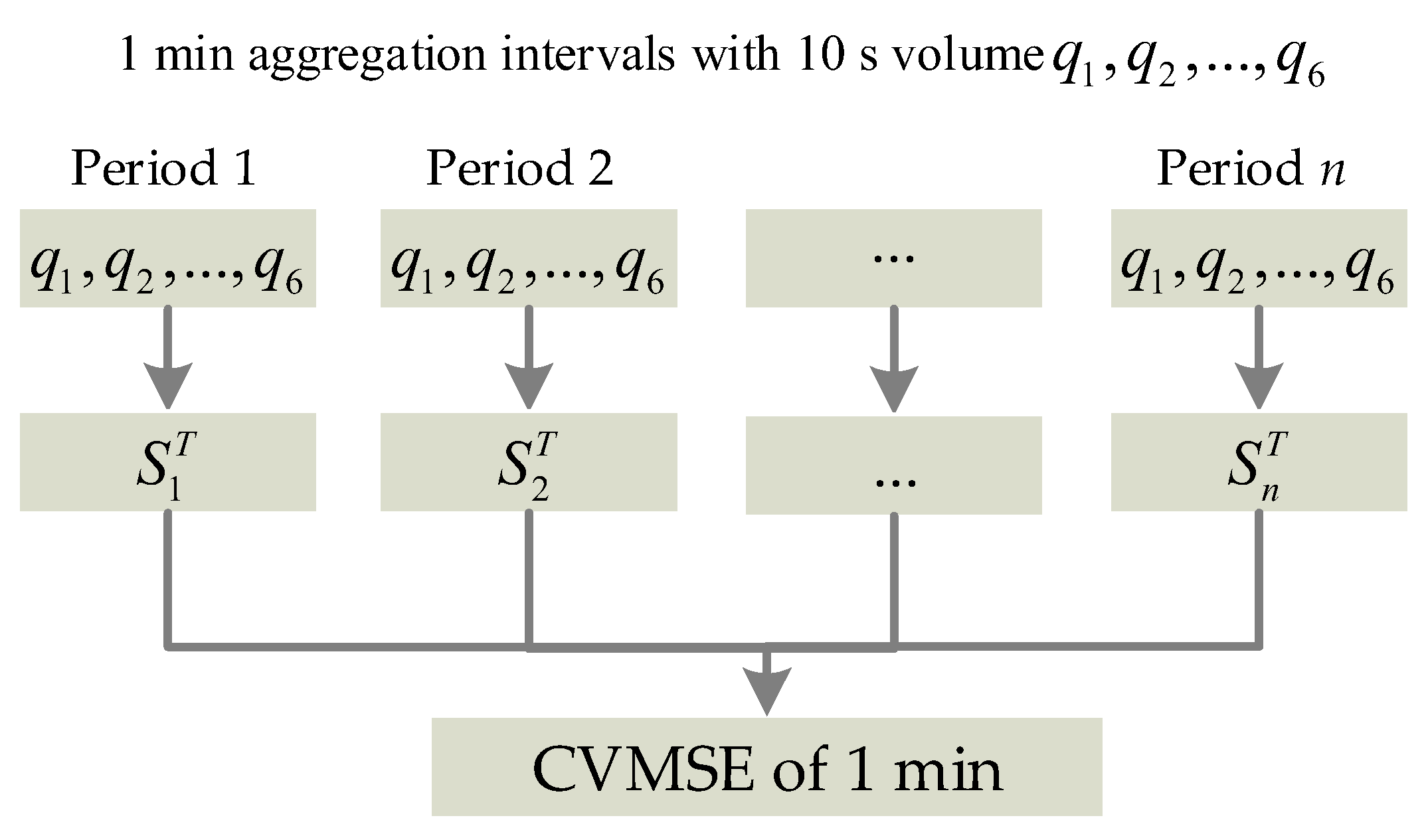

2.2. Optimal Aggregation Time Interval Based on Cross-Validation Mean Square Error

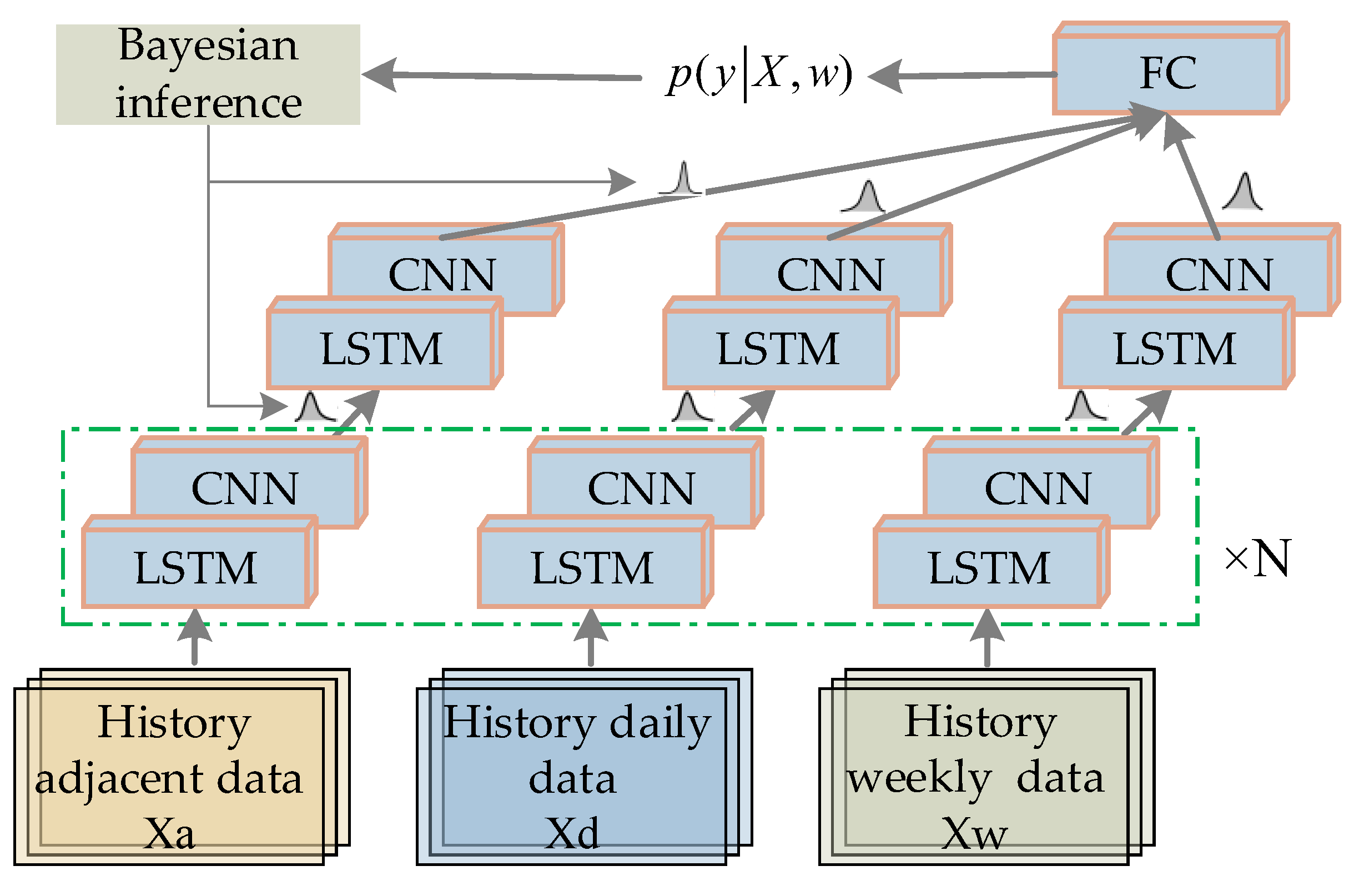

2.3. Bayesian LSTM-CNN

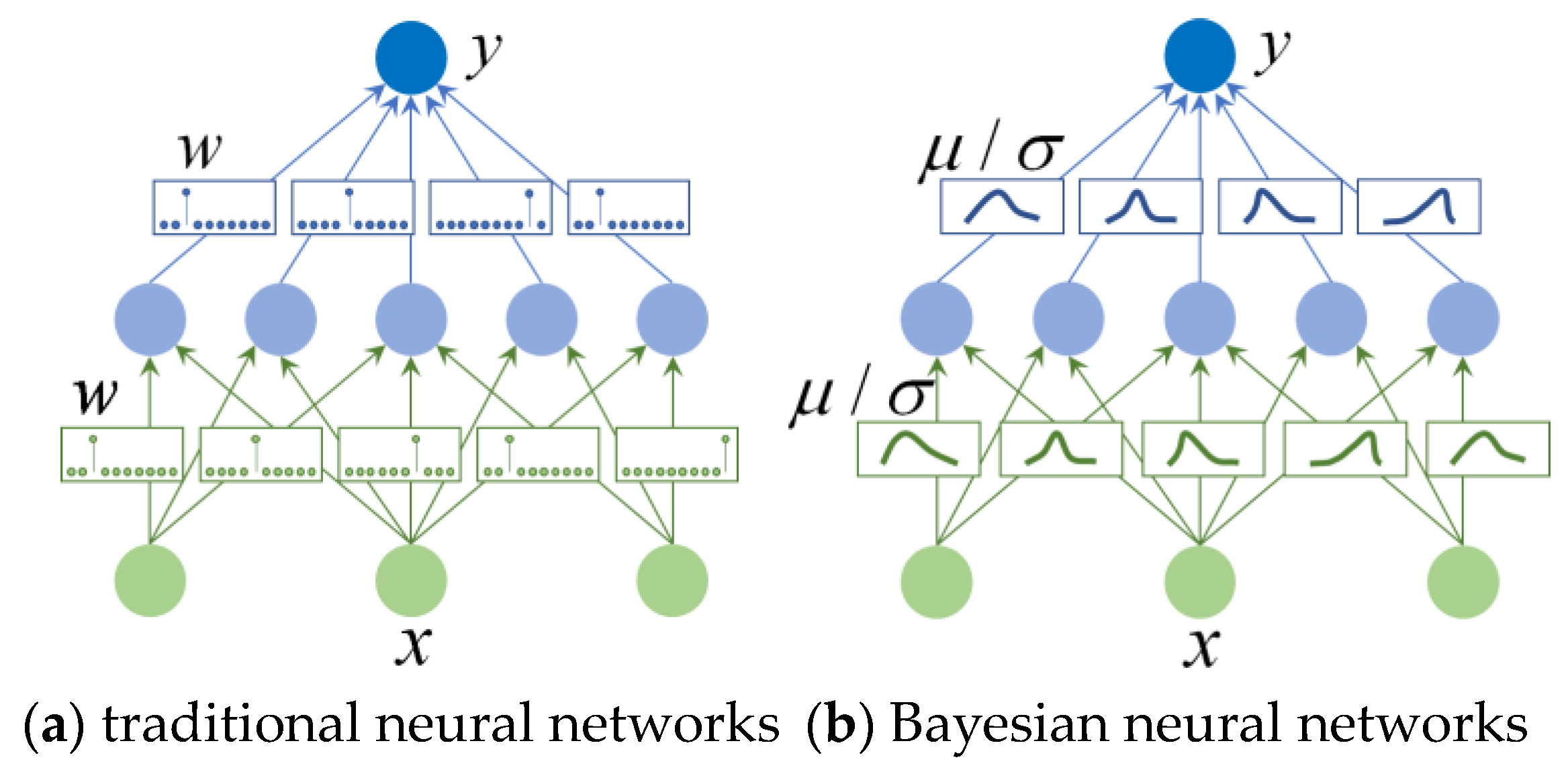

Bayesian Extension

3. Results

3.1. Data Collection

3.2. Optimal Aggregation Time Interval

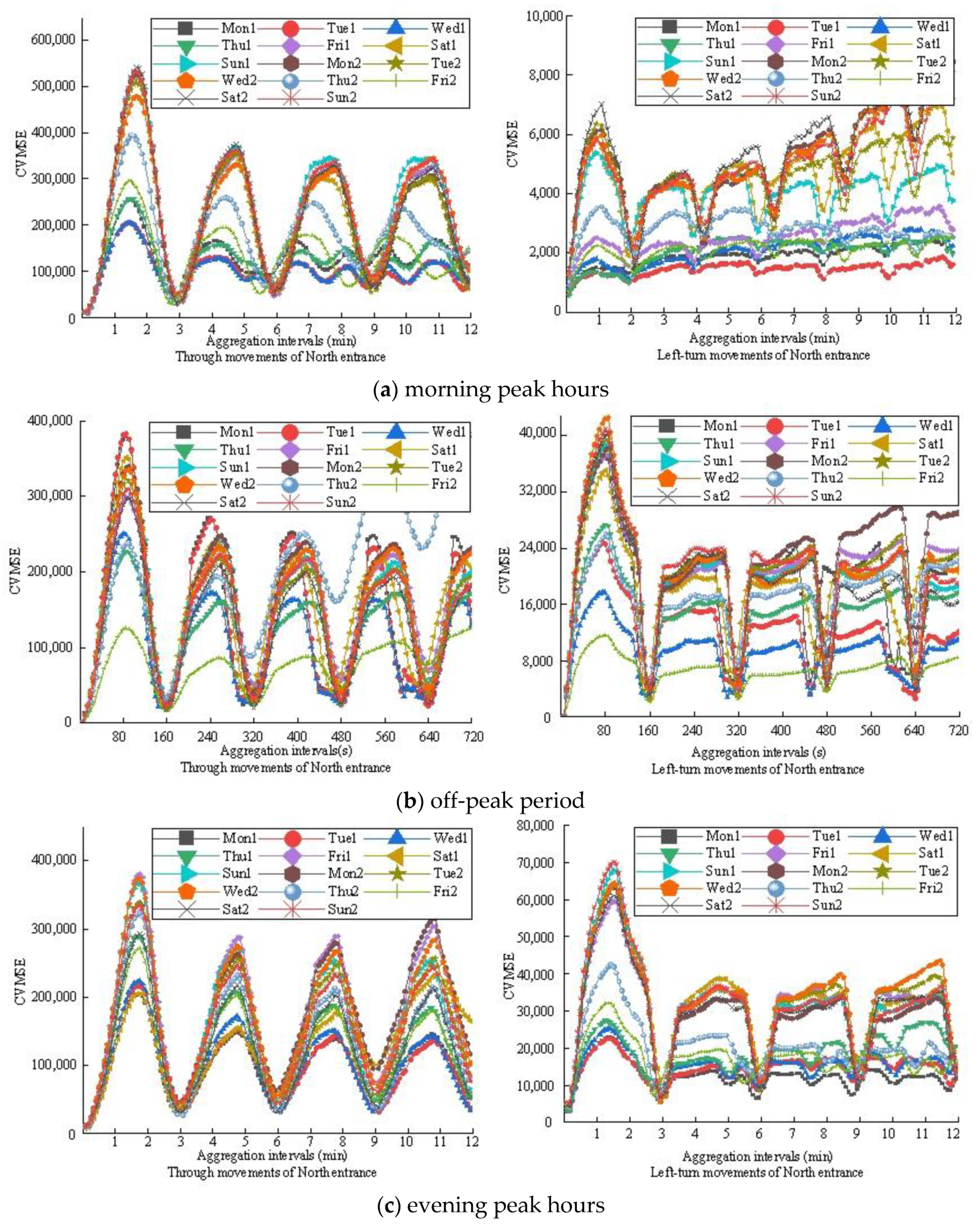

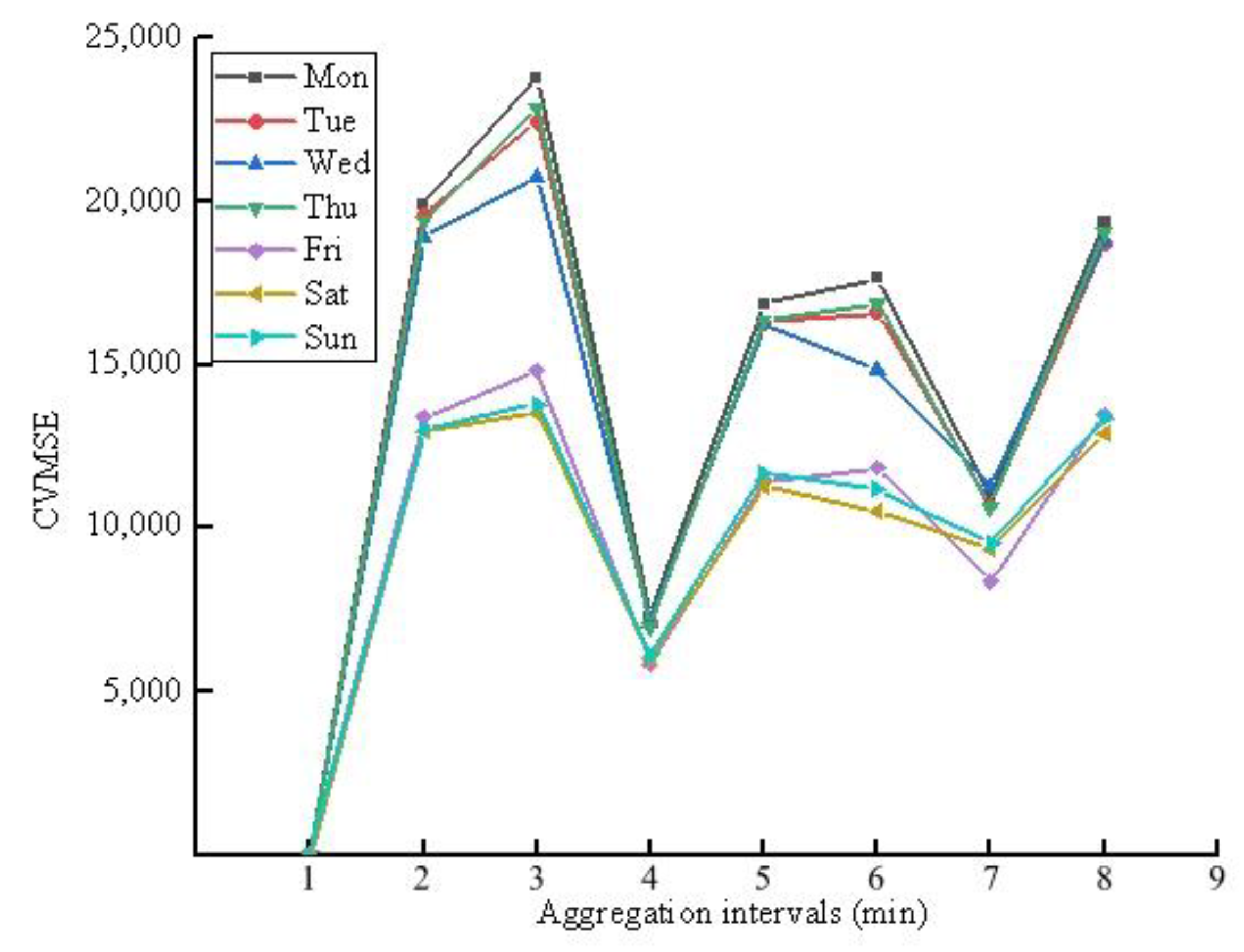

3.2.1. Single Intersection Scenario

3.2.2. Network Scenario

3.3. Prediction Results

3.3.1. Evaluation Metrics
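The results tables in this article report MAE, RMSE, and MAPE. As a minimal sketch, assuming the standard textbook definitions of these three metrics, they can be computed as follows. The function names, the choice to express MAPE in percent, and the skipping of zero-valued observations in MAPE are illustrative assumptions, not details taken from the article.

```python
import math
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    """Reject empty or mismatched inputs before computing a metric."""
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error."""
    _check(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent.

    Assumption: observations with a true value of zero are skipped,
    because the percentage error is undefined for them.
    """
    _check(y_true, y_pred)
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t != 0]
    if not pairs:
        raise ValueError("MAPE is undefined when all true values are zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in pairs) / len(pairs)


# Hypothetical observed and predicted flows (vehicles per interval).
observed = [10.0, 20.0, 40.0]
predicted = [12.0, 18.0, 44.0]
print(mae(observed, predicted), rmse(observed, predicted), mape(observed, predicted))
```

MAE and RMSE are in the units of the flow counts, while MAPE is scale-free, which is why a model can rank differently under each metric.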

3.3.2. Benchmark Methods

3.3.3. Parameter Settings

3.3.4. Results

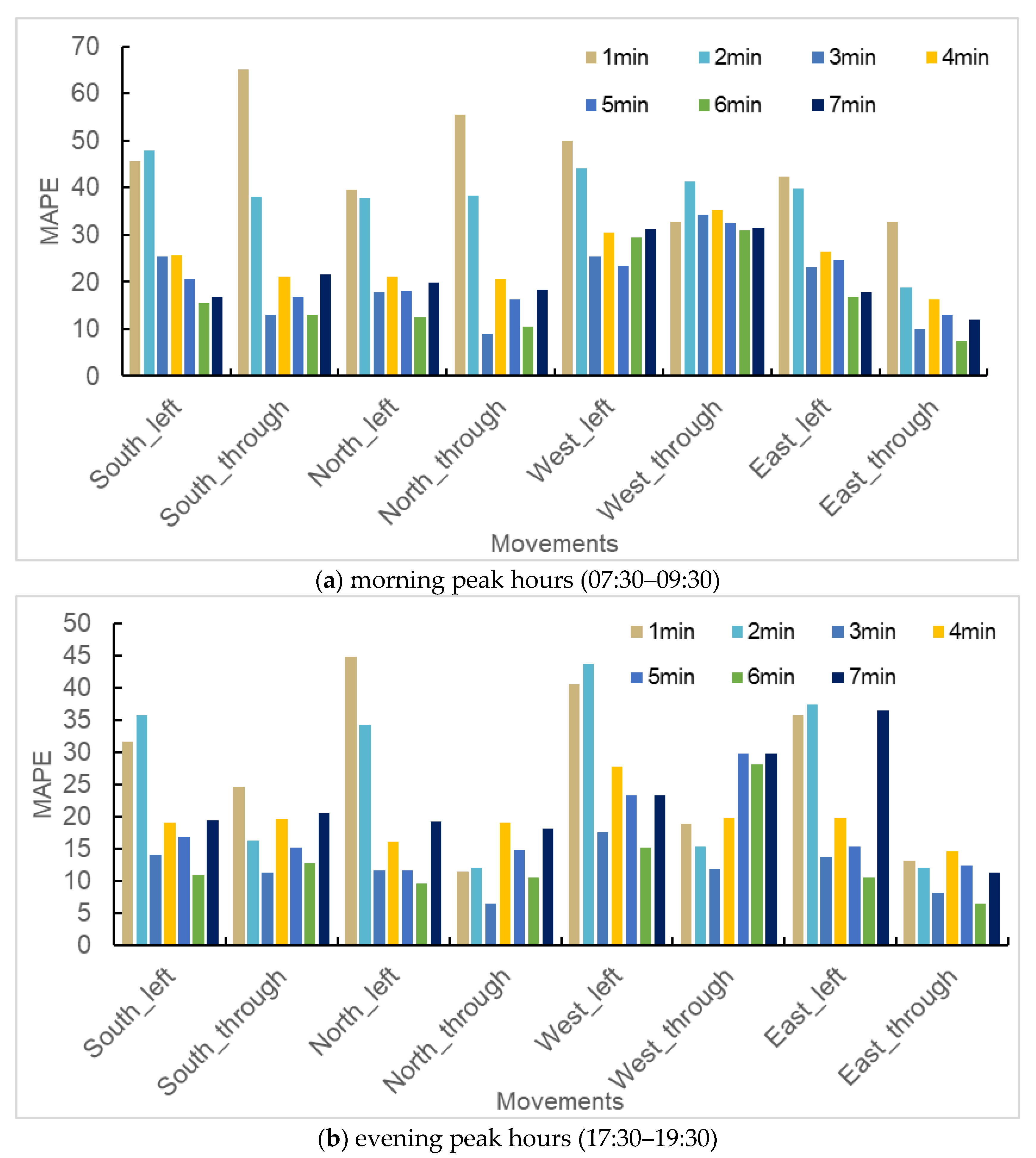

Performance in the Single Intersection Scenario

Performance in the Network Scenario

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | ARIMA | SVR | KNN | CapsNet | GRU | STAWnet | Ours |
|---|---|---|---|---|---|---|---|
| South_left | 3.99 | 3.32 | 3.41 | 3.52 | 3.15 | 3.11 | 3.07 |
| South_through | 11.54 | 7.62 | 8.16 | 10.03 | 7.31 | 8.12 | 7.20 |
| North_left | 3.61 | 3.09 | 3.25 | 3.17 | 2.99 | 3.09 | 3.03 |
| North_through | 13.75 | 9.29 | 9.59 | 12.53 | 8.78 | 9.55 | 8.75 |
| West_left | 4.27 | 3.36 | 3.33 | 4.19 | 3.24 | 3.17 | 3.20 |
| West_through | 1.93 | 1.86 | 1.82 | 1.87 | 1.62 | 1.74 | 1.66 |
| East_left | 2.29 | 2.17 | 2.34 | 2.19 | 2.15 | 2.19 | 2.04 |
| East_through | 3.91 | 3.65 | 3.84 | 3.74 | 3.51 | 3.70 | 3.45 |
| Mean | 5.66 | 4.29 | 4.47 | 5.15 | 4.19 | 4.32 | 4.09 |

| Model | ARIMA | SVR | KNN | CapsNet | GRU | STAWnet | Ours |
|---|---|---|---|---|---|---|---|
| South_left | 4.98 | 4.25 | 4.33 | 4.27 | 4.05 | 3.86 | 3.72 |
| South_through | 14.40 | 9.86 | 10.38 | 12.35 | 9.55 | 10.28 | 9.26 |
| North_left | 4.54 | 3.81 | 4.00 | 3.84 | 3.69 | 3.63 | 3.47 |
| North_through | 16.83 | 11.92 | 12.16 | 15.12 | 11.34 | 12.35 | 11.09 |
| West_left | 5.46 | 4.24 | 4.24 | 5.32 | 3.99 | 3.97 | 3.78 |
| West_through | 2.43 | 2.18 | 2.14 | 2.30 | 2.05 | 2.02 | 1.84 |
| East_left | 2.86 | 2.71 | 2.81 | 2.78 | 2.64 | 2.68 | 2.38 |
| East_through | 4.83 | 4.47 | 4.62 | 4.59 | 4.25 | 4.52 | 4.07 |
| Mean | 7.04 | 5.43 | 5.58 | 6.32 | 5.20 | 5.41 | 4.95 |

| Aggregation Interval | MAE | RMSE | MAPE |
|---|---|---|---|
| 3 min | 3.83 | 4.38 | 28.87 |
| 4 min | 3.50 | 4.26 | 25.65 |
| 5 min | 3.67 | 5.30 | 26.59 |
| 6 min | 4.77 | 5.95 | 26.42 |
| 7 min | 4.09 | 6.50 | 25.25 |
| 8 min | 4.82 | 7.20 | 27.98 |
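
The table above can be scanned programmatically to see which aggregation interval gives the lowest error under each metric. The sketch below is illustrative only: the dictionary layout and the `best_interval` helper are hypothetical names introduced here, and the numbers are copied from the table rather than recomputed from data.

```python
from typing import Dict

# Values copied from the aggregation-interval table above.
# Keys are interval lengths in minutes (hypothetical layout for illustration).
results: Dict[int, Dict[str, float]] = {
    3: {"MAE": 3.83, "RMSE": 4.38, "MAPE": 28.87},
    4: {"MAE": 3.50, "RMSE": 4.26, "MAPE": 25.65},
    5: {"MAE": 3.67, "RMSE": 5.30, "MAPE": 26.59},
    6: {"MAE": 4.77, "RMSE": 5.95, "MAPE": 26.42},
    7: {"MAE": 4.09, "RMSE": 6.50, "MAPE": 25.25},
    8: {"MAE": 4.82, "RMSE": 7.20, "MAPE": 27.98},
}


def best_interval(table: Dict[int, Dict[str, float]], metric: str) -> int:
    """Return the interval (in minutes) with the lowest value of `metric`."""
    return min(table, key=lambda minutes: table[minutes][metric])


best = {metric: best_interval(results, metric) for metric in ("MAE", "RMSE", "MAPE")}
for metric, minutes in best.items():
    print(f"{metric}: lowest at {minutes} min ({results[minutes][metric]})")
```

On these values, MAE and RMSE are lowest at 4 min while MAPE is lowest at 7 min, so the choice of interval depends on which metric is prioritized.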

| Interval | Metric | ARIMA | KNN | SVR | LSTM | ASTGCN | STSGCN | Graph-Wavenet | DGCRN | Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| 4 min | MAE | 4.29 | 5.23 | 4.89 | 4.26 | 4.12 | 4.42 | 4.21 | 4.13 | 3.50 |
| 4 min | RMSE | 6.26 | 7.13 | 6.69 | 6.12 | 5.94 | 6.36 | 5.96 | 5.79 | 4.26 |
| 4 min | MAPE | 30.35 | 37.71 | 35.83 | 28.48 | 26.43 | 28.49 | 26.15 | 26.29 | 25.65 |
| 7 min | MAE | 4.25 | 5.19 | 4.85 | 4.20 | 4.07 | 4.39 | 4.08 | 4.04 | 3.17 |
| 7 min | RMSE | 6.01 | 7.02 | 6.56 | 6.05 | 5.94 | 6.31 | 5.88 | 5.48 | 4.18 |
| 7 min | MAPE | 30.26 | 37.59 | 35.73 | 28.35 | 26.31 | 28.35 | 26.09 | 26.18 | 25.60 |
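
One way to read the comparison table above is to express the proposed model's error as a relative reduction against the strongest baseline in each row. The sketch below works this arithmetic for the two MAE rows. The helper name `reduction_vs_best_baseline` and the dictionary layout are hypothetical, and the percentages are derived here from the tabulated values, not quoted from the article.

```python
from typing import Dict, Tuple


def reduction_vs_best_baseline(baselines: Dict[str, float], ours: float) -> Tuple[str, float]:
    """Return the lowest-error baseline and the relative error reduction (percent)
    achieved by `ours` against it."""
    best_name = min(baselines, key=lambda name: baselines[name])
    best_err = baselines[best_name]
    return best_name, 100.0 * (best_err - ours) / best_err


# MAE rows copied from the comparison table above (baselines only).
mae_4min = {"ARIMA": 4.29, "KNN": 5.23, "SVR": 4.89, "LSTM": 4.26,
            "ASTGCN": 4.12, "STSGCN": 4.42, "Graph-Wavenet": 4.21, "DGCRN": 4.13}
mae_7min = {"ARIMA": 4.25, "KNN": 5.19, "SVR": 4.85, "LSTM": 4.20,
            "ASTGCN": 4.07, "STSGCN": 4.39, "Graph-Wavenet": 4.08, "DGCRN": 4.04}

name_4, pct_4 = reduction_vs_best_baseline(mae_4min, ours=3.50)
name_7, pct_7 = reduction_vs_best_baseline(mae_7min, ours=3.17)
print(f"4 min: best baseline {name_4}, MAE reduction {pct_4:.2f}%")
print(f"7 min: best baseline {name_7}, MAE reduction {pct_7:.2f}%")
```

The same helper applies unchanged to the RMSE and MAPE rows, since lower is better for all three metrics.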

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fu, F.; Wang, D.; Sun, M.; Xie, R.; Cai, Z. Urban Traffic Flow Prediction Based on Bayesian Deep Learning Considering Optimal Aggregation Time Interval. Sustainability 2024, 16, 1818. https://doi.org/10.3390/su16051818