Abstract

Grasslands are biomes of significant economic, social and environmental value. Grassland (rangeland) management typically involves monitoring and managing grassland productivity, which is determined by various biophysical parameters, one of which is grass aboveground biomass. Advances in remote sensing have enabled near-real-time monitoring of grassland productivity, and increasingly sophisticated machine learning algorithms have provided a powerful tool for remote sensing analytics. This study compared the performance of two neural networks, namely Artificial Neural Networks (ANN) and Convolutional Neural Networks (CNN), in predicting dry season aboveground biomass using open-access Sentinel-2 MSI data. Sentinel-2 spectral bands and derived vegetation indices were used as input data for both algorithms. Overall, the deep CNN outperformed the ANN in estimating aboveground biomass, with an R2 of 0.83, an RMSE of 3.36 g/m2 and an RMSE% of 6.09, compared with an R2 of 0.75, an RMSE of 5.78 g/m2 and an RMSE% of 8.90 for the ANN. The sensitivity analysis suggested that the blue band, the Green Chlorophyll Index (GCI), and the Green Normalised Difference Vegetation Index (GNDVI) were the most significant variables for model development in both neural networks. This study can be considered a pilot study, as it is one of the first to compare the performance of different neural networks using freely available satellite data. Its findings support more rapid biomass estimation and demonstrate the considerable potential of deep learning for remote sensing applications.

1. Introduction

The study and observation of natural phenomena are becoming increasingly imperative as the world faces unprecedented environmental change [1]. Continual improvements to airborne and spaceborne platforms and sensors have led to a proliferation of remote sensing research [2]. Remote sensing has facilitated Earth observation across many facets of the natural world, from weather to vegetation. Vegetation monitoring, in particular, has become an influential research area in remote sensing because decision-making processes require continuous and reliable data [2]. The evolution of remote sensing, from simple aerial photographs to current high-resolution imagery, has enabled scientists to study processes at larger spatial and temporal scales [2]. Recently, there has been a significant increase in both remotely sensed and ground data in vegetation studies, which has established a solid foundation for present and future vegetation monitoring [1].

The deluge of remotely sensed data has directed scientists towards novel methods for data processing and analysis [1]. Remotely sensed data are voluminous, being captured at monthly, weekly and even hourly intervals [3]. Their heterogeneity, with a vast array of sensors at varying spatial, temporal and radiometric resolutions, poses challenges for data processing and analysis [1,3]. These challenges have pushed scientists towards new methodologies for analysing multi-dimensional data, resulting in a paradigm shift from conventional statistical methods towards machine learning solutions [3]. Advances in artificial intelligence and machine learning have enabled scientists and practitioners to address pressing environmental issues owing to their capacity for real-time data processing and their strong predictive abilities [3].

Neural networks, a subset of machine learning, are algorithms designed to mimic the operation of a biological brain [4]. The artificial neural network (ANN), specifically, has been used extensively for remote sensing applications since the 1990s because it has provided promising results [4]. Mas and Flores [4] state that ANNs have been reported to perform considerably better than traditional statistical methods because they can learn complex patterns, model nonlinear relationships between variables, generalise well, and perform various analyses without requiring the data to meet statistical assumptions (e.g., normally distributed data). Jensen et al. [5] acknowledge that ANNs have been used relatively successfully in remote sensing for biophysical parameter estimation and land classification. However, ANNs have limitations, including complex architectures, demanding computational requirements, the need for large amounts of training data, and the need for supervised training to ensure accurate output [4,5].

In the last decade, there has been another paradigm shift in machine learning, with the focus now on deep learning approaches [6]. Deep learning is essentially a refinement of traditional ANNs that aims to improve predictive accuracy and reduce the complexity of earlier algorithms [7]. Zhu et al. [7] define deep learning as neural networks characterised by more than two hidden (deep) layers, which extract distinct feature patterns from the input data. One example of a deep neural network is the convolutional neural network (CNN), which has been specifically engineered for image processing and analysis [7,8]. The greater number of layers and interconnections in CNNs allows more complex and intricate patterns and relationships to be resolved, which is particularly useful for vegetation remote sensing studies [8]. CNNs are reported to have an advantage over ANNs in requiring less computational time and power while producing higher predictive accuracies; however, they require vast amounts of training data to make such accurate predictions and classifications [9]. Although the use of CNNs in remote sensing is a growing trend, it is still in its infancy and requires further testing to reveal its strengths and weaknesses [8].

Grasslands are biomes of high socio-economic and conservation value, particularly in southern Africa [10]. Grasslands are highly sensitive to environmental change and are often regulated by biophysical factors such as rainfall and grazing [11]. An anticipated increase in climate variability and in natural disasters such as droughts, particularly in southern Africa, threatens grassland distribution as well as grass species composition and abundance [12]. Understanding such changes is of paramount importance to the agricultural sector, and ultimately to food security, in regions where livestock production depends almost entirely on rangelands [13]. Soussana et al. [14] state that grasslands are essential in mitigating carbon and methane emissions from ruminants during livestock production, ensuring that rangeland-fed livestock production is more environmentally sustainable than intensive factory farming. Furthermore, grasslands play a pivotal role in greenhouse gas sequestration, as studies have found grasslands to be more effective carbon sinks than forests [15]. Therefore, estimating aboveground grass biomass is critical for effectively monitoring and managing the impacts of climate change on the functioning and utilisation of grassland ecosystems.

Vegetation parameters are used in vegetation monitoring to assess the health and condition of plants. These parameters can be measured physically or estimated remotely [2]. Aboveground biomass is one such parameter used to observe and monitor grassland productivity [1]. Neural networks, especially ANNs, have long been used to assess and predict aboveground vegetation biomass [1]. In most cases, ANNs have outperformed typical Bayesian and iterative statistical methods for estimating biomass, as well as other machine learning algorithms such as support vector machines [1]. Recent grass biomass studies have gradually incorporated CNNs for biomass prediction, with results varying with sensor resolution and platform type [8].

As reported by Masenyama et al. [16], remote sensing studies of grassland biomass in South Africa are substantially lacking. Furthermore, in a South African context, no research has yet compared the performance of conventional ANNs and contemporary CNNs in estimating aboveground grass biomass. Because the study of grasslands is imperative in the face of climate change, machine learning techniques for observing and assessing grassland health would be valuable to both researchers and practitioners [1,16]. Therefore, the objectives of this study were to: (1) compare the predictive performance of shallow ANNs and deep CNNs in estimating aboveground grass biomass using Sentinel-2 MSI data, and (2) apply both neural networks to Sentinel-2 MSI data to predict dry season aboveground biomass for the Vulindlela communal area in South Africa.

2. Methods

2.1. Study Area

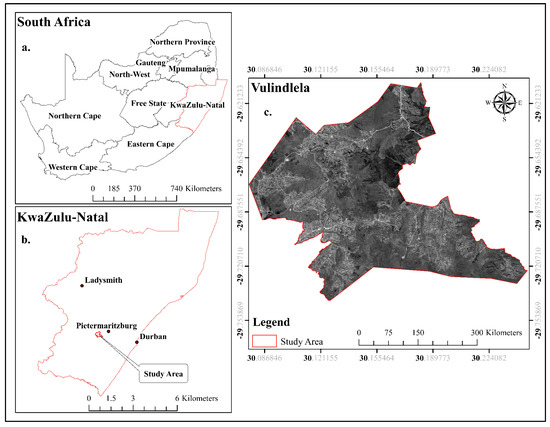

The Vulindlela area is situated at 29°40′24″ S, 30°8′42″ E in the greater Umngeni Catchment of the KwaZulu-Natal province, South Africa. The study area is part of the Umgungundlovu district and falls under the uMsunduzi Municipality (Figure 1). The local climate can be classified as subtropical oceanic, with cool, dry winters and mild, wet summers [17]. The study area receives a mean annual rainfall of 979 mm and a median annual rainfall of 850 mm. Annual maximum and minimum temperatures are approximately 22 °C and 10 °C, respectively [17]. Vegetation growth in the area is limited primarily by climate, with low precipitation, low temperatures, and frost being the major factors [17]. Mean annual potential evaporation in Vulindlela ranges between 1580 and 1620 mm, which exceeds mean annual rainfall and indicates a possible water deficit [17]. Edaphically, Vulindlela is characterised by shallow soils with moderate to poor drainage, which presents a soil erosion risk if not properly managed [17].
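The possible deficit follows from simple arithmetic on the reported means. The sketch below is a rough illustration that ignores runoff, soil storage and seasonality; it computes the annual shortfall and the ratio of rainfall to potential evaporation at both ends of the reported evaporation range. The function name `water_balance` is hypothetical.

```python
# Rough annual water balance for Vulindlela using the means quoted above.
# Illustrative only: ignores runoff, soil water storage and seasonality.

MEAN_ANNUAL_RAINFALL_MM = 979.0
POTENTIAL_EVAPORATION_RANGE_MM = (1580.0, 1620.0)


def water_balance(rainfall_mm, pet_mm):
    """Return (shortfall in mm, rainfall / potential evaporation ratio)."""
    deficit = pet_mm - rainfall_mm
    ratio = rainfall_mm / pet_mm
    return deficit, ratio


for pet in POTENTIAL_EVAPORATION_RANGE_MM:
    deficit, ratio = water_balance(MEAN_ANNUAL_RAINFALL_MM, pet)
    print(f"PET {pet:.0f} mm: deficit {deficit:.0f} mm, P/PET {ratio:.2f}")
```

Under these assumptions the shortfall is roughly 600 to 640 mm per year, with mean rainfall covering only about 60% of potential evaporation.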

Figure 1.

Location of the study area (c) Vulindlela relative to (a) South Africa and (b) the KwaZulu-Natal Province.

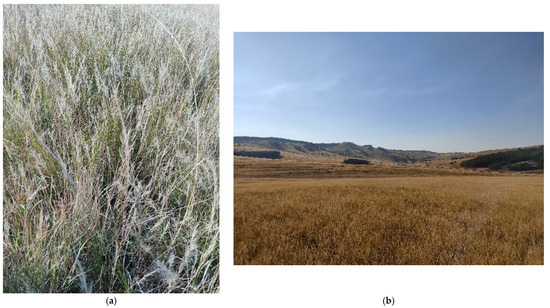

Grasslands within the study area are characterised as mesic and typically consist of species such as Themeda triandra, Eragrostis tenuifolia, Tristachya leucothrix, Paspalum urvillei, Sorghum bicolor, Panicum maximum, Setaria sphacelata, Aristida junciformis (Figure 2a), and Alloteropsis semialata, amongst others [18]. According to Scott-Shaw and Morris [19], the most palatable of these grasses to livestock are, in order of palatability, Themeda triandra, Tristachya leucothrix and Aristida junciformis. Masemola et al. [20] state that the dry season in KwaZulu-Natal usually spans June and July, whereas Roffe et al. [21] state that the wet season in KwaZulu-Natal typically spans October to March. Grasslands in the study area are not formally managed under scientific management regimes and are considered communal grasslands (Figure 2b); instead, they are managed by the traditional authorities using indigenous knowledge systems. Locals use the grasslands as grazing land for livestock, particularly cattle, sheep, and goats, both for subsistence and for income generation.

Figure 2.

Visual representation of in-field conditions within the study area where (a) Aristida junciformis dominated grassland and (b) image of a grassland within the study area during the dry season.

2.2. Sentinel-2 MSI Satellite Imagery

Sentinel-2 is a European Space Agency Earth observation mission whose MultiSpectral Instrument (MSI) provides open, freely accessible data. Cloud-free Sentinel-2 data covering the study area were downloaded from Land Viewer [22] on 21 October 2021. The image was a Sentinel-2B product that is orthorectified and atmospherically corrected; atmospheric correction to bottom-of-atmosphere reflectance is performed by the Sen2Cor algorithm, which is available within the Sentinel Application Platform (SNAP) environment [23]. The image was captured on 22 June 2021, coinciding with the field data collection period. The Sentinel-2 mission acquires 12-bit images with a swath width of 290 km and a temporal resolution of 5–19 days at spatial resolutions of 10, 20, and 60 m. The orthoimages are in a UTM/WGS84 projection.

The Sentinel-2 MSI has 13 spectral bands covering the visible, near-infrared (NIR), and shortwave infrared (SWIR) regions. The three 60 m bands were excluded from the analysis because they are primarily used for atmospheric monitoring [24]. Sentinel-2 spectral bands are summarised in Table 1.
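The band selection described above can be sketched as a simple filter on native resolution. Band names follow the standard Sentinel-2 numbering; the dictionary and the helper `analysis_bands` are illustrative and not part of any Sentinel-2 toolbox.

```python
# Sentinel-2 MSI bands: (description, native spatial resolution in metres).
SENTINEL2_BANDS = {
    "B01": ("Coastal aerosol", 60),
    "B02": ("Blue", 10),
    "B03": ("Green", 10),
    "B04": ("Red", 10),
    "B05": ("Red edge 1", 20),
    "B06": ("Red edge 2", 20),
    "B07": ("Red edge 3", 20),
    "B08": ("NIR", 10),
    "B8A": ("Narrow NIR", 20),
    "B09": ("Water vapour", 60),
    "B10": ("SWIR cirrus", 60),
    "B11": ("SWIR 1", 20),
    "B12": ("SWIR 2", 20),
}


def analysis_bands(bands, excluded_resolution_m=60):
    """Keep every band whose native resolution is not the excluded one."""
    return [name for name, (_, res) in bands.items() if res != excluded_resolution_m]


selected = analysis_bands(SENTINEL2_BANDS)
print(len(selected), selected)
```

Dropping the three 60 m atmospheric bands leaves ten bands at 10 m and 20 m resolution.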

Table 1.

Sentinel-2B spectral bands [25].

2.3. Field Data Collection and Measurements

Grass biomass samples were collected in the study area between 21 and 23 June 2021. A total of 120 quadrats of 10 m × 10 m, spaced approximately 100 m apart, were established within Vulindlela using purposive sampling, following Royimani et al. [26]. The location of each plot was recorded with a Trimble GPS, a highly accurate sub-metre positioning system. Within each plot, two 1 m × 1 m sub-plots were established and grass clippings were harvested; the dry biomass of the two sub-plots was then averaged for each plot [27]. Grass was clipped approximately 5 cm above the ground, and only tufts within the sub-plot were taken. Only grasses were sampled; other vegetation, such as forbs and sedges, was discarded. Samples were placed in labelled brown paper bags, and their fresh (wet) mass was measured on the day of collection using a calibrated digital scale. The samples were then oven-dried at 70 °C for 48 h to remove moisture and reweighed to determine dry mass.
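Because each sub-plot covers exactly 1 m², the oven-dry mass of a sub-plot in grams equals its biomass density in g/m², and the plot value is the mean of its two sub-plots. The sketch below shows this bookkeeping; the measurements are hypothetical, and the moisture-content helper (wet-mass basis) is an illustrative extra rather than a quantity reported in this study.

```python
SUBPLOT_AREA_M2 = 1.0  # each sub-plot is 1 m x 1 m


def plot_biomass_g_per_m2(dry_masses_g, subplot_area_m2=SUBPLOT_AREA_M2):
    """Average dry biomass density (g/m2) over the sub-plots of one plot."""
    densities = [mass / subplot_area_m2 for mass in dry_masses_g]
    return sum(densities) / len(densities)


def moisture_content_percent(wet_mass_g, dry_mass_g):
    """Moisture lost on oven-drying, as a percentage of the wet (fresh) mass."""
    return (wet_mass_g - dry_mass_g) / wet_mass_g * 100.0


# Hypothetical wet and dry masses (g) for the two sub-plots of one plot.
wet = [82.0, 70.0]
dry = [50.0, 42.0]
print(plot_biomass_g_per_m2(dry))  # 46.0
print(round(moisture_content_percent(wet[0], dry[0]), 1))  # 39.0
```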

2.4. Vegetation Indices

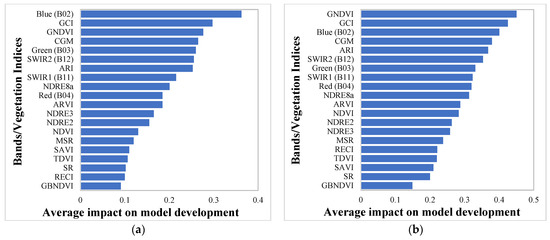

Vegetation indices (VIs) were computed from the spectral bands to assess aboveground biomass (Table 2) using ArcGIS 10.4 software [28]. All VIs listed in Table 2 were tested for their importance in model development for both neural networks; however, only VIs with an average impact of 0.1 or higher were deemed significant for estimating aboveground grass biomass.
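Table 2 is not reproduced in this text, so the exact formulations used are not shown here. As a minimal sketch, the standard forms of several VIs named in this study can be computed from surface reflectance as follows, assuming the hypothetical band assignment green = B03, red = B04, red edge = B05 and NIR = B08. The NDRE variants in this study (NDRE2, NDRE3, NDRE8a) pair different red-edge or NIR bands, so the band choice below is illustrative only.

```python
# Standard vegetation index formulas on surface reflectance (0-1).
# Assumed band mapping (hypothetical): green=B03, red=B04, red edge=B05, NIR=B08.


def ndvi(nir, red):
    return (nir - red) / (nir + red)


def gndvi(nir, green):
    return (nir - green) / (nir + green)


def gci(nir, green):
    """Green Chlorophyll Index."""
    return nir / green - 1.0


def simple_ratio(nir, red):
    return nir / red


def savi(nir, red, soil_factor=0.5):
    """Soil Adjusted Vegetation Index with the commonly used soil factor L = 0.5."""
    return (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def ndre(nir, red_edge):
    return (nir - red_edge) / (nir + red_edge)


def reci(nir, red_edge):
    """Red Edge Chlorophyll Index."""
    return nir / red_edge - 1.0


# Hypothetical reflectance values for a single pixel.
pixel = {"B03": 0.08, "B04": 0.10, "B05": 0.15, "B08": 0.30}
indices = {
    "NDVI": ndvi(pixel["B08"], pixel["B04"]),
    "GNDVI": gndvi(pixel["B08"], pixel["B03"]),
    "GCI": gci(pixel["B08"], pixel["B03"]),
    "SR": simple_ratio(pixel["B08"], pixel["B04"]),
    "SAVI": savi(pixel["B08"], pixel["B04"]),
    "NDRE": ndre(pixel["B08"], pixel["B05"]),
    "RECI": reci(pixel["B08"], pixel["B05"]),
}
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

In a raster workflow the same functions apply element-wise to whole band arrays.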

Table 2.

Various vegetation indices (VIs) used in this study.

2.5. Statistical Analysis and Machine Learning

2.5.1. Artificial Neural Network (ANN)

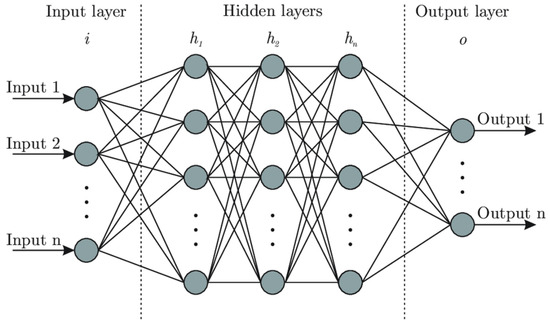

This study used an artificial neural network (ANN) to predict aboveground biomass from a Sentinel-2 multispectral dataset. The ANN is a machine learning algorithm modelled on the computational mechanisms of the human brain [4]. ANNs can be trained to recognise patterns, perform complex computations, and develop self-organising abilities [4]. ANNs typically consist of multiple layers (Figure 3): an input layer, an output layer, and one or more hidden layers [43]. A greater number of layers is associated with greater model complexity [43]. In remote sensing applications, ANNs have been used extensively and have been shown to provide more reliable results than conventional statistical methods [4]. For aboveground biomass, Deb et al. [44] and Yang et al. [43] used ANNs to predict aboveground grass biomass. In this study, the number of nodes in the hidden layer was adjusted manually until the ANN model captured the relationship between the variables. Table 3 shows the hyper-parameters used to train the ANN model.
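The mechanics of a single-hidden-layer ANN can be sketched in plain Python. The example below is a toy regression network with tanh hidden units, trained by stochastic gradient descent with back-propagation on synthetic data. It is not the Keras model used in this study, and its hyper-parameters (`n_hidden`, `lr`, `epochs`) are placeholders rather than the values in Table 3; changing `n_hidden` mirrors the manual tuning of hidden-layer nodes described above.

```python
import math
import random


def train_mlp(X, y, n_hidden=4, lr=0.05, epochs=200, seed=0):
    """Train a 1-hidden-layer regression network; return (predict, mse_history)."""
    rng = random.Random(seed)
    n_in = len(X[0])
    # Random initial weights, as when models are re-run with random initialisation.
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b2 = 0.0

    def forward(x):
        # Hidden layer: weighted sum of inputs passed through tanh.
        h = [math.tanh(sum(w * xi for w, xi in zip(W1[k], x)) + b1[k])
             for k in range(n_hidden)]
        # Linear output neuron for regression.
        out = sum(w * hk for w, hk in zip(W2, h)) + b2
        return h, out

    def mse():
        return sum((forward(x)[1] - t) ** 2 for x, t in zip(X, y)) / len(X)

    history = [mse()]
    for _ in range(epochs):  # one epoch = one pass over all training samples
        for x, t in zip(X, y):
            h, out = forward(x)
            err = out - t  # gradient of 0.5 * err**2 with respect to the output
            for k in range(n_hidden):
                # Back-propagate through tanh using the pre-update output weight.
                grad_h = err * W2[k] * (1.0 - h[k] ** 2)
                W2[k] -= lr * err * h[k]
                for i in range(n_in):
                    W1[k][i] -= lr * grad_h * x[i]
                b1[k] -= lr * grad_h
            b2 -= lr * err
        history.append(mse())
    return forward, history


# Synthetic data: two predictors with a mildly nonlinear response.
data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(40)]
y = [0.5 * a + 0.3 * b * b for a, b in X]
predict, history = train_mlp(X, y)
print(f"MSE before: {history[0]:.4f}, after: {history[-1]:.4f}")
```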

Figure 3.

The general architecture of an ANN [5].

Table 3.

Hyper-parameters used to train the ANN and CNN models.

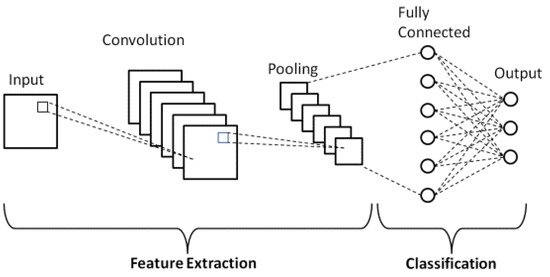

2.5.2. Convolutional Neural Network (CNN)

CNNs are an advancement on typical ANNs and have been developed explicitly for analysing visual imagery [45]. CNNs have increasingly become a valuable and powerful tool in remote sensing, especially for image classification [45]. Whereas a conventional ANN learns a separate weight for each connection between its input features and neurons, a CNN analyses images through multiple layers of small filters (kernels) applied across the image (Figure 4) [45]. ANNs are therefore better suited to structured, tabular datasets, whereas CNNs are better suited to visual datasets. CNNs also provide a more automated approach to deep learning, as they can detect important patterns and features in images with minimal human supervision [27]. For example, Ma et al. [27] successfully used a deep CNN to estimate the aboveground biomass of wheat.
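The two core CNN operations can be illustrated on a tiny single-band image. In the sketch below the kernel is fixed by hand to respond to a left-to-right increase in value, whereas a CNN learns its kernel values during training; like deep learning libraries, the code computes cross-correlation and calls it convolution. The function names are illustrative.

```python
def conv2d_valid(image, kernel):
    """2-D cross-correlation with 'valid' padding (no border padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max-pooling; incomplete trailing windows are dropped."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


# A 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]  # responds to a left-to-right increase

feature_map = conv2d_valid(image, kernel)
pooled = max_pool(feature_map)
print(feature_map)  # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(pooled)       # [[2, 0], [2, 0]]
```

Pooling halves each spatial dimension while keeping the strongest response, which is why the edge survives in the smaller map.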

Figure 4.

The general architecture of a CNN [6].

The input image underwent a convolution operation using a kernel designed for feature detection with 32 filters (Table 3). This first convolution was followed by downsampling through max-pooling with a pool size of 2, which halved the image size. A second convolution with 64 new filters then generated a new set of feature maps, which was downsampled by max-pooling with a pool size of 2. A third convolution with 128 new filters produced another set of feature maps, followed by a third max-pooling step with a pool size of 2. A fourth convolution with 256 new filters created yet another set of feature maps, followed by a fourth max-pooling step with a pool size of 2. The final convolution layer used 512 filters to generate the last feature maps, and the last downsampling step again applied max-pooling with a pool size of 2. In the concluding stage of feature extraction, the feature maps were flattened. The CNN was implemented through the R interface to the Keras neural network API.
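The layer sequence above can be traced to see how feature-map shapes and parameter counts evolve. The kernel size, padding and input size are not stated here, so the sketch assumes 3 × 3 kernels with 'same' padding, a hypothetical 64 × 64 input patch, and 20 input channels (matching the five bands, nine VIs and six red-edge VIs reported as important in the Discussion). `trace_cnn_shapes` is an illustrative helper, not a Keras function.

```python
FILTERS = [32, 64, 128, 256, 512]  # filters per convolution layer, as described above


def trace_cnn_shapes(height, width, channels, kernel=3, pool=2, filters=FILTERS):
    """Return (layers, total conv parameters) for conv + max-pool blocks.

    Assumes 'same' padding (convolution preserves height and width) and
    non-overlapping max-pooling that floors odd sizes.
    """
    layers = []
    total_params = 0
    for n_filters in filters:
        # One kernel x kernel x channels weight tensor plus one bias per filter.
        params = (kernel * kernel * channels + 1) * n_filters
        total_params += params
        channels = n_filters
        layers.append(("conv", (height, width, channels), params))
        height, width = height // pool, width // pool
        layers.append(("maxpool", (height, width, channels), 0))
    layers.append(("flatten", (height * width * channels,), 0))
    return layers, total_params


layers, total = trace_cnn_shapes(64, 64, 20)
for name, shape, params in layers:
    print(f"{name:8s} {shape} params={params}")
print("total conv parameters:", total)
```

Five pooling steps divide each spatial dimension by 32, so under these assumptions an input patch must be at least 32 pixels wide to leave a non-empty feature map before flattening.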

The ANN and CNN models were run to determine the relationship between aboveground biomass and the VIs and spectral data. Table 3 lists the hyper-parameters used to train the ANN and CNN models.

2.6. Accuracy Assessment

The models were run a maximum of five times with random initial weights. Model performance was assessed using the coefficient of determination (R2), root mean square error (RMSE), and percentage root mean square error (RMSE%). The coefficient of determination measures how well the predicted values of a regression reproduce the actual values [46]. R2 generally ranges from 0 to 1, with higher values indicating higher accuracy [46]. R2 is calculated as follows [47]:

$$R^2 = 1 - \frac{\sum_{j=1}^{n}\left(y_j - \hat{y}_j\right)^2}{\sum_{j=1}^{n}\left(y_j - \bar{y}\right)^2}$$

where $y_j$ and $\hat{y}_j$ represent the measured and estimated biomass values, respectively; $\bar{y}$ is the average measured biomass over all samples; and $n$ denotes the number of samples [47].

According to Shoko et al. [24], the RMSE measures the difference between actual and predicted values, in this instance measured and predicted biomass. The RMSE was calculated using the following formula, as documented by Shoko et al. [24]:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

where $y_i$ is the biomass measured in the field for sample $i$, $\hat{y}_i$ is the biomass predicted by the model for that sample, and $n$ is the number of samples. The RMSE% was calculated using the following formula, as expressed by Shoko et al. [24]:

$$\mathrm{RMSE\%} = \frac{100}{\bar{y}}\sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

where $n$ is the number of measured values, $y_i$ is the measured value, $\hat{y}_i$ is the estimated value, and $\bar{y}$ is the average of the measured aboveground biomass [24].
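The three accuracy measures can be sketched directly from their definitions. This assumes R2 takes the 1 − SS_res/SS_tot form; some studies instead report the squared Pearson correlation between measured and predicted values, which can differ on independent test data. The sample values are hypothetical.

```python
import math


def r_squared(measured, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_measured = sum(measured) / len(measured)
    ss_res = sum((y - p) ** 2 for y, p in zip(measured, predicted))
    ss_tot = sum((y - mean_measured) ** 2 for y in measured)
    return 1.0 - ss_res / ss_tot


def rmse(measured, predicted):
    """Root mean square error, in the units of the data (here g/m2)."""
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(measured, predicted)) / len(measured))


def rmse_percent(measured, predicted):
    """RMSE expressed as a percentage of the mean measured value."""
    return 100.0 * rmse(measured, predicted) / (sum(measured) / len(measured))


measured = [40.0, 50.0, 60.0]   # hypothetical field biomass (g/m2)
predicted = [42.0, 48.0, 61.0]  # hypothetical model predictions (g/m2)
print(f"R2 = {r_squared(measured, predicted):.3f}")
print(f"RMSE = {rmse(measured, predicted):.3f} g/m2")
print(f"RMSE% = {rmse_percent(measured, predicted):.2f}")
```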

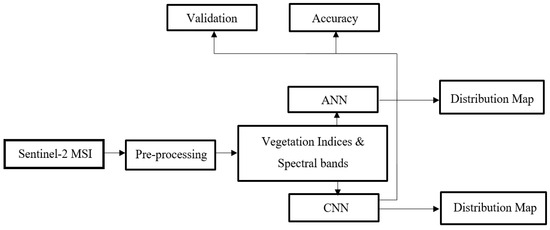

Models yielding the highest R2 and lowest RMSE/RMSE% between predicted and measured biomass on an independent test dataset (i.e., 30% of the dataset) were retained for predicting biomass. Aboveground biomass distribution maps were then produced using the ANN and CNN models with the spectral data and VIs. A sensitivity analysis was also conducted to determine which variables were most important in model development for the ANN and CNN. All statistical analyses were conducted in R statistical software version 3.1.3. The methodology used in this study is illustrated in Figure 5.
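How the 30% test set was drawn is not stated. Assuming a simple random split of the 120 plots with a fixed seed (both the seed and the helper name are hypothetical), the partition looks like this:

```python
import random


def train_test_split(n_samples, test_fraction=0.3, seed=42):
    """Randomly split sample indices into (train, test) index lists."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    return sorted(indices[n_test:]), sorted(indices[:n_test])


train_idx, test_idx = train_test_split(120)
print(len(train_idx), len(test_idx))  # 84 36
```

Fixing the seed makes the split reproducible across the repeated model runs, so that differences in test accuracy reflect the random initial weights rather than a different choice of test plots.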

Figure 5.

Flowchart of the methodology undertaken in this study.

3. Results

3.1. Descriptive Statistics

Observed dry season grass biomass across the 120 sample plots had a mean of 47.82 g/m2 and a standard deviation of 23.38 g/m2. The highest biomass recorded was 123.8 g/m2, and the lowest was 8.2 g/m2 (Table 4).

Table 4.

Descriptive statistics of the observed biomass (g/m2) over the dry season.

3.2. ANN vs. CNN

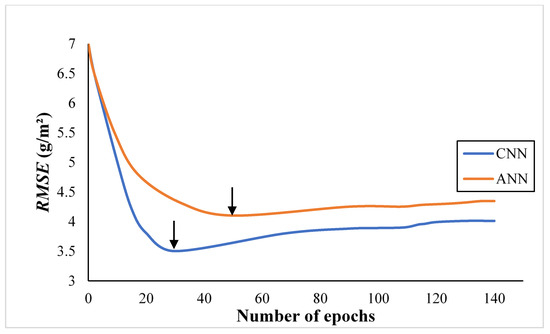

Figure 6 shows the training and validation processes of both models with their set hyperparameters. The x-axis represents the number of epochs, and the y-axis represents the root mean square error in biomass. An epoch is one complete pass of the training data through the forward- and back-propagation phases. The CNN learned faster than the ANN, requiring 30 epochs to minimise error compared with 50 epochs for the ANN; the error remained more or less constant after the 30th epoch for the CNN and the 50th epoch for the ANN. Determining a suitable number of epochs is essential to prevent under- or overfitting of models [1,48].
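Choosing the number of epochs from a validation curve can be formalised in two common ways: pick the epoch with the lowest validation error, or stop once the error has not improved for a set number of epochs (early stopping; Keras provides an EarlyStopping callback with `patience` and `min_delta` arguments for this). The sketch below applies both to a hypothetical curve that levels off at epoch 30; it does not reproduce the curves in Figure 6.

```python
def best_epoch(val_errors):
    """Return (1-based epoch, error) with the lowest validation error; first on ties."""
    best = min(range(len(val_errors)), key=lambda i: val_errors[i])
    return best + 1, val_errors[best]


def early_stopping_epoch(val_errors, patience=5, min_delta=0.0):
    """1-based epoch at which training stops after `patience` epochs without improvement."""
    best = float("inf")
    waited = 0
    for epoch, err in enumerate(val_errors, start=1):
        if err < best - min_delta:
            best = err
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return len(val_errors)


# Hypothetical validation RMSE: falls steadily until epoch 30, then plateaus.
val = [40 - e for e in range(1, 31)] + [10] * 20
print(best_epoch(val))                       # (30, 10)
print(early_stopping_epoch(val, patience=5))  # 35
```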

Figure 6.

Number of epochs for each model. The arrows indicate that for the CNN and ANN models the number of epochs that gave the lowest error was 30 and 50, respectively.

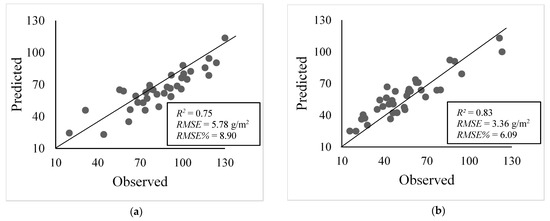

In assessing the predictive performance of the ANN and CNN in estimating dry season aboveground biomass, the ANN produced an R2 of 0.75, an RMSE of 5.78 g/m2 and an RMSE% of 8.90 (Figure 7a). In comparison, the CNN produced an R2 of 0.83, an RMSE of 3.36 g/m2 and an RMSE% of 6.09 (Figure 7b).

Figure 7.

Scatterplots showing biomass over the dry season for (a) ANN and (b) CNN.

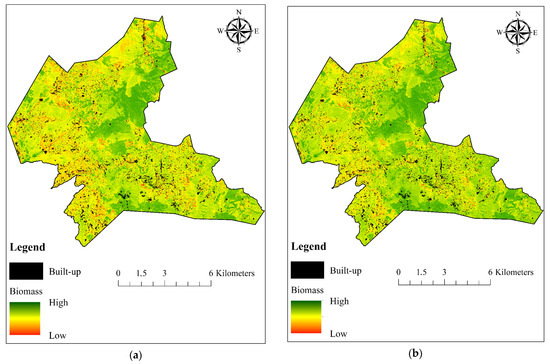

Figure 8 illustrates the spatial distribution of aboveground grass biomass in the study area during the dry season, as predicted by the ANN and CNN. The predictive map produced by the CNN appears to represent aboveground biomass slightly more accurately than that of the ANN, especially in the peripheral areas of the study site.

Figure 8.

Biomass (g/m2) over the dry season for (a) ANN and (b) CNN.

3.3. Sensitivity Analysis

The sensitivity analysis (Figure 9), in which the spectral bands and VIs were ranked by their importance for dry season biomass estimation in each model, showed that the blue band (B02) from Sentinel-2 MSI was the most important variable for the ANN, followed by the GCI and the GNDVI. In comparison, the GNDVI was the most important variable for the CNN, followed closely by the GCI and the blue band (B02). The GBNDVI was the least significant variable in biomass estimation for both models. Only variables with an average impact of >0.1 were included in the models.
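The "average impact" metric is not defined here; the Discussion states that importance was discerned from the correlation between biomass and the spectral and VI values. Assuming that, a minimal sketch ranks variables by the absolute Pearson correlation with measured biomass and keeps those above the 0.1 threshold. The data are hypothetical and the helper names are illustrative.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient; returns 0.0 for a constant input."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def rank_variables(features, biomass, threshold=0.1):
    """Rank variables by |r| with biomass and keep those above the threshold."""
    scores = {name: abs(pearson_r(values, biomass)) for name, values in features.items()}
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [(name, score) for name, score in ranked if score > threshold]


# Hypothetical plot-level values for three candidate variables.
biomass = [10.0, 20.0, 30.0, 40.0, 50.0]
features = {
    "GNDVI": [0.30, 0.35, 0.41, 0.44, 0.52],
    "B02": [0.09, 0.08, 0.07, 0.07, 0.05],
    "GBNDVI": [0.2, 0.1, 0.2, 0.1, 0.2],
}
for name, score in rank_variables(features, biomass):
    print(f"{name}: |r| = {score:.3f}")
```

Ranking by the absolute value treats strong negative relationships (such as a band whose reflectance falls as biomass rises) as equally informative to positive ones.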

Figure 9.

Ranking the importance of variables for developing the (a) ANN and (b) CNN models for grass aboveground biomass estimation.

4. Discussion

This study investigated the use of two neural networks for predicting aboveground grass biomass and compared their performance. Advances in machine learning have given scientists numerous opportunities to test these algorithms in real-world applications such as remote sensing and vegetation monitoring [1]. Comparing the accuracies of two different neural networks helps reveal the relationships between biomass and remote sensing variables [49]. To date, machine learning algorithms have proven to be more complex and dynamic than traditional statistical modelling, allowing more complex modelling of biophysical parameters and more decisive findings and correlations [3]. This study demonstrated the refinement of neural networks, as the contemporary convolutional neural network (R2 = 0.83) outperformed the conventional artificial neural network (R2 = 0.75) in predicting aboveground grass biomass. This is consistent with the growing adoption of deep learning approaches in remote sensing applications [7].

Numerous recent studies have compared different machine learning algorithms in remote sensing applications, particularly for vegetation monitoring. The ANN is one of the oldest machine learning algorithms and is used extensively for grassland biomass retrieval [1]. Xie et al. [50] compared the performance of an ANN with multiple linear regression (MLR) in estimating the aboveground biomass of grasslands in Mongolia. Using Landsat ETM+ data (NDVI and bands 1, 3, 4, 5 and 7), they found that the ANN (R2 = 0.817, NRMSE = 42.36%) outperformed MLR (R2 = 0.591, NRMSE = 53.2%). Similarly, Yang et al. [43] found that the ANN (R2 = 0.75–0.85) outperformed MLR (R2 = 0.4–0.64) in estimating grass biomass. Their study used the normalised difference vegetation index (NDVI), enhanced vegetation index (EVI), modified soil adjusted vegetation index (MSAVI), soil adjusted vegetation index (SAVI), and optimised soil adjusted vegetation index (OSAVI) derived from MODIS data. Xie et al. [50] used a single-date image, whereas Yang et al. [43] used a multi-temporal time series. Together, these studies suggest that machine learning techniques improve on typical regression analyses, even at different spatio-temporal scales [1].

Masenyama et al. [16] stated that the average R2 value for remote sensing studies of grassland productivity ranges between 0.65 and 0.75. By comparison, both the ANN and CNN performed well in this study, explaining 75% and 83% of the variance in measured biomass, respectively. Furthermore, studies by Dong et al. [49] and Schreiber et al. [46] found that CNNs outperformed ANNs in aboveground biomass estimation from remotely sensed data. Dong et al. [49] compared CNNs with three other machine learning algorithms, namely random forest, support vector regression, and ANN, in estimating the aboveground biomass of bamboo, using WorldView-2 spectral bands and vegetation indices as input data. Overall, the CNN produced better results than the ANN, with an R2 of 0.94 and an RMSE of 23.1%, whereas the ANN achieved an R2 of only 0.86 and an RMSE of 36.1%. Random forest and support vector regression achieved slightly better accuracy than the CNN. However, the CNN had limited input variables in that study, using only spectral bands, whereas the other two algorithms used spectral, VI, and texture data [49].

Similarly, Schreiber et al. [46] compared ANNs and CNNs in predicting the aboveground biomass of wheat using UAV-based RGB imagery with a 2.14 cm2 pixel size. The CNN reached an R2 of 0.9065, whereas the ANN reached an R2 of 0.9056, so the CNN outperformed the ANN only slightly. However, Schreiber et al. [46] acknowledge that the homogeneity of wheat cultivation may have given the ANN a slight advantage, and that in more heterogeneous study environments the accuracy of ANNs could diminish while that of CNNs could improve. They also note that hyperspectral data and vegetation indices, neither of which was used in their study, could greatly improve the accuracy of CNNs.

Kattenborn et al. [8] stated that because CNNs are specialised for image analysis and processing, they are highly suitable for remote sensing applications. CNNs have proven extremely useful for extracting biophysical parameters of vegetation, such as species composition and biomass, from remotely sensed data [8]. Deep learning approaches, which include CNNs, are gradually replacing shallow learning techniques such as ANNs because they analyse, interpret and predict spatial data much more effectively [7,8,45]. A steady stream of biomass estimation studies using CNNs and remotely sensed data has appeared in academic and research circles.

Ma et al. [27] used a deep CNN with very high-resolution RGB digital imagery to estimate the aboveground biomass of wheat, achieving a high coefficient of determination (R2 = 0.808) and a low NRMSE (24.95%) [27]. Karila et al. [51] used a drone with RGB and hyperspectral sensors to estimate grass sward quality and quantity, comparing multiple deep neural networks, including a CNN, with the random forest method. Overall, the CNN model (NRMSE = 21%) estimated aboveground grass biomass better than the random forest model, and it performed most consistently when hyperspectral data were used rather than RGB data alone. Varela et al. [52] predicted various key traits of Miscanthus grass, including aboveground biomass, using UAV imagery and two CNNs. The 2D CNN, which used multispectral input from a single image, achieved a best R2 of 0.59 with an RMSE of 180 g, whereas the 3D CNN, which used multispectral and multi-temporal input, produced a higher R2 of 0.69 and an RMSE of 149 g.

Numerous studies have estimated grassland biomass using remote sensing and machine learning in a southern African context; however, none of them used CNNs for biomass prediction [16]. For example, Ramoelo and Cho [53] estimated dry season aboveground grass biomass with the random forest algorithm, comparing Landsat 8 and RapidEye data. They used only band reflectance data, stating that VIs are not always suitable for dry season biomass estimation because the vegetation lacks “greenness” [53]. With random forest, RapidEye yielded better results (R2 = 0.86, RMSE = 13.42 g/m2, RRMSE = 10.61%) than Landsat 8 (R2 = 0.81, RMSE = 15.79 g/m2, RRMSE = 12.49%).

Shoko et al. [24] used sparse partial least squares regression (SPLSR) to estimate grass biomass in the Drakensberg from three satellites, namely Sentinel-2 MSI, Landsat 8 OLI, and WorldView-2. They used seven spectral bands from Landsat 8, ten from Sentinel-2, eight from WorldView-2, and various VIs. WorldView-2-derived variables yielded the best predictive accuracies (R2 between 0.71 and 0.83; RMSE between 6.92% and 9.84%), followed by Sentinel-2 (R2 between 0.6 and 0.79; RMSE between 7.66% and 14.66%) and lastly Landsat 8 (R2 between 0.52 and 0.71; RMSE between 9.07% and 19.88%). Vundla et al. [11] assessed the aboveground biomass of grasslands in the Eastern Cape using Sentinel-2 MSI and partial least squares regression (PLSR), with the visible, red-edge, and shortwave infrared bands, NDVI, and simple ratio (SR) as input data. The PLSR performed well in estimating grass biomass, with an R2 of 0.83 and an RMSE of 19.11 g/m2.

A sensitivity analysis was conducted for both models to determine which spectral bands and VIs were most important for estimating aboveground grass biomass. Importance was discerned by examining the correlation between aboveground biomass and the spectral and VI values [47]. For both models, the vegetation indices and spectral bands proved to be relatively accurate proxies for aboveground grass biomass, which agrees with Pang et al. [54]. This contrasts with the suggestion of Ramoelo and Cho [53] that vegetation indices may be unsuitable for dry season grass biomass estimation because of grass senescence, and that spectral data alone yield better results in the dry season. For both the ANN and CNN models, five bands (blue, green, red, SWIR2, SWIR1), nine VIs (GNDVI, CGM, ARVI, NDVI, MSR, SAVI, TDVI, SR, GBNDVI), and six red edge VIs (GCI, ARI, NDRE8a, NDRE3, NDRE2, RECI) were considered important for model development. Other studies have also shown that using spectral data and VIs together improves biomass predictions compared with using either independently [24,43,47,54].

Comparing the sensitivity analysis results of this study with those of similar biomass studies shows that direct comparisons cannot readily be made, because of the diversity of platforms and machine learning algorithms used. Shoko et al. [24], using Sentinel-2 MSI and SPLSR, found that SWIR1, SWIR2, green, red, and red edge 1 were the most important spectral variables, whereas NDRE1 and NDVI were the most important VIs for predicting grass biomass. The important spectral bands they identified overlap considerably with those in this study; however, this study identified substantially more significant VIs. Vundla et al. [11], using Sentinel-2 MSI and PLSR, found that simple ratio VIs had the highest importance, whilst NDVI had the lowest importance for assessing grass biomass. Their findings on the simple ratio VIs contradict those of this study, in which the simple ratio VI was of lesser importance in both the ANN and CNN models. However, NDVI was of reduced significance in both studies.

NDVI, a widely used VI in remote sensing, had only a moderate impact on biomass estimation for both models in this study, and a lesser impact than several other VIs. This concurs with Deb et al. [44], who also found that other VIs produced better biomass estimates than NDVI when paired with neural networks. Ramoelo and Cho [53] suggest that NDVI is susceptible to grass senescence during the dry season and tends to underestimate biomass, because NDVI essentially measures vegetation “greenness”, which is largely absent from grasses during the dry season [53]. Deb et al. [44] further noted that NDVI is sensitive to variations in atmospheric conditions, soil background, plant phenology, and external disturbances, which limits its efficacy in estimating biomass.
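The “greenness” argument becomes concrete when NDVI is written out. NDVI contrasts near-infrared and red reflectance as (NIR - Red)/(NIR + Red) [39]. The sketch assumes Sentinel-2 B8 (NIR) and B4 (red) surface reflectance scaled to 0-1. The reflectance pairs in the usage note are illustrative values for green and senescent grass, not measurements from this study.

```python
import numpy as np


def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red), computed element-wise.

    Inputs are reflectance values or arrays (assumed: Sentinel-2 B8 and B4,
    scaled to 0-1). Pixels where NIR + Red == 0 are returned as NaN
    instead of raising a division warning.
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    with np.errstate(divide="ignore", invalid="ignore"):
        out = (nir - red) / denom
    return np.where(denom == 0, np.nan, out)
```

For a hypothetical green pixel (NIR 0.40, red 0.05) and a senescent pixel (NIR 0.30, red 0.15), `ndvi([0.40, 0.30], [0.05, 0.15])` gives about 0.78 and 0.33. Senescence raises red reflectance and lowers NIR reflectance, so the index drops sharply even where dry standing biomass remains.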

No known studies to date have used Sentinel-2 data with CNNs to assess aboveground biomass; hence, the CNN sensitivity analysis results cannot be directly compared with previous work. The findings of this study come closest to those of Li et al. [47], who predicted aboveground grass biomass using Sentinel-2 MSI with RF and XGBoost algorithms and found that GNDVI and GCI were the most important variables for developing the RF model. GNDVI and GCI were also high-impact variables in this study for both the ANN and CNN models. According to Dusseux et al. [55], GNDVI is well documented and widely used in vegetation biomass studies. GCI and other red-edge-based VIs have also proven particularly useful in biomass studies because of their close relationship with chlorophyll content and the nutrients present in plant cells [11,55]. Ramoelo and Cho [53] found that blue, green, and red-edge spectral data were important for predicting aboveground grass biomass during the dry season, albeit using RapidEye and RF. This study also shows the importance of the blue and green bands; however, the shortwave infrared bands were more significant than the red-edge bands in this study.
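GNDVI and GCI, the two indices highlighted both by Li et al. [47] and in this study, can be sketched as follows. GNDVI is commonly defined as (NIR - Green)/(NIR + Green) and GCI as NIR/Green - 1. The sketch assumes Sentinel-2 B8 as NIR and B3 as green, scaled to 0-1 reflectance, with non-zero green reflectance. The exact band combinations used in this study are not restated here, and some implementations substitute a red-edge or narrow-NIR band (e.g. B8A). The reflectance values in the usage note are hypothetical.

```python
import numpy as np


def gndvi(nir, green):
    """Green NDVI: (NIR - Green) / (NIR + Green), element-wise."""
    nir = np.asarray(nir, dtype=float)
    green = np.asarray(green, dtype=float)
    return (nir - green) / (nir + green)


def gci(nir, green):
    """Green Chlorophyll Index: NIR / Green - 1, element-wise.

    Unlike the normalised-difference form, GCI is unbounded above, so it keeps
    responding where NDVI-type indices flatten out at high chlorophyll content.
    """
    nir = np.asarray(nir, dtype=float)
    green = np.asarray(green, dtype=float)
    return nir / green - 1.0


b8 = np.array([0.40, 0.30])  # hypothetical NIR reflectance
b3 = np.array([0.08, 0.10])  # hypothetical green reflectance
```

With these values, `gndvi(b8, b3)` gives about 0.67 and 0.50, and `gci(b8, b3)` gives 4.0 and 2.0. Both indices rank the pixels the same way, but the ratio-based GCI spreads them further apart.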

Compared with the abovementioned studies, the performance of both NNs in this study compares favourably. However, it must be acknowledged that no machine learning algorithm used in remote sensing is a universal tool for classifying or predicting biophysical attributes [49]. Each has its own strengths and limitations, which depend on factors such as sensor type and spatial, temporal, and spectral resolution [3]. Dong et al. [49] state that both CNNs and ANNs are highly sensitive to architecture and parameter settings; hence, these must be tuned carefully to avoid poor model performance. A poorly configured model may underfit, giving poor predictive ability, or overfit, fitting the training data so closely that it generalises poorly to unseen data [8]. Neural networks are known for their tendency to overfit; hence, preventive measures must be built into the data processing workflow to mitigate this [1].
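Early stopping is one common safeguard against overfitting. Training halts once the validation error has stopped improving for a set number of epochs, and the model from the best epoch is kept. The sketch below is a generic, framework-free illustration, not the procedure used in this study. The class and function names, the patience value and the validation-loss curve are hypothetical.

```python
from typing import Optional, Sequence, Tuple


class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0) -> None:
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # minimum decrease that counts as improvement
        self.best_loss = float("inf")
        self.best_epoch: Optional[int] = None
        self.wait = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch   # weights from this epoch would be kept
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


def replay(val_losses: Sequence[float], patience: int = 3) -> Tuple[EarlyStopping, Optional[int]]:
    """Feed a recorded validation-loss curve through the stopper.

    Stands in for a real training loop; returns the stopper and the epoch at
    which training would halt (None if the criterion never triggers).
    """
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.step(epoch, loss):
            return stopper, epoch
    return stopper, None
```

For a hypothetical curve `[10.0, 8.0, 6.5, 6.0, 6.2, 6.4, 6.9, 7.5]`, where validation loss falls and then rises as the network starts to memorise the training set, `replay` stops at epoch 6 and reports epoch 3 (loss 6.0) as the one to keep.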

Furthermore, both types of neural networks used in this study are computationally demanding and time-consuming to train [49]. Other machine learning techniques, such as random forest, are better suited than neural networks to small sample sizes or limited input data, and these factors must be taken into account [1]. It must also be noted that CNNs perform better with multi-temporal spatial data (3D CNNs) than with single-date imagery (2D CNNs) [52]. The use of CNNs in remote sensing applications is still gaining momentum; hence, future research is needed to optimise the algorithm for vegetation remote sensing [8]. Much of the focus of CNNs in vegetation remote sensing has been on object identification and classification, whereas pixel-wise (semantic segmentation-style) applications that predict continuous variables, such as biomass and leaf area index (LAI), must be explored further [8]. It is unlikely that CNNs will replace ANNs altogether in remote sensing, as both have advantages and disadvantages and the suitability of each is case-specific [1].
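The distinction between 2D and 3D CNNs drawn here lies in how the convolution kernel moves through the data. In one common formulation, a 2D kernel spans all spectral bands of a single-date image and slides over space only. A 3D kernel additionally slides along the time axis of a multi-temporal stack, so it can learn spatio-temporal patterns. The sketch is a naive, single-kernel, no-padding NumPy illustration, not the architecture used in this study. The band count and array shapes in the tests are hypothetical. It relies on `numpy.lib.stride_tricks.sliding_window_view`, available from NumPy 1.20.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-kernel 2D convolution (cross-correlation, no padding).

    image:  (bands, H, W) single-date stack
    kernel: (bands, kh, kw); it spans every band and slides over space only.
    Returns a (H - kh + 1, W - kw + 1) feature map.
    """
    _, kh, kw = kernel.shape
    windows = sliding_window_view(image, (kh, kw), axis=(1, 2))
    # windows: (bands, H', W', kh, kw); sum over bands and the kernel extent
    return np.einsum("bhwij,bij->hw", windows, kernel)


def conv3d_valid(cube: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-kernel 3D convolution over a multi-temporal stack.

    cube:   (bands, T, H, W) time series of images
    kernel: (bands, kt, kh, kw); it slides over time as well as space.
    Returns a (T - kt + 1, H - kh + 1, W - kw + 1) feature volume.
    """
    _, kt, kh, kw = kernel.shape
    windows = sliding_window_view(cube, (kt, kh, kw), axis=(1, 2, 3))
    # windows: (bands, T', H', W', kt, kh, kw); sum over bands and the kernel extent
    return np.einsum("bthwijk,bijk->thw", windows, kernel)
```

The output of the 3D version keeps a time dimension, so later layers can still combine information across acquisition dates. The 2D version has already collapsed the image to a single spatial map at the first layer.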

The findings of this study suggest that remote sensing combined with deep learning algorithms such as CNNs may have substantial implications for monitoring and managing grasslands and their ecological functions. Numerous studies have investigated grasslands; however, these are often conducted at small spatial scales and fail to cover a broad range of environmental conditions [56]. High-resolution imagery and machine learning offer a robust, data-driven approach to assessing grassland functions at much larger spatial scales [56]. Furthermore, such techniques can potentially be applied to grasslands with varying biophysical conditions, such as soil types, topography, climatic conditions, and management intensities [56]. Proxy data such as species richness, biomass, and abundance may be derived using such techniques [57]. This would significantly assist the development of tools for end users such as farmers and ecologists, ultimately improving the management and sustainable use of grassland ecosystems [57].

5. Conclusions

This study compared the performance of two neural networks in estimating grass aboveground biomass using Sentinel-2 spaceborne spectral data and derived vegetation indices. The primary objective was to determine which neural network better predicts grass biomass using open-access, freely available satellite data. The findings suggest that the deep learning CNN outperforms the traditional ANN, although both algorithms predicted grass biomass satisfactorily. This study can be considered pilot-scale research, particularly in a southern African context, as no known research has compared the performance of two different neural networks in grassland monitoring. Each algorithm has pros and cons, with the need for large training datasets and long computation times being common disadvantages. Nevertheless, this study demonstrates the considerable potential of CNNs for future remote sensing research. Future work could build on this study by incorporating larger training datasets and multi-temporal, higher-resolution data to enhance the performance of CNNs in biophysical remote sensing. The CNN model developed in this study can be considered effective for accurate biomass estimation in grassland monitoring and illustrates the advancement of applied deep learning.

Author Contributions

Conceptualisation, M.I.V., R.L., O.M. and K.P.; Methodology, R.L. and K.P.; Software, M.I.V., R.L., O.M. and K.P.; Validation, M.I.V.; Formal analysis, M.I.V., R.L. and K.P.; Investigation, M.I.V., R.L. and K.P.; Resources, O.M. and M.S.; Writing—original draft, M.I.V.; Writing—review & editing, M.I.V., R.L., O.M., K.P. and M.S.; Supervision, R.L. and O.M.; Funding acquisition, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

The Water Research Commission (WRC) of South Africa is acknowledged for partial funding through WRC Project No. CON2020/2021-00490, “Geospatial modelling of rangelands productivity in water-limited environments of South Africa”. The research was also funded by the National Research Foundation of South Africa (Grant Numbers: MND210517601900, 127354 and 114898).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors would like to thank Timothy Dube, John Odindi and Tafadzwanashe Mabhaudhi for their valuable input.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Ali, I.; Greifeneder, F.; Stamenkovic, J.; Neumann, M.; Notarnicola, C. Review of Machine Learning Approaches for Biomass and Soil Moisture Retrievals from Remote Sensing Data. Remote Sens. 2015, 7, 16398–16421.

- Mutanga, O.; Dube, T.; Ahmed, F. Progress in Remote Sensing: Vegetation Monitoring in South Africa. South Afr. Geogr. J. 2016, 98, 461–471.

- Das, M.; Ghosh, S.K.; Chowdary, V.M.; Mitra, P.; Rijal, S. Statistical and Machine Learning Models for Remote Sensing Data Mining—Recent Advancements. Remote Sens. 2022, 14, 1906.

- Mas, J.F.; Flores, J.J. The Application of Artificial Neural Networks to the Analysis of Remotely Sensed Data. Int. J. Remote Sens. 2008, 29, 617–663.

- Jensen, R.R.; Hardin, P.J.; Yu, G. Artificial Neural Networks and Remote Sensing. Geogr. Compass 2009, 3, 630–646.

- Liu, X.; Han, F.; Ghazali, K.H.; Mohamed, I.I.; Zhao, Y. A Review of Convolutional Neural Networks in Remote Sensing Image. In Proceedings of the 2019 8th International Conference on Software and Computer Applications, Penang, Malaysia, 19–21 February 2019; pp. 263–267.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in Vegetation Remote Sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Brodrick, P.G.; Davies, A.B.; Asner, G.P. Uncovering Ecological Patterns with Convolutional Neural Networks. Trends Ecol. Evol. 2019, 34, 734–745.

- Palmer, A.; Short, A.; Yunusa, I.A. Biomass Production and Water Use Efficiency of Grassland in KwaZulu-Natal, South Africa. Afr. J. Range Forage Sci. 2010, 27, 163–169.

- Vundla, T.; Mutanga, O.; Sibanda, M. Quantifying Grass Productivity Using Remotely Sensed Data: An Assessment of Grassland Restoration Benefits. Afr. J. Range Forage Sci. 2020, 37, 247–256.

- Clementini, C.; Pomente, A.; Latini, D.; Kanamaru, H.; Vuolo, M.R.; Heureux, A.; Fujisawa, M.; Schiavon, G.; Del Frate, F. Long-term Grass Biomass Estimation of Pastures from Satellite Data. Remote Sens. 2020, 12, 2160.

- Hoffman, T.; Vogel, C. Climate Change Impacts on African Rangelands. Rangelands 2008, 30, 12–17.

- Soussana, J.-F.; Tallec, T.; Blanfort, V. Mitigating the Greenhouse Gas Balance of Ruminant Production Systems through Carbon Sequestration in Grasslands. Animal 2010, 4, 334–350.

- Wei, J.; Cheng, J.; Li, W.; Liu, W. Comparing the Effect of Naturally Restored Forest and Grassland on Carbon Sequestration and Its Vertical Distribution in the Chinese Loess Plateau. PLoS ONE 2012, 7, e40123.

- Masenyama, A.; Mutanga, O.; Dube, T.; Bangira, T.; Sibanda, M.; Mabhaudhi, T. A Systematic Review on the Use of Remote Sensing Technologies in Quantifying Grasslands Ecosystem Services. GIScience Remote Sens. 2022, 59, 1000–1025.

- Sibanda, M.; Mutanga, O.; Dube, T.; Mabhaudhi, T. Quantitative Assessment of Grassland Foliar Moisture Parameters as an Inference on Rangeland Condition in the Mesic Rangelands of Southern Africa. Int. J. Remote Sens. 2021, 42, 1474–1491.

- Fynn, R.; Morris, C.; Ward, D.; Kirkman, K. Trait–Environment Relations for Dominant Grasses in South African Mesic Grassland Support a General Leaf Economic Model. J. Veg. Sci. 2011, 22, 528–540.

- Scott-Shaw, R.; Morris, C.D. Grazing Depletes Forb Species Diversity in the Mesic Grasslands of KwaZulu-Natal, South Africa. Afr. J. Range Forage Sci. 2015, 32, 21–31.

- Masemola, C.; Cho, M.A.; Ramoelo, A. Sentinel-2 Time Series Based Optimal Features and Time Window for Mapping Invasive Australian Native Acacia Species in KwaZulu Natal, South Africa. Int. J. Appl. Earth Obs. Geoinf. 2020, 93, 102207.

- Roffe, S.J.; Fitchett, J.M.; Curtis, C.J. Determining the Utility of a Percentile-Based Wet-Season Start- and End-Date Metrics across South Africa. Theor. Appl. Climatol. 2020, 140, 1331–1347.

- EOS Data Analytics. Landviewer. Available online: https://eos.com/products/landviewer/ (accessed on 21 October 2021).

- Main-Knorn, M.; Pflug, B.; Louis, J.; Debaecker, V.; Müller-Wilm, U.; Gascon, F. Sen2Cor for Sentinel-2. In Proceedings of the Image and Signal Processing for Remote Sensing XXIII, Warsaw, Poland, 11–13 September 2017; p. 1042704.

- Shoko, C.; Mutanga, O.; Dube, T.; Slotow, R. Characterizing the Spatio-Temporal Variations of C3 and C4 Dominated Grasslands Aboveground Biomass in the Drakensberg, South Africa. Int. J. Appl. Earth Obs. Geoinf. 2018, 68, 51–60.

- EOS Data Analytics. Sentinel 2 Satellite Images. Available online: https://eos.com/find-satellite/sentinel-2/ (accessed on 21 October 2021).

- Royimani, L.; Mutanga, O.; Odindi, J.; Sibanda, M.; Chamane, S. Determining the Onset of Autumn Grass Senescence in Subtropical Sour-Veld Grasslands Using Remote Sensing Proxies and the Breakpoint Approach. Ecol. Inform. 2022, 69, 101651.

- Ma, J.; Li, Y.; Chen, Y.; Du, K.; Zheng, F.; Zhang, L.; Sun, Z. Estimating above Ground Biomass of Winter Wheat at Early Growth Stages Using Digital Images and Deep Convolutional Neural Network. Eur. J. Agron. 2019, 103, 117–129.

- ESRI. Available online: www.esri.com (accessed on 18 October 2021).

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the Radiometric and Biophysical Performance of the MODIS Vegetation Indices. Remote Sens. Environ. 2002, 83, 195–213.

- Huete, A.R. A Soil-Adjusted Vegetation Index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Roujean, J.-L.; Breon, F.-M. Estimating PAR Absorbed by Vegetation from Bidirectional Reflectance Measurements. Remote Sens. Environ. 1995, 51, 375–384.

- Chen, J.M. Evaluation of Vegetation Indices and a Modified Simple Ratio for Boreal Applications. Can. J. Remote Sens. 1996, 22, 229–242.

- Fernández-Manso, A.; Fernández-Manso, O.; Quintano, C. Sentinel-2A Red-Edge Spectral Indices Suitability for Discriminating Burn Severity. Int. J. Appl. Earth Obs. Geoinf. 2016, 50, 170–175.

- Santoso, H.; Gunawan, T.; Jatmiko, R.H.; Darmosarkoro, W.; Minasny, B. Mapping and Identifying Basal Stem Rot Disease in Oil Palms in North Sumatra with QuickBird Imagery. Precis. Agric. 2011, 12, 233–248.

- Gitelson, A.A.; Merzlyak, M.N. Remote Estimation of Chlorophyll Content in Higher Plant Leaves. Int. J. Remote Sens. 1997, 18, 2691–2697.

- Gamon, J.; Surfus, J. Assessing Leaf Pigment Content and Activity with a Reflectometer. New Phytol. 1999, 143, 105–117.

- Kaufman, Y.J.; Tanre, D. Strategy for Direct and Indirect Methods for Correcting the Aerosol Effect on Remote Sensing: From AVHRR to EOS-MODIS. Remote Sens. Environ. 1996, 55, 65–79.

- Bannari, A.; Asalhi, H.; Teillet, P.M. Transformed Difference Vegetation Index (TDVI) for Vegetation Cover Mapping. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Toronto, ON, Canada, 24–28 June 2002; pp. 3053–3055.

- Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Guerini Filho, M.; Kuplich, T.M.; Quadros, F.L.D. Estimating Natural Grassland Biomass by Vegetation Indices Using Sentinel 2 Remote Sensing Data. Int. J. Remote Sens. 2020, 41, 2861–2876.

- Kobayashi, N.; Tani, H.; Wang, X.; Sonobe, R. Crop Classification Using Spectral Indices Derived from Sentinel-2A Imagery. J. Inf. Telecommun. 2020, 4, 67–90.

- Clevers, J.G.; Gitelson, A.A. Remote Estimation of Crop and Grass Chlorophyll and Nitrogen Content Using Red-Edge Bands on Sentinel-2 and -3. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 344–351.

- Yang, S.; Feng, Q.; Liang, T.; Liu, B.; Zhang, W.; Xie, H. Modeling Grassland Above-Ground Biomass Based on Artificial Neural Network and Remote Sensing in the Three-River Headwaters Region. Remote Sens. Environ. 2018, 204, 448–455.

- Deb, D.; Singh, J.; Deb, S.; Datta, D.; Ghosh, A.; Chaurasia, R. An Alternative Approach for Estimating above Ground Biomass Using Resourcesat-2 Satellite Data and Artificial Neural Network in Bundelkhand Region of India. Environ. Monit. Assess. 2017, 189, 576.

- Pires de Lima, R.; Marfurt, K. Convolutional Neural Network for Remote-Sensing Scene Classification: Transfer Learning Analysis. Remote Sens. 2020, 12, 86.

- Schreiber, L.V.; Atkinson Amorim, J.G.; Guimarães, L.; Motta Matos, D.; Maciel da Costa, C.; Parraga, A. Above-ground Biomass Wheat Estimation: Deep Learning with UAV-based RGB Images. Appl. Artif. Intell. 2022, 36, 2055392.

- Li, C.; Zhou, L.; Xu, W. Estimating Aboveground Biomass Using Sentinel-2 MSI Data and Ensemble Algorithms for Grassland in the Shengjin Lake Wetland, China. Remote Sens. 2021, 13, 1595.

- Ali, I.; Cawkwell, F.; Dwyer, E.; Green, S. Modeling Managed Grassland Biomass Estimation by Using Multitemporal Remote Sensing Data—A Machine Learning Approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 3254–3264.

- Dong, L.; Du, H.; Han, N.; Li, X.; Zhu, D.e.; Mao, F.; Zhang, M.; Zheng, J.; Liu, H.; Huang, Z. Application of Convolutional Neural Network on Lei Bamboo above-Ground-Biomass (AGB) Estimation Using WorldView-2. Remote Sens. 2020, 12, 958.

- Xie, Y.; Sha, Z.; Yu, M.; Bai, Y.; Zhang, L. A Comparison of Two Models with Landsat Data for Estimating above ground Grassland Biomass in Inner Mongolia, China. Ecol. Model. 2009, 220, 1810–1818.

- Karila, K.; Alves Oliveira, R.; Ek, J.; Kaivosoja, J.; Koivumäki, N.; Korhonen, P.; Niemeläinen, O.; Nyholm, L.; Näsi, R.; Pölönen, I. Estimating Grass Sward Quality and Quantity Parameters Using Drone Remote Sensing with Deep Neural Networks. Remote Sens. 2022, 14, 2692.

- Varela, S.; Zheng, X.-Y.; Njuguna, J.; Sacks, E.; Allen, D.; Ruhter, J.; Leakey, A.D. Deep Convolutional Neural Networks Exploit High Spatial and Temporal Resolution Aerial Imagery to Predict Key Traits in Miscanthus. AgriRxiv 2022, 20220405560.

- Ramoelo, A.; Cho, M.A. Dry Season Biomass Estimation as an Indicator of Rangeland Quantity Using Multi-Scale Remote Sensing Data. 2014. Available online: http://pta-dspace-dmz.csir.co.za/dspace/handle/10204/7852 (accessed on 26 October 2021).

- Pang, H.; Zhang, A.; Kang, X.; He, N.; Dong, G. Estimation of the Grassland Aboveground Biomass of the Inner Mongolia Plateau Using the Simulated Spectra of Sentinel-2 Images. Remote Sens. 2020, 12, 4155.

- Dusseux, P.; Guyet, T.; Pattier, P.; Barbier, V.; Nicolas, H. Monitoring of Grassland Productivity Using Sentinel-2 Remote Sensing Data. Int. J. Appl. Earth Obs. Geoinf. 2022, 111, 102843.

- Muro, J.; Linstädter, A.; Magdon, P.; Wöllauer, S.; Männer, F.A.; Schwarz, L.-M.; Ghazaryan, G.; Schultz, J.; Malenovský, Z.; Dubovyk, O. Predicting Plant Biomass and Species Richness in Temperate Grasslands across Regions, Time, and Land Management with Remote Sensing and Deep Learning. Remote Sens. Environ. 2022, 282, 113262.

- Ali, I.; Cawkwell, F.; Dwyer, E.; Barrett, B.; Green, S. Satellite Remote Sensing of Grasslands: From Observation to Management. J. Plant Ecol. 2016, 9, 649–671.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).