Abstract

This exploratory study used thematic analysis to examine students’ experiences with, and use of, AI tools in educational settings. We surveyed 87 undergraduates from two education courses at a comprehensive university in Western Canada. We identified nine themes that represent AI’s role in student learning and key issues with respect to AI. The study yielded three critical insights: the potential of AI to expand educational access for a diverse student body, the necessity for robust ethical frameworks to govern AI, and the benefits of personalized AI-driven support. Based on the results, we propose a model, along with recommendations, for an optimal learning environment in which AI facilitates meaningful learning. We argue that integrating AI tools into learning has the potential to promote inclusivity by making learning more accessible to diverse students. We also advocate for a shift in perception among educational stakeholders towards AI, calling for de-stigmatization of its use in education. Overall, our findings suggest that academic institutions should establish clear, empirically grounded guidelines defining what is considered appropriate AI use by students.

1. Introduction

In just two years since the public launch of ChatGPT in 2022, we have witnessed how artificial intelligence (AI) is changing the landscape of teaching and learning ranging from self-regulation and personalized learning to pedagogical shifts across various disciplines [1,2,3]. With this rapid technological advancement, it is crucial to evaluate whether AI technologies employed in learning environments are effectively addressing the diverse needs of various stakeholders. This inquiry is both timely and critical, reflecting the global nature of education and the growing emphasis on digital literacy and personalized learning experiences. Several researchers have claimed that AI has the potential to increase student engagement through interactive learning, improve knowledge acquisition by tailoring materials to individual learners, and develop writing skills through timely feedback [4,5,6]. However, it remains unclear how AI systems can create an equitable, inclusive, sustainable, and accommodating learning environment. In other words, the integration of AI tools raises important questions about equity, inclusivity, and potential biases embedded within these existing AI systems [7,8,9].

The rapid integration of AI in education has brought with it an urgent need to address ethical implications. As AI capabilities expand, particularly in the development of large language models (LLMs) for educational applications, concerns about academic integrity, data biases, and the trial-and-error nature of AI are significant [9,10,11]. A key question is whether the use of AI in education can align with ethical considerations that address the needs of various stakeholders, including students, teachers, and policymakers, as well as the limitations of the academic environment [12,13]. Recent efforts to review AI integration in higher education involve establishing guidelines for more specific aspects of AI conduct, such as “fairness”, “accountability”, “transparency”, and “ethics”, collectively referred to as the “FATE” framework [10,14,15].

The concept of fairness is rooted in both social and technical facets in AI contexts, reflected in mitigating biases in statistical and algorithmic processes to make fair decisions that do not lead to discriminatory or unjust consequences [16,17]. Accountability is addressed both at the technical level, concerning the explicability of automated decision-making processes [18], and at the social level, regarding who should be held accountable for these decisions and under what circumstances [19,20,21]. Transparency refers to the capacity to make clear both an AI system’s internal processes and the institutional policies and motivations behind its use. The understanding that learners develop about how these technologies function significantly influences their engagement, conceptualization, and skill development. Ethics, a broader concept than fairness, accountability, and transparency, draws on fundamental principles such as those set out in the Belmont Report [22] to guide AI development, highlighting that ethical AI should respect human autonomy, prioritize benefits over risks, and ensure equitable distribution of both its benefits and risks [20]. While “fairness” has been relatively comprehensively defined, crucial aspects of “accountability” and “transparency” remain underdeveloped in the existing literature on AI in education [10]. This study investigates students’ perceptions of AI’s role in academic learning, exploring both its benefits and challenges. It aims to contribute to the sustainable integration of AI in higher education, with an emphasis on the importance of fair, transparent, accountable, and ethical AI applications.

2. Literature Review

Two primary narratives prevail regarding AI in education: the inevitability of AI adoption and the potential for AI to alter the traditional role of teachers [23]. The authors explored how AI might shift accountability within educational practices and examined the impact of AI on learning and student–teacher relationships. Recent studies (e.g., [24,25,26]) have indicated that students generally perceive AI tools as useful and engaging for assisting with personal tasks, providing immediate feedback, and offering tailored support for academic tasks. On the other hand, students are also concerned about the accuracy and reliability of the information provided by AI chatbots, and about the potential negative impacts of AI applications on their learning processes, critical thinking, and creativity [25].

Although AI technologies offer the potential for adaptive learning opportunities and efficient learning management [19], realizing this potential hinges on the responsible and ethical use of these technologies. The rapid growth of AI technology has given rise to concerns related to bias, lack of transparency, and the need for clear accountability. Addressing the accountability of AI in education requires attention at both the macro and micro levels, considering diverse legal, regulatory, and moral frameworks [10,27]. As AI systems grow more autonomous, accountability becomes a central issue, raising questions about who is responsible for AI-induced errors or harm. The integration of AI into teaching practices fundamentally shifts the dynamics of accountability.

The issue of transparency gains particular significance when considering the risk of reinforcing stereotypes and marginalizing individuals from diverse cultural and linguistic backgrounds [28,29]. Transparency encompasses not only technical aspects, but also ethical considerations related to student data, consent, and the right to seek redress [30]. By connecting the transparency principle to how students use AI in their learning process, educators can find ways to foster more equitable and accountable AI systems in education. To align with the overarching goal of promoting transparency in AI-supported learning experiences, it is essential to provide comprehensive information to end users [27] and openly communicate the benefits and drawbacks of AI applications [20].

To address issues such as bias and fairness, lack of transparency, and the challenges associated with accountability, ref. [31] proposes strategies for transparency, such as the implementation of explainable AI (XAI), advocating for open data sharing, and embracing ethical AI frameworks. Promoting fairness in AI algorithms can involve the use of fairness metrics, diverse training data, and continuous monitoring for iterative improvement.

Ethical lapses in research can damage the broader scientific community [32]. Despite the crucial need for ethical and responsible use of AI in education, comprehensive analyses in this area remain scarce [33]. In a systematic review of algorithm aversion, ref. [34] emphasized that AI systems should support but not replace human judgment, asserting that final decision-making authority must always reside with humans. They advocated for ethical AI design in educational technology (AIED), the development of ethics curricula for AIED, and the establishment of policies to ensure data security, reliability, and transparency. Ref. [35] proposed four core ethical principles to govern AI usage in education: beneficence, accountability, justice, and respect for human values. The literature also highlights the importance of adhering to ethical standards that respect student autonomy and privacy, addressing ethical challenges in personalized learning environments, and safeguarding both educational integrity and students’ personal data [24,36,37,38].

Educators and students who engage with AI tools enter a symbiotic relationship with them, sharing responsibility for understanding and using these tools [23]. This shift demands a deeper understanding of the capabilities and limitations of both educators and AI systems, ensuring that transparency and accountability are clearly defined and appropriately managed. Both constructs are fundamental for building trust among stakeholders in the ecosystem of AI-supported teaching and learning. Students’ awareness of ethical issues related to AI usage and their active engagement in the discourse that shapes AI policy development are critical.

In reviewing AI’s role in higher education, ref. [39] examined 146 papers that focus on AI technical applications. They found that while these papers explore various applications, including student profiling for admission and retention, the implementation of intelligent tutoring and personalized learning systems, and the development of automated assessment and feedback mechanisms, they originated from specialized AI, educational technology, or computing journals rather than journals focusing on higher education. They also argued that AI has been conceptualized as a component of “digital colonialism”, reinforcing and extending existing societal inequalities [40]. Given these perspectives, a comprehensive exploration of AI’s intersection with higher education is urgently needed. Understanding learners’ experiences can help us understand the potential biases in their learning processes, extending to the creators of AI systems and potentially resulting in educational inequalities [41].

Recent reviews of AI in education have emphasized the lack of educational perspectives in AI research, development, or implementation [39,42]. To address the gap, this study, which focuses on student perspectives, provides valuable insights into the challenges and opportunities of integrating AI in education. In this context, this research aims to contribute to the ongoing conversation about integrating AI into higher education, focusing particularly on accountability, transparency, and ethics considerations in AI educational technologies from the student perspective. Building on the FATE framework, we aim to develop an exploratory yet evidence-based conceptual framework that stresses the shared responsibility of both AI systems and educators in shaping an inclusive, adaptable, and ethical learning environment. Through this exploration, our goal is to contribute to an educational digital transformation that is not only inclusive but also accountable, ensuring fair benefits for learners from all backgrounds. Our focus is on student perspectives on the impact of AI technologies, such as chatbots, on their learning outcomes, especially in terms of engagement with learning materials, knowledge acquisition, and skill development. We also assess how current AI tools, for instance, Grammarly and ChatGPT, are viewed in terms of their inclusivity and adaptability to the varied cultural and linguistic backgrounds of students. We address three research questions:

- RQ1: How do students perceive the impact of AI technologies on their learning experiences?
- RQ2: To what extent do AI technologies address the diverse needs of students from various cultural and linguistic backgrounds?
- RQ3: What are some of the challenges in integrating AI in learning from students’ perspectives, and how can AI technologies be optimized to better support individual learning?

3. Materials and Methods

3.1. Participants and Context

Data were collected from a total of 87 undergraduate students enrolled in two distinct online courses at a comprehensive university located in western Canada. Fifty-eight students were enrolled in a third-year elective education course on learning communities, and twenty-nine education students intending to pursue teacher education were enrolled in a second-year mandatory course on research methodology in education. Both courses spanned 13 weeks, during which students engaged in various educational activities, including writing proposals for educational podcasts and research methodology, participating in online discussions and group projects, and completing quizzes and exams. University academic integrity policies were clearly stated in course syllabi, with the research methodology course explicitly permitting the use of AI as a learning aid without violating academic integrity principles.

3.2. Procedure and Instrument

All 87 students participated in an anonymous end-of-course survey eliciting their experiences with the AI tools they had used in their online courses. The survey, which was worth a maximum of 2% of the course grade depending on the depth of responses, was part of a broader initiative by the online learning hub at the university to enhance students’ learning experiences in online courses. Given its aim to improve future course offerings and program curricula, the initiative received an exemption from the institutional research ethics board.

The survey was administered through the Canvas learning management system during the twelfth week of the courses, aligning with the completion of the core curriculum. This timing allowed students to reflect on their overall course experience and use of AI tools. The survey instrument used in the study consisted of five open-ended questions, as detailed in Table 1. These survey questions were devised to investigate students’ utilization and perception of AI tools in their course learning, and to explore the intersection of AI technologies and students’ learning experience in higher education.

Table 1. The five open-ended survey questions.

We situated the five survey questions within the context of constructivism. Constructivism posits that learning is an active, contextual process in which learners construct knowledge based on their experiences and interactions with AI technologies [43]. By promoting reflection and meaning-making, which are fundamental to the constructivist approach to learning, the questions aimed to capture how students made sense of their experiences, to encourage them to expand on their thoughts and personal interpretations of AI in educational settings, and to prompt them to consider the context in which AI influenced their learning experiences. Drawing on the constructivist theoretical framework, the survey questions were formulated to provide insights into the effective integration of AI tools, such as ChatGPT or other generative AI tools, in educational settings.

Methodologically, the open-ended nature of the five survey items was meant to encourage in-depth and personal responses, thus providing rich qualitative data for analysis [44]. Participant responses were analyzed using thematic analysis [45]. This methodology aimed to gain a comprehensive understanding of the participants’ views and experiences, thereby contributing valuable exploratory insights into the role and impact of AI technologies in educational settings.

3.3. Data Analysis

Our data collection and analysis followed grounded theory conventions [46,47,48] and employed thematic analysis as the analytical approach. Data were extracted verbatim from Canvas, a learning management system, without collecting student information. The thematic analysis adhered to the six phases outlined by [45]. Two researchers began with a detailed examination of each response, noting down initial ideas about features. The entire project team held regular meetings to discuss features of the data to develop initial codes, calibrate and make necessary adjustments, and reach a consensus, ensuring rigour and consistency in the coding process. We then collated the codes into potential themes, which were defined collectively to reflect the context and relate to the research questions. This collaborative approach allowed us to identify and refine themes that encapsulate key aspects of students’ experiences with AI technologies [49,50].

4. Results

4.1. Codes and Emergent Themes

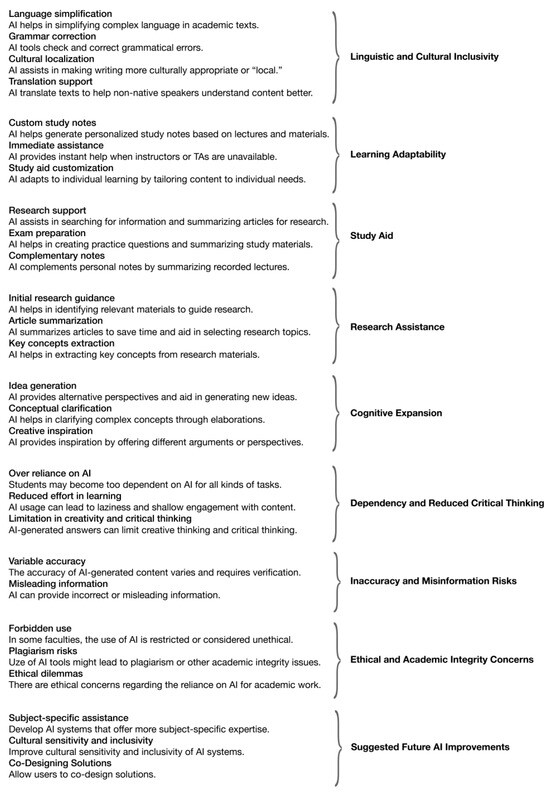

From the 87 student responses, we identified 27 initial codes across 298 quotes, which included both individual sentences and groups of sentences as meaning units representing the codes. We created a thematic structure diagram including each code and its description (Figure 1) and then merged these codes by drawing connections between them, resulting in nine themes that represent students’ perceptions of using AI tools in learning (Table 2).

Figure 1. Codes and themes based on student responses.

Table 2. Key themes, definitions, and illustrative excerpts from student responses.

4.2. Inter-Rater Reliability and Agreement

Both Cohen’s kappa and percentage agreement were calculated to evaluate coding reliability. Two coders analyzed 13 responses from a total sample of 87 responses. As the unit of observation was at the sentence level based on meaning units, kappa was calculated using quotes identified as representative of the codes. Based on a total of 36 quotes from the 13 responses, the results showed acceptable agreement between the two coders (kappa = 0.845, p < 0.001) with a percentage agreement of 81.66%.
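For readers unfamiliar with the two reliability statistics, the following is a minimal sketch of how percentage agreement and Cohen's kappa can be computed from two coders' labels for the same meaning units. It is not the authors' analysis script: the function names, code labels, and the six example quotes are hypothetical and serve only to illustrate the formulas.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of units to which both coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of units")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(coder_a)
    p_o = percent_agreement(coder_a, coder_b)
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    # Chance agreement: probability that both coders pick the same code
    # if each assigned codes independently at their observed rates.
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_e == 1.0:
        # Both coders used one identical code throughout; kappa is
        # undefined here, so 1.0 is returned by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels for six quotes (illustration only, not study data).
coder_a = ["study_aid", "study_aid", "misinformation",
           "misinformation", "study_aid", "misinformation"]
coder_b = ["study_aid", "study_aid", "misinformation",
           "study_aid", "study_aid", "misinformation"]

agreement = percent_agreement(coder_a, coder_b)
kappa = cohens_kappa(coder_a, coder_b)
print(round(agreement, 2))  # 0.83
print(round(kappa, 2))      # 0.67
```

In this toy example the coders agree on five of six quotes, and kappa is lower than raw agreement because half of that agreement would be expected by chance given how often each coder used each code.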

4.3. Theme Patterns and Connections

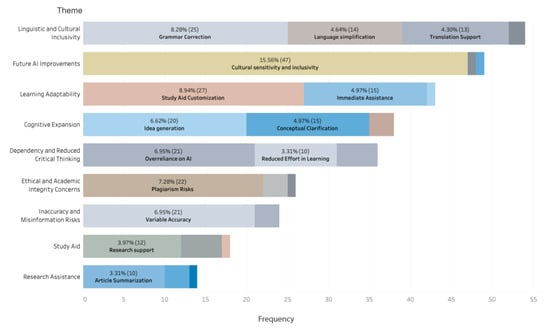

As shown in Figure 2, the most prominent themes, making up over 50% of all responses, highlight the positive impact of AI tools on linguistic and cultural inclusivity, learning adaptability, and cognitive expansion. Participants noted that AI tools support non-English speakers by correcting grammar in their writing, simplifying language for better understanding, and providing translation when needed. They appreciated how AI tools could adapt to their individual needs and offer immediate help when instructors were unavailable. Many students found AI helpful for generating ideas, clarifying concepts, and facilitating their brainstorming and research process. Some also valued AI as a study aid and research assistant. While recognizing the benefits of using AI tools, some students also expressed concerns about potential negative consequences of the over-reliance on AI, plagiarism, and the risk of misinformation. For future improvements, the highest percentage of responses (15.56%) suggested enhancing AI technologies to be free from cultural bias and improving their inclusivity. One student emphasized that AI tools should be more subject-specific to better support learning, while another highlighted the importance of involving users in the development process to co-design solutions.

Figure 2. Frequency of AI-related themes in student responses.
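The theme frequencies summarized above can be derived by mapping each coded quote to its theme and computing each theme's share of all coded quotes. The sketch below illustrates that calculation under stated assumptions: the code names, the code-to-theme mapping, and the sample data are hypothetical (only the theme names are taken from the section headings), and the study's actual tooling is not described in the text.

```python
from collections import Counter

# Hypothetical code-to-theme mapping (a small subset for illustration).
CODE_TO_THEME = {
    "grammar_correction": "Linguistic and cultural inclusivity",
    "translation": "Linguistic and cultural inclusivity",
    "concept_simplification": "Learning adaptability",
    "lecture_summaries": "Study aid",
    "over_reliance": "Dependency and reduced critical thinking",
}


def theme_shares(coded_quotes):
    """Return each theme's percentage share of all coded quotes."""
    counts = Counter(CODE_TO_THEME[code] for code in coded_quotes)
    total = sum(counts.values())
    return {theme: round(100 * n / total, 2) for theme, n in counts.most_common()}


# Hypothetical coded quotes, one code per quote (not study data).
coded_quotes = [
    "grammar_correction", "translation", "concept_simplification",
    "lecture_summaries", "over_reliance", "grammar_correction",
    "concept_simplification", "translation",
]

shares = theme_shares(coded_quotes)
for theme, share in shares.items():
    print(f"{theme}: {share}%")
```

Here the share is taken over quotes rather than over respondents; if a response can contribute several quotes, the two denominators give different percentages, so the choice should be stated alongside any reported frequency.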

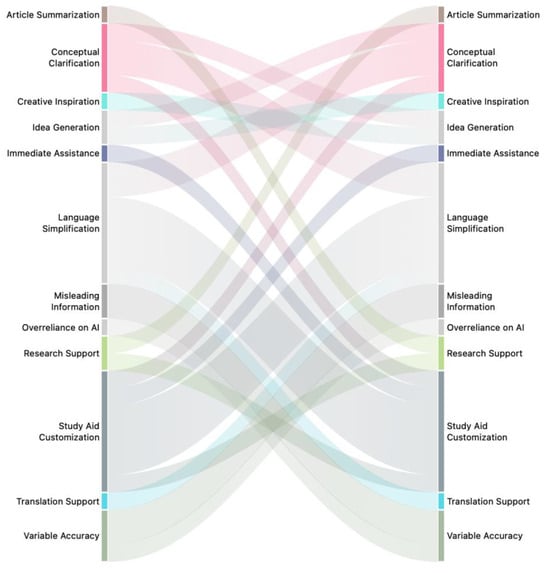

The code co-occurrence analysis results presented in the Sankey diagram in Figure 3 indicate that codes within the themes of linguistic and cultural inclusivity, learning adaptability, cognitive expansion, research assistance, and study aid tended to co-occur. This pattern highlights students’ top needs for adaptive support in conceptual understanding, research, and cognitive development. Language simplification plays a critical role in clarifying concepts and adapting to individual learning needs. Students expressed concerns about inaccurate and misleading information provided by ChatGPT and were cautious about potential negative consequences from over-reliance on AI tools, such as reduced learning efforts and diminished creativity and critical thinking skills.

Figure 3. Code co-occurrence Sankey diagram.
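A code co-occurrence analysis of this kind can be approximated by counting how often each pair of codes is assigned within the same unit. The sketch below assumes, for illustration, that the unit is a single student response; the text does not specify the unit or the software used, and the code names and sample data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def code_cooccurrence(responses):
    """Count how often each unordered pair of codes appears in the same response."""
    pairs = Counter()
    for codes in responses:
        # Sorting gives every pair a canonical order, so (a, b) and (b, a)
        # are counted together; set() ignores repeated codes in a response.
        for a, b in combinations(sorted(set(codes)), 2):
            pairs[(a, b)] += 1
    return pairs


# Hypothetical set of codes assigned to each of three responses (not study data).
responses = [
    {"language_simplification", "concept_clarification"},
    {"language_simplification", "concept_clarification", "idea_generation"},
    {"idea_generation", "misinformation"},
]

pairs = code_cooccurrence(responses)
for (a, b), n in pairs.most_common():
    print(f"{a} <-> {b}: {n}")
```

The resulting pair counts are the kind of weighted links a Sankey or network diagram would draw, with thicker links for pairs that co-occur more often.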

4.4. Enhancing Learning and Research Capacities

4.4.1. Linguistic and Cultural Inclusivity

Most students mentioned that AI technologies are particularly beneficial for students who speak English as an additional language (EAL). These technologies help EAL students learn more effectively by simplifying and clarifying academic materials, which often contain complex theoretical concepts, making the academic content more accessible and comprehensible. As both courses are writing-intensive, AI technologies support EAL learners by mitigating the language barrier. Students stated that AI technologies could be used to “correct some mistakes, especially grammar”, “polish our writing assignments”, “help us enhance our writing”, and “give many suggestions to make my articles more ‘local’”. Some expressed that although AI was not specifically designed for diverse students, they believed it could still be a beneficial tool for their learning: “While I do not believe they were designed for the catering of diverse students, such as those from cultural/linguistic backgrounds, I believe they are largely beneficial for this population”.

Acknowledging the linguistic support of AI, students pointed out the potential limitations of AI to be culturally inclusive: “Even while ChatGPT is flexible in its language usage, it might not fully understand linguistic or cultural quirks”. They emphasized that “culturally speaking, AI technologies may be more well fitted to western and Eurocentric research as some information such as stories in Indigenous culture are not available online”, and “enhancements ought to concentrate on integrating a wider range of cultural, linguistic, and socio-political environments, guaranteeing that AI reactions are not only precise but also culturally aware and inclusive of different points of view”.

Students expressed concerns about inclusivity in other areas, noting that those with disabilities, such as blindness, might be excluded due to the lack of voice recording features in AI technologies. They highlighted socio-economic barriers, observing that, “AI tools are mainly available to students of the upper or middle class who have access to technology”, while “some students may not be able to afford it”. Despite these concerns, some students were enthusiastic about the use of AI in education for inclusivity: “I think giving opportunities in the learning environment to work with AI is a step in the right direction to make these technologies more inclusive!”.

4.4.2. Learning Adaptability

AI can play a tutor’s role by simplifying intricate academic concepts, thus making learning more adaptable for students with diverse learning needs. Students highlighted AI’s potential in personalizing their learning experience by explaining ideas “like I am 10” or “breaking down any complex queries …to comprehend and process”. They noted benefits in understanding their learning materials better: “When doing readings sometimes I struggle understanding words or sentences. When I need help, asking ChatGPT to simplify the words or sentences really helps me understand the course material better. This would help the diverse needs of all students because it could help students get a better understanding of the material”. They appreciated the adaptability of learning through AI, “I value how AI technologies—chatbots in particular—have helped my education as a student. These resources offer me great access, making it possible for me to get help and information whenever I need it, which makes learning more adaptable and effective. The personalization feature is helpful because chatbots adjust recommendations and content based on my unique learning style, which makes the course material more relevant to me”.

Students also suggested further improving the adaptability of AI technologies by providing “choices on how to customize their learning, such as how the material is presented, how they acquire it, and what kinds of assessments they can take”, and “multiple ways of input and output, such as touch, gesture, voice, text, image and sound. This can cater to the needs to a much more diverse community of students”.

4.4.3. Study Aid

AI chatbots are known for their capabilities to transcribe and summarize audio recordings and extract key points. Some students effectively utilize this technology as a study aid to streamline their note-taking process and ensure they capture essential lecture content: “AI Chatbots… I would voice record the lecture and use Rewind to summarize the voice lecture and give me some essential notes”. Students also appreciated the efficiency AI technologies provide: “Personally, I do not see any benefits of watching an hour-long video when I can read the highlights/notes in 10–15 min. AI does have the ability to make what would be an unnecessarily long process into something that is more efficient for the students. Reading is a much faster process than watching a video so students would be able to get more done in a smaller amount of time”.

To prepare for exams and practice writing skills, students used AI to generate practice questions: “When studying for an exam, … generate me some questions that I would answer it myself onto the AI chatbot. It will mark me whether I am right or wrong and give me explanations on why it was wrong”. Some students used AI to improve their writing skills by generating writing prompts: “AI platforms like ChatGPT can give prompts for questions you have asked it and these prompts can be great ways to practice writing paragraphs on the topic you study”.

4.4.4. Research Assistance

A majority of students agreed that AI can be used to assist academic research. Specifically, AI is utilized for initial research guidance, summarizing articles, and improving academic writing, especially for non-native English speakers. Students used AI chatbots to enhance efficiency, understanding, and decision-making in their research process: “If I do see an article, after reading the introduction myself, I can input that article into an AI chatbot to help me summarize it for me and do a simple explanation so I can decide whether that article will be useful to me or not”. University students often have heavy course loads. By quickly assessing an article’s relevance, they were able to prioritize their reading and allocate time more effectively.

Some students expressed concerns that overreliance on AI could reduce the depth of their research experience: “It has reduced many of the steps of academic research. This would deprive us of the real experience of the research process”. They were also aware that “an over-reliance on AI for education may impede the growth of analytical and problem-solving abilities”.

4.4.5. Cognitive Expansion

Exposure to AI as an innovative technology stimulated students’ interest in the broader implications of AI technology: “I was able to research more into that realm of AI that connects to learning and how it can impact the human experience and our brain”. The example highlights students’ curiosity about the potential impacts of AI on learning and cognition. Students noted that AI, particularly ChatGPT, has broadened their perspectives and enhanced their thinking, enabling them to “understand concepts and from many different perspectives”, “brainstorm ideas”, “provide diverse perspectives to challenge and broaden the person’s lens of the world”, and “expand the scope of thinking”.

4.5. Challenges and Risks Associated with AI in Education

4.5.1. Dependency and Reduced Critical Thinking

While students found AI technologies to be helpful in personalizing their learning experience, they also recognized potential drawbacks. Some students expressed concerns about an over-reliance on AI, which could potentially lead to a decline in critical thinking and research skills: “We can easily become dependent on artificial intelligence. This can also lead to laziness in thinking or digging deeper into the problem”. They also cautioned about demotivation: “AI is able to produce so much in so little time that students may start to question why they need to try so hard. Thus, jeopardizing their motivation to learn”. Some students were proactive in self-monitoring to avoid over-dependence on technology for idea generation, aiming “to grow my own writing and critical thinking skills, which I won’t be able to do as efficiently with the use of AI technologies”. They also cautioned that “learners may rely too heavily on automated solutions without fully understanding the underlying concepts”, and noted the risk of diminished intellectual engagement: “if it’s being used to generate ideas or ‘think’ … it starts to become a problem”.

4.5.2. Inaccuracy and Misinformation Risks

Using AI as a learning tool, students also recognized the risks associated with misinformation: “The level of accuracy varies from one AI system to another. Especially when the student does not understand the context needed to get the answer given, the student will not learn or will be taught wrong information without knowing that it is wrong”. Concerns about the sources of information were also raised: “I don’t know where it takes its information from, so it can actually be inaccurate or plagiarized, so it is not necessarily good to just blindly trust what it generates either”. They stressed the importance of fact-checking and verifying primary sources: “AI sometimes provides false information which requires me to be critical and verify the information by myself”, and noted, “…and the sporadic inaccuracies, which serve as a reminder to me of the value of cross-referencing material”. Some students suggested improving AI interactions by clarifying the context in prompts: “They might provide inaccurate or irrelevant information if the context is not clear”.

4.5.3. Ethical and Academic Integrity Concerns

Academic integrity emerged as a significant theme in the data. Students were aware that it was “academically dishonest to use ideas or writing that was generated by AI technology and claim it as your own”. Concerns were voiced about the impact of AI on originality and academic honesty: “With the rise of AI technologies in education, there are things that are academically wrong, such as having ChatGPT write an essay for you or using it to answer questions on tests or quizzes”. Some viewed AI as “dangerous because it can quickly result in academic dishonesty or be used to cheat”, while others noted a worrying trend: “It scares me because I would only think of the negatives—potential cheating, the sole reliance on AI for academic purposes, etc.”.

Conversely, some students expressed anxiety over the issue of false alarm and its impact on their well-being: “I find that since many educators are aware of this new technology, it is more likely for people to be flagged for plagiarism or AI use. … this is a point that scares me a little bit when submitting assignments. Will educators think my work was created by AI, when in reality it was not? I think there is no way to know for sure, which is a bit stressful as a student”.

4.6. Improving AI: Inclusivity, Specialization, and User Co-Design

Students suggested enhancing AI outputs by ensuring that the training data include a broader range of cultural and socioeconomic perspectives to reduce biases and enhance inclusivity: “Diversify training data, incorporating various cultural, linguistic, and socioeconomic perspectives to mitigate biases”. They also suggested that AI systems could offer more subject-specific support and provide detailed assistance for complex subjects like mathematics: “Another way to improve would be having specific AI’s depending on the subject. For example, if we need help solving a math equation then asking an AI specialized in that area would be more helpful instead of a general answer”. Finally, students expressed interest in taking part in co-designing AI solutions: “I would also recommend adding in a new system where it primarily focuses on understanding diverse user communities. This involves empathizing with users to understand their needs and challenges, and co-designing solutions with them, not for them”.

4.7. A Model for Enhanced Learning and Ethical Engagement with AI

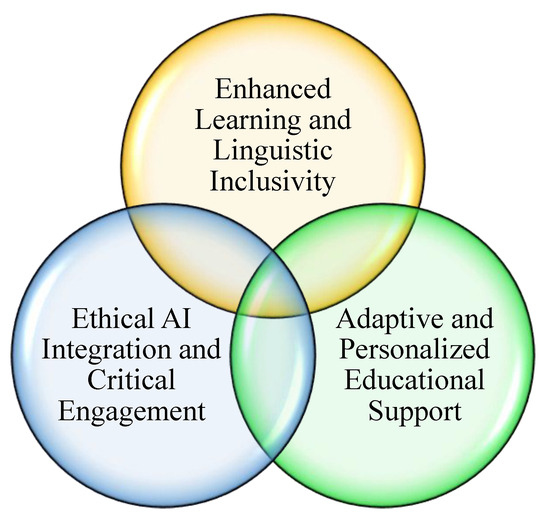

We further consolidated the nine themes into three main categories to conceptualize an ideal ecosystem of AI use from students’ perspective (Figure 4): (1) enhanced learning and linguistic inclusivity, (2) ethical AI integration and critical engagement, and (3) adaptive and personalized educational support.

Figure 4. A refined model representing an ideal ecosystem of AI use from student perspectives.

The theme of “enhanced learning and linguistic inclusivity” captures how AI improves the learning experience by including learners from different linguistic backgrounds and delivering personalized content for more effective learning. It highlights AI’s role in breaking down language barriers, simplifying complex concepts, and providing customized support for research and academic writing. In relation to equitable educational practice, this theme emphasizes that AI tools have the potential to adapt to diverse linguistic backgrounds and learning contexts, enhancing understanding and efficiency in education. However, despite the potential of AI to enhance learning for diverse students, there are ongoing concerns, captured by the theme of “ethical AI integration and critical engagement”. These concerns revolve around dependency, misinformation, and academic integrity in education. The theme emphasizes the need for AI tools to be designed and used in a way that encourages critical thinking and ethical use, while also ensuring the accuracy and reliability of the information provided. It advocates for a balanced approach where AI complements traditional educational methods, encouraging students to engage critically with content and develop independent learning skills. The third theme, “adaptive and personalized educational support”, focuses on AI’s ability to adapt and personalize education. It encompasses AI’s role as a study aid and its capacity to provide customized learning experiences based on individual learner needs and preferences. The theme advocates for flexible and responsive AI technologies that support each student’s unique educational situation and journey, and it further highlights the importance of AI in accommodating diverse learning paths and catering to specific educational goals.
The intersection of the three themes in Figure 4 represents the optimal boundary students should navigate while using AI for learning.

5. Discussion

The investigation into student perceptions of AI technologies in education revealed nuanced views, characterized by appreciation for AI’s utility and concerns about its implications. We have identified nine primary themes from student survey data surrounding the use of AI in education, which were further refined into three core interrelated themes in a conceptual framework guiding the integration of AI technologies in higher education. We encourage educational stakeholders to prioritize: (1) enhanced learning and linguistic inclusivity, (2) ethical AI integration and critical engagement, and (3) adaptive and personalized educational support. The convergence of the three themes, as illustrated in Figure 4, depicts an optimal, idealized learning boundary for AI-facilitated learning. This model envisions a pedagogical ecosystem where AI not only adapts to individual linguistic and learning needs but also operates within an ethical framework that encourages students’ active, reflective, and informed participation in the learning process. As the findings of this study highlight, students should be guided not only to use AI tools as a study aid, tutor, and research support, but also to evaluate and challenge the role of these technologies in their education. This critical engagement requires students to reflect on how AI affects their learning, to consider how they can use it more effectively to meet individual learning needs, and to remain aware of its limitations and ethical risks.

The findings of this study reveal the dual nature of AI in educational contexts. On the one hand, AI technologies like chatbots are praised for enhancing understanding, assisting in research, and creating study aids. Our results showed that, from the student perspective, integrating AI in classroom teaching and learning is likely to enhance inclusivity, as these tools make learning more accessible for students from diverse cultural backgrounds. On the other hand, concerns about misinformation, dependency, and ethical issues present challenges that need to be addressed. These findings directly relate to the FATE framework, specifically the principles of accountability and transparency. Our findings imply a need for a balanced approach to implementing AI in education. This approach should take advantage of its benefits, such as personalized tutoring and adaptive learning, while also carefully addressing ethical concerns. Interestingly, the study further reveals a gap in cultural and linguistic responsiveness in AI. While AI shows potential in language translation and support for multilingual students (i.e., students who are learning English as an additional language), it often falls short in understanding and valuing cultural nuances, which corroborates findings from previous research [28,29,51]. Challenges such as bias in training data, which may focus disproportionately on certain demographics, and reliance on data of mixed quality in generative models highlight some of the current issues [5]. This underscores the importance of developing genuinely inclusive AI technologies that better address diverse students, particularly in terms of cultural sensitivity [52,53].

Overall, the emergent themes underscore the need for AI tools that democratize education, accompanied by clear ethical guidelines for students on using AI in their learning. With the increasing use of AI by students, particularly those from culturally diverse groups, there is growing tension surrounding the use of AI technologies in learning due to concerns regarding academic integrity and plagiarism [54]. Our findings showed that students view AI positively as a helpful learning tool and study aid while also recognizing its negative implications, such as limited inclusivity, ethical concerns, and overreliance on AI technology. From a disciplinary standpoint, AI can potentially assist emerging writers in their writing and learning [1,55,56]. We urge educators and policymakers to refrain from categorically stigmatizing students or viewing the use of AI as inherently unethical, provided it is employed within clearly defined academic guidelines.

Enhancing AI technologies for education may best be achieved by soliciting feedback from educational communities and incorporating theoretical, conceptual, practical, and empirical insights from educational research into development [55,57]. It is time to begin to accept the rapidly evolving landscape of educational practices and incorporate these changes into our current educational methodologies [5]. Student participation is essential in providing valuable data for innovating AI technologies. More research is needed in this area to investigate the use of generative AI in education and its positive and negative impact on the learning process.

The integration of AI must be critically examined and guided by ethical principles to avoid student misconduct. It is crucial that students be engaged not just as passive recipients of personalized education but as active participants in a discourse on the ethical use of AI in their learning. Currently, students’ voices in AI’s impact on academic integrity are underrepresented [58]. Our research offers valuable insights directly from students’ perspectives. The optimal ecosystem model we propose represents an ideal state where AI’s adaptability, ethical conduct, and support for personalized learning act systematically to empower students and enhance learning. This triadic intersection offers a guiding principle for educational stakeholders and policymakers, ensuring that AI tools are not only effective but also equitable and responsible.

Consequently, it is essential for academic policies and guidelines to explicitly delineate what is considered appropriate and inappropriate use of AI. In cases where students cross these boundaries, educators should not label them as dishonest AI users seeking to gain academic advantage; instead, they should provide additional educational opportunities for students to understand these limits more comprehensively and holistically. This approach serves as a preventative strategy against future academic infractions. Given this, we recommend that AI technologies be accessible to all learners, regardless of socioeconomic status, thereby contributing to educational inclusivity and accessibility [3,59]. This requires a concerted effort to create AI solutions that are not only advanced but also financially and practically accessible to a broader student population [52,53,54,60].

These themes relate to sustainability in education through various dimensions that impact individual learning processes and the broader educational ecosystem. Linguistic inclusivity and learning adaptability, by mitigating language barriers and providing personalized learning experiences, enable students’ equitable access to educational resources, aligning with sustainable education principles that emphasize accessibility and inclusivity [61]. AI as a study aid promotes efficiency in learning and research processes, reducing the time and resources spent on obtaining and understanding information, promoting more sustainable use of academic resources and enhancing learner autonomy. Sustainable education requires a balanced environment that fosters students’ ability to engage deeply and think independently. Maintaining the accuracy and reliability of educational tools is crucial for preserving the integrity and sustainability of educational outcomes. Ethical considerations, including risks of plagiarism and the inappropriate use of AI, raise concerns about the sustainability of educational practices. Promoting ethical use and understanding the limitations of AI tools is crucial to upholding academic integrity and sustainable educational practices. Overall, these themes reflect a complex interplay between enhancing and potentially undermining sustainability in education. The key to leveraging these AI-related advantages while mitigating risks lies in balanced integration, ethical usage guidelines, and ongoing evaluation of their impact on educational practices and outcomes.

6. Conclusions

In the current exploratory study, we aimed to develop a conceptual framework that emphasizes the shared responsibility of AI systems and educators in creating an inclusive and adaptable learning environment. We have identified nine themes related to students’ use of AI tools in their educational experience. Our study reveals three key insights: the potential for AI to enhance accessibility and learning for a diverse student body, the necessity for robust ethical guidelines to govern AI use, and the effectiveness of AI in providing personalized educational support. A key outcome of our analysis is the conceptualization of an optimal model representing an idealized ecosystem that supports student learning while also encouraging ethical engagement with AI as a learning companion for personalized education. We urge educational stakeholders to destigmatize AI use among students. Our empirically driven model suggests that institutions and academic policies should clearly define what constitutes appropriate AI use to guide students in ethically navigating the boundaries in their learning process.

While illuminating key issues related to students’ use of AI, our study has limitations. Our findings are primarily based on self-report data from students and should be read as an initial exploration that can guide future research in this field. The analysis was based on data collected from two education courses, which limits the generalizability of the findings. Future studies should gather perspectives from a more diverse student population using more rigorous sampling methods and involve other stakeholders engaged in integrating AI for educational purposes, to provide a more comprehensive understanding of the role of AI in supporting effective and equitable education.

Author Contributions

Conceptualization, D.H.C., M.P.-C.L., E.P. and A.L.L.; methodology, D.H.C. and M.P.-C.L.; validation, D.H.C., M.P.-C.L. and A.L.L.; formal analysis, D.H.C., M.P.-C.L. and A.L.L.; investigation, D.H.C.; writing—original draft preparation, D.H.C. and M.P.-C.L.; writing—review and editing, E.P., M.C., D.H.C., M.P.-C.L. and A.L.L.; visualization, A.L.L.; supervision, M.P.-C.L.; project administration, D.H.C. and M.P.-C.L.; funding acquisition, D.H.C. and M.P.-C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by MSVU New Scholar’s Grant 42-0-140442 (Michael Lin) and the Social Sciences and Humanities Research Council of Canada (SSHRC), 430-2024-00269 (Michael Lin), and 430-2023-00368 (Daniel Chang). The APC was funded by two separate SSHRC Insight Development Grants from Michael Lin and Daniel Chang, respectively.

Institutional Review Board Statement

The study adhered to the Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans (TCPS 2). Given that the study focused on collecting student experiences to inform teaching feedback and ensure quality assurance of online courses, it qualified as an exemption under Article 2.5 of the TCPS 2 guidelines. The study was reviewed and exempted from a full REB review by Simon Fraser University [Study Number: 30001484].

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the last author due to institutional regulations regarding research ethics and student data confidentiality.

Acknowledgments

We thank the journal editor and all the anonymous reviewers for offering feedback. All names/courses/institution names have been masked to ensure confidentiality.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chang, D.H.; Lin, M.P.-C.; Hajian, S.; Wang, Q.Q. Educational design principles of using AI chatbot that supports self-regulated learning in education: Goal setting, feedback, and personalization. Sustainability 2023, 15, 12921.

- Kılınç, S. Embracing the future of distance science education: Opportunities and challenges of ChatGPT integration. Asian J. Distance Educ. 2023, 18, 205–237.

- Lin, M.P.C.; Chang, D. CHAT-ACTS: A pedagogical framework for personalized chatbot to enhance active learning and self-regulated learning. Comput. Educ. Artif. Intell. 2023, 5, 100167.

- Atlas, S. ChatGPT for Higher Education and Professional Development: A Guide to Conversational AI. 2023. Available online: https://digitalcommons.uri.edu/cgi/viewcontent.cgi?article=1547&context=cba_facpubs (accessed on 30 September 2024).

- Baidoo-Anu, D.; Ansah, L.O. Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. J. AI 2023, 7, 52–62.

- Grassini, S. Shaping the future of education: Exploring the potential and consequences of AI and ChatGPT in educational settings. Educ. Sci. 2023, 13, 692.

- Chi, N.; Lurie, E.; Mulligan, D.K. Reconfiguring diversity and inclusion for AI ethics. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, Association for Computing Machinery, Virtual, 19–21 May 2021; pp. 447–457.

- Holmes, W.; Porayska-Pomsta, K.; Holstein, K.; Sutherland, E.; Baker, T.; Shum, S.B.; Santos, O.C.; Rodrigo, M.T.; Cukurova, M.; Bittencourt, I.I.; et al. Ethics of AI in education: Towards a community-wide framework. Int. J. Artif. Intell. Educ. 2022, 32, 504–526.

- Jobin, A.; Ienca, M.; Vayena, E. The global landscape of AI ethics guidelines. Nat. Mach. Intell. 2019, 1, 389–399.

- Memarian, B.; Doleck, T. Fairness, Accountability, Transparency, and Ethics (FATE) in Artificial Intelligence (AI) and higher education: A systematic review. Comput. Educ. Artif. Intell. 2023, 5, 100152.

- Raji, I.D.; Scheuerman, M.K.; Amironesei, R. You can’t sit with us: Exclusionary pedagogy in AI ethics education. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Association for Computing Machinery, Virtual, 3–10 March 2021; pp. 515–525.

- Borenstein, J.; Howard, A. Emerging challenges in AI and the need for AI ethics education. AI Ethics 2021, 1, 61–65.

- Sikdar, S.; Lemmerich, F.; Strohmaier, M. GetFair: Generalized fairness tuning of classification models. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, Association for Computing Machinery, Seoul, Republic of Korea, 21–24 June 2022; pp. 289–299.

- Khosravi, H.; Shum, S.B.; Chen, G.; Conati, C.; Tsai, Y.-S.; Kay, J.; Knight, S.; Martinez-Maldonado, R.; Sadiq, S.; Gašević, D. Explainable artificial intelligence in education. Comput. Educ. Artif. Intell. 2022, 3, 100074.

- Woolf, B. Introduction to IJAIED special issue, FATE in AIED. Int. J. Artif. Intell. Educ. 2022, 32, 501–503.

- Islam, S.R.; Russell, I.; Eberle, W.; Dicheva, D. Incorporating the concepts of fairness and bias into an undergraduate computer science course to promote fair automated decision systems. In Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 2, Providence, RI, USA, 3–5 March 2022; Association for Computing Machinery: New York, NY, USA, 2022; p. 1075.

- Li, C.; Xing, W.; Leite, W. Using fair AI to predict students’ math learning outcomes in an online platform. Interact. Learn. Environ. 2024, 32, 1117–1136.

- Shin, D.; Rasul, A.; Fotiadis, A. Why am I seeing this? Deconstructing algorithm literacy through the lens of users. Internet Res. 2022, 32, 1214–1234.

- Beerkens, M. An evolution of performance data in higher education governance: A path towards a ‘big data’ era? Qual. High. Educ. 2022, 28, 29–49.

- Kong, S.-C.; Cheung, W.M.-Y.; Zhang, G. Evaluating an Artificial Intelligence Literacy Programme for Developing University Students’ Conceptual Understanding, Literacy, Empowerment and Ethical Awareness. Educ. Technol. Soc. 2023, 26, 16–30.

- Prinsloo, P.; Slade, S. An elephant in the learning analytics room: The obligation to act. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, in LAK ’17, Vancouver, BC, Canada, 13–17 March 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 46–55.

- Friesen, P.; Kearns, L.; Redman, B.; Caplan, A.L. Rethinking the Belmont report? Am. J. Bioeth. 2017, 17, 15–21.

- Bearman, M.; Ryan, J.; Ajjawi, R. Discourses of artificial intelligence in higher education: A critical literature review. High. Educ. 2023, 86, 369–385.

- Ayeni, O.O.; Al Hamad, N.M.; Chisom, O.N.; Osawaru, B.; Adewusi, O.E. AI in education: A review of personalized learning and educational technology. GSC Adv. Res. Rev. 2024, 18, 261–271.

- Schei, O.M.; Møgelvang, A.; Ludvigsen, K. Perceptions and use of AI chatbots among students in higher education: A scoping review of empirical studies. Educ. Sci. 2024, 14, 922.

- Wang, K.; Ruan, Q.; Zhang, X.; Fu, C.; Duan, B. Pre-service teachers’ GenAI anxiety, technology self-efficacy, and TPACK: Their structural relations with behavioral intention to design GenAI-assisted teaching. Behav. Sci. 2024, 14, 373.

- Pagallo, U. From automation to autonomous systems: A legal phenomenology with problems of accountability. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence, Melbourne, Australia, 19–25 August 2017; IJCAI-17. Sierra, C., Ed.; International Joint Conferences on Artificial Intelligence: Melbourne, Australia, 2017; pp. 17–23.

- Salas-Pilco, S.Z.; Xiao, K.; Oshima, J. Artificial intelligence and new technologies in inclusive education for minority students: A systematic review. Sustainability 2022, 14, 13572.

- Yang, H.; Kyun, S. The current research trend of artificial intelligence in language learning: A systematic empirical literature review from an activity theory perspective. Australas. J. Educ. Technol. 2022, 38, 180–210.

- Ungerer, L.; Slade, S. Ethical considerations of artificial intelligence in learning analytics in distance education contexts. In Learning Analytics in Open and Distributed Learning: Potential and Challenges; Prinsloo, P., Slade, S., Khalil, M., Eds.; Springer Nature Singapore: Singapore, 2022; pp. 105–120.

- Akinrinola, O.; Okoye, C.C.; Ofodile, O.C.; Ugochukwu, C.E. Navigating and reviewing ethical dilemmas in AI development: Strategies for transparency, fairness, and accountability. GSC Adv. Res. Rev. 2024, 18, 050–058.

- Fu, Y.; Weng, Z. Navigating the ethical terrain of AI in education: A systematic review on framing responsible human-centered AI practices. Comput. Educ. Artif. Intell. 2024, 7, 100306.

- Petousi, V.; Sifaki, E. Contextualising harm in the framework of research misconduct. Findings from discourse analysis of scientific publications. Int. J. Sustain. Dev. 2021, 23, 149–174. Available online: https://www.inderscienceonline.com/doi/10.1504/IJSD.2020.115206 (accessed on 30 September 2024).

- Mahmud, H.; Islam, A.K.M.N.; Ahmed, S.I.; Smolander, K. What influences algorithmic decision-making? A systematic literature review on algorithm aversion. Technol. Forecast. Soc. Chang. 2022, 175, 121390.

- Klimova, B.; Pikhart, M.; Kacetl, J. Ethical issues of the use of AI-driven mobile apps for education. Front. Public Health 2023, 10, 1118116.

- Salloum, S.A. AI perils in education: Exploring ethical concerns. In Artificial Intelligence in Education: The Power and Dangers of ChatGPT in the Classroom; Al-Marzouqi, A., Salloum, S.A., Al-Saidat, M., Aburayya, A., Gupta, B., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 669–675.

- Airaj, M. Ethical artificial intelligence for teaching-learning in higher education. Educ. Inf. Technol. 2024.

- Smuha, N.A. Pitfalls and pathways for trustworthy artificial intelligence in education. In The Ethics of Artificial Intelligence in Education; Holmes, W., Porayska-Pomsta, K., Eds.; Routledge: New York, NY, USA, 2022.

- Zawacki-Richter, O.; Marín, V.I.; Bond, M.; Gouverneur, F. Systematic review of research on artificial intelligence applications in higher education—Where are the educators? Int. J. Educ. Technol. High. Educ. 2019, 16, 39.

- Kwet, M. Digital colonialism: US empire and the new imperialism in the Global South. Race Class 2019, 60, 3–26.

- Moran, T.C. Racial technological bias and the white, feminine voice of AI VAs. Commun. Crit./Cult. Stud. 2021, 18, 19–36.

- Antonenko, P.D.; Dawson, K.; Cheng, L.; Wang, J. Using technology to address individual differences in learning. In Handbook of Research in Educational Communications and Technology: Learning Design; Bishop, M.J., Boling, E., Elen, J., Svihla, V., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 99–114.

- Gibson, D.; Kovanovic, V.; Ifenthaler, D.; Dexter, S.; Feng, S. Learning theories for artificial intelligence promoting learning processes. Br. J. Educ. Technol. 2023, 54, 1125–1146.

- Moser, A.; Korstjens, I. Series: Practical guidance to qualitative research. Part 3: Sampling, data collection and analysis. Eur. J. Gen. Pract. 2018, 24, 9–18.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Tie, Y.C.; Birks, M.; Francis, K. Grounded theory research: A design framework for novice researchers. SAGE Open Med. 2019, 7.

- Cohen, L.; Manion, L.; Morrison, K. Research Methods in Education, 7th ed.; Routledge: London, UK, 2011.

- Martin, P.Y.; Turner, B.A. Grounded theory and organizational research. J. Appl. Behav. Sci. 1986, 22, 141–157.

- Holloway, I.; Todres, L. The status of method: Flexibility, consistency and coherence. Qual. Res. 2003, 3, 345–357.

- Nemorin, S.; Vlachidis, A.; Ayerakwa, H.M.; Andriotis, P. AI hyped? A horizon scan of discourse on artificial intelligence in education (AIED) and development. Learn. Media Technol. 2023, 48, 38–51.

- Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for good? On opportunities and challenges of large language models for education. Learn. Individ. Differ. 2023, 103, 102274.

- Holstein, K.; Doroudi, S. Equity and artificial intelligence in education. In The Ethics of Artificial Intelligence in Education; Holmes, W., Porayska-Pomsta, K., Eds.; Routledge: New York, NY, USA, 2022; pp. 151–173.

- Lin, M.P.C.; Chang, D. Exploring inclusivity in AI education: Perceptions and pathways for diverse learners. In Generative Intelligence and Intelligent Tutoring Systems; Sifaleras, A., Lin, F., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 237–249.

- Johnston, H.; Wells, R.F.; Shanks, E.M.; Boey, T.; Parsons, B.N. Student perspectives on the use of generative artificial intelligence technologies in higher education. Int. J. Educ. Integr. 2024, 20, 2.

- Lin, M.P.C.; Chang, D.H.; Winne, P.H. A proposed methodology for investigating student-chatbot interaction patterns in giving peer feedback. Educ. Technol. Res. Dev. 2024.

- Lin, M.P.C.; Chang, D. Enhancing post-secondary writers’ writing skills with a chatbot: A mixed-method classroom study. J. Educ. Technol. Soc. 2020, 23, 78–92.

- Zhang, K.; Aslan, A.B. AI technologies for education: Recent research & future directions. Comput. Educ. Artif. Intell. 2021, 2, 100025.

- Sullivan, M.; Kelly, A.; Mclaughlan, P. ChatGPT in higher education: Considerations for academic integrity and student learning. J. Appl. Learn. Teach. 2023, 6, 31–40.

- Lin, M.P.C. A Proposed Methodology for Investigating Chatbot Effects in Peer Review. Doctoral Dissertation, Simon Fraser University, Burnaby, BC, Canada, 2020. Available online: https://summit.sfu.ca/item/20533 (accessed on 8 September 2024).

- Schiff, D. Education for AI, not AI for education: The role of education and ethics in national AI policy strategies. Int. J. Artif. Intell. Educ. 2022, 32, 527–563.

- Tariq, M.U. Equity and inclusion in learning ecosystems. In Preparing Students for the Future Educational Paradigm; IGI Global: Hershey, PA, USA, 2024; pp. 155–176.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).