Optimal Electric Vehicle Battery Management Using Q-learning for Sustainability

Abstract

1. Introduction

1.1. Problem Statement

- Dynamic adaptation to driving patterns: EVs encounter a wide range of driving conditions, from city traffic to highway speeds. Traditional battery-management systems may struggle to dynamically adjust to these diverse patterns, resulting in suboptimal battery performance and efficiency [11].

- Optimal state-of-charge management: maintaining an optimal state-of-charge is pivotal for extending battery life and ensuring consistent performance. Existing approaches may not effectively balance the energy demands of driving with charging and discharging cycles, leading to challenges in achieving and maintaining an ideal state of charge [1,2].

- Integration of charging infrastructure: The availability and utilization of charging infrastructure significantly influence the charging patterns and overall health of EV batteries. Current battery-management systems may not fully exploit the potential benefits offered by existing infrastructure, limiting the optimization of charging and discharging cycles [1,2].

- Real-time decision making: The advent of machine learning and reinforcement learning opens avenues for real-time decision making based on past experiences. Integrating these advanced techniques into EV battery-management systems has the potential to address challenges posed by dynamic driving conditions and diverse charging scenarios [1,2,13].

- State-of-Charge and State-of-Health Management: the literature in this domain often emphasizes the importance of managing both the state of charge (SoC) and state of health (SoH) of EV batteries. Q-learning frameworks are discussed as a potential solution for balancing the trade-off between maximizing energy usage and minimizing degradation [15,16].

- Dynamic Decision Making in Charging Infrastructure: charging infrastructure plays a crucial role in EV battery management [17]. Some studies investigate how Q-learning algorithms can optimize charging decisions, considering factors such as charging station availability, charging rates, and their impact on battery performance.

- Real-world Data Integration: successful implementation of Q-learning in battery management often involves the use of real-world data [18]. Researchers discuss the challenges and benefits of incorporating actual driving patterns, environmental conditions, and battery characteristics into the learning process to enhance the model’s accuracy.

- Comparative Analysis with Traditional Approaches: researchers have conducted comparative analyses between Q-learning-based battery management and traditional methods [19]. These studies typically highlight the potential improvements in terms of energy efficiency, battery life extension, and overall performance achieved through Q-learning.

- Machine Learning and Battery Management Optimization: beyond Q-learning, there is a broader literature on the application of various machine learning techniques for optimizing EV battery management. Researchers explore different algorithms, including neural networks and other reinforcement learning methods, to address the complexities of battery systems [19].

- Implications for Sustainability and Environmental Impact: some works discuss the broader implications of Q-learning-based battery management for the sustainability of EVs [18,19]. This includes the considerations of a reduced environmental impact through enhanced battery efficiency, potentially contributing to the overall sustainability of electric transportation.

1.2. Research Gap

1.3. Objective of This Paper

1.4. Contribution of This Paper

- Comparative analysis: this analysis offers a broader perspective on both the benefits and potential drawbacks of applying Q-learning within the EV domain, contributing to a deeper understanding of its viability and effectiveness [2].

- The practical implications of this research for EV battery management and sustainable transportation are significant and multifaceted. By employing Q-learning for battery management, we introduce a method that not only optimizes the performance and lifespan of EV batteries but also contributes to broader sustainable transportation goals [1,2,3,4]. In summary, this research offers practical benefits that extend from individual EV users to industry stakeholders and policymakers, fostering a more sustainable and efficient transportation system.

2. Literature Review

2.1. Electric Vehicle Trends

2.2. Electric Vehicle Component

2.2.1. Electric Vehicle Car Component

- Battery pack: this is the main energy-storage system in an EV, characterized by its state of charge (SOC) and state of health (SOH). The SOC indicates the current energy level, while the SOH shows the battery’s degradation over time. Equivalent-circuit models such as the Thevenin model and electrochemical models such as the Doyle–Fuller–Newman model are used to describe the battery’s voltage dynamics.

- Electric motor/generator: this component converts electrical energy from the battery into mechanical energy to propel the vehicle. It is modeled through equations that depict its torque–speed characteristics, linking motor torque, speed, and electrical power input.

- Final power transmission: typically a single-speed transmission or direct drive in EVs, this system transmits power from the motor to the wheels. Its dynamics are simpler than those of internal combustion engine vehicles because it lacks complex gear mechanisms.

- Internal combustion engine (IC engine): absent in pure EVs but present in hybrid variants like HEVs or PHEVs, the IC engine serves as a range extender or additional power source. Its dynamics are described by equations covering fuel consumption, combustion efficiency, and emissions.

- Fuel tank: this is nonexistent in pure EVs but included in hybrids to store gasoline. Its dynamics are governed by equations related to the fuel level, consumption rate, and refueling behaviors.

- Battery dynamics: The battery pack serves as the main energy source, managing energy storage and release. It powers the electric motor during acceleration and recharges during regenerative braking, with critical factors including the state of charge (SOC), state of health (SOH), and internal resistance.

- Electric motor dynamics: The motor converts electrical to mechanical energy, with dynamics governed by torque–speed characteristics, efficiency, and response times. These are influenced by motor design and control algorithms.

- Power electronics dynamics: Inverters and converters control the flow of electricity between the battery and motor, adjusting the voltage and current based on driving conditions. This involves managing switching behavior and thermal management to ensure efficiency.

- Transmission dynamics: EVs range from simple single-speed systems to complex multi-speed or dual-motor setups, with dynamics involving gear shifts and torque distribution.

- Vehicle dynamics: These encompass overall component interaction affecting acceleration, braking, handling, and stability. Influencing factors include weight distribution, tire characteristics, and suspension setup. Control systems like traction and stability control, along with regenerative braking, optimize these dynamics for enhanced safety and efficiency.
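As a rough illustration of the battery-pack description above, the following sketch steps a first-order Thevenin equivalent circuit and tracks SOC by coulomb counting. The class name `TheveninCell`, every parameter value, and the linear open-circuit-voltage curve are illustrative assumptions, not values taken from this study.

```python
import math
from dataclasses import dataclass


@dataclass
class TheveninCell:
    """First-order Thevenin equivalent circuit (all defaults are hypothetical)."""
    capacity_ah: float = 50.0  # nominal capacity in ampere-hours
    r0: float = 0.01           # series resistance in ohms
    r1: float = 0.005          # polarization resistance in ohms
    c1: float = 2000.0         # polarization capacitance in farads
    soc: float = 0.8           # state of charge in [0, 1]
    v_rc: float = 0.0          # voltage across the RC branch in volts

    def ocv(self) -> float:
        # Assumed linear open-circuit voltage: 3.0 V when empty, 4.2 V when full.
        return 3.0 + 1.2 * self.soc

    def step(self, current_a: float, dt_s: float) -> float:
        """Advance the model by dt_s seconds and return the terminal voltage.

        Positive current means discharge, negative current means charge.
        """
        # Coulomb counting: integrate current to update SOC, clamped to [0, 1].
        self.soc -= current_a * dt_s / (self.capacity_ah * 3600.0)
        self.soc = min(1.0, max(0.0, self.soc))
        # Exact discretization of the RC branch for a constant current.
        decay = math.exp(-dt_s / (self.r1 * self.c1))
        self.v_rc = self.v_rc * decay + self.r1 * (1.0 - decay) * current_a
        return self.ocv() - self.v_rc - self.r0 * current_a
```

Calling `TheveninCell().step(50.0, 36.0)` discharges the hypothetical cell at 50 A for 36 s; under these assumptions the terminal voltage sits below the open-circuit voltage during discharge and above it during charge.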

2.2.2. Electric Vehicle Car Modeling Equation

2.2.3. Electric Vehicle Batteries

2.3. EV Battery-Management Studies

2.4. Q-learning in Battery Management

2.5. Fundamentals of Q-learning and Its Suitability for Battery Management

- State–Action–Reward Framework: Q-learning operates on the state–action–reward framework, associating states (e.g., battery SoC and SoH) with actions (e.g., charging, discharging) that yield the highest rewards (e.g., improved energy efficiency). This framework aligns seamlessly with the dynamic nature of EV battery management [60,68].
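One way to picture the state–action–reward framework is a small lookup table indexed by discretized SoC and SoH. The sketch below is a minimal illustration: the bin counts, the three-action set, and the helper names `state_index` and `greedy_action` are assumptions made for the example, not the configuration used in the study.

```python
ACTIONS = ("charge", "discharge", "idle")  # hypothetical action set
N_SOC_BINS = 10                            # hypothetical SoC resolution
N_SOH_BINS = 5                             # hypothetical SoH resolution


def state_index(soc: float, soh: float) -> int:
    """Map continuous SoC and SoH values in [0, 1] to one Q-table row."""
    soc_bin = min(max(int(soc * N_SOC_BINS), 0), N_SOC_BINS - 1)
    soh_bin = min(max(int(soh * N_SOH_BINS), 0), N_SOH_BINS - 1)
    return soc_bin * N_SOH_BINS + soh_bin


# One row per discretized state, one column per action, initialized to zero.
q_table = [[0.0] * len(ACTIONS) for _ in range(N_SOC_BINS * N_SOH_BINS)]


def greedy_action(soc: float, soh: float) -> str:
    """Return the action with the highest Q-value for the current state."""
    row = q_table[state_index(soc, soh)]
    best = max(range(len(row)), key=lambda i: row[i])
    return ACTIONS[best]
```

With this layout the table has 50 rows and 3 columns, and a learned policy is read off by calling `greedy_action` with the current battery measurements.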

2.6. Sustainability in Smart Tourism City Implementation

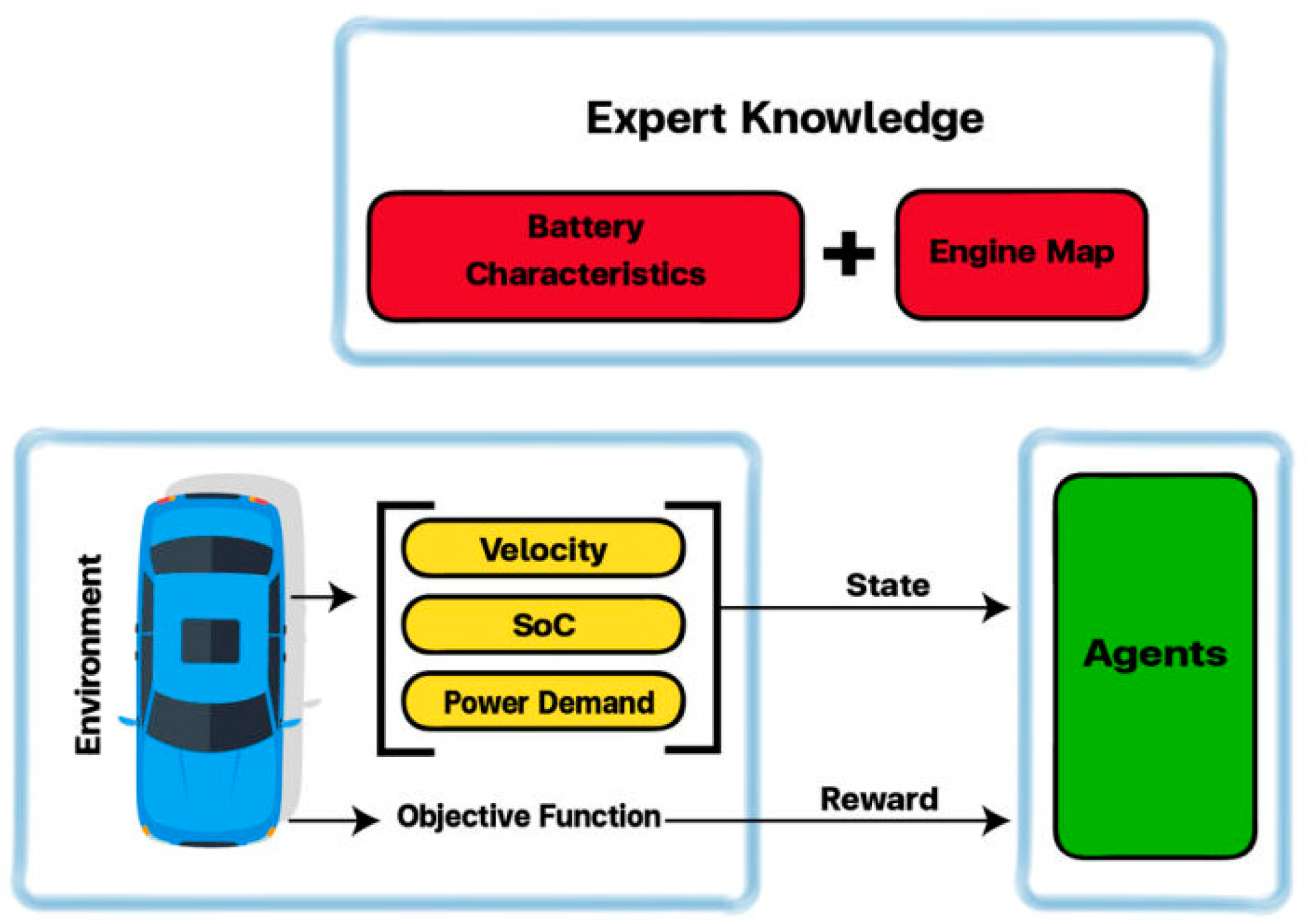

3. Methodology

3.1. Research Design

- Reward Function for EV Battery Management

- The use of Q-learning

- State of Health (SoH): The SoH reflects the overall health or condition of the battery, considering factors such as degradation, aging, and performance loss over time. Monitoring the SoH is crucial for predicting battery life and optimizing its usage.

- Temperature: The battery temperature plays a significant role in its performance, efficiency, and longevity. Monitoring temperature helps prevent overheating, which can degrade the battery and affect its safety and reliability.

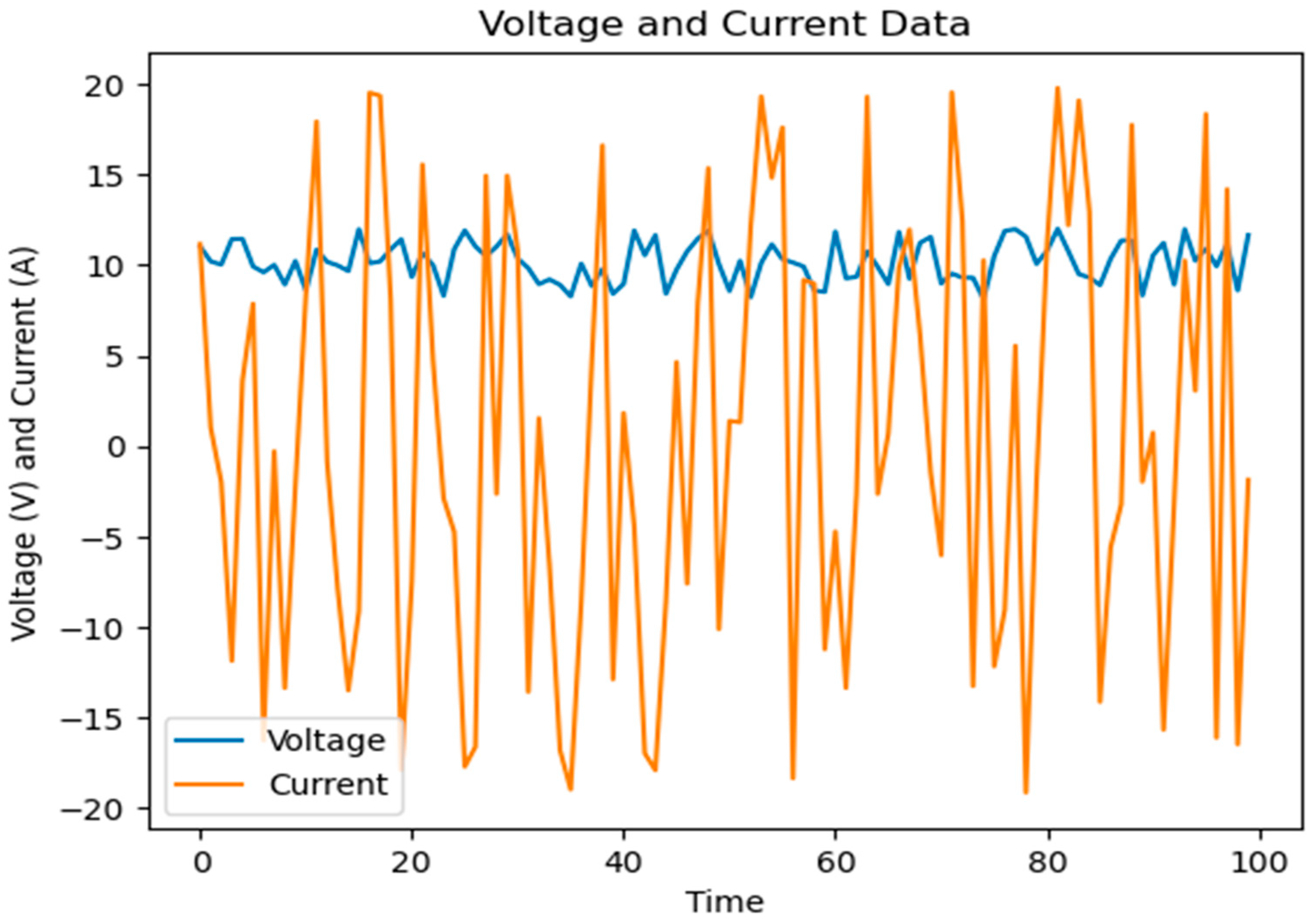

- Voltage: The voltage across the battery terminals provides insights into its electrical potential and helps determine its operating conditions and performance characteristics.

- Current: The current flowing into or out of the battery indicates its charging or discharging status and influences its energy transfer and power delivery capabilities.

- Choice of Q-learning: Providing a rationale for why Q-learning was chosen over other methods offers insight into the decision-making process. This could involve discussing the advantages of reinforcement learning in dynamic environments like EV battery management, where the agent learns optimal policies through trial and error.

- Selection of Q-Table: Explaining why a Q-table was chosen instead of function approximation options like neural networks or deep learning architectures helps justify the simplicity and interpretability of the chosen method. This may be due to the relatively low computational complexity and ease of implementation, which are advantageous in real-time applications like battery management.

- Exact Formula of Reward Function: Describing the reward function in detail, including its mathematical formulation and the factors it considers, provides transparency about how the agent is incentivized to take certain actions. This clarity helps stakeholders understand the objectives of the system and how rewards are aligned with performance metrics such as energy efficiency and battery health.

- Balancing Exploration and Exploitation: Discussing the strategies employed to balance exploration (trying new actions) and exploitation (choosing actions based on current knowledge) sheds light on the agent’s learning process. Techniques like epsilon-greedy exploration or adaptive learning rates may be used to ensure a balance between exploring new possibilities and exploiting known strategies.

- Description of State Space: Providing insight into the structure and components of the state space elucidates the context in which the agent operates. This includes explaining the choice of state variables, their significance in capturing the battery system’s dynamics, and how they collectively represent the system’s current state.

- Definition of reward function: The reward function should quantify the desirability of the state–action pairs encountered by the agent. It typically takes the form $R(s, a, s')$, where $s$ is the current state, $a$ is the action taken, and $s'$ is the resulting state after taking action $a$.

- Objective alignment: The reward function should be aligned with the objectives of EV battery management, such as maximizing energy efficiency, prolonging battery lifespan, and ensuring vehicle reliability. For example, higher rewards can be assigned for actions that lead to optimal state-of-charge (SoC) levels, efficient charging/discharging cycles, and avoiding battery degradation.

- Positive and negative rewards: Situations that contribute positively to battery health and system efficiency should be rewarded, while actions that degrade battery performance or compromise system reliability should incur negative rewards. For instance, positive rewards include successfully reaching target state of charge (SoC) levels without overcharging or deep discharging, efficiently utilizing regenerative braking to recharge the battery, and implementing smart charging strategies that minimize grid stress and energy costs. On the other hand, negative rewards encompass over-discharging the battery, which leads to a reduced battery lifespan, charging at high temperatures that accelerate battery degradation, and ignoring battery-management system warnings or safety protocols.

- Balancing short-term and long-term rewards: The reward mechanism should strike a balance between immediate gains and long-term benefits. While certain actions may yield immediate rewards (e.g., rapid charging for short-term energy availability), they may have detrimental effects on battery health in the long run. Therefore, the reward function should consider the trade-offs between short-term gains and long-term sustainability.

- Dynamic reward adjustment: To adapt to changing conditions and system requirements, the reward mechanism may need to be dynamically adjusted over time. This could involve modifying reward weights, thresholds, or penalty values based on real-time feedback, system performance metrics, or user-defined preferences.

- Voltage and current measurement: monitoring the battery’s voltage and current during charging and discharging cycles can provide insights into its health. Deviations from expected voltage profiles or irregularities in current flow may indicate degradation or internal damage.

- Temperature monitoring: elevated temperatures during charging or discharging can accelerate battery degradation. Temperature sensors placed within the battery pack can monitor thermal behavior and identify potential issues affecting SOH.

- Cycle counting: tracking the number of charge–discharge cycles the battery undergoes provides an estimate of its aging process. As batteries degrade over time with repeated cycles, monitoring the cycle count can help assess SOH.

- Impedance spectroscopy: this technique involves applying small-amplitude alternating current signals to the battery and analyzing its impedance response. Changes in impedance over time can indicate degradation mechanisms, providing insights into SOH.

- Capacity testing: periodic capacity tests involve fully charging the battery and then discharging it at a controlled rate to measure its energy storage capacity. Comparing the measured capacity with the battery’s initial capacity provides an indication of SOH.

- Machine learning algorithms: Advanced data-analytics techniques, such as machine learning algorithms, can analyze historical data from battery performance, including voltage, current, temperature, and cycle count, to predict SOH and identify degradation trends.

- Optimized charging strategies: by considering SOH in charging algorithms, such as adjusting charging voltage and current limits based on battery health, overcharging or undercharging can be mitigated, leading to improved energy efficiency and reduced degradation.

- Load management: incorporating SOH into load management algorithms allows for better distribution of energy usage across the battery cells. Balancing the load based on cell health ensures that healthier cells are not overburdened, optimizing overall energy efficiency.

- Predictive maintenance: using SOH data, predictive maintenance algorithms can anticipate battery failures or degradation trends before they occur. Proactive maintenance actions, such as cell balancing or capacity restoration, can be scheduled to prevent efficiency losses.

- Energy-storage system optimization: in applications involving energy-storage systems (ESS), integrating SOH data enables the optimization of ESS operation and dispatch strategies. Prioritizing the use of cells with higher SOH levels prolongs the system’s lifespan and maximizes energy efficiency.

- Inefficient charging and discharging: batteries may be subjected to inappropriate charging or discharging rates, leading to energy losses and accelerated degradation. Without considering SOH, batteries may be overcharged, leading to capacity loss and reduced efficiency.

- Uneven cell degradation: without balancing load or energy distribution based on SOH, healthier cells may be underutilized, while degraded cells may be overutilized. This imbalance accelerates degradation in weaker cells, leading to reduced system efficiency and overall capacity.

- Unplanned downtime: a failure to predict battery degradation based on SOH may result in unexpected system failures or downtime. This can lead to disruptions in energy supply, increased maintenance costs, and reduced system reliability.

- Adaptability: machine learning algorithms can adapt to changing conditions and learn optimal strategies for battery management based on historical data and real-time feedback.

- Optimization: advanced algorithms can optimize battery operation considering factors such as load profiles, environmental conditions, and battery health to maximize energy efficiency and extend battery life.

- Prediction and prognostics: Machine learning models can predict battery degradation trends and anticipate failures, enabling proactive maintenance and the optimization of battery performance.

- Dynamic control: machine learning-based approaches offer dynamic control of battery operation, adjusting strategies in real-time based on evolving conditions and system requirements.
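The reward-design bullets earlier in this section (objective alignment, positive and negative rewards, and the short-term versus long-term trade-off) can be made concrete with a small sketch. The function below is a sketch under stated assumptions, not the study's reward function: the SoC window, the temperature threshold, and the weights `W_DEGRADATION` and `W_COST` are all hypothetical.

```python
W_DEGRADATION = 100.0  # hypothetical weight on state-of-health loss
W_COST = 0.1           # hypothetical weight on energy cost


def reward(soc_next: float, temp_c: float, soh_loss: float, energy_cost: float) -> float:
    """Hypothetical reward for one transition (s, a, s').

    soc_next    -- state of charge after the action, in [0, 1]
    temp_c      -- battery temperature after the action, in degrees Celsius
    soh_loss    -- estimated state-of-health lost during the step (>= 0)
    energy_cost -- monetary cost of the energy drawn during the step (>= 0)
    """
    r = 0.0
    # Reward staying inside an assumed 20-80 % SoC window; penalize
    # overcharging and deep discharging outside it.
    if 0.2 <= soc_next <= 0.8:
        r += 1.0
    else:
        r -= 2.0
    # Penalize operation above an assumed 45 degC threshold.
    if temp_c > 45.0:
        r -= 1.0
    # Long-term term: degradation is weighted heavily so that fast but
    # damaging actions do not dominate the short-term gain.
    r -= W_DEGRADATION * soh_loss
    r -= W_COST * energy_cost
    return r
```

Raising `W_DEGRADATION` relative to the other terms shifts the learned policy toward battery longevity; lowering it favors immediate energy availability.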

3.2. Mathematical and Conceptual Framework of Q-learning

3.2.1. Mathematical Model

3.2.2. Q-learning Technique for Predicting the Cycle Lifespan of EVs

3.2.3. Conceptual Framework of Q-learning

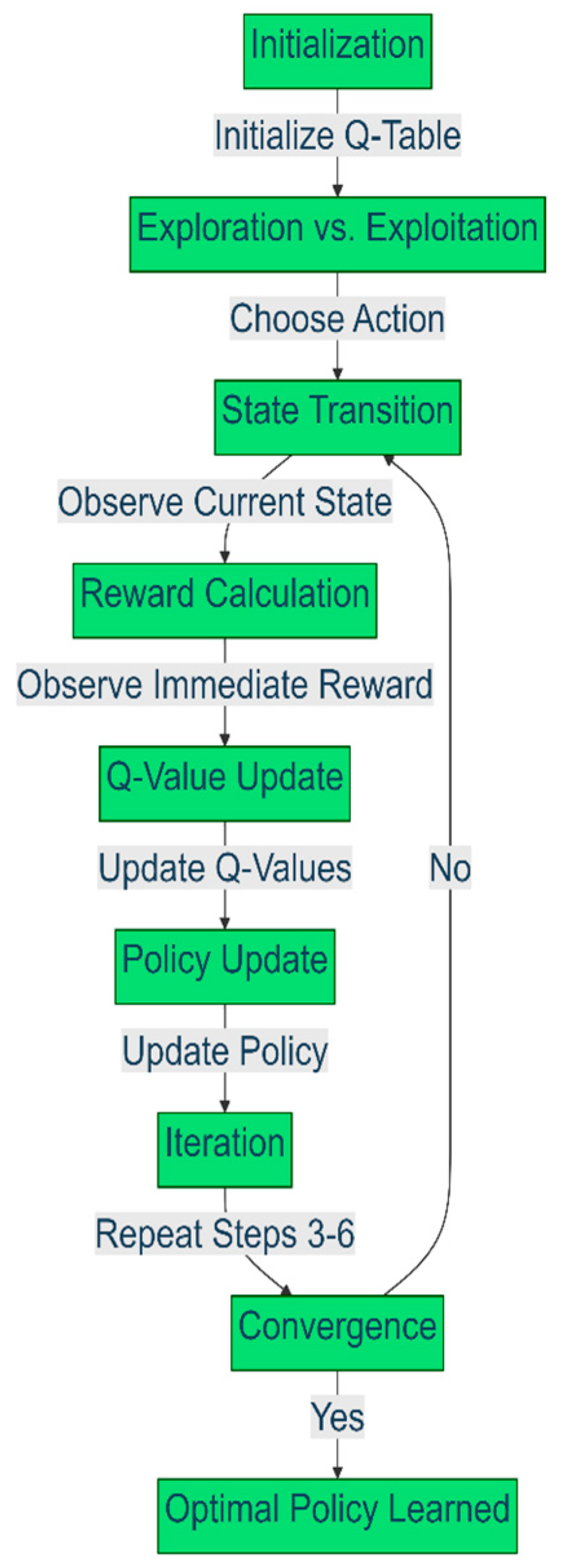

3.3. Steps for Implementing Q-learning for Battery Management

3.4. Selection of State, Action, Reward, and Policy

- Charging: Initiate charging to increase SOC.

- Discharging: Initiate discharge to decrease SOC.

- Idle: Maintain the current SOC without charging or discharging.

- Maintenance: Implement measures to mitigate degradation or extend battery lifespan.

- Replacement: Determine if the battery needs replacement based on its degradation level.

- Cooling: Activate cooling systems to prevent overheating.

- Heating: Activate heating systems to maintain optimal temperature in cold conditions.

- Regulation: Adjust charging and discharging rates based on temperature to optimize battery performance.

- Ventilation: Control airflow to manage humidity levels within acceptable limits.

- Sealing: Implement measures to prevent moisture ingress into sensitive components.

- Low Charge: Charge the battery to increase SOC.

- High Discharge: Discharge the battery to decrease SOC.

- Temperature Control: Activate temperature-regulation mechanisms.

- Maintenance Mode: Implement measures to mitigate degradation based on SOH.
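Given a set of actions like those listed above, the policy has to pick one at each step. A common choice, mentioned in this paper as ε-greedy exploration, is sketched below; the function name `epsilon_greedy` and the five-action example are assumptions for illustration only.

```python
import random

# Hypothetical subset of the actions listed above.
ACTIONS = ["charge", "discharge", "idle", "cool", "heat"]


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index.

    With probability epsilon a uniformly random action is explored;
    otherwise the action with the highest Q-value is exploited.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda i: q_values[i])
```

For example, `ACTIONS[epsilon_greedy([0.1, 0.9, 0.3, 0.0, 0.0], epsilon=0.0)]` always selects `"discharge"`, while `epsilon=1.0` selects uniformly at random.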

3.5. Data Collection and Model Training

3.5.1. Data Sources and Parameters

Data Sources

- Battery-Management System (BMS)

- Environmental Data

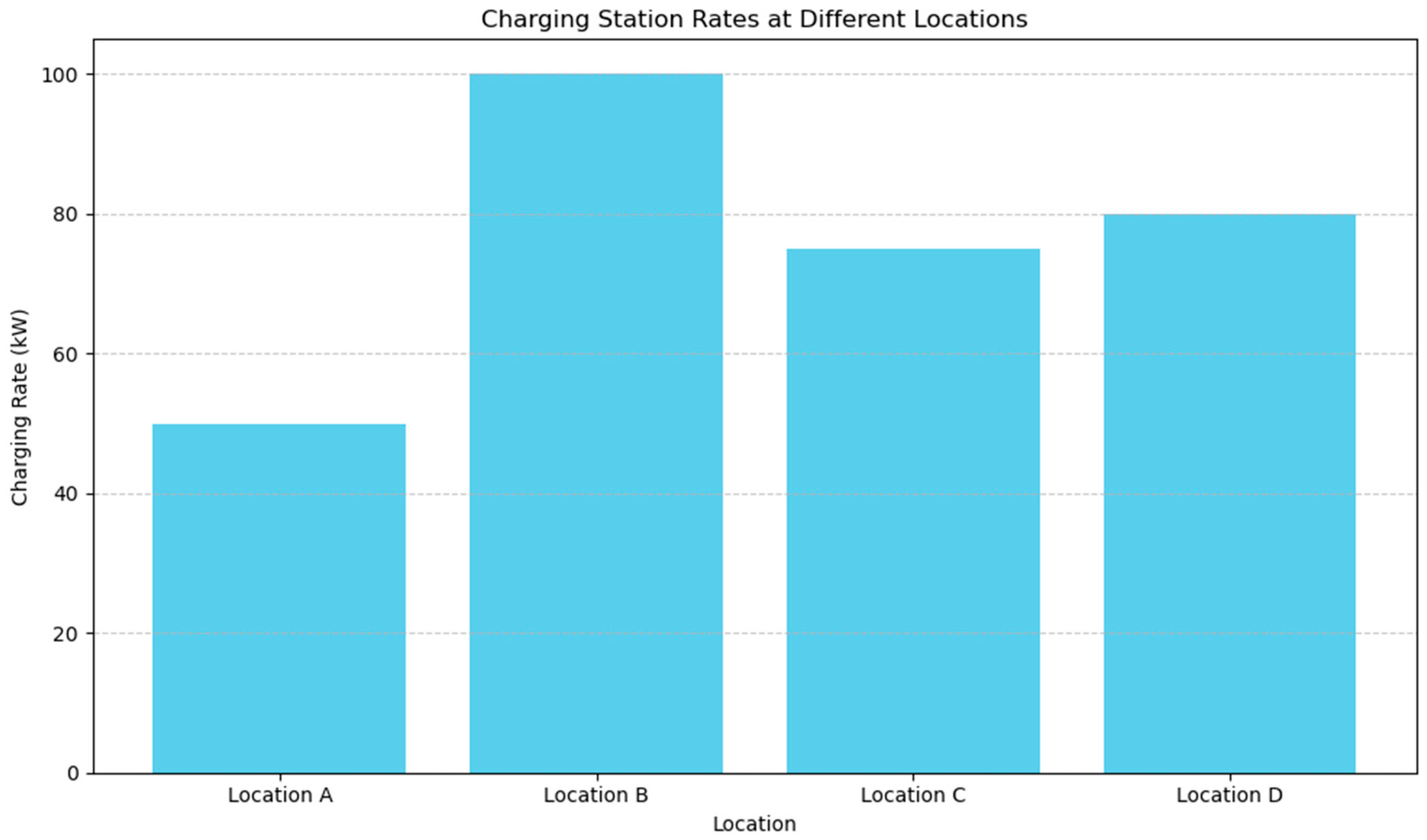

- Charging Infrastructure Data

- Charging Station Rates at Different Locations

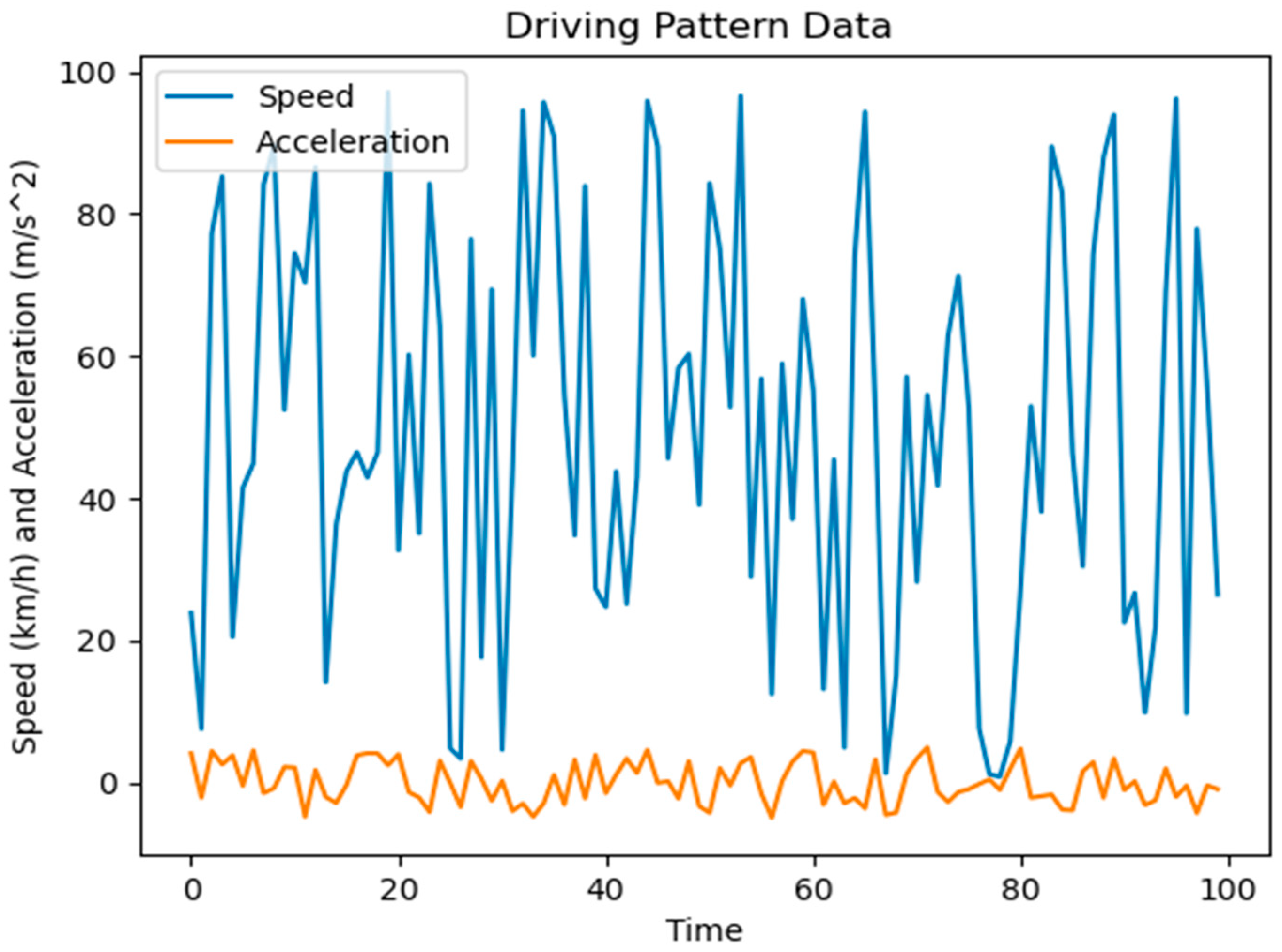

- Driving Patterns

3.5.2. Parameters

- Charging Rate: The rate at which the battery is charged.

- Discharging Rate: The rate at which the battery discharges power to the motor.

- Idle Mode: The option to enter an energy-saving idle mode.

- Energy Efficiency: Reward based on how efficiently energy is utilized.

- Battery Health: Reward related to maintaining battery health and prolonging its lifespan.

- Operational Cost Savings: Reward based on cost-efficient charging and discharging strategies.

- Exploration Rate (ε): The rate at which the algorithm explores new actions rather than exploiting known ones.

- Discount Factor (γ): A parameter that balances the importance of immediate rewards against future rewards.

- Learning Rate (α): A factor that determines how much the algorithm adjusts its Q-values based on new information.
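For reference, the standard tabular Q-learning update shows where the hyperparameters above enter. This is the textbook rule, given here on the assumption that the study uses the usual form; it is not quoted from the study itself.

```latex
Q(s_t, a_t) \leftarrow Q(s_t, a_t)
  + \alpha \left[ r_{t+1} + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \right]
```

Here $\alpha$ scales the correction applied to the current estimate and $\gamma$ discounts the best value attainable from the next state, while $\varepsilon$ does not appear in the update because it only governs which action $a_t$ is tried. As a worked example with assumed numbers, $\alpha = 0.1$, $\gamma = 0.9$, $Q(s_t, a_t) = 0$, $r_{t+1} = 1$, and $\max_{a'} Q(s_{t+1}, a') = 2$ give a new estimate of $0 + 0.1 \times (1 + 0.9 \times 2 - 0) = 0.28$.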

3.5.3. Training Process

- Simplicity: traditional Q-learning with Q-tables is simpler to implement and understand compared to DQN, which involves training a neural network.

- Data efficiency: DQN often requires a large amount of training data to learn effectively, especially when dealing with high-dimensional state spaces. In contrast, traditional Q-learning can be more data-efficient, making it suitable for scenarios where data collection is limited.

- Computational resources: training a DQN typically requires more computational resources compared to traditional Q-learning, especially when using deep neural networks. For applications with resource constraints, traditional Q-learning may be preferred.

- Performance: depending on the specific problem and available resources, traditional Q-learning may achieve comparable or even superior performance to DQN. If the problem can be effectively solved using a simpler approach, there may be no need to employ more complex methods like DQN.

- Experimental Setup: the real-world pilot involved a fleet of EVs equipped with the necessary instrumentation to monitor key battery parameters and driving behavior. The vehicles used in the pilot were standard electric models commonly found in urban environments. Testing conditions encompassed various driving scenarios, including city commuting, highway driving, and urban stop-and-go traffic.

- Data Collection: data collection in the pilot relied on onboard sensors integrated into the EVs, as well as external environmental monitoring equipment. The onboard battery-management system (BMS) continuously logged battery-related parameters such as state of charge (SoC), state of health (SoH), temperature, voltage, current, and power consumption. Additionally, GPS or other vehicle tracking systems recorded driving patterns, including vehicle speed, acceleration, deceleration, and route information. Quality-control measures were implemented to ensure data integrity, including regular calibration of sensors and validation of recorded data against ground truth measurements.

- Environmental Conditions: the pilot was conducted in urban and suburban areas, exposing the EVs to a range of environmental conditions such as temperature, humidity, and varying driving scenarios. Data collection included monitoring of ambient temperature, humidity levels, and driving conditions to capture the influence of environmental factors on battery performance.

- Duration and Scope: the real-world pilot spanned several months, allowing for comprehensive data collection across different seasons and driving conditions. Multiple vehicles were involved in the pilot, enabling a diverse range of testing scenarios and driving behaviors to be captured. The scope of the pilot encompassed the evaluation of the Q-learning approach’s performance in optimizing energy efficiency, extending battery life, and reducing operational costs in real-world driving environments.

- Real-World Testing: a fleet of electric vehicles equipped with the proposed battery-management system was deployed in various driving conditions, including urban, highway, and mixed-use scenarios. Data collection was facilitated through onboard sensors and data-logging equipment, capturing essential parameters such as state of charge (SOC), state of health (SOH), temperature, and vehicle performance metrics.

- Data Analysis: data collected from both the simulation and real-world testing phases were analyzed thoroughly to evaluate the performance of the Q-learning algorithm. Key performance indicators, including energy efficiency, battery degradation, and overall vehicle performance, were compared against baseline metrics to assess the effectiveness of the proposed approach.

- Evaluation Setup: Real-World Testing: following the simulation phase, real-world testing was carried out using a fleet of electric vehicles equipped with the proposed battery-management system. These vehicles were deployed in diverse driving conditions, including urban, highway, and mixed-use scenarios, to evaluate the algorithm’s performance in authentic operational environments. Data collection during real-world testing was facilitated through onboard sensors and data-logging equipment, capturing critical parameters such as state of charge (SOC), state of health (SOH), temperature, and vehicle performance metrics.

- Data Analysis: data collected from real-world testing phases were subjected to comprehensive analysis. Key performance indicators, such as energy efficiency, battery degradation, and overall vehicle performance, were compared against baseline metrics to assess the effectiveness of the proposed approach. This analysis provided valuable insights into the algorithm’s performance across real-world environments, guiding its further refinement and practical application in electric vehicles.

3.6. Gain Data

- Objective: Identify how Q-learning can help in understanding and optimizing material structure changes. This might involve optimizing processes like heat treatment, alloy composition, or manufacturing conditions.

- State Representation: Define the state space to represent the different conditions of the material structure. This could include parameters such as temperature, pressure, composition, and time.

- Action Space: Define the actions that can be taken to alter the material structure. These might include changes in temperature, pressure, or the addition of different materials.

- Reward Function: Develop a reward function that quantifies the desired outcomes of material structure changes. This could be based on performance metrics like strength, durability, or any other relevant property.

- Simulation Data: Use simulations to generate data on how different actions affect material structure. This could involve computational models of material behavior under various conditions.

- Experimental Data: Collect real-world data from experiments where material structures are altered according to different action strategies. Ensure data include various states and the results of actions taken.

- Data Cleaning: Remove noise, handle missing values, and normalize the data to ensure consistency.

- Feature Engineering: Identify and extract relevant features that represent the material structure and its changes.

- Initialization: Initialize the Q-table or function approximator with initial values.

- Learning Process: Apply the Q-learning algorithm to learn the optimal policy for material structure changes. Use historical data to update the Q-values iteratively.

- Exploration vs. Exploitation: Balance exploration of new actions with exploitation of known optimal actions using ε-greedy or other exploration strategies.

- Simulation Testing: Validate the trained Q-learning model using simulation data to check its performance in predicting material structure changes.

- Real-World Testing: Test the model with real-world data to ensure it generalizes well and provides accurate predictions for material structure changes.

- Deployment: Implement the Q-learning-based approach in a real-world setting, if applicable, to optimize material structure changes.

- Continuous Learning: Update the model with new data to improve its accuracy and adapt to changing conditions over time.
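The initialization, learning, and exploration steps listed above can be sketched generically as a tabular training loop. The environment below is a hypothetical one-dimensional chain chosen only to keep the example self-contained; it stands in for whatever simulator or logged data the study used, and the function name `train_q_table` and all default values are assumptions.

```python
import random


def train_q_table(n_states=6, episodes=300, alpha=0.1, gamma=0.9,
                  epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical 1-D chain.

    Actions: 0 = step left, 1 = step right. Reaching the last state ends
    the episode with reward 1; every other step gives reward 0.
    """
    rng = random.Random(seed)
    # Initialization: one row per state, one column per action.
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap on steps per episode
            # Exploration vs. exploitation: epsilon-greedy with random tie-breaking.
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            done = s_next == n_states - 1
            r = 1.0 if done else 0.0
            # Learning step: move Q(s, a) toward the bootstrapped target.
            target = r if done else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if done:
                break
    return q
```

After training, the greedy policy read from the returned table steps right in every non-terminal state, which is the shortest path to the rewarded state in this toy setting.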

4. Experimental Results

4.1. Experiment Results

4.2. Data and Graphical Representation

4.3. Result of Q-learning Approaches

- Feasibility Analysis:

- Experiment Setup: conduct experiments to measure the computational time required for training and inference of the Q-learning algorithm. Use a standardized benchmarking environment to ensure fair comparisons between different approaches.

- Computational Time Measurement: the first step is to measure the computational time required for both training and inference of the Q-learning algorithm. This involves running the algorithm in a controlled environment and recording the time taken for each stage, using a high-resolution timer provided by the programming language or runtime so that elapsed time is measured accurately.

- Standardized Benchmarking Environment: a standardized benchmarking environment is needed to ensure fair comparisons between different approaches. This environment should provide consistent conditions for testing, including the same initial state configurations, action spaces, and reward structures, so that results are comparable across experiments and approaches.

- Comparative Analysis:

- Computational Time Comparison

- Training Convergence Time: The time taken to reach an acceptable performance level during training.

- Inference Latency: The time delay between receiving an input and producing an output during the deployment phase.

- Analysis of Trade-offs Between Accuracy and Computational Cost

- High Accuracy Requirements: Achieving higher accuracy often necessitates more complex state and action spaces, leading to larger Q-tables. This results in increased memory usage and longer computation times. For instance, training on a larger state space with more frequent updates may improve the algorithm’s performance but also increase the training time and resource consumption.

- Balancing Accuracy and Efficiency: To balance accuracy with computational efficiency, adjustments such as simplifying state and action spaces, employing function approximation methods, or optimizing the Q-learning parameters (e.g., learning rate, discount factor) can be made. These strategies help to achieve reasonable accuracy while mitigating excessive computational demands.

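- State and Action Space Size: Larger state and action spaces can significantly impact the computational time. The Q-table holds one entry per state–action pair, so its size grows with the product of the number of states and the number of actions, and the number of states itself grows exponentially with the number of discretized state variables, leading to higher memory requirements and longer update times.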

- Convergence Rate: The speed at which the Q-learning algorithm converges to an optimal policy can also affect computational efficiency. Slower convergence rates require more iterations, increasing both the training time and computational costs.

- Algorithmic Improvements: Techniques such as function approximation (e.g., using Deep Q-Networks) replace the explicit Q-table with a parametric model, which avoids storing one value per state–action pair and improves scalability.

- Parallelization: Implementing parallel processing or leveraging hardware acceleration can reduce the computation time, especially for larger state spaces and more frequent updates.

- Profile and Optimize: Profiling the Q-learning implementation to identify and address bottlenecks can lead to significant improvements in computational efficiency.

- Deployment Considerations: When deploying Q-learning in real-world scenarios, it is crucial to consider both the accuracy requirements and the computational resources available. For embedded systems with limited processing power, optimizing the algorithm for lower computational cost without sacrificing essential accuracy is important.
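To make the memory and latency considerations above tangible, the sketch below counts Q-table entries for a discretized state space and times a greedy lookup with Python's `time.perf_counter`. The helper names `q_table_entries` and `mean_lookup_latency` and the bin counts in the usage note are assumptions for illustration; they are not measurements from this study.

```python
import time
from math import prod


def q_table_entries(bins_per_variable, n_actions):
    """Number of Q-values in a fully tabulated, discretized state space.

    bins_per_variable -- number of discretization bins for each state variable
    n_actions         -- number of discrete actions
    """
    return prod(bins_per_variable) * n_actions


def mean_lookup_latency(q_row, repeats=10_000):
    """Average wall-clock seconds for one greedy lookup over a Q-table row."""
    start = time.perf_counter()
    for _ in range(repeats):
        max(range(len(q_row)), key=lambda i: q_row[i])
    return (time.perf_counter() - start) / repeats
```

With hypothetical bins of 10 for SoC, 5 for SoH, and 8 for temperature and 3 actions, `q_table_entries([10, 5, 8], 3)` gives 1200 entries. Adding voltage and current at 10 bins each raises this to 120,000, which illustrates how each added state variable multiplies the table size.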

5. Discussion

5.1. General Discussion

5.2. Theoretical Implications

5.3. Managerial Implications

5.4. Limitations and Future Research Directions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Suanpang, P.; Jamjuntr, P. Optimizing Electric Vehicle Charging Recommendation in Smart Cities: A Multi-Agent Reinforcement Learning Approach. World Electr. Veh. J. 2024, 15, 67.

- Suanpang, P.; Jamjuntr, P.; Kaewyong, P.; Niamsorn, C.; Jermsittiparsert, K. An Intelligent Recommendation for Intelligently Accessible Charging Stations: Electronic Vehicle Charging to Support a Sustainable Smart Tourism City. Sustainability 2023, 15, 455.

- Li, Y.; Tao, J.; Xie, L.; Zhang, R.; Ma, L.; Qiao, Z. Enhanced Q-learning for real-time hybrid electric vehicle energy management with deterministic rule. Meas. Control. 2020, 53, 1493–1503.

- Khamis, M.A.H.; Hassanien, A.E.; Salem, A.E.K. Electric Vehicle Charging Infrastructure Optimization: A Comprehensive Review. IEEE Access 2020, 8, 23676–23692.

- Kim, N.; Kim, J.C.D.; Lee, B. Adaptive Loss Reduction Charging Strategy Considering Variation of Internal Impedance of Lithium-Ion Polymer Batteries in Electric Vehicle Charging Systems. In Proceedings of the 2016 IEEE Applied Power Electronics Conference and Exposition (APEC), Long Beach, CA, USA, 20–24 March 2016; pp. 1273–1279.

- Sedano, J.; Chira, C.; Villar, J.R.; Ambel, E.M. An Intelligent Route Management System for Electric Vehicle Charging. Integr. Comput. Aided Eng. 2013, 20, 321–333.

- Market & Market. Electric Vehicle Market. Available online: https://www.marketsandmarkets.com/Market-Reports/electric-vehicle-market-209371461.html (accessed on 9 July 2024).

- Brenna, M.; Foiadelli, F.; Leone, C.; Longo, M. Electric Vehicles Charging Technology Review and Optimal Size Estimation. J. Electr. Eng. Technol. 2020, 15, 2539–2552.

- Rizvi, S.A.A.; Xin, A.; Masood, A.; Iqbal, S.; Jan, M.U.; Rehman, H. Electric vehicles and their impacts on integration into power grid: A review. In Proceedings of the 2nd IEEE Conference on Energy Internet and Energy System Integration (EI2), Beijing, China, 20–22 October 2018.

- Papadopoulos, P.; Cipcigan, L.M.; Jenkins, N. Distribution networks with electric vehicles. In Proceedings of the 44th International Universities Power Engineering Conference (UPEC), Glasgow, UK, 1–4 September 2009.

- Cao, X.; Li, Y.; Zhang, C.; Wang, J. Reinforcement learning-based battery management system for electric vehicles: A comparative study. J. Energy Storage 2021, 42, 102383.

- Xiong, R.; Sun, F.; Chen, Z.; He, H. A data-driven multi-scale extended Kalman filtering based parameter and state estimation approach of lithium-ion polymer battery in electric vehicles. Appl. Energ. 2014, 113, 463–476. [Google Scholar] [CrossRef]

- Al-Alawi, B.M.; Bradley, T.H. Total cost of ownership, payback, and consumer preference modeling of plug-in hybrid electric vehicles. Appl. Energ. 2013, 103, 488–506. [Google Scholar] [CrossRef]

- Yang, K.; Zhang, L.; Zhang, Z.; Yu, H.; Wang, W.; Ouyang, M.; Zhang, C.; Sun, Q.; Yan, X.; Yang, S.; et al. Battery State of Health Estimate Strategies: From Data Analysis to End-Cloud Collaborative Framework. Batteries 2023, 9, 351. [Google Scholar] [CrossRef]

- Mishra, S.; Swain, S.C. Utilizing the Unscented Kalman Filter for Estimating State of Charge in Lithium-Ion Batteries of Electric Vehicles. In Proceedings of the Fifteenth Annual IEEE Green Technologies (GreenTech) Conference, Denver, CO, USA, 19–21 April 2023; pp. 1–6. [Google Scholar]

- Topan, P.A.; Ramadan, M.N.; Fathoni, G.; Cahyadi, A.I.; Wahyunggoro, O. State of Charge (SOC) and State of Health (SOH) estimation on lithium polymer battery via Kalman filter. In Proceedings of the 2016 2nd International Conference on Science and Technology-Computer (ICST), Yogyakarta, Indonesia, 27–28 October 2016; pp. 93–96. [Google Scholar] [CrossRef]

- Nath, A.; Rather, Z.; Mitra, I.; Srinivasan, L. Multi-Criteria Approach for Identification and Ranking of Key Interventions for Seamless Adoption of Electric Vehicle Charging Infrastructure. IEEE Trans. Veh. Technol. 2023, 72, 8697–8708. [Google Scholar] [CrossRef]

- Karnehm, D.; Pohlmann, S.; Neve, A. State-of-Charge (SoC) Balancing of Battery Modular Multilevel Management (BM3) Converter using Q-Learning. In Proceedings of the 2023 IEEE Green Technologies Conference (GreenTech), Denver, CO, USA, 19–21 April 2023; pp. 107–111. [Google Scholar] [CrossRef]

- Shateri, M. Privacy-Cost Management in Smart Meters: Classical vs Deep Q-Learning with Mutual Information. In Proceedings of the 2023 IEEE 11th International Conference on Smart Energy Grid Engineering (SEGE), Oshawa, ON, Canada, 19–21 April 2023; pp. 109–113. [Google Scholar] [CrossRef]

- Ahmadian, S.; Tahmasbi, M.; Abedi, R. Q-learning based control for energy management of series-parallel hybrid vehicles with balanced fuel consumption and battery life. Energy AI 2023, 11, 100217. [Google Scholar] [CrossRef]

- Corinaldesi, C.; Lettner, G.; Schwabeneder, D.; Ajanovic, A.; Auer, H. Impact of Different Charging Strategies for Electric Vehicles in an Austrian Office Site. Energies 2020, 13, 5858. [Google Scholar] [CrossRef]

- Kene, R.O.; Olwal, T.O. Energy Management and Optimization of Large-Scale Electric Vehicle Charging on the Grid. World Electr. Veh. J. 2023, 14, 95. [Google Scholar] [CrossRef]

- Li, Y.; Wang, J.; Zhang, C.; Sun, C. Q-learning-based battery management system with real-time state-of-charge estimation for electric vehicles. IEEE Trans. Veh. Technol. 2021, 70, 6931–6944. [Google Scholar]

- Zhang, W.; Hu, X.; Sun, C.; Zou, C. A Q-learning-based battery management system with adaptive state-of-charge estimation for electric vehicles. IEEE Trans. Ind. Electron. 2022, 69, 11718–11728. [Google Scholar]

- Suanpang, P.; Jamjuntr, P.; Jermsittiparsert, K.; Kaewyong, P. Tourism Service Scheduling in Smart City Based on Hybrid Genetic Algorithm Simulated Annealing Algorithm. Sustainability 2022, 14, 16293. [Google Scholar] [CrossRef]

- International Energy Agency (IEA). EV Charging in Thailand Market Overview 2023–2027. Available online: https://www.reportlinker.com/market-report/Electric-Vehicle/726537/Electric_Vehicle_Charging (accessed on 9 July 2024).

- Zhang, W.; Wang, J.; Sun, C.; Ecker, M. A review of reinforcement learning for battery management in electric vehicles. J. Power Sources 2020, 459, 228025. [Google Scholar] [CrossRef]

- Alanazi, F. Electric Vehicles: Benefits, Challenges, and Potential Solutions for Widespread Adaptation. Appl. Sci. 2023, 13, 6016. [Google Scholar] [CrossRef]

- Turrentine, T.; Kurani, K. Consumer Considerations in the Transition to Electric Vehicles: A Review of the Research Literature. Energy Policy 2019, 127, 14–27. [Google Scholar] [CrossRef]

- Ahmed, A.; El Baset, A.; El Halim, A.; Ehab, E.H.; Bayoumi, W.; El-Khattam, A.; Ibrahim, A. Electric vehicles: A review of their components and technologies. Int. J. Power Electron. Drive Syst. 2022, 13, 2041–2061. [Google Scholar] [CrossRef]

- Szumska, E.; Jurecki, R. Technological Developments in Vehicles with Electric Drive. Combust. Engines 2023, 194, 38–47. [Google Scholar] [CrossRef]

- Murali, N.; Mini, V.P.; Ushakumari, S. Electric Vehicle Market Analysis and Trends. In Proceedings of the 2022 IEEE 19th India Council International Conference (INDICON), Kochi, India, 24–26 November 2022. [Google Scholar] [CrossRef]

- Ouramdane, O.; Elbouchikhi, E.; Amirat, Y.; Le Gall, F.; Sedgh Gooya, E. Home Energy Management Considering Renewable Resources, Energy Storage, and an Electric Vehicle as a Backup. Energies 2022, 15, 2830. [Google Scholar] [CrossRef]

- Kostenko, A. Overview of European Trends in Electric Vehicle Implementation and the Influence on the Power System. Syst. Res. Energy 2022, 2022, 62–71. [Google Scholar] [CrossRef]

- Boonchunone, S.; Nami, M.; Krommuang, A.; Suwunnamek, O. Exploring the Effects of Perceived Values on Consumer Usage Intention for Electric Vehicle in Thailand: The Mediating Effect of Satisfaction. Acta Logist. 2023, 10, 151–164. [Google Scholar] [CrossRef]

- Butler, D.; Mehnen, J. Challenges for the Adoption of Electric Vehicles in Thailand: Potential Impacts, Barriers, and Public Policy Recommendations. Sustainability 2023, 15, 9470. [Google Scholar] [CrossRef]

- Chinda, T. Manufacturer and Consumer’s Perceptions towards Electric Vehicle Market in Thailand. J. Ind. Integr. Manag. 2023. [Google Scholar] [CrossRef]

- Dokrak, I.; Rakwichian, W.; Rachapradit, P.; Thanarak, P. The Business Analysis of Electric Vehicle Charging Stations to Power Environmentally Friendly Tourism: A Case Study of the Khao Kho Route in Thailand. Int. J. Energy Econ. Policy 2022, 12, 102–111. [Google Scholar] [CrossRef]

- Bhovichitra, P.; Shrestha, A. The Impact of Video Marketing on Social Media Platforms on the Millennial’s Purchasing Intention toward Electric Vehicles. J. Econ. Finance Manag. Stud. 2022, 5, 3726–3730. [Google Scholar] [CrossRef]

- Qi, S.; Cheng, Y.; Li, Z.; Wang, J.; Li, H.; Zhang, C. Advanced Deep Learning Techniques for Battery Thermal Management in New Energy Vehicles. Energies 2024, 17, 4132. [Google Scholar] [CrossRef]

- Li, C.; Hu, X.; Zhang, S.; Wang, Y.; Duan, Q. Electric Vehicle Battery. U.S. Patent US20160202640A1, 8 January 2016. Available online: https://patents.google.com/patent/US20160202640A1 (accessed on 9 July 2024).

- Xu, G.; Tang, M.; Cai, W.; Tan, Y. Electric Vehicle Battery Pack. U.S. Patent US20150093510A1, 2 April 2015. Available online: https://patents.google.com/patent/US20150093510A1 (accessed on 9 July 2024).

- Dhameja, S. Electric Vehicle Batteries, 1st ed.; Newnes: Oxford, UK, 2002. [Google Scholar] [CrossRef]

- Link, S.; Neef, C. Trends in Automotive Battery Cell Design: A Statistical Analysis of Empirical Data. Batteries 2023, 9, 261. [Google Scholar] [CrossRef]

- Liu, C.; Bi, C. Current Situation and Trend of Electric Vehicle Battery Business-Take CATL as an example. Tech. Soc. Sci. J. 2022, 38, 7975. [Google Scholar] [CrossRef]

- Dimitriadou, K.; Hatzivasilis, G.; Ioannidis, S. Current Trends in Electric Vehicle Charging Infrastructure; Opportunities and Challenges in Wireless Charging Integration. Energies 2023, 16, 2057. [Google Scholar] [CrossRef]

- Zhao, J.; Burke, A. Electric Vehicle Batteries: Status and Perspectives of Data-Driven Diagnosis and Prognosis. Batteries 2022, 8, 142. [Google Scholar] [CrossRef]

- Garg, V.K.; Kumar, D. A review of electric vehicle technology: Architectures, battery technology and its management system, relevant standards, application of artificial intelligence, cyber security, and interoperability challenges. IET Electr. Syst. Transp. 2023, 3, 2083. [Google Scholar] [CrossRef]

- Mehar, D.; Varshney, P.; Saini, D. A Review on Battery Technologies and Its Challenges in Electrical Vehicle. In Proceedings of the 2023 3rd International Conference on Energy, Systems, and Applications (ICESA), Pune, India, 4–6 December 2023; p. 63067. [Google Scholar] [CrossRef]

- Ahasan, H.A.K.M.; Masrur, H.; Islam, M.S.; Sarker, S.K.; Hossain, M.S. Lithium-Ion Battery Management System for Electric Vehicles: Constraints, Challenges, and Recommendations. Batteries 2023, 9, 152. [Google Scholar] [CrossRef]

- Roy, H.; Gupta, N.; Patel, S.; Mistry, B. Global Advancements and Current Challenges of Electric Vehicle Batteries and Their Prospects: A Comprehensive Review. Sustainability 2022, 14, 16684. [Google Scholar] [CrossRef]

- Santhira Sekeran, M.; Živadinović, M.; Spiliopoulou, M. Transferability of a Battery Cell End-of-Life Prediction Model Using Survival Analysis. Energies 2022, 15, 2930. [Google Scholar] [CrossRef]

- Ranjan, R.; Yadav, R. An Overview and Future Reflection of Battery Management Systems in Electric Vehicles. J. Energy Storage 2023, 35, 567. [Google Scholar] [CrossRef]

- Thilak, K.; Kumari, M.S.; Manogaran, G.; Khuman, R.; Janardhana, M.; Hemanth, V.K. An Investigation on Battery Management System for Autonomous Electric Vehicles. IEEE Trans. Ind. Appl. 2023, 56, 5341. [Google Scholar]

- Bhushan, N.; Desai, K.; Suthar, R. Overview of Model- and Non-Model-Based Online Battery Management Systems for Electric Vehicle Applications: A Comprehensive Review of Experimental and Simulation Studies. Sustainability 2022, 14, 15912. [Google Scholar] [CrossRef]

- Hossain, M.S.; Islam, M.T.; Ali, A.B.; Abdullah-Al-Mamun, M.; Hassan, M.R.; Nizam, M.T.; Jayed, M.A. Smart Battery Management Technology in Electric Vehicle Applications: Analytical and Technical Assessment toward Emerging Future Directions. Batteries 2022, 8, 219. [Google Scholar] [CrossRef]

- Kumar, R.S.; Kamal, A.H.; Selvam, S.P.; Rajesh, M. Battery Management System for Renewable E-Vehicle. IEEE Trans. Energy Convers. 2023, 24, 1092. [Google Scholar] [CrossRef]

- Suanpang, P.; Jamjuntr, P. Machine Learning Models for Solar Power Generation Forecasting in Microgrid Application Implications for Smart Cities. Sustainability 2024, 16, 6087. [Google Scholar] [CrossRef]

- Ali, H.; Tsegaye, D.; Abera, M. Dual-Layer Q-Learning Strategy for Energy Management of Battery Storage in Grid-Connected Microgrids. Energies 2023, 16, 1334. [Google Scholar] [CrossRef]

- Ye, Y.; Zhao, J.; Li, M.; Zhu, Y. Reinforcement Learning-Based Energy Management System Enhancement Using Digital Twin for Electric Vehicles. In Proceedings of the 2022 IEEE Vehicle Power and Propulsion Conference (VPPC), Gijón, Spain, 26–29 October 2022; pp. 343–347. [Google Scholar] [CrossRef]

- Xu, B.; Wang, L.; Li, X.; He, H.; Sun, Y. Hierarchical Q-learning network for online simultaneous optimization of energy efficiency and battery life of the battery/ultracapacitor electric vehicle. J. Energy Storage 2022, 52, 103925. [Google Scholar] [CrossRef]

- Muriithi, G.; Chowdhury, S.P. Deep Q-network application for optimal energy management in a grid-tied solar PV-Battery microgrid. J. Eng. 2022, 21, 12128. [Google Scholar] [CrossRef]

- Sousa, T.J.C.; Pedrosa, D.; Monteiro, V.; Afonso, J.L. A Review on Integrated Battery Chargers for Electric Vehicles. Energies 2022, 15, 2756. [Google Scholar] [CrossRef]

- Suanpang, P.; Jamjuntr, P.; Jermsittiparsert, K.; Kaewyong, P. Autonomous Energy Management by Applying Deep Q-Learning to Enhance Sustainability in Smart Tourism Cities. Energies 2022, 15, 1906. [Google Scholar] [CrossRef]

- Qi, S.; Zhao, Y.; Li, Z.; Zhao, Y. Control Strategy of Energy Storage Device in Distribution Network Based on Reinforcement Learning Q-learning Algorithm. In Proceedings of the 2022 International Conference on Control, Automation and Systems Integration Technology (ICCASIT), Tokyo, Japan, 27 November–1 December 2022; p. 6984. [Google Scholar] [CrossRef]

- Rokh, S.B.; Soltani, M.; Eftekhari, S.; Rakhshan, F.; Hosseini, S. Real-Time Optimization of Microgrid Energy Management Using Double Deep Q-Network. In Proceedings of the 2023 IEEE International Conference on Smart Grid and Clean Energy Technologies (ICSGCET), Shanghai, China, 13–15 October 2023; p. 66355. [Google Scholar] [CrossRef]

- Xiao, G.; Chen, Q.; Xiao, P.; Zhang, L.; Rong, Q. Multiobjective Optimization for a Li-Ion Battery and Supercapacitor Hybrid Energy Storage Electric Vehicle. Energies 2022, 15, 2821. [Google Scholar] [CrossRef]

- Kong, H.; Wu, Z.; Luo, Y.; Xu, J.; Huang, X. Energy management strategy for electric vehicles based on deep Q-learning using Bayesian optimization. Neural Comput. Appl. 2020, 32, 4556–4574. [Google Scholar] [CrossRef]

- Bignold, A.; Parisini, T.; Mangini, M. A conceptual framework for externally-influenced agents: An assisted reinforcement learning review. J. Ambient. Intell. Humaniz. Comput. 2023, 21, 3489. [Google Scholar] [CrossRef]

- Hu, X.; Liang, C.; Xie, J.; Li, Z. A survey of reinforcement learning applications in battery management systems. IEEE Trans. Intell. Transp. Syst. 2021, 22, 11119. [Google Scholar]

- Vardopoulos, I.; Papoui-Evangelou, M.; Nosova, B.; Salvati, E. Smart ‘Tourist Cities’ Revisited: Culture-Led Urban Sustainability and the Global Real Estate Market. Sustainability 2023, 15, 4313. [Google Scholar] [CrossRef]

- Xu, R. Framework for Building Smart Tourism Big Data Mining Model for Sustainable Development. Sustainability 2023, 15, 5162. [Google Scholar] [CrossRef]

- Madeira, C.; Rodrigues, P.; Gómez-Suárez, M. A Bibliometric and Content Analysis of Sustainability and Smart Tourism. Urban Sci. 2023, 7, 33. [Google Scholar] [CrossRef]

- Lata, S.; Jasrotia, A.; Sharma, S. Sustainable development in tourism destinations through smart cities: A case of urban planning in jammu city. Enlightening Tour. Pathmaking J. 2022, 12, 6911. [Google Scholar] [CrossRef]

- Zearban, M.; Abdelaziz, M.; Abdelwahab, M. The Temperature Effect on Electric Vehicle’s Lithium-Ion Battery Aging Using Machine Learning Algorithm. Eng. Proc. 2024, 70, 53. [Google Scholar] [CrossRef]

- Suanpang, P.; Pothipassa, P.; Jermsittiparsert, K.; Netwong, T. Integration of Kouprey-Inspired Optimization Algorithms with Smart Energy Nodes for Sustainable Energy Management of Agricultural Orchards. Energies 2022, 15, 2890. [Google Scholar] [CrossRef]

- Suanpang, P.; Pothipassa, P.; Jittithavorn, C. Blockchain of Things (BoT) Innovation for Smart Tourism. Int. J. Tour. Res. 2024, 26, e2606. [Google Scholar] [CrossRef]

- Zhao, J.; Liu, S.; Yin, Z.; Wang, S. Deep reinforcement learning for battery management system of electric vehicles: A review. CSEE J. Power Energy Syst. 2022, 8, 1334. [Google Scholar]

- Chan, C.K.; Wong, Y.; Sun, Q.; Ng, B. Optimizing Thermal Management System in Electric Vehicle Battery Packs for Sustainable Transportation. Sustainability 2023, 15, 11822. [Google Scholar] [CrossRef]

- Kosuru, V.S.R.; Balakrishna, K. A Smart Battery Management System for Electric Vehicles Using Deep Learning-Based Sensor Fault Detection. World Electr. Veh. J. 2023, 14, 101. [Google Scholar] [CrossRef]

- Liu, H.; Wang, Z.; Liu, L. A comprehensive review of energy management strategies for electric vehicles. Renew. Sustain. Energy Rev. 2020, 119, 109595. [Google Scholar] [CrossRef]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar] [CrossRef]

- Wang, Y.; Zhang, X.; Chen, Z. A review of battery management technologies and algorithms for electric vehicle applications. J. Clean. Prod. 2019, 215, 1279–1291. [Google Scholar] [CrossRef]

- Zhang, L.; Wang, H.; You, S. A review of machine learning for energy management in electric vehicles: Applications, challenges and opportunities. Appl. Energy 2021, 281, 115966. [Google Scholar] [CrossRef]

- Lombardo, T.; Duquesnoy, M.; El-Bouysidy, H.; Årén, F.; Gallo-Bueno, A.; Jørgensen, P.B.; Bhowmik, A.; Demortière, A.; Ayerbe, E.; Alcaide, F.; et al. Artificial Intelligence Applied to Battery Research: Hype or Reality? Chem. Rev. 2022, 122, 10899–10969. [Google Scholar] [CrossRef]

- Lombardo, A.; Nguyen, P.; Strbac, G. Q-Learning for Battery Management in Electric Vehicles: Theoretical and Experimental Analysis. Energies 2022, 15, 1234. [Google Scholar]

- Arunadevi, R.; Saranya, S. Battery Management System for Analysing Accurate Real Time Battery Condition using Machine Learning. Int. J. Comput. Sci. Mob. Comput. 2023, 12, 1–10. [Google Scholar] [CrossRef]

- Ezzouhri, A.; Charouh, Z.; Ghogho, M.; Guennoun, Z. A Data-Driven-based Framework for Battery Remaining Useful Life Prediction. IEEE Access 2023, 11, 76142–76155. [Google Scholar] [CrossRef]

- Tang, X.; Zhang, J.; Pi, D.; Lin, X.; Grzesiak, L.M.; Hu, X. Battery Health-Aware and Deep Reinforcement Learning-Based Energy Management for Naturalistic Data-Driven Driving Scenarios. IEEE Trans. Transp. Electrif. 2021, 6, 3417. [Google Scholar] [CrossRef]

- Swarnkar, R.; Harikrishnan, R.; Thakur, P.; Singh, G. Electric Vehicle Lithium-ion Battery Ageing Analysis under Dynamic Condition: A Machine Learning Approach. Africa Res. J. 2023, 24, 2788. [Google Scholar] [CrossRef]

- Baberwal, K.; Yadav, A.K.; Saini, V.K.; Lamba, R.; Kumar, R. Data Driven Energy Management of Residential PV-Battery System Using Q-Learning. In Proceedings of the 2023 IEEE International Conference on Recent Advances in Systems Science and Engineering (RASSE), Kerala, India, 8–11 November 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Matare, T.N.; Folly, K.A. Energy Management System of an Electric Vehicle Charging Station Using Q-Learning and Artificial Intelligence. In Proceedings of the 2023 IEEE PES GTD International Conference and Exposition (GTD), Istanbul, Turkey, 22–25 May 2023; pp. 246–250. [Google Scholar] [CrossRef]

- Bennehalli, B.; Singh, L.; Stephen, S.D.; Venkata Prasad, P.; Mallala, B. Machine Learning Approach to Battery Management and Balancing Techniques for Enhancing Electric Vehicle Battery Performance. J. Electr. Syst. 2024, 20, 885–892. [Google Scholar]

- Huang, J.; Wang, P.; Liu, Y. Emerging Trends and Market Dynamics in the Electric Vehicle Industry. Energy Rep. 2023, 8, 11504–11529. [Google Scholar]

- Lee, J.; Park, S. Reducing Grid Stress with Adaptive MARL for EV Charging. IEEE Trans. Ind. Electron. 2023, 69, 4931–4940. [Google Scholar]

| Metric | Q-learning | Traditional Approach |
|---|---|---|
| Average Energy Efficiency (%) | 92.5 | 88.3 |
| Average Battery Degradation Rate (%) | 0.8 | 1.5 |
| Total Operational Cost (THB) | 4520 | 5850 |

| Factor | Description | Data | Impact on Computational Complexity and Scalability |
|---|---|---|---|
| State and Action Space Size | The size of the state and action spaces directly influences the complexity of the Q-learning algorithm. As the number of states and actions increases, the Q-table grows. | 100–10,000 states, 4–100 actions | Larger spaces lead to a larger Q-table, requiring more memory and computation for updates. This can significantly impact scalability for very complex environments. |
| Convergence Rate | The speed at which the algorithm learns the optimal policy. | 100–10,000 iterations | Slower convergence necessitates more iterations, increasing computation time. This becomes more critical in complex environments where learning takes longer. |
| Memory Requirements | The amount of memory needed to store the Q-values for all state–action pairs. | 10 MB–1 GB memory | Larger state and action spaces lead to increased memory demands. This might become a bottleneck for problems with extensive state representations. |
| Larger State Spaces | As the state space grows, more computational resources and time are needed to explore and update Q-values. | 10,000–1,000,000 states | Higher complexity often corresponds to larger state spaces, potentially pushing the limits of Q-learning’s scalability due to the sheer number of Q-values to manage. |
| More Frequent Updates | Environments with frequent updates to Q-values increase computational complexity due to continuous exploration and updates. | Updates every episode to every step | May increase computational complexity, particularly in dynamic or rapidly changing environments. |
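As a rough check on the memory figures in the table above, the storage needed by a dense Q-table can be estimated directly from the state and action counts. The sketch below is illustrative only. It assumes one 8-byte floating-point value per state–action pair, and the helper names are hypothetical. It also shows why discretizing several state variables makes the state count grow exponentially with the number of variables.

```python
def q_table_entries(n_states: int, n_actions: int) -> int:
    """Number of Q-values in a dense table: one per state-action pair."""
    return n_states * n_actions


def q_table_bytes(n_states: int, n_actions: int, bytes_per_value: int = 8) -> int:
    """Approximate storage, assuming 8-byte floats (an assumption)."""
    return q_table_entries(n_states, n_actions) * bytes_per_value


def discretized_states(bins_per_variable: int, n_variables: int) -> int:
    """States produced by discretizing each variable into equal bin counts."""
    return bins_per_variable ** n_variables


# State/action counts taken from the ranges quoted in the table.
for n_states, n_actions in [(100, 4), (10_000, 100), (1_000_000, 100)]:
    megabytes = q_table_bytes(n_states, n_actions) / 1e6
    print(f"{n_states:>9,} states x {n_actions:>3} actions -> {megabytes:,.4f} MB")

# Hypothetical example: 10 bins each for SoC, temperature, and power demand.
print("States for 3 variables with 10 bins each:", discretized_states(10, 3))
```

Under these assumptions, 10,000 states with 100 actions need about 8 MB, and 1,000,000 states with 100 actions need about 800 MB, which is the same order of magnitude as the 10 MB–1 GB range reported in the table.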

| Stage | Resource | Measurement | Data | Impact |
|---|---|---|---|---|
| Training | CPU | Average CPU usage | 70–80% | High CPU usage during training can affect other processes running on the system. |
| Training | Memory | Peak memory usage | 2–4 GB | High memory usage can lead to memory exhaustion and system slowdowns. |
| Inference | CPU | Average CPU usage | 20–30% | CPU usage during inference should be monitored to ensure real-time performance. |
| Inference | Memory | Average memory usage | 100–200 MB | Memory usage during inference is lower, making it suitable for deployment on devices with limited processing power. |
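Resource figures of this kind can be gathered with standard profiling tools. The sketch below is one possible approach using only the Python standard library, not the measurement procedure used in this study. It records CPU time with `time.process_time` and peak Python-level allocations with `tracemalloc`, which differ from the system-wide CPU percentage and process memory reported in the table. The `profile` helper and the stand-in workload `build_q_table` are hypothetical.

```python
import time
import tracemalloc


def profile(fn, *args, **kwargs):
    """Run fn and return (result, cpu_seconds, peak_bytes).

    peak_bytes covers only memory allocated through Python's allocator
    while tracing is active, not the whole process footprint.
    """
    tracemalloc.start()
    cpu_start = time.process_time()
    try:
        result = fn(*args, **kwargs)
        cpu_seconds = time.process_time() - cpu_start
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, cpu_seconds, peak_bytes


def build_q_table(n_states, n_actions):
    """Stand-in workload: allocate a zero-initialized Q-table."""
    return [[0.0] * n_actions for _ in range(n_states)]


table, cpu_s, peak = profile(build_q_table, 10_000, 100)
print(f"CPU time: {cpu_s:.3f} s, peak traced memory: {peak / 1e6:.1f} MB")
```

The same wrapper could be applied to a training loop or to a single inference call to compare the two stages on a given target device.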

| Performance Metric | Description |
|---|---|
| Training Convergence Time | The time taken for the Q-learning algorithm to achieve an acceptable level of performance during the training phase. This could be measured by observing the average reward achieved by the agent over time or by tracking the change in Q-values. |
| Inference Latency | The time delay between receiving input from the environment (e.g., state of the game) and producing an output (e.g., action to be taken) during the deployment stage. This is crucial for real-time applications where quick decisions are necessary. |
| Overall Computational Efficiency | A combined measure of the algorithm’s efficiency considering both training and inference stages. This could involve metrics like total training time, memory usage during training and inference, and energy consumption (if relevant). |
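Inference latency for a tabular policy is the time needed to look up the best action for a given state. The following sketch shows one way this could be measured with `time.perf_counter`. The Q-table values, the helper names, and the choice of median and 99th-percentile statistics are assumptions made for illustration, not part of the original evaluation.

```python
import statistics
import time


def greedy_action(q_table, state):
    """Return the index of the highest-valued action for a state."""
    row = q_table[state]
    return max(range(len(row)), key=row.__getitem__)


def measure_latency(q_table, states, repeats=1000):
    """Time repeated greedy lookups; return median and 99th-percentile seconds."""
    samples = []
    for i in range(repeats):
        state = states[i % len(states)]
        start = time.perf_counter()
        greedy_action(q_table, state)
        samples.append(time.perf_counter() - start)
    samples.sort()
    return {
        "median_s": statistics.median(samples),
        "p99_s": samples[int(0.99 * (len(samples) - 1))],
    }


# Hypothetical two-state, three-action Q-table used only for the demo.
q_table = [[0.0, 1.0, 0.5], [2.0, 0.0, 0.1]]
stats = measure_latency(q_table, states=[0, 1])
print(f"median: {stats['median_s'] * 1e6:.2f} us, p99: {stats['p99_s'] * 1e6:.2f} us")
```

Reporting a high percentile alongside the median is a design choice: for real-time control, occasional slow decisions matter more than the typical case.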

| Condition | Problem Size | Environmental Complexity | Q-learning Time (Estimated Range) | Alternative Approach Time (Estimated Range) |
|---|---|---|---|---|
| Small problem size | Small | Low | 1–10 s | 0.1–1 s |
| Medium problem size | Medium | Moderate | 10–100 s | 1–10 s |
| Large problem size | Large | High | 100–1000 s (or more) | 10–100 s (or more) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Suanpang, P.; Jamjuntr, P. Optimal Electric Vehicle Battery Management Using Q-learning for Sustainability. Sustainability 2024, 16, 7180. https://doi.org/10.3390/su16167180
