1. Introduction

Artificial intelligence has attracted a lot of attention in recent times. It has great potential and can give promising results. AI’s ability to recognize and learn from data and images in different formats is of great benefit. Various industries are currently trying to incorporate artificial intelligence into their daily activities. Agriculture is one of the industries that started implementing AI to achieve a more efficient and faster way of doing things. The role that artificial intelligence (AI) plays in agriculture is becoming more important as the world population grows and the climate changes.

Crops are affected by a wide variety of pests. Pests have been one of the biggest challenges in the agricultural sector for years [1]. Pests reduce the yield and quality of agricultural production; about fifty percent of the crop yield is destroyed through pest infestation [2]. Global losses due to pests range between 17% and 23% for major crops [3]. Consequently, careful pest control is an important task to reduce crop losses and improve crop yields globally. Pests should be identified at an early stage of infestation, so that farmers can intervene in a timely manner and prevent the pests from spreading. Most farmers use traditional methods to identify and treat pest infestation. However, these traditional methods have many drawbacks. Firstly, the most widely used identification method is manual: farmers themselves, with some expert advice, inspect the field by hand on a daily, weekly, or monthly basis to detect any signs of pests. Secondly, there is an enormous number of pest types and species that belong to the same family [4], as well as a wide diversity of cultivated crops, which makes pests difficult to identify and detect manually. Thirdly, the regular emergence and re-emergence of pests also plays a vital role. As a result, many conventional techniques are unable to detect pests at an early stage, and the use of sprayers to remove pests damages the plant along with the pests and contaminates the crops, which creates many health issues later [5]. Traditional pest detection methods are prone to errors, time consuming, and tedious to perform. Additionally, they may pose health risks.

Machine learning (ML) algorithms have played an important role in automating pest detection [6,7]. Chodey et al. [8] used k-means and expectation–maximization clustering to detect and identify pests in crops. Detection relies on manually extracting features from the dataset, which is slow for high-resolution images and becomes tedious on a larger dataset. In another study, a support vector machine was used to detect pests in crops [9]. After acquiring the dataset, the features were extracted manually by removing the background and applying histogram equalization, contrast stretching, and noise removal to the images. This method achieved higher accuracy, but feature extraction becomes tedious and time consuming if a larger dataset with a variety of pest species is used. Although traditional ML algorithms have been shown to work well when the number of crop pest species is low, they become ineffective when many features must be extracted manually.

Object detection with deep learning has various applications in agriculture [10]; it is used for weed detection [11,12], pest detection [13,14], and yield prediction [15,16], and has many other applications in agriculture and other fields [17,18]. It has recently been applied to detect and classify different pests and has achieved strong results in many pest detection and classification applications [19]. Objects can be detected in still images or in real time without manually extracting features from each image [20]. Object detection in deep learning uses different state-of-the-art convolutional neural networks (CNNs) to detect a variety of objects [21]. Pest detection using deep learning also comes with serious challenges, such as working in a wild environment, detecting small-sized pests, detecting pests of the same color as the plant, and detecting different kinds of pests on the same plant species [22].

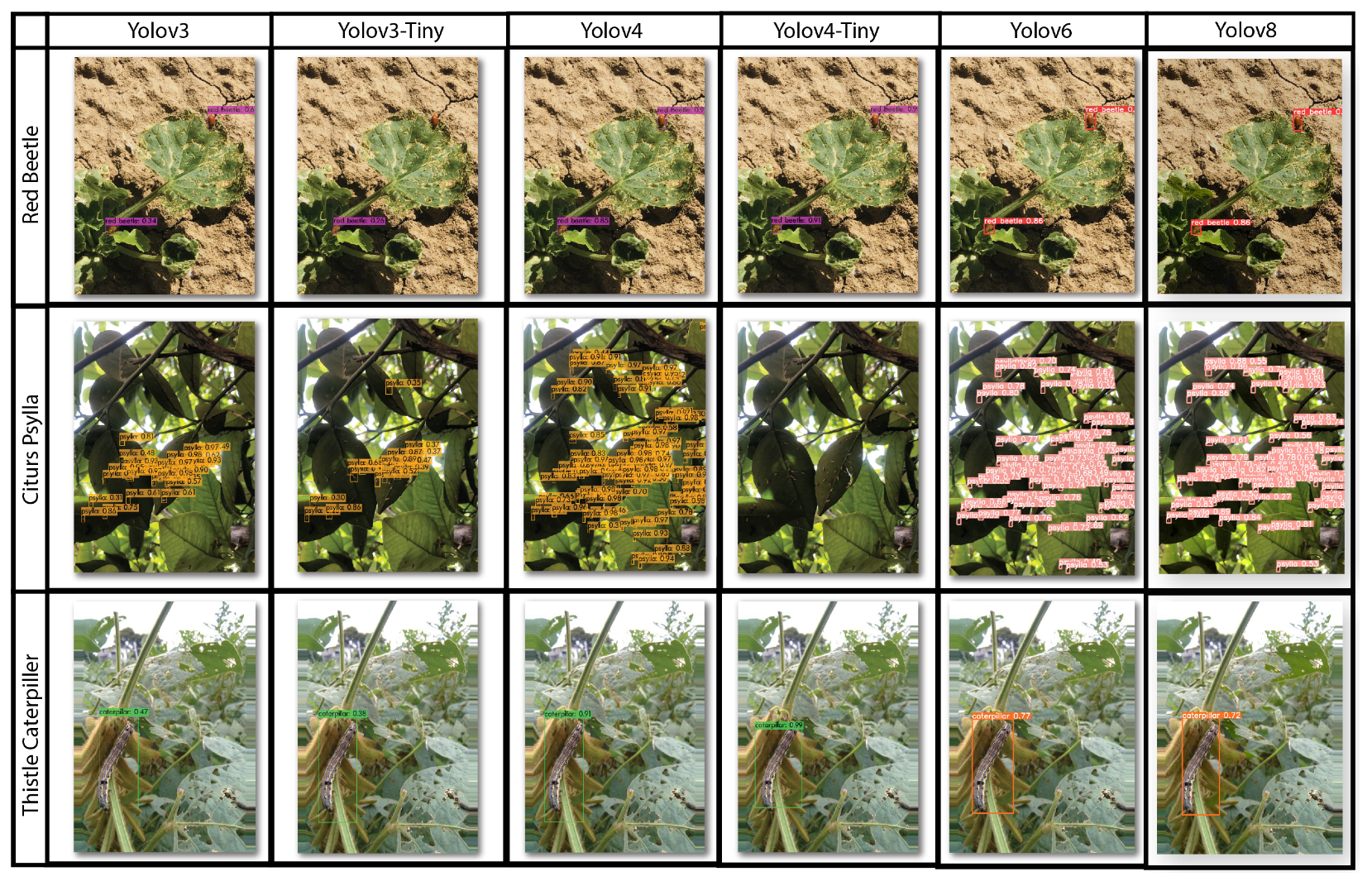

In this paper, object detection is implemented to detect thistle caterpillars, citrus psylla, and red beetles. Six state-of-the-art object detection convolutional neural networks (CNNs) are used, namely Yolov3, Yolov3-Tiny, Yolov4, Yolov4-Tiny, Yolov6, and Yolov8. The following sections describe the dataset, the training process, and the evaluation of all models in terms of detection accuracy and localization. The remainder of the article is structured as follows.

Section 2 provides an explanation of the literature where different AI-/deep learning-/machine learning-based approaches have been used for pest management.

Section 3 gives details about the deep learning algorithms that are implemented in this work.

Section 4 gives an overview of the dataset and training of the models used in the study.

Section 5 goes through the outcomes and performance comparisons of all the models used.

Section 6 gives the study’s conclusion.

2. Review of the Literature

The literature discusses different aspects of pest detection. A variety of datasets and detection algorithms have been proposed for detecting small pests and different pest species under different environmental conditions. Li et al. [13] presented a framework for the detection of whitefly and thrips in which images were captured using sticky traps and the data were collected in a greenhouse environment. A two-stage custom detector was created and compared with Faster R-CNN, which shows weak performance in recognizing small pests; this model uses images from sticky traps, which in turn yields lower accuracy when tested on images with complex backgrounds. The aim of [14] was to build models that identify three types of pest moths in pheromone trap images using deep learning and object detection. Pheromone traps were set up and seven different deep learning object detection models were applied to compare speed and accuracy. Faster R-CNN gave the highest accuracy in terms of mAP. However, the dataset used in that study had limitations, since the images were not real images taken in a field. Barbedo and Castro [23] provide a comprehensive overview of the current state of automatic pest detection using proximal images and machine learning techniques. They highlighted the challenges faced in building robust pest detection systems and the need for more comprehensive pest image databases, and suggested solutions such as involving farmers and entomologists through citizen science initiatives and data sharing between research groups. They also reviewed the limitations of current monitoring techniques, such as the cost and complexity of high-density imaging sensors, and the need for better edge computing solutions for effective data processing. The study concludes that automating pest monitoring is a challenging task, but has potential for practical applications given the advances in machine learning algorithms.

Rustia et al. [24] implemented deep learning models for the automatic detection of pests from traps. A CNN model was applied to detect and filter out irrelevant objects from the traps. The main goal of this study was to present a model for counting the number of pests present in the respective images. Li et al. [13] aimed to build a deep learning-based detection model for whitefly and thrips from images gathered in a controlled environment. For detecting tiny pests on sticky traps, a hybrid model known as TPest-RCNN was applied. In [25], a pre-trained deep CNN-based framework was put forward for the classification and recognition of tomato pests. DenseNet169 gave the highest accuracy of 88.83%.

Hong et al. [26] detected the pest Matsucoccus thunbergianae, which is found on black pine, using pheromone traps. Several state-of-the-art deep learning object detection algorithms were used to count and detect pests in pheromone trap images. However, the trained algorithm may perform less well on images from real-time and complex environments.

De Cesaro Júnior and Rieder [27] provided a survey of deep learning and computer vision techniques for detecting pests and diseases in plants. It was observed that CNNs need to be customized for feature extraction to obtain better classification accuracy. The survey also explained that image resolution, hardware quality, and dataset size influence the training of CNNs as well as the accuracy and efficiency of the algorithm.

Turkoglu et al. [28] designed a hybrid model named Multi-model LSTM-based Pre-trained Convolutional Neural Networks (MLP-CNNs), which combines an LSTM network with pre-trained CNN models. Several feature extraction models were used, including AlexNet, GoogleNet, and DenseNet201. Deep features extracted from pest images are forwarded to the LSTM layer to build a hybrid model that detects pests and diseases in apples.

Jiao et al. [29] presented an anchor-free region convolutional network to recognize and classify agricultural pests into 24 classes. The main contribution of this study was a feature fusion model that generates fused feature maps, which is helpful for multi-scale pest detection and for detecting small pests. However, the images used in this study were obtained from Google; a better dataset collected in real time could give better results. Their proposed model achieved a mAP of 56.4%, which leaves room for improvement; the pests were very small in the images, which greatly affected the overall accuracy.

Patel and Bhatt [30] presented multi-class pest detection using the Faster R-CNN model. A small dataset was used and the results were compared with a focus on the effect of image augmentation on accuracy. The limitation of this study was its small dataset consisting of trap images.

Rahman et al. [31] developed a deep learning-based methodology for detecting and recognizing diseases and pests in rice plant images. VGG16 and InceptionV3 were used and improved to detect and identify rice diseases and pests. The results were then compared with MobileNet, NasNet Mobile, and SqueezeNet.

Legaspi et al. [32] used Yolov3 with the Darknet architecture to detect whiteflies and fruit flies. The model struggles to detect small pests in complex environments, resulting in low accuracy. Yolov2 and Yolov3 were applied in [33] to detect pests and diseases in tea plantations. The models achieved accuracies of 58% and 60.8% in detecting small pests. An improved deep learning algorithm could be utilized for small object detection.

Önler and Eray [34] developed a deep learning-based object detection system to detect thistle caterpillars in real time, based on the Yolov5 single-stage object detector. They used a public dataset and achieved a maximum mAP of 59% at 65 FPS. The Yolov5 model was trained with transfer learning and could detect different caterpillar species under different environmental lighting conditions, with the maximum accuracy achieved by Yolov5m. The accuracy of the object detection system can be improved by increasing the dataset size with new images, using a variety of data augmentation methods, and using a different deep learning model for object detection.

Although the studies discussed above show good performance on pest detection, they also face challenges that are still difficult to overcome, such as the image background, detection under varying light conditions, and the detection of small pests. Therefore, in this study, state-of-the-art CNN object detection models are deployed for pest detection in agricultural fields under different light conditions and environments.

3. Background

In this section, an overview of the object detection model Yolo and its six different variants used in this work for pest detection is presented.

3.1. Yolo for Pest Detection

You Only Look Once (Yolo) is a model that detects and recognizes multiple objects in an image or video. It can also detect objects in real time with good speed and accuracy. It treats object detection as a regression problem and outputs the class probabilities of the detected objects.

Object detection using Yolo is performed with convolutional neural networks. To detect and classify objects, Yolo uses a neural network with a single forward pass. The algorithm offers high speed and accuracy for detecting and classifying multiple objects in an image, a video, or a real-time stream. The Yolo algorithm has many variants. In this study, six variants of Yolo are applied to detect multiple pests.

3.2. Yolov3

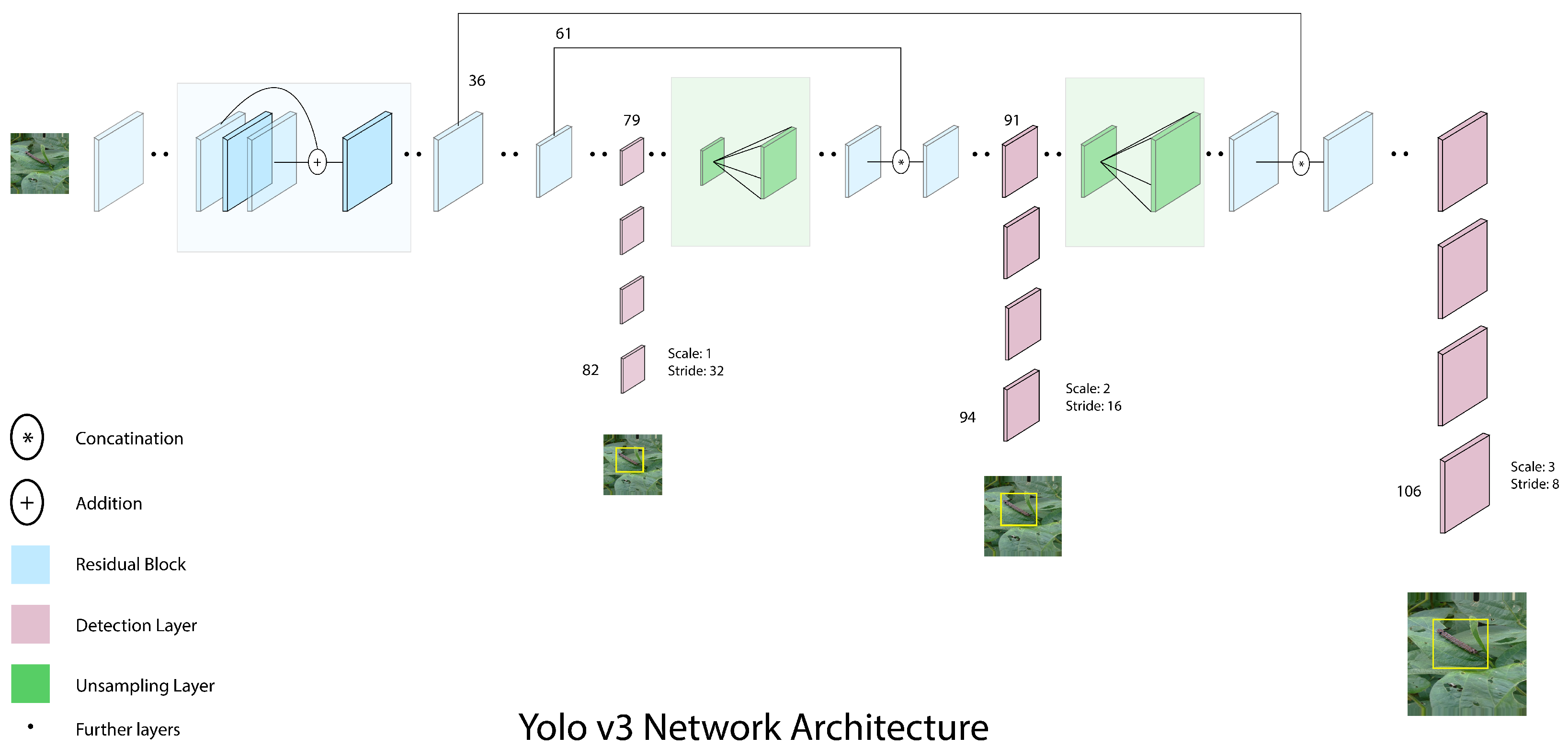

Yolov3 is a state-of-the-art object detection model [35]. It maintains high speed and accurate predictions for object detection and is a great improvement over its predecessor, Yolov2. The most notable improvement is the change in the overall network structure: it utilizes Darknet-53 to extract multi-scale features to detect objects.

Yolov3 is built on a 53-layer convolutional backbone known as Darknet-53; for the detection task, a further 53 layers are stacked on top, for a total of 106 layers, as shown in Figure 1 [36]. Yolov3 includes important elements such as residual blocks, skip connections, and upsampling, with each convolutional layer followed by a batch normalization layer and a leaky ReLU activation function. There are no pooling layers; instead, extra convolutional layers with stride 2 are used to downsample feature maps. This is done to prevent the loss of low-level features that pooling layers discard. As a result, capturing low-level features helps to improve the ability to detect small objects.

In Yolov3, object detections are performed on three different layers, specifically at positions 82, 94, and 106 within the network architecture. As the network processes the input image, it downsamples the resolution by factors of 32, 16, and 8, respectively, at these three different locations. These downsampling factors, also known as strides, indicate that the output at each of these layers is smaller than the input to the network. By performing detections at multiple scales within the network, Yolov3 is able to detect objects of different sizes and aspect ratios with high accuracy.
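As a small illustration of the three detection scales, the following sketch computes the side length of the square feature map at each stride. The function name is hypothetical, and the 416-pixel input is taken from the training configuration used later in this work.

```python
def detection_grid_sizes(input_size, strides=(32, 16, 8)):
    """Return the side length of the square feature map at each detection stride."""
    if any(input_size % s != 0 for s in strides):
        raise ValueError("input size must be divisible by every stride")
    return [input_size // s for s in strides]


# A 416 x 416 input yields 13 x 13, 26 x 26, and 52 x 52 detection grids.
print(detection_grid_sizes(416))
```

The coarsest grid (stride 32) covers large objects, while the finest grid (stride 8) is the one most relevant for small pests.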

For prediction, Yolov3 calculates offsets relative to the anchors, using a log-space transform for the box dimensions, and predicts the center coordinates of the bounding boxes. The predicted center offsets are passed through the sigmoid function, which keeps each center within its grid cell and helps stabilize training. The objectness score is predicted from the probability that a box contains an object and the IoU between the predicted and ground-truth boxes.
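The box decoding step can be sketched as follows, assuming the standard Yolov3 parameterization in which the center offsets are passed through a sigmoid and the width and height are exponentials scaled by the anchor size. The function names, the grid cell, and the anchor values below are illustrative assumptions and are not taken from the configuration of this study.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(t, cell, anchor, stride):
    """Decode raw network outputs into a box in input-image pixels.

    t      -- (tx, ty, tw, th), raw outputs for one anchor in one grid cell
    cell   -- (cx, cy), column and row index of the grid cell
    anchor -- (pw, ph), anchor width and height in pixels (illustrative values)
    stride -- downsampling factor of the detection layer (32, 16, or 8)
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = anchor
    bx = (sigmoid(tx) + cx) * stride  # center x, constrained to its cell
    by = (sigmoid(ty) + cy) * stride  # center y, constrained to its cell
    bw = pw * math.exp(tw)            # log-space transform of the width
    bh = ph * math.exp(th)            # log-space transform of the height
    return bx, by, bw, bh


# Zero outputs place the center in the middle of cell (6, 6) with the anchor's size.
print(decode_box((0, 0, 0, 0), cell=(6, 6), anchor=(116, 90), stride=32))
```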

3.3. Yolov3-Tiny

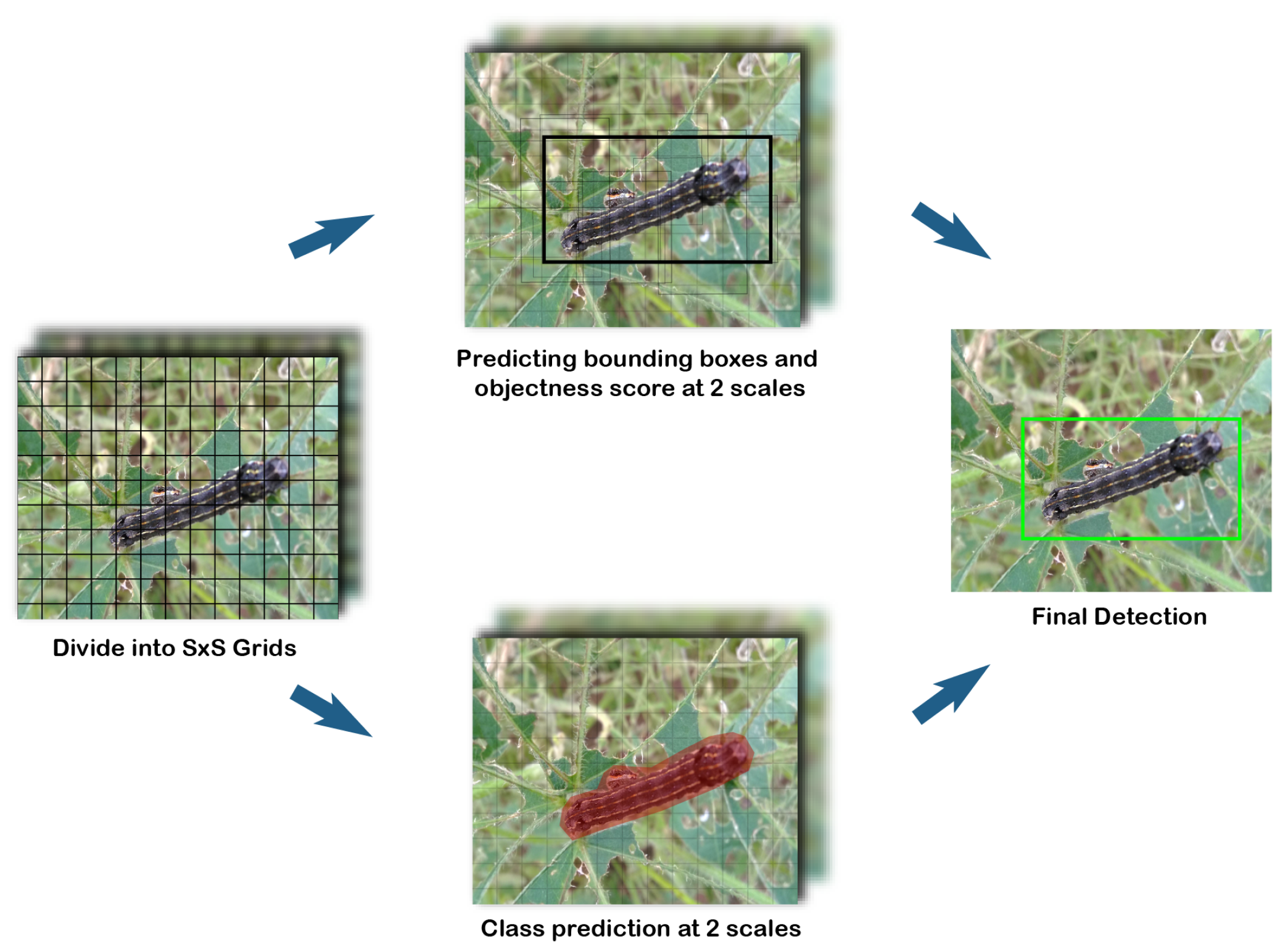

Yolov3-Tiny is a lightweight version of Yolov3 [35]. It has fewer convolutional layers than Yolov3. Yolov3-Tiny uses a reduced Darknet-based backbone, rather than the full Darknet-53, for extracting features. Figure 2 [37] illustrates how Yolov3-Tiny works [38]. It employs pooling layers while reducing the number of convolutional layers. It first splits the image into S × S grid cells, then predicts a three-dimensional tensor encoding the bounding box, objectness score, and class predictions at two distinct scales. In the last stage, bounding boxes that do not have the best objectness score are ignored for the final detection of objects.

Yolov3-Tiny has a simple structure, as shown in Figure 3 [38,39]. It contains nine convolutional and six max-pooling layers to carry out feature extraction. The bounding boxes are predicted at two different scales: a 13 × 13 feature map and a merged feature map obtained by merging a 26 × 26 feature map with an upsampled 13 × 13 feature map. This simple structure gives Yolov3-Tiny a very fast detection speed, but accuracy is sacrificed compared to Yolov3.
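To make the two-scale output concrete, the sketch below computes the shape of the prediction tensor at each scale. It assumes three anchor boxes per grid cell and five box values (four coordinates plus objectness) per anchor, as in the standard Yolov3 family; the function name is hypothetical.

```python
def yolo_output_shapes(input_size, num_classes, strides, boxes_per_cell=3):
    """Return (grid, grid, depth) for each detection scale.

    depth = boxes_per_cell * (4 box coordinates + 1 objectness + num_classes)
    """
    depth = boxes_per_cell * (5 + num_classes)
    return [(input_size // s, input_size // s, depth) for s in strides]


# Two scales (strides 32 and 16) for a 416 x 416 input and three pest classes.
print(yolo_output_shapes(416, num_classes=3, strides=(32, 16)))
```

For a 416 × 416 input and three classes, this gives a 13 × 13 and a 26 × 26 grid, each with a depth of 24.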

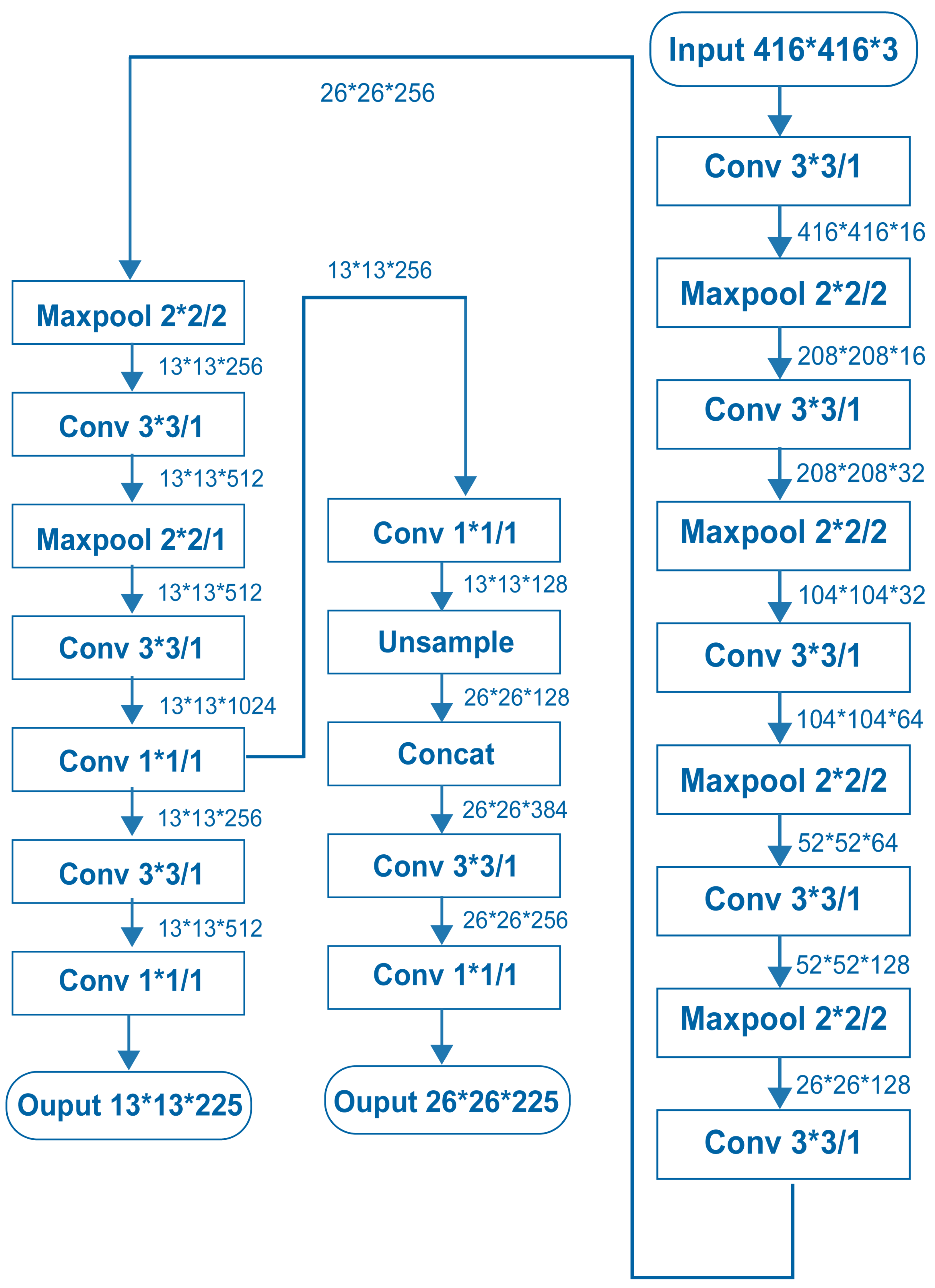

3.4. Yolov4

Yolov4 is a framework for real-time object detection [40]. It can be trained for both localization and classification at a fast speed and achieves high accuracy for both. Yolov4 achieves superior performance in comparison with the previous versions of Yolo [40]. Its architecture is composed of three main parts, as shown in Figure 4 [41]. Firstly, the backbone, in which CSP-Darknet-53 is employed for the training of the object detection model. Secondly, the neck, in which spatial pyramid pooling (SPP) is combined with a path aggregation network (PANet) to extract feature maps from different layers. Lastly, the head, for which Yolov4 uses the Yolo head. The CSP-Darknet-53 network combines a cross-stage partial network (CSPNet) with the Darknet-53 model. Most object detection models require heavy computation and a relatively large amount of computation time. CSPNet addresses this issue and reduces the computational cost [40]. It does so by integrating the feature maps from the start and the end of a network stage. The neck in Yolov4 combines feature maps of various scales: it utilizes SPP to concatenate feature maps of various scales toward the end of the network and uses PANet to embed another layer-based feature pyramid network.

3.5. Yolov4-Tiny

Yolov4-Tiny is a scaled-down version of Yolov4. It has a faster detection speed and higher accuracy than Yolov3-Tiny [42]. Yolov4-Tiny’s network architecture is depicted in Figure 5 [43]. Yolov4-Tiny’s backbone network is significantly simpler than that of Yolov4. A feature pyramid network is used with 32× and 16× downsampling to provide two feature maps of different sizes for detection, which enhances detection accuracy. The Yolov4-Tiny network consists of several fundamental components. The CBL module comprises a convolution (Conv), batch normalization (BN), and the leaky ReLU activation function. To achieve downsampling, the stride of the convolution kernel is set to 2. To further integrate feature information, the CSP module follows the CSPNet network topology and is made up of the CBL module and the concat tensor splicing module.

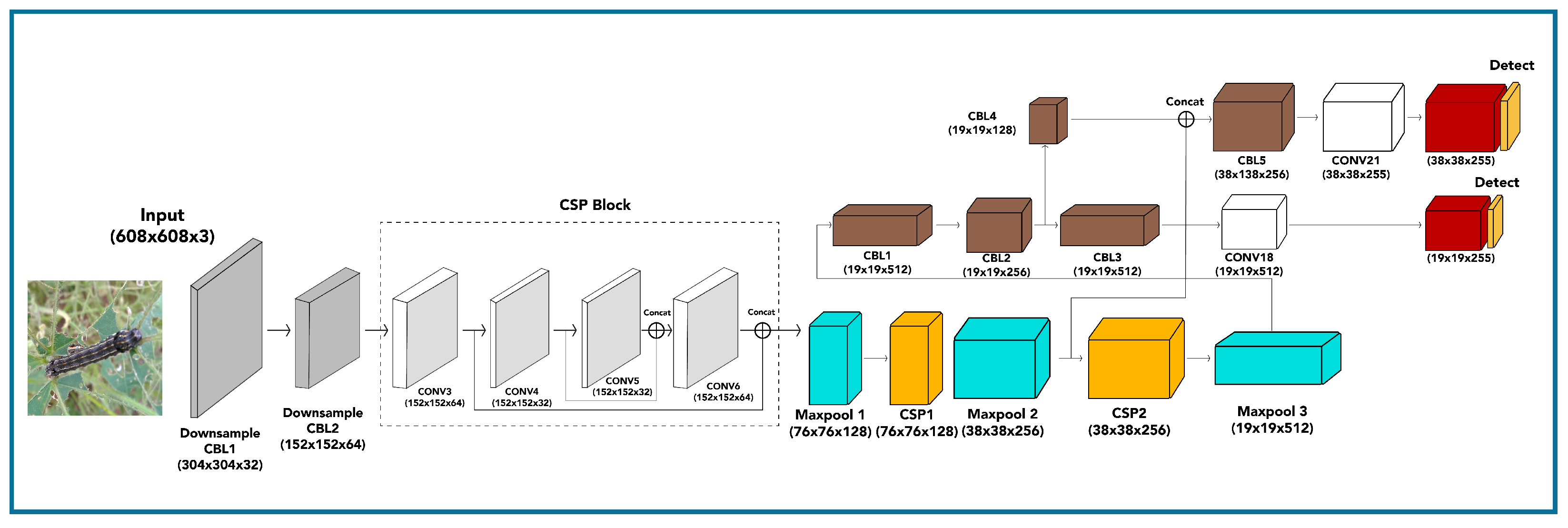

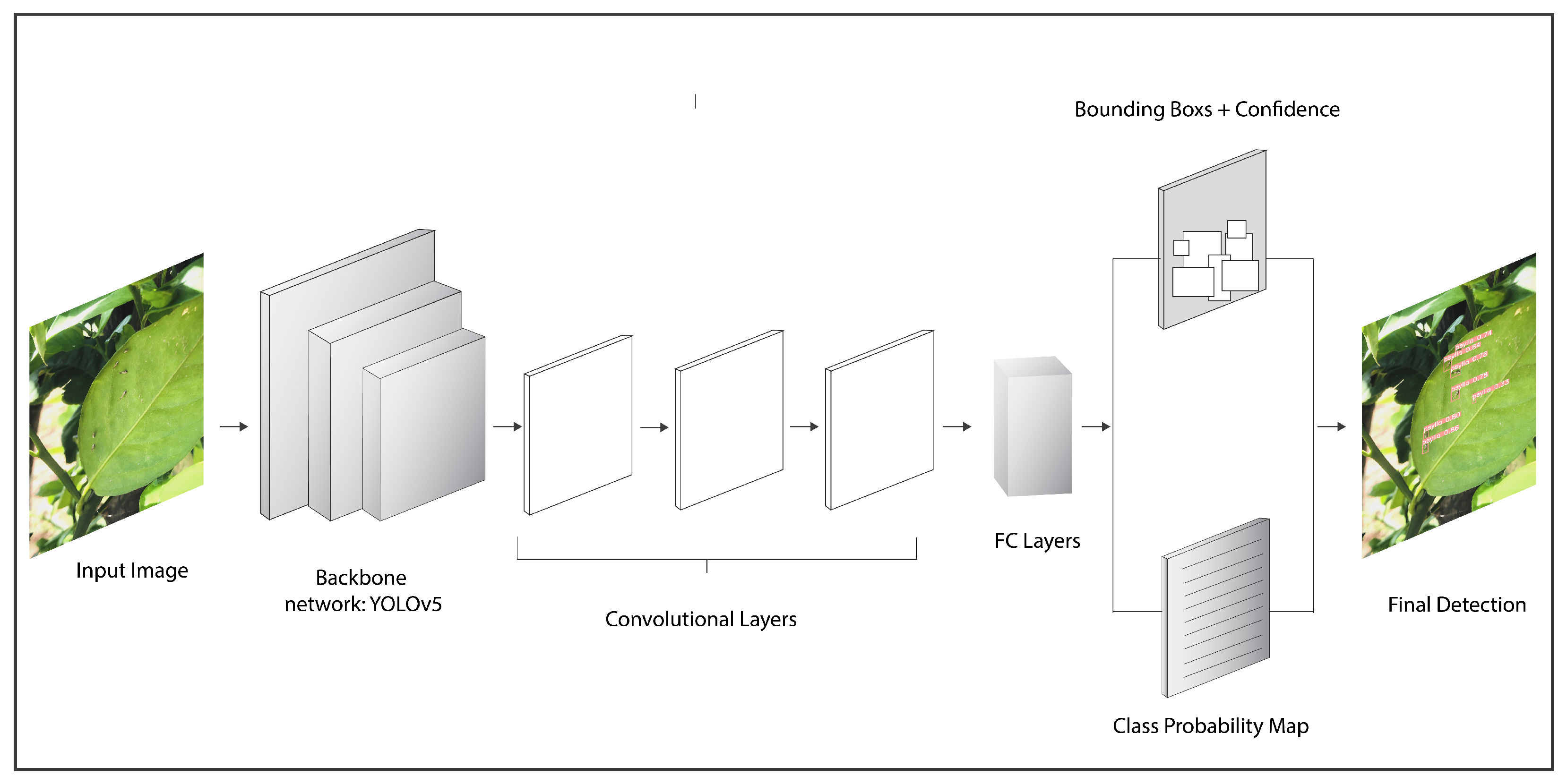

3.6. Yolov6

Yolov6 is a single-stage object detection framework released by [44]. It directly predicts object bounding boxes for an image in one stage. The Yolov6 architecture consists of a backbone network based on Yolov5, a region proposal network, region of interest (RoI) align, and a fully connected layer; see Figure 6. For detection, the image is divided into grid cells and bounding boxes are predicted for each object in the image. Its object detection capability has been demonstrated to be comparable to that of previous CNN-based Yolo algorithms [35], with the algorithm’s successive iterations showing gains in both speed and accuracy [40]. In this study, Yolov6s is applied to detect the pests.

3.7. Yolov8

Yolov8 is an advanced model that builds on the achievements of prior Yolo versions by introducing new features and improvements to increase its efficiency and adaptability [45]. A comparison can be seen in Figure 7 [45]. The model employs an anchor-free detection approach and incorporates new convolutional layers to improve the precision of its predictions. This enabled us to achieve highly accurate pest detection results.

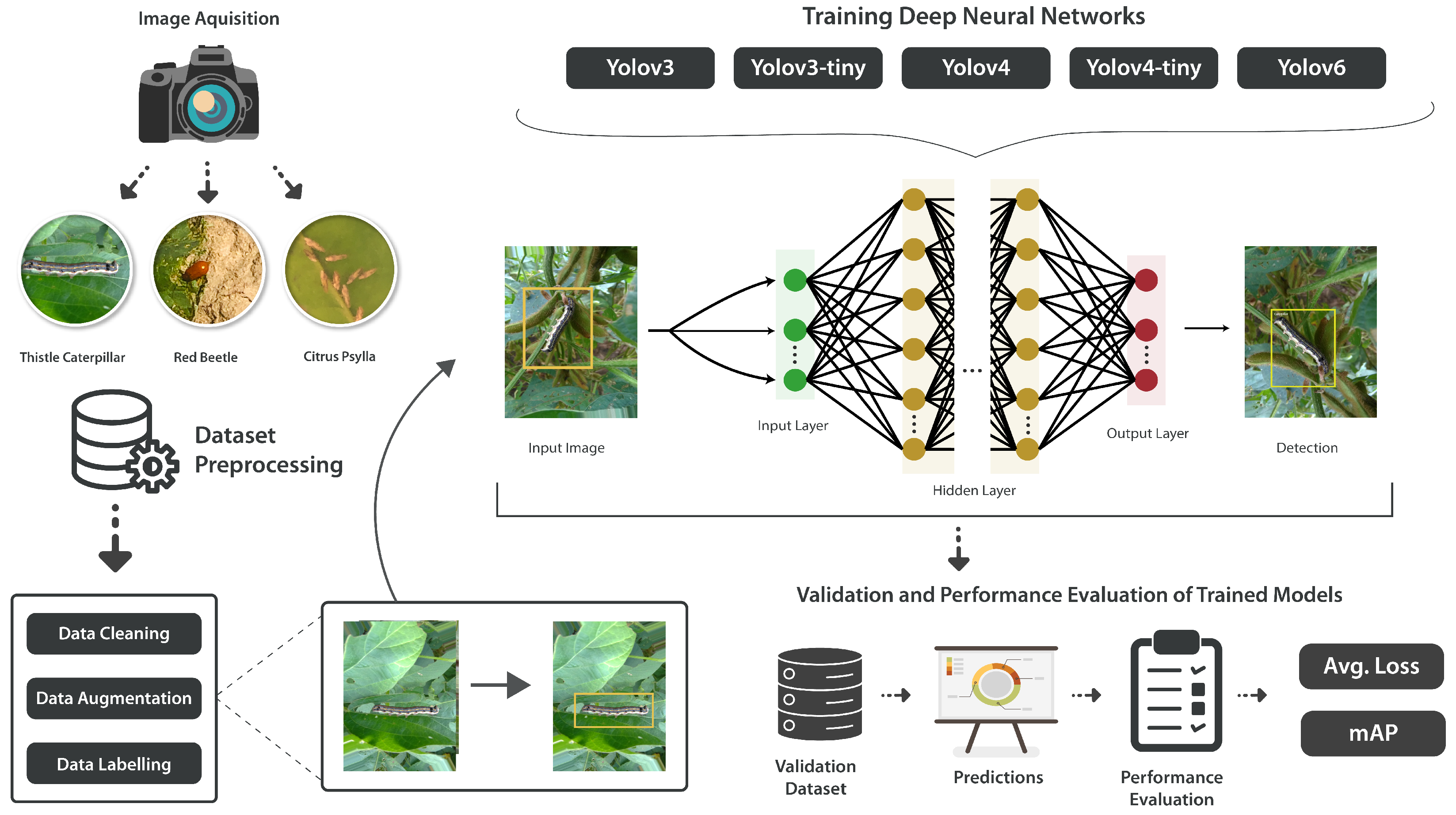

4. Methodology

This part provides an overview of the methodology, a description of the dataset and its pre-processing information, and the experimental setup.

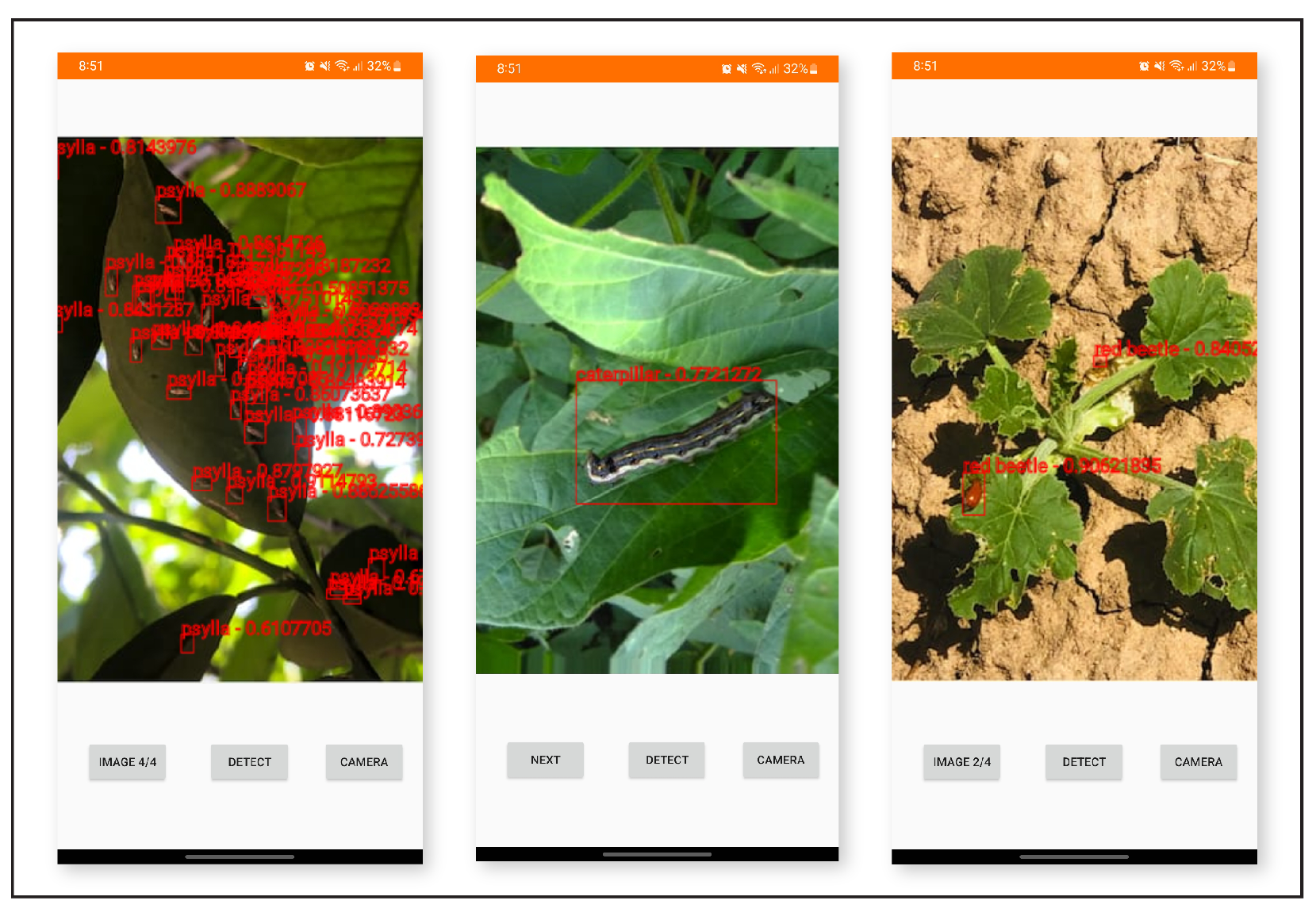

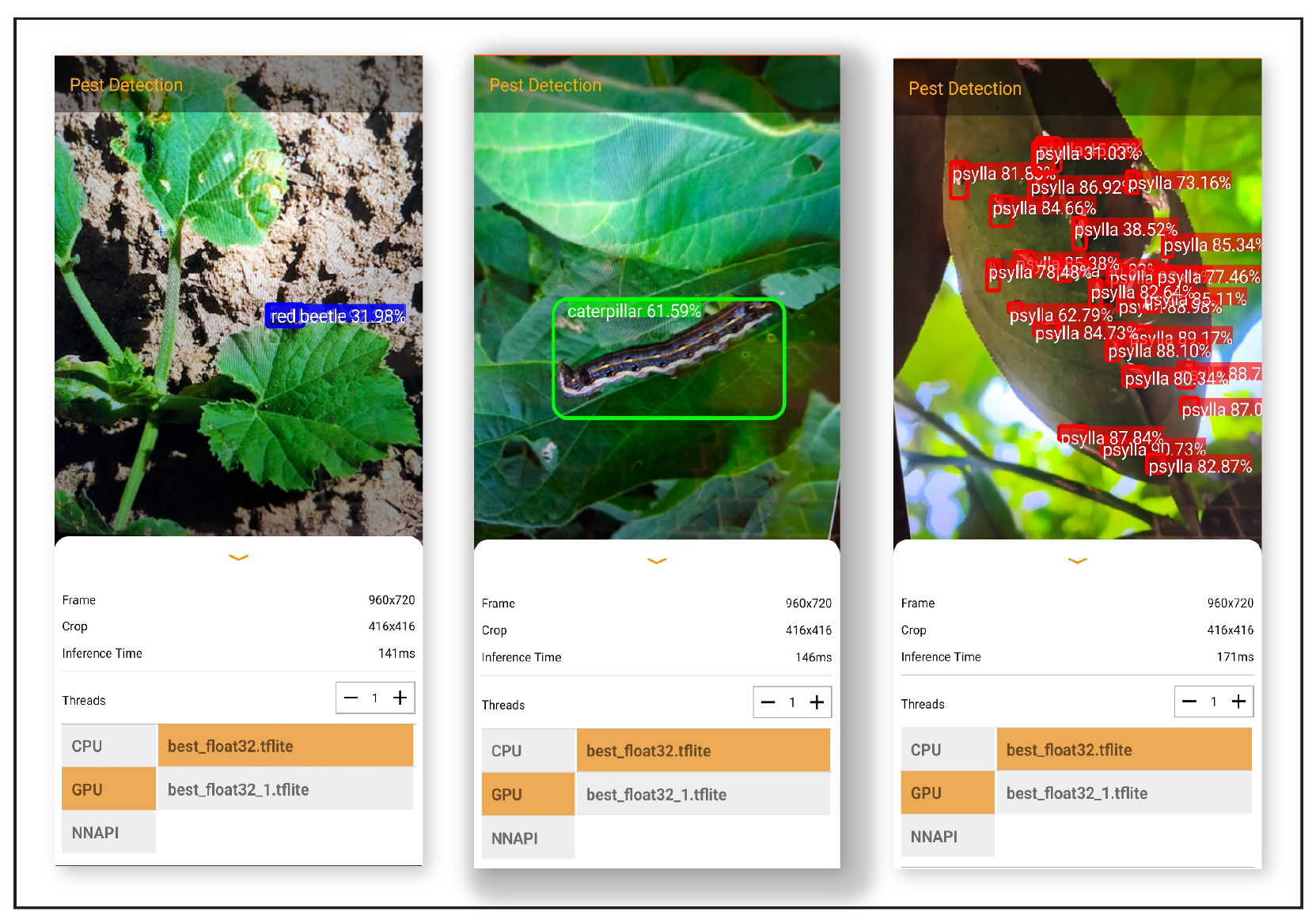

4.1. Detection Workflow

First, data exploration is carried out to understand the data, and data augmentation is applied. Then, the image data are labeled in Yolo_Mark in text (.txt) format, which is required for the architectures used. Subsequently, the dataset is split into training, validation, and testing sets. Following this, the models are trained on the given dataset. After training, the models are evaluated on the testing dataset to check the overall detection accuracy of each model. Next, the results of each model are discussed and compared. Finally, the Yolov8 model is integrated into an Android application for real-time pest detection. An overview of the methodology adopted in this study for pest detection is given in Figure 8.

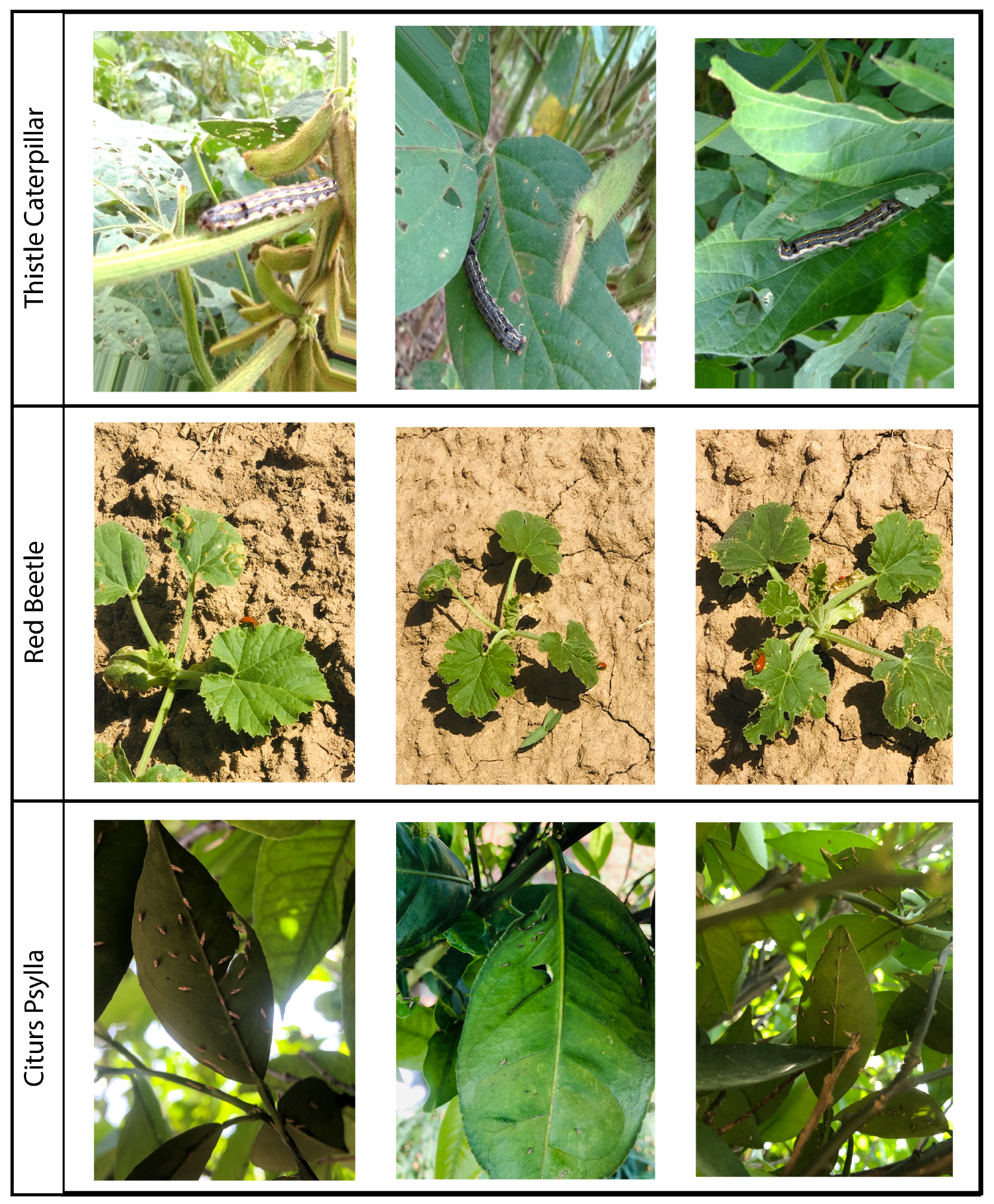

4.2. Dataset

The dataset consists of images of three pests, namely thistle caterpillars (Vanessa cardui), red beetles (Aulacophora foveicollis), and citrus psylla (Diaphorina citri). Images of thistle caterpillars were obtained from [46], whereas images of red beetles and citrus psylla were captured manually using an iPhone X at a resolution of 3032 × 4032. All of the images were captured at different times of the day, such as dawn, morning, noon, and afternoon, and under a variety of lighting conditions, including direct sunlight, indirect sunlight, shade, and mixed lighting. To enrich the dataset, the images were further augmented, yielding a combined dataset of three classes with 9875 pest images in total. An overview of the images from the dataset is given in Figure 9.

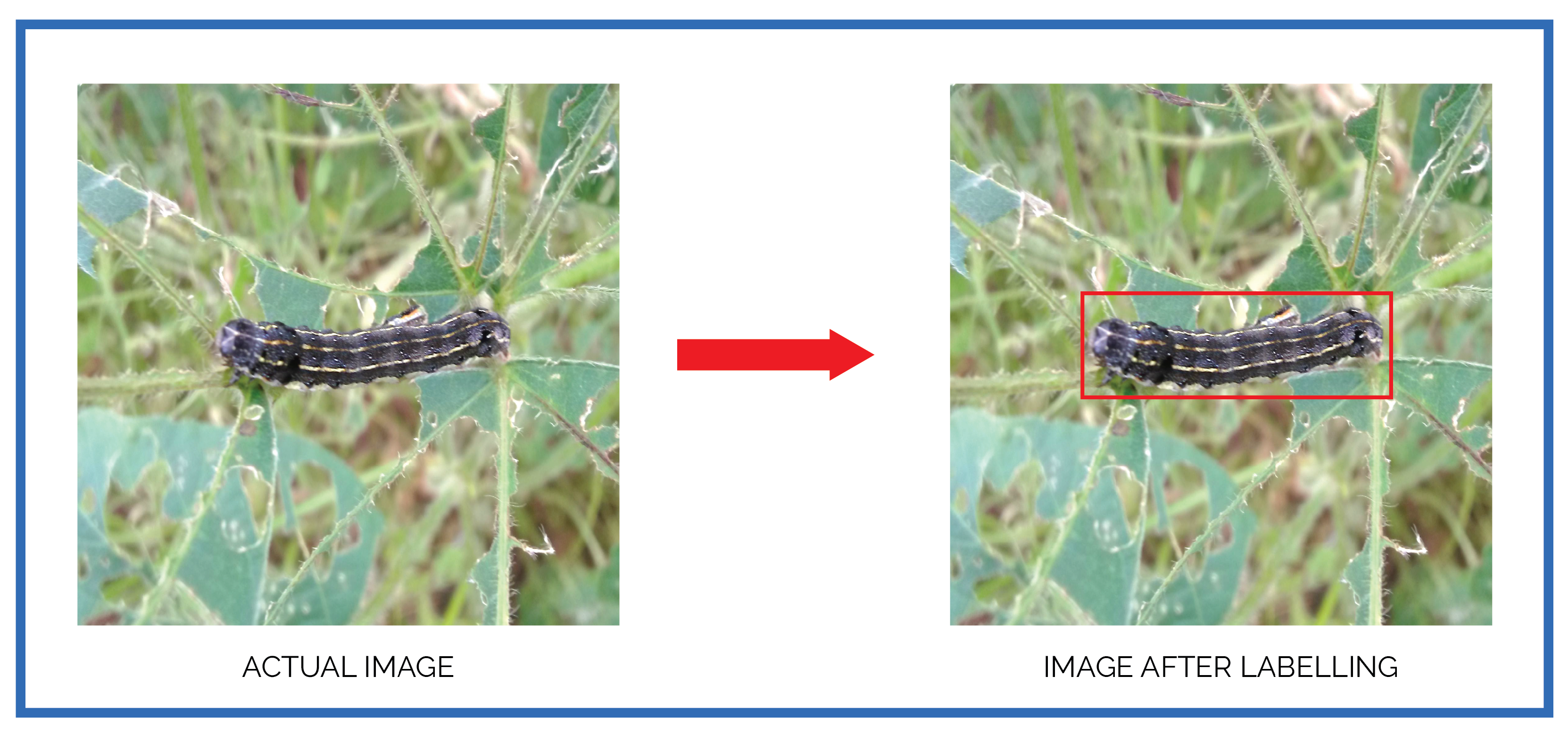

Data Labeling

Yolo_Mark is used as the annotation tool to manually mark the location of each pest in every image with a bounding box. The annotations are saved in txt format. The purpose of this annotation is to label the pest class and its position in the image. The outcome of this procedure is a set of bounding box coordinates of variable size with their associated classes, against which the network predictions are assessed using intersection-over-union (IoU) during testing. To make this clearer, an annotated bounding box is shown in Figure 10. The red box encloses the caterpillar-infested section of the plant as well as parts of the background.
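The annotation and its later use can be sketched as follows. The first function writes one label line in the normalized layout commonly used by Yolo-style annotation tools (class index, box center, width, and height, all relative to the image size), and the second computes the IoU between two boxes. Both function names, the class index, and the example coordinates are hypothetical; the exact files produced in this study are not reproduced here.

```python
def to_yolo_line(class_id, box, image_size):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a Yolo label line."""
    x_min, y_min, x_max, y_max = box
    img_w, img_h = image_size
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def iou(a, b):
    """Intersection-over-union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# A box covering the central quarter of a 416 x 416 image, labeled as class 0.
print(to_yolo_line(0, (104, 104, 312, 312), (416, 416)))
# Two 2 x 2 boxes that overlap in a 1 x 1 region.
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

An IoU of 1 means that the predicted box coincides with the annotated box, and an IoU of 0 means that the two boxes do not overlap.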

4.3. Experimental Setup

Six cutting-edge deep learning object detection models are deployed. To conduct the experiment, the dataset was separated into three parts: 80% for training, 10% for validation, and 10% for testing. The models are trained on the training images and evaluated on the validation images; once training is complete, the final evaluation is done on the testing set.
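A minimal sketch of such an 80/10/10 split is given below. The shuffling, the fixed seed, and the function name are assumptions made for illustration; the actual splitting procedure used in this work is not specified beyond the percentages.

```python
import random


def split_dataset(items, train_pct=80, val_pct=10, seed=0):
    """Shuffle the items and split them into training, validation, and testing lists.

    Percentages are integers; the testing set receives whatever remains.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded for a reproducible split
    n_train = len(items) * train_pct // 100
    n_val = len(items) * val_pct // 100
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


# Placeholder file names standing in for the 9875 images of the dataset.
image_names = [f"img_{i:05d}.jpg" for i in range(9875)]
train, val, test = split_dataset(image_names)
print(len(train), len(val), len(test))
```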

Table 1 shows the experimental setting in which our proposed system was trained and tested.

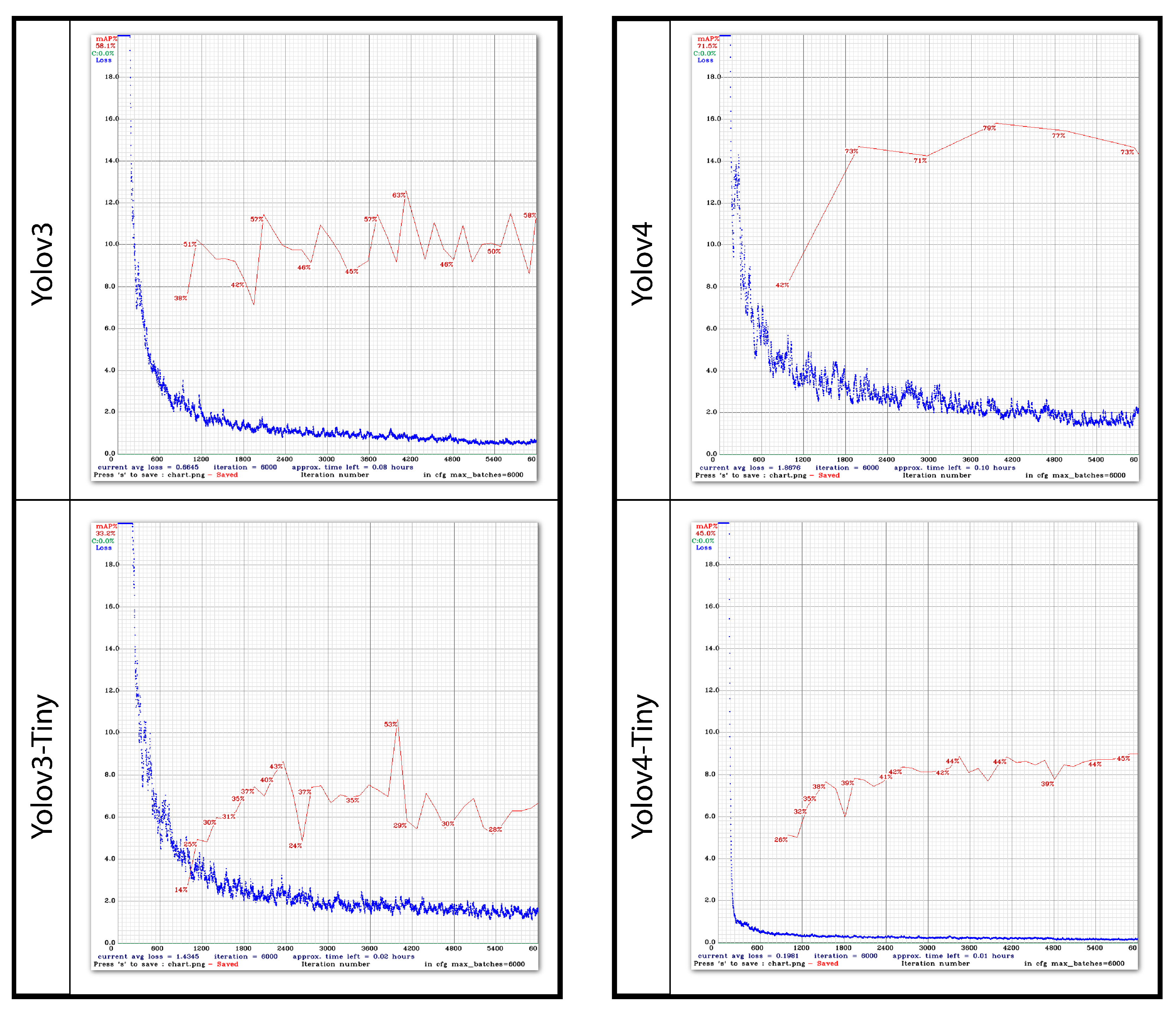

4.4. Model Training

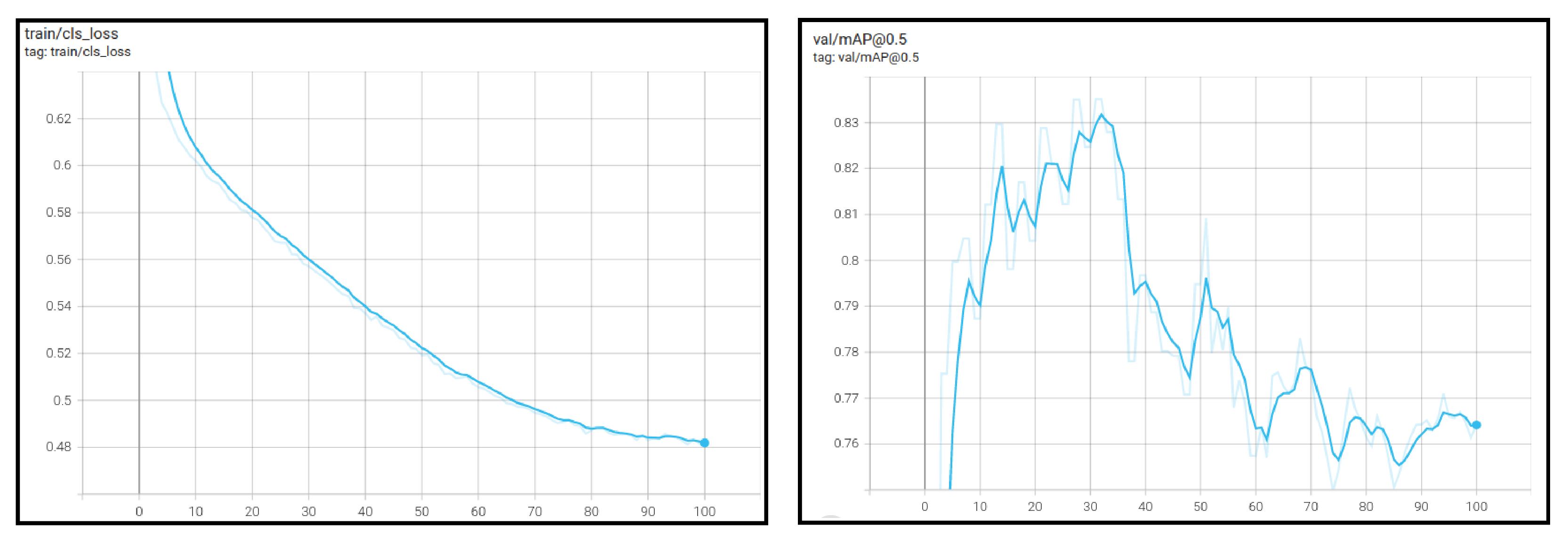

The Yolo object detection models are configured and trained on the images of thistle caterpillars, citrus psylla, and red beetles. Training can start once the dataset is labeled. The same configuration is used to train Yolov3, Yolov3-Tiny, Yolov4, and Yolov4-Tiny. Before training begins, the model is configured for the dataset and built with GPU, CUDNN, and OPENCV support. In the configuration, the batch is set to 64 and the subdivisions are set to 8. Three classes are used in training, so max_batches is set to 6000 and the steps are set to 5400 and 1800. The filters for three classes are set to 14. The width and height of the images are set to 416 × 416. For Yolov6 and Yolov8, the training process and configuration are different, as these models follow a different backbone architecture. Before training, the batch size is set to 64 and the pre-trained weights of Yolov6s and Yolov8n are used. The image size is also set to 416 and training is performed for 100 epochs only.
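For orientation, the rule of thumb commonly recommended for custom Darknet training derives these settings from the number of classes: max_batches is 2000 per class with a minimum of 6000, the steps are 80% and 90% of max_batches, and the filters before each detection layer equal (classes + 5) × 3. The sketch below encodes this heuristic under a hypothetical function name. It is given only as a general convention, and the values it produces need not coincide with the settings reported above.

```python
def darknet_training_params(num_classes, boxes_per_scale=3):
    """Heuristic Darknet settings derived from the number of classes."""
    max_batches = max(num_classes * 2000, 6000)
    # Learning-rate steps at 80% and 90% of max_batches (integer arithmetic).
    steps = (max_batches * 8 // 10, max_batches * 9 // 10)
    # Each anchor predicts 4 coordinates, 1 objectness score, and the class scores.
    filters = (num_classes + 5) * boxes_per_scale
    return {"max_batches": max_batches, "steps": steps, "filters": filters}


print(darknet_training_params(3))
```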

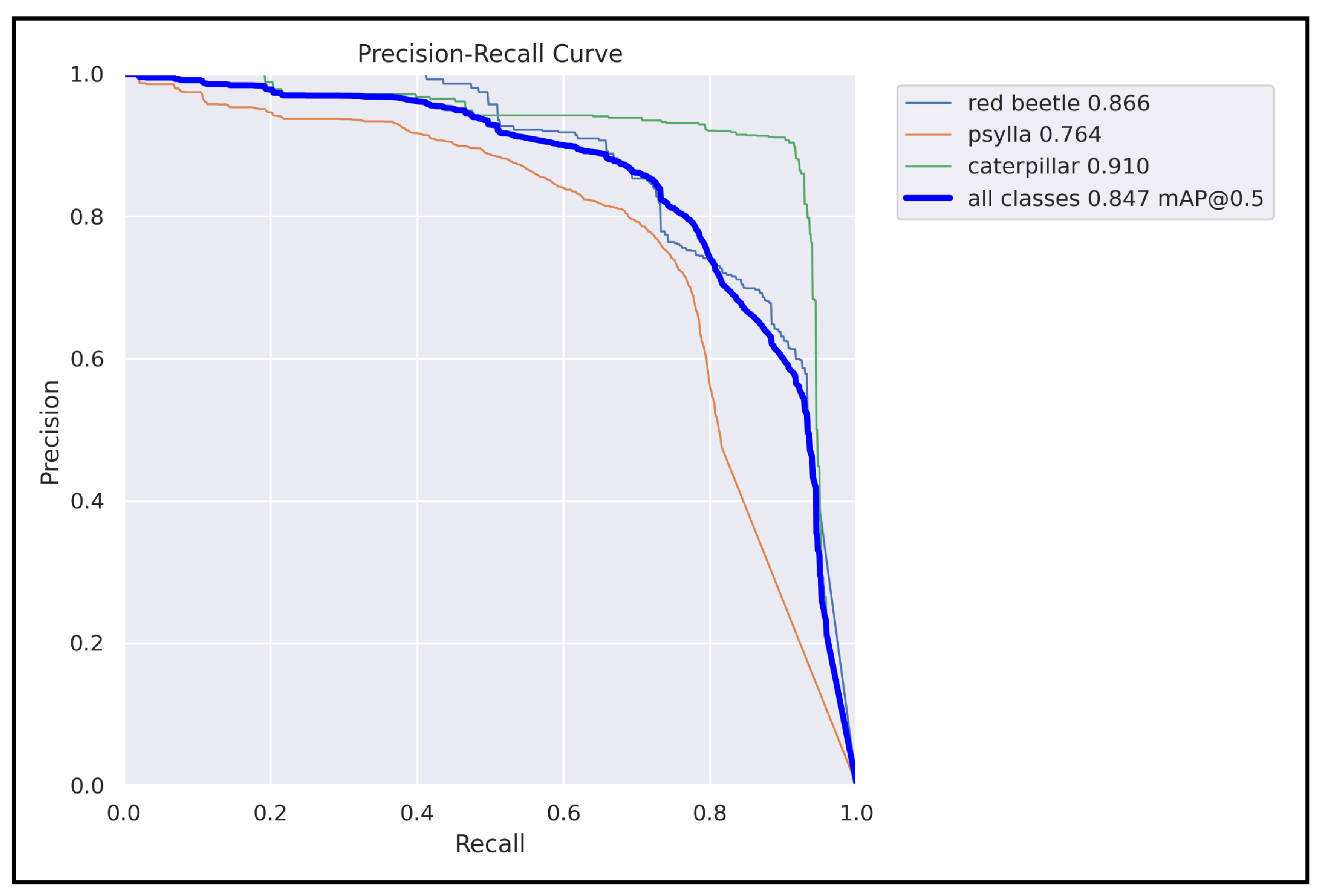

6. Conclusions

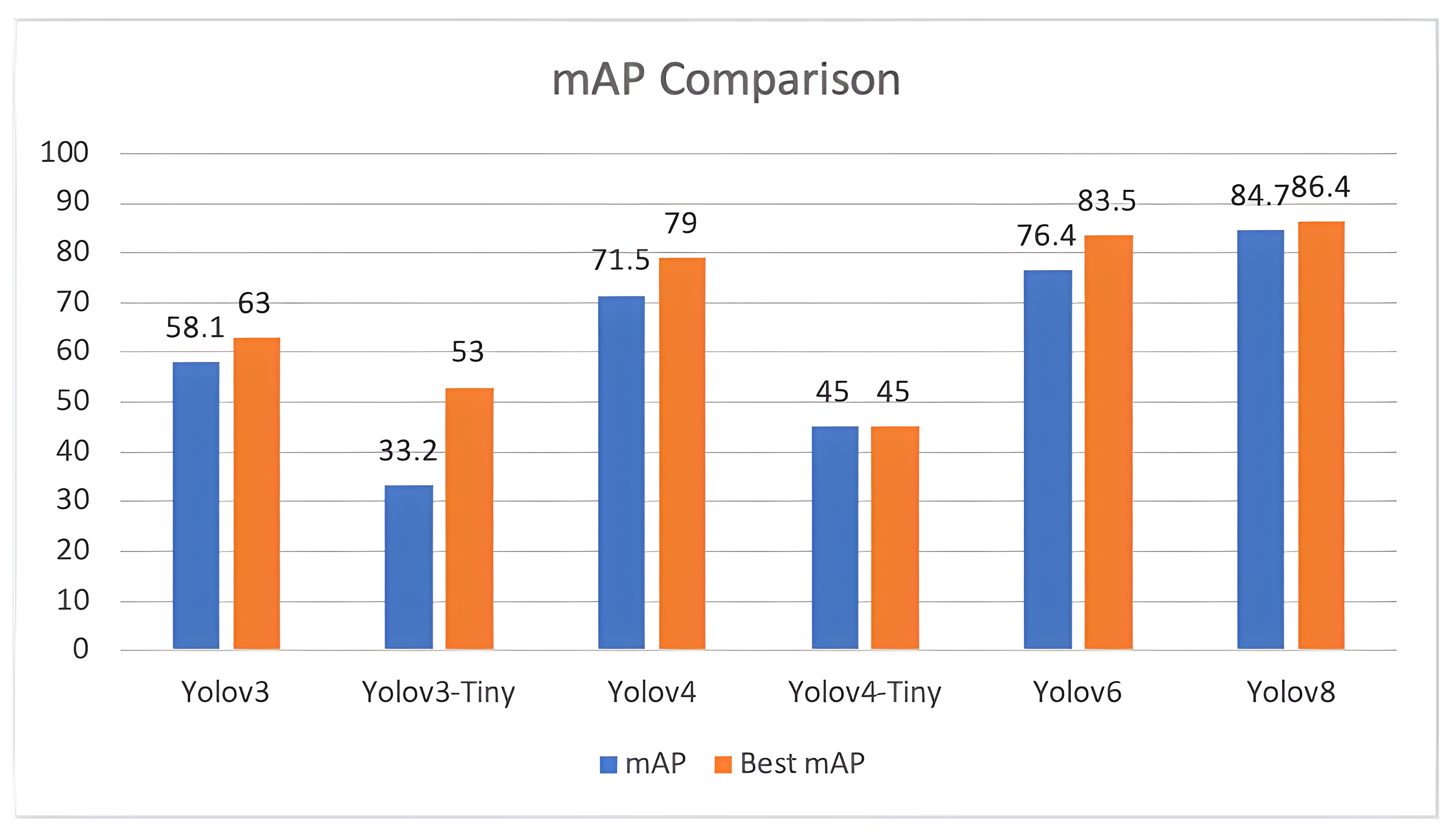

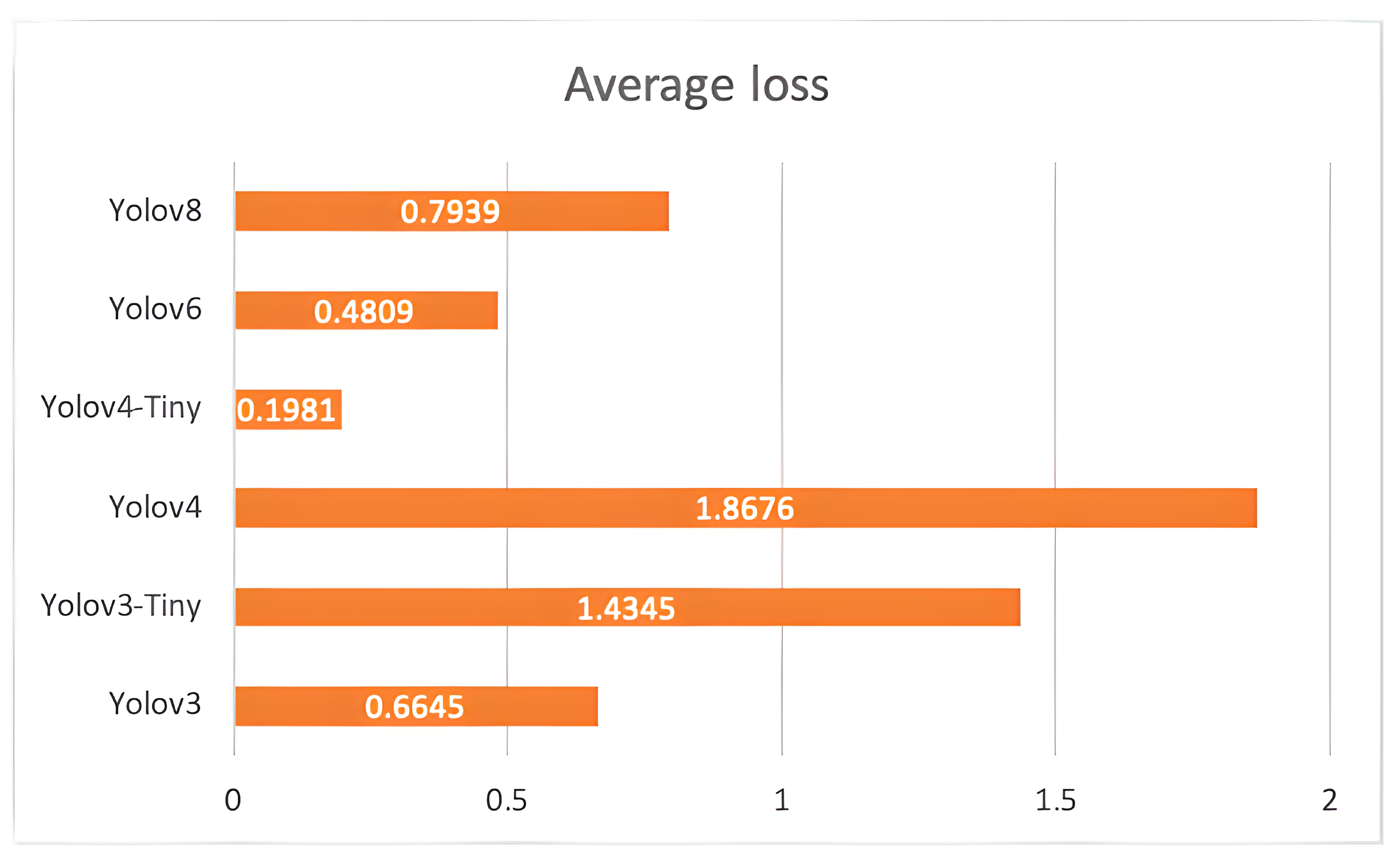

The study proposed a deep learning-based object detection approach for pest management in agriculture, which involved comparing the performance of six Yolo-based models in detecting thistle caterpillars, red beetles, and citrus psylla. Real image data of two pests, red beetle and citrus psylla, were collected for pest management in crops. After training the models, the prediction results were compared on real pest images. Yolov8 was found to be the best model for pest detection among the six Yolo-based models, with the highest mean average precision value of 84.7%. This model was also converted into tflite format, allowing it to detect pests in real time from images provided from the gallery. It successfully detects pests of small and large sizes, making it a better choice for real-time pest detection applications for variable rate spraying technologies. Moreover, non-experts can also use this model to detect pests. In contrast, other models, such as Yolov3 and Yolov4, while still capable of performing well, may not have the same level of efficiency and accuracy in small object detection due to their different architectures and feature extraction methods.

Overall, the results suggest that Yolov8 is the most suitable model for the detection of even small-sized pests and could be integrated with spot-specific spraying technologies, offering improved accuracy and efficiency compared to other Yolo-based models. Moreover, the dataset collected in this work is an important contribution to pest management practices and could be used as input to other models for improved accuracy and precision. Additionally, the Yolov8 model was integrated into an Android app, making it easy to use for non-experts in pest management. In summary, this study demonstrates the effectiveness of deep learning-based object detection models for detecting pests and their potential for integration with real-time spraying technologies.

Future research could focus on evaluating the performance of the proposed model on larger datasets to assess its generalization and robustness. Additionally, research could be conducted on integrating the proposed pest detection model into precision spraying technologies to further improve the efficiency and accuracy of pest management practices in agriculture.