A Critical Review for Trustworthy and Explainable Structural Health Monitoring and Risk Prognosis of Bridges with Human-In-The-Loop

Abstract

1. Introduction

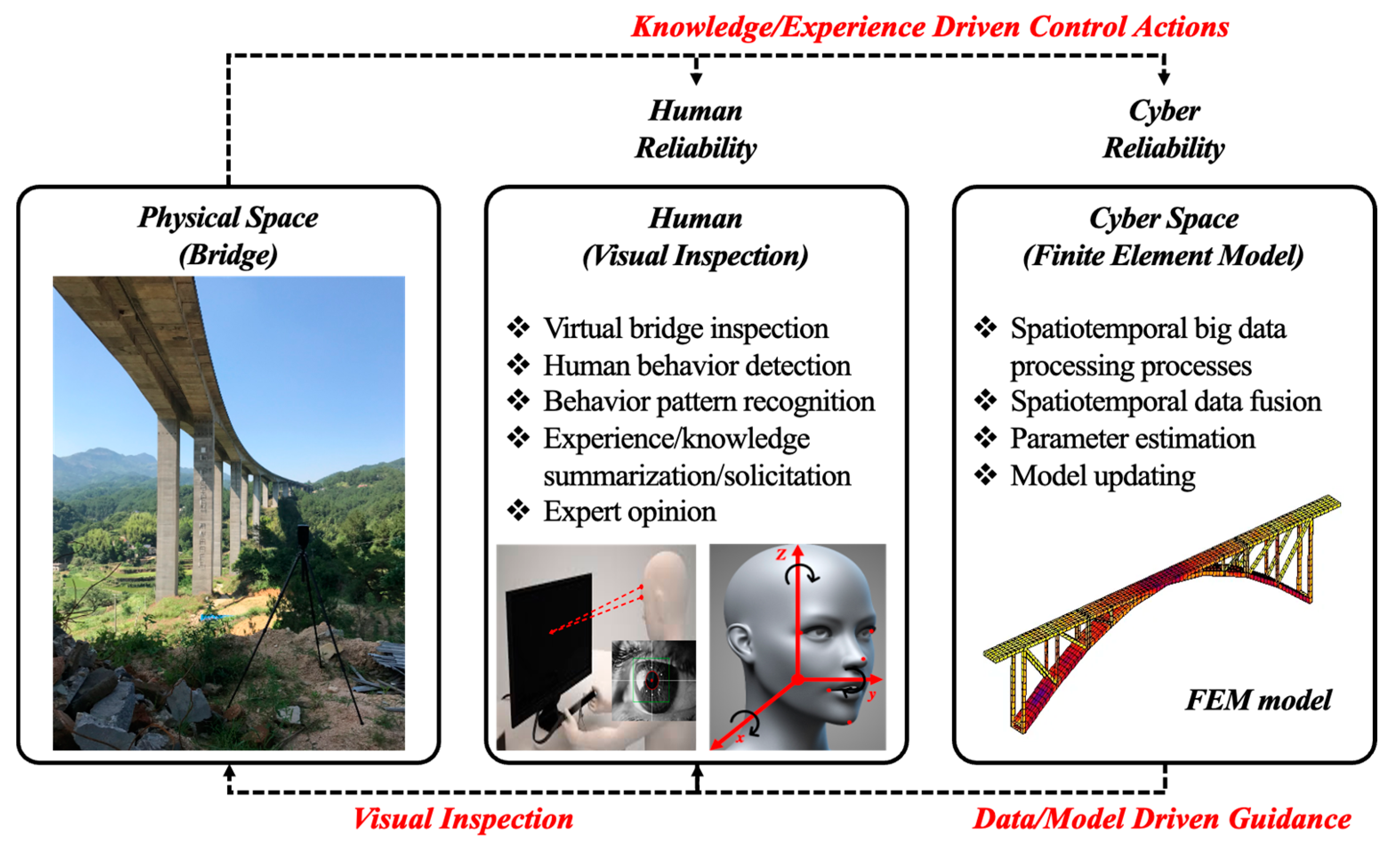

2. Motivating Case: Human-Cyber Reliability Issues in Structural Health Monitoring and Risk Prognosis of Bridges

2.1. Human Reliability Issues

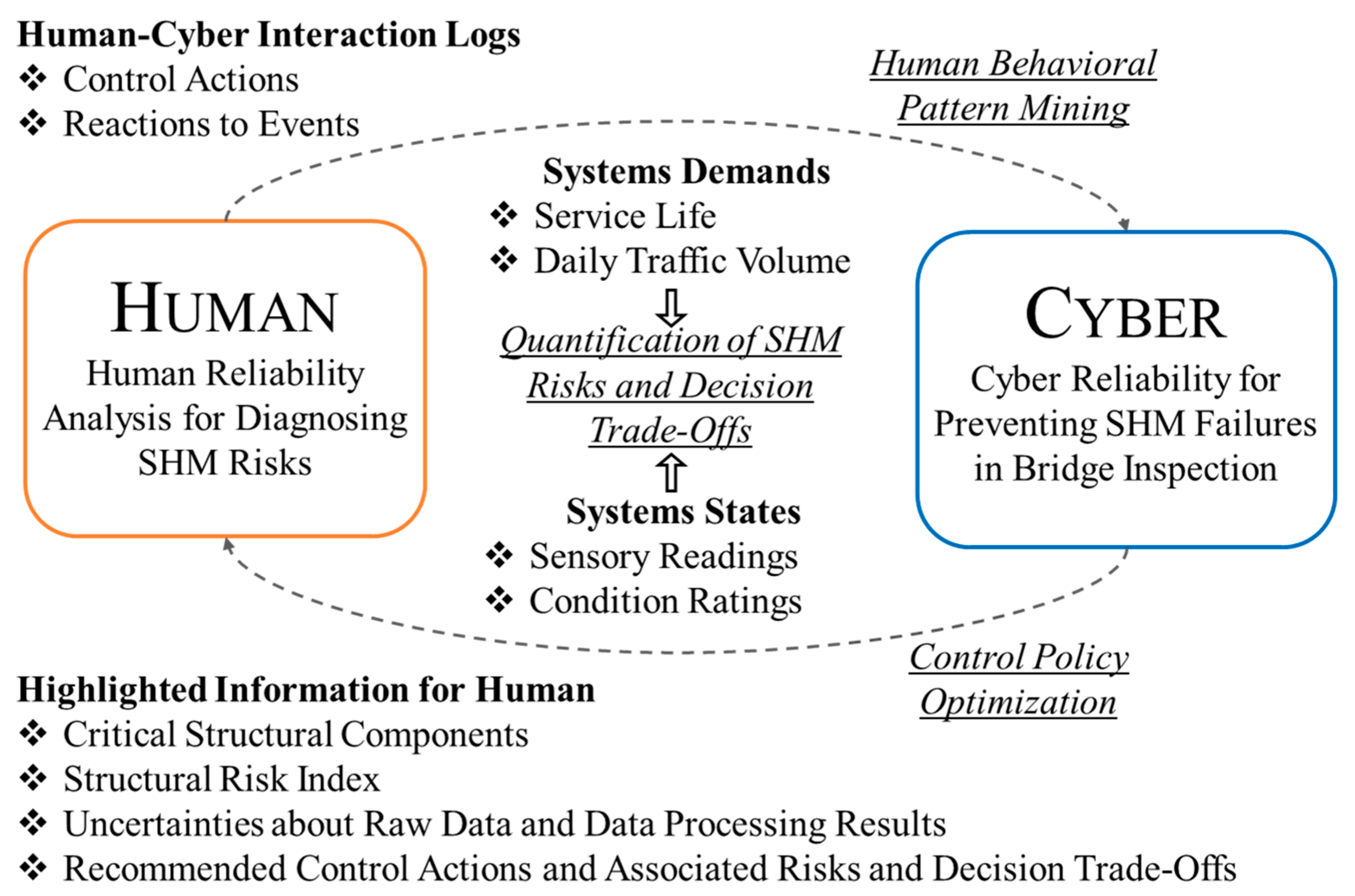

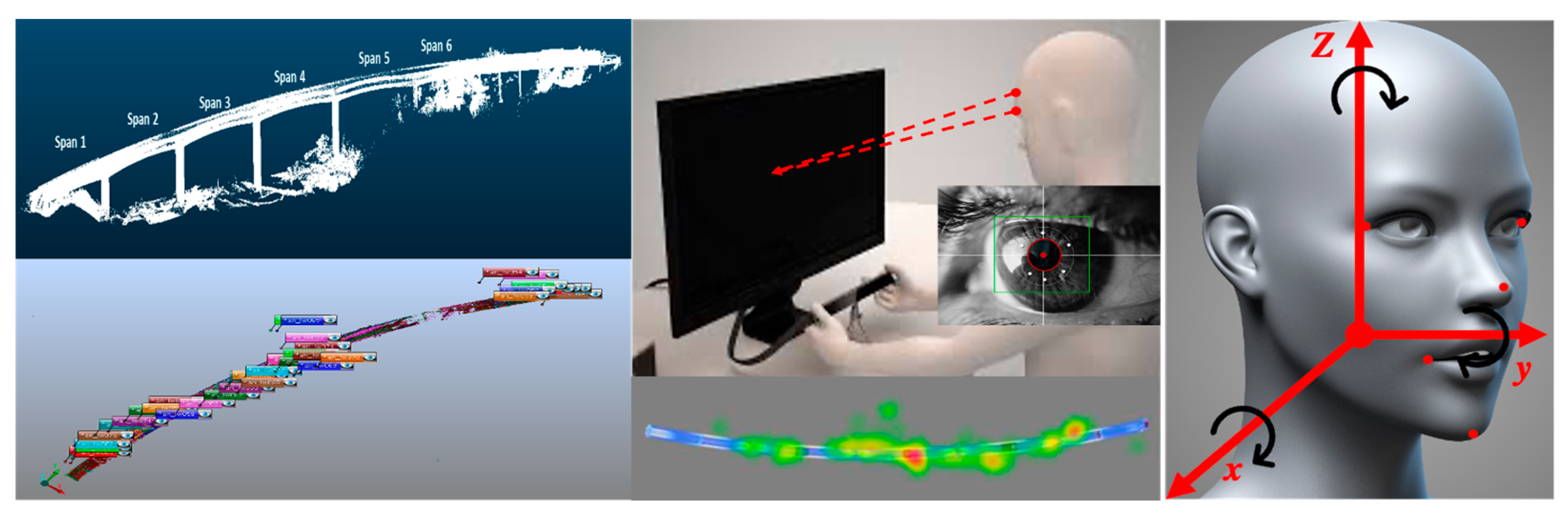

2.2. Cyber Reliability Issues

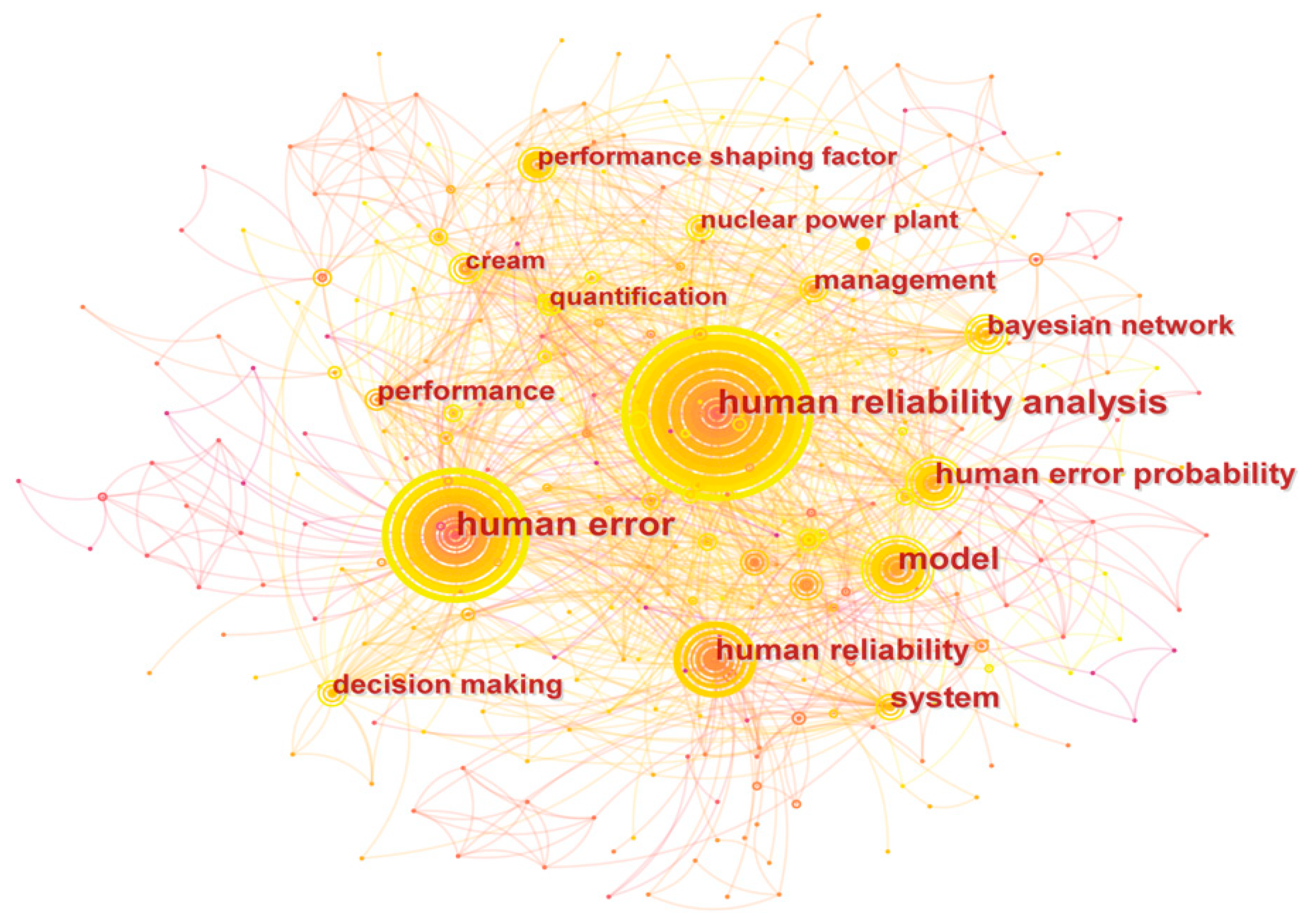

3. Human Reliability Analysis and Team Cognition for Trustworthy and Explainable Structural Health Monitoring and Risk Prognosis of Bridges

3.1. Human Reliability (Individual Level)

3.2. Human Reliability (Team Level)

4. Cyber Reliability for Trustworthy and Explainable Structural Health Monitoring and Risk Prognosis of Bridges

4.1. Data and Model Reliability

4.2. Computational Reliability

4.3. Data Storage, Exchange, and Transmission Reliability

5. Human-Cyber Reliability for Trustworthy and Explainable Structural Health Monitoring and Risk Prognosis of Bridges

6. Conclusions: A Research Road Map for Advancing Trustworthy and Explainable Structural Health Monitoring and Risk Prognosis of Bridges

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Durango-Cohen, P.L.; Madanat, S.M. Optimization of inspection and maintenance decisions for infrastructure facilities under performance model uncertainty: A quasi-Bayes approach. Transp. Res. Part A Policy Pract. 2008, 42, 1074–1085. [Google Scholar] [CrossRef]

- Van Riel, W.; Langeveld, J.; Herder, P.; Clemens, F. The influence of information quality on decision-making for networked infrastructure management. Struct. Infrastruct. Eng. 2017, 13, 696–708. [Google Scholar] [CrossRef]

- Gil, M.; Albert, M.; Fons, J.; Pelechano, V. Engineering human-in-the-loop interactions in cyber-physical systems. Inf. Softw. Technol. 2020, 126, 106349. [Google Scholar] [CrossRef]

- Dong, C.; Catbas, F.N. A review of computer vision–based structural health monitoring at local and global levels. Struct. Health Monit. 2021, 20, 692–743. [Google Scholar] [CrossRef]

- Abdulkarem, M.; Samsudin, K.; Rokhani, F.Z.; Rasid, M.F.A. Wireless sensor network for structural health monitoring: A contemporary review of technologies, challenges, and future direction. Struct. Health Monit. 2020, 19, 693–735. [Google Scholar] [CrossRef]

- Wu, R.; Jahanshahi, M.R. Data fusion approaches for structural health monitoring and system identification: Past, present, and future. Struct. Health Monit. 2020, 19, 552–586. [Google Scholar] [CrossRef]

- Fan, W.; Chen, Y.; Li, J.; Sun, Y.; Feng, J.; Hassanin, H.; Sareh, P. Machine learning applied to the design and inspection of reinforced concrete bridges: Resilient methods and emerging applications. Structures 2021, 33, 3954–3963. [Google Scholar] [CrossRef]

- Masciotta, M.; Ramos, L.F.; Lourenço, P.B.; Vasta, M.; De Roeck, G. A spectrum-driven damage identification technique: Application and validation through the numerical simulation of the Z24 Bridge. Mech. Syst. Signal Process. 2016, 70–71, 578–600. [Google Scholar] [CrossRef]

- Astroza, R.; Ebrahimian, H.; Conte, J.P. Performance comparison of Kalman−based filters for nonlinear structural finite element model updating. J. Sound Vib. 2019, 438, 520–542. [Google Scholar] [CrossRef]

- Moaveni, B.; Conte, J.P. System and Damage Identification of Civil Structures. Encyclopedia of Earthquake Engineering; University of California: Berkeley, CA, USA, 2014; pp. 1–9. [Google Scholar]

- Averell, L.; Heathcote, A. The form of the forgetting curve and the fate of memories. J. Math. Psychol. 2011, 55, 25–35. [Google Scholar] [CrossRef]

- Tribukait, A.; Eiken, O. On the time course of short-term forgetting: A human experimental model for the sense of balance. Cogn. Neurodynamics 2016, 10, 7–22. [Google Scholar] [CrossRef]

- Brown, A.W.; Kaiser, K.A.; Allison, D.B. Issues with data and analyses: Errors, underlying themes, and potential solutions. Proc. Natl. Acad. Sci. USA 2018, 115, 2563–2570. [Google Scholar] [CrossRef] [PubMed]

- Kirwan, B.; Smith, A.; Rycraft, H. Human Error Data Collection and Data Generation. Int. J. Qual. Reliab. Manag. 1990, 7. [Google Scholar] [CrossRef]

- Kotek, L.; Mukhametzianova, L. Validation of Human Error Probabilities with Statistical Analysis of Misbehaviours. Procedia Eng. 2012, 42, 1955–1959. [Google Scholar] [CrossRef]

- Bolton, M.L. Model Checking Human–Human Communication Protocols Using Task Models and Miscommunication Generation. J. Aerosp. Inf. Syst. 2015, 12, 476–489. [Google Scholar] [CrossRef]

- Pan, D.; Bolton, M.L. Properties for formally assessing the performance level of human-human collaborative procedures with miscommunications and erroneous human behavior. Int. J. Ind. Ergon. 2015, 63, 75–88. [Google Scholar] [CrossRef]

- Gonzalez, C. The boundaries of instance-based learning theory for explaining decisions from experience. Prog. Brain Res. 2013, 202, 73–98. [Google Scholar] [PubMed]

- Zhu, X.S.; Wolfson, M.A.; Dalal, D.K.; Mathieu, J.E. Team Decision Making: The Dynamic Effects of Team Decision Style Composition and Performance via Decision Strategy. J. Manag. 2021, 47, 1281–1304. [Google Scholar] [CrossRef]

- Kosoris, N.; Chastine, J. A Study of the Correlations between Augmented Reality and its Ability to Influence User Behavior. IEEE 2015, 113–118. [Google Scholar]

- Love, P.E.D.; Edwards, D.J.; Han, S.; Goh, Y.M. Design error reduction: Toward the effective utilization of building information modeling. Res. Eng. Des. 2011, 22, 173–187. [Google Scholar] [CrossRef]

- Shin, J.-C.; Baek, Y.-I.; Park, W.-T. Analysis of Errors in Tunnel Quantity Estimation with 3D-BIM Compared with Routine Method Based 2D. J. Korean Geotech. Soc. 2011, 27, 63–71. [Google Scholar] [CrossRef]

- Oberkampf, W.L.; Roy, C.J. Verification and Validation in Scientific Computing; Cambridge University Press: Cambridge, UK, 2011. [Google Scholar]

- Randell, B.; Lee, P.; Treleaven, P.C. Reliability Issues in Computing System Design. ACM Comput. Surv. 1978, 10, 123–165. [Google Scholar] [CrossRef]

- Tsapatsoulis, N.; Djouvas, C. Opinion Mining from Social Media Short Texts: Does Collective Intelligence Beat Deep Learning? Front. Robot. AI 2019, 5, 138. [Google Scholar] [CrossRef]

- Das, M.; Cheng, J.C.P.; Kumar, S.S. BIMCloud: A Distributed Cloud-Based Social BIM Framework for Project Collaboration. In Computing in Civil and Building Engineering; ASCE: Orlando, FL, USA, 2014. [Google Scholar]

- Xu, Z.; Zhang, L.; Li, H.; Lin, Y.H.; Yin, S. Combining IFC and 3D Tiles to Create 3D Visualization for Building Information Modeling. Autom. Constr. 2020, 109, 1–16. [Google Scholar] [CrossRef]

- Chen, W.; Chen, K.; Cheng, J.C.; Wang, Q.; Gan, V.J. BIM-based framework for automatic scheduling of facility maintenance work orders. Autom. Constr. 2018, 91, 15–30. [Google Scholar] [CrossRef]

- Sun, Z.; Xing, J.; Tang, P.; Cooke, N.J.; Boring, R.L. Human reliability for safe and efficient civil infrastructure operation and maintenance–A review. Dev. Built Environ. 2020, 4, 100028. [Google Scholar] [CrossRef]

- Liu, P.; Xiong, R.; Tang, P. Mining Observation and Cognitive Behavior Process Patterns of Bridge Inspectors. J. Comput. Civ. Eng. 2021, 2022, 604–612. Available online: https://ascelibrary.org/doi/abs/10.1061/9780784483893.075 (accessed on 12 February 2023).

- Xiong, R.; Liu, P.; Tang, P. Human Reliability Analysis and Prediction for Visual Inspection in Bridge Maintenance. In Computing in Civil Engineering; ASCE: Reston, VA, USA, 2021; pp. 254–262. [Google Scholar]

- Zong, Z.; Xia, Z.; Liu, H.; Li, Y.; Huang, X. Collapse Failure of Prestressed Concrete Continuous Rigid-Frame Bridge under Strong Earthquake Excitation: Testing and Simulation. J. Bridg. Eng. 2016, 21, 04016047. [Google Scholar] [CrossRef]

- Yang, H.; Xu, X.; Neumann, I. Laser Scanning-Based Updating of a Finite-Element Model for Structural Health Monitoring. IEEE Sens. J. 2016, 16, 2100–2104. [Google Scholar] [CrossRef]

- Sun, Z.; Shi, Y.; Xiong, W.; Tang, P. Vision-Based Correlated Change Analysis for Supporting Finite Element Model Updating on Curved Continuous Rigid Frame Bridges. In Proceedings of the Construction Research Congress 2020: Infrastructure Systems and Sustainability, American Society of Civil Engineers (ASCE), Tempe, Arizona, 8–10 March 2020; pp. 380–389. [Google Scholar]

- Posenato, D.; Lanata, F.; Inaudi, D.; Smith, I.F. Model-free data interpretation for continuous monitoring of complex structures. Adv. Eng. Inform. 2008, 22, 135–144. [Google Scholar] [CrossRef]

- Raphael, B.; Smith, I.F.C. Global Search through Sampling Using a PDF; Springer: Berlin/Heidelberg, Germany, 2003; pp. 71–82. [Google Scholar]

- Panteli, M.; Mancarella, P. Modeling and Evaluating the Resilience of Critical Electrical Power Infrastructure to Extreme Weather Events. IEEE Syst. J. 2017, 11, 1733–1742. [Google Scholar] [CrossRef]

- Gupta, S.; Kumar, P.; Raju, G.Y. A fuzzy causal relational mapping and rough set-based model for context-specific human error rate estimation. Int. J. Occup. Saf. Ergon. 2019, 27, 63–78. [Google Scholar] [CrossRef]

- Akyuz, E.; Celik, M.; Akgun, I.; Cicek, K. Prediction of human error probabilities in a critical marine engineering operation on-board chemical tanker ship: The case of ship bunkering. Saf. Sci. 2018, 110, 102–109. [Google Scholar] [CrossRef]

- Akyuz, E.; Celik, M. A modified human reliability analysis for cargo operation in single point mooring (SPM) off-shore units. Appl. Ocean. Res. 2016, 58, 11–20. [Google Scholar] [CrossRef]

- Akyuz, E.; Celik, M. A methodological extension to human reliability analysis for cargo tank cleaning operation on board chemical tanker ships. Saf. Sci. 2015, 75, 146–155. [Google Scholar] [CrossRef]

- Liversedge, S.P.; Findlay, J.M. Saccadic eye movements and cognition. Trends Cogn. Sci. 2000, 4, 6–14. [Google Scholar] [CrossRef]

- McAlpine, D. Creating a sense of auditory space. J. Physiology. 2005, 566, 21–28. [Google Scholar] [CrossRef]

- Wang, Y.; Sun, Y.; Joseph, P.V. Contrasting Patterns of Gene Duplication, Relocation, and Selection Among Human Taste Genes. Evol. Bioinform. 2021, 17, 1–6. [Google Scholar] [CrossRef] [PubMed]

- Gostelow, P.; Parsons, S.A.; Stuetz, R.M. Sewage Treatment Works Odour Measurement. Waterence Technol. 2000, 41, 6. [Google Scholar] [CrossRef]

- Dijkerman, H.C.; de Haan, E.H.F. Somatosensory processes subserving perception and action. Behav. Brain Sci. 2007, 30, 224–230. [Google Scholar] [CrossRef]

- Marr, D. Vision: A Computational Investigation into the Human Representation and Processing of Visual Information; MIT Press: Cambridge, MA, USA, 1982. [Google Scholar]

- Moussaïd, M.; Helbing, D.; Theraulaz, G. How simple rules determine pedestrian behavior and crowd disasters. Proc. Natl. Acad. Sci. USA 2011, 108, 6884–6888. [Google Scholar] [CrossRef] [PubMed]

- Thomas, M.L.; Green, M.F.; Hellemann, G.; Sugar, C.A.; Tarasenko, M.; Calkins, M.E.; Greenwood, T.A.; Gur, R.E.; Gur, R.C.; Lazzeroni, L.C.; et al. Modeling Deficits from Early Auditory Information Processing to Psychosocial Functioning in Schizophrenia. JAMA Psychiatry 2017, 74, 37–46. [Google Scholar] [CrossRef] [PubMed]

- Hoyer, W.D.; Stokburger-Sauer, N.E. The role of aesthetic taste in consumer behavior. J. Acad. Mark. Sci. 2012, 40, 167–180. [Google Scholar] [CrossRef]

- Shabgou, M.; Daryani, S.M. Towards the sensory marketing: Stimulating the five senses (sigHC, hearing, smell, touch and taste) and its impact on consumer behavior. Indian J. Fundam. Appl. Life Sci. 2014, 4, 573–581. [Google Scholar]

- Borghi, A.M.; Cimatti, F. Embodied cognition and beyond: Acting and sensing the body. Neuropsychologia 2010, 48, 763–773. [Google Scholar] [CrossRef] [PubMed]

- Kang, Y.; Williams, L.E.; Clark, M.S.; Gray, J.R.; Bargh, J.A. Physical temperature effects on trust behavior: The role of insula. Soc. Cogn. Affect. Neurosci. 2011, 6, 507–515. [Google Scholar] [CrossRef] [PubMed]

- Wieser, M.J.; Pauli, P.; Grosseibl, M.; Molzow, I.; Mühlberger, A. Virtual social interactions in social anxiety-The impact of sex, gaze, and interpersonal distance. Cyberpsychology Behav. Soc. Netw. 2010, 13, 547–554. [Google Scholar] [CrossRef]

- Zitouni, M.S.; Sluzek, A.; Bhaskar, H. Visual analysis of socio-cognitive crowd behaviors for surveillance: A survey and categorization of trends and methods. Eng. Appl. Artif. Intell. 2019, 82, 294–312. [Google Scholar] [CrossRef]

- Miller, W.R.; Rose, G.S. Toward a Theory of Motivational Interviewing. Am. Psychol. 2009, 64, 527. [Google Scholar] [CrossRef] [PubMed]

- Lee, B.C.; Duffy, V.G. The Effects of Task Interruption on Human Performance: A Study of the Systematic Classification of Human Behavior and Interruption Frequency. Hum. Factors Ergon. Manuf. 2015, 25, 137–152. [Google Scholar] [CrossRef]

- Naujoks, F.; Wiedemann, K.; Schömig, N. The importance of interruption management for usefulness and acceptance of automated driving. In Proceedings of the Automotive UI 2017-9th International ACM Conference on Automotive User Interfaces and Interactive Vehicular Applications, Oldenburg, Germany, 24–27 September 2017. [Google Scholar]

- Núñez, R.; Cooperrider, K. The tangle of space and time in human cognition. Trends Cogn. Sci. 2013, 17, 220–229. [Google Scholar] [CrossRef] [PubMed]

- Silber, B.; Schmitt, J. Effects of tryptophan loading on human cognition, mood, and sleep. Neurosci. Biobehav. Rev. 2010, 34, 387–407. [Google Scholar] [CrossRef]

- Salman, I.; Turhan, B.; Vegas, S. A controlled experiment on time pressure and confirmation bias in functional software testing. Empir. Softw. Eng. 2019, 24, 1727–1761. [Google Scholar] [CrossRef]

- Zakay, D. The Impact of Time Perception Processes on Decision Making under Time Stress. Time Press. Stress Hum. Judgm. Decis. Mak. 1993, 59–72. [Google Scholar] [CrossRef]

- Blakely, M.J.; Kemp, S.; Helton, W.S. Volitional Running and Tone Counting: The Impact of Cognitive Load on Running over Natural Terrain. IIE Trans. Occup. Ergon. Hum. Factors 2016, 4, 104–114. [Google Scholar] [CrossRef]

- Blakely, M.J.; Wilson, K.; Russell, P.N.; Helton, W.S. The impact of cognitive load on volitional running. In Proceedings of the Human Factors and Ergonomics Society; SAGE Publications: Los Angeles, CA, USA, 2016. [Google Scholar]

- Laumann, K.; Rasmussen, M. Suggested Improvements to the Definitions of Standardized Plant Analysis of Risk-Human Reliability Analysis (SPAR-H) Performance Shaping Factors, their Levels and Multipliers and the Nominal Tasks. Reliab. Eng. Syst. Saf. 2016, 145, 287–300. [Google Scholar] [CrossRef]

- Schirner, G.; Erdogmus, D.; Chowdhury, K.; Padir, T. The future of human-in-the-loop cyber-physical systems. Computer 2013, 46, 36–45. [Google Scholar] [CrossRef]

- Chen, J.; Liu, Y.; Cooke, N.; Tang, P. Real-time Facial Expression and Head Pose Analysis for Monitoring the Workloads of Air Traffic Controllers. In Proceedings of the AIAA Aviation 2019 Forum, Dallas, TX, USA, 17–21 June 2019; p. 3412. [Google Scholar]

- Demir, M.; McNeese, N.J.; Cooke, N.J. Team situation awareness within the context of human-autonomy teaming. Cogn. Syst. Res. 2017, 46, 3–12. [Google Scholar] [CrossRef]

- Sun, Z.; Tang, P. Automatic Communication Error Detection Using Speech Recognition and Linguistic Analysis for Proactive Control of Loss of Separation. Transp. Res. Rec. J. Transp. Res. Board 2020, 2675, 1–12. [Google Scholar] [CrossRef]

- Chalhoub, J.; Alsafouri, S.; Ayer, S.K. Leveraging site survey points for mixed reality bim visualization. In Proceedings of the Construction Research Congress 2018: Construction Information Technology-Selected Papers from the Construction Research Congress, New Orleans, Louisiana, 2–4 April 2018; pp. 326–335. [Google Scholar]

- Shi, Y.; Du, J. Simulation of Spatial Memory for Human Navigation Based on Visual Attention in Floorplan Review. In Proceedings of the Winter Simulation Conference, National Harbor, MD, USA, 8–11 December 2019; pp. 3031–3040. [Google Scholar]

- Wolpaw, J.; Birbaumer, N.; Heetderks, W.; McFarland, D.; Peckham, P.; Schalk, G.; Donchin, E.; Quatrano, L.; Robinson, C.; Vaughan, T. Brain-computer interface technology: A review of the first international meeting. IEEE Trans. Rehabil. Eng. 2000, 8, 164–173. [Google Scholar] [CrossRef] [PubMed]

- Chang, Y.; Mosleh, A. Cognitive modeling and dynamic probabilistic simulation of operating crew response to complex system accidents: Part 1: Overview of the IDAC Model. Reliab. Eng. Syst. Saf. 2007, 92, 997–1013. [Google Scholar] [CrossRef]

- Bao, Y.; Guo, C.; Zhang, J.; Wu, J.; Pang, S.; Zhang, Z. Impact analysis of human factors on power system operation reliability. J. Mod. Power Syst. Clean Energy 2018, 6, 27–39. [Google Scholar] [CrossRef]

- Chen, X.; Liu, X.; Qin, Y. An extended CREAM model based on analytic network process under the type-2 fuzzy environment for human reliability analysis in the high-speed train operation. Qual. Reliab. Eng. Int. 2021, 37, 284–308. [Google Scholar] [CrossRef]

- Boring, R. Top-Down and Bottom-up Definitions of Human Failure Events in Human Reliability Analysis. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2014, 58, 563–567. [Google Scholar] [CrossRef]

- Gertman, D.I.; Blackman, H.S.; Marble, J.L.; Smith, C.; Boring, R.L.; O’Reilly, P. The SPAR H human reliability analysis method. In Proceedings of the American Nuclear Society 4th International Topical Meeting on Nuclear Plant Instrumentation, Control and Human Machine Interface Technology, Charlotte, NC, USA, 1 January 2004. [Google Scholar]

- Blackman, H.S.; Gertman, D.I.; Boring, R.L. Human error quantification using performance shaping factors in the SPAR-H method. In Proceedings of the Human Factors and Ergonomics Society, New York City, NY, USA, 22–26 September 2008. [Google Scholar]

- Boring, R.L.; Blackman, H.S. The origins of the SPAR-H method’s performance shaping factor multipliers. In Proceedings of the IEEE Conference on Human Factors and Power Plants, Monterey, CA, USA, 26–31 August 2007. [Google Scholar]

- Demirkesen, S.; Arditi, D. Construction safety personnel′s perceptions of safety training practices. Int. J. Proj. Manag. 2015, 33, 1160–1169. [Google Scholar] [CrossRef]

- Wang, T.-K.; Huang, J.; Liao, P.-C.; Piao, Y. Does Augmented Reality Effectively Foster Visual Learning Process in Construction? An Eye-Tracking Study in Steel Installation. Adv. Civ. Eng. 2018, 2018, 2472167. [Google Scholar] [CrossRef]

- Hollnagel, E. Cognitive Reliability and Error Analysis Method (CREAM); Elsevier: Amsterdam, The Netherlands, 1998. [Google Scholar]

- Alsafouri, S.; Ayer, S.K. Mobile Augmented Reality to Influence Design and Constructability Review Sessions. J. Arch. Eng. 2019, 25, 04019016. [Google Scholar] [CrossRef]

- Alvarenga, M.; e Melo, P.F. A review of the cognitive basis for human reliability analysis. Prog. Nucl. Energy 2019, 117, 103050. [Google Scholar] [CrossRef]

- French, S.; Bedford, T.; Pollard, S.J.; Soane, E. Human reliability analysis: A critique and review for managers. Saf. Sci. 2011, 49, 753–763. [Google Scholar] [CrossRef]

- Smart, P.R.; Shadbolt, N.R. Modelling the dynamics of team sensemaking: A constraint satisfaction approach. Knowl. Syst. Coalit. Oper. 2012, 1–10. [Google Scholar]

- Bost, X.; Senay, G.; El-Bèze, M.; De Mori, R. Multiple topic identification in human/human conversations. Comput. Speech Lang. 2015, 34, 18–42. [Google Scholar] [CrossRef]

- Erdogan, H.; Sarikaya, R.; Chen, S.F.; Gao, Y.; Picheny, M. Using semantic analysis to improve speech recognition performance. Comput. Speech Lang. 2005, 19, 321–343. [Google Scholar] [CrossRef]

- Gostelow, P.; Parsons, S.; Stuetz, R. Odour measurements for sewage treatment works. Water Res. 2001, 35, 579–597. [Google Scholar] [CrossRef] [PubMed]

- Lynch, E.J.; Petrov, A.P. The Sense of Taste; Nova Biomedical: Waltham, MA, USA, 2012. [Google Scholar]

- Loft, S.; Sanderson, P.; Neal, A.; Mooij, M. Modeling and Predicting Mental Workload in en Route Air Traffic Control: Critical Review and Broader Implications. Hum. Factors J. Hum. Factors Ergon. Soc. 2007, 49, 376–399. [Google Scholar] [CrossRef] [PubMed]

- Borghini, G.; Aricò, P.; DI Flumeri, G.; Cartocci, G.; Colosimo, A.; Bonelli, S.; Golfetti, A.; Imbert, J.P.; Granger, G.; Benhacene, R.; et al. EEG-Based Cognitive Control Behaviour Assessment: An Ecological study with Professional Air Traffic Controllers. Sci. Rep. 2017, 7, 547. [Google Scholar] [CrossRef]

- Kapalo, K.A.; Bockelman, P.; LaViola, J.J. ‘Sizing up’ Emerging technology for firefigHCing: Augmented reality for incident assessment. In Proceedings of the Human Factors and Ergonomics Society, Philadelphia, PA, USA, 1–5 October 2018. [Google Scholar]

- Yang, L.; Liang, Y.; Wu, D.; Gault, J. Train and equip firefighters with cognitive virtual and augmented reality. In Proceedings of the 4th IEEE International Conference on Collaboration and Internet Computing, CIC 2018, Philadelphia, PA, USA, 18–20 October 2018. [Google Scholar]

- Boring, R.L. Dynamic human reliability analysis: Benefits and challenges of simulating human performance. In Proceedings of the European Safety and Reliability Conference 2007, ESREL 2007-Risk, Reliability and Societal Safety, Stavanger, Norway, 25–27 June 2007. [Google Scholar]

- Lyons, M.; Adams, S.; Woloshynowych, M.; Vincent, C. Human reliability analysis in healthcare: A review of techniques. Int. J. Risk Saf. Med. 2004, 16, 223–237. [Google Scholar]

- Pyy, P. Human Reliability Analysis Methods for Probabilistic Safety Assessment; VTT Publications: Espoo, Finland, 2000. [Google Scholar]

- Goldin-Meadow, S. The role of gesture in communication and thinking. Trends Cogn. Sci. 1999, 3, 419–429. [Google Scholar] [CrossRef]

- Motty, A.; Yogitha, A.; Nandakumar, R. Flag semaphore detection using tensorflow and opencv. Int. J. Recent Technol. Eng. 2019, 7, 2277–3878. [Google Scholar]

- Pigou, L.; Dieleman, S.; Kindermans, P.J.; Schrauwen, B. Sign language recognition using convolutional neural networks. In Proceedings of the Computer Vision-ECCV 2014 Workshops, Zurich, Switzerland, 6–7 and 12 September 2014. [Google Scholar]

- Prather, J.F.; Peters, S.; Nowicki, S.; Mooney, R. Precise auditory-vocal mirroring in neurons for learned vocal communication. Nature 2008, 451, 305–310. [Google Scholar] [CrossRef] [PubMed]

- Kendon, A.; Birdwhistell, R.L. Kinesics and Context: Essays on Body Motion Communication. Am. J. Psychol. 1972, 85, 441. [Google Scholar] [CrossRef]

- Keysers, C.; Kaas, J.H.; Gazzola, V. Somatosensation in social perception. Nat. Rev. Neurosci. 2010, 11, 417–428. [Google Scholar] [CrossRef]

- Bourbousson, J.; Feigean, M.; Seiler, R. Team cognition in sport: How current insights into how teamwork is achieved in naturalistic settings can lead to simulation studies. Front. Psychol. 2019, 10, 2082. [Google Scholar] [CrossRef]

- Cooke, N.J.; Gorman, J.C.; Myers, C.; Duran, J. Theoretical underpinning of interactive team cognition. In Theories of Team Cognition: Cross-Disciplinary Perspectives; Routledge: Abingdon, Oxfordshire, 2013. [Google Scholar]

- Salas, E.; Rosen, M.A.; Held, J.D.; Weissmuller, J.J. Performance measurement in simulation-based training: A review and best practices. Simul. Gaming 2009, 40, 328–376. [Google Scholar] [CrossRef]

- Williams, A.M.; Ericsson, K.A.; Ward, P.; Eccles, D.W. Research on Expertise in Sport: Implications for the Military. Mil. Psychol. 2008, 20, S123–S145. [Google Scholar] [CrossRef]

- Gutwin, C.; Greenberg, S. The importance of awareness for team cognition in distributed collaboration. In Team Cognition: Understanding the Factors That Drive Process and Performance; APA: Washington DC, WA, USA, 2005. [Google Scholar]

- Kaplan, S.; LaPort, K.; Waller, M.J. The role of positive affectivity in team effectiveness during crises. J. Organ. Behav. 2013, 34, 473–491. [Google Scholar] [CrossRef]

- Talat, A.; Riaz, Z. An integrated model of team resilience: Exploring the roles of team sensemaking, team bricolage and task interdependence. Pers. Rev. 2020, 49, 2007–2033. [Google Scholar] [CrossRef]

- Wang, M.-H.; Yang, T.-Y. Explaining Team Creativity through Team Cognition Theory and Problem Solving based on Input-Mediator-Output Approach. J. Electron. Commer. 2015, 17, 91–138. [Google Scholar]

- Cooke, N.J.; Gorman, J.C. Interaction-Based Measures of Cognitive Systems. J. Cogn. Eng. Decis. Mak. 2009, 3, 27–46. [Google Scholar]

- Cooke, N.J.; Gorman, J.C.; Winner, J.L. Team Cognition. Handbook of Applied Cognition, 2nd ed.; APA: Washington DC, WA, USA, 2008. [Google Scholar]

- Lai, H.-Y.; Chen, C.-H.; Khoo, L.-P.; Zheng, P. Unstable approach in aviation: Mental model disconnects between pilots and air traffic controllers and interaction conflicts. Reliab. Eng. Syst. Saf. 2019, 185, 383–391. [Google Scholar] [CrossRef]

- Liston, K.; Fischer, M.; Winograd, T. Focused sharing of information for multi-disciplinary decision making by project teams. Electron. J. Inf. Technol. Constr. 2001, 6, 69–82. [Google Scholar]

- Gorman, J.C.; Cooke, N.J.; Winner, J.L. Measuring team situation awareness in decentralized command and control environments. Ergonomics 2006, 49, 1312–1325. [Google Scholar] [CrossRef] [PubMed]

- Bell, S.T.; Brown, S.G.; Mitchell, T. What We Know about Team Dynamics for Long-Distance Space Missions: A Systematic Review of Analog Research. Front. Psychol. 2019, 10, 811. [Google Scholar] [CrossRef] [PubMed]

- Cha, K.-M.; Lee, H.-C. A novel qEEG measure of teamwork for human error analysis: An EEG hyperscanning study. Nucl. Eng. Technol. 2018, 51, 683–691. [Google Scholar] [CrossRef]

- Gorman, J.C.; Hessler, E.E.; Amazeen, P.G.; Cooke, N.J.; Shope, S.M. Dynamical analysis in real time: Detecting perturbations to team communication. Ergonomics 2012, 55, 825–839. [Google Scholar] [CrossRef]

- Gorman, J.C.; Amazeen, P.G.; Cooke, N.J. Team coordination dynamics. Nonlinear Dyn. Psychol. Life Sci. 2010, 14, 265–289. [Google Scholar] [CrossRef]

- Cooke, N.J. Team Cognition as Interaction. Curr. Dir. Psychol. Sci. 2015, 24, 415–419. [Google Scholar]

- Cooke, N.J.; Gorman, J.C.; Myers, C.W.; Duran, J.L. Interactive Team Cognition. Cogn. Sci. 2013, 37, 255–285. [Google Scholar] [CrossRef] [PubMed]

- Keebler, J.R.; Dietz, A.S.; Baker, A. Effects of communication lag in long duration space fligHC missions: Potential mitigation strategies. In Proceedings of the Human Factors and Ergonomics Society; SAGE Publications: Los Angeles, CA, USA, 2015. [Google Scholar]

- Landon, L.B.; Slack, K.J.; Barrett, J.D. Teamwork and collaboration in long-duration space missions: Going to extremes. Am. Psychol. 2018, 73, 563–575. [Google Scholar] [CrossRef]

- Noe, R.a.; Mcconnell Dachner, A.; Saxton, B.; Keeton, K.E. Team Training for Long-duration Missions in Isolated and Confined Environments: A Literature Review, an Operational Assessment, and Recommendations for Practice and Research. Res. Net 2011, 44. [Google Scholar]

- Demir, M.; Likens, A.D.; Cooke, N.J.; Amazeen, P.G.; McNeese, N.J. Team Coordination and Effectiveness in Human-Autonomy Teaming. IEEE Trans. Hum. Mach. Syst. 2019, 49, 150–159. [Google Scholar] [CrossRef]

- Tang, Z.; Chen, Z.; Bao, Y.; Li, H. Convolutional Neural Network-based Data Anomaly Detection Method using Multiple Information for Structural Health Monitoring. Struct. Control. Health Monit. 2019, 26. [Google Scholar] [CrossRef]

- Sun, L.; Shang, Z.; Xia, Y.; Bhowmick, S.; Nagarajaiah, S. Review of Bridge Structural Health Monitoring Aided by Big Data and Artificial Intelligence: From Condition Assessment to Damage Detection. J. Struct. Eng. 2020, 146, 04020073. [Google Scholar] [CrossRef]

- Ni, F.; Zhang, J.; Noori, M.N. Deep learning for data anomaly detection and data compression of a long-span suspension bridge. Comput. Civ. Infrastruct. Eng. 2020, 35, 685–700. [Google Scholar] [CrossRef]

- Smarsly, K.; Law, K.H. Decentralized fault detection and isolation in wireless structural health monitoring systems using analytical redundancy. Adv. Eng. Softw. 2014, 73, 1–10. [Google Scholar] [CrossRef]

- Okasha, N.M.; Frangopol, D.; Saydam, D.; Salvino, L.W. Reliability analysis and damage detection in high-speed naval craft based on structural health monitoring data. Struct. Health Monit. 2011, 10, 361–379. [Google Scholar] [CrossRef]

- Barnhart, T.B.; Crosby, B.T. Comparing Two Methods of Surface Change Detection on an Evolving Thermokarst Using High-Temporal-Frequency Terrestrial Laser Scanning, Selawik River, Alaska. Remote Sens. 2013, 5, 2813–2837. [Google Scholar] [CrossRef]

- Dai, F.; Rashidi, A.; Brilakis, I.; Vela, P. Comparison of Image-Based and Time-of-FligHC-Based Technologies for Three-Dimensional Reconstruction of Infrastructure. J. Constr. Eng. Manag. 2013, 139, 69–79. [Google Scholar] [CrossRef]

- Cao, H.; Tian, Y.; Lei, J.; Tan, X.; Gao, D.; Kopsaftopoulos, F.; Chang, F.K. Deformation data recovery based on compressed sensing in bridge structural health monitoring. Struct. Health Monit. 2017, 1, 888–895. [Google Scholar]

- Chen, Z.; Bao, Y.; Li, H.; Spencer, B.F. LQD-RKHS-based distribution-to-distribution regression methodology for restoring the probability distributions of missing SHM data. Mech. Syst. Signal Process. 2019, 121, 655–674. [Google Scholar] [CrossRef]

- Choudhury, A.; Kosorok, M.R. Missing data imputation for classification problems. arXiv 2020, arXiv:2002.10709. [Google Scholar]

- Deng, X.; Hu, Y.; Deng, Y. Bridge condition assessment using D numbers. Sci. World J. 2014, 2014, 358057. [Google Scholar] [CrossRef] [PubMed]

- Law, K.H.; Jeong, S.; Ferguson, M. A data-driven approach for sensor data reconstruction for bridge monitoring. In Proceedings of the 2017 World Congress on Advances in Structural Engineering and Mechanics, Seoul, Republic of Korea, 28 August–1 September 2017. [Google Scholar]

- Ma, J.W.; Czerniawski, T.; Leite, F. Semantic segmentation of point clouds of building interiors with deep learning: Augmenting training datasets with synthetic BIM-based point clouds. Autom. Constr. 2020, 113, 103144. [Google Scholar] [CrossRef]

- Saydam, D.; Frangopol, D.M.; Dong, Y. Assessment of Risk Using Bridge Element Condition Ratings. J. Infrastruct. Syst. 2013, 19, 252–265. [Google Scholar] [CrossRef]

- Tang, H.; Schrimpf, M.; Lotter, W.; Moerman, C.; Paredes, A.; Caro, J.O.; Hardesty, W.; Cox, D.; Kreiman, G. Recurrent computations for visual pattern completion. Proc. Natl. Acad. Sci. USA 2018, 35, 8835–8840. [Google Scholar] [CrossRef] [PubMed]

- Ye, S.; Lai, X.; Bartoli, I.; Aktan, A.E. Technology for condition and performance evaluation of highway bridges. J. Civ. Struct. Health Monit. 2020, 10, 573–594. [Google Scholar] [CrossRef]

- Zhang, C.; Tang, P. Visual complexity analysis of sparse imageries for automatic laser scan planning in dynamic environments. In Proceedings of the Congress on Computing in Civil Engineering, Proceedings, Austin, TX, USA, 21–23 June 2015; pp. 271–279. [Google Scholar]

- Yee, W.G.; Frieder, O. On search in peer-to-peer file sharing systems. In Proceedings of the ACM Symposium on Applied Computing, Santa Fe, NM, USA, 13–17 March 2005. [Google Scholar]

- Fan, H.; Su, H.; Guibas, L. A point set generation network for 3D object reconstruction from a single image. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Pontes, J.K.; Kong, C.; Sridharan, S.; Lucey, S.; Eriksson, A.; Fookes, C. Image2Mesh: A Learning Framework for Single Image 3D Reconstruction. In Proceedings of the Computer Vision–ACCV 2018: 14th Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2019. [Google Scholar]

- Brand, M. Morphable 3D models from video. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001.

- Din, Z.U.; Tang, P. Automatic Logical Inconsistency Detection in the National Bridge Inventory. Procedia Eng. 2016, 145, 729–737.

- Moore, M.; Phares, B.; Graybeal, B.; Rolander, D.; Washer, G. Reliability of Visual Inspection for Highway Bridges. FHWA-RD-01-021. 2001. Available online: https://www.fhwa.dot.gov/publications/research/nde/pdfs/01021a.pdf (accessed on 12 February 2023).

- Emer, M.C.F.P.; Vergilio, S.R.; Jino, M. Testing relational database schemas with alternative instance analysis. In Proceedings of the 20th International Conference on Software Engineering and Knowledge Engineering, SEKE 2008, San Francisco, CA, USA, 1–3 July 2008.

- Chaudhuri, S.; Dayal, U. An Overview of Data Warehousing and OLAP Technology. ACM Sigmod Rec. 1997, 26, 65–74.

- Hunter, A.; Konieczny, S. Measuring inconsistency through minimal inconsistent sets. In Proceedings of the International Workshop on Temporal Representation and Reasoning, Montréal, QC, Canada, 16–18 June 2008.

- Farfoura, M.E.; Horng, S.-J.; Lai, J.-L.; Run, R.-S.; Chen, R.-J.; Khan, M.K. A blind reversible method for watermarking relational databases based on a time-stamping protocol. Expert Syst. Appl. 2012, 39, 3185–3196.

- Storey, V.C. Understanding semantic relationships. VLDB J. 1993, 2, 455–488.

- Chen, Z.; Li, H.; Bao, Y. Analyzing and modeling inter-sensor relationships for strain monitoring data and missing data imputation: A copula and functional data-analytic approach. Struct. Health Monit. 2019, 18, 1168–1188.

- Delmarco, S.; Tom, V.; Webb, H.; Lefebvre, D. A Verification Metric for Multi-Sensor Image Registration; SPIE: Bellingham, WA, USA, 2007; Volume 6567, p. 656718.

- Snineh, S.M.; Bouattane, O.; Youssfi, M.; Daaif, A. Towards a multi-agents model for errors detection and correction in big data flows. In Proceedings of the 2019 3rd International Conference on Intelligent Computing in Data Sciences, ICDS 2019, Marrakech, Morocco, 28–30 October 2019.

- Vosselman, G.; Kessels, P.; Gorte, B. The utilisation of airborne laser scanning for mapping. Int. J. Appl. Earth Obs. Geoinf. 2005, 6, 177–186.

- Yan, L.; Liu, H.; Tan, J.; Li, Z.; Xie, H.; Chen, C. Scan Line Based Road Marking Extraction from Mobile LiDAR Point Clouds. Sensors 2016, 16, 903.

- Inatsuka, H.; Uchino, M.; Okuda, M. Level of detail control for texture on 3D maps. In Proceedings of the International Conference on Parallel and Distributed Systems-ICPADS, Fukuoka, Japan, 20–22 July 2005.

- Guerneve, T.; Petillot, Y. Underwater 3D reconstruction using BlueView imaging sonar. In MTS/IEEE OCEANS 2015-Genova: Discovering Sustainable Ocean Energy for a New World; IEEE: Piscataway, NJ, USA, 2015.

- Azhar, S. Building Information Modeling (BIM): Trends, Benefits, Risks, and Challenges for the AEC Industry. Leadersh. Manag. Eng. 2011, 11, 241–252.

- Cheng, J.C.P.; Deng, Y. An Integrated BIM-GIS Framework for Utility Information Management and Analyses. In Proceedings of the Computing in Civil Engineering 2015, Austin, TX, USA, 21–23 June 2015; pp. 667–674.

- Kalasapudi, V.S.; Tang, P.; Zhang, C.; Diosdado, J.; Ganapathy, R. Adaptive 3D Imaging and Tolerance Analysis of Prefabricated Components for Accelerated Construction. Procedia Eng. 2015, 118, 1060–1067.

- Boton, C.; Kubicki, S.; Halin, G. The Challenge of Level of Development in 4D/BIM Simulation Across AEC Project Lifecyle. A Case Study. Procedia Eng. 2015, 123, 59–67.

- Taleb, I.; Serhani, M.A.; Dssouli, R. Big Data Quality Assessment Model for Unstructured Data. In Proceedings of the 2018 13th International Conference on Innovations in Information Technology (IIT), Al Ain, United Arab Emirates, 18–19 November 2018; pp. 69–74.

- Gorla, N.; Somers, T.M.; Wong, B. Organizational impact of system quality, information quality, and service quality. J. Strateg. Inf. Syst. 2010, 19, 207–228.

- Hunt, L.; White, J.; Hoogenboom, G. Agronomic data: Advances in documentation and protocols for exchange and use. Agric. Syst. 2001, 70, 477–492.

- Chen, K.; Lu, W.; Xue, F.; Tang, P.; Li, L.H. Automatic building information model reconstruction in high-density urban areas: Augmenting multi-source data with architectural knowledge. Autom. Constr. 2018, 93, 22–34.

- Yilmaz, A.; Li, X.; Shah, M. Contour-based object tracking with occlusion handling in video acquired using mobile cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1531–1536.

- Wang, P.; Li, J.; Li, Q. Computational uncertainty and the application of a high-performance multiple precision scheme to obtaining the correct reference solution of Lorenz equations. Numer. Algorithms 2012, 59, 147–159.

- Anschel, O.; Baram, N.; Shimkin, N. Averaged-DQN: Variance reduction and stabilization for Deep Reinforcement Learning. In Proceedings of the 34th International Conference on Machine Learning, ICML, Sydney, Australia, 6–11 August 2017.

- Sukhoy, V.; Stoytchev, A. Numerical error analysis of the ICZT algorithm for chirp contours on the unit circle. Sci. Rep. 2020, 10, 4852.

- Tucker, W. Validated Numerics: A Short Introduction to Rigorous Computations; Princeton University Press: Princeton, NJ, USA, 2011.

- Kendall, A.; Gal, Y. What uncertainties do we need in Bayesian deep learning for computer vision? In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Mesnil, G.; Dauphin, Y.; Glorot, X.; Rifai, S.; Bengio, Y.; Goodfellow, I.J.; Lavoie, E.; Muller, X.; Desjardins, G.; Warde-Farley, D.; et al. Unsupervised and Transfer Learning Challenge: A Deep Learning approach. In Proceedings of the Unsupervised and Transfer Learning Challenge and Workshop, Bellevue, WA, USA, 2 July 2012.

- Seeger, M. Gaussian Processes for Machine Learning. Int. J. Neural Syst. 2004, 14, 69–106.

- Di Franco, A.; Guo, H.; Rubio-Gonzalez, C. A comprehensive study of real-world numerical bug characteristics. In Proceedings of the 32nd IEEE/ACM International Conference on Automated Software Engineering, Urbana, IL, USA, 30 October–3 November 2017.

- Zhou, L.; Ye, J.; Kaess, M. A Stable Algebraic Camera Pose Estimation for Minimal Configurations of 2D/3D Point and Line Correspondences. In Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2019; pp. 273–288.

- Tang, P.; Akinci, B. Automatic execution of workflows on laser-scanned data for extracting bridge surveying goals. Adv. Eng. Inform. 2012, 26, 889–903.

- Chen, J.; Zhang, C.; Tang, P. Geometry-based optimized point cloud compression methodology for construction and infrastructure management. In Proceedings of the Congress on Computing in Civil Engineering, Seattle, WA, USA, 25–27 June 2017.

- Trčka, N.; Van Der Aalst, W.M.P.; Sidorova, N. Data-flow anti-patterns: Discovering data-flow errors in workflows. In Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2009.

- Wu, I.-C.; Borrmann, A.; Beißert, U.; König, M.; Rank, E. Bridge construction schedule generation with pattern-based construction methods and constraint-based simulation. Adv. Eng. Inform. 2010, 24, 379–388.

- Wilson, A.G.; Adams, R.P. Gaussian process kernels for pattern discovery and extrapolation. In Proceedings of the 30th International Conference on Machine Learning, ICML 2013, Atlanta, GA, USA, 16–21 June 2013.

- AbouRizk, S.M.; Hajjar, D. A framework for applying simulation in construction. Can. J. Civ. Eng. 1998, 25, 604–617.

- Lee, S.H.; Peña-Mora, F.; Park, M. Dynamic planning and control methodology for strategic and operational construction project management. Autom. Constr. 2006, 15, 84–97.

- Vurukonda, N.; Rao, B.T. A Study on Data Storage Security Issues in Cloud Computing. Procedia Comput. Sci. 2016, 92, 128–135.

- Chen, H.-M.; Chang, K.-C.; Lin, T.-H. A cloud-based system framework for performing online viewing, storage, and analysis on big data of massive BIMs. Autom. Constr. 2016, 71, 34–48.

- Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M.; et al. The Cancer Imaging Archive (TCIA): Maintaining and Operating a Public Information Repository. J. Digit. Imaging 2013, 26, 1045–1057.

- Alherbawi, N.; Shukur, Z.; Sulaiman, R. Systematic Literature Review on Data Carving in Digital Forensic. Procedia Technol. 2013, 11, 86–92.

- Fernández-Alemán, J.L.; Señor, I.C.; Lozoya, P.O.; Toval, A. Security and privacy in electronic health records: A systematic literature review. J. Biomed. Inform. 2013, 46, 541–562.

- Zhang, J.; El-Gohary, N.M. Automated Information Transformation for Automated Regulatory Compliance Checking in Construction. J. Comput. Civ. Eng. 2015, 29, B4015001.

- Garrett, J.; Akinci, B.; Wang, H. Towards Domain-Oriented Semi-Automated Model Matching for Supporting Data Exchange. In Proceedings of the International Conference on Computing in Civil and Building Engineering, ICCCBE, Weimar, Germany, 2–4 June 2004.

- Wang, H.; Akinci, B.; Garrett, J.H.; Nyberg, E.; Reed, K.A. Semi-automated model matching using version difference. Adv. Eng. Inform. 2009, 23, 1–11.

- Jiao, J.; Nie, S.X.; Yang, Y.; Gu, S.S.; Wu, S.H.; Zhang, Q.Y. Distributed systematic raptor coding scheme in deep space communications. Yuhang Xuebao/J. Astronaut. 2016, 37, 1232–1238.

- Afsari, K.; Eastman, C.M.; Castro-Lacouture, D. JavaScript Object Notation (JSON) data serialization for IFC schema in web-based BIM data exchange. Autom. Constr. 2017, 77, 24–51.

- Mahmood, M.A.; Seah, W.K.; Welch, I. Reliability in wireless sensor networks: A survey and challenges ahead. Comput. Netw. 2015, 79, 166–187.

- Shen, J.; Tan, H.; Wang, J.; Wang, J.; Lee, S. A novel routing protocol providing good transmission reliability in underwater sensor networks. J. Internet Technol. 2015, 16, 171–178.

- Bello-Orgaz, G.; Jung, J.J.; Camacho, D. Social big data: Recent achievements and new challenges. Inf. Fusion 2016, 28, 45–59.

- Patil, L.; Dutta, D.; Sriram, R. Ontology-based exchange of product data semantics. IEEE Trans. Autom. Sci. Eng. 2005, 2, 213–225.

- Shrestha, K.; Shrestha, P.P.; Bajracharya, D.; Yfantis, E.A. Hard-Hat Detection for Construction Safety Visualization. J. Constr. Eng. 2015, 2015, 721380.

- Petricek, T.; Svoboda, T. Point cloud registration from local feature correspondences—Evaluation on challenging datasets. PLoS ONE 2017, 12, e0187943.

- Gard, N.A.; Chen, J.; Tang, P.; Yilmaz, A. Deep Learning and Anthropometric Plane Based Workflow Monitoring by Detecting and Tracking Workers. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-1, 149–154.

- Puttonen, E.; Lehtomäki, M.; Kaartinen, H.; Zhu, L.; Kukko, A.; Jaakkola, A. Improved Sampling for Terrestrial and Mobile Laser Scanner Point Cloud Data. Remote Sens. 2013, 5, 1754–1773.

- Lu, M.; Zhao, J.; Guo, Y.; Ma, Y. Accelerated Coherent Point Drift for Automatic Three-Dimensional Point Cloud Registration. IEEE Geosci. Remote Sens. Lett. 2016, 13, 162–166.

- Schneider, K.M. Using information extraction to build a directory of conference announcements. In Lecture Notes in Computer Science (including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2004.

- Wang, Z.; Chung, R. Recovering human pose in 3D by visual manifolds. In Proceedings of the International Conference on Pattern Recognition, Tsukuba, Japan, 11–15 November 2012.

- Possegger, H.; Mauthner, T.; Roth, P.M.; Bischof, H. Occlusion geodesics for online multi-object tracking. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Chellappa, R.; Sankaranarayanan, A.C.; Veeraraghavan, A.; Turaga, P. Statistical Methods and Models for Video-Based Tracking, Modeling, and Recognition. Found. Trends Signal Process. 2009, 3, 1–151.

- Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

- Yang, D.; Liu, Y.; Li, S.; Li, X.; Ma, L. Gear fault diagnosis based on support vector machine optimized by artificial bee colony algorithm. Mech. Mach. Theory 2015, 90, 219–229.

- Lorenz, R.; Hampshire, A.; Leech, R. Neuroadaptive Bayesian Optimization and Hypothesis Testing. Trends Cogn. Sci. 2017, 21, 155–167.

- Rosman, B.; Ramamoorthy, S. Learning spatial relationships between objects. Int. J. Robot. Res. 2011, 30, 1328–1342.

- Yuan, L.; Yu, Z.; Luo, W. Towards the next-generation GIS: A geometric algebra approach. Ann. GIS 2019, 25, 195–206.

- Kim, B.S.; Kang, B.G.; Choi, S.H.; Kim, T.G. Data modeling versus simulation modeling in the big data era: Case study of a greenhouse control system. Simulation 2017, 93, 579–594.

- Lee, H.; Lee, J.-K.; Park, S.; Kim, I. Translating building legislation into a computer-executable format for evaluating building permit requirements. Autom. Constr. 2016, 71, 49–61.

- Tanguy, L.; Tulechki, N.; Urieli, A.; Hermann, E.; Raynal, C. Natural language processing for aviation safety reports: From classification to interactive analysis. Comput. Ind. 2016, 78, 80–95.

- Tixier, A.J.-P.; Hallowell, M.R.; Rajagopalan, B.; Bowman, D. Automated content analysis for construction safety: A natural language processing system to extract precursors and outcomes from unstructured injury reports. Autom. Constr. 2016, 62, 45–56.

- Liu, K.; El-Gohary, N. Ontology-based semi-supervised conditional random fields for automated information extraction from bridge inspection reports. Autom. Constr. 2017, 81, 313–327.

- Zou, Y.; Kiviniemi, A.; Jones, S.W. Retrieving similar cases for construction project risk management using Natural Language Processing techniques. Autom. Constr. 2017, 80, 66–76.

| Title | Objectives |
|---|---|
| Modeling and Evaluating the Resilience of Critical Electrical Power Infrastructure to Extreme Weather Events [37] | This study established a framework for comprehending the impact of human responses on power systems resilience during severe weather events. |
| A fuzzy causal relational mapping and rough set-based model for context-specific human error rate estimation [38] | This study established a fuzzy rule-based causal relational mapping approach for deriving human error rates under different contexts. |
| Prediction of human error probabilities in a critical marine engineering operation on-board chemical tanker ship: The case of ship bunkering [39] | This study presents a Shipboard Operation Human Reliability Analysis (SOHRA) method for predicting human errors during bunkering operations. |
| A modified human reliability analysis for cargo operation in single point mooring (SPM) off-shore units [40] | This study established a framework for a human error assessment and reduction technique (HEART) with human uncertainties in decision-making. |
| A methodological extension to human reliability analysis for cargo tank cleaning operation on board chemical tanker ships [41] | This study developed a method for augmenting human reliability analysis in examining human reliability impacts on cargo tank cleaning operations. |

| Category | Example Studies |
|---|---|
| Perception Reliability: Reliability of the sensed spatiotemporal information about the self and environmental objects | Visual perception [42]; Auditory sense [43]; Taste sense [44]; Sense of smell [45]; Tactile and somatosensory [46] |
| Cognition Reliability: Impact of the self-sensed physical conditions of human bodies and environmental conditions on the decisions of human individuals and teams | Visual information [47,48]; Auditory information [49]; Taste [50]; Smell [51]; Body motions [52]; Temperature [53]; Space size (confined space) [54]; Motion speeds [55]; Frequencies of changes [56]; Interruptions/Distractions [57,58] |
| Response Reliability: Impact of the individual’s capability and team’s situational awareness on the risks and efficiency of collaborative operations of a team | Reaction time [59,60]; Time limits [61,62]; Physical demand [63,64]; The impact of the environmental conditions (performance shaping factors, PSFs) on the operational performance of individual workers [65] |

| Category | Example Studies |
|---|---|
| Perception Reliability: Reliability of the sensed spatiotemporal information about the self and environmental objects | Visual communication: Gestures [98]; Flag [99]; Signs [100]; Auditory communication [101]; Motion communication [102]; Somatosensory and visual and auditory [103] |
| Cognition Reliability: Impact of the self-sensed physical conditions of human bodies and environmental conditions on the decisions of human individuals and teams | Motions and positions [104]; Voice [105]; Impact of environmental conditions gained through team communication and collaboration on the team decisions; Relative motions [106,107]; Relative differences between workspaces [108]; Speeds of changes in remote workspaces [108] |
| Response Reliability: Impact of the individual’s capability and team’s situational awareness on the risks and efficiency of team operations | Team reaction time [109]; Task independence [110,111]; The impact of environmental conditions on team performance [112] |

| Title | Objectives |
|---|---|
| Convolutional neural network-based data anomaly detection method using multiple information for structural health monitoring [127] | This study established an anomaly detection method based on convolutional neural networks that mimic human vision and decision making. |
| Review of Bridge Structural Health Monitoring Aided by Big Data and Artificial Intelligence: From Condition Assessment to Damage Detection [128] | This study has established a method that uses big data (BD) and artificial intelligence (AI) techniques to solve the data interpretation problem. |
| Deep learning for data anomaly detection and data compression of a long-span suspension bridge [129] | This study has established a method for data compression and reconstruction based on deep learning. |
| Decentralized fault detection and isolation in wireless structural health monitoring systems using analytical redundancy [130] | This study has established a decentralized approach for automatic sensor fault detection and isolation for wireless SHM systems. |
| Reliability analysis and damage detection in high-speed naval craft based on structural health monitoring data [131] | This study has established a method for reliability analysis and damage detection of high-speed naval craft (HSNC) using SHM data. |

| Category | Type | Example Studies of Reliability Issues |
|---|---|---|
| Data | Visual and Geometric Data | Accuracy and level of detail of 3D imagery data reconstructed from photos [145]; spatial resolutions of images [146]; temporal resolution of videos [147] |
| Data | Reports | Errors in field notes [148]; omitted structural defects in inspection reports [149] |
| Data | Tabular Data | Missing and anomalous values of locations and structural condition ratings in the NBI database [148] |
| Data | Relational Database | Incorrect foreign keys for representing the related columns in two tables and linking the information from two tables [150]; redundant information items having inconsistent values at different parts of the database [151,152]; missing relationships between two tables where a link should exist between their common columns [153,154] |
| Data | Sensory Data | Errors or missing values in time series sensory data that measure structural vibrations [155] |
| Data | Metadata | Errors in the metadata for specifying the formats and organization of datasets, such as the meaning of columns of numbers in a data file [144]; errors in the metadata for specifying the time and data collection environments [156]; errors in the metadata for specifying the methods of processing and transforming the data, such as a transformation matrix for transforming point clouds to a global coordinate system [157] |
| Model | 2D/3D Maps | Location errors of points [158]; length and direction errors of lines representing paths on 2D or 3D maps [159]; level of detail of maps [160]; missing values in the properties of objects on 2D or 3D maps [161] |
| Model | Semantic-Rich Digital Models | Missing and additional objects [162]; dimensional and shape deviations from actual dimensions [163,164]; wrong type information of objects [165] |

| Category | Reliability Issues | Example Studies |
|---|---|---|
| Data Storage | Data and information losses due to compression of data for saving storage space | Point cloud compression research for reducing point cloud data sizes while keeping the geometric changes captured in the point clouds [189] |
| Data Storage | Losses of data and information due to data saving errors and hardware defects | Corrupted files or missing parts of files due to problematic file saving processes for saving data of large sizes or unique data structures, such as Gigabytes of imagery datasets [190] |
| Data Storage | Losses of data and information due to decaying hardware devices for storing the data files | Corrupted files or missing parts of files due to storage unit failures under unfavorable environmental conditions or decaying of storage media materials [191,192] |
| Data Exchange | Losses of data and information while converting files between different formats | Mapping the same objects stored in different formats based on properties of objects while accepting losses of semantics associated with specific properties uniquely stored in only one of the formats [193] |
| Data Exchange | Losses of data and information while updating the data schema | Mapping the entity definitions in different versions of a schema for automated updating of building information model files into files that use a new version of the schema [194,195] |
| Data Transmission | Losses of data and information due to problems in communication protocols | Losses of data packets due to problems in data and file transmission protocols, especially for transferring large images and data files [196] |

| Stage | Inputs/Outputs | Reliability Issues |
|---|---|---|
| Data Pre-Processing (Prepare the raw data in formats that are suitable for reliable feature extraction and pattern recognition) | | |
| Data Processing (Extract features and data patterns that are corresponding to objects and changes captured in the spatiotemporal patterns of features) | | |
| Data Interpretation (Analyze relationships between objects and events to interpret the correlated objects and events into meaningful change information of the facilities and workspaces) | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, Z.; Chen, T.; Meng, X.; Bao, Y.; Hu, L.; Zhao, R. A Critical Review for Trustworthy and Explainable Structural Health Monitoring and Risk Prognosis of Bridges with Human-In-The-Loop. Sustainability 2023, 15, 6389. https://doi.org/10.3390/su15086389
