The Effectiveness of Educational Robots in Improving Learning Outcomes: A Meta-Analysis

Abstract

1. Introduction

- Q1. Does the use of educational robots in the classroom improve student learning outcomes?

- Q2. Does the effect vary by:

  - (a) the educational level (pre-school, primary school, secondary school, higher education)?

  - (b) the subject area (social science and humanities, science)?

  - (c) the treatment duration (0–4 weeks, 4–8 weeks, above 8 weeks)?

  - (d) the type of assessment (exam mark, skill-based measure, attitude)?

  - (e) the robotic type (robotic kits, zoomorphic social robot, humanoid robot)?

2. Method

2.1. Literature Search and Inclusion Criteria

2.2. Coding Procedure

2.3. Quality Assessment

2.4. Statistical Analysis

2.5. Sensitivity Analysis and Moderator Analyses

3. Results

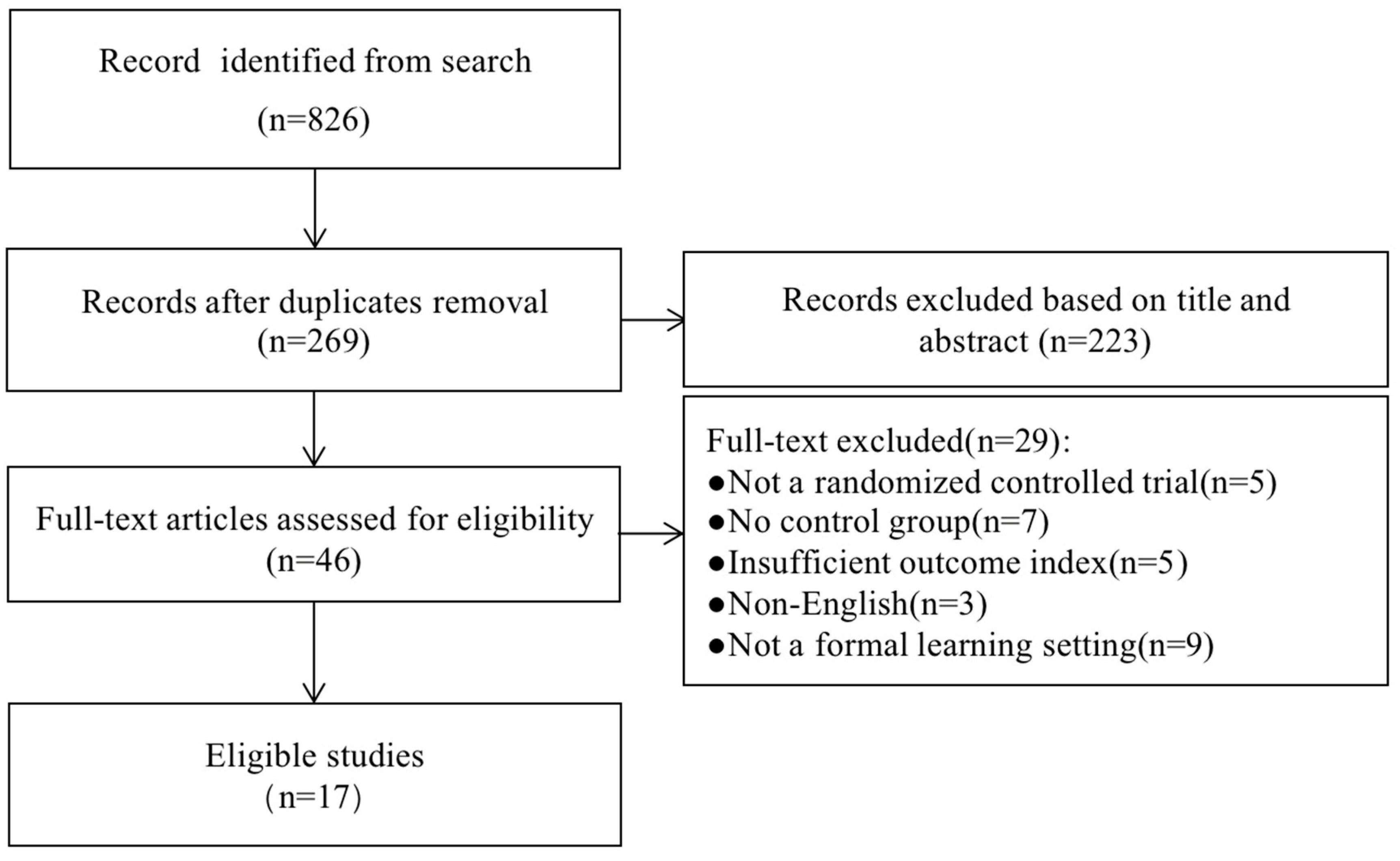

3.1. Search Results

3.2. Characteristics of Included Studies

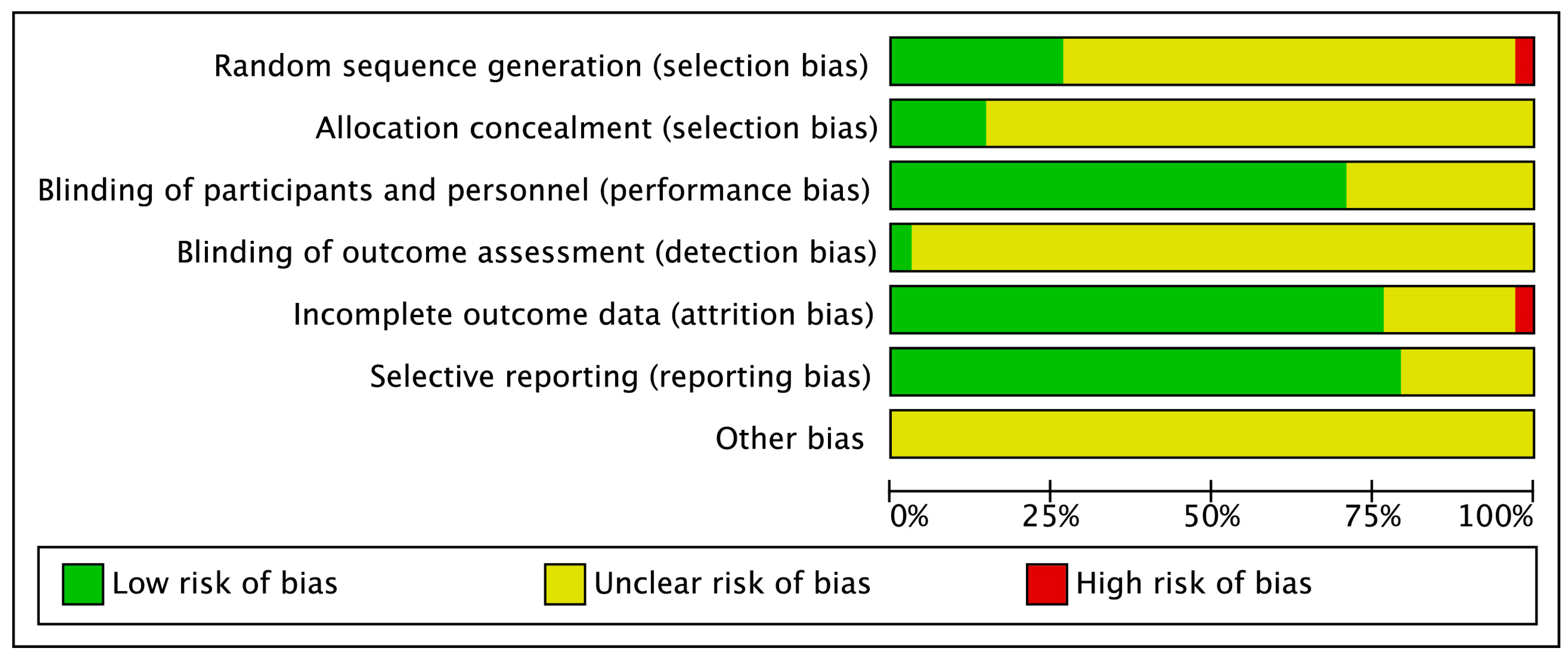

3.3. Study Quality

3.4. Random-Effect Model Meta-Analysis

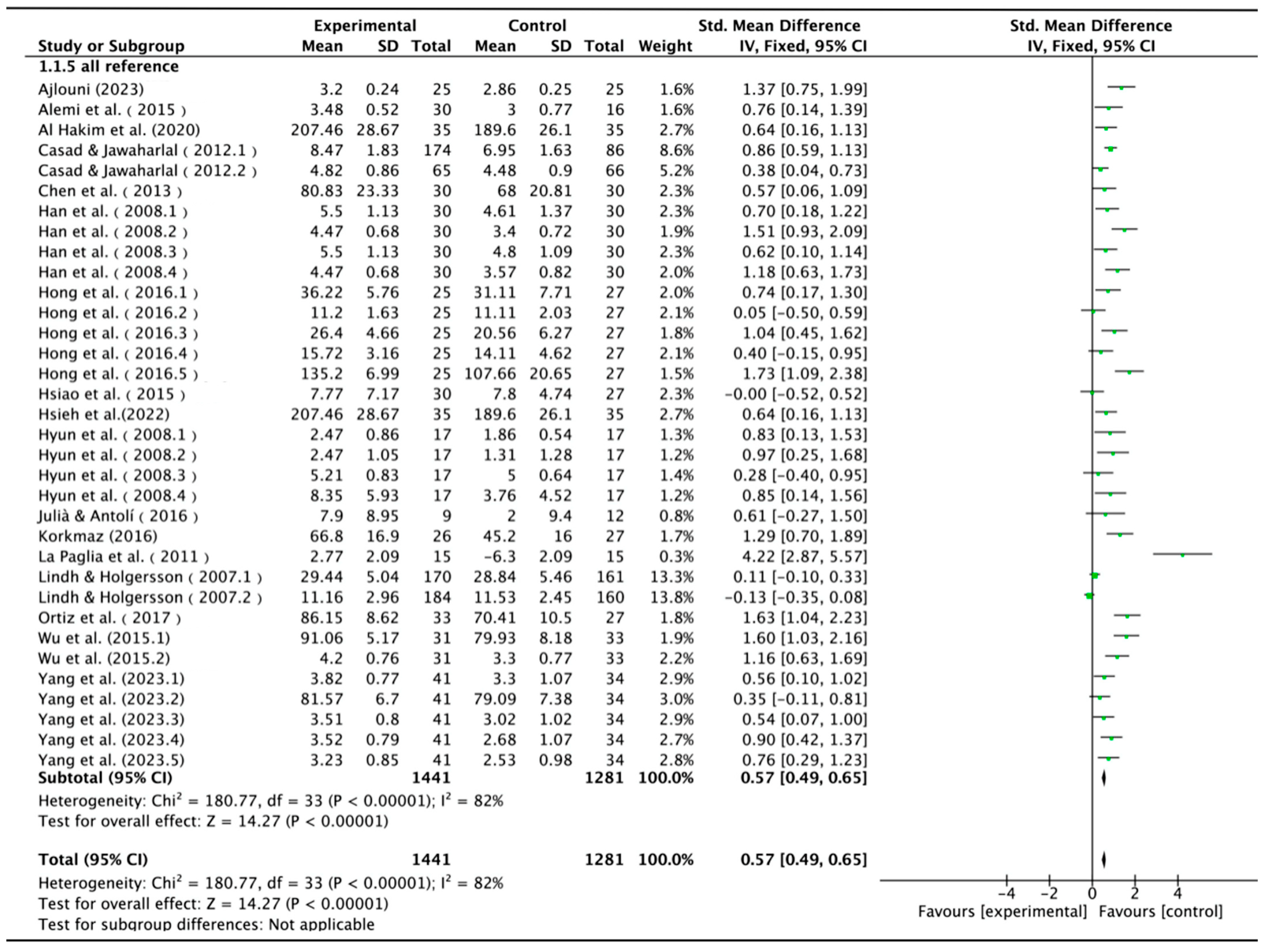

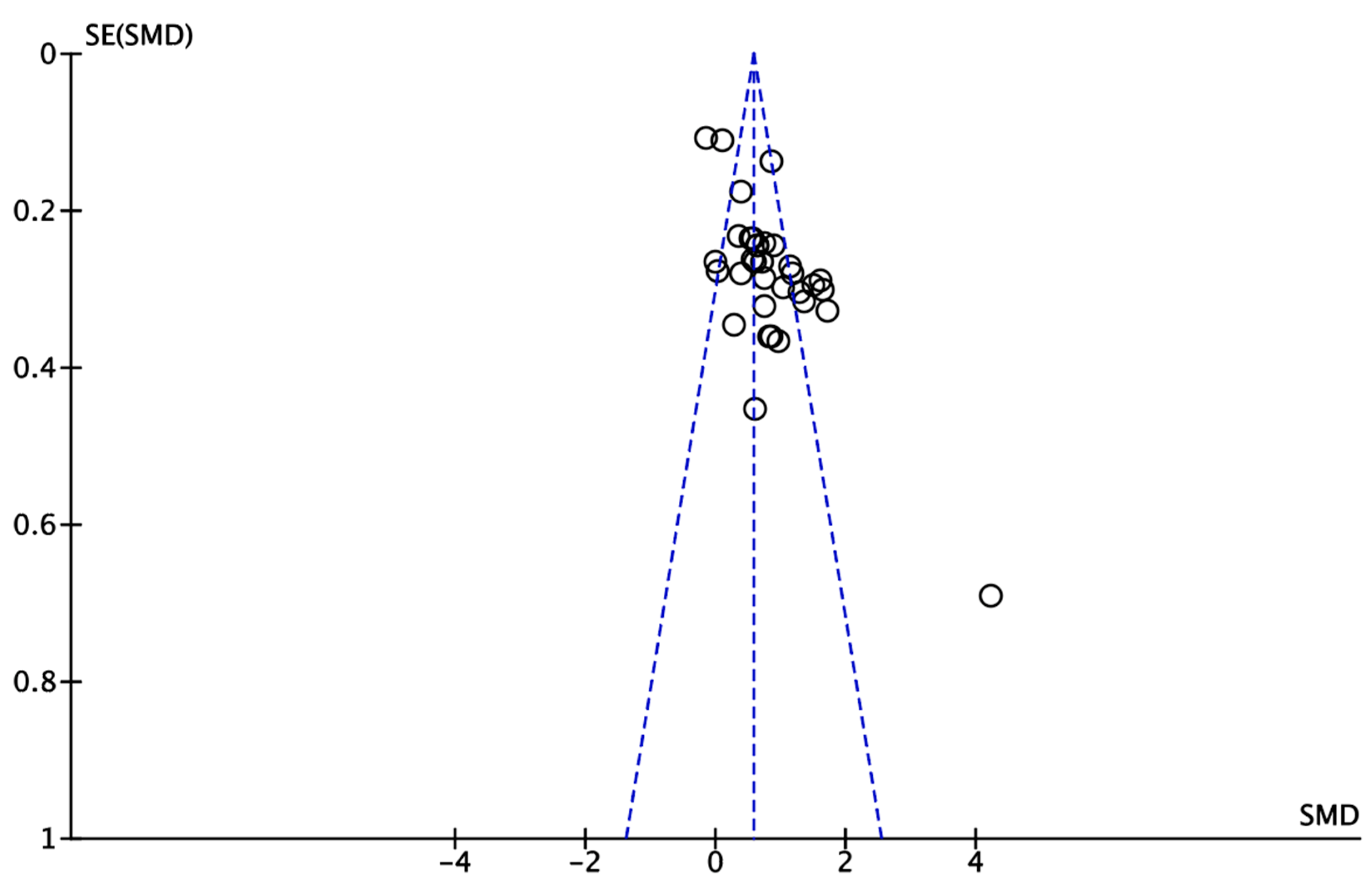

3.4.1. Main Effect

3.4.2. Moderator Analyses

4. Discussion

4.1. The Learning Effectiveness of Educational Robots

4.2. Moderators for Educational Robots on Learning Effectiveness

5. Conclusions

6. Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Sachs, J.D.; Schmidt-Traub, G.; Mazzucato, M.; Messner, D.; Nakicenovic, N.; Rockström, J. Six transformations to achieve the Sustainable Development Goals. Nat. Sustain. 2019, 2, 805–814.

2. Guenat, S.; Purnell, P.; Davies, Z.G.; Nawrath, M.; Stringer, L.C.; Babu, G.R.; Balasubramanian, M.; Ballantyne, E.E.F.; Bylappa, B.K.; Chen, B.; et al. Meeting sustainable development goals via robotics and autonomous systems. Nat. Commun. 2022, 13, 3559.

3. Papert, S.; Solomon, C. Twenty Things to Do with a Computer; Constructing Modern Knowledge Press: Cambridge, MA, USA, 1971.

4. Karim, M.E.; Lemaignan, S.; Mondada, F. A review: Can robots reshape K-12 STEM education? In Proceedings of the 2015 IEEE International Workshop on Advanced Robotics and Its Social Impacts (ARSO), Lyon, France, 30 June–2 July 2015; pp. 1–8.

5. Papadopoulos, I.; Lazzarino, R.; Miah, S.; Weaver, T.; Thomas, B.; Koulouglioti, C. A systematic review of the literature regarding socially assistive robots in pre-tertiary education. Comput. Educ. 2020, 155, 103924.

6. Li, D.; Rau, P.P.; Li, Y. A cross-cultural study: Effect of robot appearance and task. Int. J. Soc. Robot. 2010, 2, 175–186.

7. Mubin, O.; Stevens, C.J.; Shahid, S.; Al Mahmud, A.; Dong, J.J. A review of the applicability of robots in education. J. Technol. Educ. Learn. 2013, 1, 13.

8. Barker, B.S.; Ansorge, J. Robotics as means to increase achievement scores in an informal learning environment. J. Res. Technol. Educ. 2007, 39, 229–243.

9. Woods, S.; Dautenhahn, K.; Schulz, J. The design space of robots: Investigating children’s views. In Proceedings of the 13th IEEE International Workshop on Robot and Human Interactive Communication, Kurashiki, Japan, 22 September 2004; pp. 47–52.

10. Serholt, S.; Barendregt, W. Robots tutoring children: Longitudinal evaluation of social engagement in child-robot interaction. In Proceedings of the 9th Nordic Conference on Human-Computer Interaction (NordiCHI’16), Gothenburg, Sweden, 23–27 October 2016; pp. 1–10.

11. Obaid, M.; Aylett, R.; Barendregt, W.; Basedow, C.; Corrigan, L.J.; Hall, L.; Jones, A.; Kappas, A.; Kuster, D.; Paiva, A.; et al. Endowing a robotic tutor with empathic qualities: Design and pilot evaluation. Int. J. Hum. Robot. 2018, 15, 1850025.

12. Keane, T.; Chalmers, C.; Boden, M.; Williams, M. Humanoid robots: Learning a programming language to learn a traditional language. Technol. Pedagog. Educ. 2019, 28, 533–546.

13. Edwards, B.I.; Cheok, A.D. Why not robot teachers: Artificial intelligence for addressing teacher shortage. Appl. Artif. Intell. 2018, 32, 345–360.

14. Movellan, J.R.; Tanaka, F.; Fortenberry, B.; Aisaka, K. The RUBI/QRIO project: Origins, principles, and first steps. In Proceedings of the 4th IEEE International Conference on Development and Learning (ICDL-2005), Osaka, Japan, 19–21 July 2005; pp. 80–86.

15. Harmin, M.; Toth, M. Inspiring Active Learning: A Complete Handbook for Today’s Teachers; ASCD: Alexandria, VA, USA, 2006.

16. Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: Cambridge, MA, USA, 1980.

17. Papert, S. Mindstorms: Children, Computers and Powerful Ideas; Basic Books: New York, NY, USA, 1980.

18. Barak, M.; Zadok, Y. Robotics projects and learning concepts in science, technology and problem solving. Int. J. Technol. Des. Educ. 2009, 19, 289–307.

19. Hong, J.C.; Yu, K.C.; Chen, M.Y. Collaborative learning in technological project design. Int. J. Technol. Des. Educ. 2011, 21, 335–347.

20. Kubilinskienė, S.; Žilinskienė, I.; Dagienė, V.; Sinkevičius, V. Applying robotics in school education: A systematic review. Balt. J. Mod. Comput. 2017, 5, 50–69.

21. Rusk, N.; Resnick, M.; Berg, R.; Pezalla-Granlund, M. New pathways into robotics: Strategies for broadening participation. J. Sci. Educ. Technol. 2008, 17, 59–69.

22. Han, J.; Kim, D. r-Learning services for elementary school students with a teaching assistant robot. In Proceedings of the 2009 4th ACM/IEEE International Conference on Human-Robot Interaction (HRI), La Jolla, CA, USA, 11–13 March 2009; pp. 255–256.

23. Alimisis, D. Educational robotics: Open questions and new challenges. Themes Sci. Technol. Educ. 2013, 6, 63–71.

24. Baker, T.; Smith, L.; Anissa, N. Educ-AI-tion Rebooted? Exploring the Future of Artificial Intelligence in Schools and Colleges. NESTA. 2019. Available online: https://www.nesta.org.uk/report/education-rebooted/ (accessed on 8 February 2023).

25. Tuomi, I. The Impact of Artificial Intelligence on Learning, Teaching, and Education: Policies for the Future; JRC Science for Policy Report; Publications Office of the European Union: Luxembourg, 2018.

26. Hong, Z.W.; Huang, Y.M.; Hsu, M.; Shen, W.W. Authoring robot-assisted instructional materials for improving learning performance and motivation in EFL classrooms. J. Educ. Technol. Soc. 2016, 19, 337–349.

27. Toh, L.P.E.; Causo, A.; Tzuo, P.W.; Chen, I.M.; Yeo, S.H. A review on the use of robots in education and young children. J. Educ. Technol. Soc. 2016, 19, 148–163.

28. McDonald, S.; Howell, J. Watching, creating and achieving: Creative technologies as a conduit for learning in the early years. Br. J. Educ. Technol. 2012, 43, 641–651.

29. Mathers, N.; Goktogen, A.; Rankin, J.; Anderson, M. Robotic mission to mars: Hands-on, minds-on, web-based learning. Acta Astronaut. 2012, 80, 124–131.

30. Chin, K.Y.; Hong, Z.W.; Chen, Y.L. Impact of using an educational robot-based learning system on students’ motivation in elementary education. IEEE Trans. Learn. Technol. 2014, 7, 333–345.

31. Chang, C.W.; Lee, J.H.; Chao, P.Y.; Wang, C.Y.; Chen, G.D. Exploring the possibility of using humanoid robots as instructional tools for teaching a second language in primary school. J. Educ. Technol. Soc. 2010, 13, 13–24.

32. Fridin, M. Kindergarten social assistive robot: First meeting and ethical issues. Comput. Hum. Behav. 2014, 30, 262–272.

33. Uluer, P.; Akalın, N.; Köse, H. A new robotic platform for sign language tutoring. Int. J. Soc. Robot. 2015, 7, 571–585.

34. Benitti, F.B.V. Exploring the educational potential of robotics in schools: A systematic review. Comput. Educ. 2012, 58, 978–988.

35. Nugent, G.; Barker, B.; Grandgenett, N.; Adamchuk, V.I. Impact of robotics and geospatial technology interventions on youth STEM learning and attitudes. J. Res. Technol. Educ. 2010, 42, 391–408.

36. Spolaôr, N.; Benitti, F.B.V. Robotics applications grounded in learning theories on tertiary education: A systematic review. Comput. Educ. 2017, 112, 97–107.

37. Woo, H.; LeTendre, G.K.; Pham-Shouse, T.; Xiong, Y. The Use of Social Robots in Classrooms: A Review of Field-based Studies. Educ. Res. Rev. 2021, 33, 100388.

38. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009, 151, 264–269.

39. Hallgren, K.A. Computing inter-rater reliability for observational data: An overview and tutorial. Tutor. Quant. Methods Psychol. 2012, 8, 23.

40. Gwet, K.L. Benchmarking inter-rater reliability coefficients. In Handbook of Inter-Rater Reliability, 3rd ed.; Advanced Analytics, LLC: Gaithersburg, MD, USA, 2012; pp. 121–128.

41. Borenstein, M.; Cooper, H.; Hedges, L.; Valentine, J. Effect sizes for continuous data. In The Handbook of Research Synthesis and Meta-Analysis; Russell Sage Foundation: Manhattan, NY, USA, 2009; Volume 2, pp. 221–235.

42. Hedges, L.V.; Tipton, E.; Johnson, M.C. Robust variance estimation in meta-regression with dependent effect size estimates. Res. Synth. Methods 2010, 1, 39–65.

43. Scammacca, N.; Roberts, G.; Stuebing, K.K. Meta-analysis with complex research designs: Dealing with dependence from multiple measures and multiple group comparisons. Rev. Educ. Res. 2014, 84, 328–364.

44. Littell, J.H.; Corcoran, J.; Pillai, V. Systematic Reviews and Meta-Analysis; Oxford University Press: Oxford, UK, 2008.

45. Higgins, J.P.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.A. (Eds.) Cochrane Handbook for Systematic Reviews of Interventions; John Wiley & Sons: Hoboken, NJ, USA, 2019.

46. Collaboration, C. Review Manager (RevMan) Version 5.3; The Nordic Cochrane Centre: Copenhagen, Denmark, 2014.

47. Tutal, Ö.; Yazar, T. Flipped classroom improves academic achievement, learning retention and attitude towards course: A meta-analysis. Asia Pac. Educ. Rev. 2021, 22, 655–673.

48. Hedges, L.V. Fitting categorical models to effect sizes from a series of experiments. J. Educ. Stat. 1982, 7, 119–137.

49. Higgins, J.P.; Thompson, S.G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 2002, 21, 1539–1558.

50. Higgins, J.P.; Thompson, S.G.; Deeks, J.J.; Altman, D.G. Measuring inconsistency in meta-analyses. BMJ 2003, 327, 557–560.

51. Viechtbauer, W.; Cheung, M.W.L. Outlier and influence diagnostics for meta-analysis. Res. Synth. Methods 2010, 1, 112–125.

52. Li, X.; Dusseldorp, E.; Su, X.; Meulman, J.J. Multiple moderator meta-analysis using the R-package Meta-CART. Behav. Res. Methods 2020, 52, 2657–2673.

53. Casad, B.J.; Jawaharlal, M. Learning through guided discovery: An engaging approach to K-12 STEM education. In Proceedings of the 2012 ASEE Annual Conference & Exposition, San Antonio, TX, USA, 10–13 June 2012.

54. Han, J.H.; Jo, M.H.; Jones, V.; Jo, J.H. Comparative study on the educational use of home robots for children. J. Inf. Proc. Syst. 2008, 4, 159–168.

55. Ajlouni, A. The Impact of Instruction-Based LEGO WeDo 2.0 Robotic and Hypermedia on Students’ Intrinsic Motivation to Learn Science. Int. J. Interact. Mob. Technol. 2023, 17, 22–39.

56. Alemi, M.; Meghdari, A.; Ghazisaedy, M. The impact of social robotics on L2 learners’ anxiety and attitude in English vocabulary acquisition. Int. J. Soc. Robot. 2015, 7, 523–535.

57. Al Hakim, V.G.; Yang, S.H.; Tsai, T.H.; Lo, W.H.; Wang, J.H.; Hsu, T.C.; Chen, G.D. Interactive Robot as Classroom Learning Host to Enhance Audience Participation in Digital Learning Theater. In Proceedings of the 2020 IEEE 20th International Conference on Advanced Learning Technologies (ICALT), Tartu, Estonia, 6–9 July 2020; pp. 95–97.

58. Chen, G.D.; Nurkhamid; Wang, C.Y.; Yang, S.H.; Lu, W.Y.; Chang, C.K. Digital learning playground: Supporting authentic learning experiences in the classroom. Interact. Learn. Environ. 2013, 21, 172–183.

59. Hsiao, H.S.; Chang, C.S.; Lin, C.Y.; Hsu, H.L. “iRobiQ”: The influence of bidirectional interaction on kindergarteners’ reading motivation, literacy, and behavior. Interact. Learn. Environ. 2015, 23, 269–292.

60. Hsieh, M.C.; Pan, H.C.; Hsieh, S.W.; Hsu, M.J.; Chou, S.W. Teaching the concept of computational thinking: A STEM-based program with tangible robots on project-based learning courses. Front. Psychol. 2022, 12, 6628.

61. Hyun, E.J.; Kim, S.Y.; Jang, S.; Park, S. Comparative study of effects of language instruction program using intelligence robot and multimedia on linguistic ability of young children. In Proceedings of the RO-MAN 2008-The 17th IEEE International Symposium on Robot and Human Interactive Communication, Munich, Germany, 1–3 August 2008; pp. 187–192.

62. Julià, C.; Antolí, J.Ò. Spatial ability learning through educational robotics. Int. J. Technol. Des. Educ. 2016, 26, 185–203.

63. Korkmaz, Ö. The Effect of Lego Mindstorms Ev3 Based Design Activities on Students’ Attitudes towards Learning Computer Programming, Self-Efficacy Beliefs and Levels of Academic Achievement. Balt. J. Mod. Comput. 2016, 4, 994–1007.

64. La Paglia, F.; Rizzo, R.; La Barbera, D. Use of robotics kits for the enhancement of metacognitive skills of mathematics: A possible approach. Annu. Rev. Cyberther. Telemed. 2011, 167, 26–30.

65. Lindh, J.; Holgersson, T. Does Lego training stimulate pupils’ ability to solve logical problems? Comput. Educ. 2007, 49, 1097–1111.

66. Ortiz, O.O.; Franco, J.Á.P.; Garau, P.M.A.; Martín, R.H. Innovative mobile robot method: Improving the learning of programming languages in engineering degrees. IEEE Trans. Educ. 2016, 60, 143–148.

67. Wu, W.C.V.; Wang, R.J.; Chen, N.S. Instructional design using an in-house built teaching assistant robot to enhance elementary school English-as-a-foreign-language learning. Interact. Learn. Environ. 2015, 23, 696–714.

68. Yang, F.C.O.; Lai, H.M.; Wang, Y.W. Effect of augmented reality-based virtual educational robotics on programming students’ enjoyment of learning, computational thinking skills, and academic achievement. Comput. Educ. 2023, 195, 104721.

69. Cohen, L.; Lee, C. Instantaneous frequency, its standard deviation and multicomponent signals. In Advanced Algorithms and Architectures for Signal Processing III; Society of Photo Optical: Bellingham, WA, USA, 1988; pp. 186–208.

70. Cheng, Y.W.; Sun, P.C.; Chen, N.S. The essential applications of educational robot: Requirement analysis from the perspectives of experts, researchers and instructors. Comput. Educ. 2018, 126, 399–416.

71. Cheung, A.C.; Slavin, R.E. The effectiveness of educational technology applications for enhancing mathematics achievement in K-12 classrooms: A meta-analysis. Educ. Res. Rev. 2013, 9, 88–113.

72. Slavin, R.; Madden, N.A. Measures inherent to treatments in program effectiveness reviews. J. Res. Educ. Eff. 2011, 4, 370–380.

73. Strelan, P.; Osborn, A.; Palmer, E. The flipped classroom: A meta-analysis of effects on student performance across disciplines and education levels. Educ. Res. Rev. 2020, 30, 100314.

74. Belpaeme, T.; Baxter, P.; De Greeff, J.; Kennedy, J.; Read, R.; Neerincx, M.; Baroni, I.; Looije, R.; Zelati, M.C. Child-robot interaction: Perspectives and challenges. In Proceedings of the 5th International Conference on Social Robotics, Bristol, UK, 27–29 October 2013; pp. 452–459.

75. Belpaeme, T.; Kennedy, J.; Ramachandran, A.; Scassellati, B.; Tanaka, F. Social robots for education: A review. Sci. Robot. 2018, 3, eaat5954.

76. Fernández-Llamas, C.; Conde, M.A.; Rodríguez-Lera, F.J.; Rodríguez-Sedano, F.J.; García, F. May I teach you? Students’ behavior when lectured by robotic vs. human teachers. Comput. Hum. Behav. 2018, 80, 460–469.

77. Hashimoto, T.; Kato, N.; Kobayashi, H. Development of educational system with the android robot SAYA and evaluation. Int. J. Adv. Robot. Syst. 2011, 8, 28.

78. Serholt, S. Breakdowns in children’s interactions with a robotic tutor: A longitudinal study. Comput. Hum. Behav. 2018, 81, 250–264.

79. Whittier, L.E.; Robinson, M. Teaching evolution to non-English proficient students by using Lego robotics. Am. Second. Educ. 2007, 35, 19–28.

80. van Straten, C.L.; Peter, J.; Kühne, R. Child-robot relationship formation: A narrative review of empirical research. Int. J. Soc. Robot. 2020, 12, 325–344.

| Study (Year) | Sample Size (E/C) | Discipline | Educational Level | Treatment Duration | Assessment | Robotic Type |
|---|---|---|---|---|---|---|
| Ajlouni (2023) [55] | 25/25 | Science | Primary education | 8 weeks | Intrinsic motivation | LEGO WeDo 2.0 robotic |
| Alemi et al. (2015) [56] | 30/16 | English | Secondary education | 5 weeks | Anxiety scores | Humanoid robot |
| Al Hakim et al. (2020) [57] | 24/26 | Theater | Secondary education | 6 weeks | Official drama performance | Social robot |
| Casad and Jawaharlal (2012) [53] | 174/86 | Robotics program | Primary education | 25 weeks | General academic performance | STEM robotic kits |
| | 65/66 | Robotics program | Primary education | 6 months | Attitudes toward math | STEM robotic kits |
| Chen et al. (2013) [58] | 30/30 | English | Primary education | 50 min | Learning achievement | Social robot |
| Han et al. (2008) [54] | 30/30 | English | Primary education | 40 min | Post-test only achievement | IROBI |
| | 30/30 | English | Primary education | 40 min | Interest | IROBI |
| | 30/30 | English | Primary education | 40 min | Learning achievement | IROBI |
| | 30/30 | English | Primary education | 40 min | Interest | IROBI |
| Hong et al. (2016) [26] | 25/27 | English | Primary education | 2 h | Listening | Humanoid robot |
| | 25/27 | English | Primary education | 2 h | Speaking | Humanoid robot |
| | 25/27 | English | Primary education | 2 h | Reading | Humanoid robot |
| | 25/27 | English | Primary education | 2 h | Writing | Humanoid robot |
| | 25/27 | English | Primary education | 2 h | Learning motivation | Humanoid robot |
| Hsiao et al. (2015) [59] | 30/27 | Chinese | Pre-school education | 8 weeks | Reading literacy | Social robot iRobiQ |
| Hsieh et al. (2022) [60] | 35/35 | Computer concepts | Higher education | 8 weeks | Computational thinking capabilities | Humanoid robot |
| Hyun et al. (2008) [61] | 17/17 | Korea linguistic ability | Pre-school education | 7 weeks | Story making | Social robot iRobiQ |
| | 17/17 | Korea linguistic ability | Pre-school education | 7 weeks | Story understanding | Social robot iRobiQ |
| | 17/17 | Korea linguistic ability | Pre-school education | 7 weeks | Vocabulary | Social robot iRobiQ |
| | 17/17 | Korea linguistic ability | Pre-school education | 7 weeks | Word recognition | Social robot iRobiQ |
| Julià and Antolí (2016) [62] | 9/12 | Mathematics | Primary education | 8 weeks | Spatial ability average scores | Lego |
| Korkmaz (2016) [63] | 27/26 | Computer programming | Higher education | 8 weeks | Academic achievement test | Lego Mindstorms Ev3 |
| La Paglia et al. (2011) [64] | 15/15 | Mathematics | Secondary education | 10 weeks | Metacognitive control | Robotic kits |
| Lindh and Holgersson (2007) [65] | 170/161 | Programmable construction | Primary education | 12 months | Mathematical problems | Lego |
| | 184/160 | Programmable construction | Primary education | 12 months | Logical problems | Lego |
| Ortiz et al. (2017) [66] | 33/27 | Computer programming | Higher education | 16 weeks | The structure of the vehicle and its components | Robotic kits |
| Wu et al. (2015) [67] | 31/33 | English | Primary education | 4 lecture hours | Learning outcomes | Humanoid robot |
| | 31/33 | English | Primary education | 4 lecture hours | Learning motivation and interest | Humanoid robot |
| Yang et al. (2023) [68] | 41/34 | Information management | Higher education | 5 weeks | Academic achievement | AR Bot |
| | 41/34 | Information management | Higher education | 5 weeks | Enjoyment | AR Bot |
| | 41/34 | Information management | Higher education | 5 weeks | Problem decomposition skill | AR Bot |
| | 41/34 | Information management | Higher education | 5 weeks | Algorithm design skill | AR Bot |
| | 41/34 | Information management | Higher education | 5 weeks | Algorithm efficiency skill | AR Bot |

| Moderator Variables | k | SMD | Z | I² (%) | p |
|---|---|---|---|---|---|
| Educational level | | | | 73.5 | 0.01 * |
| 1. Pre-school | 5 | 0.55 | 2.64 | | |
| 2. Primary school | 18 | 0.78 | 5.13 | | |
| 3. Secondary school | 3 | 1.69 | 2.14 | | |
| 4. Higher education | 8 | 1.42 | 6.76 | | |
| Subject area | | | | 0 | 0.69 |
| 1. Social science and humanities | 19 | 0.80 | 7.05 | | |
| 2. Science | 15 | 0.87 | 3.46 | | |
| Treatment duration | | | | 0 | 0.57 |
| 1. 0–4 weeks | 12 | 0.92 | 6.17 | | |
| 2. 4–8 weeks | 13 | 0.72 | 5.01 | | |
| 3. Above 8 weeks | 9 | 0.79 | 3.43 | | |
| Type of assessment | | | | 83.7 | 0.046 * |
| 1. Exam mark | 11 | 0.97 | 4.84 | | |
| 2. Skill-based measure | 16 | 0.49 | 3.56 | | |
| 3. Attitude | 7 | 1.23 | 8.08 | | |
| Robotic type | | | | 0 | 0.54 |
| 1. Robotic kits | 9 | 0.88 | 3.56 | | |
| 2. Zoomorphic social robot | 11 | 0.71 | 5.36 | | |
| 3. Humanoid robot | 14 | 0.91 | 4.53 | | |
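The subgroup rows of the moderator table give a pooled SMD and a Z statistic but no interval. As a rough reading aid, the sketch below backs out an approximate standard error, 95% confidence interval, and two-sided p-value for the educational-level subgroups. It assumes that each Z is the usual Wald statistic, Z = SMD / SE, which the table itself does not state. The helper name `summarize` is hypothetical, and because the inputs are rounded to two decimals the outputs are only approximate.

```python
from statistics import NormalDist

# (label, k, SMD, Z) copied from the educational-level rows of the moderator table.
SUBGROUPS = [
    ("Pre-school", 5, 0.55, 2.64),
    ("Primary school", 18, 0.78, 5.13),
    ("Secondary school", 3, 1.69, 2.14),
    ("Higher education", 8, 1.42, 6.76),
]


def summarize(smd, z, level=0.95):
    """Recover SE, a confidence interval, and a two-sided p-value.

    Assumption (not stated in the table): Z = SMD / SE.
    """
    se = smd / z
    crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level=0.95
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))
    return se, (smd - crit * se, smd + crit * se), p_two_sided


for label, k, smd, z in SUBGROUPS:
    se, (lo, hi), p = summarize(smd, z)
    print(f"{label:<17} k={k:<2} SMD={smd:.2f} SE={se:.3f} "
          f"95% CI=[{lo:.2f}, {hi:.2f}] p={p:.4f}")
```

Under this assumption, the secondary-school subgroup (k = 3) has the largest SMD but also by far the widest interval, so its estimate should be read with more caution than the others.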


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, K.; Sang, G.-Y.; Huang, L.-Z.; Li, S.-H.; Guo, J.-W. The Effectiveness of Educational Robots in Improving Learning Outcomes: A Meta-Analysis. Sustainability 2023, 15, 4637. https://doi.org/10.3390/su15054637
