Improving NoSQL Spatial-Query Processing with Server-Side In-Memory R*-Tree Indexes for Spatial Vector Data

Abstract

1. Introduction

2. Related Work

2.1. Sustainability in Geospatial Databases

2.2. NoSQL Databases and HBase

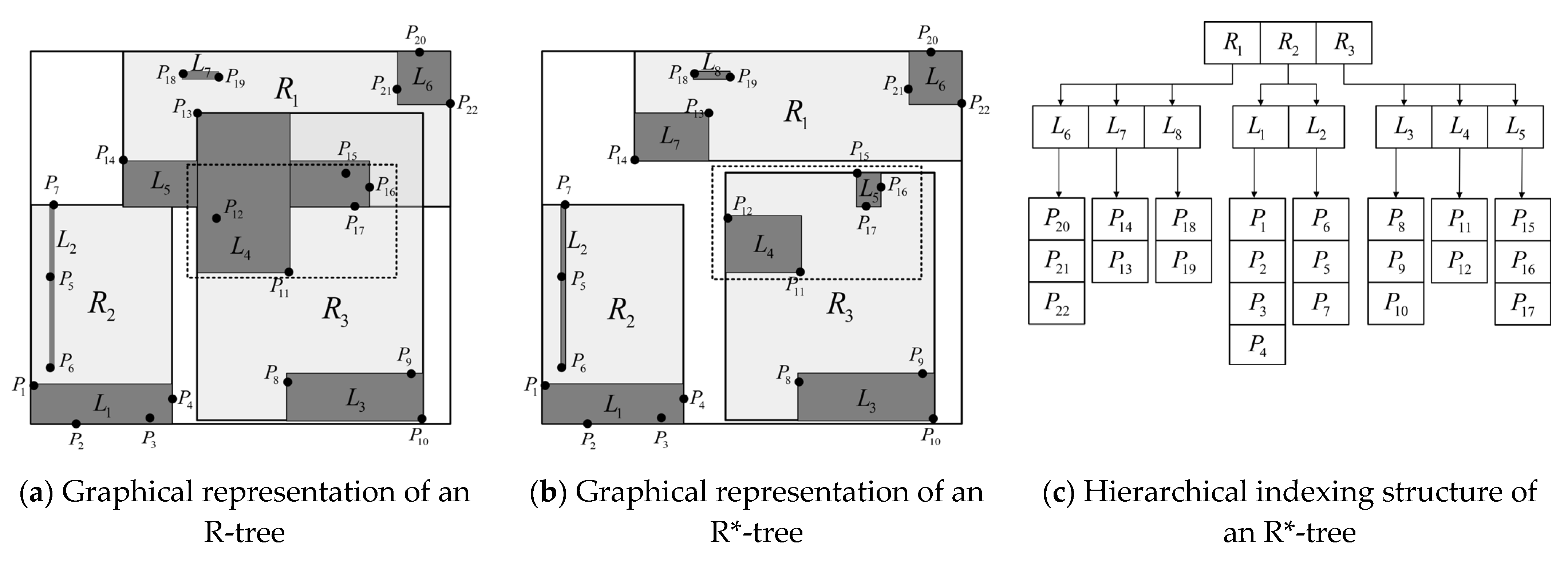

2.3. R*-Tree and R-Tree Family

2.4. Spatial-Query Processing on NoSQL Databases

3. Methodology

3.1. Application Types

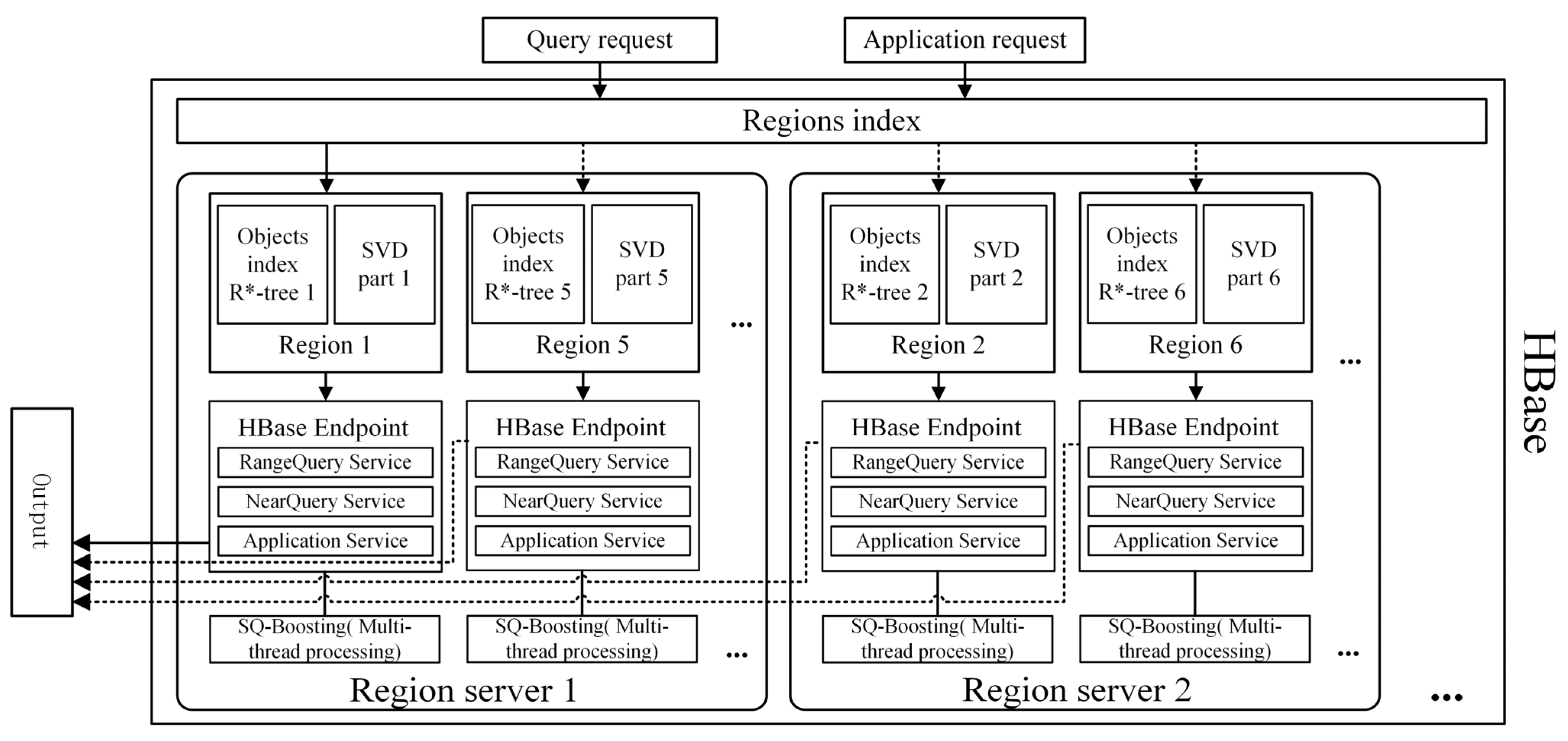

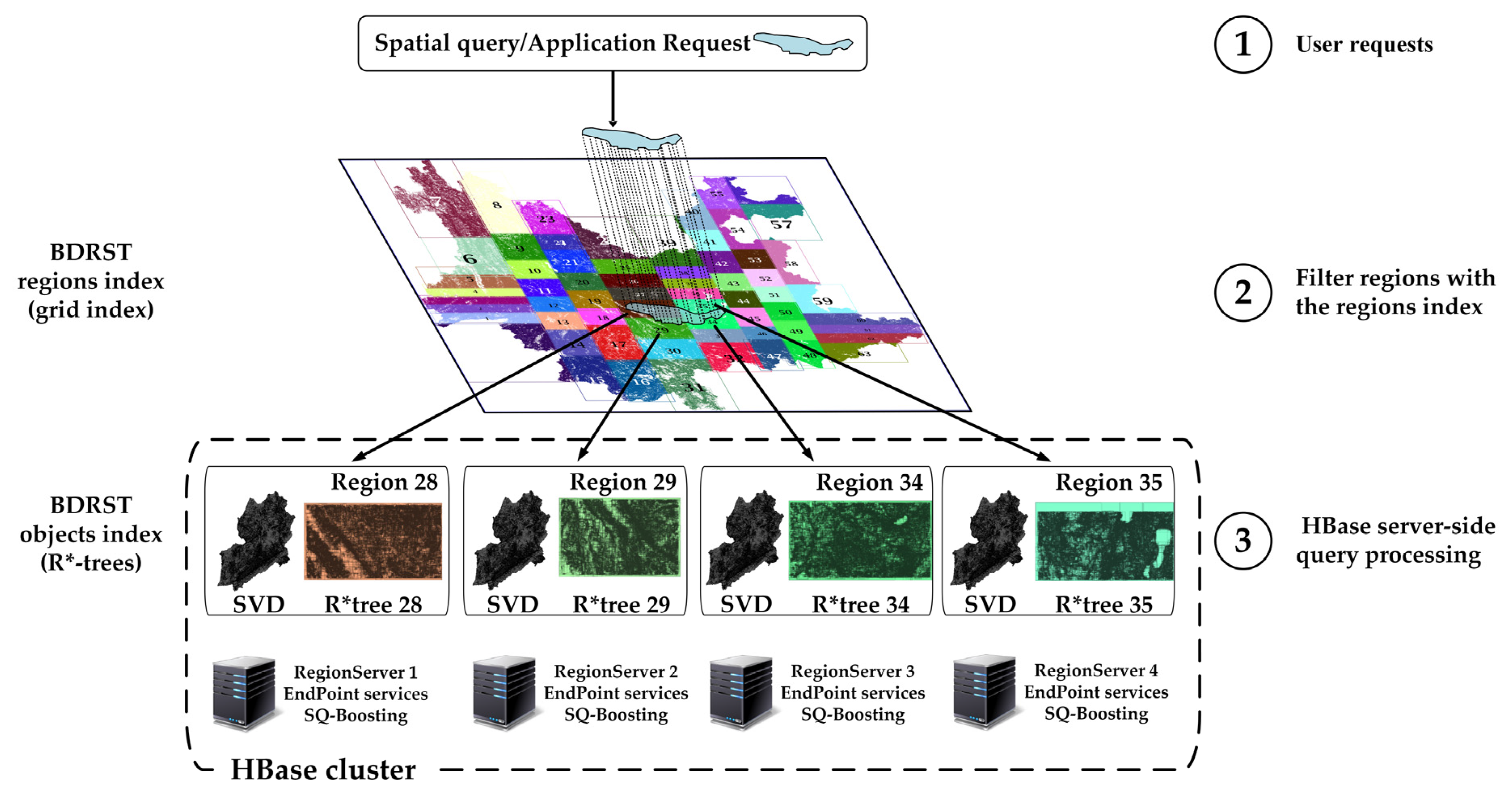

3.2. Architecture Overview

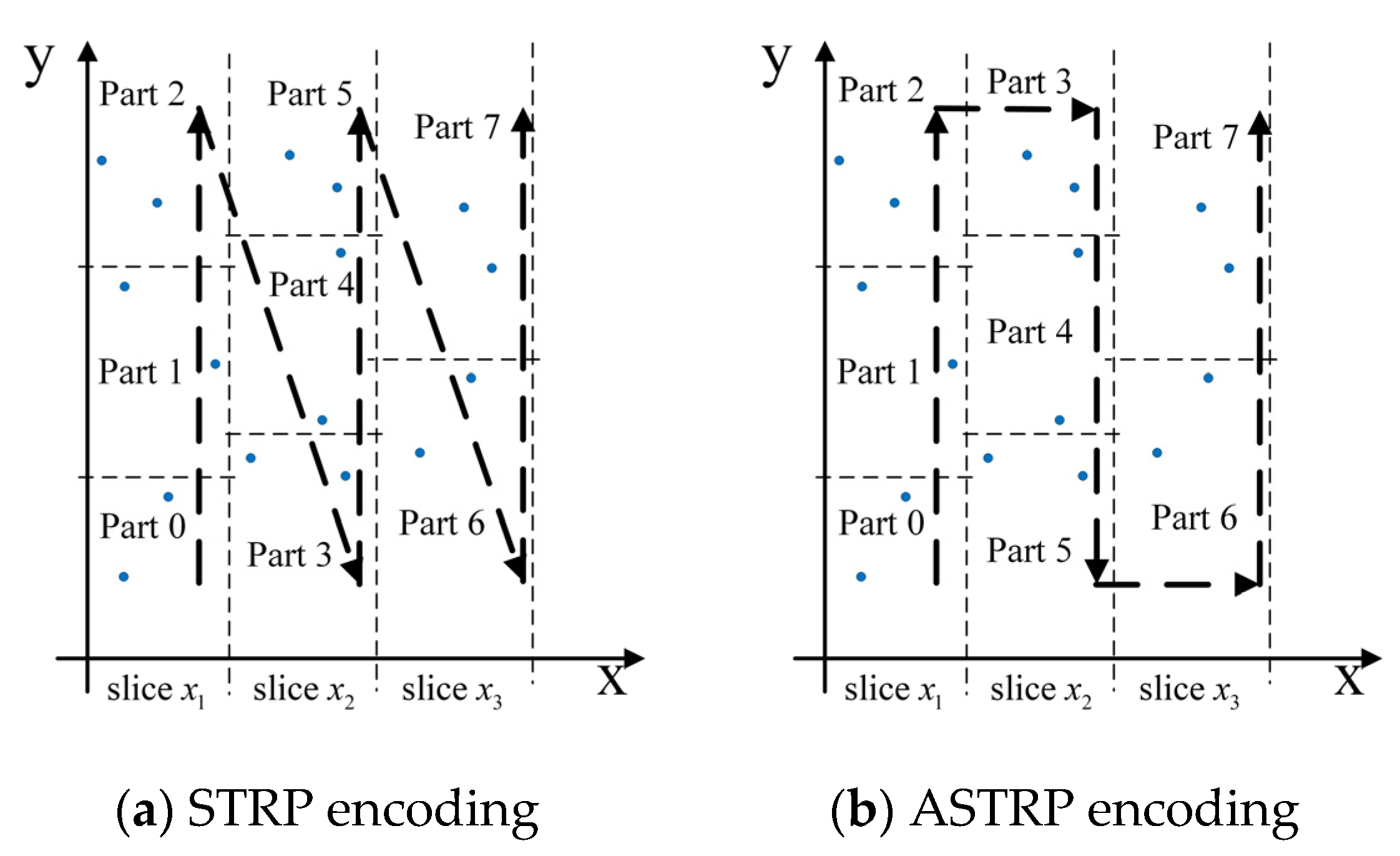

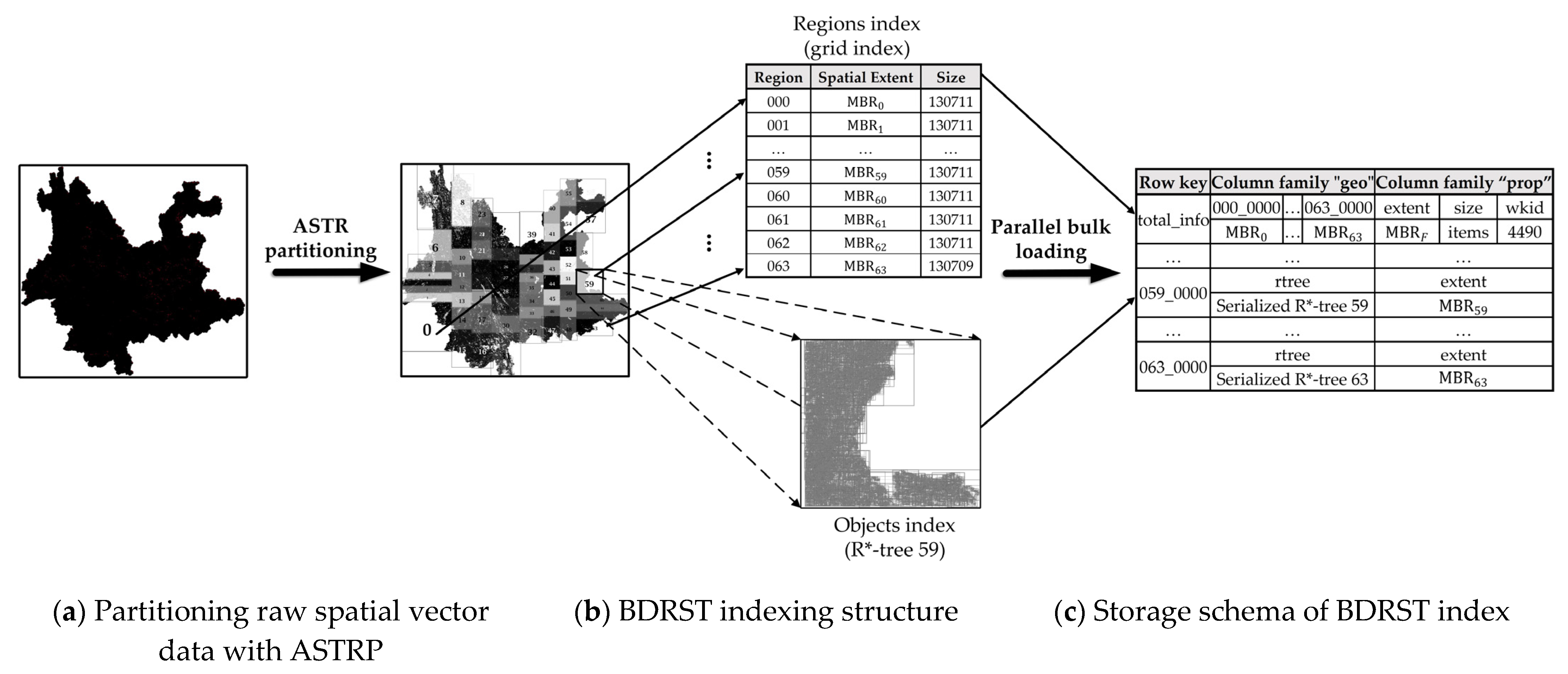

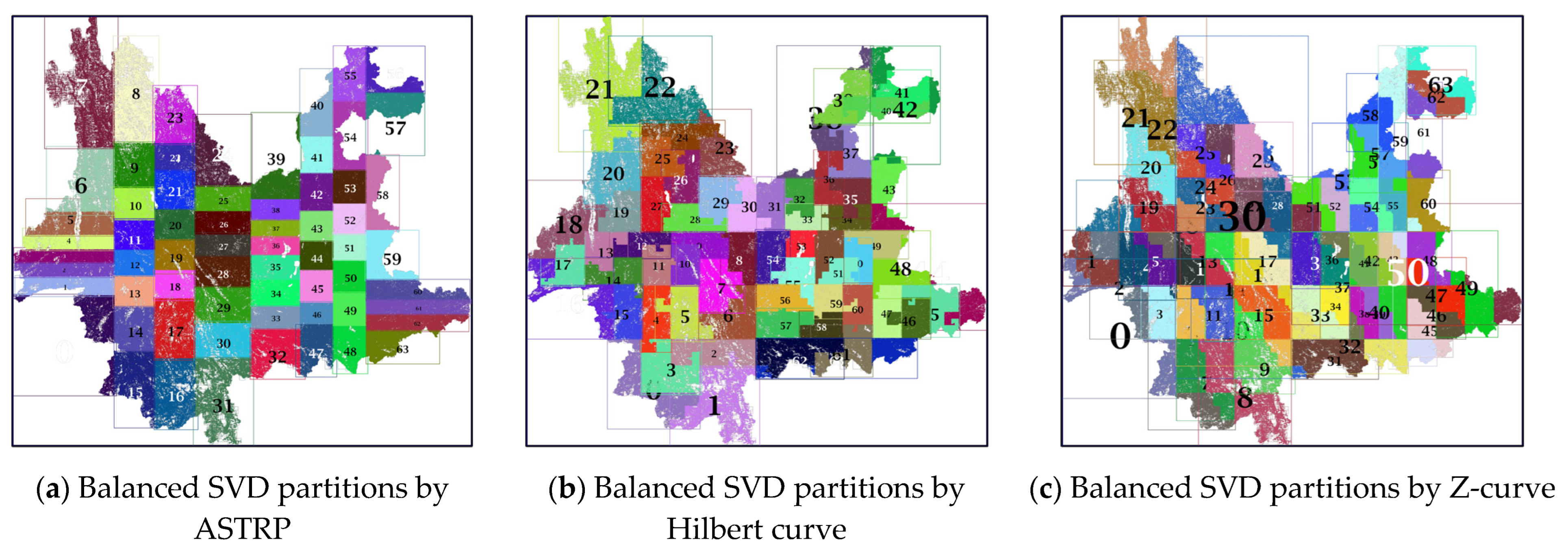

3.3. SVD Partitioning and Balancing

3.4. BDRST Indexing Approach for SVD

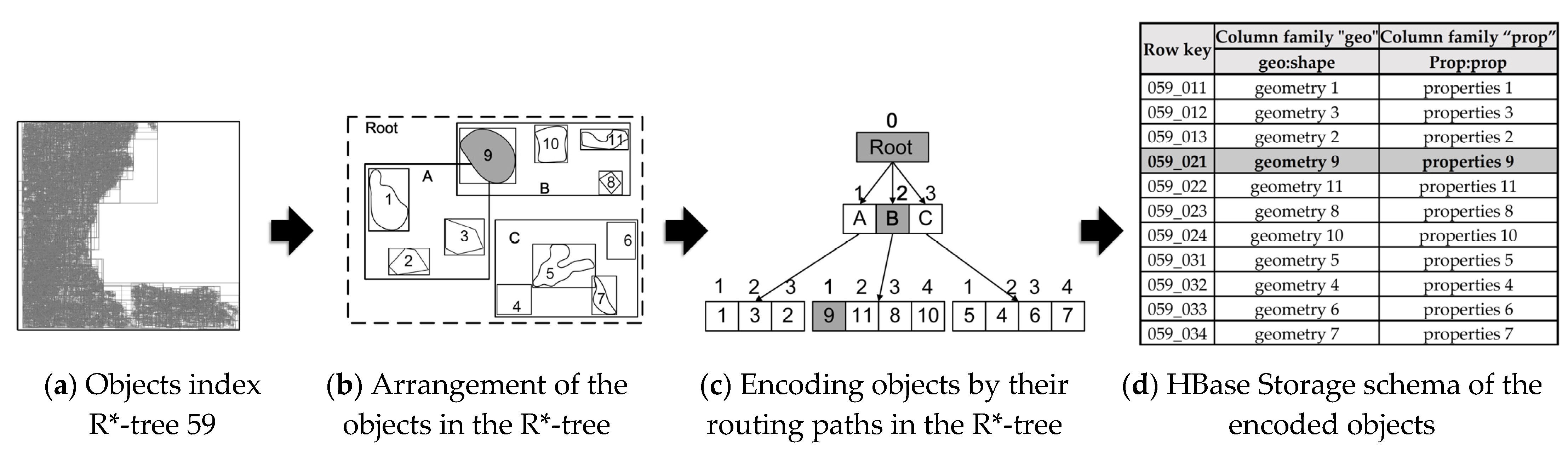

3.5. Storage Schema of BDRST Index on HBase

3.6. Server-Side Parallel Spatial-Query Processing on HBase

3.6.1. Processing Phases

3.6.2. Spatial-Query Processing Based on HBase Endpoint

**Algorithm 1** SSCP-based spatial query processing.

**Input:** input geometry, spatial predicate, limit

**Output:** query result

Step 1: Filter regions with the regions index.

- Get the GMR gmr from the HBase table.
- For each region entry in gmr: if the entry matches the spatial predicate with the input geometry, add its partid to the qualified region list regionlist.
- The client launches a batch call that broadcasts the query to the endpoint services on the regions addressed in regionlist.

Step 2: Filter objects with the objects index in parallel.

- For each region in regionlist, concurrently:
  - Get the byte array rtreebytes of the local R*-tree from the LMR.
  - Deserialize rtreebytes and create an R*-tree instance.
  - Query the R*-tree with the input geometry, spatial predicate, and limit, and get the index query result.
  - If the query requires a geometry filter:
    - If the size of the index query result exceeds the threshold: split it into n subsets according to the SQ-boosting configuration; for each subset, start a new thread to perform geoFilter(partid, subset) and append the geoFilter result to the result set.
    - Otherwise, perform geoFilter(partid, index query result) and append the result to the result set.
  - Otherwise, append the spatial objects mapped in the index query result to the result set.
- Return the query result to the client.

function geoFilter(partid, candidate set):

- For each object identifier code in the candidate set:
  - Get the geometry geo from the DTR by row key “partid_code”.
  - If geo matches the spatial predicate with the input geometry, append the raw spatial vector data to the local result set.
- Return the local result set.
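The two-step flow of Algorithm 1 can be sketched in a few lines of Python. This is only an illustrative sketch under loud assumptions: plain dictionaries stand in for the HBase table, axis-aligned boxes stand in for geometries, a linear scan stands in for the R*-tree query, and every name (`GMR`, `REGIONS`, `region_endpoint`, `spatial_query`, `threshold`, `n_subsets`) is hypothetical rather than taken from the authors' implementation. The limit parameter is omitted for brevity.

```python
from concurrent.futures import ThreadPoolExecutor

def intersects(a, b):
    """True when two boxes (xmin, ymin, xmax, ymax) share at least one point."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

# Hypothetical GMR stand-in: partition id -> spatial extent of that region.
GMR = {"000": (0, 0, 10, 10), "001": (10, 0, 20, 10)}

# Hypothetical per-region data: partid -> {object code: box}. A real region
# would hold a serialized R*-tree (LMR) plus one DTR row per object.
REGIONS = {
    "000": {"0001": (1, 1, 2, 2), "0002": (8, 8, 9, 9)},
    "001": {"0001": (11, 1, 12, 2), "0002": (18, 8, 19, 9)},
}

def geo_filter(partid, codes, query_box):
    """Exact geometry test on candidates, addressed by row key 'partid_code'."""
    hits = []
    for code in codes:
        geo = REGIONS[partid][code]
        if intersects(geo, query_box):
            hits.append(f"{partid}_{code}")
    return hits

def region_endpoint(partid, query_box, threshold=1, n_subsets=2):
    """Step 2 for one region: index lookup, then the geometry filter."""
    # Assumption: a linear scan replaces the query on the local R*-tree.
    candidates = [c for c, box in REGIONS[partid].items()
                  if intersects(box, query_box)]
    if len(candidates) <= threshold:
        return geo_filter(partid, candidates, query_box)
    # Large candidate sets are split and filtered by several threads.
    subsets = [candidates[i::n_subsets] for i in range(n_subsets)]
    with ThreadPoolExecutor(max_workers=n_subsets) as pool:
        parts = pool.map(lambda s: geo_filter(partid, s, query_box), subsets)
        return [key for part in parts for key in part]

def spatial_query(query_box):
    """Step 1 filters regions; step 2 runs concurrently on qualified regions."""
    regionlist = [p for p, extent in GMR.items() if intersects(extent, query_box)]
    with ThreadPoolExecutor(max_workers=max(1, len(regionlist))) as pool:
        parts = pool.map(lambda p: region_endpoint(p, query_box), regionlist)
        return sorted(key for part in parts for key in part)
```

Because the stand-in geometries are boxes, the geometry filter here repeats the index test; with real polygons the index step would compare bounding rectangles only and the filter would run the exact predicate.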

3.6.3. Processing of Query-Based Applications

**Algorithm 2** Land-use compliance review processing function.

**Input:** partition identifier partid, index query result set, input geometry q

**Output:** land-use compliance review result

- For each object code code in the index query result set:
  - Get the geometry geo and the raw SVD from the DTR by row key “partid_code”.
  - If geo intersects with q:
    - Calculate the intersection resgeo between geo and q.
    - Calculate the planar and geodesic area of resgeo.
    - Put the areas and other statistical data into a result item and format it.
    - Append the result item to the result set.
- Return the result set to the client.
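The per-object loop of Algorithm 2 can be illustrated with a small Python sketch. It rests on simplifying assumptions that are not from the paper: geometries are axis-aligned boxes, only the planar area is computed (the geodesic area would need a geodesy library), the DTR is a plain dictionary, and the names `box_intersection`, `compliance_review`, and the result keys are hypothetical.

```python
def box_intersection(a, b):
    """Overlap of two boxes (xmin, ymin, xmax, ymax), or None if there is none.

    Boxes that only touch along an edge or corner are treated as not
    intersecting, because their overlap has zero area.
    """
    xmin, ymin = max(a[0], b[0]), max(a[1], b[1])
    xmax, ymax = min(a[2], b[2]), min(a[3], b[3])
    if xmin >= xmax or ymin >= ymax:
        return None
    return (xmin, ymin, xmax, ymax)

def planar_area(box):
    """Planar area of a box in squared coordinate units."""
    return (box[2] - box[0]) * (box[3] - box[1])

def compliance_review(partid, codes, q, dtr):
    """Intersect each candidate with q and report the overlap area."""
    results = []
    for code in codes:
        row_key = f"{partid}_{code}"
        row = dtr[row_key]                      # geometry plus raw properties
        resgeo = box_intersection(row["shape"], q)
        if resgeo is None:
            continue                            # candidate does not overlap q
        results.append({
            "row_key": row_key,
            "planar_area": planar_area(resgeo),
            "properties": row["propty"],
        })
    return results
```

A call such as `compliance_review("000", ["0111", "0112"], q, dtr)` returns one item per overlapping parcel, which the client could then aggregate by land-use class.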

4. Experimental Evaluation

4.1. Measurement Metrics

4.2. Experimental Setup

4.3. Experimental Results

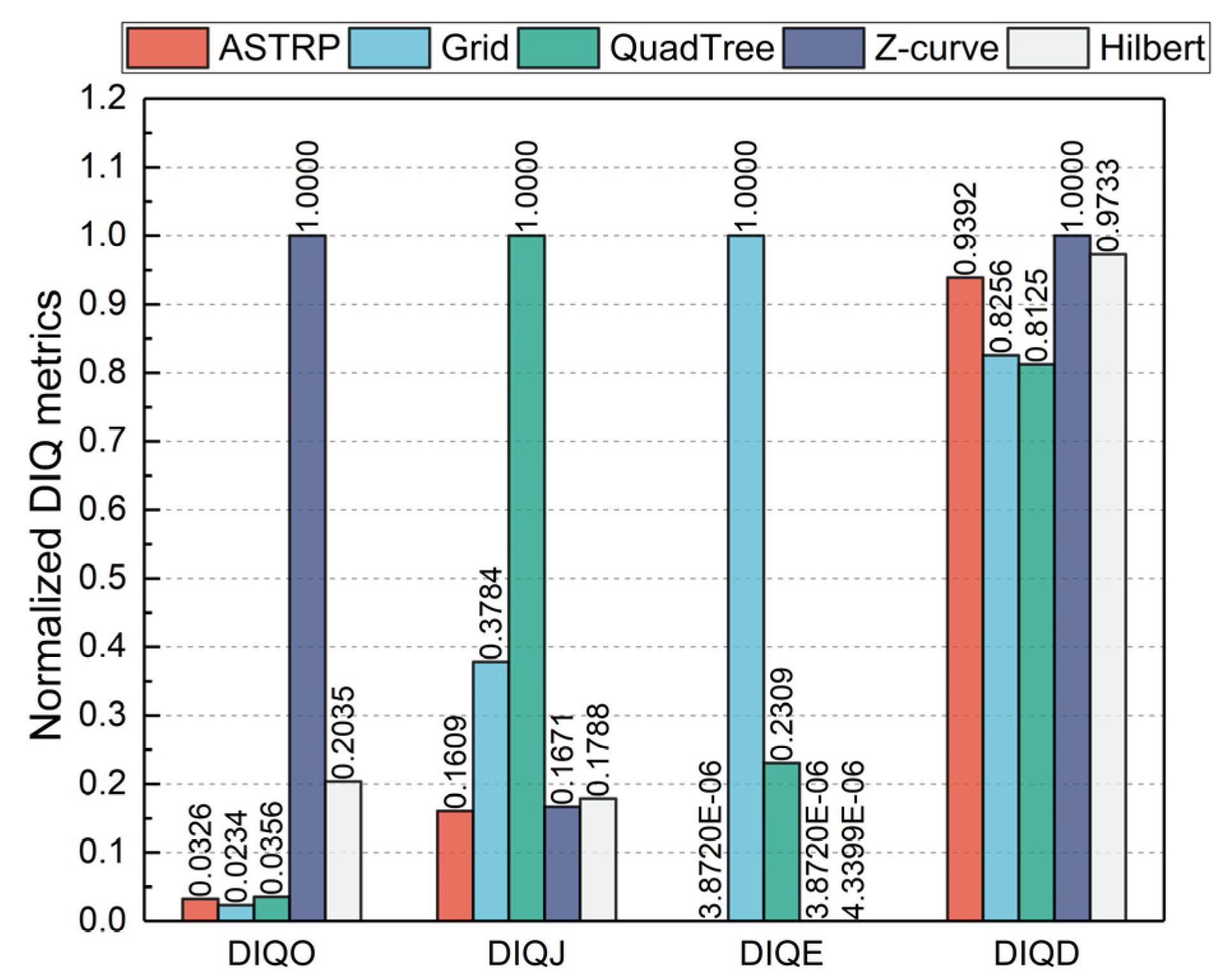

4.3.1. Distributed Spatial Index Quality Evaluation

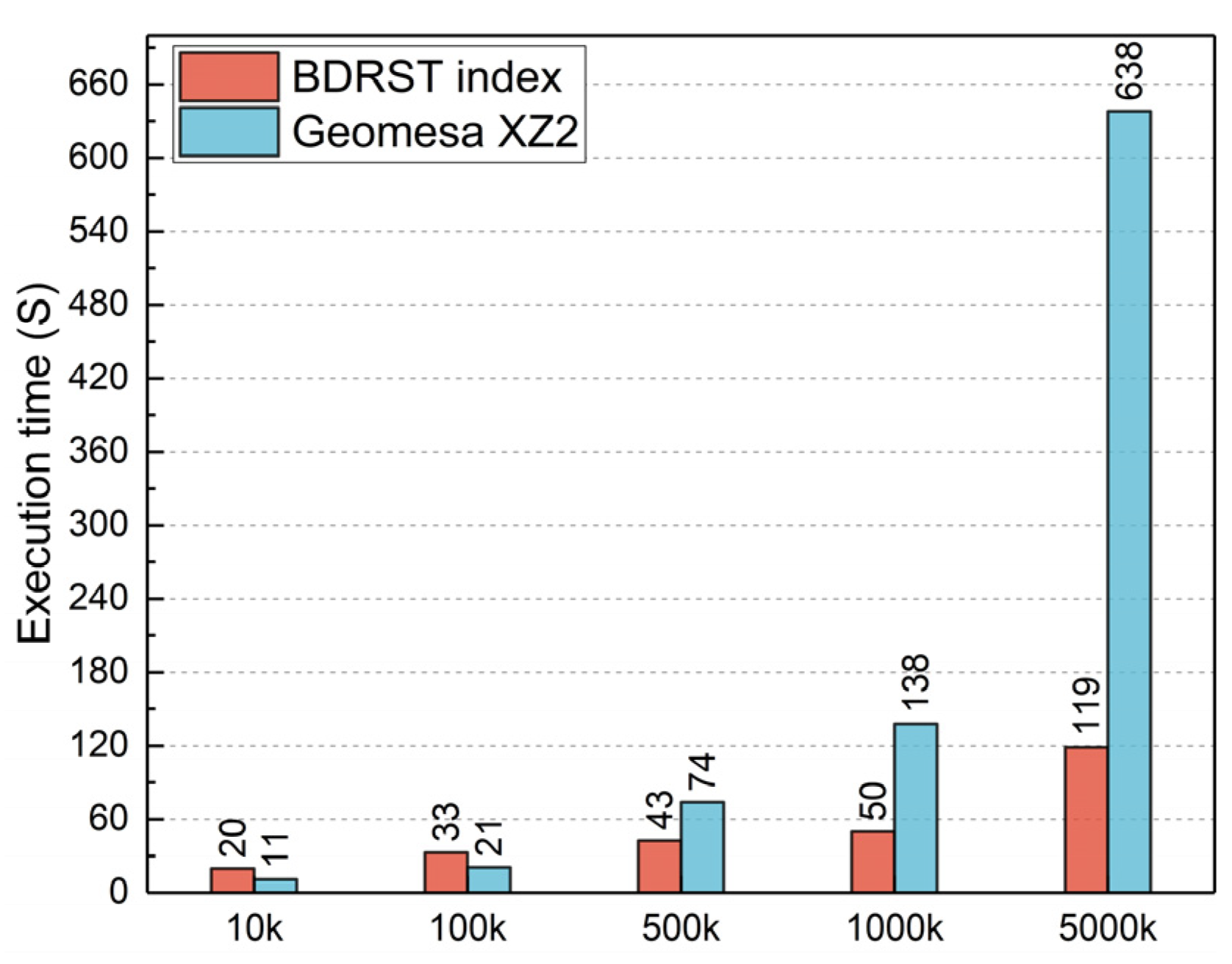

4.3.2. Index-Building Efficiency

4.3.3. Intersection-Range-Queries Performance

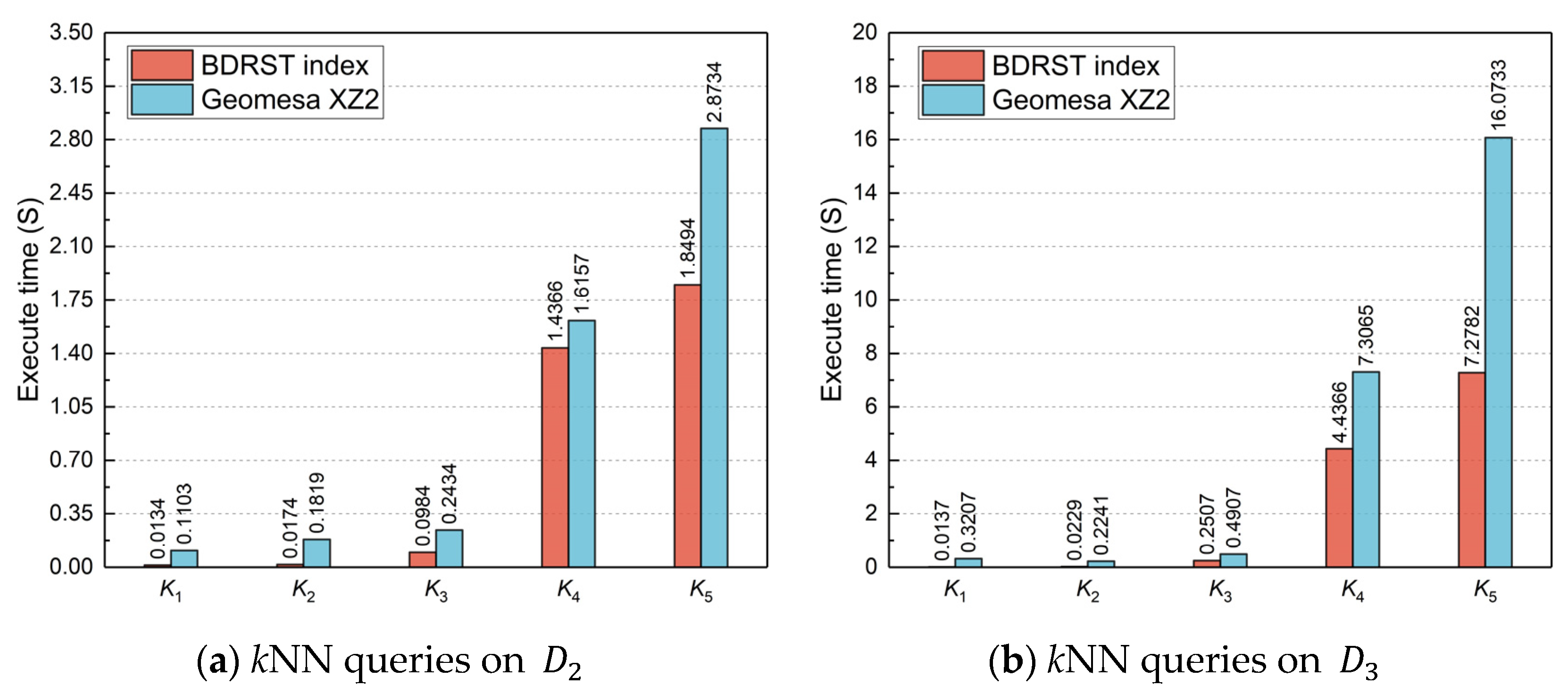

4.3.4. kNN-Queries Performance

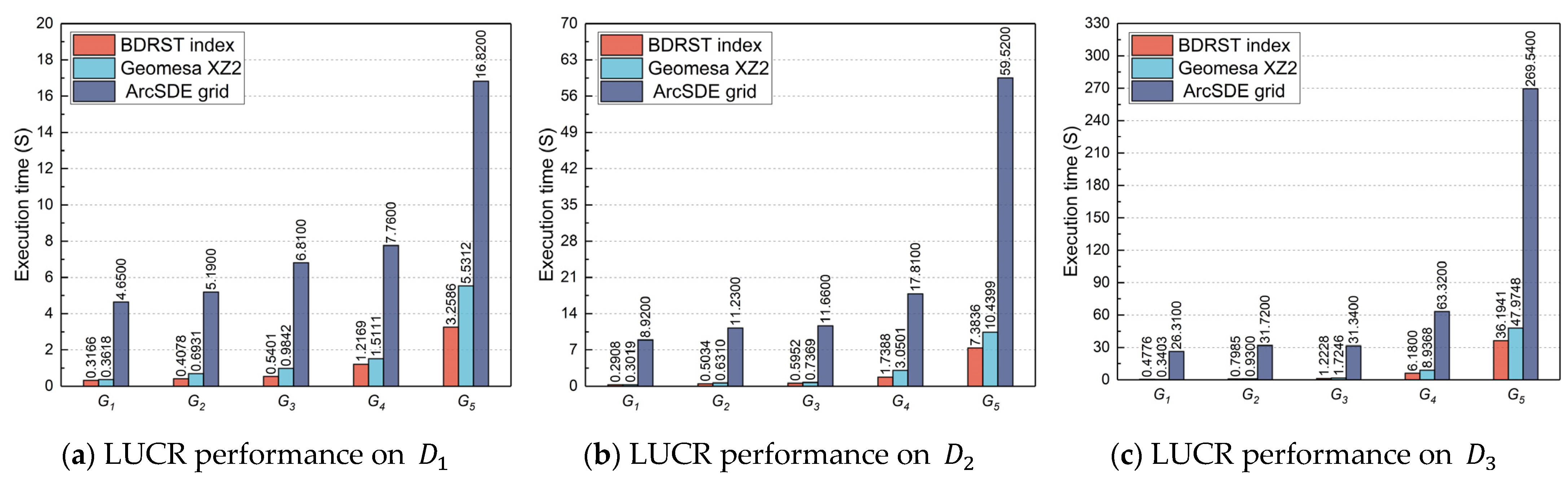

4.3.5. Application Performance

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Type of Rules | Rules of Thumb | Our Measures |
|---|---|---|
| HBase table schema | Minimum and short column families | Column families: “geo” and “prop” |
| | Minimum rows and column sizes | Tall-narrow table for slim rows |
| | Short and fixed-length row keys | Row keys of two fixed-length parts |
| | Evenly distributed rows | Spatial encoding based on R*-tree |
| | Minimum number of versions | One version for static SVD |
| | Bytes-based storage in cells | Serialized BDRST index |
| SVD storage schema | Data locality and index locality | Each region stores a serialized R*-tree and an SVD partition |
| | Minimum loading time of indices | Balanced, small, distributed R*-trees |
| | High spatial index quality | ASTRP strategy for BDRST index |

| Row Key | Row Type | Column Family “geo” | Column Family “prop” |
|---|---|---|---|
| total_info | GMR | 000_0000, 001_0000, …, _0000 | extent: …; size: 8453243; wkid: 4490 |
| 000_0000 | LMR | rtree: byte array of serialized local R*-tree | extent |
| 000_0111 | DTR | shape: byte array of spatial geometry (EsriShape) | propty: byte array of properties (JSON) |
| 000_0112 | DTR | shape: byte array of spatial geometry (EsriShape) | propty: byte array of properties (JSON) |
| … | … | … | … |
| _0000 | LMR | rtree: byte array of serialized local R*-tree | extent |
| … | … | … | … |
| _0565 | DTR | shape: byte array of spatial geometry (EsriShape) | propty: byte array of properties (JSON) |
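The row keys in the schema follow a "partid_code" pattern made of two fixed-length parts. The Python sketch below shows one way such keys could be built and classified. The widths (three digits for the partition, four for the object code) and the rule that code 0000 marks the LMR row are inferred from the example keys in the table, not stated by the source, and the helper names `make_row_key` and `row_type` are hypothetical.

```python
PART_WIDTH = 3          # assumed from example keys such as "000_0111"
CODE_WIDTH = 4          # assumed from example keys such as "000_0111"
GMR_KEY = "total_info"  # row key of the GMR row in the schema table

def make_row_key(partid: int, code: int) -> str:
    """Build a zero-padded, fixed-length row key 'partid_code'."""
    if not (0 <= partid < 10 ** PART_WIDTH and 0 <= code < 10 ** CODE_WIDTH):
        raise ValueError("partid or code does not fit the fixed key width")
    return f"{partid:0{PART_WIDTH}d}_{code:0{CODE_WIDTH}d}"

def row_type(row_key: str) -> str:
    """Classify a row key as GMR, LMR, or DTR."""
    if row_key == GMR_KEY:
        return "GMR"
    _, code = row_key.split("_")
    # Assumption: object code 0 is reserved for the region's serialized R*-tree.
    return "LMR" if int(code) == 0 else "DTR"
```

With zero padding, lexicographic ordering of the keys places each region's LMR row directly before its DTR rows, so an index and the partition it covers stay adjacent in the sorted key space.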

| Datasets | Contents | Number of Polygons (K) | Number of Vertices (M) | Number of Attributes | Data Size (GB) |
|---|---|---|---|---|---|
| | Ecological conservation redlines | 56.06 | 61.57 | 16 | 1.66 |
| | Permanent basic farmlands | 1950.19 | 199.40 | 24 | 6.55 |
| | Land patches of land-use planning data | 8132.81 | 758.40 | 21 | 23.81 |
| | Land patches of land-use data | 8365.48 | 734.24 | 23 | 24.75 |

| Query Geometry | Spatial Extent (°) | Number of Results |
|---|---|---|
| | 0.1 × 0.1 | 4796 |
| | 0.5 × 0.5 | 32,520 |
| | 1.0 × 1.0 | 130,833 |
| | 2.0 × 2.0 | 666,153 |
| | 3.0 × 3.0 | 1,789,596 |
| | 4.0 × 4.0 | 4,293,851 |

| Center Point | k | Values of k | Max Searching Radius (°) |
|---|---|---|---|
| 101.419725° E, 25.623202° N | | 10 | 0.001 |
| | | 100 | 0.01 |
| | | 10,000 | 0.1 |
| | | 1,000,000 | 1 |
| | | 2,000,000 | 1.5 |

| Input Polygons | Spatial Extent (°) | Number of Vertices | Return Items | | |
|---|---|---|---|---|---|
| | 0.01 × 0.01 | 8 | 2 | 5 | 13 |
| | 0.03 × 0.03 | 15 | 0 | 105 | 62 |
| | 0.1 × 0.1 | 30 | 2 | 117 | 627 |
| | 0.3 × 0.3 | 80 | 227 | 2717 | 14,899 |
| | 1.0 × 1.0 | 150 | 534 | 26,426 | 115,820 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, L.; Jin, B. Improving NoSQL Spatial-Query Processing with Server-Side In-Memory R*-Tree Indexes for Spatial Vector Data. Sustainability 2023, 15, 2442. https://doi.org/10.3390/su15032442
