Abstract

By 11 March 2020, the term “COVID-19” had officially entered everyday life across most of the world. Every level of education suddenly faced new changes and new challenges: emergency remote teaching became widespread, and educational institutions adopted new methodologies to deliver classes and courses. In this paper, we focus on the remote learning experience of engineering students enrolled at the Politecnico di Milano. Participants were recruited from all engineering courses, from the first to the fifth year, and were asked to complete a multidimensional survey. The survey featured 66 items on the participants’ perceptions of the challenges of emergency remote teaching compared with pre-COVID-19 in-person teaching. The questionnaire addressed six dimensions: the organization of emergency remote teaching, subjective well-being, metacognition, self-efficacy, identity, and socio-demographic information. In this paper, we describe the entire survey and discuss a preliminary analysis. Using Cronbach’s alpha, a confirmatory factor analysis, and the t-test, we performed a more in-depth analysis of the outcomes for metacognition and self-efficacy. The data analysis suggested a small, unexpected change in metacognitive strategies: in some respects, the students improved their learning strategies. Other answers highlighted the students’ appreciation of the courses’ organization and the lack of relationships with their peers and teachers.

1. Introduction

On 30 January 2020, the Director-General of the World Health Organization (WHO) declared the novel coronavirus outbreak a public health emergency of international concern (PHEIC), the WHO’s highest level of alarm [1]. The first strategy used to contain the virus was a strict lockdown of most activities, including work and education. The first lockdown was imposed in the Chinese city of Wuhan, the pandemic’s first epicenter [2]. In Italy, the first lockdown was imposed in some areas of the north on 22 February 2020, and from that date, daily life in Italy changed profoundly. On 11 March 2020, the Director-General of the WHO declared COVID-19 a pandemic. Remote working and remote instruction at every level suddenly became essential. The COVID-19 pandemic forced universities around the world to change their teaching methodologies and move their educational activities onto online platforms [3,4]. Not all universities were prepared for such a transition, and their online teaching–learning processes evolved gradually. Many researchers started analyzing the impact of COVID-19 on education systems, and many studies in different countries have investigated the effects of COVID-19-related university closures on student perceptions [5,6,7,8]. These studies mostly investigated students’ views on remote teaching during the pandemic using satisfaction surveys [3,4,9,10]. Some works [11] found that students believed that the learning experience was better in physical classrooms than through distance learning; in particular, students noted the lack of peer interaction [9]. However, findings from other studies showed that students perceived remote teaching as helpful in allowing them to focus on their studies during the pandemic [10]. 
Many of these early studies, involving more than 50 countries and referring to the first months of the pandemic, were reviewed in [12], which highlighted particular features concerning their design and number of participants. Most of them took a descriptive approach, and only one in four of the reviewed studies involved more than 400 participants. In this scenario, our work is distinguished by the large number of students involved and by the use of inferential statistics. In response to the need for effective emergency remote teaching–learning strategies, universities worldwide started to adopt educational platforms and video-conferencing software and devices. In February 2020, the Politecnico di Milano also introduced a series of focused and systemic actions to support the transition to fully online teaching and to ensure the continuity of the activities previously carried out in the classroom. First, the various teaching situations, which differed according to the courses’ features and the teachers’ attitudes, were mapped, and possible alternatives were suggested for each of them. In addition to technological support, methodological assistance was also provided to help teachers design their online teaching and face the difficulties of the new context.

The Metid Center at the Politecnico di Milano offered webinars on the following topics: activating the virtual classroom, supporting student motivation, managing groups, reviewing papers, and online assessment strategies. Continuous assistance was provided to monitor users’ needs and respond appropriately, and training seminars, small-group discussions, and ad hoc consultancy were designed. From September 2021, a new approach became possible, known as the extended classroom, in which some students attended online and others attended in person. This setting required classrooms with audio–video systems integrated with the virtual rooms so that all students (both those present in person and those attending from home) could follow the classes effectively. The classroom layouts and the hardware and software setups were chosen on the basis of the Pedagogy–Space–Technology (PST) framework proposed by Radcliffe et al. [13], which emphasizes the connection between pedagogical approaches, spaces, and technological tools. Over the two academic years 2019–2020 and 2020–2021, more than 70 webinars were offered to the teachers and students of the Politecnico di Milano, and more than 7500 stakeholders participated.

The literature suggests that the digital learning environment can support students’ learning styles, which strongly depend on self-regulated study strategies, metacognitive strategies, and motivation [14,15]. Many studies have shown that students who use effective metacognitive strategies learn more easily and effectively and have higher motivation and more self-confidence. Furthermore, some studies have highlighted that the teacher plays a central role in the whole process of self-regulated learning. One study [16], in particular, revealed that metacognitive strategies (i.e., planning, monitoring, and regulating) are predictors of students’ learning performance: students with metacognitive abilities enhanced their learning performance during the online learning imposed by the COVID-19 pandemic.

In this context, we conducted a survey in which we asked about the effects of these new strategies and the didactic organization. More than 3000 students enrolled in engineering courses at the Politecnico di Milano completed a survey with 66 questions. We asked the students about their perceptions of online instruction, their psychological well-being, their learning strategies, their job perspectives, and their attitudes towards being an engineer.

2. Materials and Methods

The questionnaire was administered in July 2021, at the end of the second semester, to students enrolled in engineering courses at the Politecnico di Milano, and it referred to the teaching activities held in the 2019–2020 and 2020–2021 academic years. Students enrolled in any engineering course from the first to the fifth year were asked to fill in the questionnaire. The engineering courses at the Politecnico di Milano can be grouped into three large groups: Ingegneria Civile Ambientale e Territoriale (Civil and Environmental Engineering), Ingegneria Industriale e dell’Informazione (Industrial and Information Engineering), and Ingegneria Edile e delle Costruzioni (Building and Construction Engineering). The respondents participated on a voluntary basis, and a total of 3183 students completed the entire survey: 2126 male students and 1057 female students. A total of 2227 students were attending bachelor’s degree courses, and 956 students were attending master’s degree courses. The survey was composed of 66 questions on the perceptions and challenges of online education compared with the situation before COVID-19, divided into six main groups (Figure 1): remote teaching (RT), subjective well-being (SWB), metacognition (MC), self-efficacy (SE), identity (I), and socio-demographic information (SD). Because we could not analyze all topics in the survey in depth, we chose to focus on the items concerning remote teaching, metacognition, and self-efficacy. Metacognitive factors have been shown to regulate cognitive processing and mediate learning; thus, metacognition plays an essential role in effective learning [17]. Similarly, among cognitive–motivational constructs, self-efficacy has proven to be a highly successful predictor of students’ learning [18]. We proposed the following research questions:

Figure 1.

Map of the satisfaction survey.

RQ1: What is university students’ general perception of the emergency remote teaching experience compared with their previous in-person experiences?

RQ2: Could the transition from in-person teaching before the pandemic to remote teaching during the pandemic affect students’ metacognitive strategies? If so, did they become better or worse?

RQ3: Could the transition from in-person teaching before the pandemic to remote teaching during the pandemic affect students’ self-efficacy? If so, did they become better or worse?

Description of the Six Parts of the Survey

In the following section, we describe the six main parts into which the questionnaire was divided.

- Remote teaching (RT): The perceptions of the advantages and the difficulties of remote teaching during the second semester of the 2020–2021 academic year were measured through 14 questions adapted from previous surveys [7,19]. Building on the results of these prior works, the items were grouped into three subgroups focused on the following factors: students’ perceptions of difficulties in the switch from in-person instruction to online learning, including the effectiveness and the organization of the course; the students’ evaluations of their instructors; and the perceived difficulties due to the online learning modality. Answers ranged from 1 (not at all effective or definitely worse) to 5 (completely effective or definitely better) on a Likert scale, as described in the Supplemental Material. Each question was worded so that the answer immediately indicated whether the online experience was better or worse than the in-person one.

- Subjective well-being (SWB): In order to measure subjective well-being, we used an instrument called PANAS (the Positive and Negative Affect Schedule) [20], which is used in psychology research. The PANAS scale consists of a series of 30 adjectives describing positive or negative attitudes towards an item, as used in [7]. The students had to give a rating from 1 (definitely less) to 5 (definitely more) regarding their feelings and their moods towards online teaching compared to in-person activities.

- Metacognition (MC): A total of 15 questions were reserved to investigate the personal cognitive process. By metacognition, we refer to the processes involving the monitoring, control, and regulation of cognition. Students were asked about learning strategies, how they take notes, or how they review material [21]. Each item was presented twice in the same questionnaire, once referring to the period before the pandemic and once referring to the present. The items were written as first-person statements, and the students were asked to rate their agreement or disagreement with each statement on a scale from 1 (totally disagree) to 5 (totally agree) on a Likert scale, as described in the Supplemental Material. The proposed items were adapted from the work in [7]. The 15 items were equally subdivided into 5 groups, each intended to measure one of the following cognitive processes: knowledge networking, knowledge extraction, knowledge practice, knowledge critique, and knowledge monitoring [22].

- Self-efficacy (SE): A total of 10 questions were dedicated to examining self-efficacy. Self-efficacy refers to ‘beliefs in one’s capabilities to organize and execute the courses of action required to produce given attainments’ [23]. Researchers in educational settings consider self-efficacy an important variable in a student’s learning process, affecting their motivation, effort, and learning strategies [24]. As for metacognition, each item was presented twice in the same questionnaire, once referring to the period before the pandemic and once referring to the present. The items were written as first-person statements, and the students were asked to rate their agreement or disagreement with each statement from 1 (totally disagree) to 5 (totally agree) on a Likert scale, as described in the Supplemental Material. The items used in the questions were adapted from the work in [25].

- Identity (I): Concerning identity, we chose a 15-item scale adapted from [26] and [27]. In this survey, we considered five subgroups: the sense of belonging to the engineering community, the recognition of engineering roles in society, intrinsic interest in engineering, identifying as an engineer, and confidence in one’s own skills to be an engineer. As in the previous sections, each item was presented twice in the same questionnaire, once referring to the period before the pandemic and once referring to the present. The items were written as first-person statements, and the students were asked to rate their agreement or disagreement with each statement on a scale from 1 (totally disagree) to 5 (totally agree) on a Likert scale, as described in the Supplemental Material. The items used in the questions were adapted from [28].

- Socio-demographic information (SD): In the survey, the participants were asked their gender, nationality, engineering discipline, the high school they attended before enrolling in engineering courses, and some information about logistics during remote teaching. Seven questions were dedicated to this kind of socio-demographic information.
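The paired design of the metacognition, self-efficacy, and identity sections (each item answered once for the pre-pandemic period and once for the present) can be represented as a pair of scores per item and respondent. The sketch below is a minimal illustration only: the item codes `MC1` to `MC15`, the assignment of items to the five metacognitive factors in consecutive blocks of three, and the choice of the item mean as the factor score are all hypothetical assumptions; the actual item-to-factor mapping is given in the Supplementary Materials.

```python
from statistics import mean

# Hypothetical mapping: five metacognitive factors, three items each,
# assigned in consecutive blocks (the real mapping is in the questionnaire).
FACTORS = {
    "networking": ["MC1", "MC2", "MC3"],
    "extraction": ["MC4", "MC5", "MC6"],
    "practice": ["MC7", "MC8", "MC9"],
    "critique": ["MC10", "MC11", "MC12"],
    "monitoring": ["MC13", "MC14", "MC15"],
}


def factor_scores(answers):
    """Return {factor: (pre_mean, now_mean)} for one respondent.

    `answers` maps each item code to a (pre, now) pair of Likert scores 1-5.
    The factor score is assumed to be the mean of the factor's items.
    """
    scores = {}
    for factor, items in FACTORS.items():
        pre = mean(answers[item][0] for item in items)
        now = mean(answers[item][1] for item in items)
        scores[factor] = (pre, now)
    return scores


# Hypothetical respondent: every item rated 3 before the pandemic and 4 now,
# except MC1, rated 2 before and 5 now.
respondent = {f"MC{k}": (3, 4) for k in range(1, 16)}
respondent["MC1"] = (2, 5)
result = factor_scores(respondent)
print(result["networking"])
```

Keeping the PRE and NOW answers together per item makes the later paired comparison straightforward, since each respondent contributes one difference per factor.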

3. Results

We analyzed the Likert-scale data by assigning scores from 1 to 5 to the possible answers chosen by the students, as described in Supporting Materials Section A. Using the statistical software R (R Foundation for Statistical Computing), we first computed the skewness and kurtosis coefficients for every section to check the asymmetry and peakedness of the distributions, and we then checked normality with the Shapiro–Wilk test. In any case, given the large sample size, the central limit theorem allowed us to assume that the sampling distributions of the means were approximately normal. We first analyzed the frequency distribution of the remote teaching items and then focused on the metacognition and self-efficacy data. We checked the model fit with a confirmatory factor analysis (CFA) and then computed Cronbach’s alpha [29] to verify the internal consistency of the items in the metacognition and self-efficacy sections. Finally, after computing the mean, the median, and the other descriptive statistics, we compared the answers referring to “before pandemic” and “the present” using a paired-comparison t-test. The main features of the sample are shown in Table 1 below.

Table 1.

Main features of the sample.
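The first screening step, computing skewness and kurtosis for each section’s scores, can be sketched in a few lines. This is a minimal, dependency-free illustration using the moment-based (population) definitions; R packages and other software often apply small-sample corrections, so values may differ slightly from those obtained by the authors. The Shapiro–Wilk test is not reimplemented here; it is available as `shapiro.test` in R and as `scipy.stats.shapiro` in Python. The scores below are hypothetical.

```python
from statistics import fmean


def skewness(xs):
    """Moment-based skewness g1 = m3 / m2**1.5 (no small-sample correction)."""
    m = fmean(xs)
    n = len(xs)
    m2 = sum((x - m) ** 2 for x in xs) / n
    m3 = sum((x - m) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5


def excess_kurtosis(xs):
    """Moment-based excess kurtosis g2 = m4 / m2**2 - 3 (0 for a normal curve)."""
    m = fmean(xs)
    n = len(xs)
    m2 = sum((x - m) ** 2 for x in xs) / n
    m4 = sum((x - m) ** 4 for x in xs) / n
    return m4 / m2 ** 2 - 3


# Hypothetical Likert scores (1-5) for one item, not data from the survey.
scores = [1, 2, 2, 3, 3, 3, 4, 4, 5]
print(round(skewness(scores), 3), round(excess_kurtosis(scores), 3))
```

A symmetric distribution such as this one has zero skewness, while its negative excess kurtosis indicates a flatter profile than a normal curve.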

We then analyzed the data in more depth according to the research questions; the results for each section are presented below.

3.1. Remote Teaching (RT)

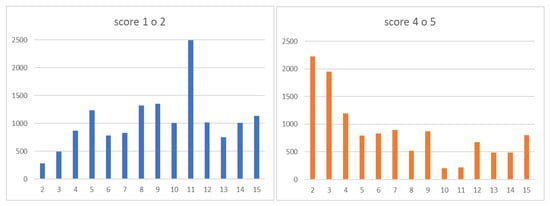

Given the general nature of RQ1, we did not examine this section in detail in this work. We computed the frequency distribution of the scores for the 14 questions and focused on some results. The score distributions for remote teaching are reported in the graphs in Figure 2, grouped into negative and positive scores.

Figure 2.

Score distributions in the RT section, with negative scores in blue and positive scores in orange.

Table 2 shows the mean value, the standard deviation, and the median for questions 2 to 14, as computed in a previous work [30].

Table 2.

Main descriptive statistics for RT.

At first glance, three bars clearly stand out in the two histograms. Question 11 was scored negatively by almost 2500 students, while questions 2 and 3 were both scored positively by about 2000 students. The mean values and medians for questions 2, 3, and 11, as in [30], confirmed these marked differences, while, in general, the overall mean value of the items was just below the neutral value of 3 on the five-point Likert scale used in the questionnaire.

As shown in the Supporting Materials, items 2 and 3 concerned the courses’ organization, and these outcomes show that students appreciated the planning, the setting, and the logistics of the courses provided by the Politecnico di Milano. Item 11 referred to relationships with peers, and these results highlighted a lack of social contact and camaraderie. For completeness, we report the text of questions 2 (Q2), 3 (Q3), and 11 (Q11). Q2: What do you think of the remote teaching provided by your course of study due to the COVID-19 pandemic? Q3: What do you think of the organization of teaching (timetables, exams) adopted by your course of study due to the COVID-19 pandemic? Q11: How has your interaction with your peers changed during the remote teaching experience compared to the face-to-face one?
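The frequency distributions and the descriptive statistics reported for the RT items can be sketched per item as follows. This is a minimal illustration under a stated assumption: scores 1 and 2 are counted as negative, 4 and 5 as positive, and 3 as neutral, which is how we read the grouping in Figure 2 rather than a rule given in the text. The answers are hypothetical.

```python
from collections import Counter
from statistics import mean, median, stdev


def summarize_item(scores):
    """Frequency table and descriptive statistics for one Likert item (1-5).

    Assumption: scores 1-2 count as negative, 4-5 as positive, and 3 as
    neutral (neutral answers are reported separately).
    """
    counts = Counter(scores)
    return {
        "frequencies": {k: counts.get(k, 0) for k in range(1, 6)},
        "negative": counts[1] + counts[2],
        "neutral": counts[3],
        "positive": counts[4] + counts[5],
        "mean": mean(scores),
        "sd": stdev(scores),  # sample standard deviation (n - 1 denominator)
        "median": median(scores),
    }


# Hypothetical answers to a single item, not survey data.
answers = [1, 1, 2, 2, 2, 3, 4, 5]
summary = summarize_item(answers)
print(summary["negative"], summary["neutral"], summary["positive"], summary["median"])
```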

3.2. Metacognition (MC)

First, we computed the descriptive statistics. Then, following Kline [31], we used a confirmatory factor analysis to check the fit of the five-factor model described previously [22] by calculating the root-mean-square error of approximation (RMSEA) [32] and the Tucker–Lewis index (TLI) [33]. The values of TLI (0.911 and 0.909) were greater than 0.90 (acceptable fit), and the RMSEA coefficients (0.076 and 0.077) were smaller than 0.08 (reasonable approximate fit), so the fit was confirmed [34]. The complete data are available in Supporting Materials. Then, we tested the internal consistency of each of the five factors by computing Cronbach’s alpha statistics [35].
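For readers less familiar with the two fit indices, the sketch below computes them from the chi-square statistics of the fitted model and of the baseline (independence) model, using common textbook formulas, and applies the cut-offs quoted above. It is a minimal illustration, not the authors’ R script (Section B): the chi-square values are hypothetical placeholders, and SEM software such as R’s lavaan may use N rather than N − 1 in the RMSEA denominator or report robust variants.

```python
import math


def rmsea(chi2, df, n):
    """Root-mean-square error of approximation (N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def tli(chi2_model, df_model, chi2_base, df_base):
    """Tucker-Lewis index comparing the fitted model with the baseline model."""
    ratio_base = chi2_base / df_base
    ratio_model = chi2_model / df_model
    return (ratio_base - ratio_model) / (ratio_base - 1.0)


def acceptable_fit(rmsea_value, tli_value):
    """Cut-offs used in the text: TLI > 0.90 and RMSEA < 0.08."""
    return tli_value > 0.90 and rmsea_value < 0.08


# Hypothetical chi-square values (not the statistics of this survey).
# df = 80 matches a 15-item, five-factor model with correlated factors
# (120 moments - 40 free parameters); the baseline model has df = 105.
r = rmsea(chi2=1500.0, df=80, n=3183)
t = tli(chi2_model=1500.0, df_model=80, chi2_base=20000.0, df_base=105)
print(round(r, 3), round(t, 3), acceptable_fit(r, t))
```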

Despite the small number of items per factor, the reliability analysis reported in Table 3 supported the five-factor model [36,37]. The complete data, including the item–rest correlations, are available in Supporting Materials. The following paragraphs discuss the descriptive statistics and the paired t-test.

Table 3.

Summary of the reliability analysis for each subscale.

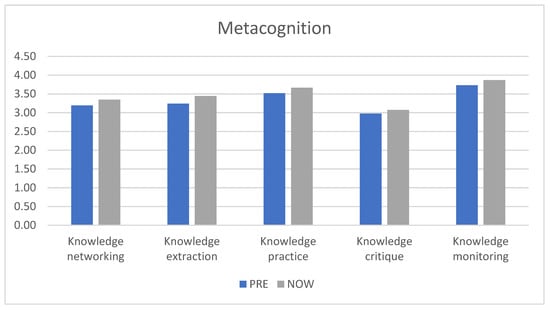
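Cronbach’s alpha, used above to check the internal consistency of each subscale, compares the sum of the item variances with the variance of the total score: alpha = k/(k − 1) · (1 − Σ item variances / variance of the total). The stdlib sketch below assumes complete responses (no missing answers) and is not the R code the authors used, which is reported in Section C; the item scores are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item-score columns.

    `items` holds k lists, one per item, each with the scores of the same
    n respondents in the same order.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    Population variances are used throughout; the ratio is unaffected as
    long as the same convention is applied to items and totals.
    """
    k = len(items)
    totals = [sum(row) for row in zip(*items)]  # total score per respondent
    item_var = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_var / pvariance(totals))


# Hypothetical three-item factor answered by five respondents.
factor_items = [
    [4, 3, 5, 2, 4],
    [4, 2, 5, 3, 4],
    [5, 3, 4, 2, 4],
]
alpha = cronbach_alpha(factor_items)
print(round(alpha, 3))
```

With only three items per factor, alpha tends to be lower than for longer scales, which is why the text qualifies the reliability result by the small number of items.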

Concerning the raw scores, only one mean value was below the neutral score (3); all of the other mean values, for both the in-person period and the emergency period, were above it. Moreover, when we examined each factor and compared the mean values from before the pandemic (PRE) and during the pandemic (NOW), we noticed a small increase for every factor. To check the statistical significance of this increase, we used the paired-comparison Student’s t-test, whose conditions were guaranteed in this case by the central limit theorem. Using Excel for the statistical analysis, we verified that this small increase was statistically significant (p < 0.01) for each of the five factors investigated, as reported in Table 4. To assess whether the increase was also large enough to be practically meaningful, we computed the effect size (Cohen’s d) [38], obtaining values from d = 0.14 to d = 0.25. Cohen [39] suggested that d = 0.20 indicates a small effect size and d = 0.50 a medium effect size for Student’s t-test. The weight of an effect size must, however, be interpreted in the context in which it was computed. For example, a sample correlation coefficient of 0.94 would be considered very small in disciplines such as physics or chemistry but very high in a psychological framework [38].

Table 4.

Descriptive statistics and t-test.
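The paired comparison between PRE and NOW scores can be sketched as a t-test on the per-respondent differences. This is a minimal illustration, not the authors’ Excel or R procedure: the p-value uses the normal approximation to the t distribution (adequate only for samples as large as this one), and the effect size shown is d_z (mean difference divided by the standard deviation of the differences), one common paired-sample variant of Cohen’s d; the text does not state which variant was used. The data are hypothetical.

```python
import math
from statistics import fmean, stdev


def paired_t_test(pre, now):
    """Paired-comparison t-test on the NOW - PRE differences.

    Returns (mean difference, t statistic, degrees of freedom,
    two-sided p-value, Cohen's d_z). The p-value uses the normal
    approximation to the t distribution, so it is valid for large n only.
    """
    diffs = [b - a for a, b in zip(pre, now)]
    n = len(diffs)
    mean_diff = fmean(diffs)
    sd_diff = stdev(diffs)
    t = mean_diff / (sd_diff / math.sqrt(n))
    p_two_sided = math.erfc(abs(t) / math.sqrt(2))  # P(|Z| > |t|)
    d_z = mean_diff / sd_diff  # effect size for paired data
    return mean_diff, t, n - 1, p_two_sided, d_z


# Hypothetical paired factor scores for 400 respondents (not survey data).
pre = [3, 4, 2, 3, 4] * 80
now = [p + d for p, d in zip(pre, [0, 1, 0, -1, 1] * 80)]
mean_diff, t_stat, df, p_value, d_z = paired_t_test(pre, now)
print(f"mean diff = {mean_diff:.2f}, t({df}) = {t_stat:.2f}, p = {p_value:.2g}, d_z = {d_z:.2f}")
```

Because t = d_z · √n, a very large sample can make a small effect highly significant, which is why the text reports the effect size alongside the p-value.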

3.3. Self-Efficacy (SE)

First, we used an exploratory factor analysis to check that self-efficacy could be treated as a single factor, and we then verified its internal consistency by computing Cronbach’s alpha. Table 5 shows Cronbach’s alpha, the mean value, and the standard deviation. The exploratory factor analysis, using Kaiser’s criterion [40], indicated that the 10 self-efficacy items measured a single dimension. The tables concerning these results are reported in Section D, while those concerning Cronbach’s alpha statistics can be found in Supporting Materials.

Table 5.

Cronbach’s alpha and main descriptive statistics for SE.
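Kaiser’s criterion retains as many factors as there are eigenvalues of the item correlation matrix greater than 1. The sketch below is a dependency-free illustration that uses cyclic Jacobi rotations to obtain the eigenvalues, applied to a hypothetical 10-item correlation matrix in which every pair of items correlates 0.4. It is not the authors’ R script (Section D); in practice, a library routine such as R’s `eigen` or NumPy’s `numpy.linalg.eigvalsh` would be used.

```python
import math


def symmetric_eigenvalues(matrix, tol=1e-12, max_sweeps=100):
    """Eigenvalues of a real symmetric matrix via cyclic Jacobi rotations."""
    a = [list(map(float, row)) for row in matrix]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J (update columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                for k in range(n):  # A <- J^T A (update rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)


def kaiser_factor_count(corr):
    """Kaiser's criterion: number of eigenvalues greater than 1."""
    return sum(1 for ev in symmetric_eigenvalues(corr) if ev > 1.0)


# Hypothetical 10-item correlation matrix: every pair of items correlates 0.4.
k, rho = 10, 0.4
corr = [[1.0 if i == j else rho for j in range(k)] for i in range(k)]
eigs = symmetric_eigenvalues(corr)
print([round(e, 3) for e in eigs], kaiser_factor_count(corr))
```

For such an equicorrelated matrix, one eigenvalue equals 1 + 9 × 0.4 = 4.6 and the other nine equal 0.6, so the criterion retains a single factor, the pattern the text reports for the self-efficacy items.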

Concerning the raw scores, the mean value for the in-person period (PRE in Table 4), 3.20, was greater than the neutral value (3). For the pandemic period (NOW in Table 4), we observed a small decrease to a mean of 3.15. To check the statistical significance of this decrease, we used the paired-comparison Student’s t-test, whose conditions were again guaranteed by the central limit theorem. Using statistical software, we found that this small decrease was statistically significant (p < 0.01). To assess whether the decrease was also large enough to be practically meaningful, we computed the effect size with the software and obtained r = 0.03. In the literature, Cohen [29] suggested that r = 0.10 indicates a small effect size, r = 0.30 a medium effect size, and r = 0.50 a large effect size; an r-value smaller than 0.10, as in our case, is negligible.
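An effect size r can be derived from a t statistic and its degrees of freedom with the common conversion r = √(t² / (t² + df)) and then labeled with Cohen’s benchmarks. The text does not state how its software obtained r, so the conversion below is an assumption, and the t value used is hypothetical.

```python
import math


def t_to_r(t, df):
    """Convert a t statistic to the effect size r = sqrt(t^2 / (t^2 + df))."""
    return math.sqrt(t * t / (t * t + df))


def cohen_label_r(r):
    """Cohen's conventional benchmarks for r: 0.10 small, 0.30 medium, 0.50 large."""
    r = abs(r)
    if r < 0.10:
        return "negligible"
    if r < 0.30:
        return "small"
    if r < 0.50:
        return "medium"
    return "large"


# Hypothetical example: t = -2.0 with df = 3182 (3183 paired responses).
r = t_to_r(-2.0, 3182)
print(round(r, 4), cohen_label_r(r))
```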

4. Discussion and Conclusions

A large number of students were surveyed about their perceptions of their online learning experience. They clearly appreciated how the Politecnico di Milano organized the mandatory online courses. It should be noted that this survey referred to the period of the second lockdown. Even if this experience can be called “emergency remote teaching” [41], we also have to recognize that the students had time to adapt to these new challenges. This consideration, together with the readiness and effectiveness of the Politecnico di Milano in introducing many important actions, as described in the Introduction, could explain this unexpected outcome. The negative scores in the RT section underlined the students’ difficulties with relationships: students complained about their interactions with classmates during remote teaching and indicated that these relationships clearly worsened during the lockdown.

We can also infer that students perceived an improvement in their effective learning strategies during the lockdown with respect to the period before the pandemic; a possible conclusion is that the students overcame the difficulties of emergency remote teaching by improving their cognitive processes. Each of the five factors describing the metacognitive processes showed an increase in score (see Figure 3). Moreover, these results may be closely linked to the effectiveness of the actions of the Politecnico di Milano described in the Introduction. A recent work [14] highlighted the importance of having metacognitive support available in the digital environment.

Figure 3.

Means of metacognition factors concerning the pre-pandemic (PRE) period and the present (NOW) period.

However, these findings may contrast with the common-sense perception that COVID-19 has negatively impacted student learning strategies [42]. In fact, previous studies have revealed that the COVID-19 pandemic can have many psychological effects on college students, which can be expressed as anxiety, fear, and worry, among others [43,44,45], and this stress may lead to negative effects on the learning process [46].

Our outcomes also seem to contrast with the results of a similar survey of students enrolled in a physics faculty in Italy [7]. Finally, they also contrast with the self-efficacy results, where the effect, although negligible, went in the opposite direction: a small decrease. Fortunately, we can infer that the online learning experience did not have a meaningful negative influence on students’ beliefs in their capabilities to organize and execute the courses of action required to produce given attainments [17].

It would be worthwhile to examine in greater depth the improvements in metacognitive skills [47] and their dependence on environmental variables, such as remote teaching in our case. A next step toward a deeper understanding of the perceptions of this large group of engineering students could be to refine the factor analysis to check the three dimensions of the RT section. In this work, we have proposed a preliminary estimate of the opinions of the students of the Politecnico di Milano without discussing other predictors; for example, a mixed-method enquiry [48] indicated that female students appeared to be at higher risk of negative mental health consequences. Our research could therefore be extended by investigating the influence of other factors, such as gender or the year of study. Another important extension would be to collect quantitative evidence of the metacognitive improvement, e.g., by analyzing the grades students obtained in subsequent examinations. GPA (grade point average) is one instrument that could be used to measure academic performance and strengthen our outcomes.

Confirming that students’ perceptions of their metacognitive processes improved during emergency remote teaching would be an important result to explore in more detail. The near future will likely involve extensive use of information and communication technology in teaching–learning processes (the pedagogical concept of technology-mediated learning), and, as described in the Introduction, metacognition and self-efficacy appear to be crucial in the educational system, now and in the future.

In [49], it was reported that the Internet, big data, artificial intelligence, 5G, and cloud-based platforms, among other technologies, will help society create a sustainable future in education. However, the authors also underlined that infrastructure alone is not enough for an effective teaching–learning process: it is necessary to shift from traditional, teacher-centered, lecture-based activities towards more student-centered activities, including group activities, discussions, and hands-on learning. In this student-centered scenario, our outcomes could support the transition towards this new teaching paradigm, adding another point in favor of distance learning. It is beyond the scope of this work to weigh all of the factors involved, from logistical to pedagogical, and to decide how distance learning should be used for the next generation, but we can assert that our outcomes weigh in favor of remote teaching for engineering students.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/su15032295/s1, The Questionnaire, Confirmatory Factor Analysis, Cronbach’s alpha statistic, Factor-Analysis.

Author Contributions

Methodology, M.B.; Software, M.B.; Validation, I.T. and S.S.; Formal analysis, M.B.; Investigation, M.Z.; Writing—original draft, R.M.; Writing—review & editing, I.T.; Supervision, M.Z.; Project administration, M.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Datasets are available on request from the authors. The R scripts for the confirmatory factor analysis, Cronbach’s alpha statistics, and inferential tests that were carried out are available in Sections B, C, and D, respectively, while the entire questionnaire is available in Supporting Materials.

Conflicts of Interest

The authors declare no conflict of interest.

Section A

In this section, the questions (items) referring to the remote teaching, metacognition, and self-efficacy sections are reported.

Section B

In this section, the R script and the outcomes referring to the CFA concerning metacognition are reported.

Section C

In this section, the R script and the outcomes referring to the Cronbach’s alpha concerning metacognition and self-efficacy are reported.

Section D

In this section, the R script and the outcomes referring to the FA concerning self-efficacy are reported.

References

- World Health Organization Coronavirus Disease (COVID-19) Pandemic. 2021. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019 (accessed on 29 July 2022).

- Velavan, T.P.; Meyer, C.G. The COVID-19 epidemic. Trop. Med. Int. Health 2020, 25, 278–280. [Google Scholar] [CrossRef] [PubMed]

- Marinoni, G. The Impact of COVID-19 on Higher Education around the World; International Association of Universities: Paris, France, 2020; p. 50. [Google Scholar]

- Ives, B. University students experience the COVID-19 induced shift to remote instruction. Int. J. Educ. Technol. High. Educ. 2021, 18, 59. [Google Scholar] [CrossRef] [PubMed]

- Gamage, K.A. COVID-2019 Impacts on Education Systems and Future of Higher Education; MDPI: Basel, Switzerland, 2022. [Google Scholar] [CrossRef]

- Peimani, N.; Kamalipour, H. Online Education in the Post COVID-19 Era: Students’ Perception and Learning Experience. Educ. Sci. 2021, 11, 633. [Google Scholar] [CrossRef]

- Marzoli, I.; Colantonio, A.; Fazio, C.; Giliberti, M.; di Uccio, U.S.; Testa, I. Effects of emergency remote instruction during the COVID-19 pandemic on university physics students in Italy. Phys. Rev. Phys. Educ. Res. 2021, 17, 020130. [Google Scholar] [CrossRef]

- Bao, W. COVID-19 and online teaching in higher education: A case study of Peking University. Hum. Behav. Emerg. Technol. 2020, 2, 113–115. [Google Scholar] [CrossRef]

- Aguilera-Hermida, A.P. College students’ use and acceptance of emergency online learning due to COVID-19. Int. J. Educ. Res. Open 2020, 1, 100011. [Google Scholar] [CrossRef]

- Mishra, L.; Gupta, T.; Shree, A. Online teaching-learning in higher education during lockdown period of COVID-19 pandemic. Int. J. Educ. Res. Open 2020, 1, 100012. [Google Scholar] [CrossRef] [PubMed]

- Chakraborty, P.; Mittal, P.; Gupta, M.S.; Yadav, S.; Arora, A. Opinion of students on online education during the COVID -19 pandemic. Hum. Behav. Emerg. Technol. 2020, 3, 357–365. [Google Scholar] [CrossRef]

- Bond, M.; Bedenlier, S.; Marín, V.I.; Händel, M. Emergency remote teaching in higher education: Mapping the first global online semester. Int. J. Educ. Technol. High. Educ. 2021, 18, 50.

- Radcliffe, D.; Wilson, H.; Powell, D.; Tibbetts, B. Learning spaces in higher education: Positive outcomes by design. In Proceedings of the Next Generation Learning Spaces 2008 Colloquium, University of Queensland, Brisbane, QLD, Australia, 1–2 October 2009.

- Joia, L.; Lorenzo, M. Zoom in, Zoom out: The Impact of the COVID-19 Pandemic in the Classroom. Sustainability 2021, 13, 2531.

- Klimova, B.; Zamborova, K.; Cierniak-Emerych, A.; Dziuba, S. University Students and Their Ability to Perform Self-Regulated Online Learning Under the COVID-19 Pandemic. Front. Psychol. 2022, 13.

- Anthonysamy, L. The use of metacognitive strategies for undisrupted online learning: Preparing university students in the age of pandemic. Educ. Inf. Technol. 2021, 26, 6881–6899.

- Tsai, Y.-H.; Lin, C.-H.; Hong, J.-C.; Tai, K.-H. The effects of metacognition on online learning interest and continuance to learn with MOOCs. Comput. Educ. 2018, 121, 18–29.

- Zimmerman, B.J. Self-Efficacy: An Essential Motive to Learn. Contemp. Educ. Psychol. 2000, 25, 82–91.

- Petillion, R.J.; McNeil, W.S. Student Experiences of Emergency Remote Teaching: Impacts of Instructor Practice on Student Learning, Engagement, and Well-Being. J. Chem. Educ. 2020, 97, 2486–2493.

- Watson, D.; Clark, L.A.; Tellegen, A. Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Personal. Soc. Psychol. 1988, 54, 1063–1070.

- Pintrich, P.R. The Role of Metacognitive Knowledge in Learning, Teaching, and Assessing. Theory Into Pr. 2002, 41, 219–225.

- Manganelli, S.; Alivernini, F.; Mallia, L.; Biasci, V. The Development and Psychometric Properties of the «Self-Regulated Knowledge Scale—University» (SRKS-U). ECPS Educ. Cult. Psychol. Stud. 2015, 1, 235–254.

- Bandura, A. Self-Efficacy: The Exercise of Control; W H Freeman/Times Books/Henry Holt & Co: New York, NY, USA, 1997; pp. ix, 604.

- Van Dinther, M.; Dochy, F.; Segers, M. Factors affecting students’ self-efficacy in higher education. Educ. Res. Rev. 2011, 6, 95–108.

- Glynn, S.M.; Koballa, T.R. Motivation to learn in college science. In Handbook of College Science Teaching; National Science Teachers Association Press: Arlington, VA, USA, 2006; pp. 25–32.

- Testa, I.; Picione, R.D.L.; di Uccio, U.S. Patterns of Italian high school and university students’ attitudes towards physics: An analysis based on semiotic-cultural perspective. Eur. J. Psychol. Educ. 2021, 37, 785–806.

- Choe, N.H.; Borrego, M. Prediction of Engineering Identity in Engineering Graduate Students. IEEE Trans. Educ. 2019, 62, 181–187.

- Morelock, J.R. A systematic literature review of engineering identity: Definitions, factors, and interventions affecting development, and means of measurement. Eur. J. Eng. Educ. 2017, 42, 1240–1262.

- Cronbach, L.J. Coefficient alpha and the internal structure of tests. Psychometrika 1951, 16, 297–334.

- Mazzola, R.; Bozzi, M.; Testa, I.; Brambilla, F.; Zani, M. Perception of Advantages/Difficulties of Remote Teaching during COVID-19 Pandemic: Results from a Survey with 3000 Italian Engineering Students. Edulearn 2022, 2440–2445.

- Kline, R.B. Principles and Practice of Structural Equation Modeling, 4th ed.; The Guilford Press: New York, NY, USA, 2018.

- Tucker, L.R.; Lewis, C. A reliability coefficient for maximum likelihood factor analysis. Psychometrika 1973, 38, 1–10.

- Steiger, J.H. Structural Model Evaluation and Modification: An Interval Estimation Approach. Multivar. Behav. Res. 1990, 25, 173–180.

- Bandalos, D.L. Measurement Theory and Applications for the Social Sciences; Guilford Publications: New York, NY, USA, 2018.

- Taber, K.S. The Use of Cronbach’s Alpha When Developing and Reporting Research Instruments in Science Education. Res. Sci. Educ. 2018, 48, 1273–1296.

- Field, A.; Miles, J.; Field, Z. Discovering Statistics Using R; SAGE Publications Ltd: Thousand Oaks, CA, USA, 2012.

- Gardner, P.L. Measuring attitudes to science: Unidimensionality and internal consistency revisited. Res. Sci. Educ. 1995, 25, 283–289.

- Soliani, L. Statistica di Base; PICCIN: Padova, Italy, 2015; pp. 280–285.

- Cohen, J. The statistical power of abnormal-social psychological research: A review. J. Abnorm. Soc. Psychol. 1962, 65, 145–153.

- Taherdoost, H.; Sahibuddin, S.; Jalaliyoon, N.E.D. Exploratory Factor Analysis; Concepts and Theory. Adv. Appl. Pure Math. 2022, 27, 375–382.

- Hodges, C.; Moore, S.; Lockee, B.; Trust, T.; Bond, A. The Difference Between Emergency Remote Teaching and Online Learning; Educause: Boulder, CO, USA, 2020; p. 12.

- Gómez-García, G.; Ramos-Navas-Parejo, M.; de la Cruz-Campos, J.-C.; Rodríguez-Jiménez, C. Impact of COVID-19 on University Students: An Analysis of Its Influence on Psychological and Academic Factors. Int. J. Environ. Res. Public Health 2022, 19, 10433.

- Cao, W.; Fang, Z.; Hou, G.; Han, M.; Xu, X.; Dong, J.; Zheng, J. The psychological impact of the COVID-19 epidemic on college students in China. Psychiatry Res. 2020, 287, 112934.

- Li, H.Y.; Cao, H.; Leung, D.Y.P.; Mak, Y.W. The Psychological Impacts of a COVID-19 Outbreak on College Students in China: A Longitudinal Study. Int. J. Environ. Res. Public Health 2020, 17, 3933.

- Wang, C.; Pan, R.; Wan, X.; Tan, Y.; Xu, L.; Ho, C.S.; Ho, R.C. Immediate Psychological Responses and Associated Factors during the Initial Stage of the 2019 Coronavirus Disease (COVID-19) Epidemic among the General Population in China. Int. J. Environ. Res. Public Health 2020, 17, 1729.

- Sahu, P. Closure of Universities Due to Coronavirus Disease 2019 (COVID-19): Impact on Education and Mental Health of Students and Academic Staff. Cureus 2020, 12, e7541.

- Hartman, H.J. Developing Students’ Metacognitive Knowledge and Skills. In Metacognition in Learning and Instruction: Theory, Research and Practice; Hartman, H.J., Ed.; Springer: Dordrecht, The Netherlands, 2001; pp. 33–68.

- Lischer, S.; Safi, N.; Dickson, C. Remote learning and students’ mental health during the COVID-19 pandemic: A mixed-method enquiry. Prospects 2021, 51, 589–599.

- Zhu, X.; Liu, J. Education in and After COVID-19: Immediate Responses and Long-Term Visions. Postdigital Sci. Educ. 2020, 2, 695–699.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).