Leveraging Systems Thinking, Engagement, and Digital Competencies to Enhance First-Year Architecture Students’ Achievement in Design-Based Learning

Abstract

1. Introduction

1.1. Theoretical Framework

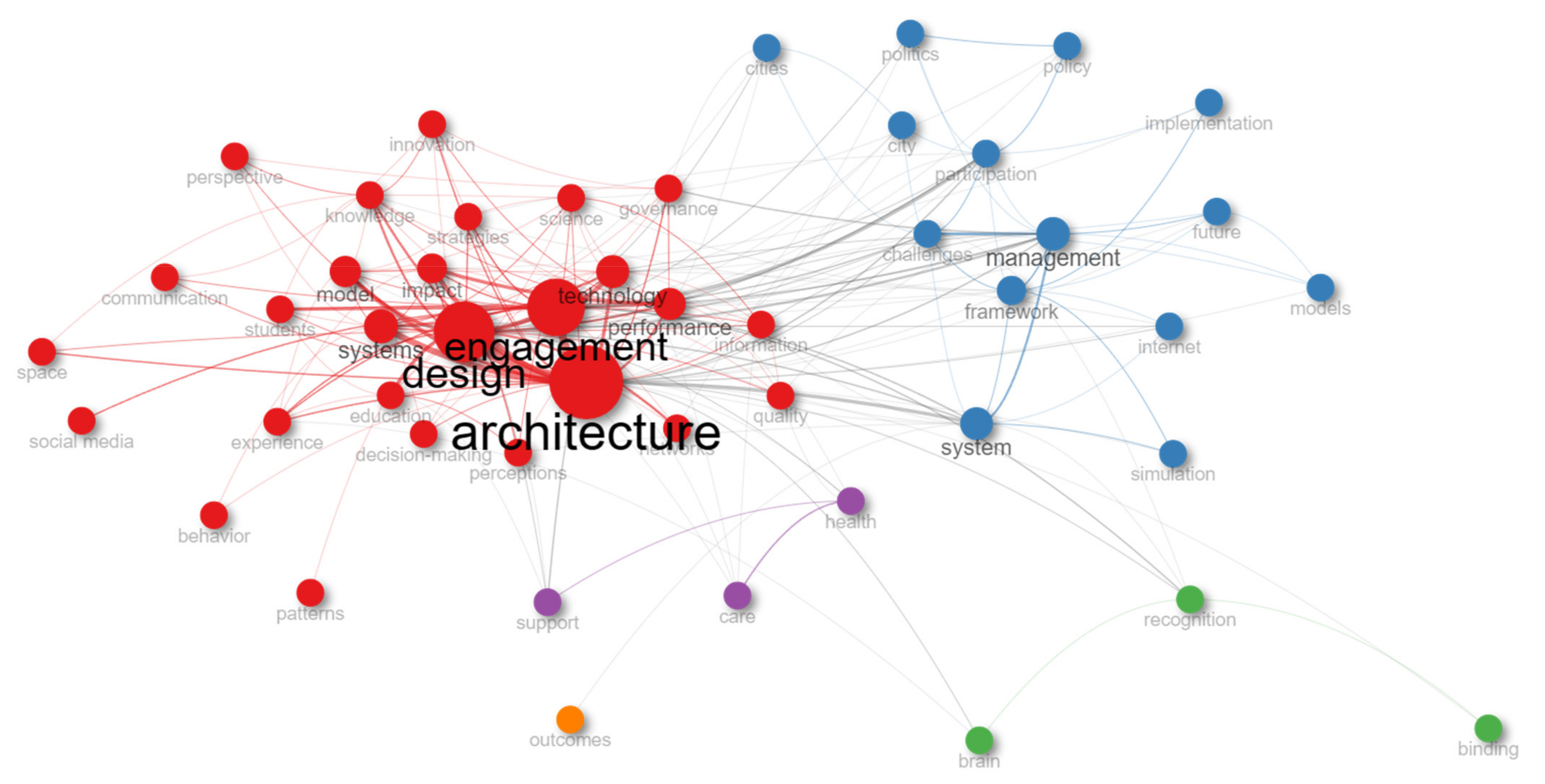

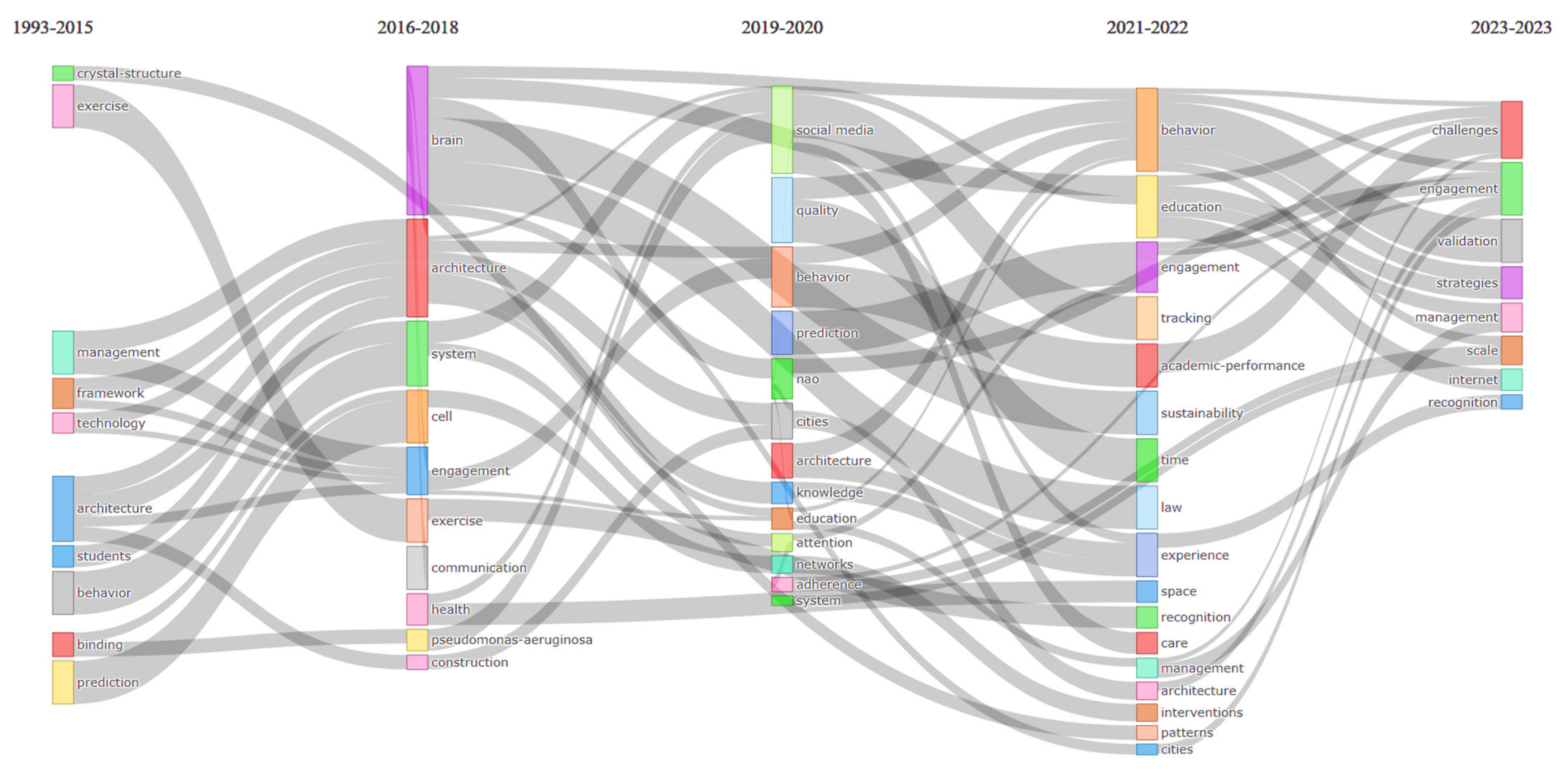

1.1.1. Bibliometric Analysis

1.1.2. Learning Dynamics in Technology-Enhanced Education Ecosystems

1.2. Study Context, Aim, and Research Questions

2. Materials and Methods

2.1. Architecture Education Basic Settings

2.2. Procedure and Sample

2.3. Measures

2.3.1. Systems Thinking

2.3.2. Student Engagement

2.3.3. ICT Self-Concept

2.3.4. Design Thinking

2.4. Data Analysis

2.4.1. Descriptive Statistics and Normality Tests

2.4.2. Validity Tests and Inferential Statistics

3. Results

3.1. Convergent and Discriminant Validity

3.1.1. Systems Thinking Skills

3.1.2. Student Engagement

3.1.3. ICT Self-Concept

3.1.4. Design Thinking

3.2. Descriptive Statistics and Normality Tests

3.3. Do Systems Thinking, Students’ Engagement in Learning, ICT-SC, and Design Thinking Influence the Effect of Different-Sized Cohort Groups on Achievement in a Design Project?

3.3.1. Systems Thinking

3.3.2. Student Engagement in Learning

3.4. Testing Sequential Mediation of Systems Thinking Skills, ICT-SC, and Engagement in Learning on Students’ Achievements in Design Projects
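Section 3.4 names a sequential (serial) mediation chain. The sketch below is a minimal illustration built on several assumptions. It assumes the chain runs from systems thinking (X) through ICT self-concept (M1) and engagement (M2) to the design project grade (Y). It also assumes OLS path estimates with a percentile bootstrap, as in regression-based serial mediation (for example, Model 6 in Hayes' PROCESS macro). Under those assumptions, the serial indirect effect is the product a1 · d21 · b2 of the X → M1, M1 → M2, and M2 → Y paths. The data are synthetic, with a true serial effect of 0.5 × 0.4 × 0.6 = 0.12, and the function names are hypothetical. None of it reproduces the study's estimates or software.

```python
import numpy as np


def ols(y, *predictors):
    """OLS coefficients [intercept, b1, b2, ...] via least squares."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


def serial_indirect(x, m1, m2, y):
    """Serial indirect effect a1 * d21 * b2 for X -> M1 -> M2 -> Y."""
    a1 = ols(m1, x)[1]          # X -> M1
    d21 = ols(m2, x, m1)[2]     # M1 -> M2, controlling for X
    b2 = ols(y, x, m1, m2)[3]   # M2 -> Y, controlling for X and M1
    return a1 * d21 * b2


def percentile_ci(x, m1, m2, y, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the serial indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample cases with replacement
        draws[i] = serial_indirect(x[idx], m1[idx], m2[idx], y[idx])
    low, high = np.quantile(draws, [alpha / 2, 1 - alpha / 2])
    return float(low), float(high)


# Synthetic data (not study data): true serial indirect effect = 0.5 * 0.4 * 0.6 = 0.12.
rng = np.random.default_rng(42)
n = 500
x = rng.normal(size=n)
m1 = 0.5 * x + rng.normal(size=n)
m2 = 0.4 * m1 + 0.1 * x + rng.normal(size=n)
y = 0.6 * m2 + 0.2 * x + rng.normal(size=n)

estimate = serial_indirect(x, m1, m2, y)
low, high = percentile_ci(x, m1, m2, y)
print(f"indirect = {estimate:.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

The effect is judged to be present when the bootstrap interval excludes zero. The bootstrap is used because the product of three coefficients is not normally distributed, so a symmetric standard-error test would be unreliable.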

4. Discussion

4.1. Reliability and Validity of Measurement for Capturing Dynamics of Knowledge Creation and Transfer

4.2. First-Year Students’ Level of Systems Thinking, Engagement in Learning, ICT Self-Concept, and Design Thinking

4.3. Systems Thinking, Students’ Engagement in Learning, ICT Self-Concept, and Design Thinking Influence Achievement in Design Projects

4.4. Sequential Mediation of Systems Thinking Skills, ICT-SC, and Engagement in Learning on Students’ Achievements in Design Projects

4.5. Limitations of the Study and Future Work

5. Conclusions and Implications

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Factor | Number of Items | McDonald’s ω |
|---|---|---|
| DT1: Abductive thinking, creativity, and envisioning new things/future knowledge | 8 | 0.91 |
| DT2: Embracing risk and being comfortable with uncertainty | 5 | 0.81 |
| DT3: Empathy | 5 | 0.82 |
| DT4: Teamwork and collaboration | 4 | 0.72 |
| DT5: Experiential intelligence | 3 | 0.75 |
| DT6: Learning-oriented and optimistic that they will have an impact | 6 | 0.85 |
| DT7: Problem reframing | 3 | 0.80 |
| DT8: Open to different perspectives/diversity | 3 | 0.78 |
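The ω values above show how consistently the items in each design thinking factor measure a common factor. The sketch below is minimal and assumes a single-factor model with standardized loadings and uncorrelated item errors. Under those assumptions, McDonald's ω total is (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The loadings are hypothetical illustration values, not estimates from this study, and the function name `mcdonald_omega` is likewise illustrative.

```python
def mcdonald_omega(loadings):
    """McDonald's omega total for one factor with standardized loadings.

    Assumes a congeneric single-factor model with uncorrelated errors, so each
    item's error variance is 1 - loading**2.
    """
    if not loadings:
        raise ValueError("need at least one loading")
    common = sum(loadings) ** 2                   # variance due to the common factor
    unique = sum(1.0 - l ** 2 for l in loadings)  # summed item error variances
    return common / (common + unique)


# Hypothetical standardized loadings for a three-item factor (not study data).
example_loadings = [0.78, 0.74, 0.70]
print(round(mcdonald_omega(example_loadings), 2))  # → 0.78
```

With standardized loadings, this expression is the same one commonly used for composite reliability (CR). That is why ω and CR tend to move together in the validity tables that follow.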

| Latent Constructs | CR | AVE | ST 1 | ST 2 | ST 3 |
|---|---|---|---|---|---|
| ST 1 | 0.83 | 0.53 | 0.72 | | |
| ST 2 | 0.82 | 0.62 | 0.36 | 0.79 | |
| ST 3 | 0.81 | 0.51 | 0.52 | 0.46 | 0.71 |
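In the systems thinking table, each diagonal entry matches the square root of the construct's AVE up to rounding (for example, √0.62 ≈ 0.79 for ST 2). The off-diagonal entries appear to be inter-construct correlations, which is the layout of the Fornell-Larcker criterion. The sketch below assumes that reading of the table. It uses the transcribed values to check that each construct's √AVE exceeds its correlations with the other constructs. The helper name `fornell_larcker_violations` is hypothetical.

```python
import math

# AVE and inter-construct correlations transcribed from the systems thinking table.
ave = {"ST 1": 0.53, "ST 2": 0.62, "ST 3": 0.51}
correlations = {
    ("ST 1", "ST 2"): 0.36,
    ("ST 1", "ST 3"): 0.52,
    ("ST 2", "ST 3"): 0.46,
}


def fornell_larcker_violations(ave, correlations):
    """Return construct pairs whose correlation is not below both constructs' sqrt(AVE)."""
    violations = []
    for (a, b), r in correlations.items():
        threshold = min(math.sqrt(ave[a]), math.sqrt(ave[b]))
        if abs(r) >= threshold:
            violations.append((a, b))
    return violations


for name, value in ave.items():
    print(f"{name}: sqrt(AVE) = {math.sqrt(value):.2f}")
print("Violations:", fornell_larcker_violations(ave, correlations))  # → Violations: []
```

You can apply the same check to the engagement, ICT-SC, and design thinking tables by swapping in their AVE and correlation values. The Fornell-Larcker criterion is known to be less sensitive than alternatives such as HTMT, so it is usually reported alongside them.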

| Latent Constructs | ST 1 | ST 2 | ST 3 |
|---|---|---|---|
| ST 1 | 0.53 | | |
| ST 2 | 0.40 (0.11) | 0.62 | |
| ST 3 | 0.70 (0.25) | 0.53 (0.14) | 0.51 |

| Latent Constructs | CR | AVE | Behavioral | Cognitive | Emotional | Social | Aesthetic |
|---|---|---|---|---|---|---|---|
| Behavioral | 0.89 | 0.67 | 0.82 | | | | |
| Cognitive | 0.86 | 0.62 | 0.61 | 0.79 | | | |
| Emotional | 0.86 | 0.58 | 0.55 | 0.44 | 0.76 | | |
| Social | 0.85 | 0.62 | 0.11 | 0.12 | 0.15 | 0.79 | |
| Aesthetic | 0.85 | 0.68 | 0.42 | 0.35 | 0.31 | 0.24 | 0.83 |

| Latent Constructs | Behavioral | Cognitive | Emotional | Social | Aesthetic |
|---|---|---|---|---|---|
| Behavioral | 0.67 | | | | |
| Cognitive | 0.69 (0.31) | 0.62 | | | |
| Emotional | 0.65 (0.28) | 0.54 (0.14) | 0.58 | | |
| Social | 0.11 (0.04) | 0.10 (0.03) | 0.15 (0.02) | 0.62 | |
| Aesthetic | 0.51 (0.16) | 0.44 (0.12) | 0.37 (0.09) | 0.26 (0.05) | 0.68 |

| Latent Constructs | CR | AVE | ICT-SC 1 | ICT-SC 2 | ICT-SC 3 |
|---|---|---|---|---|---|
| ICT-SC 1 | 0.94 | 0.68 | 0.82 | | |
| ICT-SC 2 | 0.93 | 0.78 | 0.65 | 0.88 | |
| ICT-SC 3 | 0.96 | 0.69 | 0.81 | 0.69 | 0.83 |

| Latent Constructs | ICT-SC 1 | ICT-SC 2 | ICT-SC 3 |
|---|---|---|---|
| ICT-SC 1 | 0.68 | | |
| ICT-SC 2 | 0.70 (0.42) | 0.78 | |
| ICT-SC 3 | 0.86 (0.68) | 0.75 (0.48) | 0.69 |

| Latent Constructs | CR | AVE | DT1 | DT2 | DT3 | DT4 | DT5 | DT6 | DT7 | DT8 |
|---|---|---|---|---|---|---|---|---|---|---|
| DT1 | 0.92 | 0.59 | 0.77 | | | | | | | |
| DT2 | 0.87 | 0.58 | 0.24 | 0.76 | | | | | | |
| DT3 | 0.90 | 0.70 | 0.53 | 0.12 | 0.84 | | | | | |
| DT4 | 0.82 | 0.54 | 0.43 | 0.33 | 0.39 | 0.73 | | | | |
| DT5 | 0.84 | 0.65 | 0.36 | 0.11 | 0.31 | 0.18 | 0.81 | | | |
| DT6 | 0.87 | 0.69 | 0.60 | 0.24 | 0.53 | 0.38 | 0.42 | 0.83 | | |
| DT7 | 0.87 | 0.69 | 0.44 | 0.10 | 0.33 | 0.43 | 0.37 | 0.42 | 0.83 | |
| DT8 | 0.88 | 0.59 | 0.61 | 0.32 | 0.43 | 0.41 | 0.33 | 0.51 | 0.41 | 0.77 |

| Latent Constructs | DT1 | DT2 | DT3 | DT4 | DT5 | DT6 | DT7 | DT8 |
|---|---|---|---|---|---|---|---|---|
| DT1 | 0.59 | | | | | | | |
| DT2 | 0.25 (0.04) | 0.58 | | | | | | |
| DT3 | 0.60 (0.26) | 0.12 (0.02) | 0.70 | | | | | |
| DT4 | 0.52 (0.17) | 0.41 (0.11) | 0.49 (0.15) | 0.54 | | | | |
| DT5 | 0.42 (0.12) | 0.07 (0.01) | 0.38 (0.09) | 0.25 (0.03) | 0.65 | | | |
| DT6 | 0.71 (0.35) | 0.30 (0.05) | 0.65 (0.28) | 0.51 (0.15) | 0.54 (0.17) | 0.69 | | |
| DT7 | 0.52 (0.19) | 0.06 (0.03) | 0.39 (0.11) | 0.57 (0.19) | 0.46 (0.14) | 0.54 (0.18) | 0.69 | |
| DT8 | 0.72 (0.36) | 0.38 (0.10) | 0.51 (0.18) | 0.52 (0.16) | 0.39 (0.11) | 0.63 (0.25) | 0.52 (0.17) | 0.59 |

| Variable group | Variable | CUT M | CUT SD | CUT S | CUT K | PUT M | PUT SD | PUT S | PUT K | KUT M | KUT SD | KUT S | KUT K |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Students’ grades | GPA value | 4.30 | 0.39 | −0.42 | −0.15 | 4.32 | 0.31 | −0.26 | −0.97 | 4.20 | 0.26 | −0.19 | −0.31 |
| | Design project grade | 4.67 | 0.43 | −0.96 | 0.75 | 4.48 | 0.51 | −0.74 | 0.05 | 4.28 | 0.55 | −0.51 | 0.19 |
| Systems thinking | ST 1 | 3.91 | 0.79 | −0.19 | −0.75 | 3.75 | 0.81 | −0.38 | −0.77 | 3.26 | 0.91 | −0.71 | −0.18 |
| | ST 2 | 3.85 | 0.75 | −0.75 | 0.97 | 3.51 | 0.68 | 0.09 | −0.45 | 3.08 | 0.82 | 0.09 | −0.49 |
| | ST 3 | 3.31 | 0.61 | −0.15 | −0.98 | 3.25 | 0.78 | −0.61 | 0.26 | 3.29 | 0.80 | −0.16 | −0.17 |
| | Total ST | 54.75 | 8.26 | −0.18 | −0.86 | 52.39 | 8.69 | −0.25 | −0.20 | 48.41 | 9.91 | −0.49 | 0.53 |
| Engagement | Behavioral | 5.13 | 0.76 | −0.96 | 0.81 | 4.86 | 0.99 | −0.65 | −0.66 | 4.71 | 0.86 | −0.19 | −0.96 |
| | Cognitive | 5.09 | 0.62 | −0.73 | 0.09 | 4.79 | 0.73 | −0.34 | −0.58 | 4.47 | 0.73 | −0.03 | −0.87 |
| | Emotional | 4.42 | 0.97 | −0.37 | −0.46 | 4.23 | 1.04 | −0.71 | 0.26 | 4.75 | 0.67 | −0.98 | 0.99 |
| | Social | 3.53 | 0.75 | 0.22 | −0.35 | 3.66 | 0.89 | −0.22 | −0.03 | 3.81 | 1.06 | −0.15 | −0.99 |
| | Aesthetic | 3.91 | 0.85 | 0.61 | −0.10 | 4.30 | 0.85 | −0.61 | 0.97 | 4.20 | 0.77 | −0.19 | 0.02 |
| ICT self-concept | ICT-SC 1 | 4.28 | 1.02 | −0.26 | −0.40 | 4.21 | 0.96 | −0.66 | 0.73 | 4.76 | 0.98 | −0.98 | 0.97 |
| | ICT-SC 2 | 3.60 | 1.02 | 0.16 | 0.11 | 3.68 | 1.23 | −0.49 | −0.66 | 4.50 | 0.94 | −0.14 | −0.54 |
| | ICT-SC 3 | 4.25 | 1.01 | −0.15 | −0.70 | 3.77 | 1.07 | −0.61 | −0.18 | 3.54 | 1.02 | −0.97 | 0.99 |
| Design thinking | DT1 | 4.45 | 0.84 | 0.48 | −0.76 | 4.60 | 0.71 | 0.08 | −0.72 | 4.55 | 0.71 | 0.21 | −0.98 |
| | DT2 | 3.27 | 1.08 | 0.34 | −0.01 | 3.52 | 0.97 | −0.09 | −0.03 | 3.63 | 0.91 | −0.10 | −0.64 |
| | DT3 | 4.56 | 0.85 | −0.21 | 0.51 | 4.94 | 0.66 | −0.57 | 0.22 | 4.81 | 0.83 | −0.63 | −0.57 |
| | DT4 | 3.89 | 0.77 | 0.28 | 0.77 | 4.03 | 0.89 | −0.32 | −0.03 | 4.36 | 0.86 | −0.26 | −0.28 |
| | DT5 | 4.92 | 0.87 | −0.57 | −0.13 | 4.71 | 0.85 | −0.86 | 0.15 | 4.34 | 0.80 | −0.64 | 0.98 |
| | DT6 | 4.61 | 0.86 | 0.01 | −0.85 | 4.80 | 0.71 | −0.38 | −0.73 | 4.73 | 0.80 | −0.41 | −0.47 |
| | DT7 | 4.74 | 0.81 | −0.18 | −0.98 | 4.87 | 0.76 | −0.83 | 0.72 | 4.86 | 0.87 | −0.70 | −0.14 |
| | DT8 | 4.97 | 0.91 | −0.72 | −0.09 | 4.77 | 0.84 | −0.38 | −0.48 | 4.18 | 0.95 | −0.23 | −0.99 |

M = mean; SD = standard deviation; S = skewness; K = kurtosis; CUT = Cracow University of Technology; PUT = Poznan University of Technology; KUT = Kielce University of Technology.
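The S and K columns report each variable's skewness and kurtosis, and every value in the table lies within ±1. The sketch below computes the bias-adjusted sample skewness (G1) and excess kurtosis (G2). These are the forms reported by SPSS and by Excel's SKEW and KURT. It then applies a ±1 cutoff. That cutoff is an assumption, chosen because it matches the range of the table; published thresholds vary, and ±2 is also common. The function names and the sample values are hypothetical.

```python
import math


def skewness_kurtosis(values):
    """Bias-adjusted sample skewness (G1) and excess kurtosis (G2)."""
    n = len(values)
    if n < 4:
        raise ValueError("need at least 4 observations")
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in values) / n  # third central moment
    m4 = sum((x - mean) ** 4 for x in values) / n  # fourth central moment
    g1 = m3 / m2 ** 1.5       # moment skewness
    g2 = m4 / m2 ** 2 - 3.0   # moment excess kurtosis
    skew = g1 * math.sqrt(n * (n - 1)) / (n - 2)
    kurt = ((n + 1) * g2 + 6.0) * (n - 1) / ((n - 2) * (n - 3))
    return skew, kurt


def within_cutoff(skew, kurt, cutoff=1.0):
    """Assumed rule of thumb: treat |S| and |K| below the cutoff as near-normal."""
    return abs(skew) < cutoff and abs(kurt) < cutoff


skew, kurt = skewness_kurtosis([1, 2, 3, 4, 5])
print(round(skew, 2), round(kurt, 2))  # → 0.0 -1.2
print(within_cutoff(skew, kurt))        # → False
print(within_cutoff(-0.96, 0.75))       # CUT design project grade → True
```

The evenly spaced values 1 to 5 are symmetric but flatter than a normal curve, so they fail the assumed cutoff on kurtosis alone. The CUT design project grade, with S = −0.96 and K = 0.75, passes.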

| Model | Unstandardized β | Std. Error | t | Sig. (p-Value) |
|---|---|---|---|---|
| Constant | 2.76 | 0.40 | 6.30 | 0.000 |
| PUT | 1.13 | 0.55 | 2.13 | 0.042 |
| CUT | 1.05 | 0.57 | 1.75 | 0.072 |
| Systems thinking | 0.04 | 0.01 | 4.31 | 0.000 |
| PUT × systems thinking | −0.03 | 0.01 | −1.98 | 0.048 |
| CUT × systems thinking | −0.02 | 0.01 | −1.41 | 0.146 |
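The moderation model includes dummy variables for PUT and CUT only, so KUT appears to be the reference cohort. That coding is an assumption here, as are the helper name `simple_slope` and the dictionary keys. Under dummy coding, the systems thinking slope within a cohort equals the main effect plus that cohort's interaction term. The sketch below derives those point estimates from the unstandardized coefficients in the table. It does not test the simple slopes, because that would require the coefficient covariance matrix, which the table does not report.

```python
# Unstandardized coefficients transcribed from the systems thinking moderation table.
COEFFICIENTS = {
    "Constant": 2.76,
    "PUT": 1.13,
    "CUT": 1.05,
    "ST": 0.04,
    "PUT x ST": -0.03,
    "CUT x ST": -0.02,
}
REFERENCE_COHORT = "KUT"  # assumed: the cohort without a dummy variable


def simple_slope(coefs, cohort, focal="ST"):
    """Slope of the focal predictor within one cohort under dummy coding."""
    slope = coefs[focal]
    if cohort != REFERENCE_COHORT:
        slope += coefs[f"{cohort} x {focal}"]  # add the cohort-specific interaction
    return slope


for cohort in ("KUT", "PUT", "CUT"):
    print(cohort, round(simple_slope(COEFFICIENTS, cohort), 2))
```

This yields slopes of 0.04 for KUT, 0.01 for PUT, and 0.02 for CUT. The point estimates therefore suggest a weaker link between systems thinking and the design project grade in PUT and CUT than in KUT, although only the PUT interaction reaches p < 0.05 in the table. Filling the dictionary with the behavioral or cognitive engagement coefficients applies the same logic to those models (for example, 0.47 - 0.36 = 0.11 for behavioral engagement in PUT).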

| Model | Unstandardized β | Std. Error | t | Sig. (p-Value) |
|---|---|---|---|---|
| Constant | 2.09 | 0.44 | 4.76 | 0.000 |
| PUT | 1.86 | 0.55 | 3.40 | 0.001 |
| CUT | 2.06 | 0.60 | 3.42 | 0.001 |
| Behavioral engagement | 0.47 | 0.09 | 5.01 | 0.000 |
| PUT × behavioral engagement | −0.36 | 0.11 | −3.14 | 0.002 |
| CUT × behavioral engagement | −0.37 | 0.12 | −3.02 | 0.003 |

| Model | Unstandardized β | Std. Error | t | Sig. (p-Value) |
|---|---|---|---|---|
| Constant | 1.99 | 0.45 | 4.35 | 0.000 |
| PUT | 1.97 | 0.58 | 3.38 | 0.001 |
| CUT | 2.05 | 0.67 | 3.04 | 0.003 |
| Cognitive engagement | 0.52 | 0.10 | 5.12 | 0.000 |
| PUT × cognitive engagement | −0.40 | 0.12 | −3.22 | 0.002 |
| CUT × cognitive engagement | −0.39 | 0.14 | −2.79 | 0.006 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Avsec, S.; Jagiełło-Kowalczyk, M.; Żabicka, A.; Gawlak, A.; Gil-Mastalerczyk, J. Leveraging Systems Thinking, Engagement, and Digital Competencies to Enhance First-Year Architecture Students’ Achievement in Design-Based Learning. Sustainability 2023, 15, 15115. https://doi.org/10.3390/su152015115
