Maize Disease Classification System Design Based on Improved ConvNeXt

Abstract

1. Introduction

- This study uses ConvNeXt, a pure convolutional neural network, to extract features from maize disease images. To strengthen its downsample module, the parameter-free SimAM attention module [30] is introduced. SimAM improves the model's ability to extract key features and reduces the risk of overfitting, which improves maize disease classification performance (95.2% accuracy versus 94.0% for the baseline ConvNeXt-T).

- Incorporating transfer learning into the ConvNeXt model adapts it to the maize disease classification task. This improves accuracy (from 87.0% to 94.0%), generalization performance, and training efficiency, and thereby the model's usability in practical applications.

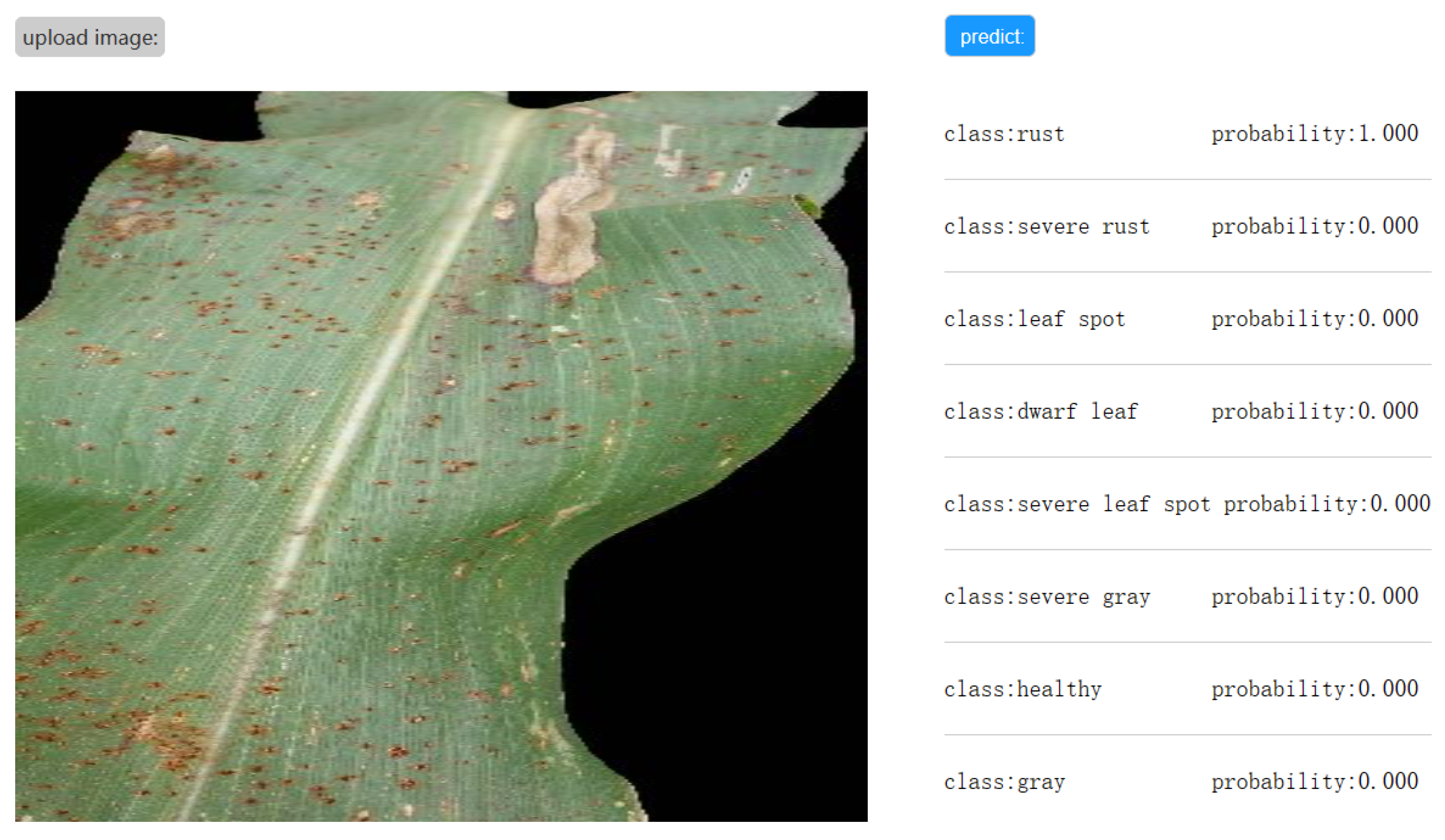

- To address the limitations encountered when maize disease classification is applied in practice, this study develops a website based on the Flask framework. Users can conveniently upload disease images and have them classified efficiently, which substantially reduces manual effort and the associated costs.
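SimAM [30] derives an attention weight for every activation from a closed-form energy function, which is why it adds no trainable parameters. The following is a minimal pure-Python sketch of that weighting for one sample stored as a list of H × W channels, following the published SimAM formulation. The function name `simam` and the default `e_lambda = 1e-4` are assumptions for illustration, and the exact position of the module inside the improved downsample block is not reproduced here.

```python
import math
from typing import List

Channel = List[List[float]]  # one H x W feature map


def simam(feature_maps: List[Channel], e_lambda: float = 1e-4) -> List[Channel]:
    """Apply SimAM-style weighting to one sample given as a list of channels.

    Each channel must contain at least two activations (H * W >= 2).
    No trainable parameters are involved; e_lambda only stabilises the division.
    """
    result: List[Channel] = []
    for fmap in feature_maps:
        values = [v for row in fmap for v in row]
        n = len(values) - 1  # number of "other" neurons in the channel
        mu = sum(values) / len(values)
        # Channel variance estimate: sum of squared deviations divided by n.
        var = sum((v - mu) ** 2 for v in values) / n
        weighted_map: Channel = []
        for row in fmap:
            weighted_row = []
            for v in row:
                # Inverse of the minimal energy: the further a neuron lies from
                # the channel mean, the larger this value (never below 0.5).
                inv_energy = (v - mu) ** 2 / (4 * (var + e_lambda)) + 0.5
                weight = 1.0 / (1.0 + math.exp(-inv_energy))  # sigmoid gate
                weighted_row.append(v * weight)
            weighted_map.append(weighted_row)
        result.append(weighted_map)
    return result


# Usage: one 2 x 2 channel in which a single activation stands out.
sample = [[[0.0, 0.0], [0.0, 4.0]]]
print(simam(sample))
```

Because the weight is a fixed function of each channel's mean and variance, a distinctive activation (the 4.0 above) receives a larger gate than activations close to the channel mean, without any learned parameters.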

2. Methods

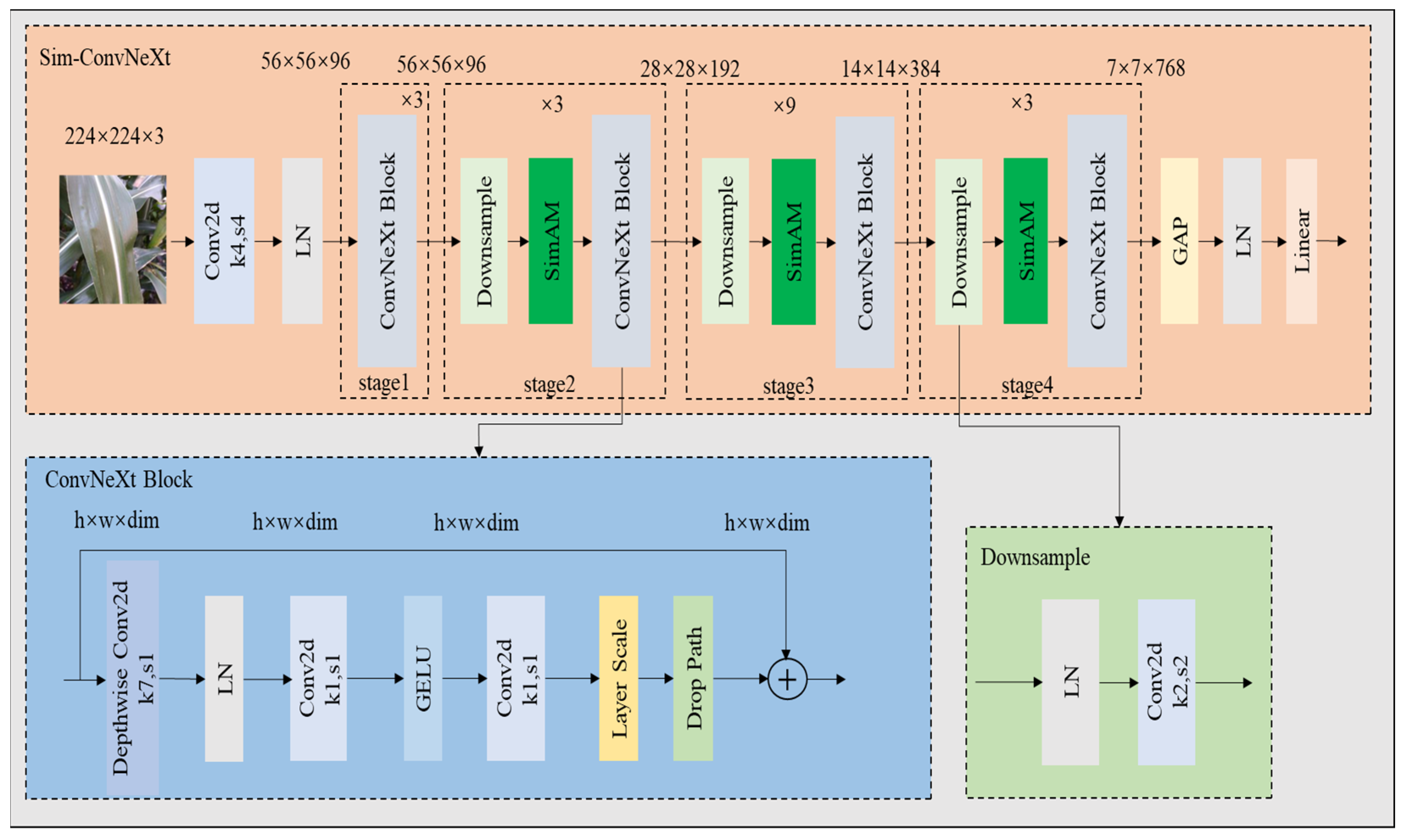

2.1. Overall Design of the SimAM-ConvNeXt Model

2.2. ConvNeXt-T Model

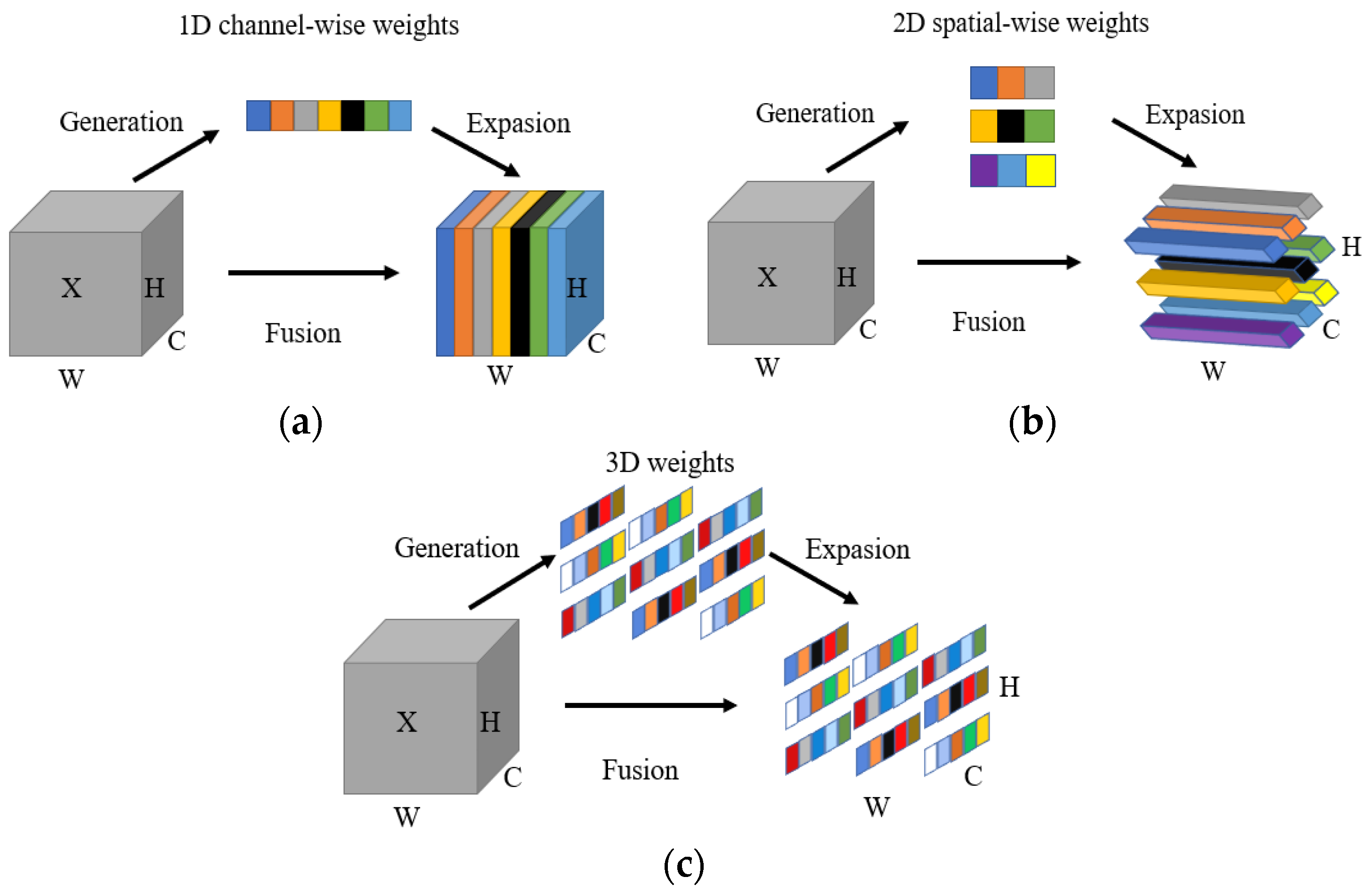

2.3. SimAM Attention Mechanism

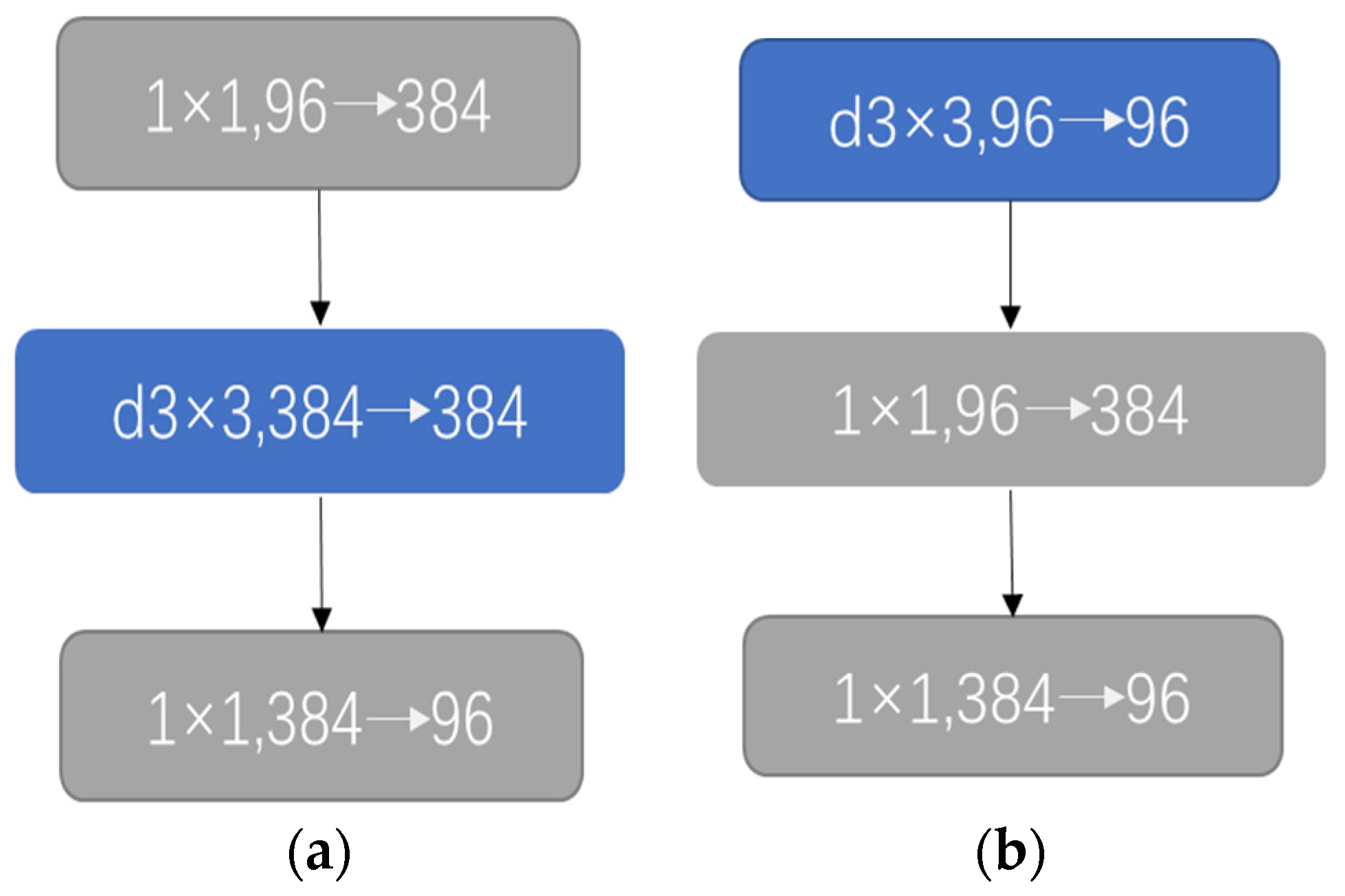

2.4. Improved Downsample Module

2.5. Design of the Maize Disease Classification System

3. Experiments and Results

3.1. Dataset and Augmentation

3.2. Experimental Environment

3.3. Feature Extraction

3.4. Model Training

3.5. Model Evaluation

3.5.1. Utilization of Transfer Learning

3.5.2. Performance Comparison of Different Attention Modules

3.5.3. Performance Comparison of Different Models

3.6. Maize Disease Classification System

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Gulzar, Y. Fruit Image Classification Model Based on MobileNetV2 with Deep Transfer Learning Technique. Sustainability 2023, 15, 1906.

2. Li, F.; Li, Y.; Novoselov, K.S.; Liang, F.; Meng, J.; Ho, S.-H.; Zhao, T.; Zhou, H.; Ahmad, A.; Zhu, Y.; et al. Bioresource Upgrade for Sustainable Energy, Environment, and Biomedicine. Nano-Micro Lett. 2023, 15, 35.

3. Kannan, M.; Ismail, I.; Bunawan, H. Maize Dwarf Mosaic Virus: From Genome to Disease Management. Viruses 2018, 10, 492.

4. Dhami, N.B.; Kim, S.K.; Paudel, A.; Shrestha, J.; Rijal, T.R. A Review on Threat of Gray Leaf Spot Disease of Maize in Asia. J. Maize Res. Dev. 2015, 1, 71–85.

5. Olukolu, B.A.; Tracy, W.F.; Wisser, R.; De Vries, B.; Balint-Kurti, P.J. A genome-wide association study for partial resistance to maize common rust. Phytopathology 2016, 106, 745–751.

6. Sun, X.; Qi, X.; Wang, W.; Liu, X.; Zhao, H.; Wu, C.; Chang, X.; Zhang, M.; Chen, H.; Gong, G. Etiology and Symptoms of Maize Leaf Spot Caused by Bipolaris spp. in Sichuan, China. Pathogens 2020, 9, 229.

7. Du, H.P.; Fang, C.; Li, Y.R.; Kong, F.J.; Liu, B.H. Understandings and future challenges in soybean functional genomics and molecular breeding. J. Integr. Plant Biol. 2023, 65, 468–495.

8. Spiertz, H. Challenges for Crop Production Research in Improving Land Use, Productivity and Sustainability. Sustainability 2013, 5, 1632–1644.

9. Wu, Y.; Wang, D.H.; Lu, X.T.; Yang, F.; Yao, M.; Dong, W.S.; Shi, J.B.; Li, G.Q. Efficient Visual Recognition: A Survey on Recent Advances and Brain-inspired Methodologies. Mach. Intell. Res. 2022, 19, 366–411.

10. Li, X.F.; Liu, B.; Zheng, G.; Ren, Y.B.; Zhang, S.S.; Liu, Y.J.; Gao, L.; Liu, Y.H.; Zhang, B.; Wang, F. Deep-learning-based information mining from ocean remote-sensing imagery. Natl. Sci. Rev. 2020, 7, 1584–1605.

11. Gangsar, P.; Tiwari, R. A support vector machine based fault diagnostics of Induction motors for practical situation of multi-sensor limited data case. Measurement 2019, 135, 694–711.

12. Kotsiantis, S.B. Decision trees: A recent overview. Artif. Intell. Rev. 2013, 39, 261–283.

13. Liang, Y.; Li, K.J.; Ma, Z.; Lee, W.J. Multilabel classification model for type recognition of single-phase-to-ground fault based on KNN-bayesian method. IEEE Trans. Ind. Appl. 2021, 57, 1294–1302.

14. Li, X.R.; Lin, Y.T.; Qiu, K.B. Stellar spectral classification and feature evaluation based on a random forest. Res. Astron. Astrophys. 2019, 19, 56–62.

15. Noola, D.A.; Basavaraju, D.R. Corn leaf image classification based on machine learning techniques for accurate leaf disease detection. Int. J. Electr. Comput. Eng. 2022, 12, 2509–2516.

16. Kusumo, B.S.; Heryana, A.; Mahendra, O.; Pardede, H.F. Machine learning-based for automatic detection of corn-plant diseases using image processing. In Proceedings of the 2018 International Conference on Computer, Control, Informatics and Its Applications (IC3INA), Tangerang, Indonesia, 1–2 November 2018; pp. 93–97.

17. Alahmari, F.; Naim, A.; Alqahtani, H. E-Learning Modeling Technique and Convolution Neural Networks in Online Education. In IoT-Enabled Convolutional Neural Networks: Techniques and Applications, 1st ed.; Naved, M., Devi, V.A., Gaur, L., Elngar, A.A., Eds.; River Publishers: New York, NY, USA, 2023; pp. 261–295.

18. Krichen, M. Convolutional Neural Networks: A Survey. Computers 2023, 12, 151.

19. Liu, J.C.; Wang, M.T.; Bao, L.; Li, X.F. EfficientNet based recognition of maize diseases by leaf image classification. J. Phys. Conf. Ser. 2020, 1693, 012148.

20. Sun, J.; Yang, Y.; He, X.; Wu, X. Northern Maize Leaf Blight Detection Under Complex Field Environment Based on Deep Learning. IEEE Access 2020, 8, 33679–33688.

21. Haque, M.; Marwaha, S.; Deb, C.K.; Nigam, S.; Arora, A.; Hooda, K.S.; Soujanya, P.L.; Aggarwal, S.K.; Lall, B.; Kumar, M. Deep Learning-Based Approach for Identification of Diseases of Maize Crop. Sci. Rep. 2022, 12, 6334.

22. Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A ConvNet for the 2020s. arXiv 2022, arXiv:2201.03545.

23. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

24. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

25. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

26. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

27. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

28. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

29. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030.

30. Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

31. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

32. Liu, Y.; Shao, Z.; Teng, Y.; Hoffmann, N. NAM: Normalization-based Attention Module. arXiv 2021, arXiv:2111.12419.

33. Hendrycks, D.; Gimpel, K. Gaussian error linear units (GELUs). arXiv 2016, arXiv:1606.08415.

34. Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. J. Mach. Learn. Res. 2011, 15, 315–323.

35. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

36. Ba, J.; Kiros, J.; Hinton, G. Layer normalization. arXiv 2016, arXiv:1607.06450.

37. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

38. Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial transformer networks. arXiv 2015, arXiv:1506.02025.

39. Saleem, M.H.; Potgieter, J.; Arif, K. Plant Disease Detection and Classification by Deep Learning. Plants 2019, 8, 468.

40. Li, Q.; Cai, W.; Wang, X.; Zhou, Y.; Feng, D.D.; Chen, M. Medical image classification with convolutional neural network. In Proceedings of the 2014 13th International Conference on Control Automation Robotics & Vision (ICARCV), Singapore, 10–12 December 2014; pp. 844–848.

| Layer | ConvNeXt-T | Input | Output |
|---|---|---|---|
| conv1 | k4, s4, dim = 96 | 224 × 224 × 3 | 56 × 56 × 96 |
| conv2_x | | 56 × 56 × 96 | 56 × 56 × 96 |
| conv3_x | Downsample | 56 × 56 × 96 | 28 × 28 × 192 |
| conv4_x | Downsample | 28 × 28 × 192 | 14 × 14 × 384 |
| conv5_x | Downsample | 14 × 14 × 384 | 7 × 7 × 768 |
| Global Avg Pooling, Layer Norm, Linear | | 7 × 7 × 768 | 1000 |
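The input and output sizes in the table follow from simple convolution arithmetic. The sketch below reproduces them, assuming unpadded convolutions; the 4 × 4, stride-4 stem matches the "k4, s4" entry, while the 2 × 2, stride-2 kernel for each downsample layer is taken from the original ConvNeXt design [22] rather than from the table. The helper names are hypothetical.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def conv_out(size: int, kernel: int, stride: int) -> int:
    """Spatial size after an unpadded convolution."""
    return (size - kernel) // stride + 1


def convnext_t_shapes(h: int = 224, w: int = 224) -> List[Shape]:
    dims = [96, 192, 384, 768]  # ConvNeXt-T stage widths from the table
    # conv1 (stem): 4 x 4 patchify convolution with stride 4.
    h, w = conv_out(h, 4, 4), conv_out(w, 4, 4)
    shapes = [(h, w, dims[0])]  # conv2_x blocks keep this shape
    for dim in dims[1:]:
        # Downsample before conv3_x, conv4_x, conv5_x: assumed 2 x 2 conv, stride 2.
        h, w = conv_out(h, 2, 2), conv_out(w, 2, 2)
        shapes.append((h, w, dim))
    return shapes


print(convnext_t_shapes())  # [(56, 56, 96), (28, 28, 192), (14, 14, 384), (7, 7, 768)]
```

Each downsample halves the spatial resolution and doubles the channel count, which is the stage-to-stage pattern listed in the table.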

| Class | Before augmentation | After augmentation | Train | Val | Test |
|---|---|---|---|---|---|
| Dwarf leaf | 931 | 4655 | 2793 | 931 | 931 |
| Healthy | 430 | 2150 | 1290 | 430 | 430 |
| Gray | 191 | 955 | 573 | 191 | 191 |
| Severe gray | 218 | 1090 | 654 | 218 | 218 |
| Rust | 552 | 2760 | 1656 | 552 | 552 |
| Severe rust | 406 | 2030 | 1218 | 406 | 406 |
| Leaf spot | 237 | 1185 | 711 | 237 | 237 |
| Severe leaf spot | 569 | 2845 | 1707 | 569 | 569 |
| Total | 3534 | 17,670 | 10,602 | 3534 | 3534 |
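The counts above are consistent with expanding every original image to five images and then splitting the augmented set 60/20/20 into training, validation, and test subsets. The sketch below only reproduces that bookkeeping; the factor of 5 and the split ratio are inferred from the table rather than stated, the function names are hypothetical, and the augmentation operations themselves are not modelled.

```python
from typing import Dict, List, Tuple

# Original image counts per class, taken from the "Before augmentation" column.
ORIGINAL_COUNTS: Dict[str, int] = {
    "Dwarf leaf": 931,
    "Healthy": 430,
    "Gray": 191,
    "Severe gray": 218,
    "Rust": 552,
    "Severe rust": 406,
    "Leaf spot": 237,
    "Severe leaf spot": 569,
}


def augment_and_split(n_before: int, factor: int = 5) -> Tuple[int, int, int, int]:
    """Return (after, train, val, test) for one class.

    Assumes `factor` images per original image and a 60/20/20 split.
    """
    after = n_before * factor
    train = after * 3 // 5
    val = after // 5
    test = after - train - val  # any rounding remainder goes to the test set
    return after, train, val, test


def table_totals(counts: Dict[str, int]) -> List[int]:
    """Column totals for (after, train, val, test) across all classes."""
    totals = [0, 0, 0, 0]
    for n in counts.values():
        totals = [t + x for t, x in zip(totals, augment_and_split(n))]
    return totals


for name, n in ORIGINAL_COUNTS.items():
    print(name, n, *augment_and_split(n))
print("Total", sum(ORIGINAL_COUNTS.values()), *table_totals(ORIGINAL_COUNTS))
```

With a factor of 5, the training subset holds three images per original image and the validation and test subsets one each, which is why the Val and Test columns equal the original counts.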

| Method | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| Without transfer learning | 87.0 | 83.2 | 82.5 | 82.8 |
| With transfer learning | 94.0 | 92.4 | 91.8 | 92.1 |

| Attention Module | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| +CBAM | 93.4 | 91.4 | 90.6 | 91.0 |
| +SE | 93.9 | 92.5 | 91.3 | 91.9 |
| +NAM | 94.4 | 93.0 | 92.2 | 92.6 |
| +SimAM (Ours) | 95.2 | 93.9 | 93.3 | 93.6 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| ResNet34 | 92.0 | 89.7 | 89.0 | 89.3 |
| ResNeXt50 | 92.8 | 90.9 | 90.2 | 90.5 |
| MobileNetV2 | 81.4 | 76.0 | 72.9 | 74.4 |
| DenseNet121 | 91.4 | 89.0 | 88.1 | 88.5 |
| ViT | 89.3 | 85.9 | 85.4 | 85.6 |
| Swin-T | 93.7 | 92.0 | 91.2 | 91.6 |
| ConvNeXt-T | 94.0 | 92.4 | 91.8 | 92.1 |
| SimAM-ConvNeXt | 95.2 | 93.9 | 93.3 | 93.6 |
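Every F1 score in the evaluation tables equals the harmonic mean of the precision and recall in the same row (for example, 2 × 93.9 × 93.3 / (93.9 + 93.3) ≈ 93.6). This is consistent with F1 being computed from class-averaged precision and recall, although the averaging scheme is an assumption here. The sketch below computes all four metrics from a confusion matrix on that basis; averaging per-class F1 scores instead would give slightly different values. The function names and the toy matrix are hypothetical.

```python
from typing import List, Tuple


def harmonic_f1(precision: float, recall: float) -> float:
    """F1 as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def macro_metrics(cm: List[List[int]]) -> Tuple[float, float, float, float]:
    """Return (accuracy, macro precision, macro recall, F1).

    cm[i][j] counts images of true class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    precisions, recalls = [], []
    for c in range(k):
        tp = cm[c][c]
        predicted_c = sum(cm[r][c] for r in range(k))  # column sum
        actual_c = sum(cm[c])  # row sum
        precisions.append(tp / predicted_c if predicted_c else 0.0)
        recalls.append(tp / actual_c if actual_c else 0.0)
    macro_p = sum(precisions) / k
    macro_r = sum(recalls) / k
    return correct / total, macro_p, macro_r, harmonic_f1(macro_p, macro_r)


# Toy two-class confusion matrix (illustrative only, not data from the study).
print(macro_metrics([[5, 1], [2, 2]]))
# Cross-check a reported row: SimAM-ConvNeXt, precision 93.9 %, recall 93.3 %.
print(round(harmonic_f1(93.9, 93.3), 1))  # 93.6
```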

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.; Qi, M.; Du, B.; Li, Q.; Gao, H.; Yu, J.; Bi, C.; Yu, H.; Liang, M.; Ye, G.; et al. Maize Disease Classification System Design Based on Improved ConvNeXt. Sustainability 2023, 15, 14858. https://doi.org/10.3390/su152014858


Chicago/Turabian Style: Li, Han, Mingyang Qi, Baoxia Du, Qi Li, Haozhang Gao, Jun Yu, Chunguang Bi, Helong Yu, Meijing Liang, Guanshi Ye, et al. 2023. "Maize Disease Classification System Design Based on Improved ConvNeXt." Sustainability 15, no. 20: 14858. https://doi.org/10.3390/su152014858

APA Style: Li, H., Qi, M., Du, B., Li, Q., Gao, H., Yu, J., Bi, C., Yu, H., Liang, M., Ye, G., & Tang, Y. (2023). Maize Disease Classification System Design Based on Improved ConvNeXt. Sustainability, 15(20), 14858. https://doi.org/10.3390/su152014858