Conceptualizing and Validating a Model for Benchlearning Capability: Results from the Greek Public Sector

Abstract

1. Introduction

2. Reviewing the Literature

2.1. The Learning Organization and Organizational Learning Approaches

2.2. The Common Assessment Framework, Self-Assessment, Organizational Learning and “Benchlearning”

2.3. Benchmarking, Organizational Learning and Benchlearning

2.4. The Capability of Organizations for Benchlearning

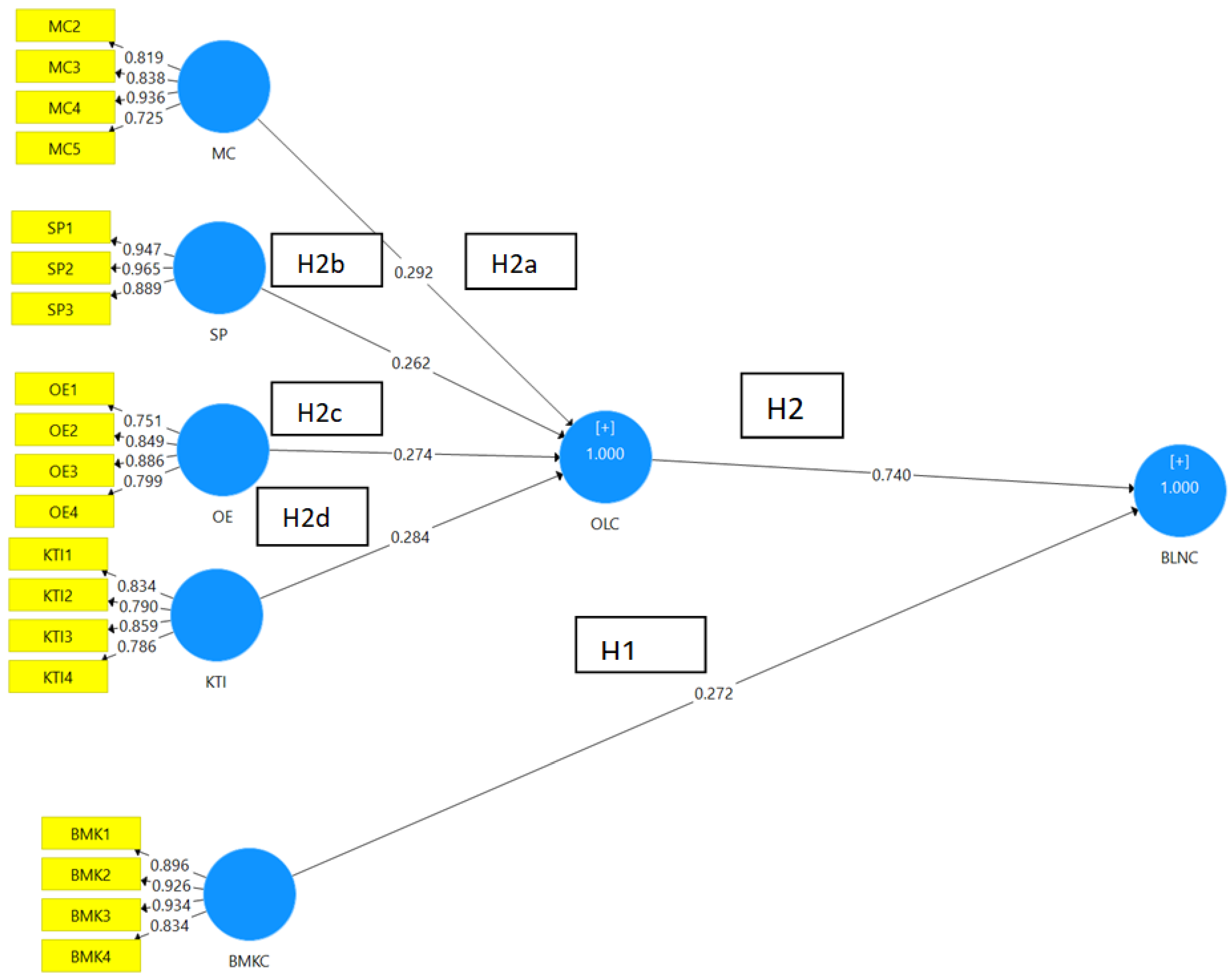

- The capability of management to foster a learning culture (managerial commitment, MC): this includes a long-term commitment to organizational learning and managerial efforts to instill the importance of learning among members, as well as to discard perceptions that delay organizational success.

- The capability of the organization's members to influence each other under a “shared vision, mission and practices” (systems perspective, SP): each member understands the collective goals and his/her own position and acts accordingly within the organizational network of implicit and explicit relationships, enabling the transition from individual to collective learning.

- The capability of the organization to promote “generative learning” (openness and experimentation, OE): this reflects an interactive engagement with the environment and encompasses the acceptance of new values, perceptions, attitudes and practices (openness) as well as risk tolerance and creativity (experimentation).

- The capability of the organization to create enabling processes and structures for “knowledge transfer and integration” (KTI): by combining social elements (communication, dialogue and debate) with technological facilitators (information systems), organizational members interact to develop “organizational memory” [36] and collective knowledge.

2.5. Hypotheses Development

- H1: Benchmarking Capability (BMKC) is positively related to Benchlearning Capability (BLNC);

- H2: Organizational Learning Capability (OLC) is positively related to BLNC;
  - H2a: MC is positively related to OLC;
  - H2b: OE is positively related to OLC;
  - H2c: SP is positively related to OLC;
  - H2d: KTI is positively related to OLC.
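
Read together, the hypotheses describe a path model: the four dimensions of Section 2.4 (MC, OE, SP, KTI) point to OLC, and OLC and BMKC both point to BLNC. As an illustration only, and not as part of the authors' reported analysis, the sketch below stores that model as a dictionary of paths, filled with the "Original Sample (O)" coefficients from the structural model results table, and multiplies the coefficients along a route to obtain the implied effect of each dimension on BLNC through OLC (a simple product-of-coefficients calculation). The names `PATHS` and `path_effect` are hypothetical.

```python
from __future__ import annotations

from math import prod

# Hypothesized paths (source, target) with the standardized coefficients
# reported in the "Original Sample (O)" column of the results table.
PATHS = {
    ("BMKC", "BLNC"): 0.272,  # H1
    ("OLC", "BLNC"): 0.740,   # H2
    ("MC", "OLC"): 0.292,     # H2a
    ("OE", "OLC"): 0.274,     # H2b
    ("SP", "OLC"): 0.262,     # H2c
    ("KTI", "OLC"): 0.284,    # H2d
}


def path_effect(route: list[str]) -> float:
    """Product of the coefficients along a route, e.g. ["MC", "OLC", "BLNC"].

    Raises KeyError if any hop is not one of the hypothesized paths.
    """
    hops = zip(route, route[1:])
    return prod(PATHS[hop] for hop in hops)


if __name__ == "__main__":
    for dimension in ("MC", "OE", "SP", "KTI"):
        effect = path_effect([dimension, "OLC", "BLNC"])
        print(f"{dimension} -> OLC -> BLNC: {effect:.3f}")
```

For MC, for example, the route gives 0.292 × 0.740 ≈ 0.216. A route that uses a path not in the model (such as BMKC to OLC) raises a `KeyError` rather than silently returning zero.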

3. Methodology

3.1. Developing the Instrument

3.2. Data Collection

3.3. Data Analysis

4. Results

4.1. The Proposed Model with Loadings

4.2. Construct Validity and Reliability of the Measurement Model

4.3. Structural Model Assessment

4.4. Quality of the Structural Model Assessment

4.5. Structural Model Analysis

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kordab, M.; Raudeliūnienė, J.; Meidutė-Kavaliauskienė, I. Mediating role of knowledge management in the relationship between organizational learning and sustainable organizational performance. Sustainability 2020, 12, 10061.

- Walsh, K. Quality through markets: The new public service management. In Making Quality Critical: New Perspectives on Organizational Change; Wilkinson, A., Willmott, H., Eds.; Routledge: London, UK, 1995; pp. 82–104.

- Freeman-Bell, G.; Grover, R. The use of quality management in local authorities. Local Gov. Stud. 1994, 20, 554–569.

- Randall, L.; Senior, M. A model for achieving quality in hospital hotel services. Int. J. Contemp. Hosp. Manag. 1994, 6, 68–74.

- Sinha, M. Gaining perspectives: The future of TQM in public sectors. TQM Mag. 1999, 11, 414–419.

- Boyne, G.A.; Walker, R.M. Total quality management and performance: An evaluation of the evidence and lessons for research on public organizations. Public Perform. Manag. Rev. 2002, 26, 111–131.

- Redman, T.; Mathews, B.; Wilkinson, A.; Snape, E. Quality management in services: Is the public sector keeping pace? Int. J. Public Sect. Manag. 1995, 8, 21–34.

- Barouch, G.; Kleinhans, S. Learning from criticisms of quality management. Int. J. Qual. Serv. Sci. 2015, 7, 201–216.

- Walsh, K. Quality and public services. Public Adm. 1991, 69, 503–514.

- Swiss, J. Adapting total quality management (TQM) to government. Public Adm. Rev. 1992, 52, 356–362.

- Radin, B.A.; Coffee, J.N. A critique of TQM: Problems of implementation in the public sector. Public Adm. Q. 1993, 17, 42–54.

- Dewhurst, F.; Martínez-Lorente, A.R.; Dale, B.G. TQM in public organisations: An examination of the issues. Manag. Serv. Qual. Int. J. 1999, 9, 265–273.

- Tarí, J.J. Self-assessment exercises: A comparison between a private sector organisation and higher education institutions. Int. J. Prod. Econ. 2008, 114, 105–118.

- CAF Resource Center. The Common Assessment Framework (CAF). Improving an Organisation through Self-Assessment. 2006. Available online: https://www.eupan.eu/wp-content/uploads/2019/05/EUPAN_CAF_2006_The_Common_Assessment_Framework_CAF_2006.pdf (accessed on 1 October 2019).

- CAF Resource Center. CAF 2020—The European Model for Improving Public Organisations through Self-Assessment. 2020. Available online: https://www.eupan.eu/wp-content/uploads/2019/11/20191118-CAF-2020-FINAL.pdf (accessed on 20 December 2019).

- Camp, R. Benchmarking: The Search for Industry Best Practices that Lead to Superior Performance; Quality Press: Milwaukee, WI, USA, 1989.

- Karlöf, B.; Lundgren, K.; Froment, M.E. Benchlearning: Good Examples as a Lever for Development; John Wiley & Sons: Chichester, UK, 2001.

- Ammons, D.; Roenigk, D. Benchmarking and interorganizational learning in local government. J. Public Adm. Res. Theory 2014, 25, 309–335.

- Kyrö, P. Revising the concept and forms of benchmarking. Benchmarking Int. J. 2003, 10, 210–225.

- Ellis, J. All inclusive benchmarking. J. Nurs. Manag. 2006, 14, 377–383.

- Wolfram Cox, J.; Mann, L.; Samson, D. Benchmarking as a mixed metaphor: Disentangling assumptions of competition and collaboration. J. Manag. Stud. 1997, 34, 285–314.

- Easterby-Smith, M.; Araujo, L.; Burgoyne, J. Organizational Learning and the Learning Organization: Developments in Theory and Practice; Sage: London, UK, 2011.

- Garratt, B.; Marsick, V.J.; Watkins, K.E.; Dixon, N.M.; Smith, P.A. The Learning Organization: And the Need for Directors Who Think; Gower: Aldershot, UK, 1987.

- Senge, P. The Fifth Discipline: The Art & Practice of the Learning Organization; Doubleday: New York, NY, USA, 2000.

- Garvin, D.A. Building a learning organization. Harv. Bus. Rev. 1993, 71, 78–91.

- Nevis, E.C.; DiBella, A.J.; Gould, J.M. Understanding organizations as learning systems. Sloan Manag. Rev. 1997, 36, 73–85.

- Pedler, M.; Burgoyne, J.G.; Boydell, T. The Learning Company: A Strategy for Sustainable Development; McGraw-Hill: London, UK, 1991; p. 243.

- McGill, M.E.; Slocum, J.W., Jr. Unlearning the organization. Organ. Dyn. 1993, 22, 67–79.

- Nonaka, I. A dynamic theory of organizational knowledge creation. Organ. Sci. 1994, 5, 14–37.

- Rebelo, T.M.; Gomes, A.D. Organizational learning and the learning organization: Reviewing evolution for prospecting the future. Learn. Organ. 2008, 15, 294–308.

- Grieves, J. Why we should abandon the idea of the learning organization. Learn. Organ. 2008, 15, 463–473.

- Cyert, R.M.; March, J.G. A Behavioral Theory of the Firm; Prentice-Hall: Englewood Cliffs, NJ, USA, 1963.

- Argyris, C.; Schön, D.A. Organizational Learning: A Theory of Action Perspective; Addison-Wesley: Boston, MA, USA, 1978.

- Shrivastava, P. A typology of organizational learning systems. J. Manag. Stud. 1983, 20, 7–28.

- Fiol, C.M.; Lyles, M.A. Organizational learning. Acad. Manag. Rev. 1985, 10, 803–813.

- Huber, G.P. Organizational learning: The contributing processes and the literatures. Organ. Sci. 1991, 2, 88–115.

- Rashman, L.; Withers, E.; Hartley, J. Organizational learning and knowledge in public service organizations: A systematic review of the literature. Int. J. Manag. Rev. 2009, 11, 463–494.

- Barette, J.; Lemyre, L.; Corneil, W.; Beauregard, N. Organizational learning facilitators in the Canadian public sector. Int. J. Public Adm. 2012, 35, 137–149.

- Skinner, B.F. The Behavior of Organisms: An Experimental Analysis; D. Appleton-Century Company: New York, NY, USA, 1938.

- DeFillippi, R.; Ornstein, S. Psychological perspectives underlying theories of organizational learning. In The Blackwell Handbook of Organizational Learning and Knowledge Management; Easterby-Smith, M., Lyles, M.A., Eds.; Blackwell Publishing Ltd.: Malden, MA, USA, 2003; pp. 19–35.

- Elkjaer, B. In search of a social learning theory. In Organizational Learning and the Learning Organization: Developments in Theory and Practice; Easterby-Smith, M., Araujo, L., Burgoyne, J., Eds.; Sage: London, UK, 1999; pp. 75–91.

- Hawkins, P. Organizational learning: Taking stock and facing the challenge. Manag. Learn. 1994, 25, 71–82.

- Hedberg, B. How organizations learn and unlearn. In Handbook of Organizational Design; Nystrom, P.C., Starbuck, W.H., Eds.; Oxford University Press: New York, NY, USA, 1981; pp. 3–27.

- Staes, P.; Thijs, N. Quality Management on the European Agenda; European Institute of Public Administration: Luxembourg, 2005; pp. 33–41.

- Dale, B.; Zairi, M.; Van der Wiele, A.; Williams, A. Quality is dead in Europe–long live excellence-true or false? Meas. Bus. Excell. 2000, 4, 4–10.

- Balbastre, F.; Luzón, M.M. Self-assessment application and learning in organizations: A special reference to the ontological dimension. Total Qual. Manag. Bus. Excell. 2003, 14, 367–388.

- CAF Resource Center. CAF 2013—Improving Public Organisations through Self-Assessment. 2013. Available online: https://ec.europa.eu/eurostat/ramon/statmanuals/files/CAF_2013.pdf (accessed on 1 October 2019).

- Bruder, K.; Gray, E. Public-sector benchmarking: A practical approach. Public Manag. 1994, 76, S9–S14.

- Bullivant, J. Benchmarking in the UK national health service. Int. J. Health Care Qual. Assur. 1996, 9, 9–14.

- Howat, G.; Murray, D.; Crilley, G. Reducing measurement overload: Rationalizing performance measures for public aquatic centres in Australia. Manag. Leis. 2005, 10, 128–142.

- Ammons, D. Municipal Benchmarks: Assessing Local Performance and Establishing Community Standards; Routledge: London, UK, 2012.

- Mugion, R.G.; Musella, F. Customer satisfaction and statistical techniques for the implementation of benchmarking in the public sector. Total Qual. Manag. Bus. Excell. 2013, 24, 619–640.

- Huijben, M.; Geurtsen, A.; van Helden, J. Managing overhead in public sector organizations through benchmarking. Public Money Manag. 2014, 34, 27–34.

- Rendon, R. Benchmarking contract management process maturity: A case study of the US Navy. Benchmarking Int. J. 2015, 22, 1481–1508.

- Bowerman, M.; Francis, G.; Ball, A.; Fry, J. The evolution of benchmarking in UK local authorities. Benchmarking Int. J. 2002, 9, 424–449.

- Karlöf, B.; Östblom, S. Benchmarking: A Signpost to Excellence in Quality and Productivity; John Wiley & Sons Inc.: Chichester, UK, 1993.

- Watson, G.H. Strategic Benchmarking: How to Rate Your Company’s Performance against The World’s Best; John Wiley & Sons Inc.: New York, NY, USA, 1993.

- Deming, W.E. Out of the Crisis: Quality, Productivity and Competitive Position; Massachusetts Institute of Technology: Cambridge, MA, USA, 1986.

- Fernandez, P.; McCarthy, I.P.; Rakotobe-Joel, T. An evolutionary approach to benchmarking. Benchmarking Int. J. 2001, 8, 281–305.

- Auluck, R. Benchmarking: A tool for facilitating organizational learning? Public Adm. Dev. Int. J. Manag. Res. Pract. 2002, 22, 109–122.

- American Productivity and Quality Center. What Is Best Practice? Available online: http://www.apqc.org (accessed on 30 March 2022).

- Boxwell, R. Benchmarking for Competitive Advantage; McGraw Hill: New York, NY, USA, 1994.

- Osborne, D.; Gaebler, T. Reinventing Government: How the Entrepreneurial Spirit is Transforming Government; Addison-Wesley: Boston, MA, USA, 1992.

- Kouzmin, A.; Löffler, E.; Klages, H.; Korac-Kakabadse, N. Benchmarking and performance measurement in public sectors: Towards learning for agency effectiveness. Int. J. Public Sect. Manag. 1999, 12, 121–144.

- Braadbaart, O. Collaborative benchmarking, transparency and performance: Evidence from The Netherlands water supply industry. Benchmarking Int. J. 2007, 14, 677–692.

- Buckmaster, N. Benchmarking as a learning tool in voluntary non-profit organizations: An exploratory study. Public Manag. Int. J. Res. Theory 1999, 1, 603–616.

- Askim, J.; Johnsen, Å.; Christophersen, K.-A. Factors behind organizational learning from benchmarking: Experiences from Norwegian municipal benchmarking networks. J. Public Adm. Res. Theory 2008, 18, 297–320.

- Johnstad, T.; Berger, S. Nordic Benchlearning: A Community of Practice between Six Clusters. In Proceedings of the Conference on Regional Development and Innovation Processes, Porvoo, Finland, 5–7 March 2008.

- Batlle-Montserrat, J.; Blat, J.; Abadal, E. Local e-government benchlearning: Impact analysis and applicability to smart cities benchmarking. Inf. Polity 2016, 21, 43–59.

- Torbjorn, S. Regional and institutional innovation in a peripheral economy: An analysis of a pioneer industrial endeavour and benchlearning between Norway and Canada. In Proceedings of the Regional Development and Innovation Processes, Porvoo, Finland, 5–7 March 2008.

- DiBella, A.J.; Nevis, E.C.; Gould, J.M. Understanding organizational learning capability. J. Manag. Stud. 1996, 33, 361–379.

- Goh, S. Toward a learning organization: The strategic building blocks. SAM Adv. Manag. J. 1998, 63, 15–22.

- Hult, G.T.M.; Ferrell, O. Global organizational learning capacity in purchasing: Construct and measurement. J. Bus. Res. 1997, 40, 97–111.

- Popper, M.; Lipshitz, R. Organizational learning mechanisms and a structural and cultural approach to organizational learning. J. Appl. Behav. Sci. 1998, 34, 161–179.

- Goh, S.; Richards, G. Benchmarking the learning capability of organizations. Eur. Manag. J. 1997, 15, 575–583.

- Chiva, R.; Alegre, J.; Lapiedra, R. Measuring organisational learning capability among the workforce. Int. J. Manpow. 2007, 28, 224–242.

- Camps, J.; Alegre, J.; Torres, F. Towards a methodology to assess organizational learning capability: A study among faculty members. Int. J. Manpow. 2011, 32, 687–703.

- Kassim, N.A.; Shoid, M.S.M. Organizational learning capabilities and knowledge performance in Universiti Teknologi Mara (UiTM) Library, Malaysia. World Appl. Sci. J. 2013, 21, 93–97.

- Jerez-Gomez, P.; Céspedes-Lorente, J.; Valle-Cabrera, R. Organizational learning capability: A proposal of measurement. J. Bus. Res. 2005, 58, 715–725.

- Infrastructure and Projects Authority. Benchmarking Capability Tool. Available online: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/888966/6.6408_IPA_Benchmarking_Capability_Tool_v7_web.pdf (accessed on 30 March 2022).

- Liu, Y.-D. Implementing and evaluating performance measurement initiative in public leisure facilities: An action research project. Syst. Pract. Action Res. 2009, 22, 15–30.

- Hooper, P.; Greenall, A. Exploring the potential for environmental performance benchmarking in the airline sector. Benchmarking Int. J. 2005, 12, 151–165.

- Nieva, V.; Sorra, J. Safety culture assessment: A tool for improving patient safety in healthcare organizations. BMJ Qual. Saf. 2003, 12, ii17–ii23.

- Kurnia, S.; Rahim, M.M.; Samson, D.; Prakash, S. Sustainable Supply Chain Management Capability Maturity: Framework Development and Initial Evaluation. In Proceedings of the European Conference on Information Systems (ECIS), Tel Aviv, Israel, 9–11 June 2014.

- Acocella, I. The focus groups in social research: Advantages and disadvantages. Qual. Quant. 2012, 46, 1125–1136.

- Morgan, D. Focus groups. Annu. Rev. Sociol. 1996, 22, 129–152.

- Wang, R.; Wiesemes, R. Enabling and supporting remote classroom teaching observation: Live video conferencing uses in initial teacher education. Technol. Pedagog. Educ. 2012, 21, 351–360.

- Bryman, A. Social Research Methods; Oxford University Press: New York, NY, USA, 2012.

- Hoskisson, R.E.; Hitt, M.A.; Johnson, R.A.; Moesel, D.D. Construct validity of an objective (entropy) categorical measure of diversification strategy. Strateg. Manag. J. 1993, 14, 215–235.

- Freytag, P.; Hollensen, S. The process of benchmarking, benchlearning and benchaction. TQM Mag. 2001, 13, 25–34.

- Noordhoek, M. Municipal Benchmarking: Organisational Learning and Network Performance in the Public Sector; Aston University: Birmingham, UK, 2013.

- Bridge-IT. Deliverable D.2 “Benchlearning Methodology and Data Gathering Template”, Greek Tax Agency Benchlearning and Evaluation Project. Gov3, for the Greek Information Society Observatory, Athens. Available online: https://www.yumpu.com/en/document/view/44486911/benchlearning-methodology-and-data-gathering-template (accessed on 30 March 2022).

- Ringle, C.; Wende, S.; Becker, J. SmartPLS 3. Available online: http://www.smartpls.com (accessed on 1 October 2021).

- Hair, J.; Hult, T.; Ringle, C.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM), 2nd ed.; Sage Publications: Thousand Oaks, CA, USA, 2017.

- Hadi, S.; Baskaran, S. Examining sustainable business performance determinants in Malaysia upstream petroleum industry. J. Clean. Prod. 2021, 294, 126231.

- Oyewobi, L.O.; Adedayo, O.F.; Olorunyomi, S.O.; Jimoh, R. Social media adoption and business performance: The mediating role of organizational learning capability (OLC). J. Facil. Manag. 2021, 19, 413–436.

- Hair, J.; Matthews, L.; Matthews, R.; Sarstedt, M. PLS-SEM or CB-SEM: Updated guidelines on which method to use. Int. J. Multivar. Data Anal. 2017, 1, 107–123.

- Lowry, P.B.; Gaskin, J. Partial least squares (PLS) structural equation modeling (SEM) for building and testing behavioral causal theory: When to choose it and how to use it. IEEE Trans. Prof. Commun. 2014, 57, 123–146.

- Cenfetelli, R.; Bassellier, G. Interpretation of formative measurement in information systems research. MIS Q. 2009, 33, 689–707.

- Chin, W.W. The partial least squares approach to structural equation modeling. In Modern Methods for Business Research; Marcoulides, G.A., Ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1998; pp. 295–336.

- Mihail, D.M.; Kloutsiniotis, P.V. Modeling patient care quality: An empirical high-performance work system approach. Pers. Rev. 2016, 45, 1176–1199.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Al-Ahbabi, S.; Singh, S.K.; Gaur, S.S.; Balasubramanian, S. A knowledge management framework for enhancing public sector performance. Int. J. Knowl. Manag. Stud. 2017, 8, 329–350.

- Venkatraman, N. Strategic orientation of business enterprises: The construct, dimensionality, and measurement. Manag. Sci. 1989, 35, 942–962.

| Question | Statement |
|---|---|
| Question 17 | There is a culture of continuous searching for new processes and practices and learning from others |
| Question 18 | The organization favors internal best-practice knowledge sharing |
| Question 19 | The organizational culture favors continuous improvement |
| Question 20 | Customer (internal and external) satisfaction is very important in all parts of the organization |

| | Loadings | Cronbach’s Alpha | Composite Reliability | Average Variance Extracted (AVE) |
|---|---|---|---|---|
| BMK1 | 0.896 | | | |
| BMK2 | 0.927 | | | |
| BMK3 | 0.934 | | | |
| BMK4 | 0.833 | | | |
| BMK | | 0.920 | 0.943 | 0.807 |
| KTI1 | 0.850 | | | |
| KTI2 | 0.771 | | | |
| KTI3 | 0.869 | | | |
| KTI4 | 0.771 | | | |
| KTI | | 0.836 | 0.889 | 0.667 |
| MC2 | 0.823 | | | |
| MC3 | 0.834 | | | |
| MC4 | 0.937 | | | |
| MC5 | 0.723 | | | |
| MC | | 0.858 | 0.900 | 0.694 |
| OE1 | 0.735 | | | |
| OE2 | 0.838 | | | |
| OE3 | 0.890 | | | |
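
The composite reliability and AVE columns can be reproduced from the standardized outer loadings with the formulas commonly used in PLS-SEM: AVE = Σλ² / n and composite reliability = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The sketch below applies them to the BMK, KTI and MC loadings in the table; the function and class names are hypothetical. Because the published loadings are rounded to three decimals, results can differ from the reported values in the third decimal (for BMK the formula gives about 0.944 rather than 0.943). Cronbach's alpha requires item-level data, so it is not computed here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ConvergentValidity:
    ave: float                    # average variance extracted
    composite_reliability: float  # composite reliability (rho_c)


def convergent_validity(loadings: list[float]) -> ConvergentValidity:
    """AVE and composite reliability from standardized outer loadings."""
    if not loadings:
        raise ValueError("need at least one loading")
    squared = [x * x for x in loadings]
    ave = sum(squared) / len(loadings)
    # For a standardized indicator, error variance = 1 - loading^2.
    error_variance = sum(1.0 - s for s in squared)
    explained = sum(loadings) ** 2
    cr = explained / (explained + error_variance)
    return ConvergentValidity(ave=ave, composite_reliability=cr)


# Outer loadings copied from the table above (the OE rows are incomplete there).
LOADINGS = {
    "BMK": [0.896, 0.927, 0.934, 0.833],
    "KTI": [0.850, 0.771, 0.869, 0.771],
    "MC": [0.823, 0.834, 0.937, 0.723],
}

if __name__ == "__main__":
    for name, lam in LOADINGS.items():
        res = convergent_validity(lam)
        print(f"{name}: AVE={res.ave:.3f}, CR={res.composite_reliability:.3f}")
```

Commonly cited PLS-SEM rules of thumb (for example, in Hair et al.'s primer) are an AVE of at least 0.50 and a composite reliability of at least 0.70; the BMK, KTI and MC values in the table exceed both.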

| | BMKC | KTI | MC | OE | SP |
|---|---|---|---|---|---|
| BMKC | 0.898 | | | | |
| KTI | 0.813 | 0.817 | | | |
| MC | 0.829 | 0.821 | 0.833 | | |
| OE | 0.818 | 0.773 | 0.756 | 0.822 | |
| SP | 0.816 | 0.726 | 0.708 | 0.713 | 0.934 |
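
This matrix appears to follow the Fornell-Larcker layout: its diagonal matches the square root of each construct's AVE from the loadings table (√0.807 ≈ 0.898 for BMKC, √0.667 ≈ 0.817 for KTI, √0.694 ≈ 0.833 for MC), and the entries below the diagonal are inter-construct correlations. Under that criterion, each construct's √AVE should exceed its correlations with every other construct. The minimal sketch below, with hypothetical names, checks the values exactly as printed and lists any pair whose correlation is not below the smaller of the two diagonal values.

```python
CONSTRUCTS = ["BMKC", "KTI", "MC", "OE", "SP"]

# Lower triangle as printed: diagonal = sqrt(AVE), below diagonal = correlations.
TABLE = [
    [0.898],
    [0.813, 0.817],
    [0.829, 0.821, 0.833],
    [0.818, 0.773, 0.756, 0.822],
    [0.816, 0.726, 0.708, 0.713, 0.934],
]


def fornell_larcker_violations(names, lower):
    """Return (row construct, column construct, correlation) for every pair
    whose correlation is >= the smaller of the two sqrt(AVE) values."""
    diag = [row[i] for i, row in enumerate(lower)]
    flagged = []
    for i, row in enumerate(lower):
        for j in range(i):  # strictly below the diagonal
            corr = row[j]
            if corr >= min(diag[i], diag[j]):
                flagged.append((names[i], names[j], corr))
    return flagged


if __name__ == "__main__":
    print(fornell_larcker_violations(CONSTRUCTS, TABLE))
```

With the values as printed, the function returns one pair, MC and KTI, because their correlation (0.821) is slightly above the KTI diagonal value (0.817); all other pairs satisfy the criterion.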

| | BMKC | KTI | MC | OE | SP |
|---|---|---|---|---|---|
| BMKC | | | | | |
| KTI | 0.850 | | | | |
| MC | 0.808 | 0.804 | | | |
| OE | 0.802 | 0.887 | 0.871 | | |
| SP | 0.876 | 0.807 | 0.788 | 0.784 | |
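
This second matrix appears to report heterotrait-monotrait (HTMT) ratios, the discriminant-validity criterion of Henseler, Ringle and Sarstedt listed in the references. For constructs i and j, HTMT is the mean correlation between the items of i and the items of j, divided by the geometric mean of the average within-construct item correlations of i and of j; Henseler et al. discuss cut-offs of 0.85 and 0.90. The item-level correlations are not reported here, so the `htmt` function below is demonstrated on a small hypothetical correlation matrix, while the `above` helper screens the published values. All names are illustrative assumptions.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def htmt(corr, items_i, items_j):
    """HTMT ratio from an indicator correlation matrix.

    corr: square list-of-lists of indicator correlations.
    items_i, items_j: indicator indices of the two constructs
    (each construct needs at least two indicators).
    """
    hetero = mean(corr[a][b] for a in items_i for b in items_j)
    mono_i = mean(corr[a][b] for a, b in combinations(items_i, 2))
    mono_j = mean(corr[a][b] for a, b in combinations(items_j, 2))
    return hetero / sqrt(mono_i * mono_j)


# Hypothetical correlations for items a1, a2 (construct A) and b1, b2 (construct B).
CORR = [
    [1.0, 0.6, 0.3, 0.3],
    [0.6, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.4],
    [0.3, 0.3, 0.4, 1.0],
]

# HTMT values as printed in the table above.
HTMT_TABLE = {
    ("KTI", "BMKC"): 0.850, ("MC", "BMKC"): 0.808, ("MC", "KTI"): 0.804,
    ("OE", "BMKC"): 0.802, ("OE", "KTI"): 0.887, ("OE", "MC"): 0.871,
    ("SP", "BMKC"): 0.876, ("SP", "KTI"): 0.807, ("SP", "MC"): 0.788,
    ("SP", "OE"): 0.784,
}


def above(table, threshold):
    """Construct pairs whose HTMT value is strictly above the threshold."""
    return [pair for pair, value in table.items() if value > threshold]


if __name__ == "__main__":
    print(f"HTMT(A, B) = {htmt(CORR, [0, 1], [2, 3]):.3f}")
    print("above 0.90:", above(HTMT_TABLE, 0.90))
    print("above 0.85:", above(HTMT_TABLE, 0.85))
```

For the hypothetical matrix, HTMT = 0.3 / √(0.6 × 0.4) ≈ 0.612. On the published values, no pair exceeds 0.90, while three pairs (OE/KTI, OE/MC and SP/BMKC) exceed the stricter 0.85 cut-off.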

| Indicator | VIF |
|---|---|
| BMK1 | 2.985 |
| BMK2 | 4.190 |
| BMK3 | 4.349 |
| BMK4 | 2.226 |
| KTI1 | 2.590 |
| KTI2 | 2.432 |
| KTI3 | 2.747 |
| KTI4 | 2.370 |
| MC2 | 2.718 |
| MC3 | 1.993 |
| MC4 | 4.265 |
| MC5 | 1.634 |
| OE1 | 1.789 |
| OE2 | 2.510 |
| OE3 | 2.743 |
| OE4 | 1.837 |
| SP1 | 4.341 |
| SP2 | 4.650 |
| SP3 | 2.478 |
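
The variance inflation factor of an indicator is VIF_j = 1 / (1 − R_j²), where R_j² comes from regressing indicator j on the other indicators in the set. An equivalent route, used in the pure-Python sketch below, is to take the diagonal of the inverse of the indicators' correlation matrix. The two-indicator matrix in the example is hypothetical, and the helper names are illustrative. Hair et al.'s PLS-SEM primer treats VIF values of 5 or above as a sign of critical collinearity; all values in the table are below 5 (the maximum is 4.650 for SP2).

```python
def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Build the augmented matrix [A | I].
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular (perfect collinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(corr):
    """VIF of each indicator: the diagonal of the inverse correlation matrix."""
    inv = invert(corr)
    return [inv[i][i] for i in range(len(corr))]


if __name__ == "__main__":
    # Hypothetical pair of indicators correlated at r = 0.8.
    print(vifs([[1.0, 0.8], [0.8, 1.0]]))
```

For two indicators the result reduces to 1 / (1 − r²), so r = 0.8 gives a VIF of about 2.78 for each; uncorrelated indicators give exactly 1.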

| Path | Original Sample (O) | Sample Mean (M) | Standard Deviation (STDEV) | T Statistics (\|O/STDEV\|) | p Values |
|---|---|---|---|---|---|
| BMKC -> BLNC | 0.272 | 0.273 | 0.009 | 28.917 | 0.000 |
| KTI -> OLC | 0.284 | 0.284 | 0.012 | 24.673 | 0.000 |
| MC -> OLC | 0.292 | 0.293 | 0.011 | 27.254 | 0.000 |
| OE -> OLC | 0.274 | 0.274 | 0.010 | 27.626 | 0.000 |
| OLC -> BLNC | 0.740 | 0.740 | 0.009 | 84.452 | 0.000 |
| SP -> OLC | 0.262 | 0.261 | 0.010 | 26.892 | 0.000 |
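
In this table, STDEV is the standard deviation of each coefficient across bootstrap subsamples, Sample Mean (M) is its bootstrap mean, and the T statistic is |O/STDEV|. The sketch below illustrates the idea under simplifying assumptions: `bootstrap_sd` resamples (x, y) pairs and uses the Pearson correlation, which equals the standardized path coefficient only when there is a single predictor, and `t_and_p` derives a two-tailed p-value from a standard normal distribution, a close approximation to a t distribution at large sample sizes but not necessarily what SmartPLS computed. The function names and the example data are hypothetical.

```python
import random
from math import erfc, sqrt
from statistics import mean, stdev


def pearson(x, y):
    """Pearson correlation, or None if either variable is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    return sxy / sqrt(sxx * syy)


def bootstrap_sd(x, y, n_boot=1000, seed=42):
    """Standard deviation of the correlation across bootstrap resamples."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # draw pairs with replacement
        r = pearson([x[i] for i in idx], [y[i] for i in idx])
        if r is not None:  # skip degenerate resamples
            estimates.append(r)
    return stdev(estimates)


def t_and_p(original, boot_sd):
    """t = |O / STDEV| and a two-tailed p-value under a standard normal."""
    t = abs(original / boot_sd)
    return t, erfc(t / sqrt(2.0))


if __name__ == "__main__":
    x = [1, 2, 3, 4, 5, 6, 7, 8]
    y = [2, 1, 4, 3, 6, 5, 8, 7]
    sd = bootstrap_sd(x, y)
    print(t_and_p(pearson(x, y), sd))
```

Recomputing from the rounded table entries gives somewhat different t values: 0.272 / 0.009 ≈ 30.2 rather than the reported 28.917, because STDEV is shown to three decimals (the reported t value implies a standard deviation of about 0.0094).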

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kessopoulou, E.; Gotzamani, K.; Xanthopoulou, S.; Tsiotras, G. Conceptualizing and Validating a Model for Benchlearning Capability: Results from the Greek Public Sector. Sustainability 2023, 15, 1383. https://doi.org/10.3390/su15021383
