Passenger Occupancy Estimation in Vehicles: A Review of Current Methods and Research Challenges

Abstract

1. Introduction

2. Invasive Passenger Detection Methods

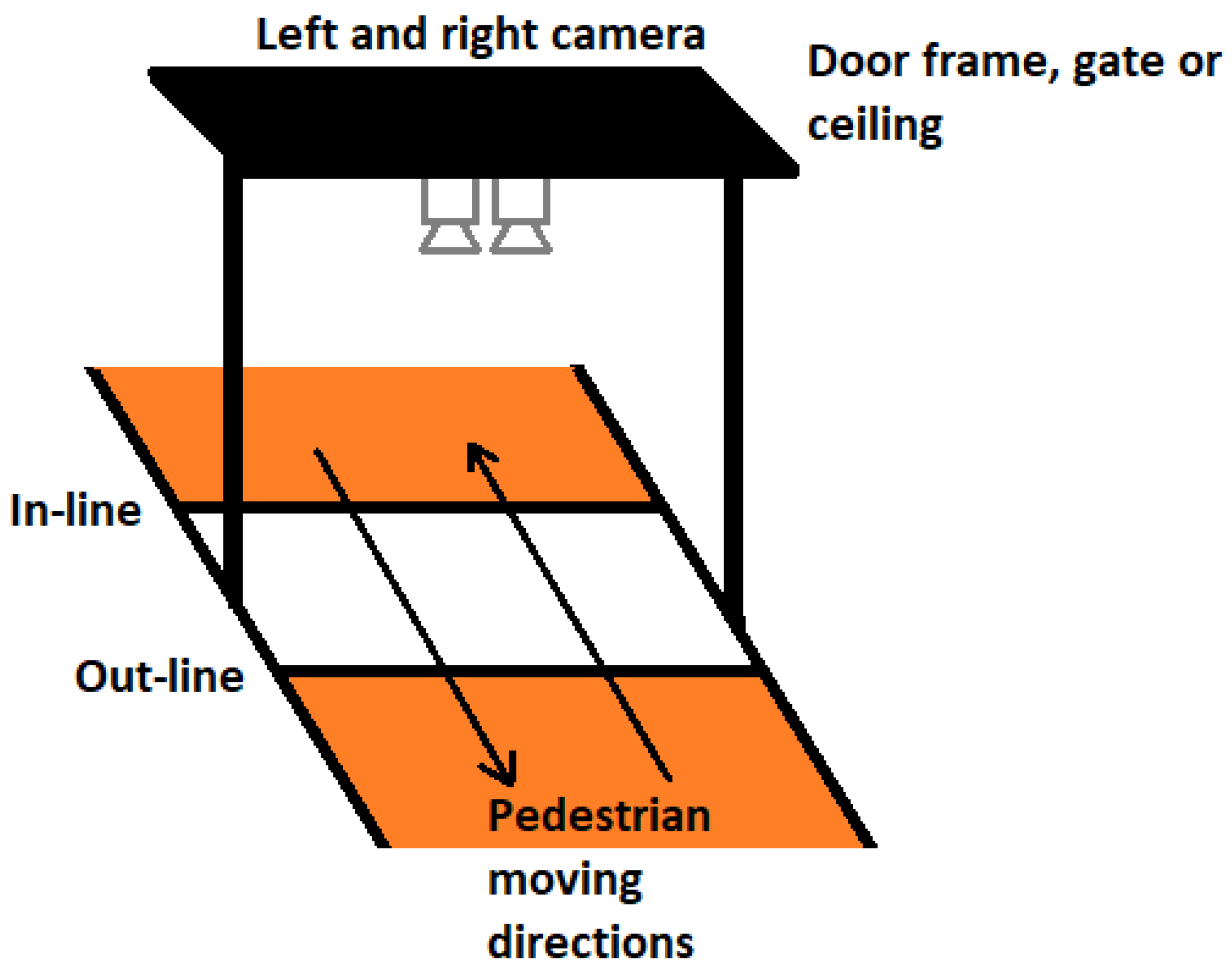

2.1. Vision-Based Systems

2.2. Pressure Sensing

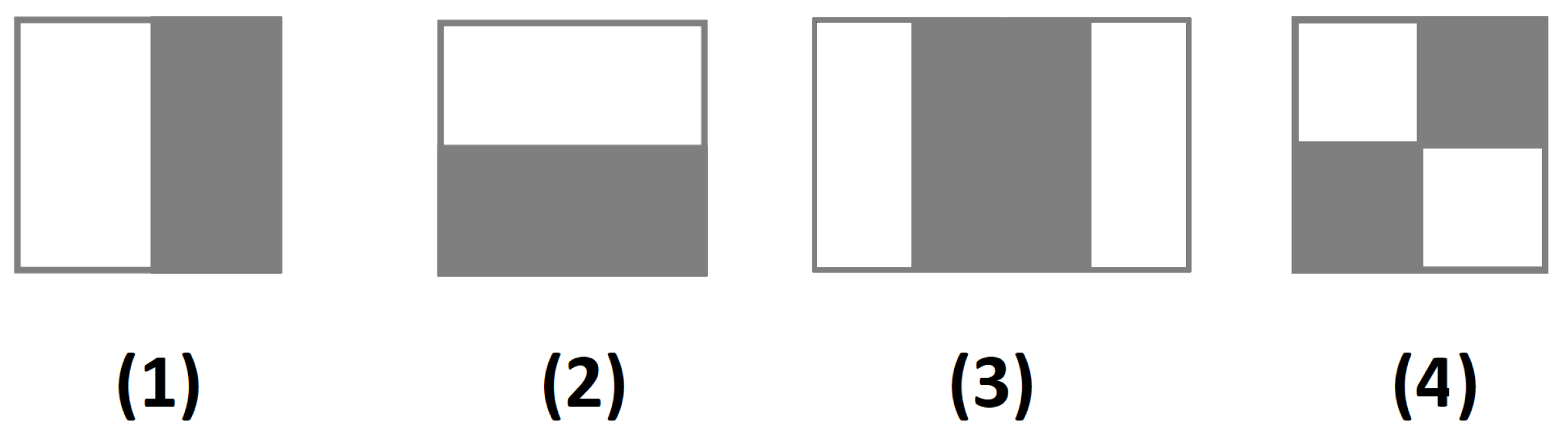

2.3. Capacitive Sensing

2.4. Seat Belt Detection with Vision Systems

2.5. Ultrasonic and Radar

2.6. Smart Cards, Identity Documents, and Cell Phones

3. Noninvasive Occupancy Estimation Methods

4. Counting Passengers at Public Transport Stations

5. Passenger Discrimination for Airbag Suppression

6. Mathematical Models

6.1. You Only Look Once

6.2. Cascade Classifiers

7. Discussion

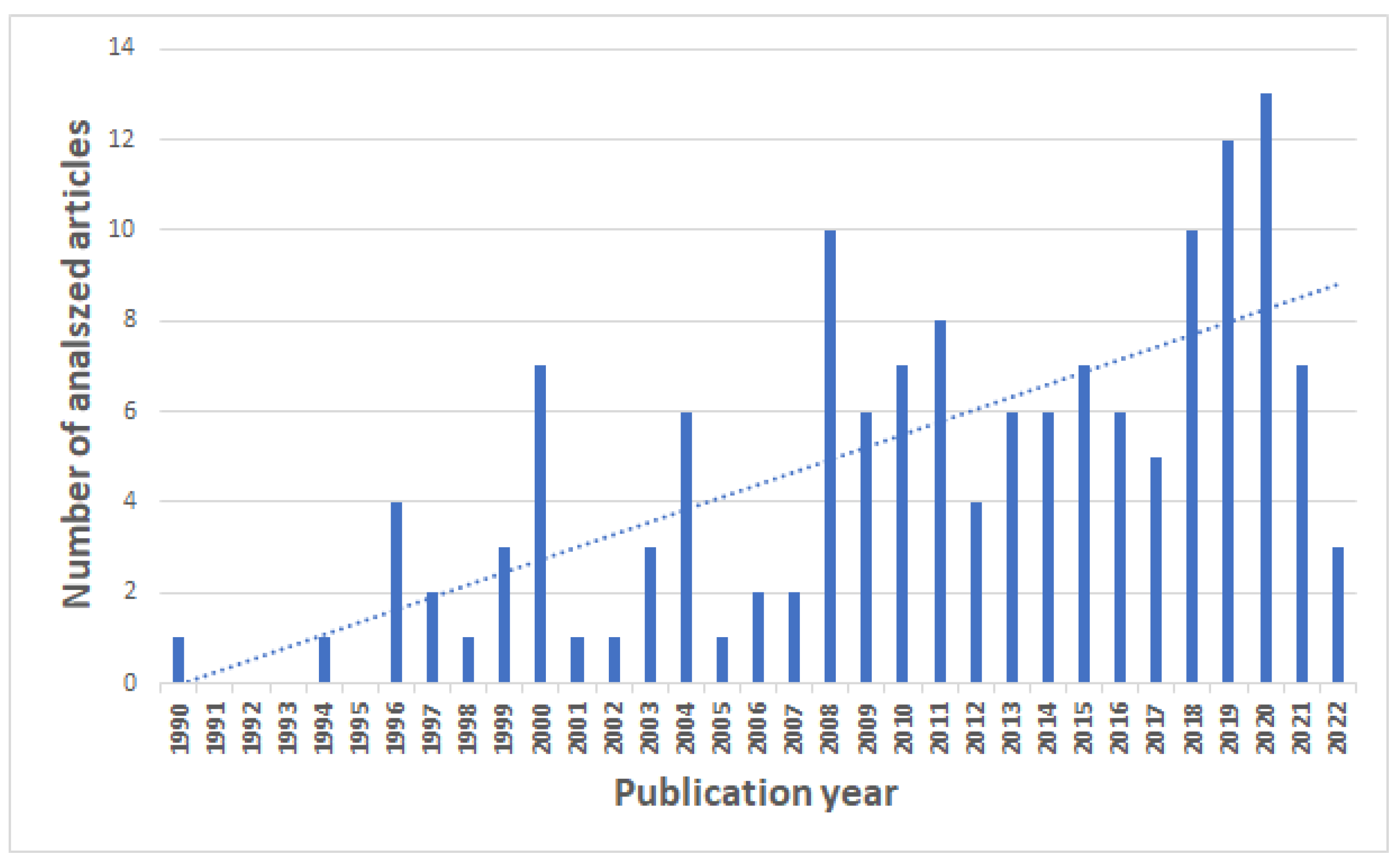

7.1. Paper Selection Analysis

7.2. Limitations and Research Challenges

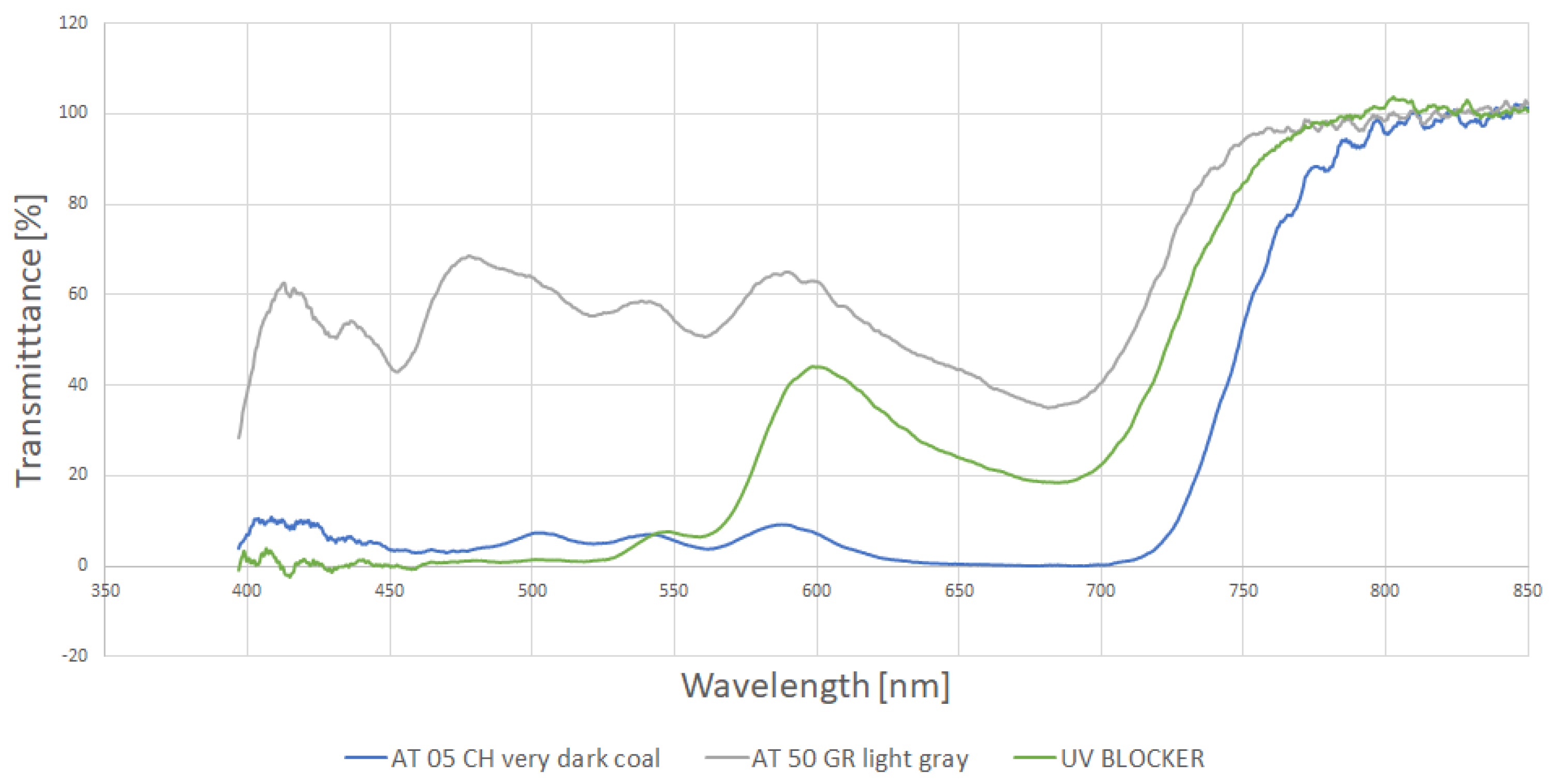

7.3. Effects of Window Tinting on Passenger Detection

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Billheimer, J.W.; Kaylor, K.; Shade, C. Use of Videotape in HOV Lane Surveillance and Enforcement; Technical Report; Federal Highway Administration: Los Altos, CA, USA, 1990. [Google Scholar]

- Pavlidis, I.; Fritz, B.; Symosek, P.; Papanikolopoulos, N.; Morellas, V.; Sfarzo, R. Automatic passenger counting in the high occupancy vehicle (HOV) lanes. In Proceedings of the 9th ITS America Meeting, Washington, DC, USA, 17 November 1999. [Google Scholar]

- Gautama, S.; Lacroix, S.; Devy, M. Evaluation of stereo matching algorithms for occupant detection. In Proceedings of the Proceedings International Workshop on Recognition, Analysis, and Tracking of Faces and Gestures in Real-Time Systems. In Conjunction with ICCV’99 (Cat. No.PR00378), Corfu, Greece, 26–27 September 1999; pp. 177–184. [Google Scholar] [CrossRef]

- Faber, P. Seat occupation detection inside vehicles. In Proceedings of the 4th IEEE Southwest Symposium on Image Analysis and Interpretation, Austin, TX, USA, 2–4 April 2000; pp. 187–191. [Google Scholar] [CrossRef]

- Faber, P. Image-based passenger detection and localization inside vehicles. Int. Arch. Photogramm. Remote Sens. 2000, 33, 230–237. [Google Scholar]

- Devy, M.; Giralt, A.; Marin-Hernandez, A. Detection and classification of passenger seat occupancy using stereovision. In Proceedings of the IEEE Intelligent Vehicles Symposium 2000 (Cat. No.00TH8511), Dearborn, MI, USA, 5 October 2000; pp. 714–719. [Google Scholar] [CrossRef]

- Schoenmackers, T.; Trivedi, M. Real-time stereo-based vehicle occupant posture determination for intelligent airbag deployment. In Proceedings of the IEEE IV2003 Intelligent Vehicles Symposium. Proceedings (Cat. No.03TH8683), Columbus, OH, USA, 9–11 June 2003; pp. 570–574. [Google Scholar] [CrossRef]

- Klomark, M. Occupant Detection Using Computer Vision. Master’s Thesis, Linköping University, Linköping, Sweden, 2000. Available online: https://www.diva-portal.org/smash/get/diva2:303034/FULLTEXT01.pdf (accessed on 17 April 2022).

- Morris, T.; Morellas, V.; Canelon-Suarez, D.; Papanikolopoulos, N.P.; Department of Computer Science University of Minnesota. Sensing for Hov/Hot Lanes Enforcement; Technical Report; University of Minnesota, Department of Computer Science and Engineering: Minneapolis, MN, USA, 2017. [Google Scholar]

- Kim, M.; Jang, J.W. A Study of The Unmanned System Design of Occupant Number Counter of Inside A Vehicle for High Occupancy Vehicle Lanes. In Proceedings of the Korean Institute of Information and Commucation Sciences Conference, Jeju, Republic of Korea, 18–20 October 2018; pp. 49–51. [Google Scholar]

- Schijns, S. Automated vehicle occupancy monitoring systems for HOV/HOT facilities. In Enterprise Pooled Fund Research Program; McCormick Rankin Corporation: Mississauga, ON, Canada, 2004. [Google Scholar]

- Petitjean, P.; Purson, E.; Aliaga, F.; Stein, C.; Taraschini, L.; Klein, E.; Bacelar, A.; Lacoste, F.R.; Gobert, O. Experimentation of a Sensor for Measuring the Occupancy Rate of Vehicles on the Network of the Direction des Routes of Ile-de-France (DiRIF); Cerema-Centre d’Etudes et d’Expertise sur les Risques, l’Environnement; Hyper Articles en Ligne: Lyon, France, 2019. [Google Scholar]

- Wender, S.; Loehlein, O. A cascade detector approach applied to vehicle occupant monitoring with an omni-directional camera. In Proceedings of the IEEE Intelligent Vehicles Symposium, Parma, Italy, 14–17 June 2004; pp. 345–350. [Google Scholar] [CrossRef]

- Géczy, A.; De Jorge Melgar, R.; Bonyár, A.; Harsányi, G. Passenger detection in cars with small form-factor IR sensors (Grid-eye). In Proceedings of the 2020 IEEE 8th Electronics System-Integration Technology Conference (ESTC), Tønsberg, Norway, 15–18 September 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Makrushin, A.; Langnickel, M.; Schott, M.; Vielhauer, C.; Dittmann, J.; Seifert, K. Car-seat occupancy detection using a monocular 360° NIR camera and advanced template matching. In Proceedings of the 2009 16th International Conference on Digital Signal Processing, Santorini, Greece, 5–7 July 2009; pp. 1–6. [Google Scholar] [CrossRef]

- Yebes, J.J.; Alcantarilla, P.F.; Bergasa, L.M.; González, A. Occupant monitoring system for traffic control in HOV lanes and parking lots. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Proceedings of Workshop on Emergent Cooperative Technologies in Intelligent Transportation Systems, Madeira Island, Portugal, 19–22 September 2010. [Google Scholar]

- Yebes, J.; Fernández Alcantarilla, P.; Bergasa, L. Occupant Monitoring System for Traffic Control Based on Visual Categorization. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 5–9 June 2011; pp. 212–217. [Google Scholar] [CrossRef]

- Erlik Nowruzi, F.; El Ahmar, W.A.; Laganiere, R.; Ghods, A.H. In-Vehicle Occupancy Detection With Convolutional Networks on Thermal Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–17 June 2019. [Google Scholar]

- Amanatiadis, A.; Karakasis, E.; Bampis, L.; Ploumpis, S.; Gasteratos, A. ViPED: On-road vehicle passenger detection for autonomous vehicles. Robot. Auton. Syst. 2019, 112, 282–290. [Google Scholar] [CrossRef]

- Kumar, A.; Gupta, A.; Santra, B.; Lalitha, K.; Kolla, M.; Gupta, M.; Singh, R. VPDS: An AI-Based Automated Vehicle Occupancy and Violation Detection System. Proc. Aaai Conf. Artif. Intell. 2019, 33, 9498–9503. [Google Scholar] [CrossRef]

- Zhu, F.; Song, J.; Yang, R.; Gu, J. Research on Counting Method of Bus Passenger Flow Based on Kinematics of Human Body. In Proceedings of the 2008 International Conference on Computer Science and Software Engineering, Wuhan, China, 12–14 December 2008; Volume 1, pp. 201–204. [Google Scholar] [CrossRef]

- Zhu, F.; Gu, J.; Yang, R.; Zhao, Z. Research on Counting Method of Bus Passenger Flow Based on Kinematics of Human Body and SVM. In Proceedings of the 2008 Second International Symposium on Intelligent Information Technology Application, Shanghai, China, 20–22 December 2008; Volume 3, pp. 14–18. [Google Scholar] [CrossRef]

- Luo, D.; Lu, J.; Guo, G. An Indirect Occupancy Detection and Occupant Counting System Using Motion Sensors; Technical Report; Chongqing University, Ford Motor Company: Chongqing, China, 2017. [Google Scholar]

- Satz, A.; Hammerschmidt, D. New Modeling amp; Evaluation Approach for Capacitive Occupant Detection in Vehicles. In Proceedings of the 2008 IEEE International Behavioral Modeling and Simulation Workshop, San Jose, CA, USA, 25–26 September 2008; pp. 25–28. [Google Scholar] [CrossRef]

- George, B.; Zangl, H.; Bretterklieber, T.; Brasseur, G. A Novel Seat Occupancy Detection System based on Capacitive Sensing. In Proceedings of the 2008 IEEE Instrumentation and Measurement Technology Conference, Victoria, BC, Canada, 12–15 May 2008; pp. 1515–1519. [Google Scholar] [CrossRef]

- Zangl, H.; Bretterklieber, T.; Hammerschmidt, D.; Werth, T. Seat Occupancy Detection Using Capacitive Sensing Technology. In Proceedings of the SAE World Congress and Exhibition; SAE International: Warrendale, PA, USA, 2008. [Google Scholar] [CrossRef]

- Tumpold, D.; Satz, A. Contactless seat occupation detection system based on electric field sensing. In Proceedings of the 2009 35th Annual Conference of IEEE Industrial Electronics, Porto, Portugal, 3–5 November 2009; pp. 1823–1828. [Google Scholar] [CrossRef]

- George, B.; Zangl, H.; Bretterklieber, T.; Brasseur, G. A combined inductive-capacitive proximity sensor and its application to seat occupancy sensing. In Proceedings of the 2009 IEEE Instrumentation and Measurement Technology Conference, Singapore, 5–7 May 2009; pp. 13–17. [Google Scholar] [CrossRef]

- George, B.; Zangl, H.; Bretterklieber, T.; Brasseur, G. A Combined Inductive–Capacitive Proximity Sensor for Seat Occupancy Detection. IEEE Trans. Instrum. Meas. 2010, 59, 1463–1470. [Google Scholar] [CrossRef]

- Satz, A.; Hammerschmidt, D.; Tumpold, D. Capacitive passenger detection utilizing dielectric dispersion in human tissues. Sens. Actuators A Phys. 2009, 152, 1–4. [Google Scholar] [CrossRef]

- George, B.; Zangl, H.; Bretterklieber, T.; Brasseur, G. Seat Occupancy Detection Based on Capacitive Sensing. IEEE Trans. Instrum. Meas. 2009, 58, 1487–1494. [Google Scholar] [CrossRef]

- Walter, M.; Eilebrecht, B.; Wartzek, T.; Leonhardt, S. The smart car seat: Personalized monitoring of vital signs in automotive applications. Pers. Ubiquitous Comput. 2011, 15, 707–715. [Google Scholar] [CrossRef]

- Guo, H.; Lin, H.; Zhang, S.; Li, S. Image-based seat belt detection. In Proceedings of the 2011 IEEE International Conference on Vehicular Electronics and Safety, Beijing, China, 10–12 July 2011; pp. 161–164. [Google Scholar] [CrossRef]

- Chen, Y.; Tao, G.; Ren, H.; Lin, X.; Zhang, L. Accurate seat belt detection in road surveillance images based on CNN and SVM. Neurocomputing 2018, 274, 80–87. [Google Scholar] [CrossRef]

- Naik, D.B.; Lakshmi, G.S.; Sajja, V.R.; Venkatesulu, D.; Rao, J.N. Driver’s Seat Belt Detection Using CNN. Turk. J. Comput. Math. Educ. 2021, 12, 776–785. [Google Scholar]

- Kashevnik, A.; Ali, A.; Lashkov, I.; Shilov, N. Seat Belt Fastness Detection Based on Image Analysis from Vehicle In-abin Camera. In Proceedings of the 2020 26th Conference of Open Innovations Association (FRUCT), Yaroslavl, Russia, 23–24 April 2020; pp. 143–150. [Google Scholar] [CrossRef]

- Li, W.; Lu, J.; Li, Y.; Zhang, Y.; Wang, J.; Li, H. Seatbelt detection based on cascade Adaboost classifier. In Proceedings of the 2013 6th International Congress on Image and Signal Processing (CISP), Hangzhou, China, 16–18 December 2013; Volume 2, pp. 783–787. [Google Scholar] [CrossRef]

- Huh, J.h.; Cho, S.h. Seat Belt Reminder System In Vehicle Using IR-UWB Radar. In Proceedings of the 2018 International Conference on Network Infrastructure and Digital Content (IC-NIDC), Guiyang, China, 22–24 August 2018; pp. 256–259. [Google Scholar] [CrossRef]

- Elihos, A.; Alkan, B.; Balci, B.; Artan, Y. Comparison of Image Classification and Object Detection for Passenger Seat Belt Violation Detection Using NIR amp; RGB Surveillance Camera Images. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Yavari, E.; Jou, H.; Lubecke, V.; Boric-Lubecke, O. Doppler radar sensor for occupancy monitoring. In Proceedings of the 2013 IEEE Topical Conference on Power Amplifiers for Wireless and Radio Applications, Austin, TX, USA, 20 January 2013; pp. 145–147. [Google Scholar] [CrossRef]

- Lazaro, A.; Lazaro, M.; Villarino, R.; Girbau, D. Seat-Occupancy Detection System and Breathing Rate Monitoring Based on a Low-Cost mm-Wave Radar at 60 GHz. IEEE Access 2021, 9, 115403–115414. [Google Scholar] [CrossRef]

- Ma, Y.; Zeng, Y.; Jain, V. CarOSense: Car Occupancy Sensing with the Ultra-Wideband Keyless Infrastructure. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2020, 4, 91–119. [Google Scholar] [CrossRef]

- Alizadeh, M.; Abedi, H.; Shaker, G. Low-cost low-power in-vehicle occupant detection with mm-wave FMCW radar. In Proceedings of the 2019 IEEE SENSORS, Montreal, QC, Canada, 27–30 October 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Hoffmann, M.; Tatarinov, D.; Landwehr, J.; Diewald, A.R. A four-channel radar system for rear seat occupancy detection in the 24 GHz ISM band. In Proceedings of the 2018 11th German Microwave Conference (GeMiC), Freiburg, Germany, 12–14 March 2018; pp. 95–98. [Google Scholar] [CrossRef]

- Abedi, H.; Luo, S.; Shaker, G. On the Use of Low-Cost Radars and Machine Learning for In-Vehicle Passenger Monitoring. In Proceedings of the 2020 IEEE 20th Topical Meeting on Silicon Monolithic Integrated Circuits in RF Systems (SiRF), San Antonio, TX, USA, 26–29 January 2020; pp. 63–65. [Google Scholar] [CrossRef]

- Liu, J.; Mu, H.; Vakil, A.; Ewing, R.; Shen, X.; Blasch, E.; Li, J. Human Occupancy Detection via Passive Cognitive Radio. Sensors 2020, 20, 4248. [Google Scholar] [CrossRef] [PubMed]

- Song, H.; Yoo, Y.; Shin, H.C. In-Vehicle Passenger Detection Using FMCW Radar. In Proceedings of the 2021 International Conference on Information Networking (ICOIN), Jeju Island, Republic of Korea, 13–16 January 2021; pp. 644–647. [Google Scholar] [CrossRef]

- Tang, C.; Li, W.; Vishwakarma, S.; Chetty, K.; Julier, S.; Woodbridge, K. Occupancy Detection and People Counting Using WiFi Passive Radar. In Proceedings of the 2020 IEEE Radar Conference (RadarConf20), Florence, Italy, 21–25 September 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Sterner, H.; Aichholzer, W.; Haselberger, M. Development of an Antenna Sensor for Occupant Detection in Passenger Transportation. Procedia Eng. 2012, 47, 178–183. [Google Scholar] [CrossRef]

- Diewald, A.R.; Landwehr, J.; Tatarinov, D.; Di Mario Cola, P.; Watgen, C.; Mica, C.; Lu-Dac, M.; Larsen, P.; Gomez, O.; Goniva, T. RF-based child occupation detection in the vehicle interior. In Proceedings of the 2016 17th International Radar Symposium (IRS), Krakow, Poland, 10–12 May 2016; pp. 1–4. [Google Scholar] [CrossRef]

- Wu, L.; Wang, Y. A Low-Power Electric-Mechanical Driving Approach for True Occupancy Detection Using a Shuttered Passive Infrared Sensor. IEEE Sensors J. 2019, 19, 47–57. [Google Scholar] [CrossRef]

- Rahmatulloh, A.; Nursuwars, F.M.S.; Darmawan, I.; Febrizki, G. Applied Internet of Things (IoT): The Prototype Bus Passenger Monitoring System Using PIR Sensor. In Proceedings of the 2020 8th International Conference on Information and Communication Technology (ICoICT), Yogyakarta, Indonesia, 24–26 June 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Smith, B.L.; Yook, D. Investigation of Enforcement Techniques and Technologies to Support High-Occupancy Vehicle and High-Occupancy Toll Operations; Technical Report; Virginia Transportation Research Council: Charlottesville, VA, USA, 2009. [Google Scholar]

- Pattanusorn, W.; Nilkhamhang, I.; Kittipiyakul, S.; Ekkachai, K.; Takahashi, A. Passenger estimation system using Wi-Fi probe request. In Proceedings of the 2016 7th International Conference of Information and Communication Technology for Embedded Systems (IC-ICTES), Bangkok, Thailand, 20–22 March 2016; pp. 67–72. [Google Scholar] [CrossRef]

- Mehmood, U.; Moser, I.; Jayaraman, P.P.; Banerjee, A. Occupancy Estimation using WiFi: A Case Study for Counting Passengers on Busses. In Proceedings of the 2019 IEEE 5th World Forum on Internet of Things (WF-IoT), Limerick, Ireland, 15–18 April 2019; pp. 165–170. [Google Scholar] [CrossRef]

- Bonyár, A.; Géczy, A.; Harsanyi, G.; Hanák, P. Passenger Detection and Counting Inside Vehicles For eCall- a Review on Current Possibilities. In Proceedings of the 2018 IEEE 24th International Symposium for Design and Technology in Electronic Packaging (SIITME), Iasi, Romania, 25–28 October 2018; pp. 221–225. [Google Scholar] [CrossRef]

- Lupinska-Dubicka, A.; Tabędzki, M.; Adamski, M.; Rybnik, M.; Szymkowski, M.; Omieljanowicz, M.; Gruszewski, M.; Klimowicz, A.; Rubin, G.; Zienkiewicz, L. Vehicle Passengers Detection for Onboard eCall-Compliant Devices. In Proceedings of the Advances in Soft and Hard Computing; Pejaś, J., El Fray, I., Hyla, T., Kacprzyk, J., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 408–419. [Google Scholar]

- Kalikova, J.; Krcal, J.; Koukol, M. Detection of persons in a vehicle using IR cameras and RFID technologies. In Proceedings of the 2015 Smart Cities Symposium Prague (SCSP), Prague, Czech Republic, 24–25 June 2015; pp. 1–3. [Google Scholar] [CrossRef]

- Birch, P.M.; Young, R.C.D.; Claret-Tournier, F.; Chatwin, C.R. Automated vehicle occupancy monitoring. Opt. Eng. 2004, 43, 1828–1832. [Google Scholar] [CrossRef]

- Mecocci, A.; Bartolini, F.; Cappellini, V. Image sequence analysis for counting in real time people getting in and out of a bus. Signal Process. 1994, 35, 105–116. [Google Scholar] [CrossRef]

- Silva, B.; Martins, P.; Batista, J. Vehicle Occupancy Detection for HOV/HOT Lanes Enforcement. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 311–318. [Google Scholar] [CrossRef]

- Pavlidis, I.; Symosek, P.; Fritz, B.; Bazakos, M.; Papanikolopoulos, N. Automatic detection of vehicle occupants: The imaging problemand its solution. Mach. Vis. Appl. 2000, 11, 313–320. [Google Scholar] [CrossRef]

- Pavlidis, I.; Morellas, V.; Papanikolopoulos, N. A vehicle occupant counting system based on near-infrared phenomenology and fuzzy neural classification. IEEE Trans. Intell. Transp. Syst. 2000, 1, 72–85. [Google Scholar] [CrossRef]

- Lee, J.; Byun, J.; Lim, J.; Lee, J. A Framework for Detecting Vehicle Occupancy Based on the Occupant Labeling Method. J. Adv. Transp. 2020, 2020, 8870211. [Google Scholar] [CrossRef]

- Lee, J.; Lee, D.; Jang, S.; Choi, D.; Jang, J. Analysis of Deep Learning Model for the Development of an Optimized Vehicle Occupancy Detection System. J. Korea Inst. Inf. Commun. Eng. 2021, 25, 146–151. [Google Scholar]

- Wood, J.W.; Gimmestad, G.G.; Roberts, D.W. Covert camera for screening of vehicle interiors and HOV enforcement. In Proceedings of the Sensors, and Command, Control, Communications, and Intelligence (C3I) Technologies for Homeland Defense and Law Enforcement II, Orlando, FL, USA, 21–25 April 2003; Volume 5071, pp. 411–420. [Google Scholar] [CrossRef]

- Lee, Y.S.; Bae, C.S. An Vision System for Automatic Detection of Vehicle Passenger. J. Korea Inst. Inf. Commun. Eng. 2005, 9, 622–626. [Google Scholar]

- Hao, X.; Chen, H.; Li, J. An Automatic Vehicle Occupant Counting Algorithm Based on Face Detection. In Proceedings of the 2006 8th international Conference on Signal Processing, Guilin, China, 16–20 November 2006; Volume 3. [Google Scholar] [CrossRef]

- Hao, X.; Chen, H.; Yao, C.; Yang, N.; Bi, H.; Wang, C. A near-infrared imaging method for capturing the interior of a vehicle through windshield. In Proceedings of the 2010 IEEE Southwest Symposium on Image Analysis Interpretation (SSIAI), Austin, TX, USA, 23–25 May 2010; pp. 109–112. [Google Scholar] [CrossRef]

- Hao, X.; Chen, H.; Yang, Y.; Yao, C.; Yang, H.; Yang, N. Occupant Detection through Near-Infrared Imaging. J. Appl. Sci. Eng. 2011, 14, 275–283. [Google Scholar] [CrossRef]

- Yuan, X.; Meng, Y.; Hao, X.; Chen, H.; Wei, X. A vehicle occupant counting system using near-infrared (NIR) image. In Proceedings of the 2012 IEEE 11th International Conference on Signal Processing, Beijing, China, 21–25 October 2012; Volume 1, pp. 716–719. [Google Scholar] [CrossRef]

- Tyrer, J.R.; Lobo, L.M. An optical method for automated roadside detection and counting of vehicle occupants. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2008, 222, 765–774. [Google Scholar] [CrossRef]

- Pérez-Jiménez, A.J.; Guardiola, J.L.; Pérez-Cortés, J.C. High Occupancy Vehicle Detection. In Proceedings of the Structural, Syntactic, and Statistical Pattern Recognition; da Vitoria Lobo, N., Kasparis, T., Roli, F., Kwok, J.T., Georgiopoulos, M., Anagnostopoulos, G.C., Loog, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 782–789. [Google Scholar]

- Alves, J.F. High Occupancy Vehicle (HOV) Lane Enforcement. U.S. Patent 7,786,897, 31 August 2010. [Google Scholar]

- Fan, Z.; Islam, A.S.; Paul, P.; Xu, B.; Mestha, L.K. Front Seat Vehicle Occupancy Detection via Seat Pattern Recognition. U.S. Patent 8,611,608, 17 December 2013. [Google Scholar]

- Yuan, X.; Meng, Y.; Wei, X. A method of location the vehicle windshield region for vehicle occupant detection system. In Proceedings of the 2012 IEEE 11th International Conference on Signal Processing, Beijing, China, 21–25 October 2012; Volume 1, pp. 712–715. [Google Scholar] [CrossRef]

- Artan, Y.; Paul, P. Occupancy Detection in Vehicles Using Fisher Vector Image Representation. arXiv 2013, arXiv:cs.CV/1312.6024. [Google Scholar]

- Xu, B.; Paul, P.; Artan, Y.; Perronnin, F. A machine learning approach to vehicle occupancy detection. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 1232–1237. [Google Scholar] [CrossRef]

- Artan, Y.; Paul, P.; Perronin, F.; Burry, A. Comparison of face detection and image classification for detecting front seat passengers in vehicles. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Steamboat Springs, CO, USA, 24–26 March 2014; pp. 1006–1012. [Google Scholar] [CrossRef]

- Artan, Y.; Bulan, O.; Loce, R.P.; Paul, P. Driver Cell Phone Usage Detection from HOV/HOT NIR Images. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 225–230. [Google Scholar] [CrossRef]

- Artan, Y.; Bulan, O.; Loce, R.P.; Paul, P. Passenger Compartment Violation Detection in HOV/HOT Lanes. IEEE Trans. Intell. Transp. Syst. 2016, 17, 395–405. [Google Scholar] [CrossRef]

- Balci, B.; Alkan, B.; Elihos, A.; Artan, Y. Front Seat Child Occupancy Detection Using Road Surveillance Camera Images. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 1927–1931. [Google Scholar] [CrossRef]

- Xu, B.; Paul, P.; Perronnin, F. Vehicle Occupancy Detection Using Passenger to Driver Feature Distance. U.S. Patent 9,760,783, 12 September 2017. [Google Scholar]

- Wang, Y.R.; Xu, B.; Paul, P. Determining a Pixel Classification Threshold for Vehicle Occupancy Detection. U.S. Patent 9,202,118, 1 December 2015. [Google Scholar]

- Xu, B.; Bulan, O.; Kumar, J.; Wshah, S.; Kozitsky, V.; Paul, P. Comparison of Early and Late Information Fusion for Multi-camera HOV Lane Enforcement. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015; pp. 913–918. [Google Scholar] [CrossRef]

- Wshah, S.; Xu, B.; Bulan, O.; Kumar, J.; Paul, P. Deep learning architectures for domain adaptation in HOV/HOT lane enforcement. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–7. [Google Scholar] [CrossRef]

- Berri, R.A.; Silva, A.G.; Parpinelli, R.S.; Girardi, E.; Arthur, R. A pattern recognition system for detecting use of mobile phones while driving. In Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014; Volume 2, pp. 411–418. [Google Scholar]

- Seshadri, K.; Juefei-Xu, F.; Pal, D.K.; Savvides, M.; Thor, C.P. Driver cell phone usage detection on Strategic Highway Research Program (SHRP2) face view videos. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 35–43. [Google Scholar] [CrossRef]

- Xu, B.; Loce, R.P. A machine learning approach for detecting cell phone usage. In Proceedings of the Video Surveillance and Transportation Imaging Applications 2015, San Francisco, NC, USA, 10–12 February 2015; Volume 9407, pp. 70–77. [Google Scholar] [CrossRef]

- Cornett, D.; Yen, A.; Nayola, G.; Montez, D.; Johnson, C.R.; Baird, S.T.; Santos-Villalobos, H.; Bolme, D.S. Through the windshield driver recognition. Electron. Imaging 2019, 2019, 140–141. [Google Scholar] [CrossRef]

- Ruby, M.; Bolme, D.S.; Brogan, J.; III, D.C.; Delgado, B.; Jager, G.; Johnson, C.; Martinez-Mendoza, J.; Santos-Villalobos, H.; Srinivas, N. The Mertens Unrolled Network (MU-Net): A high dynamic range fusion neural network for through the windshield driver recognition. In Proceedings of the Autonomous Systems: Sensors, Processing, and Security for Vehicles and Infrastructure 2020, Baltimore, MD, USA, 27 April–8 May 2020; Volume 11415, pp. 72–83. [Google Scholar] [CrossRef]

- Lumentut, J.S.; Gunawan, F.E.; Diana. Evaluation of Recursive Background Subtraction Algorithms for Real-Time Passenger Counting at Bus Rapid Transit System. Procedia Comput. Sci. 2015, 59, 445–453. [Google Scholar] [CrossRef]

- Deparis, J.; Khoudour, L.; Meunier, B.; Duvieubourg, L. A device for counting passengers making use of two active linear cameras: Comparison of algorithms. In Proceedings of the 1996 IEEE International Conference on Systems, Man and Cybernetics. Information Intelligence and Systems (Cat. No.96CH35929), Beijing, China, 14–17 October 1996; Volume 3, pp. 1629–1634. [Google Scholar] [CrossRef]

- Khoudour, L.; Duvieubourg, L.; Deparis, J.P. Real-time passenger counting by active linear cameras. In Proceedings of the Electronic Imaging: Science and Technology, 1996, San Jose, CA, USA, 22–25 January 2001; Volume 2661, pp. 106–117. [Google Scholar] [CrossRef]

- Gerland, H.E.; Sutter, K. Automatic passenger counting (apc): Infra-red motion analyzer for accurate counts in stations and rail, light-rail and bus operations. In Proceedings of the 1999 APTA Bus Conference, ProceedingsAmerican Public Transportation Association, Cleveland, OH, USA, 2–6 May 1999. [Google Scholar]

- Bernini, N.; Bombini, L.; Buzzoni, M.; Cerri, P.; Grisleri, P. An embedded system for counting passengers in public transportation vehicles. In Proceedings of the 2014 IEEE/ASME 10th International Conference on Mechatronic and Embedded Systems and Applications (MESA), Senigallia, Italy, 10–12 September 2014; pp. 1–6. [Google Scholar] [CrossRef]

- Qiuyu, Z.; Li, T.; Yiping, J.; Wei-jun, D. A novel approach of counting people based on stereovision and DSP. In Proceedings of the 2010 The 2nd International Conference on Computer and Automation Engineering (ICCAE), Singapore, 26–28 February 2010; Volume 1, pp. 81–84. [Google Scholar] [CrossRef]

- Li, F.; Yang, F.W.; Liang, H.W.; Yang, W.M. Automatic Passenger Counting System for Bus Based on RGB-D Video. In Proceedings of the 2nd Annual International Conference on Electronics, Electrical Engineering and Information Science (EEEIS 2016), Xi’an, China, 2–4 December 2016; pp. 209–220. [Google Scholar] [CrossRef]

- Yang, T.; Zhang, Y.; Shao, D.; Li, Y. Clustering method for counting passengers getting in a bus with single camera. Opt. Eng. 2010, 49, 1–10. [Google Scholar] [CrossRef]

- Perng, J.W.; Wang, T.Y.; Hsu, Y.W.; Wu, B.F. The design and implementation of a vision-based people counting system in buses. In Proceedings of the 2016 International Conference on System Science and Engineering (ICSSE), Puli, Taiwan, 7–9 July 2016; pp. 1–3. [Google Scholar] [CrossRef]

- Yahiaoui, T.; Khoudour, L.; Meurie, C. Real-time passenger counting in buses using dense stereovision. J. Electron. Imaging 2010, 19, 1–11. [Google Scholar] [CrossRef]

- Lengvenis, P.; Simutis, R.; Vaitkus, V.; Maskeliunas, R. Application of Computer Vision Systems for Passenger Counting in Public Transport. Elektron. Elektrotechnika 2013, 19, 69–72. [Google Scholar] [CrossRef]

- Chen, C.H.; Chang, Y.C.; Chen, T.Y.; Wang, D.J. People Counting System for Getting In/Out of a Bus Based on Video Processing. In Proceedings of the 2008 Eighth International Conference on Intelligent Systems Design and Applications, Kaohsuing, Taiwan, 26–28 November 2008; Volume 3, pp. 565–569. [Google Scholar] [CrossRef]

- Hussain Khan, S.; Haroon Yousaf, M.; Murtaza, F.; Velastin Carroza, S.A. Passenger Detection and Counting during Getting on and off from Public Transport Systems. NED Univ. J. Res. 2020, 2, 35–46. [Google Scholar] [CrossRef]

- Liciotti, D.; Cenci, A.; Frontoni, E.; Mancini, A.; Zingaretti, P. An Intelligent RGB-D Video System for Bus Passenger Counting. In Proceedings of the Intelligent Autonomous Systems 14, Shanghai, China, 3–7 July 2016; Chen, W., Hosoda, K., Menegatti, E., Shimizu, M., Wang, H., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 473–484. [Google Scholar]

- Van Oosterhout, T.; Bakkes, S.; Kröse, B.J. Head Detection in Stereo Data for People Counting and Segmentation. In Proceedings of the VISAPP, Vilamoura, Portugal, 5–7 March 2011; pp. 620–625. [Google Scholar]

- Chen, J.; Wen, Q.; Zhuo, C.; Mete, M. Automatic head detection for passenger flow analysis in bus surveillance videos. In Proceedings of the 2012 5th International Congress on Image and Signal Processing, Chongqing, China, 16–18 October 2012; pp. 143–147. [Google Scholar] [CrossRef]

- Chen, Y.; Zhang, L.; Wang, J. Passenger detection for subway transportation based on video. In Proceedings of the 2014 10th International Conference on Natural Computation (ICNC), Xiamen, China, 19–21 August 2014; pp. 720–724. [Google Scholar] [CrossRef]

- Qian, X.; Yu, X.; Fa, C. The passenger flow counting research of subway video based on image processing. In Proceedings of the 2017 29th Chinese Control And Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 5195–5198. [Google Scholar] [CrossRef]

- Nasir, A.; Gharib, N.; Jaafar, H. Automatic Passenger Counting System Using Image Processing Based on Skin Colour Detection Approach. In Proceedings of the 2018 International Conference on Computational Approach in Smart Systems Design and Applications (ICASSDA), Kuching, Malaysia, 15–17 August 2018; pp. 1–8. [Google Scholar] [CrossRef]

- Nakashima, H.; Arai, I.; Fujikawa, K. Proposal of a Method for Estimating the Number of Passengers with Using Drive Recorder and Sensors Equipped in Buses. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 5396–5398. [Google Scholar] [CrossRef]

- Nakashima, H.; Arai, I.; Fujikawa, K. Passenger Counter Based on Random Forest Regressor Using Drive Recorder and Sensors in Buses. In Proceedings of the 2019 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kyoto, Japan, 11–15 March 2019; pp. 561–566. [Google Scholar] [CrossRef]

- Zhang, S.; Wu, Y.; Men, C.; Li, X. Tiny YOLO optimization oriented bus passenger object detection. Chin. J. Electron. 2020, 29, 132–138. [Google Scholar] [CrossRef]

- Belloc, M.; Velastin, S.; Fernandez, R.; Jara, M. Detection of people boarding/alighting a metropolitan train using computer vision. In Proceedings of the 9th International Conference on Pattern Recognition Systems (ICPRS 2018), Valparaiso, Chile, 22–24 May 2018. [Google Scholar]

- Amin, I.; Taylor, A.; Junejo, F.; Al-Habaibeh, A.; Parkin, R. Automated people-counting by using low-resolution infrared and visual cameras. Measurement 2008, 41, 589–599. [Google Scholar] [CrossRef]

- Kuchár, P.; Pirník, R.; Tichý, T.; Rástočný, K.; Skuba, M.; Tettamanti, T. Noninvasive Passenger Detection Comparison Using Thermal Imager and IP Cameras. Sustainability 2021, 13, 2928. [Google Scholar] [CrossRef]

- Klauser, D.; Bärwolff, G.; Schwandt, H. A TOF-based automatic passenger counting approach in public transportation systems. AIP Conf. Proc. 2015, 1648, 850113. [Google Scholar] [CrossRef]

- Mukherjee, S.; Saha, B.; Jamal, I.; Leclerc, R.; Ray, N. A novel framework for automatic passenger counting. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 2969–2972. [Google Scholar] [CrossRef]

- Meister, J.B.; Walcott, B.L. Occupant and Infant Seat Detection in a Vehicle Supplemental Restraint System. U.S. Patent 5,570,903, 15 March 1996. [Google Scholar]

- Paula, G. Sensors help make air bags safer. Mech. Eng. 1997, 119, 62. [Google Scholar]

- Corrado, A.P.; Decker, S.W.; Benbow, P.K. Automotive Occupant Sensor System and Method of Operation by Sensor Fusion. U.S. Patent 5,482,314, 19 October 1995. [Google Scholar]

- Jinno, K.; Ofuji, M.; Saito, T.; Sekido, S. Occupant sensing utilizing perturbation of electric fields. SAE 971051. In Proceedings of the SAE International Congress and Exposition, Detroit, MI, USA, 24–27 February 1997; pp. 117–129, Reprinted from: Anthropomorphic Dummies and Crash Instrumentation Sensors-SP-1261, Occupant Detection and Sensing for Smarter Air Bag Systems-PT-107. [Google Scholar]

- Krumm, J.; Kirk, G. Video occupant detection for airbag deployment. In Proceedings of the Fourth IEEE Workshop on Applications of Computer Vision (WACV’98) (Cat. No.98EX201), Princeton, NJ, USA, 19–21 October 1998; pp. 30–35. [Google Scholar] [CrossRef]

- Saito, T.; Ofuji, M.; Jinno, K.; Sugino, M. Passenger Detecting System and Passenger Detecting Method. U.S. Patent 6,043,743, 28 March 2000. [Google Scholar]

- Reyna, R.; Giralt, A.; Esteve, D. Head detection inside vehicles with a modified SVM for safer airbags. In Proceedings of the 2001 IEEE Intelligent Transportation Systems (ITSC 2001) (Cat. No.01TH8585), Oakland, CA, USA, 25–29 August 2001; pp. 268–272. [Google Scholar] [CrossRef]

- Lu, Y.; Marschner, C.; Eisenmann, L.; Sauer, S. The new generation of the BMW child seat and occupant detection system SBE 2. Int. J. Automot. Technol. 2002, 3, 53–56. [Google Scholar]

- Farmer, M.; Jain, A. Occupant classification system for automotive airbag suppression. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; Volume 1, p. I. [Google Scholar] [CrossRef]

- Farmer, M.E.; Jain, A.K. Smart Automotive Airbags: Occupant Classification and Tracking. IEEE Trans. Veh. Technol. 2007, 56, 60–80. [Google Scholar] [CrossRef]

- Fritzsche, M.; Prestele, C.; Becker, G.; Castillo-Franco, M.; Mirbach, B. Vehicle occupancy monitoring with optical range-sensors. In Proceedings of the IEEE Intelligent Vehicles Symposium, 2004, Parma, Italy, 14–17 June 2004; pp. 90–94. [Google Scholar] [CrossRef]

- Krotosky, S.; Trivedi, M. Occupant posture analysis using reflectance and stereo image for “smart” airbag deployment. In Proceedings of the IEEE Intelligent Vehicles Symposium, 2004, Parma, Italy, 14–17 June 2004; pp. 698–703. [Google Scholar] [CrossRef]

- Trivedi, M.; Cheng, S.Y.; Childers, E.; Krotosky, S. Occupant posture analysis with stereo and thermal infrared video: Algorithms and experimental evaluation. IEEE Trans. Veh. Technol. 2004, 53, 1698–1712. [Google Scholar] [CrossRef]

- Trivedi, M.M.; Gandhi, T.; McCall, J. Looking-In and Looking-Out of a Vehicle: Computer-Vision-Based Enhanced Vehicle Safety. IEEE Trans. Intell. Transp. Syst. 2007, 8, 108–120. [Google Scholar] [CrossRef]

- Yang, Y.; Zao, G.; Sheng, J. Occupant Pose and Location Detect for Intelligent Airbag System Based on Computer Vision. In Proceedings of the 2008 Fourth International Conference on Natural Computation, Jinan, China, 18–20 October 2008; Volume 6, pp. 179–182. [Google Scholar] [CrossRef]

- Vamsi, M.; Soman, K. In-Vehicle Occupancy Detection And Classification Using Machine Learning. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I. [Google Scholar] [CrossRef]

- Hou, Y.L.; Pang, G.K.H. People Counting and Human Detection in a Challenging Situation. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2011, 41, 24–33. [Google Scholar] [CrossRef]

- Tichý, T.; Brož, J.; Bělinová, Z.; Pirník, R. Analysis of Predictive Maintenance for Tunnel Systems. Sustainability 2021, 13, 3977. [Google Scholar] [CrossRef]

- Tichý, T.; Brož, J.; Bělinová, Z.; Kouba, P. Predictive diagnostics usage for telematic systems maintenance. In Proceedings of the 2020 Smart City Symposium Prague (SCSP), Prague, Czech Republic, 25 June 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Brož, J.; Tichý, T.; Angelakis, V.; Bělinová, Z. Usage of V2X Applications in Road Tunnels. Appl. Sci. 2022, 12, 4624. [Google Scholar] [CrossRef]

- Tichý, T.; Švorc, D.; Růžička, M.; Bělinová, Z. Thermal Feature Detection of Vehicle Categories in the Urban Area. Sustainability 2021, 13, 6873. [Google Scholar] [CrossRef]

- Tichy, T.; Broz, J.; Smerda, T.; Lokaj, Z. Application of Cybersecurity Approaches within Smart Cities and ITS. In Proceedings of the 2022 Smart City Symposium Prague (SCSP), Prague, Czech Republic, 26–27 May 2022; pp. 1–7. [Google Scholar] [CrossRef]

- Růžička, J.; Tichý, T.; Hajčiarová, E. Big Data Application for Urban Transport Solutions. In Proceedings of the 2022 Smart City Symposium Prague (SCSP), Prague, Czech Republic, 26–27 May 2022; pp. 1–7. [Google Scholar] [CrossRef]

- Daley, W.; Arif, O.; Stewart, J.; Wood, J.; Usher, C.; Hanson, E.; Turgeson, J.; Britton, D. Sensing System Development for HOV/HOT (High Occupancy Vehicle) Lane Monitoring; Technical Report; Georgia Department of Transportation: Atlanta, GA, USA, 2011. [Google Scholar]

- Daley, W.; Usher, C.; Arif, O.; Stewart, J.; Wood, J.; Turgeson, J.; Hanson, E. Detection of vehicle occupants in HOV lanes: Exploration of image sensing for detection of vehicle occupants. In Proceedings of the Video Surveillance and Transportation Imaging Applications, Burlingame, CA, USA, 4–7 February 2013; Volume 8663, pp. 215–229. [Google Scholar] [CrossRef]

- Wang, H.; Zhao, M.; Beurier, G.; Wang, X. Automobile driver posture monitoring systems: A review. China J. Highw. Transp. 2019, 2, 1–18. [Google Scholar]

| Method | Advantages | Drawbacks | References | Invasivity (I = invasive, N = noninvasive) |
|---|---|---|---|---|
| NIR systems | No driver distraction. Sees through window tinting. Suitable for both I and N applications. | Detection is harder and less robust. The system can become expensive. Produces false positives with pets, luggage, etc. | [2,13,15,63,66,69,70,71,72,80] | I + N |
| Vision systems | Good execution speed, reliability, and good results during daytime. | Low tolerance to varying light conditions. The system can become expensive and is affected by the weather when used noninvasively. Sensitive to colours. | [1,16,60,68,103,115,125,128,133,137] | I + N |
| Stereo cameras | Insensitive to illumination and suited to irregular environments. | Trade-off between reliability and computation time; incorrect detection for some passenger poses. | [3,4,5,6,7,96,97,101,123,130,131] | I |
| Thermal images | Can also detect back-seat passengers when used invasively. | Not suitable for outdoor applications; problems when the surroundings have a similar temperature. | [18,116,131] | I * |
| Radar | Requires less power than camera sensors. Emits fewer EM waves than WiFi. Suitable for low-light conditions. Contactless solution. | Requires additional communication channels for sending results. | [38,40,41,43,44,45,47] | I |
| WiFi | Does not require interaction with passengers. | Requires mobile phones with WiFi enabled. Usually overestimates when many vehicles are nearby. | [48,54,55] | I + N |
| Seat belts | System is already installed in vehicles. | Requires an additional communication channel and access to car electronics. | [56] | I |
| Capacitive systems | Reliable and fast; robust to external interference. | Requires an additional communication channel and has problems when passengers are grounded. | [24,25,26,27,28,29,30,31] | I |
| PIR | Does not count irrelevant objects; small dimensions. | Requires an additional communication channel; inconsistent, with varying accuracy. | [52,95] | I |
| TOF | Requires fewer sensors; does not trigger false positives with rucksacks or clothes. | Insufficient results at low resolution; tendency to yield lower counts. | [56,117,129] | I |
| Electric fields | Low-cost system with hidden electrodes. Not affected by heat, light, dust, or humidity. | Covering the electrodes disables the whole system. | [49,122] | I |
| Floor pressure sensing | Can easily distinguish direction of movement and is relatively cheap. | Suitable only for public transport, with difficult sensor placement. | [21,22] | I |

| Reference | Image Type | Camera Placement and Orientation | Window Tinting | Use | Notes |
|---|---|---|---|---|---|
| [78] | NIR > 750 nm | Only windshield. | Not mentioned. | HOV/HOT | Speeds up to 130 km/h. |
| [1] | Monochrome | Windshield + side images. | Theoretically describes the limitations of tinting in the 1990s. | HOV/HOT and violations | Tinting causes uncertainty in estimation. |
| [2,62,63] | NIR 1.4–1.7 μm | Windshield + side images. | Presents graphs of wavelength transmission depending on the glass used, tinting, or purity. | HOV/HOT | An overview of detection methods. |
| [86] | NIR > 750 nm | Various. | Not mentioned. | HOV/HOT | Comparison of different CNN architectures. |
| [65] | Visible spectrum | Only windshield. | Not mentioned. | Violations | Comparison of different CNN architectures. |
| [77] | NIR > 750 nm | Only windshield. | Not mentioned. | HOV/HOT | Binary classification. |
| [64] | NIR > 750 nm | Two side views. | Uses NIR to overcome tinting. | HOV/HOT | Detection of 1+, 2+ and 3+. |
| [73] | Visible spectrum | Only windshield. | Not mentioned. | HOV/HOT | Synthesis of face and seat belt detection. |
| [19] | Visible spectrum | Windshield and rear glass. | Not mentioned. | Emergency situations | Camera placed on the vehicle. |
| [20] | NIR > 750 nm | Windshield + side images. | Mentions the tinting effect but does not discuss it further. | HOV/HOT and violations | Violations in HOV 3+ scenario. |
| [75] | Visible spectrum | Only windshield. | Not mentioned. | HOV/HOT | Seat edge detection. |
| [84] | Multi-band IR | Only windshield. | Not mentioned. | HOV/HOT | Detection in HOV 2+ scenario. |
| [9] | NIR > 750 nm | Windshield + side images. | SCW (solar-controlled window). | HOV/HOT | |
| [70] | NIR 850 nm | Only windshield. | Experiments with tinting. Required adaptive lighting. | HOV/HOT | Unstable image quality. |
| [90,91] | Three-camera setup | Only windshield. | Efforts to improve systems with NIR. | Biometric recognition | HDR fusion of images. |
| [81] | NIR > 750 nm | Windshield + side images. | Not mentioned. | HOV/HOT | Passenger and violation detection. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kuchár, P.; Pirník, R.; Janota, A.; Malobický, B.; Kubík, J.; Šišmišová, D. Passenger Occupancy Estimation in Vehicles: A Review of Current Methods and Research Challenges. Sustainability 2023, 15, 1332. https://doi.org/10.3390/su15021332
