Abstract

In a vehicle-to-everything scenario, a fuel cell bus can obtain accurate information about the surrounding traffic and solve its energy management problem quickly while keeping its own driving safe and efficient. This paper proposes an energy management strategy (EMS) based on deep reinforcement learning (DRL) that takes speed control into account in complex traffic scenarios. A two-lane urban expressway is built as the traffic scenario in the SUMO simulation software (Version 1.15.0). A speed control and energy management system for a hydrogen fuel cell bus is then designed with the soft actor–critic (SAC) algorithm. The system reduces the equivalent hydrogen consumption and the fluctuation of the fuel cell output power while keeping the vehicle's driving safe, efficient and smooth. Compared with the SUMO–IDM car-following model, the average speed is unchanged, and the average acceleration and the average acceleration change decrease by 10.22% and 11.57%, respectively. Compared with deep deterministic policy gradient (DDPG), the average speed increases by 1.18%, and the average acceleration and acceleration change decrease by 4.82% and 5.31%, respectively. For energy management, the hydrogen consumption of the SAC–OPT-based EMS reaches 95.52% of that of the dynamic programming (DP) algorithm, and the fluctuation range is reduced by 32.65%. Compared with the plain SAC strategy, the fluctuation amplitude is reduced by 15.29%, which improves fuel cell durability.

1. Introduction

As environmental pollution and energy shortages become increasingly serious, energy conservation and environmental protection have received growing attention [1,2,3]. The continuous development of new energy vehicle technologies has not only alleviated the environmental problems caused by the automobile industry but has also come to be regarded as a promising field [4,5,6]. Among these technologies, hydrogen fuel cell vehicles offer attractive characteristics such as zero emissions, long endurance, and high durability [7], and breakthroughs in the related technologies have attracted extensive attention from researchers. A good energy management strategy (EMS) can further extend the cruising range of hydrogen fuel cell vehicles and effectively reduce hydrogen consumption [8]. At the same time, vehicle-to-everything technology has greatly improved the ability of vehicles to obtain environmental information [9]. It is an important technical means for fuel cell vehicles to achieve better autonomous driving and energy management [10]. Research on the energy management of hydrogen fuel cell vehicles that incorporates environmental information is therefore an important part of promoting and applying these vehicles [11].

EMS is a key technology for fuel cell buses. It distributes power between the fuel cell and the power battery according to the current vehicle state to improve driving efficiency and energy economy [12,13]. Researchers have studied EMS for fuel cell buses extensively, and the methods can be grouped into rule-based EMS [14], optimization-based EMS [15] and learning-based EMS [16]. Rule-based EMS appeared mostly in early research. Its computational cost is low and it is easy to implement, but it relies on expert experience and cannot handle complex driving conditions effectively [17]. Optimization-based EMS is a popular research direction and includes dynamic programming (DP) [18,19,20], Pontryagin’s minimum principle (PMP) [21,22], model predictive control (MPC) [23], and others.

Optimization-based EMS optimizes the control law to reduce the energy required to drive the vehicle. Fu et al. [24] addressed the impact of fuel cell power fluctuations on fuel cell life with an EMS based on fuzzy control. Their EMS keeps the power fluctuation of the fuel cell within 300 W/s and prolongs the service life of the fuel cell. It was verified under the highway fuel economy test (HFET), urban dynamometer driving schedule (UDDS), and new European driving cycle (NEDC). Saman Ahmadi et al. [25] targeted the high hydrogen consumption of fuel cells and the poor state-of-charge (SOC) retention of power batteries with an EMS based on fuzzy logic control. Their EMS reduces the SOC fluctuations of the power battery while preserving the vehicle's equivalent fuel economy and power performance. Huang et al. [26] proposed an EMS based on PMP to balance fuel cell economy and durability. Wang et al. [27] addressed fuel cell economy with a fuzzy control optimization strategy based on driving cycle recognition. Compared with the traditional fuzzy control strategy, their strategy reduced fuel cell hydrogen consumption by 40.5%. Compared with a fuzzy control strategy tuned by a genetic algorithm, it reduced consumption by 16.55%. This optimized strategy was also verified under the Economic Commission for Europe (ECE) cycle and other driving conditions.

With the development of machine learning, learning-based EMS has gradually become a popular direction in fuel cell bus energy management research, using methods such as support vector machines, neural networks, Markov chains and genetic algorithms. Min et al. [28] used a neural network optimized by a genetic algorithm as an EMS. Their work targets the shortened fuel cell life caused by frequent start–stop cycles and load changes. The optimization avoids unnecessary start–stops and load changes, which reduces energy consumption and extends fuel cell life. Wu et al. [29] proposed a DRL-based fuel cell EMS with continuous state parameters that improves the cost-effectiveness of reinforcement-learning-based strategies. By studying the mean square error and the Huber loss function, their method handles highly random load distributions effectively and makes the EMS more cost-effective. Tang et al. [30] aimed to improve the economy of fuel cell vehicles and prolong fuel cell life. Building on the traditional deep Q network (DQN) and the DQN with prioritized experience replay, they designed a DQN variant with prioritized experience replay. On this basis, they proposed an EMS that trades off fuel economy and battery durability by adjusting the weights of the objective function. Huang et al. [31] addressed the power performance and cruising range of fuel cell vehicles with a two-layer DDPG. They proposed a dual-mode operation scheme for extended-range fuel cell hybrid electric vehicles that achieves optimal power distribution in both modes. The resulting two-layer EMS optimizes both power performance and economy. Huo et al. [32] addressed the economy and durability of fuel cell hybrid electric vehicles with an EMS based on deep Q-learning (DQL) with prioritized experience replay and DDPG. Their strategy incorporates fuel economy and power fluctuation into a multi-objective reward function, which reduces fuel consumption and extends fuel cell life.

These studies have greatly advanced fuel cell bus energy management technology. Optimization-based and learning-based EMSs achieve better optimization performance and accuracy and can reach globally or locally optimal results [33]. However, one problem remains. These studies focus on the fuel cell vehicle itself and are trained and optimized on standard driving cycles, so they do not fully account for uncertain driving conditions. Urban expressways are an important part of urban traffic and have a complex traffic environment because of their large traffic flow and high average speed. A fuel cell bus driving on an expressway must first consider the traffic environment and control its own speed, and only then manage energy at that speed. This is needed for safe and stable driving and for reducing equivalent hydrogen consumption. In this situation, an EMS trained on existing standard driving cycles may not perform well. A better approach is to control the fuel cell bus speed in real time in response to changing traffic information and to optimize its energy management under these conditions. Such an approach generalizes better and has greater practical significance [34].

Advanced driver assistance systems (ADAS) are now relatively mature, and adaptive cruise control (ACC) is widely used for longitudinal vehicle speed control [35]. The self-learning ability of DRL enables more efficient, safe and stable vehicle control in complex traffic scenarios [36]. Wang et al. [37] used deep Q-learning to control a vehicle so that it could merge automatically from a ramp onto a main road while driving safely. Zhu et al. [38] used a deterministic policy gradient (DPG) method to learn from human driving data and proposed a car-following controller. These DRL applications have achieved good results in car-following control. In the speed control of fuel cell buses, however, driving economy is also important and cannot be ignored. Researchers have therefore promoted eco-driving [39], which pays more attention to the interaction between the vehicle and the traffic environment. The goal is for the vehicle to drive safely while also saving energy and protecting the environment [40].

With vehicle-to-everything technology, vehicles can easily obtain information about the surrounding traffic environment, and machine learning methods can address speed control and energy management simultaneously. This paper presents a DRL-based energy management study for hydrogen fuel cell buses that considers speed control in urban expressway scenarios. A two-lane urban expressway is built in the SUMO simulation software. In this traffic environment, the SAC algorithm controls the speed of the hydrogen fuel cell bus so that it runs safely and stably. At the same time, it allocates the fuel cell output power to reduce hydrogen consumption, which improves energy economy. It also reduces fluctuations of the fuel cell output power, which improves fuel cell durability.

The main contributions of this paper are:

- Considering the impact of the traffic environment on vehicle driving and the energy management of hydrogen fuel cell buses, a more complex traffic environment is constructed to improve the generalization of EMS;

- Using the DRL algorithm SAC to control the vehicle speed and the fuel cell output power, which improves driving efficiency, safety and energy economy, and extends fuel cell life by reducing fluctuations of the fuel cell output power;

- The action space of the SAC algorithm is redirected toward a selected high-efficiency interval of the fuel cell output power, which accelerates the convergence of DRL training and improves the performance of the EMS.

The structure of this paper is organized as follows. Section 2 introduces the hydrogen fuel cell bus model, speed control model and traffic environment model; Section 3 introduces the speed control and fuel cell EMS based on DRL; Section 4 analyzes and compares the results; finally, Section 5 gives conclusions.

2. System Model

This paper studies how a hydrogen fuel cell bus driving on an urban expressway can carry out energy management and energy-saving optimization while driving safely and stably. To this end, a model of the hydrogen fuel cell bus and its power system is established, together with a speed control model and a traffic environment model.

2.1. Fuel Cell Bus and Power System Model

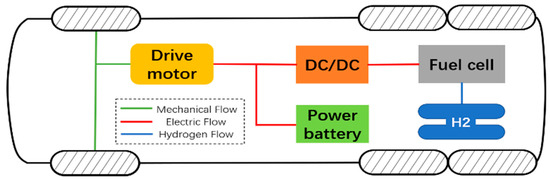

The basic parameters of the fuel cell hybrid electric bus used in this paper are listed in Table 1. The fuel cell system consists mainly of a proton exchange membrane fuel cell and its auxiliary components. The vehicle is powered jointly by the fuel cell and a power battery, as shown in the structure diagram in Figure 1. In this bus, the fuel cell system boosts its output voltage through a DC/DC converter and is then connected in parallel with the power battery pack. Both the fuel cell system and the power battery can transfer energy directly to the drive motor. They can also supply energy simultaneously when the drive motor requires high output power.

Table 1.

Fuel cell hybrid bus vehicle parameters.

Figure 1.

Configuration diagram of fuel cell system.

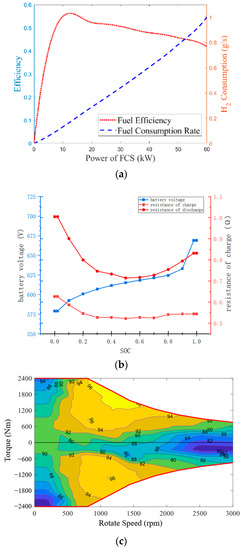

The characteristics of the fuel cell bus power system components are shown in Figure 2. Figure 2a shows the relationship between hydrogen consumption, efficiency and fuel cell power, obtained by interpolating the vehicle’s specific parameter data. Figure 2b shows the internal resistance characteristics of the power battery. Figure 2c shows the relationship between the torque, speed and efficiency of the drive motor.

Figure 2.

Fuel cell model relationship. (a) Relationship between fuel cell efficiency and hydrogen consumption; (b) Internal resistance characteristics and voltage characteristics of power battery; (c) Drive motor efficiency.

In this fuel cell stack, the output voltage of each cell is given by Formulas (1) and (2), and the total stack voltage by Formula (3).

In these formulae, the symbols denote the following quantities: the cell voltage, the activation loss voltage, the ohmic loss voltage, the concentration loss voltage, and the proton exchange membrane fuel cell stack voltage; the activation loss resistance, the ohmic loss resistance, and the concentration loss resistance; the ideal voltage source; the output current; and the number of cells in the stack.
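Formulas (1)–(3) are not reproduced here. The Python sketch below therefore only assumes the structure suggested by the definitions above. Each loss voltage is taken as the drop across its loss resistance (V = I·R), the cell voltage as the ideal source voltage minus the three losses, and the stack voltage as the cell voltage times the number of cells. The function and parameter names are hypothetical. The authors' formulas may differ; activation losses, for example, are often modeled nonlinearly.

```python
def cell_voltage(current_a: float, e_source_v: float,
                 r_act_ohm: float, r_ohm_ohm: float, r_con_ohm: float) -> float:
    """Single-cell voltage: ideal source voltage minus the three loss voltages.

    Assumption: every loss is modeled as the drop across an equivalent
    resistance, V_loss = I * R_loss.
    """
    v_act = current_a * r_act_ohm  # activation loss voltage
    v_ohm = current_a * r_ohm_ohm  # ohmic loss voltage
    v_con = current_a * r_con_ohm  # concentration loss voltage
    return e_source_v - v_act - v_ohm - v_con


def stack_voltage(current_a: float, n_cells: int, **cell_params: float) -> float:
    """Cells are in series, so the stack voltage is N times the cell voltage."""
    return n_cells * cell_voltage(current_a, **cell_params)


def stack_power(current_a: float, n_cells: int, **cell_params: float) -> float:
    """Electrical output power of the stack in watts (P = I * V_stack)."""
    return current_a * stack_voltage(current_a, n_cells, **cell_params)
```

With illustrative values (1.0 V source, 1 mΩ per loss resistance, 300 cells, 100 A), each cell sits at about 0.7 V and the stack at about 210 V and 21 kW.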

The power battery system in this paper is described by Formulas (4)–(7).

In these formulae, the symbols denote the following quantities: the output voltage of the power battery and the voltage drop caused by polarization; the ideal voltage source; the power battery current; the ohmic resistance and the polarization resistance; the polarization capacitance; the load power of the power battery; the initial and remaining charge of the power battery; and the initial capacity of the battery.
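The quantities listed above match a first-order RC (Thevenin) equivalent circuit with coulomb-counting SOC, which is a common battery model. Formulas (4)–(7) are not reproduced here, so the sketch below assumes that form together with a forward-Euler time step. The class, its field names, and the quadratic solution for the current from the load power are illustrative, not the paper's code.

```python
import math
from dataclasses import dataclass


@dataclass
class RCBattery:
    """First-order RC equivalent-circuit battery (assumed model form)."""
    u_oc: float         # ideal voltage source [V]
    r0: float           # ohmic resistance [ohm]
    rp: float           # polarization resistance [ohm]
    cp: float           # polarization capacitance [F]
    capacity_as: float  # battery capacity [A*s]
    soc: float          # state of charge, 0..1
    u_p: float = 0.0    # polarization voltage drop [V]

    def current_for_power(self, p_load: float) -> float:
        """Current [A] that delivers load power p_load [W] at the terminals.

        Solves p_load = (u_oc - u_p) * I - r0 * I**2 and keeps the smaller
        root; a negative p_load (charging) gives a negative current.
        """
        e = self.u_oc - self.u_p
        disc = e * e - 4.0 * self.r0 * p_load
        if disc < 0.0:
            raise ValueError("requested power exceeds what the battery can deliver")
        return (e - math.sqrt(disc)) / (2.0 * self.r0)

    def step(self, p_load: float, dt: float) -> float:
        """Advance dt seconds at load power p_load and return the terminal voltage."""
        i = self.current_for_power(p_load)
        u_t = self.u_oc - i * self.r0 - self.u_p  # terminal (output) voltage
        # Forward-Euler update of the RC branch: du_p/dt = -u_p/(rp*cp) + i/cp
        self.u_p += dt * (-self.u_p / (self.rp * self.cp) + i / self.cp)
        # Coulomb counting: SOC falls by the charge drawn relative to capacity
        self.soc -= i * dt / self.capacity_as
        return u_t
```

Driving the model with load power rather than current mirrors the text, where the battery's load power is one of the listed quantities.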

The drive motor relationship is given by Formula (8).

In this formula, the symbols denote the power of the drive motor, the torque and speed of the drive motor, and the efficiency of the drive motor.
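Formula (8) is not reproduced here. A minimal sketch of the usual torque–speed–efficiency relation follows. It assumes that the efficiency (read from a map such as Figure 2c) divides the shaft power when motoring and multiplies it when regenerating, and that the speed is given in rpm. The function name is hypothetical.

```python
import math


def motor_electrical_power(torque_nm: float, speed_rpm: float, efficiency: float) -> float:
    """Electrical power [W] drawn by (positive) or returned from (negative) the motor.

    Mechanical shaft power is torque * angular speed. When motoring, the
    electrical side must supply more than that (divide by efficiency); when
    regenerating, only a fraction reaches the electrical side (multiply).
    """
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    omega = speed_rpm * 2.0 * math.pi / 60.0  # rpm -> rad/s
    p_mech = torque_nm * omega
    return p_mech / efficiency if p_mech >= 0.0 else p_mech * efficiency
```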

In addition, the fuel cell bus powertrain is subject to the physical limits given in Formula (9),

where the limits apply to the engine generator speed and torque, respectively.

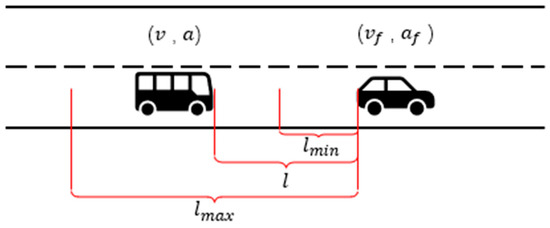

2.2. Velocity Control Model

As shown in Figure 3, the fuel cell bus (target vehicle) in this study drives on an urban expressway. Traffic laws and regulations restrict the bus to the far-right lane. In real traffic, by contrast, other cars and vehicle types may have different driving styles and routes and may drive in any lane as long as they remain safe and efficient. The target vehicle therefore inevitably faces changes of the vehicle in front. In this scenario, a conventional car-following model may pose hidden safety risks. For example, a vehicle in the adjacent lane may cut in ahead of the target vehicle, which then has to follow it while the following distance suddenly shrinks. To ensure that the vehicle can brake safely in an emergency, this paper uses maximum and minimum following distances to keep the vehicle driving safely. The minimum following distance is defined in Equation (10),

where the speed term is the speed of the hydrogen fuel cell bus.

Figure 3.

Velocity control model.

To keep the target vehicle driving normally, its speed must also stay within a reasonable range so that it does not disturb the other vehicles in the environment. However, obtaining the speed of every other vehicle in the traffic environment is costly. A maximum following distance is therefore defined to keep the speed of the target vehicle close to that of the surrounding vehicles. The maximum following distance is defined in Equation (11):

The maximum and minimum following distances both depend on the target vehicle’s own speed. Together, they allow the longitudinal motion of the vehicle to be controlled reasonably, so that it drives safely and stably.

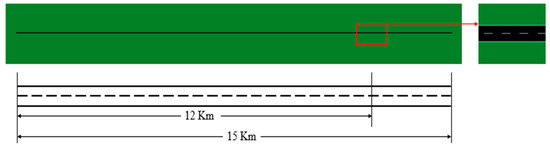

2.3. Traffic Environment Model

This paper studies the EMS of fuel cell buses based on longitudinal speed control on urban expressways, so a two-lane expressway is established, as shown in Figure 4. The target vehicle drives in the far-right lane and does not change lanes, whereas the other vehicles in the environment are not restricted in this way. The target vehicle therefore only needs to control its own speed to keep a suitable distance from the vehicle in front and to drive safely and efficiently. The vehicle in front may also change, either because it moves to the left lane or because a vehicle from the adjacent lane cuts in. In that case, the target vehicle must first adjust its speed appropriately to continue safely. The traffic flow settings, based on traffic statistics, are listed in Table 2.

Figure 4.

Traffic environment model.

Table 2.

Traffic environment parameters.

3. Fuel Cell Bus EMS and Speed Control Based on DRL

3.1. Problem Description

The main research purpose of this paper is to ensure the safe and stable driving of the fuel cell bus by controlling its longitudinal driving. On this basis, we are able to optimize the fuel cell energy management system to reduce hydrogen consumption and minimize the large fluctuation of the fuel cell output power to prolong its life. The research purpose can be summarized as Formula (12):

In Formula (12), the weights are user-defined parameters for the different objectives. The four cost terms are, respectively, the equivalent hydrogen consumption cost, the difference in fuel cell output power between adjacent time steps, the safety cost of the fuel cell bus, and the comfortable car-following cost.
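Formula (12) is not reproduced here. A common way to turn such a weighted multi-objective cost into a DRL reward, and the one assumed in this sketch, is to use the negative weighted sum of the four cost terms, so that maximizing reward minimizes cost. The function name and the default unit weights are placeholders, not the weights used in the paper.

```python
def step_reward(c_h2: float, c_fluct: float, c_safe: float, c_comfort: float,
                weights: tuple[float, float, float, float] = (1.0, 1.0, 1.0, 1.0)) -> float:
    """Per-step reward as the negative weighted sum of the four costs (assumed form)."""
    w_h2, w_fluct, w_safe, w_comfort = weights
    cost = w_h2 * c_h2 + w_fluct * c_fluct + w_safe * c_safe + w_comfort * c_comfort
    return -cost
```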

The hydrogen consumption cost is determined from Figure 2a, since the fuel cell output power directly determines the hydrogen consumption. The energy drawn from the power battery is converted into an equivalent hydrogen consumption. This is added to the direct hydrogen consumption to give the total equivalent hydrogen consumption, and the cost is optimal when this total is as small as possible.
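The paper does not state how battery energy is converted into hydrogen. The sketch below therefore uses an ECMS-style conversion as an assumption. Battery energy over a step is divided by the lower heating value of hydrogen (about 120 MJ/kg, i.e. 120 kJ/g) and scaled by a hypothetical equivalence factor `equiv_factor`. Discharging adds to the total, and charging earns a credit. The direct fuel cell hydrogen `h2_fc_g` would come from the map in Figure 2a.

```python
LHV_H2_J_PER_G = 120_000.0  # lower heating value of hydrogen, about 120 MJ/kg


def equivalent_h2_grams(h2_fc_g: float, p_batt_w: float, dt_s: float,
                        equiv_factor: float) -> float:
    """Equivalent hydrogen [g] consumed over one step of length dt_s.

    h2_fc_g      -- hydrogen used directly by the fuel cell in this step [g]
    p_batt_w     -- battery power, >0 discharging, <0 charging [W]
    equiv_factor -- hypothetical weight converting battery energy to hydrogen
    """
    battery_energy_j = p_batt_w * dt_s
    battery_h2_g = equiv_factor * battery_energy_j / LHV_H2_J_PER_G
    return h2_fc_g + battery_h2_g
```

In ECMS-style schemes the equivalence factor is usually tuned so that the battery SOC stays near its target over a trip. Here it is left as a free parameter.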

The power fluctuation cost is determined by the difference in fuel cell output power between adjacent time steps, and its cost function is defined in Formula (13):

where the two power terms are the fuel cell output power at the current time step and at the previous time step, respectively.
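Formula (13) is not reproduced here. This sketch assumes the simplest form consistent with the description, the absolute change in fuel cell output power between adjacent steps. It also sums that cost over a trajectory, which is one way to compare fluctuation between strategies. A squared difference would be an equally plausible choice.

```python
from typing import Sequence


def power_fluctuation_cost(p_fc_now_w: float, p_fc_prev_w: float) -> float:
    """Per-step cost: absolute change of fuel cell output power (assumed form)."""
    return abs(p_fc_now_w - p_fc_prev_w)


def trajectory_fluctuation(p_fc_w: Sequence[float]) -> float:
    """Sum of per-step fluctuation costs over a fuel cell power trajectory."""
    return sum(power_fluctuation_cost(now, prev)
               for prev, now in zip(p_fc_w, p_fc_w[1:]))
```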

In the speed control model, a collision of the fuel cell bus, or a following distance outside the range between the minimum and maximum following distances, increases the safety cost. Its cost function is defined in Equation (14):

where the distance term is the following distance of the fuel cell bus at the current moment.

When the following distance is less than the minimum following distance, the current vehicle speed is used as the safety cost: the higher the speed, the higher the cost, because closing in on the vehicle in front at higher speed is more dangerous. When the following distance is greater than the maximum following distance, the vehicle speed no longer reflects the safety performance adequately. The difference between the following distance and the maximum following distance is then used as the safety cost.
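The piecewise safety cost described above can be sketched as follows. The three distance cases follow the text directly. The constant collision penalty is a hypothetical placeholder, because the text says only that a collision increases the cost. The minimum and maximum following distances from Equations (10) and (11) are passed in as arguments rather than computed.

```python
def safety_cost(gap_m: float, speed_mps: float, d_min_m: float, d_max_m: float,
                collided: bool = False, collision_penalty: float = 100.0) -> float:
    """Safety cost for one step, following the piecewise rule in the text.

    collision_penalty is a hypothetical constant, not a value from the paper.
    """
    if collided:
        return collision_penalty
    if gap_m < d_min_m:
        return speed_mps          # too close: a higher speed is more dangerous
    if gap_m > d_max_m:
        return gap_m - d_max_m    # too far: penalize the excess distance
    return 0.0                    # inside the safe following band
```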

While the fuel cell bus is driving, changes in acceleration affect both ride comfort and the hydrogen consumption of the fuel cell. The acceleration and jerk of the vehicle are therefore closely related to comfortable driving and to hydrogen consumption: the larger the jerk, the lower the comfort and the higher the hydrogen consumption. The cost function is therefore set as Formula (15):

where the acceleration term is the acceleration of the vehicle, which for the hydrogen fuel cell bus in this paper is bounded to a fixed range.
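Formula (15) is not reproduced here. As a hedged illustration, the sketch below assumes a weighted sum of squared acceleration and squared jerk, with jerk estimated by a backward finite difference over the control step. The weights `w_acc` and `w_jerk` are hypothetical.

```python
def comfort_cost(accel: float, prev_accel: float, dt: float,
                 w_acc: float = 1.0, w_jerk: float = 1.0) -> float:
    """Comfort cost from acceleration and jerk (assumed quadratic form)."""
    if dt <= 0.0:
        raise ValueError("dt must be positive")
    jerk = (accel - prev_accel) / dt  # backward-difference estimate of jerk
    return w_acc * accel ** 2 + w_jerk * jerk ** 2
```

Squaring penalizes large values more than small ones, which matches the text's emphasis on avoiding large jerks.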

3.2. Deep Reinforcement Learning

In DRL, the agent interacts with the environment through exploratory (initially random) actions. It learns from the rewards, including negative rewards (punishments), that the interaction produces, and thereby adapts to the environment. This paper uses the soft actor–critic (SAC) algorithm to solve the problem formulated above.

Like the widely used DDPG algorithm, SAC has actor and critic networks. Unlike DDPG, however, SAC integrates maximum entropy learning into the actor–critic framework: the agent maximizes the entropy of its policy together with the reward it obtains. This encourages better exploration and therefore generally leads to better performance.
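For reference, the standard SAC formulation augments the expected reward with the entropy of the policy, weighted by a temperature coefficient α. The notation below is the usual one from the SAC literature, not symbols defined in this paper:

```latex
J(\pi) = \sum_{t=0}^{T} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}
  \left[ r(s_t, a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right],
\qquad
\mathcal{H}\big(\pi(\cdot \mid s_t)\big) = -\,\mathbb{E}_{a \sim \pi(\cdot \mid s_t)}\big[\log \pi(a \mid s_t)\big]
```

A larger α favors exploration, and letting α approach 0 recovers the conventional expected-return objective.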

3.3. Speed Control and EMS of Fuel Cell Bus Based on SAC

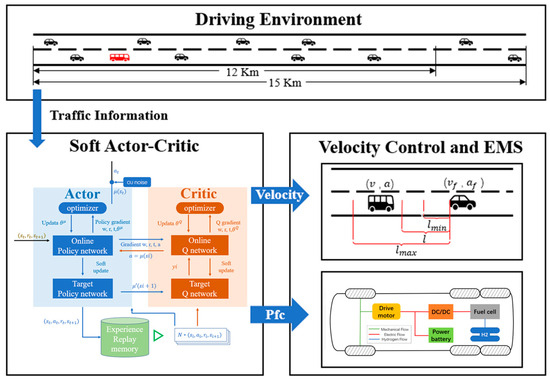

Based on the speed control and energy management problem formulated above, a speed planning and fuel cell bus energy management model based on the SAC algorithm is designed. The agent controls the longitudinal speed of the vehicle from the environmental information in the state space to keep driving safe and comfortable. At the same time, it uses the speed information to optimize energy management and reduce the equivalent hydrogen consumption and power fluctuation of the fuel cell bus.

In the SAC algorithm, the objective is to maximize the cumulative reward, and the reward function is:

The state space must be chosen so that it describes the environment completely. In this study, the state space includes the speed and acceleration of the hydrogen fuel cell bus, the speed and acceleration of the vehicle in front of it, and the distance between that vehicle and the target vehicle. It also includes the equivalent hydrogen consumption of the bus, the fuel cell output power, and the remaining charge of the power battery. It is expressed as:
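The state listed above can be packed into a fixed-order vector for the actor and critic networks, as in the sketch below. The field order, the units, and the per-entry scales passed to `normalized` are assumptions for illustration. The paper does not say whether or how it normalizes the state.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class BusState:
    """Observation of the SAC agent (field order and units are assumptions)."""
    ego_speed: float   # bus speed [m/s]
    ego_accel: float   # bus acceleration [m/s^2]
    lead_speed: float  # speed of the vehicle in front [m/s]
    lead_accel: float  # acceleration of the vehicle in front [m/s^2]
    gap: float         # distance to the vehicle in front [m]
    eq_h2: float       # equivalent hydrogen consumption [g]
    fc_power: float    # fuel cell output power [W]
    soc: float         # power battery state of charge [0..1]

    def as_vector(self) -> tuple[float, ...]:
        """Flatten to a fixed-order tuple for the networks."""
        return astuple(self)

    def normalized(self, scales: tuple[float, ...]) -> tuple[float, ...]:
        """Divide each entry by a (hypothetical) scale so inputs are of order 1."""
        values = self.as_vector()
        if len(scales) != len(values):
            raise ValueError("need one scale per state entry")
        return tuple(v / s for v, s in zip(values, scales))
```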

Lane changes need not be considered in the speed control of the hydrogen fuel cell bus, so the speed control action space contains only the longitudinal acceleration. In the energy management system, the power demand of the power system is directly related to the torque and speed of the drive motor. Once the speed is determined, the motor speed and torque, and hence the required power, are determined as well. The energy management action is therefore defined as the output power of the fuel cell. That is, the action space is:
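SAC implementations commonly squash each action component to [-1, 1] with tanh. The sketch below assumes this and maps the two components, longitudinal acceleration and fuel cell output power, affinely onto physical ranges. The default bounds are hypothetical placeholders, not the bus parameters in Table 1.

```python
def scale_action(x: float, lo: float, hi: float) -> float:
    """Affinely map a squashed action x in [-1, 1] onto [lo, hi] (x is clipped)."""
    x = max(-1.0, min(1.0, x))
    return lo + (x + 1.0) * 0.5 * (hi - lo)


def decode_action(raw: tuple[float, float],
                  accel_bounds: tuple[float, float] = (-3.0, 2.0),
                  fc_power_bounds_w: tuple[float, float] = (0.0, 60_000.0)) -> tuple[float, float]:
    """Return (acceleration [m/s^2], fuel cell power [W]); bounds are placeholders."""
    a_raw, p_raw = raw
    return scale_action(a_raw, *accel_bounds), scale_action(p_raw, *fc_power_bounds_w)
```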

The SAC algorithm hyperparameter settings are shown in Table 3.

Table 3.

SAC algorithm hyperparameters.

In particular, as Figure 2a shows, the fuel cell efficiency depends directly on the output power and is highest within a certain output power interval. To some extent, this means that the fuel cell can deliver relatively high output power in this interval while keeping hydrogen consumption low, which is critical for reducing the equivalent hydrogen consumption. This paper therefore optimizes the action selection of the SAC algorithm; the optimized algorithm is called SAC–OPT. The action selection function for the fuel cell output power is modified so that its expected value is biased toward the high-efficiency operating interval of the fuel cell. This change does not affect the stochastic policy of the SAC algorithm. It reduces hydrogen consumption and, to some extent, the fluctuation of the fuel cell output power, which in turn can extend the life of the hydrogen fuel cell.
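The text does not give the SAC–OPT mapping or the high-efficiency interval. The following is one possible reading, offered only as a hypothetical sketch. The squashed action is warped piecewise-linearly so that a large central share of the action domain (`core_share`) maps onto an assumed high-efficiency interval `[p_lo, p_hi]`, and the tails cover the rest of the power range. The policy's action distribution itself is untouched, but an action centered near zero now lands in the high-efficiency interval, and the full power range remains reachable.

```python
def biased_fc_power(x: float, p_min: float, p_lo: float, p_hi: float, p_max: float,
                    core_share: float = 0.6) -> float:
    """Map a squashed action x in [-1, 1] to fuel cell power with a warped domain.

    |x| <= core_share maps linearly onto the (hypothetical) high-efficiency
    interval [p_lo, p_hi]; the tails map onto [p_min, p_lo] and [p_hi, p_max].
    """
    if not (p_min <= p_lo <= p_hi <= p_max) or not 0.0 < core_share < 1.0:
        raise ValueError("need p_min <= p_lo <= p_hi <= p_max and 0 < core_share < 1")
    x = max(-1.0, min(1.0, x))
    if x < -core_share:  # lower tail [-1, -core) -> [p_min, p_lo)
        frac = (x + 1.0) / (1.0 - core_share)
        return p_min + frac * (p_lo - p_min)
    if x > core_share:   # upper tail (core, 1] -> (p_hi, p_max]
        frac = (x - core_share) / (1.0 - core_share)
        return p_hi + frac * (p_max - p_hi)
    frac = (x + core_share) / (2.0 * core_share)  # core -> [p_lo, p_hi]
    return p_lo + frac * (p_hi - p_lo)
```

With the illustrative bounds 0, 10, 30 and 60 kW, a plain affine mapping would give the 10–30 kW interval one third of the action domain. The warped mapping gives it 60%.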

After determining the key elements of the SAC algorithm, the complete framework of the research content of this paper is proposed as shown in Figure 5.

Figure 5.

Overall process.

3.4. Simulation Scene Construction

This paper uses Simulation of Urban Mobility (SUMO) as the simulation platform for the car-following scene. SUMO’s Traffic Control Interface (TraCI) connects to Python and makes it easy to obtain the necessary information from the traffic environment, such as the speed and acceleration of the ego vehicle and information about surrounding vehicles. The DRL algorithm uses this information to control the target vehicle. SUMO also includes a variety of complete car-following and lane-changing models that simulate normal vehicle driving realistically, which improves the fidelity of the simulation.
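A minimal sketch of how a controller might read observations and apply the agent's acceleration through TraCI follows. It uses the TraCI Python functions `traci.start`, `traci.simulationStep`, `traci.simulation.getDeltaT`, `traci.vehicle.getIDList`, `getSpeed`, `getAcceleration`, `getLeader` and `setSpeed`, and `traci.close`. The configuration file name, the vehicle ID, the lookahead distance, the free-road fallback values and the zero-acceleration placeholder policy are all hypothetical. The helpers take the connection object as an argument, so the `traci` module itself can be passed in.

```python
def observe(conn, ego_id: str, lookahead_m: float = 200.0):
    """Return (ego speed, ego accel, lead speed, lead accel, gap) via TraCI.

    getLeader returns None (newer behavior may be an empty ID) when no leader
    is found; the road ahead is then treated as free, using placeholder values.
    """
    v = conn.vehicle.getSpeed(ego_id)
    a = conn.vehicle.getAcceleration(ego_id)
    leader = conn.vehicle.getLeader(ego_id, lookahead_m)
    if leader is None or not leader[0]:
        return v, a, v, 0.0, lookahead_m
    lead_id, gap = leader
    return (v, a, conn.vehicle.getSpeed(lead_id),
            conn.vehicle.getAcceleration(lead_id), gap)


def apply_acceleration(conn, ego_id: str, accel: float, dt: float) -> float:
    """Turn the agent's longitudinal acceleration into a speed command, then step."""
    v_next = max(0.0, conn.vehicle.getSpeed(ego_id) + accel * dt)
    conn.vehicle.setSpeed(ego_id, v_next)
    conn.simulationStep()
    return v_next


def run(policy=lambda state: 0.0, config: str = "expressway.sumocfg",
        ego_id: str = "bus_0", steps: int = 1000) -> None:
    """Minimal control loop; requires a SUMO installation with its Python tools."""
    import traci  # shipped with SUMO under SUMO_HOME/tools

    traci.start(["sumo", "-c", config])
    try:
        dt = traci.simulation.getDeltaT()  # step length in seconds
        for _ in range(steps):
            if ego_id in traci.vehicle.getIDList():
                accel = policy(observe(traci, ego_id))  # SAC actor would go here
                apply_acceleration(traci, ego_id, accel, dt)
            else:
                traci.simulationStep()
    finally:
        traci.close()
```

Commanding a speed with `setSpeed` each step is one way to impose an acceleration. The gap returned by `getLeader` is measured from the ego vehicle's front plus its minimum gap to the leader's rear.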

Through the traffic environment model established above, a simulation environment is built in the SUMO simulation software, and the specific road and vehicle parameters are shown in Table 4. In the simulation environment established in this paper, the detailed road design is shown in Figure 6.

Table 4.

Road and vehicle parameters.

Figure 6.

Simulation of the road.

To make the simulation more realistic, the traffic flow must already be in a normal state when the target vehicle enters the road. In each episode, the target vehicle therefore enters the road only at the 150th step, which ensures that enough vehicles are on the road ahead to affect its driving. In addition, to prevent simulation errors caused by the target vehicle leaving the road, only data from the first 12 km of road are used in the analysis.
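The warm-up and the 12 km analysis window described above can be sketched as two small helpers. The 150-step delay and the 12 km limit come from the text. The route and vehicle type IDs are hypothetical, and the step counter is whatever the caller's loop uses. `traci.vehicle.add` is the TraCI call for inserting a vehicle. The logged `distance_m` could come from `traci.vehicle.getDistance`, which reports the distance driven since departure.

```python
WARMUP_STEPS = 150           # target vehicle enters at the 150th step (from the text)
ANALYSIS_LIMIT_M = 12_000.0  # only the first 12 km are analysed (from the text)


def maybe_insert_ego(conn, step: int, ego_id: str, route_id: str, type_id: str) -> bool:
    """Insert the target bus once the background traffic has warmed up."""
    if step == WARMUP_STEPS:
        conn.vehicle.add(ego_id, route_id, typeID=type_id)
        return True
    return False


def within_analysis_window(samples: list[dict]) -> list[dict]:
    """Keep logged samples whose driven distance is within the first 12 km."""
    return [s for s in samples if s["distance_m"] <= ANALYSIS_LIMIT_M]
```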

4. Results and Analysis

This paper uses the SAC deep reinforcement learning algorithm to solve the speed control and energy management problems of hydrogen fuel cell buses. For comparison, DDPG, another DRL algorithm, is implemented to compare training efficiency and training effect. In addition, the conventional IDM car-following model is used as a speed control baseline and dynamic programming (DP) as an energy management baseline, which strengthens the comparative analysis.

4.1. DDPG Algorithm

The DDPG algorithm is used to solve the optimization goal proposed in the problem description of this paper. The algorithm reward, state space and action space are consistent with the SAC algorithm. The hyperparameters of the DDPG algorithm are shown in Table 5.

Table 5.

DDPG algorithm hyperparameters.

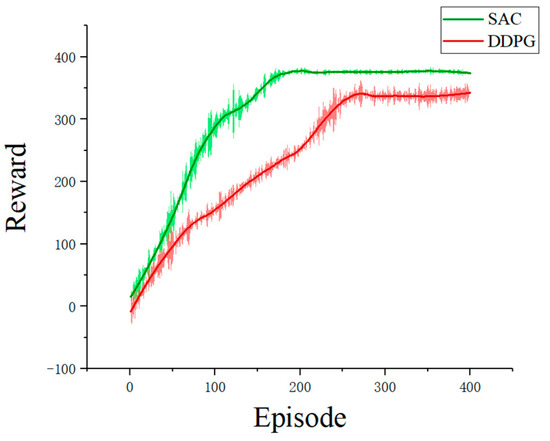

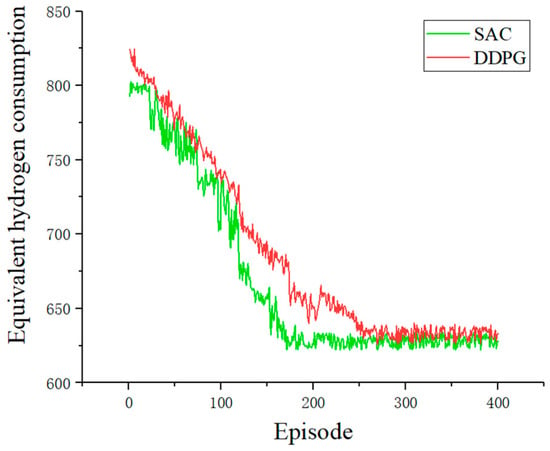

In DRL training, the reward has converged when the agent’s interaction with the environment consistently yields the maximum achievable reward. The convergence speed of the reward function is important because it reflects the efficiency of the DRL algorithm. During training, both the speed control cost function and the energy management cost function are included in the total reward function. To compare the training effects of the two algorithms more clearly, the two cost functions are also analyzed separately. The convergence of the reward functions of the SAC and DDPG algorithms is shown in Figure 7.

Figure 7.

Comparison of the convergence efficiencies of the two algorithms.

The DDPG algorithm selects actions with a deterministic policy when exploring the environment, so its agent explores less efficiently than the SAC agent and converges more slowly. As Figure 7 shows, the SAC algorithm converges in 180 episodes, whereas the DDPG algorithm needs 260 episodes. Because of the maximum entropy principle, SAC also explores the action space more thoroughly, which raises the final converged reward. The average converged reward of SAC is 8.22% higher than that of DDPG.
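The paper does not state how the convergence episode or the 8.22% improvement was computed. The sketch below shows one hypothetical set of criteria. An episode counts as converged when the moving-average reward stays within a relative tolerance of its final value from then on. The improvement is measured relative to the magnitude of the baseline's converged reward, because cost-based rewards are negative. The window size, tolerance and tail length are illustrative.

```python
from statistics import mean
from typing import Optional, Sequence


def convergence_episode(rewards: Sequence[float], window: int = 10,
                        tol: float = 0.05) -> Optional[int]:
    """First episode after which the moving average stays within tol
    (relative) of the final moving average; a hypothetical criterion."""
    if len(rewards) < window:
        raise ValueError("need at least `window` episodes")
    ma = [mean(rewards[i - window + 1:i + 1]) for i in range(window - 1, len(rewards))]
    final = ma[-1]
    for k in range(len(ma)):
        if all(abs(m - final) <= tol * abs(final) for m in ma[k:]):
            return k + window - 1  # convert moving-average index to episode index
    return None


def converged_mean(rewards: Sequence[float], tail: int = 20) -> float:
    """Average reward over the last `tail` episodes."""
    return mean(rewards[-tail:])


def relative_improvement(new: float, baseline: float) -> float:
    """Improvement relative to |baseline| (works for negative rewards)."""
    return (new - baseline) / abs(baseline)
```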

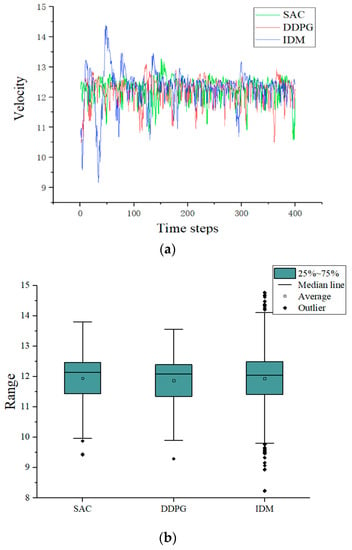

4.2. Comparative Analysis of Speed Control

For the speed control of hydrogen fuel cell buses, the traffic environment designed in this paper affects the target vehicle in two main ways. The first is the behavior of the vehicle it is originally following. The second is a change of the following target when a vehicle from the adjacent lane cuts in. Besides safe driving, smooth driving is also an important consideration. This paper compares the SAC-based speed control model with the DDPG-based model and the SUMO–IDM car-following model. SUMO–IDM is a car-following model built into SUMO, based on the intelligent driver model (IDM) proposed by Martin Treiber. The IDM is widely applicable and adjusts the vehicle speed in real time according to the distance to and speed of the vehicle in front, so that the vehicle drives efficiently. However, its driving style is aggressive, and its acceleration varies over a wide range, which affects comfort. The speed comparison is shown in Figure 8a, and the acceleration comparison in Figure 8b. As Figure 8a shows, the vehicle speed under the IDM fluctuates strongly, which makes its acceleration changes more pronounced. Consequently, Figure 8b shows more outliers for the IDM.
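For readers unfamiliar with the baseline, the textbook IDM acceleration law is sketched below. SUMO's own IDM implementation has its own defaults and numerical details, so this is not SUMO's code. The parameter defaults (desired speed `v0`, time headway `t_headway`, maximum acceleration `a_max`, comfortable deceleration `b_comf`, jam distance `s0`, exponent `delta`) are illustrative values only.

```python
import math


def idm_acceleration(v: float, v_lead: float, gap: float,
                     v0: float = 20.0, t_headway: float = 1.5, a_max: float = 1.0,
                     b_comf: float = 1.5, s0: float = 2.0, delta: float = 4.0) -> float:
    """Textbook IDM: a = a_max * [1 - (v/v0)^delta - (s*/s)^2].

    s* = s0 + max(0, v*T + v*dv / (2*sqrt(a_max*b_comf))) is the desired gap,
    with dv = v - v_lead the approach rate (positive when closing in).
    """
    if gap <= 0.0:
        raise ValueError("gap must be positive")
    dv = v - v_lead
    s_star = s0 + max(0.0, v * t_headway + v * dv / (2.0 * math.sqrt(a_max * b_comf)))
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)
```

The interaction term grows quadratically as the gap shrinks below the desired gap. This is one reason the IDM can brake sharply after a cut-in, consistent with the larger acceleration changes reported here.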

Figure 8.

Comparison of the speeds of the three methods. (a) Speed conditions of bus under different strategies; (b) Speed diffusion of bus under different strategies.

As Table 6 shows, the IDM is not constrained by a reward function. The following behavior of the hydrogen fuel cell bus is determined by the model’s internal equations, which put driving efficiency ahead of comfort, so its average speed is high. However, its average acceleration and acceleration change are the highest of the three methods. Its driving smoothness is therefore poor, which greatly reduces the ride comfort of the bus. For the energy management system, frequent and large accelerations and decelerations also inevitably lead to higher hydrogen consumption. The DDPG algorithm yields a lower average speed because of its weaker training result. Among the three methods, SAC-based speed control achieves the highest average speed, consistent with that of SUMO–IDM. It also keeps acceleration changes stable and gentle, so its average acceleration and acceleration change are the best of the three methods. Compared with the SUMO–IDM model, the average acceleration and acceleration change decrease by 10.22% and 11.57%, respectively. Compared with DDPG, the average speed increases by 1.18%, and the average acceleration and acceleration change decrease by 4.82% and 5.31%, respectively. These results indicate that SAC-based optimization of speed control is effective.
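Table 6 does not define its metrics precisely. The sketch below assumes that "average acceleration" means the mean absolute acceleration and "acceleration change value" means the mean absolute step-to-step change in acceleration. Under those assumptions, it computes the three statistics from logged trajectories and the percentage reductions quoted above.

```python
from statistics import mean
from typing import Sequence


def driving_metrics(speeds: Sequence[float], accels: Sequence[float]) -> dict:
    """Average speed, mean |acceleration|, and mean |change in acceleration|."""
    if len(accels) < 2:
        raise ValueError("need at least two acceleration samples")
    return {
        "avg_speed": mean(speeds),
        "avg_abs_accel": mean(abs(a) for a in accels),
        "avg_abs_accel_change": mean(abs(b - a) for a, b in zip(accels, accels[1:])),
    }


def percent_reduction(new: float, baseline: float) -> float:
    """Percentage by which `new` is lower than `baseline`."""
    return 100.0 * (baseline - new) / baseline
```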

Table 6.

Data under different speed control strategies.

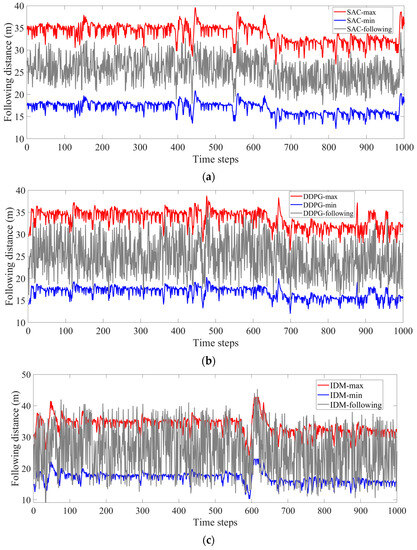

In terms of speed control, the following distance is also an important criterion. The maximum and minimum following distances defined by Equations (10) and (11) bound a reasonable following-distance interval, and the target vehicle is considered safe while its following distance stays within this interval. The following distances of the three methods are shown in Figure 9: Figure 9a–c show the following distances under the SAC, DDPG, and SUMO–IDM models, respectively.
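
Because Equations (10) and (11) are not reproduced in this section, the following sketch uses hypothetical speed-dependent bounds (a fixed standstill distance plus a minimum and a maximum time headway) to show how the share of time spent inside the safe following interval could be measured for traces like those in Figure 9.

```python
import numpy as np

def following_interval(v, d0=5.0, t_min=1.0, t_max=3.0):
    """Hypothetical speed-dependent bounds [m] on the following distance for
    ego speed v [m/s]; a stand-in for Equations (10) and (11), not their form."""
    return d0 + t_min * v, d0 + t_max * v

def fraction_in_interval(speeds, gaps, **bounds):
    """Share of time steps at which the gap [m] lies inside the interval."""
    speeds = np.asarray(speeds, dtype=float)
    gaps = np.asarray(gaps, dtype=float)
    d_min, d_max = following_interval(speeds, **bounds)  # element-wise bounds
    inside = (gaps >= d_min) & (gaps <= d_max)
    return float(inside.mean())
```

Since both bounds grow with the ego speed, a controller that holds a constant gap will drift out of the interval as it speeds up, which matches the behavior attributed to the IDM model in Figure 9c.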

Figure 9.

Comparison of following distances under three strategies.

Figure 9 shows large differences in following behavior among the three methods. In actual driving, a driver judges the appropriate following distance mainly from the speed of their own vehicle, the speed of the vehicle in front, and the distance between the two vehicles. The following range applied in this paper readily meets the requirements of safe driving. Although no collision occurred under the IDM model in the simulation, its following distance did not stay within the following range proposed in this paper, and its following behavior was very unstable owing to frequent changes in acceleration. This is because the maximum and minimum following distances used in this paper are tied directly to the vehicle's own speed, ensuring that there is enough distance to handle emergencies during driving, which makes driving safer and more stable. The IDM model, by contrast, adjusts its behavior according to the current gap, its own speed, and the speed of the preceding vehicle rather than to this speed-dependent interval, so, as can be seen in Figure 9c, its following distance exceeds the range adopted in this paper. For the target vehicles trained by the deep reinforcement learning algorithms, following behavior is greatly improved, leaving enough reaction distance for unsafe and unpredictable situations such as sudden deceleration of the vehicle in front. However, the following behavior under DDPG is still inferior to that under SAC because the DDPG algorithm converges to a smaller final reward.

4.3. Comparative Analysis of Energy Management

The EMS proposed in this paper mainly targets the reduction of equivalent hydrogen consumption and of fuel cell output power fluctuations. Figure 10 compares the equivalent hydrogen consumption under the SAC and DDPG algorithms. Because the two algorithms converge to different reward values, their equivalent hydrogen consumption levels after convergence also differ, and the SAC-based EMS achieves slightly lower equivalent hydrogen consumption than the DDPG-based EMS.
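
Equivalent hydrogen consumption combines the hydrogen actually consumed by the fuel cell with the battery energy expressed as a hydrogen equivalent. The sketch below is a generic ECMS-style formulation assumed for illustration, not the exact equation used in this paper: the constant fuel cell efficiency `eta_fc` and the equivalence factor `s` are hypothetical, and only the lower heating value of hydrogen (about 120 MJ/kg) is a physical constant.

```python
LHV_H2 = 120.0e6  # lower heating value of hydrogen [J/kg], approx. 120 MJ/kg

def equivalent_h2_per_100km(p_fc, p_bat, dt, distance_km,
                            eta_fc=0.5,  # hypothetical constant fuel cell system efficiency
                            s=2.0):      # hypothetical equivalence factor
    """Equivalent hydrogen consumption [kg/100 km] from power traces in W.
    p_bat > 0 means the battery is discharging, p_bat < 0 charging."""
    # hydrogen consumed by the fuel cell [kg]
    m_fc = sum(p / (eta_fc * LHV_H2) * dt for p in p_fc)
    # battery energy converted into an equivalent hydrogen mass [kg]
    m_bat = sum(s * p / LHV_H2 * dt for p in p_bat)
    return (m_fc + m_bat) / distance_km * 100.0
```

For instance, a fuel cell delivering a constant 60 kW for one hour over 60 km with the battery idle gives 3.6 kg of hydrogen, i.e. 6 kg/100 km under these assumed parameters. Practical ECMS formulations often use different equivalence factors for charging and discharging; a single `s` is used here only to keep the sketch short.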

Figure 10.

Comparison of equivalent hydrogen consumption between the SAC and DDPG algorithms.

As Figure 10 shows, owing to differences in the convergence speed of the speed control models and in the reward values reached after convergence, the equivalent hydrogen consumption of the hydrogen fuel cell bus after 12 km of driving is higher under DDPG control than under SAC control.

Taking the driving cycle produced by the SAC-based speed control in Figure 8, the DP algorithm is applied as the EMS for energy-saving optimization and serves as the benchmark for comparing equivalent hydrogen consumption. As shown in Table 7, the EMS based on the optimized SAC algorithm (SAC–OPT) reaches 95.52% of the DP benchmark in terms of equivalent hydrogen consumption per 100 km, whereas the DDPG-based EMS reaches only 93.88%.
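
DP can serve as an offline, globally optimal benchmark because it searches every admissible power split over the whole, fully known driving cycle. The toy sketch below illustrates the idea with backward recursion over a discretized battery-energy grid. The integer power and energy units, the convex hydrogen cost function, and the charge-sustaining terminal constraint are hypothetical simplifications, not the vehicle model used in this paper; the sketch also assumes that the percentages in Table 7 are the DP cost divided by each strategy's cost.

```python
import math

def dp_benchmark(demand, fc_levels, e0, e_max, h2_cost):
    """Toy backward DP for a fuel cell / battery power split.
    demand: power demand per step (integer units); fc_levels: admissible fuel
    cell power levels; e0: initial battery energy index on the grid 0..e_max;
    h2_cost(u): hydrogen cost of running the fuel cell at level u for one step.
    Charge-sustaining: the final energy index must be >= e0.
    Returns (minimal total cost, optimal fuel cell profile)."""
    n = len(demand)
    # cost_to_go[k][e]: minimal cost from step k onward with battery energy e
    cost_to_go = [[math.inf] * (e_max + 1) for _ in range(n + 1)]
    best_u = [[None] * (e_max + 1) for _ in range(n)]
    for e in range(e0, e_max + 1):
        cost_to_go[n][e] = 0.0  # terminal states that satisfy charge sustenance
    for k in range(n - 1, -1, -1):
        for e in range(e_max + 1):
            for u in fc_levels:
                e_next = e - (demand[k] - u)  # battery covers demand - u
                if 0 <= e_next <= e_max:
                    c = h2_cost(u) + cost_to_go[k + 1][e_next]
                    if c < cost_to_go[k][e]:
                        cost_to_go[k][e] = c
                        best_u[k][e] = u
    if math.isinf(cost_to_go[0][e0]):
        return math.inf, []  # no feasible split for this cycle
    # forward pass: follow the stored optimal decisions from the initial state
    profile, e = [], e0
    for k in range(n):
        u = best_u[k][e]
        profile.append(u)
        e -= demand[k] - u
    return cost_to_go[0][e0], profile

def percent_of_dp_optimum(dp_cost, method_cost):
    """DP cost as a percentage of another strategy's cost (100% = DP-optimal)."""
    return dp_cost / method_cost * 100.0
```

With a constant demand of 2 units over four steps, fuel cell levels 0 to 4, and the convex cost `u + 0.25 * u**2`, the DP returns the flat profile `[2, 2, 2, 2]` at a cost of 12; a strategy alternating between 4 and 0 on the same cycle costs 16 and would score 75% of the DP optimum. DP needs the entire cycle in advance, which is why it is used here as a benchmark rather than as an online EMS.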

Table 7.

Equivalent hydrogen consumption of 100 km under different EMS methods.

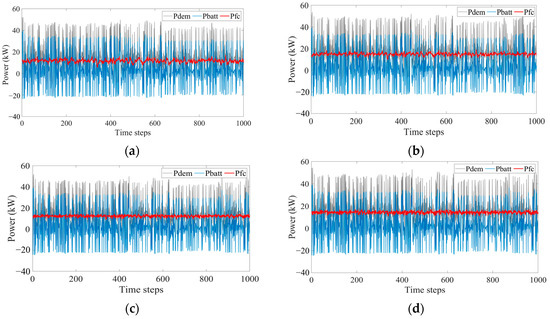

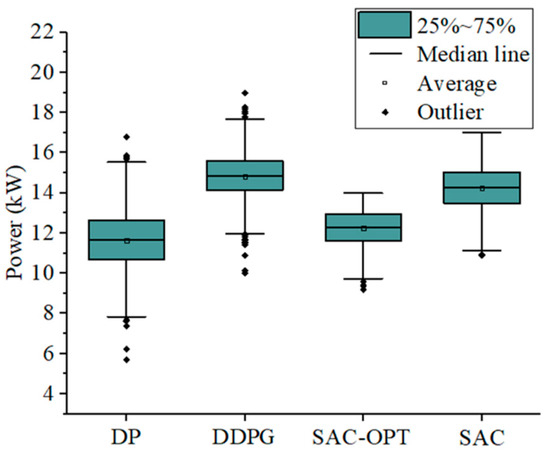

The fuel cell output power, power battery output power, and total power obtained with the energy management strategies of the different methods are shown in Figure 11. Figure 11a–c show no obvious deficiencies in any of the three methods. However, the deep reinforcement learning algorithms markedly reduce the fluctuation of the fuel cell output power, as shown in Figure 12. As mentioned above, the action selection strategy of the SAC algorithm is optimized: in its stochastic action selection, the expected value of the normal distribution is shifted towards the high-efficiency operating region of the fuel cell. This change also greatly reduces the fluctuation range of the fuel cell output power. The fuel cell output power before and after optimization of the action space is shown in Figure 11c,d.
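
The exact form of the shift is not given here, so the sketch below assumes one simple variant: the mean of SAC's tanh-squashed Gaussian policy is pulled, by a convex combination with weight `bias`, towards the pre-squash value that maps to a hypothetical high-efficiency fuel cell power `p_eff`. The power limit, `p_eff`, and `bias` are illustrative values, not the paper's parameters.

```python
import numpy as np

def sample_fc_power(mu, log_std, rng, size=None,
                    p_max=60.0,  # hypothetical fuel cell power limit [kW]
                    p_eff=20.0,  # hypothetical high-efficiency power [kW], 0 < p_eff < p_max
                    bias=0.5):   # hypothetical shift weight; 0 = unmodified SAC sampling
    """Sample fuel cell power commands [kW] from a tanh-squashed Gaussian
    whose mean is shifted towards the value that maps to p_eff."""
    # pre-squash value z_eff such that (tanh(z_eff) + 1) / 2 * p_max == p_eff
    z_eff = np.arctanh(2.0 * p_eff / p_max - 1.0)
    mu_shifted = (1.0 - bias) * mu + bias * z_eff      # shifted expected value
    z = rng.normal(mu_shifted, np.exp(log_std), size)  # Gaussian sample
    return (np.tanh(z) + 1.0) / 2.0 * p_max            # squash and rescale to (0, p_max)
```

With `bias=0` the samples follow whatever mean the policy network outputs; with `bias=1` they concentrate around `p_eff` regardless of that output. Intermediate values keep exploration while nudging the fuel cell towards the efficient region, which in turn narrows the spread of its output power.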

Figure 11.

Power under different EMS methods. (a) The power sources of DP; (b) The power sources of DDPG; (c) The power sources of SAC-OPT; (d) The power sources of SAC.

Figure 12.

Power fluctuation under different methods.

The traffic environment in this paper requires the hydrogen fuel cell bus to travel on an expressway at a relatively high speed. In the deep reinforcement learning algorithms, this paper incorporates the fuel cell output power fluctuation into the reward function, which effectively reduces that fluctuation; this is critical for extending fuel cell life. As shown in Figure 12 and Table 8, DP and DDPG produce more outliers than the two SAC-based strategies. Because the SAC agent explores the action space more fully, it is better able to reduce output power fluctuations. The interquartile range, i.e., the interval between the 25th and 75th percentiles, is taken as the main fluctuation range. The fluctuation range of the fuel cell output power is 1.97 kW under the DP strategy and 1.46 kW, 1.57 kW and 1.33 kW under the DDPG, SAC and SAC–OPT strategies, respectively. Compared with the DP strategy, the SAC–OPT strategy with an optimized action space reduces the fluctuation range by 32.65%. Optimizing the action space also reduces the fluctuation range of the SAC–OPT strategy by 15.29% compared with the SAC strategy.
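
The fluctuation range used above can be computed with NumPy's percentile function. The sketch assumes the fluctuation series is the step-to-step change in fuel cell output power; if Table 8 is instead based on the power values themselves, the `np.diff` call should be dropped.

```python
import numpy as np

def fluctuation_iqr(p_fc):
    """Interquartile range [kW] of the fuel cell power fluctuation series.
    Assumption: fluctuation = step-to-step change in output power."""
    dp = np.diff(np.asarray(p_fc, dtype=float))  # power change per step [kW]
    q25, q75 = np.percentile(dp, [25, 75])       # linear interpolation by default
    return q75 - q25

def iqr_reduction_percent(baseline_iqr, new_iqr):
    """Reduction of the fluctuation range relative to a baseline, in percent."""
    return (baseline_iqr - new_iqr) / baseline_iqr * 100.0
```

With the rounded values from the text, `iqr_reduction_percent(1.57, 1.33)` reproduces the reported 15.29% for SAC–OPT versus SAC. The DP versus SAC–OPT comparison gives about 32.5% ((1.97 − 1.33)/1.97), slightly below the reported 32.65%, presumably because the reported figure was computed from unrounded data.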

Table 8.

Output power fluctuation of fuel cell with four strategies (kW).

5. Conclusions

To address the energy-saving driving problem of hydrogen fuel cell buses in complex traffic environments, this paper simultaneously optimizes the speed control and energy management of hydrogen fuel cell buses using deep reinforcement learning algorithms. An urban expressway with an effective length of 12 km is built in SUMO as a complex traffic environment, and the longitudinal speed of the fuel cell bus is controlled to constrain its following distance and ensure safe and efficient driving; the resulting real-time vehicle speed is then used as the driving-cycle data for energy management. The fuel cell energy management system is optimized, and the expected value of the action selection distribution is adjusted in the action space of the algorithm. This reduces the equivalent hydrogen consumption of the hydrogen fuel cell bus and the fluctuation of the fuel cell output power, and thus helps improve the fuel cell's durability.

In terms of speed control, compared with the SUMO–IDM car-following model, the average speed is maintained, while the average acceleration and acceleration change values decrease by 10.22% and 11.57%, respectively. Compared with DDPG, the average speed increases by 1.18%, and the average acceleration and acceleration change values decrease by 4.82% and 5.31%, respectively. In terms of energy management, the SAC–OPT-based energy management strategy reaches 95.52% of the DP benchmark in equivalent hydrogen consumption, and the fuel cell output power fluctuation range is reduced by 32.65% relative to DP. Compared with the SAC strategy, the fluctuation range is reduced by 15.29%, which helps improve fuel cell durability.

Current research shows that DRL has achieved promising results in simulation, including in autonomous driving and in the energy management of hydrogen fuel cell vehicles, and relatively mature and accessible autonomous driving simulation systems and energy management strategies have been developed. However, for real-vehicle tests and practical applications, capturing the many relevant environmental factors and developing and deploying on-board hardware remain key open issues. This is also a limitation of this paper: no experiments with real vehicles could be carried out. Nevertheless, with the continued advancement of DRL, upgraded on-board sensors, and improved on-board hardware, DRL is expected to become increasingly mature in the autonomous driving and energy management of hydrogen fuel cell vehicles and may even become a primary technology for high-level autonomous driving.

Author Contributions

Conceptualization, F.Y. and X.S.; Methodology, Y.S., F.Y. and X.S.; Software, F.Y. and W.G.; Validation, Y.S., C.P. and W.G.; Formal analysis, J.Z. (Jinming Zhang); Investigation, G.W.; Resources, G.W.; Writing—original draft, Y.S.; Writing—review & editing, C.P.; Supervision, J.Z. (Jiaming Zhou); Project administration, J.Z. (Jiaming Zhou) and J.Z. (Jinming Zhang); Funding acquisition, J.Z. (Jiaming Zhou), J.Z. (Jinming Zhang) and G.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Weifang University of Science and Technology High-level Talent Research Start-up Fund Project (Project No. KJRC2023001) and funded by the National Natural Science Foundation of China (Grant No. 52272367).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The code, data and sources used in this paper must be kept confidential for special reasons and therefore cannot be made public.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, H.; Ravey, A.; N’diaye, A.; Djerdir, A. Online adaptive equivalent consumption minimization strategy for fuel cell hybrid electric vehicle considering power sources degradation. Energy Convers. Manag. 2019, 192, 133–149.

- Ma, Y.; Li, C.; Wang, S. Multi-objective energy management strategy for fuel cell hybrid electric vehicle based on stochastic model predictive control. ISA Trans. 2022, 131, 178–196.

- Zhou, Y.; Obeid, H.; Laghrouche, S.; Hilairet, M.; Djerdir, A. A novel second-order sliding mode control of hybrid fuel cell/super capacitors power system considering the degradation of the fuel cell. Energy Convers. Manag. 2021, 229, 113766.

- Yue, M.; Jemei, S.; Gouriveau, R.; Zerhouni, N. Review on health-conscious energy management strategies for fuel cell hybrid electric vehicles: Degradation models and strategies. Int. J. Hydrogen Energy 2019, 44, 6844–6861.

- Yue, M.; Al Masry, Z.; Jemei, S.; Zerhouni, N. An online prognostics-based health management strategy for fuel cell hybrid electric vehicles. Int. J. Hydrogen Energy 2021, 46, 13206–13218.

- Jiang, H.; Xu, L.; Li, J.; Hu, Z.; Ouyang, M. Energy management and component sizing for a fuel cell/battery/supercapacitor hybrid powertrain based on two-dimensional optimization algorithms. Energy 2019, 177, 386–396.

- Hu, H.; Yuan, W.W.; Su, M.; Ou, K. Optimizing fuel economy and durability of hybrid fuel cell electric vehicles using deep reinforcement learning-based energy management systems. Energy Convers. Manag. 2023, 291, 117288.

- Han, J.; Park, Y.; Kum, D. Optimal adaptation of equivalent factor of equivalent consumption minimization strategy for fuel cell hybrid electric vehicles under active state inequality constraints. J. Power Sources 2014, 267, 491–502.

- Boban, M.; Kousaridas, A.; Manolakis, K.; Eichinger, J.; Xu, W. Connected roads of the future: Use cases, requirements, and design considerations for vehicle-to-everything communications. IEEE Veh. Technol. Mag. 2018, 13, 110–123.

- Pourrahmani, H.; Yavarinasab, A.; Zahedi, R.; Gharehghani, A.; Mohammadi, M.H.; Bastani, P.; Van Herle, J. The applications of Internet of Things in the automotive industry: A review of the batteries, fuel cells, and engines. Internet Things 2022, 19, 100579.

- Li, K.; Zhou, J.; Jia, C.; Yi, F.; Zhang, C. Energy sources durability energy management for fuel cell hybrid electric bus based on deep reinforcement learning considering future terrain information. Int. J. Hydrogen Energy 2023, in press.

- Lu, D.; Yi, F.; Hu, D.; Li, J.; Yang, Q.; Wang, J. Online optimization of energy management strategy for FCV control parameters considering dual power source lifespan decay synergy. Appl. Energy 2023, 348, 121516.

- Lü, X.; Wu, Y.; Lian, J.; Zhang, Y.; Chen, C.; Wang, P.; Meng, L. Energy management of hybrid electric vehicles: A review of energy optimization of fuel cell hybrid power system based on genetic algorithm. Energy Convers. Manag. 2020, 205, 112474.

- Ramadan, H.S.; Becherif, M.; Claude, F. Energy management improvement of hybrid electric vehicles via combined GPS/rule-based methodology. IEEE Trans. Autom. Sci. Eng. 2017, 14, 586–597.

- Liu, T.; Hu, X. A bi-level control for energy efficiency improvement of a hybrid tracked vehicle. IEEE Trans. Ind. Inform. 2018, 14, 1616–1625.

- Yang, D.; Wang, L.; Yu, K.; Liang, J. A reinforcement learning-based energy management strategy for fuel cell hybrid vehicle considering real-time velocity prediction. Energy Convers. Manag. 2022, 274, 116453.

- Li, Q.; Meng, X.; Gao, F.; Zhang, G.; Chen, W.; Rajashekara, K. Reinforcement learning energy management for fuel cell hybrid system: A review. IEEE Ind. Electron. Mag. 2022, 2–11.

- Xie, S.; Sun, F.; He, H.; Peng, J. Plug-in hybrid electric bus energy management based on dynamic programming. Energy Procedia 2016, 104, 378–383.

- Liu, T.; Yu, H.; Guo, H.; Qin, Y.; Zou, Y. Online energy management for multimode plug-in hybrid electric vehicles. IEEE Trans. Ind. Inform. 2018, 15, 4352–4361.

- Škugor, B.; Deur, J. Dynamic programming-based optimisation of charging an electric vehicle fleet system represented by an aggregate battery model. Energy 2015, 92, 456–465.

- Ahmadizadeh, P.; Mashadi, B.; Lodaya, D. Energy management of a dual-mode power-split powertrain based on the Pontryagin’s minimum principle. IET Intell. Transp. Syst. 2017, 11, 561–571.

- Hou, C.; Ouyang, M.; Xu, L.; Wang, H. Approximate Pontryagin’s minimum principle applied to the energy management of plug-in hybrid electric vehicles. Appl. Energy 2014, 115, 174–189.

- Li, X.; Han, L.; Liu, H.; Wang, W.; Xiang, C. Real-time optimal energy management strategy for a dual-mode power-split hybrid electric vehicle based on an explicit model predictive control algorithm. Energy 2019, 172, 1161–1178.

- Fu, Z.; Zhu, L.; Tao, F.; Si, P.; Sun, L. Optimization based energy management strategy for fuel cell/battery/ultracapacitor hybrid vehicle considering fuel economy and fuel cell lifespan. Int. J. Hydrogen Energy 2020, 45, 8875–8886.

- Ahmadi, S.; Bathaee, S.M.T.; Hosseinpour, A.H. Improving fuel economy and performance of a fuel-cell hybrid electric vehicle (fuel-cell, battery, and ultra-capacitor) using optimized energy management strategy. Energy Convers. Manag. 2018, 160, 74–84.

- Li, P.; Huangfu, Y.; Tian, C.; Quan, S.; Zhang, Y.; Wei, J. An Improved Energy Management Strategy for Fuel Cell Hybrid Vehicles Based on Pontryagin’s Minimum Principle. IEEE Trans. Ind. Appl. 2022, 58, 4086–4097.

- Wang, Y.; Zhang, Y.; Zhang, C.; Zhou, J.; Hu, D.; Yi, F.; Fan, Z.; Zeng, T. Genetic algorithm-based fuzzy optimization of energy management strategy for fuel cell vehicles considering driving cycles recognition. Energy 2023, 263, 126112.

- Min, D.; Song, Z.; Chen, H.; Wang, T.; Zhang, T. Genetic algorithm optimized neural network based fuel cell hybrid electric vehicle energy management strategy under start-stop condition. Appl. Energy 2022, 306, 118036.

- Wu, P.; Partridge, J.; Anderlini, E.; Liu, Y.; Bucknall, R. Near-optimal energy management for plug-in hybrid fuel cell and battery propulsion using deep reinforcement learning. Int. J. Hydrogen Energy 2021, 46, 40022–40040.

- Tang, X.; Zhou, H.; Wang, F.; Wang, W.; Lin, X. Longevity-conscious energy management strategy of fuel cell hybrid electric Vehicle Based on deep reinforcement learning. Energy 2022, 238, 121593.

- Huang, Y.; Hu, H.; Tan, J.; Lu, C.; Xuan, D. Deep reinforcement learning based energy management strategy for range extend fuel cell hybrid electric vehicle. Energy Convers. Manag. 2023, 277, 116678.

- Huo, W.; Chen, D.; Tian, S.; Li, J.; Zhao, T.; Liu, B. Lifespan-consciousness and minimum-consumption coupled energy management strategy for fuel cell hybrid vehicles via deep reinforcement learning. Int. J. Hydrogen Energy 2022, 47, 24026–24041.

- Sun, H.; Fu, Z.; Tao, F.; Zhu, L.; Si, P. Data-driven reinforcement-learning-based hierarchical energy management strategy for fuel cell/battery/ultracapacitor hybrid electric vehicles. J. Power Sources 2020, 455, 227964.

- Yan, M.; Xu, H.; Li, M.; He, H.; Bai, Y. Hierarchical predictive energy management strategy for fuel cell buses entering bus stops scenario. Green Energy Intell. Transp. 2023, 2, 100095.

- Milanes, V.; Shladover, S.E.; Spring, J.; Nowakowski, C.; Kawazoe, H.; Nakamura, M. Cooperative adaptive cruise control in real traffic situations. IEEE Trans. Intell. Transp. Syst. 2013, 15, 296–305.

- Wolf, P.; Kurzer, K.; Wingert, T.; Kuhnt, F.; Zollner, J.M. Adaptive behavior generation for autonomous driving using deep reinforcement learning with compact semantic states. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 993–1000.

- Wang, P.; Chan, C.Y. Autonomous ramp merge maneuver based on reinforcement learning with continuous action space. arXiv 2018, arXiv:1803.09203.

- Zhu, M.; Wang, X.; Wang, Y. Human-like autonomous car-following model with deep reinforcement learning. Transp. Res. Part C Emerg. Technol. 2018, 97, 348–368.

- Barkenbus, J.N. Eco-driving: An overlooked climate change initiative. Energy Policy 2010, 38, 762–769.

- Barth, M.; Boriboonsomsin, K. Energy and emissions impacts of a freeway-based dynamic eco-driving system. Transp. Res. Part D Transp. Environ. 2009, 14, 400–410.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).