Abstract

In recent years, the protection and management of water environments have received heightened attention because of their critical importance. Detecting small objects in unmanned aerial vehicle (UAV) images remains a persistent challenge because such objects occupy few pixels and are easily confused with background noise. To address this challenge, this paper proposes an integrated object detection approach that uses an improved YOLOv5 model for real-time detection of small water surface floaters. The improved model integrates shallow and deep features more effectively and reduces missed detections, which matches the characteristics of the water surface floater dataset. Compared with previous studies, the proposed model shows clear improvements in detecting small water surface floaters. Specifically, it achieves an average precision (AP) of 86.3%, a recall (R) of 79.4%, and a speed of 92 frames per second (FPS). Compared with the original YOLOv5 model, AP and R increase by 5% and 6.1%, respectively. The proposed improved YOLOv5 model is therefore well suited to the real-time detection of small objects on the water’s surface and can support large-scale, high-precision, intelligent monitoring of water surface floaters.

1. Introduction

Water is the source of life, and oceans and rivers cover about 71% of the Earth’s area [1]. Rivers constitute a vital component of the global water cycle and serve a crucial function in facilitating the transfer [2]. However, recent global economic expansion and urbanization have caused significant damage to the natural environment [3] and severe water pollution [4]. Real-time monitoring of water environments has therefore become crucial as water quality continues to deteriorate. At present, images of the water’s surface are susceptible to overexposure or dim lighting, depending on the intensity of sunlight, which reduces the available information about target features and makes recognition difficult.

Object detection is one of the most widely used applications in UAV missions. Due to the angle and flight height of UAV photography, small objects account for a large proportion of UAV images compared to general scenes [5]. Small object detection has been one of the key difficulties in the field of object detection [6] with problems, such as invalid image feature information and blurred object features. To solve this problem, many scholars have proposed solutions. Xia et al. proposed an automated driving system (ADS) data acquisition and analytics platform for vehicle trajectory extraction, reconstruction, and evaluation. In addition to collecting various sensor data, the platform can also use deep learning to detect small targets, such as vehicle trajectories [7]. Liu et al. proposed a model for tassel detection in maize, named yolov5-tassel. The authors trained the yolov5-tassel model based on UAV remote sensing images and achieved an mAP value of 44.7%, which is an improvement compared to FCOS, RetinaNet, and YOLOv5 in terms of detecting small tassels in maize [8]. Liu et al. proposed multibranch parallel feature pyramid networks (MPFPN) to detect small objects under UAV images. The MPFPN model applies the SSAM attention module [9] to attenuate the effect of background noise and uses cascade architecture in the Fast R-CNN stage to achieve a more powerful localization [10]. Chen et al. proposed small object detection networks based on a classification-oriented super-resolution generative adversarial network (CSRGAN), which is a model that adds classification branches and introduces classification losses into a typical SRGAN [11]. The experimental results demonstrate that CSRGAN outperforms VGG16 in classification [12]. In this study, UAV technology and deep learning are applied to small object detection at the same time. 
However, images of the water’s surface are susceptible to overexposure or dim lighting, depending on the intensity of sunlight, which reduces the available information about floater features and makes recognition difficult. Deep learning models face further problems when detecting small water surface floaters: small floaters lose part of their features during down-sampling, which weakens global feature extraction and leads to low detection accuracy and poor feature recognition. Therefore, directly applying the original YOLOv5 to object detection in UAV images is not very effective. At the same time, UAVs are a powerful complement to conventional water environment monitoring and assessment and have gradually been applied to water environment detection. This study therefore proposes an efficient and accurate YOLOv5-based method for detecting small water surface floaters in UAV-captured images. The model can effectively locate water surface floaters and thus assist salvage work.

Our contribution can be summarized as follows:

- We have improved small water surface floater detection in the YOLOv5 network architecture by incorporating a small object detection head from a shallow layer. This integration of spatial and semantic feature information maximizes the retention of critical feature information for small water surface floaters, enhancing their detection performance.

- We have replaced the loss function CIOU with SIOU, which considers the direction of the real frame and the prediction frame, to improve the effectiveness of small water surface floater detection. This modification accelerates the convergence of the model.

- We have replaced the non-maximum suppression function (NMS) with soft-NMS, which significantly improves the detection performance of small water surface floaters in our improved YOLOv5 model. To prevent small object prediction boxes from being deleted and causing missed detection in soft-NMS, we have added a weight function.

The remainder of this paper is organized as follows: Section 2 introduces commonly used object detection methods and object detection based on unmanned aerial vehicle images. Section 3 introduces the methods employed to detect small water surface floaters, the data sources used in this study, and the experimental evaluation metrics. Section 4 analyzes the statistical information of the water surface floater dataset and summarizes the object detection results. Section 5 analyzes the effectiveness of the proposed method for detecting small objects and discusses potential cross-disciplinary applications. Lastly, Section 6 concludes the paper and suggests avenues for future research.

2. Related Work

2.1. Object Detection

Computer vision research is mainly concerned with image classification, object detection, object tracking, semantic segmentation, and instance segmentation. Object detection is one of the most fundamental and challenging tasks in the field of computer vision [13]. Exploring efficient real-time object detection models has been a hot research topic in recent years [14]. Traditional object detection methods usually include region proposal, feature extraction, feature fusion, and classifier training, all of which require the laborious manual production of object features and must be completed step by step [15]. Therefore, traditional object detection methods suffer from massive redundancy, time-consuming computation, and low accuracy. With the rapid development of deep learning theory, deep learning has made great progress in computer vision tasks such as image classification and object detection [16], and object detection has entered a new phase. In the 2012 ImageNet competition, A. Krizhevsky et al. used a convolutional neural network (CNN) [17] to achieve much better image classification results than traditional methods. CNN-based detectors can generate region proposals through a region proposal network (RPN) [18] at low cost, which significantly improves the efficiency of object detection. In 2014, R. Girshick et al. [19] applied a region-based convolutional neural network to object detection for the first time, and the detection results improved substantially. Compared to traditional methods, this end-to-end network is more popular because it reduces complex steps such as data preprocessing and manual design of object features [20]. Since then, deep learning has developed rapidly in object detection and has been widely used in practice [21].

There are two major categories of mainstream deep learning object detection algorithms. The first is one-stage object detectors, such as the YOLO series, SSD [22], RetinaNet [23], and FCOS [24]. The second is two-stage object detectors, such as R-CNN [25], Fast R-CNN [26], Faster R-CNN [27], and Mask R-CNN [28]. For example, the two-stage object detector Faster R-CNN integrates a region proposal network (RPN) on top of Fast R-CNN and mainly consists of the shared convolutional layer module, the RPN, and the Fast R-CNN detector. However, because two-stage object detectors generate a large number of prediction frames, they require heavy computation and detect slowly, which makes them unsuitable for real-time detection tasks. In 2015, J. Redmon et al. proposed a one-stage object detector, You Only Look Once (YOLO) [29], which directly obtains the class and bounding box information of the object and greatly improves the object detection speed. Ma et al. proposed an improved YOLOv3 [30], which has stronger noise immunity and better generalization ability. Deep learning has also been applied successfully to the detection of water surface floaters. van Lieshout et al. constructed a plastic floating debris monitoring dataset using videos collected from five different waterway bridges in Jakarta, Indonesia, and used Faster R-CNN to first detect regions that may contain plastic floating materials and then Inception V2 [31], pre-trained on COCO [32], to determine whether these regions contain plastic [33]. Li et al. acquired ocean images of the South China Sea using a camera mounted on an unmanned ship and used a fusion model based on YOLOv3 with DenseNet [34] to detect sea surface objects [35]. Stofa et al. selected the DenseNet model to detect ships in remotely sensed images and, by fine-tuning the hyperparameters, settled on a batch size of 16 and a learning rate of 0.0001. The model uses the Adam optimizer [36] with optimized parameter settings [37].
In contrast, YOLOv5 [38] discards the candidate frame generation phase and performs classification and regression directly on the objects, which improves the real-time detection speed of the algorithm, and its model complexity is about 10% lower than that of YOLOv4 [39]. YOLOv5 has a stronger generalization capability and a lighter network structure than other object detection models. Moreover, the computational resources of UAV platforms are generally limited, so the impact of the model on detection speed must be considered. One-stage object detectors are therefore more suitable for UAV platforms than two-stage object detectors. For these reasons, the YOLOv5 model was selected as the baseline model for detecting small water surface floaters in this study.

Small objects usually have lower resolution and less distinctive features. Therefore, achieving precise detection of small objects is a hot issue in the field of object detection, and many scholars have studied it. Kim et al. inserted a high-resolution processing module (HRPM) and a sigmoid fusion module (SFM), which not only reduced computational complexity but also improved the detection accuracy of small targets. They obtained good detection results for small vehicles in drone reconnaissance images [40]. Wang et al. proposed a bidirectional attention network called BANet, which addressed inaccurate and inefficient detection of small and multiple targets. The model achieved an AP improvement of 0.55–2.93% compared to YOLOX on the VOC2012 dataset and an AP improvement of 0.3–1.01% on the MSCOCO2017 dataset [41]. Yang et al. proposed QueryDet, which uses a query mechanism called cascade sparse query (CSQ) to speed up inference by computing detection results from sparse queries. The model obtains high-resolution feature maps while avoiding redundant calculations in the background area. QueryDet was applied to FCOS and Faster R-CNN and tested on the COCO dataset and the VisDrone dataset for small objects, achieving better accuracy and inference speed than the original algorithms [42]. Liu et al. addressed the low accuracy of small target recognition by changing the ROI alignment method, which reduced the quantization error of Faster R-CNN and improved its accuracy by 7% compared to the original model [43].

2.2. Object Detection in UAV-Captured Images

UAVs have been widely applied in industries such as agriculture, forestry, electric power, atmospheric detection, and mapping. Compared with traditional methods, UAVs [44] offer flexibility and mobility, high efficiency and energy saving, diversified results, and low operating costs. Lan et al. combined UAV technology and deep learning to detect diseased plants and abnormal areas in orchards using the lightweight Swin-T YOLOX model. The improved model increased detection accuracy by 1.9% compared with the original YOLOX [45]. Bajić et al. used an improved YOLOv5 to detect unexploded remnants of war in UAV thermal imaging, and the accuracy was above 90% for all 11 detection objects [46]. Liang et al. proposed a helmet detection system based on UAV low-altitude remote sensing, which raised the AP value of small object detection to 88.7% and significantly improved the network’s detection performance for small objects [47]. Wang et al. built enhanced CSPDarknet (ECSP) and weighted aggregate feature re-extraction pyramid (WAFR) modules on the YOLOX-nano network, which addressed the low recognition accuracy for grazing livestock caused by the small number of occupied pixels [48]. However, because objects in UAV images occupy a limited number of pixels and provide few features, detection accuracy remains low. Thus, small object detection based on UAV imagery remains a significant challenge.

2.3. Object Detection for Small Water Floater

As deep learning is increasingly applied to object detection, some researchers have also applied it to water surface floater detection. Applying deep learning to floating objects on the water’s surface has contributed greatly to their timely detection and handling and to improving the supervision of rivers and lakes. Li et al. collected marine images from the South China Sea using a camera mounted on an unmanned boat and obtained a dataset containing 4000 images after data augmentation. A fusion model based on YOLOv3 and DenseNet was used to detect sea surface vessel targets [35]. Zhang et al. used a dataset extracted from three days of video monitoring of a location on the Beijing North Canal to detect plastic bags and bottles floating on the water’s surface. The detection model was Faster R-CNN with VGG16 as the feature extractor, in which conv4_3 and conv5_3 of VGG16 were selected for feature fusion to improve the detection accuracy of small objects [49]. In 2020, van Lieshout et al. constructed a plastic floating garbage monitoring dataset using videos collected from five different waterway bridges in Jakarta, Indonesia, and identified plastic floating objects through two rounds of detection. Faster R-CNN was used to detect regions that may contain plastic floating objects, and then Inception V2 pre-trained on COCO was used to determine whether these regions contain plastic [33].

3. Materials and Methods

3.1. Water Surface Floater Dataset

3.1.1. Acquisition of Water Surface Floater Dataset

To the best of our knowledge, no dedicated dataset is available for water surface floaters, so we created our own dataset for this research. The dataset was collected from river segments in Fuzhou City, Fujian Province, China, primarily from rivers in residential areas. The floater types included in this dataset are representative of domestic waste generated by human activities. The data were collected using a DJI Mavic 2 Pro drone (DJI, Shenzhen, China), with the drone parameters shown in Table 1. To ensure a successful flight, GPS calibration, automated estimation of vehicle sideslip angle considering signal measurement characteristics, IMU calibration by fusing on-board sensor information, and camera calibration were completed before the unmanned aerial vehicle flight. The drone’s flight altitude varied depending on the width of the river, typically ranging from 20 to 80 m, with the camera pointing vertically downward. The recorded videos were saved in .mp4 format with a resolution of 3840 × 2160 pixels.

Table 1.

UAV shooting parameters.

3.1.2. Frame Capture as Images and Dataset Annotation

In this study, the OpenCV library was used to extract frames from the videos obtained by UAV photography. Images showing the UAV’s take-off and landing, obscured river areas, or no water surface objects were then excluded from the dataset. The remaining 1000 images, with a resolution of 3840 × 2160 pixels, were used as the water surface object dataset for this investigation.

The acquired images were annotated with LabelImg, a graphical image labeling tool developed in Python and built with the Qt framework. A total of 2000 water surface object images obtained after frame interception were labeled using this software. After labeling, the annotated data were saved in the .xml file format, containing information such as the file saving path, image resolution, label name, and coordinates of the four vertices of each labeled frame.
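As a rough illustration of how such annotation files can be read back for training, the sketch below parses one annotation and converts a box to the normalized center format that YOLO-style training typically expects. It assumes LabelImg's default Pascal VOC layout, in which each box is stored as two opposite corners (`xmin`, `ymin`, `xmax`, `ymax`); the file path, the class name `bottle`, and the helper names are hypothetical and do not come from this study.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in the Pascal VOC layout (assumed, not taken from the dataset).
SAMPLE_XML = """<annotation>
  <path>/data/floaters/frame_0001.jpg</path>
  <size><width>3840</width><height>2160</height><depth>3</depth></size>
  <object>
    <name>bottle</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>128</xmax><ymax>224</ymax></bndbox>
  </object>
</annotation>"""


def parse_annotation(xml_text):
    """Return (image_width, image_height, [(label, xmin, ymin, xmax, ymax), ...])."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        coords = tuple(int(bndbox.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((obj.findtext("name"),) + coords)
    return width, height, boxes


def voc_to_yolo(box, width, height):
    """Convert corner coordinates to normalized (cx, cy, w, h)."""
    _, xmin, ymin, xmax, ymax = box
    return (
        (xmin + xmax) / 2 / width,
        (ymin + ymax) / 2 / height,
        (xmax - xmin) / width,
        (ymax - ymin) / height,
    )


if __name__ == "__main__":
    w, h, boxes = parse_annotation(SAMPLE_XML)
    print(boxes[0], voc_to_yolo(boxes[0], w, h))
```

Normalizing by the image size keeps the labels valid if the images are later resized for training.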

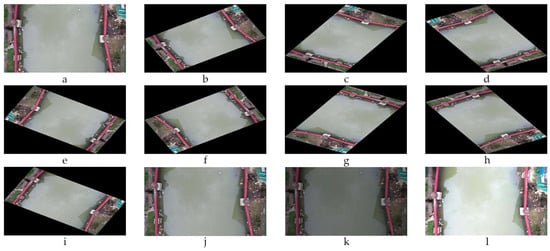

3.1.3. Dataset Augmentation

To improve the generalization ability of the detection model, we performed data augmentation on the water surface floater dataset. Augmentation enriches the diversity of the dataset and thereby improves the robustness of the model. The resolution of the augmented images was uniformly adjusted to 3840 × 2160. The images before and after augmentation are illustrated in Figure 1. The dataset was expanded to 12,000 images after the augmentation process. For deep learning training, the dataset was further divided into a training set (60%, 7200 images), a validation set (20%, 2400 images), and a test set (20%, 2400 images).

Figure 1.

Illustrations of the dataset augmentation: (a) origin; (b) 30° rotation; (c) 60° rotation; (d) 120° rotation; (e) 150° rotation; (f) 210° rotation; (g) 240° rotation; (h) 300° rotation; (i) 330° rotation; (j) Gaussian noise; (k) brightness enhancement (0.6); (l) brightness diminution (1.3).
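The 60/20/20 division described in Section 3.1.3 can be sketched as follows. This is a minimal illustration that assumes a seeded random shuffle over image identifiers; the paper does not state how images were assigned to each subset, and the function name `split_dataset` is hypothetical.

```python
import random


def split_dataset(items, train=0.6, val=0.2, seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split repeatable
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    train_set = items[:n_train]
    val_set = items[n_train:n_train + n_val]
    test_set = items[n_train + n_val:]  # whatever remains goes to the test set
    return train_set, val_set, test_set


if __name__ == "__main__":
    train_set, val_set, test_set = split_dataset(range(12000))
    print(len(train_set), len(val_set), len(test_set))
```

With 12,000 images this yields subsets of 7200, 2400, and 2400 images, matching the sizes reported above.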

3.2. Methodology

3.2.1. Method Overview

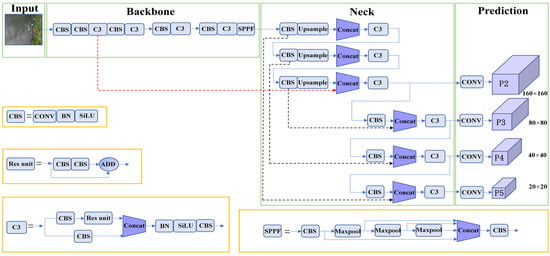

The original YOLOv5 fuses feature maps at low resolution, so the model learns too few target features and pays too little attention to water surface floaters. Small water surface floaters are small in size and weak in intensity in the feature map and are often obscured by a complex background and noise [50]. The CIOU loss function of the original YOLOv5 accounts for three factors in the bounding box loss: center point distance, aspect ratio, and overlap area [51]. However, it does not consider the directional relationship between the predicted box and the real box, which may lead to slow convergence and low accuracy. Moreover, because the detection boxes of small water surface floaters are typically small, the original NMS algorithm of YOLOv5 sets the score of the current detection box to 0 whenever its IOU with the highest-scoring detection box exceeds a certain threshold [52]. As a result, many potentially correct prediction boxes are discarded, leading to incorrect and missed detections of small floating objects on the water’s surface. To address these issues, we propose the following improvements to the original YOLOv5: (1) We introduce a small target detection head to help the YOLOv5 model learn more location information about small water surface floaters. The improved model can make predictions on a 160 × 160 feature map, which increases the probability of detecting small water surface floaters. (2) We introduce SIOU to enable YOLOv5 to detect small water surface floaters faster and more accurately. (3) We introduce soft-NMS to reduce the missed detection rate of the original YOLOv5 and improve the recall of the model for small water surface floaters.

3.2.2. Prediction Head for Small Objects

The YOLOv5 model performs detection on feature maps of three different scales, namely 80 × 80, 40 × 40, and 20 × 20. Correspondingly, three sizes of prior frames are used to detect small, medium, and large objects in the original image. However, because of the successive down-sampling convolutional layers in the YOLOv5 backbone, feature information gradually decreases as the network deepens during feature extraction. Deeper feature maps tend to focus on the global information of the image, which leads to poor detection of small objects. Although shallow feature maps contain more detailed location information that can partly compensate for the deficiencies of deeper features, using only shallow features can cause false and missed detections because high-level semantic guidance is lacking.

To address this issue and enhance the detection of small objects, we added a feature scale dedicated to small objects to the original detection layers of YOLOv5s by fusing shallow features with deep features. Specifically, after the feature map was up-sampled to 80 × 80, we continued up-sampling to obtain a 160 × 160 feature map and then fused it with the same-size feature map from the P2 layer to obtain a larger feature map for small object detection. This approach makes better use of both shallow and deep features. The feature maps of 80 × 80, 40 × 40, and 20 × 20 were obtained through convolution after feature concatenation of layer 22 and layer 18, layer 25 and layer 14, and layer 28 and layer 10, respectively.

By adding a higher-resolution 160 × 160 prediction scale, our improved YOLOv5 structure increases the network’s sensitivity to small objects, extracts their features more effectively, and predicts them more accurately. The improved YOLOv5 structure is illustrated in Figure 2.

Figure 2.

The improved YOLOv5 model. CONV: the convolutional layer; BN: the batch normalization layer; Upsample: the up-sampling layer; Maxpool: the max-pooling layer. The red dashed line represents the newly added prediction head for small objects P2 layer.
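The feature-map sizes quoted in Section 3.2.2 follow directly from the input resolution and the down-sampling stride of each prediction head. The short sketch below assumes a 640 × 640 input and strides of 4, 8, 16, and 32 for the P2 to P5 levels; the strides are an assumption consistent with the sizes stated in the text, and the function name is hypothetical.

```python
def head_grid_sizes(input_size=640, strides=(4, 8, 16, 32)):
    """Map each pyramid level (P2, P3, ...) to the side length of its prediction grid."""
    return {f"P{i + 2}": input_size // stride for i, stride in enumerate(strides)}


if __name__ == "__main__":
    grids = head_grid_sizes()
    for level, side in grids.items():
        # A finer grid means each cell covers fewer input pixels.
        print(level, f"{side} x {side} cells, each covering {640 // side} x {640 // side} pixels")
```

Under these assumptions, each cell of the added P2 head covers 4 × 4 input pixels, compared with 8 × 8 for the finest head of the original model, which is why it is better matched to floaters that span only a few pixels.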

3.2.3. SIOU

The loss function of YOLOv5 is composed of three parts: confidence loss, classification loss, and localization loss. Currently, the localization regression loss function includes IOU [50], GIOU [53], DIOU [54], CIOU, EIOU [50], and SIOU, etc. The default localization loss function of YOLOv5 is complete intersection over union (CIOU), which considers three aspects, namely center distance, aspect ratio, and overlap area, in the loss of the bounding box, compared to the common IOU. However, CIOU does not account for the directional relationship between the real box and the predicted box, leading to slow convergence and poor accuracy. To address this issue, our paper proposes two box consistency penalty terms based on DIOU and introduces SCYLLA intersection over union (SIOU), which is suitable for small object prediction box regression. Compared with the CIOU loss function, the SIOU loss function considers the vector angle between the real frame and the predicted frame. It enables the model to more easily and quickly approach the real frame during the training process, leading to improved detection accuracy and speed. SIOU includes four components: angle cost, distance cost, shape cost, and IOU cost. This approach effectively reduces the total degrees of freedom of the loss and improves the accuracy of inference.

The specific definitions of IOU, CIOU, and SIOU are shown in the following equations:

The definition of IOU is shown below:

$$\mathrm{IOU} = \frac{\left| ROI \cap GT \right|}{\left| ROI \cup GT \right|} \tag{1}$$

IOU measures how closely the region candidate frame matches the real object frame: it is the ratio of the area where the two frames overlap to the area of their union, as shown in Equation (1), where ROI denotes the region candidate frame and GT denotes the real object frame.
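The overlap ratio described above can be computed for axis-aligned boxes as in the following sketch. Boxes are assumed to be given as `(x1, y1, x2, y2)` corner tuples, which is a convention chosen here for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region; zero if the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # Two 10 x 10 boxes offset by 5 pixels in each direction: 25 / 175.
    print(iou((0, 0, 10, 10), (5, 5, 15, 15)))
```

For small objects, a shift of only a few pixels changes this ratio sharply, which is one reason box regression and suppression thresholds matter so much for small floaters.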

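The definition of CIOU [55] is shown below:

$$L_{CIOU} = 1 - \mathrm{IOU} + \frac{\rho^{2}(B, B^{gt})}{c^{2}} + \alpha v \tag{2}$$

$$v = \frac{4}{\pi^{2}}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^{2} \tag{3}$$

In Equations (2) and (3), $B^{gt}$ and $B$ denote the ground-truth box and the predicted box, respectively; $w^{gt}$ and $h^{gt}$ denote the width and height of $B^{gt}$, respectively; and $w$ and $h$ denote the width and height of $B$, respectively. Following the standard CIOU formulation, $\rho(B, B^{gt})$ is the distance between the center points of the two boxes, $c$ is the diagonal length of the smallest box enclosing both, and $\alpha = v / \left((1 - \mathrm{IOU}) + v\right)$ is a trade-off weight.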

In summary, the final SIOU [56] loss function is defined as follows. The IOU cost is defined in Equation (1), while the angle cost, distance cost, shape cost, and SIOU are defined in Equations (4)–(10), respectively.

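Angle cost:

$$\Lambda = 1 - 2\sin^{2}\left(\arcsin(x) - \frac{\pi}{4}\right) \tag{4}$$

$$x = \frac{c_h}{\sigma} \tag{5}$$

In Equations (4) and (5), $\sigma$ is the distance between the center point of the real frame and the center point of the predicted frame, and $c_h$ is the height difference between the center point of the real frame and the center point of the predicted frame, so that $x$ is the sine of the angle between the line joining the two center points and the horizontal axis.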

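Distance cost:

$$\Delta = \sum_{t = x, y}\left(1 - e^{-\gamma \rho_t}\right) \tag{6}$$

$$\rho_x = \left(\frac{b^{gt}_{c_x} - b_{c_x}}{C_w}\right)^{2}, \quad \rho_y = \left(\frac{b^{gt}_{c_y} - b_{c_y}}{C_h}\right)^{2}, \quad \gamma = 2 - \Lambda \tag{7}$$

In Equations (6) and (7), $C_w$ and $C_h$ denote the width and height of the minimum outer rectangle of the real box and the predicted box, respectively; $b^{gt}_{c_x}$ and $b^{gt}_{c_y}$ are the coordinates of the center point of the real box; $b_{c_x}$ and $b_{c_y}$ are the coordinates of the center point of the prediction frame; and $\Lambda$ is the angle cost, as expressed by Equations (4) and (5).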

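Shape cost:

$$\Omega = \sum_{t = w, h}\left(1 - e^{-\omega_t}\right)^{\theta} \tag{8}$$

$$\omega_w = \frac{\left| w - w^{gt} \right|}{\max(w, w^{gt})}, \quad \omega_h = \frac{\left| h - h^{gt} \right|}{\max(h, h^{gt})} \tag{9}$$

In Equations (8) and (9), $w^{gt}$ and $h^{gt}$ denote the width and height of the real box, respectively; $w$ and $h$ denote the width and height of the prediction box, respectively; and $\theta$ controls the degree of attention paid to the shape loss.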

$$L_{SIOU} = 1 - \mathrm{IOU} + \frac{\Delta + \Omega}{2} \tag{10}$$

In Equation (10), IOU denotes the degree of overlap between the actual frame and the predicted frame, as expressed by Equation (1); $\Delta$ denotes the distance cost, as expressed by Equations (6) and (7); and $\Omega$ indicates the shape cost between the predicted frame and the actual frame, as expressed by Equations (8) and (9).
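To make the interaction of the four SIOU components concrete, the sketch below evaluates the loss for a single pair of boxes using the commonly published SIOU formulation (angle, distance, shape, and IOU costs). It is an illustrative scalar version rather than the training code used in this study: boxes are assumed to be `(x1, y1, x2, y2)` tuples, the shape exponent `theta=4.0` is an assumed value, and `eps` is added only for numerical safety.

```python
import math


def siou_loss(pred, gt, theta=4.0, eps=1e-9):
    """SIOU loss for one predicted box and one ground-truth box (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    w, h = px2 - px1, py2 - py1
    w_gt, h_gt = gx2 - gx1, gy2 - gy1

    # IOU cost: overlap area divided by union area.
    inter_w = max(0.0, min(px2, gx2) - max(px1, gx1))
    inter_h = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = inter_w * inter_h
    iou = inter / (w * h + w_gt * h_gt - inter + eps)

    # Offsets between box centers and size of the smallest enclosing rectangle.
    dx = (gx1 + gx2) / 2 - (px1 + px2) / 2
    dy = (gy1 + gy2) / 2 - (py1 + py2) / 2
    enclose_w = max(px2, gx2) - min(px1, gx1)
    enclose_h = max(py2, gy2) - min(py1, gy1)

    # Angle cost: largest when the centers are offset at 45 degrees, zero along an axis.
    sigma = math.hypot(dx, dy)
    x = min(abs(dy) / (sigma + eps), 1.0)
    angle_cost = 1 - 2 * math.sin(math.asin(x) - math.pi / 4) ** 2

    # Distance cost: center offsets normalized by the enclosing rectangle.
    gamma = 2 - angle_cost
    rho_x = (dx / (enclose_w + eps)) ** 2
    rho_y = (dy / (enclose_h + eps)) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost: relative mismatch in width and height.
    omega_w = abs(w - w_gt) / (max(w, w_gt) + eps)
    omega_h = abs(h - h_gt) / (max(h, h_gt) + eps)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1 - iou + (distance_cost + shape_cost) / 2


if __name__ == "__main__":
    print(siou_loss((0, 0, 10, 10), (2, 0, 12, 10)))
    print(siou_loss((0, 0, 10, 10), (5, 0, 15, 10)))
```

The loss is close to zero for identical boxes and grows as the predicted box drifts away from the ground truth, with the angle term modulating how strongly the center offset is penalized.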

3.2.4. Soft-NMS

The original YOLOv5 uses the traditional NMS algorithm, which sets the score of a detection frame to 0 when its IOU with the highest-scoring detection frame is greater than a certain threshold. Object frames with a large overlap area are therefore discarded outright, which lowers the recall for small objects. To avoid deleting detection frames that contain an object, our study uses the soft non-maximum suppression (soft-NMS) algorithm, which multiplies the score of the current detection frame by a weighting function that attenuates the scores of neighboring frames overlapping the highest-scoring frame. The greater the overlap with the highest-scoring frame, the faster the score decays. This avoids removing a frame that contains an object and handles the case where two similar frames cover the same object. Because soft-NMS takes the overlap between detection frames into account, it reduces the false deletions made by the traditional NMS algorithm and leads to more accurate and reasonable predictions for small objects.

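The processing of the conventional NMS algorithm can be expressed by the score reset function in Equation (11).

$$s_i = \begin{cases} s_i, & \mathrm{Iou}(A, B_i) < N_t \\ 0, & \mathrm{Iou}(A, B_i) \ge N_t \end{cases} \tag{11}$$

The soft-NMS algorithm can be expressed by the score reset function shown in Equation (12).

$$s_i = \begin{cases} s_i, & \mathrm{Iou}(A, B_i) < N_t \\ s_i \left(1 - \mathrm{Iou}(A, B_i)\right), & \mathrm{Iou}(A, B_i) \ge N_t \end{cases} \tag{12}$$

In Equations (11) and (12), $s_i$ denotes the score of the i-th detection frame; $A$ indicates the detection frame with the highest confidence in the region of interest; $B_i$ represents the i-th detection box; $\mathrm{Iou}(A, B_i)$ denotes the overlap of the i-th detection frame with $A$; and $N_t$ indicates the calibrated overlap threshold.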
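The difference between the two score reset rules can be seen in a small greedy suppression loop. The sketch below is a simplified illustration that assumes the linear soft-NMS weighting, in which an overlapping score is multiplied by one minus the IOU; the IOU threshold of 0.45 matches the test setting in Section 3.4, while the final score cut-off `score_thresh`, the box format, and the function names are assumptions made for this example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.45, score_thresh=0.001, soft=False):
    """Greedy NMS returning [(box_index, final_score), ...] in selection order.

    With soft=False, overlapping boxes have their score reset to 0 (hard NMS).
    With soft=True, their score is decayed by (1 - IOU) instead (linear soft-NMS).
    """
    scores = list(scores)
    remaining = list(range(len(boxes)))
    keep = []
    while remaining:
        best = max(remaining, key=lambda i: scores[i])
        remaining.remove(best)
        if scores[best] < score_thresh:
            break  # every remaining box scores lower still
        keep.append((best, scores[best]))
        for i in remaining:
            overlap = iou(boxes[best], boxes[i])
            if overlap >= iou_thresh:
                scores[i] = scores[i] * (1 - overlap) if soft else 0.0
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print("hard:", nms(boxes, scores))
    print("soft:", nms(boxes, scores, soft=True))
```

In this toy case the second box overlaps the first heavily: hard NMS removes it entirely, whereas soft-NMS keeps it with a reduced score, so a later confidence threshold decides whether it survives. This is the behavior that helps retain adjacent small floaters.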

3.2.5. The Improved YOLOv5 Model for Water Surface Floater Detection

YOLOv5 is a widely used one-stage object detector that simplifies the network structure and improves detection accuracy. Its fast detection, high accuracy, and flexible target recognition capabilities make it a popular choice. YOLOv5 consists of four parts: input, backbone, neck, and prediction. The input part is an RGB three-channel image of size 640 × 640. The backbone part consists of the CBS, C3, and SPPF modules. C3 increases the model’s receptive field using three different scales while significantly reducing computation. SPPF uses three different scales of maximum pooling layers to improve the nonlinear expression of the network. The neck part adopts the FPN + PAN structure; the combination of the two strengthens the network’s ability to fuse different feature layers. The prediction part uses the CIOU function as the bounding box loss function and NMS to filter multiple object boxes.

However, detecting small water surface floaters in UAV-captured images remains challenging because of the limited number of pixels they occupy. To address this, we propose an improved YOLOv5s model (Figure 2) that combines the small object prediction head, the SIOU loss, and soft-NMS, so that the new structure inherits their advantages and preserves both global and local features. The improved YOLOv5 model is therefore expected to be more effective for detecting small water surface floaters.

3.3. Model Evaluation Metrics

The definition of the confusion matrix is shown in Table 2.

Table 2.

Confusion matrix.

According to the combination of their true and predicted categories, the sample cases are divided into true positive, false positive, true negative, and false negative cases, denoted as TP, FP, TN, and FN. TP denotes the number of positive cases correctly judged as positive, FP denotes the number of negative cases incorrectly judged as positive, and FN denotes the number of positive cases incorrectly judged as negative. A detection is counted as correct only if the intersection ratio between the detection frame and the real frame is greater than the threshold used in this study. The confusion matrix is listed in Table 2.

P denotes precision, the probability that a case predicted as positive by the model is a true positive (the accuracy rate). R denotes recall, the ability of the model to find all positive cases (the all-check rate). They are calculated by Equations (13) and (14).

$$P = \frac{TP}{TP + FP} \tag{13}$$

$$R = \frac{TP}{TP + FN} \tag{14}$$
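As a quick worked example of these two ratios, the sketch below computes precision and recall from raw counts. The counts used in the example are hypothetical and are not results from this study.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from true positive, false positive, and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall


if __name__ == "__main__":
    # Hypothetical counts: 80 correct detections, 20 false alarms, 40 missed floaters.
    p, r = precision_recall(tp=80, fp=20, fn=40)
    print(f"precision={p:.3f}, recall={r:.3f}")
```

Missed small floaters increase FN and therefore lower recall without affecting precision, which is why recall is reported alongside AP throughout the evaluation.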

A series of (precision, recall) pairs is obtained by varying the confidence threshold, and plotting these pairs yields a PR curve. The performance of the model is measured by the area under the PR curve, called average precision (AP), which summarizes the precision for a single category over all recall levels. The mAP is the mean of the AP values over all categories, as defined in Equations (15) and (16).

$$AP = \int_{0}^{1} P(R)\, dR \tag{15}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{16}$$

Here, $N$ is the number of categories and $AP_i$ is the AP of the i-th category.
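The area under the PR curve can be approximated from a ranked list of detections, as sketched below. This example assumes all-point interpolation with a monotone precision envelope, which is one common convention; the exact interpolation used by a given evaluation toolkit may differ, and the detections in the example are hypothetical.

```python
def average_precision(scores, is_tp, n_gt):
    """Area under the PR curve for one category.

    scores: confidence of each detection; is_tp: whether each detection matched
    a ground-truth box; n_gt: number of ground-truth boxes (must be positive).
    """
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing in recall (precision envelope).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_values):
    """Mean of per-category AP values."""
    return sum(ap_values) / len(ap_values)


if __name__ == "__main__":
    ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], n_gt=4)
    print(ap, mean_average_precision([ap, 1.0]))
```

Because one of the four ground-truth boxes in the example is never detected, recall stops at 0.75 and the missing segment of the curve contributes nothing to the area.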

3.4. Experimental Environment and Setting

To enhance the model’s generalization ability and save training time, this study used weights pre-trained on the VOC dataset as the initial weights for the YOLOv5s model. P, R, AP, and FPS were the evaluation metrics used in this study. An IOU of 0.5 served as the training threshold. The default IOU of 0.45 and confidence of 0.25 were the basic parameters for the test. The water surface floater dataset was split into a 60% training set (7200 images), a 20% validation set (2400 images), and a 20% test set (2400 images). The training set and test set remained the same throughout each experiment. The computer used in this study had an Intel(R) Core(TM) i9-10900K CPU @ 3.70 GHz with 32 GB of RAM and an NVIDIA GeForce RTX 3070 graphics card with 8 GB of memory. The TensorFlow framework, version 2.5.0, and the PyTorch framework, version 1.12.1, were used. The training hyperparameters of the models used in this paper can be found in Table 3.

Table 3.

Major hyper-parameters used in this study for Faster R-CNN, SSD, YOLOv5, and Improved YOLOv5.

4. Results

4.1. Statistical Analysis of Water Surface Floaters Dataset

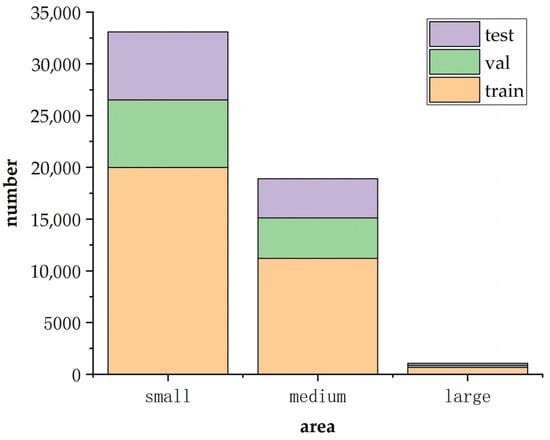

In the context of object detection, small objects can be defined in two ways: relative size and absolute size. In the relative size definition, objects with an area less than 0.12% of the total image area, or less than 256 × 256 pixels, are considered small. Alternatively, the absolute size definition can be used. In the MS COCO dataset, objects with a size less than 32 × 32 pixels are classified as small, while those with a size between 32 × 32 and 96 × 96 and larger than 96 × 96 are classified as medium and large, respectively.

For the purpose of this paper, the definition of small objects is based on absolute size. Specifically, any water surface floater object smaller than 32 × 32 pixels in the original UAV image of 3840 × 2160 pixels is considered small. As shown in Figure 3, more than 50% of the objects in the water surface floater dataset fall into the small object category. The training, validation, and test sets each contain an evenly distributed mix of large and small objects, which makes the distribution more reasonable and the results more convincing. Compared with most publicly available datasets, such as ImageNet and COCO, the water surface floater dataset places greater emphasis on small objects.

Figure 3.

Area statistics for object boxes in the water surface floater dataset: The number indicates the number of water surface floaters. Small, medium, and large indicate area < 32 × 32, 32 × 32 < area < 96 × 96 and area > 96 × 96 (pixel), respectively.
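The absolute-size categories used for these statistics can be expressed as a small helper. The sketch assumes each annotation is available as a (width, height) pair in pixels of the original image; assigning boxes that fall exactly on a boundary to the medium class is a choice made here, since the caption leaves the boundaries open.

```python
SMALL_AREA = 32 * 32   # upper bound for small objects, in pixels
LARGE_AREA = 96 * 96   # lower bound for large objects, in pixels


def size_category(width, height):
    """Classify a box as small, medium, or large by its pixel area."""
    area = width * height
    if area < SMALL_AREA:
        return "small"
    if area <= LARGE_AREA:  # boundary cases counted as medium (assumption)
        return "medium"
    return "large"


def small_fraction(boxes):
    """Share of boxes that fall into the small category."""
    small = sum(1 for w, h in boxes if size_category(w, h) == "small")
    return small / len(boxes)


# Hypothetical annotation sizes (width, height) in pixels.
example_boxes = [(10, 10), (40, 40), (100, 100), (5, 5)]
share = small_fraction(example_boxes)  # 0.5
```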

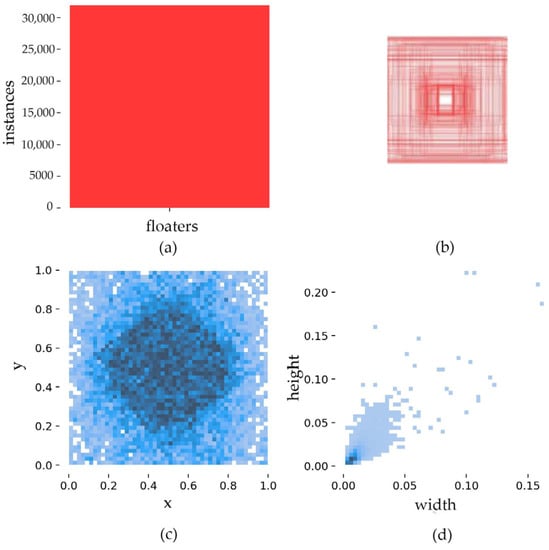

Figure 4 presents the object frames in the water surface floater dataset. Figure 4a presents the number of object frames in the training set, which contains more than 30,000 object frames; Figure 4b depicts the object frames; Figure 4c depicts the positions of the object frames in the whole image, where the frames are mostly concentrated in the center of the image and few lie around the edges; and Figure 4d shows the size of the object frames. In the training set, small objects account for the majority, which coincides with the number of small objects counted in Figure 3.

Figure 4.

Statistical diagram of the water surface floater training set: (a) the number of samples in the training dataset; (b) annotation boxes; (c) locations of the objects; (d) spatial size of the objects.

4.2. Comparison of Detection Performance before and after Model Improvement

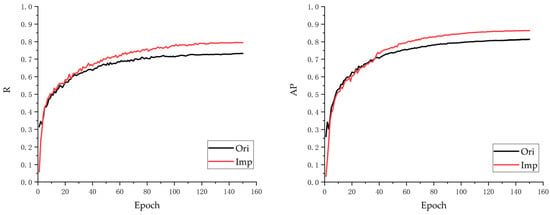

All models in our study were trained and evaluated on the same dataset to ensure a consistent evaluation of the results. We conducted a series of ablation experiments on the water surface floater dataset to investigate the potential for improving the YOLOv5 model. The experimental results are summarized in Table 4 and Figure 5. (1) On the water surface floater dataset, the original YOLOv5 achieved an AP of 81.3% and an R of 73.3%. We first replaced NMS with soft-NMS, resulting in YOLOv5_soft NMS, which showed a 0.2% increase in AP and a 3.1% increase in R. Next, we replaced CIOU with SIOU to obtain YOLOv5_SIOU, which showed a 1.4% increase in AP and a 1.7% increase in R. Finally, we modified the original network structure and added a small object detection head to obtain YOLOv5_small, which showed increases of 3.2% and 4.3% in AP and R, respectively. (2) The improved YOLOv5 achieved the best performance among all models, with an AP of 86.3% and an R of 79.4%, which were 5% and 6.1% higher than those of YOLOv5, respectively. The improved YOLOv5 model addresses the challenge of identifying small objects in UAV images, which offer limited features for recognition. (3) Although the improved YOLOv5 model is deeper and has slightly more parameters than the original YOLOv5 network, it provides a new approach for small object detection. We also compared the AP and R curves of the original YOLOv5 and the improved YOLOv5, which are shown in Figure 5. As the number of epochs increased, the AP and R values gradually increased until they stabilized, demonstrating that our model achieved better convergence.

Table 4.

Comparison of detection results on the water surface floater dataset under different improvement methods.

Figure 5.

The R and AP curves of YOLOv5 and improved YOLOv5. ‘Ori’ and ‘Imp’ denote YOLOv5 and improved YOLOv5, respectively.
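The recall gain from replacing NMS with soft-NMS in the ablation above comes from decaying the scores of overlapping boxes instead of discarding them. The sketch below shows the widely used Gaussian-decay variant as an illustration only; the weighting function, `sigma`, and `score_threshold` used in the paper are not given in this section, so all three are assumptions.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes in (x1, y1, x2, y2) form."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian soft-NMS: returns (box index, decayed score) pairs."""
    scores = list(scores)
    candidates = list(range(len(boxes)))
    kept = []
    while candidates:
        # Select the highest-scoring remaining box.
        best = max(candidates, key=lambda i: scores[i])
        candidates.remove(best)
        kept.append((best, scores[best]))
        survivors = []
        for i in candidates:
            # Decay the score by overlap instead of removing the box outright.
            overlap = iou(boxes[best], boxes[i])
            scores[i] *= math.exp(-(overlap ** 2) / sigma)
            if scores[i] >= score_threshold:
                survivors.append(i)
        candidates = survivors
    return kept


# Two heavily overlapping boxes and one separate box (hypothetical values).
boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (50, 50, 60, 60)]
kept = soft_nms(boxes, [0.9, 0.8, 0.7])
```

In this example the second box survives with a reduced score rather than being suppressed, which is how closely spaced floaters can still be reported.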

4.3. Test of Detection Performance before and after Model Improvement

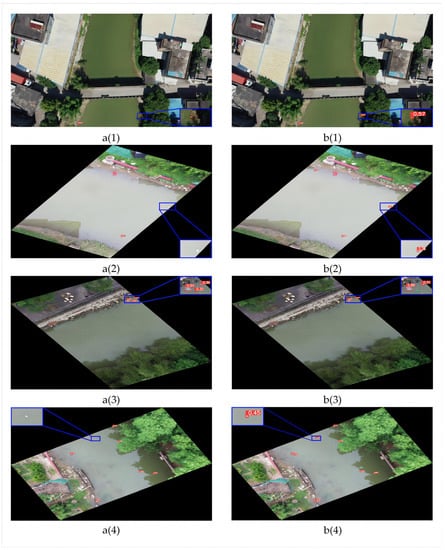

Detection models obtained from the original YOLOv5 network and the improved YOLOv5 network were applied to the water surface floater small object dataset. Figure 6 displays representative detection results of the original YOLOv5 and the improved YOLOv5 on the dataset, covering multiple shooting heights and different backgrounds. As Figure 6 shows, the improved YOLOv5 outperforms the original YOLOv5 by detecting smaller and more numerous water surface floaters, giving a higher recall rate.

Figure 6.

Example detection results on the water surface floater test dataset: (a(1)–a(4)) and (b(1)–b(4)) are the detection results of YOLOv5 and improved YOLOv5, respectively.

4.4. Performance Comparison of Detection with Other Object Detection Models

To investigate the applicability of our improved YOLOv5 model for small object detection, we compared its performance with the original YOLOv5, the SSD model, and the Faster R-CNN model. The results are presented in Table 5. Our analysis shows that the improved YOLOv5 model outperforms the Faster R-CNN and SSD models by 32.3% and 76.9% in AP and by 2.1% and 49.6% in R, respectively. These results demonstrate that our proposed model is more accurate and reliable for detecting small water surface floaters. Furthermore, the improved YOLOv5 achieved an average precision (AP) of 86.3% and a recall of 79.4%, which are 5% and 6.1% higher than those of the original YOLOv5 network, respectively. The improved YOLOv5 model significantly improves the detection accuracy of floating objects on the water’s surface and is more adaptable to various external conditions, such as lighting, background complexity, and water reflection, which supports the practicality and versatility of the model.

Table 5.

Results of the four object detection models.

5. Discussion

Compared with general small objects in UAV images, such as fish, bottles, crowds, and houses, small objects floating on the water’s surface pose additional problems, such as uncertain shapes, many different types, reflection of sunlight on the water’s surface, and mutual occlusion. Therefore, how to use deep learning models to accurately and quickly identify small objects floating on the water’s surface remains a key challenge. In this paper, we apply brightness enhancement and brightness reduction during image pre-processing to attenuate the effect of different light intensities on detection. We investigate the detection of small objects on the water’s surface based on the combination of UAV images and deep learning. After a dataset of floating objects on water is built, the data are augmented, and advanced deep learning models are then trained on this dataset. To better fit the detection of small water surface floaters, this study improves the YOLOv5 model to obtain the improved YOLOv5, which focuses more on small water surface floaters and improves both the accuracy and the efficiency of their detection.
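The brightness enhancement and reduction step can be pictured as scaling pixel intensities up or down. The following sketch operates on a grayscale image stored as nested lists of 8-bit values; the scaling factors 1.5 and 0.5 are purely illustrative, since the factors used in the paper are not stated here.

```python
def adjust_brightness(pixels, factor):
    """Scale 8-bit pixel intensities by `factor`, clamping to [0, 255]."""
    return [
        [min(255, max(0, round(value * factor))) for value in row]
        for row in pixels
    ]


# A tiny hypothetical 1 x 2 grayscale image.
image = [[100, 200]]
brighter = adjust_brightness(image, 1.5)  # [[150, 255]]
darker = adjust_brightness(image, 0.5)    # [[50, 100]]
```

Training on both brightened and darkened copies exposes the detector to a wider range of lighting conditions than the raw images alone.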

The method improved by Li et al., as mentioned before, achieved a 0.66% increase in AP compared to the original method when trained on a ship dataset. Zhang et al., by contrast, trained their improved method on a water surface floater dataset, where the AP was improved by 8.4% compared to the original method. However, its detection speed is only 11 FPS. By comparison, our proposed improved YOLOv5 model demonstrates remarkable performance in detecting small water surface floaters, achieving an AP of 86.3% and an R of 79.4%. Compared to the original YOLOv5, Faster R-CNN, and SSD models, it exhibits superior detection performance. The detection of small water surface floaters also improved in the test stage. The model also achieves an inference speed of 92 FPS, which is second only to the original YOLOv5 among the compared models. These results suggest the potential of our proposed model for small object detection and high-precision water surface floater monitoring.

Real-time small object detection methods can also benefit many other fields. For example, in medical image analysis, improved deep learning models can be used to detect and diagnose diseases, such as tumors, cancer, and neurological disorders. In the industrial sector, deep learning models can be used for quality inspection, product classification, production line detection, and other applications. Deep learning models also have a wide range of applications in fields such as unmanned driving, smart homes, and facial recognition. Therefore, research on deep learning models for water surface small object detection is not only important for water environment safety and ecological protection but also has broad application prospects.

6. Conclusions

In this study, a lightweight object detection model, improved YOLOv5, is introduced for detecting small water surface floaters. The model adds a small object detection head to the neck of the original YOLOv5 model, which deepens the network and better integrates shallow and deep features; replaces CIOU with SIOU, which considers the direction between the real frame and the predicted frame; and replaces NMS with soft-NMS, whose weight function addresses the problem of missed detections. The effect of illumination on the detection of small water surface floaters is reduced by increasing and decreasing the brightness during image pre-processing. Together, these improvements raise the accuracy and efficiency of the model for small water surface floater detection. Although the complexity of the improved YOLOv5 model increases compared to the original YOLOv5, the AP improves by 5%, and the R improves by 6.1%.

In comparison to other advanced object detection models, the improved YOLOv5 model achieves a detection speed of 92 FPS, surpassing Faster R-CNN and SSD. Therefore, compared to two-stage object detectors, our improved YOLOv5 model is better suited for deployment on mobile devices, enabling real-time identification and monitoring of small water surface floaters. The improved YOLOv5 balances processing speed and detection accuracy, and the model can be applied to real-time detection of small water surface floaters in UAV images.

However, this study still has clear shortcomings. For example, it is difficult to distinguish the specific categories of water surface floaters when multiple objects overlap or interlock, and it is difficult to keep the UAV at the same shooting height because of the complex environment near the ground, such as tree shading, power lines, and flying birds.

For water surface floater detection in UAV-captured images, the detection performance can still be improved in future work, which can be broadly divided into two directions: (1) designing and developing a front-end interface and back-end that embed the improved YOLOv5 model to achieve real-time monitoring of the water environment; (2) the more categories of water surface floaters there are, the more demanding the task becomes for the object detection model, so how to combine various factors into a detection method applicable to multiple categories of water surface floaters is worthy of further study.

Author Contributions

Conceptualization, F.C.; methodology, F.C. and X.W.; software and experiments, F.C., S.K. and L.C.; validation, F.C. and L.Z.; investigation, X.W. and F.C.; resources, X.W.; data curation, F.C.; writing—original draft preparation, F.C.; writing—review and editing, X.W.; visualization, L.Z., F.C., H.D. and D.L.; supervision, X.W. and L.Z; project administration, X.W., H.D. and D.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jambeck, J.R.; Geyer, R.; Wilcox, C.; Siegler, T.R.; Perryman, M.; Andrady, A.; Narayan, R.; Law, K.L. Plastic waste inputs from land into the ocean. Science 2015, 347, 768–771.

- Suwal, N.; Kuriqi, A.; Huang, X.; Delgado, J.; Młyński, D.; Walega, A. Environmental flows assessment in Nepal: The case of Kaligandaki River. Sustainability 2020, 12, 8766.

- Zhang, L.; Xie, Z.; Xu, M.; Zhang, Y.; Wang, G. EYOLOv3: An Efficient Real-Time Detection Model for Floating Object on River. Appl. Sci. 2023, 13, 2303.

- Qiu, H.; Cao, S.; Xu, R. Cancer incidence, mortality, and burden in China: A time-trend analysis and comparison with the United States and United Kingdom based on the global epidemiological data released in 2020. Cancer Commun. 2021, 41, 1037–1048.

- Zhu, J.; Yang, G.; Feng, X.; Li, X.; Fang, H.; Zhang, J.; Bai, X.; Tao, M.; He, Y. Detecting wheat heads from UAV low-altitude remote sensing images using deep learning based on transformer. Remote Sens. 2022, 14, 5141.

- Liu, C.; Yang, D.; Tang, L.; Zhou, X.; Deng, Y. A Lightweight Object Detector Based on Spatial-Coordinate Self-Attention for UAV Aerial Images. Remote Sens. 2022, 15, 83.

- Xia, X.; Meng, Z.; Han, X.; Li, H.; Tsukiji, T.; Xu, R.; Zheng, Z.; Ma, J. An automated driving systems data acquisition and analytics platform. Transport. Res. Part C-Emerg. Technol. 2023, 151, 104120.

- Liu, W.; Quijano, K.; Crawford, M.M. YOLOv5-Tassel: Detecting tassels in RGB UAV imagery with improved YOLOv5 based on transfer learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8085–8094.

- Xue, M.; Chen, M.; Peng, D.; Guo, Y.; Chen, H. One spatio-temporal sharpening attention mechanism for light-weight YOLO models based on sharpening spatial attention. Sensors 2021, 21, 7949.

- Liu, Y.; Yang, F.; Hu, P. Small-Object Detection in UAV-Captured Images via Multi-branch Parallel Feature Pyramid Networks. IEEE Access 2020, 8, 145740–145750.

- Demiray, B.Z.; Sit, M.; Demir, I. D-SRGAN: DEM super-resolution with generative adversarial networks. SN Comput. Sci. 2021, 2, 48.

- Chen, Y.; Li, J.; Niu, Y.; He, J. Small Object Detection Networks Based on Classification-Oriented Super-Resolution GAN for UAV Aerial Imagery. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019.

- Jiang, W.; Ren, Y.; Liu, Y.; Leng, J. Artificial neural networks and deep learning techniques applied to radar target detection: A review. Electronics 2022, 11, 156.

- Ju, M.; Luo, J.; Zhang, P.; He, M.; Luo, H. A simple and efficient network for small target detection. IEEE Access 2019, 7, 85771–85781.

- Lang, K.; Yang, M.; Wang, H.; Wang, H.; Wang, Z.; Zhang, J.; Shen, H. Improved One-Stage Detectors with Neck Attention Block for Object Detection in Remote Sensing. Remote Sens. 2022, 14, 5805.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Benghanem, M.; Mellit, A.; Moussaoui, C. Embedded Hybrid Model (CNN–ML) for Fault Diagnosis of Photovoltaic Modules Using Thermographic Images. Sustainability 2023, 15, 7811.

- Wang, R.; Jiao, L.; Xie, C.; Chen, P.; Du, J.; Li, R. S-RPN: Sampling-balanced region proposal network for small crop pest detection. Comput. Electron. Agric. 2021, 187, 106290.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Ding, J.; Zhang, J.; Zhan, Z.; Tang, X.; Wang, X. A Precision Efficient Method for Collapsed Building Detection in Post-Earthquake UAV Images Based on the Improved NMS Algorithm and Faster R-CNN. Remote Sens. 2022, 14, 663.

- Pan, Y.; Zhu, N.; Ding, L.; Li, X.; Goh, H.-H.; Han, C.; Zhang, M. Identification and Counting of Sugarcane Seedlings in the Field Using Improved Faster R-CNN. Remote Sens. 2022, 14, 5846.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Joochim, O.; Satharanond, K.; Kumkun, W. Development of Intelligent Drone for Cassava Farming. In Recent Advances in Manufacturing Engineering and Processes: Proceedings of ICMEP 2022; Springer: Singapore, 2023; pp. 37–45.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 27 October–2 November 2019; pp. 9627–9636.

- Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3520–3529.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Ma, H.; Liu, Y.; Ren, Y.; Yu, J. Detection of collapsed buildings in post-earthquake remote sensing images based on the improved YOLOv3. Remote Sens. 2019, 12, 44.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Kim, D.-H. Evaluation of COCO validation 2017 dataset with YOLOv3. Evaluation 2019, 6, 10356–10360.

- van Lieshout, C.; van Oeveren, K.; van Emmerik, T.; Postma, E. Automated river plastic monitoring using deep learning and cameras. Earth Space Sci. 2020, 7, e2019EA000960.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Li, Y.; Guo, J.; Guo, X.; Liu, K.; Zhao, W.; Luo, Y.; Wang, Z. A novel target detection method of the unmanned surface vehicle under all-weather conditions with an improved YOLOV3. Sensors 2020, 20, 4885.

- Zhang, Z. Improved Adam optimizer for deep neural networks. In Proceedings of the 2018 IEEE/ACM 26th International Symposium on Quality of Service (IWQoS), Banff, AB, Canada, 4–6 June 2018; pp. 1–2.

- Stofa, M.M.; Zulkifley, M.A.; Zaki, S.Z.M. A deep learning approach to ship detection using satellite imagery. Proc. IOP Conf. Ser. Earth Environ. Sci. 2020, 540, 012049.

- Li, R.; Ji, Z.; Hu, S.; Huang, X.; Yang, J.; Li, W. Tomato Maturity Recognition Model Based on Improved YOLOv5 in Greenhouse. Agronomy 2023, 13, 603.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. Scaled-YOLOv4: Scaling cross stage partial network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13029–13038.

- Kim, M.; Kim, H.; Sung, J.; Park, C.; Paik, J. High-resolution processing and sigmoid fusion modules for efficient detection of small objects in an embedded system. Sci. Rep. 2023, 13, 244.

- Wang, S.-Y.; Qu, Z.; Li, C.-J.; Gao, L.-Y. BANet: Small and multi-object detection with a bidirectional attention network for traffic scenes. Eng. Appl. Artif. Intel. 2023, 117, 105504.

- Yang, C.; Huang, Z.; Wang, N. QueryDet: Cascaded sparse query for accelerating high-resolution small object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13668–13677.

- Liu, Q.-p.; Wang, Q.-j.; Hanajima, N.; Su, B. An improved method for small target recognition based on faster RCNN. In Proceedings of the 2021 Chinese Intelligent Systems Conference: Volume II, Fuzhou, China, 16–17 October 2021; pp. 305–313.

- Imai, N.; Otokawa, H.; Okamoto, A.; Yamazaki, K.; Tamura, T.; Sakagami, T.; Ishizaka, S.; Shimojima, H. Abandonment of Cropland and Seminatural Grassland in a Mountainous Traditional Agricultural Landscape in Japan. Sustainability 2023, 15, 7742.

- Lan, Y.; Lin, S.; Du, H.; Guo, Y.; Deng, X. Real-Time UAV Patrol Technology in Orchard Based on the Swin-T YOLOX Lightweight Model. Remote Sens. 2022, 14, 5806.

- Bajić, M., Jr.; Potočnik, B. UAV Thermal Imaging for Unexploded Ordnance Detection by Using Deep Learning. Remote Sens. 2023, 15, 967.

- Liang, H.; Seo, S. UAV Low-Altitude Remote Sensing Inspection System Using a Small Target Detection Network for Helmet Wear Detection. Remote Sens. 2023, 15, 196.

- Wang, Y.; Ma, L.; Wang, Q.; Wang, N.; Wang, D.; Wang, X.; Zheng, Q.; Hou, X.; Ouyang, G. A Lightweight and High-Accuracy Deep Learning Method for Grassland Grazing Livestock Detection Using UAV Imagery. Remote Sens. 2023, 15, 1593.

- Zhang, L.; Zhang, Y.; Zhang, Z.; Shen, J.; Wang, H. Real-time water surface object detection based on improved faster R-CNN. Sensors 2019, 19, 3523.

- Zhang, Y.-F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

- Xue, Q.; Lin, H.; Wang, F. FCDM: An Improved Forest Fire Classification and Detection Model Based on YOLOv5. Forests 2022, 13, 2129.

- Li, S.; Yang, X.; Lin, X.; Zhang, Y.; Wu, J. Real-Time Vehicle Detection from UAV Aerial Images Based on Improved YOLOv5. Sensors 2023, 23, 5634.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 658–666.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

- Qiu, Z.; Zhao, Z.; Chen, S.; Zeng, J.; Huang, Y.; Xiang, B. Application of an improved YOLOv5 algorithm in real-time detection of foreign objects by ground penetrating radar. Remote Sens. 2022, 14, 1895.

- Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).