Developing an Optimal Ensemble Model to Estimate Building Demolition Waste Generation Rate

Abstract

1. Introduction

2. Methods and Materials

2.1. Data Source Description

2.2. Data Preprocessing and Dataset Size

2.3. Applied Machine Learning Algorithms

2.3.1. Random Forest

2.3.2. Extremely Randomized Trees

2.3.3. Gradient Boosting Machine

2.3.4. Extreme Gradient Boosting

2.4. Feature Selection and Hyper-Parameter Tuning

2.5. Model Validation and Evaluation

3. Results

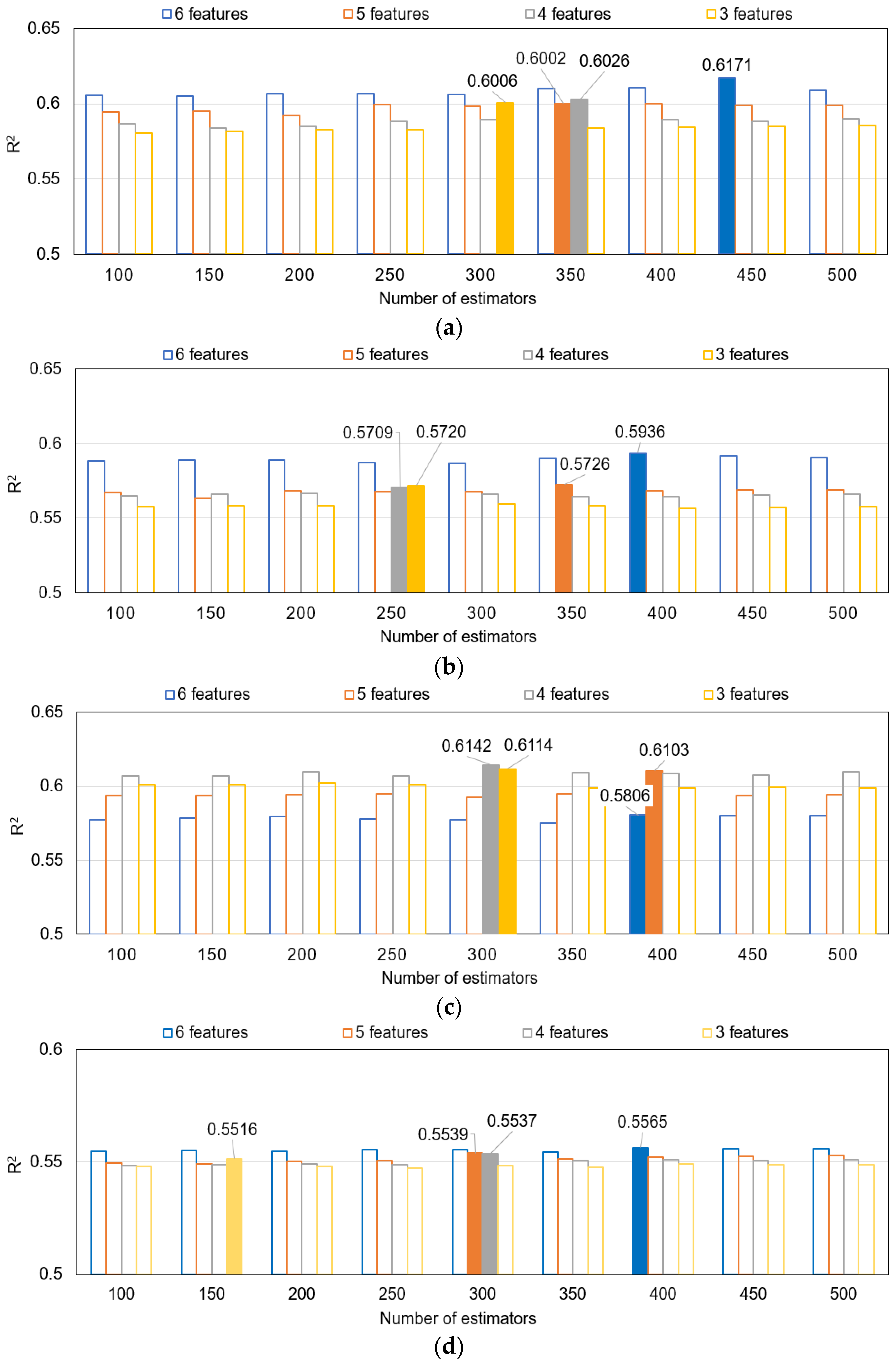

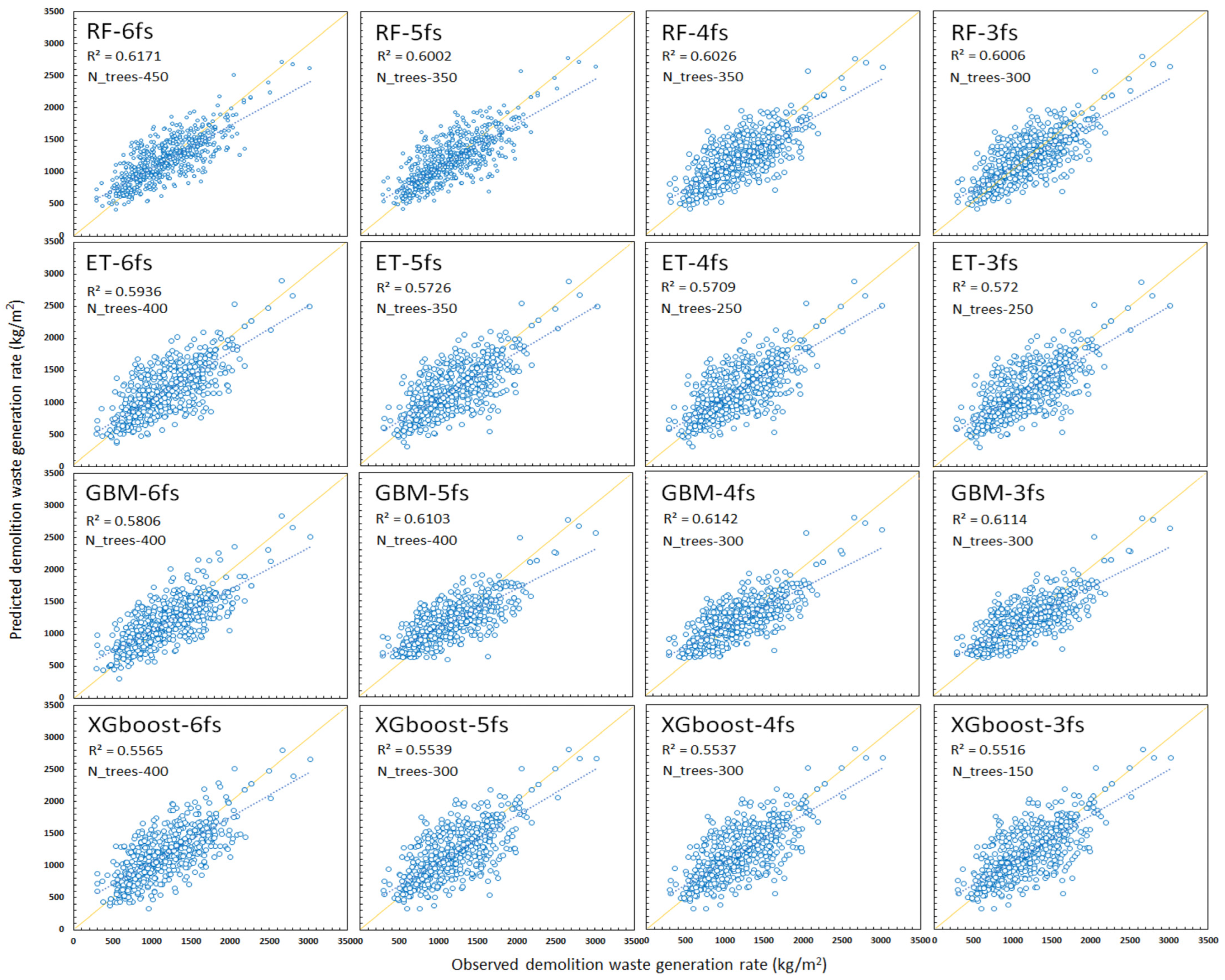

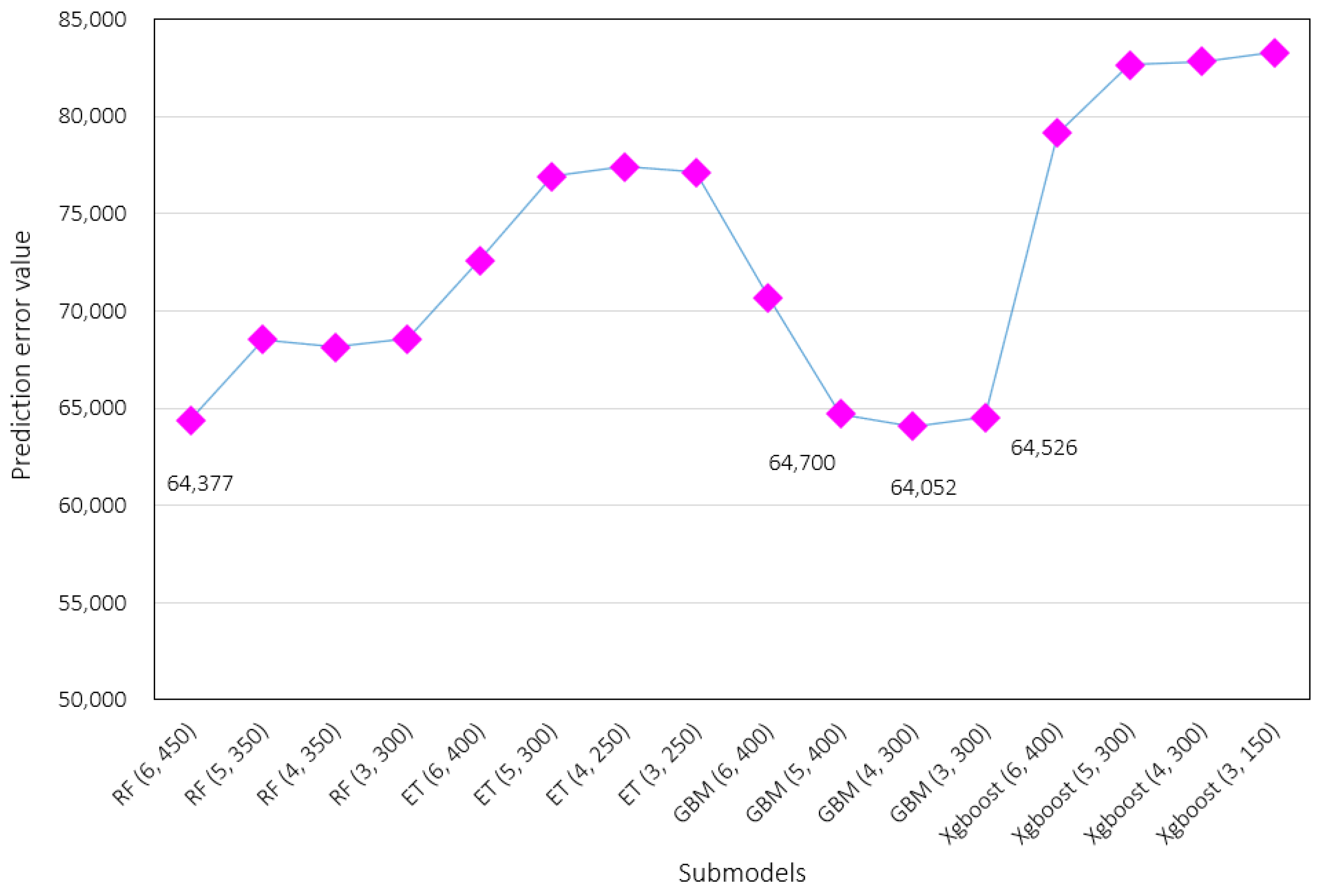

3.1. Optimal Number of Estimators and Features

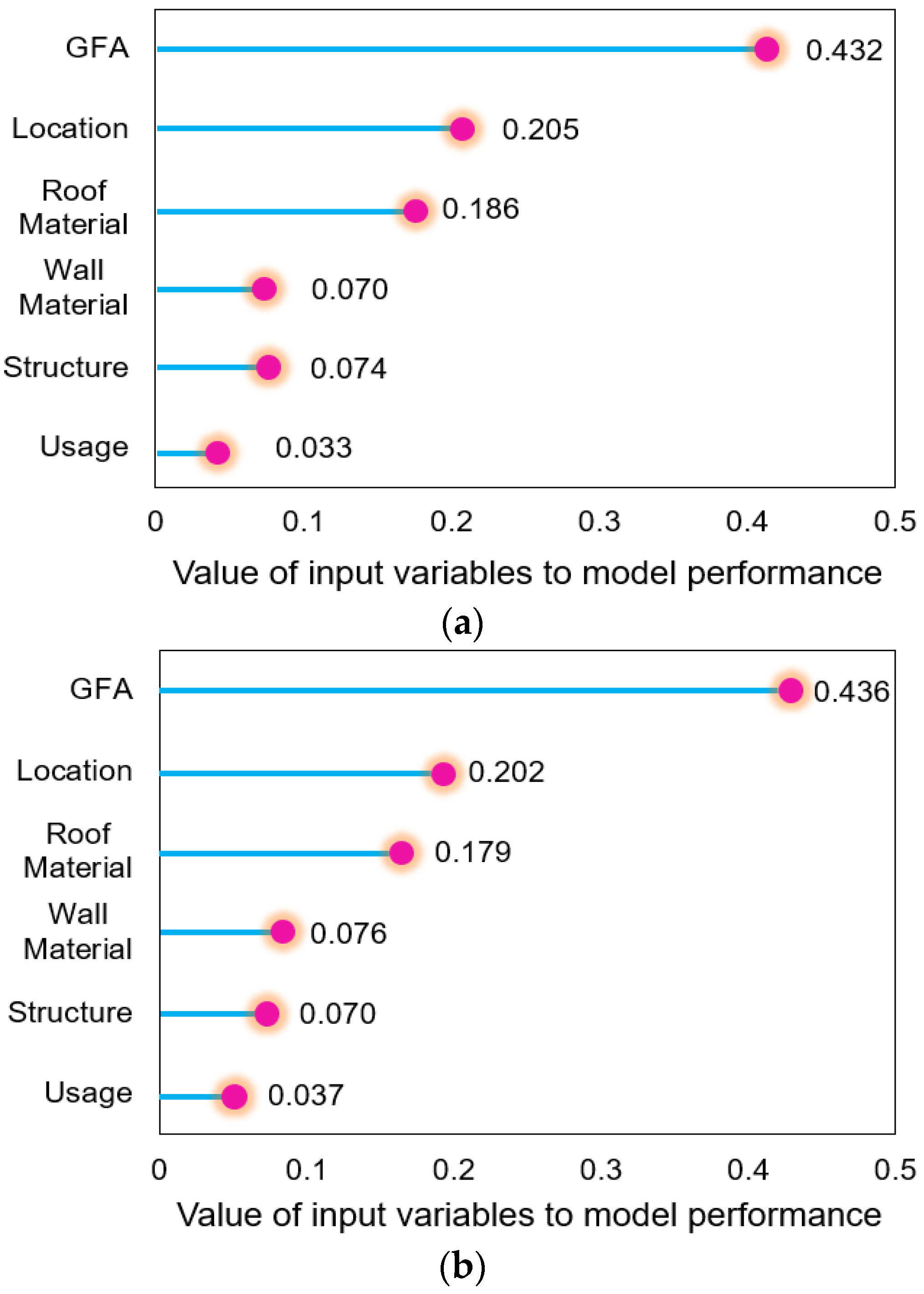

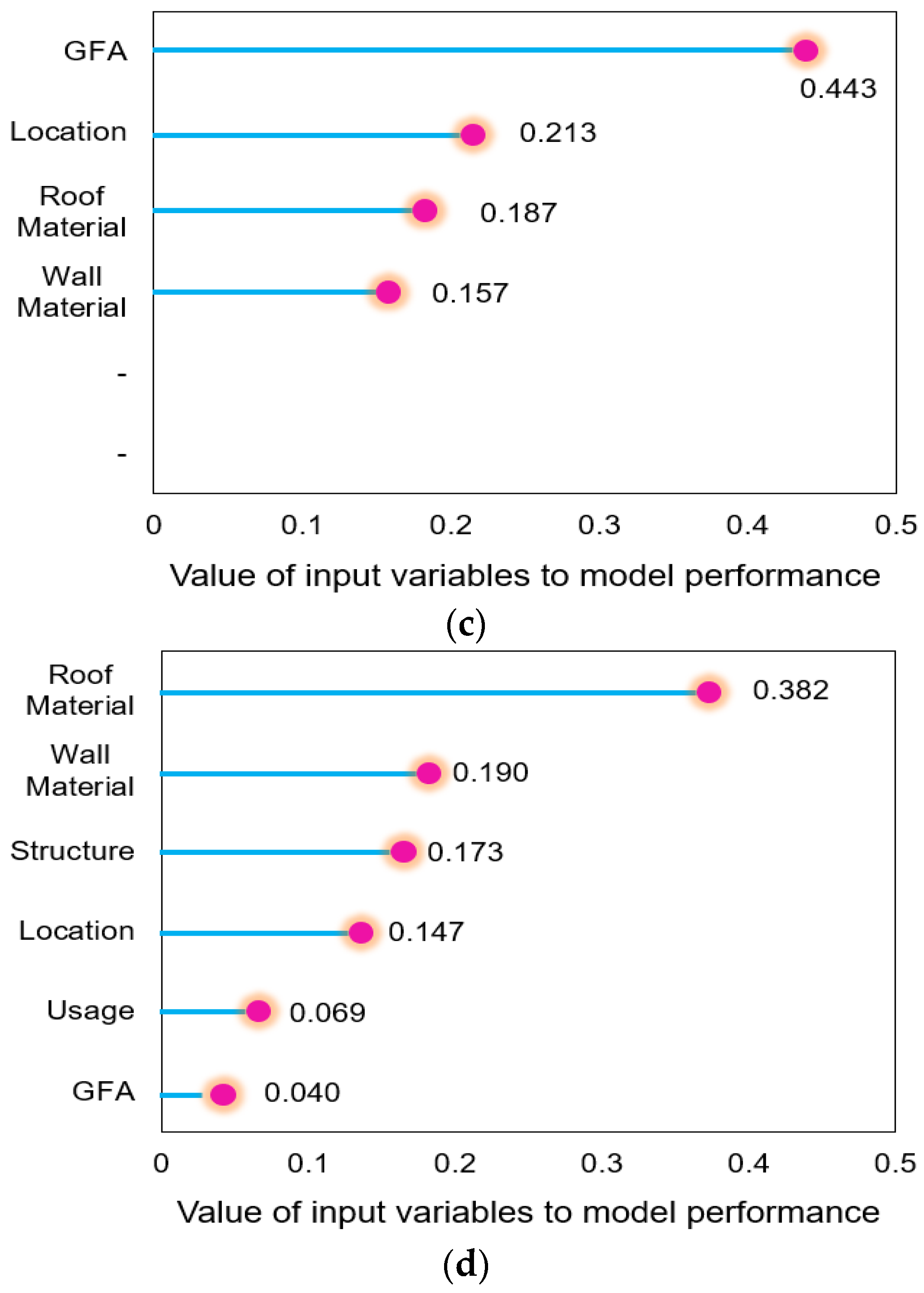

3.2. Feature Importance

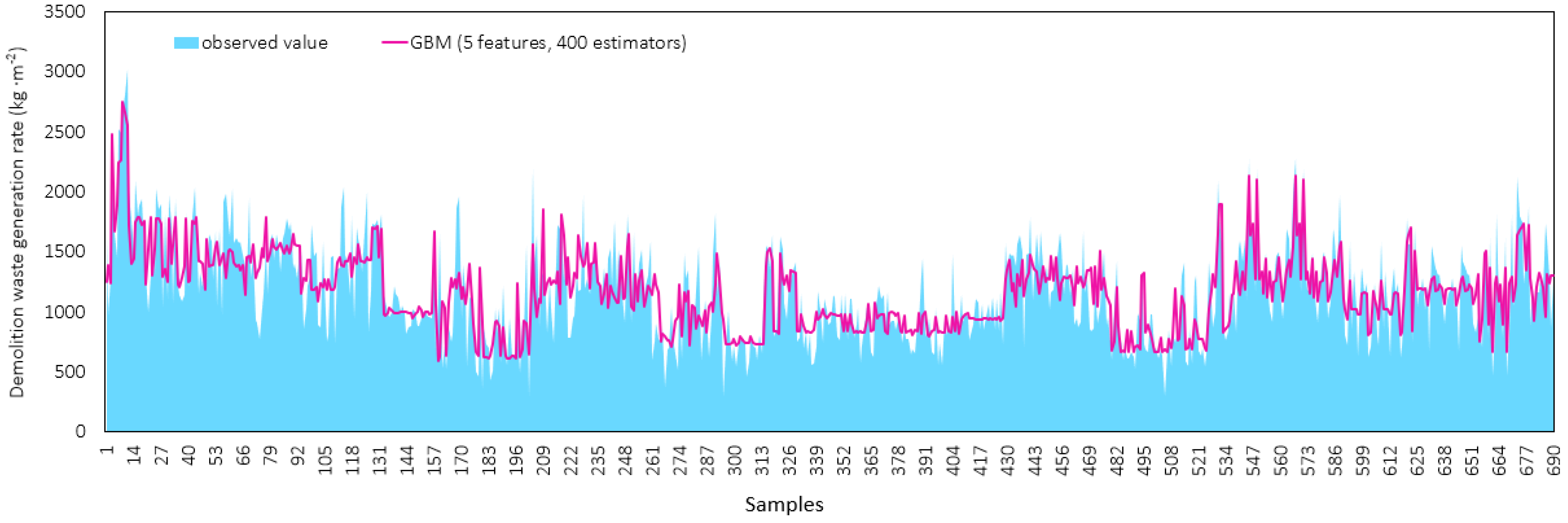

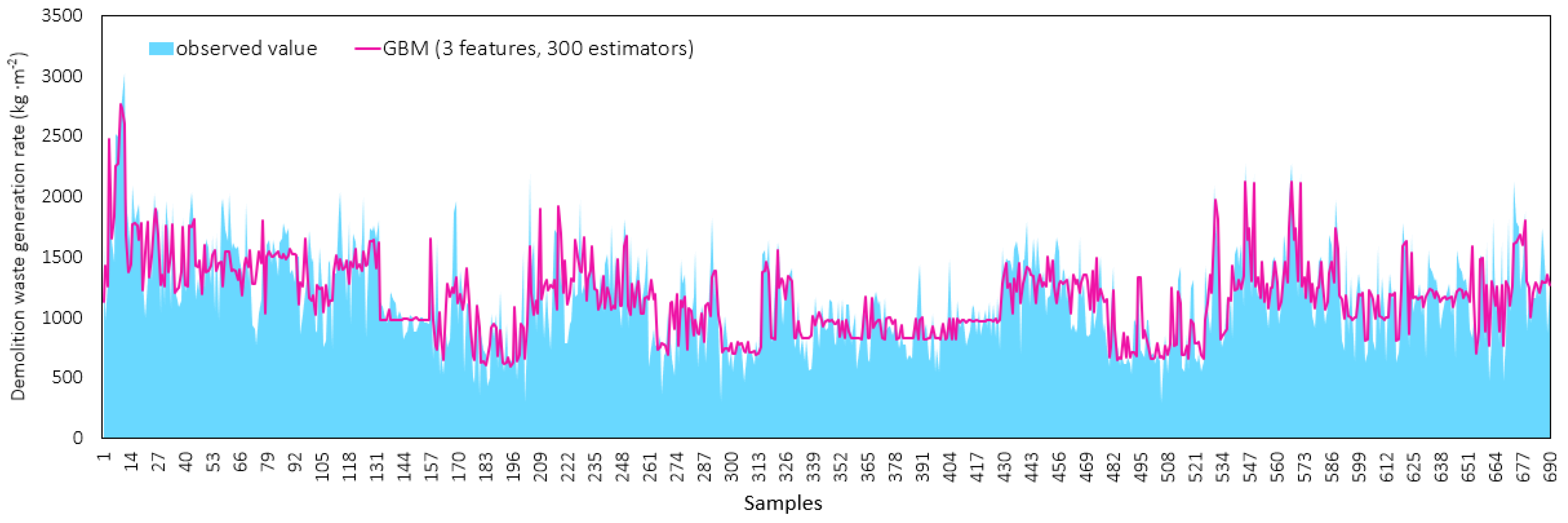

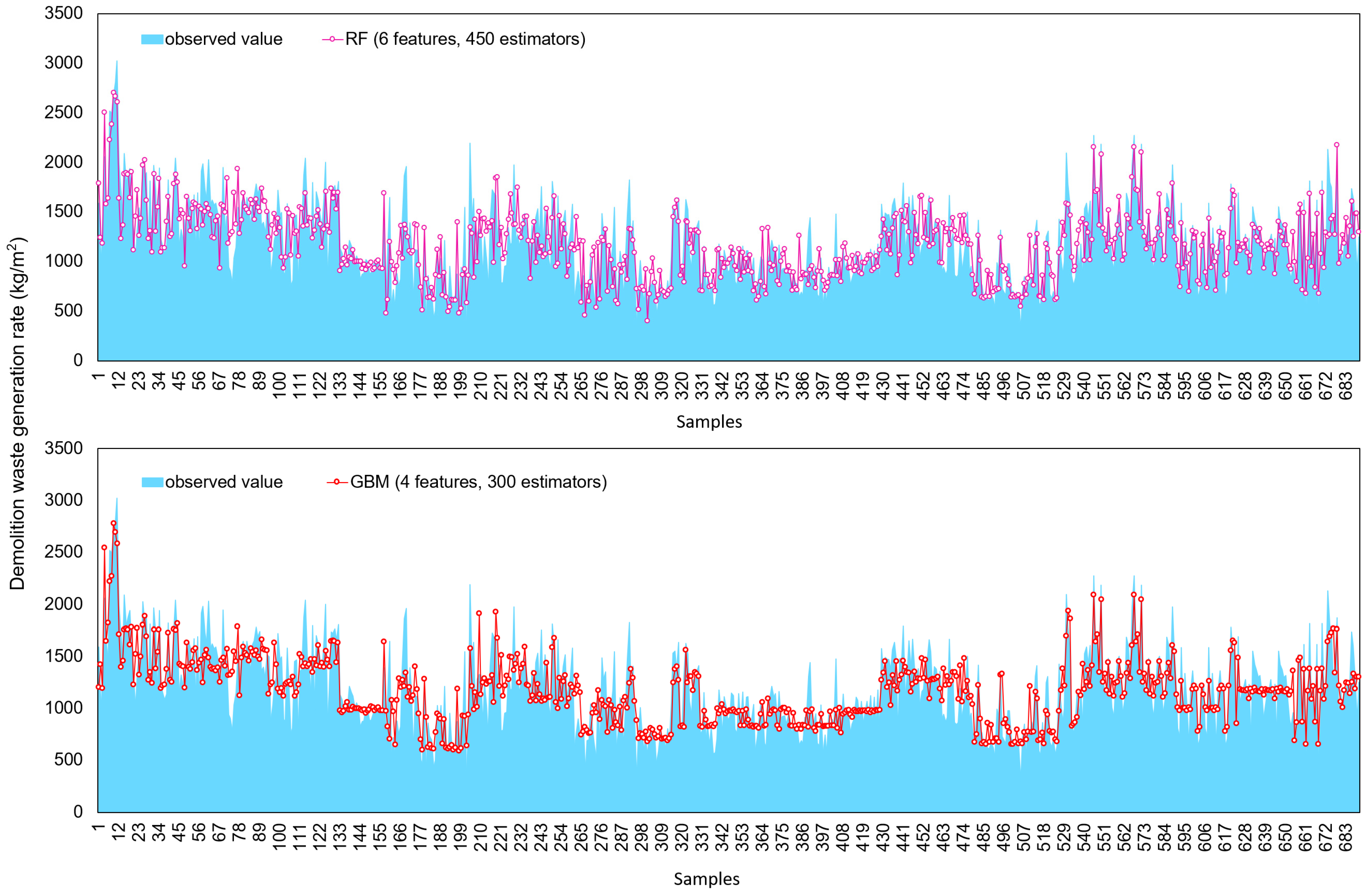

3.3. Performance Evaluation and Optimal Ensemble Model

4. Discussion

5. Conclusions

- (1) RF and GBM exhibited better predictive performance than ET and XGBoost on the relatively small dataset composed of categorical variables.

- (2) The most suitable models were RF (6 features, 450 trees) and GBM (4 features, 300 trees). The RF model achieved R² = 0.6171, R = 0.7855, RMSE = 253.727, and a prediction error (mean squared error) of 64,377. The GBM model achieved R² = 0.6142, R = 0.7837, RMSE = 253.085, and a prediction error of 64,052. The mean observed value was 1171.2 kg·m⁻², while the mean predictions of the RF (6, 450) and GBM (4, 300) models were 1169.94 and 1166.25 kg·m⁻², respectively. The GBM model also performed well with 3 features (300 estimators; R² = 0.6114) and 5 features (400 estimators; R² = 0.6103), with mean predictions of 1167.14 and 1165.22 kg·m⁻², respectively.

- (3) Feature importance differed among the RF, ET, GBM, and XGBoost models. GFA (feature importance of 0.432) had the greatest impact in the RF, ET, and GBM models, followed by location, roof material, and wall material. In the XGBoost model, roof material had the highest feature importance (0.382), followed by wall material, structure, location, and usage, whereas GFA had the lowest.
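The metrics quoted in conclusion (2) are related by simple arithmetic. The minimal, standard-library-only Python sketch below computes MSE, RMSE, the coefficient of determination R², and Pearson's R from paired observed and predicted values, and then checks the reported figures: each reported prediction error equals the squared RMSE (253.727² ≈ 64,377 and 253.085² ≈ 64,052), and each reported R matches √R² to within rounding. The helper name `regression_metrics` is hypothetical. R² and Pearson's R are computed independently because, for arbitrary model predictions, they are not in general related by R² = R².

```python
import math
from statistics import fmean


def regression_metrics(observed, predicted):
    """Return MSE, RMSE, R^2 and Pearson R for paired values (hypothetical helper)."""
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    residuals = [o - p for o, p in zip(observed, predicted)]
    ss_res = sum(r * r for r in residuals)
    mse = ss_res / len(observed)

    mean_obs = fmean(observed)
    mean_pred = fmean(predicted)
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    # Coefficient of determination: 1 - SS_res / SS_tot.
    r2 = 1.0 - ss_res / ss_tot

    # Pearson correlation between observed and predicted values.
    cov = sum((o - mean_obs) * (p - mean_pred) for o, p in zip(observed, predicted))
    ss_pred = sum((p - mean_pred) ** 2 for p in predicted)
    r = cov / math.sqrt(ss_tot * ss_pred)

    return {"mse": mse, "rmse": math.sqrt(mse), "r2": r2, "r": r}


# Consistency check of the values reported for RF (6, 450) and GBM (4, 300).
reported = {
    "RF": {"rmse": 253.727, "r2": 0.6171, "r": 0.7855, "error": 64_377},
    "GBM": {"rmse": 253.085, "r2": 0.6142, "r": 0.7837, "error": 64_052},
}
for name, m in reported.items():
    mse_from_rmse = round(m["rmse"] ** 2)  # squared RMSE, rounded to an integer
    r_from_r2 = math.sqrt(m["r2"])         # square root of the reported R^2
    print(name, mse_from_rmse, m["error"], round(r_from_r2, 4), m["r"])
```

For example, `regression_metrics([1, 2, 3], [1.5, 2, 2.5])` gives R² = 0.75 but Pearson R = 1.0. The predictions are perfectly correlated with the observations but shrunk toward their mean, which is why the two measures are worth reporting separately.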

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

1. Leao, S.; Bishop, I.; Evans, D. Spatial–temporal model for demand and allocation of waste landfills in growing urban regions. Comput. Environ. Urban Syst. 2004, 28, 353–385.

2. Ekanayake, L.L.; Ofori, G. Building waste assessment score: Design-based tool. Build. Environ. 2004, 39, 851–861.

3. Huang, X.; Xu, X. Legal regulation perspective of eco-efficiency construction waste reduction and utilization. Urban Dev. Stud. 2011, 9, 90–94.

4. Islam, R.; Nazifa, T.H.; Yuniarto, A.; Uddin, A.S.; Salmiati, S.; Shahid, S. An empirical study of construction and demolition waste generation and implication of recycling. Waste Manag. 2019, 95, 10–21.

5. Li, J.; Ding, Z.; Mi, X.; Wang, J. A model for estimating construction waste generation index for building project in China. Resour. Conserv. Recycl. 2013, 74, 20–26.

6. Llatas, C. A model for quantifying construction waste in projects according to the European waste list. Waste Manag. 2011, 31, 1261–1276.

7. Wang, J.; Li, Z.; Tam, V.W.Y. Identifying best design strategies for construction waste minimization. J. Clean. Prod. 2015, 92, 237–247.

8. Butera, S.; Christensen, T.H.; Astrup, T.F. Composition and leaching of construction and demolition waste: Inorganic elements and organic compounds. J. Hazard. Mater. 2014, 276, 302–311.

9. Lu, W.S.; Yuan, H.P. A framework for understanding waste management studies in construction. Waste Manag. 2011, 31, 1252–1260.

10. Song, Y.; Wang, Y.; Liu, F.; Zhang, Y. Development of a hybrid model to predict construction and demolition waste: China as a case study. Waste Manag. 2017, 59, 350–361.

11. Lu, W.; Yuan, H.; Li, J.; Hao, J.J.; Mi, X.; Ding, Z. An empirical investigation of construction and demolition waste generation rates in Shenzhen city, South China. Waste Manag. 2011, 31, 680–687.

12. Hurley, J.W. Valuing the Pre-Demolition Audit Process; CIB Rep.: Lake Worth, FL, USA, 2003; Volume 287. Available online: http://cibw117.com/europe/valuing-the-pre-demolition-audit-process/ (accessed on 22 November 2017).

13. Jalali, G.Z.M.; Nouri, R.E. Prediction of municipal solid waste generation by use of artificial neural network: A case study of Mashhad. Int. J. Environ. Res. 2008, 2, 13–22.

14. Milojkovic, J.; Litovski, V. Comparison of some ANN based forecasting methods implemented on short time series. In Proceedings of the 9th Symposium on Neural Network Applications in Electrical Engineering, Belgrade, Serbia, 25–27 September 2008; pp. 175–178.

15. Noori, R.; Abdoli, M.A.; Ghazizade, M.J.; Samieifard, R. Comparison of neural network and principal component-regression analysis to predict the solid waste generation in Tehran. Iran. J. Public Health 2009, 38, 74–84.

16. Patel, V.; Meka, S. Forecasting of municipal solid waste generation for medium scale towns located in the state of Gujarat, India. Int. J. Innov. Res. Sci. Engin. Technol. 2013, 2, 4707–4716.

17. Noori, R.; Abdoli, M.A.; Ghasrodashti, A.A.; Ghazizade, M.J. Prediction of municipal solid waste generation with combination of support vector machine and principal component analysis: A case study of Mashhad. Environ. Prog. Sustain. Energy 2008, 28, 249–258.

18. Dai, C.; Li, Y.P.; Huang, G.H. A two-stage support-vector-regression optimization model for municipal solid waste management–a case study of Beijing, China. J. Environ. Manag. 2011, 92, 3023–3037.

19. Abdoli, M.A.; Nezhad, M.F.; Sede, R.S.; Behboudian, S. Longterm forecasting of solid waste generation by the artificial neural networks. Environ. Prog. Sustain. Energy 2011, 31, 628–636.

20. Afon, A.O.; Okewole, A. Estimating the quantity of solid waste generation in Oyo, Nigeria. Waste Manag. Res. 2007, 25, 371–379.

21. Thanh, N.P.; Matsui, Y.; Fujiwara, T. Household solid waste generation and characteristic in a Mekong Delta city, Vietnam. J. Environ. Manag. 2010, 91, 2307–2321.

22. Yuan, A.O.; Wu, C.; Huang, Z.W. The prediction of the output of municipal solid waste (MSW) in Nanchong city. Adv. Mater. Res. 2012, 518–523, 3552–3556.

23. Kontokosta, C.E.; Hong, B.; Johnson, N.E.; Starobin, D. Using machine learning and small area estimation to predict building-level municipal solid waste generation in cities. Comput. Environ. Urban Syst. 2018, 70, 151–162.

24. Akanbi, L.A.; Oyedele, A.O.; Oyedele, L.O.; Salami, R.O. Deep learning model for demolition waste prediction in a circular economy. J. Clean. Prod. 2020, 274, 122843.

25. Nguyen, X.C.; Nguyen, T.T.H.; La, D.D.; Kumar, G.; Rene, E.R.; Nguyen, D.D.; Nguyen, V.K. Development of machine learning-based models to forecast solid waste generation in residential areas: A case study from Vietnam. Resour. Conserv. Recycl. 2021, 167, 105381.

26. Jayaraman, V.; Parthasarathy, S.; Lakshminarayanan, A.R.; Singh, H.K. Predicting the quantity of municipal solid waste using XGBoost model. In Proceedings of the 3rd International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 2–4 September 2021; pp. 148–152.

27. Johnson, N.E.; Ianiuk, O.; Cazap, D.; Liu, L.; Starobin, D.; Dobler, G.; Ghandehari, M. Patterns of waste generation: A gradient boosting model for short-term waste prediction in New York City. Waste Manag. 2017, 62, 3–11.

28. Kumar, A.; Samadder, S.R.; Kumar, N.; Singh, C. Estimation of the generation rate of different types of plastic wastes and possible revenue recovery from informal recycling. Waste Manag. 2018, 79, 781–790.

29. Kannangara, M.; Dua, R.; Ahmadi, L.; Bensebaa, F. Modeling and prediction of regional municipal solid waste generation and diversion in Canada using machine learning approaches. Waste Manag. 2018, 74, 3–15.

30. Lu, W.; Lou, J.; Webster, C.; Xue, F.; Bao, Z.; Chi, B. Estimating construction waste generation in the Greater Bay Area, China using machine learning. Waste Manag. 2021, 134, 78–88.

31. Ghanbari, F.; Kamalan, H.; Sarraf, A. An evolutionary machine learning approach for municipal solid waste generation estimation utilizing socioeconomic components. Arab. J. Geosci. 2021, 14, 92.

32. Namoun, A.; Hussein, B.R.; Tufail, A.; Alrehaili, A.; Syed, T.A.; BenRhouma, O. An ensemble learning based classification approach for the prediction of household solid waste generation. Sensors 2022, 22, 3506.

33. da Figueira, A.; Pitombo, C.S.; de Oliveira, P.T.M.e.S.; Larocca, A.P.C. Identification of rules induced through decision tree algorithm for detection of traffic accidents with victims: A study case from Brazil. Case Stud. Transp. Policy 2017, 5, 200–207.

34. Pal, M.; Mather, P.M. An assessment of the effectiveness of decision tree methods for land cover classification. Remote Sens. Environ. 2003, 86, 554–565.

35. Brown, G. Ensemble Learning. In Encyclopedia of Machine Learning and Data Mining; Sammut, C., Webb, G.I., Eds.; Springer: New York, NY, USA, 2017; pp. 393–402.

36. Krogh, A.; Vedelsby, J. Neural network ensembles, cross validation, and active learning. Adv. Neural Inf. Process. Syst. 1995, 7, 231–238.

37. Perrone, M.P.; Cooper, L.N. When networks disagree: Ensemble methods for hybrid neural networks. In How We Learn; How We Remember: Toward an Understanding of Brain and Neural Systems: Selected Papers of Leon N Cooper; World Scientific: Singapore, 1992.

38. Dietterich, T.G. Ensemble methods in machine learning. In International Workshop on Multiple Classifier Systems; Springer: Berlin/Heidelberg, Germany, 2000; pp. 1–15.

39. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

40. Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

41. Papadopoulos, S.; Azar, E.; Woon, W.L.; Kontokosta, C.E. Evaluation of tree-based ensemble learning algorithms for building energy performance estimation. J. Build. Perform. Simul. 2018, 11, 322–332.

42. Friedman, J.H. Stochastic gradient boosting. Comput. Stat. Data Anal. 2002, 38, 367–378.

43. Fan, J.; Yue, W.; Wu, L.; Zhang, F.; Cai, H.; Wang, X.; Xiang, Y. Evaluation of SVM, ELM and four tree-based ensemble models for predicting daily reference evapotranspiration using limited meteorological data in different climates of China. Agric. For. Meteorol. 2018, 263, 225–241.

44. Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

45. Le, L.T.; Nguyen, H.; Zhou, J.; Dou, J.; Moayedi, H. Estimating the heating load of buildings for smart city planning using a novel artificial intelligence technique PSO-XGBoost. Appl. Sci. 2019, 9, 2714.

46. Qiu, Y.; Zhou, J.; Khandelwal, M.; Yang, H.; Yang, P.; Li, C. Performance evaluation of hybrid WOA-XGBoost, GWO-XGBoost and BO-XGBoost models to predict blast-induced ground vibration. Eng. Comput. 2021, 38, 4145–4162.

47. Zhou, J.; Qiu, Y.; Khandelwal, M.; Zhu, S.; Zhang, X. Developing a hybrid model of Jaya algorithm-based extreme gradient boosting machine to estimate blast-induced ground vibrations. Int. J. Rock Mech. Min. Sci. 2021, 145, 104856.

48. Zhou, J.; Qiu, Y.; Zhu, S.; Armaghani, D.J.; Khandelwal, M.; Mohamad, E.T. Estimation of the TBM advance rate under hard rock conditions using XGBoost and Bayesian optimization. Undergr. Space 2021, 6, 506–515.

49. Farooq, F.; Ahmed, W.; Akbar, A.; Aslam, F.; Alyousef, R. Predictive modeling for sustainable high-performance concrete from industrial wastes: A comparison and optimization of models using ensemble learners. J. Clean. Prod. 2021, 292, 126032.

50. Chen, K.; Peng, Y.; Lu, S.; Lin, B.; Li, X. Bagging based ensemble learning approaches for modeling the emission of PCDD/Fs from municipal solid waste incinerators. Chemosphere 2021, 274, 129802.

51. Probst, P.; Boulesteix, A.L. To tune or not to tune the number of trees in random forest. J. Mach. Learn. Res. 2017, 18, 6673–6690.

52. Cheng, J.; Dekkers, J.C.; Fernando, R.L. Cross-validation of best linear unbiased predictions of breeding values using an efficient leave-one-out strategy. J. Anim. Breed. Genet. 2021, 138, 519–527.

53. Wong, T.T. Performance evaluation of classification algorithms by K-fold and leave-one-out cross validation. Pattern Recog. 2015, 48, 2839–2846.

54. Witten, I.H.; Frank, E.; Hall, M.A. Data Mining: Practical Machine Learning Tools and Techniques, 3rd ed.; Morgan Kaufmann: Burlington, MA, USA, 2011.

55. Cha, G.W.; Moon, H.J.; Kim, Y.M.; Hong, W.H.; Hwang, J.H.; Park, W.J.; Kim, Y.C. Development of a prediction model for demolition waste generation using a random forest algorithm based on small datasets. Int. J. Environ. Res. Public Health 2020, 17, 6997.

56. Cha, G.W.; Moon, H.J.; Kim, Y.C. Comparison of random forest and gradient boosting machine models for predicting demolition waste based on small datasets and categorical variables. Int. J. Environ. Res. Public Health 2021, 18, 8530.

57. Cheng, H.; Garrick, D.J.; Fernando, R.L. Efficient strategies for leave-one-out cross validation for genomic best linear unbiased prediction. J. Anim. Sci. Biotechnol. 2017, 8, 38.

58. Shao, Z.; Er, M.J. Efficient leave-one-out cross-validation-based regularized extreme learning machine. Neurocomputing 2016, 194, 260–270.

59. Shahhosseini, M.; Hu, G.; Pham, H. Optimizing ensemble weights and hyperparameters of machine learning models for regression problems. Mach. Learn. Appl. 2022, 7, 100251.

60. Hastie, T.; Tibshirani, R.; Friedman, J.; Franklin, J. The elements of statistical learning: Data mining, inference and prediction. Math. Intell. 2005, 27, 83–85.

61. Chen, X.; Lu, W. Identifying factors influencing demolition waste generation in Hong Kong. J. Clean. Prod. 2017, 141, 799–811.

62. Poon, C.S.; Yu, A.T.W.; Ng, L.H. On-site sorting of construction and demolition waste in Hong Kong. Resour. Conserv. Recycl. 2001, 32, 157–172.

63. Banias, G.; Achillas, C.; Vlachokostas, C.; Moussiopoulos, N.; Papaioannou, I. A web-based decision support system for the optimal management of construction and demolition waste. Waste Manag. 2011, 31, 2497–2502.

64. Andersen, F.M.; Larsen, H.; Skovgaard, M.; Moll, S.; Isoard, S. A European model for waste and material flows. Resour. Conserv. Recycl. 2007, 49, 421–435.

65. Bergsdal, H.; Bohne, R.A.; Brattebø, H. Projection of construction and demolition waste in Norway. J. Ind. Ecol. 2007, 11, 27–39.

66. Bohne, R.A.; Brattebø, H.; Bergsdal, H. Dynamic eco-efficiency projections for construction and demolition waste recycling strategies at the city level. J. Ind. Ecol. 2008, 12, 52–68.

67. Byeon, H. Comparing ensemble-based machine learning classifiers developed for distinguishing hypokinetic dysarthria from presbyphonia. Appl. Sci. 2021, 11, 2235.

68. Ahmad, M.W.; Reynolds, J.; Rezgui, Y. Predictive modelling for solar thermal energy systems: A comparison of support vector regression, random forest, extra trees and regression trees. J. Clean. Prod. 2018, 203, 810–821.

| Studies | Waste Type | Applied Algorithm | Performance |
|---|---|---|---|
| Song et al. [10] | C&D waste | GM-SVR | Average relative percent error < 0.1 |
| Johnson et al. [27] | MSW | GBM | R² = 0.685–0.906 |
| Kontokosta et al. [23] | MSW | GBM | R² = 0.73–0.87 |
| Kumar et al. [28] | MSW | ANN | R² = 0.75 |
| | | RF | R² = 0.66 |
| | | SVM | R² = 0.74 |
| Kannangara et al. [29] | MSW | ANN | R² = 0.72 |
| | | DT | R² = 0.54 |
| | Paper | ANN | R² = 0.31 |
| | | DT | R² = 0.35 |
| Lu et al. [30] | C&D waste | GM | R² = 0.977 |
| | | ANN | R² = 0.918 |
| | | MLR | R² = 0.777 |
| | | DT | R² = 0.764 |
| Akanbi et al. [24] | C&D waste | DNN | R² = 0.948–0.994 |
| Ghanbari et al. [31] | MSW | Multivariate adaptive regression splines (MARS) | R² = 0.90 |
| | | ANN | R² = 0.74 |
| | | RF | R² = 0.88 |
| Nguyen et al. [25] | MSW | KNN | R² = 0.96 |
| | | RF | R² = 0.97 |
| | | DNN | R² = 0.91 |
| Jayaraman et al. [26] | MSW | ARIMA | R² = −0.89 |
| | | XGBoost | R² = 0.41 |
| Namoun et al. [32] | Household waste | SVR | R² = 0.692 |
| | | XGBoost | R² = 0.67 |
| | | LightGBM | R² = 0.745 |
| | | RF | R² = 0.714 |
| | | ET | R² = 0.737 |
| | | ANN | R² = 0.685 |

| Category | Class | Number | GFA total (m²) | GFA min (m²) | GFA mean (m²) | GFA max (m²) | DWGR total (kg·m⁻²) | DWGR min (kg·m⁻²) | DWGR mean (kg·m⁻²) | DWGR max (kg·m⁻²) |
|---|---|---|---|---|---|---|---|---|---|---|
| Location | 1 | 343 | 31,542 | 21 | 92 | 275 | 450,310 | 298 | 1313 | 6034 |
| | 2 | 356 | 40,653 | 19 | 114 | 1127 | 485,037 | 83 | 1362 | 8574 |
| | 3 | 83 | 13,851 | 26 | 167 | 414 | 101,531 | 736 | 1223 | 1808 |
| Usage | 1 | 595 | 54,929 | 19 | 92 | 514 | 767,578 | 83 | 1290 | 8574 |
| | 2 | 172 | 28,706 | 22 | 167 | 1127 | 251,381 | 418 | 1462 | 5718 |
| | 3 | 15 | 2410 | 28 | 161 | 790 | 19,510 | 607 | 1301 | 2474 |
| Structure | 1 | 87 | 20,783 | 47 | 239 | 1127 | 169,538 | 418 | 1949 | 6034 |
| | 2 | 604 | 56,975 | 19 | 94 | 688 | 788,042 | 83 | 1305 | 8574 |
| | 3 | 91 | 8288 | 24 | 91 | 206 | 80,889 | 298 | 889 | 2237 |
| Wall material | 1 | 9 | 3693 | 48 | 410 | 1127 | 10,357 | 871 | 1151 | 4696 |
| | 2 | 236 | 32,584 | 23 | 138 | 790 | 391,259 | 252 | 1658 | 6034 |
| | 3 | 500 | 47,089 | 19 | 94 | 688 | 596,799 | 83 | 1194 | 8574 |
| | 4 | 37 | 2679 | 24 | 72 | 137 | 40,056 | 517 | 1083 | 2591 |
| Roof material | 1 | 289 | 43,565 | 21 | 151 | 1127 | 479,356 | 252 | 1659 | 6034 |
| | 2 | 33 | 4414 | 76 | 134 | 282 | 38,877 | 252 | 1178 | 1808 |
| | 3 | 178 | 12,439 | 23 | 70 | 206 | 227,923 | 306 | 1280 | 8574 |
| | 4 | 282 | 25,627 | 19 | 91 | 688 | 292,314 | 83 | 1037 | 2527 |
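Because each row of the table above reports both a total and a mean, the rows can be cross-checked. Within each category, the building counts should add up to the 782 samples listed before preprocessing, and each mean should equal total ÷ number to within rounding. The standard-library-only Python sketch below encodes the table's own values (min and max columns omitted) and runs both checks. The `Row` type and the `check_table` function are hypothetical names introduced for illustration.

```python
from collections import defaultdict
from typing import NamedTuple


class Row(NamedTuple):
    category: str
    cls: int
    number: int
    gfa_total: int
    gfa_mean: int
    dwgr_total: int
    dwgr_mean: int


# Values copied from the table above (min/max columns omitted).
ROWS = [
    Row("Location", 1, 343, 31542, 92, 450310, 1313),
    Row("Location", 2, 356, 40653, 114, 485037, 1362),
    Row("Location", 3, 83, 13851, 167, 101531, 1223),
    Row("Usage", 1, 595, 54929, 92, 767578, 1290),
    Row("Usage", 2, 172, 28706, 167, 251381, 1462),
    Row("Usage", 3, 15, 2410, 161, 19510, 1301),
    Row("Structure", 1, 87, 20783, 239, 169538, 1949),
    Row("Structure", 2, 604, 56975, 94, 788042, 1305),
    Row("Structure", 3, 91, 8288, 91, 80889, 889),
    Row("Wall material", 1, 9, 3693, 410, 10357, 1151),
    Row("Wall material", 2, 236, 32584, 138, 391259, 1658),
    Row("Wall material", 3, 500, 47089, 94, 596799, 1194),
    Row("Wall material", 4, 37, 2679, 72, 40056, 1083),
    Row("Roof material", 1, 289, 43565, 151, 479356, 1659),
    Row("Roof material", 2, 33, 4414, 134, 38877, 1178),
    Row("Roof material", 3, 178, 12439, 70, 227923, 1280),
    Row("Roof material", 4, 282, 25627, 91, 292314, 1037),
]


def check_table(rows, expected_total=782):
    """Return a list of human-readable problems (empty if all checks pass)."""
    problems = []
    counts = defaultdict(int)
    for row in rows:
        counts[row.category] += row.number
        # A mean rounded to an integer must lie within 0.5 of total / number.
        for label, total, mean in (("GFA", row.gfa_total, row.gfa_mean),
                                   ("DWGR", row.dwgr_total, row.dwgr_mean)):
            if abs(total / row.number - mean) > 0.5:
                problems.append(f"{row.category} {row.cls}: {label} mean mismatch")
    for category, n in counts.items():
        if n != expected_total:
            problems.append(f"{category}: counts sum to {n}, not {expected_total}")
    return problems


print(check_table(ROWS) or "all rows consistent")
```

The same checks can be run on any re-tabulated version of the data to catch transcription errors before modeling.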

| Data preprocessing | Number of samples | Minimum (kg·m⁻²) | Maximum (kg·m⁻²) | Average (kg·m⁻²) | Median (kg·m⁻²) | Standard deviation (kg·m⁻²) | Variance (kg²·m⁻⁴) |
|---|---|---|---|---|---|---|---|
| Before | 782 | 83.34 | 8573.79 | 1327.97 | 1162.25 | 809.2 | 654,032.4 |
| After | 690 | 298.30 | 3024.04 | 1165.04 | 1138.30 | 407.7 | 166,016.7 |
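The summary columns in the table above (minimum, maximum, average, median, standard deviation, and variance) can be computed with Python's standard `statistics` module. The sketch below is illustrative only. The text does not state whether sample (n − 1) or population statistics were reported, so the use of `statistics.stdev` and `statistics.variance` (sample versions) is an assumption. The `describe` helper and the input values are hypothetical, not taken from the study's dataset.

```python
import statistics


def describe(values):
    """Summarize DWGR values (kg·m⁻²) in the layout of the preprocessing table."""
    return {
        "n": len(values),
        "min": min(values),
        "max": max(values),
        "average": statistics.fmean(values),
        "median": statistics.median(values),
        # Sample statistics (n - 1 denominator); swap in statistics.pstdev and
        # statistics.pvariance if population values are wanted instead.
        "std": statistics.stdev(values),
        "variance": statistics.variance(values),
    }


# Hypothetical toy values, not from the study's dataset.
toy_dwgr = [800.0, 1000.0, 1200.0, 1400.0]
print(describe(toy_dwgr))
```

Applying `describe` to the data before and after preprocessing yields the two rows of the table.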

| Algorithm | Hyper-parameter | Definition | Tested value |
|---|---|---|---|
| RF | n_estimators | The number of trees in the forest | 100, 150, 200, 250, 300, 350, 400, 450, 500 |
| | min_samples_split | The minimum number of samples required to split an internal node | 1, 2, 3, 4, 5 |
| | min_samples_leaf | The minimum number of samples required to be at a leaf node | 1, 2, 3, 4, 5 |
| | max_depth | The maximum depth of the tree | Maximum possible |
| ET | n_estimators | The number of trees in the forest | 100, 150, 200, 250, 300, 350, 400, 450, 500 |
| | min_samples_split | The minimum number of samples required to split an internal node | 1, 2, 3, 4, 5 |
| | min_samples_leaf | The minimum number of samples required to be at a leaf node | 1, 2, 3, 4, 5 |
| | max_depth | The maximum depth of the tree | None |
| | max_leaf_nodes | The maximum number of leaf nodes (None means unlimited) | None |
| GBM | n_estimators | The number of boosting stages | 100, 150, 200, 250, 300, 350, 400, 450, 500 |
| | min_samples_split | The minimum number of samples required to split an internal node | 1, 2, 3, 4, 5 |
| | loss | The loss function to be optimized | Least squares |
| | learning_rate | The shrinkage applied to the contribution of each tree | 0.01, 0.1, 1 |
| | subsample | The fraction of samples used to fit each base learner; values below 1.0 help control overfitting | 1.0 |
| XGBoost | n_estimators | The number of boosting rounds (trees) | 100, 150, 200, 250, 300, 350, 400, 450, 500 |
| | eta | The step-size shrinkage used in each update to prevent overfitting | 0.3 |
| | max_depth | The maximum depth of the tree | 10 |
| | min_child_weight | The minimum sum of instance weight (hessian) needed in a child | 1 |
| | max_delta_step | The maximum delta step allowed for each leaf output (0 means no constraint) | 0 |
| | subsample | The subsample ratio of the training instances | 1 |
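The search space in the table above is a Cartesian product of the tested values. The standard-library-only Python sketch below enumerates it for RF and GBM as lists of keyword dictionaries, with keys following scikit-learn's parameter names (`n_estimators`, `min_samples_split`, `min_samples_leaf`, `max_depth`, `learning_rate`, `subsample`, `loss`). One assumption should be stated plainly: current scikit-learn releases reject an integer `min_samples_split` below 2, so the sketch drops the tested value 1. This is an adjustment for running the grid, not a claim about the authors' setup. The names `RF_SPACE`, `GBM_SPACE`, and `expand_grid` are hypothetical.

```python
from itertools import product

N_ESTIMATORS = list(range(100, 501, 50))  # 100, 150, ..., 500

# Tested values from the table; keys follow scikit-learn parameter names.
RF_SPACE = {
    "n_estimators": N_ESTIMATORS,
    "min_samples_split": [1, 2, 3, 4, 5],
    "min_samples_leaf": [1, 2, 3, 4, 5],
    "max_depth": [None],  # grow trees until leaves are pure or too small to split
}
GBM_SPACE = {
    "n_estimators": N_ESTIMATORS,
    "min_samples_split": [1, 2, 3, 4, 5],
    "learning_rate": [0.01, 0.1, 1.0],
    "subsample": [1.0],
    "loss": ["squared_error"],  # least squares; named "squared_error" since scikit-learn 1.0
}


def expand_grid(space):
    """Expand a {param: values} mapping into a list of keyword dicts."""
    keys = list(space)
    grid = [dict(zip(keys, combo)) for combo in product(*(space[k] for k in keys))]
    # Assumption: scikit-learn requires an integer min_samples_split >= 2.
    return [p for p in grid if p.get("min_samples_split", 2) >= 2]


rf_grid = expand_grid(RF_SPACE)
gbm_grid = expand_grid(GBM_SPACE)
print(len(rf_grid), len(gbm_grid))
```

Each dictionary can then be unpacked into an estimator, for example `RandomForestRegressor(**rf_grid[0])` from `sklearn.ensemble`. The resulting model can then be scored with the validation scheme described in Section 2.5.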

| Model | Number of features | Number of estimators | RMSE (kg·m⁻²) | R² | R |
|---|---|---|---|---|---|
| RF | 6 | 450 | 253.727 | 0.6171 | 0.7855 |
| | 5 | 350 | 261.772 | 0.6002 | 0.7747 |
| | 4 | 350 | 260.989 | 0.6026 | 0.7763 |
| | 3 | 300 | 261.836 | 0.6006 | 0.7750 |
| ET | 6 | 400 | 269.396 | 0.5936 | 0.7704 |
| | 5 | 350 | 277.334 | 0.5726 | 0.7567 |
| | 4 | 250 | 278.221 | 0.5709 | 0.7556 |
| | 3 | 250 | 277.690 | 0.5720 | 0.7563 |
| GBM | 6 | 400 | 265.834 | 0.5806 | 0.7620 |
| | 5 | 400 | 254.362 | 0.6103 | 0.7812 |
| | 4 | 300 | 253.085 | 0.6142 | 0.7837 |
| | 3 | 300 | 254.020 | 0.6114 | 0.7819 |
| XGBoost | 6 | 400 | 281.262 | 0.5565 | 0.7460 |
| | 5 | 300 | 287.480 | 0.5539 | 0.7442 |
| | 4 | 300 | 287.790 | 0.5537 | 0.7441 |
| | 3 | 150 | 288.590 | 0.5516 | 0.7427 |
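Which configuration in the table above counts as "optimal" depends on the criterion. The standard-library-only Python sketch below encodes the table's rows, picks the lowest-RMSE configuration for each algorithm, and then compares the overall winners by RMSE and by R². With these values, the two criteria disagree slightly. GBM (4 features, 300 estimators) has the lowest RMSE (253.085), while RF (6 features, 450 estimators) has the highest R² (0.6171). This is consistent with the conclusions treating both as the most suitable models. The `RESULTS` layout and the `best_by` helper are hypothetical.

```python
# (model, n_features, n_estimators, RMSE, R^2, R) copied from the table above.
RESULTS = [
    ("RF", 6, 450, 253.727, 0.6171, 0.7855),
    ("RF", 5, 350, 261.772, 0.6002, 0.7747),
    ("RF", 4, 350, 260.989, 0.6026, 0.7763),
    ("RF", 3, 300, 261.836, 0.6006, 0.7750),
    ("ET", 6, 400, 269.396, 0.5936, 0.7704),
    ("ET", 5, 350, 277.334, 0.5726, 0.7567),
    ("ET", 4, 250, 278.221, 0.5709, 0.7556),
    ("ET", 3, 250, 277.690, 0.5720, 0.7563),
    ("GBM", 6, 400, 265.834, 0.5806, 0.7620),
    ("GBM", 5, 400, 254.362, 0.6103, 0.7812),
    ("GBM", 4, 300, 253.085, 0.6142, 0.7837),
    ("GBM", 3, 300, 254.020, 0.6114, 0.7819),
    ("XGBoost", 6, 400, 281.262, 0.5565, 0.7460),
    ("XGBoost", 5, 300, 287.480, 0.5539, 0.7442),
    ("XGBoost", 4, 300, 287.790, 0.5537, 0.7441),
    ("XGBoost", 3, 150, 288.590, 0.5516, 0.7427),
]
RMSE, R2 = 3, 4  # column indices into each row


def best_by(rows, column, lower_is_better):
    """Return the row that optimizes the given column."""
    pick = min if lower_is_better else max
    return pick(rows, key=lambda row: row[column])


# dict.fromkeys keeps the models in first-appearance order without duplicates.
best_per_model = {
    model: best_by([r for r in RESULTS if r[0] == model], RMSE, lower_is_better=True)
    for model in dict.fromkeys(r[0] for r in RESULTS)
}
overall_rmse = best_by(RESULTS, RMSE, lower_is_better=True)
overall_r2 = best_by(RESULTS, R2, lower_is_better=False)

for model, row in best_per_model.items():
    print(f"{model}: {row[1]} features, {row[2]} estimators, RMSE {row[3]}")
print("lowest RMSE:", overall_rmse[:3], "highest R^2:", overall_r2[:3])
```

Because RMSE and R² can rank models differently when the differences are this small, reporting both, as the table does, avoids overstating the gap between RF and GBM.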

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cha, G.-W.; Hong, W.-H.; Choi, S.-H.; Kim, Y.-C. Developing an Optimal Ensemble Model to Estimate Building Demolition Waste Generation Rate. Sustainability 2023, 15, 10163. https://doi.org/10.3390/su151310163