Abstract

Satellite images provide continuous access to observations of the Earth, making environmental monitoring more convenient for applications such as tracking changes in land use and land cover (LULC). This paper aims to develop a prediction model for mapping LULC using multi-spectral satellite images captured at a spatial resolution of 3 m by a 4-band PlanetScope satellite. The dataset used in the study includes 105 geo-referenced images categorized into 8 different LULC classes. To train this model on both raster and vector data, various machine learning strategies were employed, including Support Vector Machines (SVMs), Decision Trees (DTs), Random Forests (RFs), Normal Bayes (NB), and Artificial Neural Networks (ANNs). A set of metrics including precision, recall, F-score, and kappa index is used to measure the accuracy of the model. Empirical experiments were conducted, and the results show that the ANN achieved a classification accuracy of 97.1%. To the best of our knowledge, this study represents the first attempt to monitor land changes in Egypt using high-resolution images with a spatial resolution of 3 m. This study highlights the potential of this approach for promoting sustainable land use practices and contributing to the achievement of sustainable development goals. The proposed method can also provide a reliable source for improving geographical services, such as detecting land changes.

1. Introduction

Currently, satellites make Earth look like one compact entity by providing continuous images with fine details [1]. This capability has enabled applications in areas such as agriculture, traffic control, and urban development to operate in real time [2]. However, these data need to be processed to infer their semantics. One such application is classifying land cover [1]. Land cover is a term used to describe the top layer of the ground, such as water, vegetation, bare soil, urban infrastructure, or another surface covering [3]. The associated processes (e.g., identifying, delineating, and mapping) are crucial for applications such as global monitoring studies, resource management, and planning activities [4]. To achieve these goals, the first step is to obtain a land cover identification that allows monitoring activities (e.g., change detection). This identification also provides the ground cover information needed for thematic maps [3].

Multi-spectral images are images that are composed of data collected from multiple spectral bands or channels of the electromagnetic spectrum. These bands include visible light (red, green, and blue), near-infrared, shortwave infrared, thermal infrared, and others. By collecting data from multiple spectral bands, multi-spectral images can provide information on a variety of surface features, including vegetation health, soil moisture, land use and land cover, and temperature [5]. Applications for these images include mapping of land use and land cover, agricultural monitoring, urban planning, and environmental monitoring. Multi-spectral images are often processed and analyzed using image processing techniques, such as spectral unmixing, classification, and change detection, to extract meaningful pieces of information from the data [6,7].

On the other hand, land use classification aims to define the purpose/function of the land, such as for recreation, wildlife habitat, agriculture, etc. [8]. It draws attention to some applications such as baseline mapping and subsequent monitoring; for instance, the current amount of available land, its type of use, and its changes from year to year [8,9].

Notably, these applications are data- and location-dependent: land cover and land use vary from place to place, and some areas, such as coastal areas, have problems with illegal use or extinction. While most previous studies used pixel-based satellite images to learn Land Use/Land Cover (LULC) classifications, others treated images as object-based. In this research, vector data are used as an object-based “polygon” representation and raster data as a point-based “pixel” representation to obtain a high-performance classification model [10,11]. Furthermore, unlike previous methods, high-resolution satellite images that capture the smallest image details are used. As deep learning has advanced, many fields [12,13,14,15], including land cover classification [10], have witnessed significant improvements. In remote sensing, which is concerned with scanning the Earth using a group of satellites, deep learning has been used in different forms such as LULC change detection [3,4], prediction of LULC [16], dataset and deep learning benchmark [17], image classification of agricultural surfaces [8,9], monitoring of pedestrian detection [10], classification of spatial trajectories [11], and forecasting traffic conditions [18]. Deep learning models rely extensively on large-scale datasets. In addition to its usefulness in monitoring land use and land cover changes, predicting LULC can contribute to sustainability efforts. By accurately predicting LULC changes, decision-makers can better plan for sustainable land use practices and mitigate negative impacts on the environment. This information can also be used to inform policies related to conservation, natural resource management, and urban planning. The use of high-resolution satellite imagery for environmental monitoring can also reduce the need for ground-based surveys and inspections, which can be time-consuming, expensive, and potentially harmful to natural habitats. Overall, the integration of satellite imagery and deep learning models can support sustainable development by providing timely and accurate information about changes to the environment.

For these two purposes, a new approach for mapping land use and land cover (LULC) is suggested in this paper, which integrates both raster and vector data using deep learning techniques. The aim of the model is to enhance the precision and effectiveness of LULC mapping, a critical factor in applications such as urban planning and environmental monitoring.

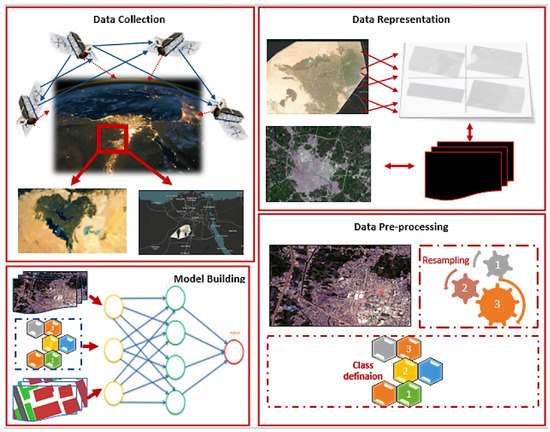

Furthermore, this paper introduces a new dataset for identifying land use and land cover in Fayoum state, Egypt. This state is used as a case study because it has a variety of land use and land cover examples; additional information on the geographic location is provided in the “Study Area” section (Section 3.1). Satellite images were obtained from a 4-band PlanetScope scene of 1 June 2022, with each image having 4 bands (red, green, blue, and near-infrared). The ground sample distance for these images is 3.7 m and their pixel dimensions are 7939 × 3775. These images undergo a series of procedures before being used as input for the proposed model. Figure 1 illustrates the sequential phases used to construct the model, beginning with data collection from satellite imagery. It then proceeds to data representation, followed by pre-processing to enhance the input images and establish numeric class labels, such as 1, 2, and 3, that represent different categories. Finally, the model building phase constructs the model using the training data.

Figure 1.

Main phases of the proposed model.

Extensive comparisons on the proposed dataset are conducted using several models, with the proposed model trained on two types of data: raster data, i.e., high-resolution images, and vector data. Furthermore, we present full benchmarks that demonstrate reliable classification performance and serve as the foundation for developing many applications in the aforementioned domains. We also demonstrate how the classification model may be used to detect changes in land use and/or land cover, as well as how it can help improve geographic maps.

The contributions through this work can be summarized as follows:

- Developing a land use and land cover (LULC) model using deep learning that involves utilizing raster and vector data as inputs;

- Proposing a novel large-scale dataset with multi-spectral high-resolution satellite images along with raster data for Fayoum state, Egypt;

- Providing benchmarks for the collected dataset using deep learning/machine learning models;

- Comparing related methods for classifying land cover and land use;

- Comparing deep learning and machine learning models for accurately classifying different types of LULC from satellite imagery;

- Generating a map that shows the various types of land cover and land use in a particular area that involves using remote sensing data and GIS.

2. Related Work

Recent studies have employed deep learning to predict various types of LULC classifications. Some studies were primarily concerned with identifying objects, while others focused specifically on land use and cover.

Venter et al. [19] compared and evaluated the accuracy and suitability of three global land use and land cover (LULC) maps: Dynamic World (DW), World Cover (WC), and Esri Land Cover (ELC, referred to below as Esri). The authors used ground truth data to assess the accuracy of these maps and discussed their relative advantages and disadvantages. The study found that the three LULC maps show strong spatial correspondence for water, built area, tree, and crop classes. However, each map was found to have certain biases in mapping other LULC classes. For instance, WC overestimated grass cover, Esri overestimated shrub and scrub cover, and DW overestimated snow and ice cover. In terms of overall accuracy, Esri was found to be the most accurate, followed by DW and WC. The most precisely mapped category was water, followed by built-up areas, tree cover, and crops. The classes with the lowest accuracies included scrub and shrub, bare ground, grass, and flooded vegetation. DW is a global 10 m resolution LULC dataset developed by Google and the World Resources Institute, available from 2015 onward. WC is a global 10 m resolution LULC dataset developed by the European Space Agency, covering 2020. ELC is a global 10 m resolution LULC dataset developed by Esri, covering 2020. The study also found that the accuracy of the LULC maps varied depending on the spatial scale of the ground truth data used. WC was found to have the highest accuracy when European ground truth data from the Land Use/Cover Area Frame Survey (LUCAS) were used, which had a smaller minimum mapping unit than the global ground truth data used in the study. This implies that WC is more suitable for resolving landscape features in greater detail when compared to DW and Esri.

The authors highlighted the advantages of DW, which offers frequent and near real-time delivery of categorical predictions and class probability scores. However, they cautioned that the suitability of global LULC products should be carefully evaluated based on the intended application, such as monitoring aggregate changes in ecosystem accounting versus site-specific change detection. They emphasized the significance of following best practices in design-based inference and area estimation to accurately quantify uncertainty for a specific study area.

The major LULC classes of the Athabasca River watershed were found to be coniferous forest (47.30%), agriculture (6.37%), mixed forest (6.65%), water (6.10%), deciduous forest (16.76%), and developed land (3.78%). The study also performed data fusion techniques using the Alberta merged wetland inventory 2017 data to fulfill the data requirements of scientists across a range of disciplines. The data fusion provided two maps which are applicable for hydro-ecological applications, describing two specific categories including different types of burned and wetland classes.

Sertel, E. et al. in [20] discussed the use of deep learning-based segmentation approaches for LULC mapping using very high-resolution (VHR) satellite images. The authors highlighted the increasing importance of LULC mapping in various geospatial applications and the challenges associated with it due to the complexity of LULC classes. A novel benchmark dataset was created by the authors using VHR Worldview-3 images. This dataset has twelve unique LULC classes from two separate geographical areas. Then, the performance of several segmentation architectures and encoders was evaluated to determine the ideal layout for producing extremely precise LULC maps. The metrics used for evaluation included Intersection over Union (IoU), F-1 score, precision, and recall. The most optimal performance across several metric values was achieved by the DeepLabv3+ architecture combined with a ResNeXt50 encoder, which yielded an IoU of 89.46%, an F-1 score of 94.35%, a precision of 94.25%, and a recall of 94.49%. These results demonstrate the potential of deep learning-based segmentation approaches for accurate LULC mapping using VHR satellite images. The authors also propose that supervised, semi-supervised, or weakly supervised deep learning models may be utilized to create new segmentation algorithms using their benchmark dataset as a reference. They may also utilize the model findings to generalize other approaches and transfer learning, providing a valuable resource for future research in this field.

Ali, K. et al. in [21] discuss the use of deep learning methods for LULC classification in semi-arid regions using remote sensing imagery with a medium level of resolution. The authors emphasize the value of comprehensive LULC data for a variety of applications such as urban and rural planning, disaster management, and climate change adaptation. They observed that LULC classification has undergone a paradigm change with the rise of deep learning techniques, and they aimed to assess the efficacy of various Sentinel-2 image band combinations for LULC mapping in semi-arid regions using CNN models.

The results demonstrated that the four-band CNN model exhibits robustness in areas with semi-arid climates, even when dealing with a land cover that is complex in both spectral and spatial aspects. The results also revealed that the ten-band composite did not improve the overall accuracy of the classification, suggesting that using fewer bands may be sufficient for LULC mapping in semi-arid regions.

In [4], Allama, M. et al. examined how remote sensing and GIS techniques can be utilized to monitor changes in land use and land cover within the arid region of Fayoum, Egypt. The authors focused on the period between 1990 and 2018 to analyze the changes that occurred during this time and to identify the driving factors of these changes. The authors used Landsat satellite imagery and a supervised classification approach to categorize the LULC types in the study region. The authors used 4 Landsat images acquired in 1990, 2000, 2010, and 2018 to monitor changes in LULC over time. The study area was classified into six LULC classes, including cultivated land, barren land, water bodies, built-up areas, dunes, and vegetation. The authors used a supervised classification method to classify the Landsat images into four major LULC categories: agriculture, water bodies, urban areas, and bare land. They used the maximum likelihood classifier (MLC) to assign each pixel to one of the four classes based on the spectral signature of the pixel. The authors also used the Normalized Difference Vegetation Index (NDVI) to identify vegetation cover in the study area. The study’s findings demonstrated that LULC in the Fayoum Region has changed significantly over the study period. The authors found that agricultural areas and urban areas had expanded at the expense of water bodies and bare land. They also observed that the NDVI had decreased over time, indicating a decline in vegetation cover.

In [9], a new convolutional neural network (CNN) model called the Weight Feature Value CNN (WFCNN) was developed to conduct remote sensing image segmentation and extract more comprehensive land use information from the images. It comprised an encoder, a classifier, and a set of spectral features, as well as five semantic feature tiers acquired through the encoder. The model used linear fusion to hierarchically merge semantic characteristics, while an adjustment layer was employed to fine-tune them for optimal results. The model’s pixel-by-pixel segmentation outperformed other versions in terms of precision, accuracy, recall, and F1-score, indicating its potential advantages. The authors found that WFCNN can enhance the accuracy and automation of large-scale land use mapping and the extraction of other information using remote sensing imagery. The results indicated that there have been significant changes in LULC in the study area over the last three decades. The analysis showed that the built-up area grew from 0.79% in 1990 to 6.35% in 2018, while the cultivated land decreased from 32.48% in 1990 to 17.94% in 2018. The results also showed an increase in barren land and sand dunes, which might be attributed to the expansion of urban areas and the over-exploitation of natural resources.

Rousset, G. et al. in [22] aimed to identify the ideal deep learning configuration for LULC mapping in New Caledonia’s complicated subtropical climate. Five sample locations in New Caledonia were labeled by a human operator to build a dedicated dataset based on SPOT6 satellite data, with four serving as training sets and the fifth as a test set. Multiple architectures were trained and evaluated against a cutting-edge machine learning technique, XGBoost. The authors also evaluated the usefulness of common neo-channels generated from the raw data in the context of deep learning. The results indicated that the deep learning approach achieved results similar to XGBoost for land cover detection while surpassing it for land use detection. Additionally, the accuracy of the deep learning land use classification task was significantly increased by combining the output of the dedicated deep learning architecture’s land cover classification task with the raw channel input, achieving overall accuracies on the LC and LU detection tasks of 61.45% and 51.56%, respectively.

3. Materials and Methods

3.1. Study Area

Fayoum is located in the western region of Egypt, approximately 80 km southwest of Cairo. As depicted in Figure 2, the geographical location can be identified by the coordinates 29°18′35.82″ N latitude and 30°50′30.48″ E longitude. It is recognized for its magnificent natural landscapes and its historical significance, dating back to ancient times. In terms of land use and cover, Fayoum is largely dependent on agriculture, with a significant portion of the surrounding areas comprising expansive farmland. The location’s rich soil and its proximity to the Nile River render it an optimal location for farming, with crops such as wheat, corn, and fruit being cultivated in ample amounts. Furthermore, the city is home to many protected natural sites, such as the Wadi El Rayan and Qarun Lake Protected Areas, which attract many nature lovers and tourists. The surrounding land in the region features land cover types such as urban areas and desert landscapes. Furthermore, there is a mixture of modern and traditional architecture, and the city is renowned for its vibrant markets and ancient ruins, drawing tourists from around the globe.
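For use in GIS tools, coordinates given in degrees, minutes, and seconds are usually converted to decimal degrees. The sketch below shows that arithmetic, assuming the coordinates above are read as 29°18′35.82″ N and 30°50′30.48″ E; the function name `dms_to_decimal` is hypothetical and introduced only for illustration.

```python
def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees/minutes/seconds to signed decimal degrees."""
    value = degrees + minutes / 60.0 + seconds / 3600.0
    # South and West hemispheres are negative by convention.
    return -value if hemisphere in ("S", "W") else value


# Assumed reading of the study-area coordinates quoted in the text.
fayoum_lat = dms_to_decimal(29, 18, 35.82, "N")
fayoum_lon = dms_to_decimal(30, 50, 30.48, "E")
print(round(fayoum_lat, 5), round(fayoum_lon, 5))
```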

Figure 2.

The geographic location of Fayoum, a city situated in the north of the Western region of Egypt.

3.2. Dataset and Building Environment

For this particular case, a dataset covering a 10 km area of Fayoum city is employed. It comprises 65 images, each measuring 7939 × 3775 pixels and possessing a spatial resolution of 3.7 m per pixel. The images are sourced from a 4-band PlanetScope scene in the RGB color space, while additional pictures obtained from Google Earth are utilized to boost the resolution of the extracted images. The dataset does not have a pre-defined classification. Instead, the categories are identified manually, relying on the expertise of professionals. Table 1 presents the proposed eight classes along with the description/definition of the sub-classes for the land use and land cover (LULC) features within the primary class. The QGIS open-source tool, as well as an ArcGIS desktop, is utilized to create the model, and the entire process is conducted on a Windows 10 platform.

Table 1.

Proposed class types (LU), sub-class descriptions, cover (LC), and corresponding colors.

Classes are defined based on both the color and shape of the spatial features related to land cover. For example, the class “Tree” contains various samples depicting the different shapes and colors of trees that might appear in raster images. The spatial features, along with their corresponding class number/label, are stored in the vector layer. The vector layer is therefore composed of classes, which are used to categorize and organize the features within it. A combination of the vector layer and the raster satellite images is used to train the model. Figure 3 displays examples of the classes that have been identified and defined.

Figure 3.

Definition of classes on the vector layer.

3.3. Framework of Proposed LULCRV Classification Model

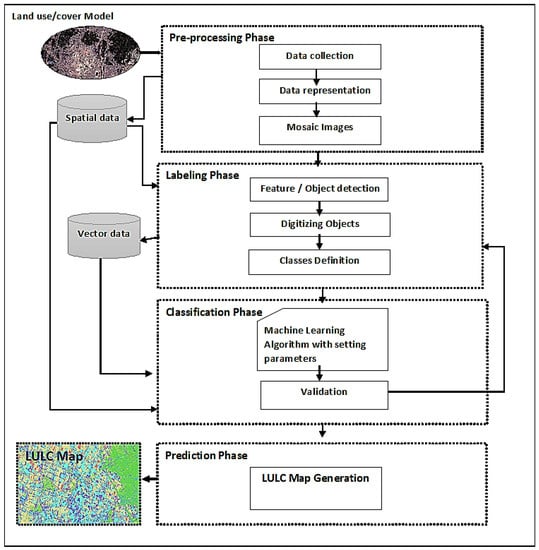

The LULC Raster Vector (LULCRV) classification model was developed based on machine learning (ML). It has four phases: pre-processing, labeling, model building and classification, and prediction, as shown in Figure 4.

Figure 4.

The proposed LULCRV classification model.

The four phases are discussed here in detail as follows:

- Pre-processing phase: This phase contains the following elements:

  - Data collection: This is the process of capturing data from the real world and/or satellites. This research focuses on Fayoum governorate in Egypt, using satellite images and visual interpretation by human experts. The captured images are 4-band images with a 3 m resolution and a ground sample distance of 3.7 m.

  - Data representation: This is the process of manually defining classes based on their types, colors, and shapes, as well as their associated unique attributes. A human expert’s experience is taken into consideration in both the definition and interpretation of classes.

  - Mosaic images: In LULC mapping, mosaic images are made by merging satellite or aerial photographs obtained at various periods or with different sensors. These photographs are then geo-referenced and mosaicked to form a single image covering the whole research region.

- Labeling phase: This involves assigning specific labels to detected objects using the following three steps:

  - Detecting features and defining objects: Object detection relies on spatial features such as shape, color, and boundary, and involves defining samples that represent each feature within a given class.

  - Digitizing objects: This process involves creating a pattern vector layer for each distinct feature or object in a raster dataset, followed by digitizing and storing these features on the respective layer.

  - Defining classes: Initially, a group of classes is established based on the interpreted entities and their features. Subsequently, a class label is assigned to each digitized object based on the attributes of each class, as well as the shapes and colors of the objects.

- Model building phase: The classifier model is created by applying one or more machine learning classifiers to the training dataset. This phase is composed of two primary processes, the development of the ML classifier and validation:

  - Development of the ML classifier: Development begins with model selection, i.e., choosing the appropriate machine learning algorithm or approach for the problem at hand. Model training follows, in which the dataset is used to train the machine learning model; this involves finding the optimal values of the model parameters and/or hyperparameters through an iterative optimization process. Finally, model evaluation assesses the performance of the trained model on a validation dataset to ensure that it generalizes well to new, unseen data.

    In the proposed model, a set of ML classification algorithms such as SVM, random forests (RFs), Normal Bayes (NB), and ANN are applied to the training dataset. The ML classifier model takes two main inputs: the raster mosaic image and a vector layer that includes pre-defined samples. The parameter values of each machine learning classifier are assigned by considering the outcomes obtained, as discussed in detail in Section 4.

  - Validation: After the development of the ML classifier, the validation process follows. Validation is the process of re-defining non-categorized areas that the model did not classify. This process ensures that the model’s output is reliable and accurate by comparing it with the ground truth or known data. In this way, the model’s performance can be evaluated, and necessary adjustments or modifications can be made to enhance its accuracy and efficiency.

- Prediction phase: The final phase is prediction, which produces a classified map that displays the predicted land use and land cover classes of the new area.
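The link between the labeling phase and the model building phase can be sketched as follows: every pixel of the raster mosaic that falls inside a digitized object inherits that object's class number, giving (pixel values, label) training pairs. This is a minimal illustration under stated assumptions and not the authors' implementation. The toy raster values, the bounding-box stand-in for real polygons, and the names `make_toy_raster`, `digitized_objects`, and `extract_training_samples` are all hypothetical.

```python
def make_toy_raster(rows, cols):
    """Build a toy 4-band raster; raster[r][c] is a (red, green, blue, nir) tuple.

    The band values are invented for illustration only.
    """
    return [[(r + c, r, c, 2 * r + c) for c in range(cols)] for r in range(rows)]


# Hypothetical stand-in for the vector layer: each digitized object is an
# axis-aligned box (row_min, row_max, col_min, col_max) with a class number.
# Real polygons would need a point-in-polygon test or a rasterization step.
digitized_objects = [
    {"bbox": (0, 1, 0, 1), "label": 1},  # e.g. residential
    {"bbox": (2, 3, 2, 4), "label": 2},  # e.g. agriculture
]


def extract_training_samples(raster, objects):
    """Pair every pixel inside a digitized object with that object's label."""
    samples = []
    for obj in objects:
        r0, r1, c0, c1 = obj["bbox"]
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                samples.append((raster[r][c], obj["label"]))
    return samples


raster = make_toy_raster(4, 5)
samples = extract_training_samples(raster, digitized_objects)
print(len(samples), samples[0])
```

The resulting list of pairs is the kind of input that any of the classifiers named above could be trained on.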

4. Results and Discussion

4.1. Results

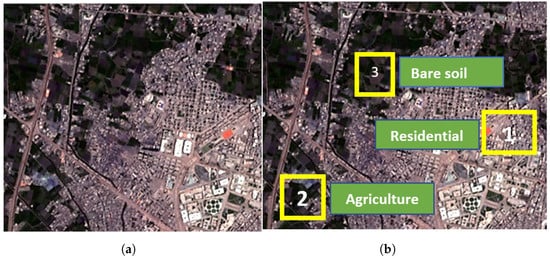

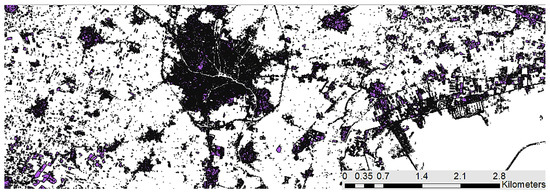

The initial step involved collecting and representing the data, after which a mosaic image was produced from input images that contained multiple bands of data. The mosaic is a high-resolution image with 4 bands (RGB and near-infrared) generated by re-sampling the input images using the k-nearest neighbor approach. Once the mosaic image shown in Figure 5a is generated, the characteristics of the various objects within it are identified and used to classify them according to their shape, color, type, and the land cover they appear on. The resulting data are divided into eight distinct categories, each with detailed descriptions of their sub-categories as listed in Table 1. At this stage, the set of categories is introduced and a training dataset is generated. The process involves digitizing the detected objects for each class and saving them as a separate category in the vector layer. Subsequently, each category within the training dataset is assigned a distinct number. As depicted in Figure 5b, the numbers 1, 2, and 3 represent three specific types from the defined classes: residential, agriculture, and bare soil. These numbers symbolize the categories, while the shapes depict the digitized area associated with each type. Finally, a distinct color is assigned to each category, as illustrated in Table 1.

Figure 5.

Training set: (a) Raster mosaic and (b) vector layer. (a) Mosaic output layer that creates multi-input images from 4-band images. (b) Vector layer containing digitized objects and their class number.

The classifiers used to train and build the proposed LULCRV model are SVM, RF, decision tree (DT), NB, and ANN, which are discussed later in detail. To conduct the classification process, the images fed into the model are divided into a collection of samples based on the vector layer. A sampling technique is applied to all categories that limits the number of samples per category to the size of the smallest category, referred to as the “number”. In this case, the smallest class is class 2, with 119 samples. These samples are then split into two equal sets of 50% each for training and testing purposes.
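The sampling strategy described above can be sketched as follows: every class is downsampled to the size of the smallest class and the result is split in half for training and testing. This is a minimal sketch, not the authors' tooling. The function name `balanced_split`, the fixed seed, and the sample counts for classes 1 and 3 are assumptions; only the 119 samples for class 2 come from the text.

```python
import random


def balanced_split(samples_by_class, seed=0):
    """Downsample every class to the smallest class size, then split 50/50."""
    rng = random.Random(seed)
    n_min = min(len(items) for items in samples_by_class.values())
    half = n_min // 2
    train, test = [], []
    for label, items in samples_by_class.items():
        chosen = rng.sample(items, n_min)  # sampling without replacement
        train += [(x, label) for x in chosen[:half]]
        test += [(x, label) for x in chosen[half:]]
    return n_min, train, test


# Invented sample identifiers; only the count of 119 for class 2 is from the text.
samples_by_class = {
    1: list(range(300)),
    2: list(range(119)),
    3: list(range(250)),
}
n_min, train, test = balanced_split(samples_by_class)
print(n_min, len(train), len(test))
```

Because 119 is odd, an exact 50% split is not possible; this sketch places the extra sample of each class in the test set.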

The accuracy of classification is measured using five measures: precision, recall, f-score, kappa index, and the confusion matrix. Precision, recall, and f-score are calculated from the true positives (TP), i.e., correctly recognized objects; the false negatives (FN), i.e., non-detected objects; and the false positives (FP), i.e., incorrectly identified objects. The f-score, precision, and recall values range from 0 to 1, where 0 means low identification for a specific class and 1 means ideal identification. Equations (1)–(3) describe precision, recall, and f-score, respectively.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{1}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

$$\text{F-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}$$
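As an illustration of how these measures follow from a confusion matrix, the sketch below computes per-class precision, recall, and f-score, plus a kappa value. It assumes that the kappa index refers to Cohen's kappa, which the text does not state explicitly; the confusion matrix values and the function names are invented for the example.

```python
def per_class_metrics(cm):
    """Return (precision, recall, f-score) per class.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, but other class
        fn = sum(cm[k]) - tp                       # true k, but predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f_score = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f_score))
    return metrics


def cohen_kappa(cm):
    """Cohen's kappa: agreement beyond what is expected by chance (assumed definition)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    observed = sum(cm[k][k] for k in range(n)) / total
    expected = sum(
        sum(cm[k]) * sum(cm[i][k] for i in range(n)) for k in range(n)
    ) / total ** 2
    return (observed - expected) / (1 - expected)


# Invented 3-class confusion matrix, for illustration only.
cm = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
metrics = per_class_metrics(cm)
kappa = cohen_kappa(cm)
print(metrics[0], kappa)
```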

4.1.1. Heuristic Models

SVM is a machine learning technique that has been employed in LULC classification. The SVM algorithm identifies a hyperplane that maximizes the separation between the various classes in the data based on the attributes or features of the data. The SVM algorithm is well suited for LULC classification tasks due to its ability to manage complex, non-linear relationships between the features and classes [23]. It can also accommodate large datasets with numerous attributes or features. Moreover, SVM is robust when handling noisy data, which makes it a valuable tool in LULC classification scenarios where the input imagery may be prone to noise or inaccuracies. The SVM formula can be written as follows:
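Assuming the usual kernel formulation with binary labels $y_i \in \{-1, +1\}$, training samples $x_i$, and a bias term $b$ (these three symbols are assumptions, since the text does not define them), the SVM decision function can be sketched in the following standard form, where $\alpha_i$, $C$, and $K$ are the coefficients, cost parameter, and kernel function discussed in this section:

```latex
f(x) = \operatorname{sign}\left( \sum_{i=1}^{n} \alpha_i \, y_i \, K(x_i, x) + b \right),
\qquad 0 \le \alpha_i \le C
```

Only the training samples with $\alpha_i > 0$ contribute to the sum, which is why they are the ones called support vectors.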

Coefficients ($\alpha_i$) in the model are constrained to be non-negative, and any data point that corresponds to an $\alpha_i$ value greater than zero is considered a support vector. The parameter C is used to balance training accuracy and model complexity, allowing for superior generalization capabilities. Additionally, a kernel function K is employed to transform the data into a higher-dimensional feature space, enabling linear separation.

SVM employs two cost parameters: C and Nu. C balances the maximization of the margin of the decision function against the accurate classification of training examples. For higher values of C, a smaller margin is accepted if the decision function classifies more training points correctly. Conversely, a lower value of C produces a wider margin at the expense of training precision. Essentially, C acts as a regularization parameter in the SVM. The parameter “Nu” sets an upper limit on the fraction of margin errors and a lower limit on the fraction of support vectors relative to the total number of training examples. For example, if Nu is set to 0.05, at most 5% of the training examples are allowed to be margin errors (misclassified or lying inside the margin), although this may result in a smaller margin. Likewise, at least 5% of the training examples will be support vectors [23].

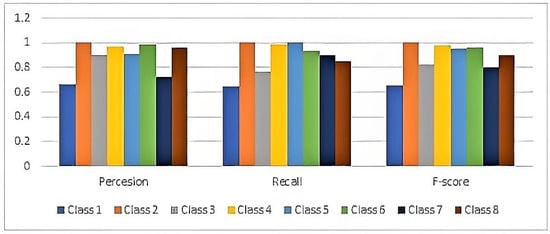

The precision, recall, and f-score values obtained from the SVM confusion matrix are shown in Table 2. In summary, class 7 has the lowest precision of 0.75; class 1 has the lowest recall and f-score values of 0.66 and 0.72, respectively; class 2 has the highest precision, recall, and f-score values of 1; and class 4 has values of 0.98, as shown in Figure 6.

Table 2.

Precision, recall, and f-score values of proposed eight classes using the SVM classifier.

Figure 6.

Graphs of precision, recall, and f-score values of proposed eight classes using SVM classifier.

Random Forest (RF) is a machine learning algorithm capable of performing classification and regression tasks. RF has demonstrated considerable success in LULC mapping tasks, which involve identifying and categorizing the types of LULC present within a given geographic area [24]. The RF algorithm constructs a forest of decision trees from a training set X = {x1, x2, …, xn} with features F = {f1, f2, f3, …, fm}. For each tree, a new training set of size N is formed by randomly drawing N samples from the original set with replacement, using a subset of Y features, where Y is the number of features in the new samples and Y ≤ m. As a result, some samples may be repeated in the new set while others may be absent [25,26,27].
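The resampling step described above can be sketched as follows: rows are drawn with replacement and a random subset of Y feature columns is kept. This follows the per-tree description in the text; many RF implementations instead draw the feature subset at each split. The function name `bootstrap_with_feature_subset`, the seed, and the toy data are hypothetical.

```python
import random


def bootstrap_with_feature_subset(X, n_features_sub, seed=0):
    """Draw len(X) rows with replacement and keep a random subset of features."""
    rng = random.Random(seed)
    n, m = len(X), len(X[0])
    # Y <= m feature indices, chosen without replacement.
    feature_ids = sorted(rng.sample(range(m), n_features_sub))
    # N row indices, chosen with replacement, so repeats and omissions can occur.
    row_ids = [rng.randrange(n) for _ in range(n)]
    sample = [[X[i][j] for j in feature_ids] for i in row_ids]
    return sample, row_ids, feature_ids


# Invented data: 6 samples with m = 4 features each.
X = [[i, 10 * i, 100 * i, 1000 * i] for i in range(6)]
sample, row_ids, feature_ids = bootstrap_with_feature_subset(X, n_features_sub=2)
print(row_ids, feature_ids)
```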

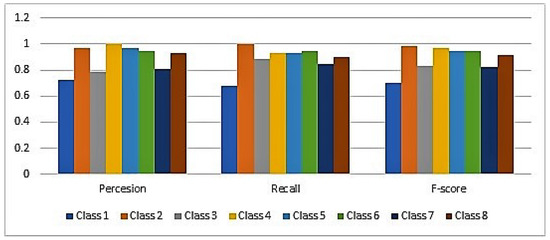

The precision, recall, and f-score values obtained from the RF classifier/confusion matrix are shown in Table 3. Class 1 has the lowest and class 2 the highest of all these measures, followed by classes 4, 5, and 6, as shown in Table 3 and Figure 7.

Table 3.

Precision, recall, and f-score values of proposed eight classes using RF classifier.

Figure 7.

Graphs of precision, recall, and f-score values of proposed eight classes using RF classifier.

A Decision Tree (DT) is a machine learning algorithm that can be used for classification and regression tasks. Several parameters can be adjusted when using decision trees for LULC classification, such as the depth of the tree, the splitting criterion, and the minimum number of samples required to split a node [28]. The DT method can be expressed as follows: given a dataset D = {(x1, y1), (x2, y2), …, (xn, yn)}, where xi represents the features of the ith sample and yi is the corresponding label, the samples at a node of the tree T are split according to the value(s) of a selected feature, and a child node is created for each resulting subset. This process is repeated recursively for each child node until a stopping criterion is met, such as reaching a maximum tree depth, reaching a minimum number of samples per node, or achieving a certain level of homogeneity (impurity). Table 4 shows the input values of the parameters for RF and DT [27,29]. As shown in Table 4, the maximum tree depth in RF and DT is limited to 30 to simplify the trees and improve their generalization performance. The optimal parameter values for DT and RF depend on the specific dataset and problem being solved, and can be determined through hyperparameter tuning.

Table 4.

Input values of parameters in DT and RF classifiers.
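A single splitting step of the kind described for DT can be sketched as below. The text does not name the splitting criterion, so Gini impurity is used here purely as an assumption; the function names, the feature values, and the class labels are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 means perfectly homogeneous)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split(values, labels):
    """Return (threshold, weighted impurity) of the best split 'value <= threshold'."""
    best = (None, gini(labels))  # no split unless it lowers the impurity
    n = len(values)
    for t in sorted(set(values))[:-1]:  # the largest value cannot split anything off
        left = [y for v, y in zip(values, labels) if v <= t]
        right = [y for v, y in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (t, score)
    return best

# Hypothetical near-infrared values for six training pixels
nir = [12, 15, 20, 80, 85, 90]
labels = ["water", "water", "water", "vegetation", "vegetation", "vegetation"]
print(best_split(nir, labels))  # (20, 0.0)
```

A full tree would apply `best_split` recursively to each child node until one of the stopping criteria above is met, for example the maximum depth of 30.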

As shown in Table 5, the most reliable predictions are those for class 2 and the least reliable are those for class 1. For class 1, the precision, recall, and f-score are 0.727273, 0.677966, and 0.701754, respectively, the lowest of all classes. For class 2, they are 0.967213, 1, and 0.983333, respectively; that is, every sample belonging to class 2 was predicted correctly. This implies that, under the DT algorithm, the greatest interference occurs between class 1 and the other classes, while class 2 is separated with non-interference. Figure 8 plots the precision, recall, and f-score of each class, contrasting the classes with high and low ratings.

Table 5.

Precision, recall, and f-score of proposed eight classes using DT classifier.

Figure 8.

Graphs of precision, recall, and f-score values of proposed eight classes using DT classifier.
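The DT values quoted for classes 1 and 2 can be reproduced from true-positive (TP), false-positive (FP), and false-negative (FN) counts. The counts below are not taken from the confusion matrix itself; they are inferred from the reported ratios under the assumption of 59 reference samples per class, so they should be read as one consistent possibility rather than the actual figures.

```python
def metrics_from_counts(tp, fp, fn):
    """Precision, recall, and f-score from true/false positive and false negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score

# Inferred (assumed) counts: 59 reference samples per class
class1 = metrics_from_counts(tp=40, fp=15, fn=19)
class2 = metrics_from_counts(tp=59, fp=2, fn=0)

print([round(v, 6) for v in class1])  # [0.727273, 0.677966, 0.701754]
print([round(v, 6) for v in class2])  # [0.967213, 1.0, 0.983333]
```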

Normal Bayes (NB) is a machine learning algorithm that assumes the input features are independent and follow a Gaussian distribution. It is often used for classification tasks, such as LULC mapping, where the goal is to classify different types of LULC based on a set of input features [30]. By training on labeled data, NB can learn the relationship between these input features and the corresponding LULC classes, allowing it to accurately predict the class of new, unlabeled data. NB can be expressed as

$$P(y \mid X) = \frac{P(X \mid y)\,P(y)}{P(X)}$$

where y is the class variable, X represents the parameters/features, and the classifier assigns the class with the maximum probability.
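Under the independence and Gaussian assumptions stated above, the classifier can be sketched in a few lines. This is an illustrative version only: the function names and the two-feature toy samples are hypothetical, and the software used in the study may estimate the class distributions differently.

```python
import math

def fit_gaussian_nb(X, y):
    """Estimate the prior and the per-feature mean and variance of each class."""
    model = {}
    for label in sorted(set(y)):
        rows = [x for x, t in zip(X, y) if t == label]
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9  # small floor avoids division by zero
            for col, m in zip(zip(*rows), means)
        ]
        model[label] = (n / len(X), means, variances)
    return model

def predict(model, x):
    """Return the class with the largest log posterior (up to a shared constant)."""
    def log_posterior(params):
        prior, means, variances = params
        total = math.log(prior)
        for v, m, var in zip(x, means, variances):
            total += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        return total
    return max(model, key=lambda label: log_posterior(model[label]))

# Hypothetical two-feature samples for two classes
X = [(0.10, 0.05), (0.12, 0.04), (0.11, 0.06),
     (0.05, 0.45), (0.06, 0.50), (0.04, 0.48)]
y = [1, 1, 1, 2, 2, 2]

model = fit_gaussian_nb(X, y)
print(predict(model, (0.11, 0.05)), predict(model, (0.05, 0.47)))
```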

The precision, recall, and f-score values obtained from the NB classifier’s confusion matrix are shown in Table 6 and mapped in Figure 9. The lowest values are for classes 1 and 3, and the highest for classes 2, 4, 5, 8, 6, and 7.

Table 6.

Precision, recall, and f-score values of proposed eight classes using NB classifier.

Figure 9.

Graphs of precision, recall, and f-score of proposed eight classes using NB classifier.

4.1.2. Artificial Neural Networks

We applied Artificial Neural Networks (ANNs), a broad class of machine learning models that can be used for LULC classification. The forward pass of a feedforward ANN can be described by the general Equation (6):

$$Y = \mathrm{softmax}\left(W_2\, f(W_1 x + b_1) + b_2\right) \tag{6}$$

The model’s specifications include training with 1000 iterations, 5 nodes in the hidden layer, and classification into 8 distinct classes. In Equation (6), $x$ is the input, $Y$ is the output (a vector with 8 elements, one for each land cover class), $W_1$ is the weight matrix for the input layer (with dimensions 5 × n, where n represents the total number of input features included in the model), $W_2$ is the weight matrix for the output layer (with dimensions 8 × 5), $b_1$ is the bias vector for the input layer (with length 5), and $b_2$ is the bias vector for the output layer (with length 8) [26,27]. The activation function $f$ used in the hidden layer is the hyperbolic tangent function (tanh), Equation (7):

$$f(z) = \tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}} \tag{7}$$

The softmax function is applied to the output layer as the activation function [31,32]; it normalizes the outputs of the network so that they sum to 1 and represent a probability distribution over the 8 classes, as in Equation (8):

$$\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{8} e^{z_j}}, \qquad i = 1, \ldots, 8 \tag{8}$$
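The forward pass described in this subsection (one hidden layer of 5 tanh nodes followed by a softmax over 8 classes) can be sketched as follows. The weights here are random placeholders rather than trained values, the choice of 4 input features is an assumption made for illustration, and all variable names are hypothetical.

```python
import math
import random

def forward(x, W1, b1, W2, b2):
    """One forward pass: tanh hidden layer, then softmax over the output layer."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    logits = [sum(w * h for w, h in zip(row, hidden)) + b
              for row, b in zip(W2, b2)]
    peak = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

rng = random.Random(0)
n_inputs, n_hidden, n_classes = 4, 5, 8     # 4 inputs is an assumption
W1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]  # 5 x n
b1 = [0.0] * n_hidden
W2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_classes)]  # 8 x 5
b2 = [0.0] * n_classes

probs = forward([0.2, 0.3, 0.1, 0.6], W1, b1, W2, b2)
predicted_class = probs.index(max(probs)) + 1  # classes numbered 1..8
print([round(p, 3) for p in probs], predicted_class)
```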

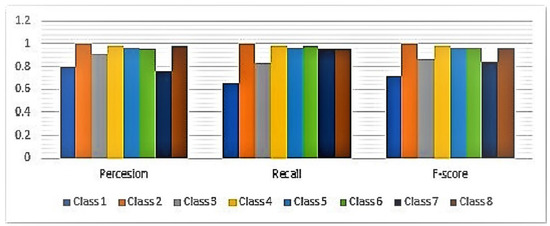

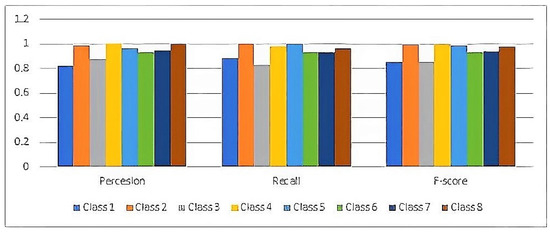

The precision, recall, and f-score values obtained from the ANN classifier’s confusion matrix are shown in Table 7 and Figure 10. The values for all classes lie between 0.92 and 1, which is higher than those of the other classifiers used.

Table 7.

Precision, recall, and f-score values of proposed eight classes using ANN classifier.

Figure 10.

Graphs of precision, recall, and f-score values of proposed eight classes using ANN classifier.

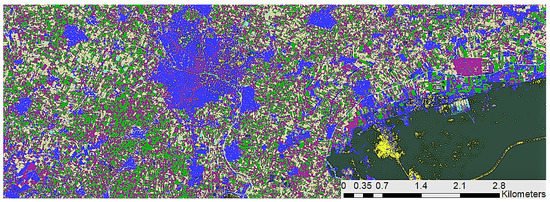

4.2. Prediction

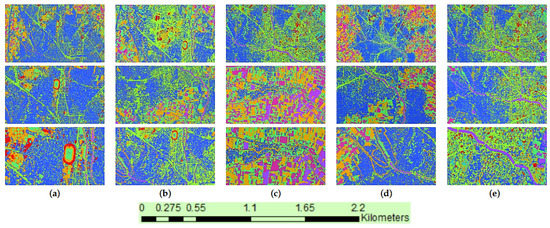

The proposed LULCRV model generates and predicts an LULC map. Figure 11 shows the LULC maps generated by the proposed LULCRV: columns (a), (b), (c), (d), and (e) show the maps generated on different scales by the DT, RF, SVM, NB, and ANN models, respectively.

Figure 11.

LULC map generation of the proposed LULCRV, columns (a–e) show the generated LULC maps on different scales from the DT, RF, SVM, NB, and ANN models, respectively. (a) Prediction map for DT. (b) Prediction map for RF. (c) Prediction map for SVM. (d) Prediction map for NB. (e) Prediction map for the ANN model.

Table 8 lists the average area of each class as it appears on the generated LULC map.

Table 8.

The average area for each class as it appears on the LULC map.
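As a rough illustration of how a per-class area follows from a classified raster, each pixel at 3 m spatial resolution covers 9 m², so the area of a class is its pixel count multiplied by 9 m². The sketch below assumes a map stored as nested lists of class numbers; the function name and the tiny example map are hypothetical, and how the averages in Table 8 were formed is not stated in the text.

```python
from collections import Counter

PIXEL_SIZE_M = 3.0  # spatial resolution stated in the paper

def class_areas_m2(class_map):
    """Area in square metres covered by each class number in a classified raster."""
    counts = Counter(value for row in class_map for value in row)
    pixel_area = PIXEL_SIZE_M ** 2
    return {label: n * pixel_area for label, n in sorted(counts.items())}

# Hypothetical 2 x 3 classified map
toy_map = [
    [1, 1, 2],
    [2, 2, 3],
]
areas = class_areas_m2(toy_map)
print(areas)  # {1: 18.0, 2: 27.0, 3: 9.0}
```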

To evaluate the accuracy of an individual map, we compare it with the reference data and determine the level of agreement between them [33]. The reference data in Figure 12 comprises a vector layer created by generating random samples that are unlabeled initially, as in Figure 13. Subsequently, we assign labels to each of these points.

Figure 12.

Reference data: vector layer of random samples.

Figure 13.

A thematic map that has been produced through prediction.

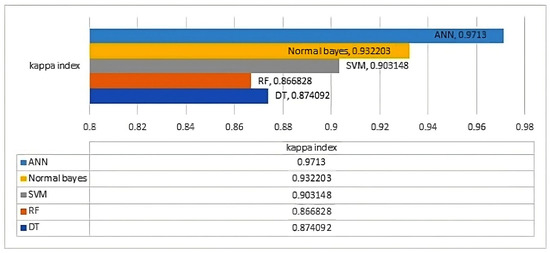

Kappa Index

The kappa index is measured for the five models and recorded in Figure 14. It is computed with Cohen’s kappa formula: the probability of random agreement is subtracted from the probability of observed agreement, and the result is divided by 1 minus the probability of random agreement. The higher this index is, the more closely the prediction agrees with the reference data. The ANN has the highest kappa index (0.97130), NB is second (0.932203), and SVM is third (0.903914), while the DT and RF models have lower values.

Figure 14.

Comparison of kappa indices for the DT, RF, SVM, NB, and ANN models.
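Cohen’s kappa, as used for the kappa index in this subsection, can be computed directly from a confusion matrix of reference labels against predicted labels. The sketch below uses a hypothetical two-class matrix, not the study’s data, and the function name is an assumption.

```python
def cohens_kappa(confusion):
    """Cohen's kappa; confusion[i][j] counts reference class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(n)) / total
    # Chance agreement: sum over classes of (row total * column total) / total^2
    expected = sum(
        sum(confusion[i]) * sum(confusion[r][i] for r in range(n))
        for i in range(n)
    ) / (total * total)
    return (observed - expected) / (1.0 - expected)

# Hypothetical two-class confusion matrix
confusion = [
    [45, 5],
    [10, 40],
]
kappa = cohens_kappa(confusion)
print(round(kappa, 3))  # 0.7
```

Here the observed agreement is 0.85 and the chance agreement is 0.5, so kappa = (0.85 − 0.5) / (1 − 0.5) = 0.7.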

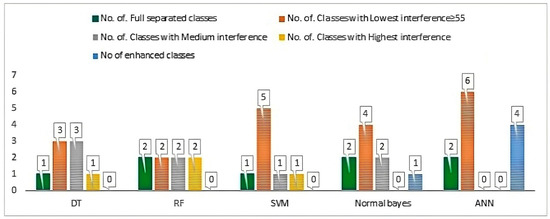

4.3. Analysis and Discussion

Table 9 summarizes and compares a set of elements that characterize each model. They are defined as follows: the number of fully separated classes with non-interference, in which all samples are correctly predicted; the number of classes with low interference, in which at least 55 of 59 samples are correctly predicted; the number of classes with medium interference, in which 49 to 54 of 59 samples are correctly predicted; and the number of classes with high interference, in which 38 to 48 of 59 samples are correctly predicted. The number of enhanced classes is the number of classes that move from the medium- or high-interference categories into the low- or non-interference ones.

Table 9.

Summary of results obtained by various classification models.
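The interference categories defined above amount to a simple threshold rule on the number of correctly predicted samples out of 59. The following sketch encodes that rule; the function names are hypothetical, and counts below 38 are outside the ranges given in the text, so they are flagged rather than assigned a category.

```python
def interference_level(correct, total=59):
    """Map the number of correctly predicted samples to the paper's categories."""
    if correct == total:
        return "none"      # fully separated class
    if correct >= 55:
        return "low"
    if correct >= 49:
        return "medium"
    if correct >= 38:
        return "high"
    return "below reported range"

def is_enhanced(before, after, total=59):
    """True when a class moves from medium/high interference to low/none."""
    return (interference_level(before, total) in {"medium", "high"}
            and interference_level(after, total) in {"none", "low"})

for correct in (38, 52, 58, 59):
    print(correct, interference_level(correct))
print(is_enhanced(38, 58), is_enhanced(38, 52))  # True False
```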

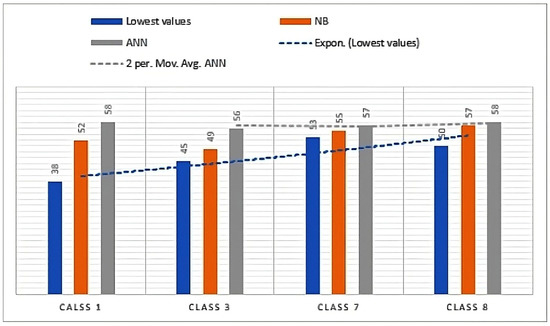

As shown in Table 9 and Figure 15, both NB and ANN enhance the separation of high-interference classes through better identification of objects in the learning process and better prediction of these objects in the classification process, which reduces the number of high-interference classes by turning them into low-interference ones.

Figure 15.

Comparison of fully separated classes with non-interference and high-interference results.

Regarding the LULCRV model, it is observed that classes 1 and 3 (residential and bare soil, respectively) have high interference with class 7 (roads). The reason for this is that there are common features of the objects belonging to these classes in the captured images. Similar features are described by color, band length, and sometimes shape. To solve the problem of interference, the vector layer with color segments is defined, enhanced, and updated for each class.

The ANN classifier achieves better performance across all classes than the other models. It relies on accurate input that captures the patterns the network must learn and on pre-processing in which the input mosaic is re-sampled with the KNN algorithm to obtain a finer resolution. Furthermore, high-resolution images and two forms of training vector data are used in the training model. The first is object-based and holds the features of objects, such as boundaries, colors, and shapes; the second is pixel-based and is captured randomly from different samples on the generated LULC map to measure its accuracy.

For the NB classifier, the key to success is correctly predicting an incoming test instance from the classes of the training instances. It does not require a large number of observations for each possible combination of variables, which is especially useful when working with high-dimensional input data, such as a dataset with dimensions of 7939 × 3775 pixels. Additionally, the effect of a variable on a particular class is assumed to be independent of the values of the other variables. Although this assumption simplifies the computation, NB can occasionally outperform more complex models.

The iterative learning of the ANN is the main explanation for its stronger class separation and estimation. Once the number of layers and the number of nodes in each layer are determined, the configuration of the back-propagation ANN can be established. The learning task then involves assigning appropriate weights to every edge (link) to ensure accurate classification of the unlabeled data. During back-propagation learning, the weights and biases are initially assigned random values in the range [0, 1]. An instance is then presented to the network, which produces an output based on its current synaptic weights. This output is compared to the known correct output, and a mean-square error is computed. The error is propagated backwards through the network, and the weights of each layer are adjusted. This process is repeated for each instance in turn and then returns to the first instance, continuing in a loop until the cumulative error falls below a predetermined threshold.
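The learning loop described above can be sketched for a very small network. This is an illustration under several assumptions that are not taken from the study: a single sigmoid output unit, two tanh hidden nodes, a learning rate of 0.5, a toy logical-OR dataset, and an epoch cap added so that the loop always terminates. All names are hypothetical.

```python
import math
import random

def train(data, n_hidden=2, lr=0.5, threshold=0.01, max_epochs=5000, seed=0):
    """Online back-propagation; returns the cumulative error of every epoch."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Weights and biases start as random values in [0, 1]
    W1 = [[rng.random() for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [rng.random() for _ in range(n_hidden)]
    W2 = [rng.random() for _ in range(n_hidden)]
    b2 = rng.random()
    history = []
    for _ in range(max_epochs):
        cumulative = 0.0
        for x, target in data:
            # Forward pass with the current weights
            h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                 for row, b in zip(W1, b1)]
            out = 1.0 / (1.0 + math.exp(-(sum(w * hi for w, hi in zip(W2, h)) + b2)))
            err = out - target
            cumulative += 0.5 * err * err          # squared error of this instance
            # Backward pass: propagate the error and adjust each layer
            delta_out = err * out * (1.0 - out)
            delta_h = [delta_out * W2[j] * (1.0 - h[j] ** 2) for j in range(n_hidden)]
            for j in range(n_hidden):
                W2[j] -= lr * delta_out * h[j]
                for i in range(n_in):
                    W1[j][i] -= lr * delta_h[j] * x[i]
                b1[j] -= lr * delta_h[j]
            b2 -= lr * delta_out
        history.append(cumulative)
        if cumulative < threshold:                 # stop once the error is small enough
            break
    return history

# Toy dataset (logical OR), used only to exercise the loop
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]
history = train(data)
print(len(history), round(history[0], 4), round(history[-1], 4))
```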

It can be concluded that classes 1 and 3 are transformed from high-interference classes to medium-interference ones by the NB model and from high- to low-interference ones by the ANN model. Figure 16 illustrates the difference and enhancement of the transformed groups; for instance, the number of well-recognized samples rises from 38 to 52 with the NB model and to 58 with the ANN classifier.

Finally, the proposed LULCRV model is compared with previous ones. Allam, M., in [4] used satellite images with a 30 m spatial resolution to identify changes in LULC patterns in the Fayoum city of Egypt. However, the image classification dataset was limited to four distinct classes, while the proposed LULCRV model is applied to 3.0 m spatial resolution satellite images of the same region. High-resolution images can detect large numbers of objects and their characteristics, whereas the previous study relied on 6 RGB 3-band images. Furthermore, the proposed model uses 65 4-band images and applies 5 classifiers (the DT, RF, SVM, NB, and ANN models), while the previous related work used the MLC for classification. Finally, the proposed LULCRV outperforms the related work, achieving 98% accuracy against 96%.

Krueger, H., in [17] applied RF and SVM classifiers and attained an accuracy of 0.85 and a kappa of 0.81 for RF, compared with 0.86 for the proposed LULCRV with RF. The main difference is that the SVM and RF were applied to a large data 211 spot. This prevented the use of NNs, including an ANN, which may be more robust to the large size of satellite images, with pictures also captured by 3-band radar.

Sicre, C.M., in [8] developed a WFCNN model that was trained using 6 4-band aerial images and then applied to 587 labeled images for testing. Two versions of the model were tested using Gaofen 6 and aerial images, and their performance was compared against SegNet, U-Net, and RefineNet. The precision, recall, and f1-score values of the WFCNN model were superior to those of the other models, indicating the advantages of performing pixel-by-pixel segmentation. There is little difference between the LULCRV and WFCNN classification models in terms of precision, recall, and f-score values because of the 4-band images and deep neural network used in both. Comparisons of the three elements of dataset, model, and result for the previously mentioned studies and the proposed LULCRV model are provided in Table 10.

Figure 16.

Enhancements of interference classes by the NB and ANN classifier models.

Table 10.

A comparison between the proposed study and related studies.

5. Conclusions

In this research, a prediction model for LULC is proposed. It distinguishes 8 land use/cover classes using multi-spectral high-resolution satellite images with a 3.0 m spatial resolution. The main objective is to build an ML classification model for land use/cover, called LULCRV. It learns from high-resolution multi-spectral images and vector data: the raster image holds the band information of specific pixels, while the vector layer contains the definitions of the classes along with the corresponding class numbers. LULCRV involves pre-processing, labeling, model building, and prediction phases. During the pre-processing phase, the input data, comprising a vector training set and raster mosaic images, are prepared for the proposed LULCRV model. In the labeling phase, objects are extracted from the input images based on their features, colors, shapes, and boundaries, and are then assigned a class number and stored in a vector layer. The model building phase builds the proposed LULCRV model using a set of classifiers, namely DT, RF, SVM, NB, and ANN. The prediction phase generates an LULC map for the entire satellite image. The performance of the model is measured using precision, recall, f-score, and the kappa index. It is observed that the ANN achieves higher accuracy measures than the others.

Author Contributions

Conceptualization, R.M. and R.M.B.; methodology, R.M., M.H. and R.M.B.; software, R.M. and M.H.; validation, H.A.F. and M.H.; formal analysis, R.M.B. and R.M.; resources, R.M.; writing—original draft preparation, R.M.; writing—review and editing, R.M., R.M.B. and H.A.F.; visualization, M.H.; supervision, H.A.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available to researchers from Planet (https://www.planet.com/) and at https://github.com/RehabMAbdelraheem/lulc.git (accessed on 11 April 2023).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LULC | Land use and land cover |
| LULCRV | Land use and land cover raster vector model |
| LC | Land cover |
| DT | Decision tree |
| SVMs | Support vector machines |
| RFs | Random forests |
| NB | Normal Bayes |
| ANN | Artificial neural network |
| ML | Machine learning |
| TP | True positive |
| FN | False negative |
| FP | False positive |

References

1. Nguyen, T.T.; Hoang, T.D.; Pham, M.T.; Vu, T.T.; Nguyen, T.H.; Huynh, Q.T.; Jo, J. Monitoring agriculture areas with satellite images and deep learning. Appl. Soft Comput. 2020, 95, 106565.
2. Malarvizhi, K.; Kumar, S.V.; Porchelvan, P. Use of high-resolution Google Earth satellite imagery in landuse map preparation for urban related applications. Procedia Technol. 2016, 24, 1835–1842.
3. Viana, C.M.; Oliveira, S.; Oliveira, S.C.; Rocha, J. Land Use/Land Cover Change Detection and Urban Sprawl Analysis; Elsevier: Amsterdam, The Netherlands, 2019; pp. 621–651.
4. Allam, M.; Bakr, N.; Elbably, W. Multi-temporal assessment of land use/land cover change in arid region based on landsat satellite imagery: Case study in Fayoum Region, Egypt. Remote Sens. Appl. Soc. Environ. 2019, 14, 8–19.
5. Li, Y.F.; Liu, C.C.; Zhao, W.P.; Huang, Y.F. Multi-spectral remote sensing images feature coverage classification based on improved convolutional neural network. Math. Biosci. Eng. 2020, 17, 4443–4456.
6. Gu, Y.; Liu, T.; Gao, G. Multimodal hyperspectral remote sensing: An overview and perspective. Sci. China Inf. Sci. 2021, 64, 121301.
7. Lu, D.; Weng, Q. A survey of image classification methods and techniques for improving classification performance. Int. J. Remote Sens. 2007, 28, 823–870.
8. Sicre, C.M.; Fieuzal, R.; Baup, F. Contribution of multispectral (optical and radar) satellite images to the classification of agricultural surfaces. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101972.
9. Zhang, C.; Chen, Y.; Yang, X.; Gao, S.; Li, F.; Kong, A.; Zu, D.; Sun, L. Improved Remote Sensing Image Classification Based on Multi-Scale Feature Fusion. Remote Sens. 2020, 12, 213.
10. Subirats, L.; Ceccaroni, L. Real-Time Pedestrian Detection with Deep Network Cascades; Springer: Berlin/Heidelberg, Germany, 2017; Volume 7094, pp. 549–559.
11. Van Der Woude, L.; De Groot, S.; Postema, K.; Bussmann, J.; Janssen, T.; Post, M. Classifying spatial trajectories using representation learning. Int. J. Data Sci. Anal. 2016, 35, 1097–1103.
12. Tomè, D.; Monti, F.; Baroffio, L.; Bondi, L.; Tagliasacchi, M.; Tubaro, S. Deep Convolutional Neural Networks for pedestrian detection. Signal Process. Image Commun. 2016, 47, 482–489.
13. Hassanin, M.; Moustafa, N.; Tahtali, M.; Choo, K.K.R. Rethinking maximum-margin softmax for adversarial robustness. Comput. Secur. 2022, 116, 102640.
14. Hassanin, M.; Khan, S.; Tahtali, M. Visual affordance and function understanding: A survey. ACM Comput. Surv. 2021, 54, 1–35.
15. Hassanin, M.; Anwar, S.; Radwan, I.; Khan, F.S.; Mian, A. Visual Attention Methods in Deep Learning: An In-Depth Survey. arXiv 2022, arXiv:2204.07756.
16. Hu, Q.; Zhen, L.; Mao, Y.; Zhou, X.; Zhou, G. Automated building extraction using satellite remote sensing imagery. Autom. Constr. 2021, 123, 103509.
17. Krueger, H.; Noonan, V.; Williams, D.; Trenaman, L.; Rivers, C. EuroSAT: A Novel Dataset and Deep Learning Benchmark for Land Use and Land Cover Classification. Appl. Earth Obs. Remote Sens. 2017, 51, 260–266.
18. Cheng, X.; Zhang, R.; Zhou, J.; Xu, W. DeepTransport: Learning Spatial-Temporal Dependency for Traffic Condition Forecasting. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.
19. Venter, Z.S.; Barton, D.N.; Chakraborty, T.; Simensen, T.; Singh, G. Global 10 m Land Use Land Cover Datasets: A Comparison of Dynamic World, World Cover and Esri Land Cover. Remote Sens. 2022, 14, 4101.
20. Sertel, E.; Ekim, B.; Ettehadi Osgouei, P.; Kabadayi, M.E. Land Use and Land Cover Mapping Using Deep Learning Based Segmentation Approaches and VHR Worldview-3 Images. Remote Sens. 2022, 14, 4558.
21. Ali, K.; Johnson, B.A. Land-Use and Land-Cover Classification in Semi-Arid Areas from Medium-Resolution Remote-Sensing Imagery: A Deep Learning Approach. Sensors 2022, 22, 8750.
22. Rousset, G.; Despinoy, M.; Schindler, K.; Mangeas, M. Assessment of deep learning techniques for land use land cover classification in southern New Caledonia. Remote Sens. 2021, 13, 2257.
23. Liu, D.; Chen, J.; Wu, G.; Duan, H. SVM-Based Remote Sensing Image Classification and Monitoring of Lijiang Chenghai. In Proceedings of the International Conference on Remote Sensing, Environment and Transportation Engineering, Nanjing, China, 1–3 June 2012; pp. 1–4.
24. Tian, S.; Zhang, X.; Tian, J.; Sun, Q. Random Forest Classification of Wetland Landcovers from Multi-Sensor Data in the Arid Region of Xinjiang. J. Remote Sens. 2016, 8, 954.
25. Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. J. Photogramm. Remote Sens. 2016, 114, 24–31.
26. Li, F.; Zhu, Q.; Riley, W.J.; Zhao, L.; Xu, L.; Yuan, K.; Randerson, J.T. AttentionFire_v1.0: Interpretable machine learning fire model for burned-area predictions over tropics. Geosci. Model Dev. 2023, 16, 869–884.
27. Shih, H.C.; Stow, D.A.; Tsai, Y.H. Guidance on and comparison of machine learning classifiers for Landsat-based land cover and land use mapping. Int. J. Remote Sens. 2019, 40, 1248–1274.
28. Jiang, L.; Wang, W.; Yang, X.; Xie, N.; Cheng, Y. Classification Methods of Remote Sensing Image Based on Decision Tree Technologies. In Proceedings of the Computer and Computing Technologies in Agriculture IV: 4th IFIP TC 12 Conference, CCTA 2010, Nanchang, China, 22–25 October 2010; Li, D., Liu, Y., Chen, Y., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 344, pp. 353–358.
29. Talukdar, S.; Singha, P.; Mahato, S.; Shahfahad Pal, S.; Liou, Y.-A. Land-use land-cover classification by machine learning classifiers for satellite observations—A review. Remote Sens. 2020, 12, 1135.
30. Schraik, D.; Varvia, P.; Korhonen, L.; Rautiainen, M. Bayesian inversion of a forest reflectance model using Sentinel-2 and Landsat 8 satellite images. J. Quant. Spectrosc. Radiat. Transf. 2019, 33, 1–12.
31. Kross, A.; Znoj, E.; Callegari, D.; Kaur, G.; Sunohara, M.; Lapen, D.; McNairn, H. Using Artificial Neural Networks and Remotely Sensed Data to Evaluate the Relative Importance of Variables for Prediction of Within-Field Corn and Soybean Yields. Remote Sens. 2020, 12, 2230.
32. Chen, W.; Pourghasemi, H.R.; Kornejady, A.; Zhang, N. Landslide spatial modeling: Introducing new ensembles of ANN, MaxEnt, and SVM machine learning techniques. Geoderma 2017, 305, 314–327.
33. Castillo-Santiago, M.Á.; Mondragón-Vázquez, E.; Domínguez-Vera, R. Sample Data for Thematic Accuracy Assessment in QGIS. In Land Use Cover Datasets and Validation Tools; García-Álvarez, D., Camacho Olmedo, M.T., Paegelow, M., Mas, J.F., Eds.; Springer: Cham, Switzerland, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).