Running a Sustainable Social Media Business: The Use of Deep Learning Methods in Online-Comment Short Texts

Abstract

1. Introduction

2. Method

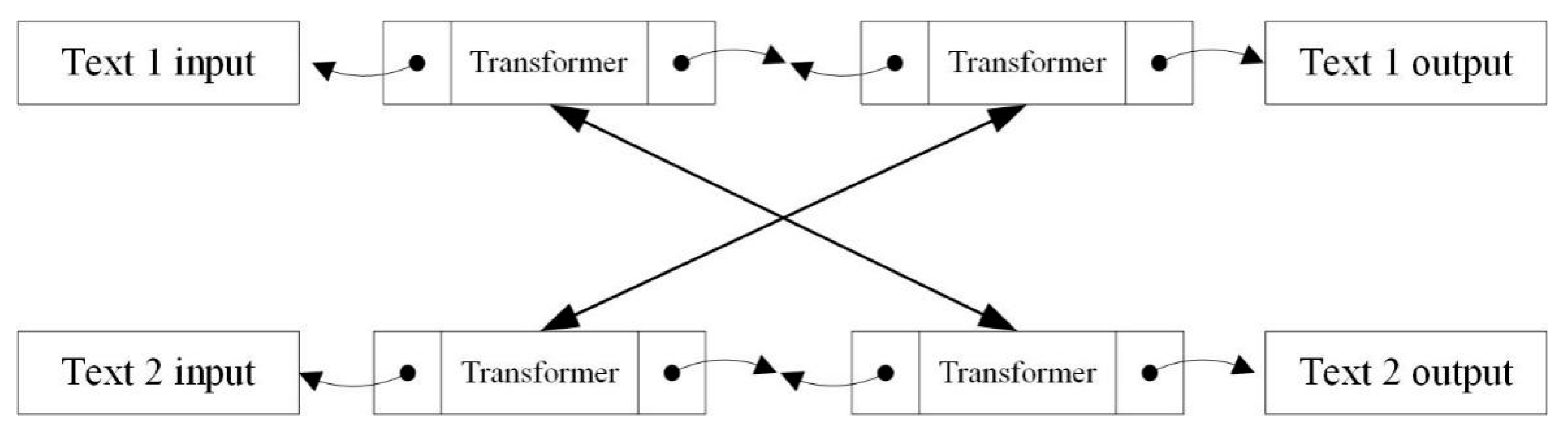

2.1. The BERT Model

2.2. CNN (Convolutional Neural Network)

2.3. Recurrent Neural Network

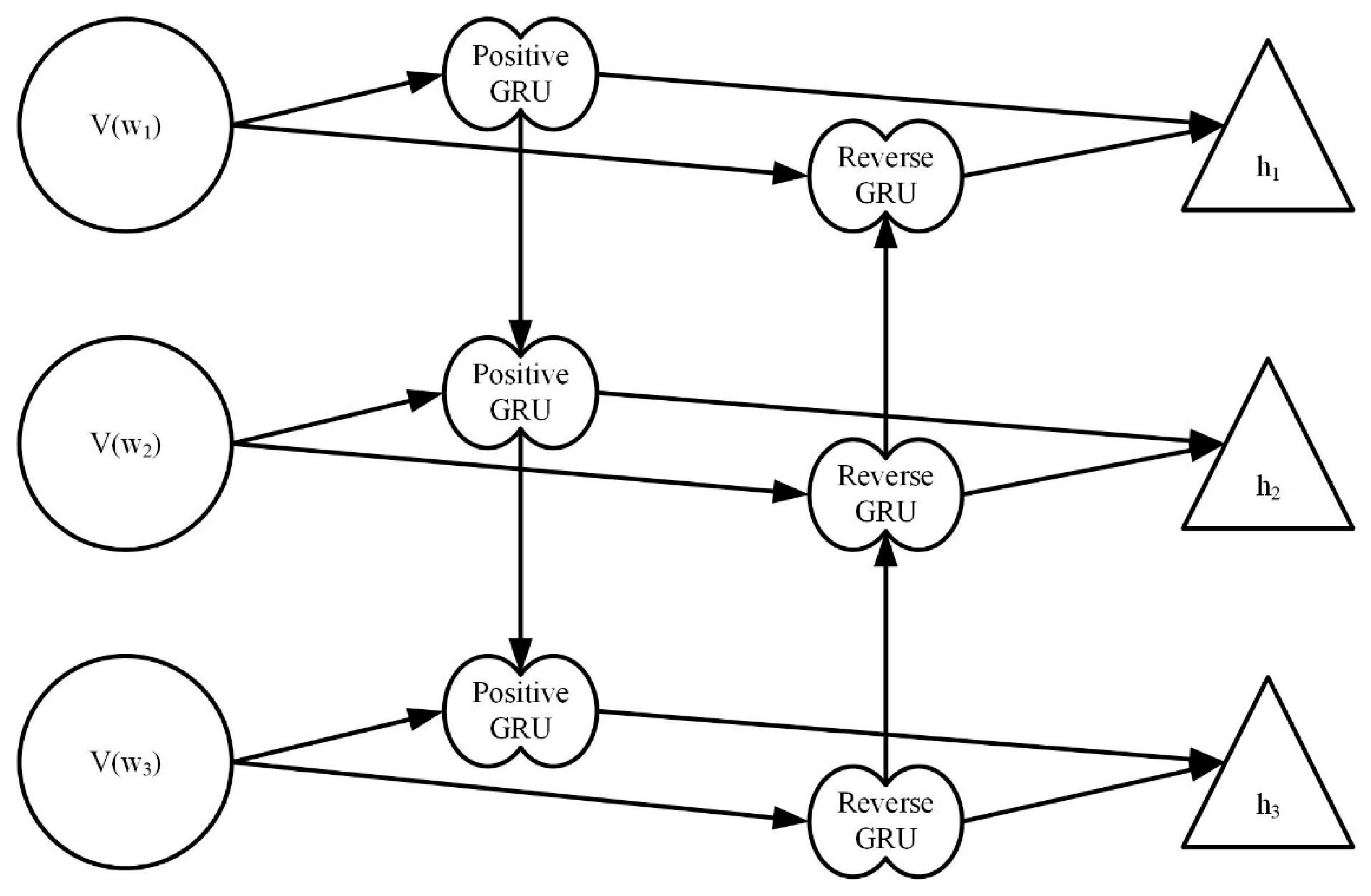

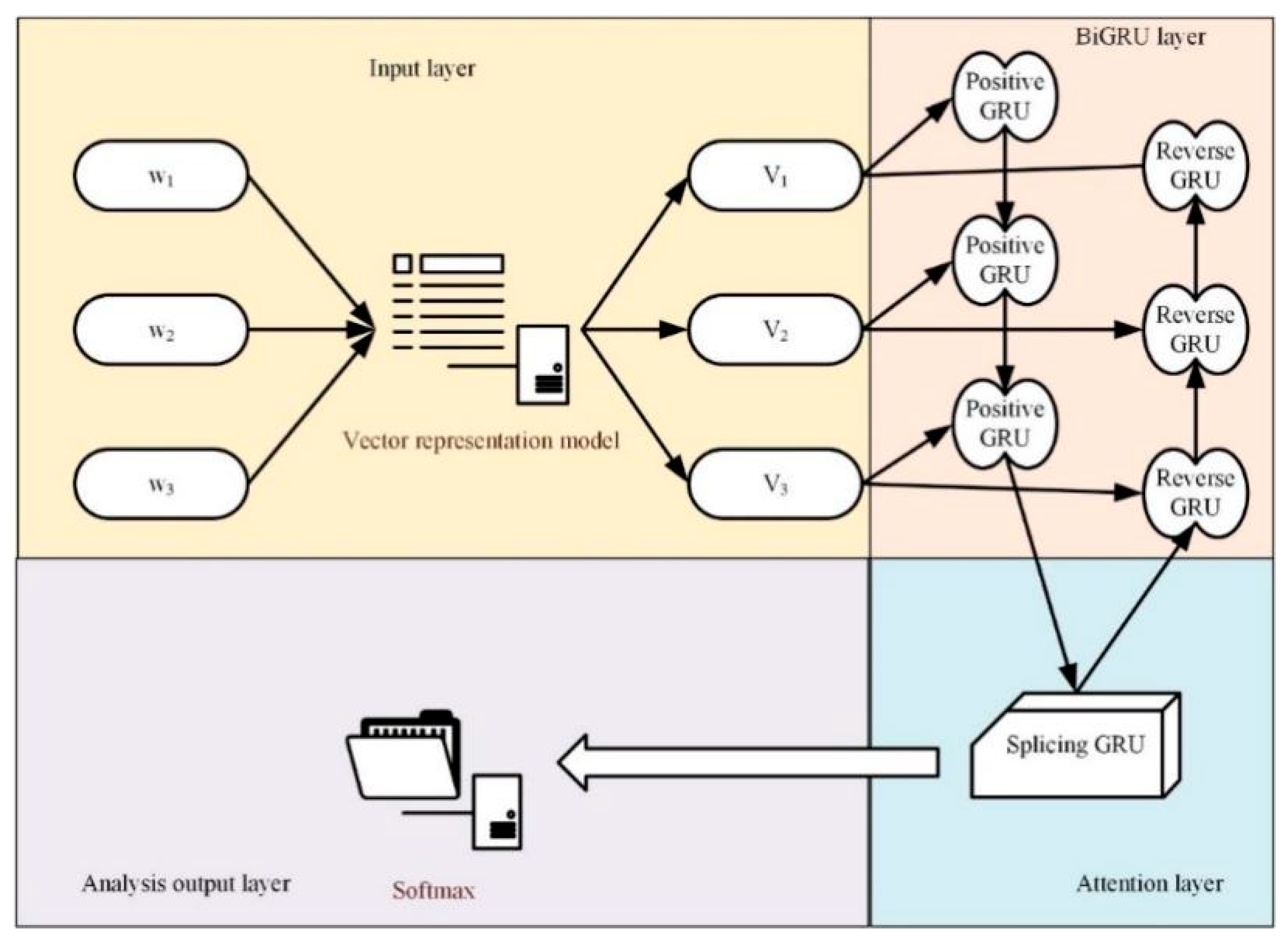

2.4. The BiGRU Network Model

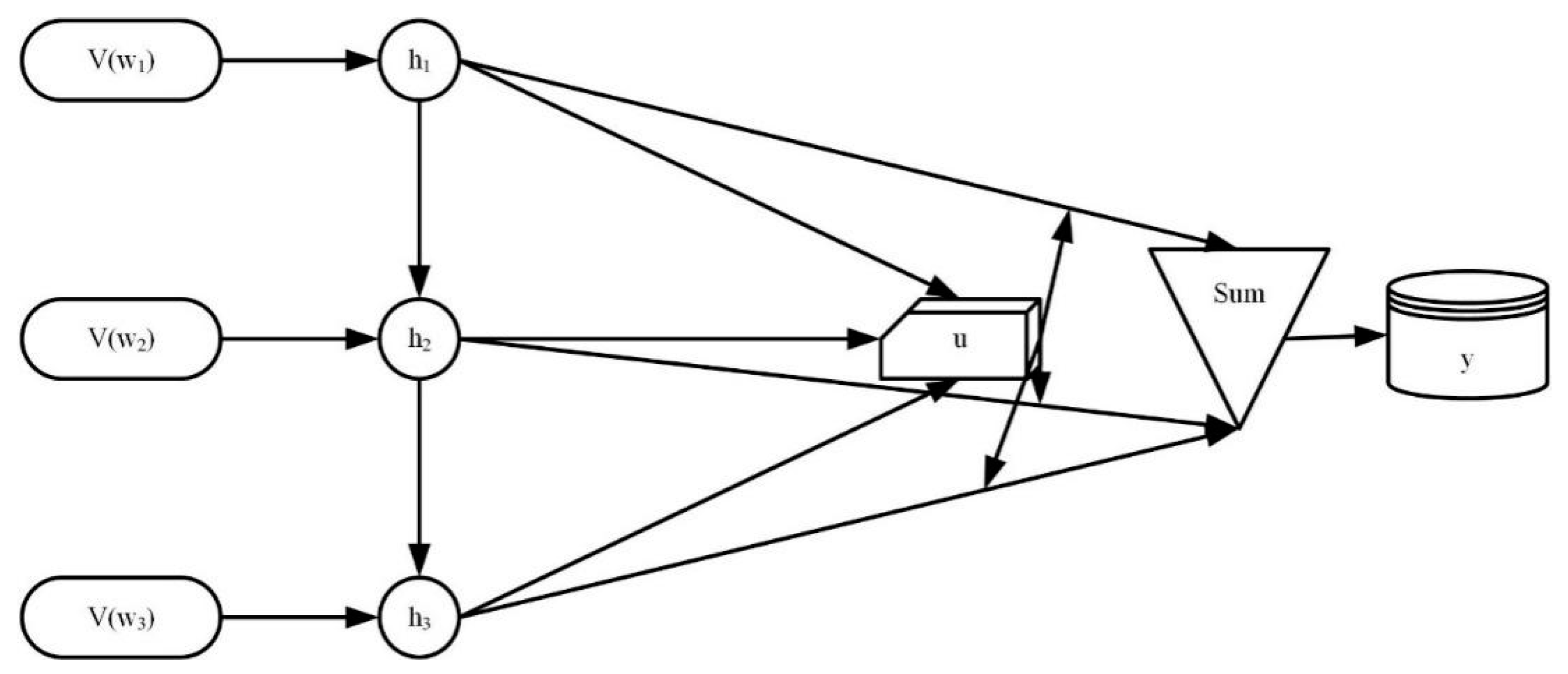
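For reference, a BiGRU runs a gated recurrent unit over the sequence in each direction and concatenates the two hidden states at every step. One common formulation of the GRU cell is shown below. The gate convention (whether $z_t$ weights the new or the previous state) varies between sources, and the symbols are assumptions rather than the authors' notation.

$$
\begin{aligned}
z_t &= \sigma\left(W_z x_t + U_z h_{t-1} + b_z\right) \\
r_t &= \sigma\left(W_r x_t + U_r h_{t-1} + b_r\right) \\
\tilde{h}_t &= \tanh\left(W_h x_t + U_h \left(r_t \odot h_{t-1}\right) + b_h\right) \\
h_t &= \left(1 - z_t\right) \odot h_{t-1} + z_t \odot \tilde{h}_t \\
h_t^{\mathrm{BiGRU}} &= \left[\overrightarrow{h}_t \,;\, \overleftarrow{h}_t\right]
\end{aligned}
$$

Here $\sigma$ is the logistic sigmoid, $\odot$ is element-wise multiplication, $z_t$ and $r_t$ are the update and reset gates, and $\overrightarrow{h}_t$ and $\overleftarrow{h}_t$ are the hidden states of the forward and backward GRUs at step $t$.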

2.5. The Attention Mechanism

2.6. The Vector Representation Model of Online-Comment Short Texts

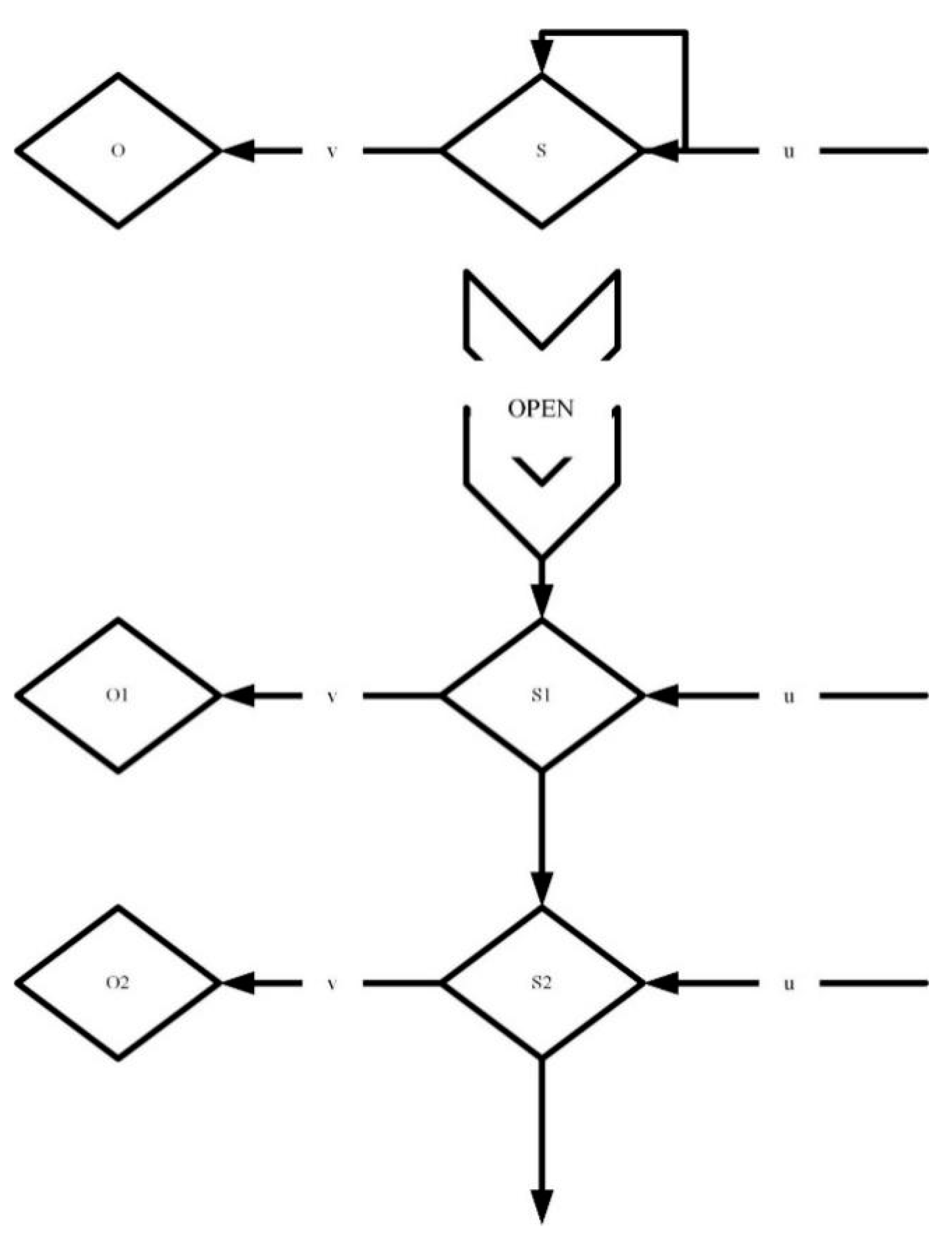

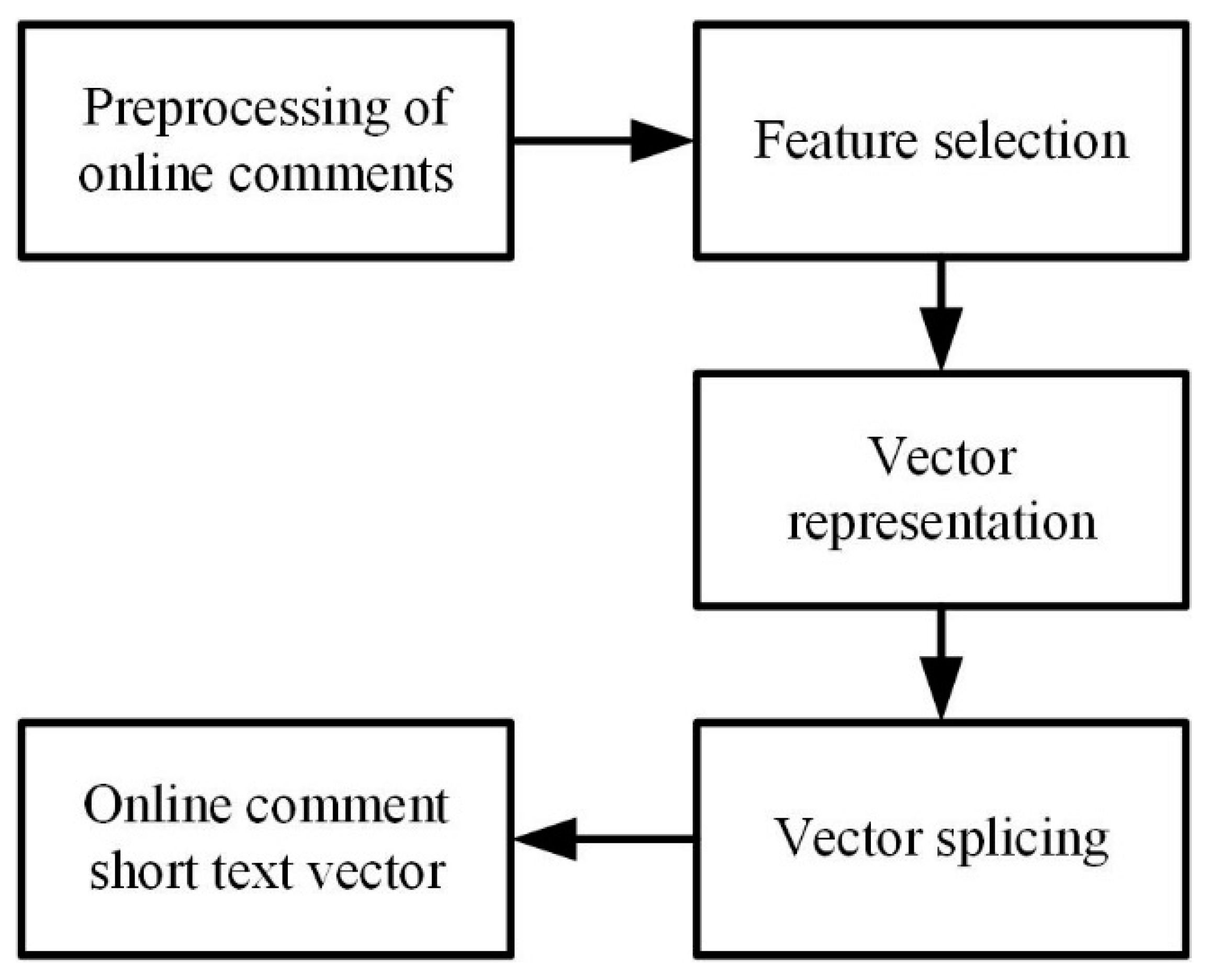

2.6.1. Construction of the Vector Representation Model

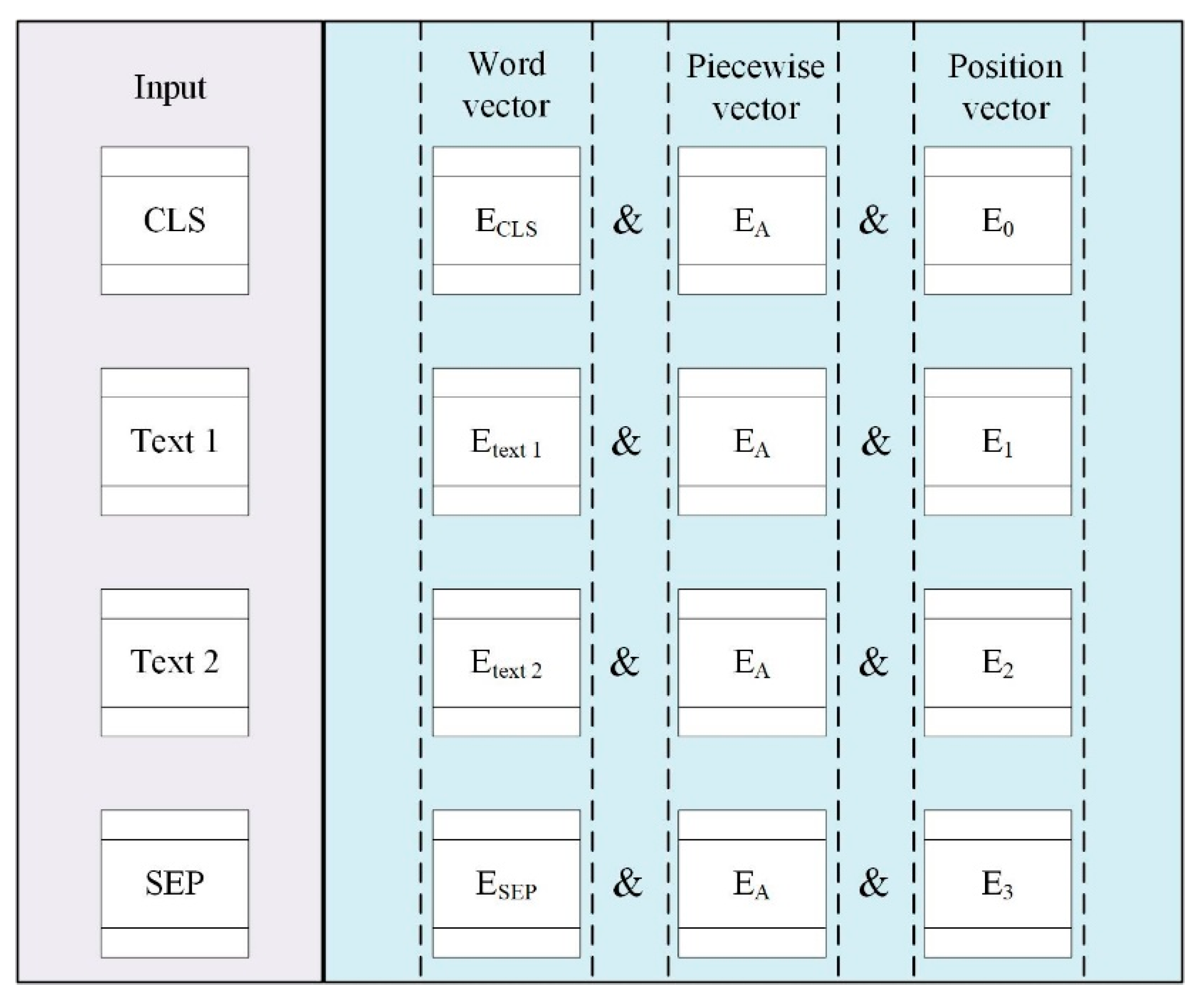

2.6.2. The Vector Representation Model of Short Texts Based on the BERT Model

2.6.3. Evaluation Indicators

2.7. The Sentiment Analysis Model Based on the BiGRU-Att Model

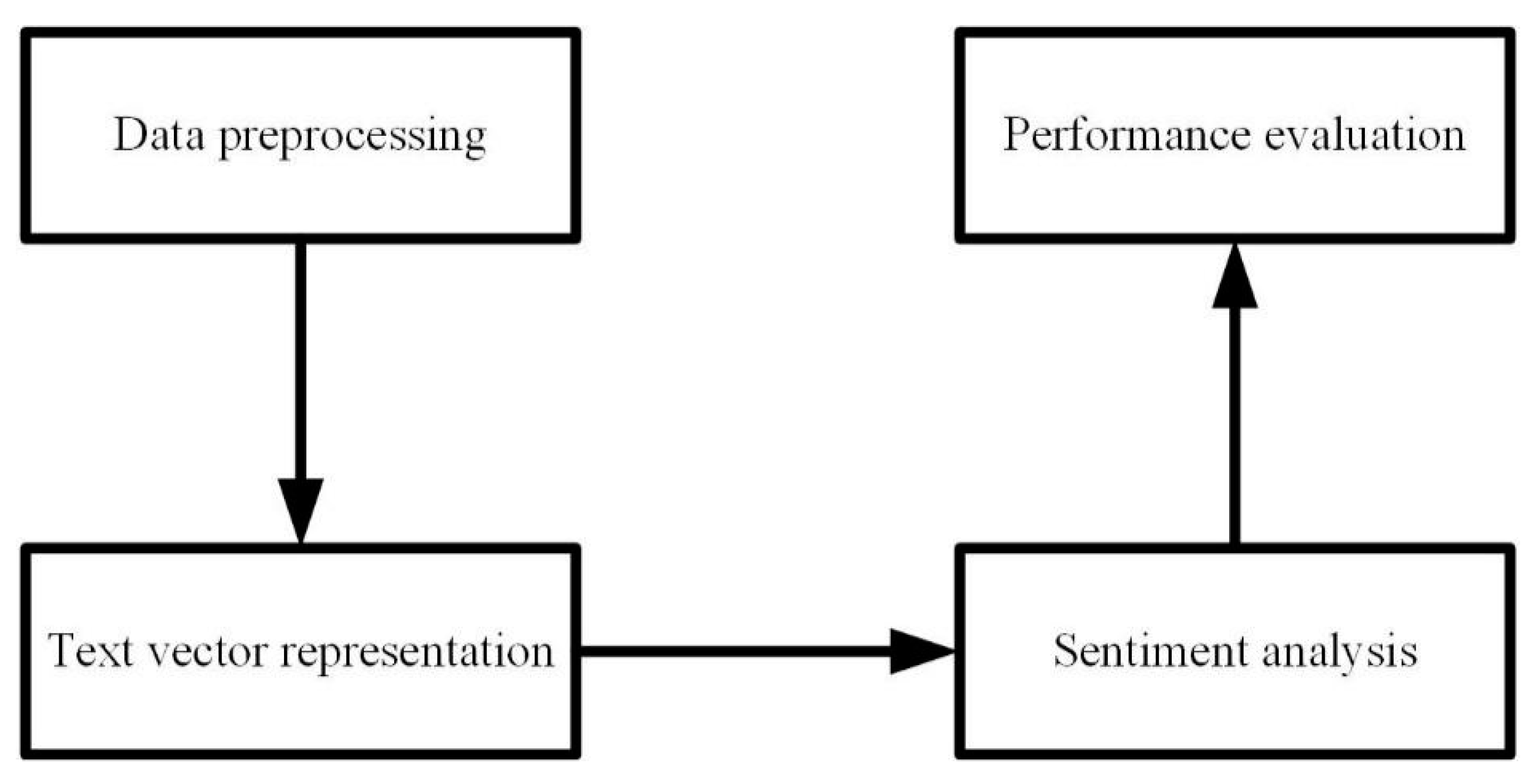

2.7.1. The Construction of the Sentiment Analysis Model Based on the BiGRU-Att Model

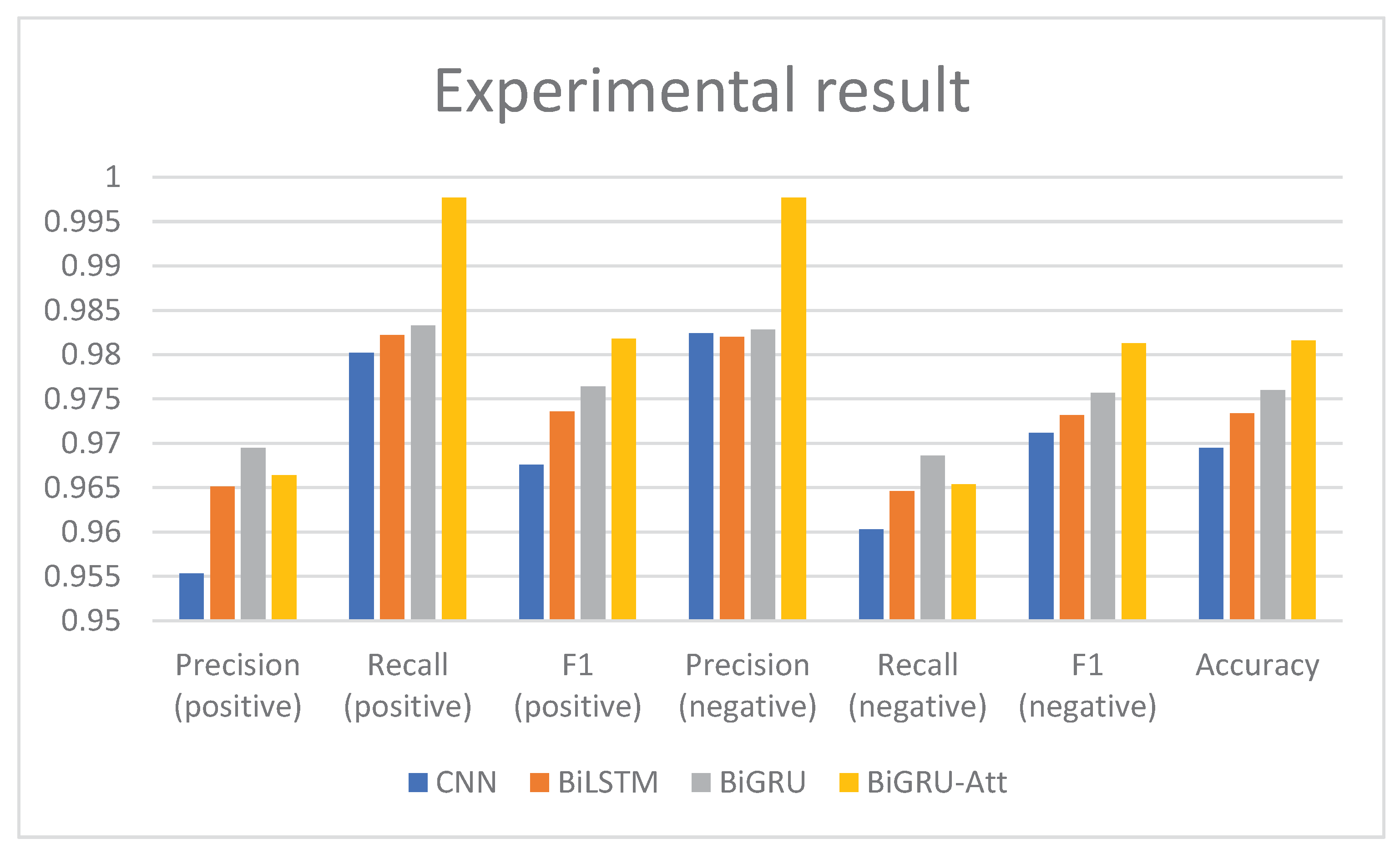

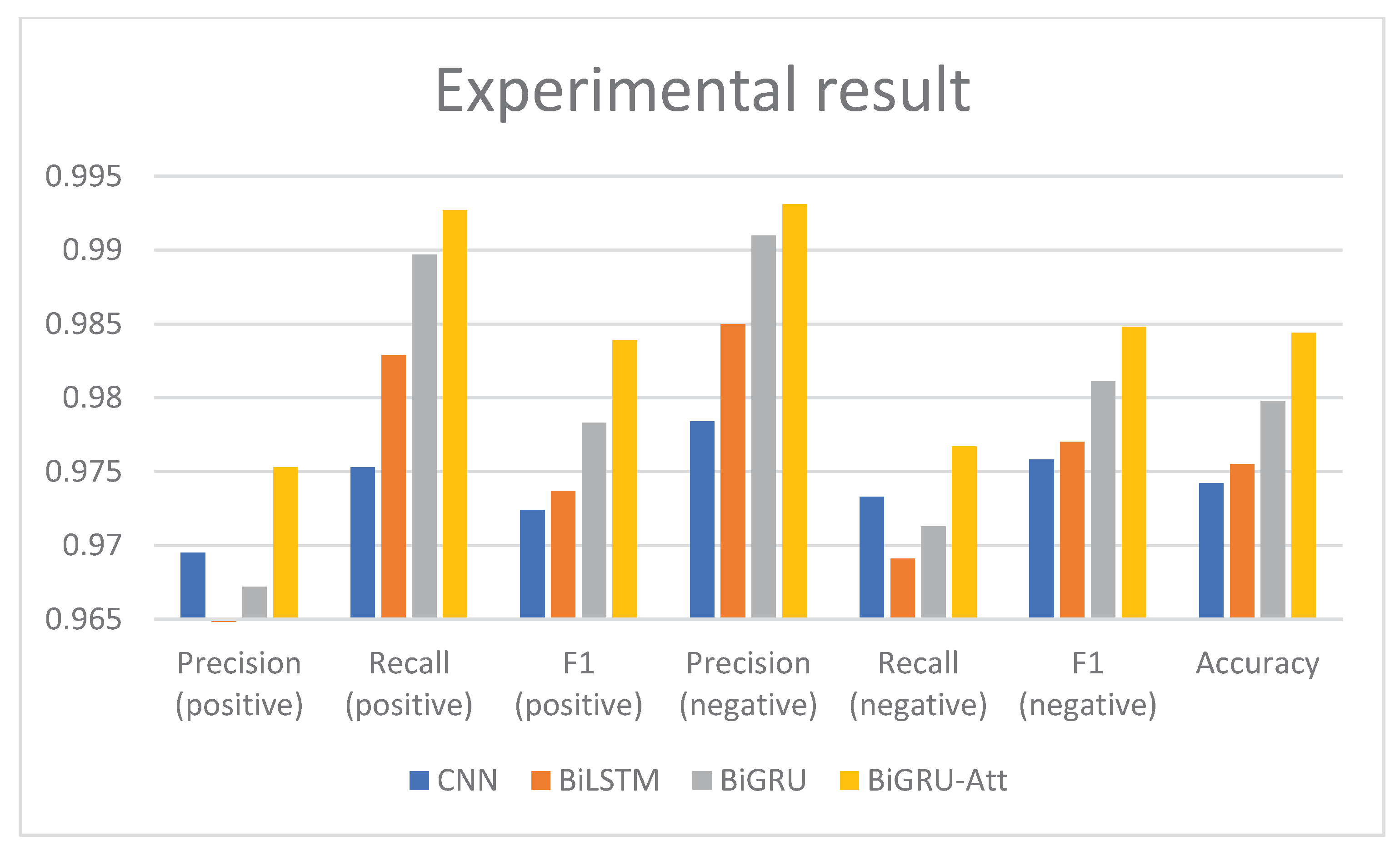

2.7.2. Comparative Experiments

- The CNN sentiment analysis model.

- The BiLSTM (bidirectional long short-term memory) sentiment analysis model. In the comparative experiment, the BiLSTM-based sentiment analysis model uses 100 hidden neurons and the tanh activation function.

- The BiGRU sentiment analysis model. This baseline is the BiGRU-Att model with the attention layer removed: the outputs of the BiGRU layer are connected directly to the sentiment analysis output layer.
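The difference between the BiGRU-Att model and the BiGRU baseline in the last bullet can be illustrated with a minimal pure-Python sketch. It assumes additive (tanh-scored) attention pooling over the per-step BiGRU outputs, which is a common choice but is not confirmed here as the authors' formulation. The parameters `W`, `b`, and `u`, the toy numbers, and the use of the final time step for the no-attention baseline are all hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Return the matrix-vector product m @ v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(states: List[Vector], W: Matrix, b: Vector,
                   u: Vector) -> Tuple[Vector, Vector]:
    """Additive attention over per-step BiGRU outputs h_t (assumed form):
    score_t = u . tanh(W h_t + b), alpha = softmax(scores),
    context = sum_t alpha_t * h_t. Returns (context, alpha)."""
    scores = []
    for h in states:
        projected = [math.tanh(z + bias) for z, bias in zip(matvec(W, h), b)]
        scores.append(sum(ui * pi for ui, pi in zip(u, projected)))
    alpha = softmax(scores)
    dim = len(states[0])
    context = [sum(a * h[d] for a, h in zip(alpha, states)) for d in range(dim)]
    return context, alpha


def no_attention_pool(states: List[Vector]) -> Vector:
    """Ablated baseline with no attention layer. Hypothetical choice: pass the
    last time step's BiGRU output straight to the output layer (some models
    concatenate the final forward and backward states instead)."""
    return list(states[-1])


# Toy example: three time steps of 2-dimensional BiGRU outputs.
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical attention projection
b = [0.0, 0.0]                # hypothetical attention bias
u = [1.0, 1.0]                # hypothetical context vector
context, alpha = attention_pool(states, W, b, u)
baseline = no_attention_pool(states)
print("attention weights:", [round(a, 3) for a in alpha])
print("attention context:", [round(c, 3) for c in context])
print("no-attention vector:", baseline)
```

With attention, each time step receives a weight (the third step scores highest in this toy input), and the weighted sum feeds the output layer; the ablated baseline has no such weighting. In a trained model, `W`, `b`, and `u` would be learned jointly with the BiGRU.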

3. Results and Discussion

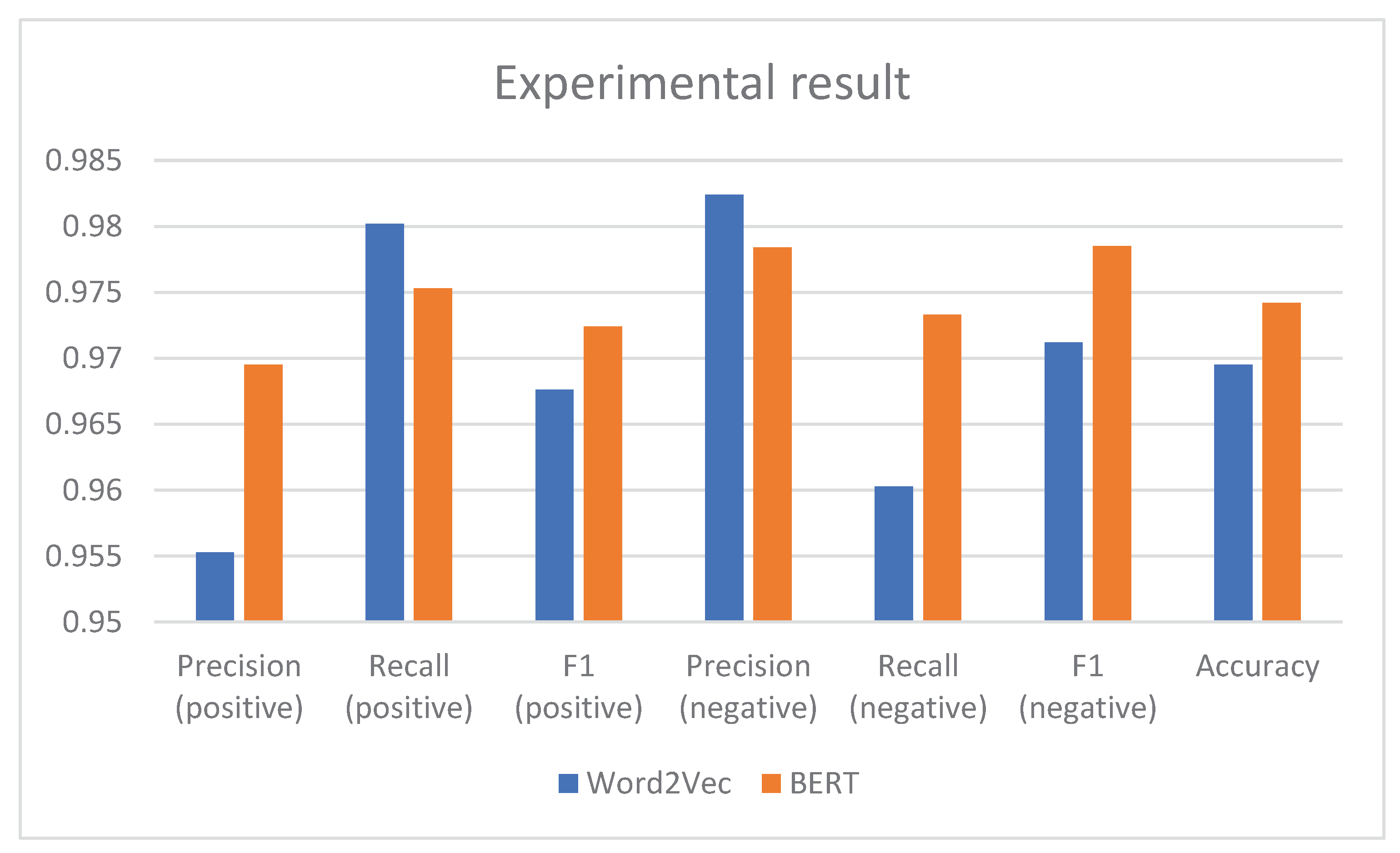

3.1. Experimental Results and Analysis of the Short-Text Vector Representation Model Based on the BERT Model

3.2. Experimental Results and Analysis of Sentiment Analysis Model Based on the BiGRU-Att Model

4. Conclusions

5. Research Limitations and Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Indicator | Meaning |
|---|---|
| TP | The prediction and the actual label are both positive |
| FP | The prediction is positive, but the actual label is negative |
| TN | The prediction and the actual label are both negative |
| FN | The prediction is negative, but the actual label is positive |
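The counts in the table above are usually combined into accuracy, precision, recall, and F1 score. The formulas are not given in this part of the text, so the sketch below assumes the standard definitions for a binary positive/negative task; the function names and toy labels are hypothetical.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count TP, FP, TN and FN, treating 1 as positive and 0 as negative."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for actual, predicted in zip(y_true, y_pred):
        if actual not in (0, 1) or predicted not in (0, 1):
            raise ValueError("labels must be 0 (negative) or 1 (positive)")
        if predicted == 1:
            counts["TP" if actual == 1 else "FP"] += 1
        else:
            counts["TN" if actual == 0 else "FN"] += 1
    return counts


def safe_div(num: float, den: float) -> float:
    """Division that returns 0.0 instead of raising when den is 0."""
    return num / den if den else 0.0


def evaluation_metrics(counts: Dict[str, int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    tp, fp, tn, fn = counts["TP"], counts["FP"], counts["TN"], counts["FN"]
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


# Toy labels (1 = positive comment, 0 = negative comment).
y_true = [1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0]
counts = confusion_counts(y_true, y_pred)
scores = evaluation_metrics(counts)
print(counts)
print({name: round(value, 3) for name, value in scores.items()})
```

Returning 0.0 when a denominator is zero is a convention chosen for this sketch; some libraries instead emit a warning or let the caller choose the fallback value.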

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, W.; Zhang, Q.; Wu, Y.J.; Chen, T.-C. Running a Sustainable Social Media Business: The Use of Deep Learning Methods in Online-Comment Short Texts. Sustainability 2023, 15, 9093. https://doi.org/10.3390/su15119093

Lin W, Zhang Q, Wu YJ, Chen T-C. Running a Sustainable Social Media Business: The Use of Deep Learning Methods in Online-Comment Short Texts. Sustainability. 2023; 15(11):9093. https://doi.org/10.3390/su15119093

Chicago/Turabian Style: Lin, Weibin, Qian Zhang, Yenchun Jim Wu, and Tsung-Chun Chen. 2023. "Running a Sustainable Social Media Business: The Use of Deep Learning Methods in Online-Comment Short Texts" Sustainability 15, no. 11: 9093. https://doi.org/10.3390/su15119093

APA Style: Lin, W., Zhang, Q., Wu, Y. J., & Chen, T.-C. (2023). Running a Sustainable Social Media Business: The Use of Deep Learning Methods in Online-Comment Short Texts. Sustainability, 15(11), 9093. https://doi.org/10.3390/su15119093