Abstract

Metrics are sets of numbers used to obtain information about the operation of a process or system. In our case, metrics are used to assess the level of information security of information and communication infrastructure facilities. Metrics in the field of information security quantify the possibility of damage due to unauthorized hacking of an information system, which makes it possible to assess the cyber sustainability of the system. The purpose of the paper is to improve information security metrics using multicriteria decision-making (MCDM) methods. This is achieved by proposing aggregated information security metrics and evaluating the effectiveness of their application. Classical information security metrics consist of a single quantity or variable. We obtain a total value by combining at least two different metrics, each with a weighting factor that determines its importance. This is what we call aggregated or multicriteria metrics of information security. Consequently, MCDM methods are applied to compile aggregated metrics of information security. These are derived from expert judgement and are proposed for three management domains of the ISO/IEC 27001 information security standard. The proposed methods for improving cyber sustainability metrics are also relevant to information security metrics. Using the AHP, WASPAS and Fuzzy TOPSIS methods, the weights of classical metrics are calculated and three aggregated metrics are proposed. To confirm that information security metrics have been improved, a verification experiment is conducted in which aggregated and classical information security metrics are compared. The experiment shows that using aggregated metrics can be more convenient and faster and that higher intelligibility is also achieved.

1. Introduction

Information security in the field of informatization (hereinafter, information security) refers to the state of security of electronic information resources, information systems and information and communication infrastructure from external and internal threats [1]. According to the concept of the CIA triad, the main tasks of information security are to ensure the confidentiality, integrity and availability of information–communication infrastructure objects [2].

Most organizations in the world cannot function without information security measures. Working in certain areas, such as the financial sector, requires compliance with various standards and requirements related to information security. Companies from other areas, such as energy, medicine, logistics and national defense, are no exception. To ensure information security, organizations are forced to allocate resources for this. The planning and operation of resources are mainly taken care of by the chief information security officer (CISO). Therefore, the CISO forms the security program and is responsible for its implementation. It is necessary to carry out measurements to verify the effectiveness of the applied program; such measurements are called metrics of information security. Metrics allow one to assess the security situation and, in the long term, understand the effectiveness of the information security program in place. The use of metrics is also determined by the international information security management standard ISO/IEC 27004 [3].

Moreover, cyber sustainability is defined as the ability to continuously achieve the expected results despite negative cybersecurity events [4]. Information security indicators partially include the area of attack sustainability metrics [5]. When choosing cyber sustainability metrics, it is proposed to evaluate a variety of criteria. For a metric to be suitable for selection, it must, first of all, be possible to evaluate it. It should also be possible to quickly extract the data needed to compile the metric. The benefits provided by each metric should exceed the costs incurred in collecting and compiling it. Metrics must meet further requirements as well. The criteria for selecting metrics should be ordered by priority, which may vary depending on the needs of a particular organization. A specification of metrics is proposed for assessing cyber sustainability metrics. It allows one to evaluate how a specific metric can be used, what values the metric can take and what information a specific numeric value gives [6].

The effectiveness of security programs is usually measured by metrics. Metrics are crucial because they provide direct information about the organization’s information security situation. Using metrics effectively can solve organizations’ security problems [7]. This, accordingly, will reduce the number of crimes in cyberspace [8]. This work aims to improve metrics, which can significantly contribute to improving the organization’s position in the security field [9]. The topic is new and relevant since organizations’ use of information security metrics is not yet a well-established process. The scientific community needs to systematize the metrics currently in use, select the best ones, improve existing ones or offer new, aggregated ones. The novelty of the research is the application of a new approach to improving information security metrics through the use of multicriteria decision-making methods. Moreover, it is revealed that aggregated metrics built with multicriteria decision-making methods have not previously been used in the field of information security.

In accordance with the current international information security management system standard ISO/IEC 27001, information security metrics are mandatory [10]. It is worth noting that the standard identifies 14 areas of information security management [11]. Consequently, a certain number of classical metrics are allocated for each of these areas. In general, metrics in information security are used to measure and evaluate the effectiveness of the system under study but the application of classical metrics in information security requires a lot of time. In turn, to solve this problem, we propose to build aggregated information security metrics based on classical metrics.

This article describes the application of multicriteria decision-making (MCDM) methods to improve information security metrics. The goal is to evaluate the current classical information security metrics and propose new aggregated metrics. Aggregated metrics provide a generalized view of the information security situation of the organization. Their use makes it possible to understand the current security situation more effectively and reduces the resources allocated for monitoring. The improvement is achieved by offering aggregated information security metrics and evaluating the effectiveness of their application.

The article [12] analyzes MCDM methods by application area and indicates the top 25 areas where these methods are used. According to the research data, the largest number of articles relates to the fields of computer science and artificial intelligence. The authors of [12] also indicate that one of the disadvantages of the AHP method is that it requires a large number of inputs. However, in our suggested approach, we use the AHP method without fuzzy sets to avoid requiring a large number of inputs. Consequently, while the AHP method serves primarily to rank the criteria based on the weights, the TOPSIS method is applied in combination with fuzzy theory in order to evaluate the criteria and select an alternative [12]. A review of the MCDM methods used in assessing the sustainability of a system is given in [13], from which it can be concluded that the AHP method takes the leading position in the number of applications. Aggregation techniques have a great effect on MCDM problems and aggregation operators have been broadly applied to MCDM.

Additionally, in the paper [14], the literature was reviewed for the recent developments in TOPSIS. This article provided a detailed analysis of the methods TOPSIS and Fuzzy TOPSIS for solving many different selection/ranking problems. Based on the research in the paper [14], it was decided to use the Fuzzy TOPSIS method because of its ability to deal with different types of values: crisp, interval, fuzzy or linguistic. The F-TOPSIS algorithm allows all the data to be transformed into a common domain of triangular fuzzy numbers and perform all calculations required using fuzzy arithmetic [14].
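As a rough illustration of the idea described in [14], the following Python sketch maps linguistic ratings to triangular fuzzy numbers and ranks alternatives by their closeness coefficient. The linguistic scale, the function name `fuzzy_topsis`, the fixed ideal solutions (1, 1, 1) and (0, 0, 0), the vertex distance and the restriction to benefit criteria are assumptions made for this example; they are not details taken from this study.

```python
import numpy as np

# Hypothetical linguistic scale mapped to triangular fuzzy numbers (l, m, u).
SCALE = {
    "low": (1.0, 3.0, 5.0),
    "medium": (3.0, 5.0, 7.0),
    "high": (5.0, 7.0, 9.0),
}


def fuzzy_topsis(ratings, weights):
    """Simplified Fuzzy TOPSIS for benefit criteria only.

    ratings: shape (m, n, 3), one triangular fuzzy number per
             alternative (row) and criterion (column).
    weights: shape (n, 3), one triangular fuzzy number in [0, 1]
             per criterion.
    Returns the closeness coefficient of each alternative
    (a larger value means closer to the ideal solution).
    """
    r = np.asarray(ratings, dtype=float)
    w = np.asarray(weights, dtype=float)

    # Normalize every criterion by its largest upper bound.
    u_max = r[:, :, 2].max(axis=0)
    norm = r / u_max[None, :, None]

    # Weighted normalized fuzzy decision matrix (component-wise product).
    v = norm * w[None, :, :]

    # Vertex distance to the assumed ideal (1, 1, 1) and anti-ideal (0, 0, 0).
    d_pos = np.sqrt(((v - 1.0) ** 2).mean(axis=2)).sum(axis=1)
    d_neg = np.sqrt((v ** 2).mean(axis=2)).sum(axis=1)

    return d_neg / (d_pos + d_neg)


# Illustrative input: two alternatives rated on two criteria.
ratings = [
    [SCALE["high"], SCALE["medium"]],
    [SCALE["low"], SCALE["medium"]],
]
weights = [(0.5, 0.7, 0.9), (0.3, 0.5, 0.7)]
print(fuzzy_topsis(ratings, weights))
```

Keeping the ratings as an array of shape (m, n, 3) lets all fuzzy arithmetic run component-wise, which mirrors the "common domain of triangular fuzzy numbers" mentioned above.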

The WASPAS method is an MCDM method which integrates the weighted sum model (WSM) and weighted product model (WPM) for decision-making process [15]. WSM and WPM are particularly useful to assess alternatives based on different criteria [16]. WASPAS is based on a weighted linear combination of WSM and WPM for ranking alternatives [17]. In this study, we use linear normalization; in this regard, the classical WASPAS method is applicable.
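The combination of WSM and WPM can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function name `waspas_scores`, the balance parameter `lam` with a default of 0.5 and the sample data are hypothetical, and all input values are assumed to be strictly positive.

```python
import numpy as np


def waspas_scores(matrix, weights, benefit, lam=0.5):
    """Return the joint WASPAS score of each alternative (row).

    matrix:  shape (m, n), strictly positive criterion values.
    weights: length n, criterion weights (rescaled to sum to 1).
    benefit: length n, True for benefit criteria, False for cost criteria.
    lam:     balance between WSM (lam = 1) and WPM (lam = 0).
    """
    x = np.asarray(matrix, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    is_benefit = np.asarray(benefit, dtype=bool)

    # Linear normalization: x / max for benefit, min / x for cost criteria.
    norm = np.where(is_benefit, x / x.max(axis=0), x.min(axis=0) / x)

    wsm = (norm * w).sum(axis=1)       # weighted sum model
    wpm = np.prod(norm ** w, axis=1)   # weighted product model
    return lam * wsm + (1.0 - lam) * wpm


# Illustrative data: three alternatives, two benefit criteria, one cost criterion.
scores = waspas_scores(
    [[7.0, 8.0, 3.0], [9.0, 6.0, 5.0], [6.0, 9.0, 2.0]],
    weights=[0.5, 0.3, 0.2],
    benefit=[True, True, False],
)
print(scores.argsort()[::-1])  # alternative indices from best to worst
```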

Section 1 is an introduction to the article. Section 2 defines the concept of information security metrics and summarizes the quantitative approach in information security-related processes. It also provides a classification of security metrics and examples of them. Section 3 provides the classification of MCDM methods and presents a comparison of the advantages and disadvantages of various MCDM methods. Section 4 describes the application of the MCDM methods to create aggregated information security metrics. Expert evaluation is used to apply the method. Section 5 describes the expert evaluation group, the compilation of aggregated metrics and the verification of the reliability of the results.

2. Related Work Analysis on Information Security Metrics

Aggregated Metrics

Ensuring an organization’s information security is necessary because information security incidents occur constantly. A direct hack of an organization’s information systems causes huge damage and also leads to indirect damage in the form of a loss of reputation. Such incidents are rare. More often, the person responsible for security in the company (the CIO or CISO) feels insecure, realizing that the protection of the organization’s information may be compromised. It is the use of metrics that can resolve this situation. A properly configured metric system should diagnose the information security situation, ensure security, measure the effectiveness of applied measures and increase security awareness levels [18].

Metrics are sets of numbers that provide information about the operation of a process or system. Information security metrics are a set of interrelated dimensions that allow quantifying the possibility of harm as a result of unauthorized hacking of an information system [19].

Information security metrics are defined in scientific articles quite differently. The concepts and definitions of information security metrics used in scientific papers are given in Table 1.

Table 1.

Definitions of information security metrics as used in scientific papers [18].

The above definitions show that information security metrics are defined differently in different sources. Information security metrics can bring several advantages to an organization [18]:

- Understand security threats;

- Notice emerging problems with information security;

- Understand weaknesses in the security infrastructure;

- Measure the effectiveness of applied measures that are used to solve security problems;

- Disclose recommendations for the development of security technologies and processes.

In order for organizations to not face misunderstandings and difficulties, it is believed that metrics should be simple and understandable. Good metrics are those that meet the following criteria [18]:

- A certain parameter is constantly measured using the same methodology;

- Simple and inexpensive data collection;

- Expressed as a digit or percentage expression (“high”, “medium” or “low” is incorrect);

- Expressed by including certain standard units of measurement (for example time or monetary expression);

- Metrics should be specific, speak about a specific process and provide clear data to those who make organizational decisions.

Metrics that are always measured by the same methods allow one to draw conclusions and obtain the same results, regardless of the perception or individual assumptions of the person measuring. Obtaining metrics should be simple and inexpensive. Data collection can be either very simple or quite complex. To evaluate some metrics, it is enough to write one SQL query, while for others even countless phone calls may not be enough. One of the characteristics of a good metric is therefore that the data needed to calculate it are easy to extract. It is also important that metrics are clear and understandable, describe a specific process well and allow one to make specific decisions. As an example, the metric “average number of corporate attacks” is too abstract; in this case, the metric “average number of attacks on e-commerce servers” should be applied. Accurate metrics allow the adoption of more specific mechanisms that can solve the security problem [18].
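To make the "one SQL query" case concrete, the sketch below computes the average number of attacks per day on e-commerce servers from an event table. The table `attack_events`, its columns `event_date` and `target_group`, and the sample rows are hypothetical and serve only to exercise the query.

```python
import sqlite3


def avg_daily_attacks(conn, target_group):
    """Average number of recorded attacks per day for one server group.

    Only days with at least one recorded attack are counted.
    Returns None when no attack is recorded for the group.
    """
    row = conn.execute(
        """
        SELECT AVG(daily_count)
        FROM (
            SELECT COUNT(*) AS daily_count
            FROM attack_events
            WHERE target_group = ?
            GROUP BY event_date
        )
        """,
        (target_group,),
    ).fetchone()
    return row[0]


# Hypothetical in-memory data set.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE attack_events (event_date TEXT, target_group TEXT)")
conn.executemany(
    "INSERT INTO attack_events VALUES (?, ?)",
    [
        ("2024-01-01", "e-commerce"),
        ("2024-01-01", "e-commerce"),
        ("2024-01-01", "e-commerce"),
        ("2024-01-02", "e-commerce"),
        ("2024-01-02", "intranet"),
    ],
)
print(avg_daily_attacks(conn, "e-commerce"))
```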

We can create an effective metric system by knowing which parameters describe the quality of information security metrics. Such a system enables making decisions related to information security while considering numerical parameters. Currently, in some organizations, information security decisions are made only according to information security experts’ personal opinions and experience. Changing this would significantly improve the information security management process. For instance, in the paper [26], the task of identifying these parameters was given to 141 specialists from around the world who have a direct connection and working or scientific experience with information security metrics. The specialists had to choose the most important of 19 quality criteria describing information security metrics [26]. There were respondents from 21 countries: 61 (43%) from Finland, 20 (14%) from the U.S., 9 (6%) from Spain, 7 (5%) each from Italy and Germany and 5 (4%) each from Austria, Ireland and Norway. The remaining respondents were from Belgium, the Czech Republic, France, Greece, Hungary, India, Luxembourg, The Netherlands, Norway, Singapore, South Africa, Sweden and the U.K. This study was aimed at clarifying the main quality criteria that describe good performance. The results of the study showed that three main quality criteria are the most important, according to respondents. These are correctness, the ability to measure (or measurability) and significance [21]. The fourth-most important criterion is the ability to use metrics. Correctness means that the metric is applied correctly and does not give any misinformation; the metric is obtained without errors. Measurable metrics have a range of possible values, i.e., a clear value determined by numerical units. Meaningful metrics justify the needs that are presented to them and the ability to use them describes the practical benefits that the metric provides. This study gives information security professionals a clear idea of which criteria actually describe the quality of metrics [27].

Other key metrics characterizing quality criteria can also be found in scientific sources. These quality criteria are given in Table 2.

Table 2.

Highlights from the literature of the main criteria for the quality of information security metrics [18].

Security metrics should be divided into certain groups depending on their origin. One can distinguish such a classification of metrics as follows [18]:

- Perimeter protection. Defines the risks associated with potential security breaches of the organization that come from outside (firewalls, IDS, email, etc.; see the analysis of emails and the resistance of employees to social engineering attacks). Examples of this type of metric are given in Table 3;

Table 3. Table of information security metrics, based on [18].

- Scope and management area. Determines how well an organization succeeds in implementing an application security program, in other words, whether the factors provided for by the security program within the organization are being observed. While the CISO can apply a wide range of methods that improve information security parameters in a company, real endpoints (such as computers, servers, or employees) may not be available. Examples of this type of metric are given in Table 3;

- The availability of information and the stability of systems determine the metrics that characterize the operation of systems that generate profit for the organization (for example, metrics such as MTTR and MTBF). Examples of this type of metric are given in Table 3. Table 3 was compiled on the basis of the information provided in [18].
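For the availability metrics named in the last item, a minimal Python sketch shows how MTTR and MTBF could be computed from incident records. The function name `mttr_mtbf_hours`, the record layout and the sample period are assumptions for illustration; MTBF is taken here as operating time divided by the number of failures.

```python
from datetime import datetime, timedelta


def mttr_mtbf_hours(incidents, period_start, period_end):
    """Return (MTTR, MTBF) in hours for one observation period.

    incidents: list of (failure_time, restore_time) datetime pairs that
               lie inside the period and do not overlap.
    """
    if not incidents:
        raise ValueError("at least one incident is needed")

    downtime = sum(
        (restored - failed).total_seconds() for failed, restored in incidents
    )
    observed = (period_end - period_start).total_seconds()

    mttr = downtime / len(incidents) / 3600.0               # mean time to repair
    mtbf = (observed - downtime) / len(incidents) / 3600.0  # mean time between failures
    return mttr, mtbf


# Illustrative period of 100 hours with two outages.
start = datetime(2024, 1, 1)
end = start + timedelta(hours=100)
outages = [
    (start + timedelta(hours=10), start + timedelta(hours=11)),
    (start + timedelta(hours=50), start + timedelta(hours=53)),
]
print(mttr_mtbf_hours(outages, start, end))
```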

The information security metrics in Table 3 are among the most significant and widely used in the information security industry. Of course, other metrics are successfully used in various organizations that monitor information security processes. The concept of information security metrics can be equated with key performance metrics used in the field of business management [27].

This is not the only way to classify metrics. Other classifications of metrics are presented in other scientific papers. For example, by the type of input data, metrics are classified into those analyzing design and development, those analyzing performance processes and those analyzing support and updates. The design and development area describes and measures the effectiveness of the security engineering process and the development of information systems. The second area describes the specifics of systems that have already been created and are in operation. The area of support and updates describes the effectiveness of information security management and changes in running processes [22].

It is also possible to classify metrics according to information security management standards. For example, metrics can be classified under the ISO/IEC 27000 family based on the management areas offered by the standard. Management areas are described in ISO/IEC 27001 and this classification is applied in this part of the study. In addition, the monitoring, measurement and analysis of information security are covered by a dedicated part of the standard, ISO/IEC 27004 [5]. The standard describes examples of measurement construction and a possible classification covering 30 areas. Some of the areas offered by the standard are resource allocation, policy verification, risk impact, audit program, password quality, malware protection, firewall rules, device configuration, vulnerability environment and other areas [5].

Classical information security metrics consist of a single quantity or variable. As an example, one metric is the number of malware instances detected on the organization’s servers per day, which is measured in units. This is a metric of one criterion. We can obtain a total value by combining at least two different metrics, each with a weighting factor that determines its importance. This is what we call aggregated or multicriteria metrics of information security.
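A weighted combination of this kind can be sketched as follows. The sketch assumes that each classical metric has already been rescaled to a common range of 0 to 1 and that a weighted arithmetic mean is an acceptable way to combine them; the function name `aggregated_metric` and the metric names are hypothetical.

```python
def aggregated_metric(values, weights):
    """Weighted mean of classical metric values on a common 0..1 scale.

    values:  dict mapping metric name to its normalized value.
    weights: dict mapping the same metric names to importance weights.
    """
    if set(values) != set(weights):
        raise ValueError("values and weights must cover the same metrics")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[name] * values[name] for name in values) / total_weight


# Illustrative classical metrics, already normalized (1.0 = best).
values = {"malware_detection": 0.8, "patch_coverage": 0.6}
weights = {"malware_detection": 0.7, "patch_coverage": 0.3}
print(aggregated_metric(values, weights))
```

Dividing by the total weight keeps the result on the same 0 to 1 scale even when the weights do not sum to one.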

The concept of aggregated information security metrics is not established in the studied articles on information security issues. Researchers offer various methodologies for assessing the information security situation in an organization using metrics. One of the articles presents unconventional multicriteria information security metrics for assessing the overall security of a database management system. This is carried out by measuring the uncertainty that arises from possible attacks on database management systems [28].

Scientific articles also discuss the importance of aggregated information security metrics. They state that combining individual security metrics is useful but is not easy. To aggregate metrics, one needs to evaluate the dependencies between them to obtain a generalized one. Dependency assessment is a decision-making task, since it is necessary to decide how important a certain measurable factor is in relation to others [29]. This can be performed by entering weighting factors that determine the value of traditional metrics in an aggregated multicriteria metric.

The problem of aggregated metrics has not yet been widely studied in the scientific community and, therefore, a generalized concept has not been agreed upon. For business institutions, the use of aggregated metrics is an understudied phenomenon. Nevertheless, some business institutions already offer their clients such aggregated, generalized data and metrics describing the information security of specific areas of activity [30].

3. MCDM Methods

3.1. Comparison of MCDM Methods

Multi-criteria decision-making (MCDM) methods are used to make the optimal decision. MCDM is a well-known family of methods used for decision-making in various fields of science and business, including management sciences, engineering and IT.

Before delving into specific methods of problem-solving, it is important to clarify how these methods are classified. Classification is carried out based on various factors. MCDM methods are divided into two groups: Multi-Objective Decision Making (MODM) and Multi-Attribute Decision Making (MADM). The methods are further classified by the type of data used to rank alternatives into deterministic, stochastic (probabilistic) and fuzzy methods. Depending on the number of decision-makers involved in the process, the methods can be divided into single-person or group decision methods [31]. Other classifications of multicriteria methods are also proposed, depending on the type of information that is provided to decision-makers. In accordance with this classification, it is possible to distinguish methods of rank correlation, methods based on the comparison of preferences (priority), methods that allow conversion of qualitative estimates into quantitative ones and methods that calculate the distance from the reference point [32].

When solving MCDM tasks, qualitative criteria are often encountered. Expert assessment is used to solve such problems. In traditional MCDM methods, decisions made by people are converted into certain numerical values. However, it is often difficult for decision-makers to determine the exact numerical value for a particular problem [33]. In this case, one can use fuzzy-set methods.

There are various multicriteria methods with different decision-making characteristics. The methods are quite different; all have their advantages and disadvantages. Because of these differences, it is quite difficult to make an objective comparison of these methods. A generalized comparison of the methods is given in Table 4.

Table 4.

Comparison of multicriteria methods [32].

It is worth noting that the AHP method is very often used in scientific articles in the engineering field [34]. This method uses the hierarchical structure of the problem, breaking the problem into smaller parts and, consequently, evaluating all aspects of the problem [35].

A brief description of the MCDM methods is also provided in Table 5. The table describes the advantages and disadvantages of the methods and the most common applications.

Table 5.

Description of MCDM methods [35].

From Table 5, three MCDM methods are selected to compile aggregated information security metrics: AHP, WASPAS and Fuzzy TOPSIS. Since the task of compiling aggregated information security metrics involves the use of purely qualitative values, it is necessary to choose the appropriate research methodology. For this reason, two separate methods of solving the problem are used:

- The AHP method is used to determine the weights of the criteria and these weights are then applied to the calculation using the WASPAS method;

- Calculations and weights are determined using the Fuzzy TOPSIS method.

To compile aggregated multi-criteria metrics of information security, classical metrics of information security are needed. In this regard, it is necessary to select the best metrics from the presented areas of the ISO/IEC 27001 standard and assign them weight coefficients. For this, multi-criteria decision-making methods are applied, where expert assessment is used. In multi-criteria decision-making methods, the correct choice of evaluation criteria is very important.

In accordance with the current international information security management system standard ISO/IEC 27001, information security metrics are mandatory [5]. The standard identifies 14 areas of information security management [10]:

- Information security policy—aims to ensure compliance with information security policies;

- Organizational aspects of information security—includes the procedure for performing specific tasks and the distribution of responsibilities;

- Security of human resources—has the purpose of ensuring the responsibility of employees and their performance;

- Asset management—includes the protection of information and information assets;

- Access control—has the goal of ensuring that employees can only access information related to their immediate tasks. Extended access rights can be a serious threat to information security;

- Cryptography—aims to ensure data encryption and secure management of confidential information;

- Physical security—includes the security of the premises of the organization and the equipment that is stored in them;

- Operation security—one of the broadest areas, covering organization operations, protection from malware, system backup, logging, software integration, technical vulnerability management and information systems audit;

- Communication security—includes the protection of information in computer networks;

- Acquisition, improvement and maintenance of systems—strives to ensure the importance of information security in the organization’s information systems;

- Relations with suppliers—includes the information security of the organization associated with third parties and partners of the organization;

- Information security incident management—defines the responsibilities of employees and an action plan during a security incident;

- Aspects of information security for business continuity management—includes management of business failures, ensuring the availability of information;

- Compliance with standards—ensures that organizations comply with laws and applicable information security standards.

Information security metrics are used to measure the effectiveness of processes in all of these areas. The specifics of the organization determine which program of metrics should be used. This work creates aggregated information security metrics for three management areas defined by the ISO/IEC 27001 standard: operations security; communications security; and acquisition, improvement and maintenance of systems. These areas were chosen because the work focuses on the technical aspects of security. Aggregated metrics are compiled for each area by selecting classical information security metrics and then assigning them weighting coefficients.

Based on the scientific literature and business publications, recommendations for security metrics are summarized and classical information security metrics are selected for each of the chosen areas of information security management. From this list, the best classical metrics are selected for inclusion in the aggregated information security metrics. The classical metrics for operations security, communications security and the acquisition, improvement and maintenance of information systems are shown in Table 6.

Table 6.

Classical metrics of information security in the fields of operation, communication and information systems security, which are used to compile aggregated metrics, based on [36].

The field of operational security management includes several aspects [33]:

- Operations of the organization—ensuring that responsible persons properly carry out procedures;

- Protection from malware—ensuring that the organization is prepared for and aware of potential malware threats;

- System backup—listing requirements related to system backup performance to prevent data loss;

- Logging and monitoring—allowing one to have documented evidence and a base for investigating security incidents;

- Ensuring the integrity of operating systems—ensuring the integrity of the software performing operations is carried out by managing the software recorded in the OS;

- Technical vulnerability management—ensuring that unauthorized persons do not exploit system vulnerabilities;

- Audit of information systems—aiming to minimize failures in auditing activities in the OS.

In order for the final aggregated metric to assess most aspects of the operations security area, an additional division into sub-areas is carried out. The best one or two classical metrics are selected from each sub-area by expert evaluation. To ensure operational security, the following sub-areas are highlighted: malware protection and OS integrity, vulnerability management, backup and event monitoring.

The field of communication security management includes these aspects [37]:

- Network security management, aimed at ensuring the confidentiality, integrity and availability of information contained in networks;

- Security of the information transfer status.

In order for the resulting aggregated metric to evaluate most aspects of the communication security area, an additional division into areas is carried out. The best one or two classical metrics are selected from each area by expert evaluation. To ensure the security of communications, the following areas are highlighted: e-mail system, firewall management, session and traffic monitoring and network attacks.

It is worth noting that the task of compiling aggregated metrics requires the use of qualitative values. Consequently, the AHP, WASPAS and Fuzzy TOPSIS methods were selected from Table 5 as the research methodology. For this reason, two separate methods of solving the problem are used, as mentioned above.

For further study, it was decided to use the criteria that were defined in one of the scientific articles describing the quality of information security metrics [26]. The quality criteria of the most important metrics have been clarified and an expert assessment has already been applied (141 specialists). The most important classical metric criteria that are used in the study are correctness, ability to measure and significance [26].

The main purpose of the work is to obtain aggregated information security metrics, which consist of classical information security metrics with appropriate weighting coefficients. Problems solved with multi-criteria decision-making methods are often approached with at least two different solutions, and the same principle is observed in this work. In the first solution, the AHP method yields the weighting coefficients of the criteria, and these coefficients are then used in calculations with the WASPAS method. The second solution uses the Fuzzy TOPSIS method.

3.2. TOPSIS Method for Obtaining Aggregated Metrics of Information Security

The solution to the problem, regardless of the MCDM method used, consists of four steps [38]. The idea of using the aggregated weighted sum assessment approach is based on the work of Turskis and Zavadskas [39].

First of all, a vector of the considered alternatives is formed, from which a rational alternative is selected [40]:

To compile aggregated information security metrics, experts selected 5 metrics out of 15 for 3 areas of information security management of the ISO/IEC 27001 standard. The selection of these metrics was carried out using a questionnaire among experts.

At the second stage, a vector of metrics is formed, according to which alternatives are evaluated:

The decision-making matrix X[m×n] is formed in the form of a quantitative assessment of the i-th alternative by j-th metrics:

Table 7 presents the selected classical information security metrics based on the results of an expert assessment of the three selected areas of information security management.

Table 7.

Selected classical information security metrics based on the results of an expert assessment.

In the third stage, the AHP method is applied to determine the criteria weights, which are then used in the MCDM WASPAS method.

In the last stage, the issue is solved by the Fuzzy TOPSIS method. The structure of the MCDM problem to be solved is defined in Table 8.

Table 8.

Matrix of ranking solutions according to classical information security metrics.

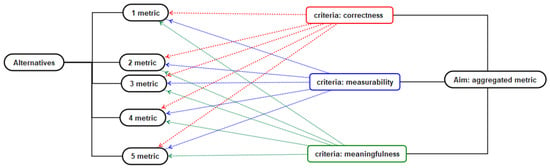

The MCDM solution is explained by the hierarchical structure of the problem presented in Figure 1.

Figure 1.

Compilation of aggregated metrics using MCDM. The hierarchical structure of the task.

3.3. Application of AHP to Determine the Weight of Criteria

Only some MCDM methods allow one to set criteria weights. One of them is the AHP method, which makes it possible to solve problems of various kinds by decomposing the problem into a hierarchical structure. AHP is used in various types of scientific work, from those aimed at choosing the best mobile phone [41] to those comparing information security metrics used in companies [40]. In the context of the current task, the main calculations are performed by another MCDM method, since the structure of the AHP method would become too complex.

The solution to the problem of determining the weights of criteria by the AHP method consists of the following steps [38].

In the first stage, a vector of metrics is formed, according to which alternatives are evaluated, as presented in Formula (2). In this stage, we also determine the criteria that are used in the subsequent research. Consequently, the three criteria mentioned above (correctness, ability to measure and significance) are used [26].

In the second stage, we enter the values of metrics that are determined by specialists. Experts are asked to assess the significance of three qualitative criteria in the metric by assigning percentage values to criteria in accordance with the importance that determines the quality of the metric. This is carried out by applying the scale of evaluation of qualitative criteria proposed by the AHP method and creating a comparison matrix as presented in Table 9 [32].

Table 9.

Matrix of paired comparison.

Since qualitative criteria prevail in the problem under consideration, an expert assessment is applied. The expert evaluation is carried out using linguistic terms, which are then converted into numerical values based on Table 10 and Table 11. For the problem being solved, Table 9 reduces to Table 10.

Table 10.

Matrix of paired comparison of the qualitative criteria characterizing the metrics [32].

Table 11.

Scale of pairwise comparison of qualitative criteria of the AHP method [32].

Thus, the expert solving the evaluation problem needs to determine the values of the paired comparison coefficients pp12, pp13 and pp23. The values of pp21, pp31 and pp32 are their reciprocals, so they are calculated as pp21 = 1/pp12, pp31 = 1/pp13 and pp32 = 1/pp23. Table 11 shows the scale of pairwise comparison of the qualitative criteria of the AHP method.

Evaluation of pp12, pp13, pp21, pp23, pp31 and pp32 allows one to determine the weights of these criteria. This is performed with the help of special software [32]; in this work, the SpiceLogic Analytical Hierarchy Process software [42] is used to process the data. The obtained values of the criteria weights are used in further calculations performed by the WASPAS method.
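As an illustration of how criteria weights follow from the three paired comparison coefficients, the sketch below builds the 3×3 reciprocal matrix and approximates its principal eigenvector by power iteration, which is the usual AHP prioritization. It is only a minimal sketch: the function name `ahp_weights` and the example coefficient values are assumptions made for illustration, and the software used in the study may proceed differently.

```python
def ahp_weights(p12, p13, p23, iterations=100):
    """Approximate AHP weights for three criteria by power iteration."""
    # Reciprocal pairwise comparison matrix: p21 = 1/p12, p31 = 1/p13, p32 = 1/p23.
    matrix = [
        [1.0, p12, p13],
        [1.0 / p12, 1.0, p23],
        [1.0 / p13, 1.0 / p23, 1.0],
    ]
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        # Multiply the matrix by the current weights and renormalize to sum 1.
        product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        weights = [value / total for value in product]
    return weights


# Hypothetical judgements on the AHP scale (not taken from the study).
example = ahp_weights(p12=2.0, p13=4.0, p23=2.0)
print([round(w, 3) for w in example])
```

For a perfectly consistent set of judgements such as the one above, the weights are simply proportional to any column of the matrix; for inconsistent judgements the iteration converges to a compromise between the columns.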

The comparatively new MCDM method WASPAS is considered to be quite accurate [43]. The WASPAS method combines two methods: the weighted sum model (WSM) and the weighted product model (WPM).

The steps of the WASPAS method are as follows [43,44]:

- A decision-making matrix is formed, in which each element is the value of the i-th alternative according to criterion j, m is the number of alternatives and n is the number of criteria. Since only qualitative criteria are used to evaluate information security metrics, an expert assessment is required. Linguistic descriptions are used for expert evaluation, which are converted into numerical values. The conversion is performed using Table 12. The weights of the criteria are obtained following the methodology described in the previous section (using the AHP method);

Table 12. Conversion of linguistic characteristics of experts into numerical values, based on [43].

- Normalization is performed using the following formulas [44]:

Useful criterion:

Useless criterion:

Here, x̄ij refers to the normalized value of the matrix element xij;

- Calculation of the optimality criterion of the i-th alternative (the formula is based on the WSM method):

where wj is the weight of the j-th criterion;

- Calculation of the total relative importance (the formula is based on the WPM method):

- The combined generalized optimality criterion is calculated by the following formula:

- To generalize the equation for calculating the optimality criterion, the parameter λ is introduced. This allows one to achieve higher ranking accuracy [38]:

- The optimal value of λ is calculated according to the following formula:

- The variances of the two optimality criteria are calculated by the following formulas:

- Alternatives are ranked in priority order according to their utility values. From these values, an aggregated metric of information security is formed (according to Formula (1)). The resulting utility values are converted to percentages and become weighting factors. The following formula is used for this:

where Kai is the weighting factor of a classical metric in the aggregated metric; Qi is the optimality criterion of the i-th alternative; n is the number of alternatives.
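The WASPAS steps above can be sketched in a few lines of Python. The sketch assumes the standard formulation of the method: linear normalization, a weighted sum (WSM) part, a weighted product (WPM) part and a joint criterion that mixes the two with λ. The function name `waspas_weights`, the fixed λ = 0.5 and the expert scores are assumptions made for illustration; the optimal λ derived from the variances is not implemented here.

```python
import math


def waspas_weights(matrix, criteria_weights, useful, lam=0.5):
    """Return (joint optimality values, percentage weights) of the alternatives."""
    m, n = len(matrix), len(criteria_weights)
    columns = list(zip(*matrix))
    # Linear normalization: x / max for useful criteria, min / x for useless ones.
    norm = [
        [
            matrix[i][j] / max(columns[j]) if useful[j] else min(columns[j]) / matrix[i][j]
            for j in range(n)
        ]
        for i in range(m)
    ]
    # WSM part: weighted sum of the normalized values.
    q_sum = [sum(norm[i][j] * criteria_weights[j] for j in range(n)) for i in range(m)]
    # WPM part: product of the normalized values raised to the criteria weights.
    q_prod = [math.prod(norm[i][j] ** criteria_weights[j] for j in range(n)) for i in range(m)]
    # Joint criterion; lam = 0.5 gives the equally weighted combination.
    q = [lam * s + (1.0 - lam) * p for s, p in zip(q_sum, q_prod)]
    total = sum(q)
    # Percentage share of each alternative, used as its weighting factor.
    return q, [100.0 * value / total for value in q]


# AHP criteria weights reported in the study: correctness, measurability, significance.
ahp = [0.359, 0.089, 0.552]
# Hypothetical averaged expert scores for three classical metrics (rows).
scores = [[4.2, 3.1, 4.8], [3.5, 4.0, 3.9], [2.8, 3.6, 3.0]]
q_values, percent = waspas_weights(scores, ahp, useful=[True, True, True])
print([round(p, 1) for p in percent])
```

Because the percentages are the joint optimality values divided by their sum, they always add up to 100%, which is the property the aggregated metric relies on.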

The TOPSIS method is designed to solve problems in a certain environment using quantitative values. To work in an uncertain environment, the Fuzzy TOPSIS method, which was designed specifically for this purpose, is usually used. The Fuzzy TOPSIS method solves the problem in the following steps [45]:

1. The decision-making matrix Xij [m×n] is formed, where Xij is the value of the i-th alternative with respect to criterion j, m is the number of alternatives and n is the number of criteria. Quantitative values are obtained using expert evaluation. Experts are asked to evaluate both the quality criteria and the alternatives with respect to each criterion using linguistic terms. Linguistic terms are converted to numeric values using Table 13.

Table 13.

Converting linguistic values to numeric values using the Fuzzy TOPSIS method (for ratings).

2. Fuzzy decision matrix:

Normalized values for useful and useless criteria are calculated using the following formulas:

Useful criterion:

Useless criteria:

Here, cj = max {cij} for useful criteria and aj = min {aij} for useless criteria;

3. The constructed weighted normalized fuzzy decision-making matrix:

Here, w̃j is the weight of the j-th criterion;

4. Formulas for calculating positively ideal (FPIS, A+) and negatively ideal (FNIS, A−) solutions are determined:

Usually, scientific papers use two ways to determine the ideal:

In the first option, the ideal solution is defined as follows [45]:

Useful criteria: ;

Useless criteria: .

In the second option, the ideal solution is defined as follows:

Useful criteria: .

In further calculations, the first option is used;

5. Calculating the distance between each alternative and the ideal solutions A+ and A−;

6. Distance calculation d (A, B):

7. The proximity coefficient of each alternative is calculated (CCi):

8. Alternatives are ranked using the resulting proximity coefficient values CCi. The maximum value of the proximity coefficient means that the alternative is the most suitable;

9. Alternatives are ranked in priority order according to their proximity coefficient values. From these values, an aggregated metric of information security is formed (according to Formula (1)). The resulting proximity coefficient values are converted to percentages and become weighting factors. The following formula is used for this:

Here, Kai is the weighting factor for classical metrics in aggregated metrics; CCi is the proximity coefficient of the i-th alternative; n is the quantity of alternatives.
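The Fuzzy TOPSIS steps can be sketched as follows, assuming triangular fuzzy numbers (a, b, c), useful criteria only, the vertex method for the distance d(A, B) and ideal solutions taken from the data (the largest upper bound and the smallest lower bound of the weighted values per criterion). These choices, the function names and the linguistic scale values are assumptions made for illustration; they are one common variant of the method and may differ in detail from the variant used in the study.

```python
import math


def vertex_distance(a, b):
    """Distance between two triangular fuzzy numbers (vertex method)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / 3.0)


def fuzzy_topsis_weights(ratings, criteria_weights):
    """ratings[i][j] and criteria_weights[j] are triangular fuzzy numbers (a, b, c)."""
    m, n = len(ratings), len(criteria_weights)
    # Normalize by the largest upper bound of each (useful) criterion, then weight.
    c_max = [max(ratings[i][j][2] for i in range(m)) for j in range(n)]
    weighted = [
        [
            tuple(ratings[i][j][k] / c_max[j] * criteria_weights[j][k] for k in range(3))
            for j in range(n)
        ]
        for i in range(m)
    ]
    # Positive and negative ideal solutions derived from the weighted matrix.
    fpis = [(max(weighted[i][j][2] for i in range(m)),) * 3 for j in range(n)]
    fnis = [(min(weighted[i][j][0] for i in range(m)),) * 3 for j in range(n)]
    closeness = []
    for i in range(m):
        d_plus = sum(vertex_distance(weighted[i][j], fpis[j]) for j in range(n))
        d_minus = sum(vertex_distance(weighted[i][j], fnis[j]) for j in range(n))
        closeness.append(d_minus / (d_plus + d_minus))
    total = sum(closeness)
    # Percentage share of each alternative, used as its weighting factor.
    return closeness, [100.0 * value / total for value in closeness]


# Hypothetical linguistic scale (not the values of Table 13).
LOW, MEDIUM, HIGH = (1.0, 3.0, 5.0), (3.0, 5.0, 7.0), (7.0, 9.0, 10.0)
ratings = [[HIGH, MEDIUM, HIGH], [MEDIUM, MEDIUM, LOW], [LOW, HIGH, MEDIUM]]
weights = [(0.5, 0.7, 0.9), (0.1, 0.3, 0.5), (0.7, 0.9, 1.0)]
cc, kai = fuzzy_topsis_weights(ratings, weights)
print([round(v, 3) for v in cc], [round(v, 1) for v in kai])
```

Switching to the second variant of the ideal solution would only change the two lines that build `fpis` and `fnis`, which makes it easy to check how strongly that choice affects the resulting weights.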

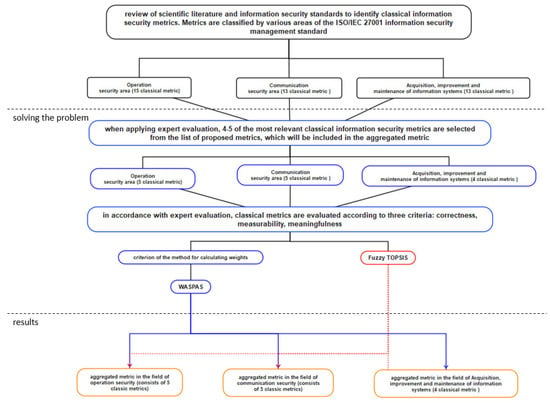

The full sequence of the MCDM methods described above is summarized in the diagram of the methodology for solving the problem, presented in Figure 2.

Figure 2.

Methodology for solving the problem.

4. Application of MCDM and Expert Assessment on Aggregated Metrics of Information Security

4.1. Expert Assessment of Classical Metrics of Information Security

The accuracy of the expert assessment largely depends on the competence of the experts conducting the assessment. When solving multi-criteria tasks, the optimal group size is from 8 to 10 experts. According to [46], three experts is the minimum recommended group size. In the current study, the expert group consists of 20 specialists working in the field of information security or engaged in scientific activities. Seven experts participate in the first round of the study and ten more experts participate in the second round. The remaining three experts carry out part of the verification of the metrics. The experts in the first and second rounds are different. Equal weights are used for expert assessments.

The expert assessment is carried out according to the procedure described in Section 2 of this article. Each expert receives identical questions. The maximum number of possible answers in the questionnaire is eight and they are indicated by the letters a to h. It should be noted that in questions 1 and 8, experts need to choose two answers. Based on the results of the experts’ responses to the questionnaire, the most suitable four to five information security metrics are selected. These metrics are included in aggregated information security metrics. The experts’ selection of classical information security metrics is presented in Table 14.

Table 14.

Expert answers to the questionnaire.

Based on the expert responses presented in Table 14, classical information security metrics are compiled, which are included in the aggregated metrics. The selected classical information security metrics for three areas with assigned symbolic designations are presented in Table 15.

Table 15.

Symbolic designations of classical metrics.

At the next stage, experts are invited to evaluate the criteria characterizing the metrics in order to determine the weighting factors of the criteria. Experts are invited to perform this task twice, using the AHP and Fuzzy TOPSIS methods. Table 16 presents the results of the assessment of criteria by the AHP method. In Table 16, Ex denotes expert number x, C is correctness, M is measurability, S is significance and G is the generalized assessment.

Table 16.

Results of the assessment of criteria by the AHP method.

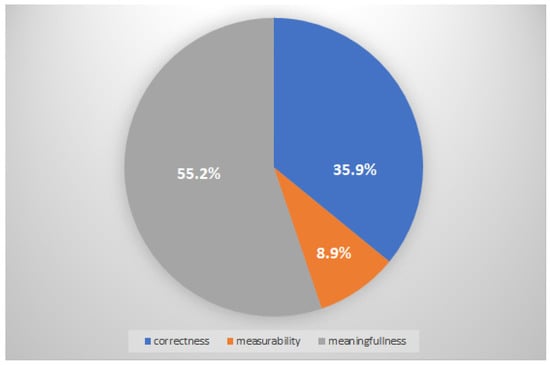

The calculations are performed using the SpiceLogic Analytical Hierarchy Process Software and Excel [42]. Ten experts responded to the questionnaire, but the estimates submitted by two experts were rejected because they were highly inconsistent. The selection of estimates is carried out by consistency ratio. For the AHP method, the consistency ratio of the assessments submitted by experts should not exceed 10% [47]. In the present case, the generalized consistency ratio is 7.3%, which is considered satisfactory, so the assessment can be considered correct. Another value that is proposed to be calculated is the consensus rating, which in this case is 75.4%, meaning that the consensus of experts on this decision is “high” [42]. Thus, summing up the assessments of all experts, we obtain the weighting coefficients of the quality criteria of classical information security metrics: correctness, 35.9%; measurement capability, 8.9%; significance, 55.2%. The results of experts’ assessment of criteria by the AHP method are presented in Figure 3.

Figure 3.

The results of experts’ assessment of criteria by the AHP method.
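The 10% threshold mentioned above refers to the consistency ratio of a pairwise comparison matrix. The sketch below shows one common way to compute it, assuming Saaty's random index values and a priority vector obtained from row geometric means; the function name and the example matrix are hypothetical, and the software used in the study may compute the ratio differently.

```python
import math

# Saaty's random consistency index for small matrix orders.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12}


def consistency_ratio(matrix):
    """Consistency ratio of a reciprocal pairwise comparison matrix."""
    n = len(matrix)
    # Priority vector from normalized row geometric means (an assumed shortcut).
    geo = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo)
    weights = [g / total for g in geo]
    # Estimate the principal eigenvalue as the mean of (A w)_i / w_i.
    weighted = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(weighted[i] / weights[i] for i in range(n)) / n
    consistency_index = (lambda_max - n) / (n - 1)
    return consistency_index / RANDOM_INDEX[n]


# Hypothetical judgements of one expert: p12 = 3, p13 = 5, p23 = 1/2.
judgements = [
    [1.0, 3.0, 5.0],
    [1.0 / 3.0, 1.0, 0.5],
    [0.2, 2.0, 1.0],
]
ratio = consistency_ratio(judgements)
print(f"CR = {ratio:.3f}; accepted: {ratio <= 0.10}")
```

In this hypothetical case the ratio exceeds 0.10, so the expert's judgements would be rejected in the same way as the two contradictory assessments described above.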

Further evaluation of the criteria was carried out using the Fuzzy TOPSIS method. Table 17 presents the results of an expert assessment of quality criteria by the Fuzzy TOPSIS method. In Table 17, E refers to expert number x, C is correctness, M is measurability and S is significance. Linguistic terms are used in the evaluation, the numerical values of which are presented in Table 14. The results of the expert evaluation presented in Table 16 and Table 17 are used in further calculations.

Table 17.

The results of an expert assessment of quality criteria by the Fuzzy TOPSIS method.

In the third stage of the study, experts evaluate the selected classical indicators of information security in accordance with the specified quality criteria. Also, further calculations are carried out by the methods of WASPAS and Fuzzy TOPSIS.

4.1.1. WASPAS

Calculations are carried out by WASPAS using Formulas (4)–(13). Three decision-making matrices are formed, in which the average values of all estimates submitted by experts are calculated. The results of the calculations are presented in Table 18.

Table 18.

Decision-making matrices (WASPAS).

Normalization of the matrix is performed according to Formulas (4) and (5). The normalization results are presented in Table 19.

Table 19.

Normalized decision matrices (WASPAS).

The first optimality criterion is calculated using Formula (6). The values of the first optimality criterion are presented in Table 20.

Table 20.

Values of the first optimality criterion (WASPAS).

The second optimality criterion is calculated using Formula (7). The values of the second optimality criterion are presented in Table 21.

Table 21.

Values of the second optimality criterion (WASPAS).

The general optimality criterion is then calculated using Formula (8), and Formula (13) gives the weight of each classical metric in the aggregated metric. The value of the general optimality criterion and the weight of classical metrics in the aggregated metrics are presented in Table 22.

Table 22.

The value of the general optimality criterion and the weight of classical metrics in the aggregated metric (WASPAS).

4.1.2. Fuzzy TOPSIS

When using the Fuzzy TOPSIS method, the initial decision-making matrices are also formed. The decision-making matrix for the Fuzzy TOPSIS method is presented in Table 23.

Table 23.

The decision-making matrix for the Fuzzy TOPSIS method.

Normalization of the decision-making matrix using Formulas (15) and (16) is also carried out. The normalization results are presented in Table 24.

Table 24.

The normalization of the decision-making matrix.

The weighted normalized decision-making matrix is calculated using Formula (17). The results of the calculations are presented in Table 25.

Table 25.

Weighted normalized decision-making matrix (Fuzzy TOPSIS).

Consequently, the positively ideal (FPIS, A+) and negatively ideal (FNIS, A−) values of alternatives are calculated according to Formulas (18) and (19). The calculation results are presented in Table 26.

Table 26.

The positively ideal (FPIS, A+) and negatively ideal (FNIS, A−) values of alternatives (Fuzzy TOPSIS).

After carrying out all the procedures, the relative distances, the distances to the ideal alternative and the weights of the classical metrics in the aggregated metrics are calculated according to Formulas (20)–(24). The results of the calculations are shown in Table 27.

Table 27.

Relative distances, distances to the ideal alternative and weights of classical metrics in aggregated metrics (Fuzzy TOPSIS).

After the calculations by the methods of WASPAS and Fuzzy TOPSIS, the weight coefficients of the metrics are obtained. The weight coefficients obtained by different methods are presented in Table 28.

Table 28.

Values of classical metrics obtained by various methods of solving the problem in aggregated metrics.

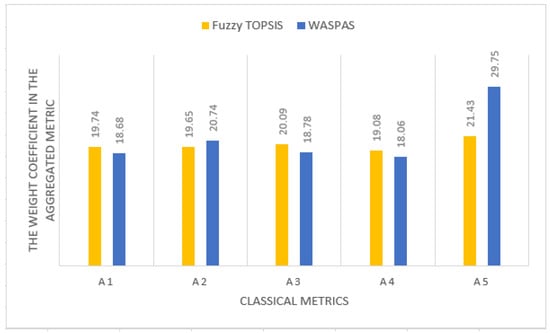

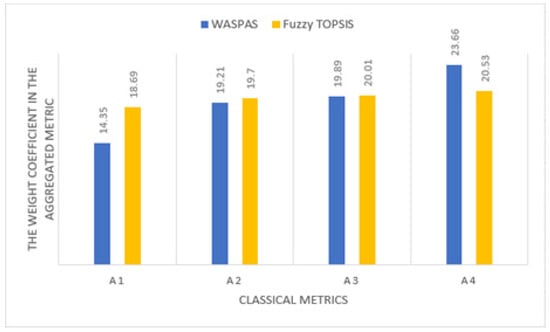

Based on the results obtained by various methods of solving the problem, bar charts are constructed representing the weights of classical metrics in the aggregated metric. The weighting coefficients of the classical metrics of operations security in the aggregated metric, as obtained by various methods of solving the problem, are presented in Figure 4.

Figure 4.

The weighting coefficients of the classical metrics of operations security in the aggregated metric, as obtained by various methods of solving the problem.

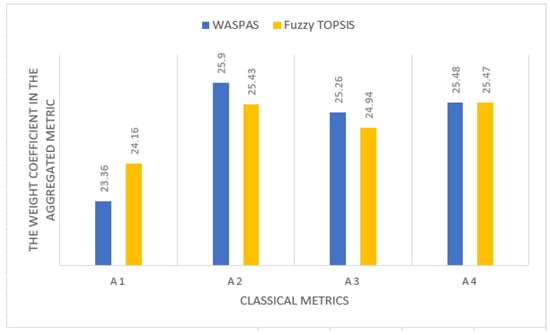

The weighting coefficients of the classical metrics of communication security in the aggregated metric, as obtained by various methods of solving the problem, are presented in Figure 5.

Figure 5.

The weighting coefficients of the classical metrics of communication security in the aggregated metric, as obtained by various methods of solving the problem.

The weighting coefficients of the classical metrics of the security of the acquisition, development and maintenance of information systems in the aggregated metric, as obtained by various methods of solving the problem, are presented in Figure 6.

Figure 6.

The weighting coefficients of the classical metrics of the security of the acquisition, development and maintenance of information systems in the aggregated metrics, as obtained by various methods of solving the problem.

The values of the weighting coefficients for the classical metrics obtained by the two different ways of solving the problem were somewhat different. This was due to the differences between the WASPAS and Fuzzy TOPSIS methods and some computational features. First, the weights of the quality criteria differed between the two calculations. Second, the Fuzzy TOPSIS method is considered not very suitable if there are large differences between the estimates of alternatives [35]. Third, in the Fuzzy TOPSIS method, two variants of the ideal solution can be used, and this choice can strongly affect the final answers. Based on the obtained coefficients of metrics and the convenience of the methods, it can be concluded that the MCDM WASPAS method is more suitable for solving the existing problem.

With the weight coefficients and average event numbers of classical metrics, we can calculate the aggregated metric. We do this by proposing a formula that can be used to calculate aggregated metrics:

The formula involves the aggregated metric in real time, the number of events in each classical metric in real time, the provision value (the set tolerable number of events), the i-th weight factor for each classical metric and n, the number of classical metrics forming the aggregated (multicriteria) metric.

It should be noted that when applying this formula, the weight coefficients of the classical metrics must sum to 100%:

With the weight coefficients, three formulas for calculating aggregated information security metrics are formed, which we designate by the symbols Aoperations, Acommunications and Asystems. The weight coefficients obtained by the WASPAS method are used for the calculations. The formulas are compiled using Formula (23):

The values are relevant to the average values of the metrics set by the organization. These values should be constantly reviewed and adjusted in accordance with the current state of the organization. The values a1–a5, b1–b5, c1–c4 rely on the values of the classical metrics (converted to a percentage expression).
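To make the role of the weights and the tolerable event numbers concrete, the following sketch computes an aggregated value under an assumed functional form: a weighted sum of the ratios between the observed and the tolerable number of events. This form, the function name and all numbers are assumptions made for illustration only and are not the formula of the study; the sketch merely shows how the quantities listed above could interact and enforces the requirement that the weights sum to 100%.

```python
def aggregated_metric(events, tolerable, weights_percent):
    """Weighted sum of observed-to-tolerable event ratios (assumed form)."""
    if not (len(events) == len(tolerable) == len(weights_percent)):
        raise ValueError("all inputs must have the same length")
    # The weight coefficients of the classical metrics must sum to 100%.
    if abs(sum(weights_percent) - 100.0) > 1e-6:
        raise ValueError("weight coefficients must sum to 100%")
    return sum(
        (w / 100.0) * (e / t)
        for e, t, w in zip(events, tolerable, weights_percent)
    )


# Hypothetical monthly event counts, tolerable counts and weights for five metrics.
observed = [12, 3, 40, 7, 0]
tolerated = [10, 5, 50, 7, 2]
weights = [25.0, 20.0, 20.0, 20.0, 15.0]
value = aggregated_metric(observed, tolerated, weights)
print(f"aggregated value: {value:.2f}")
```

Under this assumed form, a value of 1 means that every classical metric sits exactly at its tolerable level, so values above 1 would indicate that the weighted situation is worse than the organization's set tolerance.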

It should be emphasized that the formulas for calculating these aggregated metrics are indicative. Therefore, when using aggregated indicators in practice, the formulas for calculating the final values should be adapted to individual needs. Aggregated metrics can make it possible to monitor the information security situation in the organization more effectively, to form a generalized picture. In comparison with classical information security metrics, aggregated metrics allow us to save resources spent on the process of monitoring the information security situation and the data can also serve to inform the management of the organization.

5. Discussion

In order to verify the consistency of the assessments of criteria and alternatives given by experts, we use the concordance coefficient, which characterizes whether the opinions of experts on the significance of the metrics are sufficiently coordinated [43]. This coefficient is also known as Kendall’s coefficient of concordance (W). It varies from 0 to 1. A value of 0 means complete disagreement (the estimates given by experts are random) and 1 means complete agreement (the estimates given by experts are the same).

Further, for all opinions submitted by experts, the significance values of the concordance coefficient are calculated (according to Formula (28)). The results are presented in Table 29.

Table 29.

Expert assessment compatibility calculations.

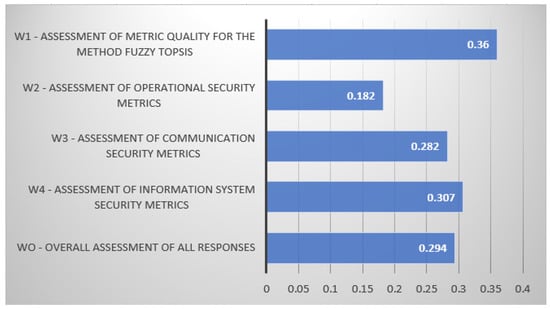

The values obtained are shown in the bar chart in Figure 7.

Figure 7.

Kendall’s W concordance coefficients calculated for various areas of expert evaluation.

The calculated χ2 values of the concordance coefficient confirm that the opinions of experts on all issues are in agreement. The obtained values of the concordance coefficient W should be interpreted based on the specifics of each problem. There are no established thresholds that would determine whether a certain coefficient value is acceptable or not. This coefficient can also be used to reject certain expert opinions, which would allow for greater agreement of opinions; however, in solving the present problem, it was decided not to apply this practice.
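For readers who wish to reproduce such a check, a minimal sketch of Kendall's W and the associated χ2 statistic is given below. It assumes that every expert provides a complete ranking without tied ranks; the function name and the example rankings are hypothetical and are not the data of Table 29.

```python
def kendalls_w(rankings):
    """rankings[e][i] is the rank that expert e assigns to item i (no ties)."""
    experts, items = len(rankings), len(rankings[0])
    # Sum of ranks received by each item across all experts.
    rank_sums = [sum(r[i] for r in rankings) for i in range(items)]
    mean_sum = sum(rank_sums) / items
    # Sum of squared deviations of the rank sums from their mean.
    s = sum((total - mean_sum) ** 2 for total in rank_sums)
    w = 12.0 * s / (experts ** 2 * (items ** 3 - items))
    # Significance statistic with (items - 1) degrees of freedom.
    chi_square = experts * (items - 1) * w
    return w, chi_square


# Hypothetical rankings of four metrics by three experts.
rankings = [
    [1, 2, 3, 4],
    [1, 3, 2, 4],
    [2, 1, 3, 4],
]
w, chi_square = kendalls_w(rankings)
print(f"W = {w:.3f}, chi-square = {chi_square:.2f}")
```

The χ2 value is then compared with the critical value for the chosen significance level and number of degrees of freedom to decide whether the agreement is statistically significant.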

An experiment is also conducted to verify the obtained aggregated information security metrics. The essence of the verification experiment is to test the effectiveness of aggregated information security metrics when they are used in practice. The purpose of the experiment is to compare the advantages and disadvantages of classical and aggregated metrics in a situation when a monthly report on information security is being prepared.

The experiment simulates two scenarios:

- Preparation of a monthly information security report using only classical information security metrics;

- Preparation of a monthly report on information security using aggregated information security metrics.

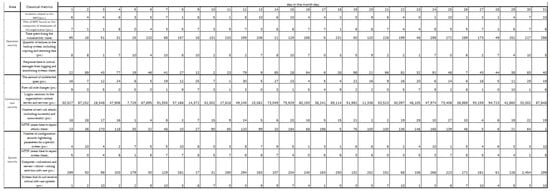

A monthly report on information security will be presented to the management of the organization, which will be prepared by information security specialists. In this case, an expert assessment will be used to perform this task. The study involved three experts from the Corporate Security Department of «Kazakhstantemirzholy» JSC, who had to perform identical tasks: prepare reports on information security in two given scenarios. Later, the experts were asked to answer questions from the questionnaire. The initial data of the verification experiment for the preparation of the report (data from classical metrics) are presented in Figure 8.

Figure 8.

The initial data of the verification experiment for the preparation of the report (data from classical metrics).

The initial data of the verification experiment for the preparation of the report (data from aggregated metrics) are presented in Figure 9.

Figure 9.

The initial data of the verification experiment for the preparation of the report (data from aggregated metrics).

The data are generated using the RANDBETWEEN function in Excel, with the minimum and maximum possible values specified so that the generated values are plausible.
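An equivalent way to generate such test data outside Excel is sketched below. Like RANDBETWEEN, Python's `random.randint` returns an integer between the two bounds, both included. The metric names and ranges are hypothetical placeholders rather than the values used in the experiment.

```python
import random


def generate_monthly_events(ranges, seed=None):
    """Draw one integer per metric, uniformly between its (low, high) bounds."""
    rng = random.Random(seed)
    # randint includes both end points, like Excel's RANDBETWEEN.
    return {name: rng.randint(low, high) for name, (low, high) in ranges.items()}


# Hypothetical classical metrics with plausible minimum and maximum values.
ranges = {
    "blocked_emails": (100, 500),
    "firewall_rule_changes": (0, 20),
    "detected_network_attacks": (0, 50),
}
data = generate_monthly_events(ranges, seed=42)
print(data)
```

Fixing the seed makes the generated report data reproducible, which is convenient when several experts must work from identical inputs.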

The experiment is aimed at clarifying the results according to the main criteria for the preparation and presentation of the report:

- Report preparation time: shows how long it takes to prepare the report;

- Convenience of report preparation: shows how quick and convenient the report preparation is;

- Readability of the results: shows to what extent the results obtained are easily understandable. It should also be borne in mind that the results may be presented to managers without an engineering or technical education;

- Significance of the results: shows to what extent the results obtained are significant and can be relied on in the organization’s decisions on information security;

- Quality of the report: shows how well the report describes the organization’s situation in the field of security.

Based on these criteria, questions are compiled to compare the process of preparing a report using classical and aggregated metrics. The questionnaire consisted of five questions and the scale of answers ranged from −5 to 5. Here, −5 denotes the effectiveness of using classical metrics and 5 denotes the effectiveness of aggregated metrics. In turn, 0 is an equal value and −4, −3, −2, −1, 1, 2, 3 and 4 are intermediate values. Below are the answers given by experts to the questionnaire questions (Table 30).

Table 30.

Expert responses to the questionnaire of the test experiment.

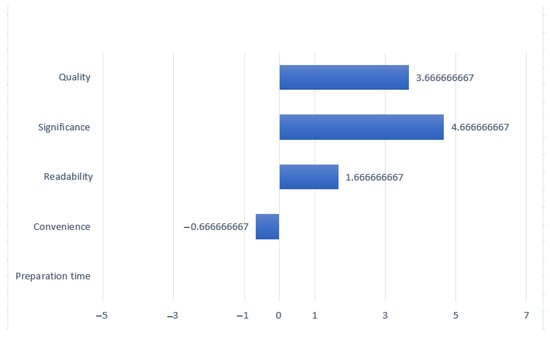

Consequently, the average values of these responses are calculated and the histogram shown in Figure 10 is constructed.

Figure 10.

Results of comparison of the use of aggregated and classical metrics.

Three experts participated in the experiment, which was conducted to verify the obtained aggregated information security metrics. After the verification of the use of aggregated metrics was completed, a survey was conducted among the experts regarding the effectiveness of the proposed aggregated metrics. All three experts pointed out that preparing the report using aggregated metrics took less time and was more convenient. Two experts pointed out that the results of the report using classical and aggregated metrics were understandable. Also, all experts indicated that the quality of the report did not change with the use of different metrics.

According to the answers given by experts, it can be said that the preparation of a report using aggregated metrics is more convenient, faster and achieves higher intelligibility. The significance of the report suffers somewhat and the quality of the report remains unchanged.

6. Conclusions

In the era of digitalization, ensuring the necessary level of information security for any organization depends on the proper choice of an assessment method, which ensures the sustainable functioning of information and communication infrastructure facilities. In turn, the sustainable functioning of information and communication infrastructure facilities of any country affects the sustainability of national security. However, the number of threats to national security has increased significantly due to the pandemic situation all over the world. In this regard, timely identification of threats to information security compromising national security will ensure the cyber sustainability of each country.

After analyzing the literature, it was noted that most scientific articles are aimed at evaluating information security metrics but not at improving them. The purpose of this article was to improve information security metrics. To achieve this goal, it was decided to propose aggregated information security metrics, which consist of classical information security metrics together with weighting coefficients describing their importance.

In the course of the work, a methodology for constructing aggregated information security metrics was developed. The compilation of aggregated metrics was carried out based on the areas of the information security management standard ISO/IEC 27001. In the compiled methodology, two methods of solving the problem were applied. Based on the obtained metric coefficients and the convenience of the methods, it was concluded that the MCDM WASPAS method was more suitable for solving the existing problem.

At the first stage of the study, the experts selected the 14 most suitable classical metrics of information security for the aggregated metrics. In the second stage of the study, these 14 information security metrics were evaluated according to 3 quality criteria. Using two approaches to solve the problem, the weights of classical metrics were calculated by various MCDM methods and aggregated metrics were proposed. The values of the weighting coefficients of classical metrics obtained by the two approaches were somewhat different. This was due to differences between the WASPAS and Fuzzy TOPSIS methods, as well as some calculation features. The weights of the quality criteria differed between the two calculations, and the Fuzzy TOPSIS method is considered not very suitable when there are significant differences between alternative estimates [31]. In the Fuzzy TOPSIS method, two variants of the ideal solution can be used, and this choice can greatly affect the final answers. Sensitivity analysis was not performed in this study; instead, we relied on previously conducted sensitivity analyses in [48,49,50,51].

In order to confirm the fulfilment of the task of improving information security metrics, a verification experiment was conducted, during which aggregated and classical information security metrics were compared. The experiment showed that the use of aggregated metrics can be a more convenient and faster process and that higher intelligibility is also achieved. The significance of the results suffers somewhat, and the quality of the report when using aggregated metrics remains unchanged compared to the classical ones.

Author Contributions

Conceptualization, N.G.; methodology, N.G.; validation, S.B. and A.A.; formal analysis, A.A., S.B. and N.G.; investigation, A.A., S.B. and N.G.; data curation, S.B. and A.A.; writing—original draft preparation, S.B. and A.A.; writing—review and editing, I.T.; visualization, I.T.; supervision, N.G. and A.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- No. 418-V SAM; The Law “On Informatization” of the Republic of Kazakhstan. Ministry of Justice of the Republic of Kazakhstan: Astana, Kazakhstan, 24 November 2015.

- Qadir, S.; Quadri, S.M.K. Information Availability: An Insight into the Most Important Attribute of Information Security. J. Inf. Secur. 2016, 07, 185–194.

- ISO/IEC 27004:2016E; Information Technology—Security Techniques—Information Security Management—Monitoring, Measurement, Analysis and Evaluation. International Organization for Standardization: Geneva, Switzerland, 2016.

- Ren, Y.; Xiao, Y.; Zhou, Y.; Zhang, Z.; Tian, Z. CSKG4APT: A Cybersecurity Knowledge Graph for Advanced Persistent Threat Organization Attribution. IEEE Trans. Knowl. Data Eng. 2022, 01, 5695–5709.

- Turskis, Z.; Goranin, N.; Nurusheva, A.; Boranbayev, S. A Fuzzy WASPAS-Based Approach to Determine Critical Information Infrastructures of EU Sustainable Development. Sustainability 2019, 11, 424.

- Bodeau, D.; Graubart, R.; McQuaid, R.; Woodill, J. Cyber Resiliency Metrics, Measures of Effectiveness, and Scoring; Defense Technical Information Center: Fort Belvoir, VA, USA, 2018.

- Xiang, Y.; Xianfei, Y.; Qinji, T.; Chun, S.; Zhihan, L. An edge computing based anomaly detection method in IoT industrial sustainability. Appl. Soft Comput. 2022, 128, 109486.

- Qingfeng, T.; Yue, G.; Jinqiao, S.; Xuebin, W.; Binxing, F.; Zhi, T. Toward a Comprehensive Insight into the Eclipse Attacks of Tor Hidden Services. IEEE Internet Things J. 2018, 6, 1584–1593.

- Muhammad, S.; Zhihong, T.; Ali, B.; Alireza, J. Data Mining and Machine Learning Methods for Sustainable Smart Cities Traffic Classification: A Survey. Sustain. Cities Soc. 2020, 60, 102177.

- Azuwa, M.P.; Ahmad, R.; Sahib, S.; Shamsuddin, S. Technical Security Metrics Model in Compliance with ISO/IEC 27001 Standard. Int. J. Cyber-Secur. Digit. Forensics 2015, 1, 280–288.

- ISO/IEC 27001:2013E; Information Technology—Security Techniques—Information Security Management Systems—Requirements. International Organization for Standardization: Geneva, Switzerland, 2013.

- Stojcic, M.; Zavadskas, K.; Pamucar, D.; Stevic, Z.; Mardani, A. Application of MCDM Methods in Sustainability Engineering: A Literature Review 2008–2018. Symmetry 2019, 11, 350. [Google Scholar] [CrossRef]

- Diaz-Balteiro, L.; Gonzalez-Pachon, J.; Romero, C. Measuring systems sustainability with multi-criteria methods: A critical review. Eur. J. Oper. Res. 2017, 258, 607–616. [Google Scholar] [CrossRef]

- Zavadskas, E.; Mardani, A.; Turskis, Z.; Jusoh, A.; Nor, K. Development of TOPSIS method to solve complicated decision-making problems: An overview on developments from 2000 to 2015. Int. J. Inf. Technol. Decis. Mak. 2016, 15, 645–682. [Google Scholar] [CrossRef]

- Keshavarz-Ghorabaee, M.; Zavadskas, E.; Amiri, M.; Esmaeili, A. Multi-criteria evaluation of green suppliers using an extended WASPAS method with interval type-2 fuzzy sets. J. Clean. Prod. 2016, 137, 213–229. [Google Scholar] [CrossRef]

- Davoudabadi, R.; Mousavi, S.M.; Mohagheghi, V. A new last aggregation method of multi-attributes group decision making based on concepts of TODIM, WASPAS and TOPSIS under interval-valued intuitionistic fuzzy uncertainty. Knowl. Inf. Syst. 2020, 62, 1371–1391. [Google Scholar] [CrossRef]

- Rani, P.; Mishra, A.R.; Pardasani, K.R. A novel WASPAS approach for multi-criteria physician selection problem with intuitionistic fuzzy type-2 sets. Soft Comput. 2020, 24, 2355–2367. [Google Scholar] [CrossRef]

- Jaquith, A. Security Metrics: Replacing Fear, Uncertainty, and Doubt; Pearson Education: London, UK, 2007. [Google Scholar]

- Yasasin, E.; Schryen, G. Requirements for it security metrics—An argumentation theory based approach. In Proceedings of the 23rd European Conference on Information Systems, ECIS 2015, Münster, Germany, 26–29 May 2015; pp. 1–16. [Google Scholar]

- Hallberg, J.; Eriksson, M.; Granlund, H.; Kowalski, S.; Lundholm, K.; Monfelt, Y.; Pilemalm, S.; Wätterstam, T.; Yngström, L. Controlled Information Security Results and Conclusions from the Research Project; FOI, Swedish Defence Research Agency: Kista, Sweden, 2011; pp. 1–42.

- Savola, R. Towards a Security Metrics Taxonomy for the Information and Communication Technology Industry. In Proceedings of the International Conference on Software Engineering Advances (ICSEA 2007), Cap Esterel, France, 25–31 August 2007; p. 60. [Google Scholar] [CrossRef]

- Julisch, K. A Unifying Theory of Security Metrics with Applications with Applications; Security; IBM: Armonk, NY, USA, 2009; Volume 19, Available online: http://domino.watson.ibm.com/library/cyberdig.nsf/papers/223F8EBC4CC2C3AC852576F800426C0E/$File/rz3758.pdf (accessed on 14 February 2023).

- Kaur, M.; Jones, A. Security Metrics—A Critical Analysis of Current Methods. In Proceedings of the Australian Information Warfare and Security Conference, Perth, Australia, 1 December 2008; pp. 41–47. [Google Scholar] [CrossRef]

- Ouchani, S.; Debbabi, M. Specification, verification, and quantification of security in model-based systems. Computing 2015, 97, 691–711. [Google Scholar] [CrossRef]

- Chew, E.; Swanson, M.; Stine, K.; Bartol, N.; Brown, A.; Robinson, W. Performance Measurement Guide for Information Security; NIST Special Publication 800-55 Revision 1. July. 2008; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2008.

- Savola, R. Quality of security metrics and measurements. Comput. Secur. 2013, 37, 78–90. [Google Scholar] [CrossRef]

- Peterson, E. The Big Book of Key Performance Indicators; Web Analytics Demystified: Camas, WA, USA, 2006. [Google Scholar]

- Neto, A.A.; Vieira, M. Benchmarking Untrustworthiness. Int. J. Dependable Trust. Inf. Syst. 2011, 1, 32–54. [Google Scholar] [CrossRef]

- Pendleton, M.; Garcia-Lebron, R.; Xu, S. A Survey on Security Metrics. ACM Comput. Surv. 2016, 49, 62. [Google Scholar] [CrossRef]

- Gerwin, T.; Kaveriappa, M.; Stack, S. Next-Gen Unified Security Metrics. Executive Summary. 2019. Available online: https://www.cisco.com/c/dam/en_us/about/doing_business/trust-center/docs/next-gen-unified-security-metrics-white-paper.pdf (accessed on 15 December 2022).

- Wang, P.; Zhu, Z.; Wang, Y. A novel hybrid MCDM model combining the SAW, TOPSIS and GRA methods based on experimental design. Inf. Sci. 2016, 345, 27–45. [Google Scholar] [CrossRef]

- Poškas, G.; Poškas, P.; Sirvydas, A.; Šimonis, A. Daugiakriterinės analizės metodo taikymas parenkant Ignalinos AE V1 pastato įrengimų išmontavimo būdą. ir jos taikymo rezultatai. Energetika 2012, 58, 86–96. [Google Scholar] [CrossRef]

- Kahraman, C.; Öztayşi, B.; Uçal Sari, I.; Turanoǧlu, E. Fuzzy analytic hierarchy process with interval type-2 fuzzy sets. Knowl. -Based Syst. 2014, 59, 48–57. [Google Scholar] [CrossRef]

- Solana-González, P.; Vanti, A.A.; Hackbart Souza Fontana, K. Multicriteria analysis of the compliance for the improvement of information security. J. Inf. Syst. Technol. Manag. 2019, 16, 1–19. [Google Scholar] [CrossRef]

- Siksnelyte-Butkiene, I.; Zavadskas, E.K.; Streimikiene, D. Multi-Criteria Decision-Making (MCDM) for the Assessment of Renewable Energy Technologies in a Household: A Review. Energies 2020, 13, 1164. [Google Scholar] [CrossRef]

- Fasulo, P. Top 20 Cybersecurity KPIs to Track in 2021. 2019. Available online: https://securityscorecard.com/blog/9-cybersecurity-metrics-kpis-to-track (accessed on 14 February 2023).

- Ahmed, Y.; Naqvi, S.; Josephs, M. Aggregation of security metrics for decision making: A reference architecture. In Proceedings of the ACM International Conference Proceeding Series, Madrid, Spain, 24–28 September 2018. [Google Scholar] [CrossRef]

- Stojić, G.; Stević, Ž.; Antuchevičiene, J.; Pamučar, D.; Vasiljević, M. A novel rough WASPAS approach for supplier selection in a company manufacturing PVC carpentry products. Information 2018, 9, 121. [Google Scholar] [CrossRef]

- Zavadskas, E.K.; Turskis, Z.; Antucheviciene, J.; Zakarevicius, A. Optimization of weighted aggregated sum product assessment. Elektron. Elektrotechnika 2012, 122, 3–6. [Google Scholar] [CrossRef]

- Bhol, S.G.; Mohanty, J.R.; Pattnaik, P.K. Cyber Security Metrics Evaluation Using Multi-criteria Decision-Making Approach. In Smart Intelligent Computing and Applications; Satapathy, S., Bhateja, V., Mohanty, J., Udgata, S., Eds.; Smart Innovation, Systems and Technologies; Springer: Singapore, 2020; Volume 160. [Google Scholar] [CrossRef]

- Singh, S.; Pattnaik, P.K. Recommender System for Mobile Phone Selection. Int. J. Comput. Sci. Mob. Appl. 2018, 6, 150–162. [Google Scholar]

- Goepel, K.D. Implementing the Analytic Hierarchy Process as a Standard Method for Multi-Criteria Decision Making in Corporate Enterprises—A New AHP Excel Template with Multiple Inputs. In Proceedings of the International Symposium on the Analytic Hierarchy Process, Kuala Lumpur, Malaysia, 23–26 June 2013; pp. 1–10. [Google Scholar]

- Badalpur, M.; Nurbakhsh, E. An application of WASPAS method in risk qualitative analysis: A case study of a road construction project in Iran. Int. J. Constr. Manag. 2019, 21, 910–918. [Google Scholar] [CrossRef]

- Chakraborty, S.; Zavadskas, E.K.; Antucheviciene, J. Applications of WASPAS method as a multi-criteria decision-making tool. Econ. Comput. Econ. Cybern. Stud. Res. 2015, 49, 5–22. [Google Scholar]

- Mokhtarian, M.N. A note on “extension of fuzzy TOPSIS method based on interval-valued fuzzy sets”. Appl. Soft Comput. J. 2015, 26, 513–514. [Google Scholar] [CrossRef]

- Rules for Auditing Information Systems. Order of the Minister of Information and Communications of the Republic of Kazakhstan Dated June 13, 2018 No. 263. Registered with the Ministry of Justice of the Republic of Kazakhstan on June 29, 2018 No. 17141. Available online: https://adilet.zan.kz/rus/docs/V1800017141 (accessed on 4 April 2023).

- Kubler, S.; Robert, J.; Derigent, W.; Voisin, A.; Traon, Y.L. A state-of the-art survey & testbed of fuzzy AHP (FAHP) applications. Expert Syst. Appl. 2016, 65, 398–422. [Google Scholar] [CrossRef]

- Bakioglu, G.; Atahan, A. AHP integrated TOPSIS and VIKOR methods with Pythagorean fuzzy sets to prioritize risks in self-driving vehicles. Appl. Soft Comput. 2021, 99, 106948. [Google Scholar] [CrossRef]

- Stoilova, S.; Munier, N.; Kendra, M.; Skrúcaný, T. Multi-criteria evaluation of railway network performance in countries of the TEN-T Orient–East Med Corridor. Sustainability 2020, 12, 1482. [Google Scholar] [CrossRef]

- Aldababseh, A.; Temimi, M.; Maghelal, P.; Branch, O.; Wulfmeyer, V. Multi-criteria evaluation of irrigated agriculture suitability to achieve food security in an arid environment. Sustainability 2018, 10, 803. [Google Scholar] [CrossRef]

- Taylan, O.; Alamoudi, R.; Kabli, M.; AlJifri, A.; Ramzi, F.; Herrera-Viedma, E. Assessment of energy systems using extended fuzzy AHP, fuzzy VIKOR, and TOPSIS approaches to manage non-cooperative opinions. Sustainability 2020, 12, 2745. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).