Studying Driver’s Perception Arousal and Takeover Performance in Autonomous Driving

Abstract

1. Introduction

2. Methodology

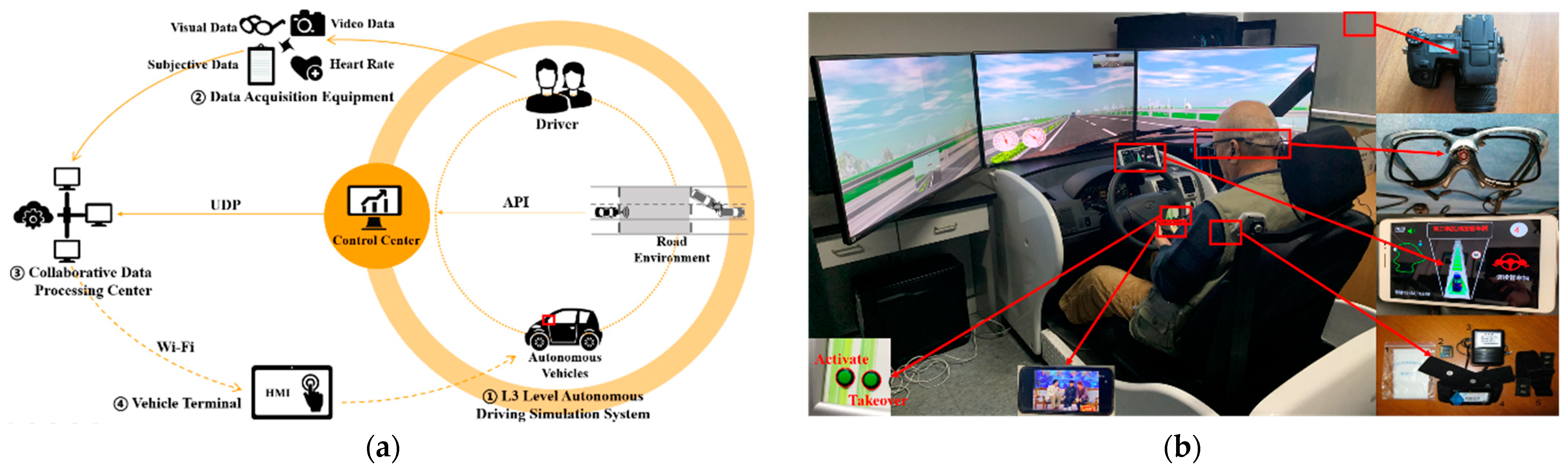

2.1. Development of Test Platform

2.2. Experimental Design

2.2.1. Take-Over Request time (TOR)

2.2.2. Non-Driving-Related Task (NDRT)

2.2.3. Takeover Scenario

2.2.4. Takeover Request Method

- Manual driving (Figure 3a): the automated driving system is unavailable because of constraints in the surrounding traffic environment or a failure of the automated driving system, so the driver controls the vehicle manually;

- Autonomous driving (Figure 3c): the automated driving system is activated and operates normally;

2.3. Participants

2.4. Experimental Procedure

- (1) The participant fills out the informed consent form and the basic information form;

- (2) Pre-experiment driver training: theoretical training, video training, and practical operation training are conducted (30 min to 40 min in total);

- (3) Experiment 1: Experiment 1 begins once the driver is wearing the test equipment and the experimenter has read out the instructions. The driver passes through scenarios S1 and S2. It takes about 18 min for the vehicle to go from the start to the end of Road 1, including the automated and the manual driving modes;

- (4) Experimental interval: after Experiment 1, there is a rest of about 10 to 15 min, and the experimenter sorts out the equipment and prepares for Experiment 2;

- (5) Experiment 2: The process in Experiment 2 is the same as in Experiment 1. The driver passes through scenarios S3 and S4, and the duration of Experiment 2 is also about 18 min;

- (6) Experiment ends: Drivers fill out the subjective questionnaire and receive remuneration.
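The durations stated in the steps above can be added up to estimate how long one participant's session lasts. The snippet below is a small sketch of that arithmetic; it covers only the steps that give a duration (training, both experiments, and the interval), so the time for forms and the questionnaire is not included. The names `STEPS` and `session_bounds` are illustrative and not from the paper.

```python
# Durations in minutes as (shortest, longest), taken from the procedure steps.
# Steps without a stated duration (forms, questionnaire) are left out.
STEPS = {
    "training": (30, 40),
    "experiment_1": (18, 18),
    "interval": (10, 15),
    "experiment_2": (18, 18),
}


def session_bounds(steps):
    """Return the (shortest, longest) total duration in minutes."""
    shortest = sum(low for low, _ in steps.values())
    longest = sum(high for _, high in steps.values())
    return shortest, longest


print(session_bounds(STEPS))  # (76, 91)
```

Under these figures, the timed part of a session runs for roughly 76 to 91 min.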

2.5. Data Preprocessing and Indicator Selection

- (1) Gaze duration (unit: s)

- (2) Pupil area (unit: mm²)

- (3) Takeover response time (TRT; unit: s)
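As an illustration of how these three indicators could be derived from raw recordings, the sketch below averages gaze duration and pupil area over the 10 s windows before and after the takeover request (the window length used in the before/after comparison) and computes the TRT. The data layout (lists of `(timestamp, value)` pairs), the function names, and the definition of TRT as the time from the TOR trigger to the driver's first takeover action are assumptions made for this example, not the authors' preprocessing pipeline.

```python
from statistics import mean

WINDOW_S = 10.0  # window length used in the before/after TOR comparison


def window_mean(samples, start, end):
    """Mean of the values whose timestamp t satisfies start <= t < end.

    `samples` is a list of (timestamp_s, value) pairs (assumed layout).
    Returns None when no sample falls inside the window.
    """
    values = [value for t, value in samples if start <= t < end]
    return mean(values) if values else None


def perception_indicators(fixations, pupil, tor_time):
    """Mean gaze duration (s) and pupil area (mm^2) before and after the TOR."""
    before = (tor_time - WINDOW_S, tor_time)
    after = (tor_time, tor_time + WINDOW_S)
    return {
        "gaze_before": window_mean(fixations, *before),
        "gaze_after": window_mean(fixations, *after),
        "pupil_before": window_mean(pupil, *before),
        "pupil_after": window_mean(pupil, *after),
    }


def takeover_response_time(tor_time, first_action_time):
    """TRT in seconds, assumed here to run from TOR trigger to first driver action."""
    return first_action_time - tor_time


# Made-up sample data for one takeover event (TOR triggered at t = 100 s).
fixations = [(95.0, 0.30), (98.0, 0.26), (101.0, 0.24), (105.0, 0.26)]
pupil = [(96.0, 11.0), (99.0, 12.0), (102.0, 14.0), (108.0, 14.0)]
result = perception_indicators(fixations, pupil, tor_time=100.0)
trt = takeover_response_time(100.0, 101.4)
print(result, round(trt, 3))
```

Half-open windows are used so that a sample recorded exactly at the TOR trigger is counted once, in the "after" window.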

2.6. Analytical Method

3. Results

3.1. Characteristic Analysis of Driver’s Perception Level

3.1.1. Restoration of Driver’s Perception

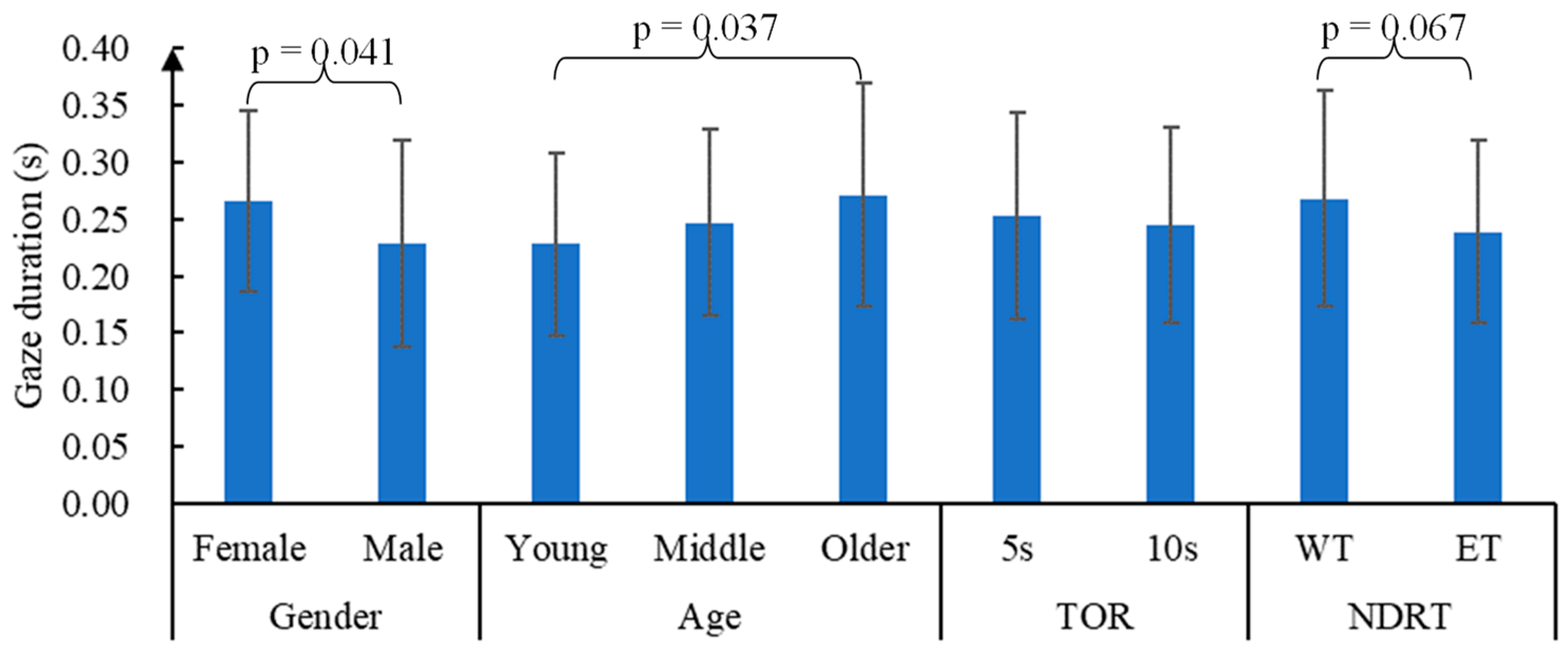

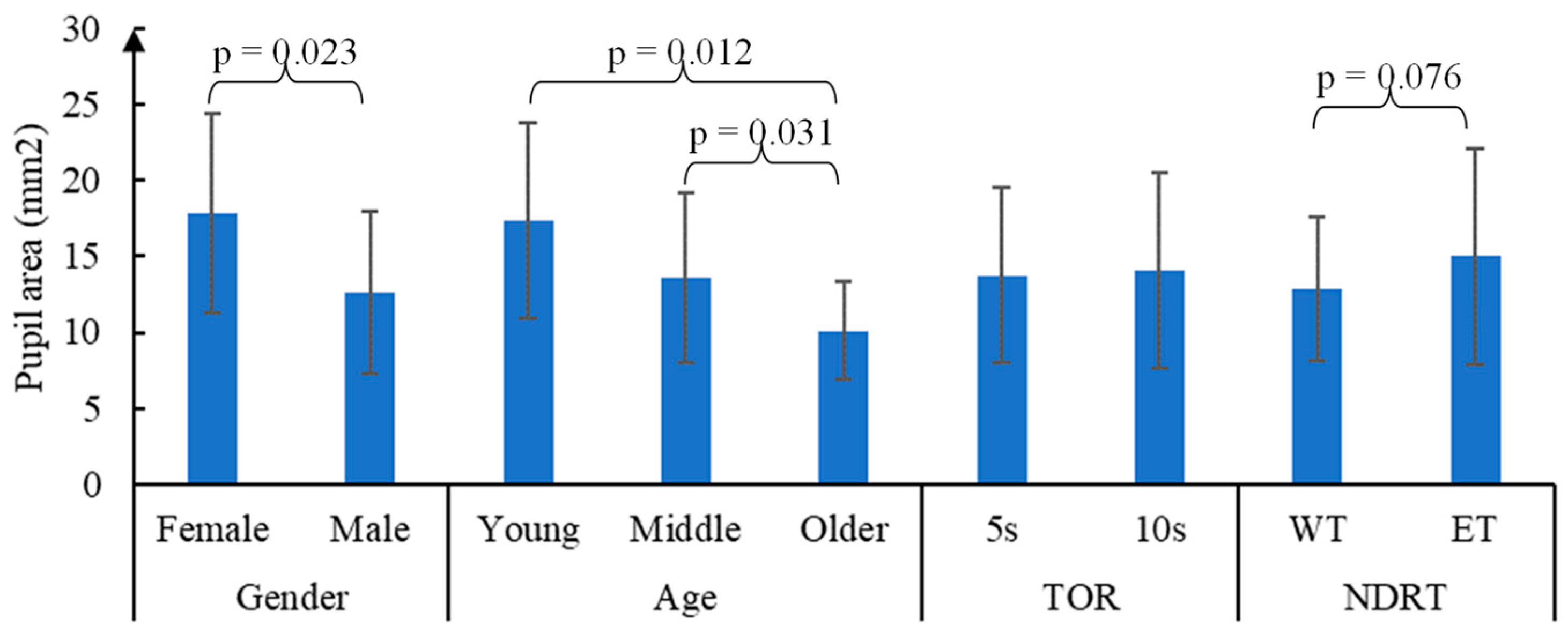

3.1.2. Influencing Factors of Drivers’ Perception Restoration

3.2. Analysis of the Driver’s Perception Level on Takeover Performance

- Moving from the low to the high gaze-duration level prolonged the takeover response time by 0.243 s (p = 0.017), whereas the takeover response time decreased as pupil area increased (β = −0.157, p = 0.032). This indicates that a higher driver perception level helps to enhance the driver’s reaction capacity;

- Taking female drivers as the baseline, the takeover response time of male drivers was shorter (β = −0.032, p = 0.087). Age also had a statistical effect on takeover response time: relative to young drivers, the takeover response time increased for middle-aged drivers (β = 0.043, p = 0.073) and for older drivers (β = 0.179, p = 0.024);

- The non-driving-related task had a marginally significant effect on takeover response time (p = 0.085), with the work task yielding a longer takeover response time than the entertainment task;

- The driver’s gaze duration interacted with gender, age, and the non-driving-related task in its effect on takeover response time, and an increase in gaze duration yielded an increase in takeover response time;

- The pupil area interacted with gender, age, and the non-driving-related task in its effect on takeover response time, and an increase in pupil area decreased the driver’s takeover response time.

4. Discussion

5. Conclusions

- (1) The driver’s perception level was quantified by gaze duration and pupil area. After the takeover request was triggered, the drivers’ perception level was significantly restored. The perception level of male drivers was higher than that of female drivers, and it was higher during the entertainment task than during the work task. The drivers’ perception level decreased with increasing age.

- (2) The driver’s takeover performance was quantified by the takeover response time. In the GLMM, drivers’ perception had a positive effect on takeover performance. The perception level of drivers interacted with gender, age, and non-driving-related tasks in its effect on takeover performance. The shorter the gaze duration and the larger the pupil area, the shorter the takeover response time.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Attribute | Level | Number | Mean | Standard Deviation |
|---|---|---|---|---|
| Gender | Male | 32 | - | - |
| | Female | 10 | - | - |
| Age | Young (18–35) | 15 | 23.2 | 2.0 |
| | Middle-aged (36–60) | 14 | 46.5 | 6.6 |
| | Older (>60) | 13 | 63.7 | 2.9 |

| Indicator | Mean (10 s before TOR is triggered) | Standard Deviation (10 s before TOR is triggered) | Mean (10 s after TOR is triggered) | Standard Deviation (10 s after TOR is triggered) | p-Value |
|---|---|---|---|---|---|
| Gaze duration (s) | 0.281 | 0.095 | 0.255 | 0.115 | 0.037 ** |
| Pupil area (mm²) | 11.743 | 5.114 | 13.957 | 6.097 | 0.051 * |
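
To make the size of the before/after shift in the table above easier to read, the mean values can be turned into relative changes. The snippet below is a small worked calculation using only the tabulated means; the function name `relative_change` and the dictionary keys are illustrative.

```python
def relative_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


# Mean values 10 s before and 10 s after the TOR is triggered (from the table).
MEANS = {
    "gaze_duration_s": (0.281, 0.255),
    "pupil_area_mm2": (11.743, 13.957),
}

for name, (before, after) in MEANS.items():
    print(f"{name}: {relative_change(before, after):+.2f}%")
# gaze_duration_s: -9.25%
# pupil_area_mm2: +18.85%
```

That is, mean gaze duration drops by about 9% and mean pupil area rises by about 19% after the takeover request.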

| Indicator | Group | Range | Numbers | Ratio | p-Value |
|---|---|---|---|---|---|
| Gaze duration (s) | ➀ Low | [0.022, 0.275] | 98 | 0.61 | <0.001 ** |
| | ➁ High | (0.275, 0.530] | 62 | 0.39 | |
| Pupil area (mm²) | ➀ Low | [4.627, 15.618] | 89 | 0.56 | <0.001 ** |
| | ➁ High | (15.618, 31.743] | 71 | 0.44 | |
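
The grouping in the table above amounts to a threshold rule: values up to and including the boundary fall in the low group, and larger values fall in the high group. The sketch below applies the tabulated boundaries and reproduces the ratio column from the counts. How the boundaries themselves were obtained is not stated in the table, so the code simply takes them as given; the names `level` and `ratio` are illustrative.

```python
# Group boundaries read from the table (low range is closed on the right).
GAZE_THRESHOLD_S = 0.275
PUPIL_THRESHOLD_MM2 = 15.618


def level(value, threshold):
    """Return "Low" when value <= threshold, otherwise "High"."""
    return "Low" if value <= threshold else "High"


def ratio(count, total):
    """Share of observations in a group, rounded to two decimals."""
    return round(count / total, 2)


total = 98 + 62  # 160 observations per indicator
print(level(0.255, GAZE_THRESHOLD_S))      # Low
print(level(16.2, PUPIL_THRESHOLD_MM2))    # High
print(ratio(98, total), ratio(62, total))  # 0.61 0.39
print(ratio(89, total), ratio(71, total))  # 0.56 0.44
```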

| Indicator | Level | Baseline | β (takeover response time, unit: s) | p |
|---|---|---|---|---|
| Intercept | | | 1.226 | <0.001 ** |
| Gaze duration | High | Low | 0.243 | 0.017 ** |
| Pupil area | High | Low | −0.157 | 0.032 ** |
| Gender | Male | Female | −0.032 | 0.087 * |
| Age | Middle | Young | 0.043 | 0.073 * |
| | Older | Young | 0.179 | 0.024 ** |
| TOR | 10 s | 5 s | 0.025 | 0.115 |
| NDRT | WT | ET | 0.037 | 0.085 * |
| Gaze duration × Gender | High × Female | Low × Female | 0.132 | 0.031 ** |
| | High × Male | Low × Male | 0.293 | 0.012 ** |
| Gaze duration × Age | High × Young | Low × Young | 0.213 | 0.026 ** |
| | High × Middle | Low × Middle | 0.144 | 0.046 ** |
| | High × Older | Low × Older | 0.078 | 0.061 * |
| Gaze duration × TOR | High × 5 s | Low × 5 s | 0.017 | 0.332 |
| | High × 10 s | Low × 10 s | 0.015 | 0.217 |
| Gaze duration × NDRT | High × WT | Low × WT | 0.113 | 0.041 ** |
| | High × ET | Low × ET | 0.021 | 0.196 |
| Pupil area × Gender | High × Female | Low × Female | −0.027 | 0.109 |
| | High × Male | Low × Male | −0.157 | 0.037 ** |
| Pupil area × Age | High × Young | Low × Young | −0.189 | 0.031 ** |
| | High × Middle | Low × Middle | −0.013 | 0.334 |
| | High × Older | Low × Older | −0.014 | 0.317 |
| Pupil area × TOR | High × 5 s | Low × 5 s | −0.009 | 0.513 |
| | High × 10 s | Low × 10 s | 0.012 | 0.411 |
| Pupil area × NDRT | High × WT | Low × WT | −0.139 | 0.039 ** |
| | High × ET | Low × ET | 0.017 | 0.341 |
| Omnibus test | χ² = 18.881, p = 0.016 ** | | | |
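
The main-effect coefficients in the table above can be read as shifts in takeover response time relative to the baseline levels (low gaze duration, low pupil area, female, young, 5 s TOR, ET). The sketch below adds them up for a given driver profile. It rests on several assumptions that the table does not confirm: an identity link (so that β is directly in seconds), purely additive main effects with the interaction terms left out, and random effects set to zero. The names `MAIN_EFFECTS` and `predicted_trt` are hypothetical, and the result is a reading aid rather than the authors' fitted model.

```python
INTERCEPT = 1.226  # seconds, all factors at their baseline level

# Main-effect coefficients from the table, keyed by (factor, non-baseline level).
MAIN_EFFECTS = {
    ("gaze", "High"): 0.243,
    ("pupil", "High"): -0.157,
    ("gender", "Male"): -0.032,
    ("age", "Middle"): 0.043,
    ("age", "Older"): 0.179,
    ("tor", "10 s"): 0.025,
    ("ndrt", "WT"): 0.037,
}


def predicted_trt(**levels):
    """Intercept plus the main effects of every non-baseline level given.

    Assumes an identity link and ignores interactions and random effects.
    Baseline or unknown levels contribute 0.
    """
    total = INTERCEPT
    for factor, chosen in levels.items():
        total += MAIN_EFFECTS.get((factor, chosen), 0.0)
    return round(total, 3)


print(predicted_trt())                # 1.226 (all baselines)
print(predicted_trt(gaze="High"))     # 1.469
print(predicted_trt(pupil="High"))    # 1.069
print(predicted_trt(gaze="High", gender="Male", age="Older",
                    tor="10 s", ndrt="WT"))  # 1.678
```

Under these assumptions, the high gaze-duration level adds about 0.24 s to the baseline response time, while the high pupil-area level removes about 0.16 s.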

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Q.; Chen, H.; Gong, J.; Zhao, X.; Li, Z. Studying Driver’s Perception Arousal and Takeover Performance in Autonomous Driving. Sustainability 2023, 15, 445. https://doi.org/10.3390/su15010445
