1. Introduction

Open government fulfills society’s demand for responsible and responsive government and emphasizes the role of transparency as a determinant of government performance. Thanks to the global initiative and rapid development of the e-government idea, the concept of e-government has been raised to a higher, more sophisticated level of open government [1,2]. This new e-government model involves data openness, transparency, and participation as the main openness features, and is driven by modern technologies and concepts that enable greater online collaboration [3]. The concept of open data has had the most impact on shaping open government and directing its growth towards data rather than services [4]. Data openness represents an essential precondition for building transparency, and it is promoted around the world as part of open government initiatives. Data openness is not solely focused on making information obtainable, but also on ensuring that data are well-known, easily accessible, and open to all. Publishing data is the first step, while the second step is the provision of data in a way that creates opportunities for users to go beyond being passive recipients [5].

Transparency cannot be separated from open data, as the principle of open data is the main prerequisite for building transparency. A transparent government is one that allows free access to open data and guarantees the authenticity of publishing agencies, data integrity, accuracy, and quality, providing users with understandable datasets in reusable formats. Harrison et al. [6] state that transparency cannot be achieved by mere downloading of data sets. The data must be useful and allow users to create more value. Jaeger and Bertot [7] emphasize that transparency involves not only short-term considerations, regarding information availability to all, but also long-term considerations, regarding information usability by all. They further elaborate that such demands require establishing tasks related to achieving information usability and accessibility, promoting government and information and technology literacy, making appropriate and accurate content and services available, meeting user expectations, promoting trust, and encouraging lifelong usage. The accomplishment of such defined tasks requires the definition of comprehensive descriptive information [8], as a form of transparent government information, and its delivery to the public through online services.

Evaluation of open government as a concept consisting of evaluation of data openness, transparency, participation, and collaboration has not been addressed in the academic literature in the manner we propose with the OpenGovB [2]. Furthermore, we could not find a benchmark that evaluates transparency in the open government context in a way that allows automatic calculation of the given parameters based solely on the metadata of published datasets. Automating the evaluation would make open data analysis much faster and would make the results more objective than those of analyses in which data are gathered and analyzed manually. Another motive behind this evaluation is to compare the proposed evaluation framework with existing standards or, where standards are lacking, with globally accepted definitions of the values being measured. The proposed framework for transparency evaluation in open government addresses transparency through two indicators: government transparency and data transparency. By combining these indicators, we obtain a single transparency indicator for open government, which is explained more thoroughly in the following sections of the paper. We explain the theoretical background of the evaluation model and provide results from the analysis of transparency on 22 open data portals.

2. Background

Transparency is a noun that denotes the condition of being transparent. The adjective, however, may be interpreted differently depending on the object it describes. This preliminary remark is necessary since in this paper we look at both data transparency and government transparency and adopt a particular approach for each. Keeping this in mind, in the following paragraphs we provide a review of the literature on these two components of transparency.

In the literature, data transparency is often not separated from the concept of data openness and is defined accordingly [9,10]. Tauberer [10] points out three principles of openness: accessibility, authenticity, and accuracy. The accessibility principle complies with openness demands which promote online availability of data and free access without discrimination or the need to agree to a license. Authenticity relates to users’ trust in published data, as well as data relevance. This principle refers to the authenticity of data sources in terms of their reliability and reputation. Accuracy refers to data precision and represents one aspect of data quality. Seen through these principles, transparency could be defined as a measure of openness, data sources’ authenticity, and data accuracy and integrity. However, there is more to transparency. Veljković et al. see data transparency not only as authenticity of data and data sources, but also as data understandability and reusability [11]. Understandability denotes clarity of data (the principle that each user should comprehend the information contained), and reusability refers to the possibility of using the same data in a different manner.

The United Nations Global E-Government Survey on the quality of government services and products for the UN Member States addresses e-government readiness and e-participation [12]. Its e-participation index addresses the quality and usefulness of information and services aimed at engaging citizens in public policy through information and communication technologies. It is related to data transparency since it focuses on data quality and usability, as well as the aspect of data openness towards citizens. The Accenture E-Government Leadership Survey shows government performance by measuring service maturity and delivery maturity, which reflect different delivery aspects of government [13], but it could not be related to the data transparency feature. The e-government benchmark conducted by [14], popularly named eGovBE, was initially focused on reviewing twenty basic public services and e-government progress in terms of core indicators: sophistication, availability of services, user orientation, and national portal [15,16]. In 2009, the model’s measuring criteria and core indicators were enhanced by replacing national portal and user-centricity with eProcurement and user experience indicators, making the benchmark more oriented towards outcomes and impact. In the light of these new features, data transparency could be seen in user-centricity as an attempt to make citizens more involved with governmental procedures.

These benchmarks were applied to earlier e-government models, which is why data transparency is not their concrete area of focus. Considering that an official open government benchmark still does not exist, we can conclude that there is no unique evaluation model of data transparency as part of open government in general. This claim is confirmed by the systematic research of Matheus and Janssen, who suggest a comprehensive model of determinants that will foster transparency enabled by open government data [17]. These data are exploited through open data portals, which, according to Lnenicka and Nikiforova [18], play the role of an interface that creates transparency. The release of government data on open data portals contributes to transparency in the processes where corruption, wastage, and inefficiency occur the most. However, there are some initiatives, amongst which Osimo’s is the most referenced one. Osimo proposes a set of basic public data to measure data reusability and transparency [19]. For the evaluation of basic public data, he recommends a five-level scale, the levels of which gradually change from no data to full data availability and reusability. A similar proposal for assessing the availability of linked open data comes from Sir Tim Berners-Lee, who has defined a 5-star model for assessing data reusability based on formats of published data [20]. As data availability is one of the key features of data transparency, this model could also be considered for building a framework for transparency evaluation in open government.

Based on addressed benchmarks and initiatives, we have identified that data transparency is also affected by data quality in the form of data accuracy, data accessibility and availability, data reusability, and data openness. These features are used to gain insight into the e-government openness, but have not been analyzed as part of one transparency evaluation framework. Our intention is to use all these features jointly in our open data transparency evaluation, since their importance is clearly significant, considering their presence in evaluation models applied in earlier stages of e-government. Keeping that in mind, we will utilize OpenGovB transparency indicator and extend it to include identified quality components.

Government transparency evaluation is a process of measuring the extent of transparency against a predefined set of indicators. As transparency is also an e-government feature, existing e-government benchmarks need to be considered to obtain a comprehensive view on assessment methods that will unveil the role of transparency in open government. There are a variety of studies on the impact of transparency in the government domain [21,22,23,24], but none of them consider quantitative indicators that will relate transparency to the potential usage of government information by stakeholders.

When it comes to creating indicators of government transparency, we should start from its definition. Government transparency is mostly defined as a measure of citizens’ insight into business, processes, and operations of the government [25]. It is about disclosure, providing citizens with a window to find and view information, process flows, issues, events, projects, policies, and other matters of their interest. Meijer et al. [26] distinguish three types of government transparency: active, passive, and forced release of information. Having this in mind, transparency is a complex measure that must encompass all aspects of government interaction with the public. However, transparency is often simplified to a single domain: the provision of information of public importance. For this simplified view on government transparency, there are some straightforward and generally accepted indicators.

If we look at government transparency through enabling data openness, then we can talk about two groups of indicators: the first relies on generally accepted rules of openness, while the second is bound to legal regulations [27,28]. Generally accepted rules of data openness depend on the country itself, its history and traditions, the political preferences of the nation, culture, etc. The methodologies that are based on these rules depend on the views of researchers and can be freer in the choice of indicators. On the other hand, legally binding regulations are defined by the law in the field and, therefore, methodologies that accompany these regulations have predictable indicators. One of the most easily measurable and comparable elements of government transparency is the legislation on freedom of information (FOI) [24,29,30]. Methodologies for assessing FOI are mainly based on expert assessment of whether there is an appropriate legal framework that enables access to information of public importance. This kind of transparency is often called passive transparency since information is disseminated upon user request rather than actively released by authorities. Governments also often publish information of public importance on their own initiative, which is proactive disclosure of information, and these published data are considered by some authors as a proxy for measuring active transparency [31]. Nevertheless, Gonzálvez-Gallego and Nieto-Torrejón [32] reported that the availability of government data to the relevant stakeholders, of which the most important are the citizens, leads to them having more trust in public institutions, as well as to the creation of trustworthy governments [33]. In particular, this is achieved by promoting the usefulness of open government data among them, thus making governments more accountable and trustworthy.

The Centre for Law and Democracy (CLD), a non-profit corporation from Canada, created a methodology for comparative assessment of a legal framework for the right to information (RTI). They define 61 indicators divided into seven groups: the right of access, scope, requesting procedures, exceptions and refusals, appeals, sanctions and protections, and promotional measures. Each indicator is scored between 0–2 points, and the total number of points that can be achieved is 150. This methodology was launched in 2013, and since it was oriented towards the legal framework only and not towards the implementation of the framework, some of the most developed countries in Europe had a very low ranking. For example, Sweden has an FOI law originating from 1766 and is ranked 40th (92 out of 150 points), even though it has a long history of implementation. On the other hand, Serbia, with its law on FOI from 2003 and very weak implementation of this law, is ranked first on the list (135 out of 150 points). If we set aside these negative effects of the methodology, we can say that a strong law is of great importance to government transparency since it supports government openness and facilitates access to information of public importance.

Other methodologies for measuring government transparency are aimed at certain transparency aspects, such as budget transparency. The International Budget Partnership (IBP) organization ranks countries based on a questionnaire with 125 questions related to the transparency of government budget [34].

We can also observe the absence of corruption as one of the government transparency aspects. The Corruption Perceptions Index (CPI) and Control of Corruption (CC) are the two most famous indexes of corruption [35]. CPI takes values from the range (0, 100), where 0 indicates high corruption and 100 denotes the absence of corruption in the government administration. The CC indicator takes values from the range (−2.5, +2.5), where the upper and lower range limits correspond to low and high corruption, respectively.

As can be noted, government transparency is a multifaceted concept that is often measured by looking at only some of the facets, such as corruption or FOI law. Moreover, as there is a lot of effort coming from non-governmental organizations and academic institutions to measure different aspects of government transparency, they will be included in our transparency calculation. According to the above, we can conclude that RTI and CPI indicators represent a valuable source for measuring government transparency. These two will be used for the utilization and extension of the OpenGovB transparency indicator, which will be further evaluated as a constituent of transparency in open government.

3. OpenGovB Transparency Indicator

OpenGovB is a model for assessing the extent of a government’s openness in accordance with well-defined and globally embraced openness principles [2]. The benchmark addresses openness of government through four main principles: open data, transparency, participation, and collaboration, and is unique in this approach. In essence, it serves to determine the extent of fulfillment of the main goals of open government. The focus of this paper is on the transparency evaluation using the transparency indicators given in OpenGovB and the usage demonstration on open data portals.

Online data are organized into various data categories; however, OpenGovB examines only nine categories: Finance and Economy, Environment, Health, Energy, Education, Transportation, Infrastructure, Employment, and Population [4], to establish a standard evaluation measurement model. This source is used for assessing BDS, DOI, and T indicators. Additionally, BDS indicates the presence of a predefined set of high-value open data categories, which is necessary for the automatic evaluation of data transparency. User involvement is considered to be a valuable indicator of government transparency, and it is included in the benchmark model in order to express the willingness and readiness of the government to accept and utilize users’ perspectives and points of view. It only influences the evaluation of user participation indicators in the proposed model. In OpenGovB, transparency is viewed as the average of both types of transparency, data and government, which are equally important for the constitution of a transparent government.

3.1. Transparency Evaluation Model

Based on research on transparency and its meaning in the context of open government, we define transparency in open government through two separate indicators: data transparency and government transparency. Data transparency is measured through authenticity, understandability, and reusability of data available on the government’s open data portal. These three measures tell us whether the government publishes necessary information about data sources, whether their formats are reusable, and if they are properly described, which are prerequisites for automatic processing. Furthermore, they are also used for the calculation of e-government openness. Government transparency utilizes existing transparency indicators, primarily RTI and CPI. The final value of transparency is the average value of the two indicators as given in Equation (1).
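Equation (1) is not reproduced in this text. From the description above (the final value is the average of the two indicators), it can be reconstructed as follows, where T, DT, and GT are assumed to denote overall, data, and government transparency, each in the range (0, 1):

```latex
T = \frac{DT + GT}{2}
```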

3.2. Data Transparency (DT) Indicator

Keeping in mind the fact that an open data portal publishes large amounts of datasets organized in different data categories, using the complete data collection may have large time and cost impacts. To calculate data transparency, it is necessary to choose a relevant subset of datasets for each category as a sample for the calculation process. Data transparency evaluation is thus a two-phase process where the first phase relates to choosing a relevant subset of datasets, while the other implies formulas’ application to key dataset features.

Generating results from sample data, rather than from the complete population, is a statistical challenge that we are confronted with. When we have a reason to believe that the sampled and the unsampled data have essentially similar or equivalent characteristics, we can make conclusions about the entire population based on an incomplete subset of the population. To ensure that the sample is representative not only of itself but also of the unsampled group, it is necessary to consider the level of confidence, the margin of error, and the expected accuracy [36]. The confidence level usually takes a value of 95% or 99%, which corresponds to standardized values of ±1.96 and ±2.58, respectively. The confidence interval is the interval within which we expect the mean of the population to be, while the confidence level denotes the probability that an unknown population mean value is within the interval. We have chosen a 95% confidence level, for which Z takes the value of 1.96 in the calculation, according to the table of standard normal curve areas. The margin of error is the allowed deviation of the sample results from the population results. In our calculations, the margin of error is set to 10%. Accuracy denotes the percentage of sampled data that would truly satisfy the required characteristics. As there is no trustworthy way to predict this percentage reliably, we have used the 50% value. For choosing the sample size, we use the following equations [37]:

Equation (2) determines the sample size using the confidence level (Z), the margin of error (e), and expected accuracy (p), while Equation (3) represents a correction of calculated sample size according to the true size of data category (N).
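Equations (2) and (3) are not reproduced in this text. The sketch below assumes they take the standard textbook form that matches the description: an initial sample size n0 = Z²·p·(1 − p)/e², followed by the finite population correction n = n0/(1 + (n0 − 1)/N). The function name, the rounding up, and the cap at N are illustrative choices, not taken from the paper.

```python
import math


def sample_size(population: int, z: float = 1.96, margin: float = 0.10, p: float = 0.5) -> int:
    """Number of datasets to sample from a category holding `population` datasets.

    Defaults follow the values stated in the text: Z = 1.96 (95% confidence),
    a 10% margin of error, and 50% expected accuracy.
    """
    if population <= 0:
        return 0
    # Assumed form of Equation (2): sample size for a very large population
    n0 = (z ** 2) * p * (1 - p) / (margin ** 2)
    # Assumed form of Equation (3): correction for the true category size N
    n = n0 / (1 + (n0 - 1) / population)
    # Round up, and never ask for more datasets than the category contains
    return min(population, math.ceil(n))


if __name__ == "__main__":
    for n_datasets in (10, 500, 100_000):
        print(n_datasets, sample_size(n_datasets))
```

With these defaults the uncorrected size is about 96 datasets, so the correction only matters for categories that are not much larger than that.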

After choosing a sample size for each data category, the data transparency indicator is calculated as an average of authenticity (A), understandability (U), and reusability (R) for datasets contained in the sample (see Equation (4)). We equally weighted all three components as our conducted research on transparency definition and its meaning showed that they are all equally important for building transparency.

Authenticity is a measure of trust in data publishers and the accuracy of data itself, and it could be seen as a complex feature comprised of two parameters: Data Sources (DS) and Data Accuracy and Integrity (DAI). Considering that DAI represents a summary value based on data characteristics from a sample subset and that, by nature, it is a measure of trust in the precision and credibility of published data, while DS is a global view on data providers by which we can obtain insight into the reliability of data providers, we have decided that DS should constitute 40%, and DAI 60%, of the Authenticity indicator (Equation (5)).
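Equations (4) and (5) are not reproduced in this text. Based on the weights stated above (equal weighting of A, U, and R; 40% for DS and 60% for DAI), they can be reconstructed as:

```latex
DT = \frac{A + U + R}{3}, \qquad A = 0.4 \cdot DS + 0.6 \cdot DAI
```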

Data sources are governmental and non-governmental authorities, institutions, and agencies that provide raw data for publishing on an open data portal. For users to utilize data safely and without prejudice and trust in published data integrity, it is necessary for publishers to be well-known and have a good reputation. Most of the responsibility for this requirement is on governmental authorities and we define it through the maintenance of a list of data sources available on the data portal (F1), the possibility of reviewing datasets published by a particular data source (F2), and the existence of data source description (F3). Users are another important source of feedback through grading data sources (F4) based on their experience with data published by the source. Information provided by the government (F1, F2, and F3) makes up 80% of the DS value, while user-provided feedback is involved with 20%. Features marked as F1 and F2 are considered more influential on building DS parameters, as they represent high-frequency user requests, while features F3 and F4 are considered to have less influence. F1, F2, and F3 are scored 1 if satisfied and 0 if unsatisfied, while F4 presents an average grade scaled to a range (0, 1). The formula for DS calculation is given in Equation (6).

DS takes its values from the range (0, 1) where 0 signifies that none of the features are present on open data portals, while 1 marks maximum data sources’ credibility.
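Equation (6) is not reproduced in this text, so the exact weights are unknown. The sketch below uses one set of weights that satisfies the stated constraints (F1 to F3 sum to 80%, F4 is 20%, and F1 and F2 weigh more than F3). The values 0.3, 0.3, 0.2, and 0.2 are an assumption for illustration, not the paper's published weights.

```python
# Assumed weights: they satisfy the constraints described in the text,
# but the paper's own Equation (6) may use different values.
DS_WEIGHTS = {"F1": 0.3, "F2": 0.3, "F3": 0.2, "F4": 0.2}


def data_sources_score(f1: float, f2: float, f3: float, f4: float) -> float:
    """Weighted Data Sources (DS) score in the range (0, 1).

    f1, f2, f3 are 1 if the feature is satisfied and 0 otherwise (f3 may also
    be an average over publishers); f4 is the average user grade scaled to (0, 1).
    """
    features = {"F1": f1, "F2": f2, "F3": f3, "F4": f4}
    for name, value in features.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in the range (0, 1), got {value}")
    return sum(DS_WEIGHTS[name] * value for name, value in features.items())


if __name__ == "__main__":
    # A portal with a publisher list and descriptions, but no per-publisher
    # dataset review and no user grading.
    print(data_sources_score(1, 0, 1, 0))
```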

Data Accuracy and Integrity is a parameter that represents a measure of trust in the precision and credibility of published data. To provide a meaningful and reliable evaluation method for calculating such delicate characteristics, three assessment features need to be addressed: government grading (E1), user grading (E2), and quality certification (E3). Government grading relates to government-provided feedback on data accuracy and integrity for each published dataset. The establishment of the evaluation procedure is left to the government itself, while the final feature value is calculated as the average of all grades in a sample data subset and scaled to the range (0, 1). User grading represents numerical user feedback on published data, usually obtained via online scoring of data. Government and user grading are very important since they represent an opinion, an experience, and a point of view, which is why they are equally scored and together represent 70% of the DAI parameter. The third feature is quality certification, which simply acknowledges the existence of a certifying document for a dataset; this document is provided by the data publisher and serves as electronic proof of the accuracy and integrity of the contained information. The final value of the DAI feature is calculated according to Equation (7).

DAI takes its values from the range (0, 1) where 0 denotes complete data inaccuracy, while 1 signifies maximal satisfaction of all three features.
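Equation (7) is not reproduced in this text, but the stated weights determine it: E1 and E2 are equally scored and together make up 70%, which leaves 30% for E3. A minimal sketch under that reading, combined with the 40/60 split for Authenticity given earlier (function names are illustrative):

```python
def dai_score(e1: float, e2: float, e3: float) -> float:
    """Data Accuracy and Integrity (DAI) in the range (0, 1).

    e1: average government grade scaled to (0, 1)
    e2: average user grade scaled to (0, 1)
    e3: quality certification (1 if present, 0 otherwise, or an average)
    """
    for name, value in (("E1", e1), ("E2", e2), ("E3", e3)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in the range (0, 1), got {value}")
    # E1 and E2 share 70% equally; the remaining 30% goes to E3
    return 0.35 * e1 + 0.35 * e2 + 0.30 * e3


def authenticity(ds: float, dai: float) -> float:
    """Authenticity (A): 40% Data Sources and 60% DAI, as stated in the text."""
    return 0.4 * ds + 0.6 * dai


if __name__ == "__main__":
    dai = dai_score(0.0, 0.0, 1.0)  # only certification is available
    print(dai, authenticity(0.5, dai))
```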

The understandability feature reflects the existence of comprehendible descriptions for each dataset category, as well as contained raw data. This could be accomplished by publishing textual descriptions of data categories with detailed explanations of contained data types, as well as descriptions of each contained dataset. Understandability is a complex measure comprised of two parameters: Data Categories Description (DCD) and Data Sets Description (DSD), where the first one represents descriptions of data categories, while the second one denotes the existence of a description for each dataset. Understandability is calculated based on DCD and DSD parameters as given in Equation (8). DCD parameter constitutes 40% of the final Understandability value, while stronger influence is given to the DSD feature due to its direct relation to data. The Understandability indicator ranges from 0 to 1, where 1 implies a complete availability of both data categories and datasets descriptions, and 0 implies the lack of descriptions.

DCD parameter is evaluated against three features: textual description (D1) as existence of textual data description for data category, tags (D2) as the existence of searchable tags associated with data category, and linked information (D3) in a form of links towards additional information regarding data category. All three are considered equally important and are thus equally scored. Each category is separately scored against all three features as (0.33*D1+0.33*D2+0.33*D3) with a final value from range (0, 1). DCD is then calculated as an average value of all categories’ values. Value 0 of the DCD parameter means that not even one data category satisfies any of the three features, while value 1 signifies that all dataset categories have adequate descriptions, tags, and linked information. DSD is calculated against the same three features as DCD, but for each dataset from a chosen sample subset. Each dataset is valued from range (0, 1), following the same equation applied to data categories in the DCD parameter. The final DSD value represents an average value of all datasets. DSD values range from 0 to 1 where 0 implies a total lack of data descriptions, tags, and linked information, while 1 signifies that all datasets are properly described.
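The DCD, DSD, and Understandability calculations described above can be sketched as follows. Each category or dataset is represented by three flags (D1, D2, D3). The sketch uses weights of exactly one third instead of the rounded 0.33 so that a fully described item scores 1; that choice, and all names, are assumptions for illustration.

```python
from statistics import mean
from typing import List, Tuple

# (D1 textual description, D2 searchable tags, D3 linked information)
Flags = Tuple[bool, bool, bool]


def description_score(flags: Flags) -> float:
    """Score one category or dataset; the three features are equally weighted."""
    return sum(1 for flag in flags if flag) / 3


def average_score(items: List[Flags]) -> float:
    """Average description score over all categories (DCD) or datasets (DSD)."""
    return mean(description_score(item) for item in items) if items else 0.0


def understandability(categories: List[Flags], datasets: List[Flags]) -> float:
    """Understandability (U): 40% DCD and 60% DSD, as stated in the text."""
    return 0.4 * average_score(categories) + 0.6 * average_score(datasets)


if __name__ == "__main__":
    cats = [(True, True, False), (True, False, False)]
    sets = [(True, True, True), (True, False, False), (False, False, False)]
    print(round(understandability(cats, sets), 3))
```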

Data Reusability (R) is a parameter that refers to providing data in open formats so that a user can search, index, and download data via common tools without any prior knowledge of data structures. We have defined an assessment scale, inspired by Sir Tim Berners-Lee’s 5-star model for measuring openness of linked open data [20], by adapting it to a 4-level model that meets the requirements of estimating data reusability in an open government context.

The calculation of the R parameter is performed against each dataset in the chosen sample subset by determining its reusability level. A dataset is scored according to the level it belongs to, and the score is then scaled to the range (0, 1). The final Reusability value is the average of the datasets’ reusability values. It ranges from 0 to 1, where 0 represents the lowest data availability level and denotes that no dataset is available online, while 1 denotes that all datasets are not only available but also maximally reusable in the open data context.
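The four reusability levels themselves are not listed in this text. The sketch below therefore assumes they are numbered 0 (not available online) to 3 (maximally reusable) and that scaling means dividing by the top level; both points are assumptions made for illustration.

```python
from statistics import mean
from typing import Sequence

MAX_LEVEL = 3  # assumed top level of the 4-level scale (levels 0..3)


def reusability(levels: Sequence[int]) -> float:
    """Average reusability (R) of the sampled datasets, scaled to (0, 1)."""
    if not levels:
        return 0.0
    for level in levels:
        if level not in range(MAX_LEVEL + 1):
            raise ValueError(f"level must be an integer from 0 to {MAX_LEVEL}, got {level}")
    # Scale each dataset's level to (0, 1), then average over the sample
    return mean(level / MAX_LEVEL for level in levels)


if __name__ == "__main__":
    print(reusability([0, 3, 3, 3]))
```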

Keeping in mind the complexity of sub-indicators, we present the final equation for the data transparency indicator as the average data transparency for all categories of open data:

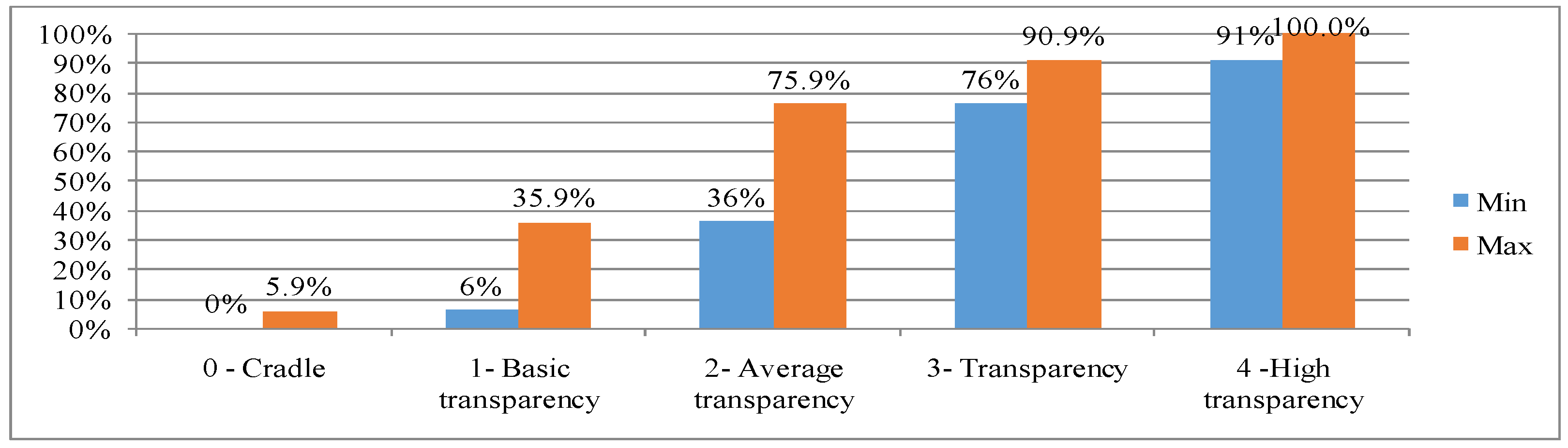

Data transparency values are in range (0, 1) or (0–100%) and spread over five transparency levels, as illustrated in Figure 1. Each level represents a range of min-max values of authenticity, understandability, and reusability with equal participation. To explain this further, let us look at an example for level 2. When all three sub-indicators (A, U, R) have values ranging from 0.36 to 0.759, then, looking at Equation (4), data transparency is in the range of 36–75.9%. The lowest level in the data transparency scale, named cradle, represents either a complete lack of transparency or the beginnings of the transparency initiative. As a government advances in opening and enriching open data, it will rise higher on the transparency scale through the basic, average, and transparency levels up to the final (high transparency) level.

3.3. Government Transparency (GT) Indicator

For government transparency, we adopted two mentioned indicators, RTI and CPI. We used both for comparison purposes. It is interesting that RTI acts as a static measure since it only changes when a new FOI law is enacted, while CPI is more dynamic and changes each year. Since GT and DT are included in the overall transparency indicator value with an equal percentage, we had to scale RTI and CPI to range (0, 1) since transparency final value should be in that range. Using RTI we calculated GT as presented in Equation (10), while the CPI-dependent calculation is given in Equation (11).
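Equations (10) and (11) are not reproduced in this text. Since the RTI rating has a maximum of 150 points and CPI a maximum of 100, the scaling to (0, 1) is assumed here to be a simple division by the maximum; the function names are illustrative.

```python
RTI_MAX = 150  # maximum number of RTI points, as stated in the text
CPI_MAX = 100  # upper limit of the CPI range, as stated in the text


def gt_from_rti(rti_points: float) -> float:
    """Government transparency from the RTI rating (assumed Equation (10))."""
    if not 0 <= rti_points <= RTI_MAX:
        raise ValueError(f"RTI points must be between 0 and {RTI_MAX}")
    return rti_points / RTI_MAX


def gt_from_cpi(cpi: float) -> float:
    """Government transparency from the CPI value (assumed Equation (11))."""
    if not 0 <= cpi <= CPI_MAX:
        raise ValueError(f"CPI must be between 0 and {CPI_MAX}")
    return cpi / CPI_MAX


def overall_transparency(dt: float, gt: float) -> float:
    """Overall transparency T as the average of DT and GT."""
    return (dt + gt) / 2


if __name__ == "__main__":
    # Serbia's RTI score quoted in the text: 135 out of 150 points
    print(gt_from_rti(135))
```

For the RTI score of 135 points quoted earlier for Serbia, this scaling gives a GT value of 0.9.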

4. Use Case: Transparency Evaluation

In the beginning, open data initiatives were heterogeneous in nature: open licenses differed between initiatives, formats were heterogeneous, and metadata were lacking [38,39]. Datasets are often published in a format defined by the system from which the data originate but without the syntax, semantics, and context, i.e., without the crucial elements which make data more usable [40]. Openly published datasets should include syntax (structure), semantics (understanding), and context (metadata) to enable data reusability. Luckily, more recently open-source data portals have begun to offer a standardized system for data publishing, viewing, and retrieval. The Comprehensive Knowledge Archive Network (CKAN) is an open-source software framework built on open standards, which ensures that published data are compatible with other such portals. CKAN uses the Data Catalog Vocabulary (DCAT), which is a standard vocabulary for describing datasets in data catalogues. It also exposes an API for developers, which allows retrieving datasets and their metadata properties based on DCAT.

For the practical evaluation of the transparency indicator, we have chosen 22 open government data portals that publish their open data using the CKAN platform. These portals were selected based on the software platform they use for the back-end since the CKAN offers the ability to query the portal’s data using a defined API. All the calculations for the data transparency (DT) feature are done based on the API call results, i.e., open data JSON representation. For the government transparency (GT) we took two indexes: RTI and CPI.

Table 1 presents results for the Transparency indicator (T) of the OpenGovB benchmark. In the table, we presented results for the indicators: data transparency, government transparency using RTI, government transparency using CPI, and final transparency when using RTI or CPI. Since RTI and CPI indexes have different maximal values, 150 and 100 respectively, to have comparable results we used scaled values in the calculation. As can be seen from Table 1, the first five countries have the best results when scoring by T-RTI. Finland also has an excellent score for T-CPI and DT values just above the countries’ average. Italy and Russia are best in DT with a score of 0.39 but, as can be observed in Figure 1, they are only at the average data transparency level. Serbia has the best score in GT-RTI, which resulted in a better overall rank, but looking at the data transparency only it is very low: two times lower than the DT average.

To demonstrate the process of the data transparency calculation (Figure 2), we will use the Australian open data portal as an example. It has a high value for data transparency, and it also has high rankings in the CPI and RTI indexes.

On the Australian open data portal all BDS categories were present, and therefore we applied the data transparency calculation to each category separately.

For the identified categories, we calculated the total number of datasets in the category (N) and a sample size (n) for each category (Table 2).

The Authenticity sub-indicator does not depend on individual datasets, so it can be calculated from API calls that return data on the organizations publishing on the open data portal. For calculating Authenticity, we used the API call http://data.gov.au/api/3/action/organization_list, which returns a list of all organizations that publish data on the Australian open data portal. There were exactly 288 organizations in total, and for each one we examined the required subcomponents of Authenticity. The first four sub-indicators (F1–F4) relate to data sources and the other three (E1–E3) to the data themselves. F2 and F4 describe whether users can rate data sources on the portal and provide feedback on the data, but this information could not be obtained through the CKAN API calls, and thus these sub-indicators received a score of 0. The F1 sub-indicator relates to the data sources list, and it received a score of 1 since a list of organizations was available on the open data portal. The F3 sub-indicator, relating to the existence of a meta-data description of the data source, receives a value of 1 if there is a value for the [description] and [is_organization] meta-tags; otherwise, the value is 0. This is evaluated for each data-publishing organization separately, and the final F3 value is taken as the average.
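The F3 rule described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes each organization is already available as a dictionary holding the [description] and [is_organization] meta-tags from the CKAN response, and the function names and sample records are hypothetical.

```python
def score_f3(org: dict) -> int:
    """Return 1 if both [description] and [is_organization] carry a value, else 0."""
    description = org.get("description")
    has_description = isinstance(description, str) and description.strip() != ""
    has_is_organization = org.get("is_organization") is not None
    return int(has_description and has_is_organization)


def portal_f3(orgs: list[dict]) -> float:
    """Average the per-organization F3 scores; an empty list scores 0."""
    if not orgs:
        return 0.0
    return sum(score_f3(org) for org in orgs) / len(orgs)


# Hypothetical organization records, for illustration only.
sample_orgs = [
    {"name": "org-a", "description": "Publishes climate data.", "is_organization": True},
    {"name": "org-b", "description": "", "is_organization": True},
    {"name": "org-c", "is_organization": True},
    {"name": "org-d", "description": "Transport statistics.", "is_organization": True},
]
f3 = portal_f3(sample_orgs)
print(f"F3 = {f3:.2f}")
```

Averaging over organizations means that F3 falls between 0 and 1 and reflects the share of publishers whose meta-data description is filled in.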

To get the score for E1 and E2 (Table 3), it was not possible to use existing data on the portal, since the information about user grading and government grading could not be obtained; therefore, they received a value of 0. On the other hand, the E3 sub-indicator can be viewed at the organizational level: if an organization holds a data quality certification, its published data are considered certified as well. To score E3 at the organizational level, we checked whether the meta-data tag [state] has the value “active”, [approval_status] has the value “approved”, and [is_organization] is set to “true”. If all three conditions are met, E3 receives a score of 1 for that organization. In the future, once it becomes possible to query and obtain information on data certification, this calculation will be changed so that it is applied to the data inside each data category within the organization.
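The three E3 conditions can be expressed as a short check. This is a sketch under stated assumptions: the organization record is assumed to be a dictionary with the [state], [approval_status], and [is_organization] meta-tags, the function name `score_e3` is hypothetical, and accepting both a JSON boolean and the string "true" for [is_organization] is a choice made here for robustness.

```python
def score_e3(org: dict) -> int:
    """Return 1 when all three organization-level conditions hold, else 0."""
    is_active = org.get("state") == "active"
    is_approved = org.get("approval_status") == "approved"
    # Assumption: the flag may arrive as a JSON boolean or as the string "true".
    is_org = org.get("is_organization") in (True, "true")
    return int(is_active and is_approved and is_org)


# Hypothetical organization records, for illustration only.
certified = {"state": "active", "approval_status": "approved", "is_organization": True}
pending = {"state": "active", "approval_status": "pending", "is_organization": True}
print(score_e3(certified), score_e3(pending))
```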

Table 4 presents the calculation of the Understandability indicator in terms of its two sub-indicators: DCD, related to categories, and DSD, related to datasets. The understandability of datasets is analyzed by looking for defined properties of random datasets within the sample, while the understandability of data categories analyzes properties of the categories. If the [description] property has a value, D1 is scored 1, otherwise 0. For D2 we looked for a value of the [tags] property, and D2 is scored 1 or 0 accordingly. Finally, for D3 both the [extras] and [links] properties were examined and, if both had values, D3 received a score of 1, otherwise 0. The values for DCD and DSD are calculated from the D1–D3 scores as described. For the Australian portal, the understandability of data categories received a value of 0, since there were no values for the D1–D3 sub-indicators. The understandability of datasets is the average understandability over all analyzed datasets, and it has a value of 0.2.
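The D1–D3 checks can be sketched as below. Several points are assumptions for illustration and are not confirmed by the text: that [extras] arrives as a list of key/value dictionaries, that [links] is an entry inside [extras], and that the per-dataset score is the plain mean of D1–D3. All function names and the sample record are hypothetical.

```python
def has_value(value) -> bool:
    """Treat None, empty strings, and empty containers as missing."""
    if value is None:
        return False
    if isinstance(value, str):
        return value.strip() != ""
    if isinstance(value, (list, dict, tuple)):
        return len(value) > 0
    return True


def score_d1_d3(dataset: dict) -> dict:
    """Score D1 ([description]), D2 ([tags]) and D3 ([extras] and [links])."""
    extras = dataset.get("extras") or []
    # Assumption: extras is a list of {"key": ..., "value": ...} entries.
    links = [e.get("value") for e in extras if e.get("key") == "links"]
    has_links = any(has_value(v) for v in links)
    return {
        "D1": int(has_value(dataset.get("description"))),
        "D2": int(has_value(dataset.get("tags"))),
        "D3": int(has_value(extras) and has_links),
    }


def dataset_understandability(dataset: dict) -> float:
    """Assumption: combine D1-D3 as a simple mean."""
    scores = score_d1_d3(dataset)
    return sum(scores.values()) / len(scores)


# Hypothetical dataset record, for illustration only.
sample_dataset = {
    "description": "Monthly rainfall totals.",
    "tags": [{"name": "climate"}],
    "extras": [],
}
print(score_d1_d3(sample_dataset), dataset_understandability(sample_dataset))
```

The category-level value would then be an average of these per-dataset scores over the sampled datasets.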

For example, the Climate BDS category has the results for DSD given in Table 5. To obtain these results, we executed the API call https://data.qld.gov.au/api/action/tag/climate and found that there are six datasets tagged with the word climate. We then requested each dataset and checked its properties [description], [tags], [extras], and [extras[links]]. We obtained a score of 0.33 for this category.

Reusability is calculated at the dataset level. For each dataset category, we chose random datasets up to the sample size. As datasets can have many resources, we randomly sampled their resources and checked the formats. Each format was scored as given in Table 6, and the average level was determined for the dataset.
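The sampling and averaging step can be sketched as follows. The per-format scores in `PLACEHOLDER_FORMAT_SCORES` are placeholders invented for this illustration and are not the values of Table 6; the function name, the sample size parameter, and the sample records are likewise hypothetical.

```python
import random

# Placeholder scores for illustration only; NOT the values from Table 6.
PLACEHOLDER_FORMAT_SCORES = {
    "PDF": 0.25,
    "XLS": 0.5,
    "XLSX": 0.5,
    "CSV": 0.75,
    "XML": 0.75,
    "JSON": 0.75,
    "KML": 0.75,
}


def dataset_reusability(resources, format_scores, sample_size, rng):
    """Average the format scores over a random sample of a dataset's resources."""
    if not resources:
        return 0.0
    k = max(1, min(sample_size, len(resources)))
    sample = rng.sample(resources, k)
    # Unknown or missing formats score 0 in this sketch.
    scores = [
        format_scores.get(str(res.get("format", "")).upper(), 0.0)
        for res in sample
    ]
    return sum(scores) / len(scores)


# Hypothetical resources of one dataset.
sample_resources = [{"format": "csv"}, {"format": "PDF"}, {"format": "xlsx"}]
rng = random.Random(42)  # fixed seed so the sampling is repeatable
reuse = dataset_reusability(sample_resources, PLACEHOLDER_FORMAT_SCORES, 3, rng)
print(f"Reusability = {reuse:.2f}")
```

Because the resources are sampled at random, repeated runs without a fixed seed can yield slightly different values whenever the sample is smaller than the full resource list.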

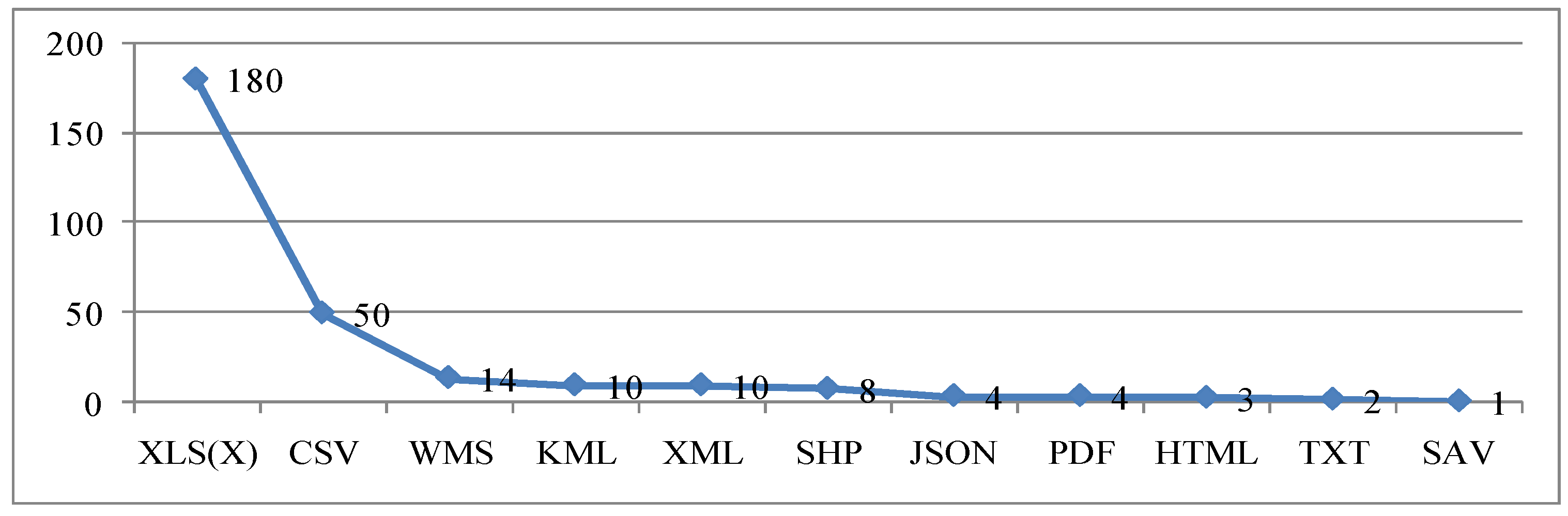

Data resources in the Australian portal are made available in a variety of formats (Figure 3). Approximately 63% of the analyzed resources are in XLS and XLSX formats, and a large number of resources are available in other machine-readable formats, most commonly CSV, XML, KML, and JSON. Only a small part (up to 4%) of resources are published in non-reusable formats such as PDF, plain text, or images, which is a direct barrier to further use.

For the Australian open data portal, we obtained the results for data understandability and reusability for each data category and the authenticity indicator of the portal. Then we could calculate data transparency for each data category, as presented in Table 7, and DT for the portal by applying Equation (9).

Australia scored 83 for RTI and 79 for CPI. Using the values for the DT sub-indicators and applying Equation (1), we obtain transparency indicator values of 0.4 and 0.6, respectively.

The step-by-step explanation serves as a guide for anyone who wants to calculate transparency. We automated the calculation process by developing a web-based application for calculating OpenGovB indicators, including transparency. The calculation is initiated upon entering the URL of the open data portal, and the result represents the current indicator value, which depends on the randomly chosen datasets within the sample.

What have we learned from assessing data transparency of 22 open government portals? Who can benefit from this study, and how do they benefit?

Currently, all portals have their weaknesses, which mainly originate in poor data quality. The reasons for poor data quality are varied: insufficient budget, lack of internal manual resources, lack of relevant technology, inadequate data strategy, human error, lack of internal communication between departments, or inadequate management support. Improving data quality would raise the scores for all transparency sub-indicators. By adding missing dataset descriptions, category descriptions, and responsible organizations that maintain data, and by linking relevant datasets and tagging categories and datasets, governments would significantly improve the score for the Understandability indicator. Exposing the list of trusted data sources and enabling open-source modules that allow user grading of data sources and datasets could improve Authenticity indicator scores. To gain better results for the Reusability indicator, the readability of resources should be improved, meaning that open data formats need to prevail over proprietary data formats. That is the prerequisite for reusing the data and building new datasets, services, and solutions.

The transparency evaluation results should be considered by governments to improve their own open data policies, and in so doing improve government transparency and users’ trust. The results are also a good starting point for the researchers who want to build new transparency models or suggest future improvements for the current ones. It is necessary to monitor improvement on certain model features and scale weights accordingly as governments progress to a more mature transparency model.

5. Conclusions

Transparency in open government is a relatively new concept that has arisen from the previous e-government models and initiatives. It has proven to be one of the most significant features of open government and a concept every government strives towards. The idea of building a transparent government in the context of openness towards citizens has quickly spread from Obama’s office to governments around the globe. Today, a great number of countries emphasize transparency as a major milestone in their smart-city strategies and action plans. Transparency in open government makes steps forward towards the usage of data in a meaningful way. When there are no obstacles for data transparency, there are no obstacles for data consumption. A smart city vision can gain value from monitoring transparency and our transparency evaluation model can be the right tool for this.

The model for evaluating transparency that was proposed here offers an assessment method for determining the level of progression based on the most critical open data concerns: data accuracy and integrity, data quality, data sources’ credibility, data clarity, and reusability, which we consider a significant advantage of such an approach. Numerous sources in the literature that already dealt with benchmarking of open government data support this claim [2, 27, 41, 42]. They all directly relate to open data, making the model completely applicable for open government. The other part of the model is government transparency, for which we decided to include some of the existing indicators. At the same time, this could present a drawback, since the model addresses only some of the transparency features, while flexibility and comparison of transparency are overshadowed by different features. We see this as the challenge that we need to overcome in the following period to strengthen the model even more and make it more trustworthy. We applied the model to 22 open data portals built on the CKAN platform to demonstrate the assessment possibilities, and in the future we plan to enable support for other data platforms as well. This is especially important in order to obtain a comprehensive overview of open data portals powered by other platforms (DKAN, Udata, Socrata, etc.). Increasing the number of surveyed portals will also contribute to the validity of this study.

Future research aspects should be focused on the development of transparency indicators related to back-office experience and efforts. Governmental representatives need to be actively involved in evaluating transparency, as they could provide valuable information regarding legislative background, initiatives, and strategies, which are all important parts of a transparent government. Although the Authenticity indicator does involve government feedback in the evaluation process, the model needs to be further enriched with back domain information that would increase the administration’s participation in the final assessment. This could be achieved through thoroughly designed questionnaires that would be filled in by government representatives. Consequently, this will provide an adequate environment for the comparison of those indicators with our benchmark model and its indicators from which unambiguous conclusions can be drawn about how the problem of transparency in open government should be observed. Comparative analysis of qualitative and quantitative indicators of transparency in the government domain also can contribute to the future establishment of a standard evaluation model for transparency in open government.